20

Pointer Patching Assets

Jason Hughes

Steel Penny Games, Inc.

20.1 Introduction

Console development has never been harder. The clock speeds of processors

keep getting higher, the number of processors is increasing, the number of

megabytes of memory available is staggering, even the storage size of optical

media has ballooned to over 30 GB. Doesn’t that make development easier, you

ask? Well, there’s a catch. Transfer rates from optical media have not improved

one bit and are stuck in the dark ages at 8 to 9 MB/s. That means that in the best

possible case of a single contiguous read request, it still takes almost a full

minute to fill 512 MB of memory. Even if compression optimistically shrinks the

data by 60%, that’s still around 20 seconds.

As long as 20 seconds sounds, it is hard to achieve without careful planning.

Most engines, particularly PC engines ported to consoles, tend to have the

following issues that hurt loading performance even further:

■ Inter- or intra-file disk seeks can take as much as 1/20th of a second.

■ Time is spent on the CPU processing assets synchronously after loading each

chunk of data.
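
To make the arithmetic above concrete, here is a minimal sketch of a load-time estimate. The function name `EstimateLoadSeconds` and its parameters are hypothetical, and it reads "60% compression" as the data shrinking to 40% of its original size, which is an assumption. The seek count passed in is purely illustrative.

```cpp
#include <cassert>

// Rough load-time model: pure transfer time plus a fixed cost per disk seek.
// storedFraction is the on-disk size divided by the in-memory size
// (1.0 = uncompressed, 0.4 = shrunk by 60%). All inputs are illustrative.
double EstimateLoadSeconds(double megabytes, double megabytesPerSecond,
                           double storedFraction, int seekCount,
                           double secondsPerSeek)
{
    assert(megabytesPerSecond > 0.0);
    const double transfer = (megabytes * storedFraction) / megabytesPerSecond;
    const double seeking  = seekCount * secondsPerSeek;
    return transfer + seeking;
}
```

With 512 MB at 8.5 MB/s, this gives roughly 60 seconds uncompressed and roughly 24 seconds at a stored fraction of 0.4. A hypothetical 1000 seeks at 1/20th of a second each would add about 50 seconds on top, which is why seeks matter as much as raw bandwidth.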

Bad Solutions

There are many ways to address these problems. One popular old-school way to

reduce the cost of disk seeks between files is to log all the file requests and

rearrange the file layout on the final media so that seeks are always forward on

the disk. CD-ROM and DVD drives typically perform seeks forward more

quickly than backward, so this is a solution that only partially addresses the heart

of the problem and does nothing to handle the time wasted processing the data

after each load occurs. In fact, loading individual files encourages a single-

threaded mentality that not only hurts performance but does not scale well with

modern multithreaded development.

The next iteration is to combine all the files into a giant metafile for a level,

retaining a familiar file access interface, like the FILE type, fopen() function,

and so on, but adding a large read-ahead buffer. This helps cut down further on

the bandwidth stalls, but again, suffers from a single-threaded mentality when

processing data, particularly when certain files contain other filenames that need

to be queued up for reading. This spider web of dependencies complicates the

optimization of file I/O.

The next iteration in a system like this is to make it multithreaded. This

basically requires some accounting mechanism using threads and callbacks. In

this system, the order of operations cannot be assured because threads may be

executed in any order, and some data processing occurs faster for some items

than others. While this does indeed allow for continuous streaming in parallel

with the loaded data initialization, it also requires a far more complicated scheme

of accounting for objects that have been created but are not yet “live” in the game

because they depend on other objects that are not yet live. In the end, there is a

single object called a level that has explicit dependencies on all the subelements,

and they on their subelements, recursively, which is allowed to become live only

after everything is loaded and initialized. This undertaking requires clever

management of reference counts, completion callbacks, initialization threads, and

a lot of implicit dependencies that have to be turned into explicit dependencies.

Analysis

We’ve written all of the above solutions, and shipped multiple games with each,

but cannot in good faith recommend any of them. In our opinion, they are

bandages on top of a deeper architectural problem, one that is rooted in a

failure to practice a clean separation between what is run-time code and what is

tools code.

How do we get into these situations? Usually, the first thing that happens on

a project, especially when an engine is developed on the PC with a fast hard disk

drive holding files, is that data needs to be loaded into memory. The fastest and

easiest way to do that is to open a file and read it. Before long, all levels of the

engine are doing so, directly accessing files as they see fit. Porting the engine to a

console then requires writing wrappers for the file system and redirecting the

calls to the provided file I/O system. Performance is poor, but it’s working. Later,

some sad optimization engineer is tasked with getting the load times down from

six minutes to the industry-standard 20 seconds. He’s faced with two choices:

1. Track down and rewrite all of the places in the entire engine and game where

file access is taking place, and implement something custom and appropriate

for each asset type. This involves making changes to the offline tools

pipeline, outputting data in a completely different way, and sometimes

grafting existing run-time code out of the engine and into tools. Then, deal

with the inevitable ream of bugs introduced at apparently random places in

code that has been working for months or years.

2. Make the existing file access system run faster.

Oh, and one more thing—his manager says there are six weeks left before the

game ships. There’s no question it’s too late to pick choice #1, so the intrepid

engineer begins the journey down the road that leads to the Bad Solution. The

game ships, but the engine’s asset-loading pipeline is forever in a state of

maintenance as new data files make their way into the system.

An Alternative, High-Performance Solution

The technique that top studios use to get the most out of their streaming

performance is to use pointer patching of assets. The core concept is simple.

Many blocks of data that are read from many separate files end up in adjacent

memory at run time, frequently in exactly the same order, and often with pointers

to each other. Simply move this work to an offline process where these chunks of

data are loaded into memory and baked out as a single large file. This has

multiple benefits. In particular, the disk seeks that are normally paid for at

run time are moved to a tools process, and as much data as possible is

preprocessed so that very few operations are required to

use the data once it’s in memory. This is as much a philosophical adjustment for

studios as it is a mechanical one.

Considerations

Unfortunately, pointer patching of assets is relatively hard to retrofit into existing

engines. The tools pipeline must be written to support outputting data in this

format. The run-time code must be changed to expect data in this format, which

generally implies removing a lot of initialization code. More often than not, it

also requires breaking explicit dependencies on loading other files directly

during construction and converting that work to a tools-side procedure. This sort of

dynamic loading scheme tends to map cleanly onto run-time lookup of symbolic

pointers, for example. In essence, it requires detangling assets from their disk

access entirely and relegating that chore to a handful of higher-level systems.

If you’re considering retrofitting an existing engine, follow the 80/20 rule. If

20 percent of the assets take up 80 percent of the load time, concentrate on those

first. Generally, these should be textures, meshes, and certain other large assets

that engines tend to manipulate directly. However, some highly processed data

sets may prove fruitful to convert as well. State machines, graphs, and trees tend

to have a lot of pointers and a lot of small allocations, all of which can be

resolved offline, dramatically improving initialization performance once they

move to a pointer patching pipeline.

20.2 Overview of the Technique

The basic offline tools process is the following:

1. Load run-time data into memory as it is represented during run time.

2. Use a special serialization interface to dump each structure to the pointer

patching system, which carefully notes the locations of all pointers.

3. When a coherent set of data relating to an asset has been dumped, finalize the

asset. Coherent means there are no unresolved external dependencies.

Finalization is the only complicated part of the system, and it comprises the

following steps:

(a) Concatenate all the structures into a single contiguous block of memory,

remembering the location to which each structure was relocated.

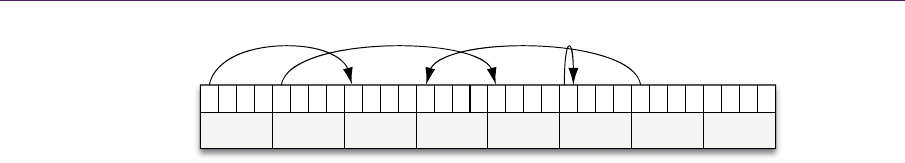

(b) Iterate through all the pointers in each relocated structure and convert the

raw addresses stored there into offsets into the concatenated block. See

Figure 20.1 for an example of how relative offsets are used as relocatable

pointers. Any pointers that cannot be resolved within the block are

indications that the serialized data structure is not coherent.

(c) Append a table of pointer locations within the block that will need to be

fixed up after the load has completed.

(d) Append a table of name/value pairs that allows the run-time code to

locate the important starting points of structures.

4. Write out the block to disk for run-time consumption.
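
The finalization steps above can be sketched in a few dozen lines. This is a minimal illustration under stated assumptions, not a description of any shipped implementation. The class `AssetBlockBuilder` and its methods are hypothetical names. Offsets are stored self-relative in pointer-sized slots. Alignment padding is ignored. The fixup table of step (c) is returned separately rather than appended to the block, and the name table of step (d) is left out.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

static_assert(sizeof(void*) == sizeof(std::uintptr_t), "pointer-sized slots assumed");

// Hypothetical offline builder; every name here is illustrative.
class AssetBlockBuilder {
public:
    // Step (a): append a structure's bytes and remember where it was relocated.
    void AddStructure(const void* src, std::size_t size) {
        const std::uint8_t* bytes = static_cast<const std::uint8_t*>(src);
        spans_.push_back({reinterpret_cast<std::uintptr_t>(src), size, block_.size()});
        block_.insert(block_.end(), bytes, bytes + size);
    }

    // Note the location of a pointer field inside a structure already added.
    void NotePointer(const void* field) {
        fields_.push_back(static_cast<const std::uint8_t*>(field));
    }

    // Steps (b) and (c): rewrite each noted pointer as a self-relative offset
    // and list the slots to fix up at load time. Returns false when a pointer
    // cannot be resolved inside the block (the data is not coherent).
    bool Finalize(std::vector<std::uint8_t>& block,
                  std::vector<std::size_t>& fixups) const {
        block = block_;
        fixups.clear();
        for (const std::uint8_t* field : fields_) {
            std::size_t slot = 0;
            if (!Relocate(reinterpret_cast<std::uintptr_t>(field), slot))
                return false;                       // field was never dumped
            std::uintptr_t target = 0;
            std::memcpy(&target, field, sizeof target);  // raw tool-side address
            std::intptr_t rel = 0;                  // null stays zero
            if (target != 0) {
                std::size_t dest = 0;
                if (!Relocate(target, dest))
                    return false;                   // unresolved external pointer
                rel = static_cast<std::intptr_t>(dest) -
                      static_cast<std::intptr_t>(slot);
            }
            std::memcpy(&block[slot], &rel, sizeof rel);
            fixups.push_back(slot);
        }
        return true;
    }

private:
    struct Span { std::uintptr_t src; std::size_t size; std::size_t dst; };

    // Map a tool-side address to its offset in the concatenated block.
    bool Relocate(std::uintptr_t addr, std::size_t& out) const {
        for (const Span& s : spans_) {
            if (addr >= s.src && addr - s.src < s.size) {
                out = s.dst + (addr - s.src);
                return true;
            }
        }
        return false;
    }

    std::vector<Span> spans_;
    std::vector<const std::uint8_t*> fields_;
    std::vector<std::uint8_t> block_;
};
```

A tool would call `AddStructure` for each structure, `NotePointer` for each pointer field, and then `Finalize`. A `false` return is the signal that the dumped set still has an external dependency. One caveat of this particular encoding is that a pointer to its own slot would also produce a zero offset and be indistinguishable from null.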

Figure 20.1. This shows all the flavors of pointers stored in a block of data on disk.

Forward pointers are relative to the pointer’s address and have positive values. Backward

pointers are relative to the pointer’s address and have negative values. Null pointers store

a zero offset, which is treated as a special case during patching and is left as zero.
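
On the run-time side, turning the self-relative offsets of Figure 20.1 into usable pointers can be a very small loop. The following is a sketch under assumptions rather than a definitive implementation. `PatchPointers` and `UnpatchPointers` are hypothetical names. Each slot is assumed to be pointer-sized and to hold a signed offset relative to its own address. The fixup table is assumed to be an array of slot offsets within the block, and a null pointer is assumed to be represented by all-zero bytes.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>

static_assert(sizeof(void*) == sizeof(std::intptr_t), "pointer-sized slots assumed");

// Convert each self-relative offset in the block into a real address, in place.
void PatchPointers(std::uint8_t* block, const std::size_t* fixups, std::size_t count)
{
    for (std::size_t i = 0; i < count; ++i) {
        std::uint8_t* slot = block + fixups[i];
        std::intptr_t rel = 0;
        std::memcpy(&rel, slot, sizeof rel);
        if (rel == 0)
            continue;                        // null: special case, left as zero
        std::uint8_t* target = slot + rel;   // forward (> 0) or backward (< 0)
        std::memcpy(slot, &target, sizeof target);
    }
}

// Inverse operation: turn real addresses back into self-relative offsets so
// the whole block can be moved and patched again at its new location.
void UnpatchPointers(std::uint8_t* block, const std::size_t* fixups, std::size_t count)
{
    for (std::size_t i = 0; i < count; ++i) {
        std::uint8_t* slot = block + fixups[i];
        std::uint8_t* target = nullptr;
        std::memcpy(&target, slot, sizeof target);
        if (target == nullptr)
            continue;                        // null stays zero
        const std::intptr_t rel = target - slot;
        std::memcpy(slot, &rel, sizeof rel);
    }
}
```

Because the offsets are relative to the slot itself, patching needs no knowledge of where the block was loaded beyond the block pointer used to find each slot. Unpatching, copying the block elsewhere, and patching again is one way to relocate an asset.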

Desirable Properties

There are many ways to get from the above description to actual working code.

We’ve implemented this three different ways over the years and each approach

has had different drawbacks and features. The implementation details of the

following are left as an exercise to the reader, but here are some high-level

properties that should be considered as part of your design when creating your

own pointer patching asset system:

■ Relocatable assets. If you can patch pointers, you can unpatch them as well.

This affords you the ability to defragment memory, among other things—the

holy grail of memory stability.

■ General compression. This reduces load times dramatically and should be

supported generically.

■ Custom compression for certain structures. A couple of obvious candidates

are triangle list compression and swizzled JPEG compression for textures.

There are two key points to consider here. First, perform custom compression

before general compression for maximum benefit and simplest

implementation. This is also necessary because custom compression (like

JPEG/DCT for images) is often lossy and general compression (such as LZ77

with Huffman or arithmetic encoding) is not. Second, perform in-place

decompression, which requires the custom compressed region to take the

same total amount of space, only backfilled with zeros that compress very

well in the general pass. You won’t want to be moving memory around

during the decompression phase, especially if you want to kick off the

decompressor functions into separate jobs/threads.

■ Offline block linking. It is very useful to be able to handle assets generically

and even combine them with other assets to form level-specific packages,

without having to reprocess the source data to produce the pointer patched
