Because time is a key factor in user satisfaction, reducing unacceptable client wait time must be a high priority. Stated this way, however, the goal is too ambiguous to act on from the application development perspective. Performance testing therefore breaks this wait time down in order to identify the portion that exceeds the allowed delay and, subsequently, the components that cause it.

When testing the performance of an application, three main architectural components delimit the partitions of an operation's time: the client, the server, and the network. The overall time needed for an operation to execute is therefore a sum of smaller time portions accumulated as the request progresses from the client to the server and back to the client. To speak precisely about application time performance, we first define a common glossary of terms.

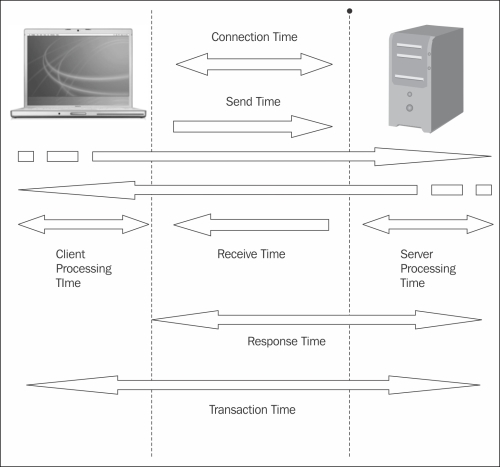

The following diagram depicts the different stages of a request as it propagates between different web application components:

Performance testing time notions

During a transaction, the time spent at the client side is known as client-side latency time, and we will refer to it as client processing time. It comprises request generation (for example, establishing a connection with the server), handling server data (for example, parsing a query result to draw the corresponding user chart), and any other type of processing related to generating or reading web application requests.

The time spent at the server side to process client requests, such as the time taken by the backend library to analyze the requests against the application's business rules or to execute a command on the database, is known as server-side latency time, and we will refer to it as server processing time.

The time spent on the network when transferring data between various workstations is known as network latency time. It comprises three parts. Connection time is the time spent on the network while the client and the server establish a connection. Send time is the time spent on the network while the request travels from the client to the server. Receive time is the time spent on the network while the result travels the other way, from the server back to the client.
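As a rough illustration of connection time, the sketch below times how long a client takes to open a TCP connection. The function name `measure_connection_time` is hypothetical, and the figure it returns is only an approximation of the notion defined above: it is observed from the client and also includes name resolution and a small amount of client-side overhead.

```python
import socket
import time


def measure_connection_time(host: str, port: int, timeout: float = 5.0) -> float:
    """Return the seconds taken to open a TCP connection, as seen by the client.

    Hypothetical helper for illustration. The measurement includes host name
    resolution and the TCP handshake, so it only approximates pure network
    connection time.
    """
    start = time.perf_counter()
    # create_connection resolves the host and completes the TCP handshake.
    with socket.create_connection((host, port), timeout=timeout):
        elapsed = time.perf_counter() - start
    return elapsed
```

Calling `measure_connection_time("example.com", 80)` several times and comparing the results gives a feel for how much this portion varies between runs.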

Other important time notions are response time and transaction time. The response time is the time that elapses from the moment the user submits a request until the first byte of the result is received. It is calculated as follows:

Response time = send time + server processing time + receive time
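The formula can be sketched in a few lines of Python. The class name `ResponseTiming`, its field names, and the sample values in milliseconds are assumptions made up for illustration, not measurements.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ResponseTiming:
    """Hypothetical container for the three terms of the formula (milliseconds)."""

    send_ms: float
    server_processing_ms: float
    receive_ms: float

    def response_time_ms(self) -> float:
        # Response time = send time + server processing time + receive time
        return self.send_ms + self.server_processing_ms + self.receive_ms


# Illustrative values only.
timing = ResponseTiming(send_ms=40.0, server_processing_ms=120.0, receive_ms=35.0)
print(timing.response_time_ms())  # 195.0
```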

The transaction time is the total time needed for a transaction to complete. A transaction is one unit of work and can contain various activities at different application architecture components, such as building the client request to establish a connection, sending the request over the network, parsing the request at the server side and validating credentials, sending the authentication result to the client over the network, and processing the result at the client side.

Now that we have seen the details of transaction time, it is clear how performance testing breaks operations down to identify the specific portion whose latency is greater than expected. The components responsible for that portion are then identified as bottlenecks and later targeted to improve transaction time.
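A minimal sketch of this breakdown follows, assuming each stage of a transaction has been measured separately and given an allowed delay. The stage names, the `find_bottlenecks` helper, and all numbers are hypothetical; transaction time is taken here as the sum of the measured stages.

```python
from typing import Dict, List


def find_bottlenecks(measured_ms: Dict[str, float],
                     allowed_ms: Dict[str, float]) -> List[str]:
    """Return the stages whose measured time exceeds the allowed delay.

    Stages without an allowed delay are never reported.
    """
    return [
        stage
        for stage, elapsed in measured_ms.items()
        if elapsed > allowed_ms.get(stage, float("inf"))
    ]


# Illustrative per-stage measurements and budgets, in milliseconds.
measured = {
    "client_processing": 30.0,
    "connection": 20.0,
    "send": 40.0,
    "server_processing": 450.0,
    "receive": 35.0,
}
allowed = {
    "client_processing": 50.0,
    "connection": 50.0,
    "send": 50.0,
    "server_processing": 200.0,
    "receive": 50.0,
}

transaction_time_ms = sum(measured.values())
print(transaction_time_ms)                  # 575.0
print(find_bottlenecks(measured, allowed))  # ['server_processing']
```

In this made-up case only server processing exceeds its budget, so the server side would be the first target for improvement.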