12 Estimation of Mathematical Expectation

12.1 Conditional Functional

Let the analyzed Gaussian stochastic process ξ(t) possess the mathematical expectation defined as

$$E(t) = E_0 s(t), \tag{12.1}$$

the correlation function R(t1, t2), and be observed within the limits of the finite time interval [0, T]. We assume that the law of variation of the mathematical expectation s(t) and correlation function R(t1, t2) are known. Thus, the received realization takes the following form:

$$x(t) = E(t) + x_0(t) = E_0 s(t) + x_0(t), \quad 0 \le t \le T, \tag{12.2}$$

where

$$x_0(t) = x(t) - E(t) \tag{12.3}$$

is the centered Gaussian stochastic process. The problem of estimating the mathematical expectation is thus equivalent to the problem of estimating the amplitude E0 of the deterministic signal E0s(t) in additive Gaussian noise.

The pdf functional of the Gaussian process given by (12.2) takes the following form:

$$F[x(t)|E_0] = B_0 \exp\left\{-0.5\int_0^T\!\!\int_0^T \vartheta(t_1,t_2)\,[x(t_1) - E_0 s(t_1)]\,[x(t_2) - E_0 s(t_2)]\,dt_1\,dt_2\right\}, \tag{12.4}$$

where B0 is the factor independent of the estimated parameter E0; the function

$$\vartheta(t_1,t_2) = \vartheta(t_2,t_1) \tag{12.5}$$

is defined from the integral equation

$$\int_0^T R(t_1,t)\,\vartheta(t,t_2)\,dt = \delta(t_2 - t_1). \tag{12.6}$$

Introduce the following function:

$$\upsilon(t) = \int_0^T s(t_1)\,\vartheta(t_1,t)\,dt_1, \tag{12.7}$$

which is a solution of the following integral equation:

$$\int_0^T R(t,\tau)\,\upsilon(\tau)\,d\tau = s(t). \tag{12.8}$$

Since the received realization of the Gaussian stochastic process does not depend on the current value of estimation E0, the pdf functional of Gaussian stochastic process can be written in the following form:

$$F[x(t)|E_0] = B_1 \exp\left\{E_0\int_0^T x(t)\upsilon(t)\,dt - 0.5E_0^2\int_0^T s(t)\upsilon(t)\,dt\right\}, \tag{12.9}$$

where B1 is the factor independent of the estimated parameter E0.

As applied to analysis of the stationary stochastic process, we can write

$$s(t) = 1, \qquad R(t_1,t_2) = R(t_2 - t_1) = R(t_1 - t_2). \tag{12.10}$$

In doing so, the function υ(t) and the pdf functional are determined as

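$$\int_0^T R(t-\tau)\,\upsilon(\tau)\,d\tau = 1; \tag{12.11}$$

$$F[x(t)|E_0] = B_1 \exp\left\{E_0\int_0^T x(t)\upsilon(t)\,dt - 0.5E_0^2\int_0^T \upsilon(t)\,dt\right\}. \tag{12.12}$$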

In practice, the stationary stochastic processes occur very often and their correlation functions can be written in the following form:

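$$R(\tau) = \sigma^2\exp\{-\alpha|\tau|\}, \tag{12.13}$$

$$R(\tau) = \sigma^2\exp\{-\alpha|\tau|\}\left[\cos\omega_1\tau + \frac{\alpha}{\omega_1}\sin\omega_1|\tau|\right], \tag{12.14}$$

where σ2 is the variance of the stationary stochastic process. These correlation functions correspond to the stationary stochastic processes obtained when “white” noise excites the input of an RC circuit, with $\alpha = (RC)^{-1}$, and of an RLC circuit, with $\omega_1^2 = \omega_0^2 - \alpha^2$, $\omega_0^2 = (LC)^{-1}$, $\alpha = R(2L)^{-1}$, and $\omega_0 > \alpha$, respectively.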

Solution of (12.8) for the correlation functions given by (12.13) and (12.14) takes the following form, respectively:

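$$\upsilon(t) = \frac{\alpha}{2\sigma^2}\left[s(t) - \alpha^{-2}s''(t)\right] + \frac{1}{\sigma^2}\left\{\left[s(0) - \alpha^{-1}s'(0)\right]\delta(t) + \left[s(T) + \alpha^{-1}s'(T)\right]\delta(T-t)\right\}, \tag{12.15}$$

$$\begin{aligned}\upsilon(t) ={}& \frac{1}{4\sigma^2\alpha\omega_0^2}\left[s''''(t) + 2(\omega_0^2 - 2\alpha^2)s''(t) + \omega_0^4 s(t)\right] \\ &+ \frac{1}{2\sigma^2\alpha\omega_0^2}\Big\{\left[s'''(0) + (\omega_0^2 - 4\alpha^2)s'(0) + 2\alpha\omega_0^2 s(0)\right]\delta(t) \\ &- \left[s'''(T) + (\omega_0^2 - 4\alpha^2)s'(T) - 2\alpha\omega_0^2 s(T)\right]\delta(t-T) \\ &+ \left[s''(0) - 2\alpha s'(0) + \omega_0^2 s(0)\right]\delta'(t) - \left[s''(T) + 2\alpha s'(T) + \omega_0^2 s(T)\right]\delta'(t-T)\Big\}.\end{aligned} \tag{12.16}$$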

The notations s′(t), s″(t), s′″(t), s″″(t) mean the derivatives of the first, second, third, and fourth order with respect to t, respectively. If the function s(t) and its derivatives at t = 0 and t = T become zero, then (12.15) and (12.16) have a simple form. As applied to stochastic process at s(t) = 1 = const, (12.15) and (12.16) have the following form:

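$$\upsilon(t) = \frac{\alpha}{2\sigma^2} + \frac{1}{\sigma^2}\left[\delta(t) + \delta(T-t)\right], \tag{12.17}$$

$$\upsilon(t) = \frac{\omega_0^2}{4\alpha\sigma^2} + \frac{1}{\sigma^2}\left[\delta(t) + \delta(t-T)\right] + \frac{1}{2\alpha\sigma^2}\left[\delta'(t) - \delta'(t-T)\right]. \tag{12.18}$$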

The following spectral densities

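$$S(\omega) = \frac{2\alpha\sigma^2}{\alpha^2 + \omega^2} \tag{12.19}$$

and

$$S(\omega) = \frac{4\alpha\sigma^2(\omega_1^2 + \alpha^2)}{\omega^4 - 2\omega^2(\omega_1^2 - \alpha^2) + (\omega_1^2 + \alpha^2)^2} \tag{12.20}$$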

correspond to the correlation functions given by (12.13) and (12.14), respectively. It is necessary to note that there is no general procedure to solve (12.8). However, if the correlation function of stochastic process depends on the absolute value of difference of arguments |t2 − t1| and the observation time T is much more than the correlation interval defined as

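$$\tau_{\text{cor}} = \frac{1}{\sigma^2}\int_0^\infty |R(\tau)|\,d\tau = \int_0^\infty |\mathcal{R}(\tau)|\,d\tau, \tag{12.21}$$

where

$$\mathcal{R}(\tau) = \frac{R(\tau)}{\sigma^2} \tag{12.22}$$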

is the normalized correlation function, and the function s(t) and its derivatives at t = 0 and t = T become zero, it is possible to define the approximate solution of the integral equation (12.8) using the Fourier transform. Applying the Fourier transform to the left and right side of the following equation

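$$\int_{-\infty}^{\infty} R(t-\tau)\,\tilde{\upsilon}(\tau)\,d\tau = s(t), \tag{12.23}$$

it is not difficult to use the inverse Fourier transform in order to obtain

$$\tilde{\upsilon}(t) = \frac{1}{2\pi}\int_{-\infty}^{\infty}\frac{\mathcal{S}(\omega)}{S(\omega)}\exp\{j\omega t\}\,d\omega, \tag{12.24}$$

where

$S(\omega)$ is the Fourier transform of the correlation function R(τ)

$\mathcal{S}(\omega)$ is the Fourier transform of the function s(t) describing the law of variation of the mathematical expectation; these transforms are defined as

$$S(\omega) = \int_{-\infty}^{\infty} R(\tau)\exp\{-j\omega\tau\}\,d\tau, \tag{12.25}$$

$$\mathcal{S}(\omega) = \int_{-\infty}^{\infty} s(t)\exp\{-j\omega t\}\,dt. \tag{12.26}$$

The inverse Fourier transform gives us the following formulae:

$$R(\tau) = \frac{1}{2\pi}\int_{-\infty}^{\infty} S(\omega)\exp\{j\omega\tau\}\,d\omega, \tag{12.27}$$

$$s(t) = \frac{1}{2\pi}\int_{-\infty}^{\infty} \mathcal{S}(\omega)\exp\{j\omega t\}\,d\omega. \tag{12.28}$$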

If the function s(t) and its derivatives do not become zero at t = 0 and t = T and the function S(ω) is a ratio of two polynomials of pth and dth orders, respectively, with respect to ω2 and d > p, then there is a need to add the delta function δ(t) and its derivative δ′(t) taken at t = 0 and t = T. Thus, there is a need to define the solution for Equation 12.8 in the following form:

$$\upsilon(t) = \tilde{\upsilon}(t) + \sum_{\mu=0}^{d-1}\left[b_\mu\delta^{(\mu)}(t) + c_\mu\delta^{(\mu)}(t-T)\right]. \tag{12.29}$$

Here, the coefficients $b_\mu$ and $c_\mu$ are defined from the solutions of equations obtained under the substitution of (12.29) in (12.8); $\delta^{(\mu)}(t)$ is the delta function derivative of μth order with respect to the time.

In the case of a stationary stochastic process, we have s(t) = 1. In this case, the Fourier transform $\mathcal{S}(\omega)$ of the function s(t) takes the following form:

$$\mathcal{S}(\omega) = 2\pi\delta(\omega). \tag{12.30}$$

From (12.24) and (12.29), we have

$$\upsilon(t) = S^{-1}(\omega = 0) + \sum_{\mu=0}^{d-1}\left[b_\mu\delta^{(\mu)}(t) + c_\mu\delta^{(\mu)}(t-T)\right]. \tag{12.31}$$

As applied to the stationary stochastic process with the spectral density given by (12.19), we have that d = 1. For this reason, we can write

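$$\upsilon(t) = \frac{\alpha}{2\sigma^2} + b_0\delta(t) + c_0\delta(t-T). \tag{12.32}$$

Substituting (12.13) and (12.32) into (12.11), we obtain

$$0.5\left[\alpha\int_0^T \exp\{-\alpha|t-\tau|\}\,d\tau + b_0\sigma^2\exp\{-\alpha t\} + c_0\sigma^2\exp\{-\alpha(T-t)\}\right] = 1. \tag{12.33}$$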

Dividing the integration interval into two intervals, namely, 0 ≤ τ ≤ t and t ≤ τ ≤ T, after integration we obtain

$$(b_0\sigma^2 - 1)\exp\{-\alpha t\} + (c_0\sigma^2 - 1)\exp\{-\alpha(T-t)\} = 0. \tag{12.34}$$

This equality holds for all t within [0, T] only if the coefficients of the terms exp{−αt} and exp{−α(T − t)} are both equal to zero, that is, $b_0 = c_0 = \sigma^{-2}$. Substituting b0 and c0 into (12.32), we obtain the formula given by (12.17).
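The result can be checked numerically. The sketch below is illustrative only: the function name, the grid size `n`, and the parameter values are assumptions, not part of the original text. It evaluates the left-hand side of the integral equation (12.11) for the exponential correlation function (12.13) with the weight (12.17), counting each delta function at an interval end with weight 1/2, as in (12.33). The variance σ2 cancels and is omitted.

```python
import math

def lhs_integral_equation(t, T, alpha, n=20000):
    """Left-hand side of (12.11) for R(tau) = sigma^2 exp(-alpha |tau|)
    with upsilon(t) taken from (12.17); sigma^2 cancels and is omitted."""
    # Continuous part of upsilon, alpha / (2 sigma^2), integrated by the midpoint rule.
    dt = T / n
    cont = sum(math.exp(-alpha * abs(t - (k + 0.5) * dt)) for k in range(n)) * dt
    cont *= alpha / 2.0
    # Delta functions at t = 0 and t = T, each counted with weight 1/2 as in (12.33).
    ends = 0.5 * (math.exp(-alpha * t) + math.exp(-alpha * (T - t)))
    return cont + ends

for t in (0.0, 0.3, 1.0, 2.0):
    print(t, lhs_integral_equation(t, T=2.0, alpha=1.5))
```

For every t in [0, T] the printed value should be close to 1, which is the right-hand side of (12.11).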

Now, consider the exponential function in (12.9). The formula

$$\rho_1^2 = \int_0^T s(t)\upsilon(t)\,dt \tag{12.35}$$

is the deterministic component or, in other words, the signal when the estimated parameter E0 = 1. The random component

$$\int_0^T x_0(t)\upsilon(t)\,dt \tag{12.36}$$

is the noise component. The variance of the noise component taking into consideration (12.8) is defined as

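$$\left\langle\left[\int_0^T x_0(t)\upsilon(t)\,dt\right]^2\right\rangle = \int_0^T\!\!\int_0^T \langle x_0(t_1)x_0(t_2)\rangle\,\upsilon(t_1)\upsilon(t_2)\,dt_1\,dt_2 = \int_0^T s(t)\upsilon(t)\,dt = \rho_1^2. \tag{12.37}$$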

As we can see from (12.37), ρ21 is the ratio between the power of the signal and the power of the noise. Because of this, we can say that (12.37) is the signal-to-noise ratio (SNR) when the estimated parameter value E0 = 1.

12.2 Maximum Likelihood Estimate of Mathematical Expectation

Consider the conditional functional given by (12.9) of the observed stochastic process. Solving the likelihood equation with respect to the parameter E, we obtain the mathematical expectation estimate

$$E_E = \frac{\int_0^T x(t)\upsilon(t)\,dt}{\int_0^T s(t)\upsilon(t)\,dt}. \tag{12.38}$$

As applied to analysis of stationary stochastic process, (12.38) becomes simple, namely,

$$E_E = \frac{\int_0^T x(t)\upsilon(t)\,dt}{\int_0^T \upsilon(t)\,dt}. \tag{12.39}$$

In doing so, under the condition $T\tau_{\text{cor}}^{-1} \to \infty$, we can neglect the values of the stochastic process and its derivatives at t = 0 and t = T when estimating the mathematical expectation; in other words, the following approximation holds:

$$\upsilon(t) = S^{-1}(\omega = 0). \tag{12.40}$$

In this case, we obtain the asymptotical formula for the mathematical expectation estimate of stationary stochastic process, namely,

$$E_E = \lim_{T\to\infty}\frac{1}{T}\int_0^T x(t)\,dt, \tag{12.41}$$

which is widely used in the theory of stochastic processes to define the mathematical expectation of ergodic stochastic processes with arbitrary pdf. At large but finite values of $T\tau_{\text{cor}}^{-1}$, we can neglect the effect of the values of the stochastic process and its derivatives at t = 0 and t = T on the mathematical expectation estimate. As a result, we can write

$$E_E \approx \frac{1}{T}\int_0^T x(t)\,dt. \tag{12.42}$$

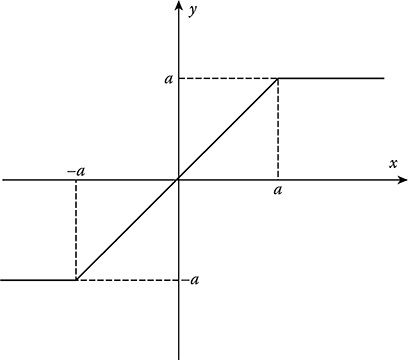

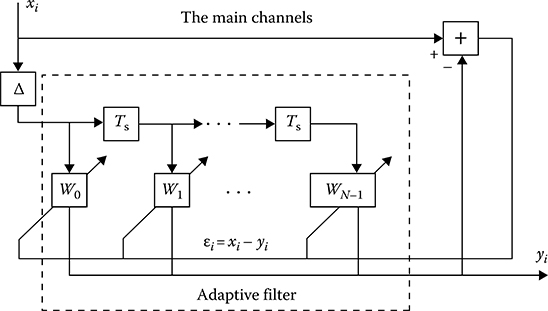

FIGURE 12.1 Optimal structure to define the mathematical expectation estimate.

Although the obtained formulae for the mathematical expectation estimate are optimal in the case of a Gaussian stochastic process, they remain optimal within the class of linear estimates for a stochastic process whose pdf differs from the Gaussian one. Equations 12.38 and 12.39 are true if the a priori interval of changes of the mathematical expectation is not limited. Equation 12.38 allows us to define the optimal device structure to estimate the mathematical expectation of the stochastic process (Figure 12.1). The main function is the linear integration of the received realization x(t) with the weight υ(t) that is defined based on the solution of the integral equation (12.8). The decision device issues the output process at the instant t = T. To obtain the current value of the mathematical expectation estimate, the limits of integration in (12.38) must be t − T and t, respectively. Then the parameter estimation is defined as

$$E_E(t) = \frac{\int_{t-T}^{t} x(\tau)\upsilon(\tau)\,d\tau}{\int_{t-T}^{t} s(\tau)\upsilon(\tau)\,d\tau}. \tag{12.43}$$

The weight integration can be done by the linear filter with corresponding impulse response. For this purpose, we introduce the function

$$\upsilon(\tau) = h(t-\tau) \quad\text{or}\quad h(\tau) = \upsilon(t-\tau) \tag{12.44}$$

and substitute this function into (12.43) instead of υ(t) introducing a new variable t − τ = z. Then (12.43) can be transformed to the following form:

$$E_E(t) = \frac{\int_0^T x(t-z)h(z)\,dz}{\int_0^T s(t-z)h(z)\,dz}. \tag{12.45}$$

The integrals in (12.45) are the output responses of the linear filter with the impulse response h (t) given by (12.44) when the filter input is excited by x (t) and s (t), respectively. The mathematical expectation of estimate

$$\langle E_E\rangle = \frac{1}{\rho_1^2}\int_0^T \langle x(t)\rangle\upsilon(t)\,dt = E_0, \tag{12.46}$$

that is, the estimate of the maximum likelihood of the mathematical expectation of stochastic process is both the conditionally and unconditionally unbiased estimate. The conditional variance of the mathematical expectation estimate can be presented in the following form:

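$$\mathrm{Var}\{E_E|E_0\} = \langle E_E^2\rangle - \langle E_E\rangle^2 = \frac{1}{\rho_1^4}\int_0^T\!\!\int_0^T \langle x_0(t_1)x_0(t_2)\rangle\,\upsilon(t_1)\upsilon(t_2)\,dt_1\,dt_2 = \rho_1^{-2}, \tag{12.47}$$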

that is, the variance of estimate is unconditional. Since, according to (12.38), the integration of Gaussian stochastic process is a linear operation, the estimate EE is subjected to the Gaussian distribution.

Let the analyzed stochastic process be a stationary process and possess the correlation function given by (12.13). Substituting instead of the function υ(t) its value from (12.17) into (12.47) and integrating with delta functions, we obtain

$$\mathrm{Var}\{E_E\} = \frac{2\sigma^2}{2 + (T/\tau_{\text{cor}})} = \frac{2\sigma^2}{2 + \alpha T} = \frac{2\sigma^2}{2 + p}, \tag{12.48}$$

where p is a ratio between the time required to analyze the stochastic process and the correlation interval of the same stochastic process. In doing so, according to (12.38), the formula for the optimal estimate takes the following form:

$$E_E = \frac{x(0) + x(T) + \alpha\int_0^T x(t)\,dt}{2 + p}. \tag{12.49}$$

If p ≫ 1, we have

$$\mathrm{Var}\{E_E\} \approx \frac{2\sigma^2}{p}. \tag{12.50}$$
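As a minimal sketch of how (12.48) and (12.49) might be applied to a sampled realization, the code below assumes uniformly spaced samples x(0), x(Δt), ..., x(T) and replaces the integral by the trapezoidal rule. The function names and the sampling scheme are illustrative assumptions, not part of the original text.

```python
def optimal_mean_estimate(x, dt, alpha):
    """Estimate (12.49): [x(0) + x(T) + alpha * integral of x] / (2 + alpha*T).
    x holds uniformly spaced samples x(0), x(dt), ..., x(T);
    the integral is approximated by the trapezoidal rule."""
    T = dt * (len(x) - 1)
    integral = dt * (sum(x) - 0.5 * (x[0] + x[-1]))
    return (x[0] + x[-1] + alpha * integral) / (2.0 + alpha * T)

def optimal_variance(sigma2, alpha, T):
    """Variance (12.48) of the estimate: 2 sigma^2 / (2 + alpha*T)."""
    return 2.0 * sigma2 / (2.0 + alpha * T)

# A constant realization is reproduced exactly, reflecting unbiasedness.
print(optimal_mean_estimate([3.0] * 101, dt=0.01, alpha=2.0))
print(optimal_variance(sigma2=1.0, alpha=2.0, T=50.0))
```

Note that the end samples x(0) and x(T) carry extra weight compared with a plain time average; this weight becomes negligible as p = αT grows.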

Formulae (12.48) and (12.49) can be obtained without determination of the pdf functional. For this purpose, the value defined by the following equation:

$$E^* = \int_0^T h(t)x(t)\,dt \tag{12.51}$$

can be considered as the estimate. Here h(t) is the weight function defined based on the condition of unbiasedness of the estimate that is equivalent to

$$\int_0^T h(t)\,dt = 1, \tag{12.52}$$

and minimization of the variance of estimate,

$$\mathrm{Var}\{E^*\} = \int_0^T\!\!\int_0^T h(t_1)h(t_2)R(t_1,t_2)\,dt_1\,dt_2. \tag{12.53}$$

Transform the formula for the variance of estimate into a convenient form. For this purpose, introduce new variables in the double integral, namely,

$$\tau = t_2 - t_1 \quad\text{and}\quad t_1 = z, \tag{12.54}$$

and change the order of integration. Taking into consideration that R(τ) = R(−τ), we obtain

$$\mathrm{Var}\{E^*\} = 2\int_0^T R(\tau)\int_0^{T-\tau} h(z)h(z+\tau)\,dz\,d\tau. \tag{12.55}$$

As was shown in Ref. [1], a definition of optimal form of the weight function h(t) is reduced to a solution of the integral Wiener-Hopf equation

$$\int_0^T h(\tau)R(\tau - s)\,d\tau - \mathrm{Var}_{\min}\{E\} = 0, \quad 0 \le s \le T, \tag{12.56}$$

where Varmin{E} is the minimal estimate variance, jointly with the condition given by (12.52). However, the solution of (12.56) is complicated.

Define the formula for an optimal estimate of mathematical expectation of the stationary stochastic process possessing the correlation function given by (12.14) and weight function given by (12.18). Substituting (12.18) into the formula for mathematical expectation estimate of the stochastic process defined as (12.38) and calculating the corresponding integrals, the following is obtained:

$$E_E = \frac{\dfrac{1}{T}\displaystyle\int_0^T x(t)\,dt + \dfrac{2\alpha}{\omega_0^2 T}\left[x(0) + x(T)\right] + \dfrac{1}{\omega_0^2 T}\left[x'(0) - x'(T)\right]}{1 + \dfrac{4\alpha}{\omega_0^2 T}}. \tag{12.57}$$

In doing so, the variance of the mathematical expectation estimate is defined as

$$\mathrm{Var}\{E_E\} = \frac{4\alpha\sigma^2}{\omega_0^2 T + 4\alpha}. \tag{12.58}$$

If α ≪ ω0 and ω0T ≫ 1, the formula for the mathematical expectation estimate of the stationary Gaussian stochastic process transforms to the well-known formula of the mathematical expectation definition of the ergodic stochastic process given by (12.42), and the variance of the mathematical expectation estimate is defined as

$$\mathrm{Var}\{E_E\} \approx \frac{4\alpha\sigma^2}{\omega_0^2 T}. \tag{12.59}$$

At ω1 = 0 (ω0 = α), the correlation function given by (12.14) can be transformed by a limiting process into the following form:

$$R(\tau) = \sigma^2\exp\{-\alpha|\tau|\}(1 + \alpha|\tau|), \qquad \tau_{\text{cor}} = \frac{2}{\alpha}. \tag{12.60}$$

In particular, the given correlation function corresponds to the stationary stochastic process at the output of two RC circuits connected in series when the “white” noise excites the input. In this case, the formulae for the mathematical expectation estimate and variance take the following form:

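$$E_E = \frac{\dfrac{1}{T}\displaystyle\int_0^T x(t)\,dt + \dfrac{2}{\alpha T}\left[x(0) + x(T)\right] + \dfrac{1}{\alpha^2 T}\left[x'(0) - x'(T)\right]}{1 + \dfrac{4}{\alpha T}}, \tag{12.61}$$

$$\mathrm{Var}\{E_E\} = \frac{4\sigma^2}{\alpha T + 4}. \tag{12.62}$$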

Relationships between the definition of the estimate and the estimate variance of the mathematical expectation of the stochastic processes with other types of correlation functions can be defined analogously.

As we assumed before, the a priori domain of definition of the mathematical expectation is not limited. Thus, we consider a domain of possible values of the mathematical expectation as a function of the mathematical expectation estimate. Let the a priori domain of definition of the mathematical expectation be limited both by the upper bound and by the lower bound, that is,

$$E_L \le E \le E_U. \tag{12.63}$$

In the considered case, the mathematical expectation estimate Ê cannot be outside the considered interval given by (12.63), even though it is defined as a position of the absolute maximum of the likelihood functional logarithm (12.9). The likelihood functional logarithm reaches its maximum at E = EE. As a result, when EE ≤ EL the likelihood functional logarithm becomes a monotonically decreasing function within the limits of the interval [EL, EU] and reaches its maximum value at E= EL. If EE ≥ EU, the likelihood functional logarithm becomes a monotonically increasing function within the limits of the interval [EL, EU] and, consequently, reaches its maximum value at E = EU. Thus, in the case of the limited a priori domain of definition of the mathematical expectation, the estimate of mathematical expectation of stochastic process can be presented in the following form:

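$$\hat{E} = \begin{cases} E_U & \text{if } E_E > E_U, \\ E_E & \text{if } E_L \le E_E \le E_U, \\ E_L & \text{if } E_E < E_L. \end{cases} \tag{12.64}$$

Taking into consideration the last relationship, the structure of optimal device for the mathematical expectation estimate determination in the case of the limited a priori domain of mathematical expectation definition can be obtained by the addition of a linear limiter with the following characteristic:

$$g(z) = \begin{cases} E_U & \text{if } z > E_U, \\ z & \text{if } E_L \le z \le E_U, \\ E_L & \text{if } z < E_L \end{cases} \tag{12.65}$$

to the circuit shown in Figure 12.1. Using the well-known relationships [2] for the transformation of the pdf of a Gaussian random variable by a nonlinear inertialess system with the characteristic g(z), we can define the conditional pdf of the mathematical expectation estimate as follows:

$$p(\hat{E}|E_0) = \begin{cases} P_L\delta(\hat{E} - E_L) + P_U\delta(\hat{E} - E_U) + \dfrac{1}{\sqrt{2\pi\mathrm{Var}(E_E|E_0)}}\exp\left\{-\dfrac{(\hat{E} - E_0)^2}{2\mathrm{Var}(E_E|E_0)}\right\} & \text{at } E_L \le \hat{E} \le E_U, \\ 0 & \text{at } \hat{E} < E_L,\ \hat{E} > E_U. \end{cases} \tag{12.66}$$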

Here

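$$P_L = 1 - Q\left(\frac{E_L - E_0}{\sqrt{\mathrm{Var}(E_E|E_0)}}\right), \qquad P_U = Q\left(\frac{E_U - E_0}{\sqrt{\mathrm{Var}(E_E|E_0)}}\right); \tag{12.67}$$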

where

$$Q(z) = \frac{1}{\sqrt{2\pi}}\int_z^\infty \exp\{-0.5y^2\}\,dy \tag{12.68}$$

is the Gaussian Q function [3, 4]; Var(EE|E0) is the variance given by (12.47). The conditional bias is defined as

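$$\begin{aligned} b(\hat{E}|E_0) &= \langle\hat{E}\rangle - E_0 = \int_{-\infty}^{\infty}(\hat{E} - E_0)\,p(\hat{E}|E_0)\,d\hat{E} \\ &= P_L(E_L - E_0) + P_U(E_U - E_0) + \sqrt{\frac{\mathrm{Var}(E_E|E_0)}{2\pi}}\left\{\exp\left[-\frac{(E_L - E_0)^2}{2\mathrm{Var}(E_E|E_0)}\right] - \exp\left[-\frac{(E_U - E_0)^2}{2\mathrm{Var}(E_E|E_0)}\right]\right\}. \end{aligned} \tag{12.69}$$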

Thus, in the case of the limited a priori domain of possible values of the mathematical expectation of stochastic process, the maximum likelihood estimate of the stochastic process mathematical expectation is conditionally biased. However, at small variance values of the maximum likelihood estimate of stochastic process mathematical expectation, that is, Var(EE|E0) → 0, as it follows from (12.67) and (12.69), we obtain the asymptotical expression

$$\lim_{\mathrm{Var}(E_E|E_0)\to 0} b(\hat{E}|E_0) = 0; \tag{12.70}$$

that is, at Var(EE|E0) → 0, the maximum likelihood estimate of mathematical expectation of stochastic process is asymptotically unbiased. At the high variance values of the maximum likelihood estimate of stochastic process mathematical expectation, that is, Var(EE|E0) → ∞, the bias of the maximum likelihood estimate of stochastic process mathematical expectation tends to approach

$$b(\hat{E}|E_0) = 0.5(E_L + E_U - 2E_0). \tag{12.71}$$
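The limiter (12.65) and the conditional bias (12.69) are straightforward to evaluate. The sketch below is illustrative: the function names are hypothetical, and it assumes that P_L and P_U are the probabilities that the unlimited estimate falls below E_L and above E_U, respectively. The Q function (12.68) is expressed through the complementary error function of the Python standard library.

```python
import math

def q_function(z):
    """Gaussian Q function (12.68), via the complementary error function."""
    return 0.5 * math.erfc(z / math.sqrt(2.0))

def limiter(z, e_low, e_up):
    """Characteristic (12.65) of the linear limiter."""
    return min(max(z, e_low), e_up)

def conditional_bias(e0, var, e_low, e_up):
    """Conditional bias (12.69) of the limited estimate."""
    s = math.sqrt(var)
    p_low = 1.0 - q_function((e_low - e0) / s)   # P(E_E < E_L)
    p_up = q_function((e_up - e0) / s)           # P(E_E > E_U)
    gauss = math.sqrt(var / (2.0 * math.pi)) * (
        math.exp(-(e_low - e0) ** 2 / (2.0 * var))
        - math.exp(-(e_up - e0) ** 2 / (2.0 * var)))
    return p_low * (e_low - e0) + p_up * (e_up - e0) + gauss

# Bias for E0 = 0.5 on the interval [0, 2] at small, moderate, and large variance.
for var in (1e-6, 1.0, 1e12):
    print(var, conditional_bias(0.5, var, 0.0, 2.0))
```

At small variance the bias is close to zero, as in (12.70); at very large variance it approaches 0.5(E_L + E_U − 2E_0), as in (12.71).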

The conditional dispersion of the maximum likelihood estimate of stochastic process mathematical expectation is defined as

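$$\begin{aligned} D(\hat{E}|E_0) &= \int_{-\infty}^{\infty}(\hat{E} - E_0)^2\,p(\hat{E}|E_0)\,d\hat{E} \\ &= P_L(E_L - E_0)^2 + P_U(E_U - E_0)^2 + \mathrm{Var}(E_E|E_0)(1 - P_U - P_L) \\ &\quad + \sqrt{\frac{\mathrm{Var}(E_E|E_0)}{2\pi}}\left\{(E_L - E_0)\exp\left[-\frac{(E_L - E_0)^2}{2\mathrm{Var}(E_E|E_0)}\right] - (E_U - E_0)\exp\left[-\frac{(E_U - E_0)^2}{2\mathrm{Var}(E_E|E_0)}\right]\right\}. \end{aligned} \tag{12.72}$$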

At small variance values of the maximum likelihood estimate of stochastic process mathematical expectation

$$\frac{\mathrm{Var}(E_E|E_0)}{E_U - E_L} \ll 1 \quad\text{and}\quad E_L < E < E_U \tag{12.73}$$

if the limiting process is carried out at EL → − ∞ and EU → ∞, the conditional dispersion of the maximum likelihood estimate of stochastic process mathematical expectation coincides with the variance of estimate given by (12.47). If the true value of the mathematical expectation coincides with one of two bounds of the a priori domain of possible values of the mathematical expectation, then the following approximation is true:

$$D(\hat{E}|E_0) \approx 0.5\,\mathrm{Var}(E_E|E_0); \tag{12.74}$$

that is, the dispersion of the estimate is half that obtained in the unlimited a priori domain case. With increasing variance of the maximum likelihood estimate of stochastic process mathematical expectation, Var(EE|E0) → ∞, the conditional dispersion of the maximum likelihood estimate of the stochastic process mathematical expectation tends to approach the finite value, since PL = PU = 0.5,

$$D(\hat{E}|E_0) \to 0.5\left[(E_L - E_0)^2 + (E_U - E_0)^2\right], \tag{12.75}$$

whereas the dispersion of the maximum likelihood estimate of stochastic process mathematical expectation within the unlimited a priori domain of possible values of the maximum likelihood estimate of stochastic process mathematical expectation is increased without limit as Var(EE|E0) → ∞. It is important to note that although the bias and dispersion of the maximum likelihood estimate of stochastic process mathematical expectation are defined as the conditional values, they are nevertheless independent of the true value of the mathematical expectation E0 and are the unconditional estimates simultaneously.

Determine the unconditional bias and dispersion of maximum likelihood estimate of stochastic process mathematical expectation in the case of the limited a priori domain of possible estimate values. For this purpose, it is necessary to average the conditional characteristics given by (12.69) and (12.72) with respect to possible values of estimated parameter, assuming that the a priori pdf of estimated parameter is uniform within the limits of the interval [EL, EU]. In this case, we observe that the unconditional estimate is unbiased, and the unconditional dispersion is determined in the following form:

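$$\begin{aligned} D(\hat{E}) ={}& \mathrm{Var}(E_E|E_0)\left\{1 - 2Q\left[\frac{E_U - E_L}{\sqrt{\mathrm{Var}(E_E|E_0)}}\right]\right\} + \frac{2}{3}(E_U - E_L)^2\,Q\left[\frac{E_U - E_L}{\sqrt{\mathrm{Var}(E_E|E_0)}}\right] \\ &- \frac{8\,\mathrm{Var}(E_E|E_0)\sqrt{\mathrm{Var}(E_E|E_0)}}{3\sqrt{2\pi}\,(E_U - E_L)}\left\{1 - \exp\left\{-\frac{(E_U - E_L)^2}{2\mathrm{Var}(E_E|E_0)}\right\}\right\} \\ &- \frac{2\sqrt{\mathrm{Var}(E_E|E_0)}\,(E_U - E_L)}{3\sqrt{2\pi}}\exp\left\{-\frac{(E_U - E_L)^2}{2\mathrm{Var}(E_E|E_0)}\right\}. \end{aligned} \tag{12.76}$$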

At the same time, it is not difficult to see that at small values of the variance, that is, Var(EE|E0) → 0, the unconditional dispersion transforms into a dispersion of the estimate obtained under the unlimited a priori domain of possible values, D (Ê) → Var(EE|E0). Otherwise, at high values of variance, that is, Var(EE|E0) → ∞, the dispersion of the estimate given by (12.47) increases without limit and the unconditional dispersion given by (12.76) has a limit equal to the average square of the a priori domain of possible values of the estimate, that is, (EU − EL)2/3.
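The two limiting values quoted above can be reproduced without a closed-form expression by averaging the conditional dispersion numerically over the uniform a priori pdf. The sketch below is illustrative: the function names and the midpoint-rule grid are assumptions, and the conditional dispersion is written with P_U multiplying (E_U − E_0)² and with the variance Var(E_E|E_0) multiplying (1 − P_U − P_L).

```python
import math

def q_function(z):
    """Gaussian Q function (12.68)."""
    return 0.5 * math.erfc(z / math.sqrt(2.0))

def conditional_dispersion(e0, var, e_low, e_up):
    """Conditional dispersion of the limited estimate for a true value e0."""
    s = math.sqrt(var)
    a, b = e_low - e0, e_up - e0
    p_low = 1.0 - q_function(a / s)
    p_up = q_function(b / s)
    gauss = math.sqrt(var / (2.0 * math.pi)) * (
        a * math.exp(-a * a / (2.0 * var)) - b * math.exp(-b * b / (2.0 * var)))
    return p_low * a * a + p_up * b * b + var * (1.0 - p_low - p_up) + gauss

def unconditional_dispersion(var, e_low, e_up, n=2000):
    """Average of the conditional dispersion over a uniform prior (midpoint rule)."""
    step = (e_up - e_low) / n
    total = sum(conditional_dispersion(e_low + (k + 0.5) * step, var, e_low, e_up)
                for k in range(n))
    return total / n

for var in (1e-4, 1.0, 1e8):
    print(var, unconditional_dispersion(var, 0.0, 1.0))
```

For the interval [0, 1], the small-variance result should be close to the variance itself, and the large-variance result should be close to 1/3.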

12.3 Bayesian Estimate of Mathematical Expectation: Quadratic Loss Function

As before, we analyze the realization x(t) of stochastic process given by (12.2). The a posteriori pdf of estimated stochastic process parameter E can be presented in the following form:

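$$p_{\text{post}}(E) = \frac{p_{\text{prior}}(E)\exp\left\{E\int_0^T x(t)\upsilon(t)\,dt - \frac{E^2}{2}\int_0^T s(t)\upsilon(t)\,dt\right\}}{\int_{-\infty}^{\infty} p_{\text{prior}}(E)\exp\left\{E\int_0^T x(t)\upsilon(t)\,dt - \frac{E^2}{2}\int_0^T s(t)\upsilon(t)\,dt\right\}dE}, \tag{12.77}$$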

where

pprior(E) is the a priori pdf of estimated stochastic process parameter

υ(t) is the solution of the integral equation given by (12.8)

In accordance with the definition given in Section 11.4, the Bayesian estimate γE is the estimate minimizing the unconditional average risk given by (11.29) at the given loss function. As applied to the quadratic loss function defined as

$$\mathcal{L}(\gamma, E) = (\gamma - E)^2, \tag{12.78}$$

the average risk coincides with the dispersion of estimate. In doing so, the Bayesian estimate γE is obtained based on minimization of the a posteriori risk at each fixed realization of observed data

$$\gamma_E = \int_{-\infty}^{\infty} E\,p_{\text{post}}(E)\,dE. \tag{12.79}$$

To define the estimate characteristics, that is, the bias and dispersion, it is necessary to determine two first moments of the random variable γE. However, in the case of the arbitrary a priori pdf of estimated stochastic process parameter E, it is impossible to determine these moments in a general form. In accordance with this statement, we consider the discussed problem for the case of a priori Gaussian pdf of estimated parameter; that is, we assume [5]

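$$p_{\text{prior}}(E) = \frac{1}{\sqrt{2\pi\mathrm{Var}_{\text{prior}}(E)}}\exp\left\{-\frac{(E - E_{\text{prior}})^2}{2\mathrm{Var}_{\text{prior}}(E)}\right\}, \tag{12.80}$$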

where Eprior and Varprior(E) are the a priori values of the mathematical expectation and variance of the mathematical expectation estimate. Substituting (12.80) into the formula defining the Bayesian estimate and carrying out the integration, we obtain

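$$\gamma_E = \frac{\mathrm{Var}_{\text{prior}}(E)\int_0^T x(t)\upsilon(t)\,dt + E_{\text{prior}}}{\mathrm{Var}_{\text{prior}}(E)\int_0^T s(t)\upsilon(t)\,dt + 1}. \tag{12.81a}$$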

It is not difficult to note that if Varprior(E) → ∞, the a priori pdf of estimate is approximated by the uniform pdf of the estimate and the estimate becomes the maximum likelihood estimate (12.38). In the opposite case, that is, Varprior(E) → 0, the a priori pdf of estimate degenerates into the Dirac delta function δ(E − Eprior) and, naturally, the estimate γE will match with Eprior. The mathematical expectation of estimate can be presented in the following form:

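$$\langle\gamma_E\rangle = \frac{\mathrm{Var}_{\text{prior}}(E)\rho_1^2 E_0 + E_{\text{prior}}}{\mathrm{Var}_{\text{prior}}(E)\rho_1^2 + 1}, \tag{12.81b}$$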

where ρ21 is given by (12.35). In doing so, the conditional bias of the considered estimate is defined as

$$b(\gamma_E|E_0) = \langle\gamma_E\rangle - E_0 = \frac{E_{\text{prior}} - E_0}{\mathrm{Var}_{\text{prior}}(E)\rho_1^2 + 1}. \tag{12.82}$$

Averaging the conditional bias by all possible a priori values E0, we obtain that in the case of the quadratic loss function the Bayesian estimate for the Gaussian a priori pdf is the unconditionally unbiased estimate.

The conditional dispersion of the obtained estimate can be presented in the following form:

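$$D(\gamma_E|E_0) = \langle(\gamma_E - E_0)^2\rangle = \frac{(E_{\text{prior}} - E_0)^2 + \mathrm{Var}_{\text{prior}}^2(E)\rho_1^2}{\left\{\mathrm{Var}_{\text{prior}}(E)\rho_1^2 + 1\right\}^2}. \tag{12.83}$$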

We see that the unconditional dispersion coincides with the unconditional variance and is defined as

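$$\mathrm{Var}(\gamma_E) = D(\gamma_E) = \frac{\mathrm{Var}_{\text{prior}}(E)}{\mathrm{Var}_{\text{prior}}(E)\rho_1^2 + 1}. \tag{12.84}$$

If $\mathrm{Var}_{\text{prior}}(E)\rho_1^2 \gg 1$, then the variance of the considered Bayesian estimate coincides with the variance of the maximum likelihood estimate given by (12.47). In the opposite case, if $\mathrm{Var}_{\text{prior}}(E)\rho_1^2 \ll 1$, the variance of estimate tends to approach

$$\mathrm{Var}(\gamma_E) \approx \mathrm{Var}_{\text{prior}}(E)\left\{1 - \mathrm{Var}_{\text{prior}}(E)\rho_1^2\right\}. \tag{12.85}$$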
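The Gaussian-prior formulas reduce to a few arithmetic operations once the two integrals are known. In the sketch below, which is illustrative only, `z` stands for the integral of x(t)υ(t) over [0, T] and `rho1_sq` for the integral of s(t)υ(t); both names, like the function names, are assumptions introduced here.

```python
def bayes_estimate(z, rho1_sq, e_prior, var_prior):
    """Bayesian estimate (12.81a); z is the integral of x(t)*upsilon(t) over [0, T]."""
    return (var_prior * z + e_prior) / (var_prior * rho1_sq + 1.0)

def bayes_bias(e0, rho1_sq, e_prior, var_prior):
    """Conditional bias (12.82)."""
    return (e_prior - e0) / (var_prior * rho1_sq + 1.0)

def bayes_variance(rho1_sq, var_prior):
    """Unconditional variance (12.84)."""
    return var_prior / (var_prior * rho1_sq + 1.0)

# A very broad prior reproduces the maximum likelihood estimate z / rho1_sq,
# whereas a degenerate prior returns the a priori value.
print(bayes_estimate(z=5.0, rho1_sq=10.0, e_prior=2.0, var_prior=1e9))
print(bayes_estimate(z=5.0, rho1_sq=10.0, e_prior=2.0, var_prior=0.0))
print(bayes_variance(rho1_sq=10.0, var_prior=1e9))
```

The three printed values illustrate the limiting cases discussed in the text: the estimate tends to z/ρ1² = 0.5, then to E_prior = 2, and the variance tends to 1/ρ1² = 0.1.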

As applied to arbitrary pdfs of estimate, we can obtain the approximated formulae for the bias and dispersion of estimate. For this purpose, we can transform (12.77) by substituting the realization x (t) given by (12.2). Then, we can write

$$E\int_0^T x(t)\upsilon(t)\,dt - \frac{E^2}{2}\int_0^T s(t)\upsilon(t)\,dt = \rho^2 S(E) + \rho N(E), \tag{12.86}$$

where

$$\rho^2 = E_0^2\rho_1^2; \tag{12.87}$$

$$S(E) = \frac{E(2E_0 - E)}{2E_0^2}; \tag{12.88}$$

$$N(E) = \frac{E}{E_0\rho_1}\int_0^T x_0(t)\upsilon(t)\,dt. \tag{12.89}$$

The introduced functions S(E) and N(E) can be called the normalized signal and noise components, respectively. They are normalized in such a way that the function S(E) reaches its maximum, equal to 0.5, at E = E0:

$$S(E)_{\max} = S(E = E_0) = 0.5. \tag{12.90}$$

The noise component N (E) has zero mathematical expectation, and its correlation function is defined as

$$\langle N(E_1)N(E_2)\rangle = \frac{E_1 E_2}{E_0^2}. \tag{12.91}$$

At E = E0, the variance of noise component can be presented in the following form:

$$\langle N^2(E_0)\rangle = 1. \tag{12.92}$$

As a result, the Bayesian estimate of the mathematical expectation of stochastic process can be written in the following form:

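$$\gamma_E = \frac{\int_{-\infty}^{\infty} E\,p_{\text{prior}}(E)\exp\{\rho^2 S(E) + \rho N(E)\}\,dE}{\int_{-\infty}^{\infty} p_{\text{prior}}(E)\exp\{\rho^2 S(E) + \rho N(E)\}\,dE}. \tag{12.93}$$

Consider two limiting cases: the weak and powerful signals or, in other words, the low and high SNR ρ2.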

12.3.1 LOW SIGNAL-TO-NOISE RATIO (ρ2 ≪ 1)

As we can see from (12.93), at low values of the SNR (ρ → 0), the exponential function tends to unity and, as a result, the Bayesian estimate γE coincides with the a priori mathematical expectation

$$\gamma_E(\rho \to 0) = \gamma_0 = \int_{-\infty}^{\infty} E\,p_{\text{prior}}(E)\,dE = E_{\text{prior}}. \tag{12.94}$$

At finite values of the SNR, the difference γE − γ0 is not equal to zero. Closeness γE to γ0 at ρ ≪ 1 allows us to find the estimate characteristics if we are able to define a deviation of γE from γ0 in the form of corresponding approximations and, consequently, the deviation of γE from the true value of the estimated parameter E0, since in general Eprior ≠ E0. At ρ ≪ 1, the estimate γE can be defined in the following approximated form [6]:

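$$\gamma_E = \gamma_0 + \rho\gamma_1 + \rho^2\gamma_2 + \rho^3\gamma_3 + \cdots. \tag{12.95}$$

Considering the exponential function exp{ρ2S(E) + ρN(E)} in (12.93) as a function of ρ, we can expand it in a Maclaurin series in ρ. Then, neglecting the terms of order ρ4 and higher, we can write

$$\begin{aligned} &\int_{-\infty}^{\infty}(\gamma_E - E)\,p_{\text{prior}}(E)\exp\{\rho^2 S(E) + \rho N(E)\}\,dE \\ &\quad = \int_{-\infty}^{\infty}(\gamma_0 - E + \rho\gamma_1 + \rho^2\gamma_2 + \rho^3\gamma_3 + \cdots)\,p_{\text{prior}}(E) \\ &\qquad \times\left\{1 + \rho N(E) + \tfrac{1}{2}\rho^2\left[N^2(E) + 2S(E)\right] + \tfrac{1}{6}\rho^3\left[N^3(E) + 6N(E)S(E)\right] + \cdots\right\}dE = 0. \end{aligned} \tag{12.96}$$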

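Equating to zero the coefficients of terms of the same order in ρ, we obtain the formulae for the corresponding approximations:

$$\gamma_0 = \int_{-\infty}^{\infty} E\,p_{\text{prior}}(E)\,dE = E_{\text{prior}}; \tag{12.97}$$

$$\gamma_1 = \int_{-\infty}^{\infty}(E - E_{\text{prior}})\,p_{\text{prior}}(E)N(E)\,dE; \tag{12.98}$$

$$\gamma_2 = \int_{-\infty}^{\infty}(E - E_{\text{prior}})\,p_{\text{prior}}(E)\left[0.5N^2(E) + S(E)\right]dE - \gamma_1\int_{-\infty}^{\infty} p_{\text{prior}}(E)N(E)\,dE; \tag{12.99}$$

$$\begin{aligned}\gamma_3 ={}& \frac{1}{6}\int_{-\infty}^{\infty}(E - E_{\text{prior}})\,p_{\text{prior}}(E)\left[N^3(E) + 6N(E)S(E)\right]dE - \gamma_2\int_{-\infty}^{\infty} p_{\text{prior}}(E)N(E)\,dE \\ &- \gamma_1\int_{-\infty}^{\infty} p_{\text{prior}}(E)\left[0.5N^2(E) + S(E)\right]dE. \end{aligned} \tag{12.100}$$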

To define the approximate values of bias and dispersion of the estimate, it is necessary to determine the corresponding moments of approximations γ1, γ2, and γ3. Taking into consideration that all odd moments of the stochastic process x0(t) are equal to zero, we obtain

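$$\langle\gamma_1\rangle = \langle\gamma_3\rangle = 0, \tag{12.101}$$

$$\langle\gamma_2\rangle = \frac{\mathrm{Var}_{\text{prior}}(E_0 - E_{\text{prior}})}{E_0^2}, \tag{12.102}$$

$$\langle\gamma_1^2\rangle = \frac{\mathrm{Var}_{\text{prior}}^2}{E_0^2}, \tag{12.103}$$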

where

$$\mathrm{Var}_{\text{prior}} = \int_{-\infty}^{\infty} E^2 p_{\text{prior}}(E)\,dE - E_{\text{prior}}^2 \tag{12.104}$$

is the variance of a priori distribution.

Based on (12.101) through (12.104), we obtain the conditional estimate bias in the following form:

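$$b(\gamma_E|E_0) = E_{\text{prior}} + \rho^2\,\mathrm{Var}_{\text{prior}}\frac{E_0 - E_{\text{prior}}}{E_0^2} - E_0 = (E_{\text{prior}} - E_0)(1 - \rho_1^2\,\mathrm{Var}_{\text{prior}}). \tag{12.105}$$

The formula for the conditional bias coincides with the approximation given by (12.82) at low values of the SNR $\rho_1^2$, that is, at $\mathrm{Var}_{\text{prior}}\rho_1^2 \ll 1$. We can see that the unconditional estimate of mathematical expectation that is averaged with respect to all possible values E0 is unbiased. The conditional dispersion of estimate with accuracy of the order ρ4 and higher is defined in the following form:

$$D(\gamma_E|E_0) = \langle(\gamma_E - E_0)^2\rangle \approx (E_{\text{prior}} - E_0)^2 + \rho^2\left[\langle\gamma_1^2\rangle + 2(E_{\text{prior}} - E_0)\langle\gamma_2\rangle\right]. \tag{12.106}$$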

Substituting the determined moments, we obtain

$$D(\gamma_E|E_0) \approx (E_{\text{prior}} - E_0)^2(1 - 2\mathrm{Var}_{\text{prior}}\rho_1^2) + \rho_1^2\,\mathrm{Var}_{\text{prior}}^2. \tag{12.107}$$
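For the Gaussian a priori pdf, the low-SNR approximation can be compared directly with the exact conditional dispersion. The sketch below is illustrative; the function names are hypothetical, and the exact expression is taken with the denominator (Var_prior ρ1² + 1)², which is the form that averages over E0 to the unconditional variance (12.84).

```python
def dispersion_exact(e0, rho1_sq, e_prior, var_prior):
    """Exact conditional dispersion for the Gaussian prior:
    [(E_prior - E0)^2 + Var_prior^2 rho1^2] / (Var_prior rho1^2 + 1)^2."""
    k = var_prior * rho1_sq
    return ((e_prior - e0) ** 2 + var_prior * k) / (k + 1.0) ** 2

def dispersion_low_snr(e0, rho1_sq, e_prior, var_prior):
    """Low-SNR approximation (12.107)."""
    k = var_prior * rho1_sq
    return (e_prior - e0) ** 2 * (1.0 - 2.0 * k) + rho1_sq * var_prior ** 2

# The two expressions agree to first order in Var_prior * rho1^2.
for rho1_sq in (1e-3, 1e-2, 1e-1):
    print(rho1_sq,
          dispersion_exact(0.0, rho1_sq, 1.0, 1.0),
          dispersion_low_snr(0.0, rho1_sq, 1.0, 1.0))
```

The discrepancy grows roughly as the square of Var_prior ρ1², which is consistent with neglecting terms of order ρ4 and higher.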

Averaging (12.107) over all possible values of the estimated parameter E0, with the a priori pdf pprior(E0) matched with the pdf pprior(E), we obtain the unconditional dispersion of the mathematical expectation estimate, which coincides with the approximation in (12.85).

12.3.2 HIGH SIGNAL-TO-NOISE RATIO (ρ2 ≫ 1)

Bayesian estimate of the stochastic process mathematical expectation given by (12.93) can be written in the following form:

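$$\gamma_E = \frac{\int_{-\infty}^{\infty} E\,p_{\text{prior}}(E)\exp\{-\rho^2 Z(E)\}\,dE}{\int_{-\infty}^{\infty} p_{\text{prior}}(E)\exp\{-\rho^2 Z(E)\}\,dE}, \tag{12.108}$$

where

$$Z(E) = \left[S(E_E) + \rho^{-1}N(E_E)\right] - \left[S(E) + \rho^{-1}N(E)\right]; \tag{12.109}$$

EE is the maximum likelihood estimate given by (12.38). We can see that at the maximum likelihood point E = EE, the function Z(E) reaches its minimum and is equal to zero, that is, Z(EE) = 0.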

At high values of the SNR ρ2, we can use the asymptotic Laplace formula [7] to determine the integrals in (12.108)

$$\int_a^b \varphi(x)\exp\{\lambda h(x)\}\,dx \approx \sqrt{\frac{2\pi}{\lambda|h''(x_0)|}}\exp\{\lambda h(x_0)\}\,\varphi(x_0), \quad \lambda \to \infty, \tag{12.110}$$

where a < x0 < b and the function h(x) has a maximum at x = x0. Substituting (12.110) into the initial equation for the Bayesian estimate (12.108), we obtain γE ≈ EE. Thus, at high values of the SNR, the Bayesian estimate of the stochastic process mathematical expectation coincides with the maximum likelihood estimate of the same parameter.
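Both limiting cases can be illustrated numerically for a non-Gaussian prior. The sketch below assumes, purely for illustration, a uniform a priori pdf on [E_L, E_U] and evaluates the posterior mean (12.79) on a grid; `z` denotes the integral of x(t)υ(t) and `rho1_sq` the integral of s(t)υ(t), so that the maximum likelihood estimate is z / rho1_sq. All names are hypothetical.

```python
import math

def posterior_mean_uniform_prior(z, rho1_sq, e_low, e_up, n=4000):
    """Posterior mean (12.79) for a uniform prior on [e_low, e_up].
    z is the integral of x(t)*upsilon(t); rho1_sq is the integral of s(t)*upsilon(t)."""
    step = (e_up - e_low) / n
    grid = [e_low + (k + 0.5) * step for k in range(n)]
    log_w = [e * z - 0.5 * e * e * rho1_sq for e in grid]   # exponent of (12.77)
    shift = max(log_w)                                      # avoids overflow in exp
    w = [math.exp(v - shift) for v in log_w]
    return sum(e * wi for e, wi in zip(grid, w)) / sum(w)

# Low SNR: the estimate stays near the prior mean (1.0 for the interval [0, 2]).
print(posterior_mean_uniform_prior(z=0.3e-6, rho1_sq=1e-6, e_low=0.0, e_up=2.0))
# High SNR: the estimate approaches the maximum likelihood value z / rho1_sq = 0.7.
print(posterior_mean_uniform_prior(z=0.7e4, rho1_sq=1e4, e_low=0.0, e_up=2.0))
```

The first call corresponds to the weak-signal case, where the data barely move the estimate away from the prior mean; the second corresponds to the strong-signal case, where the prior has little effect as long as the maximum likelihood estimate lies inside the a priori interval.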

12.4 Applied Approaches to Estimate the Mathematical Expectation

Optimal methods to estimate the stochastic process mathematical expectation envisage the need for having accurate and complete knowledge of other statistical characteristics of the considered stochastic process. Therefore, as a rule, various nonoptimal procedures based on (12.51) are used in practice. In doing so, the weight function is selected in such a way that the variance of estimate tends to approach asymptotically the variance of the optimal estimate.

Thus, let the estimate be defined in the following form:

$$E^* = \int_0^T h(t)x(t)\,dt. \tag{12.111}$$

The function of the following form

$$h(t) = \begin{cases} T^{-1} & \text{if } 0 \le t \le T, \\ 0 & \text{if } t < 0,\ t > T, \end{cases} \tag{12.112}$$

is widely used as the weight function h(t). In doing so, the mathematical expectation estimate of stochastic process is defined as

$$E^* = \frac{1}{T}\int_0^T x(t)\,dt. \tag{12.113}$$

The procedure of the estimate definition given by (12.113) coincides with the approximation in (12.42), which was derived from the optimal rule of estimation for an observation interval that is large in comparison with the correlation interval of the considered stochastic process. A device operating according to the rule given by (12.113) is called the ideal integrator.

The variance of mathematical expectation estimate is defined as

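$$\mathrm{Var}(E^*) = \frac{1}{T^2}\int_0^T\!\!\int_0^T R(t_2 - t_1)\,dt_1\,dt_2. \tag{12.114}$$

We can transform the double integral introducing the new variables, namely, τ = t2 − t1 and t2 = t. Then,

$$\mathrm{Var}(E^*) = \frac{1}{T^2}\int_0^T\left\{\int_{-t}^{0} R(\tau)\,d\tau + \int_0^{T-t} R(\tau)\,d\tau\right\}dt. \tag{12.115}$$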

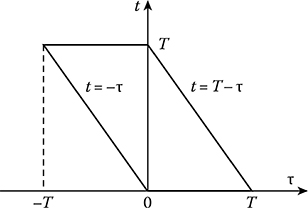

FIGURE 12.2 Integration domains.

The integration domain is shown in Figure 12.2. Changing the integration order, we obtain

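$$\mathrm{Var}(E^*) = \frac{2}{T}\int_0^T\left(1 - \frac{\tau}{T}\right)R(\tau)\,d\tau. \tag{12.116}$$

If the interval of observation [0, T] is much larger than the correlation interval τcor, we can replace the upper integration limit in (12.116) by infinity and neglect the integrand term τ/T in comparison with unity. Then

$$\mathrm{Var}(E^*) = \frac{2\sigma^2}{T}\int_0^\infty \mathcal{R}(\tau)\,d\tau, \tag{12.117}$$

where σ2 is the variance of the stochastic process and $\mathcal{R}(\tau) = R(\tau)/\sigma^2$ is the normalized correlation function. If the normalized correlation function $\mathcal{R}(\tau)$ is not a sign-changing function of the argument τ, the formula (12.117) takes a simple and obvious form:

$$\mathrm{Var}(E^*) = \frac{2\sigma^2}{T}\tau_{\text{cor}}. \tag{12.118}$$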
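The quality of this asymptotic approximation is easy to examine for the exponential correlation function R(τ) = σ² exp{−α|τ|}, for which the correlation interval is 1/α. The sketch below is illustrative only: the function names and the quadrature grid are assumptions. It integrates (12.116) numerically and compares the result with the asymptotic value 2σ²τcor/T and with the variance (12.48) of the optimal estimate.

```python
import math

def integrator_variance(sigma2, alpha, T, n=20000):
    """Variance (12.116) of the time-average estimate for
    R(tau) = sigma2 * exp(-alpha * |tau|), by the midpoint rule."""
    d = T / n
    acc = 0.0
    for k in range(n):
        tau = (k + 0.5) * d
        acc += (1.0 - tau / T) * sigma2 * math.exp(-alpha * tau)
    return 2.0 / T * acc * d

def integrator_variance_asymptotic(sigma2, alpha, T):
    """Asymptotic value 2 * sigma2 * tau_cor / T with tau_cor = 1 / alpha."""
    return 2.0 * sigma2 / (alpha * T)

def optimal_variance(sigma2, alpha, T):
    """Variance (12.48) of the optimal estimate."""
    return 2.0 * sigma2 / (2.0 + alpha * T)

for T in (1.0, 10.0, 100.0):
    print(T,
          optimal_variance(1.0, 1.0, T),
          integrator_variance(1.0, 1.0, T),
          integrator_variance_asymptotic(1.0, 1.0, T))
```

For each T the optimal variance should not exceed that of the ideal integrator, and the three values should converge as T/τcor grows.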

Consequently, if the ideal integrator integration time is sufficiently large in comparison with the correlation interval of stochastic process, then to determine the variance of mathematical expectation estimate of stochastic process there is a need to know only the values of variance and the ratio between the observation interval and correlation interval.

In the case of the sign-changing normalized correlation function with the argument τ, we can write

$$\int_0^\infty |\mathcal{R}(\tau)|\,d\tau > \int_0^\infty \mathcal{R}(\tau)\,d\tau. \tag{12.119}$$

Thus, at T ≫ τcor the formula for the variance of the mathematical expectation estimate in the case of arbitrary correlation function of stationary stochastic process can be written in the following form:

$$\mathrm{Var}(E^*) \le \frac{2\sigma^2}{T}\tau_{\text{cor}}. \tag{12.120}$$

If T ≫ τcor, the formula for the variance of the mathematical expectation estimate can be presented in the form using the spectral density S(ω) of the stochastic process that is related to the correlation function by the Fourier transform given by (12.25) and (12.27). In doing so, the formula for variance of the mathematical expectation estimate given by (12.117) takes the following form:

$$\mathrm{Var}(E^*) \approx \frac{1}{T}\int_{-\infty}^{\infty} R(\tau)\,d\tau = \frac{1}{T}\int_{-\infty}^{\infty} S(\omega)\left\{\frac{1}{2\pi}\int_{-\infty}^{\infty}\exp\{j\omega\tau\}\,d\tau\right\}d\omega. \tag{12.121}$$

Taking into consideration that

δ(ω)=12π∞∫−∞exp{jωτ}dτ,(12.122)

we obtain

Var(E*)≈1TS(ω)|ω=0.(12.123)
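As a rough illustration of (12.123), assume an exponential correlation function R(τ) = σ² exp{−α|τ|}, for which the integral of R(τ) over all τ gives S(0) = 2σ²/α. The sketch compares S(0)/T with the exact result of integrating (12.116) for this R(τ); the function names and the chosen values of p = αT are assumptions made for the example.

```python
import math

def var_exact(p, sigma2=1.0):
    """Exact (12.116) for R(tau) = sigma^2 exp(-alpha|tau|), written in terms of p = alpha*T."""
    return sigma2 * 2.0 / p**2 * (p - 1.0 + math.exp(-p))

def var_spectral(p, sigma2=1.0):
    """Large-T approximation (12.123): S(0)/T with S(0) = 2 sigma^2 / alpha, i.e. 2 sigma^2 / p."""
    return 2.0 * sigma2 / p

for p in (10.0, 100.0, 1000.0):
    ratio = var_exact(p) / var_spectral(p)
    print(p, ratio)  # the ratio approaches 1 as p grows
```

For this correlation function the ratio equals (p − 1 + exp{−p})/p, so the relative error of the spectral approximation is about 1/p.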

Thus, the variance of the mathematical expectation estimate of stochastic process is proportional to the spectral density of the fluctuation component of the considered stochastic process at ω = 0 when the ideal integrator is used as a smoothing circuit. In other words, in the considered case, the variance of the mathematical expectation estimate of stochastic process is defined by the spectral components near zero frequency. To obtain the current value of the mathematical expectation estimate and to investigate the realization of stochastic process within the limits of a large observation interval, we use the following estimate:

$$E^*(t)=\int_0^T h(\tau)x(\tau)\,d\tau. \tag{12.124}$$

Evidently, this estimate has the same statistical characteristics as the estimate defined by (12.111).

In practice, the linear low-pass filters with constant parameters defined by the impulse response

$$h(t)=\begin{cases}h(t), & t\ge 0,\\ 0, & t<0,\end{cases} \tag{12.125}$$

are used as averaging devices. In this case, the formula describing the process at the low-pass filter output, taking into consideration the unbiasedness of estimate, takes the following form:

$$E^*(t)=c\int_0^T h(\tau)x(T-\tau)\,d\tau, \tag{12.126}$$

where the constant factor c can be determined from the following condition:

$$c=\frac{1}{\int_0^T h(\tau)\,d\tau}. \tag{12.127}$$

If a difference between the measurement instant and instant of appearance of stochastic process at the low-pass filter input is much more than the correlation interval τcor of the considered stochastic process and the low-pass filter time constant, then we can write

$$E^*(t)=\frac{\int_0^\infty h(t-\tau)x(\tau)\,d\tau}{\int_0^\infty h(\tau)\,d\tau}. \tag{12.128}$$

The variance of the mathematical expectation estimate of stochastic process is defined as

$$\operatorname{Var}(E^*)=c^2\int_0^T\!\!\int_0^T R(\tau_1-\tau_2)h(\tau_1)h(\tau_2)\,d\tau_1\,d\tau_2. \tag{12.129}$$

Introducing new variables τ1 − τ2 = τ and τ2 = t and changing the order of integration, the formula for the variance of the mathematical expectation estimate can be presented in the following form:

$$\operatorname{Var}(E^*)=2c^2\int_0^T R(\tau)\,r_h(\tau)\,d\tau, \tag{12.130}$$

where, if T ≫ τcor, we can replace the upper integration limit with infinity, and the introduced function

$$r_h(\tau)=\int_0^{T-\tau}h(t)h(t+\tau)\,dt,\quad \tau>0, \tag{12.131}$$

corresponds to the correlation function of stochastic process forming at the output of filter with the impulse response h(t) when the “white” noise with the correlation function R(τ) = δ(τ) [8] excites the filter input.

When the process at the low-pass filter output is stationary, that is, when the duration of the exciting input stochastic process is much longer than the low-pass filter time constant, the formula for the variance of the mathematical expectation estimate of stochastic process can be written in the following form:

$$\operatorname{Var}(E^*)=\frac{1}{2\pi}\int_{-\infty}^{\infty}S(\omega)\,|S(j\omega)|^2\,d\omega, \tag{12.132}$$

using the spectral density

$$S(j\omega)=\int_0^\infty h(t)\exp\{-j\omega t\}\,dt, \tag{12.133}$$

that is, the Fourier transform of the impulse response or frequency characteristic of the low-pass filter.

Consider an example where we compute the normalized variance of the mathematical expectation estimate Var(E*)/σ² as a function of the ratio T/τcor when the investigated stochastic process is averaged by the ideal integrator with the impulse response given by (12.112) and by the RC circuit, the impulse response of which takes the following form:

$$h(t)=\begin{cases}\beta\exp\{-\beta t\}, & 0\le t\le T,\\ 0, & t<0,\ t>T.\end{cases} \tag{12.134}$$

The corresponding frequency characteristics take the following form:

$$S(j\omega)=\frac{1-\exp\{-j\omega T\}}{j\omega T}; \tag{12.135}$$

$$S(j\omega)=\frac{\beta\{1-\exp\{-(\beta+j\omega)T\}\}}{\beta+j\omega}. \tag{12.136}$$

As an example, consider the stationary stochastic process with the exponential correlation function given by (12.13) where the parameter α is inversely proportional to the correlation interval, that is,

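$$\alpha=\frac{1}{\tau_{\mathrm{cor}}}. \tag{12.137}$$

Substituting (12.13) into (12.116) and (12.130), we obtain the normalized variance of the mathematical expectation estimate for the ideal integrator and RC filter:

$$\frac{\operatorname{Var}_1(E^*)}{\sigma^2}=\frac{2}{p^2}\,[p-1+\exp\{-p\}]; \tag{12.138}$$

$$\frac{\operatorname{Var}_2(E^*)}{\sigma^2}=\lambda\,\frac{1-\lambda+2\lambda\exp\{-p(1+\lambda)\}-(1+\lambda)\exp\{-2p\lambda\}}{(1-\lambda^2)[1-\exp\{-\lambda p\}]^2}, \tag{12.139}$$

where

$$p=\alpha T=\frac{T}{\tau_{\mathrm{cor}}}\quad\text{and}\quad\lambda=\frac{\beta}{\alpha}=\frac{\tau_{\mathrm{cor}}}{\tau_{RC}} \tag{12.140}$$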

are the ratio between the observation interval duration and the correlation interval and the ratio between the correlation interval and the RC filter time constant, respectively. The RC filter time constant τRC is defined as the correlation interval (see (12.21)).

If the observation time interval is large, that is, the condition λp ≫ 1 is satisfied, for example, for the RC filter, then the normalized variance of the mathematical expectation estimate will be limited by the RC filter, that is,

$$\frac{\operatorname{Var}_2(E^*)}{\sigma^2}\approx\frac{\lambda}{1+\lambda}. \tag{12.141}$$

At λ ≪ 1, that is, when the spectral bandwidth of the considered stochastic process is much wider than the averaging RC filter bandwidth, the RC filter plays the role of the ideal integrator, and in the limit λ → 0 the estimate variance formula (12.139) takes the form given by (12.138).
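The two limiting statements above, λp ≫ 1 leading to (12.141) and λ → 0 leading to (12.138), can be checked by direct evaluation. The sketch below codes (12.138) and (12.139) as written; the function names and the test values of p and λ are assumptions made for illustration.

```python
import math

def var_integrator(p):
    """(12.138): normalized variance for the ideal integrator, p = T / tau_cor."""
    return 2.0 / p**2 * (p - 1.0 + math.exp(-p))

def var_rc(p, lam):
    """(12.139): normalized variance for the RC filter, lam = tau_cor / tau_RC (lam != 1)."""
    num = (1.0 - lam
           + 2.0 * lam * math.exp(-p * (1.0 + lam))
           - (1.0 + lam) * math.exp(-2.0 * p * lam))
    den = (1.0 - lam**2) * (1.0 - math.exp(-lam * p))**2
    return lam * num / den

# Limit lam -> 0: the RC filter behaves like the ideal integrator.
small_lam = var_rc(5.0, 1e-3)
# Limit lam * p >> 1: the variance saturates at lam / (1 + lam), see (12.141).
saturated = var_rc(50.0, 2.0)
print(small_lam, var_integrator(5.0), saturated)
```

The expression (12.139) is singular at λ = 1 as written, so that value would need a separate limiting formula.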

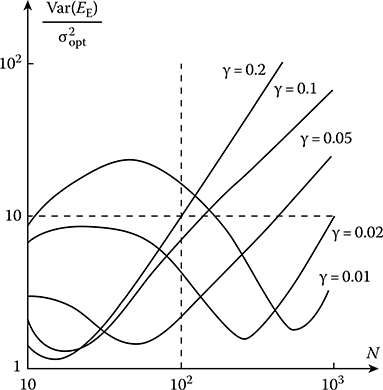

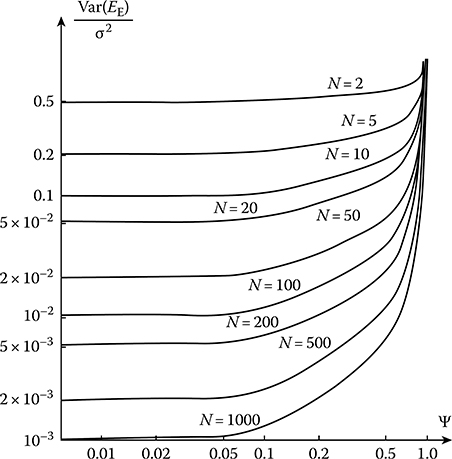

FIGURE 12.3 Normalized variance of the mathematical expectation estimate versus p at various values of λ.

The normalized variances Var(E*)/σ² of the mathematical expectation estimate versus the ratio p between the observation time interval and the correlation interval for the ideal integrator (the continuous line) and the RC filter (the dashed lines) are presented in Figure 12.3, where λ serves as the parameter. As we can see from Figure 12.3, in the case of the ideal integrator, the variance of estimate decreases in inverse proportion to the observation time interval, but in the case of the RC filter, the variance of estimate is limited by the value given by (12.141). At λ ≪ 1, this limiting value of the normalized variance is approximately equal to λ, that is, to the ratio between the correlation interval and the RC filter time constant.

It is worthwhile to compare the mathematical expectation estimate using the ideal integrator with the optimal estimate, the variance of which Var(EE) is given by (12.48). Relative increase in the variance using the ideal integrator in comparison with the optimal estimate is defined as

$$\kappa=\frac{\operatorname{Var}_1(E^*)-\operatorname{Var}(E_E)}{\operatorname{Var}(E_E)}=\frac{(2+p)[p-1+\exp\{-p\}]}{p^2}-1. \tag{12.142}$$

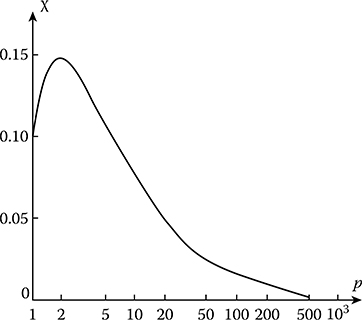

FIGURE 12.4 Relative increases in variance as a function of T/τcor.

Relative increase in the variance as a function of T/τcor is shown in Figure 12.4. As we can see from Figure 12.4, the relative increase in the variance of the mathematical expectation estimate of stochastic process possessing the correlation function given by (12.13) using the ideal integrator, in comparison with the optimal estimate, is less than 0.01 only at very small and very large values of p (by (12.142), roughly p < 0.06 or p > 100). The maximum relative increase in the variance is 0.14 and corresponds to p ≈ 2.7. This maximum increase is caused by a rapid decrease in the optimal estimate variance in comparison with the estimate obtained by the ideal integrator at small values of the observation time interval. However, as p → ∞, both estimates are equivalent, as expected.
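The location and height of the maximum of κ can be reproduced by scanning (12.142) over a grid of p. This is a minimal sketch; the grid range and step are arbitrary choices for the illustration.

```python
import math

def kappa(p):
    """(12.142): relative variance increase of the ideal integrator over the optimal estimate."""
    return (2.0 + p) * (p - 1.0 + math.exp(-p)) / p**2 - 1.0

# Scan p = T / tau_cor from 0.01 to 200 in steps of 0.01 (assumed grid).
grid = [0.01 * k for k in range(1, 20001)]
p_max = max(grid, key=kappa)
kappa_max = kappa(p_max)
print(p_max, kappa_max)
```

The scan also shows that κ falls off slowly on both sides of the maximum, approximately as p/6 for small p and as 1/p for large p.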

Consider the normalized variances of the mathematical expectation estimates of stochastic process using the ideal integrator for the following normalized correlation functions that are widely used in practice. We analyze two RC filters connected in series, where the “white” noise excites the input of this linear system. In this case, the normalized correlation function takes the following form:

$$R(\tau)=(1+\alpha|\tau|)\exp\{-\alpha|\tau|\},\quad \alpha=\frac{1}{RC}. \tag{12.143}$$

In doing so, the normalized variance of the mathematical expectation estimate of stochastic process is defined as

$$\frac{\operatorname{Var}_3(E^*)}{\sigma^2}=\frac{2[2p_1-3+(3+p_1)\exp\{-p_1\}]}{p_1^2}, \tag{12.144}$$

where

$$p_1=\alpha T=\frac{2T}{\tau_{\mathrm{cor}}}\quad\text{and}\quad\tau_{\mathrm{cor}}=\frac{2}{\alpha}. \tag{12.145}$$

The set of considered stochastic processes has the normalized correlation functions that are approximated in the following form:

$$R(\tau)=\exp\{-\alpha|\tau|\}\cos\varpi\tau. \tag{12.146}$$

Depending on the relationships between the parameters α and ϖ, the normalized correlation function (12.146) describes both the low-frequency (α ≫ ϖ) and high-frequency (α ≪ ϖ) stochastic processes. The normalized variance of the mathematical expectation estimate of stochastic process with the normalized correlation function given by (12.146) takes the following form:

$$\frac{\operatorname{Var}_4(E^*)}{\sigma^2}=\frac{2[p_1(1+\eta^2)-(1-\eta^2)]+2\exp\{-p_1\}[(1-\eta^2)\cos p_1\eta-2\eta\sin p_1\eta]}{p_1^2(1+\eta^2)^2}, \tag{12.147}$$

where η = ϖα⁻¹. At ϖ = 0 (η = 0), (12.147) reduces to (12.138) as a particular case; that is, we obtain the normalized variance of the mathematical expectation estimate of stochastic process with the exponential correlation function given by (12.13) under integration using the ideal integrator. Formula (12.147) also simplifies considerably at ϖ = α:

$$\frac{\operatorname{Var}_4(E^*)}{\sigma^2}=\frac{p_1-\exp\{-p_1\}\sin p_1}{p_1^2},\quad\text{at } \varpi=\alpha. \tag{12.148}$$
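The closed form (12.147) and its two special cases can be checked against a direct quadrature of (12.116) with the correlation function (12.146). In the sketch below the integration variable is u = ατ, so that only p1 = αT and η = ϖ/α appear; the function names and numerical values are assumptions for the example.

```python
import math

def var4_closed(p1, eta):
    """(12.147): normalized variance for R(tau) = exp(-alpha|tau|) cos(varpi tau)."""
    a = 2.0 * (p1 * (1.0 + eta**2) - (1.0 - eta**2))
    b = 2.0 * math.exp(-p1) * ((1.0 - eta**2) * math.cos(p1 * eta)
                               - 2.0 * eta * math.sin(p1 * eta))
    return (a + b) / (p1**2 * (1.0 + eta**2)**2)

def var4_quadrature(p1, eta, n=20000):
    """Midpoint rule for (12.116) in the variable u = alpha*tau, 0 <= u <= p1."""
    h = p1 / n
    total = 0.0
    for k in range(n):
        u = (k + 0.5) * h
        total += (1.0 - u / p1) * math.exp(-u) * math.cos(eta * u)
    return 2.0 / p1 * total * h

p1 = 4.0
print(var4_closed(p1, 3.0), var4_quadrature(p1, 3.0))
print(var4_closed(p1, 0.0), 2.0 / p1**2 * (p1 - 1.0 + math.exp(-p1)))          # eta = 0 vs (12.138)
print(var4_closed(p1, 1.0), (p1 - math.exp(-p1) * math.sin(p1)) / p1**2)      # eta = 1 vs (12.148)
```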

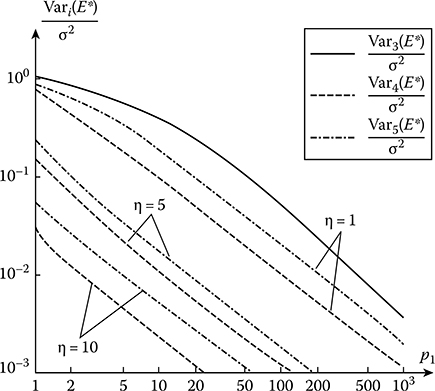

FIGURE 12.5 Normalized variances given by (12.144), (12.147), and (12.149) as functions of p1 with the parameter η.

As applied to the correlation function given by (12.14), the normalized variance of the mathematical expectation estimate of stochastic process is defined by

$$\frac{\operatorname{Var}_5(E^*)}{\sigma^2}=\frac{2[2p_1(1+\eta_1^2)-(3-\eta_1^2)]+2\exp\{-p_1\}\left[(3-\eta_1^2)\cos p_1\eta_1-(3\eta_1-\eta_1^{-1})\sin(p_1\eta_1)\right]}{p_1^2(1+\eta_1^2)^2}, \tag{12.149}$$

where η1 = ϖ1α⁻¹. As ϖ1 → 0 (η1 → 0), the correlation function given by (12.14) can be written as the correlation function given by (12.143), and the formula (12.149) is changed to (12.144).

The normalized variances of the mathematical expectation estimate of stochastic process given by (12.144), (12.147), and (12.149) as functions of p1, with η as the parameter, are shown in Figure 12.5. As expected, at the same value of p1, the normalized variance of the mathematical expectation estimate of stochastic process decreases as η increases, where η characterizes the presence of quasiharmonic components in the considered stochastic process.

Discussed procedures to measure the mathematical expectation assume that there are no limitations of instantaneous values of the considered stochastic process in the course of measurement. Presence of limitations leads to additional errors while measuring the mathematical expectation of stochastic process.

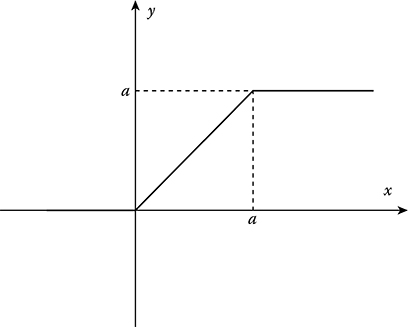

Determine the bias and variance of estimate applied both to the symmetrical inertialess signal limiter (see Figure 12.6) and to the asymmetrical inertialess signal limiter (see Figure 12.7) when the input of the signal limiter is excited by the Rayleigh stochastic process. In doing so, we assume that the mathematical expectation is defined according to (12.113) where we use y(t) = g[x(t)] instead of x(t) and g(x) is the characteristic function of the transformation. The variance of the mathematical expectation estimate of stochastic process is defined by (12.116), where the correlation function R(τ) should be understood as the correlation function Ry(τ) defined as

$$R_y(\tau)=\int_{-\infty}^{\infty}\!\int_{-\infty}^{\infty}g(x_1)g(x_2)\,p_2(x_1,x_2;\tau)\,dx_1\,dx_2-E_y^2. \tag{12.150}$$

FIGURE 12.6 Symmetric inertialess signal limiter performance.

FIGURE 12.7 Asymmetric inertialess signal limiter performance.

Let the Gaussian stochastic process excite the input of nonlinear device (Figure 12.6) and the transformation be described by the following function:

$$y=g(x)=\begin{cases}a, & x>a,\\ x, & -a\le x\le a,\\ -a, & x<-a.\end{cases} \tag{12.151}$$

The bias of estimate is defined as

$$b(E^*)=\int_{-\infty}^{\infty}g(x)p(x)\,dx-E_0=-a\int_{-\infty}^{-a}p(x)\,dx+\int_{-a}^{a}x\,p(x)\,dx+a\int_{a}^{\infty}p(x)\,dx-E_0. \tag{12.152}$$

Substituting the one-dimensional pdf of Gaussian stochastic process

$$p(x)=\frac{1}{\sqrt{2\pi\operatorname{Var}(x)}}\exp\left\{-\frac{(x-E_0)^2}{2\operatorname{Var}(x)}\right\} \tag{12.153}$$

into (12.152), we obtain the bias of the mathematical expectation estimate of stochastic process in the following form:

$$b(E^*)=\sqrt{\operatorname{Var}(x)}\left\{\chi[Q(\chi-q)-Q(\chi+q)]-q[Q(\chi+q)+Q(\chi-q)]+\frac{1}{\sqrt{2\pi}}\left\{\exp[-0.5(\chi+q)^2]-\exp[-0.5(\chi-q)^2]\right\}\right\}, \tag{12.154}$$

where

$$\chi=\frac{a}{\sqrt{\operatorname{Var}(x_0)}}\quad\text{and}\quad q=\frac{E_0}{\sqrt{\operatorname{Var}(x_0)}} \tag{12.155}$$

are the ratios of the limitation threshold and of the mathematical expectation, respectively, to the square root of the variance of the observed realization of stochastic process; Q(x) is the Gaussian Q function given by (12.68).
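The bias of the symmetric limiter can be checked by integrating the clipped variable against the Gaussian pdf numerically and comparing with the closed form in terms of χ and q. The sketch assumes that Q(x) is the upper-tail probability of the standard Gaussian distribution (this text does not reproduce (12.68)), and the function names and parameter values are chosen only for the example.

```python
import math

def q_function(x):
    """Upper-tail probability of the standard Gaussian (assumed form of the Q function)."""
    return 0.5 * math.erfc(x / math.sqrt(2.0))

def bias_closed(a, e0, sigma):
    """Bias of the symmetric limiter, closed form with chi = a/sigma and q = E0/sigma."""
    chi, q = a / sigma, e0 / sigma
    phi = lambda z: math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)
    return sigma * (chi * (q_function(chi - q) - q_function(chi + q))
                    - q * (q_function(chi + q) + q_function(chi - q))
                    + phi(chi + q) - phi(chi - q))

def bias_numeric(a, e0, sigma, n=100000):
    """Midpoint integration of g(x) p(x) over E0 +/- 10 sigma, minus E0, as in (12.152)."""
    lo = e0 - 10.0 * sigma
    h = 20.0 * sigma / n
    total = 0.0
    for k in range(n):
        x = lo + (k + 0.5) * h
        g = max(-a, min(a, x))  # limiter characteristic (12.151)
        p = math.exp(-0.5 * ((x - e0) / sigma)**2) / (sigma * math.sqrt(2.0 * math.pi))
        total += g * p
    return total * h - e0

b_closed = bias_closed(1.0, 1.0, 1.0)
b_numeric = bias_numeric(1.0, 1.0, 1.0)
print(b_closed, b_numeric)
```

For a positive mathematical expectation the bias is negative, since the upper threshold clips more probability mass than the lower one.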

To determine the variance of mathematical expectation estimate of stochastic process according to (12.116) and (12.150), there is a need to expand the two-dimensional pdf of Gaussian stochastic process in series [9]

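$$p_2(x_1,x_2;\tau)=\frac{1}{\operatorname{Var}(x)}\sum_{\nu=0}^{\infty}Q^{(\nu+1)}\!\left(\frac{x_1-E_0}{\sqrt{\operatorname{Var}(x)}}\right)Q^{(\nu+1)}\!\left(\frac{x_2-E_0}{\sqrt{\operatorname{Var}(x)}}\right)\frac{\mathcal{R}^{\nu}(\tau)}{\nu!}, \tag{12.156}$$

where ℛ(τ) is the normalized correlation function of the initial stochastic process ξ(t);

$$Q^{(\nu+1)}(z)=\frac{d^{\nu}}{dz^{\nu}}\left[\frac{\exp\{-0.5z^2\}}{\sqrt{2\pi}}\right],\quad \nu=0,1,2,\ldots \tag{12.157}$$

are the derivatives of (ν + 1)th order of the Gaussian Q function.

Substituting (12.156) into (12.150) and (12.116) and taking into consideration that

$$E_y=\frac{1}{\sigma}\int_{-\infty}^{\infty}g(x)\,Q'\!\left(\frac{x-E_0}{\sigma}\right)dx, \tag{12.158}$$

we obtain

$$\operatorname{Var}(E^*)=\frac{1}{\sigma^2}\sum_{\nu=1}^{\infty}\frac{1}{\nu!}\left\{\int_{-\infty}^{\infty}g(x)\,Q^{(\nu+1)}\!\left(\frac{x-E_0}{\sigma}\right)dx\right\}^2\frac{2}{T}\int_0^T\left(1-\frac{\tau}{T}\right)\mathcal{R}^{\nu}(\tau)\,d\tau. \tag{12.159}$$

Computing the integral in the braces, we obtain

$$\operatorname{Var}(E^*)=\sigma^2\sum_{\nu=1}^{\infty}\frac{1}{\nu!}\left[Q^{(\nu-1)}(\chi-q)-Q^{(\nu-1)}(-\chi-q)\right]^2\frac{2}{T}\int_0^T\left(1-\frac{\tau}{T}\right)\mathcal{R}^{\nu}(\tau)\,d\tau. \tag{12.160}$$

As χ → ∞, the derivatives of the Gaussian Q function tend to zero. As a result, only the term at ν = 1 remains and we obtain the initial formula (12.116).

In practical applications, the stochastic process measurements are carried out, as a rule, under the conditions of “weak” limitations of instantaneous values, that is, under the condition (χ − |q|) ≥ 1.5 ÷ 2. In this case, the first term at ν = 1 in (12.160) dominates:

$$\operatorname{Var}(E^*)\approx[1-Q(\chi-q)-Q(\chi+q)]^2\,\frac{2\sigma^2}{T}\int_0^T\left(1-\frac{\tau}{T}\right)\mathcal{R}(\tau)\,d\tau, \tag{12.161}$$

where, at sufficiently high values, that is, (χ − q) ≥ 3, the term in square brackets is very close to unity and we may use (12.116) to determine the variance of mathematical expectation estimate of stochastic process.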

In practice, the Rayleigh stochastic processes are widely employed because this type of stochastic process has a wide range of applications. In particular, a narrow-band Gaussian stochastic process, whose envelope is described by the Rayleigh pdf, can be presented in the following form:

$$z(t)=x(t)\cos[2\pi f_0t+\varphi(t)], \tag{12.162}$$

where

x(t) is the envelope

φ(t) is the phase of stochastic process

Representation in (12.162) assumes that the spectral density of narrow-band stochastic process is concentrated within the limits of narrow bandwidth Δf with the central frequency f0 and the condition f0 ≫ Δf. As applied to the symmetrical spectral density, the correlation function of stationary narrow-band stochastic process takes the following form:

$$R_z(\tau)=\sigma^2\mathcal{R}(\tau)\cos(2\pi f_0\tau). \tag{12.163}$$

In doing so, the one-dimensional Rayleigh pdf can be written as

$$f(x)=\frac{x}{\sigma^2}\exp\left\{-\frac{x^2}{2\sigma^2}\right\},\quad x\ge 0. \tag{12.164}$$

The first and second initial moments and the normalized correlation function of the Rayleigh stochastic process can be presented in the following form:

$$\begin{cases}\langle\xi(t)\rangle=\sqrt{\dfrac{\pi}{2}\sigma^2},\\[4pt] \langle\xi^2(t)\rangle=2\sigma^2,\\[4pt] \rho(\tau)\approx\mathcal{R}^2(\tau).\end{cases} \tag{12.165}$$

As applied to the Rayleigh stochastic process and nonlinear transformation (see Figure 12.7) given as

$$y=g(x)=\begin{cases}a, & x>a,\\ x, & 0\le x\le a,\end{cases} \tag{12.166}$$

the bias of the mathematical expectation estimate takes the following form:

$$b(E^*)=E_y-E_0=\int_0^\infty g(x)f(x)\,dx-E_0=-\sqrt{2\pi\sigma^2}\,Q(\chi), \tag{12.167}$$

where χ is given by (12.155). As χ → ∞, the mathematical expectation estimate, as it would be expected, is unbiased.
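A direct numerical integration of the clipped Rayleigh mean illustrates how the bias vanishes as the threshold grows. The sketch assumes χ = a/σ with σ the Rayleigh parameter of (12.164) and Q(x) the standard Gaussian upper-tail probability; the bias has magnitude √(2πσ²)·Q(χ) and is negative, because clipping from above can only lower the mean. Function names and values are illustrative assumptions.

```python
import math

def q_function(x):
    """Upper-tail probability of the standard Gaussian (assumed form of the Q function)."""
    return 0.5 * math.erfc(x / math.sqrt(2.0))

def bias_closed(a, sigma):
    """Closed-form bias of the one-sided limiter for a Rayleigh input, chi = a / sigma."""
    return -math.sqrt(2.0 * math.pi) * sigma * q_function(a / sigma)

def bias_numeric(a, sigma, n=100000):
    """Midpoint integration of min(x, a) f(x) over [0, 12 sigma], minus the Rayleigh mean."""
    h = 12.0 * sigma / n
    mean_clipped = 0.0
    for k in range(n):
        x = (k + 0.5) * h
        pdf = x / sigma**2 * math.exp(-x * x / (2.0 * sigma**2))  # Rayleigh pdf (12.164)
        mean_clipped += min(x, a) * pdf
    return mean_clipped * h - sigma * math.sqrt(math.pi / 2.0)

for a in (1.0, 2.5, 4.0):
    print(a, bias_closed(a, 1.0), bias_numeric(a, 1.0))
```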

Determining the variance of mathematical expectation estimate of stochastic process in accordance with (12.116) and (12.150) is very difficult in the case of Rayleigh stochastic process. It is evident that to determine the variance of mathematical expectation estimate of stochastic process in the first approximation, the formula (12.116) has to be true, provided the condition χ ≥ 2–3 is satisfied, which is analogous to the Gaussian stochastic process at weak limitation.

12.5 Estimate of Mathematical Expectation at Stochastic Process Sampling

In practice, we use digital measuring devices to measure the parameters of stochastic processes after sampling. Naturally, we do not use a part of information that is outside a sample of stochastic process.

Let the Gaussian stochastic process ξ(t) be observed at some discrete instants ti. Then, there is a set of samples xi = x(ti), i = 1, 2,…, N at the input of the digital measuring device. As a rule, the observed stochastic process is sampled at equal time intervals Δ = ti+1 − ti. Each sample value can be presented in the following form:

$$x_i=E_i+x_{0i}=Es_i+x_{0i} \tag{12.168}$$

as in (12.2), where Ei = E si = Es(ti) is the mathematical expectation and x0i = x0(ti) is the realization of the centered Gaussian stochastic process at the instant t = ti. A set of samples xi is characterized by the conditional N-dimensional pdf

$$f_N(x_1,\ldots,x_N\mid E)=\frac{1}{(2\pi)^{0.5N}\sqrt{\det\|R_{ij}\|}}\exp\left\{-0.5\sum_{i=1}^{N}\sum_{j=1}^{N}(x_i-E_i)(x_j-E_j)C_{ij}\right\}, \tag{12.169}$$

where

det ||Rij|| is the determinant of the correlation matrix ||Rij|| = R of the N × N order

Cij are the elements of the matrix ||Cij|| = C, which is the reciprocal matrix with respect to the correlation matrix; the elements Cij are defined from the following equation:

$$\sum_{l=1}^{N}C_{il}R_{lj}=\delta_{ij}=\begin{cases}1, & i=j,\\ 0, & i\ne j.\end{cases} \tag{12.170}$$

The conditional multidimensional pdf in (12.169) is the multidimensional likelihood function of the parameter E of stochastic process. Solving the likelihood equation with respect to the parameter E, we obtain the formula for the mathematical expectation estimate of stochastic process:

$$E_E=\frac{\sum_{i=1,j=1}^{N}x_is_jC_{ij}}{\sum_{i=1,j=1}^{N}s_is_jC_{ij}}. \tag{12.171}$$

This formula can be written in a simple form if we introduce the weight coefficients

$$\upsilon_i=\sum_{j=1}^{N}s_jC_{ij}, \tag{12.172}$$

which satisfy, as the function υ(t) given by (12.7), the system of equations

$$\sum_{l=1}^{N}R_{il}\upsilon_l=s_i,\quad i=1,2,\ldots,N. \tag{12.173}$$

In doing so, the mathematical expectation estimate can be presented in the following form:

$$E_E=\frac{\sum_{i=1}^{N}x_i\upsilon_i}{\sum_{i=1}^{N}s_i\upsilon_i}. \tag{12.174}$$

The mathematical expectation of estimate takes the following form:

$$\langle E_E\rangle=\frac{\sum_{i=1}^{N}\langle x_i\rangle\upsilon_i}{\sum_{i=1}^{N}s_i\upsilon_i}=E_0. \tag{12.175}$$

The variance of estimate in accordance with (12.172) can be presented in the following form:

$$\operatorname{Var}(E_E)=\frac{\sum_{i=1,j=1}^{N}R_{ij}\upsilon_i\upsilon_j}{\left[\sum_{i=1}^{N}s_i\upsilon_i\right]^2}=\frac{1}{\sum_{i=1}^{N}s_i\upsilon_i}. \tag{12.176}$$

The weight coefficients are determined using a set of linear equations:

$$\begin{cases}\sigma^2\upsilon_1+R_1\upsilon_2+R_2\upsilon_3+\cdots+R_{N-1}\upsilon_N=s_1,\\ R_1\upsilon_1+\sigma^2\upsilon_2+R_1\upsilon_3+\cdots+R_{N-2}\upsilon_N=s_2,\\ \qquad\vdots\\ R_{N-1}\upsilon_1+R_{N-2}\upsilon_2+R_{N-3}\upsilon_3+\cdots+\sigma^2\upsilon_N=s_N,\end{cases} \tag{12.177}$$

where Rl = R(lΔ) are the elements of the correlation matrix of difference in time |i − j|Δ = lΔ, l = 0, 1,…, N − 1. The solution derived from this system of linear equations can be presented in the following form:

$$\upsilon_j=\frac{\det\|G_{ij}\|}{\det\|R_{ij}\|},\quad j=1,2,\ldots,N, \tag{12.178}$$

where det ||Gij|| is the determinant of matrix obtained from the matrix ||Rij|| = R by substituting the column containing elements s1, s2,…, sN instead of the jth column. In the case of independent samples of stochastic process, that is, Rij = 0 at i ≠ j and Rii = σ², the correlation matrix and its reciprocal matrix will be diagonal. In doing so, for all i = 1, 2,…, N the weight coefficients are defined as

$$\upsilon_i=\frac{s_i}{\sigma^2}. \tag{12.179}$$

Substituting (12.179) into (12.174) and (12.176) we obtain

$$E_E=\frac{\sum_{i=1}^{N}x_is_i}{\sum_{i=1}^{N}s_i^2}, \tag{12.180}$$

$$\operatorname{Var}(E_E)=\frac{\sigma^2}{\sum_{i=1}^{N}s_i^2}. \tag{12.181}$$

If the observed stochastic process is stationary, that is, si = 1 ∀i, i = 1, 2,…, N, then in the case of independent samples we obtain the mean and the variance of the mean

$$E^*=\frac{1}{N}\sum_{i=1}^{N}x_i; \tag{12.182}$$

$$\operatorname{Var}(E^*)=\frac{\sigma^2}{N}, \tag{12.183}$$

respectively.

Now, consider the estimate of stationary stochastic process mathematical expectation with the correlation function given by (12.13). Denote ψ = exp{−αΔ}. In this case, the correlation matrix takes the following form:

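$$\|R_{ij}\|=\sigma^2\begin{bmatrix}1&\psi&\psi^2&\cdots&\psi^{N-1}\\ \psi&1&\psi&\cdots&\psi^{N-2}\\ \psi^2&\psi&1&\cdots&\psi^{N-3}\\ \vdots&\vdots&\vdots&\ddots&\vdots\\ \psi^{N-1}&\psi^{N-2}&\psi^{N-3}&\cdots&1\end{bmatrix}. \tag{12.184}$$

The determinant of this matrix and its reciprocal matrix are defined in the following form [10]:

$$\det\|R_{ij}\|=\sigma^{2N}(1-\psi^2)^{N-1}, \tag{12.185}$$

$$\|C_{ij}\|=\frac{1}{(1-\psi^2)\sigma^2}\begin{bmatrix}1&-\psi&0&\cdots&0\\ -\psi&1+\psi^2&-\psi&\cdots&0\\ 0&-\psi&1+\psi^2&\cdots&0\\ \vdots&\vdots&\vdots&\ddots&\vdots\\ 0&0&0&\cdots&1\end{bmatrix}. \tag{12.186}$$

It is important to note that all elements of the reciprocal matrix are equal to zero, except for the elements of the main diagonal and the elements flanking the main diagonal from right and left. As we can see from (12.172) and (12.186), the optimal values of weight coefficients are defined as

$$\begin{cases}\upsilon_1=\upsilon_N=\dfrac{1}{(1+\psi)\sigma^2};\\[6pt] \upsilon_2=\upsilon_3=\cdots=\upsilon_{N-1}=\dfrac{1-\psi}{(1+\psi)\sigma^2}.\end{cases} \tag{12.187}$$

Substituting the obtained weight coefficients into (12.174) and (12.176), we have

$$E_E=\frac{(x_1+x_N)+(1-\psi)\sum_{i=2}^{N-1}x_i}{N-(N-2)\psi}; \tag{12.188}$$

$$\operatorname{Var}(E_E)=\sigma^2\,\frac{1+\psi}{N-(N-2)\psi}. \tag{12.189}$$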
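The closed-form weights (12.187) and the variance (12.189) can be verified by solving the system (12.173) numerically for the exponential correlation matrix with si = 1. The sketch below uses a small hand-written Gaussian elimination to stay within the standard library; the helper names, N = 6, and ψ = 0.6 are assumptions made for the example.

```python
def solve_linear(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting (a is a list of rows)."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x

def weights_numeric(n, psi, sigma2=1.0):
    """Solve (12.173) with R_ij = sigma^2 psi^|i-j| and s_i = 1."""
    r = [[sigma2 * psi ** abs(i - j) for j in range(n)] for i in range(n)]
    return solve_linear(r, [1.0] * n)

def weights_closed(n, psi, sigma2=1.0):
    """Closed-form weights (12.187), valid for n >= 2."""
    edge = 1.0 / ((1.0 + psi) * sigma2)
    inner = (1.0 - psi) / ((1.0 + psi) * sigma2)
    return [edge] + [inner] * (n - 2) + [edge]

def var_optimal(n, psi, sigma2=1.0):
    """Variance of the optimal estimate (12.189)."""
    return sigma2 * (1.0 + psi) / (n - (n - 2) * psi)

N, psi = 6, 0.6
v_num = weights_numeric(N, psi)
v_cf = weights_closed(N, psi)
var_num = 1.0 / sum(v_num)  # (12.176) with s_i = 1
print(v_num, v_cf, var_num, var_optimal(N, psi))
```

The end samples receive larger weights than the interior ones because each interior sample is correlated with two neighbours and so carries less independent information.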

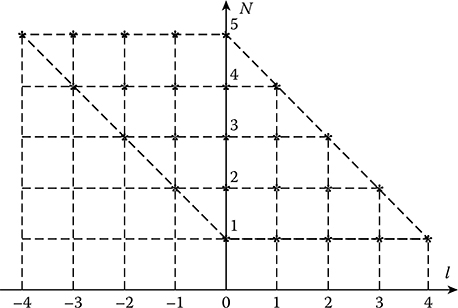

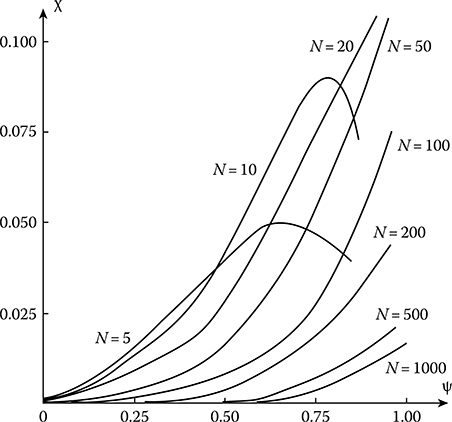

Dependence of the normalized variance of the optimal mathematical expectation estimate versus the values ψ of the normalized correlation function between the samples and the various numbers of samples N is shown in Figure 12.8. As we can see from Figure 12.8, starting from ψ ≥ 0.5, the variance of estimation increases rapidly corresponding to an increase in the value of the normalized correlation function, which tends to approach the variance of the observed stochastic process as ψ → 1.

FIGURE 12.8 Normalized variance of the optimal mathematical expectation estimate versus ψ and the number of samples N.

We can obtain the formulae (12.188) and (12.189) in another way without using the maximum likelihood method. For this purpose, we suppose that

$$E^*=\sum_{i=1}^{N}x_ih_i \tag{12.190}$$

can be used as the estimate, where hi are the weight coefficients satisfying the following condition

$$\sum_{i=1}^{N}h_i=1 \tag{12.191}$$

for the unbiased estimations. The weight coefficients are chosen from the condition of minimization of the variance of mathematical expectation estimate. As applied to observation of stationary stochastic process possessing the correlation function given by (12.13), the weight coefficients hi are defined in Ref. [11] and related with the obtained weight coefficients (12.187) by the following relationship:

$$h_i=\frac{\upsilon_i}{\sum_{i=1}^{N}\upsilon_i}. \tag{12.192}$$

In the limiting case, as Δ → 0, the formulae in (12.188) and (12.189) turn into (12.48) and (12.49), respectively. Indeed, as Δ → 0 with (N − 1)Δ = T = const and exp{−αΔ} ≈ 1 − αΔ, the summation in (12.188) is replaced by integration and x1 and xN are replaced by x(0) and x(T), respectively. In practice, the equidistributed estimate (the mean) given by (12.182), which corresponds to the constant weight coefficients hi = 1/N, i = 1, 2,…, N in (12.190), is widely used as the mathematical expectation estimate of stationary stochastic process.

Determine the variance of the mathematical expectation estimate assuming that the samples are equidistant from each other on the value Δ. The variance of the mathematical expectation estimate of stochastic process is defined as

$$\operatorname{Var}(E^*)=\frac{1}{N^2}\sum_{i=1,j=1}^{N}R(t_i-t_j)=\frac{1}{N^2}\sum_{i=1,j=1}^{N}R[(i-j)\Delta]. \tag{12.193}$$

FIGURE 12.9 Domain of indices.

The double summation in (12.193) can be rewritten in a more convenient form. For this purpose, we change indices, namely, l = i − j and j = j, and change the summation order in the domain shown in Figure 12.9. In this case, we can write

$$\operatorname{Var}(E^*)=\frac{1}{N^2}\sum_{j=1}^{N}\sum_{l=1-j}^{N-j}R(l\Delta)=\frac{1}{N^2}\left\{N\sigma^2+2\sum_{i=1}^{N-1}(N-i)R(i\Delta)\right\}=\frac{\sigma^2}{N}\left\{1+2\sum_{i=1}^{N-1}\left(1-\frac{i}{N}\right)\mathcal{R}(i\Delta)\right\}, \tag{12.194}$$

where ℛ(iΔ) = R(iΔ)/σ² is the normalized correlation function of observed stochastic process. As we can see from (12.194), if the samples are not correlated, we obtain the formula (12.183) as a particular case.

If the correlation function of observed stochastic process is described by (12.13), the variance of the equidistributed estimate of mathematical expectation is defined as

$$\operatorname{Var}(E^*)=\sigma^2\,\frac{N(1-\psi^2)+2\psi(\psi^N-1)}{N^2(1-\psi)^2}, \tag{12.195}$$

where, as before, ψ = exp{−αΔ}. We have obtained (12.195) by taking into consideration the formula for summation [12]

$$\sum_{i=0}^{N-1}(a+ir)q^i=\frac{a-[a+(N-1)r]q^N}{1-q}+\frac{rq(1-q^{N-1})}{(1-q)^2}. \tag{12.196}$$

Computations made by the formula (12.195) show that the variance of the equidistributed estimate of mathematical expectation differs from the variance of the optimal estimate (12.189). Figure 12.10 represents the relative increase in the variance of the equidistributed estimate of mathematical expectation in comparison with the variance of the optimal estimate

$$\varepsilon=\frac{\operatorname{Var}(E^*)-\operatorname{Var}(E_E)}{\operatorname{Var}(E_E)} \tag{12.197}$$

as a function of values of the normalized correlation function between the samples ψ for various numbers of samples.

FIGURE 12.10 Relative increase in the variance of equidistributed estimate of mathematical expectation as a function of the normalized correlation function between samples.

Naturally, the lower the normalized correlation function between samples, the lower the relative increase in the variance. Similar to the mathematical expectation estimate by the continuous realization of stochastic process, the presence of maxima is explained by the fact that in the case of small numbers of samples N and sufficiently large magnitude ψ, the variance of the optimal estimate decreases rapidly in comparison with the variance of the equidistributed estimate of mathematical expectation. As we can see from Figure 12.10, if the magnitude of the normalized correlation function between samples is less than ψ = 0.5, then the optimal and equidistributed estimates practically coincide.
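The relative increase ε can be tabulated directly from the closed forms (12.195) and (12.189), with the direct sum (12.194) serving as a cross-check. The sketch takes σ² = 1; the function names and the values of N and ψ are assumptions for the example.

```python
def var_mean_direct(n, psi):
    """(12.194) with normalized correlation psi^i and sigma^2 = 1."""
    s = sum((1.0 - i / n) * psi**i for i in range(1, n))
    return (1.0 + 2.0 * s) / n

def var_mean_closed(n, psi):
    """(12.195) with sigma^2 = 1 (requires psi != 1)."""
    return (n * (1.0 - psi**2) + 2.0 * psi * (psi**n - 1.0)) / (n**2 * (1.0 - psi)**2)

def var_optimal(n, psi):
    """(12.189) with sigma^2 = 1."""
    return (1.0 + psi) / (n - (n - 2) * psi)

def rel_increase(n, psi):
    """(12.197): relative variance increase of the sample mean over the optimal estimate."""
    return var_mean_closed(n, psi) / var_optimal(n, psi) - 1.0

for n in (2, 5, 20):
    print(n, [round(rel_increase(n, psi), 4) for psi in (0.1, 0.5, 0.9)])
```

For N = 2 the two estimates coincide, since the optimal weights (12.187) are then equal, so ε is zero up to rounding.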

As applied to the normalized correlation function (12.146), the normalized variance of estimate is defined as

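$$\frac{\operatorname{Var}(E^*)}{\sigma^2}=\frac{N(1-\psi^2)[1+\psi^2-2\psi\cos(\Delta\varpi)]+2\psi^{N+1}\{\cos[(N+1)\Delta\varpi]-2\psi\cos(N\Delta\varpi)+\psi^2\cos[(N-1)\Delta\varpi]\}}{N^2[1+\psi^2-2\psi\cos(\Delta\varpi)]^2}-\frac{2\psi[(1+\psi^2)\cos(\Delta\varpi)-2\psi]}{N^2[1+\psi^2-2\psi\cos(\Delta\varpi)]^2}, \tag{12.198}$$

where, as before, ψ = exp{−αΔ}. At ϖ = 0, we obtain the formula (12.195). In the case of large numbers of samples, the formula (12.198) is simplified

$$\frac{\operatorname{Var}(E^*)}{\sigma^2}\approx\frac{1-\psi^2}{N[1+\psi^2-2\psi\cos(\Delta\varpi)]},\quad N\Delta\alpha\gg 1. \tag{12.199}$$

As we can see from (12.199), in the case of stochastic process with the correlation function given by (12.146) the equidistributed estimate may possess a variance that is less than the variance of the estimate by the same number of uncorrelated samples. Indeed, if the samples are taken at the interval

$$\Delta=\frac{\pi+2\pi k}{\varpi},\quad k=0,1,\ldots, \tag{12.200}$$

then the minimal magnitude of the normalized variance of estimate can be presented in the following form:

$$\left.\frac{\operatorname{Var}(E^*)}{\sigma^2}\right|_{\min}\approx\frac{1}{N}\times\frac{1-\psi}{1+\psi},\quad N\Delta\alpha\gg 1. \tag{12.201}$$

Otherwise, if the interval between samples is chosen such that

$$\Delta=\frac{2\pi k}{\varpi},\quad k=0,1,\ldots, \tag{12.202}$$

then the maximum value of the normalized variance of estimate takes the following form:

$$\left.\frac{\operatorname{Var}(E^*)}{\sigma^2}\right|_{\max}\approx\frac{1}{N}\times\frac{1+\psi}{1-\psi}. \tag{12.203}$$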

Thus, for some types of correlation functions, the variance of the equidistributed estimate of mathematical expectation by the correlated samples can be less than the variance of estimate by the same number of uncorrelated samples.

If the interval between samples Δ is taken without paying attention to the conditions discussed previously, then the value Δϖ = φ can be considered as the random variable with the uniform distribution within the limits of the interval [0, 2π]. Averaging (12.199) with respect to the random variable φ uniformly distributed within the limits of the interval [0, 2π], we obtain the variance of the mathematical expectation estimate of stochastic process by N uncorrelated samples

$$\left\{\frac{\operatorname{Var}(E^*)}{\sigma^2}\right\}_{\varphi}=\frac{1-\psi^2}{2\pi N}\int_0^{2\pi}\frac{d\varphi}{1+\psi^2-2\psi\cos\varphi}=\frac{1}{N}. \tag{12.204}$$
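The dependence of the sample-mean variance on the phase step φ = Δϖ can be explored by summing (12.194) directly with the normalized correlation ψ^i cos(iφ) and comparing with the large-N approximation (12.199). The sketch below also averages (12.199) over φ; the function names, N = 2000, and ψ = 0.5 are assumptions chosen for the illustration.

```python
import math

def var_direct(n, psi, phi):
    """(12.194) with normalized correlation psi^i * cos(i*phi), sigma^2 = 1."""
    s = sum((1.0 - i / n) * psi**i * math.cos(i * phi) for i in range(1, n))
    return (1.0 + 2.0 * s) / n

def var_large_n(n, psi, phi):
    """Large-N approximation (12.199)."""
    return (1.0 - psi**2) / (n * (1.0 + psi**2 - 2.0 * psi * math.cos(phi)))

def phase_average(n, psi, m=2000):
    """Midpoint-rule average of (12.199) over phi uniform on [0, 2*pi], as in (12.204)."""
    return sum(var_large_n(n, psi, 2.0 * math.pi * (k + 0.5) / m) for k in range(m)) / m

N, psi = 2000, 0.5
v_min = var_direct(N, psi, math.pi)   # phi = pi, sampling interval of the form (12.200)
v_max = var_direct(N, psi, 0.0)       # phi = 0 (mod 2*pi), sampling interval of the form (12.202)
v_avg = phase_average(N, psi)
print(v_min * N, v_max * N, v_avg * N)
```

With ψ = 0.5 the products N·Var should be close to 1/3, 3, and 1, matching (12.201), (12.203), and (12.204), respectively.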

Of definite interest for the definition of the mathematical expectation estimate is the method to measure the stochastic process parameters by additional signals [13, 14]. In this case, the realization x(ti) = xi of the observed stochastic process ξ(ti) = ξi is compared with the realization v(ti) = vi of the additional stochastic process ζ(ti) = ζi. A distinct feature of this measurement method is that the values xi of the observed stochastic process realization must be with the high probability within the limits of the interval of possible values of the additional stochastic process. Usually, it is assumed that the values of the additional stochastic process are independent from each other and from the values of the observed stochastic process.

To further simplify an analysis of the stochastic process parameters and the definition of the mathematical expectation, we believe that the values xi are independent of each other and the random variables ζi are uniformly distributed within the limits of the interval [−A, A], that is,

$$f(v)=\frac{1}{2A},\quad -A\le v\le A. \tag{12.205}$$

As applied to the pdf given by (12.205), the following condition must be satisfied

$$P[-A\le\xi\le A]\approx 1 \tag{12.206}$$

in the case of the considered method to measure the stochastic process parameters. As a result of comparison, a new independent random variable sequence ςi is formed:

$$\varsigma_i=x_i-v_i. \tag{12.207}$$

The random variable sequence ςi can be transformed to the new independent random variable sequence ηi by the nonlinear inertialess transformation g(ε)

$$\eta_i=g(\varepsilon_i)=\operatorname{sgn}[\varsigma_i=\xi_i-\zeta_i]=\begin{cases}1, & \xi_i\ge\zeta_i,\\ -1, & \xi_i<\zeta_i.\end{cases} \tag{12.208}$$

Determine the mathematical expectation of random variable ηi under the condition that the random variable ξi takes the fixed value x and the following condition |x| ≤ A is satisfied:

$$\langle(\eta_i\mid x)\rangle=1\times P(v<x)-1\times P(v>x)=2\times P(v<x)-1=\frac{x}{A}. \tag{12.209}$$

The unconditional mathematical expectation of the random variable ηi can be presented in the following form:

$$\langle\eta_i\rangle=\int_{-A}^{A}\langle(\eta_i\mid x)\rangle p(x)\,dx\approx\frac{1}{A}\int_{-\infty}^{\infty}x\,p(x)\,dx=\frac{E_0}{A}. \tag{12.210}$$

Based on the obtained representation, we can consider the following value

˜E=ANN∑i=1yi,(12.211)

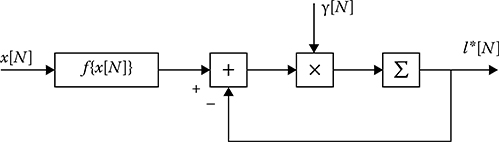

as the mathematical expectation estimate of random variable, where yi is the sample of random sequence ηi. At that point, it is not difficult to see that the considered mathematical expectation estimate is unbiased for the accepted conditions. The structural diagram of device measuring the mathematical expectation using the additional signals is shown in Figure 12.11. The counter defines a difference between the positive and negative pulses forming at the transformer output g(ε).The functional purpose of other elements is clear from the diagram.

FIGURE 12.11 Measurer of mathematical expectation.

If we take into consideration the condition that P(x < −A) ≠ 0 and P(x > A) ≠ 0, then the mathematical expectation estimate given by (12.211) has a bias defined as

$$b(\tilde{E})=\langle\tilde{E}\rangle-E_0=-\left\{\int_{-\infty}^{-A}x\,p(x)\,dx+\int_{A}^{\infty}x\,p(x)\,dx\right\}. \tag{12.212}$$

The variance of the mathematical expectation given by (12.211) can be presented in the following form:

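$$\operatorname{Var}(\tilde{E})=\frac{A^2}{N^2}\sum_{i=1,j=1}^{N}\langle y_iy_j\rangle-E_0^2. \tag{12.213}$$

Taking into consideration the statistical independence of the samples yi, we have

$$\langle y_iy_j\rangle=\begin{cases}\langle y_i^2\rangle, & i=j,\\ \langle y_i\rangle\langle y_j\rangle, & i\ne j.\end{cases} \tag{12.214}$$

According to (12.208),

$$\langle\eta_i^2\rangle=\langle(\eta_i\mid x)^2\rangle=\eta_i^2=y_i^2=1. \tag{12.215}$$

Consequently, the variance of the mathematical expectation estimate is simplified and takes the following form:

$$\operatorname{Var}(\tilde{E})=\frac{A^2}{N}\left(1-\frac{E_0^2}{A^2}\right). \tag{12.216}$$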

As we can see from (12.216), since E0² < A², the variance of the mathematical expectation estimate of stochastic process is determined mainly by the half-interval A of possible values of the additional random sequence.

Comparing the variance of the mathematical expectation estimate given by (12.216) with the variance of the mathematical expectation estimate by N independent samples given by (12.183)

$$\frac{\operatorname{Var}(\tilde{E})}{\operatorname{Var}(E^*)}=\frac{A^2}{\sigma^2}\left(1-\frac{E_0^2}{A^2}\right), \tag{12.217}$$

we see that the considered procedure to estimate the mathematical expectation possesses a higher variance, since A² > σ² and E0² < A².
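A small Monte Carlo experiment illustrates the additional-signal estimator: each sample is compared with an independent uniform value on [−A, A], the signs are averaged, and the result is scaled by A. The sketch assumes independent Gaussian samples with E0 = 1, σ = 1 and A = 5 (so that samples outside [−A, A] are extremely rare); the seed, sample sizes, and function names are arbitrary choices for the illustration.

```python
import random

def additional_signal_estimate(xs, a, rng):
    """A/N times the sum of sgn(x_i - v_i), with v_i uniform on [-a, a]."""
    ys = [1 if x >= rng.uniform(-a, a) else -1 for x in xs]
    return a * sum(ys) / len(ys)

rng = random.Random(1)  # fixed seed so the run is repeatable
A, E0, sigma, N, trials = 5.0, 1.0, 1.0, 200, 2000

estimates = []
for _ in range(trials):
    xs = [rng.gauss(E0, sigma) for _ in range(N)]
    estimates.append(additional_signal_estimate(xs, A, rng))

mean_est = sum(estimates) / trials
var_est = sum((e - mean_est)**2 for e in estimates) / trials
var_theory = A * A / N * (1.0 - E0**2 / A**2)   # (A^2/N)(1 - E0^2/A^2)
var_sample_mean = sigma**2 / N                  # sigma^2/N for the ordinary sample mean
print(mean_est, var_est, var_theory, var_sample_mean)
```

In this configuration the theoretical variance is 0.12 against 0.005 for the ordinary sample mean, which shows the price paid for replacing each sample by a single sign.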

If we know a priori that the observed stochastic sequence v(ti) = vi is a positive value, then we can use the following pdf:

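$$p(v)=\frac{1}{A},\quad 0\le v\le A, \tag{12.218}$$

and the following function:

$$\eta_i=g(\varepsilon_i)=\begin{cases}1, & \xi_i\ge\zeta_i,\\ 0, & \xi_i<\zeta_i\end{cases} \tag{12.219}$$

as the nonlinear transformation η = g(ε). In doing so, the following condition must be satisfied:

$$P[0\le\xi\le A]\approx 1. \tag{12.220}$$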

As we can see from (12.220), this condition is analogous to the condition given by (12.206).

The conditional mathematical expectation of random variable ηi at ξi = x takes the following form:

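$$\langle(\eta_i\mid x)\rangle=1\times P(v<x)+0\times P(v>x)=\int_0^x p(v)\,dv=\frac{x}{A}. \tag{12.221}$$

In doing so, the unconditional mathematical expectation of the random variable ηi is defined in the following form:

$$\langle\eta_i\rangle\approx\frac{1}{A}\int_0^\infty x\,p(x)\,dx=\frac{E_0}{A}. \tag{12.222}$$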

For this reason, if the mathematical expectation estimate of the random sequence ξi is defined by (12.211) it will be unbiased at the first approximation.

The variance of the mathematical expectation estimate, as we discussed previously, is given by (12.213). In doing so, the conditional second moment of the random variable ηi is determined analogously as shown in (12.221):

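$$\langle(\eta_i\mid x)^2\rangle=\int_0^x p(v)\,dv=\frac{x}{A}. \tag{12.223}$$

The unconditional moment is given by

$$\langle\eta_i^2\rangle=\langle y_i^2\rangle=\int_0^\infty\langle(\eta_i\mid x)^2\rangle p(x)\,dx=\frac{E_0}{A}. \tag{12.224}$$

Taking into consideration (12.223) and (12.224) under definition of the variance of the mathematical expectation estimate, we have

$$\operatorname{Var}(\tilde{E})=\frac{AE_0}{N}\left(1-\frac{E_0}{A}\right). \tag{12.225}$$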
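The intermediate steps leading to this variance can be written out as follows. This is a worked sketch that uses only (12.213), (12.214), the first moment ⟨y_i⟩ = E0/A from (12.222), and the second moment ⟨y_i²⟩ = E0/A from (12.224); the double sum contains N diagonal terms and N(N − 1) off-diagonal terms.

```latex
\begin{aligned}
\operatorname{Var}(\tilde{E})
  &= \frac{A^2}{N^2}\Big[N\langle y_i^2\rangle + N(N-1)\langle y_i\rangle^2\Big] - E_0^2 \\
  &= \frac{A^2}{N^2}\left[N\frac{E_0}{A} + N(N-1)\frac{E_0^2}{A^2}\right] - E_0^2 \\
  &= \frac{AE_0}{N} + \frac{N-1}{N}E_0^2 - E_0^2
   = \frac{AE_0}{N} - \frac{E_0^2}{N}
   = \frac{AE_0}{N}\left(1-\frac{E_0}{A}\right).
\end{aligned}
```

The same counting argument with ⟨y_i²⟩ = 1 and ⟨y_i⟩ = E0/A gives the variance of the sign-based estimate for the symmetric interval [−A, A].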

Thus, in the considered case, the variance of the mathematical expectation estimate is defined by the interval of possible values of the additional stochastic sequence and is independent of the variance of the observed stochastic process and is forever more than the variance of the equidistributed estimate of the mathematical expectation by independent samples. For example, if the observed stochastic sequence subjected to the uniform pdf coinciding in the limiting case with (12.218), then the variance of the mathematical expectation for the considered procedure is defined as

$$\mathrm{Var}(\tilde{E})=\frac{A^2}{4N} \tag{12.226}$$

and the variance of the mathematical expectation in the case of equidistributed estimate of the mathematical expectation is given by

$$\mathrm{Var}(E^*)=\frac{A^2}{12N}; \tag{12.227}$$

that is, in the considered limiting case, when both the observed and the additional random sequences obey the uniform pdf, the variance of the mathematical expectation estimate obtained with additional stochastic signals is three times the variance of the equidistributed estimate of the mathematical expectation. Under other conditions, the difference between the variances is greater.
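The relation between (12.225), (12.226), and (12.227) can be checked numerically. The sketch below is an illustration under stated assumptions, not the procedure of the text: it assumes that the estimate (12.211), which is not restated in this section, has the form Ẽ = (A/N)Ση_i, that the additional sequence ζ_i is drawn independently and uniformly from [0, A] as in (12.218), and that η_i follows (12.219). All function names are hypothetical.

```python
import random
import statistics

def comparator_estimate(samples, A, rng):
    """Assumed form of (12.211): A times the fraction of samples that are not
    below an independent threshold zeta_i uniform on [0, A], cf. (12.219)."""
    hits = sum(1 for x in samples if x >= rng.uniform(0.0, A))
    return A * hits / len(samples)

def variance_comparator(A, E0, N):
    """Variance of the comparator-based estimate according to (12.225)."""
    return A * E0 / N * (1.0 - E0 / A)

def variance_sample_mean_uniform(A, N):
    """Variance of the mean of N independent samples uniform on [0, A], (12.227)."""
    return A ** 2 / (12.0 * N)

def simulate(A=1.0, N=200, trials=2000, seed=1):
    """Empirical mean and variance of the comparator estimate when the observed
    sequence is itself uniform on [0, A] (the limiting case of the text)."""
    rng = random.Random(seed)
    estimates = []
    for _ in range(trials):
        samples = [rng.uniform(0.0, A) for _ in range(N)]
        estimates.append(comparator_estimate(samples, A, rng))
    return statistics.mean(estimates), statistics.pvariance(estimates)

if __name__ == "__main__":
    mean_est, var_est = simulate()
    print("empirical mean     :", mean_est)   # should be close to E0 = A/2
    print("empirical variance :", var_est)    # should be close to A^2/(4N)
    print("theory (12.226)    :", variance_comparator(1.0, 0.5, 200))
    print("theory (12.227)    :", variance_sample_mean_uniform(1.0, 200))
```

With E0 = A/2, the function `variance_comparator` reproduces A²/(4N), so the ratio to `variance_sample_mean_uniform` is 3, in agreement with the statement above.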

As applied to (12.219), the flowchart of the mathematical expectation measurer using additional stochastic signals is the same as that shown in Figure 12.11, but the counter registers the positive pulses only, in accordance with (12.219).

12.6 Mathematical Expectation Estimate Under Stochastic Process Amplitude Quantization

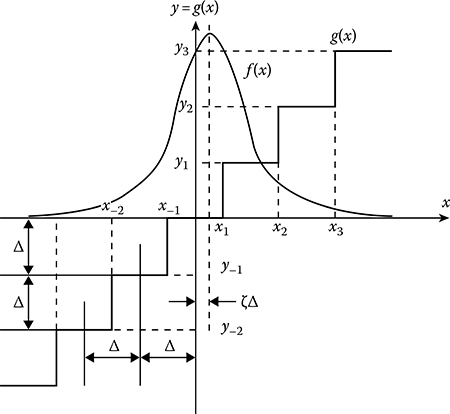

Let us determine how quantization of a stochastic process by amplitude affects the estimate of its mathematical expectation. We assume that quantization can be considered as an inertialess nonlinear transformation with a constant quantization step, and that the number of quantization levels is so high that the quantized stochastic process cannot fall outside the limits of the staircase characteristic of the transform g(x), the approximate form of which is shown in Figure 12.12. Figure 12.12 also presents the pdf p(x) of an observed stochastic process whose mathematical expectation does not coincide with the midpoint between the quantization thresholds $x_i$ and $x_{i+1}$.

Transformation or quantization characteristic y = g(x) can be presented in the form of summation of the rectangular functions shown in Figure 12.13, the width and height of which are equal to the quantization step:

$$g(x)=\sum_{k=-\infty}^{\infty}k\Delta\,a(x-k\Delta), \tag{12.228}$$

where a(z) is the rectangular function with unit height and the width equal to Δ. Hence, we can use the following mathematical expectation estimate

$$E=\sum_{k=-\infty}^{\infty}k\Delta\frac{T_k}{T}, \tag{12.229}$$

FIGURE 12.12 Staircase characteristic of quantization.

FIGURE 12.13 Rectangular function.

where $T_k=\sum_i\tau_i$ is the total time during which the observed realization lies within the limits of the interval (k ± 0.5)Δ over the observation interval [0, T]. In doing so, $\lim_{T\to\infty}T_k/T$ is the probability that the stochastic process is within the limits of the interval (k ± 0.5)Δ.
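A discrete-time sketch of (12.228) and (12.229) is given below. It assumes uniformly spaced samples, so that the ratio T_k/T is approximated by the fraction of samples falling into the kth interval, and it assigns a sample lying exactly on a threshold to the upper interval; both choices, as well as the function names, are assumptions made for illustration.

```python
import math
from collections import Counter

def quantize_index(x, delta):
    """Index k of the interval [(k - 0.5)*delta, (k + 0.5)*delta) that contains x."""
    return math.floor(x / delta + 0.5)

def g(x, delta):
    """Staircase characteristic (12.228): the output level k*delta."""
    return quantize_index(x, delta) * delta

def occupancy_estimate(samples, delta):
    """Discrete analogue of (12.229): sum over k of k*delta*(T_k/T), where
    T_k/T is approximated by the fraction of samples in interval k."""
    counts = Counter(quantize_index(x, delta) for x in samples)
    total = len(samples)
    return sum(k * delta * n / total for k, n in counts.items())

if __name__ == "__main__":
    xs = [0.1, 0.4, 0.6, 1.2, 1.6, -0.7]
    print("occupancy estimate   :", occupancy_estimate(xs, 1.0))
    print("mean of g(x) directly:", sum(g(x, 1.0) for x in xs) / len(xs))
```

The two printed values agree because weighting each level by its occupancy fraction is the same as averaging the quantizer output over all samples.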

The mathematical expectation of the realization y(t) formed at the output of the inertialess element (transducer) with the transform characteristic given by (12.228), when the realization x(t) of the stochastic process ξ(t) is applied to its input, is equal to the mathematical expectation of the estimate given by (12.229) and is defined as

$$\langle E\rangle=\int_{-\infty}^{\infty}g(x)p(x)\,dx=\Delta\sum_{k=-\infty}^{\infty}k\int_{(k-0.5)\Delta}^{(k+0.5)\Delta}p(x)\,dx, \tag{12.230}$$

where p(x) is the one-dimensional pdf of the observed stochastic process. In general, the mathematical expectation of the estimate 〈E〉 differs from the true value E0; that is, quantization introduces a bias of the mathematical expectation estimate defined as

$$b(E)=\langle E\rangle-E_0. \tag{12.231}$$

To determine the variance of the mathematical expectation estimate according to (12.116), there is a need to define the correlation function of process forming at the transducer output, that is,

$$R_y(\tau)=\int_{-\infty}^{\infty}\int_{-\infty}^{\infty}g(x_1)g(x_2)\,p_2(x_1,x_2;\tau)\,dx_1\,dx_2-\langle E\rangle^2, \tag{12.232}$$

where p2(x1, x2; τ) is the two-dimensional pdf of the observed stochastic process.

Since the mathematical expectation and the correlation function of process forming at the transducer output depend on the observed stochastic process pdf, the characteristics of the mathematical expectation estimate of stochastic process quantized by amplitude depend both on the correlation function and on the pdf of observed stochastic process.

Apply the obtained results to the Gaussian stochastic process with the one-dimensional pdf given by (12.153) and the two-dimensional pdf of the following form:

$$p_2(x_1,x_2;\tau)=\frac{1}{2\pi\sigma^2\sqrt{1-R^2(\tau)}}\exp\left\{-\frac{(x_1-E_0)^2+(x_2-E_0)^2-2R(\tau)(x_1-E_0)(x_2-E_0)}{2\sigma^2[1-R^2(\tau)]}\right\}. \tag{12.233}$$

Define the mathematical expectation E0 using the quantization step Δ:

$$E_0=(c+d)\Delta, \tag{12.234}$$

where c is an integer and −0.5 < d < 0.5. The value dΔ is equal to the deviation of the mathematical expectation from the middle of the quantization interval (step). Further, we take into consideration that, for the considered staircase characteristic of the transducer (transformer), the following relation holds:

$$g(x+w\Delta)=g(x)+w\Delta, \tag{12.235}$$

where w = 0, ±1, ±2,… is the integer. Substituting (12.153) into (12.230) and taking into consideration (12.234) and (12.235), we can define the conditional mathematical expectation in the following form:

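$$\langle E\mid d\rangle=\frac{1}{\sqrt{2\pi\sigma^2}}\int_{-\infty}^{\infty}g(x)\exp\left\{-\frac{[(x-c\Delta)-d\Delta]^2}{2\sigma^2}\right\}dx=\sum_{k=-\infty}^{\infty}k\Delta\bigl\{Q[(k-0.5-d)\lambda]-Q[(k+0.5-d)\lambda]\bigr\}+c\Delta, \tag{12.236}$$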

where

$$\lambda=\frac{\Delta}{\sigma} \tag{12.237}$$

is the ratio between the quantization step and root-mean-square deviation of stochastic process; Q(x) is the Gaussian Q function given by (12.68).
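The step from (12.230) to (12.236) can be spelled out as follows. The sketch assumes that Q(x) in (12.68) denotes the upper-tail probability of the standard Gaussian distribution, $Q(x)=\frac{1}{\sqrt{2\pi}}\int_x^{\infty}e^{-t^2/2}\,dt$; this is consistent with the form of (12.236) but is not restated in this section. Substituting x = z + cΔ and using (12.235) with w = c, we can write

$$
\begin{aligned}
\langle E\mid d\rangle
&=\frac{1}{\sqrt{2\pi\sigma^2}}\int_{-\infty}^{\infty}\bigl[g(z)+c\Delta\bigr]\exp\left\{-\frac{(z-d\Delta)^2}{2\sigma^2}\right\}dz\\
&=c\Delta+\sum_{k=-\infty}^{\infty}k\Delta\,\frac{1}{\sqrt{2\pi\sigma^2}}\int_{(k-0.5)\Delta}^{(k+0.5)\Delta}\exp\left\{-\frac{(z-d\Delta)^2}{2\sigma^2}\right\}dz\\
&=c\Delta+\sum_{k=-\infty}^{\infty}k\Delta\left\{Q\left[\frac{(k-0.5)\Delta-d\Delta}{\sigma}\right]-Q\left[\frac{(k+0.5)\Delta-d\Delta}{\sigma}\right]\right\},
\end{aligned}
$$

which coincides with (12.236) after the substitution λ = Δ/σ from (12.237).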

The conditional bias of the mathematical expectation estimate can be presented in the following form:

$$b(E\mid d)=\Delta\sum_{k=-\infty}^{\infty}k\bigl\{Q[(k-0.5-d)\lambda]-Q[(k+0.5-d)\lambda]\bigr\}-d\Delta. \tag{12.238}$$

It is easy to see that the conditional bias is an odd function of d, that is,

$$b(E\mid d)=-b(E\mid -d), \tag{12.239}$$

and, in addition, if d = 0 or d = ±0.5, the mathematical expectation estimate is unbiased. If λ ≫ 1, in practice at λ ≥ 5, (12.238) is simplified and takes the following form:

At λ < 1, the conditional bias can be simplified. For this purpose, we expand the function in braces in (12.238) into a Taylor series about the point (k − d)λ, keeping only the first three terms of the expansion. As a result, we obtain

If λ ≪ 1, the sum in (12.241) can be replaced by an integral. Denoting x = λk and dx = λ, we obtain

As we can see from (12.241) and (12.242), at λ ≪ 1, that is, when the quantization step is much smaller than the root-mean-square deviation of the stochastic process, the mathematical expectation estimate is unbiased for all practical purposes.
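The behavior of the conditional bias described above can be examined by evaluating (12.238) directly. The following sketch assumes that Q(x) is the upper-tail probability of the standard Gaussian distribution (the definition (12.68) is not restated here) and truncates the infinite sum symmetrically; the function names and the truncation rule are illustrative choices.

```python
import math

def q_function(x):
    """Upper-tail probability of the standard Gaussian (assumed meaning of Q in (12.68))."""
    return 0.5 * math.erfc(x / math.sqrt(2.0))

def conditional_bias(d, lam, k_max=None):
    """Normalized conditional bias b(E|d)/Delta from (12.238), with the
    infinite sum truncated symmetrically at |k| <= k_max."""
    if k_max is None:
        k_max = int(12.0 / lam) + 12   # well beyond the range where terms matter
    total = 0.0
    for k in range(-k_max, k_max + 1):
        total += k * (q_function((k - 0.5 - d) * lam)
                      - q_function((k + 0.5 - d) * lam))
    return total - d

if __name__ == "__main__":
    for lam in (0.5, 1.0, 2.0, 5.0):
        row = [conditional_bias(d, lam) for d in (-0.25, 0.0, 0.25, 0.5)]
        print("lambda =", lam, ["%.3e" % b for b in row])
```

Under these assumptions, the printed values change sign with d, vanish at d = 0 and d = 0.5, and become negligible as λ decreases, which is the behavior the text attributes to (12.238).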

To obtain the unconditional bias we assume that d is the random variable uniformly distributed within the limits of the interval [−0.5; 0.5]. Let us take into consideration that

Averaging (12.238) over all possible values of d, we obtain

The terms of the series at k = p and k = −p are equal in magnitude and opposite in sign. Because of this, b(E) = 0; that is, the mathematical expectation estimate of stochastic process quantized by amplitude is unconditionally unbiased. Substituting (12.233) into (12.232), introducing new variables
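The difference between the conditional and the unconditional bias can also be seen in a simple Monte Carlo experiment. The sketch below takes c = 0 in (12.234), which by (12.235) does not affect the error, uses a deliberately coarse quantizer (λ = 5), and draws d uniformly from [−0.5, 0.5] in the unconditional case; the sample sizes, the seed, and the function names are arbitrary illustrative choices.

```python
import math
import random

def g(x, delta):
    """Staircase quantizer (12.228): nearest level k*delta."""
    return math.floor(x / delta + 0.5) * delta

def mean_error(delta, sigma, trials, rng, d=None):
    """Average of g(x) - E0 over Gaussian samples x ~ N(E0, sigma^2), E0 = d*delta.
    If d is None, a new d is drawn uniformly from [-0.5, 0.5] for every sample."""
    total = 0.0
    for _ in range(trials):
        offset = rng.uniform(-0.5, 0.5) if d is None else d
        e0 = offset * delta
        total += g(rng.gauss(e0, sigma), delta) - e0
    return total / trials

if __name__ == "__main__":
    rng = random.Random(7)
    delta, sigma = 1.0, 0.2   # lambda = delta / sigma = 5
    print("fixed d = 0.25 :", mean_error(delta, sigma, 200_000, rng, d=0.25))
    print("random d       :", mean_error(delta, sigma, 200_000, rng))
```

For the fixed offset the average error stays clearly nonzero, whereas with a uniformly distributed offset it fluctuates around zero, in line with the conclusion b(E) = 0.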

and taking into consideration (12.235), we obtain

where p2(z1, z2; τ) is the two-dimensional pdf of the Gaussian stochastic process with zero mathematical expectation. To determine (12.246), we can present the two-dimensional pdf as a series expansion in derivatives of the Gaussian Q function (12.156), setting x = z and E0 = 0 in that formula. Substituting (12.156) and (12.228) into (12.246) and taking into consideration the evenness of the function $Q^{(1)}(z/\sigma)$ and the oddness of the function g(z), we obtain

Taking the integral in the braces by parts and taking into consideration that $Q^{(\nu)}(\pm\infty)=0$ at ν ≥ 1, we obtain

According to (12.228),

where δ(z) is the Dirac delta function. Then the correlation function given by (12.247) takes the following form:

where

and the coefficients $a_\nu$ are equal to zero at even ν; that is, we can write

Substituting (12.250) into (12.116) and taking into consideration (12.252), we obtain the normalized variance of the mathematical expectation estimate of stochastic process:

where

In the limiting case as Δ → 0, we have

As we can see from (12.253), based on (12.255) we obtain (12.116). Computations of $\lambda C_{2\nu-1}$ carried out for the first five values of ν show that at λ ≤ 1.0 the formula (12.255) is approximately true with a relative error less than 0.02. In view of this, for the indicated values of λ we can limit ourselves to the first term in (12.253), especially since the contribution of the higher-order terms to the total result in (12.253) decreases proportionally to the corresponding factors. Thus, if the quantization step is not more than the root-mean-square deviation of the observed Gaussian stochastic process, (12.116) is approximately true for the definition of the variance of the mathematical expectation estimate.

12.7 Optimal Estimate of Varying Mathematical Expectation of Gaussian Stochastic Process

Consider the estimate of the varying mathematical expectation E(t) of the Gaussian stochastic process ξ(t) based on the realization x(t) observed within the limits of the interval [0, T]. We assume that the centered stochastic process ξ0(t) = ξ(t) − E(t) is stationary and that the time-varying mathematical expectation of the stochastic process can be approximated by a linear combination of the following form:

$$E(t)\approx\sum_{i=1}^{N}\alpha_i\varphi_i(t), \tag{12.256}$$

where $\alpha_i$ are the unknown factors and $\varphi_i(t)$ are the given functions of time.

If the number of terms in (12.256) is finite, that is, if N is finite, there will be a difference between E(t) and its series expansion. However, with increasing N, the approximation error tends to zero:

In this case, we say that the series given by (12.256) converges in the mean.

From the condition of minimum squared approximation error, we conclude that the factors αi are defined from the system of linear equations:
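A least-squares choice of the factors αi for a known E(t) can be sketched as follows. The example assumes that the squared error is integrated over [0, T] and replaces the integrals by sums over a uniform grid, which leads to the usual normal equations; the polynomial basis, the grid size, and the function names are illustrative assumptions rather than the choices of the text.

```python
def solve_linear(a, b):
    """Solve a*x = b by Gaussian elimination with partial pivoting (small systems)."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]   # augmented copy
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - s) / m[i][i]
    return x

def least_squares_coefficients(expectation, basis, T, points=201):
    """Factors alpha_i minimizing the summed squared difference between
    expectation(t) and sum_i alpha_i * basis[i](t) on a uniform grid over [0, T]."""
    ts = [T * k / (points - 1) for k in range(points)]
    n = len(basis)
    # Normal equations: sum_j alpha_j <phi_i, phi_j> = <E, phi_i>
    gram = [[sum(basis[i](t) * basis[j](t) for t in ts) for j in range(n)]
            for i in range(n)]
    rhs = [sum(expectation(t) * basis[i](t) for t in ts) for i in range(n)]
    return solve_linear(gram, rhs)

if __name__ == "__main__":
    basis = [lambda t: 1.0, lambda t: t, lambda t: t * t]
    print(least_squares_coefficients(lambda t: 2.0 + 3.0 * t, basis, 1.0))
```

When E(t) lies in the span of the chosen functions, as in the usage line, the factors are recovered up to rounding; otherwise the result is the best approximation in the discrete least-squares sense.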

When E(t) is represented by the series (12.256), the functions φi(t) are selected so as to ensure fast convergence of the series. However, in some cases, the main factor in selecting the functions φi(t) is the simplicity of their physical realization (generation). Thus, the problem of determining the mathematical expectation E(t) of the stochastic process ξ(t) from a single realization x(t) within the limits of the interval [0, T] reduces to estimating the coefficients αi in the series given by (12.256). In doing so, the bias and dispersion of the mathematical expectation estimate E(t) of the observed stochastic process caused by measurement errors of the coefficients αi are given in the following form:

where is the estimate of the coefficients αi. Statistical characteristics (the bias and dispersion) of the mathematical expectation estimate of stochastic process averaged within the limits of the observation interval [0, T] take the following form:

The pdf functional of the observed stochastic process, to within terms independent of the unknown mathematical expectation E(t), can be presented by analogy with (12.4) in the following form:

where

and the function υi(t) is defined by the following integral equation:

Solving the likelihood equation with respect to unknown coefficients αi

we can find the system of N linear equations

with respect to the estimates . Based on (12.268), we can obtain the estimates of coefficients

where

is the determinant of the system of linear equations given by (12.268),

is the determinant obtained from the determinant A given by (12.270) by replacing the column cim with the column yi; Aim is the cofactor of the elements of the mth column (the column yi). In doing so, the following relationship

is true for the square matrix ||cij||.
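The determinant-based solution described above is Cramer's rule. A minimal sketch for a small system is given below; the recursive cofactor expansion is chosen only for clarity (its cost grows factorially with N), and the function names are illustrative.

```python
def determinant(m):
    """Determinant by cofactor expansion along the first row (small N only)."""
    n = len(m)
    if n == 1:
        return m[0][0]
    total = 0.0
    for j in range(n):
        minor = [row[:j] + row[j + 1:] for row in m[1:]]
        total += (-1) ** j * m[0][j] * determinant(minor)
    return total

def cramer_solve(c, y):
    """Estimates alpha_m = A_m / A, where A = det(c) and A_m is det(c) with
    column m replaced by the column y."""
    a = determinant(c)
    if a == 0:
        raise ValueError("the system is singular")
    result = []
    for m in range(len(y)):
        replaced = [row[:m] + [y[i]] + row[m + 1:] for i, row in enumerate(c)]
        result.append(determinant(replaced) / a)
    return result

if __name__ == "__main__":
    c = [[2.0, 1.0], [1.0, 3.0]]   # illustrative coefficients c_ij
    y = [5.0, 10.0]                # illustrative values y_i
    print(cramer_solve(c, y))
```

For more than a few unknowns, an elimination-based solver is usually preferable to determinants, both in cost and in numerical accuracy.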

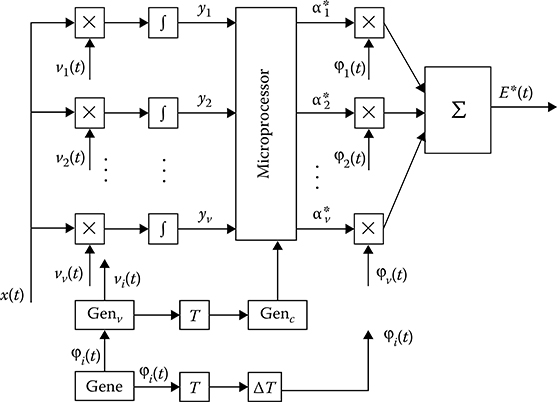

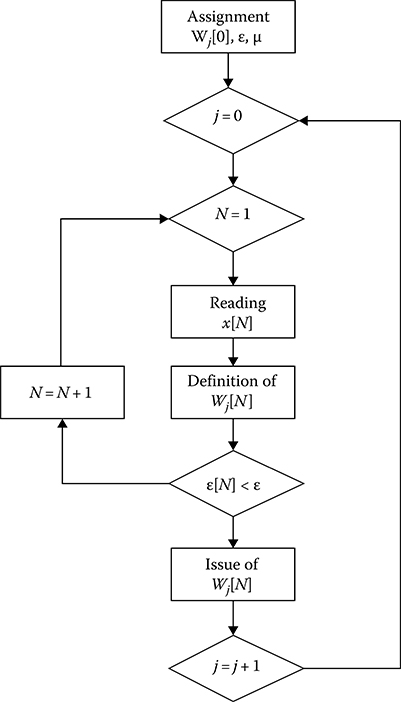

A flowchart of the optimal measurer of the varying mathematical expectation of the Gaussian stochastic process is shown in Figure 12.14. The measurer operates in the following way. Based on the previously mentioned system of functions φi(t) generated by “Genφ” and a priori information about the correlation function R(τ) of the observed stochastic process, the generator “Genv” forms the system of functions υi(t) in accordance with the integral equation (12.266). According to (12.265), the generator “GenC” generates the system of coefficients cij, which are sent to the “Microprocessor”. The magnitudes yi from the outputs of the “Integrator” are also fed to the input of the “Microprocessor”. By solving the N linear equations given by (12.268) with respect to the unknown coefficients αi, the “Microprocessor” generates their estimates. Using these estimates and the system of functions φi(t), the estimate E*(t) of the time-varying mathematical expectation E(t) is formed according to (12.256). The generated estimate E*(t) is delayed with respect to the true value by T + ΔT, which is the time required to compute the random variables yi and to solve the N linear equations in the “Microprocessor”. The delay blocks T and ΔT in the flowchart serve this purpose.
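The operation of the measurer can be imitated in discrete time. The sketch below is built on assumptions made by analogy with (12.7) through (12.9), since the formulas (12.264) through (12.268) are not reproduced here: υi(t) is taken as the solution of ∫R(t − τ)υi(τ)dτ = φi(t), yi = ∫x(t)υi(t)dt, cij = ∫φi(t)υj(t)dt, and the estimates solve Σj cij αj = yi. Integrals are replaced by sums on a uniform grid, and all names are hypothetical.

```python
import math

def solve_linear(a, b):
    """Solve a*x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        x[i] = (m[i][n] - sum(m[i][j] * x[j] for j in range(i + 1, n))) / m[i][i]
    return x

def optimal_coefficients(x, basis, corr, T):
    """x: samples of the realization on a uniform grid over [0, T];
    basis: functions phi_i(t); corr: correlation function R(tau)."""
    m = len(x)
    h = T / (m - 1)
    ts = [k * h for k in range(m)]
    # "Gen v": solve sum_j R(t_k - t_j) v_i(t_j) h = phi_i(t_k) for each i
    r = [[corr(ts[k] - ts[j]) * h for j in range(m)] for k in range(m)]
    vs = [solve_linear(r, [phi(t) for t in ts]) for phi in basis]
    n = len(basis)
    # "Gen C": c_ij = integral of phi_i(t) v_j(t) dt
    c = [[h * sum(basis[i](t) * vs[j][k] for k, t in enumerate(ts))
          for j in range(n)] for i in range(n)]
    # "Integrator": y_i = integral of x(t) v_i(t) dt
    y = [h * sum(xk * vk for xk, vk in zip(x, v)) for v in vs]
    # "Microprocessor": solve the N linear equations for the estimates
    return solve_linear(c, y)

if __name__ == "__main__":
    import random
    rng = random.Random(3)
    T, m, a = 1.0, 60, 5.0
    h = T / (m - 1)
    rho = math.exp(-a * h)
    noise, prev = [], rng.gauss(0.0, 1.0)
    for _ in range(m):                      # unit-variance exponentially correlated noise
        noise.append(prev)
        prev = rho * prev + math.sqrt(1.0 - rho * rho) * rng.gauss(0.0, 1.0)
    x = [2.0 + 3.0 * (k * h) + 0.3 * noise[k] for k in range(m)]
    corr = lambda tau: 0.09 * math.exp(-a * abs(tau))
    print(optimal_coefficients(x, [lambda t: 1.0, lambda t: t], corr, T))
```

In discrete form this procedure amounts to generalized least squares with the noise covariance matrix as the weight, so a noise-free realization of the form α1φ1(t) + α2φ2(t) returns the factors exactly, up to rounding.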

Substituting x(t) given by (12.2) into (12.264), we can write

where

FIGURE 12.14 Optimal measurer of time-varying mathematical expectation estimate.

Taking into consideration (12.272) and (12.273), the determinant given by (12.271) can be presented as the sum of two determinants

where