15 Estimate of Stochastic Process Frequency-Time Parameters

15.1 Estimate of Correlation Function

The parameters considered so far, namely the mathematical expectation, the variance, and the probability distribution and density functions, do not describe the statistical dependence between the values of a stochastic process at different instants. Depending on the specific problem, this dependence can be described by parameters such as the correlation function, the spectral density, the characteristics of spikes, the central frequency of a narrowband stochastic process, and others. Let us briefly consider the methods used to measure these parameters and define the methodological errors that arise, in general, from the finite time of observation and analysis, as applied to ergodic stochastic processes with zero mathematical expectation.

As applied to the ergodic stochastic process with zero mathematical expectation, the correlation function can be presented in the following form:

$$R(\tau)=\lim_{T\to\infty}\frac{1}{T}\int_0^T x(t)\,x(t-\tau)\,dt. \tag{15.1}$$

Since in practice the observation time or integration limits (integration time) are finite, the correlation function estimate under observation of stochastic process realization within the limits of the finite time interval [0, T] can be presented in the following form:

$$R^*(\tau)=\frac{1}{T}\int_0^T x(t)\,x(t-\tau)\,dt. \tag{15.2}$$

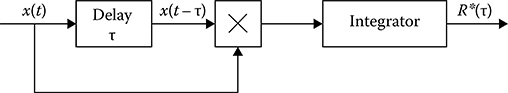

As we can see from (15.2), the main operations involved in measuring the correlation function of an ergodic stationary process are the realization of a fixed delay τ, multiplication of the process by its delayed copy, and integration or averaging of the resulting product. The flowchart of the measurer, or correlator, is depicted in Figure 15.1. To obtain the correlation function estimate for all possible values of τ, the delay must be variable. The flowchart shown in Figure 15.1 yields the correlation function sequentially for various values of the delay τ. To restore the correlation function within the given interval of delay values, the delay is, as a rule, varied discretely with the step Δτ = τk+1 − τk, k = 0, 1, 2, …. If the spectral density of the investigated stochastic process is limited by the maximum frequency fmax, then, according to the sampling theorem (the Kotelnikov theorem), the correlation function can be restored from samples taken at intervals equal to

$$\Delta\tau=\frac{1}{2f_{\max}}. \tag{15.3}$$

FIGURE 15.1 Correlator.

However, in practice, it is not convenient to restore the correlation function by means of the sampling theorem. As a rule, interpolation or smoothing of the obtained discrete values is needed. As has been shown empirically [1], it is worthwhile to choose the discrete step as

$$\Delta\tau\approx\frac{1}{(5\text{--}10)\,f_{\max}}. \tag{15.4}$$

In doing so, 5 – 10 discrete estimates of correlation function correspond to each period of the highest frequency fmax of stochastic process spectral density.
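As a small worked illustration of (15.3) and (15.4), the sketch below computes the sampling-theorem step and the empirically recommended range of steps for a given maximum frequency. The function name `delay_step_bounds` and the value fmax = 1 kHz are assumptions chosen only for the example.

```python
def delay_step_bounds(f_max):
    """Return the delay step of (15.3) and the (min, max) range of (15.4), in seconds."""
    nyquist_step = 1.0 / (2.0 * f_max)                         # (15.3)
    practical = (1.0 / (10.0 * f_max), 1.0 / (5.0 * f_max))    # (15.4): 10 and 5 points per period
    return nyquist_step, practical


# Hypothetical example: spectral density limited by f_max = 1 kHz.
nyquist, (step_min, step_max) = delay_step_bounds(1000.0)
print(nyquist, step_min, step_max)
```

For this example the recommended step lies between one fifth and two fifths of the sampling-theorem step.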

An approximation for selecting the value Δτ, based on a given error of straight-line interpolation of the correlation function estimate, was obtained in Ref. [2]:

$$\Delta\tau\approx\frac{1}{\hat f}\sqrt{0.2\,|\Delta\mathcal{R}|}, \tag{15.5}$$

where |Δℛ| is the maximum allowable interpolation error of the normalized correlation function ℛ(τ), and

$$\hat f=\sqrt{\frac{\int_0^\infty f^2S(f)\,df}{\int_0^\infty S(f)\,df}} \tag{15.6}$$

is the mean-square frequency of the spectral density S(f) of the stochastic process ξ(t).

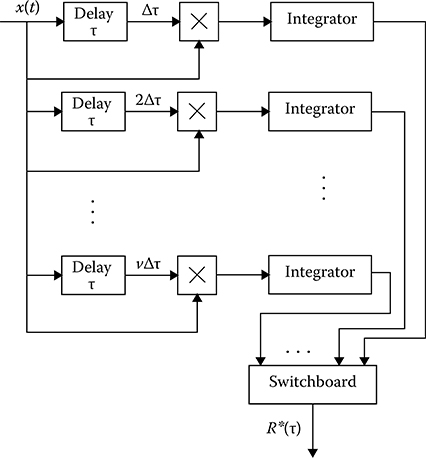

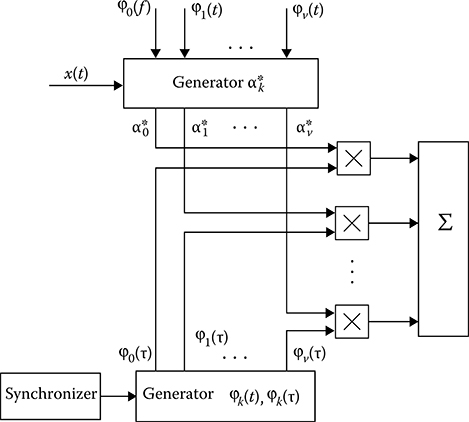

Sequential measurement of the correlation function at various values of τ = kΔτ, k = 0, 1, 2, …, v is not always acceptable because the analysis takes a long time, in the course of which the measurement conditions can change. For this reason, correlators operating in parallel can be employed. The flowchart of the multichannel correlator is shown in Figure 15.2. The voltage proportional to the discrete value of the estimated correlation function is observed at the output of each channel of the multichannel correlator. This voltage is supplied to the channel commutator, and the correlation function estimate, as a discrete time function, is formed at the commutator output. For this purpose, the commutator output is connected to the input of a low-pass filter whose time constant is matched to the request speed and the a priori behavior of the investigated correlation function.

In principle, a continuous variation of delay is possible too, for example, the linear variation. In this case, the additional errors of correlation function measurements will arise. These errors are caused by variations in delay during averaging procedure. The acceptable values of delay variation velocity are investigated in detail based on the given additional measurement error.

Methods of correlation function estimation can be classified into three groups according to how the delay and the other elements of the correlator are realized: analog, digital, and analog-to-digital. In turn, the analog measurement procedures can be divided into methods that represent the investigated stochastic process as a continuous process and methods that represent it as a sampled process. As a rule, analog methods with continuous representation of the investigated stochastic process use physical delays. When the investigated stochastic process is sampled in time, the physical delay line can be replaced by corresponding circuits. In digital procedures for measuring the correlation function estimate, the stochastic process is sampled in time and transformed into binary numbers by analog-to-digital conversion. The subsequent operations of signal delay, multiplication, and integration are carried out by shift registers, a summator, and so on.

FIGURE 15.2 Multichannel correlator.

Let us define the statistical characteristics (the bias and the variance) of the correlation function estimate given by (15.2). Averaging (15.2) over the ensemble of realizations shows that the correlation function estimate is unbiased. The variance of the correlation function estimate can be presented in the following form:

$$\operatorname{Var}\{R^*(\tau)\}=\frac{1}{T^2}\int_0^T\!\!\int_0^T\langle x(t_1)x(t_1-\tau)x(t_2)x(t_2-\tau)\rangle\,dt_1\,dt_2-R^2(\tau). \tag{15.7}$$

The fourth moment 〈x(t1)x(t1 − τ)x(t2)x(t2 − τ)〉 of Gaussian stochastic process can be determined as

$$\langle x(t_1)x(t_1-\tau)x(t_2)x(t_2-\tau)\rangle=R^2(\tau)+R^2(t_2-t_1)+R(t_2-t_1-\tau)\,R(t_2-t_1+\tau). \tag{15.8}$$

Substituting (15.8) in (15.7) and transforming the double integral into a single one by introducing the new variable z = t2 − t1, we obtain

$$\operatorname{Var}\{R^*(\tau)\}=\frac{2}{T}\int_0^T\left(1-\frac{z}{T}\right)\left[R^2(z)+R(z-\tau)\,R(z+\tau)\right]dz. \tag{15.9}$$

If the observation time interval [0, T] is much longer than the correlation interval of the investigated stochastic process, (15.9) can be simplified as

$$\operatorname{Var}\{R^*(\tau)\}=\frac{2}{T}\int_0^T\left[R^2(z)+R(z-\tau)\,R(z+\tau)\right]dz. \tag{15.10}$$

Thus, we can see that maximum value of variance of the correlation function estimate corresponds to the case τ = 0 and is equal to the variance of stochastic process variance estimate given by (13.61) and (13.62). Minimum value of variance of the correlation function estimate corresponds to the case when τ ≫ τcor and is equal to one half of the variance of the stochastic process variance estimate.

In principle, we can obtain a correlation function estimate whose variance tends to zero as τ → T. In this case, the estimate

$$\tilde R(\tau)=\frac{1}{T}\int_0^{T-|\tau|}x(t)\,x(t-\tau)\,dt \tag{15.11}$$

can be considered as the correlation function estimate when the mathematical expectation of stochastic process is equal to zero. This correlation function estimate is characterized by the bias

$$b[\tilde R(\tau)]=\frac{|\tau|}{T}R(\tau) \tag{15.12}$$

and variance

$$\operatorname{Var}\{\tilde R(\tau)\}=\frac{2}{T}\int_0^{T-|\tau|}\left(1-\frac{z+|\tau|}{T}\right)\left[R^2(z)+R(z-\tau)\,R(z+\tau)\right]dz. \tag{15.13}$$

In practice, a realization of the estimate given by (15.11) is more difficult to achieve compared to a realization of the estimate given by (15.2) since we need to change the value of the integration limits or observation time interval simultaneously with changing in the delay τ employing the one-channel measurer or correlator. Under real conditions of correlation function measurement, the observation time interval is much longer compared to the correlation interval of stochastic process. Because of this, the formula (15.13) can be approximated by the formula (15.10). Note that the correlation function measurement process is characterized by dispersion of estimate. If the correlation function estimate is given by (15.11), in the limiting case, the dispersion of estimate is equal to the square of estimate bias. For example, in the case of exponential correlation function given by (12.13) and under the condition T ≫ τcor, based on (15.10) we obtain

$$\operatorname{Var}\{R^*(\tau)\}=\frac{\sigma^4}{\alpha T}\left[1+(2\alpha\tau+1)\exp\{-2\alpha\tau\}\right]. \tag{15.14}$$
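The step from (15.10) to (15.14) can be checked numerically. The sketch below assumes the exponential correlation function R(z) = σ² exp{−α|z|} (our reading of the function referred to as (12.13)), evaluates the integral in (15.10) by the midpoint rule, and compares the result with the closed form (15.14). The parameter values are arbitrary illustrative choices.

```python
import math


def var_from_integral(sigma2, alpha, tau, T, steps=200_000):
    """Midpoint-rule evaluation of (15.10) for R(z) = sigma2 * exp(-alpha * |z|)."""
    def R(z):
        return sigma2 * math.exp(-alpha * abs(z))

    h = T / steps
    total = 0.0
    for n in range(steps):
        z = (n + 0.5) * h
        total += R(z) ** 2 + R(z - tau) * R(z + tau)
    return 2.0 / T * total * h


def var_closed_form(sigma2, alpha, tau, T):
    """Closed form (15.14), valid for T much longer than the correlation interval."""
    return sigma2 ** 2 / (alpha * T) * (
        1.0 + (2.0 * alpha * tau + 1.0) * math.exp(-2.0 * alpha * tau)
    )


numeric = var_from_integral(1.0, 1.0, 0.7, 50.0)
closed = var_closed_form(1.0, 1.0, 0.7, 50.0)
print(numeric, closed)
```

At τ = 0 the closed form gives 2σ⁴/(αT), and for ατ ≫ 1 it tends to σ⁴/(αT), in line with the factor of one half between the minimum and maximum variance noted above.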

As applied to the estimate given by (15.11) and the exponential correlation function, the variance of correlation function estimate can be presented in the following form [3]:

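$$\operatorname{Var}\{\tilde R(\tau)\}=\frac{\sigma^4}{\alpha T}\left[1+(2\alpha\tau+1)\exp\{-2\alpha\tau\}\right]-\frac{\sigma^4}{2\alpha^2T^2}\left[2\alpha\tau+1(4\alpha\tau+6\alpha^2T^2)\exp\{-2\alpha\tau\}\right], \tag{15.15}$$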

when the conditions T ≫ τcor and τ ≪ T are satisfied. Comparing (15.15) and (15.14), we can see that the variance of the correlation function estimate given by (15.11) is smaller than the variance of the estimate given by (15.2). When αT ≫ 1, this difference can be neglected because it is of the order of (αT)−1.

In addition to the ideal integrator, any linear system can be used as an integrator to obtain the correlation function estimate analogously as under the definition of estimates of the variance and the mathematical expectation of the stochastic process. In this case, the function

$$R^*(\tau)=c\int_0^\infty h(z)\,x(t-z)\,x(t-\tau-z)\,dz \tag{15.16}$$

can be considered as the correlation function estimate, where, as before, the constant c is chosen from the condition of estimate unbiasedness

$$c\int_0^\infty h(z)\,dz=1. \tag{15.17}$$

As applied to the Gaussian stochastic process, the variance of correlation function estimate as t → ∞ can be presented in the following form:

$$\operatorname{Var}\{R^*(\tau)\}=c^2\int_0^T\!\!\int_0^T h(z_1)h(z_2)\left[R^2(z_2-z_1)+R(z_2-z_1-\tau)\,R(z_2-z_1+\tau)\right]dz_1\,dz_2. \tag{15.18}$$

Introducing new variables z2 − z1 = z, z1 = υ we obtain

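$$\operatorname{Var}\{R^*(\tau)\}=c^2\left\{\int_0^T\left[R^2(z)+R(z-\tau)R(z+\tau)\right]dz\int_0^{T-\tau}h(z+\upsilon)h(\upsilon)\,d\upsilon+\int_{-T}^0\left[R^2(z)+R(z-\tau)R(z+\tau)\right]dz\int_0^T h(z+\upsilon)h(\upsilon)\,d\upsilon\right\}. \tag{15.19}$$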

Introducing the variables y = −z, υ − y and as T → ∞, we obtain

$$\operatorname{Var}\{R^*(\tau)\}=c^2\int_0^\infty\left[R^2(z)+R(z-\tau)\,R(z+\tau)\right]r_h(z)\,dz, \tag{15.20}$$

where the function rh(z) is given by (12.131) as T − τ → ∞.

Now, consider how a sampling in time of stochastic process acts on the characteristics of correlation function estimate assuming that the investigated stochastic process is a stationary process. If a stochastic process with zero mathematical expectation is observed and investigated at discrete instants, the correlation function estimate can be presented in the following form:

$$R^*(\tau)=\frac{1}{N}\sum_{i=1}^{N}x(t_i)\,x(t_i-\tau), \tag{15.21}$$

where N is the sample size. The correlation function estimate is unbiased, and the variance of correlation function estimate takes the following form:

$$\operatorname{Var}\{R^*(\tau)\}=\frac{1}{N^2}\sum_{i=1}^{N}\sum_{j=1}^{N}\langle x(t_i)x(t_i-\tau)x(t_j)x(t_j-\tau)\rangle-R^2(\tau). \tag{15.22}$$

As applied to the Gaussian stochastic process, the general formula, by analogy with the formula for the variance of correlation function estimate under continuous observation and analysis of stochastic process realization, is simplified and takes the following form:

$$\operatorname{Var}\{R^*(\tau)\}=\frac{2}{N}\sum_{i=1}^{N}\left(1-\frac{i}{N}\right)\left[R^2(iT_p)+R(iT_p-\tau)\,R(iT_p+\tau)\right], \tag{15.23}$$

where we assume that the samples are taken over equal time intervals, that is, Tp = ti − ti−1.
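The discrete estimate (15.21) amounts to delaying, multiplying, and averaging. A minimal sketch is given below; it assumes that the delay is a whole number of sampling intervals (`lag`) and that only the products for which both samples are available in the record are averaged. The function name `correlation_estimate` and the test sequence are illustrative choices.

```python
def correlation_estimate(samples, lag):
    """Average of x[i] * x[i - lag] over the indices where both samples exist."""
    if not 0 <= lag < len(samples):
        raise ValueError("lag must satisfy 0 <= lag < len(samples)")
    products = [samples[i] * samples[i - lag] for i in range(lag, len(samples))]
    return sum(products) / len(products)


# Deterministic toy record with alternating sign (zero mean).
x = [1.0, -1.0, 1.0, -1.0, 1.0, -1.0]
r0 = correlation_estimate(x, 0)   # mean-square value
r1 = correlation_estimate(x, 1)   # neighbouring samples always have opposite signs
print(r0, r1)
```

A multichannel correlator of the kind shown in Figure 15.2 corresponds to calling this function for several values of `lag` on the same record.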

If samples are pairwise independent, a definition of the variance of correlation function estimate given by (15.22) can be simplified; that is, we can use the following representation:

$$\operatorname{Var}\{R^*(\tau)\}=\frac{1}{N^2}\sum_{i=1}^{N}\langle x^2(t_i)\,x^2(t_i-\tau)\rangle-\frac{1}{N}R^2(\tau). \tag{15.24}$$

As applied to the Gaussian stochastic process with pairwise independent samples, we can write

$$\operatorname{Var}\{R^*(\tau)\}=\frac{\sigma^4}{N}\left[1+\mathcal{R}^2(\tau)\right], \tag{15.25}$$

where ℛ(τ) is the normalized correlation function of the observed stochastic process. As we can see from (15.25), the variance of correlation function estimate increases with an increase in the absolute magnitude of the normalized correlation function.
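Formula (15.25) can be illustrated by a small Monte Carlo experiment. The sketch below assumes unit-variance Gaussian samples (σ⁴ = 1) and generates each pair x(ti), x(ti − τ) with normalized correlation `rho` independently of the other pairs, which is one way to model pairwise independent samples. The seed, the sample size, and the number of trials are arbitrary assumptions, and the agreement is only statistical.

```python
import math
import random


def simulate_estimate_variance(rho, n_samples, trials, seed=0):
    """Empirical variance of the estimate (15.21) over many independent trials."""
    rng = random.Random(seed)
    c = math.sqrt(1.0 - rho * rho)
    estimates = []
    for _ in range(trials):
        acc = 0.0
        for _ in range(n_samples):
            a = rng.gauss(0.0, 1.0)                    # x(t_i)
            b = rho * a + c * rng.gauss(0.0, 1.0)      # x(t_i - tau), correlation rho with a
            acc += a * b
        estimates.append(acc / n_samples)
    mean = sum(estimates) / trials
    return sum((e - mean) ** 2 for e in estimates) / (trials - 1)


rho, n_samples = 0.6, 40
empirical = simulate_estimate_variance(rho, n_samples, trials=3000)
predicted = (1.0 + rho ** 2) / n_samples   # (15.25) with sigma^4 = 1
print(empirical, predicted)
```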

The obtained results can be generalized for the estimate of mutual correlation function of two mutually stationary stochastic processes, the realizations of which are x(t) and y(t), respectively:

$$R^*_{xy}(\tau)=\frac{1}{T}\int_0^T x(t)\,y(t-\tau)\,dt. \tag{15.26}$$

At this time, we assume that the investigated stochastic processes are characterized by zero mathematical expectations. Flowcharts to measure the mutual correlation function of stochastic processes are different from the flowcharts to measure the correlation functions presented in Figures 15.1 and 15.2 by the following: The processes x(t) and y(t − τ) or x(t − τ) and y(t) come in at the input of the mixer instead of the processes x(t) and x(t − τ). The mathematical expectation of mutual correlation function estimate can be presented in the following form:

$$\langle R^*_{xy}(\tau)\rangle=\frac{1}{T}\int_0^T\langle x(t)\,y(t-\tau)\rangle\,dt=R_{xy}(\tau) \tag{15.27}$$

that means the mutual correlation function estimate is unbiased.

As applied to the Gaussian stochastic process, the variance of mutual correlation function estimate takes the following form:

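$$\operatorname{Var}\{R^*_{xy}(\tau)\}=\frac{1}{T^2}\int_0^T\!\!\int_0^T\left\{R_{xx}(t_2-t_1)R_{yy}(t_2-t_1)+R_{xy}[\tau-(t_2-t_1)]\,R_{xy}[\tau+(t_2-t_1)]\right\}dt_1\,dt_2. \tag{15.28}$$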

As before, we introduce the variables t2 − t1 = z and t1 = υ and reduce the double integral to a single one. Thus, we obtain

$$\operatorname{Var}\{R^*_{xy}(\tau)\}=\frac{2}{T}\int_0^T\left(1-\frac{z}{T}\right)\left[R_{xx}(z)R_{yy}(z)+R_{xy}(\tau-z)\,R_{xy}(\tau+z)\right]dz. \tag{15.29}$$

At T ≫ τcorx, T ≫ τcory, T ≫ τcorxy, where τcorxy is the interval of mutual correlation of the stochastic processes, determined analogously to (12.21), the integration limits can be extended to [0, ∞) and z/T can be neglected in comparison with unity. Since the mutual correlation function can reach its maximum value at τ ≠ 0, the maximum variance of the mutual correlation function estimate can also occur at τ ≠ 0.

Now, consider the mutual correlation function between the stochastic processes at the input and at the output of a linear system with the impulse response h(t). We assume that the input process is the white Gaussian stochastic process with zero mathematical expectation and the correlation function

$$R_{xx}(\tau)=\frac{N_0}{2}\delta(\tau). \tag{15.30}$$

Denote the realization of stochastic process at the linear system input as x(t). Then, a realization of stochastic process at the linear system output in stationary mode can be presented in the following form:

$$y(t)=\int_0^\infty h(\upsilon)\,x(t-\upsilon)\,d\upsilon=\int_0^\infty h(t-\upsilon)\,x(\upsilon)\,d\upsilon. \tag{15.31}$$

The mutual correlation function between x(t − τ) and y(t) has the following form:

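$$R_{yx}(\tau)=\langle y(t)\,x(t-\tau)\rangle=\int_0^\infty h(\upsilon)\,R_{xx}(\upsilon-\tau)\,d\upsilon=\begin{cases}0.5N_0h(\tau),&\tau\ge0,\\0,&\tau<0.\end{cases} \tag{15.32}$$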

In (15.32) we assume that the integration process covers the point υ = 0.

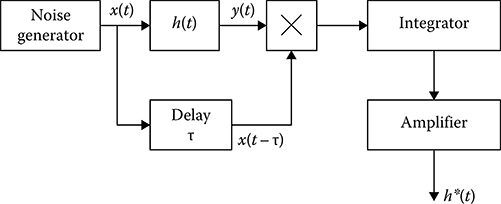

As we can see from (15.32), when white Gaussian noise excites the input of the linear system, the mutual correlation function between the stochastic processes at the output and at the input of the linear system in stationary mode coincides with the impulse characteristic of the linear system up to a constant factor. Thus, the method to measure the impulse response is based on the following relationship:

$$h^*(\tau)=\frac{2}{N_0}R^*_{yx}(\tau)=\frac{2}{N_0}\,\frac{1}{T}\int_0^T y(t)\,x(t-\tau)\,dt, \tag{15.33}$$

FIGURE 15.3 Measurement of linear system impulse response.

where y(t) is given by (15.31). The measuring process of the impulse characteristic of linear system is shown in Figure 15.3. Operation principles are clear from Figure 15.3.
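A discrete-time analog of the measurement in (15.33) can be sketched as follows. The assumptions here are ours: the white noise is replaced by independent Gaussian samples of unit variance (so the variance plays the role of N0/2 and the normalizing factor 2/N0 becomes 1), the linear system is a short hypothetical FIR filter `h_true`, and the averaging starts only after the filter has been fully loaded, which mimics the stationary mode.

```python
import random


def estimate_impulse_response(h_true, n_samples, n_lags, seed=1):
    """Estimate h[k] as the average of y[n] * x[n - k] for unit-variance white noise x."""
    rng = random.Random(seed)
    x = [rng.gauss(0.0, 1.0) for _ in range(n_samples)]
    # Filter output y[n] = sum_k h[k] * x[n - k], the discrete counterpart of (15.31).
    y = [sum(h_true[k] * x[n - k] for k in range(len(h_true)) if n - k >= 0)
         for n in range(n_samples)]
    start = max(n_lags, len(h_true))      # skip the transient at the start of the record
    count = n_samples - start
    return [sum(y[n] * x[n - lag] for n in range(start, n_samples)) / count
            for lag in range(n_lags)]


h_true = [0.5, 0.3, 0.2]                  # hypothetical impulse response
h_est = estimate_impulse_response(h_true, n_samples=20000, n_lags=5)
print(h_est)
```

The estimates at lags beyond the filter length should be close to zero, and the scatter of all estimates shrinks as the record length grows, in agreement with the 1/T behavior of the variance derived below.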

Mathematical expectation of the impulse characteristic estimate

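$$\langle h^*(\tau)\rangle=\frac{2}{N_0T}\int_0^T\!\!\int_0^\infty h(\upsilon)\langle x(t-\upsilon)\,x(t-\tau)\rangle\,d\upsilon\,dt=h(\tau), \tag{15.34}$$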

that is, the impulse response estimate is unbiased. The variance of impulse characteristic estimate takes the following form:

$$\operatorname{Var}\{h^*(\tau)\}=\frac{4}{N_0^2T^2}\int_0^T\!\!\int_0^T\langle y(t_1)y(t_2)x(t_1-\tau)x(t_2-\tau)\rangle\,dt_1\,dt_2-h^2(\tau). \tag{15.35}$$

Since the stochastic process at the linear system input is Gaussian, the stochastic process at the output of the linear system is Gaussian, too. If the condition T ≫ τcory is satisfied, where τcory is the correlation interval of the stochastic process at the output of linear system, then the stochastic process at the output of linear system is stationary. For this reason, we can use the following representation for the variance of impulse characteristic estimate

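$$\operatorname{Var}\{h^*(\tau)\}=\frac{4}{N_0^2T^2}\int_0^T\!\!\int_0^T\left\{R_{xx}(t_2-t_1)R_{yy}(t_2-t_1)+R_{yx}[\tau-(t_2-t_1)]\,R_{yx}[\tau+(t_2-t_1)]\right\}dt_1\,dt_2. \tag{15.36}$$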

Introducing new variables t2 − t1 = z, as before, the variance of impulse characteristic estimate can be presented in the following form:

Var{h*(τ)}=8N20TT∫0(1−zT)[Rxx(z)Ryy(z)+Ryx(τ−z)Ryx(τ+z)]dt1dt2+2TT∫0(1−zT)h(τ−z)h(τ+z)dz,(15.37)

where [3]

$$R_{yy}(z)=\frac{N_0}{2}\int_0^\infty h(\upsilon)\,h(\upsilon+|z|)\,d\upsilon \tag{15.38}$$

is the correlation function of the stochastic process at the linear system output in stationary mode. While calculating the second integral in (15.37), we assume that the observation time interval [0, T] is much longer than the correlation interval τcory of the stochastic process at the output of the linear system. For this reason, in principle, we can neglect the term z/T in comparison with unity. In this case, the upper integration limit can be replaced by ∞. However, since the impulse response of the linear system is zero for a negative argument, that is, h(τ) = 0 at τ < 0, the integration variable z must satisfy the following conditions:

$$\begin{cases}0<z<\infty,\\ \tau-z>0,\\ \tau+z>0.\end{cases} \tag{15.39}$$

Based on (15.39), we can find that 0 < z < τ. As a result, we obtain

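$$\operatorname{Var}\{h^*(\tau)\}=\frac{2}{T}\left\{\int_0^\infty h^2(\upsilon)\,d\upsilon+\int_0^\tau h(\tau-\upsilon)\,h(\tau+\upsilon)\,d\upsilon\right\}. \tag{15.40}$$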

As applied to the impulse responses of the form

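$$h_1(\tau)=\frac{1}{T_1},\quad 0<\tau<T_1,\quad T>T_1 \tag{15.41}$$

and

$$h_2(\tau)=\alpha\exp(-\alpha\tau), \tag{15.42}$$

the variances of impulse characteristic estimates can be presented in the following form:

$$\operatorname{Var}\{h_1^*(\tau)\}=\frac{2}{TT_1}\times\begin{cases}1+\dfrac{\tau}{T_1},&0\le\tau\le0.5T_1,\\[4pt]2-\dfrac{\tau}{T_1},&0.5T_1\le\tau\le T_1,\end{cases} \tag{15.43}$$

$$\operatorname{Var}\{h_2^*(\tau)\}=\frac{\alpha}{T}\left[1+2\alpha\tau\exp\{-2\alpha\tau\}\right]. \tag{15.44}$$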
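The closed forms (15.43) and (15.44) follow from (15.40) by direct integration, and this can be checked numerically. The sketch below evaluates both integrals in (15.40) by the midpoint rule for the two impulse responses (15.41) and (15.42). The parameter values and the truncation point `upper` of the infinite integral are assumptions made for the example.

```python
import math


def var_impulse_estimate(h, tau, T, upper, steps=100_000):
    """Midpoint-rule evaluation of (15.40); `upper` truncates the infinite integral."""
    d1 = upper / steps
    energy = sum(h((n + 0.5) * d1) ** 2 for n in range(steps)) * d1
    d2 = tau / steps
    cross = sum(h(tau - (n + 0.5) * d2) * h(tau + (n + 0.5) * d2)
                for n in range(steps)) * d2
    return 2.0 / T * (energy + cross)


T, T1, alpha = 100.0, 2.0, 1.5


def h1(t):
    """Rectangular impulse response (15.41)."""
    return 1.0 / T1 if 0.0 < t < T1 else 0.0


def h2(t):
    """Exponential impulse response (15.42), zero for negative argument."""
    return alpha * math.exp(-alpha * t) if t >= 0.0 else 0.0


tau = 0.5   # lies in the first branch of (15.43): 0 <= tau <= 0.5 * T1
v1_numeric = var_impulse_estimate(h1, tau, T, upper=T1)
v1_closed = 2.0 / (T * T1) * (1.0 + tau / T1)                                       # (15.43)
v2_numeric = var_impulse_estimate(h2, tau, T, upper=40.0 / alpha)
v2_closed = alpha / T * (1.0 + 2.0 * alpha * tau * math.exp(-2.0 * alpha * tau))    # (15.44)
print(v1_numeric, v1_closed, v2_numeric, v2_closed)
```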

15.2 Correlation Function Estimation Based on its Expansion in Series

The correlation function of stationary stochastic process can be presented in the form of expansion in series with respect to earlier-given normalized orthogonal functions φ(t):

$$R(\tau)=\sum_{k=0}^{\infty}\alpha_k\varphi_k(\tau), \tag{15.45}$$

where the unknown coefficients αk are given by

$$\alpha_k=\int_{-\infty}^{\infty}\varphi_k(\tau)\,R(\tau)\,d\tau, \tag{15.46}$$

and (14.123) is true in the case of normalized orthogonal functions. The number of terms of the expansion in series (15.45) is limited by some magnitude v. Under approximation of correlation function by the expansion in series with the finite number of terms, the following error

$$\varepsilon(\tau)=R(\tau)-\sum_{k=0}^{v}\alpha_k\varphi_k(\tau)=R(\tau)-R_v(\tau) \tag{15.47}$$

always exists. This error can be reduced to a prescribed negligible value by an appropriate selection of the orthogonal functions φk(t) and of the number of terms of the expansion in series.

Thus, the approximation accuracy is characterized by the total squared deviation of the approximated correlation function Rv(τ) from the true correlation function R(τ) over all possible values of the argument τ

$$\varepsilon^2=\int_{-\infty}^{\infty}\varepsilon^2(\tau)\,d\tau=\int_{-\infty}^{\infty}R^2(\tau)\,d\tau-\sum_{k=0}^{v}\alpha_k^2. \tag{15.48}$$

Formula (15.48) is based on (15.45). The original method to measure the correlation function based on its representation in the form of expansion in series

$$R^*_v(\tau)=\sum_{k=0}^{v}\alpha^*_k\varphi_k(\tau) \tag{15.49}$$

by the earlier-given orthogonal functions φk(t) and measuring the weight coefficients αk was discussed in Ref. [4]. According to (15.46), the following representation

$$\alpha_k=\lim_{T\to\infty}\int_{-\infty}^{\infty}\varphi_k(\tau)\left\{\frac{1}{T}\int_0^T x(t)\,x(t-\tau)\,dt\right\}d\tau \tag{15.50}$$

is true in the case of ergodic stochastic process with zero mathematical expectation. In line with this fact, the estimates of unknown coefficients of expansion in series can be obtained based on the following representation:

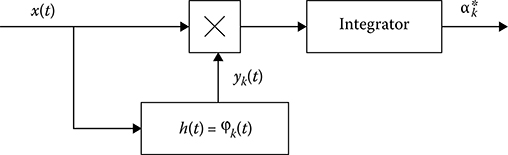

$$\alpha^*_k=\frac{1}{T}\int_0^T x(t)\left\{\int_0^\infty x(t-\tau)\,\varphi_k(\tau)\,d\tau\right\}dt. \tag{15.51}$$

The integral in the braces

$$y_k(t)=\int_0^\infty x(t-\tau)\,\varphi_k(\tau)\,d\tau \tag{15.52}$$

is the signal at the output of linear filter operating in stationary mode with impulse response given by

$$h_k(t)=\begin{cases}0&\text{if }t<0,\\ \varphi_k(t)&\text{if }t\ge0,\end{cases} \tag{15.53}$$

matched with the earlier-given orthogonal function φ(t). As we can see from (15.51), the mathematical expectation of estimate

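$$\langle\alpha^*_k\rangle=\int_0^\infty\varphi_k(\tau)\left\{\frac{1}{T}\int_0^T\langle x(t)\,x(t-\tau)\rangle\,dt\right\}d\tau=\int_0^\infty\varphi_k(\tau)\,R(\tau)\,d\tau=\alpha_k \tag{15.54}$$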

is matched with the true value; in other words, the estimate of coefficients of expansion in series is unbiased.

The estimate variance of expansion in series coefficients can be presented in the following form:

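$$\operatorname{Var}\{\alpha^*_k\}=\frac{1}{T^2}\int_0^T\!\!\int_0^T\langle x(t_1)x(t_2)y_k(t_1)y_k(t_2)\rangle\,dt_1\,dt_2-\left\{\frac{1}{T}\int_0^T\langle x(t)\,y_k(t)\rangle\,dt\right\}^2. \tag{15.55}$$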

As applied to the stationary Gaussian stochastic process, the stochastic process forming at the output of orthogonal filter will also be the stationary Gaussian stochastic process for the considered case. Because of this, we can write

$$\operatorname{Var}\{\alpha^*_k\}=\frac{1}{T^2}\int_0^T\!\!\int_0^T\left[R(t_2-t_1)R_{y_k}(t_2-t_1)+R_{xy_k}(t_2-t_1)R_{y_kx}(t_2-t_1)\right]dt_1\,dt_2, \tag{15.56}$$

where

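$$R_{y_k}(\tau)=\int_0^\infty\!\!\int_0^\infty R(\tau+\nu-\kappa)\,\varphi_k(\kappa)\,\varphi_k(\nu)\,d\kappa\,d\nu; \tag{15.57}$$

$$R_{xy_k}(\tau)=\int_0^\infty R(\tau-\nu)\,\varphi_k(\nu)\,d\nu; \tag{15.58}$$

$$R_{y_kx}(\tau)=\int_0^\infty R(\tau+\nu)\,\varphi_k(\nu)\,d\nu. \tag{15.59}$$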

Introducing new variables t2 − t1 = τ, t1 = t and changing the order of integration analogously as shown in Section 12.4, we obtain

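$$\operatorname{Var}\{\alpha^*_k\}=\frac{2}{T}\int_0^T\left(1-\frac{\tau}{T}\right)\left[R(\tau)R_{y_k}(\tau)+R_{xy_k}(\tau)R_{y_kx}(\tau)\right]d\tau. \tag{15.60}$$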

FIGURE 15.4 One-channel measurer of coefficients αk.

Taking into consideration that

$$\langle(\alpha^*_k)^2\rangle=\operatorname{Var}\{\alpha^*_k\}+\alpha_k^2 \tag{15.61}$$

and the condition given by (14.123), we can define the “integral” variance of correlation function estimate

$$\operatorname{Var}\{R^*_v(\tau)\}=\int_{-\infty}^{\infty}\left\langle\left[R^*_v(\tau)-\langle R^*_v(\tau)\rangle\right]^2\right\rangle d\tau=\sum_{k=0}^{v}\operatorname{Var}\{\alpha^*_k\}. \tag{15.62}$$

As we can see from (15.62), the variance of the correlation function estimate increases with the number of terms v of the expansion in series. This must be taken into account when choosing the number of terms of the expansion.

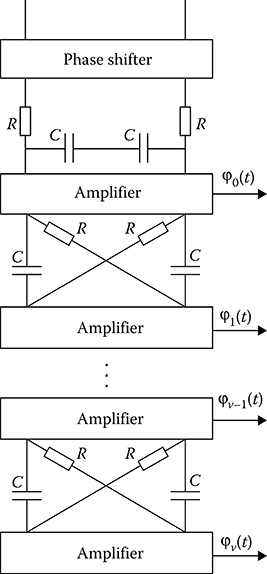

The foregoing formulae allow us to present the flowchart of a correlation function measurer based on the expansion of the correlation function in series and on the estimates of the expansion coefficients. Figure 15.4 represents the one-channel measurer of the current value of the coefficient α*k. Operation of the measurer is clear from Figure 15.4. One of the main elements of the block diagram of the correlation function measurer is the generator of orthogonal signals or functions (the orthogonal filters with the impulse response given by (15.53)). If a pulse with short duration τp, much shorter than the filter time constant, and with amplitude 1/τp excites the input of the orthogonal filters, then the set of orthogonal functions φk(t) is observed at the outputs of the orthogonal filters.

The flowchart of correlation function measurer is shown in Figure 15.5. Operation control of the correlation function measurer is carried out by the synchronizer that stimulates action on the generator of orthogonal signals and allows us to obtain the correlation function estimate with period that is much longer compared to the orthogonal signal duration.

The functions using the orthogonal Laguerre polynomials given by (14.72) and presented in the following form

$$L_k(\alpha t)=\exp\{\alpha t\}\frac{d^k\left[t^k\exp\{-\alpha t\}\right]}{dt^k}=\sum_{\mu=0}^{k}\frac{(-\alpha t)^{\mu}(k!)^2}{(k-\mu)!\,(\mu!)^2} \tag{15.63}$$

are the simplest among a set of orthogonal functions, where α characterizes a polynomial scale in time. To satisfy (14.123), in this case, the orthogonal functions φk(t) take the following form:

$$\varphi_k(t)=\frac{1}{k!}\sqrt{\alpha}\,\exp\{-0.5\alpha t\}\,L_k(\alpha t). \tag{15.64}$$
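That the functions (15.64) built from the polynomials (15.63) are orthonormal on [0, ∞) can be checked numerically. The sketch below evaluates the polynomial from the finite sum in (15.63) and approximates the inner products by the midpoint rule on a truncated interval. The truncation at 60/α, the step count, and the value of α are assumptions of the example.

```python
import math


def laguerre(k, x):
    """L_k(x) in the normalization of (15.63), i.e. k! times the standard Laguerre polynomial."""
    return sum((-x) ** m * math.factorial(k) ** 2
               / (math.factorial(k - m) * math.factorial(m) ** 2)
               for m in range(k + 1))


def phi(k, t, alpha):
    """Function (15.64)."""
    return (math.sqrt(alpha) * math.exp(-0.5 * alpha * t)
            * laguerre(k, alpha * t) / math.factorial(k))


def inner_product(j, k, alpha, steps=20_000):
    """Midpoint approximation of the integral of phi_j * phi_k over [0, 60 / alpha]."""
    upper = 60.0 / alpha
    dt = upper / steps
    return sum(phi(j, (n + 0.5) * dt, alpha) * phi(k, (n + 0.5) * dt, alpha)
               for n in range(steps)) * dt


alpha = 2.0
norm_22 = inner_product(2, 2, alpha)    # expected to be close to 1
cross_13 = inner_product(1, 3, alpha)   # expected to be close to 0
print(norm_22, cross_13)
```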

FIGURE 15.5 Measurer of correlation function.

Carrying out the Laplace transform

$$\varphi_k(p)=\int_0^\infty\exp\{-pt\}\,\varphi_k(t)\,dt, \tag{15.65}$$

we can find that the considered orthogonal functions correspond to the transfer functions

$$\varphi_k(p)=\frac{2}{\sqrt{\alpha}}\times\frac{0.5\alpha}{p+0.5\alpha}\left[\frac{p-0.5\alpha}{p+0.5\alpha}\right]^k. \tag{15.66}$$

The multistep filter based on RC elements, with α = 2(RC)−1, which has the transfer characteristic given by (15.66) up to the constant factor 2α−0.5, is shown in Figure 15.6. The phase inverter generates two signals of equal amplitude shifted in phase by 90°, and the amplifiers compensate for attenuation in the filters and ensure decoupling between them.

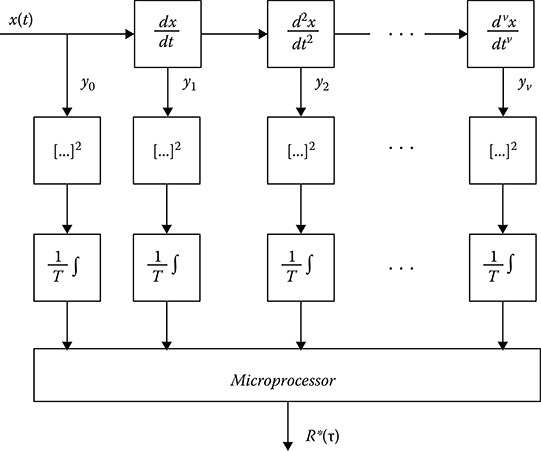

If the stationary stochastic process is differentiable ν times, the correlation function R(τ) of this process can be approximated by expansion in series about the point τ = 0:

$$R(\tau)\approx R_v(\tau)=\sum_{i=0}^{v}\left.\frac{d^{2i}R(\tau)}{d\tau^{2i}}\right|_{\tau=0}\times\frac{\tau^{2i}}{(2i)!}. \tag{15.67}$$

The approximation error of the correlation function is defined as

ε(τ)=R(τ)−Rv(τ).(15.68)

The even derivatives of order 2i of the correlation function at the point τ = 0 are, up to the factor (−1)i, the variances of the ith derivatives of the stochastic process

$$(-1)^i\left.\frac{d^{2i}R(\tau)}{d\tau^{2i}}\right|_{\tau=0}=\left\langle\left[\frac{d^i\xi(t)}{dt^i}\right]^2\right\rangle=\mathrm{Var}_i. \tag{15.69}$$

FIGURE 15.6 Multistep RC filter.

As applied to the ergodic stochastic process, the coefficients of expansion in series given by (15.67) can be presented in the following form:

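$$\alpha_i=\left.\frac{d^{2i}R(\tau)}{d\tau^{2i}}\right|_{\tau=0}=(-1)^i\lim_{T\to\infty}\frac{1}{T}\int_0^T\left\{\frac{d^ix(t)}{dt^i}\right\}^2dt. \tag{15.70}$$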

In the case when the observation time interval is finite, the estimate α*i of the coefficient αi is defined as

$$\alpha^*_i=(-1)^i\frac{1}{T}\int_0^T\left\{\frac{d^ix(t)}{dt^i}\right\}^2dt. \tag{15.71}$$

The correlation function estimate takes the following form:

$$R^*(\tau)=\sum_{i=1}^{v}\alpha^*_i\frac{\tau^{2i}}{(2i)!}. \tag{15.72}$$

The flowchart of the measurer based on the definition of the coefficients of the correlation function expansion in power series is shown in Figure 15.7. The investigated realization x(t) is differentiated ν times. The obtained processes yi(t) = dix(t)/dti are squared, integrated within the limits of the observation time interval [0, T], and fed with the corresponding signs to the input of the calculator. According to (15.72), the correlation function estimate is formed at the calculator output. Let us define the statistical characteristics of the coefficient estimate α*i. The mathematical expectation of the estimate α*i has the following form:

FIGURE 15.7 Correlation function measurement.

$$\langle\alpha^*_i\rangle=(-1)^i\frac{1}{T}\int_0^T\left\langle\left\{\frac{d^ix(t)}{dt^i}\right\}^2\right\rangle dt. \tag{15.73}$$

We can see that

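$$\left\langle\left\{\frac{d^ix(t)}{dt^i}\right\}^2\right\rangle=\left.\frac{\partial^{2i}R(t_1-t_2)}{\partial t_1^i\,\partial t_2^i}\right|_{t_1=t_2=t}=(-1)^i\left.\frac{d^{2i}R(\tau)}{d\tau^{2i}}\right|_{\tau=0}. \tag{15.74}$$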

An introduction of new variables t2 − t1 = τ, t2 = t is made. Substituting (15.74) into (15.73), we see that the estimates of coefficients of expansion in series are unbiased. The correlation function of estimates is

$$R_{ip}=\langle\alpha^*_i\alpha^*_p\rangle-\langle\alpha^*_i\rangle\langle\alpha^*_p\rangle, \tag{15.75}$$

where

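$$\langle\alpha^*_i\alpha^*_p\rangle=(-1)^{(i+p)}\frac{1}{T^2}\int_0^T\!\!\int_0^T\left\langle\left\{\frac{d^ix(t_1)}{dt_1^i}\right\}^2\left\{\frac{d^px(t_2)}{dt_2^p}\right\}^2\right\rangle dt_1\,dt_2. \tag{15.76}$$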

As applied to the Gaussian stochastic process, its derivative is Gaussian too. Because of this, we can write

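$$\left\langle\left\{\frac{d^ix(t_1)}{dt_1^i}\right\}^2\left\{\frac{d^px(t_2)}{dt_2^p}\right\}^2\right\rangle=\left\langle\left\{\frac{d^ix(t_1)}{dt_1^i}\right\}^2\right\rangle\left\langle\left\{\frac{d^px(t_2)}{dt_2^p}\right\}^2\right\rangle+2\left\{\frac{\partial^{(i+p)}\langle x(t_1)x(t_2)\rangle}{\partial t_1^i\,\partial t_2^p}\right\}^2=\mathrm{Var}_i\times\mathrm{Var}_p+2\left\{\frac{\partial^{(i+p)}R(t_2-t_1)}{\partial t_1^i\,\partial t_2^p}\right\}^2. \tag{15.77}$$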

Substituting (15.77) into (15.76) and then into (15.75), we obtain

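$$R_{ip}=(-1)^{(i+p)}\frac{2}{T^2}\int_0^T\!\!\int_0^T\left\{\frac{\partial^{(i+p)}R(t_2-t_1)}{\partial t_1^i\,\partial t_2^p}\right\}^2dt_1\,dt_2. \tag{15.78}$$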

Introducing new variables t2 − t1 = τ, t2 = t and changing the order of integration, we obtain

$$R_{ip}=\frac{4}{T}\int_0^T\left(1-\frac{\tau}{T}\right)\left\{\frac{d^{(i+p)}R(\tau)}{d\tau^{(i+p)}}\right\}^2d\tau. \tag{15.79}$$

If the observation time interval is much longer than the correlation interval of stochastic process and its derivatives, we can write

$$R_{ip}=\frac{2}{T}\int_{-\infty}^{\infty}\left\{\frac{d^{(i+p)}R(\tau)}{d\tau^{(i+p)}}\right\}^2d\tau. \tag{15.80}$$

As applied to the conditions, for which (15.80) is appropriate, the derivatives of the correlation function can be written using the spectral density S(ω) of the stochastic process:

$$\frac{d^{(i+p)}R(\tau)}{d\tau^{(i+p)}}=\frac{1}{2\pi}\int_{-\infty}^{\infty}(j\omega)^{(i+p)}S(\omega)\exp\{j\omega\tau\}\,d\omega. \tag{15.81}$$

Then

$$R_{ip}=\frac{1}{T\pi}\int_{-\infty}^{\infty}\omega^{2(i+p)}S^2(\omega)\,d\omega. \tag{15.82}$$

The variance of the estimate α*i of expansion in series coefficients can be presented in the following form:

$$\operatorname{Var}\{\alpha^*_i\}=\frac{1}{T\pi}\int_{-\infty}^{\infty}\omega^{4i}S^2(\omega)\,d\omega. \tag{15.83}$$

Let us define the deviation of correlation function estimate from the approximated value, namely,

$$\varepsilon(\tau)=R_v(\tau)-R^*_v(\tau). \tag{15.84}$$

Averaging ɛ(τ) by realizations of the investigated stochastic process, we can see that in the considered case 〈ɛ(τ)〉 = 0, which means the bias of correlation function estimate does not increase due to the finite observation time interval.

The variance of correlation function estimate can be presented in the following form:

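$$\operatorname{Var}\{R^*_v(\tau)\}=\sum_{i=1}^{v}\sum_{p=1}^{v}\frac{\tau^{2(i+p)}}{(2i)!\,(2p)!}\,\frac{4}{T}\int_0^T\left(1-\frac{z}{T}\right)\left\{\frac{d^{(i+p)}R(z)}{dz^{(i+p)}}\right\}^2dz, \tag{15.85}$$

where the integration variable is denoted by z to distinguish it from the argument τ of the estimate.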

At T ≫ τcor, we can write

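$$\operatorname{Var}\{R^*_v(\tau)\}=\frac{1}{T\pi}\sum_{i=1}^{v}\sum_{p=1}^{v}\frac{\tau^{2(i+p)}}{(2i)!\,(2p)!}\int_{-\infty}^{\infty}\omega^{2(i+p)}S^2(\omega)\,d\omega. \tag{15.86}$$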

Let the correlation function of the stochastic process be approximated by

$$R(\tau)=\sigma^2\exp\{-\alpha^2\tau^2\}, \tag{15.87}$$

which corresponds to the spectral density defined as

$$S(\omega)=\frac{\sigma^2\sqrt{\pi}}{\alpha}\exp\left\{-\frac{\omega^2}{4\alpha^2}\right\}. \tag{15.88}$$

Substituting (15.88) into (15.86), we obtain

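$$\operatorname{Var}\{R^*_v(\tau)\}=\frac{\sigma^4\sqrt{2\pi}}{T\alpha}\sum_{i=1}^{v}\sum_{p=1}^{v}\frac{[2(i+p)-1]!!}{(2i)!\,(2p)!}(\alpha\tau)^{2(i+p)}, \tag{15.89}$$

where

$$[2(i+p)-1]!!=1\times3\times5\times\cdots\times[2(i+p)-1]. \tag{15.90}$$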

As we can see from (15.89), the variance of correlation function estimate increases with an increase in the number of terms under correlation function expansion in power series.
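The growth of the variance with the number of terms can be made concrete by evaluating the double sum in (15.89). The sketch below computes only the sum, without the factor in front of it, so the result does not depend on σ, T, or α separately. The choice ατ = 1 is an arbitrary example value.

```python
import math


def double_factorial_odd(n):
    """n!! for odd n >= 1, as defined in (15.90)."""
    result = 1
    for m in range(1, n + 1, 2):
        result *= m
    return result


def normalized_variance(v, alpha_tau):
    """Double sum of (15.89) without the factor in front of the sum."""
    total = 0.0
    for i in range(1, v + 1):
        for p in range(1, v + 1):
            total += (double_factorial_odd(2 * (i + p) - 1)
                      / (math.factorial(2 * i) * math.factorial(2 * p))
                      * alpha_tau ** (2 * (i + p)))
    return total


sums = [normalized_variance(v, 1.0) for v in (1, 2, 3)]
print(sums)
```

For v = 1 the sum reduces to the single term 3!!/(2!·2!) = 0.75, and each additional term of the expansion adds only positive contributions.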

15.3 Optimal Estimation of Gaussian Stochastic Process Correlation Function Parameter

In some practical conditions, the correlation function of a stochastic process is known up to some parameters that define the character of its behavior. In this case, measurement of the correlation function can be reduced to measurement or estimation of the unknown parameters of the correlation function. Because of this, we consider the optimal estimate of an arbitrary correlation function parameter, assuming that the investigated stochastic process ξ(t) is a stationary Gaussian stochastic process observed within the limits of the time interval [0, T] in the background of Gaussian stationary noise ζ(t) with known correlation function.

Thus, the following realization

$$y(t)=x(t,l_0)+n(t),\quad 0\le t\le T \tag{15.91}$$

comes in at the measurer input, where x(t, l0) is the realization of the investigated Gaussian stochastic process with the correlation function Rx(t1, t2, l) depending on the estimated parameter l; n(t) is the realization of Gaussian noise with the correlation function Rn(t1, t2). The true value of the estimated parameter of the correlation function Rx(t1, t2, l) is l0. We assume that the mathematical expectations of both the realization x(t, l0) and the realization n(t) are equal to zero and that the realizations x(t, l0) and n(t) are statistically independent of each other. Based on the input realization, the optimal receiver should form the likelihood ratio functional Λ(l) or some monotone function of the likelihood ratio. The stochastic process η(t) with realization given by (15.91) is the Gaussian stochastic process with zero mathematical expectation and the correlation function

$$R_y(t_1,t_2,l)=R_x(t_1,t_2,l)+R_n(t_1,t_2). \tag{15.92}$$

As applied to the investigated stochastic process η(t), the likelihood functional can be presented in the following form [5]:

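$$\Lambda(l)=\exp\left\{\frac{1}{2}\int_0^T\!\!\int_0^T y(t_1)y(t_2)\left[\vartheta_n(t_1,t_2)-\vartheta_x(t_1,t_2;l)\right]dt_1\,dt_2-\frac{1}{2}H(l)\right\}. \tag{15.93}$$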

We can write the derivative of the function H(l) in the following form:

$$\frac{dH(l)}{dl}=\int_0^T\!\!\int_0^T\frac{\partial R_x(t_1,t_2,l)}{\partial l}\,\vartheta_x(t_1,t_2;l)\,dt_1\,dt_2 \tag{15.94}$$

and the functions ϑx(t1, t2; l) and ϑn(t1, t2) can be found from the following equations:

$$\int_0^T\left[R_x(t_1,t,l)+R_n(t_1,t)\right]\vartheta_x(t,t_2;l)\,dt=\delta(t_2-t_1), \tag{15.95}$$

$$\int_0^T R_n(t_1,t)\,\vartheta_n(t,t_2)\,dt=\delta(t_2-t_1). \tag{15.96}$$

Evidently, we can use the logarithmic term of the likelihood functional depending on the observed data as the signal at the receiver output

$$M_1(l)=\frac{1}{2}\int_0^T\!\!\int_0^T y(t_1)\,y(t_2)\,\vartheta(t_1,t_2;l)\,dt_1\,dt_2, \tag{15.97}$$

where

$$\vartheta(t_1,t_2;l)=\vartheta_n(t_1,t_2)-\vartheta_x(t_1,t_2;l). \tag{15.98}$$

We suppose that the correlation intervals of the stochastic processes ξ(t) and ζ(t) are sufficiently small compared to the observation time interval [0, T]. In this case, we can use infinite limits of integration. Under this assumption, we have

$$\begin{cases}\vartheta_x(t_1,t_2;l)=\vartheta_x(t_1-t_2;l),\\ \vartheta_n(t_1,t_2)=\vartheta_n(t_1-t_2),\end{cases} \tag{15.99}$$

and

$$\vartheta(t_1,t_2;l)=\vartheta(t_1-t_2;l). \tag{15.100}$$

Introducing new variables t2 − t1 = τ, t2 = t and changing the order of integration, we obtain

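$$M_1(l)=\frac{1}{2}\left\{\int_0^T\vartheta(\tau;l)\int_0^{T-\tau}y(t+\tau)\,y(t)\,dt\,d\tau+\int_{-T}^0\vartheta(\tau;l)\int_0^T y(t+\tau)\,y(t)\,dt\,d\tau\right\}. \tag{15.101}$$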

Introducing new variables τ = −τ′, t′ = t + τ = τ − τ′ and taking into consideration that the correlation interval of stochastic process η(t) is shorter compared to the observation time interval [0, T], we can write

$$M_1(l)=T\int_0^T R^*_y(\tau)\,\vartheta(\tau;l)\,d\tau, \tag{15.102}$$

where

$$R^*_y(\tau)=\frac{1}{T}\int_0^{T-\tau}y(t)\,y(t+\tau)\,dt\approx\frac{1}{T}\int_0^T y(t)\,y(t-\tau)\,dt \tag{15.103}$$

is the correlation function estimate of investigated input process consisting of additive mixture of the signal and noise.

Applying the foregoing statements to (15.94), we obtain

$$\frac{dH(l)}{dl}=2T\int_0^T\frac{\partial R_x(\tau;l)}{\partial l}\,\vartheta_x(\tau;l)\,d\tau. \tag{15.104}$$

Substituting (15.102) and (15.104) into (15.93), we obtain

$$\Lambda(l)=\exp\left\{T\int_0^T R^*_y(\tau)\,\vartheta(\tau;l)\,d\tau-\frac{1}{2}H(l)\right\}. \tag{15.105}$$

In the case, when the observation time interval [0, T] is much longer compared to the correlation interval of the investigated stochastic process, we can use the spectral representation of the function given by (15.100)

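$$\vartheta(\tau;l)=\frac{1}{2\pi}\int_{-\infty}^{\infty}\left[\vartheta_n(\omega)-\vartheta_x(\omega;l)\right]\exp\{j\omega\tau\}\,d\omega. \tag{15.106}$$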

Then (15.104) takes the following form:

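$$\frac{dH(l)}{dl}=\frac{T}{2\pi}\int_{-\infty}^{\infty}\frac{\partial S_x(\omega;l)}{\partial l}\,\vartheta_x(\omega;l)\,d\omega. \tag{15.107}$$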

In (15.106) and (15.107), ϑn(ω), ϑx(ω; l), and Sx(ω; l) are the Fourier transforms of the corresponding functions ϑn(τ), ϑx(τ; l), and Rx(τ; l). Applying the Fourier transform to (15.95) and (15.96), we obtain

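$$\begin{cases}S_n(\omega)\,\vartheta_n(\omega)=1,\\ \vartheta_x(\omega;l)\left[S_n(\omega)+S_x(\omega;l)\right]=1,\end{cases} \tag{15.108}$$

where Sn(ω) is the Fourier transform of the noise correlation function Rn(τ).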

Taking into consideration (15.107) and (15.108), we can write

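$$\vartheta(\tau;l)=\frac{1}{2\pi}\int_{-\infty}^{\infty}\frac{S_x(\omega;l)\,S_n^{-1}(\omega)}{S_x(\omega;l)+S_n(\omega)}\exp\{j\omega\tau\}\,d\omega, \tag{15.109}$$

$$H(l)=\frac{T}{2\pi}\int_{-\infty}^{\infty}\ln\left[1+\frac{S_x(\omega;l)}{S_n(\omega)}\right]d\omega. \tag{15.110}$$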

The signal at the optimal receiver output takes the following form:

$$M(l)=T\int_0^T R_y^*(\tau)\,\vartheta(\tau;l)\,d\tau-\frac{1}{2}H(l). \tag{15.111}$$

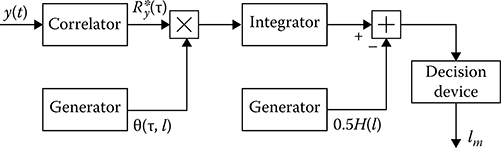

FIGURE 15.8 Optimal measurement of correlation function parameter.

The flowchart of the optimal measurer is shown in Figure 15.8. This measurer operates in the following way. The correlation function estimate R*y(τ) is formed from the input realization of the additive mixture of the signal and noise. This estimate is integrated with the weight function ϑ(τ; l). The signals formed at the outputs of the integrator and of the generator of the function H(l) are fed to the summator input. Thus, the receiver output signal is formed. The decision device issues the value of the parameter lm at which the output signal takes its maximum value.

If the correlation function of the investigated Gaussian stochastic process has several unknown parameters l = {l1, l2, …, l}, then the likelihood ratio functional can be found from (15.93) by replacing the scalar parameter l with the vector parameter l, and the function H(l) is defined by its derivatives

$$\frac{\partial H(l)}{\partial l_i}=\int_0^T\!\!\int_0^T\frac{\partial R_x(t_1,t_2;l)}{\partial l_i}\,\vartheta_x(t_1,t_2;l)\,dt_1\,dt_2. \tag{15.112}$$

The function ϑx(t1, t2; l) is the solution of integral equation that is analogous to (15.95).

To obtain the maximum likelihood estimate of the correlation function parameter, the optimal measurer (receiver) should find the position lm of the absolute maximum of the logarithm of the likelihood ratio functional

$$M(l)=\frac{1}{2}\int_0^T\!\!\int_0^T y(t_1)\,y(t_2)\,[\vartheta_n(t_1,t_2)-\vartheta_x(t_1,t_2;l)]\,dt_1\,dt_2-\frac{1}{2}H(l). \tag{15.113}$$

To define the characteristics of the maximum likelihood estimate lm, we introduce the signal

s(l)=〈M(l)〉(15.114)

and noise

n(l)=M(l)−〈M(l)〉(15.115)

functions. Then (15.113) takes the following form:

M(l)=s(l)+n(l).(15.116)

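Let us prove that if the noise component is absent in (15.116), that is, n(l) = 0, the logarithm of the likelihood ratio functional reaches its maximum at l = l0, that is, when the estimated parameter takes the true value. We define the first and second derivatives of the signal function given by (15.114) at the point l = l0:

$$\left.\frac{ds(l)}{dl}\right|_{l=l_0}=\left.\left\langle\frac{dM(l)}{dl}\right\rangle\right|_{l=l_0}. \tag{15.117}$$

Substituting (15.113) into (15.117) and averaging over realizations y(t), we obtain

$$\left.\frac{ds(l)}{dl}\right|_{l=l_0}=\left\{-\frac{1}{2}\int_0^T\!\!\int_0^T[R_n(t_1,t_2)+R_x(t_1,t_2;l)]\frac{\partial\vartheta_x(t_1,t_2;l)}{\partial l}\,dt_1\,dt_2-\frac{1}{2}\int_0^T\!\!\int_0^T\vartheta_x(t_1,t_2;l)\frac{\partial R_x(t_1,t_2;l)}{\partial l}\,dt_1\,dt_2\right\}_{l=l_0}=-\frac{1}{2}\left\{\frac{d}{dl}\int_0^T\!\!\int_0^T[R_n(t_1,t_2)+R_x(t_1,t_2;l)]\,\vartheta_x(t_1,t_2;l)\,dt_1\,dt_2\right\}_{l=l_0}. \tag{15.118}$$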

Since

R(t1,t2;l)=Rn(t1,t2)+Rx(t1,t2;l)=R(t2,t1;l),(15.119)

in accordance with (15.95) we have

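$$\left.\frac{ds(l)}{dl}\right|_{l=l_0}=-\frac{1}{2}\int_0^T\left\{\frac{d}{dl}\int_0^T[R_n(t_2,t_1)+R_x(t_2,t_1;l)]\,\vartheta_x(t_1,t_2;l)\,dt_1\right\}_{l=l_0}dt_2=0. \tag{15.120}$$

Now, let us define the second derivative of the signal component at the point l = l0:

$$\left.\frac{d^2s(l)}{dl^2}\right|_{l=l_0}=\left.\left\langle\frac{d^2M(l)}{dl^2}\right\rangle\right|_{l=l_0}=\frac{1}{2}\left\{\int_0^T\!\!\int_0^T\frac{\partial R_x(t_2,t_1;l)}{\partial l}\frac{\partial\vartheta_x(t_1,t_2;l)}{\partial l}\,dt_1\,dt_2-\frac{d^2}{dl^2}\int_0^T\!\!\int_0^T[R_n(t_2,t_1)+R_x(t_2,t_1;l)]\,\vartheta_x(t_1,t_2;l)\,dt_1\,dt_2\right\}_{l=l_0}. \tag{15.121}$$

In accordance with (15.95) we can write

$$\left\{\frac{d^2}{dl^2}\int_0^T\!\!\int_0^T[R_n(t_2,t_1)+R_x(t_2,t_1;l)]\,\vartheta_x(t_1,t_2;l)\,dt_1\,dt_2\right\}_{l=l_0}=\int_0^T\left\{\frac{d^2}{dl^2}\int_0^T[R_n(t_2,t_1)+R_x(t_2,t_1;l)]\,\vartheta_x(t_1,t_2;l)\,dt_1\right\}_{l=l_0}dt_2=0. \tag{15.122}$$

Because of this

$$\left.\frac{d^2s(l)}{dl^2}\right|_{l=l_0}=\frac{1}{2}\left\{\int_0^T\!\!\int_0^T\frac{\partial R_x(t_2,t_1;l)}{\partial l}\frac{\partial\vartheta_x(t_1,t_2;l)}{\partial l}\,dt_1\,dt_2\right\}_{l=l_0}. \tag{15.123}$$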

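Let us prove that the condition

$$\left.\frac{d^2s(l)}{dl^2}\right|_{l=l_0}<0 \tag{15.124}$$

is always satisfied. For this purpose, we define the mean-square value of the first derivative of the likelihood ratio functional logarithm at the point l = l0

$$m^2=\left\langle\left\{\left.\frac{dM(l)}{dl}\right|_{l=l_0}\right\}^2\right\rangle=\left\langle\left\{\left.\frac{dn(l)}{dl}\right|_{l=l_0}\right\}^2\right\rangle, \tag{15.125}$$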

which is a positive value. Substituting (15.91) into (15.113), differentiating by l, and averaging by realizations y(t), we obtain the second central moment of the first derivative of the likelihood ratio functional logarithm:

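$$\frac{\partial^2}{\partial l_1\partial l_2}\big\langle[M(l_1)-\langle M(l_1)\rangle][M(l_2)-\langle M(l_2)\rangle]\big\rangle=\frac{1}{2}\int_0^T\!\!\int_0^T\!\!\int_0^T\!\!\int_0^T[R_n(t_1,t_3)+R_x(t_1,t_3;l_0)][R_n(t_2,t_4)+R_x(t_2,t_4;l_0)]\times\frac{\partial\vartheta_x(t_1,t_2;l_1)}{\partial l_1}\frac{\partial\vartheta_x(t_3,t_4;l_2)}{\partial l_2}\,dt_1\,dt_2\,dt_3\,dt_4. \tag{15.126}$$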

Assuming l2 = l1 = l0 and taking into consideration that

$$\int_0^T[R_n(t_1,t)+R_x(t_1,t;l_0)]\frac{\partial\vartheta_x(t,t_2;l)}{\partial l}\,dt=-\int_0^T\vartheta_x(t,t_2;l)\frac{\partial R_x(t_1,t;l)}{\partial l}\,dt, \tag{15.127}$$

we obtain

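$$m^2=-\left\{\frac{1}{2}\int_0^T\!\!\int_0^T\!\!\int_0^T\!\!\int_0^T[R_n(t_2,t_4)+R_x(t_2,t_4;l_0)]\,\vartheta_x(t_1,t_2;l)\frac{\partial R_x(t_1,t_3;l)}{\partial l}\frac{\partial\vartheta_x(t_3,t_4;l)}{\partial l}\,dt_1\,dt_2\,dt_3\,dt_4\right\}_{l=l_0}=-\left\{\frac{1}{2}\int_0^T\!\!\int_0^T\frac{\partial R_x(t_1,t_2;l)}{\partial l}\frac{\partial\vartheta_x(t_1,t_2;l)}{\partial l}\,dt_1\,dt_2\right\}_{l=l_0}. \tag{15.128}$$

In (15.128) we have applied (15.95) again. Comparing (15.128) with (15.123), we can see that

$$\left.\frac{d^2s(l)}{dl^2}\right|_{l=l_0}=-m^2 \tag{15.129}$$

and, consequently, (15.124) is always satisfied.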

Introduce the signal-to-noise ratio (SNR)

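$$\mathrm{SNR}=\frac{s^2(l_0)}{\langle n^2(l_0)\rangle} \tag{15.130}$$

and the normalized signal and noise functions

$$\begin{cases}S(l)=\dfrac{s(l)}{s(l_0)},\\[6pt] N(l)=\dfrac{n(l)}{\sqrt{\langle n^2(l_0)\rangle}}.\end{cases} \tag{15.131}$$

Taking into consideration (15.120), (15.124), and (15.131), we can see that

$$\begin{cases}\max S(l)=S(l_0)=1,\\ \langle N^2(l_0)\rangle=1.\end{cases} \tag{15.132}$$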

In addition, as follows from the definition, the mathematical expectation of the noise function n(l) is zero. Taking into consideration the introduced notations, we can write the logarithm of the likelihood ratio functional in the following form:

M(l)=s(l0)[S(l)+εN(l)],(15.133)

where ɛ=1/√SNR. Taking into consideration (15.133), the likelihood ratio equation for the estimate of correlation function parameter of Gaussian stochastic process can be presented in the following form:

$$\left\{\frac{dS(l)}{dl}+\varepsilon\frac{dN(l)}{dl}\right\}_{l=l_m}=0. \tag{15.134}$$

Usually, when stochastic process parameters are measured, the SNR is high and, consequently, ɛ ≪ 1. Then, by analogy with Ref. [5], an approximate solution of the likelihood ratio equation can be sought in the form of a power series expansion

$$l_m=l_0+\varepsilon l_1+\varepsilon^2l_2+\varepsilon^3l_3+\cdots. \tag{15.135}$$

To define the approximations l1, l2, and l3, we substitute (15.135) into (15.134), expand the derivatives of S(l) and N(l) in Taylor series about l = l0, and group the terms with the same power of ɛ:

$$s_1+\varepsilon(l_1s_2+n_1)+\varepsilon^2\left(l_2s_2+l_1n_2+0.5\,l_1^2s_3\right)+\varepsilon^3\left(l_3s_2+l_2n_2+0.5\,l_1^2n_3+\frac{l_1^3s_4}{6}+l_1l_2s_3\right)+\cdots=0, \tag{15.136}$$

where we use the following notations:

$$\begin{cases}s_i=\left.\dfrac{d^iS(l)}{dl^i}\right|_{l=l_0},\\[8pt] n_i=\left.\dfrac{d^iN(l)}{dl^i}\right|_{l=l_0}.\end{cases} \tag{15.137}$$

Since the system of functions 1, x, x², … is linearly independent, the equality given by (15.136) is satisfied for any ɛ if and only if the coefficient of each power of ɛ is equal to zero. The zero approximation coincides with the true value of the parameter l0, since S(l) reaches its absolute maximum at

$$l=l_0. \tag{15.138}$$

Equating to zero the coefficients at ɛ, ɛ2, and ɛ3, we obtain equations to define l1, l2, and l3. We can write solutions of these equations in the following form:

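$$\begin{cases}l_1=-\dfrac{n_1}{s_2},\\[8pt] l_2=-\dfrac{l_1n_2+0.5\,l_1^2s_3}{s_2},\\[8pt] l_3=-\dfrac{l_2n_2+0.5\,l_1^2n_3+6^{-1}l_1^3s_4+l_1l_2s_3}{s_2}.\end{cases} \tag{15.139}$$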
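The recursion (15.139) can be checked numerically. The sketch below is illustrative only: it assumes S(l) and N(l) are Taylor polynomials with arbitrarily chosen derivative values at l0 (the numbers in `s` and `n` are hypothetical), evaluates l1, l2, l3, and confirms that the left side of (15.134) shrinks from order ɛ² to order ɛ⁴ when all three corrections are used.

```python
def series_coefficients(s, n):
    """Return (l1, l2, l3) from (15.139); s[i], n[i] are the i-th derivatives at l0."""
    l1 = -n[1] / s[2]
    l2 = -(l1 * n[2] + 0.5 * l1 ** 2 * s[3]) / s[2]
    l3 = -(l2 * n[2] + 0.5 * l1 ** 2 * n[3] + l1 ** 3 * s[4] / 6 + l1 * l2 * s[3]) / s[2]
    return l1, l2, l3


def likelihood_equation_residual(delta, eps, s, n):
    """Left side of (15.134) for Taylor-polynomial S and N, with delta = l - l0 and s1 = 0."""
    d_signal = s[2] * delta + s[3] * delta ** 2 / 2 + s[4] * delta ** 3 / 6
    d_noise = n[1] + n[2] * delta + n[3] * delta ** 2 / 2
    return d_signal + eps * d_noise


# Hypothetical derivative values; s[2] < 0 because S(l) has a maximum at l0.
s = {2: -1.0, 3: 0.3, 4: -0.2}
n = {1: 0.7, 2: -0.4, 3: 0.5}
eps = 0.01

l1, l2, l3 = series_coefficients(s, n)
first_order = likelihood_equation_residual(eps * l1, eps, s, n)
third_order = likelihood_equation_residual(eps * l1 + eps ** 2 * l2 + eps ** 3 * l3, eps, s, n)
print(abs(first_order), abs(third_order))
```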

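Taking into consideration the first three approximations l1, l2, and l3, the conditional bias and variance of the maximum likelihood estimate take the following form:

$$b(l_m|l_0)=\varepsilon\langle l_1\rangle+\varepsilon^2\langle l_2\rangle+\varepsilon^3\langle l_3\rangle, \tag{15.140}$$

$$\mathrm{Var}\{l_m|l_0\}=\varepsilon^2\left[\langle l_1^2\rangle-\langle l_1\rangle^2\right]+2\varepsilon^3\left[\langle l_1l_2\rangle-\langle l_1\rangle\langle l_2\rangle\right]+\varepsilon^4\left[\langle l_2^2\rangle-\langle l_2\rangle^2+2\langle l_1l_3\rangle-2\langle l_1\rangle\langle l_3\rangle\right]. \tag{15.141}$$

Averaging is carried out over all possible realizations of the total stochastic process η(t) at the fixed value of the estimated parameter l0. The relative error of the estimate bias and variance, defined as the ratio of the first neglected term to the leading term of the expansion, is of the order ɛ².

We restrict ourselves to the first approximation. In doing so, the random error of a single measurement can be presented in the following form:

$$\Delta l=l_m-l_0=\varepsilon l_1=-\varepsilon\left.\frac{dN(l)/dl}{d^2S(l)/dl^2}\right|_{l=l_0}=-\left.\frac{dn(l)/dl}{d^2s(l)/dl^2}\right|_{l=l_0}. \tag{15.142}$$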

For the first approximation, the estimate of arbitrary parameter of correlation function will be unbiased, since 〈n(l)〉 = 0. Taking into consideration (15.128) and (15.129), the variance of estimate can be presented in the following form:

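$$\mathrm{Var}\{l_m|l_0\}=\frac{\left.\dfrac{\partial^2}{\partial l_1\partial l_2}\langle n(l_1)n(l_2)\rangle\right|_{l_1=l_2=l_0}}{\left[\left.\dfrac{d^2s(l)}{dl^2}\right|_{l=l_0}\right]^2}=m^{-2}. \tag{15.143}$$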

If the observation time interval is much longer than the correlation interval of the investigated stochastic process η(t), a flowchart of optimal measurer is significantly simplified. In this case, the logarithm of the likelihood ratio functional is given by (15.111), where the signal function can be described in the following form:

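$$s(l)=T\int_0^T\langle R_y^*(\tau)\rangle\,\vartheta(\tau;l)\,d\tau-\frac{1}{2}H(l). \tag{15.144}$$

The first term in (15.144) can be presented in the following form:

$$\int_0^T\langle R_y^*(\tau)\rangle\,\vartheta(\tau;l)\,d\tau=\frac{1}{2}\int_{-T}^{T}[R_n(\tau)+R_x(\tau;l_0)]\,\vartheta(\tau;l)\,d\tau\approx\frac{1}{2}\int_{-\infty}^{\infty}[R_n(\tau)+R_x(\tau;l_0)]\,\vartheta(\tau;l)\,d\tau=\frac{1}{4\pi}\int_{-\infty}^{\infty}[S_n(\omega)+S_x(\omega;l_0)]\,\vartheta(\omega;l)\,d\omega=\frac{1}{4\pi}\int_{-\infty}^{\infty}\frac{S_x(\omega;l)\,[S_n(\omega)+S_x(\omega;l_0)]}{S_n(\omega)\,[S_n(\omega)+S_x(\omega;l)]}\,d\omega. \tag{15.145}$$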

Substituting (15.145) into (15.114) and taking into consideration (15.110), we obtain the signal function in the following form:

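$$s(l)=\frac{T}{4\pi}\int_{-\infty}^{\infty}\left\{\frac{S_x(\omega;l)\,[S_n(\omega)+S_x(\omega;l_0)]}{S_n(\omega)\,[S_n(\omega)+S_x(\omega;l)]}-\ln\left[1+\frac{S_x(\omega;l)}{S_n(\omega)}\right]\right\}d\omega. \tag{15.146}$$

The signal function reaches its maximum at l = l0:

$$s(l_0)=\frac{T}{4\pi}\int_{-\infty}^{\infty}\left\{\frac{S_x(\omega;l_0)}{S_n(\omega)}-\ln\left[1+\frac{S_x(\omega;l_0)}{S_n(\omega)}\right]\right\}d\omega. \tag{15.147}$$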
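The statement that the signal function peaks at the true parameter value can be illustrated numerically. The sketch below evaluates (15.146) by the trapezoidal rule for an assumed example: an exponential correlation function with spectral density 2ασ²/(α² + ω²) in white noise with Sn = N0/2. The truncation frequency `w_max`, the step count, and all numerical values are hypothetical choices, not taken from the text.

```python
import math


def sx(omega, alpha, sigma2=1.0):
    """Assumed signal spectral density 2*alpha*sigma^2 / (alpha^2 + omega^2)."""
    return 2.0 * alpha * sigma2 / (alpha ** 2 + omega ** 2)


def signal_function(alpha, alpha0, n0=1.0, sigma2=1.0, t_obs=100.0, w_max=100.0, steps=5000):
    """Trapezoidal evaluation of (15.146); the even integrand is integrated over [0, w_max]."""
    sn = n0 / 2.0
    h = w_max / steps
    total = 0.0
    for k in range(steps + 1):
        w = k * h
        s_l = sx(w, alpha, sigma2)       # S_x(omega; l)
        s_0 = sx(w, alpha0, sigma2)      # S_x(omega; l0)
        value = s_l * (sn + s_0) / (sn * (sn + s_l)) - math.log(1.0 + s_l / sn)
        total += value * (0.5 if k in (0, steps) else 1.0)
    return t_obs / (4.0 * math.pi) * 2.0 * total * h


alpha0 = 1.0
grid = [0.5 + 0.1 * k for k in range(11)]            # candidate values 0.5 ... 1.5
best = max(grid, key=lambda a: signal_function(a, alpha0))
print(best)                                          # grid point where s(l) is largest
```

For each frequency the integrand, viewed as a function of Sx(ω; l), has zero derivative only at Sx(ω; l) = Sx(ω; l0), which is why the grid search lands on the true value.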

Analogously, we can define the variance of noise component n(l) given by (15.115)

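$$\langle n^2(l)\rangle=\frac{T}{4\pi}\int_{-\infty}^{\infty}\frac{S_x^2(\omega;l_0)}{S_n^2(\omega)}\,d\omega. \tag{15.148}$$

Consequently, SNR given by (15.130) can be presented in the following form:

$$\mathrm{SNR}=\frac{T}{4\pi}\,\frac{\left\{\displaystyle\int_{-\infty}^{\infty}\left\{\frac{S_x(\omega;l_0)}{S_n(\omega)}-\ln\left[1+\frac{S_x(\omega;l_0)}{S_n(\omega)}\right]\right\}d\omega\right\}^2}{\displaystyle\int_{-\infty}^{\infty}\frac{S_x^2(\omega;l_0)}{S_n^2(\omega)}\,d\omega}. \tag{15.149}$$

If SNR is high, the variance of the correlation function parameter estimate is defined by (15.143), where the value m² given by (15.129) can be presented in the following form using the spectral density components:

$$m^2=-T\int_0^T\left.\frac{\partial R_x(\tau;l)}{\partial l}\,\frac{\partial\vartheta_x(\tau;l)}{\partial l}\,d\tau\right|_{l=l_0}\approx-\frac{T}{4\pi}\int_{-\infty}^{\infty}\left.\frac{\partial S_x(\omega;l)}{\partial l}\,\frac{\partial\vartheta_x(\omega;l)}{\partial l}\,d\omega\right|_{l=l_0}=\frac{T}{4\pi}\int_{-\infty}^{\infty}\left.\frac{\left[\partial S_x(\omega;l)/\partial l\right]^2}{[S_n(\omega)+S_x(\omega;l)]^2}\,d\omega\right|_{l=l_0}. \tag{15.150}$$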

We can define the second set of approximations for the bias and variance of arbitrary parameter estimate of the correlation function of the investigated stochastic process in accordance with (15.140) and (15.141). After cumbersome mathematical transformations, we obtain that the bias and variance of estimate take the following form:

$$b(l_m|l_0)=-\frac{1}{2}J^{11}_{12}\left(J^{20}_{10}\right)^{-2}, \tag{15.151}$$

Var{lm|l0}=2J2010+4[J2010]3{12J4010−6J2112−J1113+1J2010[72[J1112]2+6J1112J3010−12[J3010]2]},(15.152)

where

Jpqij=T2π∞∫−∞{∂iSx(ω;l)∂li}ql=l0{∂jSx(ω;l)∂lj}pl=l0[Sx(ω;l0)+Sn(ω)]−(p+q)dω.(15.153)

Comparing (15.129), (15.150), and (15.153), we see that

$$m^2=\frac{1}{2}J^{20}_{10}. \tag{15.154}$$

Based on the formulae obtained, we can define the statistical characteristics of the estimate of correlation function parameter α of the investigated stochastic process additively mixed with the white noise possessing the one-sided power spectral density N0

Rx(τ;α)=σ2exp{−α|τ|}.(15.155)

To reduce mathematical transformations and computations, the bias of estimate of the correlation function parameter α is defined taking into consideration the second approximation given by (15.151) and the variance of estimate of the correlation function parameter α is defined taking into consideration the first approximation given by (15.129) (the first term in the right side of (15.152)).

The correlation function parameter α defines the effective bandwidth of spectral density of stochastic process, that is, Δfef = 0.25α. Thus, we can write

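$$\begin{cases}S_x(\omega;\alpha)=\dfrac{2\alpha\sigma^2}{\alpha^2+\omega^2},\\[8pt] S_n(\omega)=\dfrac{N_0}{2}.\end{cases} \tag{15.156}$$

Introduce the following notations:

$$\begin{cases}q^2=\dfrac{4\sigma^2}{N_0\alpha},\\[8pt] p=\dfrac{T}{\tau_{cor}},\\[8pt] \beta=\sqrt{1+q^2},\end{cases} \tag{15.157}$$

where q² is the ratio of the investigated stochastic process variance to the white noise power within the limits of the effective bandwidth of the signal, and p is the ratio between the observation time interval of the investigated stochastic process and its correlation interval. Using these notations, we can write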

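$$J^{20}_{10}=\frac{pq^4(\beta^3-\beta^2+3\beta+1)}{\alpha_0^2\beta^3(1+\beta)^3}; \tag{15.158}$$

$$J^{11}_{12}=-\frac{2pq^4(2\beta^3-\beta^2+4\beta+1)}{\alpha_0^3\beta^3(1+\beta)^4}, \tag{15.159}$$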

where α0 is the true value of estimated correlation function parameter. Substituting (15.158) and (15.159) into (15.151) and (15.152), we obtain

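$$b(\alpha_m|\alpha_0)=\frac{\alpha_0(1+\beta)^2\beta^3(2\beta^3-\beta^2+4\beta+1)}{pq^4(\beta^3-\beta^2+3\beta+1)^2}; \tag{15.160}$$

$$\mathrm{Var}\{\alpha_m|\alpha_0\}=\frac{2\alpha_0^2(1+\beta)^3\beta^3}{pq^4(\beta^3-\beta^2+3\beta+1)}. \tag{15.161}$$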

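When q² ≪ 1 and 4σ²T/N0 ≫ 1 (the latter condition ensures that (15.160) and (15.161) remain valid), β ≈ 1 and (15.160) and (15.161) take a simple form:

$$b(\alpha_m|\alpha_0)\approx\frac{3\alpha_0}{2pq^4}=\frac{3N_0^2\alpha_0^2}{32\sigma^4T}; \tag{15.162}$$

$$\mathrm{Var}\{\alpha_m|\alpha_0\}\approx\frac{4\alpha_0^2}{pq^4}=\frac{N_0^2\alpha_0^3}{4\sigma^4T}. \tag{15.163}$$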

If q² ≫ 1, then β ≈ q and (15.160) and (15.161) take the following form:

$$b(\alpha_m|\alpha_0)=\frac{\alpha_0}{pq^2}; \tag{15.164}$$

$$\mathrm{Var}\{\alpha_m|\alpha_0\}=\frac{2\alpha_0^2}{pq}. \tag{15.165}$$
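The closed-form results for the exponential correlation function are easy to tabulate. The sketch below is a hedged illustration: it codes the bias as in (15.160) and takes the variance as the first term on the right side of (15.152), that is, 2 divided by the quantity in (15.158), which is an assumption about how those flattened formulas should be read. The function name and the numerical arguments are hypothetical.

```python
import math


def bias_and_variance(alpha0, q2, p):
    """Bias per (15.160) and first-approximation variance 2 / J (J from (15.158))."""
    beta = math.sqrt(1.0 + q2)
    q4 = q2 ** 2
    d = beta ** 3 - beta ** 2 + 3.0 * beta + 1.0
    j_2010 = p * q4 * d / (alpha0 ** 2 * beta ** 3 * (1.0 + beta) ** 3)
    bias = (alpha0 * (1.0 + beta) ** 2 * beta ** 3
            * (2.0 * beta ** 3 - beta ** 2 + 4.0 * beta + 1.0) / (p * q4 * d ** 2))
    variance = 2.0 / j_2010
    return bias, variance


# Weak-signal regime (q^2 << 1): compare with 3*alpha0/(2*p*q^4) and 4*alpha0^2/(p*q^4).
b_small, v_small = bias_and_variance(alpha0=1.0, q2=1e-4, p=1e12)
# Strong-signal regime (q^2 >> 1): compare the variance with 2*alpha0^2/(p*q).
_, v_large = bias_and_variance(alpha0=1.0, q2=1e8, p=100.0)
print(b_small, v_small, v_large)
```

With these inputs the weak-signal values agree with the limiting forms (15.162) and (15.163), and the strong-signal variance agrees with (15.165), to within the expected approximation error.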

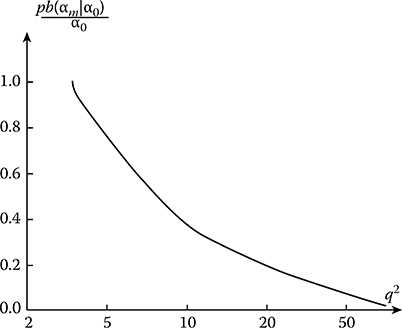

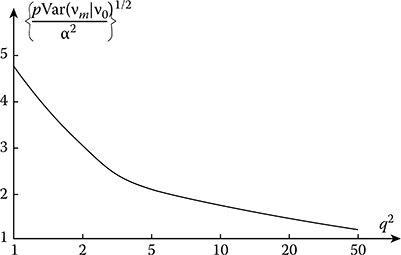

The relative shift of estimate bias pb(αm | α0)/α0 and relative root-mean-square deviation √(pVar{αm|α0})/2α20 as a function of ratio between the variance of investigated stochastic process to power noise q2 within the limits of effective spectral bandwidth are presented in Figures 15.9 and 15.10.

Consider the second example. For this purpose, we analyze the correlation function of the narrowband stochastic process ξ(t)

Rx(τ;v)=σ2ρen(τ)cosvτ,(15.166)

FIGURE 15.9 Relative estimate bias shift as a function of the ratio between the variance of the investigated stochastic process and power noise.

FIGURE 15.10 Relative root-mean-square deviation of estimate as a function of the ratio between the variance of the investigated stochastic process and power noise.

where ρen(τ) is the envelope of normalized correlation function and the condition

$$2\pi\Delta f_{ef}\ll\nu \tag{15.167}$$

is satisfied. We estimate the parameter ν. In the narrowband stochastic process case, the parameter ν plays the role of the central frequency of the spectral density. We assume that the stochastic process with the correlation function given by (15.166) is investigated in the white noise with the correlation function

$$R_n(\tau)=\frac{N_0}{2}\delta(\tau), \tag{15.168}$$

where δ(τ) is the Dirac delta function and the observation time interval [0, T] is much longer than the correlation interval of the stochastic process.

In accordance with (15.111), the logarithm of likelihood ratio functional can be presented in the following form:

M(v)=M1(v)−0.5H(v),(15.169)

where M1(ν) and H(ν) are given by (15.102) and (15.104), respectively. Consider the second term in (15.169) taking into consideration that

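$$\begin{cases}S_n(\omega)=\dfrac{N_0}{2},\\[8pt] S_x(\omega,\nu)=\dfrac{1}{2}\sigma^2\left[\mathcal{F}_1(\omega-\nu)+\mathcal{F}_1(\omega+\nu)\right],\end{cases} \tag{15.170}$$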

where ℱ1(ω) is the Fourier transform of the normalized correlation function envelope ρen(τ). Introducing new variable ω′ = ω - ν and taking into consideration that the investigated stochastic process is the narrowband process, we can write

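$$H(\nu)\approx\frac{T}{\pi}\int_{-\infty}^{\infty}\ln\left[1+\frac{\sigma^2}{N_0}\mathcal{F}_1(\omega)\right]d\omega=\mathrm{const}. \tag{15.171}$$

Consequently, the logarithm of the likelihood ratio functional coincides, to within a constant term, with the output signal

$$M(\nu)=T\int_0^T R_y^*(\tau)\,\vartheta(\tau;\nu)\,d\tau. \tag{15.172}$$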

Taking into consideration (15.106), (15.109), and the fact that the stochastic process ξ(t) is the narrowband process, we obtain

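$$\vartheta(\tau;\nu)=\frac{\sigma^2}{\pi N_0}\int_{-\infty}^{\infty}\frac{\left[\mathcal{F}_1(\omega-\nu)+\mathcal{F}_1(\omega+\nu)\right]\exp\{j\omega\tau\}}{\sigma^2\left[\mathcal{F}_1(\omega-\nu)+\mathcal{F}_1(\omega+\nu)\right]+N_0}\,d\omega=\tilde{\mathcal{F}}_1(\tau)\cos\nu\tau, \tag{15.173}$$

where

$$\tilde{\mathcal{F}}_1(\tau)=\frac{2\sigma^2}{\pi N_0}\int_{-\infty}^{\infty}\frac{\mathcal{F}_1(\omega)\exp\{j\omega\tau\}}{\sigma^2\mathcal{F}_1(\omega)+N_0}\,d\omega. \tag{15.174}$$

Thus, the estimation of the parameter ν can be carried out by the position of the absolute maximum of the function

$$M_1(\nu)=T\int_0^T R_y^*(\tau)\,\tilde{\mathcal{F}}_1(\tau)\cos\nu\tau\,d\tau, \tag{15.175}$$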

where R*y(τ) is the correlation function estimate of the total process given by (15.103).

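Let us define the bias and variance of the estimate of the parameter ν, restricting ourselves to the first approximation. Under this approximation, the estimate is unbiased and, in accordance with (15.143) and (15.150), the variance of the estimate can be presented in the following form:

$$\mathrm{Var}\{\nu_m|\nu_0\}=\frac{2\pi}{T\sigma^4\displaystyle\int_{-\infty}^{\infty}\left\{\frac{d\mathcal{F}_1(\omega)}{d\omega}\right\}^2\frac{d\omega}{\left[\sigma^2\mathcal{F}_1(\omega)+N_0\right]^2}}. \tag{15.176}$$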

If the normalized correlation function envelope takes the form

ρen(τ)=exp{−α|τ|},(15.177)

the Fourier transform can be presented as

$$\mathcal{F}_1(\omega)=\frac{2\alpha}{\alpha^2+\omega^2}. \tag{15.178}$$

As a result, the variance of the correlation function parameter estimate takes the following form:

$$\mathrm{Var}\{\nu_m|\nu_0\}=\frac{\alpha^2\left(1+\sqrt{1+q^2}\right)^3\sqrt{1+q^2}}{q^4p}, \tag{15.179}$$

where

$$q^2=\frac{2\sigma^2}{N_0\alpha}. \tag{15.180}$$

If q² ≪ 1 and 2σ²T/N0 ≫ 1, the variance of the correlation function parameter estimate simplifies to

$$\mathrm{Var}\{\nu_m|\nu_0\}=\frac{8\alpha^2}{q^4p}. \tag{15.181}$$

If q² ≫ 1, then

$$\mathrm{Var}\{\nu_m|\nu_0\}=\frac{\alpha^2}{p}, \tag{15.182}$$

that is, the variance of the central frequency estimate of the stochastic process spectral density is inversely proportional to the product of the correlation interval and the observation time interval. Figure 15.11 presents the root-mean-square deviation √pVar{vm|v0}/α2 as a function of the ratio q² between the variance of the investigated stochastic process and the noise power within the limits of the effective spectral bandwidth. In doing so, we assume that for all values of q² the inequality q²p ≫ 1 is satisfied.
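The variance formula (15.179) and its two limiting forms can be compared directly. The following sketch is only an illustration with hypothetical numerical values; the function name is not from the text.

```python
import math


def central_frequency_variance(alpha, q2, p):
    """Variance of the central-frequency estimate according to (15.179)."""
    root = math.sqrt(1.0 + q2)
    return alpha ** 2 * (1.0 + root) ** 3 * root / (q2 ** 2 * p)


alpha, p = 2.0, 1.0e3
weak = central_frequency_variance(alpha, 1e-6, p)     # q^2 << 1, compare with (15.181)
strong = central_frequency_variance(alpha, 1e10, p)   # q^2 >> 1, compare with (15.182)
print(weak / (8.0 * alpha ** 2 / (1e-12 * p)))         # ratio close to 1
print(strong / (alpha ** 2 / p))                       # ratio close to 1
```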

The optimal estimate of stochastic process correlation function can be found in the form of estimations of the elements Rij of the correlation matrix R or elements Cij of the inverse matrix C. In the case of Gaussian stationary stochastic process with the multidimensional probability density function given by (12.169), the solution of likelihood ratio equation

$$\frac{\partial f_N(x_1,x_2,\ldots,x_N|C)}{\partial C_{ij}}=0 \tag{15.183}$$

allows us to obtain the estimates Cij and, consequently, the estimates of elements Rij of correlation matrix R.

FIGURE 15.11 Relative root-mean-square deviation √pVar{vm|v0}/α2 as a function of the ratio between the variance of the investigated stochastic process and power noise.

15.4 Correlation Function Estimation Methods Based on Other Principles

Under practical realization of analog correlators based on the estimate given by (15.2), a multiplication of two stochastic processes is most difficult to carry out. We discussed previously that for this purpose there is a need to use the circuits performing a multiplication in accordance with (13.201). The described flowchart consists of two quadrators. There are two methods [1] called the interference and compensation methods using a single quadrator. In doing so, it is assumed that the variance of investigated stochastic process is known very well. The interference method is based on the following relationship:

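$$R(\tau)=\pm\frac{1}{2}\left\langle[x(t-\tau)\pm x(t)]^2\right\rangle\mp\sigma^2. \tag{15.184}$$

It is natural to use the following function to estimate the correlation function given by (15.184)

$$\tilde R(\tau)=\pm\frac{1}{2T}\int_0^T[x(t-\tau)\pm x(t)]^2\,dt\mp\sigma^2. \tag{15.185}$$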

As we can see from (15.184) and (15.185), the estimate of correlation function is not biased.
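A sampled-data sketch of the interference method may help here. It assumes uniformly spaced samples, replaces the time integral in (15.185) by a sample mean, and takes the variance σ² as known, as the method requires. The AR(1) test signal and all function names are assumptions made for illustration. The sign convention is written so that both sign choices reduce to R(τ) on average.

```python
import math
import random


def ar1_gaussian(n, a, rng):
    """Zero-mean, unit-variance Gaussian AR(1) sequence with R(k) = a**|k| (test signal)."""
    x = [rng.gauss(0.0, 1.0)]
    scale = math.sqrt(1.0 - a * a)
    for _ in range(n - 1):
        x.append(a * x[-1] + scale * rng.gauss(0.0, 1.0))
    return x


def direct_estimate(x, lag):
    """Multiplier-based estimate: sample mean of x(t - tau) * x(t)."""
    n = len(x) - lag
    return sum(x[i] * x[i + lag] for i in range(n)) / n


def interference_estimate(x, lag, sigma2, sign=+1):
    """Single-squarer estimate: +/- (half the mean square of x(t - tau) +/- x(t), minus sigma^2)."""
    n = len(x) - lag
    half_mean_square = 0.5 * sum((x[i] + sign * x[i + lag]) ** 2 for i in range(n)) / n
    return sign * (half_mean_square - sigma2)


rng = random.Random(7)
x = ar1_gaussian(50_000, 0.5, rng)
plus = interference_estimate(x, 2, sigma2=1.0, sign=+1)
minus = interference_estimate(x, 2, sigma2=1.0, sign=-1)
print(plus, minus, direct_estimate(x, 2))    # all three should be near 0.5**2 = 0.25
```

Averaging the two sign variants reproduces the direct product estimate exactly, which is a convenient sanity check on the squarer-based formulation.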

Let us define the variance of correlation function estimate assuming that the investigated process is Gaussian. Suppose that we use the sign “+” in the square brackets in (15.184) and (15.185). Then

$$\mathrm{Var}\{\tilde R(\tau)\}=\left\langle\left\{\frac{1}{T}\int_0^T x(t)\,x(t-\tau)\,dt\right\}^2\right\rangle-R^2(\tau)+\frac{1}{T^2}\int_0^T\!\!\int_0^T\left\langle x(t_1)\,x(t_1-\tau)\left[x^2(t_2)+x^2(t_2-\tau)\right]\right\rangle dt_1\,dt_2-2\sigma^2R(\tau)+\left\langle\left\{\frac{1}{2T}\int_0^T\left[x^2(t)+x^2(t-\tau)\right]dt\right\}^2\right\rangle-\sigma^4. \tag{15.186}$$

The first and second terms on the right side of (15.186) represent the variance of the correlation function estimate R*(τ) according to (15.2). The other terms on the right side of (15.186) characterize the increase in the variance of the correlation function estimate according to (15.185) compared to the estimate given by (15.2). Making mathematical transformations with the introduction of new variables, as it was done earlier, we can write

$$\mathrm{Var}\{\tilde R(\tau)\}=\mathrm{Var}\{R^*(\tau)\}+\frac{1}{T}\int_0^T\left(1-\frac{z}{T}\right)\left\{2R^2(z)+R^2(z+\tau)+R^2(z-\tau)+4R(z)\left[R(z+\tau)+R(z-\tau)\right]\right\}dz. \tag{15.187}$$

As τ → 0

$$\mathrm{Var}\{\tilde R(0)\}\approx\frac{16}{T}\int_0^T\left(1-\frac{z}{T}\right)R^2(z)\,dz, \tag{15.188}$$

and, in this case, the variance of the correlation function estimate given by (15.185) is four times the variance of the correlation function estimate given by (15.2).

If the condition T ≫ τcor is satisfied, the variance of correlation function estimate given by (15.185) can be presented in the following form:

At T ≫ τ ≫ τcor, we have

Under accepted initial conditions, we obtain

As applied to the exponential correlation function given by (12.13) and if the condition T ≫ τcor is satisfied, the variance of correlation function estimate given by (15.185) can be written in the following form:

Using the compensation method to measure the correlation function, the function

is formed and a selection of the factor γ ensuring a minimum of the function μ(τ, γ) is performed. In doing so, the factor γ becomes numerically equal to the normalized correlation function value. Thus, likelihood defining the minimum of the function μ(τ, γ) based on the condition

we obtain

Consequently, the compensation measurer of correlation function should generate the function of the following form:

Minimizing the function μ*(τ, γ) given by (15.196) with respect to the parameter γ, we are able to obtain the estimate of normalized correlation function γ = ℛ(τ). Solving the equation

we can see that the procedure to define the estimate of the correlation function R*(τ) is equivalent to the estimate that can be presented in the following form:

As was shown in Ref. [1], as applied to the estimate by minimum of the function μ(τ, γ) given by (15.196), the requirements of quadrator are less stringent compared to the requirements of quadrators used by the previously discussed methods of correlation function measurement.

Determine the statistical characteristics of normalized correlation function estimate of the Gaussian stochastic process. For this purpose, we present the numerator and denominator in (15.198) in the following form:

As discussed earlier,

Their variances are given by (15.9) and (13.62), respectively. Henceforth, we assume that the condition T ≫ τcor is satisfied. In this case, the error of variance estimate is negligible compared to the true value of variance

Because of this, we can use the following approximation of estimate given by (15.198)

Under the definition of the bias and variance of estimate, a limitation is imposed by the terms containing the moments of random variables Δℛ(τ) and ΔVar/σ2, and the order of these terms is not higher than 2. Under this approximation, the mathematical expectation of estimate of the normalized correlation function (15.204) can be presented in the following form:

Thus, the estimate of the normalized correlation function given by (15.198) is characterized by the bias

The product moment 〈Δℛ(τ)ΔVar〉 can be presented in the following form:

Determining the fourth product moment and making transformations and introducing new variables under the condition T ≫ τcor, as it was done before, we can write

Taking into consideration (15.208) and the variance of variance estimate given by (13.63), we obtain the estimate bias in the following form:

To define the variance of the normalized correlation function estimate

we determine accurate with the terms of the moments and ΔVar of the second order

Taking into consideration (15.205) and the earlier-given moments, we obtain

As we can see from (15.209) and (15.212), as τ → 0 the bias and variance of estimate given by (15.198) tend to approach zero, since at τ = 0, according to (15.198), the normalized correlation function estimate is not a random variable.

As applied to the Gaussian stochastic process with the exponential correlation function given by (12.13), the bias and variance of the normalized correlation function estimate take the following form:

The sign or polar methods of measurements allow us to simplify essentially the experimental investigation of correlation and mutual correlation functions. Delay and multiplication of stochastic processes can be realized very simply by circuitry. The sign methods of correlation function measurements are based on the existence of a functional relationship between the correlation functions of the initial stochastic process ξ(t) and the transformed stochastic process η(t) = sgn ξ(t). The stochastic process η(t) is obtained by nonlinear inertialess transformation of initial stochastic process ξ(t) by the ideal two-sided limiter with transformation characteristic given by (12.208).

As applied to the Gaussian stochastic process, its normalized correlation function is related to the correlation function ρ(τ) of the transformed stochastic process η(t) = sgn ξ(t) by the following relationship:

where

is the probability of coincidence between the positive signs of functions η(t) and η(t − τ). The estimate of the probability P+(τ) can be obtained as a signal averaging by time at the matching network output of positive values of the stochastic functions η(t) and η(t − τ) realizations. If the stochastic process is non-Gaussian, a relationship between the correlation functions of initial and transformed by the ideal limiter stochastic processes is very complex. For this reason, the said method of correlation function measurement is restricted. The method of correlation function measurement using additional processes by analogy with the discussed method of estimation of the mathematical expectation and variance of stochastic process is widely used.
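The sign method for a Gaussian process can be sketched as follows. The example assumes the classical arcsine law for a zero-mean Gaussian process, under which the correlation function of the hard-limited process equals (2/π) arcsin of the normalized correlation function of the input. It works with the averaged product of signs rather than with the coincidence probability P+(τ). The AR(1) test signal and the function names are hypothetical.

```python
import math
import random


def ar1_gaussian(n, a, rng):
    """Zero-mean, unit-variance Gaussian AR(1) sequence with normalized correlation a**|k|."""
    x = [rng.gauss(0.0, 1.0)]
    scale = math.sqrt(1.0 - a * a)
    for _ in range(n - 1):
        x.append(a * x[-1] + scale * rng.gauss(0.0, 1.0))
    return x


def sign_correlation(x, lag):
    """Time-averaged product sgn x(t - tau) * sgn x(t) of the hard-limited samples."""
    signs = [1 if v >= 0.0 else -1 for v in x]
    n = len(signs) - lag
    return sum(signs[i] * signs[i + lag] for i in range(n)) / n


def normalized_correlation_from_signs(x, lag):
    """Invert the arcsine law (valid only for Gaussian input)."""
    return math.sin(0.5 * math.pi * sign_correlation(x, lag))


rng = random.Random(1)
x = ar1_gaussian(200_000, 0.8, rng)
print(normalized_correlation_from_signs(x, 1))    # should be close to 0.8
```

Only comparisons and sign multiplications are needed on the data path, which is the circuit simplification the method is valued for.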

Assume that the investigated stochastic process η(t) has zero mathematical expectation. Consider two sign functions

where the mutually independent additional stationary stochastic processes μ1(t) and μ2(t) have the same uniform probability density functions given by (12.205) and the condition (12.206) is satisfied. As mentioned previously, the conditional stochastic processes η1(t|x1) and η2[(t − τ)|x2] are mutually independent at the fixed values ξ(t) = x1 and η(t − τ) = x2. Taking into consideration (12.209), we obtain

The unconditional mathematical expectation of product between two stochastic functions can be presented in the following form:

Thus, the function

where y1i and y2i are the samples of the stochastic sequences η1i and η2i, can be considered as the estimate of the correlation function to be used for the investigation of the stochastic process at discrete instants. In this case, the estimate will be unbiased. As in the case where additional stochastic functions are used to estimate the variance (see Section 13.3), the operations of multiplication and summation in (15.220) are easily replaced by determining the difference between the estimated probabilities of polarity coincidence and noncoincidence of the sampled values y1i and y2i. Delay operations of sign functions can be implemented by circuitry.

Determine the variance of correlation function estimate given by (15.220) assuming that the samples are pairwise independent, that is,

we obtain

The double sum can be presented in the form of two sums by analogy with (13.94). At this time, (13.55) is true. Define the conditional product moment 〈(η1iη1jη2j| x1i, x2i, x1j, x2j)〉 if i ≠ j under the condition

Taking into consideration a statistical independence between η1 and η2, mutual independence between the conditional random values η1(ti|x1i ) and η2 [(ti − τ)|x2i], and (12.209), the conditional product moment can be written in the following form:

Given that the random variables ηi and ηj are independent of each other, the unconditional product moment can be presented in the following form:

Substituting (13.96) and (15.225) into (13.94) and then into (15.222), we obtain

According to (15.220), the correlation function estimate satisfies the condition given by (12.206), that is, σ2 ≪ A2. For this reason, the variance of correlation function estimate is defined by half-intervals of possible values of additional stochastic processes. Comparing (15.226) and (15.25), we obtain

As we can see from (15.227), since the condition σ2 ≪ A2 is satisfied, the correlation function estimate given by (15.220) is worse compared to the correlation function estimate given by (15.21).

15.5 Spectral Density Estimate of Stationary Stochastic Process

By definition, the spectral density of stationary stochastic process is the Fourier transform of correlation function

The inverse Fourier transform takes the following form:

As we can see from (15.229), at τ = 0 we obtain the variance of stochastic process:

As applied to the ergodic stochastic process with zero mathematical expectation, the correlation function is defined by (15.1). Because of this, we can rewrite (15.1) in the following form:

The received realization of stochastic process can be presented in the following form:

In the case of physically realized stochastic processes, the following condition is satisfied:

For the realization of the stochastic process, the Fourier transform takes the following form:

Introducing a new variable z = t − τ, we can write

Substituting (15.235) into (15.231) and taking into consideration (15.234), we obtain

Formula (15.236) is not suitable for the definition of spectral density as a characteristic of the stochastic process averaged in time. The reason is that the function T⁻¹|X(jω)|² is a stochastic function of the frequency ω. Like the stochastic function x(t), this function fluctuates randomly about its mathematical expectation and possesses a variance that does not tend to zero as the observation time interval increases. Because of this, to obtain the averaged characteristic corresponding to the definition of spectral density according to (15.228), the spectral density S1(ω) should be averaged over a set of realizations of the investigated stochastic process and we need to consider the function

Consider the statistical characteristics of estimate of the function

where the random spectrum X(jω) of stochastic process realization is given by (15.234).

The mathematical expectation of spectral density estimate given by (15.238) takes the following form:

After introduction of the new variables τ = t2 − t1 and t2 = t, the double integral in (15.239) can be transformed into a single integral, that is,

If the condition T ≫ τcor is satisfied, we can neglect the second term compared to unity in the parentheses in (15.240), and the integration limits can be extended to ±∞. Consequently, as T → ∞, we can write

that is, as T → ∞, the spectral density estimate of stochastic process is unbiased.

Determine the correlation function of spectral density estimate

As applied to the Gaussian stochastic process, (15.242) can be reduced to

Taking into consideration (15.229) and (12.122) and as T → ∞, we obtain

If the condition ωT ≫ 1 is satisfied, we can use the following approximation:

If

then

which means that if the frequencies are detuned by a multiple of 2πT⁻¹, the spectral components are uncorrelated. When 0.5(ω1 − ω2)T ≫ 1, we can neglect the correlation between the estimates of spectral density given by (15.238).

The variance of estimate of the function S(ω1) can be defined by (15.244) using the limiting case at ω1 = ω2 = ω:

If the condition ωT ≫ 1 is satisfied (as T → ∞), we obtain

Thus, in spite of the fact that the estimate of spectral density given by (15.238) is unbiased, it is not acceptable because the variance of the estimate is not smaller than the squared true value of the spectral density.

Averaging the function over a set of realizations is not possible, as a rule. Some indirect methods to average the function are discussed in Refs. [3,5,6]. The first method is based on the use of the spectral density averaged over a frequency band instead of the estimate of spectral density defined at a point (the estimate at a given frequency). In doing so, at T = const, the wider the frequency range over which the averaging is carried out, the smaller the variance of the spectral density estimate. However, as a rule, there is an estimate bias that increases with an increase in the frequency range over which the averaging is carried out. In general, this averaged estimate of spectral density can be presented in the following form:

where W(ω) is the even weight function of frequency ω or as it is called in other words the function of spectral window. The widely used functions W(ω) can be found in Refs. [3,5,6].

As T → ∞, the bias of spectral density estimate can be presented in the following form:

As applied to the narrowband spectral window W(ω), the following expansion

is true, where S′(ω) and S″(ω) are the derivatives with respect to the frequency ω. Because of this, we can write

If the condition ωT ≫ 1 is satisfied (as T → ∞), we obtain [1]

As we can see from (15.254), as T → ∞ the variance of the estimate tends to zero; that is, the estimate of the spectral density is consistent.

The second method to obtain the consistent estimate of spectral density is to divide the observation time interval [0, T] into N subintervals with duration T0 < T, to define the estimate for each subinterval, and subsequently to determine the averaged estimate

Note that according to (15.240), at T = const, an increase in N (or decrease in T0) leads to an increase in the bias of the estimate. If the condition T0 ≫ τcor is satisfied, the variance of estimate can be approximated by the following form:

As we can see from (15.256), as T → ∞, the estimate given by (15.255) will be consistent.
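The subinterval-averaging method can be sketched in a few lines. The example below assumes uniformly sampled data and approximates the random spectrum X(jω) of each subinterval by a Riemann sum, so that each subinterval estimate is |X(jω)|²/T0. The white-noise test signal, the chosen frequency, and the function names are hypothetical.

```python
import cmath
import random


def periodogram(x, omega, dt=1.0):
    """Single-subinterval estimate |X(j*omega)|^2 / T0 from sampled data."""
    spectrum = sum(v * cmath.exp(-1j * omega * k * dt) for k, v in enumerate(x)) * dt
    return abs(spectrum) ** 2 / (len(x) * dt)


def averaged_periodogram(x, omega, n_sub, dt=1.0):
    """Divide [0, T] into n_sub subintervals of duration T0 and average the estimates."""
    seg = len(x) // n_sub
    parts = [periodogram(x[i * seg:(i + 1) * seg], omega, dt) for i in range(n_sub)]
    return sum(parts) / n_sub


rng = random.Random(3)
noise = [rng.gauss(0.0, 1.0) for _ in range(20_000)]   # unit-variance white sequence, dt = 1
single = periodogram(noise[:50], 1.0)                  # one subinterval: strongly fluctuating
averaged = averaged_periodogram(noise, 1.0, n_sub=400)
print(single, averaged)                                # the averaged value should be near 1
```

For this test signal the expected value of each subinterval estimate is σ²·dt = 1, and averaging 400 roughly uncorrelated subinterval estimates reduces the standard deviation by a factor of about 20.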

In radar applications, sometimes it is worthwhile to obtain the current estimate instead of the averaged summation given by (15.225). Subsequently, the obtained function of time is smoothed by the low-pass filter with the filter constant time τfilter ≫ T0. This low-pass filter is equivalent to estimate by ν uncorrelated estimations of the function , where . In practice, it makes sense to consider only the positive frequencies f = ω(2π)−1. Taking into consideration that the correlation function and spectral density remain even, we can write

According to (15.228) and (15.229), the spectral density G(f) and the correlation function R(τ) take the following form:

In this case, the current estimate, by analogy with (15.238), can be presented in the following form:

where the squared current spectral density can be presented in the following form:

which is expressed through the cosine and sine components of the current spectral density of the realization x(t). As a result of smoothing the estimate by the filter with the impulse response h(t), we can write the averaged estimate of spectral density in the following form:

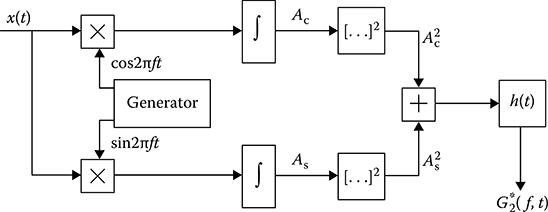

If

the variance of spectral density estimate can be approximated by

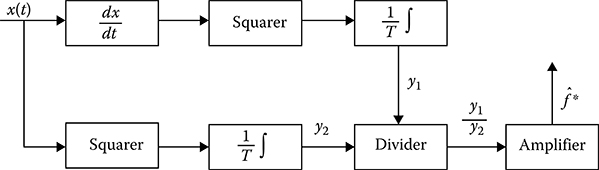

The flowchart illustrating how to define the current estimate of spectral density is shown in Figure 15.12. In the quadrature channels, the input realization of the stochastic process is multiplied by the sine and cosine signals of the reference generator, respectively. The products are integrated, squared, and fed to the summator. The current estimate formed at the summator output is fed to the smoothing filter with the impulse response h(t). The smoothed estimate G(f, t) of spectral density is formed at the filter output.

FIGURE 15.12 Definition of the current estimate of spectral density.

To measure the spectral density over the whole frequency range, we need to change the reference generator frequency discretely or continuously, for example, according to a linear law.
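The quadrature scheme of Figure 15.12 can be sketched in discrete time as follows. Several simplifications are assumptions of this sketch and not statements about the measurer itself: integration is carried out over successive non-overlapping windows of duration T0, the smoothing filter h(t) is replaced by a first-order recursive smoother with time constant `tau_filter`, and the factor 2/T0 normalizes the result to a one-sided density. The function name and all parameter values are hypothetical.

```python
import numpy as np

def current_spectral_estimate(x, fs, f0, t0, tau_filter):
    """Quadrature-channel estimate of the one-sided density G(f0)."""
    n0 = int(round(t0 * fs))               # samples per window T0
    n_win = len(x) // n0
    n_used = n0 * n_win
    t = np.arange(n_used) / fs
    dt = 1.0 / fs
    # Quadrature channels: multiply by the reference, integrate over T0
    xc = (x[:n_used] * np.cos(2 * np.pi * f0 * t)).reshape(n_win, n0)
    xs = (x[:n_used] * np.sin(2 * np.pi * f0 * t)).reshape(n_win, n0)
    a_c = xc.sum(axis=1) * dt
    a_s = xs.sum(axis=1) * dt
    # Square and sum: current (unsmoothed) estimate for each window
    current = 2.0 / t0 * (a_c ** 2 + a_s ** 2)
    # Smoothing: first-order recursive filter, tau_filter >> T0
    alpha = t0 / tau_filter
    smoothed = np.empty_like(current)
    acc = current[0]
    for k, g in enumerate(current):
        acc += alpha * (g - acc)
        smoothed[k] = acc
    return current, smoothed

rng = np.random.default_rng(0)
fs = 1000.0
x = rng.standard_normal(200_000)   # white noise, one-sided density 2/fs
current, smoothed = current_spectral_estimate(
    x, fs, f0=100.0, t0=0.1, tau_filter=5.0)
```

The unsmoothed output fluctuates with a relative spread of about unity, while the smoothed output settles near the true density with a much smaller spread, consistent with the averaging interpretation of the low-pass filter.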

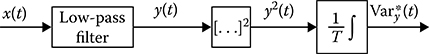

In practice, the filtering method is widely used. The essence of filtering method is the following. The investigated stationary stochastic process is made to pass through the narrowband (compared to the stochastic process spectrum bandwidth) filter with the central frequency ω0 = 2πf0. The ratio between the variance of stochastic process at the narrowband filter output and the bandwidth Δf of the filter is considered as the estimate of spectral density of stochastic process.

Let h(t) be the impulse response of the narrowband filter. The transfer function corresponding to the impulse response h(t) is ℋ(jω). The stationary stochastic process forming at the filter output takes the form:

Spectral density at the filter output can be presented in the following form:

where ℋ(f) is the modulus of the filter transfer function, with the maximum value ℋmax. The narrowband filter bandwidth can be defined as

The variance of stochastic process at the filter output in stationary mode takes the following form:

Assume that the modulus of the filter transfer function is concentrated closely about the frequency f0, so that the spectral density can be considered constant within the bandwidth Δf, that is, G(f) ≈ G(f0). Then

Naturally, the accuracy of this approximation increases with concomitant decrease in the filter bandwidth Δf, since as Δf → 0 we can write

As applied to ergodic stochastic processes, when the variance is defined, averaging over realizations can be replaced by averaging over time as T → ∞

For this reason, the value

is taken as the estimate of spectral density when designing and constructing measurers of the stochastic process spectral density. The values Δf and ℋmax are known in advance. Because of this, measuring the stochastic process spectral density reduces to estimating the variance of the stochastic process at the filter output. Note that (15.272) assumes that the condition TΔf ≫ 1 holds, which means that the observation time interval is much longer than the narrowband filter time constant.
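The filtering method can be sketched numerically as follows. This is an illustration only: the narrowband filter is idealized as a rectangular passband with unit gain (ℋmax = 1) applied in the frequency domain, the estimate is the time-averaged output power divided by Δf, and the function name, sampling rate, and white-noise input are hypothetical choices for the example.

```python
import numpy as np

def filter_method_estimate(x, fs, f0, delta_f):
    """Estimate the one-sided density G(f0) as output power / bandwidth.

    The ideal rectangular passband of width delta_f centered at f0 is an
    assumption of this sketch, not a property of a real filter.
    """
    n = len(x)
    freqs = np.fft.rfftfreq(n, d=1.0 / fs)
    spectrum = np.fft.rfft(x)
    passband = np.abs(freqs - f0) <= delta_f / 2
    y = np.fft.irfft(spectrum * passband, n=n)   # filter output y(t, f0)
    return np.mean(y ** 2) / delta_f             # time-averaged power / bandwidth

rng = np.random.default_rng(0)
fs = 1000.0
x = rng.standard_normal(100_000)   # T = 100 s of unit-variance white noise

g_true = 2.0 / fs                  # one-sided density of this noise
g_hat = filter_method_estimate(x, fs, f0=100.0, delta_f=10.0)
```

Here TΔf = 1000, so the relative fluctuation of the estimate is of the order of a few percent. Shrinking Δf at fixed T increases that fluctuation.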

Based on (15.272), we can design the flowchart of the spectral density measurer shown in Figure 15.13. The spectral density value at a fixed frequency coincides, to within a constant factor, with the variance of the stochastic process at the output of the filter with known bandwidth. The operating principle of the spectral density measurer is evident from Figure 15.13. To define the spectral density for all possible frequencies, we need either to design a multichannel spectrum analyzer or to change the central frequency of the narrowband filter discretely or continuously. As a rule, instead of tuning the filter, the spectrum of the investigated stochastic process is shifted in frequency, for example, according to a linear law of frequency transformation. The structure of such a measurer is depicted in Figure 15.14. The sawtooth generator controls the measurer by changing the heterodyne frequency.

FIGURE 15.13 Measurement of spectral density.

FIGURE 15.14 Measurement of spectral density by spectrum frequency shift.

Let us define the statistical characteristics of the filter method of measuring the spectral density of the stochastic process according to (15.272). The mathematical expectation of the spectral density estimate at the frequency f0 takes the following form:

In a general case, the estimate of spectral density will be biased, that is,

The variance of spectral density estimate is defined by the variation in the variance estimate of stochastic process y(t,f0) at the filter output. If the condition T ≫ τcor is satisfied for the stochastic process y(t,f0), the variance of estimate is given by (13.64), where instead of S(ω) we should understand

As applied to introduced notations G(f) and ℋ(f), we can write

To define the bias and variance of the spectral density estimate of the stochastic process, we assume that the modulus of the transfer function is approximated by the following form:

where Δf = δf. We expand the spectral density G(f) in a power series about the point f = f0 and restrict ourselves to the first three terms of the series, namely,

where G′(f0) and G″(f0) are the first and second derivatives with respect to the frequency f at the point f0. Substituting (15.278) and (15.277) into (15.273) and (15.274), we obtain

Thus, the bias of spectral density estimate of stochastic process is proportional to the squared bandwidth of narrowband filter. To define the variance of estimate for the first approximation, we can assume that the condition G(f) ≈ G(f0) is true within the limits of the narrowband filter bandwidth. Then, according to (15.276), we obtain

The dispersion of spectral density estimate of the stochastic process takes the following form:

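The statement that the bias is proportional to the squared bandwidth can be checked with a short worked calculation. The sketch below assumes an idealized rectangular modulus of the transfer function and uses the notation G*(f0) for the estimate; both are assumptions made here for illustration, and the resulting coefficient applies only to this rectangular shape.

```latex
\begin{aligned}
\mathcal{H}(f) &=
\begin{cases}
\mathcal{H}_{\max}, & |f - f_0| \le \Delta f / 2,\\
0, & \text{otherwise},
\end{cases}\\[4pt]
E\{G^{*}(f_0)\}
 &\approx \frac{1}{\Delta f}\int_{f_0-\Delta f/2}^{f_0+\Delta f/2}
   \Big[G(f_0) + G'(f_0)(f-f_0) + \tfrac{1}{2}G''(f_0)(f-f_0)^2\Big]\,df\\
 &= G(f_0) + \frac{(\Delta f)^2}{24}\,G''(f_0).
\end{aligned}
```

The linear term integrates to zero because the passband is symmetric about f0, so the leading bias term is governed by the second derivative G″(f0) and scales with (Δf)².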
15.6 Estimate of Stochastic Process Spike Parameters

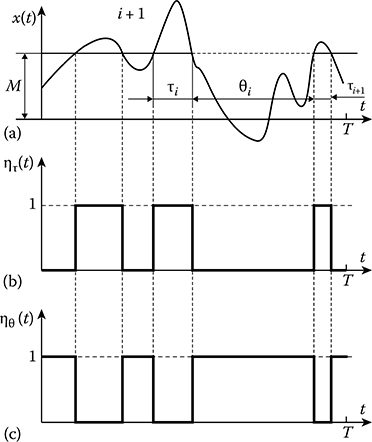

In many applied problems, we need to know the statistical parameters of stochastic process spikes (see Figure 15.15a): the spike mean, that is, the average number of upward crossings of some horizontal level M within the observation time interval [0, T]; the average duration of a spike; and the average interval between spikes. In Figure 15.15a, τi and θi denote the random spike duration and the random interval between spikes, respectively. To measure these parameters, the stochastic process realization x(t) is transformed by a nonlinear transformer (threshold circuitry) into the amplitude-normalized pulse sequence ητ with pulse durations τi (Figure 15.15b) or into the amplitude-normalized pulse sequence ηθ with pulse durations θi (Figure 15.15c), respectively:

Using the pulse sequences ητ and ηθ, we can define the aforementioned parameters of stochastic process spike. Going forward, we assume that the investigated stochastic process is ergodic, as mentioned previously, and the following condition T≫ τcor is satisfied.

FIGURE 15.15 Transformation of stochastic process realization x(t) into the pulse sequence: (a) Example of stochastic process spike; (b) Pulse sequence normalized by the amplitude ητ with duration τi; (c) Pulse sequence normalized by the amplitude ηθ with duration θi.
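The threshold transformation and the spike parameters derived from it can be sketched on a sampled realization as follows. This is a minimal illustration, not the measurer itself. Assumptions: uniform sampling with step `dt`, unit-amplitude indicator sequences standing in for ητ and ηθ, and averages computed as total time divided by the number of upward crossings, which ignores incomplete spikes at the ends of the record. The function name `spike_statistics` is hypothetical.

```python
import numpy as np

def spike_statistics(x, level, dt):
    """Count upward crossings of `level` and estimate average durations."""
    above = x > level              # indicator of a spike (eta_tau)
    below = ~above                 # indicator of a gap (eta_theta)
    # Upward crossing: below the level at sample k, above it at k + 1
    n_up = int(np.count_nonzero(below[:-1] & above[1:]))
    total_above = above.sum() * dt
    total_below = below.sum() * dt
    tau_av = total_above / n_up if n_up else float("nan")
    theta_av = total_below / n_up if n_up else float("nan")
    return n_up, tau_av, theta_av

# Deterministic check: 10 periods of a unit sine, level M = 0.5
dt = 0.001
t = np.arange(10_000) * dt
x = np.sin(2 * np.pi * t)
n_up, tau_av, theta_av = spike_statistics(x, level=0.5, dt=dt)
```

For the sine test signal each period contains one spike lasting one third of the period, which gives a simple way to confirm that the counting logic behaves as intended before applying it to a random realization.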

15.6.1 Estimation of Spike Mean

Taking into consideration the assumptions stated previously, the estimate of the spike number in the given stochastic process realization x(t) within the limits of the observation time interval [0, T] at the level M can be defined approximately as

where τav and θav are the average duration of spike and the average interval between spikes within the limits of the observation time interval [0, T] of the given stochastic process realization at the level M. The true values of the average duration of spikes and the average interval between spikes obtained as a result of averaging by a set of realizations are defined in accordance with Ref. [1] in the following form:

where

F(M) is the probability distribution function

is the average number of spikes per unit time at the level M defined as

where p2(M, ẋ) is the two-dimensional pdf of the stochastic process and its derivative at the same instant.
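For a stationary Gaussian process the average number of upward crossings per unit time can be evaluated explicitly, which gives a convenient numerical check. The sketch below assumes the standard Rice form of the rate, the integral of ẋ·p2(M, ẋ) over positive ẋ, and uses the fact that a stationary Gaussian process and its derivative at the same instant are independent. The names `sigma` and `sigma_dot` (standard deviations of the process and of its derivative) and the numerical values are hypothetical.

```python
import numpy as np

def upcrossing_rate_numeric(level, sigma, sigma_dot, n_grid=200_001):
    """Integrate v * p2(level, v) over v > 0 by the trapezoidal rule."""
    v = np.linspace(0.0, 12.0 * sigma_dot, n_grid)
    p_x = np.exp(-level ** 2 / (2 * sigma ** 2)) / (np.sqrt(2 * np.pi) * sigma)
    p_v = np.exp(-v ** 2 / (2 * sigma_dot ** 2)) / (np.sqrt(2 * np.pi) * sigma_dot)
    integrand = v * p_x * p_v          # joint pdf factorizes (Gaussian case)
    dv = v[1] - v[0]
    return float(np.sum((integrand[:-1] + integrand[1:]) * 0.5 * dv))

def upcrossing_rate_closed_form(level, sigma, sigma_dot):
    """Closed-form result of the same integral for the Gaussian case."""
    return sigma_dot / (2 * np.pi * sigma) * np.exp(-level ** 2 / (2 * sigma ** 2))

sigma, sigma_dot, level = 1.0, 3.0, 1.5
rate_num = upcrossing_rate_numeric(level, sigma, sigma_dot)
rate_cf = upcrossing_rate_closed_form(level, sigma, sigma_dot)
```

The closed form shows that the rate is largest at the zero level and falls off rapidly as |M| grows. Note that the rate is finite only if the process is differentiable, that is, only if `sigma_dot` is finite.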

Note that equality of the average spike duration and the average interval between spikes corresponds to the level M0 defined from the equality

If the condition M ≫ M0 is satisfied, there is a high probability that, on average, a noninteger number of intervals θi between the stochastic process spikes falls within the observation time interval [0, T]; if, on the contrary, the condition M ≪ M0 is satisfied, there is a high probability that, on average, a noninteger number of spike durations τi falls within [0, T]. This leads, on average, to larger errors when N* is measured using formula (15.284) alone. Because of this, while determining the statistical characteristics of the estimate of the average number of spikes, the following relationship

can be considered as the first approximation, where we assume that

For this reason, we use the approximations and .

The mathematical expectation of the average number of stochastic process spikes can be determined in the following form:

According to (15.282), (15.283), (15.285), and (15.286), we obtain

Substituting (15.291) and (15.292) into (15.290), we obtain

In other words, the estimate of average number of the stochastic process spikes at the level M within the limits of the observation time interval [0, T] is unbiased as a first approximation.

The estimate variance of the average number of stochastic process spikes at the level M can be presented in the following form:

In the case of ergodic stochastic processes, the average values can be written in the following form:

and

As we can see from (15.295) and (15.296), the average values are equal to the probabilities of nonexceeding and exceeding the level M by the stochastic process realization x(t) at the instants t1 and t2

Taking into consideration (15.294) through (15.298), introducing new variables t = t1 − t2, and changing the order of integration, we can write

where is the normalized variance of the average number of stochastic process spikes or the relative variance of the average number of stochastic process spikes.

As applied to the Gaussian and Rayleigh stochastic processes, the two-dimensional probability distribution functions can be presented in the form (14.50) and (14.71). In the case of the Gaussian stochastic process with zero mathematical expectation, F(M0) = 1 − F(M0) at M0 = 0. Substituting (14.50) into (15.297) and (15.298), we obtain

In accordance with (15.299), we obtain

where av and cv are defined as in (14.56) and (14.58). The values of the coefficients av are presented in Table 14.1 as a function of v and the normalized level z = M/σ.

Since

we can see from (15.302) that the normalized variance of the average number of stochastic process spikes is symmetrical with respect to the level M/σ = 0. Because of this, we can write

As applied to the Gaussian stochastic process with the normalized correlation function

we obtain

where p = αT. If p ≫ 1

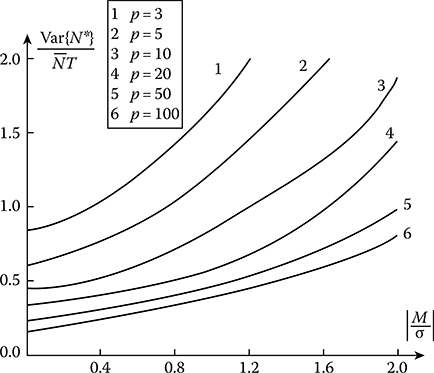

The normalized squared deviation of the average number of stochastic process spikes as a function of the normalized level |z| = |M|/σ, for realizations of the stochastic process with fixed duration and with p = αT as the parameter, is shown in Figure 15.16.

FIGURE 15.16 Normalized squared deviation of the average number of stochastic process spikes as a function of the normalized level for Gaussian realizations of a stochastic process with fixed duration.

As we can see from Figure 15.16, the normalized squared deviation of the average number of stochastic process spikes increases with an increase in the absolute level value |z| and with a decrease in the parameter p = αT. At p = αT ≥ 10 and |z| = 0, we can write

As applied to the Rayleigh stochastic process, we have that when

Substituting (14.71) into (15.297) and (15.298), we obtain

In accordance with (15.299), we obtain

where bv and dv are defined as in (14.86) and (14.83). The values of the coefficients are given in Table 14.3. The normalized squared deviation of the average number of stochastic process spikes as a function of the normalized level, in the case of the exponential normalized correlation function given by (15.305), is shown in Figure 15.17. As we can see from Figure 15.17, the normalized squared deviation of the average number of stochastic process spikes increases as the normalized level deviates farther from the median of the probability distribution function. This phenomenon is explained by a decrease in the number of spikes above or below the level.