13 Estimation of Stochastic Process Variance

13.1 Optimal Variance Estimate of Gaussian Stochastic Process

Let a stationary Gaussian stochastic process ξ(t) with the correlation function

$$R(t_1,t_2)=\sigma^2\mathcal{R}(t_1,t_2) \tag{13.1}$$

be observed at N equidistant discrete time instants $t_i$, i = 1, 2, …, N, such that

$$t_{i+1}-t_i=\Delta=\text{const}. \tag{13.2}$$

Then, at the measurer input we have a set of samples $x_i = x(t_i)$. We further assume that the mathematical expectation of the observed stochastic process is zero. The conditional N-dimensional pdf of the Gaussian stochastic process then takes the form

$$p(x_1,x_2,\ldots,x_N\mid\sigma^2)=\frac{1}{(2\pi\sigma^2)^{N/2}\sqrt{\det\|\mathcal{R}_{ij}\|}}\exp\Big\{-\frac{1}{2\sigma^2}\sum_{i=1}^{N}\sum_{j=1}^{N}x_ix_jC_{ij}\Big\}, \tag{13.3}$$

where

$\det\|\mathcal{R}_{ij}\|$ is the determinant of the matrix formed by the values of the normalized correlation function $\mathcal{R}(t_i,t_j)=\mathcal{R}_{ij}$;

$C_{ij}$ are the elements of the matrix inverse to the matrix $\|\mathcal{R}_{ij}\|$.

The elements $C_{ij}$ are defined by the equation analogous to (12.170):

$$\sum_{l=1}^{N}C_{il}\mathcal{R}_{lj}=\delta_{ij}. \tag{13.4}$$

The conditional multidimensional pdf (13.3) is the multidimensional likelihood function of the parameter σ². Solving the likelihood equation, we obtain the variance estimate

$$\mathrm{Var}_E=\frac{1}{N}\sum_{i=1}^{N}\sum_{j=1}^{N}x_ix_jC_{ij}=\frac{1}{N}\sum_{i=1}^{N}x_iv_i, \tag{13.5}$$

where

$$v_i=\sum_{j=1}^{N}x_jC_{ij}. \tag{13.6}$$

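The random variables $v_i$ have zero mean and obey the Gaussian pdf. Let us show that the random variables $v_i$ are, in general, correlated with one another but are independent of the samples $x_p$ at p ≠ i. Indeed,

$$\langle v_iv_q\rangle=\sum_{j=1}^{N}\sum_{p=1}^{N}C_{ij}C_{qp}\langle x_jx_p\rangle=\sigma^2\sum_{j=1}^{N}C_{ij}\sum_{p=1}^{N}C_{qp}\mathcal{R}_{jp}=\sigma^2\sum_{j=1}^{N}C_{ij}\delta_{jq}=\sigma^2C_{iq}; \tag{13.7}$$

$$\langle x_pv_i\rangle=\sum_{j=1}^{N}C_{ij}\langle x_jx_p\rangle=\sigma^2\sum_{j=1}^{N}C_{ij}\mathcal{R}_{jp}=\sigma^2\delta_{ip}, \tag{13.8}$$

where σ² is the true variance of the stochastic process ξ(t).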
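Because (13.7) and (13.8) are exact statements about covariance matrices, they can be checked without any simulation. The sketch below is only an illustration: the normalized correlation matrix $\mathcal{R}_{ij}=0.7^{|i-j|}$, N = 6, and σ² = 2 are hypothetical choices, not values from the text. It forms C from (13.4), writes v = Cx as in (13.6), and compares the resulting covariances with σ²C and σ²I.

```python
import numpy as np

N, sigma2 = 6, 2.0
k = np.arange(N)
R = 0.7 ** np.abs(k[:, None] - k[None, :])   # hypothetical normalized correlation matrix R_ij
C = np.linalg.inv(R)                          # elements C_ij satisfying (13.4)
cov_x = sigma2 * R                            # <x_j x_p> = sigma^2 R_jp
cov_vv = C @ cov_x @ C.T                      # <v_i v_q> for v = C x, cf. (13.6)-(13.7)
cov_xv = cov_x @ C.T                          # entry (p, i) is <x_p v_i>, cf. (13.8)

print(np.allclose(cov_vv, sigma2 * C))          # (13.7): <v_i v_q> = sigma^2 C_iq
print(np.allclose(cov_xv, sigma2 * np.eye(N)))  # (13.8): <x_p v_i> = sigma^2 delta_ip
```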

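Let us determine the statistical characteristics of the variance estimate, namely, its mathematical expectation and variance. The mathematical expectation of the variance estimate is

$$E\{\mathrm{Var}_E\}=\frac{1}{N}\sum_{i=1}^{N}\sum_{j=1}^{N}C_{ij}\langle x_ix_j\rangle=\frac{\sigma^2}{N}\sum_{i=1}^{N}\sum_{j=1}^{N}C_{ij}\mathcal{R}_{ji}=\sigma^2, \tag{13.9}$$

that is, the variance estimate is unbiased.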

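The variance of the variance estimate can be written as

$$\mathrm{Var}\{\mathrm{Var}_E\}=\frac{1}{N^2}\sum_{i,j=1}^{N}\sum_{p,q=1}^{N}\langle x_ix_jx_px_q\rangle C_{ij}C_{pq}-\sigma^4. \tag{13.10}$$

Using the mixed fourth moment of the Gaussian random variables $x_i$,

$$\langle x_ix_jx_px_q\rangle=\sigma^4\left[\mathcal{R}_{ij}\mathcal{R}_{pq}+\mathcal{R}_{ip}\mathcal{R}_{jq}+\mathcal{R}_{iq}\mathcal{R}_{jp}\right], \tag{13.11}$$

substituting (13.11) into (13.10), and taking into account (13.4), we obtain

$$\mathrm{Var}\{\mathrm{Var}_E\}=\frac{2\sigma^4}{N}. \tag{13.12}$$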
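A quick Monte Carlo check of (13.9) and (13.12) can make the claim concrete that the correlation between samples does not matter. This is a sketch under stated assumptions: the exponential model $\mathcal{R}_{ij}=e^{-\alpha|i-j|\Delta}$, N = 8, σ² = 2, and the simulation sizes are hypothetical. Correlated samples are drawn through a Cholesky factor of σ²ℛ, and (13.5) is applied with C = ℛ⁻¹.

```python
import numpy as np

def exp_corr_matrix(n, alpha_delta):
    """Hypothetical model: R_ij = exp(-alpha * |i - j| * Delta)."""
    k = np.arange(n)
    return np.exp(-alpha_delta * np.abs(k[:, None] - k[None, :]))

def optimal_variance_estimates(X, R):
    """Var_E from (13.5) for every row of X: (1/N) * sum_ij x_i x_j C_ij with C = R^{-1}."""
    C = np.linalg.inv(R)
    return ((X @ C) * X).sum(axis=1) / X.shape[1]

def simulate(n=8, alpha_delta=0.3, sigma2=2.0, trials=200_000, seed=1):
    rng = np.random.default_rng(seed)
    R = exp_corr_matrix(n, alpha_delta)
    L = np.linalg.cholesky(sigma2 * R)           # covariance sigma^2 * R, cf. (13.1)
    X = rng.standard_normal((trials, n)) @ L.T   # each row is one correlated sample set
    est = optimal_variance_estimates(X, R)
    return est.mean(), est.var()

for ad in (2.0, 0.3, 0.05):                      # weak, moderate, strong correlation
    m, v = simulate(alpha_delta=ad)
    print(f"alpha*Delta = {ad}: mean = {m:.3f}, var = {v:.4f}, 2*sigma^4/N = {2 * 2.0**2 / 8:.4f}")
```

Because x has covariance σ²ℛ, the quadratic form xᵀℛ⁻¹x/σ² is chi-square distributed with N degrees of freedom whatever ℛ is, which is why the printed variance should stay near 2σ⁴/N = 1 for all three correlation levels.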

As we can see from (13.12), the variance of the optimal variance estimate does not depend on the values of the normalized correlation function between the samples of the observed stochastic process. This fact leads to results that are difficult or even impossible to explain from a physical viewpoint. Indeed, according to (13.12), by increasing the number of samples within a finite, arbitrarily small time interval, we can obtain a variance estimate with an arbitrarily small variance. The tendency of the variance of the optimal variance estimate of a Gaussian stochastic process to zero is especially evident in the transition from discrete to continuous observation of the stochastic process. Taking into account that

$$N=\sum_{i=1}^{N}\sum_{j=1}^{N}C_{ij}\mathcal{R}_{ij} \tag{13.13}$$

and passing in (13.5) to the limit from discrete to continuous observation at T = const (Δ → 0, N → ∞), we obtain

$$\mathrm{Var}_E=\frac{\int_0^T\!\int_0^T\vartheta(t_1,t_2)\,x(t_1)x(t_2)\,dt_1\,dt_2}{\int_0^T\!\int_0^T\vartheta(t_1,t_2)\,\mathcal{R}(t_1,t_2)\,dt_1\,dt_2}, \tag{13.14}$$

where the function ϑ(t₁, t₂), similar to (12.6), is defined by the equation

$$\int_0^T\vartheta(t_1,t)\,\mathcal{R}(t,t_2)\,dt=\delta(t_2-t_1). \tag{13.15}$$

Determining the mathematical expectation of this variance estimate, we can see that it is unbiased. The variance of the variance estimate takes the form

$$\mathrm{Var}\{\mathrm{Var}_E\}=\frac{2\sigma^4}{\int_0^T\!\int_0^T\vartheta(t_1,t_2)\,\mathcal{R}(t_1,t_2)\,dt_1\,dt_2}. \tag{13.16}$$

Since

$$\lim_{\tau\to t_2}\int_0^T\vartheta(t_1,t_2)\,\mathcal{R}(\tau,t_1)\,dt_1=\delta(0)\to\infty, \tag{13.17}$$

we can see from (13.16) that the variance of the optimal variance estimate of a Gaussian stochastic process asymptotically approaches zero for any observation interval [0, T].

Analogous paradoxical statements arise in a number of problems of statistical theory concerning optimal signal processing under high noise conditions. In particular, given an accurate measurement of the stochastic process variance, it would be possible to detect weak signals in powerful noise within a short observation interval [0, T]. In line with this fact, it was assumed in [1] that, to resolve such detection problems, including those with zero errors, we must give up either the exact knowledge of the correlation function of the observed stochastic processes or the exact measurement of the realizations of the input stochastic process. Evidently, in practice both factors are at work. However, depending on which errors predominate, the limitations arise either from insufficient knowledge of the correlation function or from inaccurate measurement of the realizations of the investigated stochastic process.

Suppose that the errors caused by inaccurate measurement of the investigated stochastic process behave as additive “white” noise with the Gaussian pdf. In other words, we assume that the additive mixture

$$y(t)=x(t)+n(t) \tag{13.18}$$

arrives at the measurer input instead of the realization x(t) of the stochastic process ξ(t), where n(t) is a realization of the additive “white” noise with the correlation function $R_n(\tau)=0.5N_0\delta(\tau)$ and $N_0$ is the one-sided power spectral density of the “white” noise.

To define the characteristics of the optimal variance estimate in the presence of noise, we use the results discussed in Section 15.3 for the optimal estimate of an arbitrary parameter l of the correlation function R(τ, l) of a Gaussian stochastic process observed together with other Gaussian stochastic processes with known correlation functions. For an observation interval that is large compared with the correlation interval of the stochastic process, and for the variance estimate (l ≡ σ²), the estimate is unbiased to a first approximation. The variance of the optimal estimate of the correlation function parameter given by (15.152) can then be simplified to

$$\mathrm{Var}\{\mathrm{Var}_E\}=\frac{1}{\dfrac{T}{4\pi}\displaystyle\int_{-\infty}^{\infty}\frac{S^2(\omega)\,d\omega}{\left[\sigma^2S(\omega)+0.5N_0\right]^2}}, \tag{13.19}$$

where S(ω) is the power spectral density of the stochastic process ξ(t) with unit variance.

For a stochastic process with the power spectral density given by (12.19), the variance of the variance estimate is, to a first approximation,

$$\mathrm{Var}\{\mathrm{Var}_E\}=\frac{4\sigma^2\sqrt{\alpha N_0\sigma^2}}{\alpha T}. \tag{13.20}$$

Denote by

$$P_n=N_0\,\Delta f_{\text{eff}} \tag{13.21}$$

the noise power within the effective spectral band of the investigated stochastic process, where, according to (12.284), $\Delta f_{\text{eff}}=0.25\alpha$, and by

$$q^2=\frac{P_n}{\sigma^2} \tag{13.22}$$

the ratio of the noise power to the variance of the investigated stochastic process, in other words, the noise-to-signal ratio. As a result, we obtain the relative variance of the variance estimate of a stochastic process with an exponential normalized correlation function:

$$r=\frac{\mathrm{Var}\{\mathrm{Var}_E\}}{\sigma^4}=\frac{8q}{p}, \tag{13.23}$$

where $p=\alpha T=T\tau_{\text{cor}}^{-1}$, as mentioned earlier, is the ratio of the observation time to the correlation interval of the stochastic process. In practice, the measurement errors of the instantaneous values $x_i = x(t_i)$ can be made arbitrarily small. At the same time, the measurement error of the normalized correlation function depends, in principle, on the measurement conditions and, first of all, on the observation time of the stochastic process. Note that, in practice, the normalized correlation function is, as a rule, determined in the course of a joint estimate of the variance and the normalized correlation function.
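To see where the first-order formula (13.20) applies, (13.19) can be evaluated numerically. The sketch below assumes that (12.19) is the unit-variance spectrum $S(\omega)=2\alpha/(\alpha^2+\omega^2)$ of the exponential correlation function; that form, and all parameter values, are assumptions made for illustration. The integral over the whole axis is computed with the substitution ω = c·tan θ and a midpoint rule.

```python
import numpy as np

def var_13_19(sigma2, alpha, N0, T, n=20_000):
    """Numerical evaluation of (13.19) for the assumed spectrum S(w) = 2*alpha / (alpha^2 + w^2)."""
    a = 0.5 * N0                                     # "white" noise level 0.5*N0
    c = np.sqrt(alpha**2 + 2 * alpha * sigma2 / a)   # substitution scale; affects accuracy only
    h = (np.pi / 2) / n
    theta = (np.arange(n) + 0.5) * h                 # midpoint rule on (0, pi/2)
    w = c * np.tan(theta)                            # w = c*tan(theta), dw = c / cos^2(theta) dtheta
    S = 2 * alpha / (alpha**2 + w**2)
    g = S**2 / (sigma2 * S + a) ** 2 * c / np.cos(theta) ** 2
    integral = 2 * h * g.sum()                       # even integrand: (-inf, inf) = 2 * (0, inf)
    return 1.0 / (T / (4 * np.pi) * integral)

def var_13_20(sigma2, alpha, N0, T):
    return 4 * sigma2 * np.sqrt(alpha * N0 * sigma2) / (alpha * T)

def var_13_23(sigma2, alpha, N0, T):
    q = np.sqrt(N0 * 0.25 * alpha / sigma2)          # q^2 = P_n / sigma^2 with P_n = N0 * 0.25 * alpha
    p = alpha * T
    return sigma2**2 * 8 * q / p

for N0 in (1e-4, 1e-2, 1.0):
    print(N0, var_13_19(1.0, 1.0, N0, 100.0), var_13_20(1.0, 1.0, N0, 100.0))
```

Under this assumed spectrum, the numerical value of (13.19) and the approximation (13.20) agree closely when $N_0\alpha \ll \sigma^2$ and separate as the noise level grows; (13.20) and (13.23) are the same expression written in different variables.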

It is interesting to compare the optimal variance estimate (13.5) obtained above from the sample with the variance estimate given by the rule widely used in mathematical statistics:

$$\mathrm{Var}^*=\frac{1}{N}\sum_{i=1}^{N}x_i^2. \tag{13.24}$$

We can easily see that the variance estimate of a stationary Gaussian stochastic process with zero mean computed according to (13.24) is unbiased, and the variance of this estimate is

$$\mathrm{Var}\{\mathrm{Var}^*\}=\frac{2\sigma^4}{N^2}\sum_{i=1}^{N}\sum_{j=1}^{N}\mathcal{R}^2[(i-j)\Delta]=\frac{2\sigma^4}{N}\Big\{1+2\sum_{i=1}^{N-1}\Big(1-\frac{i}{N}\Big)\mathcal{R}^2(i\Delta)\Big\}. \tag{13.25}$$

By analogy with (12.193) and (12.194), new indices were introduced and the order of summation was changed; $\Delta = t_{i+1} - t_i$ is the time interval between samples. If the samples are uncorrelated (for Gaussian samples, this means independent), (13.25) coincides with (13.12).
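The cost of using (13.24) on correlated samples can be read off (13.25) directly. A minimal sketch, assuming an exponential correlation sampled at spacing Δ so that $\mathcal{R}(k\Delta)=\rho^{|k|}$ with $\rho=e^{-\alpha\Delta}$ (a hypothetical model): it evaluates both forms of (13.25), confirms that the re-indexing is exact, and compares the result with (13.12).

```python
import numpy as np

def var_naive_double_sum(N, rho, sigma2=1.0):
    """Left form of (13.25) for the assumed correlation R(k*Delta) = rho**|k|."""
    k = np.arange(N)
    Rm = rho ** np.abs(k[:, None] - k[None, :])
    return 2 * sigma2**2 / N**2 * np.sum(Rm**2)

def var_naive_single_sum(N, rho, sigma2=1.0):
    """Right form of (13.25), after re-indexing the double sum."""
    i = np.arange(1, N)
    return 2 * sigma2**2 / N * (1 + 2 * np.sum((1 - i / N) * rho ** (2 * i)))

def var_optimal(N, sigma2=1.0):
    """(13.12): does not depend on the correlation between samples."""
    return 2 * sigma2**2 / N

for rho in (0.0, 0.5, 0.9, 0.99):
    print(rho, var_naive_single_sum(16, rho), var_optimal(16))
```

At ρ = 0 the two estimates have the same variance; as ρ grows, the variance of (13.24) rises well above 2σ⁴/N while (13.12) is unchanged.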

Thus, for the same sample size (number of samples), the optimal variance estimate of a Gaussian stochastic process based on a discrete sample has the same error as the estimate (13.24) applied to uncorrelated samples. This can be explained by the fact that, under optimal processing, the initial sample $x_i$ is multiplied by the newly formed sample $v_i$, which, according to (13.8), is uncorrelated with $x_p$ at p ≠ i. However, if the normalized correlation function is unknown or is known inaccurately, the optimal variance estimate of the stochastic process has a finite variance that depends on the true value of the normalized correlation function. To simplify the investigation of this problem, we compute the variance of the maximum likelihood variance estimate of a zero-mean Gaussian stochastic process from two samples for the following cases: the normalized correlation function, or, as it is often called in this case, the correlation coefficient [2], is known exactly, is unknown, or is known with an error.

When the correlation coefficient ρ is known, the optimal variance estimate (13.5) for two samples takes the form

$$\mathrm{Var}_E=\frac{x_1^2+x_2^2-2\rho x_1x_2}{2(1-\rho^2)}, \tag{13.26}$$

where

$$\rho=\frac{\langle x_1x_2\rangle}{\sigma^2} \tag{13.27}$$

is the correlation coefficient between the samples. The variance of the optimal variance estimate follows from (13.12) at N = 2:

$$\mathrm{Var}\{\mathrm{Var}_E\}=\sigma^4. \tag{13.28}$$

If the correlation coefficient ρ is unknown, it must be treated, like the variance, as an unknown parameter of the pdf. In this case, we need to solve the problem of joint estimation of the variance Var and the correlation coefficient ρ. For the two-dimensional Gaussian sample under consideration, the conditional pdf (the likelihood function) takes the form

$$p_2(x_1,x_2\mid\mathrm{Var},\rho)=\frac{1}{2\pi\,\mathrm{Var}\sqrt{1-\rho^2}}\exp\Big\{-\frac{x_1^2+x_2^2-2\rho x_1x_2}{2\,\mathrm{Var}\,(1-\rho^2)}\Big\}. \tag{13.29}$$

Solving the likelihood equations

$$\begin{cases}\dfrac{\partial p_2(x_1,x_2\mid\mathrm{Var},\rho)}{\partial\,\mathrm{Var}}=0,\\[2mm]\dfrac{\partial p_2(x_1,x_2\mid\mathrm{Var},\rho)}{\partial\rho}=0\end{cases} \tag{13.30}$$

with respect to the estimates $\mathrm{Var}_E$ and $\rho_E$, we obtain the following estimates of the variance and the correlation coefficient:

$$\mathrm{Var}_E=\frac{x_1^2+x_2^2}{2}, \tag{13.31}$$

$$\rho_E=\frac{2x_1x_2}{x_1^2+x_2^2}. \tag{13.32}$$

As we can see from (13.31), the variance estimate is unbiased, and its variance is

$$\mathrm{Var}\{\mathrm{Var}_E\}=\sigma^4(1+\rho^2), \tag{13.33}$$

that is, when the correlation coefficient is unknown, the variance of the variance estimate of the Gaussian stochastic process depends on the absolute value of the correlation coefficient.
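The difference between (13.28) and (13.33) is easy to reproduce by simulation. In this sketch, which is not the experimental procedure of the text, correlated pairs are generated as $x_2=\rho x_1+\sqrt{1-\rho^2}\,\sigma z$; σ² = 2.785 is borrowed from the experiment reported in this section, and ρ = 0.9 and the trial count are hypothetical. The known-ρ estimate (13.26) and the joint estimates (13.31) and (13.32) are then applied to the same pairs.

```python
import numpy as np

def two_sample_estimates(sigma2, rho, trials=400_000, seed=2):
    """Simulate correlated pairs (x1, x2) and apply (13.26), (13.31), and (13.32)."""
    rng = np.random.default_rng(seed)
    z1, z2 = rng.standard_normal((2, trials))
    s = np.sqrt(sigma2)
    x1 = s * z1
    x2 = s * (rho * z1 + np.sqrt(1 - rho**2) * z2)                    # correlation coefficient rho
    known = (x1**2 + x2**2 - 2 * rho * x1 * x2) / (2 * (1 - rho**2))  # (13.26), rho known
    joint = (x1**2 + x2**2) / 2                                       # (13.31), rho unknown
    rho_hat = 2 * x1 * x2 / (x1**2 + x2**2)                           # (13.32)
    return known, joint, rho_hat

sigma2, rho = 2.785, 0.9
known, joint, rho_hat = two_sample_estimates(sigma2, rho)
print(known.var(), sigma2**2)                  # compare with (13.28)
print(joint.var(), sigma2**2 * (1 + rho**2))   # compare with (13.33)
```

Both estimates are unbiased, but with ρ = 0.9 the estimate that ignores ρ has a variance larger by the factor 1 + ρ² = 1.81.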

Let the correlation coefficient ρ be known with a random error ε, that is,

$$\rho=\rho_0+\varepsilon, \tag{13.34}$$

where $\rho_0$ is the true value of the correlation coefficient between the samples of the investigated stochastic process. It can be assumed that the random error ε is statistically independent of the specific sample values $x_1$ and $x_2$. Furthermore, we assume that the mathematical expectation of the random error ε is zero, that is, ⟨ε⟩ = 0, and that $\mathrm{Var}_\varepsilon=\langle\varepsilon^2\rangle$ is the variance of the error in determining the true value $\rho_0$.

Under these assumptions, it follows from (13.26) that the variance estimate is unbiased and its variance can be written as

$$\mathrm{Var}\{\mathrm{Var}^*_E\}=\sigma^4\Big[1+\mathrm{Var}_\varepsilon\,\frac{1+2\rho_0^2}{1-\rho_0^2}\Big]. \tag{13.35}$$

If the random error ε is absent under the definition of the correlation coefficient and we can use only the true value ρ0, that is, Varε = 0, the variance of variance estimate by two samples is defined as σ2 and is independent of the true value ρ0 of the correlation coefficient. The random error under definition of the variance of variance estimate

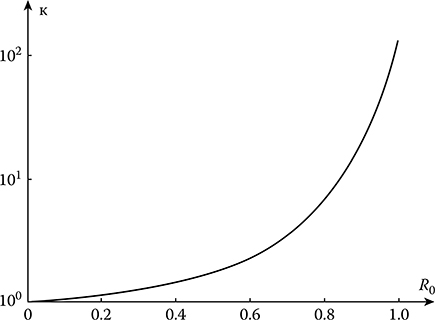

FIGURE 13.1 Variance of the variance estimate versus the true value of the correlation coefficient.

κ=1+2ρ201−ρ20(13.36)

as a function of the true value ρ0 of the correlation coefficient is shown in Figure 13.1. The value κ shows how much the variance of variance estimate increases with the increase in the error under definition of the correlation coefficient ρ. As we can see from (13.35) and Figure 13.1, with increase in the absolute value of the correlation coefficient, the errors in variance estimate also rise rapidly. In doing so, if the module of the correlation coefficient between samples tends to approach unit, then independent of the small variance Varε of the definition of the correlation coefficient the variance of the variance estimate of stochastic process increases infinitely. Compared to ρ0 = 0, at |ρ0| = 0.95, the value κ increases 30 times and at |ρ0| = 0.99 and |ρ0| = 0.999, 150 and 1500 times, respectively. For this reason, at sufficiently high values of the module of correlation coefficient between samples we need to take into consideration the variance of definition of the correlation coefficient ρ. Qualitatively, this result is correct in the case of the optimal variance estimate of the Gaussian stochastic process under multidimensional sampling.
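The growth factors quoted for κ follow directly from (13.36). A short sketch that simply evaluates the formula as written:

```python
def kappa(rho0):
    """Factor (13.36) that multiplies Var_eps in (13.35)."""
    return (1 + 2 * rho0**2) / (1 - rho0**2)

for r in (0.0, 0.5, 0.95, 0.99, 0.999):
    print(f"|rho0| = {r}: kappa = {kappa(r):.1f}")
```

Relative to κ(0) = 1, the values at |ρ₀| = 0.95, 0.99, and 0.999 round to about 29, 149, and 1499, which matches the approximate factors 30, 150, and 1500 given in the discussion of Figure 13.1.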

Although in applications the correlation function is determined with errors, in particular cases, such as simulation, we can create conditions under which the normalized correlation function is known with high accuracy. This allows us to verify experimentally the correctness of the optimal variance estimate of a Gaussian stochastic process for samples with large magnitudes of the correlation coefficient between them.

Experimental investigations of the optimal (13.5) and nonoptimal (13.24) variance estimates from two samples with various values of the correlation coefficient between them were carried out. To simulate various values of the correlation coefficient between samples, the following pair of values was formed:

$$x_i=\frac{1}{L}\sum_{p=1}^{L}y_{i-p}, \tag{13.37}$$

$$x_{i-k}=\frac{1}{L}\sum_{p=1}^{L}y_{i-k-p},\qquad k=0,1,\ldots,L. \tag{13.38}$$

As we can see from (13.37) and (13.38), the samples $x_i$ and $x_{i-k}$ are normalized sums of independent samples $y_p$ obtained from a stationary Gaussian stochastic process with zero mathematical expectation.

FIGURE 13.2 Normalized correlation function of stationary Gaussian stochastic process.

The samples $x_i$ and $x_{i-k}$ obey the Gaussian pdf and have zero mathematical expectations. The correlation function between the newly formed samples is

$$R_k=\langle x_ix_{i-k}\rangle=\frac{1}{L^2}\sum_{p=1}^{L-|k|}\langle y_p^2\rangle=\frac{\sigma^2}{L}\Big(1-\frac{|k|}{L}\Big), \tag{13.39}$$

where σ² here denotes the variance of the samples $y_p$, so that the correlation coefficient between $x_i$ and $x_{i-k}$ equals $1-|k|/L$.

To obtain the samples $x_i$ and $x_{i-k}$ obeying the Gaussian pdf, we use a stationary Gaussian stochastic process with the normalized correlation function presented in Figure 13.2. As we can see from Figure 13.2, samples $y_p$ spaced by more than 0.2 ms are practically uncorrelated. To ensure the statistical independence of the samples $y_p$ in the test, we used a sampling rate of 512 Hz, which corresponds to approximately 2 ms between samples. An experimental test with a sample size of 10⁶ showed that the correlation coefficient between neighboring readings of the sample $y_p$ does not exceed 2.5 × 10⁻³.

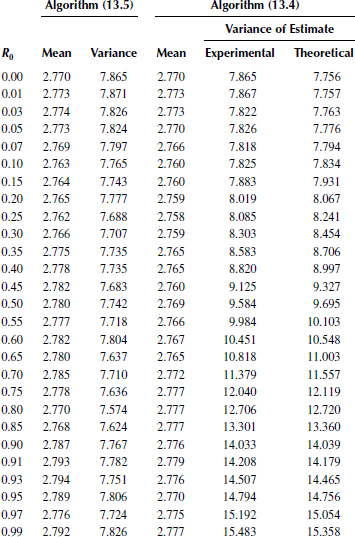

The statistical characteristics of the estimates (13.5) and (13.24) at N = 2 were computed by a microprocessor system. The samples $x_i$ and $x_{i-k}$ were obtained by summing L = 100 independent readings $y_{i-p}$ and $y_{i-k-p}$, respectively, which ensures a variation of the correlation coefficient from 0 to 1 with a step of 0.01 as k changes from 100 to 0. The estimates of the mathematical expectation ⟨Var_j⟩ and the variance Var{Var_j}, where j = 1 corresponds to the optimal estimate (13.5) and j = 2 to the estimate (13.24), were determined from 3 × 10⁵ pairs of samples $x_i$ and $x_{i-k}$. In the course of this test, the mathematical expectation and variance of $x_i$ were determined from 10⁶ samples: the mathematical expectation did not exceed 1.5 × 10⁻³ and was taken as zero, and the variance was found to be σ² = 2.785.
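The pair construction (13.37) and (13.38) can be mimicked in software. In the sketch below, simulated independent standard Gaussian readings stand in for the 512 Hz samples of the experiment (an assumption; the original data are not available), L = 100 as in the text, and window sums are computed with a cumulative sum. The sample correlation between $x_i$ and $x_{i-k}$ should follow $1-k/L$, and the variance of $x_i$ should be close to $1/L$ according to (13.39).

```python
import numpy as np

def moving_average_series(y, L):
    """x_i = (1/L) * sum_{p=1}^{L} y_{i-p}, cf. (13.37), for every i with a full window."""
    c = np.concatenate(([0.0], np.cumsum(y)))
    return (c[L:] - c[:-L]) / L           # c[i] - c[i-L] = y[i-L] + ... + y[i-1]

def pair_correlation(x, k):
    """Sample correlation coefficient between x_i and x_{i-k}, cf. (13.38)-(13.39)."""
    if k == 0:
        return 1.0
    return np.corrcoef(x[k:], x[:-k])[0, 1]

rng = np.random.default_rng(3)
L = 100
y = rng.standard_normal(2_000_000)        # simulated independent readings y_p, unit variance
x = moving_average_series(y, L)
for k in (0, 10, 50, 90, 100):
    print(k, round(pair_correlation(x, k), 3), 1 - k / L)
```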

The experimental statistical characteristics of the variance estimates agree with the theoretical values with sufficiently high accuracy: the maximal relative errors in determining the mathematical expectation of the estimate and its variance do not exceed 1% and 2.5%, respectively. Table 13.1 presents the experimental data on the mathematical expectations and variances of the variance estimate at various values of the correlation coefficient $\rho_0$ between samples. The theoretical values of the variance of the variance estimate for the algorithm (13.24), determined in accordance with the formula

$$\mathrm{Var}\{\mathrm{Var}^*\}=\sigma^4(1+\rho_0^2), \tag{13.40}$$

are also presented in Table 13.1 for comparison. The theoretical value of the variance of the optimal variance estimate (13.5) is Var{Var_E} = σ⁴ = 7.756 according to (13.28). Thus, the experimental data confirm the theoretical results obtained above for the estimates and their characteristics, at least at N = 2.

TABLE 13.1

Experimental Data of Definition of the Mathematical Expectation and Variance of the Variance Estimate

13.2 Stochastic Process Variance Estimate Under Averaging in Time

In practice, when investigating stationary stochastic processes, the value

$$\mathrm{Var}^*=\int_0^T h(t)\,[x(t)-E]^2\,dt \tag{13.41}$$

is taken as the variance estimate, where x(t) is the realization of the observed stochastic process, E is its mathematical expectation, and h(t) is the weight function, whose optimal form is determined from the condition of unbiasedness of the variance estimate

$$\int_0^T h(t)\,dt=1 \tag{13.42}$$

and the condition of minimum variance of the variance estimate. For a stationary Gaussian stochastic process with known mathematical expectation, the variance of the variance estimate can be written, by analogy with (12.130), as

$$\mathrm{Var}\{\mathrm{Var}^*\}=4\sigma^4\int_0^T\mathcal{R}^2(\tau)\,r_h(\tau)\,d\tau, \tag{13.43}$$

where σ² is the true value of the variance, $\mathcal{R}(\tau)$ is the normalized correlation function of the observed stochastic process, and the function $r_h(\tau)$ is given by (12.131).

The optimal weight function for a stochastic process with the exponential normalized correlation function (12.13) is discussed in Ref. [3]. The optimal variance estimate in the sense of the rule (13.41) and the minimal variance of the variance estimate take the form

$$\mathrm{Var}^*_{\text{opt}}=\frac{x_0^2(0)+x_0^2(T)+2\alpha\int_0^T x_0^2(t)\,dt}{2(1+\alpha T)}; \tag{13.44}$$

$$\mathrm{Var}\{\mathrm{Var}^*_{\text{opt}}\}=\frac{2\sigma^4}{1+\alpha T}. \tag{13.45}$$
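A Monte Carlo sketch can check that (13.44) is unbiased and attains (13.45). It assumes that (12.13) is $\mathcal{R}(\tau)=e^{-\alpha|\tau|}$ and simulates the zero-mean Gaussian process with its exact one-step (first-order autoregressive) recursion. The integral in (13.44) is approximated with the trapezoidal rule, and α = 1, σ² = 1, T = 2, and the grid sizes are hypothetical.

```python
import numpy as np

def opt_variance_estimates(alpha, sigma2, T, n_steps, n_paths, rng):
    """Monte Carlo values of (13.44) for a zero-mean Gaussian process with R(tau) = sigma2*exp(-alpha|tau|)."""
    dt = T / n_steps
    a = np.exp(-alpha * dt)                        # exact one-step correlation of the process
    noise_sd = np.sqrt(sigma2 * (1 - a * a))
    x = np.sqrt(sigma2) * rng.standard_normal(n_paths)   # stationary start, x_0(0)
    first = x**2
    integral = 0.5 * dt * first                    # trapezoidal rule for the integral of x_0^2
    for k in range(1, n_steps + 1):
        x = a * x + noise_sd * rng.standard_normal(n_paths)
        integral += (0.5 if k == n_steps else 1.0) * dt * x**2
    return (first + x**2 + 2 * alpha * integral) / (2 * (1 + alpha * T))

alpha, sigma2, T = 1.0, 1.0, 2.0
est = opt_variance_estimates(alpha, sigma2, T, n_steps=400, n_paths=40_000,
                             rng=np.random.default_rng(4))
print(est.mean(), est.var(), 2 * sigma2**2 / (1 + alpha * T))   # compare with sigma^2 and (13.45)
```

The endpoint terms $x_0^2(0)$ and $x_0^2(T)$ carry extra weight because the edges of the interval have fewer correlated neighbours than interior points.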

To obtain this optimal estimate, we need to know the correlation function with high accuracy, which is not always possible. For this reason, the weight function is, as a rule, selected for simplicity of realization, provided that the estimate remains unbiased and its variance decreases monotonically to zero as the observation time interval increases. The function given by (12.112) is widely used as the weight function.

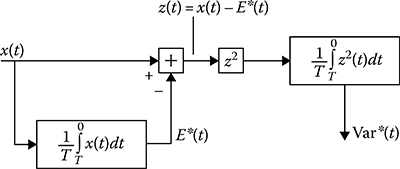

We assume that the investigated stochastic process is stationary and that the current estimate of its variance within the observation time interval [0, T] is

$$\mathrm{Var}^*(t)=\frac{1}{T}\int_0^T\Big\{x(\tau)-\frac{1}{T}\int_0^T x(z)\,dz\Big\}^2d\tau=\frac{1}{T}\int_0^T\big[x(\tau)-E^*(t)\big]^2d\tau, \tag{13.46}$$

where $E^*(t)$ is the estimate of the mathematical expectation at the instant t. The flowchart of the measurer operating in accordance with (13.46) is shown in Figure 13.3.

FIGURE 13.3 Flowchart of measurer operating in accordance with (13.46).

Let us determine the mathematical expectation and the variance of the variance estimate, assuming that the investigated stochastic process is a stationary Gaussian process with unknown mathematical expectation. The mathematical expectation of the variance estimate is

$$\langle\mathrm{Var}^*\rangle=\frac{1}{T}\int_0^T\langle x^2(\tau)\rangle\,d\tau-\frac{1}{T^2}\int_0^T\!\!\int_0^T\langle x(\tau_1)x(\tau_2)\rangle\,d\tau_1\,d\tau_2. \tag{13.47}$$

Taking into account that

$$x_0(t)=x(t)-E_0 \tag{13.48}$$

and transforming the double integral into a single one by introducing new variables, we obtain

$$\langle\mathrm{Var}^*\rangle=\sigma^2\Big\{1-\frac{2}{T}\int_0^T\Big(1-\frac{\tau}{T}\Big)\mathcal{R}(\tau)\,d\tau\Big\}. \tag{13.49}$$

As we can see from (13.49), when the variance of a stochastic process with unknown mathematical expectation is estimated, the estimate has the bias

$$b\{\mathrm{Var}^*\}=\frac{2\sigma^2}{T}\int_0^T\Big(1-\frac{\tau}{T}\Big)\mathcal{R}(\tau)\,d\tau. \tag{13.50}$$

In other words, the bias of the variance estimate coincides with the variance of the mathematical expectation estimate of the stochastic process (12.116). The bias depends on the observation time interval and on the correlation function of the investigated stochastic process. If the observation time interval is much longer than the correlation interval of the investigated stochastic process and the normalized correlation function does not alternate in sign, the bias of the variance estimate is defined by (12.118).
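For a concrete case, (13.50) can be evaluated for the exponential model $\mathcal{R}(\tau)=e^{-\alpha\tau}$ (assumed here to be the form of (12.13)). The sketch integrates (13.50) with Simpson's rule and compares the result with the closed form $2\sigma^2(p-1+e^{-p})/p^2$, p = αT, which follows from carrying out the integral for this model. The last printed column is 2σ²/p, the large-p limit of that closed form.

```python
import math

def bias_13_50(alpha, T, sigma2=1.0, n=10_000):
    """(13.50) by Simpson's rule, assuming the exponential model R(tau) = exp(-alpha*tau)."""
    h = T / n                                  # n must be even for Simpson's rule

    def f(tau):
        return (1 - tau / T) * math.exp(-alpha * tau)

    s = f(0.0) + f(T) + sum((4 if k % 2 else 2) * f(k * h) for k in range(1, n))
    return 2 * sigma2 / T * s * h / 3

def bias_closed_form(alpha, T, sigma2=1.0):
    """The same integral evaluated analytically for the exponential model, p = alpha*T."""
    p = alpha * T
    return 2 * sigma2 * (p - 1 + math.exp(-p)) / p**2

for T in (0.5, 5.0, 50.0):
    print(T, bias_13_50(1.0, T), bias_closed_form(1.0, T), 2 / T)
```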

An analogous effect is observed when the stochastic process is sampled. Consider the following value as the estimate of the stochastic process variance:

$$\mathrm{Var}^*=\frac{1}{N-1}\sum_{i=1}^{N}\Big\{x_i-\frac{1}{N}\sum_{j=1}^{N}x_j\Big\}^2, \tag{13.51}$$

where N is the total number of samples of the stochastic process taken at the instants t = iΔ, i = 1, 2, …, N. The mathematical expectation of the variance estimate is

$$\langle\mathrm{Var}^*\rangle=\frac{\sigma^2}{N-1}\Big\{N-\frac{1}{N}\sum_{i=1}^{N}\sum_{k=1}^{N}\mathcal{R}[(i-k)\Delta]\Big\}. \tag{13.52}$$

Carrying out the transformation as in Section 12.5, we obtain

$$\langle\mathrm{Var}^*\rangle=\sigma^2\Big\{1-\frac{2}{N-1}\sum_{i=1}^{N}\Big(1-\frac{i}{N}\Big)\mathcal{R}(i\Delta)\Big\}. \tag{13.53}$$

If the samples are uncorrelated, we have

$$\langle\mathrm{Var}^*\rangle=\sigma^2. \tag{13.54}$$

In other words, (13.54) shows that for uncorrelated samples the variance estimate (13.51) is unbiased.

In the general case of correlated samples, the bias of the estimate of the stochastic process variance is

$$b\{\mathrm{Var}^*\}=\frac{2\sigma^2}{N-1}\sum_{i=1}^{N}\Big(1-\frac{i}{N}\Big)\mathcal{R}(i\Delta). \tag{13.55}$$

If the number of samples is much greater than the ratio of the correlation interval of the stochastic process to the sampling period, (13.55) simplifies to

$$b\{\mathrm{Var}^*\}=\frac{2\sigma^2}{N-1}\sum_{i=1}^{N}\mathcal{R}(i\Delta). \tag{13.56}$$
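The bias formula (13.53) can be checked against a direct simulation of (13.51). The sketch assumes stationary first-order autoregressive samples, so that $\mathcal{R}(i\Delta)=\rho^i$; N = 20, the values of ρ, and the trial count are hypothetical. NumPy's `var` with `ddof=1` implements exactly the 1/(N − 1) normalization of (13.51).

```python
import numpy as np

def expected_13_53(N, rho, sigma2=1.0):
    """(13.53) for the assumed correlation R(i*Delta) = rho**i."""
    i = np.arange(1, N)                     # the i = N term of (13.53) is zero
    return sigma2 * (1 - 2 / (N - 1) * np.sum((1 - i / N) * rho**i))

def simulate_13_51(N, rho, sigma2=1.0, trials=200_000, seed=5):
    """Monte Carlo mean of (13.51) for stationary AR(1) samples with correlation rho**|i-k|."""
    rng = np.random.default_rng(seed)
    x = np.empty((trials, N))
    x[:, 0] = np.sqrt(sigma2) * rng.standard_normal(trials)
    for i in range(1, N):
        x[:, i] = rho * x[:, i - 1] + np.sqrt(sigma2 * (1 - rho**2)) * rng.standard_normal(trials)
    return x.var(axis=1, ddof=1).mean()     # ddof=1 gives the 1/(N-1) factor of (13.51)

for rho in (0.0, 0.5, 0.8):
    print(rho, simulate_13_51(20, rho), expected_13_53(20, rho))
```

With ρ = 0.8 and N = 20, the estimate (13.51) underestimates σ² by roughly a third, which is the bias (13.55).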

When measuring the variance of stochastic processes, we can often assume that the mathematical expectation of the stochastic process is zero; the variance estimate is then obtained by smoothing the squared stochastic process with a linear filter with the impulse response h(t), namely,

$$\mathrm{Var}^*(t)=\frac{\int_0^T h(\tau)\,x^2(t-\tau)\,d\tau}{\int_0^T h(\tau)\,d\tau}. \tag{13.57}$$

If the observation time interval is large, that is, the time between the start of the excitation of the filter input by the stochastic process and the estimation instant is much greater than both the correlation interval of the investigated stochastic process and the filter time constant, then

$$\mathrm{Var}^*(t)=\frac{\int_0^\infty h(\tau)\,x^2(t-\tau)\,d\tau}{\int_0^\infty h(\tau)\,d\tau}. \tag{13.58}$$

When a stochastic process with known mathematical expectation is sampled, the value

$$\mathrm{Var}^*=\frac{1}{N}\sum_{i=1}^{N}(x_i-E_0)^2, \tag{13.59}$$

which ensures the unbiasedness of the estimate, is taken as the variance estimate.

Let us define the variance of the variance estimate caused by the finite observation time interval of the stochastic process. Let the investigated stochastic process be stationary, and let the ideal integrator be used as the smoothing filter. In this case, the variance estimate is formed in accordance with (13.46), and its variance takes the form

$$\begin{aligned}\mathrm{Var}\{\mathrm{Var}^*\}={}&\frac{1}{T^2}\int_0^T\!\!\int_0^T\langle x^2(t_1)x^2(t_2)\rangle\,dt_1dt_2-\frac{2}{T^3}\int_0^T\!\!\int_0^T\!\!\int_0^T\langle x^2(t_1)x(t_2)x(t_3)\rangle\,dt_1dt_2dt_3\\&+\frac{1}{T^4}\int_0^T\!\!\int_0^T\!\!\int_0^T\!\!\int_0^T\langle x(t_1)x(t_2)x(t_3)x(t_4)\rangle\,dt_1dt_2dt_3dt_4-\sigma^4\Big\{1-\frac{2}{T}\int_0^T\Big(1-\frac{\tau}{T}\Big)\mathcal{R}(\tau)\,d\tau\Big\}^2.\end{aligned} \tag{13.60}$$

The moments in the integrands of (13.60) cannot be determined for an arbitrary pdf of the investigated stochastic process. For this reason, to establish the main regularities, we assume that the investigated stochastic process is Gaussian with unknown mathematical expectation whose true value is $E_0$. Then, after the corresponding transformations, we have

$$\mathrm{Var}\{\mathrm{Var}^*\}=\frac{4\sigma^4}{T}\Big\{\int_0^T\Big(1-\frac{\tau}{T}\Big)\mathcal{R}^2(\tau)\,d\tau+\frac{2}{T}\Big[\int_0^T\Big(1-\frac{\tau}{T}\Big)\mathcal{R}(\tau)\,d\tau\Big]^2-\frac{1}{T^2}\int_0^T\!\!\int_0^T\!\!\int_0^T\mathcal{R}(t_1-t_2)\,\mathcal{R}(t_1-t_3)\,dt_1dt_2dt_3\Big\}. \tag{13.61}$$

If the mathematical expectation of the investigated stochastic process is known exactly, so that $E^*(t)$ in (13.46) is replaced by the true value $E_0$, the variance estimate is unbiased and its variance takes the form

$$\mathrm{Var}\{\mathrm{Var}^*\}=\frac{4\sigma^4}{T}\int_0^T\Big(1-\frac{\tau}{T}\Big)\mathcal{R}^2(\tau)\,d\tau. \tag{13.62}$$

When the observation time interval is sufficiently large, that is, the condition $T\gg\tau_{\text{cor}}$ is satisfied, (13.62) simplifies to

$$\mathrm{Var}\{\mathrm{Var}^*\}\approx\frac{4\sigma^4}{T}\int_0^\infty\mathcal{R}^2(\tau)\,d\tau\le\frac{4\sigma^4\tau_{\text{cor}}}{T}. \tag{13.63}$$

Using (12.27) and (12.122), under the condition $T\gg\tau_{\text{cor}}$, the variance of the variance estimate can be written as

$$\mathrm{Var}\{\mathrm{Var}^*\}\approx\frac{2}{T}\int_{-\infty}^{\infty}R^2(\tau)\,d\tau=\frac{2}{\pi T}\int_0^\infty S^2(\omega)\,d\omega. \tag{13.64}$$

For the exponential correlation function given by (12.13), the normalized variance of the variance estimate is

$$\frac{\mathrm{Var}\{\mathrm{Var}^*\}}{\sigma^4}=\frac{2p-1+e^{-2p}}{p^2}, \tag{13.65}$$

where p is given by (12.48). Analogous formulae for the variance of the variance estimate can be obtained when the stochastic process is sampled. Naturally, the simplest expressions are obtained in the case of independent readings of the investigated stochastic process.
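For the exponential correlation function, three expressions from this section can be compared side by side as functions of p = αT: the ideal-integrator result (13.65), the optimal-weighting result (13.45), and the large-T approximation (13.63), which for $\mathcal{R}^2(\tau)=e^{-2\alpha\tau}$ reduces to 2/p. The sketch below only evaluates these formulas; it makes no further assumptions.

```python
import math

def rel_var_time_average(p):
    """(13.65): Var{Var*}/sigma^4 for the ideal integrator with exponential correlation, p = alpha*T."""
    return (2 * p - 1 + math.exp(-2 * p)) / p**2

def rel_var_optimal(p):
    """(13.45) divided by sigma^4: optimal weighting (13.44)."""
    return 2 / (1 + p)

def rel_var_large_p(p):
    """(13.63) with the integral of exp(-2*alpha*tau) evaluated: 4/(2p) = 2/p."""
    return 2 / p

for p in (0.1, 1.0, 10.0, 100.0):
    print(p, rel_var_time_average(p), rel_var_optimal(p), rel_var_large_p(p))
```

The simple integrator is never better than the optimal weighting, but the gap is modest. Both tend to 2 as p → 0, where a single squared reading is all that is available, and both approach 2/p for large p.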

Let us determine the variance of the variance estimate (13.51) for the case of independent samples. For this case, we transform (13.51) into the form

$$\mathrm{Var}^*=\frac{1}{N-1}\Big\{\sum_{i=1}^{N}y_i^2-\frac{1}{N}\Big(\sum_{p=1}^{N}y_p\Big)^2\Big\}, \tag{13.66}$$

where $y_i = x_i - E$. As we can see, the variance estimate is unbiased. The variance of the variance estimate of the stochastic process can be written as

$$\mathrm{Var}\{\mathrm{Var}^*\}=\frac{1}{(N-1)^2}\Big\{\sum_{i,j=1}^{N}\langle y_i^2y_j^2\rangle-\frac{2}{N}\sum_{i,p,q=1}^{N}\langle y_i^2y_py_q\rangle+\frac{1}{N^2}\sum_{i,j,p,q=1}^{N}\langle y_iy_jy_py_q\rangle\Big\}-\sigma^4. \tag{13.67}$$

To compute the sums in the braces, we separate the terms with equal indices. Taking into account the independence of the samples, we have

$$\sum_{i,j=1}^{N}\langle y_i^2y_j^2\rangle=\sum_{i=1}^{N}\langle y_i^4\rangle+\sum_{\substack{i,j=1\\ i\ne j}}^{N}\langle y_i^2\rangle\langle y_j^2\rangle=N\mu_4+N(N-1)\mu_2^2, \tag{13.68}$$

where

$$\mu_\nu=\langle y_i^\nu\rangle=\langle(x_i-E)^\nu\rangle \tag{13.69}$$

is the central moment of the νth order and, naturally, $\mu_2=\sigma^2$. Analogously, we obtain

$$\sum_{i,p,q=1}^{N}\langle y_i^2y_py_q\rangle=N\mu_4+N(N-1)\mu_2^2; \tag{13.70}$$

$$\sum_{i,j,p,q=1}^{N}\langle y_iy_jy_py_q\rangle=N\mu_4+3N(N-1)\mu_2^2. \tag{13.71}$$

Substituting (13.68), (13.70), and (13.71) into (13.67), we obtain

$$\mathrm{Var}\{\mathrm{Var}^*\}=\frac{\mu_4-\big[1-2/(N-1)\big]\sigma^4}{N}. \tag{13.72}$$

In the case of a Gaussian stochastic process, we have $\mu_4=3\sigma^4$. As a result, we obtain

$$\mathrm{Var}\{\mathrm{Var}^*\}=\frac{2\sigma^4}{N-1}. \tag{13.73}$$

If the mathematical expectation is known, the unbiased variance estimate is defined as

$$\mathrm{Var}^*=\frac{1}{N}\sum_{i=1}^{N}(x_i-E_0)^2, \tag{13.74}$$

and the variance of the variance estimate takes the form

$$\mathrm{Var}\{\mathrm{Var}^*\}=\frac{\mu_4-\sigma^4}{N}. \tag{13.75}$$

In the case of a Gaussian stochastic process, the variance of the variance estimate is

$$\mathrm{Var}\{\mathrm{Var}^*\}=\frac{2\sigma^4}{N}. \tag{13.76}$$

Comparing (13.76) with (13.73), we can see that at N ≫ 1 these formulas coincide.
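The formulas (13.72), (13.73), and (13.76) can be checked by simulation with independent samples. In the sketch below, N = 10, σ² = 1.5, and the trial count are hypothetical. A uniform pdf on [−A, A] is included to exercise the general (13.72), using the standard moments σ² = A²/3 and μ₄ = A⁴/5 for that pdf.

```python
import numpy as np

rng = np.random.default_rng(6)
N, trials = 10, 200_000

# Gaussian samples with sigma^2 = 1.5 and E0 = 0 (hypothetical values)
sigma2 = 1.5
x = np.sqrt(sigma2) * rng.standard_normal((trials, N))
var_unknown_mean = x.var(axis=1, ddof=1)      # (13.51): mean estimated from the same samples
var_known_mean = (x**2).mean(axis=1)          # (13.74) with E0 = 0
print(var_unknown_mean.var(), 2 * sigma2**2 / (N - 1))   # compare with (13.73)
print(var_known_mean.var(), 2 * sigma2**2 / N)           # compare with (13.76)

# Uniform samples on [-A, A]: sigma^2 = A^2/3, mu_4 = A^4/5, to exercise the general (13.72)
A = 1.0
u = rng.uniform(-A, A, size=(trials, N))
s2, mu4 = A**2 / 3, A**4 / 5
var_uniform = u.var(axis=1, ddof=1)
print(var_uniform.var(), (mu4 - (1 - 2 / (N - 1)) * s2**2) / N)   # compare with (13.72)
```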

For the iterative variance estimate of a stochastic process with zero mathematical expectation, based on (12.342) and (12.345) we obtain:

In the case of a discrete stochastic process

$$\mathrm{Var}^*[N]=\mathrm{Var}^*[N-1]+\gamma[N]\big\{x^2[N]-\mathrm{Var}^*[N-1]\big\}; \tag{13.77}$$

In the case of a continuous stochastic process

$$\frac{d\,\mathrm{Var}^*(t)}{dt}=\gamma(t)\big\{x^2(t)-\mathrm{Var}^*(t)\big\}. \tag{13.78}$$

As in Section 12.9, one can show that the optimal value of the factor γ[N] is equal to $N^{-1}$.
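With γ[N] = 1/N, the recursion (13.77) reproduces the batch estimate (13.74) with E₀ = 0 exactly, up to rounding. The sketch below checks only this algebraic identity and does not repeat the optimality argument of Section 12.9; the test signal is hypothetical.

```python
import numpy as np

def recursive_variance(samples):
    """(13.77) with gamma[N] = 1/N for a zero-mean process (E0 = 0 assumed)."""
    v = 0.0
    for n, xn in enumerate(samples, start=1):
        v += (xn * xn - v) / n       # Var*[N] = Var*[N-1] + gamma[N] * (x^2[N] - Var*[N-1])
    return v

rng = np.random.default_rng(8)
x = rng.normal(0.0, 2.0, size=1000)
print(recursive_variance(x), np.mean(x**2))   # (13.74) with E0 = 0
```

The recursive form needs only the previous estimate and the new sample, which is why it suits measurers that update the estimate continuously.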

13.3 Errors Under Stochastic Process Variance Estimate

As we can see from the formulas for the variance estimate of a stochastic process, squaring the stochastic process realization (or its samples) is an essential operation. The device carrying out this operation is called the quadratic transformer or quadrator. A deviation of the quadrator characteristic from the square-law function leads to additional errors in measuring the stochastic process variance. To define the character of these errors, we assume that the stochastic process has zero mathematical expectation and that the variance estimate is based on independent samples. The transformation characteristic y = g(x) can be represented by a polynomial of the μth order

$$y=g(x)=\sum_{k=0}^{\mu}a_kx^k. \tag{13.79}$$

Substituting (13.79) into (13.59) instead of $x_i^2$ (with $E_0=0$) and carrying out the averaging, we obtain the mathematical expectation of the variance estimate (the term with $a_1$ vanishes because ⟨x⟩ = 0):

$$\langle\mathrm{Var}^*\rangle=\sum_{k=0}^{\mu}a_k\langle x^k\rangle=a_0+a_2\sigma^2+\sum_{k=3}^{\mu}a_k\langle x^k\rangle. \tag{13.80}$$

The estimate bias caused by the difference between the transformation characteristic and the square-law function is

$$b\{\mathrm{Var}^*\}=\sigma^2(a_2-1)+a_0+\sum_{k=3}^{\mu}a_k\langle x^k\rangle. \tag{13.81}$$

For a given transformation characteristic, the coefficients $a_k$ can be determined in advance. Therefore, the bias of the variance estimate caused by the coefficients $a_0$ and $a_2$ can be taken into account. The difficulty is to take into account the sum in (13.81), because this sum depends on the shape of the pdf of the investigated stochastic process:

$$\langle x^k\rangle=\int_{-\infty}^{\infty}x^kf(x)\,dx. \tag{13.82}$$

In the case of a Gaussian stochastic process, the moment of the kth order is

$$\langle x^k\rangle=\begin{cases}1\cdot3\cdot5\cdots(k-1)\,\sigma^k, & \text{if } k \text{ is even};\\ 0, & \text{if } k \text{ is odd}.\end{cases} \tag{13.83}$$
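For a Gaussian input, (13.80), (13.81), and (13.83) give the quadrator-induced bias in closed form. The sketch below uses a hypothetical characteristic $g(x)=0.01+0.98x^2+0.02x^4$ (a small offset, a gain error, and a quartic term), evaluates the bias from the moments, and cross-checks it against a Monte Carlo average of g(x).

```python
import numpy as np

def gaussian_moment(k, sigma):
    """(13.83): <x^k> of a zero-mean Gaussian variable with standard deviation sigma."""
    if k % 2:
        return 0.0
    double_factorial = 1
    for m in range(k - 1, 0, -2):        # 1*3*5*...*(k-1)
        double_factorial *= m
    return double_factorial * sigma**k

def quadrator_bias(coeffs, sigma):
    """(13.81): bias of (13.59) when x^2 is replaced by g(x) = sum_k coeffs[k] * x**k."""
    mean = sum(a * gaussian_moment(k, sigma) for k, a in enumerate(coeffs))   # (13.80)
    return mean - sigma**2

coeffs = [0.01, 0.0, 0.98, 0.0, 0.02]    # hypothetical quadrator characteristic a_0 ... a_4
sigma = 1.2
rng = np.random.default_rng(9)
x = sigma * rng.standard_normal(1_000_000)
g = np.polynomial.polynomial.polyval(x, coeffs)   # coefficients in increasing powers
print(quadrator_bias(coeffs, sigma), g.mean() - sigma**2)
```

Even a 2% quartic term contributes $3a_4\sigma^4$, which grows faster with σ than the gain error $\sigma^2(a_2-1)$, so the bias is level-dependent and cannot be removed by a single calibration factor.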

A deviation of the transformation characteristic from the square-law function can lead to large errors in determining the stochastic process variance. Therefore, when determining the stochastic process variance, we need to pay serious attention to the transformation characteristic. The transformation characteristic is, as a rule, verified by applying a harmonic signal of known amplitude to the measurer input.

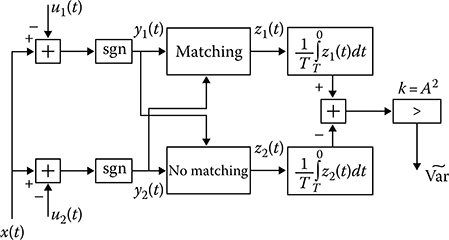

When measuring the stochastic process variance, we can avoid the squaring operation. For this purpose, instead of the initial stochastic process ξ(t) with zero mathematical expectation, we consider two sign functions (12.208)

$$\begin{cases}\eta_1(t)=\mathrm{sgn}[\xi(t)-\mu_1(t)],\\ \eta_2(t)=\mathrm{sgn}[\xi(t)-\mu_2(t)],\end{cases} \tag{13.84}$$

where $\mu_1(t)$ and $\mu_2(t)$ are additional independent stationary stochastic processes with zero mathematical expectations and the same pdf given by (12.205). The condition (12.206) is also assumed to be satisfied.

The functions $\eta_1(t)$ and $\eta_2(t)$ are stationary stochastic processes with zero mathematical expectations. For a fixed value ξ(t) = x, the conditional stochastic processes $\eta_1(t|x)$ and $\eta_2(t|x)$ are statistically independent, that is,

$$\langle\eta_1(t|x)\,\eta_2(t|x)\rangle=\langle\eta_1(t|x)\rangle\langle\eta_2(t|x)\rangle. \tag{13.85}$$

Taking into account (12.209), we obtain

$$\langle\eta_1(t|x)\,\eta_2(t|x)\rangle=\frac{x^2}{A^2}. \tag{13.86}$$

The unconditional mathematical expectation of the product is

$$\langle\eta_1(t)\,\eta_2(t)\rangle=\frac{1}{A^2}\int_{-\infty}^{\infty}x^2p(x)\,dx=\frac{\sigma^2}{A^2}. \tag{13.87}$$

Denote the realizations of the stochastic processes $\eta_1(t)$ and $\eta_2(t)$ by $y_1(t)$ and $y_2(t)$, respectively. Consequently, if we take the value

$$\widetilde{\mathrm{Var}}=\frac{A^2}{T}\int_0^T y_1(t)\,y_2(t)\,dt \tag{13.88}$$

as the variance estimate, then the variance estimate is unbiased.

The realizations $y_1(t)$ and $y_2(t)$ take the values ±1. For this reason, the multiplication and integration in (13.88) can be replaced by separate integration of new unit functions obtained from the coincidence and noncoincidence of the polarities of the realizations $y_1(t)$ and $y_2(t)$:

$$\int_0^T y_1(t)\,y_2(t)\,dt=\int_0^T z_1(t)\,dt-\int_0^T z_2(t)\,dt, \tag{13.89}$$

where

$$z_1(t)=\begin{cases}1, & \text{if } y_1(t)>0,\ y_2(t)>0 \ \text{ or } \ y_1(t)<0,\ y_2(t)<0;\\ 0, & \text{otherwise};\end{cases} \tag{13.90}$$

$$z_2(t)=\begin{cases}1, & \text{if } y_1(t)>0,\ y_2(t)<0 \ \text{ or } \ y_1(t)<0,\ y_2(t)>0;\\ 0, & \text{otherwise}.\end{cases} \tag{13.91}$$

FIGURE 13.4 Measurer based on additional signals.

The flowchart of the measuring device based on the use of additional signals is shown in Figure 13.4.

In the case of a discrete process, the variance estimate takes the form

$$\widetilde{\mathrm{Var}}=\frac{A^2}{N}\sum_{i=1}^{N}y_{1i}\,y_{2i}, \tag{13.92}$$

where $y_{1i} = y_1(t_i)$ and $y_{2i} = y_2(t_i)$. In this case, the integrator in Figure 13.4 can be replaced by a summator.

Determine the variance of the variance estimate ˜Var applied to investigation of stochastic process at discrete instants for the purpose of simplifying further analysis that the samples y1i and y2i are independent. Otherwise, we should know the two-dimensional pdfs of additional uniformly distributed stochastic processes μ1(t) and μ2(t). The variance of the variance estimate can be presented in the following form:

Var{˜Var}=A4N2N∑i=1,j=1〈y1iy1jy2iy2j〉−〈˜Var〉2.(13.93)

In double sum, we can select the terms with i = j. Then

N∑i=1,j=1〈y1iy1jy2iy2j〉=N∑i=1〈(y1iy2i)2〉+N∑i=1,j=1,i≠j〈y1iy1jy2iy2j〉,(13.94)

where

〈y1iy1jy2iy2j〉=〈η1iη1jη2iη2j〉.(13.95)

Based on a definition of sign functions, we can obtain from (13.84) the following condition:

$$[y_1(t)\,y_2(t)]^2 = 1. \tag{13.96}$$

Let us define the conditional mixed moment 〈η1iη1jη2iη2j | xi, xj〉 under the condition that ξ(ti) = xi and ξ(tj) = xj. Taking into account the statistical independence of the samples at different instants, the mutual statistical independence of the random variables η1(t|x) and η2(t|x), and (12.209), the conditional mixed moment can be presented in the following form:

$$\langle \eta_{1i}\eta_{1j}\eta_{2i}\eta_{2j}\mid x_i, x_j\rangle = \frac{x_i^2\,x_j^2}{A^4}. \tag{13.97}$$

Averaging (13.97) over the possible values of the independent random variables xi and xj, we obtain

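$$\langle \eta_{1i}\eta_{1j}\eta_{2i}\eta_{2j}\rangle = \frac{\langle x_i^2 x_j^2\rangle}{A^4} = \frac{\langle x_i^2\rangle\langle x_j^2\rangle}{A^4} = \frac{\sigma^4}{A^4}. \tag{13.98}$$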

Substituting (13.96) and (13.98) into (13.94) and then into (13.93), with 〈˜Var〉 = σ², we obtain the variance of the variance estimate (13.92):

$$\mathrm{Var}\{\widetilde{\mathrm{Var}}\} = \frac{A^4}{N}\left(1 - \frac{\sigma^4}{A^4}\right). \tag{13.99}$$
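The result (13.99) can be checked by simulation. The sketch below draws independent Gaussian samples, forms the polarities y1i and y2i with two independent uniform additional signals, and computes (13.92) by counting coincidences and noncoincidences of polarity as in (13.89). The sign convention y = sgn(x + μ) with μ uniform on [−A, A], the function names, and the parameter values are assumptions made for illustration; A is chosen large compared with σ so that |x| ≤ A holds with overwhelming probability.

```python
import random
import statistics


def dither_variance_estimate(xs, A, rng):
    """Estimate (13.92) from the polarities of x + mu1 and x + mu2.

    Assumed (hypothetical) convention: y_k = sgn(x + mu_k), mu_k uniform
    on [-A, A], so that E[y_k | x] = x / A whenever |x| <= A.
    """
    coincide = 0  # z1 = 1: the polarities of y1 and y2 coincide
    differ = 0    # z2 = 1: the polarities differ
    for x in xs:
        y1 = 1 if x + rng.uniform(-A, A) > 0 else -1
        y2 = 1 if x + rng.uniform(-A, A) > 0 else -1
        if y1 == y2:
            coincide += 1
        else:
            differ += 1
    # sum of y1*y2 equals (#coincidences) - (#noncoincidences), cf. (13.89)
    return A * A * (coincide - differ) / len(xs)


def simulate(sigma=1.0, A=4.0, N=200, trials=2000, seed=1):
    """Monte Carlo mean and variance of the estimate over independent runs."""
    rng = random.Random(seed)
    estimates = []
    for _ in range(trials):
        xs = [rng.gauss(0.0, sigma) for _ in range(N)]  # independent samples
        estimates.append(dither_variance_estimate(xs, A, rng))
    return statistics.fmean(estimates), statistics.variance(estimates)


if __name__ == "__main__":
    sigma, A, N = 1.0, 4.0, 200
    mean, var = simulate(sigma, A, N)
    predicted = A ** 4 / N * (1.0 - sigma ** 4 / A ** 4)  # (13.99)
    print(f"mean {mean:.3f} (true {sigma ** 2}), variance {var:.3f} (13.99 gives {predicted:.3f})")
```

The sample mean should lie close to σ² (the estimate is unbiased), and the sample variance close to (13.99), up to Monte Carlo scatter.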

The variance estimate (13.92) requires that the condition (12.206) be satisfied; consequently, the inequality σ² ≪ A² must hold. Because of this, as in the estimation of the mathematical expectation with additional signals, the variance of the variance estimate ˜Var is determined essentially by the half-width A of the interval of possible values of the additional random sequences. Comparing the variance (13.99) with the variance (13.76) of the variance estimate obtained from N independent samples, we find that

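$$\frac{\mathrm{Var}\{\widetilde{\mathrm{Var}}\}}{\mathrm{Var}\{\mathrm{Var}^*\}} = \frac{A^4}{2\sigma^4}\left(1 - \frac{\sigma^4}{A^4}\right). \tag{13.100}$$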

Thus, the method of measuring the stochastic process variance with additional signals yields a higher variance of the estimate than the algorithm (13.74). Consider, for example, a stochastic sample with the uniform pdf coinciding with (12.205), for which σ² = A²/3. In this case, we have

$$\frac{\mathrm{Var}\{\widetilde{\mathrm{Var}}\}}{\mathrm{Var}\{\mathrm{Var}^*\}} = 4. \tag{13.101}$$
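The arithmetic behind (13.101) can be reproduced directly from (13.100). The function name variance_ratio is hypothetical; for a uniform pdf on [−A, A] the ratio does not depend on A.

```python
def variance_ratio(sigma2, A):
    """Ratio (13.100): additional-signal estimate versus N independent-sample estimate."""
    return A ** 4 / (2.0 * sigma2 ** 2) * (1.0 - sigma2 ** 2 / A ** 4)


A = 2.0
# uniform pdf on [-A, A]: sigma^2 = A^2 / 3, which reproduces (13.101)
print(variance_ratio(A ** 2 / 3.0, A))
```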

The methods considered so far assume that the instantaneous values of the investigated stochastic process are not limited. Limitation of these values introduces additional errors in the variance measurement. Let us determine the bias of the variance estimate of a Gaussian stochastic process with zero mathematical expectation and true variance σ² when a limiter of the type (12.151), shown in Figure 12.6, is present. The variance estimate is defined by (13.41), and the ideal integrator h(t) = T⁻¹ serves as the averaging (smoothing) filter. Substituting the realization y(t) = g[x(t)] into (13.41) instead of x(t) and averaging the result over x(t), we can write the bias of the variance estimate of the Gaussian stochastic process in the following form:

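$$b\{\mathrm{Var}^*\} = \int_{-\infty}^{\infty} g^2(x)\,p(x)\,dx - \sigma^2 = -2\sigma^2\left\{(1 - \gamma^2)\,Q(\gamma) + \frac{\gamma}{\sqrt{2\pi}}\exp\{-0.5\gamma^2\}\right\}, \tag{13.102}$$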

FIGURE 13.5 Bias of variance estimate of Gaussian stochastic process as a function of the normalized limitation level.

where

$$\gamma = \frac{a}{\sigma} \tag{13.103}$$

is the normalized limitation level. For γ ≫ 1, the bias of the variance estimate tends to zero, as expected. The bias of the variance estimate of a Gaussian stochastic process as a function of the normalized limitation level is shown in Figure 13.5.
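A quick numerical check of the limiter bias is sketched below. It assumes the ideal limiter g(x) = x for |x| ≤ a and g(x) = ±a otherwise, evaluates the closed form −2σ²{(1 − γ²)Q(γ) + γφ(γ)}, where Q is the Gaussian tail probability and φ the standard Gaussian pdf, and compares it with a direct trapezoidal evaluation of the integral in (13.102). The bias comes out negative for every γ, as it must, since clipping can only reduce x². Function names and grid settings are illustrative choices.

```python
import math


def gauss_q(x):
    """Gaussian tail probability Q(x) = P{N(0, 1) > x}."""
    return 0.5 * math.erfc(x / math.sqrt(2.0))


def clipping_bias(gamma, sigma2=1.0):
    """Closed-form bias of the variance estimate behind a limiter at a = gamma * sigma."""
    phi = math.exp(-0.5 * gamma * gamma) / math.sqrt(2.0 * math.pi)  # N(0, 1) pdf at gamma
    return -2.0 * sigma2 * ((1.0 - gamma * gamma) * gauss_q(gamma) + gamma * phi)


def clipping_bias_numeric(gamma, sigma=1.0, steps=24000, span=12.0):
    """Trapezoidal evaluation of int g^2(x) p(x) dx - sigma^2 over [-span*sigma, span*sigma]."""
    a = gamma * sigma
    lo, hi = -span * sigma, span * sigma
    h = (hi - lo) / steps
    total = 0.0
    for k in range(steps + 1):
        x = lo + k * h
        g = max(-a, min(a, x))  # ideal limiter: g(x) = x for |x| <= a, else +-a
        p = math.exp(-0.5 * (x / sigma) ** 2) / (sigma * math.sqrt(2.0 * math.pi))
        weight = 0.5 if k in (0, steps) else 1.0
        total += weight * g * g * p
    return total * h - sigma * sigma


for gamma in (0.5, 1.0, 2.0, 3.0):
    print(gamma, clipping_bias(gamma), clipping_bias_numeric(gamma))
```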

13.4 Estimate of Time-Varying Stochastic Process Variance

To determine the current value of the variance of a nonstationary stochastic process, we need a set of realizations xi(t) of this process. Then the variance estimate of the stochastic process at the instant t0 can be presented in the following form:

$$\mathrm{Var}^*(t_0) = \frac{1}{N}\sum_{i=1}^{N}\left[x_i(t_0) - E(t_0)\right]^2, \tag{13.104}$$

where

N is the number of realizations xi(t) of stochastic process

E(t0) is the mathematical expectation of stochastic process at the instant t0

As (13.104) shows, the variance estimate is unbiased, and for N independent realizations the variance of the variance estimate can be presented in the following form:

$$\mathrm{Var}\{\mathrm{Var}^*(t_0)\} = \frac{\mathrm{Var}^2(t_0)}{N}; \tag{13.105}$$

that is, the variance estimate (13.104) is consistent.

In most cases, the researcher has to estimate the variance from a single realization of the stochastic process. Estimating a time-varying variance from a single realization of a time-varying stochastic process raises the same problems as estimating a time-varying mathematical expectation. On the one hand, to decrease the variance of the estimate caused by the finite observation time interval, this interval must be as long as possible. On the other hand, the integration time must be as short as possible to follow the variations in the variance. Evidently, a compromise is necessary.

The simplest way to determine the time-varying variance of a stochastic process at the instant t0 is to average the transformed input data within a finite time interval. Let x(t) be a realization of the stochastic process ξ(t) with zero mathematical expectation. The variance at the instant t0 is measured by averaging the squared ordinates x²(t) over the interval [t0 − 0.5T, t0 + 0.5T] centered on the given value of the argument. In this case, the variance estimate takes the following form:

$$\mathrm{Var}^*(t_0,T) = \frac{1}{T}\int_{t_0-0.5T}^{t_0+0.5T} x^2(t)\,dt = \frac{1}{T}\int_{-0.5T}^{0.5T} x^2(t + t_0)\,dt. \tag{13.106}$$

Averaging the variance estimate over realizations, we obtain

$$\langle\mathrm{Var}^*(t_0,T)\rangle = \frac{1}{T}\int_{-0.5T}^{0.5T}\mathrm{Var}(t + t_0)\,dt. \tag{13.107}$$

Thus, as in the case of a time-varying mathematical expectation, the estimate of a time-varying variance does not, in general, coincide with its true value; it is the variance smoothed over the finite time interval [t0 − 0.5T, t0 + 0.5T]. As a result of this averaging, the bias of the variance estimate takes the following form:

$$b\{\mathrm{Var}^*(t_0,T)\} = \frac{1}{T}\int_{-0.5T}^{0.5T}\left[\mathrm{Var}(t + t_0) - \mathrm{Var}(t_0)\right]dt. \tag{13.108}$$

For the estimate to be unbiased, the deviation Var(t0 + t) − Var(t0) within the time interval [t0 − 0.5T, t0 + 0.5T] must be an odd function of t, in the simplest case a linear function of time:

$$\mathrm{Var}(t_0 + t) \approx \mathrm{Var}(t_0) + \mathrm{Var}'(t_0)\,t, \qquad -0.5T \le t \le 0.5T. \tag{13.109}$$

Let us evaluate how the deviation of the current variance Var(t) from a linear function affects its estimate. We assume that the estimated current variance has continuous first and second derivatives with respect to time. Then, according to Taylor's formula, we can write

$$\mathrm{Var}(t) = \mathrm{Var}(t_0) + (t - t_0)\,\mathrm{Var}'(t_0) + 0.5\,(t - t_0)^2\,\mathrm{Var}''[t_0 + \theta(t - t_0)], \tag{13.110}$$

where 0 < θ < 1. Substituting (13.110) into (13.108) and noting that the linear term vanishes after integration over the symmetric interval, we obtain

$$b\{\mathrm{Var}^*(t_0,T)\} = \frac{1}{2T}\int_{-0.5T}^{0.5T} t^2\,\mathrm{Var}''(t_0 + \theta t)\,dt. \tag{13.111}$$

Denoting by MVar the maximum absolute value of the second derivative of the current variance Var(t) with respect to time, we obtain an upper bound on the absolute value of the variance estimate bias:

$$|b\{\mathrm{Var}^*(t_0,T)\}| \le \frac{T^2 M_{\mathrm{Var}}}{24}. \tag{13.112}$$

The maximum value of the second derivative of the time-varying variance Var(t) can usually be evaluated from an analysis of the specific physical problem.
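To illustrate (13.108) and the bound (13.112), the sketch below evaluates the bias of the sliding-window estimate for a hypothetical variance profile Var(t) = 1 + 0.5 sin(ωt), for which MVar = 0.5ω². The midpoint-rule integral is compared with the closed-form window average of the sine, 0.5 sin(ωt0)[(2/(ωT)) sin(0.5ωT) − 1]; the profile, t0, and the window lengths are illustrative assumptions.

```python
import math


def window_bias(var, t0, T, steps=2000):
    """Bias (13.108) of the sliding-window estimate (13.106), by the midpoint rule."""
    h = T / steps
    acc = 0.0
    for k in range(steps):
        t = -0.5 * T + (k + 0.5) * h
        acc += var(t0 + t) - var(t0)
    return acc * h / T


w = 1.0          # hypothetical angular frequency of the variance profile
M = 0.5 * w * w  # maximum of |Var''(t)| for this profile
t0 = 1.2


def var(t):
    """Hypothetical time-varying variance Var(t) = 1 + 0.5 sin(w t)."""
    return 1.0 + 0.5 * math.sin(w * t)


for T in (0.5, 1.0, 2.0, 4.0):
    b = window_bias(var, t0, T)
    exact = 0.5 * math.sin(w * t0) * (2.0 / (w * T) * math.sin(0.5 * w * T) - 1.0)
    bound = T * T * M / 24.0  # (13.112)
    print(f"T={T}: bias={b:.6f} exact={exact:.6f} bound={bound:.6f}")
```

The bias grows roughly as T² for short windows and stays inside the bound (13.112) for every window length.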

To evaluate the variance of the estimate of a time-varying variance, we represent the square of the investigated realization of the stochastic process as the sum of two terms:

$$\xi^2(t) = \mathrm{Var}(t) + \zeta(t), \tag{13.113}$$

where

Var(t) is the true value of the stochastic process variance at the instant t

ζ(t) is the fluctuation of the squared stochastic process with respect to its mathematical expectation at the same instant t

Then the variance of the variance estimate of the investigated nonstationary stochastic process can be presented in the following form:

$$\mathrm{Var}\{\mathrm{Var}^*(t_0,T)\} = \frac{1}{T^2}\int_{-0.5T}^{0.5T}\!\!\int_{-0.5T}^{0.5T} R_\zeta(t_1 + t_0, t_2 + t_0)\,dt_1\,dt_2, \tag{13.114}$$

where

$$R_\zeta(t_1,t_2) = \left\langle [x^2(t_1) - \mathrm{Var}(t_1)]\,[x^2(t_2) - \mathrm{Var}(t_2)]\right\rangle \tag{13.115}$$

is the correlation function of the random component of the squared stochastic process, that is, of its deviation from the variance Var(t).

To approximate the variance of the estimate of a slowly time-varying variance, we can assume that within the finite time interval [t0 − 0.5T, t0 + 0.5T] the centered stochastic process ζ(t) is stationary with the correlation function

$$R_\zeta(t, t + \tau) \approx \mathrm{Var}_\zeta(t_0)\,\mathcal{R}_\zeta(\tau). \tag{13.116}$$

With the approximation (13.116) and the change of variable τ = t2 − t1, the variance of the variance estimate (13.114) takes the following form:

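$$\mathrm{Var}\{\mathrm{Var}^*(t_0,T)\} = \mathrm{Var}_\zeta(t_0)\,\frac{2}{T}\int_0^T\left(1 - \frac{\tau}{T}\right)\mathcal{R}_\zeta(\tau)\,d\tau. \tag{13.117}$$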

For a Gaussian stochastic process under these assumptions, we can write

$$R_\zeta(t, t + \tau) \approx 2\sigma^4(t_0)\,\mathcal{R}^2(\tau), \tag{13.118}$$

and the variance of the variance estimate at the instant t0 takes the following form:

$$\mathrm{Var}\{\mathrm{Var}^*(t_0,T)\} = \frac{4\sigma^4(t_0)}{T}\int_0^T\left(1 - \frac{\tau}{T}\right)\mathcal{R}^2(\tau)\,d\tau. \tag{13.119}$$
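For a concrete case, assume (hypothetically) ℛ(τ) = exp{−α|τ|}. Then the integral in (13.119) has the closed form σ⁴(t0)[2αT − 1 + exp{−2αT}]/(αT)², which tends to 2σ⁴(t0)/(αT) for αT ≫ 1. The sketch checks the closed form against direct numerical integration; function names and parameter values are illustrative.

```python
import math


def var_estimate_numeric(sigma2, alpha, T, steps=4000):
    """(13.119) with R(tau) = exp(-alpha*|tau|), evaluated by the midpoint rule."""
    h = T / steps
    acc = 0.0
    for k in range(steps):
        tau = (k + 0.5) * h
        acc += (1.0 - tau / T) * math.exp(-2.0 * alpha * tau)  # (1 - tau/T) R^2(tau)
    return 4.0 * sigma2 ** 2 / T * acc * h


def var_estimate_closed(sigma2, alpha, T):
    """Closed form of the same integral for the exponential correlation function."""
    x = 2.0 * alpha * T
    return sigma2 ** 2 * (x - 1.0 + math.exp(-x)) / (alpha * T) ** 2


for s2, a, T in ((1.0, 1.0, 0.5), (1.0, 2.0, 10.0), (2.0, 1.0, 50.0)):
    print(s2, a, T, var_estimate_numeric(s2, a, T), var_estimate_closed(s2, a, T))
```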

Consider the characteristics of the estimate of a time-varying variance for the case when the variance of the investigated stochastic process can be represented by the series expansion

$$\mathrm{Var}(t) \approx \sum_{i=1}^{N}\beta_i\,\psi_i(t), \tag{13.120}$$

where

βi are unknown coefficients

ψi(t) are the given functions of time

As the number of terms in the series (13.120) increases, the approximation error can be made arbitrarily small. Similarly to (12.258), the coefficients βi are defined by the system of linear equations

$$\sum_{i=1}^{N}\beta_i\int_0^T\psi_i(t)\,\psi_j(t)\,dt = \int_0^T\mathrm{Var}(t)\,\psi_j(t)\,dt, \qquad j = 1, 2, \ldots, N, \tag{13.121}$$

which follow from the condition of minimum mean square error of the approximation (13.120). Thus, the problem of estimating the stochastic process variance from a single realization observed within the interval [0, T] reduces to estimating the coefficients βi of the series (13.120). The bias and the variance of the variance estimate caused by errors in measuring the coefficients βi take the following form:

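$$b\{\mathrm{Var}^*(t)\} = \mathrm{Var}(t) - \langle\mathrm{Var}^*(t)\rangle = \sum_{i=1}^{N}\psi_i(t)\left[\beta_i - \langle\beta_i^*\rangle\right], \tag{13.122}$$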

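$$\mathrm{Var}\{\mathrm{Var}^*(t)\} = \sum_{i=1}^{N}\sum_{j=1}^{N}\psi_i(t)\,\psi_j(t)\,\langle(\beta_i - \beta_i^*)(\beta_j - \beta_j^*)\rangle. \tag{13.123}$$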

The bias and the variance of the variance estimate averaged within the limits of the time interval of observation of stochastic process can be presented in the following form, respectively:

$$b\{\mathrm{Var}^*\} = \frac{1}{T}\sum_{i=1}^{N}\left[\beta_i - \langle\beta_i^*\rangle\right]\int_0^T\psi_i(t)\,dt, \tag{13.124}$$

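$$\mathrm{Var}\{\mathrm{Var}^*\} = \frac{1}{T}\sum_{i=1}^{N}\sum_{j=1}^{N}\langle(\beta_i - \beta_i^*)(\beta_j - \beta_j^*)\rangle\int_0^T\psi_i(t)\,\psi_j(t)\,dt. \tag{13.125}$$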

The values minimizing the function

$$\varepsilon^2(\beta_1,\beta_2,\ldots,\beta_N) = \frac{1}{T}\int_0^T\left\{x^2(t) - \sum_{i=1}^{N}\beta_i\,\psi_i(t)\right\}^2 dt \tag{13.126}$$

can be taken as the estimates of the coefficients βi. As (13.126) shows, the coefficients βi can be estimated in this way if it is known a priori that Var(t) and ψi(t) vary slowly in time compared with the average rate of variation of the component

$$z(t) = x^2(t) - \mathrm{Var}(t). \tag{13.127}$$

The function z(t) is a realization of the stochastic process ζ(t) and has zero mathematical expectation. In other words, (13.126) is valid for determining the coefficients βi if the frequency band ΔfVar of the time-varying variance is narrower than the effective spectral bandwidth of the stochastic process ζ(t). This corresponds to the case when the correlation function of the investigated stochastic process can be written in the following form:

$$R(t, t + \tau) \approx \mathrm{Var}(t)\,\mathcal{R}(\tau). \tag{13.128}$$

The following stochastic process corresponds to this correlation function:

$$\xi(t) = a(t)\,\eta(t), \tag{13.129}$$

where

η(t) is the stationary stochastic process

a(t) is a deterministic function that varies slowly in time compared with η(t)

From the condition of minimum of the function ε², that is,

$$\frac{\partial\varepsilon^2}{\partial\beta_m} = 0, \tag{13.130}$$

we obtain the system of equations to estimate the coefficients βm

$$\sum_{i=1}^{N}\beta_i^*\int_0^T\psi_i(t)\,\psi_m(t)\,dt = \int_0^T x^2(t)\,\psi_m(t)\,dt, \qquad m = 1, 2, \ldots, N. \tag{13.131}$$

Denote

$$\int_0^T\psi_i(t)\,\psi_m(t)\,dt = c_{im}; \tag{13.132}$$

$$\int_0^T x^2(t)\,\psi_m(t)\,dt = y_m. \tag{13.133}$$

Then

$$\beta_m^* = \frac{1}{A}\sum_{i=1}^{N}A_{im}\,y_i = \frac{A_m}{A}, \qquad m = 1, 2, \ldots, N, \tag{13.134}$$

where the determinant A of the system of linear equations (13.131) and the determinant Am are defined in accordance with (12.270) and (12.271).
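The estimation procedure (13.131) to (13.134) can be sketched numerically. The code below forms cim and ym by the rectangle rule on a sampled realization and solves the linear system by Gaussian elimination, which gives the same solution as the determinant ratio (13.134) and is the usual numerical choice. The polynomial basis 1, t/T, (t/T)², the coefficients, and the white-noise model x(t) = √Var(t)·w(t) are hypothetical.

```python
import random


def solve_linear(c, y):
    """Solve sum_i c[m][i] * b[i] = y[m] by Gaussian elimination with partial pivoting."""
    n = len(y)
    aug = [list(row) + [y[r]] for r, row in enumerate(c)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[piv] = aug[piv], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for k in range(col, n + 1):
                aug[r][k] -= factor * aug[col][k]
    b = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = aug[r][n] - sum(aug[r][k] * b[k] for k in range(r + 1, n))
        b[r] = s / aug[r][r]
    return b


def estimate_coefficients(x_squared, ts, dt, basis):
    """Form c_im (13.132) and y_m (13.133) with the rectangle rule, then solve (13.131)."""
    psi = [[f(t) for t in ts] for f in basis]
    n = len(basis)
    c = [[sum(u * v for u, v in zip(psi[m], psi[i])) * dt for i in range(n)]
         for m in range(n)]
    y = [sum(v * p for v, p in zip(x_squared, psi[m])) * dt for m in range(n)]
    return solve_linear(c, y)


# Hypothetical setup: T = 1, polynomial basis, Var(t) = 1 + 0.5 t - 0.3 t^2
T, K = 1.0, 20000
dt = T / K
ts = [(k + 0.5) * dt for k in range(K)]
basis = [lambda t: 1.0, lambda t: t / T, lambda t: (t / T) ** 2]
beta_true = [1.0, 0.5, -0.3]
var = [sum(b * f(t) for b, f in zip(beta_true, basis)) for t in ts]

print(estimate_coefficients(var, ts, dt, basis))  # noise-free input returns beta_true
rng = random.Random(5)
# x(t) = sqrt(Var(t)) * w(t) with independent unit Gaussian samples w
x_squared = [v * rng.gauss(0.0, 1.0) ** 2 for v in var]
print(estimate_coefficients(x_squared, ts, dt, basis))  # noisy estimates beta*_m
```

Because the constant function belongs to the basis, the reconstructed Var*(t) has exactly the same time average as x²(t) on the sampling grid, while the individual coefficients scatter more, reflecting the conditioning of the cim matrix.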

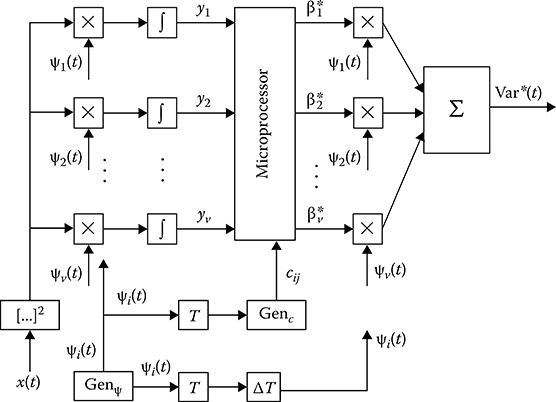

The flowchart of the measurer of the time-varying variance Var(t) of the investigated stochastic process is shown in Figure 13.6. The measurer operates as follows. The coefficients cij are produced by the generator “Genc” in accordance with (13.132) from the functions ψi(t) issued by the generator “Genψ.” The microprocessor system solves the system of linear equations for the estimates β*i of the coefficients βi using the coefficients cij and the values yi obtained according to (13.133). The estimate Var*(t) of the time-varying variance is then formed from these data. The variance estimate is delayed by T + ΔT with respect to the true value: the delay T is needed to compute the values yi, and ΔT to solve the system of N linear equations. The delay blocks denoted T and ΔT serve this purpose. Determination of the estimates β*i is substantially simplified if the functions ψi(t) are orthonormal:

$$\int_0^T\psi_i(t)\,\psi_j(t)\,dt = \begin{cases}1 & \text{if } i = j,\\ 0 & \text{if } i \neq j.\end{cases} \tag{13.135}$$

FIGURE 13.6 Measurer of variance in time.

In this case, the estimates of the series coefficients are obtained by simply integrating the squared realization of the stochastic process with the corresponding weight function ψm(t):

$$\beta_m^* = \int_0^T x^2(t)\,\psi_m(t)\,dt. \tag{13.136}$$

The flowchart in Figure 13.6 is then substantially simplified, because there is no need to generate the coefficients cij or to solve the system of linear equations. The number of delay blocks decreases as well.
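With orthonormal functions, (13.136) replaces the linear system by plain projections. The sketch uses orthonormal shifted Legendre polynomials on [0, T] as a hypothetical choice of ψi(t) and checks that projecting a variance that lies in their span returns its coefficients; the coefficient values are illustrative.

```python
import math


def legendre_basis(T):
    """Orthonormal shifted Legendre polynomials on [0, T] (a hypothetical choice of psi_i)."""
    return [
        lambda t: 1.0 / math.sqrt(T),
        lambda t: math.sqrt(3.0 / T) * (2.0 * t / T - 1.0),
        lambda t: math.sqrt(5.0 / T) * (6.0 * (t / T) ** 2 - 6.0 * t / T + 1.0),
    ]


def project(values, ts, dt, basis):
    """beta*_m = integral of values(t) * psi_m(t) dt, cf. (13.136), by the midpoint rule."""
    return [sum(v * f(t) for v, t in zip(values, ts)) * dt for f in basis]


T, K = 1.0, 20000
dt = T / K
ts = [(k + 0.5) * dt for k in range(K)]
basis = legendre_basis(T)
beta_true = [1.0, 0.2, -0.1]
var = [sum(b * f(t) for b, f in zip(beta_true, basis)) for t in ts]
print(project(var, ts, dt, basis))  # close to beta_true, no linear system needed
```

Replacing var by sampled values of x²(t) gives the estimates (13.136) themselves.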

Let us determine the bias of the estimates β*m and the mutual correlation functions between the estimates β*m and β*q. Taking into account (13.127), we can rewrite (13.133) in the following form:

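$$y_i = \int_0^T z(t)\,\psi_i(t)\,dt + \int_0^T\mathrm{Var}(t)\,\psi_i(t)\,dt = g_i + \beta_m c_{im} + \sum_{\substack{q=1\\ q\neq m}}^{N}\beta_q c_{iq}, \tag{13.137}$$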

where

$$g_i = \int_0^T z(t)\,\psi_i(t)\,dt. \tag{13.138}$$

As in Section 12.7, we can write the estimate β*m of the coefficients in the following form:

$$\beta_m^* = \frac{1}{A}\sum_{i=1}^{N}g_i\,A_{im} + \beta_m, \qquad m = 1, 2, \ldots, N, \tag{13.139}$$

where Aim are the algebraic complements of the determinant Am ≡ Bm (12.276), in which cip and gi are given by (13.132) and (13.138), respectively.

Since 〈ζ(t)〉 = 0, we have 〈gi〉 = 0; consequently, the estimates β*m of the coefficients of the series (13.120) are unbiased. The correlation functions R(β*m, β*q) and the variances Var(β*m) are defined by formulas analogous to (12.333) and (12.334), in which

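$$B_{ij} = \int_0^T\!\!\int_0^T\langle z(t_1)\,z(t_2)\rangle\,\psi_i(t_1)\,\psi_j(t_2)\,dt_1\,dt_2 = \int_0^T\!\!\int_0^T R_\zeta(t_1,t_2)\,\psi_i(t_1)\,\psi_j(t_2)\,dt_1\,dt_2. \tag{13.140}$$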

For a Gaussian stochastic process with the correlation function R(t1, t2), it can be shown that

$$R_\zeta(t_1,t_2) = 2R^2(t_1,t_2). \tag{13.141}$$

If the functions ψi(t) are orthonormal, the correlation function of the estimates β*m and β*q of the coefficients is defined, by analogy with (12.338), as

$$R(\beta_m^*,\beta_q^*) = B_{mq}. \tag{13.142}$$

In this case, the current and averaged variances of the time-varying variance estimate can be written, as in (12.339) and (12.240), in the following form:

$$\mathrm{Var}\{\mathrm{Var}^*(t)\} = \sum_{i=1}^{N}\sum_{j=1}^{N}B_{ij}\,\psi_i(t)\,\psi_j(t), \tag{13.143}$$

$$\mathrm{Var}\{\mathrm{Var}^*\} = \frac{1}{T}\sum_{i=1}^{N}B_{ii}. \tag{13.144}$$

If, in addition to orthonormality of the functions ψi(t), we assume that the frequency band of the time-varying variance is much narrower than the effective spectral bandwidth of the investigated stochastic process ξ(t), the correlation function of the centered stochastic process ζ(t) can be approximated as

$$R_\zeta(t_1,t_2) \approx \mathrm{Var}(t_1)\,\delta(t_1 - t_2). \tag{13.145}$$

Then, in the case of arbitrary functions ψi(t), based on (13.140), we obtain

$$B_{ij} = \int_0^T\mathrm{Var}(t)\,\psi_i(t)\,\psi_j(t)\,dt. \tag{13.146}$$

If the functions ψi(t) are orthonormal and satisfy the homogeneous Fredholm integral equation of the second kind

$$\psi_i(t) = \lambda_i\int_0^T R_\zeta(t,\tau)\,\psi_i(\tau)\,d\tau, \tag{13.147}$$

we obtain from (13.140) that

$$B_{ij} = \begin{cases}\dfrac{1}{\lambda_i} & \text{if } i = j,\\ 0 & \text{if } i \neq j.\end{cases} \tag{13.148}$$

In this case, the variances of the estimates β*m of the coefficients and the current and averaged variances of the variance estimate take the following form:

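$$\mathrm{Var}\{\beta_m^*\} = \frac{1}{\lambda_m}, \tag{13.149}$$

$$\mathrm{Var}\{\mathrm{Var}^*(t)\} = \sum_{i=1}^{N}\frac{\psi_i^2(t)}{\lambda_i}, \tag{13.150}$$

$$\mathrm{Var}\{\mathrm{Var}^*\} = \frac{1}{T}\sum_{i=1}^{N}\frac{1}{\lambda_i}. \tag{13.151}$$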

As (13.151) shows, for a fixed observation interval T, the averaged variance of the time-varying variance estimate increases with the number of terms used to approximate the variance Var(t) by the series (13.120).

13.5 Measurement of Stochastic Process Variance in Noise

Special receivers, called radiometers, are widely used to measure the variance or power of weak noise signals [4]. The minimal detectable increment in the variance of a stochastic signal is defined by the sensitivity threshold. The sensitivity threshold of a method that measures the stochastic process variance is the value of the investigated stochastic process variance that equals the root-mean-square deviation of the measurement results. The sensitivity threshold of a radiometer depends on many factors, including the intrinsic noise and random parameters of the receiver and the finite observation interval of the input stochastic process.

According to the procedure used to measure the stochastic process variance, radiometers are classified into four groups: the compensation method, the method of comparison with the variance of a reference source, the correlation method, and the modulation method. Let us briefly discuss how the variance is measured by each method.

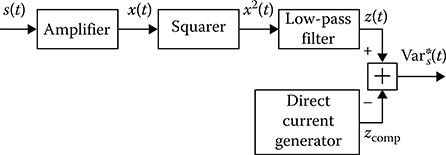

13.5.1 COMPENSATION METHOD OF VARIANCE MEASUREMENT

The flowchart of the compensation method of measuring the stochastic process variance is shown in Figure 13.7. In the compensation method, the additive mixture of the realization s(t) of the investigated stochastic process ζ(t) and the realization n(t) of the noise ς(t) is amplified and squared. The component z(t), which is proportional to the variance of the total signal, is selected by the smoothing low-pass filter (or integrator). The constant component zconst produced by the intrinsic noise of the amplifier is compensated by a constant bias voltage or current. Note that by the amplifier we mean the radio-frequency and intermediate-frequency amplifiers.

In practical implementations of the compensation method, the squarer, low-pass filter, and compensating device are often realized as a single device based on a lattice network, with the square-law transducer included in one branch of this network. The branches of the lattice network are chosen so that the indicator in its diagonal shows zero voltage, for example, when the investigated stochastic process is absent. Slow variations in the variance of the intrinsic receiver noise and random variations of the amplification coefficient upset the compensation balance, which, naturally, decreases the sensitivity of the compensation method. To obtain exact results, we consider the low-pass filter as an ideal integrator with integration time T.

FIGURE 13.7 Compensation method.

Let us determine how the variance of the variance estimate obtained by the compensation method depends on the main characteristics of the stochastic process at the measurer input. We assume that the compensating device cancels the constant component of the amplifier noise without error and that the spectral densities of the investigated stochastic process ζ(t) and the receiver noise ς(t) are uniform within the amplifier bandwidth.

The realization x(t) of stochastic process ξ(t) at the squarer input can be presented in the following form:

$$x(t) = [1 + \upsilon(t)]\,[s(t) + n(t)] \tag{13.152}$$

up to a constant factor equal to the average amplification coefficient, where υ(t) is the realization of the random variations β(t) of the receiver amplification coefficient. Since the amplification coefficient is positive, the pdf of the process 1 + β(t) must also be concentrated on positive values. In practice, weak signals are measured, as a rule, when the variance Varβ of the amplification coefficient variations is small compared with its mathematical expectation, which equals unity in our case. In other words, the condition Varβ ≪ 1 must be satisfied.

Taking into account the foregoing statements and to simplify the analysis, we assume that the stochastic processes ζ(t), ς(t), and β(t) are stationary Gaussian stochastic processes with zero mathematical expectations and the correlation functions

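$$\langle\zeta(t_1)\,\zeta(t_2)\rangle = R_s(t_2 - t_1) = \mathrm{Var}_s\,\mathcal{R}_s(t_2 - t_1) = \mathrm{Var}_s\,r_s(t_2 - t_1)\cos[\omega_0(t_2 - t_1)]; \tag{13.153}$$

$$\langle\varsigma(t_1)\,\varsigma(t_2)\rangle = R_n(t_2 - t_1) = \mathrm{Var}_n\,\mathcal{R}_n(t_2 - t_1) = \mathrm{Var}_n\,r_n(t_2 - t_1)\cos[\omega_0(t_2 - t_1)]; \tag{13.154}$$

$$\langle\beta(t_1)\,\beta(t_2)\rangle = R_\beta(t_2 - t_1) = \mathrm{Var}_\beta\,\mathcal{R}_\beta(t_2 - t_1) = \mathrm{Var}_\beta\,r_\beta(t_2 - t_1). \tag{13.155}$$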

We assume that the measured stochastic process and the receiver noise are narrow-band stochastic processes, whereas the stochastic process β(t) describing the random variations of the amplification coefficient is a low-frequency stochastic process. In addition, we assume that the stochastic processes ζ(t), ς(t), and β(t) are mutually independent.

The realization of the stochastic process at the ideal integrator output can be presented in the following form:

$$z(t) = \frac{1}{T}\int_{t-T}^{t} x^2(t')\,dt'. \tag{13.156}$$

After cancellation of the amplifier noise, the variance estimate of the investigated stochastic process takes the following form:

$$\mathrm{Var}_s^*(t) = z(t) - z_{\mathrm{const}}. \tag{13.157}$$

To determine the sensitivity of the compensation procedure, we need the mathematical expectation and the variance of the estimate z(t). The mathematical expectation is

$$E_z = (1 + \mathrm{Var}_\beta)(\mathrm{Var}_s + \mathrm{Var}_n). \tag{13.158}$$

After the amplifier-noise contribution (1 + Varβ)Varn is cancelled, the mathematical expectation of the output signal equals the true variance of the observed stochastic process up to the factor 1 + Varβ. Thus, random variations of the amplification coefficient give the variance estimate the following bias:

$$b\{\mathrm{Var}_s^*\} = \mathrm{Var}_\beta\,\mathrm{Var}_s. \tag{13.159}$$
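The mean (13.158) and the bias (13.159) can be checked by simulating the compensation scheme. The sketch assumes independent samples (a white-noise simplification of the narrow-band model), Gaussian gain fluctuations, and an exaggerated Varβ = 0.1 so that the bias stands out above the Monte Carlo scatter; the function name and all parameter values are illustrative.

```python
import random


def compensation_estimate(var_s, var_n, var_beta, samples, rng):
    """One run of the compensation scheme with independent (white) samples."""
    acc = 0.0
    for _ in range(samples):
        gain = 1.0 + rng.gauss(0.0, var_beta ** 0.5)  # 1 + upsilon(t)
        x = gain * (rng.gauss(0.0, var_s ** 0.5) + rng.gauss(0.0, var_n ** 0.5))
        acc += x * x                                  # squarer
    z = acc / samples                                 # ideal integrator, cf. (13.156)
    z_const = (1.0 + var_beta) * var_n                # compensated amplifier-noise level
    return z - z_const                                # estimate (13.157)


rng = random.Random(7)
est = compensation_estimate(4.0, 1.0, 0.1, 200_000, rng)
print(est, "expected about", 4.0 * (1.0 + 0.1))  # Var_s plus the bias Var_beta * Var_s
```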

Let us determine the variance of the variance estimate, which limits the sensitivity of the compensation procedure. Since the considered stochastic processes are stationary, we can change the integration limits in (13.156) to 0 and T. In this case, the variance of the variance estimate takes the following form:

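$$\mathrm{Var}\{\mathrm{Var}_s^*\} = \frac{2}{T^2}(1+q)^2\mathrm{Var}_s^2\int_0^T\!\!\int_0^T\left\{[1 + 2\mathcal{R}^2(t_2,t_1)]\,[R_\beta^2(t_2,t_1) + 2R_\beta(t_2,t_1)] + (1 + \mathrm{Var}_\beta)^2\,\mathcal{R}^2(t_2,t_1)\right\}dt_1\,dt_2, \tag{13.160}$$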

where

$$q = \frac{\mathrm{Var}_n}{\mathrm{Var}_s} \tag{13.161}$$

is the ratio of the amplifier noise variance to the variance of the investigated stochastic process within the amplifier bandwidth. The double integral in (13.160) can be reduced to a single integral by introducing new variables and changing the order of integration. Taking into account the condition Varβ ≪ 1 and neglecting the integrals with the double frequency 2ω0, we obtain

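$$\mathrm{Var}\{\mathrm{Var}_s^*\} = \frac{2(1+q)^2\mathrm{Var}_s^2}{T}\int_0^T\left(1 - \frac{\tau}{T}\right)\left[r^2(\tau) + 4r_\beta(\tau)\,\mathrm{Var}_\beta\right]d\tau. \tag{13.162}$$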

The observation time interval corresponding to the sensitivity threshold is determined from the condition

$$D\{\mathrm{Var}_s^*\} = b^2\{\mathrm{Var}_s^*\} + \mathrm{Var}\{\mathrm{Var}_s^*\} = \mathrm{Var}_s^2. \tag{13.163}$$

As applied to the compensation procedure of measurement, we obtain

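$$\mathrm{Var}_\beta^2 + \frac{2(1+q)^2}{T}\int_0^T\left(1 - \frac{\tau}{T}\right)\left[r^2(\tau) + 4r_\beta(\tau)\,\mathrm{Var}_\beta\right]d\tau = 1. \tag{13.164}$$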

As an example, the stochastic processes with exponential normalized correlation functions can be considered:

$$\begin{cases} r(\tau) = \exp\{-\alpha|\tau|\};\\ r_\beta(\tau) = \exp\{-\gamma|\tau|\},\end{cases} \tag{13.165}$$

where α and γ characterize the effective spectral bandwidths of the corresponding stochastic processes. As a result, we have

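$$\mathrm{Var}\{\mathrm{Var}_s^*\} = 2\mathrm{Var}_s^2(1+q)^2\left\{\frac{2\alpha T - 1 + \exp\{-2\alpha T\}}{4\alpha^2T^2} + 4\mathrm{Var}_\beta\,\frac{\gamma T - 1 + \exp\{-\gamma T\}}{\gamma^2T^2}\right\}. \tag{13.166}$$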

The general and particular formulas obtained for the variance of the variance estimate simplify considerably in practice, because the observation interval is much longer than the correlation intervals of the stochastic processes ζ(t) and ς(t), and the amplification coefficient varies much more slowly than these processes; that is, the inequalities αT ≫ 1 and α ≫ γ are satisfied. In this case, (13.162) and (13.166) take, respectively, the following forms:

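$$\mathrm{Var}\{\mathrm{Var}_s^*\} = \frac{2(1+q)^2\mathrm{Var}_s^2}{T}\left\{\int_0^\infty r^2(\tau)\,d\tau + 4\mathrm{Var}_\beta\int_0^T\left(1 - \frac{\tau}{T}\right)r_\beta(\tau)\,d\tau\right\}, \tag{13.167}$$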

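$$\mathrm{Var}\{\mathrm{Var}_s^*\} = \frac{(1+q)^2\mathrm{Var}_s^2}{\alpha T}\left\{1 + 8\frac{\alpha}{\gamma}\mathrm{Var}_\beta\,\frac{\gamma T - 1 + \exp\{-\gamma T\}}{\gamma T}\right\}. \tag{13.168}$$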
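The sketch below evaluates the exact expression (13.166) and the simplified form (13.168) for illustrative parameter values. The gain-fluctuation terms coincide exactly, and the exact value is smaller by 2(1 + q)²Var²s(1 − exp{−2αT})/(2αT)², so the relative discrepancy is of order 1/(2αT).

```python
import math


def var_exact(var_s, q, alpha, gamma, var_beta, T):
    """(13.166) for the exponential correlation functions (13.165)."""
    a = 2.0 * alpha * T
    g = gamma * T
    first = (a - 1.0 + math.exp(-a)) / a ** 2  # a^2 = 4 alpha^2 T^2
    second = 4.0 * var_beta * (g - 1.0 + math.exp(-g)) / g ** 2
    return 2.0 * var_s ** 2 * (1.0 + q) ** 2 * (first + second)


def var_approx(var_s, q, alpha, gamma, var_beta, T):
    """(13.168): the same quantity for alpha*T >> 1 and alpha >> gamma."""
    g = gamma * T
    extra = 8.0 * alpha / gamma * var_beta * (g - 1.0 + math.exp(-g)) / g
    return (1.0 + q) ** 2 * var_s ** 2 / (alpha * T) * (1.0 + extra)


# Illustrative values: q = 2, Var_beta = 0.01, gamma*T = 1, alpha*T increasing
for alpha in (1.0, 10.0, 100.0, 1000.0):
    p = dict(var_s=1.0, q=2.0, alpha=alpha, gamma=1.0, var_beta=0.01, T=1.0)
    print(alpha, var_exact(**p), var_approx(**p))
```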

When the random variations of the amplifier coefficient are absent (Varβ = 0) the variance of the variance estimate can be presented in the following form:

$$\mathrm{Var}\{\mathrm{Var}_s^*\} = \frac{(1+q)^2}{\alpha T}\,\mathrm{Var}_s^2. \tag{13.169}$$

Consequently, random variations of the amplification coefficient increase the variance of the variance estimate of the investigated stochastic process by

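$$\Delta\mathrm{Var}\{\mathrm{Var}_s^*\} = \frac{8(1+q)^2\mathrm{Var}_s^2\,\mathrm{Var}_\beta}{(\gamma T)^2}\left[\gamma T - 1 + \exp\{-\gamma T\}\right]. \tag{13.170}$$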

As (13.170) shows, the additional random errors decrease as the averaging time (the parameter γT) increases; in the limiting case γT ≫ 1, we have

$$\Delta\mathrm{Var}\{\mathrm{Var}_s^*\} = \frac{8(1+q)^2\mathrm{Var}_s^2\,\mathrm{Var}_\beta}{\gamma T}. \tag{13.171}$$

Let T0 be the time required to measure the variance of the investigated stochastic process with a given root-mean-square deviation when random variations of the amplification coefficient are absent, and let Tβ be the time required to achieve the same root-mean-square deviation when these variations are present. Then

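$$\frac{1}{T_0} = \left[1 + 8\frac{\alpha}{\gamma}\mathrm{Var}_\beta\,\frac{\gamma T_\beta - 1 + \exp\{-\gamma T_\beta\}}{\gamma T_\beta}\right]\frac{1}{T_\beta}. \tag{13.172}$$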

FIGURE 13.8 Relative increase of the variance of the variance estimate as a function of the parameter γT at 8(α/γ)Varβ = 1.

The relative increase in the variance of variance estimate

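$$\lambda = \frac{\Delta\mathrm{Var}\{\mathrm{Var}_s^*\}}{\mathrm{Var}\{\mathrm{Var}_s^*\}} = 8\frac{\alpha}{\gamma}\mathrm{Var}_\beta\,\frac{\gamma T - 1 + \exp\{-\gamma T\}}{\gamma T} \tag{13.173}$$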

as a function of the parameter γT for 8(α/γ)Varβ = 1 is shown in Figure 13.8. Since Varβ ≪ 1, this case corresponds to α/γ ≫ 1; that is, the spectral bandwidth of the investigated stochastic processes is much wider than that of the variations in the amplification coefficient. Formula (13.173) simplifies in the two limiting cases γT ≪ 1 and γT ≫ 1. For γT ≪ 1, when the correlation interval of the amplification coefficient is longer than the observation interval, the expansion exp{−γT} ≈ 1 − γT + 0.5(γT)² gives

$$\lambda \approx 4\alpha T\,\mathrm{Var}_\beta. \tag{13.174}$$

In the opposite case, that is, γT ≫ 1, we have

$$\lambda \approx 8\frac{\alpha}{\gamma}\mathrm{Var}_\beta. \tag{13.175}$$
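The relative increase (13.173) and its two limits can be tabulated with a short function. The parametrization κ = 8(α/γ)Varβ is introduced here only for convenience; κ = 1 corresponds to Figure 13.8, and the small-γT limit κγT/2 is the same as 4αTVarβ in (13.174). The expression γT − 1 + exp{−γT} is computed as γT + expm1(−γT) to limit cancellation at small γT.

```python
import math


def relative_increase(kappa, gT):
    """lambda of (13.173) with kappa = 8 (alpha/gamma) Var_beta and gT = gamma*T."""
    # gT - 1 + exp(-gT) written as gT + expm1(-gT) to limit cancellation at small gT
    return kappa * (gT + math.expm1(-gT)) / gT


kappa = 1.0  # the case plotted in Figure 13.8
for gT in (0.01, 0.1, 1.0, 10.0, 100.0):
    small_limit = kappa * gT / 2.0  # (13.174): 4 alpha T Var_beta
    print(gT, relative_increase(kappa, gT), small_limit, kappa)  # kappa is the limit (13.175)
```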

As Figure 13.8 shows, under certain conditions the random variations in the amplification coefficient substantially increase the variance of the variance estimate of the stochastic process; that is, the radiometer sensitivity decreases.

Setting Var{Var*s} = Var²s in (13.169), in accordance with (13.163) at Varβ = 0, we obtain the observation interval corresponding to the sensitivity threshold:

$$T = \frac{(1+q)^2}{\alpha}. \tag{13.176}$$

As (13.176) shows, the required observation interval grows rapidly (as (1 + q)²) with the variance of the amplifier noise. The intrinsic noise of the amplifier can be represented as the product of two independent stationary Gaussian stochastic processes with zero mathematical expectations: the narrow-band stochastic process χ(t) and the low-frequency stochastic process θ(t). We assume that θ(t) has unit variance, that is, 〈θ²(t)〉 = 1, and that the correlation functions are

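$$\langle\chi(t_1)\,\chi(t_2)\rangle = \mathrm{Var}_n\exp\{-\alpha|\tau|\}\cos\omega_0\tau; \tag{13.177}$$

$$\langle\theta(t_1)\,\theta(t_2)\rangle = \exp\{-\eta|\tau|\}, \qquad \tau = t_2 - t_1. \tag{13.178}$$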

We also assume that αT ≫ 1 and η, α ≫ γ. In this case, the variance of the variance estimate, that is, the variance of the signal at the ideal integrator output, takes the following form:

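$$\mathrm{Var}\{\mathrm{Var}_s^*\} = \frac{(1+q)^2\mathrm{Var}_s^2}{\alpha T}\left\{1 + 8\frac{\alpha}{\gamma}\mathrm{Var}_\beta\,\frac{\gamma T - 1 + \exp\{-\gamma T\}}{\gamma T}\right\} + \mathrm{Var}_n^2\left\{\frac{2\eta T - 1 + \exp\{-2\eta T\}}{\eta^2T^2} + 16\mathrm{Var}_\beta\,\frac{(2\eta + \gamma)T - 1 + \exp\{-(2\eta + \gamma)T\}}{(2\eta + \gamma)^2T^2}\right\}. \tag{13.179}$$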

Comparing (13.179) with (13.168), we see that the low-frequency fluctuations of the intrinsic amplifier noise increase the variance of the variance estimate by the second term of (13.179).

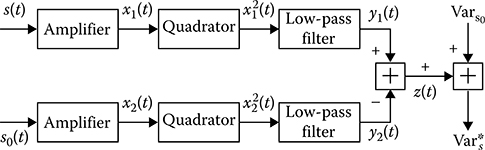

13.5.2 METHOD OF COMPARISON

This method compares the constant components formed at the output of a two-channel amplifier by the investigated stochastic process and by a generator signal applied to the amplifier input. The generator signal is calibrated in power (variance). A wideband Gaussian stochastic process with known power spectral density or a deterministic harmonic signal can serve as the calibrated generator signal. The flowchart of the stochastic process variance measurer that compares the signals at the low-pass filter outputs is presented in Figure 13.9.

Assuming that the receiver channels are identical and independent, we can write the signals at the squarer inputs in the following form:

$$\begin{cases} x_1(t) = [1 + \upsilon_1(t)]\,[s(t) + n_1(t)];\\ x_2(t) = [1 + \upsilon_2(t)]\,[s_0(t) + n_2(t)],\end{cases} \tag{13.180}$$

FIGURE 13.9 Flowchart of the stochastic process variance measurer.

where

υ1(t) and υ2(t) are the realizations of the Gaussian stationary stochastic processes β1(t) and β2(t) describing the random variations of the amplification coefficient in the first and second channels of the signal amplifier

n1(t) and n2(t) are the realizations of the signal amplifier noise in the first and second channels

s(t) and s0(t) are the investigated stochastic process and the reference signal, respectively

Let the reference signal be a stationary Gaussian stochastic process with the variance Vars0. The signal at the subtractor output takes the following form:

$$z(t) = \frac{1}{T}\int_0^T\left[x_1^2(t) - x_2^2(t)\right]dt. \tag{13.181}$$

The variance estimate is determined in the following form:

$$\mathrm{Var}_s^*(t) = z(t) + \mathrm{Var}_{s0}. \tag{13.182}$$

Let us determine the mathematical expectation and the variance of the variance estimate under the assumptions made earlier and

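$$\begin{cases}\langle\beta_1^2(t)\rangle = \langle\beta_2^2(t)\rangle = \mathrm{Var}_\beta;\\ \langle n_1^2(t)\rangle = \langle n_2^2(t)\rangle = \mathrm{Var}_n.\end{cases} \tag{13.183}$$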

The mathematical expectation of variance estimate can be presented in the following form:

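$$\langle\mathrm{Var}_s^*\rangle = \langle z\rangle + \mathrm{Var}_{s0} = (1 + \mathrm{Var}_\beta)(\mathrm{Var}_s - \mathrm{Var}_{s0}) + \mathrm{Var}_{s0}. \tag{13.184}$$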

As we can see from (13.184), the bias of variance estimate can be presented in the following form:

$$b\{\mathrm{Var}_s^*\} = \mathrm{Var}_\beta(\mathrm{Var}_s - \mathrm{Var}_{s0}). \tag{13.185}$$

If the variance of the reference stochastic process is adjustable, the measurement can be reduced to obtaining a zero reading at the output indicator, which happens when Vars0 = Vars. This procedure is called the zero (null) method. In this case, as (13.185) shows, the variance estimate is unbiased.

The variance of the variance estimate can be presented in the following form:

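$$\mathrm{Var}\{\mathrm{Var}_s^*\} = \frac{4\mathrm{Var}_\beta}{T}\int_0^T\left(1 - \frac{\tau}{T}\right)r_\beta(\tau)\left[2 + r_\beta(\tau)\,\mathrm{Var}_\beta\right]\Big\{(\mathrm{Var}_s + \mathrm{Var}_n)^2 + (\mathrm{Var}_{s0} + \mathrm{Var}_n)^2 + 2\big\{[R_s(\tau) + R_n(\tau)]^2 + [R_{s0}(\tau) + R_n(\tau)]^2\big\}\Big\}d\tau + (1 + \mathrm{Var}_\beta)^2\,\frac{4}{T}\int_0^T\left(1 - \frac{\tau}{T}\right)\big\{[R_s(\tau) + R_n(\tau)]^2 + [R_{s0}(\tau) + R_n(\tau)]^2\big\}d\tau. \tag{13.186}$$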

For the zero measurement procedure, (13.186) can be simplified. Owing to the identity of the two amplifier channels, we can assume that Rs(τ) = Rs0(τ). Taking into account the condition Varβ ≪ 1 and neglecting the terms with the double frequency 2ω0, we can write

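$$\mathrm{Var}\{\mathrm{Var}_s^*\} = \frac{4(1+q)^2\mathrm{Var}_s^2}{T}\int_0^T\left(1 - \frac{\tau}{T}\right)\left[r^2(\tau) + 4r_\beta(\tau)\,\mathrm{Var}_\beta + 4r^2(\tau)\,r_\beta(\tau)\,\mathrm{Var}_\beta\right]d\tau. \tag{13.187}$$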

By analogy with the compensation method, (13.187) determines the observation interval corresponding to the sensitivity threshold from the condition

$$\frac{\mathrm{Var}\{\mathrm{Var}_s^*\}}{\mathrm{Var}_s^2} = 1. \tag{13.188}$$

Comparing (13.187) with (13.162), we see that with the considered procedure the variance of the variance estimate is twice as high as with the compensation method. This increase is explained by the presence of the second channel, which increases the total variance of the output signal. Note, however, that despite this increase, the comparison method is preferable to the compensation method if the variance of the intrinsic amplifier noise changes after the compensation procedure.

Let the normalized correlation functions, as before, be described by (13.165). Then, under the conditions αT ≫ 1 and α ≫ γ, (13.187) takes the following form:

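$$\mathrm{Var}\{\mathrm{Var}_s^*\} = \frac{2(1+q)^2\mathrm{Var}_s^2}{\alpha T}\left\{1 + 8\frac{\alpha}{\gamma}\mathrm{Var}_\beta\,\frac{\gamma T - 1 + \exp\{-\gamma T\}}{\gamma T}\right\}. \tag{13.189}$$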

In other words, the variance of the variance estimate obtained with the considered measurement procedure is twice as high as the variance (13.168) obtained with the compensation method. This is also true when the random variations of the amplification coefficient are absent. Accordingly, the observation interval corresponding to the sensitivity threshold is twice as long as that required by the compensation method.

Using the deterministic harmonic signal

$$
s_0(t)=A_0\cos(\omega_0 t+\varphi_0) \tag{13.190}
$$

as the reference signal in the zero measurement procedure, for which Vars0 = 0.5A0², and assuming that the random variations of the amplification coefficient are absent, we can present the variance of the variance estimate in the following form:

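$$
\mathrm{Var}\{\mathrm{Var}^*_s\}=2\mathrm{Var}_s^2\left(1+2q+2q^2\right)\times\frac{1}{T}\int_0^T\left(1-\frac{\tau}{T}\right)r^2(\tau)\,d\tau. \tag{13.191}
$$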

As applied to the exponential normalized correlation function given by (13.165), we can transform (13.191) into the following form:

$$
\mathrm{Var}\{\mathrm{Var}^*_s\}=2\mathrm{Var}_s^2\left(1+2q+2q^2\right)\frac{2\alpha T-1+\exp\{-2\alpha T\}}{(2\alpha T)^2}. \tag{13.192}
$$

At αT ≫ 1, we have

$$
\mathrm{Var}\{\mathrm{Var}^*_s\}\approx\frac{\mathrm{Var}_s^2\left(1+2q+2q^2\right)}{\alpha T}. \tag{13.193}
$$
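A quick check of how good the asymptote (13.193) is: the ratio of (13.192) to (13.193) reduces to (x − 1 + e^−x)/x with x = 2αT, so the approximation always slightly overstates the variance. A minimal sketch (the function names, q, and the αT grid are arbitrary illustration choices):

```python
import math


def rel_var_harmonic_exact(alpha_T, q):
    """Var{Var*_s} / Var_s**2 from (13.192) for an exponential r(tau)."""
    x = 2.0 * alpha_T
    # (x - 1 + exp(-x)) / x**2, using expm1 for accuracy at small x
    return 2.0 * (1.0 + 2.0 * q + 2.0 * q * q) * (x + math.expm1(-x)) / x ** 2


def rel_var_harmonic_approx(alpha_T, q):
    """Large-alpha*T asymptote (13.193)."""
    return (1.0 + 2.0 * q + 2.0 * q * q) / alpha_T


for aT in (1.0, 10.0, 100.0):
    exact = rel_var_harmonic_exact(aT, 0.5)
    approx = rel_var_harmonic_approx(aT, 0.5)
    print(f"alpha*T = {aT:6.1f}: exact/approx = {exact / approx:.4f}")
```

At αT = 10 the asymptote is about 5% high; at αT = 100, about 0.5%.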

Comparing (13.193) with (13.189) at Varβ = 0, we can see that in the case of weak signals, that is, when the signal-to-noise ratio is small or q ≪ 1, the variance of the variance estimate obtained with the harmonic reference signal is half as large as that obtained with the wideband stochastic reference process. Otherwise, at a high signal-to-noise ratio, q > 1, the variance of the variance estimate obtained with the harmonic signal can exceed the one obtained with the stochastic reference process. This phenomenon is explained by the presence of uncompensated components of the output signal formed by the higher-order terms of the series expansion of the amplifier noise. In addition, the considered procedure is subject to errors caused by the nonidentity of the channels and by the statistical dependence between the amplifier channels.
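The factor of one half can be read off the ratio of (13.193) to (13.189) at Varβ = 0, which is (1 + 2q + 2q²)/[2(1 + q)²]. A minimal sketch (the function name is hypothetical):

```python
def harmonic_vs_stochastic_reference(q):
    """Ratio of (13.193) to (13.189) at Var_beta = 0:
    (1 + 2q + 2q^2) / (2 (1 + q)^2)."""
    return (1.0 + 2.0 * q + 2.0 * q * q) / (2.0 * (1.0 + q) ** 2)


for q in (1e-3, 0.1, 1.0, 10.0):
    print(q, round(harmonic_vs_stochastic_reference(q), 4))
```

Within these two leading-order formulas the ratio tends to 1/2 as q → 0 and rises monotonically toward 1 as q grows; the possible excess at large q discussed above is attributed in the text to higher-order terms that these formulas do not contain.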

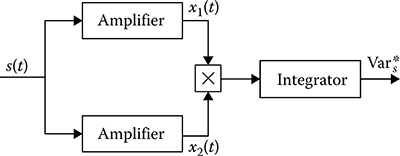

13.5.3 CORRELATION METHOD OF VARIANCE MEASUREMENT

In the correlation method of stochastic process variance measurement, the investigated stochastic process is fed to the inputs of two amplifier channels. The intrinsic noise of the two channels is mutually independent, so its mutual correlation function is zero, whereas the mutual correlation function of the investigated stochastic process at the two channel outputs is nonzero and, at coinciding instants, equals the variance of the investigated stochastic process. A flowchart of variance measurement by the correlation method is presented in Figure 13.10.

Let the independent amplifier channels operate at the same frequency. Then the stochastic processes formed at the amplifier outputs can be fed directly to the mixer inputs. We assume, as before, that the integrator is ideal, that is, h(t) = T−1. The signal at the integrator output defines the variance estimate

$$
\mathrm{Var}^*_s=\frac{1}{T}\int_0^T x_1(t)\,x_2(t)\,dt, \tag{13.194}
$$

where

$$
\begin{cases}x_1(t)=[1+\upsilon_1(t)][s(t)+n_1(t)],\\ x_2(t)=[1+\upsilon_2(t)][s(t)+n_2(t)]\end{cases} \tag{13.195}
$$

are the realizations of the stochastic processes at the outputs of the first and second channels, respectively.

FIGURE 13.10 Correlation method of variance measurement.

The mathematical expectation of the estimate can be presented in the following form:

$$
\langle\mathrm{Var}^*_s\rangle=\frac{1}{T}\int_0^T\langle s^2(t)\rangle\,dt=\mathrm{Var}_s; \tag{13.196}
$$

that is, under the correlation method of variance measurement the variance estimate is unbiased when the channels are identical and independent.
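The unbiasedness in (13.196) can be checked with a small Monte Carlo sketch of (13.194) and (13.195). It assumes discrete-time white Gaussian samples in place of the continuous processes, independent channel noises of equal variance, and slow gain fluctuations modelled as one independent draw per record in each channel; these modelling choices and the function name are illustrative, not from the text.

```python
import random


def correlation_estimate(var_s, var_n, var_beta, n_samples, rng):
    """One realization of (13.194) in discrete time with white Gaussian samples.

    Gain fluctuations are modelled as slow: one draw of upsilon_1, upsilon_2
    per record, independent between channels (an assumption).
    """
    g1 = 1.0 + rng.gauss(0.0, var_beta ** 0.5)
    g2 = 1.0 + rng.gauss(0.0, var_beta ** 0.5)
    sd_s, sd_n = var_s ** 0.5, var_n ** 0.5
    acc = 0.0
    for _ in range(n_samples):
        s = rng.gauss(0.0, sd_s)                 # common investigated process
        x1 = g1 * (s + rng.gauss(0.0, sd_n))     # channel 1 with its own intrinsic noise
        x2 = g2 * (s + rng.gauss(0.0, sd_n))     # channel 2 with independent noise
        acc += x1 * x2                           # ideal mixer
    return acc / n_samples                       # ideal integrator, h = 1/T


rng = random.Random(1)
estimates = [correlation_estimate(1.0, 4.0, 0.01, 2000, rng) for _ in range(100)]
mean_estimate = sum(estimates) / len(estimates)
print(mean_estimate)  # expected to be near Var_s = 1.0 even though Var_n = 4.0
```

The intrinsic noise enters each record only through the cross terms s·n2, n1·s, and n1·n2, which average to zero, so it affects the spread of the estimates but not their mean.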

When the input stochastic process is Gaussian, the variance of the output signal, that is, the variance of the variance estimate of the input stochastic process, takes the following form:

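$$
\begin{aligned}
\mathrm{Var}\{\mathrm{Var}^*_s\}={}&\frac{2\mathrm{Var}_s^2}{T}\int_0^T\left(1-\frac{\tau}{T}\right)\left[R_{\beta_1}(\tau)+R_{\beta_2}(\tau)+R_{\beta_1}(\tau)R_{\beta_2}(\tau)\right]d\tau\\
&+\frac{2}{T}\int_0^T\left(1-\frac{\tau}{T}\right)\left[1+R_{\beta_1}(\tau)+R_{\beta_2}(\tau)+R_{\beta_1}(\tau)R_{\beta_2}(\tau)\right]\\
&\qquad\times\left[2R_s^2(\tau)+R_{n_1}(\tau)R_s(\tau)+R_{n_2}(\tau)R_s(\tau)+R_{n_1}(\tau)R_{n_2}(\tau)\right]d\tau,
\end{aligned} \tag{13.197}
$$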

where Rβ1(τ) and Rβ2(τ) are the correlation functions of random components of the amplification coefficients of the first and second channels of amplifier.

We can simplify (13.197) by taking into consideration that the correlation interval of the random components of the amplification coefficients is much longer than the correlation intervals of the intrinsic noise n1(t) and n2(t) and of the investigated stochastic process ζ(t). Therefore, in the terms where Rβ(τ) multiplies the rapidly decaying functions Rs(τ) and Rn(τ), we can replace the correlation function Rβ(τ) by Rβ(0) = Varβ. Then, taking into consideration the condition accepted before, Varβ ≪ 1 (so that terms of order Var²β can be neglected), the identity of the channels, and (13.153) through (13.155), we obtain

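$$
\mathrm{Var}\{\mathrm{Var}^*_s\}=\frac{2\mathrm{Var}_s^2}{T}\left(1+q+0.5q^2\right)\int_0^T\left(1-\frac{\tau}{T}\right)r^2(\tau)\,d\tau+\frac{4\mathrm{Var}_s^2\mathrm{Var}_\beta}{T}\int_0^T\left(1-\frac{\tau}{T}\right)r_\beta(\tau)\,d\tau. \tag{13.198}
$$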

Formula (13.198) allows us to determine the time interval of observation T corresponding to the sensitivity threshold under the condition (13.188). Letting the normalized correlation functions r(τ) and rβ(τ) be the exponential functions given by (13.165) and substituting them into (13.198), we obtain

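$$
\mathrm{Var}\{\mathrm{Var}^*_s\}=2\mathrm{Var}_s^2\left(1+q+0.5q^2\right)\frac{2\alpha T-1+\exp\{-2\alpha T\}}{(2\alpha T)^2}+4\mathrm{Var}_s^2\mathrm{Var}_\beta\,\frac{\gamma T-1+\exp\{-\gamma T\}}{(\gamma T)^2}. \tag{13.199}
$$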

When the random variations of the amplification coefficients are absent and the condition T ≫ τcor is satisfied, the variance of the variance estimate can be presented in the following form:

$$
\mathrm{Var}\{\mathrm{Var}^*_s\}=\mathrm{Var}_s^2\,\frac{1+q+0.5q^2}{\alpha T}. \tag{13.200}
$$
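(13.199) can be evaluated directly. Both terms share the helper (1/T)∫₀ᵀ(1 − τ/T)e^(−cτ)dτ = (cT − 1 + e^(−cT))/(cT)², which is what the exponential correlation functions produce, assuming (13.165) means r(τ) = exp(−α|τ|) and rβ(τ) = exp(−γ|τ|) (consistent with the form of (13.199)). The sketch below, with hypothetical names and parameter values, checks that the exact expression approaches (13.200) when Varβ = 0 and αT ≫ 1.

```python
import math


def ramp_average(x):
    """(1/T) * integral_0^T (1 - tau/T) exp(-c tau) dtau with x = c*T,
    i.e. (x - 1 + exp(-x)) / x**2 (expm1 keeps small x accurate)."""
    return (x + math.expm1(-x)) / x ** 2


def rel_var_correlation(alpha_T, gamma_T, q, var_beta):
    """Var{Var*_s} / Var_s**2 per (13.199)."""
    k = 1.0 + q + 0.5 * q * q
    return 2.0 * k * ramp_average(2.0 * alpha_T) + 4.0 * var_beta * ramp_average(gamma_T)


# Without gain fluctuations and with alpha*T = 1000 the exact expression
# should be within about 0.05% of the asymptote (13.200).
exact = rel_var_correlation(1000.0, 1.0, 2.0, 0.0)
asymptote = (1.0 + 2.0 + 0.5 * 4.0) / 1000.0
print(exact / asymptote)
```

For γT ≫ 1 the second term behaves as 4Varβ/(γT); since γ ≪ α, it decays much more slowly than the first term, so even a small Varβ can dominate a long measurement.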

As we can see from (13.200), it is not difficult to define the time interval of observation corresponding to the sensitivity threshold in the case of the correlation method of measurement of the stochastic process variance.
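For instance, combining (13.200) with the threshold condition (13.188) gives the threshold observation time explicitly (valid under the same assumptions, Varβ = 0 and T ≫ τcor):

$$
\frac{\mathrm{Var}\{\mathrm{Var}^*_s\}}{\mathrm{Var}_s^2}=\frac{1+q+0.5q^2}{\alpha T}=1
\quad\Longrightarrow\quad
T=\frac{1+q+0.5q^2}{\alpha}.
$$

With the hypothetical values α = 10^5 s^−1 and q = 10, this gives T = (1 + 10 + 50)/10^5 = 6.1 × 10^−4 s, that is, αT = 61, which is consistent with the requirement T ≫ τcor.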

Comparing (13.198) through (13.200) with (13.162), (13.166), and (13.169), we can see that the sensitivity of the correlation method of stochastic process variance measurement is higher than that of the compensation method. This is because the correlation method compensates the higher-order noise components as well as the errors caused by random variations of the amplification coefficients. However, when the channels are not identical or there is a statistical relationship between the intrinsic receiver noise and the random variations of the amplification coefficients, the estimate becomes biased and its variance increases, which is undesirable.

The correlation method of variance measurement also introduces additional errors caused by the difference between the performance of real mixers and that of ideal mixers. As a rule, two processes are multiplied using the following operation:

$$
(a+b)^2-(a-b)^2=4ab. \tag{13.201}
$$

In other words, the multiplication of two stochastic processes is reduced to the quadratic transformation of the sum and the difference of the multiplied stochastic processes, followed by subtraction of the resulting quadratic forms. The largest error is introduced by the squaring operations.
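Why the squaring operations dominate the error can be seen from a quarter-square multiplier with mismatched squarers. If the sum squarer has gain 1 + ε and the difference squarer has gain 1, the output is ab + ε(a + b)²/4, so a mismatch leaks the full power of the sum, receiver noise included, into the result. The gains k_plus and k_minus and the function names below are hypothetical illustration parameters:

```python
def quarter_square_product(a, b, k_plus=1.0, k_minus=1.0):
    """Product via (13.201): [(a + b)^2 - (a - b)^2] / 4.

    k_plus and k_minus are hypothetical gains of the two squaring devices;
    an ideal multiplier has k_plus == k_minus == 1.
    """
    return (k_plus * (a + b) ** 2 - k_minus * (a - b) ** 2) / 4.0


def mismatch_bias(var_s, var_n1, var_n2, eps):
    """Mean output error for k_plus = 1 + eps, k_minus = 1, with
    x1 = s + n1, x2 = s + n2 and independent zero-mean s, n1, n2:
    eps * <(x1 + x2)^2> / 4 = eps * (4 Var_s + Var_n1 + Var_n2) / 4."""
    return eps * (4.0 * var_s + var_n1 + var_n2) / 4.0


print(quarter_square_product(3.0, 2.0))               # ideal squarers
print(quarter_square_product(3.0, 2.0, k_plus=1.01))  # 1% gain mismatch
print(mismatch_bias(1.0, 100.0, 100.0, 0.01))
```

With Varn = 100 Vars in each channel, a 1% squarer mismatch biases the output by about 0.51 Vars, which shows why the squarers must be matched closely when the signal-to-noise ratio is small.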

Let us briefly consider the effect of spurious coupling between the amplifier channels on the accuracy of stochastic process variance measurement. Denote the mutual correlation functions of the amplification coefficients and of the intrinsic amplifier noise at coinciding instants as

$$
\begin{cases}\langle\beta_1(t)\beta_2(t)\rangle=R_{\beta_{12}},\\ \langle n_1(t)n_2(t)\rangle=R_{n_{12}}.\end{cases} \tag{13.202}
$$

As we can see from (13.195), the bias of the variance estimate is defined as

$$
b\{\mathrm{Var}^*_s\}=R_{n_{12}}\left(1+R_{\beta_{12}}\right)+R_{\beta_{12}}\mathrm{Var}_s. \tag{13.203}
$$

Naturally, the variance of the estimate also increases owing to the relationship between the amplifier channels. However, as a rule, the spurious coupling is very weak and can be neglected in practice.

13.5.4 MODULATION METHOD OF VARIANCE MEASUREMENT

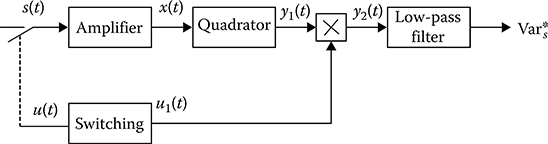

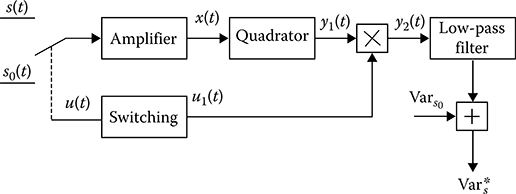

In the modulation method of stochastic process variance measurement, the received realization x(t) of the stochastic process is modulated at the amplifier input by a deterministic audio-frequency signal u(t). As a rule, modulation is carried out either by periodically connecting the investigated stochastic process to the amplifier input (see Figure 13.11) or by periodically switching the amplifier input between the investigated stochastic process and a reference process (see Figure 13.12). After amplification and quadratic transformation, the stochastic process is fed to one input of the mixer; the other input receives a deterministic signal u1(t) at the same frequency as the signal u(t). The mixer output process is fed to a low-pass filter that smooths, or averages, the stochastic fluctuations. As a result, we obtain the variance estimate of the investigated stochastic process.

FIGURE 13.11 Modulation method of variance measurement.

FIGURE 13.12 Modulation method of variance measurement using the reference process.

Let us define the characteristics of the modulation method of stochastic process variance measurement, assuming that the quadratic transformer is inertialess and that an ideal integrator with the integration time T serves as the low-pass filter. Under these assumptions, the switching functions are

$$
u(t)=\begin{cases}1 & \text{at } kT_0\le t\le kT_0+0.5T_0,\\ 0 & \text{at } kT_0+0.5T_0<t<(k+1)T_0,\end{cases}\qquad k=0,\pm1,\ldots; \tag{13.204}
$$

$$
u_1(t)=\begin{cases}1 & \text{at } kT_0\le t\le kT_0+0.5T_0,\\ -1 & \text{at } kT_0+0.5T_0<t<(k+1)T_0,\end{cases}\qquad k=0,\pm1,\ldots \tag{13.205}
$$

As we can see from (13.204), u²(t) = u(t), and the time average of the function u(t) equals 0.5. Analogously, we have

$$
[1-u(t)]^2=1-u(t). \tag{13.206}
$$

Note that the functions u(t) and 1 − u(t) are never nonzero at the same instant, so their product is identically zero; that is, these functions are orthogonal.
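These identities, together with u(t)u1(t) = u(t) and [1 − u(t)]u1(t) = −[1 − u(t)], which follow directly from (13.204) and (13.205), can be checked on a sampled period. A minimal sketch with a hypothetical 500 Hz modulation period; the sample grid avoids the switching instants:

```python
def u(t, T0):
    """Switching function (13.204): 1 on [k T0, k T0 + T0/2], else 0."""
    return 1.0 if (t / T0) % 1.0 <= 0.5 else 0.0


def u1(t, T0):
    """Reference function (13.205): +1 on the first half-period, -1 on the second."""
    return 1.0 if (t / T0) % 1.0 <= 0.5 else -1.0


T0 = 2e-3  # hypothetical modulation period (500 Hz)
grid = [(i + 0.5) * T0 / 1000 for i in range(1000)]  # one period, away from the edges
for t in grid:
    a, b = u(t, T0), u1(t, T0)
    assert a * a == a                # u^2 = u
    assert (1 - a) ** 2 == 1 - a     # (13.206)
    assert a * (1 - a) == 0          # orthogonality of u and 1 - u
    assert a * b == a                # u * u1 = u
    assert (1 - a) * b == -(1 - a)   # (1 - u) * u1 = -(1 - u)
mean_u = sum(u(t, T0) for t in grid) / len(grid)
print(mean_u)
```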

First, we consider the modulation method of stochastic process variance measurement presented in Figure 13.11. The total realization of the stochastic process at the quadrator input can be presented in the following form:

$$
x(t)=[1+\upsilon(t)]\left\{[s(t)+n(t)]\,u(t)+[1-u(t)]\,n(t)\right\}. \tag{13.207}
$$

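The process at the quadrator output takes the following form, since u²(t) = u(t), [1 − u(t)]² = 1 − u(t), and u(t)[1 − u(t)] = 0:

$$
x^2(t)=[1+\upsilon(t)]^2\left\{[s(t)+n(t)]^2u(t)+[1-u(t)]\,n^2(t)\right\}. \tag{13.208}
$$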

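The process at the mixer output takes the following form:

$$
x^2(t)\,u_1(t)=[1+\upsilon(t)]^2\left\{[s(t)+n(t)]^2u(t)-[1-u(t)]\,n^2(t)\right\}, \tag{13.209}
$$

since u(t)u1(t) = u(t) and [1 − u(t)]u1(t) = −[1 − u(t)].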

The signal at the ideal integrator output (the variance estimate) takes the following form:

$$
\mathrm{Var}^*_s=\frac{2}{T}\int_0^T x^2(t)\,u_1(t)\,dt. \tag{13.210}
$$

The factor 2 before the integral is not important in principle but, as we will see later, it allows us to obtain an unbiased variance estimate and a variance of the variance estimate that are convenient to compare with the variances of the variance estimates obtained by other procedures and methods.
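A discrete-time Monte Carlo sketch of the scheme in Figure 13.11 shows what the factor 2 does. It assumes the estimate is (2/T)∫₀ᵀx²(t)u1(t)dt, white Gaussian samples, no gain fluctuations (υ = 0), and a whole number of modulation periods in T; the function name and all numbers are illustrative assumptions.

```python
import random


def modulation_estimate(var_s, var_n, n_periods, samples_per_period, rng):
    """Discrete-time sketch of (2/T) * integral_0^T x^2(t) u1(t) dt for the
    scheme of Figure 13.11 (white Gaussian samples, upsilon = 0)."""
    sd_s, sd_n = var_s ** 0.5, var_n ** 0.5
    half = samples_per_period // 2
    n_total = n_periods * samples_per_period
    acc = 0.0
    for i in range(n_total):
        connected = (i % samples_per_period) < half      # u(t) = 1
        x = rng.gauss(0.0, sd_n)                         # amplifier noise, always present
        if connected:
            x += rng.gauss(0.0, sd_s)                    # investigated process switched in
        acc += x * x * (1.0 if connected else -1.0)      # quadrator, then mixer with u1(t)
    return 2.0 * acc / n_total                           # ideal integrator and the factor 2


rng = random.Random(7)
estimates = [modulation_estimate(1.0, 4.0, 20, 100, rng) for _ in range(100)]
mean_estimate = sum(estimates) / len(estimates)
print(mean_estimate)  # expected to be near Var_s = 1.0
```

The samples taken while the investigated process is disconnected measure Varn alone; multiplying them by u1 = −1 subtracts the noise contribution on average, and the factor 2 compensates for the process being observed only half of the time.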

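By averaging the random estimate Var*s over realizations, assuming that the random component υ(t) of the amplification coefficient has zero mean and variance Varβ and is independent of s(t) and n(t), and that T contains a whole number of modulation periods, we obtain

$$
\langle\mathrm{Var}^*_s\rangle=(1+\mathrm{Var}_\beta)\,\mathrm{Var}_s. \tag{13.211}
$$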

As we can see from (13.211), when the random variations of the amplification coefficients are absent (Varβ = 0), the variance estimate of the stochastic process obtained by the modulation method of variance measurement is unbiased, as is the case with the compensation method. To determine the variance of the variance estimate, we expand the function u(t) in a Fourier series:

$$
u(t)=\frac{1}{2}+\frac{2}{\pi}\sum_{k=1}^{\infty}\frac{\sin[(2k-1)\Omega t]}{2k-1}, \tag{13.212}
$$

where

$$
\Omega=\frac{2\pi}{T_0} \tag{13.213}
$$

is the switching function frequency.
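For the switching function u(t) of (13.204), whose mean is 0.5, the standard square-wave Fourier series contains only odd harmonics: u(t) = 1/2 + (2/π)Σ sin[(2k − 1)Ωt]/(2k − 1) with Ω = 2π/T0. A short sketch evaluates its partial sums in the middle of the "on" and "off" half-periods (the function name and T0 = 1 are illustration choices):

```python
import math


def u_partial_sum(t, T0, K):
    """Partial Fourier sum 0.5 + (2/pi) * sum_{k=1..K} sin((2k-1) Omega t) / (2k-1),
    with Omega = 2 pi / T0, for the switching function (13.204)."""
    omega = 2.0 * math.pi / T0
    s = sum(math.sin((2 * k - 1) * omega * t) / (2 * k - 1) for k in range(1, K + 1))
    return 0.5 + 2.0 / math.pi * s


T0 = 1.0
for K in (1, 10, 1000):
    # middle of the "on" half (u = 1) and of the "off" half (u = 0)
    print(K, u_partial_sum(0.25 * T0, T0, K), u_partial_sum(0.75 * T0, T0, K))
```

At t = T0/4 the series reduces to the Leibniz series for π/4, so the partial sums converge to 1, with an error below (2/π)/(2K + 1).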

Ignoring the oscillating terms and taking into consideration that Varβ ≪ 1 and that the correlation interval of the random component of the amplification coefficients is much longer than the correlation interval of the investigated stochastic process, we can present the variance of the variance estimate in the following form:

Taking into consideration (13.153) through (13.155), (13.214) can be written in the following form:

In practice, the correlation interval of the investigated stochastic process is much shorter than the modulation period T0 and, consequently, than the time interval T of observation, since in real applications the effective spectral bandwidth of the investigated stochastic process exceeds 10^4 to 10^5 Hz, whereas the modulation frequency fmod = Ω/(2π) is several hundred hertz. In this case, the functions cos[(2k − 1)Ωτ] vary little within the limits of the correlation interval, and we can approximate them by unity, that is, cos[(2k − 1)Ωτ] ≈ 1. This statement is true for the components of the variance of the variance estimate caused by the stochastic character of the processes s(t) and n(t). Taking into consideration that

we obtain

The approximation cos[(2k − 1)Ωτ] ≈ 1 is valid within the limits of the correlation interval of the investigated stochastic process owing to the fast convergence of the series (13.216) to its limit. If, in addition to the aforementioned condition, the correlation interval of the amplification coefficients is much shorter than the time interval of observation, that is, τβ ≪ T but τβ > T0, then

When the random variations of the amplification coefficients are absent, based on (13.217), we can write

Comparing (13.219) with (13.162) for the compensation method at Varβ = 0, we can see that the relative variance of the variance estimate, which defines the sensitivity of the modulation method, is twice as high as that obtained by the compensation method under the same measurement conditions. Physically, this is caused by the twofold reduction, owing to switching, of the time during which the investigated stochastic process is actually observed.
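To get a feel for the approximation cos[(2k − 1)Ωτ] ≈ 1 used above, the fundamental term (k = 1) can be evaluated at τ equal to the correlation interval. The sketch takes the bandwidths quoted in the text and assumes τcor ≈ 1/Δf and a hypothetical fmod = 300 Hz; both are rough illustration values.

```python
import math

f_mod = 300.0                    # hypothetical modulation frequency, Hz
omega = 2.0 * math.pi * f_mod    # Omega = 2 pi f_mod
for bandwidth in (1e4, 1e5):     # effective spectral bandwidths quoted in the text, Hz
    tau_cor = 1.0 / bandwidth    # rough assumption: tau_cor ~ 1 / bandwidth
    print(bandwidth, math.cos(omega * tau_cor))
```

The fundamental gives cos(Ωτcor) ≈ 0.982 at 10^4 Hz and ≈ 0.9998 at 10^5 Hz. Higher harmonics rotate (2k − 1) times faster, so the approximation relies on the fast convergence of the series noted in the text.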

Now consider the modulation method of variance measurement based on a comparison between the variance Vars of the observed stochastic process ζ(t) within the amplifier bandwidth and the variance Vars0 of the calibrated reference stochastic process s0(t), in accordance with the block diagram shown in Figure 13.12. In this case, the signal at the amplifier input can be presented in the following form:

The signal at the ideal integrator output can be presented in the following form:

The variance estimate is given by

Averaging over realizations, we have

that is, the bias of the variance estimate can be presented in the following form:

When the random variations of the amplification coefficients are absent, the output of the modulation measurer can be calibrated in values of the difference between the variance of the investigated stochastic process and the variance of the reference stochastic process. This is called the modulation method of variance measurement with direct reading. When the variances of the investigated and reference stochastic processes are equal, the bias of the considered variance estimate is zero. If the variance of the reference stochastic process can be controlled, the measurement reduces to adjusting it until the output reading is zero. This is called the modulation method of measurement with zero reading, and it can be automated by a tracking device.
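One way to picture such a tracking device is a simple integrating loop. The sketch below is hypothetical and is not the text's design. It models the direct-reading output as (Vars − Vars0) plus Gaussian reading noise and repeatedly nudges the controllable Vars0 toward a zero reading; when the loop settles, Vars0 serves as the measured variance.

```python
import random


def track_reference_variance(var_s, reading_noise_sd, gain=0.2, steps=500, seed=0):
    """Hypothetical integrating tracker for the zero-reading mode.

    Each step reads (Var_s - Var_s0) plus Gaussian reading noise and nudges the
    controllable reference variance Var_s0 toward a zero reading. Returns the
    average of Var_s0 over the second half of the run (after the transient).
    """
    rng = random.Random(seed)
    var_s0 = 0.0
    history = []
    for _ in range(steps):
        reading = (var_s - var_s0) + rng.gauss(0.0, reading_noise_sd)
        var_s0 += gain * reading  # integrate the reading until it averages to zero
        history.append(var_s0)
    tail = history[steps // 2:]
    return sum(tail) / len(tail)


print(track_reference_variance(2.0, 0.1))  # expected to settle near Var_s = 2.0
```

A smaller gain smooths the reading noise more but slows the approach to the zero reading, which is the usual speed-versus-accuracy trade-off of such a loop.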