Chapter 15

Test Planning, Management, Execution, and Reporting


15.1 INTRODUCTION

In this chapter, we will look at some of the project management aspects of testing. The Project Management Institute [PMI-2004] defines a project formally as a temporary endeavor to create a unique product or service. This means that every project has a definite beginning and a definite end and that the product or service is different in some distinguishing way from all similar products or services.

Testing is integrated into the endeavor of creating a given product or service; each phase and each type of testing has different characteristics and what is tested in each version could be different. Hence, testing satisfies this definition of a project fully.

Given that testing can be considered as a project on its own, it has to be planned, executed, tracked, and periodically reported on. We will look at the test planning aspects in the next section. We will then look into the process that drives a testing project. Subsequently, we will look at the execution of tests and the various types of reporting that take place during a testing project. We will conclude this chapter by sharing some of the best practices in test management and execution.

15.2 TEST PLANNING

15.2.1 Preparing a Test Plan

Testing—like any project—should be driven by a plan. The test plan acts as the anchor for the execution, tracking, and reporting of the entire testing project and covers

- What needs to be tested—the scope of testing, including clear identification of what will be tested and what will not be tested.

- How the testing is going to be performed—breaking down the testing into small and manageable tasks and identifying the strategies to be used for carrying out the tasks.

- What resources are needed for testing—computer as well as human resources.

- The time lines by which the testing activities will be performed.

- Risks that may be faced in all of the above, with appropriate mitigation and contingency plans.

Failing to plan is planning to fail.

15.2.2 Scope Management: Deciding Features to be Tested/Not Tested

As was explained in the earlier chapters, different testing teams carry out different phases of testing. A single test plan can be prepared to cover all phases and all teams, or there can be separate plans for each phase or for each type of testing. For example, there need to be plans for unit testing, integration testing, performance testing, acceptance testing, and so on. They can all be part of a single plan or could be covered by multiple plans. Where there are multiple test plans, there should be one test plan that covers the activities common to all plans. This is called the master test plan.

Scope management pertains to specifying the scope of a project. For testing, scope management entails

- Understanding what constitutes a release of a product;

- Breaking down the release into features;

- Prioritizing the features for testing;

- Deciding which features will be tested and which will not be; and

- Gathering details to prepare for estimation of resources for testing.

It is always good to start from the end-goal or product-release perspective and get a holistic picture of the entire product to decide the scope and priority of testing. Usually, during the planning stages of a release, the features that constitute the release are identified. For example, a particular release of an inventory control system may introduce new features to automatically integrate with supply chain management and to provide the user with various costing options. The testing teams should get involved early in the planning cycle and understand the features. Knowing the features and understanding them from the usage perspective will enable the testing team to prioritize the features for testing.

The following factors drive the choice and prioritization of features to be tested.

Features that are new and critical for the release The new features of a release set the expectations of the customers and must perform properly. These new features result in new program code and thus have a higher susceptibility and exposure to defects. Furthermore, these are likely to be areas where both the development and testing teams will have to go through a learning curve. Hence, it makes sense to put these features on top of the priority list to be tested. This will ensure that these key features get enough planning and learning time for testing and do not go out with inadequate testing. In order to get this prioritization right, the product marketing team and some select customers participate in identification of the features to be tested.

Features whose failures can be catastrophic Regardless of whether a feature is new or not, any feature the failure of which can be catastrophic or produce adverse business impact has to be high on the list of features to be tested. For example, recovery mechanisms in a database will always have to be among the most important features to be tested.

Features that are expected to be complex to test Early participation by the testing team can help identify features that are difficult to test. This can help in starting the work on these features early and line up appropriate resources in time.

Features which are extensions of earlier features that have been defect prone As we have seen in Chapter 8, Regression Testing, certain areas of code tend to be defect prone, and such areas need very thorough testing so that old defects do not creep in again. Such defect-prone features should be tested ahead of more stable features.
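The four factors above can be combined into a simple scoring scheme for ordering features. The sketch below is illustrative only: the factor weights and the feature names (borrowed from the inventory control example) are hypothetical assumptions, not values prescribed by any method.

```python
from dataclasses import dataclass, field

# Hypothetical weights for the four prioritization factors; a team would
# calibrate these from its own history and business priorities.
FACTOR_WEIGHTS = {
    "new_and_critical": 4,
    "catastrophic_if_fails": 5,
    "complex_to_test": 2,
    "defect_prone_history": 3,
}

@dataclass
class Feature:
    name: str
    factors: set = field(default_factory=set)  # names of the factors that apply

    def score(self) -> int:
        # Sum the weights of every factor that applies to this feature.
        return sum(FACTOR_WEIGHTS[f] for f in self.factors)

def prioritize(features):
    # Highest score first; sorted() is stable, so ties keep their input order.
    return sorted(features, key=lambda f: f.score(), reverse=True)

features = [
    Feature("supply chain integration", {"new_and_critical", "complex_to_test"}),
    Feature("costing options", {"new_and_critical"}),
    Feature("database recovery", {"catastrophic_if_fails", "defect_prone_history"}),
]
for f in prioritize(features):
    print(f.score(), f.name)
# 8 database recovery
# 6 supply chain integration
# 4 costing options
```

A score of this kind only orders the list; deciding which features or combinations will not be tested still calls for an explicit, justified judgment.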

A product is not just a heterogeneous mixture of these features. These features work together in various combinations and depend on several environmental factors and execution conditions. The test plan should clearly identify these combinations that will be tested.

Given the limitations on resources and time, it is likely that it will not be possible to test all the combinations exhaustively. During planning, a test manager should also consciously identify the features or combinations that will not be tested. This choice should balance the requirements of time and resources while not exposing the customers to any serious defects. Thus, the test plan should contain clear justifications of why certain combinations will not be tested and what risks this decision entails.

15.2.3 Deciding Test Approach/Strategy

Once we have this prioritized feature list, the next step is to drill down into some more details of what needs to be tested, to enable estimation of size, effort, and schedule. This includes identifying

- What type of testing would you use for testing the functionality?

- What are the configurations or scenarios for testing the features?

- What integration testing would you do to ensure these features work together?

- What localization validations would be needed?

- What “non-functional” tests would you need to do?

We have discussed various types of tests in earlier chapters of this book. Each of these types has applicability and usefulness under certain conditions. The test approach/strategy part of the test plan identifies the right type of testing to effectively test a given feature or combination.

The test strategy or approach should result in identifying the right type of test for each of the features or combinations. There should also be objective criteria for measuring the success of a test. This is covered in the next sub-section.

15.2.4 Setting up Criteria for Testing

As we have discussed in earlier chapters (especially the chapters on system and acceptance testing), there must be clear entry and exit criteria for different phases of testing. The test strategies for the various features and combinations determine how these features and combinations would be tested. Ideally, tests must be run as early as possible so that the last-minute pressure of running tests after development delays (see the section on Risk Management below) is minimized. However, it is futile to run certain tests too early. The entry criteria for a test specify threshold criteria for each phase or type of test. There may also be entry criteria for the entire testing activity to start. The completion/exit criteria specify when a test cycle or a testing activity can be deemed complete. Without objective exit criteria, it is possible for testing to continue beyond the point of diminishing returns.

A test cycle or a test activity will not be an isolated, continuous activity that can be carried out at one go. It may have to be suspended at various points of time because it is not possible to proceed further. When it is possible to proceed further, it will have to be resumed. Suspension criteria specify when a test cycle or a test activity can be suspended. Resumption criteria specify when the suspended tests can be resumed. Some of the typical suspension criteria include

- Encountering more than a certain number of defects, causing frequent stoppage of testing activity;

- Hitting show stoppers that prevent further progress of testing (for example, if a database does not start, further tests of query, data manipulation, and so on are simply not possible to execute); and

- Developers releasing a new version which they advise should be used in lieu of the product under test (because of some critical defect fixes).

When such conditions are addressed, the tests can resume.
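The suspension criteria listed above can be written as explicit checks, which makes the suspend/resume decision objective. This is a minimal sketch: the threshold `MAX_OPEN_DEFECTS` and the `CycleStatus` fields are hypothetical, and resumption is modeled simply as "no suspension criterion is currently met".

```python
from dataclasses import dataclass

# Hypothetical threshold: suspend when more open defects than this pile up.
MAX_OPEN_DEFECTS = 25

@dataclass
class CycleStatus:
    open_defects: int        # defects reported in this cycle and not yet fixed
    show_stopper_open: bool  # e.g., the database under test does not start
    new_build_advised: bool  # developers advise switching to a newer build

def suspension_reasons(status: CycleStatus) -> list:
    """Return the suspension criteria currently met; an empty list means continue."""
    reasons = []
    if status.open_defects > MAX_OPEN_DEFECTS:
        reasons.append("too many defects")
    if status.show_stopper_open:
        reasons.append("show stopper")
    if status.new_build_advised:
        reasons.append("new build advised")
    return reasons

def can_resume(status: CycleStatus) -> bool:
    # In this sketch, tests resume once every suspension condition is addressed.
    return not suspension_reasons(status)

print(suspension_reasons(CycleStatus(30, True, False)))
# ['too many defects', 'show stopper']
```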

15.2.5 Identifying Responsibilities, Staffing, and Training Needs

Scope management identifies what needs to be tested. The test strategy outlines how to do it. The next aspect of planning is the who part of it. Identifying responsibilities, staffing, and training needs addresses this aspect.

A testing project requires different people to play different roles. As discussed in the previous two chapters, there are the roles of test engineers, test leads, and test managers. There is also role definition on the dimensions of the modules being tested or the type of testing. These different roles should complement each other. The different role definitions should

- Ensure there is clear accountability for a given task, so that each person knows what he or she has to do;

- Clearly list the responsibilities for various functions to various people, so that everyone knows how his or her work fits into the entire project;

- Complement each other, ensuring no one steps on another's toes; and

- Supplement each other, so that no task is left unassigned.

Role definitions should not only address technical roles, but also list the management and reporting responsibilities. This includes frequency, format, and recipients of status reports and other project-tracking mechanisms. In addition, responsibilities in terms of SLAs for responding to queries should also be addressed during the planning stage.

Staffing is done based on the estimated effort involved and the time available for the release. In order to ensure that the right tasks get executed, the features and tasks are prioritized on the basis of effort, time, and importance.

People are assigned to tasks so as to achieve the best possible fit between the requirements of the job and the skills and experience levels needed to perform that function. It may not always be possible to find the perfect fit between the requirements and the skills available. In case there are gaps between the requirements and availability of skills, they should be addressed with appropriate training programs. It is important to plan for such training programs upfront, as they are usually de-prioritized under project pressures.

15.2.6 Identifying Resource Requirements

As a part of planning for a testing project, the project manager (or test manager) should provide estimates for the various hardware and software resources required. Some of the following factors need to be considered.

- Machine configuration (RAM, processor, disk, and so on) needed to run the product under test

- Overheads required by the test automation tool, if any

- Supporting tools such as compilers, test data generators, configuration management tools, and so on

- The different configurations of the supporting software (for example, OS) that must be present

- Special requirements for running machine-intensive tests such as load tests and performance tests

- Appropriate number of licenses of all the software

In addition to all of the above, there are also other implied environmental requirements that need to be satisfied. These include office space, support functions (like HR), and so on.

Underestimation of these resources can lead to considerable slowing down of the testing efforts and this can lead to delayed product release and to de-motivated testing teams. However, being overly conservative and “safe” in estimating these resources can prove to be unnecessarily expensive. Proper estimation of these resources requires co-operation and teamwork among different groups—product development team, testing team, system administration team, and senior management.

15.2.7 Identifying Test Deliverables

The test plan also identifies the deliverables that should come out of the test cycle/testing activity. The deliverables include the following, all reviewed and approved by the appropriate people.

- The test plan itself (master test plan, and various other test plans for the project)

- Test case design specifications

- Test cases, including any automation that is specified in the plan

- Test logs produced by running the tests

- Test summary reports

As we will see in the next section, a defect repository gives the status of the defects reported in a product life cycle. Part of the deliverables of a test cycle is to ensure that the defect repository is kept current. This includes entering new defects in the repository and updating the status of defect fixes after verification. We will see the contents of some of these deliverables in the later parts of this chapter.

15.2.8 Testing Tasks: Size and Effort Estimation

The scope identified above gives a broad overview of what needs to be tested. This understanding is quantified in the estimation step. Estimation happens broadly in three phases.

- Size estimation

- Effort estimation

- Schedule estimation

We will cover size estimation and effort estimation in this sub-section and address schedule estimation in the next sub-section.

Size estimate quantifies the actual amount of testing that needs to be done. Several factors contribute to the size estimate of a testing project.

Size of the product under test This obviously determines the amount of testing that needs to be done. In general, the larger the product, the greater the amount of testing to be done. Some measures of the size of the product under test are as follows.

- Lines of code (LOC) is a somewhat controversial measure as it depends on the language, style of programming, compactness of programming, and so on. Furthermore, LOC represents size estimate only for the coding phase and not for the other phases such as requirements, design, and so on. Notwithstanding these limitations, LOC is still a popular measure for estimating size.

- A function point (FP) is a popular method to estimate the size of an application. Function points provide a representation of application size, independent of programming language. The application features (also called functions) are classified as inputs, outputs, interfaces, external data files, and enquiries. These types differ in complexity and hence are assigned different weights. The weighted sum of functions (the number of functions of each type multiplied by the weight for that function type, summed over all types) gives an initial estimate of size or complexity. In addition, the function point methodology of estimating size also provides for 14 environmental factors such as distributed processing, transaction rate, and so on.

This methodology of estimating size or complexity of an application is comprehensive in that it takes realistic factors into account. The major challenge in this method is that it requires formal training and is not easy to use. Furthermore, this method is not directly suited to systems software projects.

- A somewhat simpler representation of application size is the number of screens, reports, or transactions. Each of these can be further classified as “simple,” “medium,” or “complex.” This classification can be based on intuitive factors such as number of fields in the screen, number of validations to be done, and so on.
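To make the function point arithmetic concrete, the sketch below computes an unadjusted function point count (the weighted sum) and then applies a value adjustment factor from the 14 environmental factors. It assumes the commonly cited IFPUG weights (low/average/high) and the IFPUG formula VAF = 0.65 + 0.01 x (sum of the 14 ratings, each from 0 to 5); the mapping of the five function types named in the text onto IFPUG names, and the example counts, are assumptions for illustration.

```python
# Commonly cited IFPUG weights (low, average, high) per function type.
# Mapping to the text's terms (assumed): inputs, outputs, enquiries,
# external data files -> internal_file, interfaces -> external_interface.
WEIGHTS = {
    "external_input":     (3, 4, 6),
    "external_output":    (4, 5, 7),
    "external_inquiry":   (3, 4, 6),
    "internal_file":      (7, 10, 15),
    "external_interface": (5, 7, 10),
}
COMPLEXITY = {"low": 0, "average": 1, "high": 2}

def unadjusted_fp(counts):
    """counts maps (function_type, complexity) -> number of such functions."""
    return sum(n * WEIGHTS[ftype][COMPLEXITY[cx]] for (ftype, cx), n in counts.items())

def adjusted_fp(ufp, ratings):
    """Scale the unadjusted count by the value adjustment factor."""
    if len(ratings) != 14 or not all(0 <= r <= 5 for r in ratings):
        raise ValueError("expected 14 ratings in the range 0..5")
    vaf = 0.65 + 0.01 * sum(ratings)
    return ufp * vaf

# Hypothetical counts for a small application.
counts = {
    ("external_input", "average"): 10,
    ("external_output", "average"): 5,
    ("external_inquiry", "low"): 4,
    ("internal_file", "average"): 3,
    ("external_interface", "low"): 2,
}
ufp = unadjusted_fp(counts)
print(ufp, round(adjusted_fp(ufp, [3] * 14), 2))  # 117 125.19
```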

Extent of automation required When automation is involved, the amount of testing work increases. This is because, for automation, we must first perform the basic test case design (identifying input data and expected results by techniques like condition coverage, boundary value analysis, equivalence partitioning, and so on) and then script the tests in the programming language of the test automation tool.

Number of platforms and inter-operability environments to be tested If a particular product is to be tested under several different platforms or under several different configurations, then the size of the testing task increases. In fact, as the number of platforms or touch points across different environments increases, the amount of testing increases almost exponentially.
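The growth described above follows from simple counting: if every combination is tested, the number of configurations is the product of the number of options in each dimension, so with k dimensions of n options each it is n**k. The dimensions and values below are hypothetical placeholders.

```python
from itertools import product

# Hypothetical test dimensions; adding a dimension multiplies the total.
dimensions = {
    "os": ["Windows", "Linux", "macOS"],
    "database": ["DB-A", "DB-B"],
    "browser": ["Browser-1", "Browser-2", "Browser-3"],
}

def all_configurations(dims):
    """Enumerate every combination of one value per dimension."""
    names = list(dims)
    return [dict(zip(names, values)) for values in product(*dims.values())]

configs = all_configurations(dimensions)
print(len(configs))  # 18, i.e., 3 * 2 * 3
```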

All the above size estimates pertain to “regular” test case development. Estimation of size for regression testing (as discussed in Chapter 8) involves considering the changes in the product and other similar factors.

In order to have a better handle on the size estimate, the work to be done is broken down into smaller and more manageable parts called work breakdown structure (WBS) units. For a testing project, WBS units are typically test cases for a given module, test cases for a given platform, and so on. This decomposition breaks down the problem domain or the product into simpler parts and is likely to reduce the uncertainty and unknown factors.

Size estimate is expressed in terms of any of the following.

- Number of test cases

- Number of test scenarios

- Number of configurations to be tested
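A WBS decomposition lends itself to a simple roll-up: each unit carries its own size, and the project size is the sum. The sketch below expresses size in number of test cases; the `WBSUnit` fields and the example figures are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class WBSUnit:
    module: str
    platform: str
    test_cases: int  # estimated number of test cases for this unit

def size_estimate(units):
    """Return the total size in test cases and a per-module breakdown."""
    by_module = {}
    for u in units:
        by_module[u.module] = by_module.get(u.module, 0) + u.test_cases
    return sum(u.test_cases for u in units), by_module

units = [
    WBSUnit("costing options", "Linux", 40),
    WBSUnit("costing options", "Windows", 40),
    WBSUnit("supply chain integration", "Linux", 65),
]
total, by_module = size_estimate(units)
print(total, by_module)
```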

Size estimate provides an estimate of the actual ground to be covered for testing. This acts as a primary input for estimating effort. Estimating effort is important because often effort has a more direct influence on cost than size. The other factors that drive the effort estimate are as follows.

Productivity data Productivity refers to the speed at which the various activities of testing can be carried out. This is based on historical data available in the organization. Productivity data can be further classified into the number of test cases that can be developed per day (or some unit time), the number of test cases that can be run per day, the number of pages of documentation that can be tested per day, and so on. Having such fine-grained productivity data enables better planning and increases the confidence level and accuracy of the estimates.

Reuse opportunities If the test architecture has been designed keeping reuse in mind, then the effort required to cover a given size of testing can come down. For example, if the tests are designed in such a way that some of the earlier tests can be reused, then the effort of test development decreases.

Robustness of processes Reuse is a specific example of process maturity of an organization. Existence of well-defined processes will go a long way in reducing the effort involved in any activity. For example, in an organization with higher levels of process maturity, there are likely to be

- Well-documented standards for writing test specifications, test scripts, and so on;

- Proven processes for performing functions such as reviews and audits;

- Consistent ways of training people; and

- Objective ways of measuring the effectiveness of compliance to processes.

All these reduce the need to reinvent the wheel and thus enable reduction in the effort involved.

Effort estimate is derived from size estimate by taking the individual WBS units and classifying them as “reusable,” “modifications,” and “new development.” For example, if parts of a test case can be reused from existing test cases, then the effort involved in developing these would be close to zero. If, on the other hand, a given test case is to be developed fully from scratch, it is reasonable to assume that the effort would be the size of the test case divided by productivity.

Effort estimate is given in person days, person months, or person years. The effort estimate is then translated to a schedule estimate. We will address scheduling in the next sub-section.
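The derivation of effort from size can be sketched as follows: each WBS unit is classified as reusable, modification, or new development, and its effort is its size divided by productivity, scaled by a category factor. The productivity figure and the factor for modifications are hypothetical; reusable units, whose effort the text describes as close to zero, are treated as exactly zero here.

```python
from dataclasses import dataclass

# Hypothetical productivity from historical data: test cases developed per person day.
PRODUCTIVITY = 5.0

# Hypothetical share of from-scratch effort for each category.
# Reuse is "close to zero"; this sketch treats it as exactly zero.
EFFORT_FACTOR = {"reusable": 0.0, "modification": 0.4, "new": 1.0}

@dataclass
class WorkUnit:
    name: str
    test_cases: int  # size of the unit
    category: str    # "reusable", "modification", or "new"

def effort_person_days(units, productivity=PRODUCTIVITY):
    """Effort = sum over units of (category factor * size / productivity)."""
    return sum(EFFORT_FACTOR[u.category] * u.test_cases / productivity for u in units)

units = [
    WorkUnit("login", 20, "reusable"),
    WorkUnit("costing options", 50, "modification"),
    WorkUnit("supply chain integration", 60, "new"),
]
print(effort_person_days(units))  # 16.0 person days
```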

15.2.9 Activity Breakdown and Scheduling

Activity breakdown and schedule estimation entail translating the effort required into specific time frames. The following steps make up this translation.

- Identifying external and internal dependencies among the activities

- Sequencing the activities, based on the expected duration as well as on the dependencies

- Identifying the time required for each of the WBS activities, taking into account the above two factors

- Monitoring the progress in terms of time and effort

- Rebalancing schedules and resources as necessary

During the effort estimation phase, we identified the effort required for each WBS unit, factoring in the effect of reuse. This effort was expressed in terms of person months. If the effort for a particular WBS unit is estimated as, say, 40 person months, it is not possible to trade the “persons” for “months,” that is, we cannot indefinitely increase the number of people working on it, expecting the duration to come down proportionally. As stated in [BROO-74], adding more people to an already delayed project is a sure way of delaying the project even further! This is because adding new people to a project increases the communication overheads, and it takes some time for the new members to gel with the rest of the team. Furthermore, these WBS units cannot be executed in any random order because there will be dependencies among the activities. These dependencies can be external dependencies or internal dependencies. External dependencies of an activity are beyond the control and purview of the manager/person performing the activity. Some of the common external dependencies are

- Availability of the product from developers;

- Hiring;

- Training;

- Acquisition of hardware/software required for training; and

- Availability of translated message files for testing.

Internal dependencies are fully within the control of the manager/person performing that activity. For example, some of the internal dependencies could be

- Completing the test specification

- Coding/scripting the tests

- Executing the tests

The testing activities will also face parallelism constraints that will further restrict the activities that can be done at a time. For example, certain tests cannot be run together because of conflicting conditions (for example, requiring different versions of a component for testing) or a high-end machine may have to be multiplexed across multiple tests.

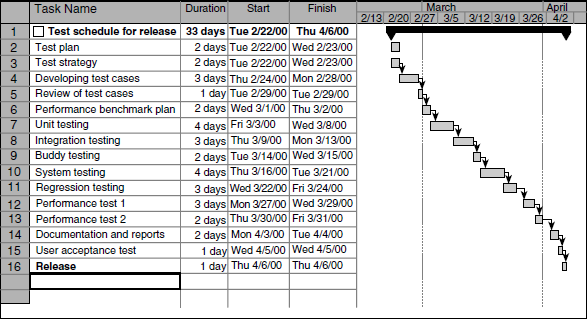

Based on the dependencies and the parallelism possible, the test activities are scheduled in a sequence that helps accomplish the activities in the minimum possible time, while taking care of all the dependencies. This schedule is expressed in the form of a Gantt chart as shown in Figure 15.1.
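The core of such a schedule can be computed by processing activities in dependency order: each activity starts when its last prerequisite finishes. This minimal sketch uses Python's `graphlib.TopologicalSorter` (Python 3.9+); the activity names and durations are hypothetical, and it assumes unlimited parallelism, so the parallelism constraints described above (such as a shared high-end machine) are not modeled.

```python
from graphlib import TopologicalSorter

# Hypothetical activities: name -> (duration in days, set of prerequisites).
activities = {
    "write test spec": (5, set()),
    "receive build": (10, set()),  # external dependency on development
    "script tests": (8, {"write test spec"}),
    "set up lab": (3, set()),
    "execute tests": (12, {"script tests", "receive build", "set up lab"}),
    "write summary": (2, {"execute tests"}),
}

def earliest_schedule(acts):
    """Earliest start/finish day of each activity, assuming unlimited parallelism."""
    graph = {name: deps for name, (_, deps) in acts.items()}
    start, finish = {}, {}
    # static_order() yields every activity after all of its prerequisites.
    for name in TopologicalSorter(graph).static_order():
        duration, deps = acts[name]
        start[name] = max((finish[d] for d in deps), default=0)
        finish[name] = start[name] + duration
    return start, finish

start, finish = earliest_schedule(activities)
for name in sorted(start, key=start.get):
    print(f"{name:16} day {start[name]:>2} -> day {finish[name]:>2}")
```

The finish day of the last activity is the minimum duration the dependencies allow; these start and finish days are what a Gantt chart draws as bars.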

15.2.10 Communications Management

Communications management consists of evolving and following procedures for communication that ensure that everyone is kept in sync with the right level of detail. Since this is intimately connected with the test execution and progress of the testing project, we will take this up in more detail in Section 15.3 when we take up the various types of reports in a test cycle.

15.2.11 Risk Management

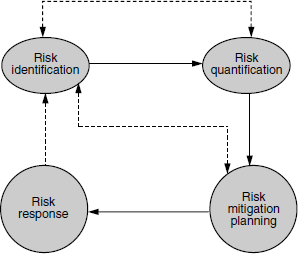

Just like every project, testing projects also face risks. Risks are events that could potentially affect a project's outcome. These events are normally beyond the control of the project manager. As shown in Figure 15.2, risk management entails

- Identifying the possible risks;

- Quantifying the risks;

- Planning how to mitigate the risks; and

- Responding to risks when they become a reality.

As some risks are identified and resolved, other risks may surface. Hence, since risks can arise at any time, risk management is essentially a cycle that goes through the above four steps repeatedly.

Risk identification consists of identifying the possible risks that may hit a project. Although there could potentially be many risks that can hit a project, the risk identification step should focus on those risks that are more likely to happen. The following are some of the common ways to identify risks in testing.

- Use of checklists Over time, an organization may accumulate lessons learned in testing that can be captured in the form of a checklist. For example, if during installation testing, it is found that a particular step of the installation has repeatedly given problems, then the checklist can have an explicit line item to check that particular problem. When checklists are used for risk identification, there is also a great risk of the checklist itself being out of date, thereby pointing to red herrings instead of risks!

- Use of organizational history and metrics When an organization collects and analyzes the various metrics (see Chapter 17), the information can provide valuable insights into what possible risks can hit a project. For example, the past effort variance in testing can give pointers to how much contingency planning is required.

- Informal networking across the industry The informal networking across the industry can help in identifying risks that other organizations have encountered.

Risk quantification deals with expressing the risk in numerical terms. There are two components to the quantification of risk. One is the probability of the risk happening and the other is the impact of the risk, if the risk happens. For example, the occurrence of a low-priority defect may have a high probability, but a low impact. However, a show stopper may have (hopefully!) a low probability, but a very high impact (for both the customer and the vendor organization). To combine both into one number, risk exposure is used. This is defined as the product of risk probability and risk impact. To make comparisons easy, risk impact is expressed in monetary terms (for example, in dollars).
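The definition of risk exposure translates directly into code, and ranking by exposure shows why a low-probability show stopper can outrank a frequent low-priority defect. The probabilities and dollar impacts below are hypothetical figures chosen to mirror the examples in the text.

```python
from dataclasses import dataclass

@dataclass
class Risk:
    name: str
    probability: float  # likelihood of the risk occurring, 0.0 to 1.0
    impact: float       # cost if it occurs, in dollars

    @property
    def exposure(self) -> float:
        # Risk exposure = risk probability x risk impact.
        return self.probability * self.impact

# Hypothetical figures for the two examples in the text plus one more.
risks = [
    Risk("low-priority defect", probability=0.9, impact=1_000),
    Risk("show stopper defect", probability=0.05, impact=200_000),
    Risk("no automation tool", probability=0.3, impact=20_000),
]

ranked = sorted(risks, key=lambda r: r.exposure, reverse=True)
for r in ranked:
    print(f"{r.name:20} {r.exposure:>10,.0f}")
```

With these numbers the show stopper ranks first (exposure of about 10,000 dollars) even though it is the least likely event, while the frequent low-priority defect ranks last.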

Risk mitigation planning deals with identifying alternative strategies to combat a risk event, should that risk materialize. For example, a couple of mitigation strategies for the risk of attrition are to spread the knowledge to multiple people and to introduce organization-wide processes and standards. To be better prepared to handle the effects of a risk, it is advisable to have multiple mitigation strategies.

When the above three steps are carried out systematically and in a timely manner, the organization would be in a better position to respond to the risks, should the risks become a reality. When sufficient care is not given to these initial steps, a project may find itself under immense pressure to react to a risk. In such cases, the choices made may not be optimal or prudent, as they are made under pressure.

The following are some of the common risks encountered in testing projects and their characteristics.

Unclear requirements The success of testing depends a lot on knowing what the correct expected behavior of the product under test is. When the requirements to be satisfied by a product are not clearly documented, there is ambiguity in how to interpret the results of a test. This could result in wrong defects being reported or in the real defects being missed out. This will, in turn, result in unnecessary and wasted cycles of communication between the development and testing teams and consequent loss of time. One way to minimize the impact of this risk is to ensure upfront participation of the testing team during the requirements phase itself.

Schedule dependence The schedule of the testing team depends significantly on the schedules of the development team. Thus, it becomes difficult for the testing team to line up resources properly at the right time. The impact of this risk is especially severe in cases where a testing team is shared across multiple product groups or in a testing services organization (see Chapter 14). A possible mitigation strategy against this risk is to identify a backup project for a testing resource. Such a backup project may be one that could use an additional resource to speed up execution but would not be unduly affected if the resource were not available. An example of such a backup project is chipping in for speeding up test automation.

Insufficient time for testing Throughout the book, we have stressed the different types of testing and the different phases of testing. Though some of these types of testing, such as white box testing, can happen early in the cycle, most of the tests tend to happen closer to the product release. For example, system testing and performance testing can happen only after the entire product is ready and close to the release date. Usually these tests are resource intensive for the testing team and, in addition, the defects that these tests uncover are challenging for the developers to fix. As discussed in the performance testing chapter, fixing some of these defects could lead to changes in architecture and design. Carrying out such changes late in the cycle may be expensive or even impossible. Once the developers fix the defects, the testing team has even less time to complete the testing and is under even greater pressure. Using the V model to shift at least the test design part of the various test types to the earlier phases of the project can help in anticipating the risks of tests failing at each level. This in turn could reduce the last-minute crunch. The metric “days needed for release” (see Chapter 17), when captured and calculated properly, can help in planning the time required for testing better.

“Show stopper” defects When the testing team reports defects, the development team has to fix them. Certain defects, which are show stoppers, may prevent the testing team from proceeding further with testing until development fixes them. Encountering such defects has a double impact on the testing team. First, the team cannot continue with testing and hence ends up with idle time. Second, when the defects do get fixed and the team restarts testing, it has lost valuable time and is under tremendous pressure as the deadline draws nearer. The risk of show stopper defects can thus pose a big challenge to scheduling and resource utilization of the testing teams. The mitigation strategies for this risk are similar to those for dependence on development schedules.

Availability of skilled and motivated people for testing As we saw in Chapter 13, People Issues in Testing, hiring and motivating people in testing is a major challenge. Hiring, retaining, and constant skill upgrade of testers in an organization is vital. This is especially important for testing functions because of the tendency of people to look for development positions.

Inability to get a test automation tool Manual testing is error prone and labor intensive. Test automation, as discussed in Chapter 16, alleviates some of these problems. However, test automation tools are expensive. An organization may face the risk of not being able to afford a test automation tool. This risk can in turn lead to less effective and efficient testing as well as more attrition. One of the ways in which organizations may try to reduce this risk is to develop in-house tools. However, this approach could lead to an even greater risk of having a poorly written or inadequately documented in-house tool.

These risks are not only potentially dangerous individually, but even more dangerous when they occur in tandem. Unfortunately, these risks often do happen in tandem! A testing group plans its schedules based on development schedules, development schedules slip, testing team resources sit idle, pressure builds, schedules slip, and the vicious cycle starts all over again. It is important that these risks be caught early, before they create a serious impact on the testing teams. Hence, we need to identify the symptoms for each of these risks. These symptoms and their impacts need to be tracked closely throughout the project.

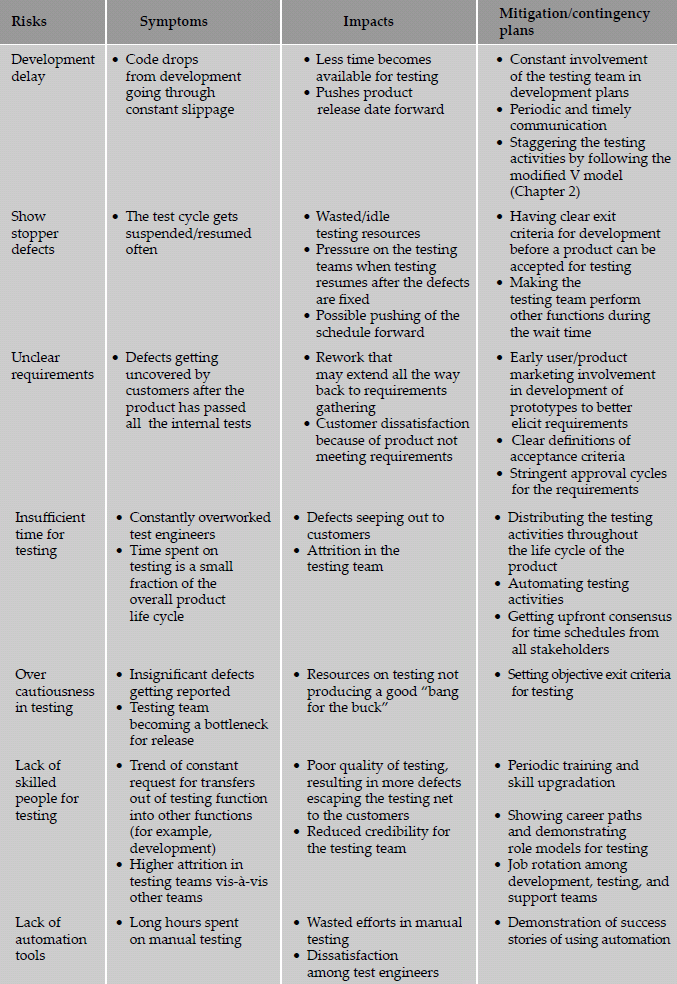

Table 15.1 gives typical risks, their symptoms, impacts and mitigation/contingency plans.
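Tracking symptoms so that risks are caught early can be sketched as a small risk register. This is a minimal sketch under stated assumptions: the fields mirror the columns named for Table 15.1 (risk, symptoms, impact, mitigation/contingency), but the example risks, the symptom names, and the rule "a risk is flagged as soon as any one of its symptoms is observed" are hypothetical illustrations, not the contents of the table.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    """One row of a risk register (fields follow the columns named for Table 15.1)."""
    name: str
    symptoms: frozenset[str]  # early-warning signs to watch for
    impact: str
    mitigation_contingency: str


def flagged_risks(register: list[Risk], observed: set[str]) -> list[str]:
    """Names of risks for which at least one symptom has been observed.

    Assumed rule: a single matching symptom is enough to raise the risk.
    """
    return [risk.name for risk in register if risk.symptoms & observed]


# Hypothetical example register; symptom names are illustrative only.
REGISTER = [
    Risk("Dependence on development schedules",
         frozenset({"development milestone missed"}),
         "testing team idle, then squeezed near release",
         "re-plan test cycles with development"),
    Risk("Show stopper defects",
         frozenset({"blocking defect open", "build fails smoke test"}),
         "tests cannot proceed",
         "agree on fix turnaround with development"),
]
```

For example, `flagged_risks(REGISTER, {"build fails smoke test"})` returns `["Show stopper defects"]`. Because several symptoms can be observed together, the same call also surfaces risks that are occurring in tandem.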

15.3 TEST MANAGEMENT

In the previous section, we considered testing as a project in its own right and addressed some of the typical project management issues in testing. In this section, we will look at some of the aspects that should be taken care of in planning such a project. These planning aspects are proactive measures that can have an across-the-board influence on all testing projects.

15.3.1 Choice of Standards

Standards comprise an important part of planning in any organization. Standards are of two types: external standards and internal standards. External standards are standards that a product should comply with, are externally visible, and are usually stipulated by external consortia. From a testing perspective, these standards include standard tests supplied by external consortia and acceptance tests supplied by customers. Compliance with external standards is usually mandated by external parties.

Internal standards are standards formulated by a testing organization to bring in consistency and predictability. They standardize the processes and methods of working within the organization. Some of the internal standards include

- Naming and storage conventions for test artifacts;

- Document standards;

- Test coding standards; and

- Test reporting standards.

Naming and storage conventions for test artifacts Every test artifact (test specification, test case, test results, and so on) has to be named appropriately and meaningfully. Such naming conventions should enable

- Easy identification of the product functionality that a set of tests is intended for; and

- Reverse mapping to identify the functionality corresponding to a given set of tests.

As an example of using naming conventions, consider a product P, with modules M01, M02, and M03. The test suites can be named as PM01nnnn.<file type>. Here nnnn can be a running sequence number or any other string. For a given test, different files may be required. For example, a given test may use a test script (which provides details of the specific actions to be performed), a recorded keystroke capture file, and an expected results file. In addition, it may require other supporting files (for example, an SQL script for a database). All these related files can have the same file name (for example, PM01nnnn) and different file types (for example, .sh, .SQL, .KEY, .OUT). By such a naming convention, one can find

- All files relating to a specific test (for example, by searching for all files with file name PM01nnnn); and

- All tests relating to a given module (for example, files whose names start with PM01 correspond to tests for module M01).

With this, when the functionality corresponding to module M01 changes, it becomes easy to locate tests that may have to be modified or deleted.

This two-way mapping between tests and product functionality through appropriate naming conventions will enable identification of appropriate tests to be modified and run when product functionality changes.
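The two lookups that this naming convention enables can be sketched in a few lines. The sketch assumes the convention from the example above, with a concrete four-digit sequence number (so a test is named like `PM010001`) and fixed-width module identifiers; the function names are hypothetical.

```python
from pathlib import PurePath

# Assumed convention: every file of one test shares a stem such as "PM010001"
# (product "P" + module "M01" + sequence "0001"); only the file type differs.


def stem_of(filename: str) -> str:
    """File name without its file type: the identifier shared by one test's files."""
    return PurePath(filename).stem


def files_of_test(files: list[str], test_name: str) -> list[str]:
    """Forward lookup: every file that belongs to one test."""
    return [f for f in files if stem_of(f) == test_name]


def module_tests(files: list[str], product: str, module: str) -> list[str]:
    """Reverse lookup: every test written for one module of one product.

    Relies on fixed-width module identifiers, so the prefix "PM01" cannot
    accidentally match a module such as "M010".
    """
    prefix = product + module
    return sorted({stem_of(f) for f in files if stem_of(f).startswith(prefix)})


FILES = ["PM010001.sh", "PM010001.SQL", "PM010001.KEY", "PM010001.OUT",
         "PM010002.sh", "PM020001.sh"]
```

Here `files_of_test(FILES, "PM010001")` returns the four files of that test, and `module_tests(FILES, "P", "M01")` returns `["PM010001", "PM010002"]`, which is the set of tests to revisit when the functionality of module M01 changes.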

In addition to file-naming conventions, the standards may also stipulate the conventions for directory structures for tests. Such directory structures can group logically related tests together (along with the related product functionality). These directory structures are mapped into a configuration management repository (discussed later in the chapter).

Documentation standards Most of the discussion on documentation and coding standards pertains to automated testing. In the case of manual testing, documentation standards correspond to specifying the user and system responses at the right level of detail, consistent with the skill level of the tester.

While naming and directory standards specify how a test entity is represented externally, documentation standards specify how to capture information about the tests within the test scripts themselves. Internal documentation of test scripts is similar to internal documentation of program code and should include the following.

- Appropriate header-level comments at the beginning of a file that outline the functions to be served by the test.

- Sufficient in-line comments, spread throughout the file, explaining the functions served by the various parts of a test script. This is especially needed for those parts of a test script that are difficult to understand or have multiple levels of loops and iterations.

- Up-to-date change history information, recording all the changes made to the test file.

Without such detailed documentation, a person maintaining the test scripts is forced to rely only on the actual test code or script to guess what the test is supposed to do or what changes happened to the test scripts. This may not give a true picture. Furthermore, it may place an undue dependence on the person who originally wrote the tests.
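Parts of such a documentation standard can be checked mechanically, for example as a step before a test script is checked in. The sketch below assumes a hypothetical in-house convention: the header must contain a `Purpose:` comment within the first ten lines, and the file must contain a `Change history:` section. The markers and the line limit are illustrative, and judging whether in-line comments are sufficient still needs a human reviewer.

```python
HEADER_LIMIT = 10  # assumed: the purpose comment must appear in the first 10 lines


def doc_standard_violations(script_text: str) -> list[str]:
    """List the ways a test script departs from the (hypothetical) documentation standard."""
    lines = script_text.splitlines()
    problems = []
    # Header: a "Purpose:" comment near the top of the file ("#" or "//" comments).
    if not any(line.lstrip("#/ ").startswith("Purpose:") for line in lines[:HEADER_LIMIT]):
        problems.append("no header comment stating the purpose of the test")
    # Change history: a dedicated section anywhere in the file.
    if not any("Change history:" in line for line in lines):
        problems.append("no change history section")
    return problems
```

A script that starts with `# Purpose: verify login with valid credentials` and carries a `# Change history:` block produces an empty list; a bare script produces both messages.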

Test coding standards Test coding standards go one level deeper into the tests and enforce standards on how the tests themselves are written. The standards may

- Enforce the right type of initialization and clean-up that the test should do to make the results independent of other tests;

- Stipulate ways of naming variables within the scripts so that a reader consistently understands the purpose of a variable (for example, instead of using generic names such as i, j, and so on, the names can be meaningful, such as network_init_flag);

- Encourage reusability of test artifacts (for example, all tests should call an initialization module init_env first, rather than use their own initialization routines); and

- Provide standard interfaces to external entities such as the operating system, hardware, and so on. For example, if tests need to spawn multiple OS processes, rather than have each test spawn the processes directly, the coding standards may dictate that they all call a standard function, say, create_os_process. By isolating the external interfaces separately, the tests can be reasonably insulated from changes to these lower-level layers.
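A minimal sketch of a test written to these coding standards, in Python for illustration. `init_env` and `create_os_process` are the example names used above; their bodies, the `cleanup_env` helper, and the choice of a scratch directory as the test's "environment" are assumptions. The test initializes and cleans up its own environment (so its result does not depend on other tests), uses descriptive variable names, and reaches the operating system only through the shared wrapper.

```python
import shutil
import subprocess
import sys
import tempfile


def init_env() -> dict:
    """Shared initialization every test calls first: a fresh scratch directory."""
    return {"work_dir": tempfile.mkdtemp(prefix="test_env_")}


def cleanup_env(env: dict) -> None:
    """Shared clean-up so that no state leaks into the next test."""
    shutil.rmtree(env["work_dir"], ignore_errors=True)


def create_os_process(args: list[str], cwd: str) -> subprocess.CompletedProcess:
    """Single entry point for spawning OS processes; only this function needs
    to change if the underlying OS interface changes."""
    return subprocess.run(args, cwd=cwd, capture_output=True, text=True, timeout=60)


def test_child_process_prints_greeting() -> None:
    env = init_env()
    try:
        child_result = create_os_process(
            [sys.executable, "-c", "print('hello')"], cwd=env["work_dir"])
        assert child_result.returncode == 0
        assert child_result.stdout.strip() == "hello"
    finally:
        cleanup_env(env)  # runs even when an assertion fails
```

Putting clean-up in a `finally` clause is the design choice that keeps a failing test from leaving debris that changes the outcome of the next one.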

Test reporting standards Since testing is tightly interlinked with product quality, all the stakeholders must get a consistent and timely view of the progress of tests. Test reporting standards address this issue. They provide guidelines on the level of detail that should be present in the test reports, their standard formats and contents, recipients of the report, and so on. We will revisit this in more detail later in this chapter.

Internal standards provide a competitive edge to a testing organization and act as a first-level insurance against employee turnover and attrition. Internal standards help bring new test engineers up to speed rapidly. When such consistent processes and standards are followed across an organization, they bring about predictability and increase the confidence one can have in the quality of the final product. In addition, any anomalies can be brought to light in a timely manner.

15.3.2 Test Infrastructure Management

Testing requires a robust infrastructure to be planned upfront. This infrastructure is made up of three essential elements.

- A test case database (TCDB)

- A defect repository

- Configuration management repository and tool

A test case database captures all the relevant information about the test cases in an organization. Some of the entities and the attributes in each of the entities in such a TCDB are given in Table 15.2.

Table 15.2 Content of a test case database.

| Entity | Purpose | Attributes |
|---|---|---|
| Test case | Records all the “static” information about the tests | |
| Test case-Product cross-reference | Provides a mapping between the tests and the corresponding product features; enables identification of tests for a given feature | |
| Test case run history | Gives the history of when a test was run and what was the result; provides inputs on selection of tests for regression runs (see Chapter 8) | |
| Test case-Defect cross-reference | Gives details of test cases introduced to test certain specific defects detected in the product; provides inputs on the selection of tests for regression runs | |
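One way to hold the four TCDB entities of Table 15.2 is a small relational schema. This sketch uses Python's built-in `sqlite3` module; because the attribute column of the table is not reproduced here, the column names below (`test_id`, `name`, `owner`, `feature`, `run_date`, `result`, `defect_id`) are assumptions chosen for illustration, not a definitive attribute list.

```python
import sqlite3

SCHEMA = """
CREATE TABLE IF NOT EXISTS test_case (          -- static information about each test
    test_id  TEXT PRIMARY KEY,
    name     TEXT NOT NULL,
    owner    TEXT
);
CREATE TABLE IF NOT EXISTS test_product_xref (  -- test <-> product feature mapping
    test_id  TEXT NOT NULL REFERENCES test_case(test_id),
    feature  TEXT NOT NULL
);
CREATE TABLE IF NOT EXISTS test_run_history (   -- when each test ran, with what result
    test_id  TEXT NOT NULL REFERENCES test_case(test_id),
    run_date TEXT NOT NULL,                     -- ISO 8601 date, e.g. 2024-01-05
    result   TEXT NOT NULL CHECK (result IN ('pass', 'fail'))
);
CREATE TABLE IF NOT EXISTS test_defect_xref (   -- tests added for specific defects
    test_id   TEXT NOT NULL REFERENCES test_case(test_id),
    defect_id TEXT NOT NULL
);
"""


def open_tcdb(path: str = ":memory:") -> sqlite3.Connection:
    """Open (or create) a TCDB with the assumed schema."""
    conn = sqlite3.connect(path)
    conn.executescript(SCHEMA)
    return conn


def feature_tests(conn: sqlite3.Connection, feature: str) -> list[str]:
    """Identify the tests for a given feature via the cross-reference table."""
    rows = conn.execute(
        "SELECT test_id FROM test_product_xref WHERE feature = ? ORDER BY test_id",
        (feature,))
    return [test_id for (test_id,) in rows]
```

Keeping the cross-references in separate tables (rather than as columns of `test_case`) lets one test map to many features or defects, which the regression-selection queries later in this section depend on.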

A defect repository captures all the relevant details of defects reported for a product. The information that a defect repository includes is given in Table 15.3.

Table 15.3 Information in a defect repository.

| Entity | Purpose | Attributes |
|---|---|---|
| Defect details | Records all the “static” information about the defects | |
| Defect test details | Provides details of test cases for a given defect; cross-references the TCDB | |
| Fix details | Provides details of fixes for a given defect; cross-references the configuration management repository | |
| Communication | Captures all the details of the communication that transpired for this defect among the various stakeholders. These could include communication between the testing team and development team, customer communication, and so on. Provides insights into effectiveness of communication | |

The defect repository is an important vehicle of communication that influences the workflow within a software organization. It also provides the base data for arriving at several of the metrics we will discuss in Chapter 17, Metrics and Measurements. In particular, most of the metrics classified as testing defect metrics and development defect metrics are derived from the data in the defect repository.

Yet another infrastructure element that is required for a software product organization (and in particular for a testing team) is a software configuration management (SCM) repository. An SCM repository (also known as a CM repository) keeps track of change control and version control of all the files/entities that make up a software product. Test files are one particular case of such files/entities. Change control ensures that

- Changes to test files are made in a controlled fashion and only with proper approvals.

- Changes made by one test engineer are not accidentally lost or overwritten by other changes.

- Each change produces a distinct version of the file that is recreatable at any point of time.

- At any point of time, everyone gets access to only the most recent version of the test files (except in exceptional cases).

Version control ensures that the test scripts associated with a given release of a product are baselined along with the product files. Baselining is akin to taking a snapshot of the set of related files of a version and assigning a unique identifier (a label) to this set. In the future, when anyone wants to recreate the environment for the given release, this label enables him or her to do so.
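Real SCM tools implement baselining with their own labels or tags. As a tool-neutral illustration only, the sketch below derives a deterministic identifier from the exact contents of a set of files and records the snapshot under it, so that the same identifier later recovers the same files. The `baselines` dictionary stands in for the SCM repository and, like the function names, is an assumption.

```python
import hashlib


def baseline_label(files: dict[str, bytes]) -> str:
    """Deterministic identifier for a set of files (path -> contents).

    Any change to a path or to a file's contents yields a different label.
    """
    digest = hashlib.sha256()
    for path in sorted(files):                          # independent of insertion order
        digest.update(path.encode("utf-8") + b"\0")     # separator avoids ambiguity
        digest.update(hashlib.sha256(files[path]).digest())
    return digest.hexdigest()[:12]                      # shortened for readability


def take_baseline(baselines: dict, release: str, files: dict[str, bytes]) -> str:
    """Record a snapshot of product and test files for one release."""
    label = baseline_label(files)
    baselines[label] = {"release": release, "files": dict(files)}
    return label
```

Because the label is computed from content, recreating the environment for a release is a lookup of `baselines[label]["files"]`; truncating the hash to 12 hex digits trades a negligible collision risk for a label short enough to quote in a test report.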

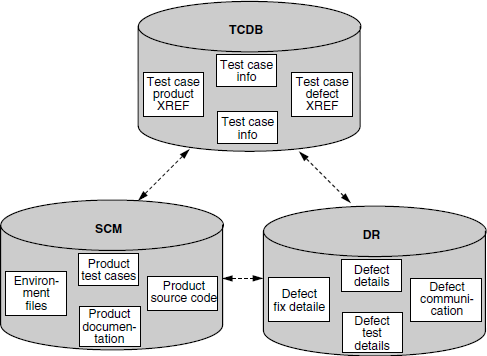

TCDB, defect repository, and SCM repository should complement each other and work together in an integrated fashion, as shown in Figure 15.3. For example, the defect repository links the defects, fixes, and tests. The files for all these will be in the SCM repository. The metadata about the modified test files will be in the TCDB. Thus, starting with a given defect, one can trace all the test cases that test the defect (from the TCDB) and then find the corresponding test case files and source files from the SCM repository.

Similarly, in order to decide which tests to run for a given regression run,

- The defects recently fixed can be obtained from the defect repository and tests for these can be obtained from the TCDB and included in the regression tests.

- The list of files changed since the last regression run can be obtained from the SCM repository and the corresponding test files traced from the TCDB.

- The set of tests not run recently can be obtained from the TCDB, and these can become potential candidates to be run at certain frequencies.
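The three selection rules above can be combined into one function. This is a minimal sketch, assuming the three repositories have already been queried into plain Python collections; the parameter names and the 30-day "not run recently" threshold are hypothetical.

```python
from datetime import date, timedelta


def select_regression_tests(
    fixed_defects: set[str],              # defect repository: fixed since last run
    defect_tests: dict[str, set[str]],    # TCDB: defect -> tests for that defect
    changed_files: set[str],              # SCM repository: changed since last run
    file_tests: dict[str, set[str]],      # TCDB: file -> tests traced to it
    last_run: dict[str, date],            # TCDB run history: test -> last run date
    today: date,
    stale_after: timedelta = timedelta(days=30),  # assumed "not run recently" limit
) -> list[str]:
    selected: set[str] = set()
    # 1. Tests for defects fixed since the last regression run.
    for defect in fixed_defects:
        selected |= defect_tests.get(defect, set())
    # 2. Tests traced to files that changed since the last regression run.
    for path in changed_files:
        selected |= file_tests.get(path, set())
    # 3. Tests that have not been run recently.
    selected |= {test for test, ran_on in last_run.items() if today - ran_on > stale_after}
    return sorted(selected)
```

Using a set means a test picked by more than one rule is scheduled only once. A test that has never been run does not appear in `last_run` here, so a real TCDB query would need to include it explicitly.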

15.3.3 Test People Management

Developer: These testing folks… they are always nitpicking!

Tester: Why don't these developers do anything right?!

Sales person: When will I get a product out that I can sell?!

People management is an integral part of any project management. Often, it is a difficult chasm for engineers-turned-managers to cross. As an individual contributor, a person relies only on his or her own skills to accomplish an assigned activity; the person is not necessarily trained on how to document what needs to be done so that it can be accomplished by someone else. Furthermore, people management also requires the ability to hire, motivate, and retain the right people. These skills are seldom formally taught (unlike technical skills). Project managers often learn these skills in a “sink or swim” mode, being thrown head-on into the task.

Most of the above gaps in people management apply to all types of projects. Testing projects present several additional challenges. We believe that the success of a testing organization (or an individual in a testing career) depends vitally on judicious people management skills. Since the people and team-building issues are significant enough to be considered in their own right, we have covered these in detail in Chapter 13, on People Issues in Testing, and in Chapter 14, on Organization Structures for Testing Teams. These chapters address issues relevant to building and managing a good global testing team that is effectively integrated into product development and release.

We would like to stress that these team-building exercises should be ongoing and sustained, rather than be done in one burst. The effects of these exercises tend to wear out under the pressure of deadlines of delivery and quality. Hence, they need to be periodically recharged. The important point is that the common goals and the spirit of teamwork have to be internalized by all the stakeholders. Once this internalization is achieved, then they are unlikely to be swayed by operational hurdles that crop up during project execution. Such an internalization and upfront team building has to be part of the planning process for the team to succeed.

15.3.4 Integrating with Product Release

Ultimately, the success of a product depends on the effectiveness of integration of the development and testing activities. These job functions have to work in tight unison with each other and with other groups such as product support, product management, and so on. The schedules of testing have to be linked directly to product release. Thus, project planning for the entire product should be done in a holistic way, encompassing the project plan for testing and development. The following are some of the points to be decided for this planning.

- Sync points between development and testing as to when different types of testing can commence. For example, when integration testing could start, when system testing could start and so on. These are governed by objective entry criteria for each phase of testing (to be satisfied by development).

- Service level agreements between development and testing as to how long it would take for the testing team to complete the testing. This will ensure that testing focuses on finding relevant and important defects only.

- Consistent definitions of the various priorities and severities of the defects. This will bring in a shared vision between development and testing teams, on the nature of the defects to focus on.

- Communication mechanisms to the documentation group to ensure that the documentation is kept in sync with the product in terms of known defects, workarounds, and so on.

The purpose of the testing team is to identify the defects in the product and the risks that could be faced by releasing the product with the existing defects. Ultimately, the decision to release or not is a management decision, dictated by market forces and weighing the business impact for the organization and the customers.

15.4 TEST PROCESS

15.4.1 Putting Together and Baselining a Test Plan

A test plan combines all the points discussed above into a single document that acts as an anchor point for the entire testing project. A template of a test plan is provided in Appendix B at the end of this chapter. Appendix A gives a checklist of questions that are useful for arriving at a test plan.

An organization normally arrives at a template that is to be used across the board. Each testing project puts together a test plan based on the template. Should any changes be required in the template, such a change is made only after careful deliberation (and with appropriate approvals). The test plan is reviewed by a designated set of competent people in the organization. It is then approved by a competent authority, who is independent of the project manager directly responsible for testing. After this, the test plan is baselined into the configuration management repository. From then on, the baselined test plan becomes the basis for running the testing project. Any significant changes in the testing project should thereafter be reflected in the test plan, and the changed test plan baselined again in the configuration management repository. In addition, any changes needed to the test plan template are periodically discussed among the different stakeholders, so that the template is kept current and applicable to the testing teams.

15.4.2 Test Case Specification

Using the test plan as the basis, the testing team designs test case specifications, which then become the basis for preparing individual test cases. We have been using the term test cases freely throughout this book. Formally, a test case is nothing but a series of steps executed on a product, using a predefined set of input data, expected to produce a predefined set of outputs, in a given environment. Hence, a test case specification should clearly identify

- The purpose of the test: This lists what feature or part the test is intended for. The test case should follow the naming conventions (as discussed earlier) that are consistent with the feature/module being tested.

- Items being tested, along with their version/release numbers as appropriate.

- Environment that needs to be set up for running the test case: This can include the hardware environment setup, supporting software environment setup (for example, setup of the operating system, database, and so on), setup of the product under test (installation of the right version, configuration, data initialization, and so on).

- Input data to be used for the test case: The choice of input data will be dependent on the test case itself and the technique followed in the test case (for example, equivalence partitioning, boundary value analysis, and so on). The actual values to be used for the various fields should be specified unambiguously (for example, instead of saying “enter a three-digit positive integer,” it is better to say “enter 789”). If automated testing is to be used, these values should be captured in a file and used, rather than having to enter the data manually every time.

- Steps to be followed to execute the test: If automated testing is used, then, these steps are translated to the scripting language of the tool. If the testing is manual, then the steps are detailed instructions that can be used by a tester to execute the test. It is important to ensure that the level of detail in documenting the steps is consistent with the skill and expertise level of the person who will execute the tests.

- The expected results that are considered to be “correct results.” These expected results can be what the user may see in the form of a GUI, report, and so on and can be in the form of updates to persistent storage in a database or in files.

- A step to compare the actual results produced with the expected results: This step should do an “intelligent” comparison of the expected and actual results to highlight any discrepancies. By “intelligent” comparison, we mean that the comparison should take care of “acceptable differences” between the expected results and the actual results, like terminal ID, user ID, system date, and so on.

- Any relationship between this test and other tests: This can be in the form of dependencies among the tests or the possibility of reuse across the tests.
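The “intelligent” comparison step described in this list can be sketched by masking the fields that are allowed to differ before comparing line by line. The patterns below (an ISO-style date, `terminal=...`, `user=...`) are assumed output formats chosen for illustration; a real test would list the acceptable differences for its own product's output.

```python
import re
from itertools import zip_longest
from typing import Optional

# Assumed "acceptable differences": fields that legitimately change from run to
# run. Each match is replaced by a fixed placeholder before comparing.
ACCEPTABLE_DIFFERENCES = [
    (re.compile(r"\b\d{4}-\d{2}-\d{2}\b"), "<DATE>"),    # system date
    (re.compile(r"\bterminal=\S+"), "terminal=<ID>"),    # terminal ID
    (re.compile(r"\buser=\S+"), "user=<ID>"),            # user ID
]


def normalize(text: str) -> str:
    for pattern, placeholder in ACCEPTABLE_DIFFERENCES:
        text = pattern.sub(placeholder, text)
    return text


def compare_results(expected: str, actual: str) -> list[tuple[int, Optional[str], Optional[str]]]:
    """Return (line number, expected line, actual line) for each real discrepancy.

    A missing line on either side is reported as None.
    """
    expected_lines = normalize(expected).splitlines()
    actual_lines = normalize(actual).splitlines()
    return [(number, exp, act)
            for number, (exp, act) in enumerate(zip_longest(expected_lines, actual_lines), start=1)
            if exp != act]
```

With this, a report whose only differences are the date, user, and terminal compares clean, while a changed total is reported with its line number.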

15.4.3 Update of Traceability Matrix

As we have discussed in Chapter 4, Black Box Testing, a requirements traceability matrix ensures that the requirements make it through the subsequent life cycle phases and do not get orphaned mid-course. In particular, the traceability matrix is a tool to validate that every requirement is tested. The traceability matrix is created during the requirements gathering phase itself by filling in the unique identifier for each requirement. Subsequently, as the project proceeds through the design and coding phases, the unique identifiers for design features and the program file names are entered in the traceability matrix. When a test case specification is complete, the row corresponding to the requirement being tested by the test case is updated with the test case specification identifier. This ensures that there is a two-way mapping between requirements and test cases.
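The matrix described above, with one row per requirement filled in phase by phase, can be sketched as a small class. The column names, method names, and identifier formats are hypothetical; the two queries correspond to the two directions of the mapping.

```python
class TraceabilityMatrix:
    """One row per requirement; columns for design features, program files, and tests."""

    COLUMNS = ("design", "code", "tests")

    def __init__(self) -> None:
        self.rows: dict[str, dict[str, set[str]]] = {}

    def add_requirement(self, req_id: str) -> None:
        # Rows are created during requirements gathering, before design or tests exist.
        self.rows.setdefault(req_id, {column: set() for column in self.COLUMNS})

    def link(self, req_id: str, column: str, item_id: str) -> None:
        if column not in self.COLUMNS:
            raise ValueError(f"unknown column: {column}")
        self.rows[req_id][column].add(item_id)

    def untested_requirements(self) -> list[str]:
        """Requirement -> tests direction: rows with no test case specification yet."""
        return sorted(req for req, row in self.rows.items() if not row["tests"])

    def requirements_for_test(self, test_spec_id: str) -> list[str]:
        """Test -> requirements direction: which requirements a specification covers."""
        return sorted(req for req, row in self.rows.items() if test_spec_id in row["tests"])
```

An empty result from `untested_requirements()` is the check that every requirement is tested; `requirements_for_test()` answers the reverse question when a test fails or has to be changed.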

15.4.4 Identifying Possible Candidates for Automation

The test case design forms the basis for writing the test cases. Before writing the test cases, a decision should be taken as to which tests are to be automated and which should be run manually. We have described test automation in detail in Chapter 16. Suffice it to say here that some of the criteria used in deciding which tests to automate include

- Repetitive nature of the test;

- Effort involved in automation;

- Amount of manual intervention required for the test; and

- Cost of automation tool.
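These criteria can be weighed against each other with a simple break-even calculation. This is an assumed cost model, not one given in the text: with one-time automation effort E, tool cost T, manual cost per run m, and automated cost per run a (higher when the test still needs manual intervention), all in the same unit, automation is cheaper after n runs when n × m > E + T + n × a, that is, when n > (E + T) / (m − a). The repetitive nature of the test determines how quickly n is reached.

```python
import math
from typing import Optional


def break_even_runs(automation_effort: float, tool_cost: float,
                    manual_cost_per_run: float, automated_cost_per_run: float) -> Optional[int]:
    """Smallest number of runs after which the automated test is strictly cheaper.

    All arguments must be in the same unit (for example, person-hours, with the
    tool cost converted). Returns None if an automated run saves nothing over a
    manual run, in which case automation never pays for itself.
    """
    saving_per_run = manual_cost_per_run - automated_cost_per_run
    if saving_per_run <= 0:
        return None
    one_time_cost = automation_effort + tool_cost
    # n * saving_per_run > one_time_cost  <=>  n > one_time_cost / saving_per_run
    return math.floor(one_time_cost / saving_per_run) + 1
```

For example, a test that takes 2 hours to run manually, needs no intervention once automated, and costs 40 hours to automate with an existing tool breaks even on run 21: `break_even_runs(40, 0, 2, 0)` returns `21`. A test run daily reaches that quickly; one run once per release may never do so.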

15.4.5 Developing and Baselining Test Cases

Based on the test case specifications and the choice of candidates for automation, test cases have to be developed. The development of test cases entails translating the test specifications to a form from which the tests can be executed. If a test case is a candidate for automation, then, this step requires writing test scripts in the automation language. If the test case is a manual test case, then test case writing maps to writing detailed step-by-step instructions for executing the test and validating the results. In addition, the test case should also capture the documentation for the changes made to the test case since the original development. Hence, the test cases should also have change history documentation, which specifies

- What was the change;

- Why the change was necessitated;

- Who made the change;

- When was the change made;

- A brief description of how the change has been implemented; and

- Other files affected by the change.

All the artifacts of test cases (the test scripts, inputs, expected outputs, and so on) should be stored in the test case database and the SCM repository, as described earlier. Since these artifacts enter the SCM repository, they have to be reviewed and approved by appropriate authorities before being baselined.

15.4.6 Executing Test Cases and Keeping Traceability Matrix Current

The prepared test cases have to be executed at the appropriate times during a project. For example, test cases corresponding to smoke tests may be run on a daily basis. System testing test cases will be run during system testing.

As the test cases are executed during a test cycle, the defect repository is updated with

- Defects from the earlier test cycles that are fixed in the current build; and

- New defects that get uncovered in the current run of the tests.

The defect repository should be the primary vehicle of communication between the test team and the development team. As mentioned earlier, the defect repository contains all the information about defects uncovered by testing (and defects reported by customers). All the stakeholders should be referring to the defect repository for knowing the current status of all the defects. This communication can be augmented by other means like emails, conference calls, and so on.

As discussed in the test plan, a test may have to be suspended during its run because of certain show stopper defects. In this case, the suspended test case should wait till the resumption criteria are satisfied. Likewise, a test should be run only when the entry criteria for the test are satisfied and should be deemed complete only when the exit criteria are satisfied.
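The entry, suspension, resumption, and exit rules above behave like a small state machine. In this sketch each criterion is modelled as a function that returns `True` when the condition currently holds; the state names, class name, and method names are hypothetical.

```python
from enum import Enum
from typing import Callable, Optional, Sequence

Criterion = Callable[[], bool]  # returns True when the condition currently holds


class RunState(Enum):
    NOT_STARTED = "not started"
    RUNNING = "running"
    SUSPENDED = "suspended"
    COMPLETE = "complete"


class ExecutionTracker:
    """Gates one test (or test phase) on its entry, resumption, and exit criteria."""

    def __init__(self, entry: Sequence[Criterion], exit_: Sequence[Criterion],
                 resumption: Sequence[Criterion]) -> None:
        self.entry, self.exit, self.resumption = entry, exit_, resumption
        self.state = RunState.NOT_STARTED
        self.suspension_reason: Optional[str] = None

    def _move(self, required: RunState, criteria: Sequence[Criterion], target: RunState) -> bool:
        # Transition only from the required state and only if every criterion holds.
        if self.state is required and all(check() for check in criteria):
            self.state = target
            return True
        return False

    def start(self) -> bool:
        return self._move(RunState.NOT_STARTED, self.entry, RunState.RUNNING)

    def suspend(self, reason: str) -> bool:
        # Suspension (for example, on a show stopper defect) needs no criterion.
        if self._move(RunState.RUNNING, (), RunState.SUSPENDED):
            self.suspension_reason = reason
            return True
        return False

    def resume(self) -> bool:
        return self._move(RunState.SUSPENDED, self.resumption, RunState.RUNNING)

    def complete(self) -> bool:
        return self._move(RunState.RUNNING, self.exit, RunState.COMPLETE)
```

Every transition returns `False` rather than raising when its criteria are not yet met, so a test harness can poll, for example, `resume()` after each new build until the show stopper fix arrives.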

During test design and execution, the traceability matrix should be kept current. As and when tests get designed and executed successfully, the traceability matrix should be updated. The traceability matrix itself should be subject to configuration management, that is, it should be subject to version control and change control.

15.4.7 Collecting and Analyzing Metrics

When tests are executed, information about test execution gets collected in test logs and other files. The basic measurements from running the tests are then converted to meaningful metrics by the use of appropriate transformations and formulae, as described in Chapter 17, Metrics and Measurements.

15.4.8 Preparing Test Summary Report

At the completion of a test cycle, a test summary report is produced. This report gives insights to the senior management about the fitness of the product for release. We will see details of this report later in the chapter.

15.4.9 Recommending Product Release Criteria

One of the purposes of testing is to decide the fitness of a product for release. As we have seen in Chapter 1, testing can never conclusively prove the absence of defects in a software product. What it provides is evidence of what defects exist in the product, their severity, and their impact. As we discussed earlier, the job of the testing team is to articulate to the senior management and the product release team

- What defects the product has;

- What is the impact/severity of each of the defects; and

- What would be the risks of releasing the product with the existing defects.

While “under-testing” a product could be a risk, “over-testing” a product trying to remove “that last defect” could be as much of a risk!

The senior management can then take a meaningful business decision on whether to release a given version or not.

15.5 TEST REPORTING

Testing requires constant communication between the test team and other teams (like the development team). Test reporting is a means of achieving this communication. There are three types of reports or communication that are required: test incident reports, test cycle reports, and test summary reports (also called test completion reports).

15.5.1.1 Test incident report

A test incident report is a communication that happens through the testing cycle as and when defects are encountered. Earlier, we described the defect repository. A test incident report is nothing but an entry made in the defect repository. Each defect has a unique ID and this is used to identify the incident. The high impact test incidents (defects) are highlighted in the test summary report.

15.5.1.2 Test cycle report

As discussed, test projects take place in units of test cycles. A test cycle entails planning and running certain tests in cycles, each cycle using a different build of the product. As the product progresses through the various cycles, it is expected to stabilize. A test cycle report, at the end of each cycle, gives

- A summary of the activities carried out during that cycle;

- Defects that were uncovered during that cycle, based on their severity and impact;

- Progress from the previous cycle to the current cycle in terms of defects fixed;

- Outstanding defects that are yet to be fixed in this cycle; and

- Any variations observed in effort or schedule (that can be used for future planning).
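The defect-related parts of a test cycle report can be derived by comparing the open-defect lists from the defect repository at the end of two consecutive cycles. This is a sketch under one stated assumption: a defect that leaves the open list is counted as fixed, although in practice a defect can also be closed for other reasons (for example, as not reproducible). The function and key names are hypothetical.

```python
from collections import Counter


def cycle_report(prev_open: set[str], curr_open: set[str],
                 severity: dict[str, str]) -> dict:
    """Compare the open-defect lists at the end of two consecutive test cycles."""
    fixed = prev_open - curr_open   # assumed: leaving the open list means fixed
    new = curr_open - prev_open     # uncovered during this cycle
    return {
        "fixed_since_last_cycle": sorted(fixed),
        "new_in_this_cycle": sorted(new),
        "new_by_severity": dict(Counter(severity[d] for d in new)),
        "outstanding": sorted(curr_open),
    }
```

A product that is stabilizing should show the `new_in_this_cycle` list shrinking from one cycle to the next while `fixed_since_last_cycle` keeps pace.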

15.5.1.3 Test summary report

The final step in a test cycle is to recommend the suitability of a product for release. A report that summarizes the results of a test cycle is the test summary report.

There are two types of test summary reports.

- Phase-wise test summary, which is produced at the end of every phase

- Final test summary report (which has all the details of all testing done by all phases and teams; also called the “release test report”)

A summary report should present

- A summary of the activities carried out during the test cycle or phase

- Variance of the activities carried out from the activities planned. This includes

  - the tests that were planned to be run but could not be run (with reasons);

  - modifications to tests from what was in the original test specifications (in this case, the TCDB should be updated);

  - additional tests that were run (that were not in the original test plan);

  - differences in effort and time taken between what was planned and what was executed; and

  - any other deviations from plan.

- Summary of results should include

  - tests that failed, with any root cause descriptions; and

  - severity of impact of the defects uncovered by the tests.

- Comprehensive assessment and recommendation for release should include

  - “Fit for release” assessment; and

  - Recommendation of release.
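The variance items in a summary report follow from simple set and arithmetic comparisons between the plan and what was executed. A minimal sketch, with hypothetical function and key names; the reasons why planned tests were not run still have to be recorded by the team.

```python
def plan_variance(planned: set[str], executed: set[str],
                  planned_hours: float, actual_hours: float) -> dict:
    """Variance between planned and executed tests and effort for one cycle or phase."""
    return {
        "planned_not_run": sorted(planned - executed),   # reasons must be added by hand
        "additional_tests": sorted(executed - planned),  # run but not in the original plan
        # Positive means effort overran the plan; negative means it came in under.
        "effort_variance_pct": round(100 * (actual_hours - planned_hours) / planned_hours, 1),
    }
```

For a plan of tests T1 to T3 where T2 was skipped, T9 was added, and 120 hours were spent against 100 planned, the result lists `["T2"]` as not run, `["T9"]` as additional, and an effort variance of 20.0 percent.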

15.5.1 Recommending Product Release

Based on the test summary report, an organization can take a decision on whether to release the product or not.

Ideally, an organization would like to release a product with zero defects. However, market pressures may cause the product to be released with defects, provided that the senior management is convinced that there is no major risk of customer dissatisfaction. If the remnant defects are of low priority/impact, or if the conditions under which the defects are manifested are not realistic, an organization may choose to release the product with these defects. Such a decision should be taken by senior management only after consultation with the customer support team, development team, and testing team, so that the overall workload for all parts of the organization can be evaluated.

15.6 BEST PRACTICES

Best practices in testing can be classified into three categories.

- Process related

- People related

- Technology related

15.6.1 Process Related Best Practices

A strong process infrastructure and process culture are required to achieve better predictability and consistency. Process models such as CMMI can provide a framework to build such an infrastructure. Implementing a formal process model that makes business sense can provide consistent training to all the employees and ensure consistency in the way the tasks are executed.

Ensuring people-friendly processes makes for process-friendly people.

Integrating processes with technology in an intelligent manner is key to the success of an organization. A process database (a federation of information about the definition and execution of various processes) can be a valuable addition to the repertoire of tools in an organization. When this process database is integrated with other tools such as the defect repository, SCM tool, and TCDB, the organization can maximize the benefits.

15.6.2 People Related Best Practices

Best practices in testing related to people management have been covered in detail in Chapter 13, People Issues in Testing. We will summarize here those best practices that pertain to test management.

The key to successful management is to ensure that the testing and development teams gel well. This gelling can be enhanced by creating a sense of ownership in the overarching product goals. While individual goals are required for the development and testing teams, it is very important to get to a common understanding of the overall goals that define the success of the product as a whole. The participation of the testing teams in this goal-setting process and their early involvement in the overall product-planning process can help enhance the required gelling. This gelling can be strengthened by keeping the testing teams in the loop on product-release decisions and the criteria used for release.

As discussed earlier in this chapter, job rotation among the support, development, and testing teams can also increase the gelling among them. Such job rotation can help the different teams develop better empathy for, and appreciation of, the challenges faced in each other's roles, and thus result in better teamwork.

15.6.3 Technology Related Best Practices

As we saw earlier, a fully integrated combination of the TCDB, the SCM tool, and the defect repository can help in better automation of testing activities. For example, it can help choose those tests that are likely to uncover defects. Even if a full-scale test automation tool is not available, a tight integration among these three tools can greatly enhance the effectiveness of testing. In Chapter 17, Metrics and Measurements, we shall look at metrics like defects per 100 hours of testing, defect density, defect removal rate, and so on. Calculating these metrics is greatly simplified by a tight integration among these three tools.
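To make the idea concrete, here is a minimal Python sketch of how linked records from the three tools might be queried to pick tests for a change and to compute two of the metrics mentioned above. The record types, field names, and functions (`TestCase`, `Defect`, `select_tests`, and so on) are hypothetical simplifications, not the schema of any real TCDB, SCM tool, or defect repository; defect density is assumed to be measured per thousand lines of code (KLOC).

```python
from dataclasses import dataclass


# Hypothetical, simplified records. Real TCDB, SCM, and defect repository
# schemas are richer and differ from tool to tool.
@dataclass
class TestCase:
    test_id: str
    covered_files: set  # from the TCDB: source files the test exercises


@dataclass
class Defect:
    defect_id: str
    found_by_test: str  # from the defect repository: test that uncovered it


def select_tests(tests, changed_files, defects):
    """Choose tests that exercise files changed in the SCM since the last
    build, ordered by how many defects each test has uncovered before."""
    history = {}
    for d in defects:
        history[d.found_by_test] = history.get(d.found_by_test, 0) + 1
    relevant = [t for t in tests if t.covered_files & changed_files]
    # sorted() is stable, so tests with equal history keep their TCDB order.
    return sorted(relevant, key=lambda t: history.get(t.test_id, 0), reverse=True)


def defects_per_100_hours(defects_found, testing_hours):
    """Defects found per 100 hours of testing."""
    return 100.0 * defects_found / testing_hours


def defect_density(defects_found, kloc):
    """Defects per thousand lines of code (assumed size measure)."""
    return defects_found / kloc
```

Ordering by past defect yield is only one possible heuristic; whichever heuristic is used, it depends on the three repositories sharing common identifiers such as test IDs and file names.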

As we will discuss in Chapter 16, Test Automation, automation tools take the boredom and routine out of testing and make the testing functions more challenging and interesting. Despite the high initial costs that may be incurred, test automation tends to yield significant direct long-term cost savings by reducing the manual labor required to run tests. There are also indirect benefits in terms of lower attrition of test engineers, since test automation not only reduces the routine, but also brings some “programming” into the job, which engineers usually like.
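The trade-off between high initial cost and long-term savings can be expressed as a simple break-even calculation. The sketch below assumes a deliberately simple linear cost model: a one-time automation cost plus a fixed cost per test cycle for both automated and manual runs. Costs such as script maintenance would have to be folded into the per-cycle automated cost, and the function name and figures are illustrative only.

```python
import math


def break_even_cycles(initial_cost, automated_cost_per_cycle, manual_cost_per_cycle):
    """Return the smallest number of test cycles after which cumulative
    automation cost is no higher than cumulative manual cost, or None if
    automation never pays off under this simple linear cost model."""
    saving_per_cycle = manual_cost_per_cycle - automated_cost_per_cycle
    if saving_per_cycle <= 0:
        return None  # an automated cycle costs as much as a manual one, or more
    return math.ceil(initial_cost / saving_per_cycle)
```

For example, with a hypothetical one-time cost of 400 person-hours, 10 person-hours per automated cycle, and 60 person-hours per manual cycle, `break_even_cycles(400, 10, 60)` returns 8: after eight cycles both approaches have cost 480 person-hours, and every later cycle saves 50.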

Twenty-first century tools with nineteenth-century processes can only lead to nineteenth-century productivity!

When test automation tools are used, it is useful to integrate them with the TCDB, the defect repository, and the SCM tool. In fact, most test automation tools provide the features of a TCDB and a defect repository, and integrate with commercial SCM tools.

A final remark on best practices. The three dimensions of best practices cannot be carried out in isolation. A good technology infrastructure should be aptly supported by effective process infrastructure and be executed by competent people. These best practices are inter-dependent, self-supporting, and mutually enhancing. Thus, the organization needs to take a holistic view of these practices and keep a fine balance among the three dimensions.

APPENDIX A: TEST PLANNING CHECKLIST

Scope Related

- Have you identified the features to be tested?

- Have you identified the features not to be tested?

- Have you justified the choice of features not to be tested, and confirmed the impact with product management/senior management?

- Have you identified the new features in the release?

- Have you included in the scope of testing those areas where failures can be catastrophic?

- Have you included for testing those areas that are defect prone or complex to test?

Environment Related

- Do you have a software configuration management tool in place?

- Do you have a defect repository in place?

- Do you have a test case database (TCDB) in place?

- Have you set up institutionalized procedures to update any of these?

- Have you identified the necessary hardware and software to design and run the tests?

- Have you identified the costs and other resource requirements of any test automation tools that may be needed?

Criteria Definition Related

- Have you defined the entry and exit criteria for the various test phases?

- Have you defined the suspension and resumption criteria for the various tests?
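Criteria are most useful when they can be checked mechanically against the current test status. The following Python sketch encodes hypothetical exit and suspension criteria for a test phase. The thresholds (a 95 percent pass rate to exit; suspension above a 20 percent failure rate or on any open show-stopper) and all names are assumptions for illustration, since each test plan defines its own criteria.

```python
from dataclasses import dataclass

# Hypothetical thresholds; a real test plan states its own values.
EXIT_MIN_PASS_RATE = 0.95
SUSPEND_MAX_FAIL_RATE = 0.20


@dataclass
class PhaseStatus:
    planned: int                 # test cases planned for this phase
    executed: int                # test cases run so far
    passed: int                  # executed test cases that passed
    open_critical_defects: int
    open_showstoppers: int


def pass_rate(s):
    return s.passed / s.executed if s.executed else 0.0


def should_suspend(s):
    """Suspend on any open show-stopper or an excessive failure rate."""
    fail_rate = 1.0 - pass_rate(s) if s.executed else 0.0
    return s.open_showstoppers > 0 or fail_rate > SUSPEND_MAX_FAIL_RATE


def exit_criteria_met(s):
    """Exit only when every planned test has run, the pass rate is high
    enough, and no critical defects remain open."""
    return (s.executed == s.planned
            and pass_rate(s) >= EXIT_MIN_PASS_RATE
            and s.open_critical_defects == 0)
```

Resumption criteria can be checked the same way; in the simplest case, testing resumes once `should_suspend` no longer holds.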

Test Case Related

- Have you published naming conventions and other internal standards for designing, writing, and executing test cases?

- Are the test specifications documented adequately according to the above standards?

- Are the test specifications reviewed and approved by appropriate people?

- Are the test specifications baselined into the SCM repository?

- Are the test cases written according to specifications?

- Are the test cases reviewed and approved by appropriate people?

- Are the test cases baselined into the SCM repository?

- Is the traceability matrix updated once the test specifications/test cases are baselined?
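A traceability matrix is easier to keep current once it is stored as data. As a minimal sketch, assuming the matrix maps each test case ID to the requirement IDs it covers (the IDs and function names here are hypothetical), the following Python code reports requirements that no test case traces to and test cases that trace to no known requirement.

```python
def untraced_requirements(requirements, matrix):
    """Requirements not covered by any test case in the matrix."""
    covered = {req for reqs in matrix.values() for req in reqs}
    return sorted(set(requirements) - covered)


def orphan_tests(requirements, matrix):
    """Test cases that do not trace to any known requirement."""
    known = set(requirements)
    return sorted(test for test, reqs in matrix.items() if not (set(reqs) & known))


# Hypothetical example: R2 has no test case, and T3 points at an unknown R9.
requirements = ["R1", "R2", "R3"]
matrix = {"T1": ["R1"], "T2": ["R1", "R3"], "T3": ["R9"]}
print(untraced_requirements(requirements, matrix))  # ['R2']
print(orphan_tests(requirements, matrix))           # ['T3']
```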

Effort Estimation Related

- Have you translated the scope to a size estimate (for example, number of test cases)?

- Have you arrived at an estimate of the effort required to design and construct the tests?

- Have you arrived at an estimate of the effort required for repeated execution of the tests?

- Have the effort estimates been reviewed and approved by appropriate people?
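The translation from size to effort that these questions describe can be sketched as a short calculation. The Python below assumes size is measured in test cases and that the productivity figures (test cases designed per person-day, test cases executed per person-day) come from the organization's historical data; the function name and the numbers used are hypothetical.

```python
def estimate_effort(num_test_cases, design_rate, execution_rate, cycles):
    """Return (design, execution, total) effort in person-days.

    design_rate    -- test cases designed and constructed per person-day
    execution_rate -- test cases executed per person-day
    cycles         -- number of times the suite is expected to be run
    """
    design = num_test_cases / design_rate
    execution = cycles * num_test_cases / execution_rate
    return design, execution, design + execution


# Hypothetical figures: 600 test cases, 10 designed or 40 executed per
# person-day, and three execution cycles.
print(estimate_effort(600, 10, 40, 3))  # (60.0, 45.0, 105.0)
```

Keeping design effort separate from execution effort matters because execution is repeated in every cycle, whereas design is largely a one-time cost.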

Schedule Related

- Have you put together a schedule that utilizes all the resources available?

- Have you accounted for any parallelism constraints?

- Have you factored in the availability of releases from the development team?

- Have you accounted for any show-stopper defects?

- Have you prioritized the tests in the event of any schedule crunch?

- Has the schedule been reviewed and approved by appropriate people?

Risks Related

- Have you identified the possible risks in the testing project?

- Have you quantified the likelihood and impact of these risks?

- Have you identified possible symptoms to catch the risks before they happen?

- Have you identified possible mitigation strategies for the risks?

- Have you taken care not to squeeze all the testing activities towards the end of the development cycle?

- What mechanisms have you used to distribute the testing activities throughout the life cycle (for example, early test design, as in the V model)?

- Have you prepared for the risk of idle time because of tests being suspended?
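Quantifying likelihood and impact makes it possible to rank risks by risk exposure, the product of the probability of a risk happening and its impact if it does. The Python sketch below assumes probabilities between 0 and 1 and impact on an arbitrary 1 to 10 scale; the risk names and figures are hypothetical.

```python
def rank_risks(risks):
    """risks: dict mapping risk name -> (probability, impact).

    Returns (name, exposure) pairs, highest exposure first, where
    exposure = probability * impact.
    """
    exposures = {name: p * impact for name, (p, impact) in risks.items()}
    return sorted(exposures.items(), key=lambda kv: kv[1], reverse=True)


# Hypothetical risks for a testing project.
risks = {
    "builds arrive late from development": (0.6, 8),
    "test automation tool licence delayed": (0.2, 5),
    "key test engineer leaves mid-release": (0.3, 9),
}
for name, exposure in rank_risks(risks):
    print(f"{exposure:4.1f}  {name}")
```

The risks at the top of this ranking are the ones to address first with the symptom monitoring and mitigation strategies this checklist asks about.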

People Related

- Have you identified the number and skill levels of people required?

- Have you identified the gaps and prepared for training and skill upgradation?

Execution Related

- Are you executing the tests as per plan? If there is any deviation, have you updated the plan?

- Did the test execution necessitate changing the design of any test cases? If so, is the TCDB kept current?

- Have you logged any defects that come up during testing in the defect repository?

- Have you updated the defect repository for any defects that are fixed in the current test cycle?

- Have you kept the traceability matrix current with the changes?

Completion Related

- Have you prepared a test summary report?

- Have you clearly documented the outstanding defects, along with their severity and impact?

- Have you put forth your recommendations for product release?

APPENDIX B: TEST PLAN TEMPLATE

1. Introduction
   1.1 Scope
       What features are to be tested and what features will not be tested; what combinations of environment are to be tested and what not.

2. References
   (Gives references and links to documents such as requirement specifications, design specifications, program specifications, project plan, project estimates, test estimates, process documents, internal standards, external standards, and so on.)

3. Test Methodology and Strategy/Approach

4. Test Criteria
   4.1 Entry Criteria
   4.2 Exit Criteria
   4.3 Suspension Criteria
   4.4 Resumption Criteria

5. Assumptions, Dependencies, and Risks
   5.1 Assumptions
   5.2 Dependencies
   5.3 Risks and Risk Management Plans

6. Estimations
   6.1 Size Estimate
   6.2 Effort Estimate
   6.3 Schedule Estimate

7. Test Deliverables and Milestones

8. Responsibilities

9. Resource Requirements
   9.1 Hardware Resources
   9.2 Software Resources
   9.3 People Resources (number of people, skills, duration, etc.)
   9.4 Other Resources

10. Training Requirements
   10.1 Details of Training Required
   10.2 Possible Attendees
   10.3 Any Constraints

11. Defect Logging and Tracking Process

12. Metrics Plan

13. Product Release Criteria

REFERENCES

[PMI-2004] is a comprehensive guide that covers all aspects of project management. [RAME-2002] discusses the activities involved in managing a globally distributed project, including topics such as risk management and configuration management. [BURN-2004] provides inputs on test planning. [FAIR-97] discusses methods of work breakdown structure applicable to projects in general. [HUMP-86] is the initial work that introduced the concept of process models for software development, which eventually led to the Capability Maturity Model (CMM). [CMMI-2002] covers Capability Maturity Model Integration (CMMI), a popular process model. [ALBE-79] and [ALBE-83] discuss function points, a method of size estimation for applications. [IEEE-829] presents the various templates and documentation required for testing, such as test plans, test case specifications, and test incident reports. [IEEE-1059] and [IEEE-1012] also contain useful information on these topics.

PROBLEMS AND EXERCISES

- Someone made the remark, “Testing heavily depends on development, so I cannot plan a testing project.” How will you argue against this statement?

- Consider a new version of an operating system. While deciding the scope of what needs to be tested in that version, which of these features would be essential to test?

  - The OS introduces support for a new network protocol which is becoming a de facto standard

  - The OS has been modified to work in an embedded system on a manned flight to Mars

  - The OS support for long file names has been stable and working for the past five versions and has not undergone any major change

  - The caching part of the file system of the OS has often given performance problems, and every slight change seems to upset the apple cart

  - The OS features are expected to work on different chips, and in a network, different machines may be running on different chips

- Give some typical entry, exit, suspension, and resumption criteria for unit testing. Considering the classification of unit testing into white box and black box testing, should there be separate criteria for the white box and black box parts of unit testing?

- When we discussed identifying responsibilities and staffing needs, we were looking at the requirements of the testing team. What kind of SLAs or responsibilities of other involved teams (e.g., development teams) should a test plan document? How would one enforce or track such responsibilities?

- For estimating the size of a testing project, give the pros and cons of using the lines of code of the product as a basis. Would this apply only to white box testing or also to black box testing?

- What would be the base data required for estimating the size and effort of a testing project that involved automation?

- Assuming you have historical productivity data for various activities like test planning, design, execution, and defect fixing, what factors would you consider before adapting the historical figures to make them more directly applicable to your project?

- In the text, risk exposure was quantified as the product of the probability of the risk happening and the impact of the risk if it happens. How would you estimate each of these two parameters? When quantitative data is not available for them, how would you go about quantifying risk?

- We discussed the various infrastructure components (TCDB, defect repository, configuration management repository). How would you make these tools operate in unison to effectively:

  - Choose tests for regression testing for a given release

  - Predict the time required for releasing a product, after fixing the identified defects

  - Maintain test cases based on their effectiveness in detecting defects

- Adapt the test plan template given in this chapter to suit the needs of your organization

- Can the defect repository be made accessible to customers? If so, what security aspects would you have to take into account?

- What should be the contents of a test incident report, keeping in mind the goal that the defects found in a test should eventually be fixed?

- What factors would an organization take into account to decide the fitness of a product for release?