Chapter 16

Software Test Automation


16.1 WHAT IS TEST AUTOMATION?

In the previous chapters we have seen several types of testing and how test cases can be developed for those testing types. When these test cases are run and checked, the coverage and quality of testing (and the quality of the product) improve. However, running all those test cases requires additional time. One way to overcome that challenge is to automate the execution of the test cases that are repetitive in nature.

Developing software to test the software is called test automation. Test automation can help address several problems.

Automation saves time as software can execute test cases faster than humans do. This can help in running the tests overnight or unattended. The time thus saved can be used effectively by test engineers to

- Develop additional test cases to achieve better coverage;

- Perform some esoteric or specialized tests like ad hoc testing; or

- Perform some extra manual testing.

Moreover, because automated tests can be run overnight, the elapsed time for testing is reduced, which enables the product to be released more frequently.

Test automation can free the test engineers from mundane tasks and make them focus on more creative tasks. We read about ad hoc testing in Chapter 10. This testing requires intuition and creativity to test the product from perspectives that planned test cases may have missed. If there are too many planned test cases that need to be run manually and adequate automation does not exist, the test team may spend most of its time in test execution. This leaves no scope for intuition and creativity in the test team, and it also creates fatigue and boredom. Automating the more mundane tasks gives test engineers time for creative and challenging tasks. As we saw in Chapter 13, People Issues in Testing, motivating test engineers is a significant challenge, and automation can go a long way in helping this cause.

Automated tests can be more reliable. When an engineer executes a particular test case many times manually, there is a chance of human error or bias that causes some defects to be missed. As with other machine-oriented activities, automation can be expected to produce consistent results every time, and it eliminates the factors of boredom and fatigue.

Automation helps in immediate testing. Automation reduces the time gap between development and testing, as scripts can be executed as soon as the product build is ready. Automation can be designed so that the tests are kicked off automatically after a successful build. Automated testing need not wait for the availability of test engineers.

Automation can protect an organization against attrition of test engineers. Automation can also be used as a knowledge transfer tool to train test engineers on the product, as it contains a repository of different tests for the product. With manual testing, any specialized knowledge or “undocumented ways” of running tests are lost when the test engineer leaves. Automating tests, on the other hand, makes test execution less person-dependent.

Test automation opens up opportunities for better utilization of global resources. Manual testing requires the presence of test engineers, but automated tests can be run round the clock, twenty-four hours a day and seven days a week. This also enables teams in different parts of the world, in different time zones, to monitor and control the tests, thus providing round-the-clock coverage.

Certain types of testing cannot be executed without automation. Test cases for certain types of testing, such as reliability testing, stress testing, and load and performance testing, cannot be executed without automation. For example, if we want to study the behavior of a system with thousands of users logged in, there is no way to perform these tests without automated tools.

Automation uses software to test the software and enables human effort to be spent on creative testing.

Automation means end-to-end, not test execution alone. Automation does not end with developing programs for the test cases. In fact, that is where it starts. Automation should consider all activities, such as picking up the right product build, choosing the right configuration, performing installation, generating the right test data, running the tests, analyzing the results, and filing the defects in the defect repository. When talking about automation, this larger picture should always be kept in mind.
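To make the end-to-end idea concrete, here is a minimal Python sketch that treats each activity as a stage in a pipeline sharing one context object. All stage names, the `RunContext` fields, and the stubbed product (`fake_product_login`, deliberately buggy so that one defect gets filed) are hypothetical; a real framework would call build systems, installers, and a defect database at each step.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class RunContext:
    """State passed from stage to stage during one automated test cycle."""
    build_id: str
    config: dict = field(default_factory=dict)
    test_data: list = field(default_factory=list)  # (user, expected_accept) pairs
    results: dict = field(default_factory=dict)    # test name -> "PASS" / "FAIL"
    defects: list = field(default_factory=list)    # defects filed this cycle
    log: list = field(default_factory=list)        # completed stages, in order


def fake_product_login(user: str) -> bool:
    """Stand-in for the product; deliberately buggy: it accepts any user name."""
    return True


def pick_build(ctx: RunContext) -> None:
    ctx.log.append(f"picked build {ctx.build_id}")


def choose_config(ctx: RunContext) -> None:
    ctx.config.setdefault("platform", "linux")     # hypothetical default
    ctx.log.append("configured")


def install(ctx: RunContext) -> None:
    ctx.log.append("installed")                    # placeholder for a real installer


def generate_test_data(ctx: RunContext) -> None:
    ctx.test_data = [("alice", True), ("", False)]  # an empty user should be rejected
    ctx.log.append("test data generated")


def run_tests(ctx: RunContext) -> None:
    for user, expected in ctx.test_data:
        actual = fake_product_login(user)
        ctx.results[f"login[{user or 'empty'}]"] = "PASS" if actual == expected else "FAIL"
    ctx.log.append("tests run")


def analyze_and_file_defects(ctx: RunContext) -> None:
    for name, verdict in ctx.results.items():
        if verdict == "FAIL":
            ctx.defects.append(f"{ctx.build_id}: {name} failed")
    ctx.log.append("defects filed")


PIPELINE: list[Callable[[RunContext], None]] = [
    pick_build, choose_config, install, generate_test_data, run_tests,
    analyze_and_file_defects,
]


def run_cycle(build_id: str) -> RunContext:
    """Run every stage in order for one build."""
    ctx = RunContext(build_id)
    for stage in PIPELINE:
        stage(ctx)
    return ctx
```

Calling `run_cycle("b42")` walks the whole chain and ends with one defect filed for the empty user name, which is the "larger picture" rather than test execution alone.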

Automation should include scripts that produce test data covering as many permutations and combinations of inputs as possible, together with the expected output for result comparison. Such scripts are called test data generators. It is not always easy to predict the output for all input conditions. Even if all input conditions and their expected results are known, the errors the software produces and the way the product functions after such an error may not be predictable. The automation script should therefore be able to map error patterns dynamically to conclude the result. Error pattern mapping is done not only to conclude the result of a test, but also to point out the root cause. The definition of automation should encompass this aspect.
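A test data generator and an error pattern map can be sketched as follows. This assumes a log in operation with made-up input domains and validation rules (user names of 1 to 64 characters), and a hand-written table of regular expressions that map error messages to probable root causes; neither comes from any particular product.

```python
import itertools
import re

# Hypothetical input domains for a log in operation.
USER_NAMES = ["alice", "", "a" * 65]        # valid, empty, too long
PASSWORDS = ["correct-horse", "wrong"]


def expected_outcome(user: str, password: str) -> str:
    """Oracle for one input combination (assumed validation rules)."""
    if not 1 <= len(user) <= 64:
        return "INVALID_USER"
    return "OK" if password == "correct-horse" else "BAD_PASSWORD"


def generate_test_data():
    """Yield every combination of inputs together with its expected output."""
    for user, password in itertools.product(USER_NAMES, PASSWORDS):
        yield (user, password), expected_outcome(user, password)


# Error pattern map: product error text -> probable root cause (assumed patterns).
ERROR_PATTERNS = [
    (re.compile(r"connection (refused|reset)", re.IGNORECASE), "server unavailable"),
    (re.compile(r"timed? ?out", re.IGNORECASE), "slow or hung server"),
    (re.compile(r"segmentation fault|core dumped", re.IGNORECASE), "product crash"),
]


def map_error(output: str) -> str:
    """Return the first matching root cause, or 'unknown' for a human to analyze."""
    for pattern, cause in ERROR_PATTERNS:
        if pattern.search(output):
            return cause
    return "unknown"
```

Adding a value to either input list automatically multiplies the generated combinations, which is where a generator earns its keep over hand-written cases.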

Although automation should try to cover the entire spectrum of testing activities, it is important for it to relinquish control to test engineers in situations where the next set of actions is not known or cannot be determined automatically (for example, when test scripts cannot ascertain whether a test passed or failed). If automated scripts determine results wrongly, such conclusions can delay the product release. If automated scripts are found to have even one or two such problems, regardless of their overall quality, they will lose credibility and may not get used during later cycles of the release, and the team will fall back on manual testing. This may lead the organization to believe that automation is the cause of all problems, which in turn will result in automation being completely ignored. Hence automation should not only try to cover the entire range of activities but also allow human intervention where required.
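One way to let automation hand control back is to give every test a third possible verdict besides pass and fail. In this sketch (the `Verdict` names and the rules in `judge` are assumptions), the script decides only when it has both an expected result and an actual result; everything else goes to a review queue for a test engineer instead of being guessed.

```python
from enum import Enum


class Verdict(Enum):
    PASS = "pass"
    FAIL = "fail"
    NEEDS_REVIEW = "needs review"   # script cannot decide; hand back to a test engineer


def judge(actual, expected) -> Verdict:
    """Decide pass/fail only when both an expected and an actual result exist."""
    if expected is None:            # no pre-associated expected result
        return Verdict.NEEDS_REVIEW
    if actual is None:              # test produced no result (hung or aborted)
        return Verdict.NEEDS_REVIEW
    return Verdict.PASS if actual == expected else Verdict.FAIL


def triage(results: dict):
    """Split {name: (actual, expected)} into automatic verdicts and a review queue."""
    decided, review_queue = {}, []
    for name, (actual, expected) in results.items():
        verdict = judge(actual, expected)
        if verdict is Verdict.NEEDS_REVIEW:
            review_queue.append(name)
        else:
            decided[name] = verdict
    return decided, review_queue
```

Keeping undecidable cases out of the pass/fail counts protects the credibility of the automated verdicts that are reported.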

Once tests are automated keeping all these requirements in mind, it becomes easier to transfer or rotate the ownership for running the tests. As the objective of testing is to catch defects early, the automated tests can be given to developers so that they can execute them as part of unit testing. The automated tests can also be given to a CM engineer (build engineer) so that they are executed soon after the build is ready. As we discussed in Chapter 8, on regression tests, many companies have a practice of daily build and smoke test, to ensure that the build is ready for further testing and that existing functionality is not broken by changes.

16.2 TERMS USED IN AUTOMATION

We have been using the term “test case” freely in this book. As formally defined in Chapter 15, a test case is a set of sequential steps to execute a test operating on a set of predefined inputs to produce certain expected outputs. There are two types of test cases: automated and manual. As the names suggest, a manual test case is executed manually while an automated test case is executed using automation. Test cases in this chapter refer to automated test cases, unless otherwise specified. A test case should always have an associated expected result.

A test case (manual or automated) can be represented in many forms. It can be documented as a set of simple steps, or it could be an assertion statement or a set of assertions. An example of an assertion is “Opening a file that is already open should fail.” An assertion statement includes the expected result in the definition itself, as in the above example, which makes it easy for the automation engineer to write the code for the steps and to decide whether the test case passed.
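An assertion such as the one above maps almost directly onto code, because the expected result is part of the statement. The `FileManager` class and `FileAlreadyOpenError` below are hypothetical stand-ins for the product under test; the point is only that the test body is the assertion plus the steps needed to reach it.

```python
class FileAlreadyOpenError(Exception):
    """Raised by the (hypothetical) product when a file is opened twice."""


class FileManager:
    """Toy stand-in for a product component that tracks open files."""

    def __init__(self) -> None:
        self._open = set()

    def open(self, path: str) -> None:
        if path in self._open:
            raise FileAlreadyOpenError(path)
        self._open.add(path)

    def close(self, path: str) -> None:
        self._open.discard(path)


def test_open_already_open_file_fails() -> bool:
    """Assertion: opening a file that is already open should fail."""
    fm = FileManager()
    fm.open("report.txt")
    try:
        fm.open("report.txt")
    except FileAlreadyOpenError:
        return True      # expected result: the second open is rejected
    return False         # second open succeeded, so the assertion is violated
```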

As we have seen earlier, testing involves several phases and several types of testing. Some test cases are repeated several times during a product release because the product is built several times. Not only are test cases repetitive; some operations that are described as steps in the test cases are repetitive too. Basic operations such as “log in to the system” are performed in a large number of test cases for a product. Even though these test cases (and the operations within them) are repeated, the intent or area of focus may change each time. This presents an opportunity for the automation code to be reused for different purposes and scenarios.

Table 16.1 lists some test cases showing how the log in operation can be tested for different types of testing.

Table 16.1 Same test case being used for different types of testing.

| S.No. | Test case | Type of testing |
|---|---|---|
| 1 | Check whether log in works | Functionality |
| 2 | Repeat log in operation in a loop for 48 hours | Reliability |
| 3 | Perform log in from 10,000 clients | Load/stress testing |
| 4 | Measure time taken for log in operations in different conditions | Performance |
| 5 | Run log in operation on a machine set up for the Japanese language | Internationalization |

Table 16.1 can be further extended for other types of testing. From the above example, it is obvious that certain operations of a product (such as log in) get repeated when we try to test the product for different types of testing. If this is kept in mind, the code written for automating the log in operation can be reused in many places, thereby saving effort and time.

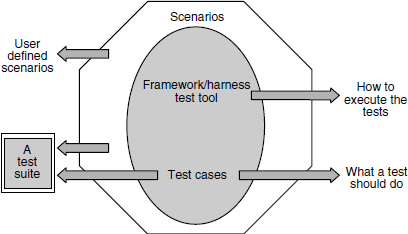

If we closely go through the above table, we can observe two important dimensions: “what operations have to be tested” and “how the operations have to be tested.” The “how” portion of a test case is called a scenario (for example, repeating the operation in a loop for 48 hours, or running it from 10,000 clients). “What an operation has to do” is a product-specific feature, and “how it is to be run” is a framework-specific requirement. The framework- or test tool-specific requirements are not just for particular test cases or products; they are generic requirements for all products that are being tested in an organization.

This approach to automation is based on the fact that product operations (such as log in) are repetitive in nature; by automating the basic operations and leaving the different scenarios (how to test) to the framework or test tool, great progress can be made. This ensures code reuse in automation and draws a clear boundary between “what a test suite has to do” and “what a framework or a test tool should complement.” When scenarios are combined with basic operations of the product, they become automated test cases.

When a set of test cases is combined and associated with a set of scenarios, it is called a “test suite.” A test suite is nothing but a set of automated test cases and the scenarios that are associated with them.
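The separation of “what” (product operations) from “how” (framework scenarios) can be sketched in Python as below. `login` is a hypothetical stand-in for the product operation, and the scenario functions are illustrative; the reliability and load scenarios use much smaller numbers than Table 16.1 so that the example finishes quickly. Pairing an operation with a scenario gives a test case, and the `SUITE` dictionary of such pairings plays the role of a test suite.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable

Operation = Callable[[], bool]


# "What" to test: a product operation (hypothetical stand-in for log in).
def login() -> bool:
    return True


# "How" to test: generic scenarios owned by the framework, usable with any operation.
def once(op: Operation) -> dict:
    return {"passed": op()}


def repeat(times: int):
    def scenario(op: Operation) -> dict:
        outcomes = [op() for _ in range(times)]
        return {"passed": all(outcomes), "runs": times}
    return scenario


def parallel_clients(clients: int):
    def scenario(op: Operation) -> dict:
        with ThreadPoolExecutor(max_workers=min(clients, 32)) as pool:
            outcomes = list(pool.map(lambda _: op(), range(clients)))
        return {"passed": all(outcomes), "clients": clients}
    return scenario


def timed(op: Operation) -> dict:
    start = time.perf_counter()
    passed = op()
    return {"passed": passed, "seconds": time.perf_counter() - start}


# Test case = operation + scenario; test suite = a set of such pairings.
SUITE = {
    "functionality": (login, once),
    "reliability": (login, repeat(1000)),     # Table 16.1 uses 48 hours
    "load": (login, parallel_clients(100)),   # Table 16.1 uses 10,000 clients
    "performance": (login, timed),
}


def run_suite(suite: dict) -> dict:
    return {name: scenario(op) for name, (op, scenario) in suite.items()}
```

Automating a second operation (say, a hypothetical `logout`) would need only a new function; every scenario above could be reused with it unchanged.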

Figure 16.1 depicts the terms discussed in the above paragraphs. The coloured figure is available on Illustrations.

16.3 SKILLS NEEDED FOR AUTOMATION

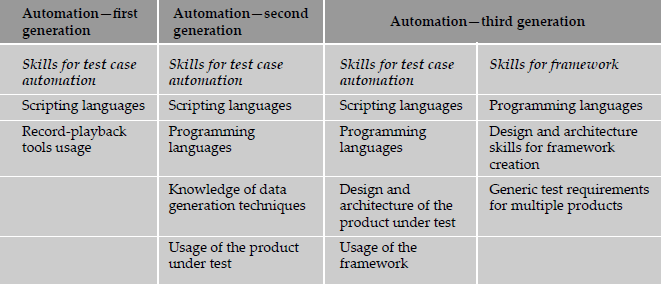

There are different “generations of automation.” The skills required for automation depend on which generation of automation the company is in or desires to be in the near future.

The automation of testing is broadly classified into three generations.

First generation—Record and Playback. Record and playback avoids the repetitive nature of executing tests. Almost all the test tools available in the market have the record and playback feature. A test engineer records the sequence of actions as keystrokes or mouse clicks, and those recorded scripts are played back later, in the same order as they were recorded. Since a recorded script can be played back multiple times, it reduces the tedium of the testing function. Besides avoiding repetitive work, it is also simple to record and save the script. But this generation of tool has several disadvantages. The scripts may contain hard-coded values, making it difficult to perform general types of tests. For example, when a report has to use the current date and time, it becomes difficult to use a recorded script. Handling error conditions is left to the testers, and thus the played-back scripts may require a lot of manual intervention to detect and correct error conditions. When the application changes, all the scripts have to be rerecorded, which increases test maintenance costs. Thus, when there is frequent change or when there is not much opportunity to reuse or rerun the tests, the record and playback generation of test automation tools may not be very effective.

Second generation—Data-driven. This method helps in developing test scripts that generate the set of input conditions and the corresponding expected output. This enables the tests to be repeated for different input and output conditions. Developing such scripts can take as much time and effort as developing the product itself.

However, changes to the application do not require the automated test cases to be changed as long as the input conditions and expected output are still valid. This generation of automation focuses on input and output conditions using the black box testing approach.
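A data-driven test keeps the input conditions and expected outputs in data, separate from the test logic, so that new rows add new tests without code changes. The sketch below assumes a hypothetical `discounted_price` function under test and an inline CSV table standing in for an external data file.

```python
import csv
import io


def discounted_price(amount: float, code: str) -> float:
    """Hypothetical function under test."""
    rates = {"NONE": 0.0, "SAVE10": 0.10, "HALF": 0.50}
    return round(amount * (1 - rates[code]), 2)


# Inputs and expected outputs live in data (inline here, a file in practice).
TEST_DATA_CSV = """amount,code,expected
100.00,NONE,100.00
100.00,SAVE10,90.00
80.00,HALF,40.00
19.99,SAVE10,17.99
"""


def load_rows(text: str):
    """Yield (amount, code, expected) tuples parsed from the CSV text."""
    for row in csv.DictReader(io.StringIO(text)):
        yield float(row["amount"]), row["code"], float(row["expected"])


def run_data_driven(func, rows) -> list:
    """Run func on every row; return (inputs, expected, actual) for each mismatch."""
    failures = []
    for *inputs, expected in rows:
        actual = func(*inputs)
        if actual != expected:
            failures.append((inputs, expected, actual))
    return failures
```

An empty list from `run_data_driven(discounted_price, load_rows(TEST_DATA_CSV))` means every row passed; a code change in the application leaves this test untouched as long as the rows remain valid.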

Automation bridges the gap in skill requirements between testing and development; at times it demands more skills from test teams.

Third generation—Action-driven. This technique enables a layman to create automated tests. No input and expected output conditions are required for running the tests. All actions that appear on the application are automatically tested, based on a generic set of controls defined for automation. The set of actions is represented as objects, and those objects are reused. The user needs to specify only the operations (such as log in, download, and so on), and everything else that is needed for those actions is automatically generated. The input and output conditions are automatically generated and used. The scenarios for test execution can be changed dynamically using the test framework that is available in this approach. Hence, automation in the third generation involves two major aspects: “test case automation” and “framework design.” We will see the details of framework design in the next section.
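Action-driven automation is close to what is often called keyword-driven testing. The sketch below, with a hypothetical `FakeApp` and made-up action names, registers each action once as a reusable object; the test itself is only a list of operation names, and each action supplies its own default inputs and checks. A real third-generation tool would also generate inputs and discover actions from the application, which this sketch does not attempt.

```python
from typing import Callable


class FakeApp:
    """Hypothetical application under test, holding session state."""

    def __init__(self) -> None:
        self.user = None
        self.downloads = []


ACTIONS: dict[str, Callable[[FakeApp], None]] = {}


def action(name: str):
    """Register a reusable action object under an operation name."""
    def register(func):
        ACTIONS[name] = func
        return func
    return register


@action("log in")
def log_in(app: FakeApp) -> None:
    app.user = "tester"                      # default input supplied by the action


@action("download")
def download(app: FakeApp) -> None:
    if app.user is None:                     # built-in check, not written by the tester
        raise RuntimeError("download attempted without logging in")
    app.downloads.append("report.pdf")


@action("log out")
def log_out(app: FakeApp) -> None:
    app.user = None


def run_actions(steps: list[str]) -> FakeApp:
    """The tester supplies only operation names; the actions do the rest."""
    app = FakeApp()
    for step in steps:
        ACTIONS[step](app)
    return app
```

For example, `run_actions(["log in", "download", "log out"])` is a complete test written without any input values or expected outputs.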

From the above approaches or generations of automation, it is clear that different levels of skills are needed based on the generation of automation selected. The skills needed for automation are classified into four levels across the three generations, because the third generation introduces two separate skill levels, one for developing test cases and one for designing the framework, as shown in Table 16.2.

16.4 WHAT TO AUTOMATE, SCOPE OF AUTOMATION

The first phase of product development is requirements gathering; it is no different for test automation, as the output of automation (the automated tests) can also be considered a product. The automation requirements define what needs to be automated, taking various aspects into account. The specific requirements can vary from product to product, from situation to situation, and from time to time. We present below some generic tips for identifying the scope for automation.

16.4.1 Identifying the Types of Testing Amenable to Automation

Certain types of tests naturally lend themselves to automation.

Stress, reliability, scalability, and performance testing. These types of testing require the test cases to be run from a large number of different machines for an extended period of time, such as 24 hours, 48 hours, and so on. It is just not possible to have hundreds of users trying out the product day in and day out; they may not be willing to perform the repetitive tasks, nor will it be possible to find that many people with the required skill sets. Test cases belonging to these testing types become the first candidates for automation.

Regression tests. Regression tests are repetitive in nature. These test cases are executed multiple times during the product development phases. Given the repetitive nature of the test cases, automation will save significant time and effort in the long run. Furthermore, as discussed earlier in this chapter, the time thus gained can be effectively utilized for ad hoc testing and other more creative avenues.

Functional tests. These kinds of tests may require a complex setup and thus specialized skills, which may not be available on an ongoing basis. Automating these once, using the expert skill sets, enables less-skilled people to run these tests on an ongoing basis.

In the product development scenario, a lot of testing is repetitive, as a good product can have a long lifetime once periodic enhancements and maintenance releases are taken into account. This provides an opportunity to automate test cases and execute them multiple times during release cycles. As a rule of thumb, if test cases need to be executed at least ten times in the near future (say, one year), and if the effort for automating them does not exceed ten times the effort of executing them, they become candidates for automation. Of course, this is just a rule of thumb, and the exact choice of what to automate will be determined by various factors such as the availability of skill sets, the time available for designing automated test scripts vis-à-vis release pressures, the cost of the tool, the availability of support, and so on.
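The rule of thumb can be written as a small function. The parameter names are illustrative, effort can be measured in any consistent unit (hours in the example), and the break-even helper ignores the cost of maintaining automated tests, so it is optimistic.

```python
import math


def is_automation_candidate(runs_next_year: int,
                            automation_effort: float,
                            manual_run_effort: float) -> bool:
    """Rule of thumb: at least ten runs expected in about a year, and automating
    costs no more than ten executions of the test case."""
    return runs_next_year >= 10 and automation_effort <= 10 * manual_run_effort


def break_even_runs(automation_effort: float, manual_run_effort: float) -> int:
    """Runs needed before automation pays for itself (ignores maintenance cost)."""
    return math.ceil(automation_effort / manual_run_effort)
```

For example, a test that takes 2 hours to run manually, costs 16 hours to automate, and is expected to run 12 times in the next year qualifies, and automation pays for itself after 8 runs.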

In short, when arriving at the scope of automation, we should choose to automate those functions (based on the above guidelines) that can amortize the investment in automation with minimum time delay.

16.4.2 Automating Areas Less Prone to Change

In a product scenario, changes in requirements are quite common. In such a situation, what to automate is easy to answer: automation should consider those areas where requirements go through little or no change. Changes in requirements normally affect scenarios and new features, not the basic functionality of the product. As explained in the car manufacturing example in the extreme testing section of Chapter 10, the basic components of a car, such as the steering, brakes, and accelerator, have not changed over the years. While automating, such basic functionality of the product has to be considered first, so that it can be used for the “regression test bed” and “daily builds and smoke test.”

User interfaces normally go through significant changes during a project. To avoid rework on automated test cases, proper analysis has to be done to find out which areas of the user interface will change, and only those areas that will go through relatively little change should be automated. The non-user-interface portions of the product can be automated first. While automating functions that involve both user-interface and non-user-interface (“backend”) elements, clear demarcation and “pluggability” have to be provided so that they can be executed together as well as independently. This enables the non-GUI portions of the automation to be reused even when the GUI goes through changes.

16.4.3 Automate Tests that Pertain to Standards

One of the tests that products may have to undergo is compliance with standards. For example, a product providing a JDBC interface should satisfy the standard JDBC tests. These tests undergo relatively little change. Even if they do change, they provide backward compatibility, so the automated scripts will continue to run.

Automating for standards provides a dual advantage. Test suites developed for standards are not only used for product testing but can also be sold as test tools in the market. A large number of tools available in the commercial market were originally developed for in-house use, so automating for standards creates opportunities to sell such suites as commercial tools.

In case there are tools already available in the market for checking such standards, then there is no point in reinventing the wheel and rebuilding these tests. Rather, focus should be towards other areas for which tools are not available and in providing interfaces to other tools.

Testing for standards has certain legal and organizational requirements. To certify the software or hardware, a test suite is developed and handed over to different companies. The supporting organization executes the certification suites every time before releasing the software or hardware. This is called “certification testing” and requires perfectly compliant results every time the tests are executed. The companies that do certification testing may not know much about the product and the standards, yet they do the majority of this testing. Hence, automation in this area will go a long way, and it is definitely an area of focus for automation. For example, some companies develop test suites for their software products, and hardware manufacturers execute them before releasing a new hardware platform. This enables customers to ascertain that the new hardware being released is compatible with popular software products in the market.

16.4.4 Management Aspects in Automation

Prior to starting automation, adequate effort has to be spent to obtain management commitment. Automation generally involves a large amount of effort and is not a one-time activity. The automated test cases need to be maintained until the product becomes obsolete. Since developing and maintaining automation involves significant effort, obtaining management commitment is an important activity. Because automation involves effort over an extended period of time, management approval is often given in phases, part by part. Hence, automation effort should focus on those areas for which management commitment already exists.

What to automate takes into account the technical and management aspects, as well as the long-term vision.

Return on investment is another aspect to be considered seriously. Effort estimates for automation should give management a clear indication of the expected return on investment. When starting automation, the effort should first focus on areas where many permutations and combinations of inputs exist, as this enables automation to cover more test cases with less code. Second, test cases that can be automated in less time should be considered first. Some test cases do not have pre-associated expected results, and such test cases take longer to automate; they should be considered for the later phases of automation. This satisfies those among the management who look for quick returns from automation.

In line with Stephen Covey's principle of “First Things First,” [COVE-89] it is important to automate the critical and basic functionalities of a product first. To achieve this, all test cases need to be prioritized as high, medium, and low, based on customer expectations, and automation should start from high priority and then cover medium and low-priority requirements.
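The ordering described in the last two paragraphs (priority first, then quick wins before test cases without pre-associated expected results) can be expressed as a sort key. The `Candidate` fields and the effort estimates are assumptions for illustration, not a prescribed scheme.

```python
from dataclasses import dataclass

PRIORITY_RANK = {"high": 0, "medium": 1, "low": 2}


@dataclass
class Candidate:
    name: str
    priority: str                    # "high" | "medium" | "low", from customer expectations
    effort_days: float               # estimated automation effort
    has_expected_result: bool = True


def automation_order(candidates: list) -> list:
    """High priority first; within a priority, test cases with known expected
    results come before those without, and cheaper ones come first."""
    return sorted(candidates, key=lambda c: (PRIORITY_RANK[c.priority],
                                             not c.has_expected_result,
                                             c.effort_days))
```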

16.5 DESIGN AND ARCHITECTURE FOR AUTOMATION

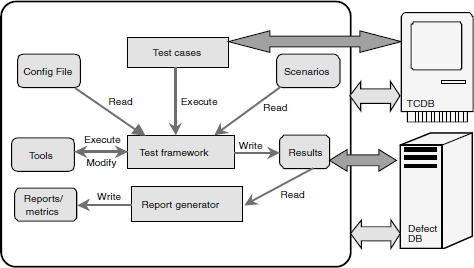

Design and architecture is an important aspect of automation. As in product development, the design has to represent all requirements in modules and in the interactions between modules. As we have seen in Chapter 5, Integration Testing, both internal interfaces and external interfaces have to be captured by design and architecture. In Figure 16.2, the thin arrows represent the internal interfaces and the direction of flow and thick arrows show the external interfaces. All the modules, their purpose, and interactions between them are described in the subsequent sections. The coloured figure is available on Illustrations.

Architecture for test automation involves two major heads: a test infrastructure that covers a test case database, and a defect database or defect repository. These are shown as external modules in Figure 16.2. Using this infrastructure, the test framework provides a backbone that ties together the selection and execution of test cases.

16.5.1 External Modules

Two modules are external to automation: TCDB and defect DB. We have described them in detail in Chapter 15. To recall, all the test cases, the steps to execute them, and the history of their execution (such as when a particular test case was run and whether it passed or failed) are stored in the TCDB. The test cases in TCDB can be manual or automated. The interface shown by thick arrows represents the interaction between TCDB and the automation framework only for automated test cases. Please note that manual test cases do not need any interaction between the framework and TCDB.

Defect DB or defect database or defect repository contains details of all the defects that are found in various products that are tested in a particular organization. It contains defects and all the related information (when the defect was found, to whom it is assigned, what is the current status, the type of defect, its impact, and so on). Test engineers submit the defects for manual test cases. For automated test cases, the framework can automatically submit the defects to the defect DB during execution.

These external modules can be accessed by any module in automation framework, not just one or two modules. In Figure 16.2, the “green” thick arrows show specific interactions and “blue” thick arrows show multiple interactions.

16.5.2 Scenario and Configuration File Modules

As we have seen in earlier sections, scenarios are nothing but information on “how to execute a particular test case.”

A configuration file contains a set of variables that are used in automation. The variables could be for the test framework or for other modules in automation such as tools and metrics or for the test suite or for a set of test cases or for a particular test case. A configuration file is important for running the test cases for various execution conditions and for running the tests for various input and output conditions and states. The values of variables in this configuration file can be changed dynamically to achieve different execution, input, output, and state conditions.

16.5.3 Test Cases and Test Framework Modules

A test case in Figure 16.2 means an automated test case that is taken from TCDB and executed by the framework. A test case is an object that other modules in the architecture act upon for execution; it does not represent any interaction by itself.

A test framework is a module that combines “what to execute” and “how they have to be executed.” It picks up the specific test cases that are automated from TCDB and picks up the scenarios and executes them. The variables and their defined values are picked up by the test framework and the test cases are executed for those values.

The test framework is considered the core of automation design. It subjects the test cases to different scenarios. For example, if a scenario requests that a particular test case be executed in a loop for 48 hours, the test framework executes that test case in a loop and stops when the duration has elapsed. The framework monitors the results of every iteration, and the results are stored. The test framework contains the main logic for interacting with, initiating, and controlling all modules. The various requirements for the test framework are covered in the next section.
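The 48-hour loop scenario might look like this inside a framework. The function name and the injectable `clock` parameter are assumptions (the clock is injectable so that the example can be checked without waiting); in normal use it would be called as `run_for_duration(login_test, 48 * 3600)` with a hypothetical `login_test`.

```python
import time
from typing import Callable


def run_for_duration(test_case: Callable[[], bool], duration_s: float,
                     clock: Callable[[], float] = time.monotonic) -> list:
    """Run test_case repeatedly until duration_s has elapsed.

    Every iteration's outcome is recorded as (iteration, passed); an exception
    counts as a failed iteration so that one crash does not end the run early.
    """
    results = []
    deadline = clock() + duration_s
    iteration = 0
    while clock() < deadline:
        iteration += 1
        try:
            passed = bool(test_case())
        except Exception:
            passed = False
        results.append((iteration, passed))
    return results
```

Using `time.monotonic` rather than wall-clock time keeps a long run unaffected by system clock adjustments.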

A test framework can be developed by the organization internally or can be bought from the vendor. Test framework and test tool are the two terms that are used interchangeably in this chapter. To differentiate between the usage of these two terms (wherever needed), in this chapter “framework” is used to mean an internal tool developed by the organization and “test tool” is used to mean a tool obtained from a tool vendor.

16.5.4 Tools and Results Modules

When a test framework performs its operations, there are a set of tools that may be required. For example, when test cases are stored as source code files in TCDB, they need to be extracted and compiled by build tools. In order to run the compiled code, certain runtime tools and utilities may be required. For example, IP Packet Simulators or User Login Simulators or Machine Simulators may be needed. In this case, the test framework invokes all these different tools and utilities.

When a test framework executes a set of test cases with a set of scenarios for the different values provided by the configuration file, the results for each test case, along with the scenarios and variable values, have to be stored for future analysis and action. The results of the tests run by the test framework should not overwrite the results of previous test runs. The history of all previous test runs should be recorded and kept as archives. The archive of results helps in executing test cases based on previous test results. For example, a test engineer can request the test framework to “execute all test cases that failed in the previous test run.” The audit of all tests that are run and the related information are stored in the results module of automation. This can also help in selecting test cases for regression runs, as explained in Chapter 8, Regression Testing.
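An append-only results archive that supports a request such as “execute all test cases that failed in the previous test run” can be sketched as below. The `ResultsArchive` class, the JSON-lines file layout, and the field names are assumptions, not a standard format.

```python
import json
import time
from pathlib import Path


class ResultsArchive:
    """Append-only history of test runs stored as one JSON object per line."""

    def __init__(self, path) -> None:
        self.path = Path(path)

    def record_run(self, run_id: str, results: dict, scenario: str, variables: dict) -> None:
        """Append one run; earlier runs are never overwritten."""
        entry = {"run_id": run_id, "time": time.time(), "scenario": scenario,
                 "variables": variables, "results": results}
        with self.path.open("a", encoding="utf-8") as f:
            f.write(json.dumps(entry) + "\n")

    def runs(self) -> list:
        """Return every archived run, oldest first."""
        if not self.path.exists():
            return []
        with self.path.open(encoding="utf-8") as f:
            return [json.loads(line) for line in f if line.strip()]

    def failed_in_last_run(self) -> list:
        """Names of test cases that failed in the most recent run."""
        runs = self.runs()
        if not runs:
            return []
        return sorted(name for name, verdict in runs[-1]["results"].items()
                      if verdict == "FAIL")
```

The framework could pass the list from `failed_in_last_run()` straight back to its test selector for a rerun.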

16.5.5 Report Generator and Reports/Metrics Modules

Once the results of a test run are available, the next step is to prepare the test reports and metrics. Preparing reports is a complex and time-consuming effort, and hence it should be part of the automation design. There should be customized reports such as an executive report, which gives a very high-level status; technical reports, which give a moderate level of detail about the tests run; and detailed or debug reports, which are generated for developers to debug the failed test cases and the product. Reports may also be produced at different periodicities, such as daily, weekly, monthly, and milestone reports. Having reports of different levels of detail and different periodicities can address the needs of multiple constituents and thus provide significant returns.

The module that takes the necessary inputs and prepares a formatted report is called a report generator. Once the results are available, the report generator can generate metrics.

All the reports and metrics that are generated are stored in the reports/metrics module of automation for future use and analysis.
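A report generator that produces different levels of detail from the same results might start like this. The three report functions and their layouts are illustrative; the results are assumed to be a mapping from test name to `"PASS"` or `"FAIL"`, and the logs a mapping from test name to captured text.

```python
from collections import Counter


def executive_report(results: dict) -> str:
    """Very high-level status: totals and pass rate."""
    counts = Counter(results.values())
    total = len(results)
    rate = 100.0 * counts["PASS"] / total if total else 0.0
    return (f"{total} tests, {counts['PASS']} passed, "
            f"{counts['FAIL']} failed ({rate:.1f}% pass rate)")


def technical_report(results: dict) -> str:
    """Moderate detail: one line per test case, sorted by name."""
    return "\n".join(f"{name:<20} {verdict}" for name, verdict in sorted(results.items()))


def debug_report(results: dict, logs: dict) -> str:
    """Failures only, each followed by its captured log for developers."""
    return "\n".join(f"{name}: FAIL\n    {logs.get(name, 'no log captured')}"
                     for name in sorted(results) if results[name] == "FAIL")
```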

16.6 GENERIC REQUIREMENTS FOR TEST TOOL/FRAMEWORK

In the previous section, we described a generic framework for test automation. We will now present certain detailed criteria that such a framework and its usage should satisfy.

While illustrating the requirements, we have used examples in a hypothetical metalanguage to drive home the concept. The reader should verify the availability, syntax, and semantics of his or her chosen automation tool.

Requirement 1: No hard coding in the test suite

One of the important requirements for a test suite is to keep all variables separately in a file. By following this practice, the source code for the test suite need not be modified every time it is required to run the tests for different values of the variables. This enables a person who does not know the program to change the values and run the test suite. As we saw earlier, the variables for the test suite are called configuration variables. The file in which all variable names and their associated values are kept is called the configuration file. There could be many variables in the configuration file; some of them could be for the test tool and some for the test suite. The variables belonging to the test tool and the test suite need to be separated so that the user of the test suite need not worry about test tool variables. Moreover, inadvertently changing test tool variables, without knowing their purpose, may impact the results of the tests. Providing an inline comment for each variable makes the test suite more usable and may avoid improper usage of variables. An example of such a well-documented configuration file is provided below.

    # Test framework Configuration Parameters
    # WARNING: DO NOT MODIFY THIS SET WITHOUT CONSULTING YOUR SYSADMIN
    TOOL_PATH=/tools                 # Path for test tool
    COMMONLIB_PATH=/tools/crm/lib    # Common Library Functions
    SUITE_PATH=/tools/crm            # Test suite path

    # Parameters common to all the test cases in the test suite
    VERBOSE_LEVEL=3                  # Messaging Level to screen
    MAX_ERRORS=200                   # Maximum allowable errors before the
                                     # test suite exits
    USER_PASSWD=hello123             # System administrator password

    # Test Case 1 parameters
    TC1_USR_CREATE=0                 # Whether users to be created, 1 = yes, 0 = no
    TC1_USR_PASSWD=hello1            # User Password
    TC1_USR_PREFIX=user              # User Prefix
    TC1_MAX_USRS=200                 # Maximum users
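The point of this requirement is that test code reads such values at run time instead of embedding them. The following is a minimal Python sketch. It assumes only the simple `NAME=value  # comment` syntax used above; a real tool defines its own syntax. `load_config` and the sample values are illustrative, not part of any particular tool.

```python
import io


def load_config(stream):
    """Parse NAME=value lines from a configuration file.

    Blank lines and '#' comments (whole-line or inline) are ignored.
    Values made only of digits become int; all others stay strings.
    Assumes values themselves never contain '#'.
    """
    config = {}
    for raw_line in stream:
        line = raw_line.split("#", 1)[0].strip()  # drop the comment part
        if not line:
            continue
        name, sep, value = line.partition("=")
        if not sep:
            raise ValueError(f"malformed configuration line: {raw_line!r}")
        value = value.strip()
        config[name.strip()] = int(value) if value.isdigit() else value
    return config


SAMPLE = """\
# Parameters common to all the test cases in the test suite
VERBOSE_LEVEL=3        # Messaging level to screen
MAX_ERRORS=200         # Maximum allowable errors before the test suite exits
# Test Case 1 parameters
TC1_USR_CREATE=0       # Whether users are to be created, 1 = yes, 0 = no
TC1_USR_PREFIX=user    # User prefix
TC1_MAX_USRS=200       # Maximum users
"""

# In practice the stream would come from open() on the suite's (hypothetical) file.
config = load_config(io.StringIO(SAMPLE))

# Test case 1 takes its limits from the file instead of hard-coded literals.
user_names = [f"{config['TC1_USR_PREFIX']}{i}"
              for i in range(1, config["TC1_MAX_USRS"] + 1)]
print(len(user_names), user_names[0])  # 200 user1
```

Because the person running the suite edits only the file, a different user count or prefix needs no change to the test code.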

Requirement 2: Test case/suite expandability

In a product scenario involving several releases, several test cycles, and defect fixes, the test cases go through a large number of changes, and new test cases also have to be added to the test suite. Modifying a test case or inserting a new one should not cause existing test cases to fail. If such modifications and new test cases affect the quality of the test suite, it defeats the purpose of automation, and the maintenance requirements of the test suite become high. Similarly, test tools are not used for just one product with one test suite; they are used for various products that may have multiple test suites. In this case, it is important that test suites can be added to the framework without affecting other test suites. To summarize

- Adding a test case should not affect other test cases

- Adding a test case should not result in retesting the complete test suite

- Adding a new test suite to the framework should not affect existing test suites
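One way to approach these points is a registry in which every test case registers itself. The Python sketch below assumes that each test case is a plain function returning pass or fail. Adding a case adds one entry, existing cases and their code are untouched, and a clash in numbering is caught immediately. `register_test` and `TEST_CASES` are hypothetical names.

```python
from typing import Callable, Dict

# Framework-owned registry: test-case number -> test function (hypothetical).
TEST_CASES: Dict[int, Callable[[], bool]] = {}


def register_test(number: int):
    """Decorator that adds one test case without touching any other test case."""
    def register(func: Callable[[], bool]) -> Callable[[], bool]:
        if number in TEST_CASES:
            raise ValueError(f"test case {number} is already registered")
        TEST_CASES[number] = func
        return func
    return register


@register_test(1)
def login_valid_user() -> bool:
    return True  # placeholder for the real product check


@register_test(2)
def login_wrong_password() -> bool:
    return True


# Added in a later release: a purely additive change to the suite.
@register_test(3)
def login_locked_account() -> bool:
    return True


results = {number: TEST_CASES[number]() for number in sorted(TEST_CASES)}
print(results)  # {1: True, 2: True, 3: True}
```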

Requirement 3: Reuse of code for different types of testing, test cases

As we have seen in the “log in” example, the functionality of the product, when subjected to different scenarios, becomes test cases for different types of testing. This encourages the reuse of code in automation. By following the objectives of the framework and the test suite, which take care of the “how” and “what” portions of automation respectively, reuse of test cases can be increased. The reuse of code is not only applicable to various types of testing; it also applies to modules within automation. All the functions that are needed by more than one test case can be separated and included in libraries. When writing code for automation, adequate care has to be taken to make it modular by providing functions, libraries, and include files. To summarize

- The test suite should only do what a test is expected to do (the “what”); the test framework needs to take care of “how”; and

- The test programs need to be modular to encourage reuse of code.

Requirement 4: Automatic setup and cleanup

For each test case there could be some prerequisites to be met before it is run. The test cases may expect some objects to be created or certain portions of the product to be configured in a particular way. If these prerequisites are not met by automation, some manual intervention is needed before running the test cases. When test cases expect a particular setup to run the tests, it will be very difficult to remember each one of them and do the setup accordingly by hand. Hence, each test program should have a “setup” program that creates the necessary setup before executing the test cases. The test framework should have the intelligence to find out which test cases are to be executed and call the appropriate setup program.

A setup for one test case may work negatively for another test case. Hence, it is important not only to create the setup but also to “undo” it soon after the test execution for the test case. Therefore, a “cleanup” program becomes important, and the test framework should have facilities to invoke this program after test execution for a test case is over.
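A minimal Python sketch of how a framework might wrap every test case with its own setup and cleanup programs is shown below. The functions and the shared `created_users` list are hypothetical stand-ins for real product state. Putting cleanup in a `finally` block is a design choice: the setup is undone even when the test case fails with an exception.

```python
from typing import Callable, List


def run_with_setup(setup: Callable[[], None],
                   test: Callable[[], bool],
                   cleanup: Callable[[], None]) -> bool:
    """Run one test case between its own setup and cleanup programs.

    Cleanup sits in a 'finally' block, so the setup is undone even if the
    test raises. If setup itself raises, the test and cleanup are skipped.
    """
    setup()
    try:
        return test()
    finally:
        cleanup()


# Hypothetical product state standing in for users created by the setup.
created_users: List[str] = []


def setup_users() -> None:
    created_users.extend(["user1", "user2"])


def cleanup_users() -> None:
    created_users.clear()


def check_two_users_exist() -> bool:
    return len(created_users) == 2


passed = run_with_setup(setup_users, check_two_users_exist, cleanup_users)
print(passed, created_users)  # True []
```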

Requirement 5: Independent test cases

We discussed test case expandability in requirement 2. The test cases need to be independent not only in the design phase, but also in the execution phase. To execute a particular test case, it should not expect any other test case to have been executed before it, nor should it implicitly assume that a certain other test case will be run after it. Each test case should be executable on its own; there should be no dependency between test cases, such as test case 2 having to be executed after test case 1. This requirement enables the test engineer to select and execute any test case at random without worrying about other dependencies.

Requirement 6: Test case dependency

Contrary to what was discussed in the previous requirement, sometimes there may be a need for test cases to depend on others. Making test cases independent enables any one case to be selected at random and executed. Making a test case dependent on another makes it necessary for a particular test case to be executed before or after the dependent test case is selected for execution. A test tool or a framework should provide both features. The framework should help to specify the dynamic dependencies between test cases.
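A framework could honour such dependencies by ordering the selected test cases topologically. The Python sketch below uses the standard library's `graphlib.TopologicalSorter` (Python 3.9+). The dependency table format and `execution_order` are assumptions for illustration. Independent test cases simply have no entry, so they can still be picked and run alone.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical declarations: test case -> test cases that must run before it.
# Test cases that do not appear here are independent.
DEPENDENCIES = {
    "TC3": {"TC1"},         # e.g. TC3 uses the users created by TC1
    "TC4": {"TC2", "TC3"},
}


def execution_order(selected, dependencies):
    """Return the selected test cases plus their prerequisites in a valid order.

    Raises graphlib.CycleError if the declared dependencies form a cycle.
    """
    graph = {}
    pending = list(selected)
    while pending:  # follow prerequisites transitively
        case = pending.pop()
        if case in graph:
            continue
        graph[case] = dependencies.get(case, set())
        pending.extend(graph[case])
    return list(TopologicalSorter(graph).static_order())


print(execution_order(["TC4"], DEPENDENCIES))  # prerequisites first, TC4 last
print(execution_order(["TC2"], DEPENDENCIES))  # ['TC2']: no dependencies
```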

Requirement 7: Insulating test cases during execution

Insulating test cases from the environment is an important requirement for the framework or test tool. At the time of test case execution, there could be some events, interrupts, or signals in the system that may affect the execution. Consider the example of automatic pop-up screens on web browsers. When such pop-up screens appear during execution, they affect test case execution, as the test suite may be expecting some other screen based on an earlier step in the test case.

Hence, to avoid test cases failing due to unforeseen events, the framework should provide an option for users to block some of the events. There has to be an option in the framework to specify which events can affect the test suite and which should not.
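GUI pop-ups need tool-specific handling. The operating-system side of this requirement, however, can be sketched in Python with the standard `signal` module. The sketch uses a context manager that ignores chosen signals for the duration of a test case and restores the previous handlers afterwards. `blocked_signals` is a hypothetical helper. Which signals exist differs between platforms, although SIGINT is available everywhere.

```python
import signal
from contextlib import contextmanager


@contextmanager
def blocked_signals(*signums):
    """Ignore the given signals while the body runs, then restore old handlers.

    signal.signal() may only be called from the main thread.
    """
    previous = {s: signal.getsignal(s) for s in signums}
    try:
        for s in signums:
            signal.signal(s, signal.SIG_IGN)
        yield
    finally:
        for s, handler in previous.items():
            # getsignal() returns None for handlers not installed from Python;
            # fall back to the default action in that case.
            signal.signal(s, handler if handler is not None else signal.SIG_DFL)


before = signal.getsignal(signal.SIGINT)
with blocked_signals(signal.SIGINT):
    # A Ctrl+C (SIGINT) arriving here is ignored instead of aborting the test.
    during = signal.getsignal(signal.SIGINT)
after = signal.getsignal(signal.SIGINT)
print(during == signal.SIG_IGN, after == before)
```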

Requirement 8: Coding standards and directory structure

Coding standards and proper directory structures for a test suite may help new engineers understand the test suite quickly and help in maintaining it. Incorporating coding standards also improves the portability of the code. The test tool should have an option to specify (and sometimes to enforce) coding standards such as POSIX, XPG3, and so on. The test framework should provide an option to specify, or enforce, a directory structure that enables multiple programmers to develop test suites/test cases in parallel without duplicating parts of the test cases and by reusing portions of the code.

Requirement 9: Selective execution of test cases

A framework may have multiple test suites; a test suite may have multiple test programs; and a test program may have multiple test cases. The test tool or framework should have a facility for the test engineer to select a particular test case or a set of test cases and execute them. The selection of test cases need not be in any order, and any combination should be allowed. Allowing test engineers to select test cases reduces the time and limits the focus to only those tests that are to be run and analyzed. These selections are normally made as part of the scenario file and can be changed dynamically, just before running the test cases, by editing the scenario file.

For example, consider the following scenario line.

Example: test-program-name 2, 4, 1, 7-10

Here, test cases 2, 4, 1, 7, 8, 9, and 10 are selected for execution in the order mentioned. The hyphen (-) is used to specify a range of test cases, so all test cases from 7 to 10 are executed. If no test case numbers are mentioned, then the test tool should execute all the test cases.
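The part of a tool that turns a selection into a run list can be sketched in Python, assuming the comma-and-hyphen syntax described here. `parse_selection` is a hypothetical name, and a real tool's scenario grammar will differ. Rejecting unknown test case numbers is an added safety check.

```python
def parse_selection(spec, all_cases):
    """Expand a selection such as '2, 4, 1, 7-10' into an ordered list.

    The order written in the scenario is kept, a hyphen denotes an inclusive
    range, and an empty selection means every test case in the program.
    """
    if not spec.strip():
        return list(all_cases)
    known = set(all_cases)
    selected = []
    for item in spec.split(","):
        item = item.strip()
        if "-" in item:
            low, high = (int(part) for part in item.split("-", 1))
            selected.extend(range(low, high + 1))
        else:
            selected.append(int(item))
    unknown = [case for case in selected if case not in known]
    if unknown:
        raise ValueError(f"no such test cases: {unknown}")
    return selected


print(parse_selection("2, 4, 1, 7-10", range(1, 13)))  # [2, 4, 1, 7, 8, 9, 10]
print(parse_selection("", range(1, 6)))                # [1, 2, 3, 4, 5]
```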

Requirement 10: Random execution of test cases

While a test engineer needs to be able to select test cases from the available test cases, as discussed in requirement 9 above, the same test engineer may sometimes need a test case to be selected at random from a list of test cases. Giving a set of test cases and expecting the test tool to select the test case is called random execution of test cases. The test engineer selects a set of test cases from a test suite; selecting a random test case from the given list is done by the test tool. Given below are two examples to demonstrate random execution.

Example 1: random test-program-name 2, 1, 5

Example 2: random test-program1 (2, 1, 5) test-program2 test-program3

In the first example, the test engineer wants the test tool to select one of test cases 2, 1, 5 and execute it. In the second example, the test engineer wants one of test programs 1, 2, 3 to be selected at random and, if program 1 is selected, one of its test cases 2, 1, 5 to be executed at random. If test program 2 or 3 is selected, then all test cases in that program are executed.
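The tool's side of random execution is small. This Python sketch mirrors the two examples above, with the program names taken from them; `pick_random` is a hypothetical helper. Seeding the generator is a design choice added here, not something the examples require. Recording the seed makes a random run repeatable when it exposes a defect.

```python
import random


def pick_random(candidates, rng):
    """Let the tool, not the engineer, choose one item from the given list."""
    return rng.choice(list(candidates))


# A seeded generator: logging the seed lets a failing random run be repeated.
seed = 2005
rng = random.Random(seed)

# Example 1: one of test cases 2, 1, 5 of a single test program.
print("test case", pick_random([2, 1, 5], rng))

# Example 2: one of three programs; only test-program1 restricts its cases.
programs = {"test-program1": [2, 1, 5], "test-program2": None, "test-program3": None}
program = pick_random(programs, rng)
cases = programs[program]
if cases:
    print(program, "-> test case", pick_random(cases, rng))
else:
    print(program, "-> all test cases")  # None means run every case
```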

Requirement 11: Parallel execution of test cases

There are certain defects that can be unearthed if some of the test cases are run at the same time. In multitasking and multiprocessing operating systems, it is possible to create several instances of the tests and run them in parallel. Parallel execution simulates the behavior of several machines running the same test and hence is very useful for performance and load testing.

Example 1: instances, 5 test-program1(3)

Example 2: instances, 5 test-program1(2, 1, 5) test-program2 test-program3

In the first example above, 5 instances of test case 3 from test-program1 are created; in the second example, 5 instances of a sequence of 3 test programs are created. Within each of the five instances, test programs 1, 2, and 3 are executed in sequence.
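The following is a minimal Python sketch of the first example. It uses threads from `concurrent.futures` to keep the sketch self-contained. A real framework would more likely start separate processes, or use separate machines, so that the instances do not share one interpreter. `run_instances` and `program1_case3` are hypothetical.

```python
import threading
from concurrent.futures import ThreadPoolExecutor


def run_instances(test, instances):
    """Start `instances` copies of the same test at once; return their results."""
    with ThreadPoolExecutor(max_workers=instances) as pool:
        futures = [pool.submit(test, number) for number in range(instances)]
        return [future.result() for future in futures]


# Hypothetical stand-in for test case 3 of test-program1. Each instance records
# that it ran; a real test would drive the product concurrently.
lock = threading.Lock()
started = []


def program1_case3(instance):
    with lock:
        started.append(instance)
    return "PASS"


# Roughly 'instances, 5 test-program1(3)'.
results = run_instances(program1_case3, 5)
print(results)  # ['PASS', 'PASS', 'PASS', 'PASS', 'PASS']
```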

Requirement 12: Looping the test cases

As discussed earlier, reliability testing requires the test cases to be executed in a loop. There are two types of loops. One is the iteration loop, which specifies the number of times a particular test case is to be executed. The other is the timed loop, which keeps executing the test cases in a loop until the specified time duration has elapsed. These tests bring out reliability issues in the product.

Example 1: Repeat_loop, 50 test-program1(3)

Example 2: Time_loop, 5 hours test-program1(2, 1, 5) test-program2 test-program3

In the first example, test case 3 from test-program1 is repeated 50 times; in the second example, test cases 2, 1, 5 from test-program1 and all test cases from test programs 2 and 3 are executed in order, in a loop, for five hours.
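Both loop types can be sketched in a few lines of Python. `repeat_loop`, `time_loop`, and the counting test case are hypothetical. The time loop checks its deadline only between complete passes, which is one reasonable choice among several: the test cases in a pass are never cut off midway.

```python
import time


def repeat_loop(test, iterations):
    """Iteration loop: run the test a fixed number of times."""
    return [test() for _ in range(iterations)]


def time_loop(tests, duration_seconds, clock=time.monotonic):
    """Timed loop: run the tests in order, repeatedly, until the time is up.

    The deadline is checked only between complete passes, so the final pass
    may run slightly past the requested duration. Returns the pass count.
    """
    deadline = clock() + duration_seconds
    passes = 0
    while clock() < deadline:
        for test in tests:
            test()
        passes += 1
    return passes


runs = {"count": 0}


def program1_case3():
    """Hypothetical test case that only counts how often it ran."""
    runs["count"] += 1
    return "PASS"


results = repeat_loop(program1_case3, 50)   # like 'Repeat_loop, 50 test-program1(3)'
print(len(results), runs["count"])          # 50 50

# 'Time_loop, 5 hours' would pass 5 * 3600 seconds; a short duration keeps the demo fast.
passes = time_loop([program1_case3], duration_seconds=0.01)
print(passes >= 1)                          # True
```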

Requirement 13: Grouping of test scenarios

We have seen many requirements and scenarios for test execution. Now let us discuss how these individual scenarios can be combined into a group so that they can run for a long time with a good mix of test cases. A group scenario allows the selected test cases to be executed in order, at random, and in a loop, all at the same time. Grouping scenarios allows several tests to be executed in a predetermined combination of scenarios.

The following is an example of a group scenario.

Example:

group_scenario1

parallel, 2 AND repeat, 10 @ scen1

scen1

test_program1 (2, 1, 5)

test_program2

test_program3

In the above example, the group scenario was created to execute two instances of the individual scenario “scen1” in a loop 10 times. The individual scenario is defined to execute test_program1 (test cases 2, 1, and 5), test_program2, and test_program3. Hence, in the combined scenario, all the test programs are executed by two instances simultaneously, in an iteration loop of 10 times.
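The combination can be sketched in Python by nesting the earlier building blocks. The sketch assumes that each of the two instances runs its own loop of 10 iterations over scen1; the metalanguage above leaves this detail open. `run_scenario`, `run_group`, and `make_program` are hypothetical, and `None` stands for "all test cases of the program".

```python
import threading
from concurrent.futures import ThreadPoolExecutor


def run_scenario(scenario):
    """Run each (program, cases) entry of an individual scenario in order."""
    return [program(cases) for program, cases in scenario]


def run_group(scenario, parallel, repeat):
    """Like 'parallel, P AND repeat, R @ scenario': P instances, R iterations each."""
    def one_instance():
        return [run_scenario(scenario) for _ in range(repeat)]

    with ThreadPoolExecutor(max_workers=parallel) as pool:
        futures = [pool.submit(one_instance) for _ in range(parallel)]
        return [future.result() for future in futures]


lock = threading.Lock()
executed = []


def make_program(name):
    """Build a hypothetical test program that records which cases it ran."""
    def program(cases):
        with lock:
            executed.append((name, cases))
        return "PASS"
    return program


# scen1 from the example; None stands for "all test cases of the program".
scen1 = [
    (make_program("test_program1"), (2, 1, 5)),
    (make_program("test_program2"), None),
    (make_program("test_program3"), None),
]

results = run_group(scen1, parallel=2, repeat=10)
print(len(results), len(results[0]), len(executed))  # 2 10 60
```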

Requirement 14: Test case execution based on previous results

As we have seen in Chapter 8, regression test methodology requires that test cases be selected based on previous results and history. Hence, automation may not be of much help if the previous results of test execution are not considered for the choice of tests. This is a requirement not only for regression testing, but for various other types of testing as well. One effective practice is to select the test cases that were not executed and those that failed in the past, and to focus more on them. Some of the common scenarios that require test cases to be executed based on earlier results are

- Rerun all test cases which were executed previously;

- Resume the test cases from where they were stopped the previous time;

- Rerun only failed/not run test cases; and

- Execute all test cases that were executed on “Jan 26, 2005.”

With automation, this task becomes very easy if the test tool or the framework can help make such choices.
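Given a results file from the previous run, the first three scenarios reduce to simple filters. The Python sketch below assumes that the previous results have already been loaded into a dictionary. The date-based scenario would additionally need a timestamp per result. The function and mode names are hypothetical.

```python
def select_from_history(all_cases, previous, mode):
    """Choose test cases based on the results of the previous run.

    `previous` maps test case -> 'PASS' or 'FAIL'; a case missing from it was
    not run last time. The mode names are hypothetical.
    """
    if mode == "rerun":    # rerun all test cases that were executed previously
        return [case for case in all_cases if case in previous]
    if mode == "resume":   # continue from where the previous run stopped
        return [case for case in all_cases if case not in previous]
    if mode == "failed":   # rerun only failed and not-run test cases
        return [case for case in all_cases if previous.get(case) != "PASS"]
    raise ValueError(f"unknown mode: {mode}")


cases = ["TC1", "TC2", "TC3", "TC4", "TC5"]
history = {"TC1": "PASS", "TC2": "FAIL", "TC3": "PASS"}  # run stopped after TC3

print(select_from_history(cases, history, "failed"))  # ['TC2', 'TC4', 'TC5']
```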

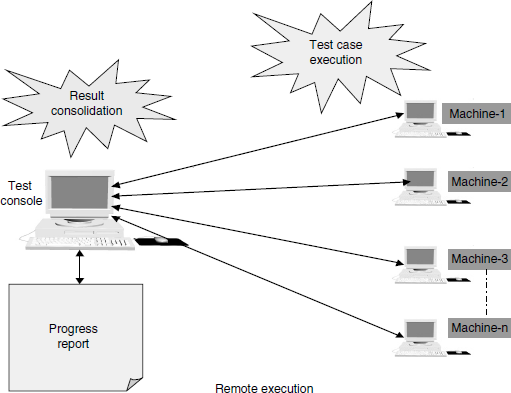

Requirement 15: Remote execution of test cases

Most product testing requires more than one machine to be used. Hence, a facility is needed to start the testing on multiple machines at the same time from a central place. The central machine that allocates tests to multiple machines and coordinates the execution and the results is called the test console or test monitor. In the absence of a test console, not only does executing the tests on multiple machines become difficult, but collecting the results from all those machines also becomes difficult; the results need to be collected manually and consolidated. As introducing a manual step is against the objective of automation, this requirement is important for the framework to have. To summarize

- It should be possible to execute/stop the test suite on any machine/set of machines from the test console.

- The test results and logs can be collected from the test console.

- The progress of testing can be found from the test console.

Figure 16.3 illustrates the role played by a test console and multiple test machines. The coloured figure is available on Illustrations.

Requirement 16: Automatic archival of test data

In requirement 14, we have seen that test cases are repeated on the basis of previous results. To confirm the results or to reproduce a problem, it is not enough to repeat the test cases. The test cases have to be repeated the same way as before, with the same scenarios, the same configuration variables and values, and so on. This requires that all the related information for the test cases be archived. Hence, this requirement becomes very important for repeating test cases and for analysis. Archival of test data must include

- What configuration variables were used;

- What scenario was used; and

- What programs were executed and from what path.

It is not only configuration variables that affect test cases and their results. The tunable parameters of the product and operating system also need to be archived to ensure repeatability or for analyzing defects.

Requirement 17: Reporting scheme

Every test suite needs to have a reporting scheme from which meaningful reports can be extracted. As we have seen in the design and architecture of the framework, the report generator should have the capability to look at the results file and generate various reports. Even though the report generator is designed to produce dynamic reports, it is very difficult to say in advance which information will be needed and which will not. Therefore, it is necessary to store all information related to test cases in the results file.

Audit logs are very important to analyze the behavior of a test suite and a product. They store detailed information for each of the operations, such as when the operation was invoked, the values of variables, and when the operation was completed. For performance tests, information such as when the framework was invoked, when a scenario was started, when a particular test case started, and the corresponding completion times is important for calculating the performance of the product. Hence, a reporting scheme should include

- When the framework, scenario, test suite, test program, and each test case were started/completed;

- Result of each test case;

- Log messages;

- Category of events and log of events; and

- Audit reports.
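Requirements 16 and 17 meet in the results file. The following Python sketch uses assumed names (`run_and_record` and the field names are not from any particular tool). It shows one record per test case that captures both the archival data and the timings and messages a report generator needs. `platform.platform()` stands in for operating system details. The product's own tunable parameters would have to be queried in a product-specific way.

```python
import json
import platform
import time


def run_and_record(case_name, test, program_path, config, scenario):
    """Run one test case and build a results-file record for it.

    The record layout is hypothetical. It keeps what is needed to repeat the
    run (configuration, scenario, program path, platform) and what a report
    generator needs (start/completion times, result, log messages).
    """
    log = []
    started = time.time()
    try:
        result = "PASS" if test(log) else "FAIL"
    except Exception as exc:  # a crashing test case is recorded, not fatal
        result = "FAIL"
        log.append(f"exception: {exc!r}")
    completed = time.time()
    return {
        "test_case": case_name,
        "result": result,
        "started": started,
        "completed": completed,
        "log": log,
        "archive": {
            "config": config,
            "scenario": scenario,
            "program_path": program_path,
            "platform": platform.platform(),
            "python_version": platform.python_version(),
        },
    }


def check_login(log):
    log.append("logging in as user1")
    return True


record = run_and_record("TC1", check_login, "/tools/crm/test-program1",
                        {"TC1_MAX_USRS": 200}, "test-program1 (1)")
# One JSON object per line is easy to append to and to parse for reports.
print(json.dumps(record))
```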

Requirement 18: Independent of languages

While coding for automation, there are some test cases that are more easily coded using the scripting language provided by the tool, some in C, some in C++, and so on. Hence, a framework or test tool should provide a choice of languages and scripts that are popular in the software development area. Irrespective of which languages/scripts are used for automation, the framework should function the same way, meeting all requirements. Many test tools force the test scripts to be written in a particular language or force some proprietary scripts to be used. This needs to be avoided, as it affects the momentum of automation because a new language has to be learned. To summarize

- A framework should be independent of programming languages and scripts.

- A framework should provide choice of programming languages, scripts, and their combinations.

- A framework or test suite should not force a language/script.

- A framework or test suite should work with different test programs written using different languages and scripts.

- A framework should have exported interfaces to support all popular, standard languages, and scripts.

- The internal scripts and options used by the framework should allow the developers of a test suite to migrate to a better framework.
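One common way for a framework to stay language-neutral is to treat every test program as an external process and agree only on a convention for results. This is sketched below in Python. The exit-code convention and `run_test_program` are assumptions, not a standard. The example programs are Python one-liners so that the sketch runs anywhere.

```python
import subprocess
import sys


def run_test_program(command, timeout=60):
    """Run a test program, in any language, as a separate process.

    The only contract assumed here: exit status 0 means PASS, anything else
    FAIL, and standard output is kept as the test log.
    """
    completed = subprocess.run(command, capture_output=True, text=True,
                               timeout=timeout)
    result = "PASS" if completed.returncode == 0 else "FAIL"
    return result, completed.stdout


# Stand-ins for test programs written in different languages: a compiled C
# test would be ["./tc_login"], a shell script ["sh", "tc_login.sh"], and so on.
programs = [
    [sys.executable, "-c", "print('login ok')"],
    [sys.executable, "-c", "import sys; print('bad password'); sys.exit(1)"],
]

for command in programs:
    print(run_test_program(command))
```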

Requirement 19: Portability to different platforms

With the advent of platform-independent languages and technologies, there are many products in the market that are supported on multiple OS and language platforms. A cross-platform product whose test framework does not work on some of those platforms is not good for automation. Hence, it is important for the test tools and framework to be cross-platform and to be able to run on the same diversity of platforms and environments under which the product under test runs.

Having said that the test tools need to be cross-platform, it is equally important for the test suite developed for the product to be cross-platform, or portable to other platforms with a minimum amount of effort.

Besides checking the cross-platform capabilities of the test tools, it is important to look at platform architectures as well. For example, 64-bit operating systems and products that work on 64-bit architectures are available in the market. The product being 64-bit while the test suite functions as a 32-bit application may not seem a big issue, since most platforms provide compatibility for legacy 32-bit applications. However, this can prevent some of the defects in the product from being unearthed. In a pure 64-bit environment that does not provide backward compatibility (for example, Digital Tru64), a 32-bit test suite cannot be run at all. To summarize

- The framework and its interfaces should be supported on various platforms.

- Portability to different platforms is a basic requirement for test tool/test suite.

- The language/script used in the test suite should be selected carefully so that it runs on different platforms.

- The language/script written for the test suite should not contain platform-specific calls.
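A short Python sketch can illustrate two habits from this list. The first is building paths through the standard library instead of hard-coding platform-specific ones. The second is recording whether the suite runs as a 32-bit or 64-bit process, so that the mismatch described above shows up in the results. The directory and function names are illustrative only.

```python
import platform
import struct
import tempfile
from pathlib import Path


def platform_report():
    """Describe the platform the test suite itself is running on."""
    return {
        "os": platform.system(),        # e.g. 'Linux', 'Windows', 'Darwin'
        "machine": platform.machine(),  # hardware architecture name
        # Pointer size shows whether this interpreter runs as 32- or 64-bit,
        # which may differ from the bitness of the product under test.
        "suite_bits": struct.calcsize("P") * 8,
    }


# Build paths from the platform's own temporary directory instead of
# hard-coding '/tmp/...' or 'C:\\temp\\...'. The directory name is hypothetical.
work_dir = Path(tempfile.gettempdir()) / "crm_suite"
work_dir.mkdir(exist_ok=True)
log_file = work_dir / "run.log"
log_file.write_text("suite started\n")  # text mode handles line endings per OS

print(platform_report())
```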

16.7 PROCESS MODEL FOR AUTOMATION

There is no hard and fast rule on when automation should start and when it should end. The work on automation can go on simultaneously with product development and can overlap with multiple releases of the product. Like the product, automation also has several releases. One specific requirement for automation is that the automated tests should be delivered before the test execution phase, so that the deliverables from the automation effort can be utilized for the current release of the product. Like product requirements, the requirements for automation span multiple phases and multiple releases. Test execution may stop soon after the product is released, but the automation effort continues after a product release.

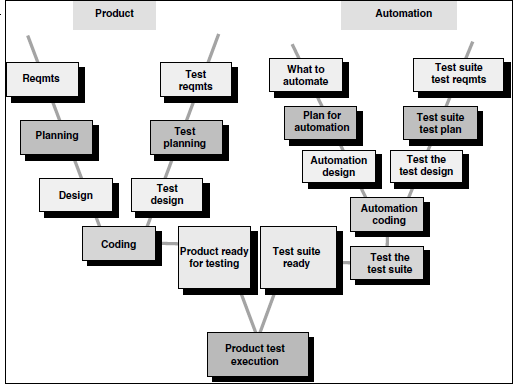

Given the above similarities between the product development and automation development, the process and life cycle model followed for automation and product development can also be very similar. In the majority of cases, the software development life cycle (SDLC) model followed for the product is followed for automation also. This section focuses on the V model and its extensions to explain the process of test automation. Let us look at the phases involved in product development and phases in automation in Figure 16.4 to understand the similarities.

As we have seen in this chapter, test automation life cycle activities bear a strong similarity to product development activities. Just as product requirements need to be gathered on the product side, automation requirements too need to be gathered. Similarly, just as product planning, design, and coding are done, automation planning, design, and coding are also done during test automation.

It is important to test the test suite just as we test the product. If there are defects in the test suite, much effort is involved in debugging to find out whether a defect is in the product or in the test suite. As mentioned in Chapter 1, “Test the test first”: an automated test suite has to be tested before it can be used to test the product. If test suites report false defects, it will severely impact the credibility of test automation and will hamper further automation efforts. Hence, it is important to ensure that the automated test suite is of trustworthy quality. To produce test suites of trustworthy quality, some test phases become important, as in product development. Figure 16.5 extends Figure 16.4 to introduce testing phases for the product and the automated test suite. The coloured figure is available on Illustrations.

A set of four activities can be performed at each phase of product and automation development. While the development requirements for the product are being collected in the requirements phase, the test requirements for the product, the requirements for developing the automation, and the test requirements for the automation can be gathered simultaneously in the same phase. Similarly, the set of four activities can be performed in the planning and design phases. The coding for the product and for automation forms the coding phase in this W model, where the product and the test suite get delivered. The coloured figure is available on Illustrations.

Product and automation are like the two lines in a railway track; they go parallel in the same direction with similar expectations.

Test suites form one of the deliverables from automation. Although the test framework itself can also be considered a deliverable from automation, the testing phases discussed in the above paragraph apply only to the test suite, as it needs to be tested thoroughly before it is used in product testing.

After introducing testing activities for both the product and automation, now the picture includes two parallel sets of activities for development and two parallel sets of activities for testing. When they are put together, it becomes a “W” model. Hence, for a product development involving automation, it will be a good choice to follow the W model to ensure that the quality of the product as well as the test suite developed meet the expected quality norms.

When talking about the W model, it should not be interpreted that all activities appearing in a particular phase start and finish at the same time. For example, in Figure 16.5, “design,” “test design,” “automation design,” and “test design for test suite” appear in the same phase (side by side in the figure). The start and end dates for the product and automation can be different for these activities. The model only ensures the flow of activities; there is no binding on the start and end dates. Product development and automation can have independent schedules and can be handled as two different projects.

Another reason why the start and end dates cannot be the same is that in many organizations the same test team is involved in testing the product and in developing the test suite. Obviously, the schedules in such a case will be different. The start and end dates for activities are determined by project schedules based on resource availability and other dependencies.

Where a dedicated team exists for automation in an organization, the schedule for automation can be independent of product releases, with some (tested) deliverables marked for each product release. This enables the recently developed test suite to be used for testing the current release of the product.

16.8 SELECTING A TEST TOOL

Having identified the requirements of what to automate, a related question is the choice of an appropriate tool for automation. Even though we have listed these as two sequential steps, oftentimes, they are tightly interlinked.

Selecting the test tool is an important aspect of test automation for several reasons as given below.

- Free tools are not well supported and get phased out soon. It will be extremely dangerous to see a release stalled because of a problem in a test tool.

- Developing in-house tools takes time. Even though in-house tools can be less expensive and can meet needs better, they are often developed out of the personal interest of a few engineers. They tend to have poor documentation, and thus, once the person who developed the tools leaves the organization, the tools become unusable. Furthermore, such tool development takes a back seat when the pressure of actual product testing and delivery dates comes into play. Hence, it cannot be a sustained effort.

- Test tools sold by vendors are expensive. In absolute dollar terms, the standard test automation tools in the market are expensive. Most organizations, especially small and medium enterprises, will have to carefully evaluate the economic impact of making such a significant investment.

- Test tools require strong training. Test automation cannot be successful unless the people using the tools are properly trained. Such training usually involves getting familiar with the scripting languages that come with the tool, customizing the tool for use, and adding extensions or plug-ins for the tool. The effort involved in using the tools even after such training is quite high.

- Test tools generally do not meet all the requirements for automation. Since tools are meant to be generic, they may not fully satisfy the needs of a particular customer. That is why customization and extensibility become key issues.

- Not all test tools run on all platforms. To amortize the costs of automation, the tools and the automated tests should be reusable on all the platforms on which the product under test runs. Portability of the tool and the scripts to multiple platforms is therefore a key factor in deciding the test automation tool.

For all the above reasons, adequate focus needs to be given to selecting the right tool for automation. As mentioned earlier, it is very important to understand the requirements of automation while choosing the tools. The requirements should cover both the short term and the long term. These requirements form the basis for tool selection.

16.8.1 Criteria for Selecting Test Tools

In the previous section, we looked at some reasons for evaluating the test tools and how requirements gathering will help. These change according to context and are different for different companies and products. We will now look into the broad categories for classifying the criteria. The categories are

- Meeting requirements;

- Technology expectations;

- Training/skills; and

- Management aspects.

16.8.1.1 Meeting requirements

Firstly, there are plenty of tools available in the market, but rarely do they meet all the requirements of a given product or a given organization. Evaluating different tools for different requirements involves significant effort, money, and time. Given the plethora of choices available (with each choice meeting some part of the requirements), a huge delay is involved in selecting and implementing test tools.

Secondly, test tools are usually one generation behind and may not provide backward or forward compatibility with the product under test (for example, in Java SDK support). Suppose a product uses the latest version of the SDK, say, version 2.0, while the version of the SDK supported by the test tool is 1.2. In this case, features coded in the product using the new features of version 2.0 cannot be tested with the test tool. The time lag for the test tool to catch up with the technology change in the product is something that a product organization may not be able to afford.

Thirdly, test tools may not go through the same amount of evaluation for new requirements. For example, during Year 2000 testing, some of the tools could not be used for testing, as they had the same problem of not handling dates properly as the products they were meant to test. Like the products, the test tools had not been adequately tested for these new requirements. True to the dictum “test the tests first” discussed in Chapter 1, the test tool must be tested and found fit before it is used for testing a product.

Finally, a number of test tools cannot differentiate between a product failure and a test failure. This causes increased analysis time and manual testing. The test tools may not provide the required amount of troubleshooting/debug/error messages to help in analysis. This may force additional log messages and auditing to be built into the test suite, or the test to be repeated manually. In the case of testing a GUI application, the test tools may determine the results based on messages and screen coordinates at run time. Therefore, if the screen elements of the product are changed, the test suite needs to be changed as well. The test tool must have some intelligence to proactively find out the changes that happened in the product and analyze the results accordingly.

16.8.1.2 Technology expectations

Firstly, test tools in general may not allow test developers to extend/modify the functionality of the framework. So extending the functionality requires going back to the tool vendor, which involves additional cost and effort. Test tools may not provide as rich an SDK or as many exported interfaces as the products themselves do. Very few tools available in the market provide source code for extending functionality or fixing problems. Extensibility and customization are important expectations of a test tool.

Secondly, a good number of test tools require their libraries to be linked with product binaries. Product code that has these libraries linked into it is called “instrumented code.” This causes portions of the testing to be repeated after those libraries are removed, as the results of certain types of testing will be different, and better, without them. For example, instrumented code has a major impact on performance testing, since the test tools introduce additional code, and executing that code adds delay.

Finally, test tools are not 100% cross-platform. They are supported only on some operating system platforms, and the scripts generated by these tools may not be compatible with other platforms. Moreover, many test tools are capable of testing only the product, not the impact of the product/test tool on the system or network. When the impact of the product on the network or system is analyzed, the first suspect is the test tool, and it is uninstalled when such analysis starts.

16.8.1.3 Training/skills

While test tools require plenty of training, very few vendors provide training to the required level. Organization-level training is needed to deploy the test tools, as the users of the test suite are not only the test team but also the development team and other areas like configuration management. Test tools expect the users to learn new languages/scripts and may not use standard languages/scripts. This increases the skill requirements for automation and steepens the learning curve inside the organization.

16.8.1.4 Management aspects

A test tool increases the system requirements and requires the hardware and software to be upgraded. This increases the cost of the already-expensive test tool. When selecting a test tool, it is important to note its system requirements, and the cost of upgrading the software and hardware needs to be added to the cost of the tool. Migrating from one test tool to another is difficult and requires a lot of effort, not only because a test suite written for one tool cannot be used with other tools, but also because of the cost involved. As the tools are expensive, changing tools is generally not permitted unless management feels that the return on investment is justified.
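The cost argument can be made concrete with a small Python sketch. The cost categories follow the paragraph above (the tool itself, hardware and software upgrades, training, ongoing maintenance, and the cost of migrating away later); the class name and every figure in the usage example are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class ToolCost:
    """Hypothetical cost model for a test tool; all amounts in one currency."""
    license: float
    hardware_upgrade: float
    software_upgrade: float
    training: float
    annual_maintenance: float
    exit_cost: float = 0.0  # effort and money to migrate to another tool later

    def entry_cost(self) -> float:
        # What must be spent before the tool is usable, not just its price tag.
        return self.license + self.hardware_upgrade + self.software_upgrade + self.training

    def lifetime_cost(self, years: int) -> float:
        # Entry cost + recurring maintenance over the planning horizon + exit cost.
        return self.entry_cost() + self.annual_maintenance * years + self.exit_cost

tool = ToolCost(license=50_000, hardware_upgrade=20_000, software_upgrade=5_000,
                training=10_000, annual_maintenance=8_000, exit_cost=15_000)
print(tool.entry_cost())      # 85000
print(tool.lifetime_cost(3))  # 124000
```

In this made-up example the license is less than 60% of the entry cost, which is the point the paragraph makes: the price of the tool alone understates what the organization actually pays.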

Deploying a test tool requires as much effort as deploying a product in a company. However, due to project pressures, the effort needed to deploy test tools gets diluted or is not spent at all. This later becomes one of the reasons for delays or for automation not meeting expectations. The support available for the tool is another important point to consider while selecting and deploying it.

Table 16.3 summarizes the above discussion.

16.8.2 Steps for Tool Selection and Deployment

The objective of this section is to present seven simple steps for selecting and deploying a test tool in an organization, based on the aspects discussed in the earlier sections of this chapter. The steps are

- Identify your test suite requirements among the generic requirements discussed. Add other requirements (if any).

- Make sure experiences discussed in previous sections are taken care of.

- Collect the experiences of other organizations which used similar test tools.

- Keep a checklist of questions to ask the vendors on cost, effort, and support.

- Identify the tools that meet the above requirements (giving priority to tools that are available with source code).

- Evaluate and shortlist one tool or a set of tools, and train all test developers on the tool.

- Deploy the tool across test teams after training all potential users of the tool.
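The filtering in the fifth step above can be sketched in Python. The candidate records, feature labels, and tool names below are hypothetical; the sketch only encodes two rules taken from the steps: a tool must meet every identified requirement, and tools available with source code are given priority.

```python
from dataclasses import dataclass, field

@dataclass
class CandidateTool:
    """Hypothetical record of what the team learned about one tool."""
    name: str
    features: set[str] = field(default_factory=set)
    platforms: set[str] = field(default_factory=set)
    source_available: bool = False

def shortlist(tools, required_features, required_platforms):
    """Return names of tools meeting all requirements, source-available tools first."""
    eligible = [
        t for t in tools
        if required_features <= t.features and required_platforms <= t.platforms
    ]
    # sorted() is stable: False (source available) sorts before True,
    # and tools with equal priority keep their original order.
    return [t.name for t in sorted(eligible, key=lambda t: not t.source_available)]

candidates = [
    CandidateTool("ToolA", {"record_playback", "reporting"}, {"linux", "windows"}),
    CandidateTool("ToolB", {"record_playback", "reporting", "api_hooks"},
                  {"linux", "windows"}, source_available=True),
    CandidateTool("ToolC", {"reporting"}, {"windows"}, source_available=True),
]
print(shortlist(candidates, {"record_playback", "reporting"}, {"linux", "windows"}))
# ['ToolB', 'ToolA']
```

The remaining steps (collecting other organizations' experiences, asking vendors about cost, effort, and support, and evaluating shortlisted tools hands-on) are judgment calls that a script like this can record but not replace.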

Tools have very high entry, maintenance, and exit costs and hence careful selection is required.

16.9 AUTOMATION FOR EXTREME PROGRAMMING MODEL

As we have seen in Chapter 10, the extreme programming model is based on basic concepts such as

- Unit test cases are developed before coding phase starts;

- Code is written for the test cases, specifically to ensure that the test cases pass;

- All unit tests must pass 100% of the time; and

- Everyone owns the product; they often cross boundaries.

The above concepts in the extreme programming model make automation an integral part of product development. For this reason, the model gets a special mention in this chapter. Automation in extreme programming is not considered an additional activity. The requirements for automation are available at the same time as the product requirements, which enables automation to start from the first day of product development.

The test cases are written before the coding phase starts in extreme programming. The developers write code to ensure the test cases pass. This keeps the code and the test cases in sync all the time. It also enables the code written for the product to be reused for automation. Moreover, since the objective of the code is to make the test cases pass, the developer produces automated test cases as a natural part of coding.
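A minimal test-first sketch using Python's standard `unittest` module follows. The requirement (orders of 100 or more get a 10% discount) and the function `apply_discount` are hypothetical; the point is the order of work: the tests exist first, and the product code is written only to make them pass.

```python
import unittest

# Step 1: the unit tests are written first, from the (hypothetical) requirement
# "orders of 100 or more get a 10% discount; negative amounts are invalid".
class ApplyDiscountTest(unittest.TestCase):
    def test_no_discount_below_threshold(self):
        self.assertEqual(apply_discount(99.0), 99.0)

    def test_discount_at_threshold(self):
        self.assertAlmostEqual(apply_discount(100.0), 90.0)

    def test_negative_amount_rejected(self):
        with self.assertRaises(ValueError):
            apply_discount(-1.0)

# Step 2: just enough product code is written to make those tests pass.
def apply_discount(amount: float) -> float:
    if amount < 0:
        raise ValueError("amount must be non-negative")
    return amount * 0.9 if amount >= 100 else amount
```

Because the tests were written first, they double as the automated regression suite for `apply_discount`; rerunning them after every change is how the 100% pass-rate rule is checked.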

All unit test cases should run with a 100% pass rate in extreme programming at all times. This goal gives additional importance to automation, because without automation the goal cannot be met every time the code is touched.

The gap between development skills and the coding skills needed for automation is not an issue in a team that follows extreme programming, because people cross boundaries to perform various roles as developers, testers, and so on. In the extreme programming model, development, testing, and automation skills are not put in separate watertight compartments.

The above concepts make automation an integral part of the product development cycle for the extreme programming model.

16.10 CHALLENGES IN AUTOMATION

As can be inferred from the above sections, test automation presents some unique challenges. The most important of these is management commitment.

As discussed in Chapter 1, automation should not be viewed as a panacea for all problems, nor should it be perceived as a quick-fix solution for all the quality problems in a product. Automation takes time and effort and pays off in the long run. However, automation requires a significant initial outlay of money, as well as a steep learning curve for the test engineers, before it can start paying off. Management should have patience and persist with automation. The main challenge here is that, because the costs of test automation are heavily front-loaded, management starts to look for an early payback. Successful test automation endeavors are characterized by unflinching management commitment, a clear vision of the goals, and the ability to set realistic short-term goals that track progress with respect to the long-term vision. When any of these attributes is lacking in the management team, the automation initiative is likely to fail. Worse, it may even wrongly create a negative mindset about automation, much as it did for the farmer in Chapter 1.

16.11 SUMMARY

In Chapter 1, we discussed the importance of testing the test suite under “Test the tests first.” The test suite needs to be tested properly, and shown to meet the required quality expectations, before it is used for testing the product. As discussed earlier, if the quality of the test suite is suspect, effort and debate go into finding out whether a defect is due to the test suite or the product. To ensure that such doubts do not arise, and to ensure smooth running of tests, all the quality requirements and process steps followed in product development need to be followed for the test suite as well.
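“Test the tests first” applies to the helper code inside a test suite as much as to the test cases. The Python sketch below shows a hypothetical comparison helper, `outputs_match`, that a suite might use to judge product output while ignoring timestamps, together with checks on the helper itself. The timestamp format and the function names are assumptions.

```python
import re

# Assumed timestamp format in product output, e.g. "2024-01-01 10:00:00".
TIMESTAMP = re.compile(r"\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}")

def outputs_match(expected: str, actual: str) -> bool:
    """Hypothetical test-suite helper: compare product output with the expected
    output, ignoring timestamps and trailing whitespace on each line."""
    def normalize(text: str) -> list[str]:
        return [TIMESTAMP.sub("<TS>", line).rstrip() for line in text.strip().splitlines()]
    return normalize(expected) == normalize(actual)

def test_the_helper():
    """Checks on the helper itself, run before it is trusted to judge the product."""
    # Must accept outputs that differ only in timestamps or trailing spaces.
    assert outputs_match("2024-01-01 10:00:00 started\n", "2025-06-30 23:59:59 started  \n")
    # Must still detect a real difference.
    assert not outputs_match("status: OK", "status: FAILED")
    # Must not hide a missing line.
    assert not outputs_match("a\nb", "a")
```

The second check matters most: a helper that wrongly returns `True` would make every product test pass, which is exactly the kind of test-suite defect that leads to the test-or-product debate described above.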

The quality requirements for the test suite are equal to or more stringent than those for the product.

There is a perception that automation means automating the process of test execution. The scope of automation should be extended to all the phases involved in product development, involving several people and several roles in the organization. Test execution is where automation starts, as this is a phase where there is a good deal of repetition and the manual effort involved is high. But automation does not stop with automating the execution of test cases alone. The test suite needs to be linked with other tools and activities for increased effectiveness (for example, a test case database, defect filing, automatic mails on test and build status, automatic report preparation, and so on). That said, not everything can be automated, and 100% automation is not always possible. A test suite with a number of automated test cases, combined with creative manual testing, makes testing more effective. Nor does automation stop with recording and playing back user commands. An automated test suite should be intelligent enough to say what was expected, why a test case failed, and what manual steps reproduce the problem. Automation should not be done for saving effort alone; it should be an effort to increase coverage and improve repeatability. Sometimes, documented manual test cases need to be modified to suit automation requirements. Where automation exists, the documentation need not be elaborate, as the steps for the test cases can at any time be derived by looking at the automation scripts.
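The requirement that an automated test say what was expected, why it failed, and how to reproduce the failure manually can be sketched as follows. The record types `AutomatedTest` and `RunReport`, the test ID, and the login scenario are hypothetical; the sketch simply keeps the manual reproduction steps alongside each automated test so they can be reported when it fails.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, List

@dataclass
class AutomatedTest:
    """Hypothetical test record that keeps its manual steps next to the automation."""
    test_id: str
    manual_steps: List[str]
    expected: Any
    run: Callable[[], Any]  # automated execution; returns the observed result

@dataclass
class RunReport:
    test_id: str
    passed: bool
    reason: str = ""
    repro_steps: List[str] = field(default_factory=list)

def execute(test: AutomatedTest) -> RunReport:
    """Run one test and, on failure, explain why and how to reproduce it by hand."""
    try:
        actual = test.run()
    except Exception as exc:  # a crash is reported as a failure, not lost
        return RunReport(test.test_id, False,
                         f"raised {type(exc).__name__}: {exc}", list(test.manual_steps))
    if actual == test.expected:
        return RunReport(test.test_id, True)
    return RunReport(test.test_id, False,
                     f"expected {test.expected!r}, got {actual!r}", list(test.manual_steps))

login_test = AutomatedTest(
    test_id="LOGIN-003",
    manual_steps=["Open the login page",
                  "Enter user 'guest' with an empty password",
                  "Click Sign in"],
    expected="error: password required",
    run=lambda: "welcome guest",  # stands in for driving the real product
)
report = execute(login_test)
print(report.reason)  # expected 'error: password required', got 'welcome guest'
```

A report like this can then be fed into the other activities mentioned above, such as defect filing or automatic status mails, without a tester having to rediscover the steps.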

Good automation enables 24 × 7 test execution, saving effort and time.

Progress in automation is hampered not only by technical complexities but also by a lack of allocated people. Even when people are allocated, they are pulled out when the product is close to release or reaches a critical state. This urgency is understandable, as releasing the product on time and meeting the commitments given to the customer should receive higher priority than automation. However, frequent repetition of this situation is not good for automation. A separate team in the organization looking at automation requirements, evaluating tools, and developing generic test suites would add more value. An independent automation team can realize the organization's long-term goals for automation.

There is a career in tool usage, administration, and expertise. Certification courses are available on test tool usage, and there are many career options in automation. Hence it is important to treat automation as an important area and a role in which developers and testers can grow.

At times automation is more complex than product development. Plan to have your best development and test engineers in the automation team.

Automation should not be considered a stopgap arrangement to engage test engineers when they have free time (“when there is no test execution work, do automation!”). A broad vision is needed to make automation more useful. A plan should exist to maintain the test suites, as is done for product maintenance. Automation should be considered a product, not a project. Like a product road map, automation should also have a road map (denoted as “space map” in the adjacent box).

Selecting test tools without proper analysis will result in expensive test tools gathering dust on the shelf. This is termed shelf ware.

Selecting the proper test tool is only one part of successful automation. Timeliness, and a test suite that meets the testing requirements with the required quality, are other factors to be considered. The test suite thus developed should be delivered before test execution starts, and the automation objectives for the product should be delivered before the product reaches the end of its life. Otherwise, success in automation alone will be like the proverbial “operation successful but patient dead.” A successful automation effort is one where the product requirements are met along with the automation requirements.

Having talked about the factors for successful automation, it is time to discuss certain observations on failures. Certain surveys and testing books point out that there is generally a lack of ownership for automation failures. This view is supported by the fact that 30% of test tools remain as shelf ware. We discussed “automation syndrome” in Chapter 1, where the farmer blames the failure of the crop on automation. Automation does not fail by itself, and the perceptions surrounding automation need to be addressed. For example, there is a perception that automation will reduce the staffing level in the organization. Automation does not reduce staffing levels; it makes test engineers focus on more creative tasks that have a direct bearing on product quality.

Automation makes life easier for testers through better reproducibility of test results, better coverage, and, of course, reduced effort as a by-product. Automation should be considered a part of life in product development and maintained as a basic habit for the success of products and organizations. Finally, with automation, one can produce better and more effective metrics that help in understanding the state of health of a product in a quantifiable way, thus taking us to the next chapter.

Keeping a road map does not help while flying; both “space map” and “road map” are needed to fly and to reach your place.

REFERENCES

Automation in testing is covered by several books and by articles available on the web. However, this chapter tries to unify all the concepts and methods in a simple way. [KANE-2001] is a good place to look at some of the experiences faced in automation and how they can be resolved. [TET] is a tool that can be explored further to understand the requirements of a generic framework or a test tool.

PROBLEMS AND EXERCISES

1. We talked of end-to-end automation of testing both in this chapter and in earlier chapters. Consider the following automation requirements and evaluate the tool options available for each:

   - Designing test cases from requirements/design/program specifications

   - Generation of test data

   - Choice of test cases for a given release, based on the changes made to code

   - Tools for automatic analysis of correctness of tests

   - Tools for automatic assignment of the right people to work on defects uncovered by tests

   - Tools for performance testing

   - Tools for test reporting

2. Design a simple schema that enables mapping between a test case database, a configuration management tool, and test history to perform some of the automation listed in Problem 1.

3. What aspects of white box testing lend themselves to automation? What tools would be required?

4. What are some of the challenges in automating the testing of the GUI portions of an application? How do these compare with the automation of back-end testing?

5. Metrics are discussed in the final chapter. Look at the various metrics presented there and show how the schema in Problem 2 could be useful in generating them. List the queries, in a language like SQL, that can be used to generate these metrics.

6. Consider writing automated test cases for testing the various query options of a database. What type of initialization and cleanup would be required for the test scripts?

7. While using automated scripts, derive standards that can enhance and promote reuse.

8. We mentioned not hard coding any values in tests. We also gave an example where there are different configuration parameters for different layers of software. Outline some of the challenges in maintaining such configuration files that contain parameters for different levels. How will you overcome some of these challenges?