In this chapter, we will cover the following recipes:

- Initializing Cascalog and Hadoop for distributed processing

- Querying data with Cascalog

- Distributing data with Apache HDFS

- Parsing CSV files with Cascalog

- Executing complex queries with Cascalog

- Aggregating data with Cascalog

- Defining new Cascalog operators

- Composing Cascalog queries

- Transforming data with Cascalog

Over the course of the last few chapters, we've been progressively moving outward. We started with the assumption that everything will run on one processor, probably in a single thread. Then we looked at how to structure our program without this assumption, performing different tasks on many threads. We then tried to speed up processing by getting multiple threads and cores working on the same task. Now we've pulled back about as far as we can, and we're going to take a look at how to break up work in order to execute it on multiple computers. For large amounts of data, this can be especially useful.

In fact, big data has become more and more common. The definition of big data is a moving target as disk sizes grow, but you're working with big data if you have trouble processing it in memory or even storing it on disk. There's a lot of information locked in that much data, but getting it out can be a real problem. The recipes in this chapter will help address these issues.

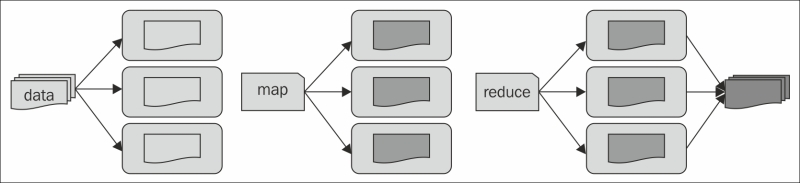

Currently, the most common way to distribute computation in production is to use the MapReduce algorithm, which was originally described in a research paper by Google, although the company has moved away from using this algorithm (http://research.google.com/archive/mapreduce.html). The MapReduce process should be familiar to anyone who uses Clojure, as MapReduce is directly inspired by functional programming. Data is partitioned over all the computers in the cluster. An operation is mapped across the input data, with each computer in the cluster performing part of the processing. The outputs of the map function are then accumulated using a Reduce operation. Conceptually, this is very similar to the reducers library that we discussed in some of the recipes in Chapter 4, Improving Performance with Parallel Programming. The following diagram illustrates the three stages of processing:
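To make the partition, map, and reduce stages concrete, here is a minimal sketch in plain Clojure. It runs in a single process and does not use Hadoop or Cascalog; the `partitions` data and the function names `map-partition` and `reduce-counts` are hypothetical, chosen only for illustration. Each inner vector stands in for the slice of data that one machine in a cluster would hold.

```clojure
(require '[clojure.string :as str])

;; Partition stage (simulated): each inner vector stands in for the
;; lines stored on one machine. This data is made up for the example.
(def partitions
  [["the quick brown fox" "jumps over"]
   ["the lazy dog"]
   ["the end"]])

;; Map stage: one "machine" turns its own lines into partial word counts.
(defn map-partition [lines]
  (frequencies (mapcat #(str/split % #"\s+") lines)))

;; Reduce stage: merge the partial counts from every partition,
;; summing the counts for words that appear in more than one.
(defn reduce-counts [partials]
  (apply merge-with + partials))

;; On a real cluster each call to map-partition would run on a
;; different computer; here plain map runs them one after another.
(def word-counts
  (reduce-counts (map map-partition partitions)))

(println (get word-counts "the"))
```

Because `map-partition` only looks at its own slice of the data, and `merge-with +` gives the same totals however the partial results are grouped, the work can be spread across machines without changing the answer. That independence is what the MapReduce model relies on.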

Clojure itself doesn't have any features for distributed processing. However, it has excellent interoperability with the Java Virtual Machine, and Java has a number of libraries and systems for creating and using distributed systems. In this chapter's recipes, we'll primarily use Hadoop (http://hadoop.apache.org/), and we'll especially focus on Cascading (http://www.cascading.org/) and the Clojure wrapper for this library, Cascalog (https://github.com/nathanmarz/cascalog). This toolchain makes distributed processing much simpler.

All of these systems also work on a single machine, such as a developer's workstation. This is what we'll use in most of the recipes in this chapter. The code should work in a multiserver cluster environment too.