A Ceph cluster typically consists of several nodes, each with multiple disk drives, and these drives can be of mixed types. For example, your Ceph nodes might contain SATA, NL-SAS, SAS, SSD, or even PCIe-based drives. Ceph gives you the flexibility to create pools on specific drive types: for example, you can create a high-performance pool from a set of SSDs, or a high-capacity, low-cost pool from SATA drives.

In this recipe, we will create a pool named ssd-pool backed by SSD disks and another pool named sata-pool backed by SATA disks. To achieve this, we will edit the CRUSH map and make the necessary configuration changes.

The Ceph cluster that we deployed and have experimented with in this book runs on virtual machines and has no real SSDs behind it. Hence, for learning purposes, we will treat a few of the virtual disks as SSDs. The procedure is exactly the same on a Ceph cluster with real SSDs.

For the following demonstration, let's assume that osd.0, osd.3, and osd.6 are SSD disks, on which we will create the SSD pool. Similarly, let's assume that osd.1, osd.4, and osd.7 are SATA disks, which will host the SATA pool.

Let's begin the configuration:

- Get the current CRUSH map and decompile it:

  `# ceph osd getcrushmap -o crushmapdump`

  `# crushtool -d crushmapdump -o crushmapdump-decompiled`

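- Edit the `crushmapdump-decompiled` CRUSH map file and add the following section after the `root default` section. It defines two new root buckets, `ssd` and `sata`, containing the SSD and SATA OSDs respectively: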
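As a hedged sketch of what this section can look like, the shell function below prints `root` buckets in the text syntax that `crushtool -d` produces, using the OSD layout assumed in this recipe. The function name `crush_root`, the bucket IDs `-5` and `-6`, the `straw` algorithm, and the weight `0.010` are illustrative assumptions: bucket IDs must be negative and not already used in your map, and the weights would normally match what your OSDs already carry under `root default`.

```shell
# Hypothetical helper: print a CRUSH "root" bucket in the text format that
# `crushtool -d` produces. Arguments: bucket name, bucket id (assumed:
# negative and unused in your map), per-OSD weight (assumed), OSD names.
crush_root() {
  name=$1 id=$2 weight=$3
  shift 3
  printf 'root %s {\n' "$name"
  printf '\tid %s\n' "$id"
  printf '\talg straw\n'
  printf '\thash 0\t# rjenkins1\n'
  for osd in "$@"; do
    printf '\titem %s weight %s\n' "$osd" "$weight"
  done
  printf '}\n'
}

# Assumed layout from this recipe: SSDs are osd.0/3/6, SATA disks osd.1/4/7
crush_root ssd -5 0.010 osd.0 osd.3 osd.6
crush_root sata -6 0.010 osd.1 osd.4 osd.7
```

You can redirect this output to a file and paste it below the closing brace of `root default`.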

- Create the CRUSH rules by adding the following rules under the `rules` section of the CRUSH map, and then save and exit the file:
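A matching sketch for the rules, again hypothetical in its naming (`crush_rule`, `ssd-pool`, `sata-pool`) and numbering: ruleset `1` for the SSD rule agrees with the value this recipe later assigns to `ssd-pool`, while ruleset `2` for the SATA rule is an assumption. Each rule starts at one of the new roots (`step take`) and picks distinct OSDs beneath it. Because the failure domain here is `osd` rather than `host`, nothing in these rules forces replicas onto different nodes. Depending on the Ceph release, decompiled rules label this number either `ruleset` or `id`, so follow whichever keyword your own map uses.

```shell
# Hypothetical helper: print a replicated CRUSH rule that starts at one root
# bucket and chooses distinct OSDs beneath it.
# Arguments: rule name, ruleset number, root bucket name.
crush_rule() {
  name=$1 ruleset=$2 root=$3
  printf 'rule %s {\n' "$name"
  printf '\truleset %s\n' "$ruleset"
  printf '\ttype replicated\n'
  printf '\tmin_size 1\n'
  printf '\tmax_size 10\n'
  # Start placement at the given root bucket (ssd or sata)
  printf '\tstep take %s\n' "$root"
  # Pick as many distinct OSDs as the pool has replicas
  printf '\tstep chooseleaf firstn 0 type osd\n'
  printf '\tstep emit\n'
  printf '}\n'
}

# ruleset 1 matches what this recipe later sets on ssd-pool; 2 is assumed
crush_rule ssd-pool 1 ssd
crush_rule sata-pool 2 sata
```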

- Compile and inject the new CRUSH map in the Ceph cluster:

  `# crushtool -c crushmapdump-decompiled -o crushmapdump-compiled`

  `# ceph osd setcrushmap -i crushmapdump-compiled`
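Before running `ceph osd setcrushmap`, it can be worth simulating the edited map offline: `crushtool --test` computes placements from the compiled file without touching the cluster. The hypothetical function below, `check_crushmap`, compiles the text map and prints sample mappings for each rule number you pass; three replicas and inputs 0 to 9 are assumed values. Setting `DRY_RUN` makes it print the commands instead of running them.

```shell
# Hypothetical helper. When DRY_RUN is set, print each command instead of
# executing it.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Compile the edited text map, then simulate placements for each rule
# number given after the two file names (assumed: 3 replicas, x = 0..9).
check_crushmap() {
  src=$1 bin=$2
  shift 2
  run crushtool -c "$src" -o "$bin" || return 1
  for rule in "$@"; do
    run crushtool -i "$bin" --test --show-mappings \
      --rule "$rule" --num-rep 3 --min-x 0 --max-x 9 || return 1
  done
}
```

For example, `check_crushmap crushmapdump-decompiled crushmapdump-compiled 1 2` should show rule 1 mapping only to OSDs 0, 3, and 6 and rule 2 only to OSDs 1, 4, and 7; if it does, inject the compiled map with the `ceph osd setcrushmap` command above.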

- Once the new CRUSH map has been applied to the Ceph cluster, check the OSD tree view for the new arrangement, and notice the `ssd` and `sata` root buckets:

  `# ceph osd tree`
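To check the new hierarchy without reading the whole tree, a small filter like the hypothetical `osds_under_root` below prints the OSDs listed beneath one root bucket in the plain-text `ceph osd tree` output. It assumes that OSD rows follow the root they belong to, as they do in the tree view. With a hand-edited map like this one, the same OSD can appear both under its host in `root default` and under one of the new roots.

```shell
# Hypothetical helper: read `ceph osd tree` text on stdin and print the OSD
# names that appear beneath the root bucket named in $1.
osds_under_root() {
  awk -v want="$1" '
    {
      # Remember the most recent "root <name>" row
      for (i = 1; i < NF; i++)
        if ($i == "root") cur = $(i + 1)
      # Print OSD names only while inside the wanted root
      for (i = 1; i <= NF; i++)
        if (cur == want && $i ~ /^osd\.[0-9]+$/) print $i
    }'
}
```

In this recipe's layout, `ceph osd tree | osds_under_root ssd` should print only osd.0, osd.3, and osd.6.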

- Create and verify the `ssd-pool`:

  - Create the `ssd-pool`:

    `# ceph osd pool create ssd-pool 8 8`

  - Verify the `ssd-pool`; notice that its `crush_ruleset` is `0`, which is the default:

    `# ceph osd dump | grep -i ssd`

  - Let's change the `crush_ruleset` to `1` so that the pool's data is placed on the SSD disks:

    `# ceph osd pool set ssd-pool crush_ruleset 1`

  - Verify the pool and notice the change in `crush_ruleset`:

    `# ceph osd dump | grep -i ssd`
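`grep -i ssd` matches any line of `ceph osd dump` that mentions `ssd`. A stricter, hypothetical helper, `pool_rule`, matches the pool by its quoted name and prints only its rule number. It assumes the pool line layout shown in its comment and accepts both `crush_ruleset` (the field name used in this recipe) and `crush_rule` (its name in newer releases).

```shell
# Hypothetical helper: print the CRUSH rule number of one pool from
# `ceph osd dump` output on stdin. Assumed pool line layout:
#   pool 4 'ssd-pool' replicated size 3 min_size 2 crush_ruleset 1 ...
pool_rule() {
  awk -v pool="'$1'" '
    $1 == "pool" && $3 == pool {
      for (i = 4; i < NF; i++)
        if ($i == "crush_ruleset" || $i == "crush_rule") print $(i + 1)
    }'
}
```

`ceph osd dump | pool_rule ssd-pool` should print 0 before the `ceph osd pool set` step and 1 after it.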


- Similarly, create and verify the `sata-pool`. This time, set its `crush_ruleset` to the ruleset number of the SATA rule.
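The same create, set, and verify steps apply to `sata-pool`. The hypothetical `pool_commands` function below prints them for any pool so that they can be reviewed before running; the placement-group count of 8 is carried over from `ssd-pool`, and ruleset `2` is only correct if that is the number you gave the SATA rule. On newer Ceph releases the pool property is `crush_rule` and takes a rule name instead of a number, so the second command would need adjusting there.

```shell
# Hypothetical helper: print the commands that create a replicated pool,
# bind it to a CRUSH ruleset, and show its entry in the OSD map dump.
# Arguments: pool name, PG count (used for pg_num and pgp_num), ruleset.
pool_commands() {
  pool=$1 pgs=$2 ruleset=$3
  printf 'ceph osd pool create %s %s %s\n' "$pool" "$pgs" "$pgs"
  printf 'ceph osd pool set %s crush_ruleset %s\n' "$pool" "$ruleset"
  printf 'ceph osd dump | grep -i %s\n' "$pool"
}
```

`pool_commands sata-pool 8 2` prints the commands; once they look right, `pool_commands sata-pool 8 2 | sh` runs them.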

- Let's add some objects to these pools:

  - Since these pools are new, they should not contain any objects, but let's verify this by using the `rados ls` command:

    `# rados -p ssd-pool ls`

    `# rados -p sata-pool ls`

  - We will now add an object to these pools using the `rados put` command. The syntax is `rados -p <pool_name> put <object_name> <file_name>`:

    `# rados -p ssd-pool put dummy_object1 /etc/hosts`

    `# rados -p sata-pool put dummy_object1 /etc/hosts`

  - Using the `rados ls` command, list these pools again. You should see the object names that we stored in the last step:

    `# rados -p ssd-pool ls`

    `# rados -p sata-pool ls`
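The put-then-list check can be wrapped so that it fails loudly when an object is missing. In this hypothetical sketch, `put_and_verify` stores one file under one object name in each pool it is given and then looks for an exact match in `rados ls`. The `RADOS` variable, which defaults to the real `rados` CLI, is an assumption added so the function can be exercised against a stub.

```shell
# Hypothetical helper: store a file as an object in each given pool, then
# confirm that `rados ls` lists it. Arguments: object name, file, pools.
# RADOS defaults to the real CLI; override it (e.g. with a stub) to test.
put_and_verify() {
  obj=$1 file=$2
  shift 2
  for pool in "$@"; do
    "${RADOS:-rados}" -p "$pool" put "$obj" "$file" || return 1
    # -x: the whole listed line must equal the object name
    if "${RADOS:-rados}" -p "$pool" ls | grep -qx -- "$obj"; then
      echo "$pool: $obj stored"
    else
      echo "$pool: $obj missing" >&2
      return 1
    fi
  done
}
```

`put_and_verify dummy_object1 /etc/hosts ssd-pool sata-pool` repeats the put and list steps for both pools.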


- Now for the most interesting part of this recipe, verifying that the objects are stored on the correct set of OSDs:

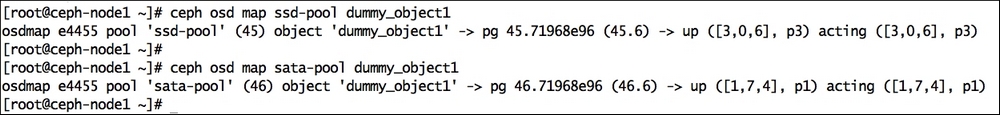

- For the

ssd-pool, we have used the OSDs 0, 3, and 6. Check theosd mapforssd-poolusing the syntax:ceph osd map <pool_name> <object_name>:# ceph osd map ssd-pool dummy_object1 - Similarly, check the object from

sata-pool:# ceph osd map sata-pool dummy_object1


As shown in the preceding screenshot, the object created in `ssd-pool` is stored on the OSD set [3,0,6], and the object created in `sata-pool` is stored on the OSD set [1,7,4]. This is the expected output, and it verifies that each pool uses the set of OSDs we requested. This type of configuration can be very useful in a production setup, where you may want a fast pool built only on SSDs and a medium- or slower-performing pool built on spinning disks.
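Reading the OSD sets off the screen works for one object, but the same comparison can be scripted. The hypothetical `acting_within` function below reads one line of `ceph osd map` output, extracts the acting set, and fails if any OSD falls outside the list you pass. It assumes the `acting ([...], pN)` layout shown in its comment.

```shell
# Hypothetical helper: read one line of `ceph osd map` output on stdin and
# check that every OSD in its acting set is among the arguments.
# Assumed input shape: ... -> up ([3,0,6], p3) acting ([3,0,6], p3)
acting_within() {
  # Extract the bracketed list after "acting (" and split it on commas
  acting=$(sed -n 's/.*acting (\[\([0-9,]*\)\].*/\1/p' | tr ',' ' ')
  [ -n "$acting" ] || { echo "no acting set found" >&2; return 1; }
  for osd in $acting; do
    case " $* " in
      *" $osd "*) ;;  # allowed OSD
      *) echo "osd.$osd is outside: $*" >&2; return 1 ;;
    esac
  done
  echo "acting set $acting is within: $*"
}
```

For this recipe: `ceph osd map ssd-pool dummy_object1 | acting_within 0 3 6` and `ceph osd map sata-pool dummy_object1 | acting_within 1 4 7`.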