While the previous chapter focused on pattern recognition in images, this chapter is all about recognizing patterns in sensor data, which, in contrast to images, has temporal dependencies. We will discuss how to recognize granular daily activities such as walking, sitting, and running using mobile phone inertial sensors. The chapter also provides references to related research and emphasizes best practices in the activity recognition community.

The topics covered in this chapter will include the following:

- Introducing activity recognition, covering mobile phone sensors and the activity recognition pipeline

- Collecting sensor data from mobile devices

- Discussing activity classification and model evaluation

- Deploying the activity recognition model

Activity recognition is an underpinning step in behavior analysis, with applications in healthy lifestyle support, fitness tracking, remote assistance, security, elderly care, and so on. It transforms low-level data from sensors such as the accelerometer, gyroscope, pressure sensor, and GPS into a higher-level description of behavior primitives. In most cases, these are basic activities, for example, walking, sitting, lying, jumping, and so on, as shown in the following image, or they could be more complex behaviors, such as going to work, preparing breakfast, shopping, and so on:

In this chapter, we will discuss how to add activity recognition functionality to a mobile application. We will first look at what an activity recognition problem looks like, what kind of data we need to collect, what the main challenges are, and how to address them.

Later, we will follow an example to see how to actually implement activity recognition in an Android application, including data collection, data transformation, and building a classifier.

Let's start!

Let's first review what kinds of mobile phone sensors there are and what they report. Most smart devices are now equipped with several built-in sensors that measure the motion, position, orientation, and conditions of the ambient environment. As sensors provide measurements with high precision, frequency, and accuracy, it is possible to reconstruct complex user motions, gestures, and movements. Sensors are often incorporated in various applications; for example, gyroscope readings are used to steer an object in a game, GPS data is used to locate the user, and accelerometer data is used to infer the activity that the user is performing, for example, cycling, running, or walking.

The next image shows a couple of examples of the kinds of interactions the sensors are able to detect:

Mobile phone sensors can be classified into the following three broad categories:

- Motion sensors measure acceleration and rotational forces along the three perpendicular axes. Examples of sensors in this category include accelerometers, gravity sensors, and gyroscopes.

- Environmental sensors measure a variety of environmental parameters, such as illumination, air temperature, pressure, and humidity. This category includes barometers, photometers, and thermometers.

- Position sensors measure the physical position of a device. This category includes orientation sensors and magnetometers.

Note

More detailed descriptions for different mobile platforms are available at the following links:

- Android sensors framework: http://developer.android.com/guide/topics/sensors/sensors_overview.html

- iOS Core Motion framework: https://developer.apple.com/library/ios/documentation/CoreMotion/Reference/CoreMotion_Reference/

- Windows Phone: https://msdn.microsoft.com/en-us/library/windows/apps/hh202968(v=vs.105).aspx

In this chapter, we will work only with Android's sensors framework.

Classifying multidimensional time-series sensor data is inherently more complex than classifying the traditional, nominal data that we saw in the previous chapters. First, each observation is temporally connected to the previous and following observations, making it very difficult to classify a single set of observations in isolation. Second, the data obtained by sensors at different time points is stochastic, that is, unpredictable due to the influence of sensor noise, environmental disturbances, and many other factors. Moreover, an activity can comprise various sub-activities executed in different manners, and each person performs the activity a bit differently, which results in high intraclass differences. Together, these factors make an activity recognition model imprecise, so new data is often misclassified. One of the highly desirable properties of an activity recognition classifier is therefore continuity and consistency in the recognized activity sequence.

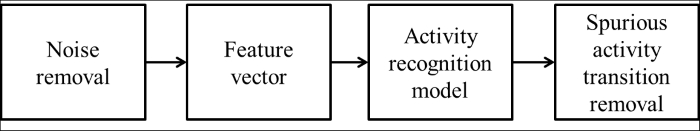

To deal with these challenges, activity recognition is organized as a pipeline, as shown in the following:

In the first step, we attenuate as much noise as we can, for example, by reducing the sensor sampling rate, removing outliers, applying high/low-pass filters, and so on. In the next phase, we construct a feature vector; for instance, we convert sensor data from the time domain to the frequency domain by applying the Discrete Fourier Transform (DFT). The DFT takes a list of samples as input and returns a list of sinusoid coefficients ordered by frequency. These coefficients represent the combination of frequencies present in the original list of samples.
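The filtering and DFT steps can be sketched in plain Java as follows. This is only an illustration under stated assumptions: the class `DftFeatures`, its method names, and the synthetic 64-sample window are hypothetical and are not taken from the data collection app, and the DFT is computed directly from its O(N²) definition rather than with a fast FFT implementation, which is what you would normally use in practice.

```java
/** Illustrative sketch (hypothetical class): low-pass filtering and DFT magnitudes for one window. */
final class DftFeatures {

    /** Exponential low-pass filter; alpha in (0, 1], smaller values smooth more. */
    static double[] lowPass(double[] samples, double alpha) {
        double[] out = new double[samples.length];
        if (samples.length == 0) {
            return out;
        }
        out[0] = samples[0];
        for (int i = 1; i < samples.length; i++) {
            out[i] = out[i - 1] + alpha * (samples[i] - out[i - 1]);
        }
        return out;
    }

    /** Returns the magnitudes |X[k]| for k = 0..N/2, computed from the DFT definition. */
    static double[] magnitudes(double[] samples) {
        int n = samples.length;
        double[] mags = new double[n / 2 + 1];
        for (int k = 0; k <= n / 2; k++) {
            double re = 0.0;
            double im = 0.0;
            for (int t = 0; t < n; t++) {
                double angle = 2.0 * Math.PI * k * t / n;
                re += samples[t] * Math.cos(angle);
                im -= samples[t] * Math.sin(angle);
            }
            mags[k] = Math.hypot(re, im);
        }
        return mags;
    }

    /** Index of the strongest non-DC frequency bin (bin 0 is the constant offset). */
    static int peakBin(double[] mags) {
        if (mags.length < 2) {
            return 0;
        }
        int best = 1;
        for (int k = 2; k < mags.length; k++) {
            if (mags[k] > mags[best]) {
                best = k;
            }
        }
        return best;
    }

    public static void main(String[] args) {
        // Synthetic window (an assumption for the demo): 4 full cycles in 64 samples.
        int n = 64;
        double[] window = new double[n];
        for (int t = 0; t < n; t++) {
            window[t] = Math.sin(2.0 * Math.PI * 4 * t / n);
        }
        double[] mags = magnitudes(window);
        System.out.println("Strongest frequency bin: " + peakBin(mags));
    }
}
```

For this synthetic input, the strongest bin is bin 4, matching the four cycles in the window. With real accelerometer data, the magnitudes (possibly together with other statistics) would form the feature vector for one window of samples.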

Note

A gentle introduction to the Fourier transform, written by Pete Bevelacqua, is available at http://www.thefouriertransform.com/.

If you want more technical and theoretical background on the Fourier transform, take a look at the eighth and ninth lectures in the class by Robert Gallager and Lizhong Zheng at MIT open course:

http://theopenacademy.com/content/principles-digital-communication

Next, based on the feature vector and a set of training data, we can build an activity recognition model that assigns an atomic action to each observation. Therefore, for each new sensor reading, the model will output the most probable activity label. However, models make mistakes. Hence, the last phase smooths the transitions between activities by removing transitions that cannot occur in reality. For example, it is not physically feasible for the sequence lying-standing-lying to occur in less than half a second, so such a sequence is smoothed to lying-lying-lying.
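A minimal rule-based version of this smoothing step might look like the following sketch. The class `TransitionSmoother` and the parameter `minRun` are hypothetical names introduced for illustration; `minRun` stands for the number of consecutive predictions that corresponds to the shortest plausible activity duration, which depends on how often the classifier emits a label.

```java
import java.util.Arrays;

/** Illustrative sketch (hypothetical class): removes implausibly short activity runs. */
final class TransitionSmoother {

    /**
     * Replaces every run of identical labels that is shorter than minRun and
     * flanked on both sides by the same label with that flanking label.
     * The input array is left unchanged.
     */
    static String[] smooth(String[] labels, int minRun) {
        String[] out = Arrays.copyOf(labels, labels.length);
        int start = 0;
        while (start < out.length) {
            // Find the end (exclusive) of the run that begins at 'start'.
            int end = start;
            while (end < out.length && out[end].equals(out[start])) {
                end++;
            }
            boolean hasBothNeighbors = start > 0 && end < out.length;
            if (hasBothNeighbors
                    && (end - start) < minRun
                    && out[start - 1].equals(out[end])) {
                Arrays.fill(out, start, end, out[start - 1]);
            }
            start = end;
        }
        return out;
    }

    public static void main(String[] args) {
        String[] predicted = {"lying", "lying", "standing", "lying", "lying"};
        System.out.println(Arrays.toString(smooth(predicted, 2)));
    }
}
```

With `minRun` set to 2, the single `standing` prediction between two `lying` runs is replaced by `lying`, while a short run between two different activities (for example, walking-standing-running) is left untouched.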

The activity recognition model is constructed with a supervised learning approach, which consists of training and classification steps. In the training step, a set of labeled data is provided to train the model. In the classification step, the trained model assigns a label to new, unseen data. The data in both steps must be pre-processed with the same set of tools, such as filtering and feature-vector computation.
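The two steps, and the requirement that both share the same pre-processing, can be illustrated with a deliberately simple stand-in classifier. The sketch below uses a nearest-centroid rule over two hand-picked features (mean and standard deviation of a window); this is an assumption made purely for illustration and is not the classifier built later in the chapter. All names, including `NearestCentroidSketch` and `preprocess`, are hypothetical.

```java
import java.util.HashMap;
import java.util.Map;

/** Illustrative sketch (hypothetical class): training and classification with shared pre-processing. */
final class NearestCentroidSketch {

    private final Map<String, double[]> centroids = new HashMap<>();

    /** Shared pre-processing: called from both train() and classify(). */
    static double[] preprocess(double[] window) {
        double mean = 0.0;
        for (double v : window) {
            mean += v;
        }
        mean /= window.length;
        double variance = 0.0;
        for (double v : window) {
            variance += (v - mean) * (v - mean);
        }
        variance /= window.length;
        return new double[] {mean, Math.sqrt(variance)};
    }

    /** Training step: averages the feature vectors of each labeled activity. */
    void train(double[][] windows, String[] labels) {
        Map<String, double[]> sums = new HashMap<>();
        Map<String, Integer> counts = new HashMap<>();
        for (int i = 0; i < windows.length; i++) {
            double[] features = preprocess(windows[i]);
            double[] sum = sums.computeIfAbsent(labels[i], k -> new double[features.length]);
            for (int d = 0; d < features.length; d++) {
                sum[d] += features[d];
            }
            counts.merge(labels[i], 1, Integer::sum);
        }
        centroids.clear();
        for (Map.Entry<String, double[]> entry : sums.entrySet()) {
            double[] centroid = entry.getValue().clone();
            int count = counts.get(entry.getKey());
            for (int d = 0; d < centroid.length; d++) {
                centroid[d] /= count;
            }
            centroids.put(entry.getKey(), centroid);
        }
    }

    /** Classification step: returns the label of the closest centroid, or null if untrained. */
    String classify(double[] window) {
        double[] features = preprocess(window);
        String best = null;
        double bestDistance = Double.POSITIVE_INFINITY;
        for (Map.Entry<String, double[]> entry : centroids.entrySet()) {
            double distance = 0.0;
            for (int d = 0; d < features.length; d++) {
                double diff = features[d] - entry.getValue()[d];
                distance += diff * diff;
            }
            if (distance < bestDistance) {
                bestDistance = distance;
                best = entry.getKey();
            }
        }
        return best;
    }
}
```

Because `preprocess` is the single entry point for feature computation, a window seen at classification time is guaranteed to be transformed exactly as the training windows were. If the two steps used different filters or features, the learned centroids would no longer be comparable with the new data.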

The post-processing phase, that is, spurious activity removal, can also be a model itself and, hence, also requires a learning step. In this case, the pre-processing step for that model includes activity recognition, which makes such an arrangement of classifiers a meta-learning problem. To avoid overfitting, it is important that the dataset used for training the post-processing phase is not the same as the one used for training the activity recognition model.

We will roughly follow a lecture on smartphone programming by Professor Andrew T. Campbell from Dartmouth College and leverage the data collection mobile app that they developed in the class (Campbell, 2011).

The plan consists of a training phase and a deployment phase. The training phase, shown in the following image, boils down to the following steps:

- Install Android Studio and import MyRunsDataCollector.zip.

- Load the application onto your Android phone.

- Collect your data, for example, standing, walking, and running, and transform the data to a feature vector comprising FFT transforms. Don't panic; low-level signal processing functions such as FFT will not be written from scratch, as we will use existing code to do that. The data will be saved on your phone in a file called features.arff.

- Create and evaluate an activity recognition classifier using the exported data, and implement a filter for removing spurious activity transitions.

- Plug the classifier back into the mobile application.

If you don't have an Android phone, or if you want to skip all the steps related to the mobile application, just grab an already-collected dataset located in data/features.arff and jump directly to the Building a classifier section.