In this section, we will demonstrate one technique for classifying visual data using Neuroph, a Java-based neural network framework that supports a variety of neural network architectures. Its open source library provides support and plugins for use in other applications. In this example, we will use its neural network editor, Neuroph Studio, to create a network that can be saved and used in other applications. Neuroph Studio is available for download here: http://neuroph.sourceforge.net/download.html. We are building upon the process shown here: http://neuroph.sourceforge.net/image_recognition.htm.

For our example, we will create a multilayer perceptron (MLP) network and train it to recognize images. We can both train and test the network in Neuroph Studio. First, it is important to understand how an MLP network receives and interprets image data. A color image can be represented by three two-dimensional arrays, one for each color component: red, green, and blue. Each element of an array holds the value of that color component for one specific pixel in the image. These arrays are then flattened into a single one-dimensional array, which serves as the input to the neural network.
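The following minimal sketch makes the idea concrete. It uses plain Java with `java.awt.image.BufferedImage` and does not use Neuroph. It splits an image into red, green, and blue arrays and then flattens them into one input vector. Several details are assumptions for illustration: the class name `ImageFlattener`, the red-then-green-then-blue ordering, and the scaling of values to the 0.0 to 1.0 range. Neuroph's own internal ordering and normalization may differ.

```java
import java.awt.image.BufferedImage;
import java.util.Arrays;

public class ImageFlattener {

    // Splits an image into red, green, and blue 2-D arrays, then flattens them
    // into one vector: all red values, then all green, then all blue.
    // Scaling 0..255 down to 0.0..1.0 is an illustrative assumption.
    public static double[] flatten(BufferedImage img) {
        int w = img.getWidth();
        int h = img.getHeight();
        double[][] red = new double[h][w];
        double[][] green = new double[h][w];
        double[][] blue = new double[h][w];

        for (int y = 0; y < h; y++) {
            for (int x = 0; x < w; x++) {
                int rgb = img.getRGB(x, y); // packed ARGB value
                red[y][x] = ((rgb >> 16) & 0xFF) / 255.0;
                green[y][x] = ((rgb >> 8) & 0xFF) / 255.0;
                blue[y][x] = (rgb & 0xFF) / 255.0;
            }
        }

        // Copy the three 2-D arrays, row by row, into one 1-D input array
        double[] input = new double[3 * w * h];
        int i = 0;
        for (double[][] channel : new double[][][] {red, green, blue}) {
            for (int y = 0; y < h; y++) {
                for (int x = 0; x < w; x++) {
                    input[i++] = channel[y][x];
                }
            }
        }
        return input;
    }

    public static void main(String[] args) {
        BufferedImage img = new BufferedImage(2, 1, BufferedImage.TYPE_INT_RGB);
        img.setRGB(0, 0, 0xFF0000); // pure red pixel
        img.setRGB(1, 0, 0x0000FF); // pure blue pixel
        System.out.println(Arrays.toString(flatten(img)));
        // prints [1.0, 0.0, 0.0, 0.0, 0.0, 1.0]
    }
}
```

A 20 by 20 color image therefore becomes a vector of 1,200 values, one per pixel per color component.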

To begin, create a new Neuroph Studio project.

We will name our project RecognizeFaces because we are going to train the neural network to recognize images of human faces.

Next, we create a new file in our project. There are many file types to choose from; we will choose the Image Recognition type.

Click Next and then click Add directory. We created a directory on our local machine and added several different black-and-white images of faces to use for training. You can find such images by searching Google Images or another search engine. In general, the more high-quality training images you have, the better your network is likely to perform.

After you click Next, you will be asked to select an image that the network should not recognize. This image helps reduce false recognitions. You may need to try several candidates, depending on the images you want to recognize. We chose a simple blue square from another directory on our local machine. If you are training with other types of image, other color blocks may work better.
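If you do not have a suitable image at hand, you can generate one. The following minimal sketch draws a solid blue square with Java's standard imaging classes and saves it as a PNG. The class name, the 20-pixel size, and the output path `junk/blue_square.png` are hypothetical choices, not Neuroph requirements. The file is written outside the training-image directory, matching the approach described above.

```java
import java.awt.Color;
import java.awt.Graphics2D;
import java.awt.image.BufferedImage;
import java.io.File;
import java.io.IOException;
import javax.imageio.ImageIO;

public class JunkImageMaker {

    // Creates a size x size image filled with a single color.
    public static BufferedImage solidSquare(int size, Color color) {
        BufferedImage img = new BufferedImage(size, size, BufferedImage.TYPE_INT_RGB);
        Graphics2D g = img.createGraphics();
        g.setColor(color);
        g.fillRect(0, 0, size, size);
        g.dispose();
        return img;
    }

    public static void main(String[] args) throws IOException {
        BufferedImage blue = solidSquare(20, Color.BLUE);
        // Hypothetical path, deliberately separate from the training images
        File out = new File("junk/blue_square.png");
        out.getParentFile().mkdirs();
        ImageIO.write(blue, "png", out);
        System.out.println("Wrote " + out.getPath());
    }
}
```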

Next, we provide the network training parameters. We label the training dataset and set the image resolution. A width and height of 20 pixels is a good starting point. You may need to adjust these values through some trial and error to improve your results. These settings control image scaling. When images are scaled down to a smaller size, the network has fewer inputs to process and can learn faster.
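The following minimal sketch shows why scaling speeds things up. It resizes an image with standard Java 2D calls and counts the input values the network would need. It assumes three values (red, green, blue) per pixel, as described earlier. The class and method names are hypothetical, and Neuroph performs its own scaling internally.

```java
import java.awt.Graphics2D;
import java.awt.RenderingHints;
import java.awt.image.BufferedImage;

public class ImageScaler {

    // Scales an image to width x height using bilinear interpolation.
    public static BufferedImage scale(BufferedImage src, int width, int height) {
        BufferedImage dst = new BufferedImage(width, height, BufferedImage.TYPE_INT_RGB);
        Graphics2D g = dst.createGraphics();
        g.setRenderingHint(RenderingHints.KEY_INTERPOLATION,
                RenderingHints.VALUE_INTERPOLATION_BILINEAR);
        g.drawImage(src, 0, 0, width, height, null);
        g.dispose();
        return dst;
    }

    // Input values needed for a color image: one per pixel per RGB component.
    public static int inputCount(int width, int height) {
        return width * height * 3;
    }

    public static void main(String[] args) {
        BufferedImage photo = new BufferedImage(640, 480, BufferedImage.TYPE_INT_RGB);
        BufferedImage small = scale(photo, 20, 20);
        System.out.println(small.getWidth() + " x " + small.getHeight()); // 20 x 20
        System.out.println("Inputs before scaling: " + inputCount(640, 480)); // 921600
        System.out.println("Inputs after scaling:  " + inputCount(20, 20));   // 1200
    }
}
```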

Finally, we can create our network. We assign it a label and choose its transfer function. The default, Sigmoid, works for most networks, but if your results are not optimal you may want to try Tanh. The default value for Hidden Layers Neuron Counts is 12, which is a good starting point. Increasing the number of neurons increases training time. It can also reduce the network's ability to generalize to images it has not seen. As with the earlier values, some trial and error may be needed to find the best settings for a given network. Select Finish when you are done.
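The following plain-Java sketch illustrates the two transfer functions and the cost of adding hidden neurons. It assumes a fully connected network with one hidden layer and a bias weight on every hidden and output neuron. The sizes of 1,200 inputs (20 by 20 pixels times three color values) and 5 output labels are hypothetical, not values read from Neuroph Studio.

```java
public class MlpSettings {

    // Sigmoid (logistic) transfer function: outputs lie in (0, 1).
    public static double sigmoid(double x) {
        return 1.0 / (1.0 + Math.exp(-x));
    }

    // Hyperbolic tangent transfer function: outputs lie in (-1, 1).
    public static double tanh(double x) {
        return Math.tanh(x);
    }

    // Trainable weights in a one-hidden-layer, fully connected network,
    // assuming each hidden and output neuron also has a bias weight.
    public static int weightCount(int inputs, int hidden, int outputs) {
        return (inputs + 1) * hidden + (hidden + 1) * outputs;
    }

    public static void main(String[] args) {
        for (double x : new double[] {-2.0, 0.0, 2.0}) {
            System.out.printf("x=%5.1f  sigmoid=%.4f  tanh=%.4f%n", x, sigmoid(x), tanh(x));
        }
        // Hypothetical sizes: 1200 inputs, 5 labels
        System.out.println(weightCount(1200, 12, 5)); // 14477
        System.out.println(weightCount(1200, 24, 5)); // 28949
    }
}
```

Doubling the hidden layer from 12 to 24 neurons roughly doubles the number of weights adjusted on every training pass. This is one reason training slows down. The extra capacity also makes it easier for the network to memorize its training images rather than generalize.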

Once we have created our network, we need to train it. Begin by double-clicking your neural network in the left pane; this is the file with the .nnet extension. This opens a visual representation of the network in the main window. Then drag the dataset, the file with the .tset extension, from the left pane onto the top node of the neural network. The description on the node changes to the name of your dataset. Next, click the Train button in the top-left part of the window.

This opens a dialog box with the training settings. You can leave the default values for Max Error, Learning Rate, and Momentum. Make sure the Display Error Graph box is checked so that you can watch the error rate fall as training progresses.
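The following minimal sketch shows what Learning Rate, Momentum, and Max Error control. It trains a single sigmoid neuron by online gradient descent with momentum and stops once the mean squared error reaches the maximum error. This is a simplified stand-in written for illustration, not Neuroph's training code. The class name, the toy OR data set, and the parameter values 0.5, 0.7, and 0.01 are assumptions.

```java
import java.util.Arrays;

public class MomentumTraining {

    // Output of a single sigmoid neuron; w[x.length] is the bias weight.
    public static double output(double[] w, double[] x) {
        double net = w[x.length];
        for (int i = 0; i < x.length; i++) {
            net += w[i] * x[i];
        }
        return 1.0 / (1.0 + Math.exp(-net));
    }

    // Mean squared error over the whole training set.
    public static double mse(double[] w, double[][] inputs, double[] targets) {
        double sum = 0.0;
        for (int p = 0; p < inputs.length; p++) {
            double e = targets[p] - output(w, inputs[p]);
            sum += e * e;
        }
        return sum / inputs.length;
    }

    // Online gradient descent with momentum. Stops when the error is at most
    // maxError or after maxEpochs passes over the data.
    public static double[] train(double[][] inputs, double[] targets,
                                 double learningRate, double momentum,
                                 double maxError, int maxEpochs) {
        int n = inputs[0].length;
        double[] w = new double[n + 1];          // all weights start at 0
        double[] lastChange = new double[n + 1]; // previous step per weight
        for (int epoch = 0; epoch < maxEpochs && mse(w, inputs, targets) > maxError; epoch++) {
            for (int p = 0; p < inputs.length; p++) {
                double out = output(w, inputs[p]);
                // Error times the sigmoid derivative out * (1 - out)
                double delta = (targets[p] - out) * out * (1.0 - out);
                for (int i = 0; i <= n; i++) {
                    double x = (i < n) ? inputs[p][i] : 1.0; // bias input is 1
                    // New step = learning-rate step + momentum * previous step
                    double change = learningRate * delta * x + momentum * lastChange[i];
                    w[i] += change;
                    lastChange[i] = change;
                }
            }
        }
        return w;
    }

    public static void main(String[] args) {
        double[][] inputs = {{0, 0}, {0, 1}, {1, 0}, {1, 1}};
        double[] targets = {0, 1, 1, 1}; // logical OR
        double[] w = train(inputs, targets, 0.5, 0.7, 0.01, 100_000);
        System.out.printf("final error = %.4f%n", mse(w, inputs, targets));
        for (double[] x : inputs) {
            System.out.printf("%s -> %.3f%n", Arrays.toString(x), output(w, x));
        }
    }
}
```

Each weight change is the learning-rate step plus a fraction (the momentum) of the previous change, which smooths the updates. The loop ends as soon as the error falls to the maximum error. This per-pass error is the kind of value an error graph plots as training proceeds.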

After you click the Train button, you should see an error graph similar to the following one:

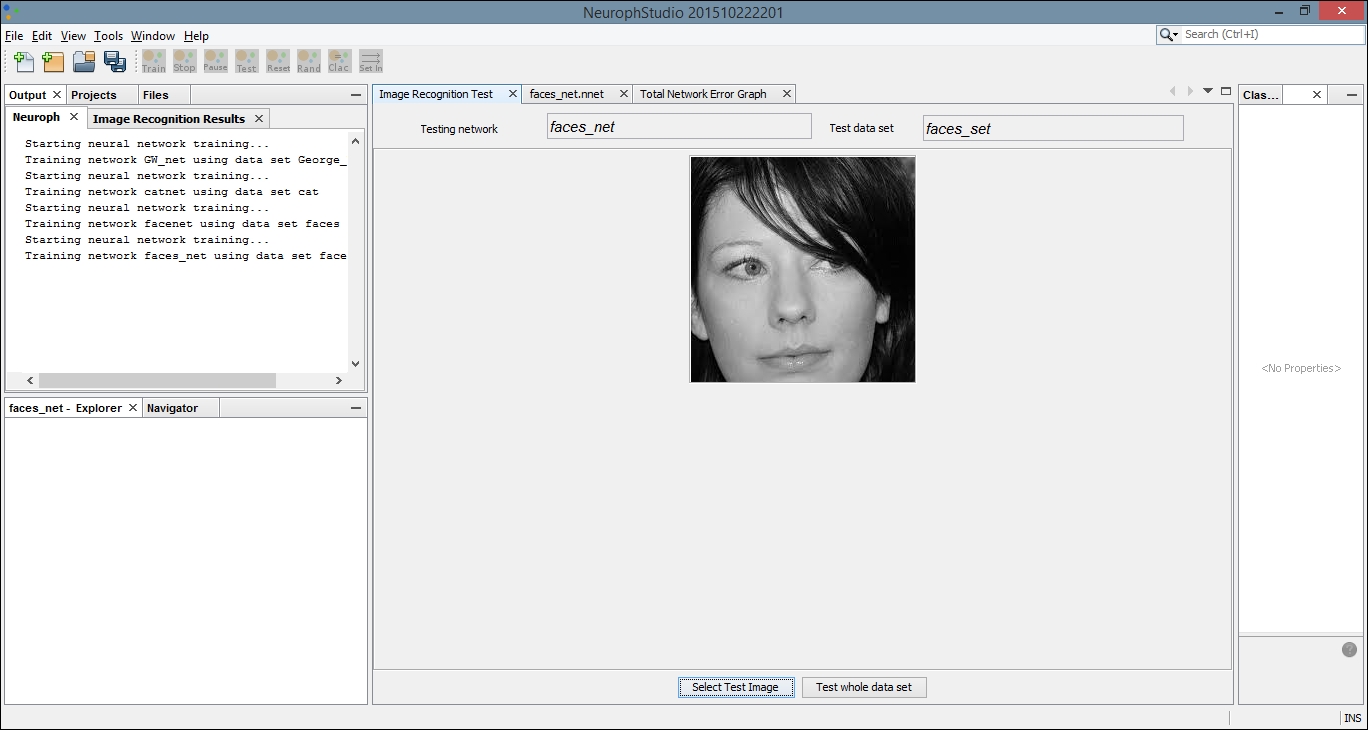

Select the tab titled Image Recognition Test. Then click on the Select Test Image button. We have loaded a simple image of a face that was not included in our original dataset:

Locate the Output tab, in the bottom or left pane. It displays the result of comparing our test image with each image in the training set. The higher the number, the more closely the test image matches that training image. In our run, the last image produced a higher output than the first few comparisons. Visually, the test image is more similar to that training image than to the others in the dataset, so the network produced a stronger recognition for it.

We can now save our network for later use. Select Save from the File menu; the resulting .nnet file can then be used in external applications. The following code shows a simple technique for running test data through your trained network. The NeuralNetwork class is part of the Neuroph core package, and its load method loads the trained network into your project. Notice that we load the network by its name, faces_net. We then retrieve the image recognition plugin from the network. Next, we call the plugin's recognizeImage method with a new image. This call can throw an IOException, so it is wrapped in a try-catch block. The results, a map from each training image label to its score, are printed to the console:

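```java
import java.io.File;
import java.io.IOException;
import java.util.Map;

import org.neuroph.core.NeuralNetwork;
// The package of ImageRecognitionPlugin differs between Neuroph versions
// (older releases used org.neuroph.contrib.imgrec); adjust if needed.
import org.neuroph.imgrec.ImageRecognitionPlugin;

public class FaceRecognizer {
    public static void main(String[] args) {
        // Load the network trained and saved in Neuroph Studio
        NeuralNetwork iRNet = NeuralNetwork.load("faces_net.nnet");

        // Retrieve the image recognition plugin attached to the network
        ImageRecognitionPlugin iRFile =
                (ImageRecognitionPlugin) iRNet.getPlugin(ImageRecognitionPlugin.class);

        try {
            // Score the new image against each image label in the training set
            Map<String, Double> newFaceMap =
                    iRFile.recognizeImage(new File("testFace.jpg"));
            System.out.println(newFaceMap.toString());
        } catch (IOException e) {
            // Handle exceptions, e.g., a missing or unreadable image file
            e.printStackTrace();
        }
    }
}
```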
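The printed map contains one score per training image. An application may need a single answer rather than the full map. The following minimal sketch picks the label with the highest score. The class name `BestMatch` and the sample scores are made up for illustration. With the code above, you would pass in `newFaceMap` instead.

```java
import java.util.HashMap;
import java.util.Map;

public class BestMatch {

    // Returns the label with the highest score, or null if the map is empty.
    public static String bestLabel(Map<String, Double> scores) {
        String best = null;
        double bestScore = Double.NEGATIVE_INFINITY;
        for (Map.Entry<String, Double> entry : scores.entrySet()) {
            if (entry.getValue() > bestScore) {
                best = entry.getKey();
                bestScore = entry.getValue();
            }
        }
        return best;
    }

    public static void main(String[] args) {
        // Made-up scores standing in for the output of recognizeImage
        Map<String, Double> scores = new HashMap<>();
        scores.put("face1", 0.12);
        scores.put("face2", 0.08);
        scores.put("face3", 0.91);
        System.out.println(bestLabel(scores)); // prints face3
    }
}
```

If two labels tie, the winner depends on the map's iteration order, which `HashMap` does not guarantee.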

This process allows us to use a GUI editor application to create our network in a more visual environment, but then embed the trained network into our own applications.