This section describes the first three steps from the plan. If you want to work with the data directly, you can skip this section and continue to the Building a classifier section. There are many open source mobile apps for sensor data collection, including an app by Prof. Campbell that we will use in this chapter. The app implements the essentials for collecting sensor data for different activity classes, for example, standing, walking, running, and others.

Let's start by preparing the Android development environment. If you have already installed it, jump to the Loading the data collector section.

Android Studio is a development environment for the Android platform. We will quickly review the installation steps and the basic configuration required to start the app on a mobile phone. For a more detailed introduction to Android development, I would recommend the introductory book Android 5 Programming by Example by Kyle Mew.

Grab the latest Android Studio for developers at http://developer.android.com/sdk/installing/index.html?pkg=studio and follow the installation instructions. The installation takes over 10 minutes and occupies approximately 0.5 GB of disk space.


First, grab the source code of MyRunsDataCollector from http://www.cs.dartmouth.edu/~campbell/cs65/code/myrunsdatacollector.zip. Once Android Studio is installed, choose to open an existing Android Studio project, as shown in the following image, and select the MyRunsDataCollector folder. This will import the project into Android Studio.

After the project import is completed, you should be able to see the project file structure, as shown in the following image. The collector consists of CollectorActivity.java, Globals.java, and SensorsService.java. The project also includes FFT.java, which implements low-level signal processing.

The main myrunscollector package contains the following classes:

- Globals.java: This defines global constants such as activity labels and IDs, data filenames, and so on.
- CollectorActivity.java: This implements user interface actions, that is, what happens when a specific button is pressed.
- SensorsService.java: This implements a service that collects data, calculates the feature vector as we will discuss in the following sections, and stores the data in a file on the phone.

The next question that we will address is how to design features.

Finding an appropriate representation of a person's activities is probably the most challenging part of activity recognition. The behavior needs to be represented with simple and general features so that the model using these features will also be general and work well on behaviors different from those in the training set.

In fact, it is not difficult to design features specific to the observations captured in a training set; such features would work well on those observations. However, as the training set captures only part of the whole range of human behavior, overly specific features would likely fail on general behavior.

Let's see how this is implemented in MyRunsDataCollector. When the application is started, a method called onSensorChanged() receives a triple of accelerometer sensor readings (x, y, and z) with a specific timestamp and calculates the magnitude from these readings. The method buffers up to 64 consecutive magnitudes (m0..m63) before computing the FFT coefficients (Campbell, 2015):

"As shown in the upper left of the diagram, FFT transforms a time series of amplitude over time to magnitude (some representation of amplitude) across frequency; the example shows some oscillating system where the dominant frequency is between 4-8 cycles/second called Hertz (H) – imagine a ball attached to an elastic band that this stretched and oscillates for a short period of time, or your gait while walking, running -- one could look at these systems in the time and frequency domains. The x,y,z accelerometer readings and the magnitude are time domain variables. We transform these time domain data into the frequency domain because the can represent the distribution in a nice compact manner that the classifier will use to build a decision tree model. For example, the rate of the amplitude transposed to the frequency domain may look something like the figure bottom plot -- the top plot is time domain and the bottom plot a transformation of the time to the frequency domain.

The training phase also stores the maximum (MAX) magnitude of the samples (m0..m63) and the user-supplied label (e.g., walking) using the collector. The individual features are computed as magnitudes (f0..f63), the MAX magnitude, and the class label."

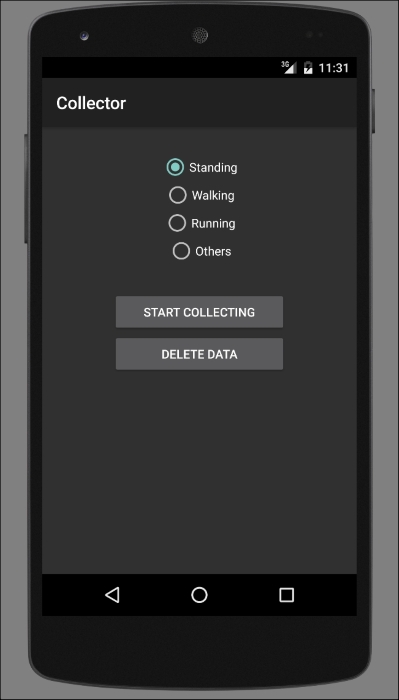
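The pipeline described in the quotation can be sketched in plain Java as follows. This is an illustration under stated assumptions, not the collector's code: the class and method names are hypothetical, a naive O(n²) discrete Fourier transform stands in for FFT.java (an FFT computes the same transform, only faster), windows are assumed to be non-overlapping blocks of 64 magnitudes, and the 32 Hz sampling rate in main is made up for the demo.

```java
/**
 * Sketch: accelerometer magnitudes -> 64-sample window -> [f0..f63, MAX].
 * Hypothetical names; a naive DFT replaces the collector's FFT.java.
 */
public class FeaturePipeline {
    public static final int WINDOW_SIZE = 64;

    /** Magnitude m = sqrt(x^2 + y^2 + z^2) of one accelerometer reading. */
    public static double magnitude(double x, double y, double z) {
        return Math.sqrt(x * x + y * y + z * z);
    }

    /** |X_k| for k = 0..n-1, where X_k = sum_t w[t] * exp(-2*pi*i*k*t/n). */
    public static double[] dftMagnitudes(double[] window) {
        int n = window.length;
        double[] mags = new double[n];
        for (int k = 0; k < n; k++) {
            double re = 0.0;
            double im = 0.0;
            for (int t = 0; t < n; t++) {
                double angle = -2.0 * Math.PI * k * t / n;
                re += window[t] * Math.cos(angle);
                im += window[t] * Math.sin(angle);
            }
            mags[k] = Math.hypot(re, im);
        }
        return mags;
    }

    /** Feature vector [f0..f63, MAX]; the class label is kept separately. */
    public static double[] features(double[] window) {
        if (window.length != WINDOW_SIZE) {
            throw new IllegalArgumentException("expected " + WINDOW_SIZE + " magnitudes");
        }
        double[] out = new double[WINDOW_SIZE + 1];
        System.arraycopy(dftMagnitudes(window), 0, out, 0, WINDOW_SIZE);
        double max = window[0];
        for (double m : window) {
            max = Math.max(max, m);
        }
        out[WINDOW_SIZE] = max; // MAX of the time-domain magnitudes m0..m63
        return out;
    }

    /** Strongest bin in 1..n/2 (bin 0 is the mean, or DC, term). */
    public static int dominantBin(double[] mags) {
        int best = 1;
        for (int k = 2; k <= mags.length / 2; k++) {
            if (mags[k] > mags[best]) {
                best = k;
            }
        }
        return best;
    }

    public static void main(String[] args) {
        double sampleRateHz = 32.0; // hypothetical sampling rate
        double signalHz = 5.0;      // synthetic oscillation in the 4-8 Hz band
        double[] window = new double[WINDOW_SIZE];
        for (int t = 0; t < WINDOW_SIZE; t++) {
            double z = 9.81 + Math.sin(2 * Math.PI * signalHz * t / sampleRateHz);
            window[t] = magnitude(0.0, 0.0, z); // gravity plus oscillation on z
        }
        double[] f = features(window);
        int bin = dominantBin(dftMagnitudes(window));
        // Bin k corresponds to k * sampleRate / n Hz, here 10 * 32 / 64.
        System.out.println("dominant frequency: " + bin * sampleRateHz / WINDOW_SIZE + " Hz"); // prints "dominant frequency: 5.0 Hz"
        System.out.println("feature vector length: " + f.length); // prints "feature vector length: 65"
    }
}
```

Because the window is real-valued, bins 33..63 mirror bins 31..1, so half of f0..f63 carries no new information; the sketch still keeps all 64 to match the feature list quoted above.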

We can now use the collector to collect training data for activity recognition. The collector supports three activities by default: standing, walking, and running, as shown in the application screenshot in the following figure.

You can select an activity, that is, the target class value, and start recording the data by clicking the START COLLECTING button. Make sure that each activity is recorded for at least three minutes; for example, if the Walking activity is selected, press START COLLECTING and walk around for at least three minutes. At the end of the activity, press STOP COLLECTING. Repeat this for each of the activities.
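To get a feel for how much training data three minutes yields, the following back-of-the-envelope sketch counts the complete 64-sample windows in a recording. It assumes non-overlapping windows and a constant sampling rate; the rates used are hypothetical, since the actual rate depends on the device and on the sensor delay the app requests.

```java
public class WindowEstimate {
    static final int WINDOW_SIZE = 64;

    /** Number of complete, non-overlapping 64-sample windows in a recording. */
    static long featureVectors(double seconds, double sampleRateHz) {
        long samples = (long) Math.floor(seconds * sampleRateHz);
        return samples / WINDOW_SIZE;
    }

    public static void main(String[] args) {
        double threeMinutes = 3 * 60.0;
        double[] ratesHz = {20.0, 50.0, 100.0}; // hypothetical sampling rates
        for (double rate : ratesHz) {
            // prints 56, 140 and 281 feature vectors respectively
            System.out.printf("%.0f Hz: %d feature vectors%n",
                    rate, featureVectors(threeMinutes, rate));
        }
    }
}
```

With overlapping windows the count would be higher; the sketch is only meant to show the order of magnitude.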

You could also collect different scenarios involving these activities, for example, walking in the kitchen, walking outside, walking in a line, and so on. By doing so, you will have more data for each activity class and a better classifier. Makes sense, right? The more data, the less confused the classifier will be. If you have only a little data, the classifier is likely to overfit and confuse classes, such as standing with walking or walking with running. You might collect less than three minutes per class while debugging, but for your final polished product, the more data, the better. Multiple recording instances will simply be accumulated in the same file.

Note that the delete button removes the data stored in the file on the phone. If you want to start over, press delete before starting; otherwise, the newly collected data will be appended to the end of the file.

The collector implements the pipeline shown in the diagram discussed in the previous sections: it collects accelerometer samples, computes the magnitudes, uses the FFT.java class to compute the coefficients, and produces the feature vectors. The data is then stored in a Weka-formatted features.arff file. The number of feature vectors depends on how much data you collect: the longer you record, the more feature vectors accumulate.
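ARFF is Weka's plain-text format: a header with @relation and @attribute declarations, followed by comma-separated rows after @data. The sketch below writes feature vectors in that format and, mirroring the delete/append behavior described above, writes the header only when the file does not exist and appends rows otherwise. The relation and attribute names (activity_features, f0..f63, max, activity) are hypothetical and may differ from what the collector actually writes; the sketch also uses desktop Java 11 file APIs rather than Android storage APIs.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;
import java.util.Locale;

public class ArffAppender {
    static final int NUM_COEFFS = 64;
    // Hypothetical class labels for the three default activities.
    static final String[] LABELS = {"standing", "walking", "running"};

    /** ARFF header: relation name, one numeric attribute per feature, nominal class. */
    static String header() {
        StringBuilder sb = new StringBuilder("@relation activity_features\n\n");
        for (int i = 0; i < NUM_COEFFS; i++) {
            sb.append("@attribute f").append(i).append(" numeric\n");
        }
        sb.append("@attribute max numeric\n");
        sb.append("@attribute activity {").append(String.join(",", LABELS)).append("}\n\n");
        return sb.append("@data\n").toString();
    }

    /** One data row: 65 comma-separated numbers followed by the class label. */
    static String row(double[] features, String label) {
        StringBuilder sb = new StringBuilder();
        for (double v : features) {
            // Locale.ROOT guarantees '.' as the decimal separator.
            sb.append(String.format(Locale.ROOT, "%.6f", v)).append(',');
        }
        return sb.append(label).append('\n').toString();
    }

    /** Writes the header if the file is new, then appends one row. */
    static void append(Path file, double[] features, String label) throws IOException {
        if (features.length != NUM_COEFFS + 1) {
            throw new IllegalArgumentException("expected f0..f63 plus MAX");
        }
        if (Files.notExists(file)) {
            Files.writeString(file, header(), StandardCharsets.UTF_8);
        }
        Files.writeString(file, row(features, label), StandardCharsets.UTF_8,
                StandardOpenOption.APPEND);
    }

    public static void main(String[] args) throws IOException {
        Path file = Files.createTempFile("features", ".arff");
        Files.delete(file); // start from scratch, like pressing delete in the app
        append(file, new double[NUM_COEFFS + 1], "walking");
        append(file, new double[NUM_COEFFS + 1], "running"); // appended, no second header
        long rows = Files.readString(file).lines()
                .filter(line -> !line.isBlank() && !line.startsWith("@"))
                .count();
        System.out.println("data rows: " + rows); // prints "data rows: 2"
        Files.delete(file);
    }
}
```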

Once you stop collecting the training data with the collector tool, we need to copy the data to the computer to carry on with the workflow. We can use the file explorer in Android Device Monitor to pull the features.arff file from the phone and store it on the computer. You can open Android Device Monitor by clicking on the Android robot icon, as shown in the following image.

After you select your device on the left, the contents of your phone's storage are shown on the right-hand side. Navigate to mnt/shell/emulated/Android/data/edu.dartmouth.cs.myrunscollector/files/features.arff.

To copy this file to your computer, select the file (it will be highlighted) and click the Pull a file from the device button.

Now we are ready to build a classifier.