Detection of Intent-Based Vulnerabilities in Android Applications

Sébastien Salva¹ and Stassia R. Zafimiharisoa², ¹University of Auvergne, Clermont-Ferrand, France; ²Blaise Pascal University, Clermont-Ferrand, France

The intent mechanism of the Android platform is a powerful message-passing system that allows for sharing data among components and applications. Nevertheless, it might also be used as an entry point for security attacks if incautiously employed. Attacks can easily be sent through intents to components, which can indirectly forward them to other components, and so on. In this context, this chapter proposes a model-based security testing approach that attempts to detect data vulnerabilities in Android applications. In other words, this approach generates test cases to check whether components are vulnerable to attacks, sent through intents, that expose personal data. Our method takes as input Android applications and intent-based vulnerabilities formally expressed with models called vulnerability patterns. Then, and this is the originality of our approach, partial specifications are automatically generated from Android applications with algorithms reflecting the Android documentation. These specifications avoid false positives and refine test verdicts. A tool called APSET is presented and evaluated with tests on some Android applications.

Keywords

security testing; Android application security; intent-based vulnerabilities; model-based testing; formal specifications


Introduction

Many recent security reports and research papers show that mobile device operating systems and applications are suffering from a high rate of security attacks. They are rapidly becoming attractive targets for attackers due to significant advances in both hardware and operating systems, which also tend to open new security vulnerabilities. The Android platform is often cited in these reports [1,2]. Android was indeed created with openness in mind and offers a lot of flexibility and features to develop applications. On the other hand, some of these features, if incautiously used, can also lead to security breaches that can be exploited by malicious applications. Many important security flaws are based on the intent system of Android, which is a message-passing mechanism employed to share data among applications and components of an application.

Android applications consist of components that are tied together with intents. These interactions among components are by default highly controlled: components within an application are sandboxed by Android, and other applications may access such components only if they have the required permissions. Some papers and tools have already been proposed to check the validity of these permissions [3]. But even with the right permissions, applications can still be vulnerable to malicious intents if incorrectly designed. This chapter focuses on data vulnerabilities that may result from components called ContentProviders. Data can be stored on an Android mobile device in various ways, for example, in raw files or SQLite databases. The ContentProvider component offers a more elegant interface that makes data available to applications. Access to a ContentProvider is more restricted (no intent) and requires permissions. Without permission (the default mode), data cannot be directly read by external applications. Even in this case, data can still be exposed by the components that have full access to ContentProviders, that is, those composed with ContentProviders inside the same application. These components can be attacked by malicious intents [4], composed of incorrect data or attacks, that are indirectly forwarded to ContentProviders. As a result, data may be exported or modified. This work tackles this issue by proposing a model-based testing method that automatically generates test cases from intent-based vulnerabilities.

Our method takes intent-based vulnerabilities formally expressed with vulnerability patterns. These are specialized ioSTS (input output Symbolic Transition Systems [5]) that formally exhibit vulnerable and non-vulnerable behaviors and help define test verdicts without ambiguity. From vulnerability patterns, our method performs both automatic test case generation and execution. The originality of our approach comes from the generation of partial specifications from Android applications, built with algorithms that reflect a part of the Android documentation [6]. The benefits of using them are manifold. First, they determine the nature of each component (type, links to other components) and describe the functional behavior that should be observed from components after receiving intents. They also avoid giving false positive verdicts (false alarms), because each component is exclusively tested with test cases generated from its specification. In particular, since the chapter is dealing with data vulnerabilities, test cases shall be constructed from the components that are composed with ContentProviders. Secondly, these specifications help refine test results with special verdicts that notify when a component is not compliant with its specification.

Afterward, we introduce the evaluation of the tool APSET (Android aPplications SEcurity Testing), which implements this method. The test results on some popular applications of the Android Market¹ show that this tool is effective in detecting vulnerabilities within a reasonable time.

The chapter is structured as follows: "Comparison to Related Work" compares our approach with related work. We briefly recall some definitions of the ioSTS model in "Model Definition and Notations," and vulnerability patterns are defined in "Vulnerability Modeling." The testing methodology is described in "Security Testing Methodology." We give some test results in "Implementation and Experimentation," and end the chapter with a "Conclusion."

Comparison to related work

Several works and tools dealing with Android security have been recently proposed in the literature. Below, we compare our approach with some of them.

Some works focus on privilege problems in Android applications. For instance, the tool Stowaway was developed to detect over-privilege [3]. It statically analyzes application code and compares the maximum set of permissions needed for an application with the set of permissions actually requested. This approach offers a different point of view from our work, since we assume that permissions are correctly set. Amalfitano et al. proposed a GUI crawling-based testing technique for Android applications [7]. It is a kind of random testing technique based on a crawler that simulates real user events on the user interface and automatically infers a GUI model. The source code is instrumented to detect defects. This tool can be applied to small applications and detects crashes only. In contrast, our method can detect a larger set of vulnerabilities, since it takes into account vulnerability scenarios not only on Activities but also on Services and ContentProviders. However, we do not consider sequences of Activities.

The Android IPC mechanism was also analyzed in [4]. Chin et al. described the permission system vulnerabilities that applications may exploit to perform unauthorized actions. The vulnerability patterns considered in our method can be directly extracted from this work. The same authors also proposed the tool Comdroid, which analyzes Manifest files and application source code to detect weak permissions and potential intent-based vulnerabilities. Nevertheless, the tool yields a high rate of false positives (about 75 percent), since it warns users about potential issues but does not verify the existence of attacks. Our tool APSET complements Comdroid, since it tests vulnerability issues on running applications. Another way to reduce intent-based vulnerabilities is to modify the Android platform. In this context, Kantola et al. proposed to alter the heuristics that Android uses to determine the eligible senders and recipients of messages [8]. Intents are passed through filters to detect those unintentionally sent to third-party applications. Clearly, not all attacks are blocked by these filters, so security testing is still required here. Furthermore, ContentProviders and the management of personal data are not considered.

Other studies deal with the security of pre-installed Android applications and show that target applications receiving oriented intents can re-delegate wrong permissions [9,10]. Some tools have been developed to detect the receipt of wrong permissions by means of malicious intents. In our work, we consider vulnerability patterns to model more general threats, such as those based on availability, integrity, or authorization. Permissions can be taken into consideration in APSET with the appropriate vulnerability patterns. Jing et al. introduced a model-based conformance testing framework for the Android platform [11]. As in our approach, partial specifications are constructed from Manifest files. Test cases are generated from these specifications to check whether intent-based properties hold. This approach lacks scalability, though, since the set of properties provided in the paper is based on the intent functioning and cannot be modified. Our work can take as input a larger set of vulnerability patterns.

Model definition and notations

After discussion with the Android developers of the Openium company, we chose the input/output Symbolic Transition Systems (ioSTS) model [5] to express vulnerabilities, since it is flexible enough to describe a large set of intent-based vulnerabilities and is still user-friendly enough to represent vulnerabilities that do not require obligation, permission, and related concepts. An ioSTS is a kind of automaton extended with two sets of variables: internal variables to store data, and parameters to enrich the actions. Transitions carry actions, guards, and assignments over variables. The action set is split into inputs, beginning with ?, which express actions expected by the system, and outputs, beginning with !, which express actions produced by the system. An ioSTS has locations rather than states.

Below, we give the definition of an ioSTS extension, called ioSTS suspension, that also expresses quiescence, that is, the authorized deadlocks observed from a location. For an ioSTS $\mathcal{S}$, quiescence is modeled by a new action, $!\delta$, and an augmented ioSTS, denoted $\Delta(\mathcal{S})$, is obtained by adding a self-loop labeled by $!\delta$ for each location where no output action may be observed.
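To make the suspension construction concrete, here is a minimal Python sketch. It assumes a finite, already-valued transition relation (guards and variables are ignored), actions written as strings with the `?`/`!` prefixes used in this chapter, and the hypothetical string `!delta` standing for the quiescence action $!\delta$.

```python
DELTA = "!delta"  # hypothetical string encoding of the quiescence action


def suspension(locations, transitions):
    """Return the transition set of the suspension Delta(S).

    `transitions` holds (source, action, target) triples. A location is
    quiescent when no output action (prefix '!') leaves it; every quiescent
    location receives a self-loop labeled DELTA.
    """
    with_output = {src for (src, action, _dst) in transitions if action.startswith("!")}
    loops = {(loc, DELTA, loc) for loc in locations if loc not in with_output}
    return set(transitions) | loops


# An Activity that displays a screen after receiving an intent:
# l0 only waits for an input and l2 has no outgoing output, so both are quiescent.
locs = {"l0", "l1", "l2"}
trans = {("l0", "?intent", "l1"), ("l1", "!Display", "l2")}
for t in sorted(suspension(locs, trans)):
    print(t)
```

With symbolic guards, "no output action may be observed" would require checking guard satisfiability; this sketch only looks at the presence of output transitions.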

An ioSTS is also associated with an ioLTS (Input/Output Labeled Transition System) to formulate its semantics. In short, ioLTS semantics correspond to valued automata without symbolic variables, which are often infinite. The semantics of an ioSTS $\mathcal{S}$ is the ioLTS $||\mathcal{S}|| = \langle Q, q_0, \Sigma, \rightarrow \rangle$, composed of valued states in $Q = L \times D_V$ (pairs of a location and a valuation of the internal variables); $q_0$ is the initial one, $\Sigma$ is the set of valued symbols, and $\rightarrow$ is the transition relation. The ioLTS semantics definition can be found in [5].

Runs and traces of an ioSTS, which are typically action sequences observed while testing, are defined from its semantics:
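Since ioLTS semantics are often infinite, traces are in practice enumerated up to a bound. The sketch below, again over a finite valued transition relation (an assumption), computes the traces of runs of length at most `max_len`, optionally keeping only runs that end in a given set of states, in the spirit of traces recognized by final locations such as Vul or NVul. The function name and parameters are illustrative.

```python
def traces(initial, transitions, max_len, final=None):
    """Traces (action sequences) of runs starting at `initial`, of length <= max_len.

    A run alternates states and actions, q0 a0 q1 ... qn; its trace is the
    projection of the run onto its actions. If `final` is given, only traces
    of runs ending in a state of `final` are kept.
    """
    def accepted(state):
        return final is None or state in final

    result = {()} if accepted(initial) else set()
    frontier = {((), initial)}  # pairs (trace so far, current state)
    for _ in range(max_len):
        # Extend every partial run by one transition.
        frontier = {
            (trace + (action,), dst)
            for (trace, state) in frontier
            for (src, action, dst) in transitions
            if src == state
        }
        result |= {trace for (trace, state) in frontier if accepted(state)}
    return result


t = {("q0", "?intent", "q1"), ("q1", "!Display", "q2")}
print(sorted(traces("q0", t, 3)))
print(traces("q0", t, 3, final={"q2"}))
```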

Below, we recall the definitions of some classic operations on ioSTS. The same operations can also be applied on the underlying ioLTS semantics. An ioSTS can be completed on its output set to express its incorrect behavior with new transitions to the sink location Fail, guarded by the negation of the union of guards of the same output action on outgoing transitions:
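A minimal sketch of output completion follows, assuming guards are Python predicates over a dictionary of parameter values (a stand-in for symbolic guards, not a constraint solver). For each location and each output action, one transition to Fail is added whose guard is the negation of the disjunction of the existing guards for that output; an output with no transition at all therefore always leads to Fail.

```python
from typing import Callable, List, Tuple

Guard = Callable[[dict], bool]
Transition = Tuple[str, str, Guard, str]  # (source, action, guard, target)


def complete_outputs(locations, outputs, transitions: List[Transition]) -> List[Transition]:
    """Add, per (location, output), a transition to the sink location Fail."""
    completed = list(transitions)
    for loc in locations:
        for out in outputs:
            guards = tuple(g for (src, a, g, _dst) in transitions if src == loc and a == out)

            # The default argument freezes `guards` for this closure (avoids late binding).
            def fail_guard(env, gs=guards):
                return not any(g(env) for g in gs)

            completed.append((loc, out, fail_guard, "Fail"))
    return completed


spec = [("l1", "!Display", lambda env: env["A"] == "MainActivity", "l2")]
full = complete_outputs(["l1"], ["!Display", "!SystemExp"], spec)
display_fail = next(t for t in full if t[1] == "!Display" and t[3] == "Fail")
print(display_fail[2]({"A": "OtherActivity"}))  # True: displaying another screen is incorrect
```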

The parallel composition of two ioSTS is a specialized product that illustrates the shared behavior of the two original ioSTS that are compatible:
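As an illustration, the following sketch computes the reachable part of a synchronous product of two finite, valued transition systems: a joint move exists only when both operands perform the same action, so the product keeps their shared behavior. It ignores guards and variables and assumes the two operands are compatible (same action alphabet). The example reuses the location names Vul, NVul, and Fail from this chapter, but both transition systems are invented for illustration.

```python
def parallel(init1, trans1, init2, trans2):
    """Reachable synchronous product of two LTSs given as (src, action, dst) triples."""
    start = (init1, init2)
    seen, stack, product = {start}, [start], set()
    while stack:
        l1, l2 = stack.pop()
        for (s1, a1, d1) in trans1:
            if s1 != l1:
                continue
            for (s2, a2, d2) in trans2:
                if s2 == l2 and a2 == a1:  # both sides must agree on the action
                    dst = (d1, d2)
                    product.add(((l1, l2), a1, dst))
                    if dst not in seen:
                        seen.add(dst)
                        stack.append(dst)
    return start, product


pattern = {("v0", "?intent", "v1"), ("v1", "!SystemExp", "Vul"), ("v1", "!Display", "NVul")}
spec = {("s0", "?intent", "s1"), ("s1", "!Display", "s2"), ("s1", "!SystemExp", "Fail")}
_, prod = parallel("v0", pattern, "s0", spec)
for t in sorted(prod):
    print(t)
```

The resulting location (Vul, Fail) shows how composing a pattern with a specification yields combined verdict locations.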

Vulnerability modeling

A vulnerability pattern, denoted $\mathcal{V}$, is a specialized ioSTS suspension composed of two distinct final locations, Vul and NVul, which aim to recognize the vulnerability status over component executions. Intuitively, runs of a vulnerability pattern that start from the initial location and end in Vul describe the presence of the vulnerability. By deduction, runs ending in NVul show the absence of the vulnerability. $\mathcal{V}$ is also output-complete, so that a status is recognized whatever the actions observed while testing.

Naturally, a vulnerability pattern $\mathcal{V}$ has to be equipped with actions also employed for describing Android components. We denote $\Lambda^t$ the set that gathers all these actions for components having the type t. Transition guards can also be composed of specific predicates to ease their writing. In this chapter, we consider predicates such as in, which stands for a Boolean function returning true if a parameter list belongs to a given value set. In the same way, we consider several value sets to categorize malicious values and attacks: RV is a set of values known for revealing bugs, enriched with random values; Inj is a set gathering XML and SQL injections constructed from the database table URIs found in the tested Android application; URI is a set of randomly constructed URIs complemented with the URIs found in the tested Android application.
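The value sets and the `in` predicate can be sketched as follows. All concrete values below are hypothetical examples, not APSET's actual lists; the predicate is named `in_` because `in` is a Python keyword, and it reads "a parameter list belongs to a value set" as "every parameter of the list takes a value of the set", which is an assumption.

```python
import random
import string


def build_value_sets(table_uris, n_random=3, seed=0):
    """Build illustrative RV, Inj and URI sets from the table URIs of an app."""
    rng = random.Random(seed)

    def rand(k):
        return "".join(rng.choice(string.ascii_letters) for _ in range(k))

    # RV: a few classic bug-revealing values plus random strings.
    rv = {"", "null", "-1", "%n%n%n", "A" * 512} | {rand(8) for _ in range(n_random)}
    # Inj: SQL and XML injections built from the table name at the end of each URI.
    inj = set()
    for uri in table_uris:
        table = uri.rstrip("/").rsplit("/", 1)[-1]
        inj |= {"' OR '1'='1", f"1; DROP TABLE {table};--", f"</{table}><{table}>injected</{table}>"}
    # URI: the application's URIs plus randomly constructed content URIs.
    uris = set(table_uris) | {f"content://{rand(6)}/{rand(4)}" for _ in range(n_random)}
    return {"RV": rv, "Inj": inj, "URI": uris}


def in_(params, value_set):
    """Predicate `in`: true iff every parameter of the list takes a value of value_set."""
    return all(p in value_set for p in params)


sets = build_value_sets(["content://com.example.notepad/notes"])
print(sorted(sets["Inj"]))
print(in_(["null", "-1"], sets["RV"]))
```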

It remains to populate vulnerability patterns with concrete actions having a real signification in accordance with the Android documentation. In this chapter, we focus on the detection of data vulnerabilities based on the intent mechanism. The components that may play a role with these vulnerabilities are:

• Activities, the most common components that display user interfaces used to interact with an application

• Services, which represent long tasks executed in the background

• ContentProviders, which are dedicated to the management of data stored in a smartphone. Without permission (the default mode), data cannot be directly accessed by external applications.

In this context, a vulnerability pattern $\mathcal{V}$ is composed of actions of a component Comp (Activity or Service) composed with a ContentProvider. Consequently, the action set $\Lambda_{\mathcal{V}}$ of $\mathcal{V}$ is included in $\Lambda^{Comp} \cup \Lambda^{ContentProvider}$. For readability, we only consider Activities here.

Activities display screens to let users interact with programs. We denote !Display(A) the action modeling the display of a screen for the Activity A. Activities may also throw exceptions, which we group into two categories: those raised by the Android system on account of the crash of a component, and the other ones. This difference can be observed while testing with our framework and is modeled with the actions !SystemExp and !ComponentExp, respectively. Components are joined together with intents, denoted intent(Cp, a, d, c, t, ed), with Cp the called component, a an action that has to be performed, d data expressed as a URI, c a component category, t a type that specifies the MIME type of the intent data, and, finally, ed, which represents additional (extra) data [6]. Intent actions have different purposes; for example, the action VIEW is called to display something, and the action PICK is called to choose an item and return its URI to the calling component. Hence, in reference to the Android documentation [6], the action set, denoted $ACT$, is divided into two categories: the set $ACT_r$ gathers the actions requiring the receipt of a response, and $ACT_{nr}$ gathers the other actions. We also denote C the set of predefined Android categories and T the set of types. Finally, one can deduce that $\Lambda^{Activity}$ is the set {?intent(Cp, a, d, c, t, ed), !Display(A), $!\delta$, !SystemExp, !ComponentExp}.

ContentProviders are components that receive SQL-oriented requests (no intents), denoted !call(Cp, request, tableURI), and eventually return responses, denoted !callResp(Cp, resp). Consequently, $\Lambda^{ContentProvider}$ is the set {!call(Cp, request, tableURI), !callResp(Cp, resp), $!\delta$, !ComponentExp, !SystemExp}. These action sets can be completed, if required.
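The intent tuple and the split between actions with and without a response can be sketched as a small data type. The two action lists are illustrative subsets chosen from the Android `Intent` reference (PICK and GET_CONTENT return a result to the caller; VIEW, EDIT, and SEND do not); they are not the complete $ACT_r$ and $ACT_{nr}$ sets, and the field names simply mirror intent(Cp, a, d, c, t, ed).

```python
from dataclasses import dataclass
from typing import Optional, Tuple

# Illustrative subsets of ACT_r and ACT_nr (not exhaustive).
ACT_R = {"android.intent.action.PICK", "android.intent.action.GET_CONTENT"}
ACT_NR = {"android.intent.action.VIEW", "android.intent.action.EDIT", "android.intent.action.SEND"}


@dataclass(frozen=True)
class Intent:
    """intent(Cp, a, d, c, t, ed) as described in this section."""
    cp: str                                 # called component
    a: str                                  # action to perform
    d: Optional[str] = None                 # data, expressed as a URI
    c: Optional[str] = None                 # category
    t: Optional[str] = None                 # MIME type of the data
    ed: Tuple[Tuple[str, str], ...] = ()    # extra data as (key, value) pairs


def expects_response(intent: Intent) -> Optional[bool]:
    """True for an action of ACT_r, False for ACT_nr, None if the action is unknown."""
    if intent.a in ACT_R:
        return True
    if intent.a in ACT_NR:
        return False
    return None


pick = Intent("com.example.ContactChooser", "android.intent.action.PICK", d="content://contacts/people")
print(expects_response(pick))
```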

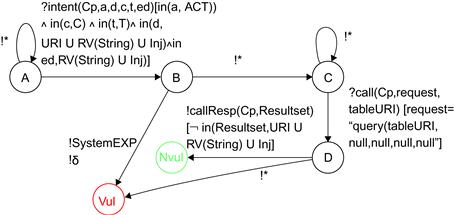

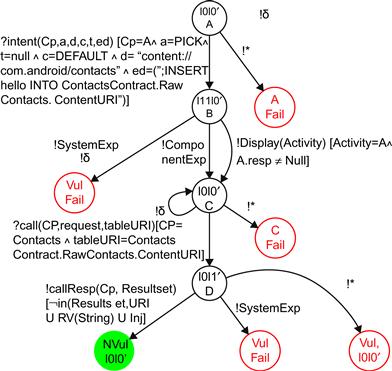

Figure 24.1 illustrates a straightforward example of a vulnerability pattern related to data integrity. It aims to check whether an Activity, called with an intent composed of malicious data, can alter the content of a database table managed by a ContentProvider. Intents are constructed with data and extra data composed of malformed URIs, String values known for revealing bugs, or XML/SQL injections. If the called component crashes, it is considered vulnerable. Once the intent is performed, the ContentProvider is called with the query function of the Android SDK to retrieve all the data stored in the table whose URI is given by the variable tableURI. If the result set does not contain the incorrect data given in the intent, then the component is not vulnerable. Otherwise, it is vulnerable. The label !* is a shortcut notation for all valued output actions that are not explicitly labeled by other transitions.
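The verdict logic of this integrity pattern can be paraphrased as a small function. This is a hypothetical rendering, not APSET code: it assumes the observed outputs, the rows returned by the query on tableURI, and the injected values have already been collected, and that a crash shows up as !SystemExp.

```python
def integrity_verdict(observed_outputs, table_rows, injected_values):
    """Hypothetical evaluation of the data-integrity pattern of Figure 24.1.

    observed_outputs: output actions observed after sending the malicious intent
    table_rows:       rows returned by querying the table at tableURI afterwards
    injected_values:  the malformed values placed in the intent's d / ed fields
    """
    if "!SystemExp" in observed_outputs:  # the called component crashed
        return "Vul"
    stored = {cell for row in table_rows for cell in row}
    if stored & set(injected_values):     # malicious data reached the table
        return "Vul"
    return "NVul"


rows = [("1", "Alice"), ("2", "1; DROP TABLE contacts;--")]
print(integrity_verdict(["!Display"], rows, ["1; DROP TABLE contacts;--"]))
```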

Assuming a vulnerability pattern $\mathcal{V}$, the vulnerability status of an ioSTS $\mathcal{S}$ compatible with $\mathcal{V}$ can be stated when its suspension traces are also suspension traces of $\mathcal{V}$ recognized by the locations Vul or NVul:
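One plausible reading of this condition can be sketched over finite sets of complete suspension traces, that is, traces of runs reaching a final location (an assumption, since trace sets are usually infinite): the ioSTS is vulnerable if one of its traces is recognized by Vul, not vulnerable if all of its traces are recognized by NVul, and no status is stated otherwise.

```python
def vulnerability_status(strace_s, strace_vul, strace_nvul):
    """Status of S against V from finite sets of complete suspension traces.

    strace_s:    suspension traces of S
    strace_vul:  suspension traces of V recognized by Vul
    strace_nvul: suspension traces of V recognized by NVul
    """
    if strace_s & strace_vul:
        return "vulnerable"
    if strace_s <= strace_nvul:
        return "not vulnerable"
    return None  # no status can be stated


vul = {("?intent", "!SystemExp")}
nvul = {("?intent", "!Display")}
print(vulnerability_status({("?intent", "!Display")}, vul, nvul))
```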

Security testing methodology

Initially, a component under test (CUT) is regarded as a black box whose interfaces are all that are known. However, one usually assumes the following test hypotheses to carry out the test case execution:

• The functional behavior of the component under test can be modeled by an ioLTS $\mathcal{I}$. $\mathcal{I}$ is unknown (and potentially nondeterministic). $\mathcal{I}$ is assumed input-enabled (it accepts any of its input actions from any of its states); $\Delta(\mathcal{I})$ denotes its ioLTS suspension.

• To dialog with CUT, one assumes that CUT is a composition of an Activity or a Service with a ContentProvider, that the type of this composition is equal to the type of the vulnerability pattern $\mathcal{V}$, and that CUT is compatible with $\mathcal{V}$.
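The input-enabledness hypothesis can be checked on a finite model with a few lines of Python. The real CUT is a black box, so this only illustrates the property on a hypothetical finite ioLTS given as (source, action, target) triples: the function lists the (state, input) pairs for which no transition exists.

```python
def missing_inputs(states, inputs, transitions):
    """Return the (state, input) pairs that violate input-enabledness.

    An ioLTS is input-enabled when every input action is accepted from every
    state; an empty result means the hypothesis holds for this finite model.
    """
    enabled = {(src, a) for (src, a, _dst) in transitions if a.startswith("?")}
    return {(q, a) for q in states for a in inputs if (q, a) not in enabled}


trans = {("q0", "?intent", "q1"), ("q1", "!Display", "q0")}
print(missing_inputs({"q0", "q1"}, {"?intent"}, trans))  # q1 cannot accept ?intent
```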

Since ![]() is assumed modeled by an ioLTS, Definition 8 exhibits that the vulnerability status of

is assumed modeled by an ioLTS, Definition 8 exhibits that the vulnerability status of ![]() against a vulnerability pattern

against a vulnerability pattern ![]() can be determined with the

can be determined with the ![]() traces. These are constructed by testing

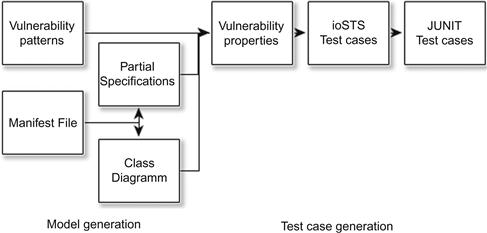

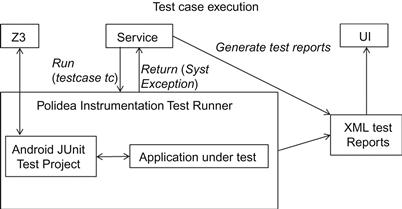

traces. These are constructed by testing ![]() with test cases. Our method generates them by the following steps, illustrated in Figure 24.2: we assume having a set of vulnerability patterns modeled with ioSTS suspensions. From an unpacked Android application, we begin extracting a partial class diagram that lists the components and the associations between them. We keep only the components composed with ContentProviders here. From the Android configuration file called Manifest, an ioSTS suspension is generated for each component. It partially describes the intent-based normal functioning of a component combined with a ContentProvider. Models, called vulnerability properties, are then derived from the composition of vulnerability patterns with specifications. Test cases are obtained by concretizing vulnerability properties to obtain executable test cases. These steps are detailed below.

with test cases. Our method generates them by the following steps, illustrated in Figure 24.2: we assume having a set of vulnerability patterns modeled with ioSTS suspensions. From an unpacked Android application, we begin extracting a partial class diagram that lists the components and the associations between them. We keep only the components composed with ContentProviders here. From the Android configuration file called Manifest, an ioSTS suspension is generated for each component. It partially describes the intent-based normal functioning of a component combined with a ContentProvider. Models, called vulnerability properties, are then derived from the composition of vulnerability patterns with specifications. Test cases are obtained by concretizing vulnerability properties to obtain executable test cases. These steps are detailed below.

Model generation

Android applications gather a lot of information that can be exploited to produce partial specifications. For this method, we generate the following structures and models:

1. First, the tools dex2jar² and apktool³ are successively called to produce a .jar package and to extract the application configuration file, named Manifest. The latter declares the components participating in the application and the kinds of intents accepted by them. From the .jar package, a class diagram depicting the Android components of the application and their types is initially computed. The component methods and attribute names are recovered by applying reverse engineering based on Java reflection. This class diagram also gives some information about the relationships between components. In particular, this step gives the Activities or Services composed with ContentProviders: $\mathcal{C}$ is the set gathering the combinations $(C, CP)$ of a component $C$ with a ContentProvider $CP$.

2. An ioSTS suspension ![]() is generated for each item of

is generated for each item of ![]() such that

such that ![]() (

(![]() , for example, Activity

, for example, Activity ![]() ContentProvider, is also the type of the vulnerability pattern

ContentProvider, is also the type of the vulnerability pattern ![]() .

. ![]() is an ioSTS suspension modeling the call of the ContentProvider

is an ioSTS suspension modeling the call of the ContentProvider ![]() , derived from a generic ioSTS where only the ContentProvider name and the variable tableURI are updated from the information found in the Manifest file. Naturally, this specification is written in accordance with the set

, derived from a generic ioSTS where only the ContentProvider name and the variable tableURI are updated from the information found in the Manifest file. Naturally, this specification is written in accordance with the set ![]() .

. ![]() is the ioSTS suspension of the component

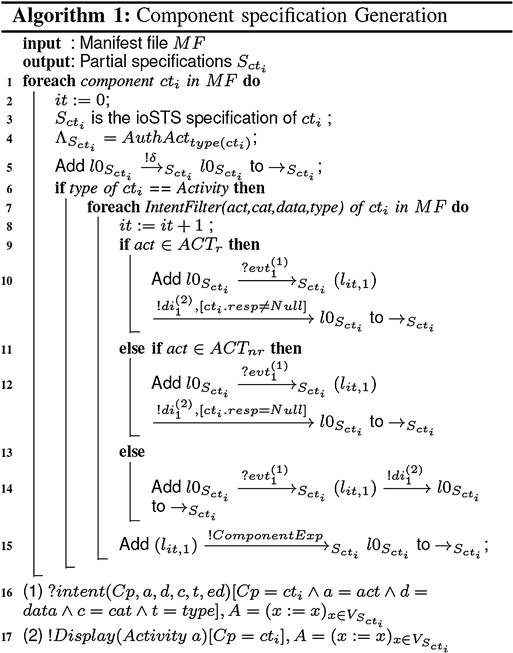

is the ioSTS suspension of the component ![]() constructed by means of the intent filters listed in the Manifest file of the Android project. An intent filter, IntentFilter(act,cat,data,type), declares a type of intent accepted by the component. For readability, we present a simplified version of the algorithm dedicated to Activities only in Algorithm 24.1. Initially, the action set of

constructed by means of the intent filters listed in the Manifest file of the Android project. An intent filter, IntentFilter(act,cat,data,type), declares a type of intent accepted by the component. For readability, we present a simplified version of the algorithm dedicated to Activities only in Algorithm 24.1. Initially, the action set of ![]() is set to

is set to ![]() . Then Algorithm 24.1 produces the ioSTS suspension

. Then Algorithm 24.1 produces the ioSTS suspension ![]() from intent filters and, with respect to the intent functioning, described in the Android documentation. It covers each intent filter and adds one transition carrying an intent followed by two transitions labeled by output actions (lines 7–15). Depending on the action type read in the intent filter, the guard of a transition equipped by an output action is completed to reflect the fact that a response may be received or not. For instance, an action in

from intent filters and, with respect to the intent functioning, described in the Android documentation. It covers each intent filter and adds one transition carrying an intent followed by two transitions labeled by output actions (lines 7–15). Depending on the action type read in the intent filter, the guard of a transition equipped by an output action is completed to reflect the fact that a response may be received or not. For instance, an action in ![]() (line 9) implies both the display of a screen and the receipt of a response. If the action of the intent filter is unknown (lines 13 and 14), no guard is formulated on the output action (a response may be received or not). Finally, the product

(line 9) implies both the display of a screen and the receipt of a response. If the action of the intent filter is unknown (lines 13 and 14), no guard is formulated on the output action (a response may be received or not). Finally, the product ![]() is completed on the output action set to also describe its incorrect behavior, modeled with new transitions to a Fail location. The Fail location shall be particularly useful to refine the test verdict by helping to recognize correct and incorrect behaviors of an Android component with respect to its specification. For the Service components, the specification generation algorithm is similar. These algorithms were evaluated by the Android developers of the Openium company to check their soundness and relevance.

is completed on the output action set to also describe its incorrect behavior, modeled with new transitions to a Fail location. The Fail location shall be particularly useful to refine the test verdict by helping to recognize correct and incorrect behaviors of an Android component with respect to its specification. For the Service components, the specification generation algorithm is similar. These algorithms were evaluated by the Android developers of the Openium company to check their soundness and relevance.
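The Manifest-reading part of these steps can be sketched with Python's standard `xml.etree.ElementTree`, assuming the Manifest has already been decoded to plain XML (as apktool does). Attributes live in the Android namespace `http://schemas.android.com/apk/res/android`. The sample Manifest, package, and class names are hypothetical, and pairing every component with every provider is only a naive over-approximation: in the approach described above, the pairs $(C, CP)$ come from the class diagram.

```python
import xml.etree.ElementTree as ET

ANDROID = "{http://schemas.android.com/apk/res/android}"


def _attrs(elements, attr):
    """Collect a given android:* attribute from elements, skipping absent ones."""
    return [e.get(ANDROID + attr) for e in elements if e.get(ANDROID + attr)]


def read_manifest(xml_text):
    """Return (components, providers) declared in a decoded AndroidManifest.xml."""
    app = ET.fromstring(xml_text.strip()).find("application")
    components = []
    for kind in ("activity", "service"):
        for elem in app.findall(kind):
            # One dict per intent filter, mirroring IntentFilter(act, cat, data, type).
            filters = [
                {
                    "act": _attrs(f.findall("action"), "name"),
                    "cat": _attrs(f.findall("category"), "name"),
                    "data": _attrs(f.findall("data"), "scheme"),
                    "type": _attrs(f.findall("data"), "mimeType"),
                }
                for f in elem.findall("intent-filter")
            ]
            components.append((kind, elem.get(ANDROID + "name"), filters))
    providers = [(p.get(ANDROID + "name"), p.get(ANDROID + "authorities"))
                 for p in app.findall("provider")]
    return components, providers


SAMPLE_MANIFEST = """
<manifest xmlns:android="http://schemas.android.com/apk/res/android" package="com.example.notes">
  <application>
    <activity android:name=".NoteEditor">
      <intent-filter>
        <action android:name="android.intent.action.EDIT"/>
        <category android:name="android.intent.category.DEFAULT"/>
        <data android:mimeType="vnd.android.cursor.item/vnd.example.note"/>
      </intent-filter>
    </activity>
    <provider android:name=".NotePadProvider" android:authorities="com.example.notepad"/>
  </application>
</manifest>
"""

components, providers = read_manifest(SAMPLE_MANIFEST)
# Naive candidate pairs (C, CP); the class diagram would filter these.
candidates = [(c[1], p[0]) for c in components for p in providers]
print(candidates)
```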

Algorithm 24.1 Activity specification generation algorithm.

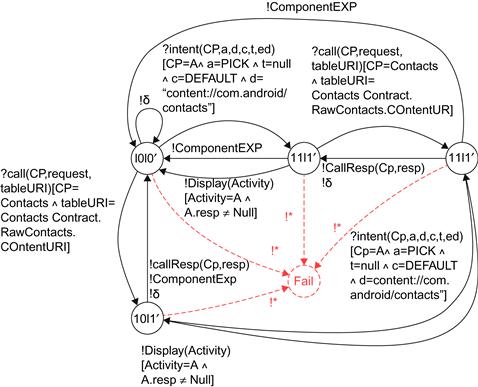
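A simplified Python sketch of the idea behind Algorithm 24.1 follows; it is not a transcription of the algorithm. For each intent filter, one `?intent` transition from the initial location is followed by a `!Display(A)` output whose guard states whether a response is expected ($ACT_r$), not expected ($ACT_{nr}$), or left unconstrained for unknown actions. Guards are kept as readable strings, each filter is assumed to declare a single action, and the location names, guard syntax, and `response` variable are illustrative assumptions; the second output transition per filter and the completion to Fail are omitted.

```python
def activity_spec(activity, filters, act_r, act_nr):
    """Build (source, action, guard, target) transitions for one Activity."""
    transitions = []
    for i, f in enumerate(filters, start=1):
        loc = f"l{i}"
        intent_guard = (
            f"Cp == {activity!r} and a == {f['act']!r} "
            f"and c in {f['cat']!r} and d in {f['data']!r} and t in {f['type']!r}"
        )
        transitions.append(("l0", "?intent(Cp,a,d,c,t,ed)", intent_guard, loc))
        if f["act"] in act_r:
            out_guard = "response is not None"  # screen displayed and a response returned
        elif f["act"] in act_nr:
            out_guard = "response is None"      # screen displayed, no response
        else:
            out_guard = "True"                  # unknown action: response optional
        transitions.append((loc, f"!Display({activity})", out_guard, "l0"))
    return transitions


pick_filter = {"act": "android.intent.action.PICK", "cat": ["android.intent.category.DEFAULT"],
               "data": ["content://contacts/people"], "type": []}
for t in activity_spec("A", [pick_filter], {"android.intent.action.PICK"}, {"android.intent.action.VIEW"}):
    print(t)
```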

Figure 24.3 illustrates a specification example that stems from the product of one Activity with the ContentProvider Contacts. This composition accepts intents composed of the action PICK and data whose URI corresponds to the contact list stored in the device. It returns responses (probably a chosen contact). This composition also accepts requests to the ContentProvider Contacts. The incorrect behavior is expressed with transitions to Fail.

Test case selection

Test cases are extracted from the compositions of vulnerability patterns with specifications. Given a vulnerability pattern $\mathcal{V}$ compatible with a specification $\Delta(\mathcal{S})$, the composition $\mathcal{V} \parallel \Delta(\mathcal{S})$ is called a vulnerability property of $\Delta(\mathcal{S})$. It represents the vulnerable and non-vulnerable behaviors that can be observed from $\Delta(\mathcal{S})$. Furthermore, the parallel composition $\mathcal{V} \parallel \Delta(\mathcal{S})$ produces new locations and, in particular, new final verdict locations:

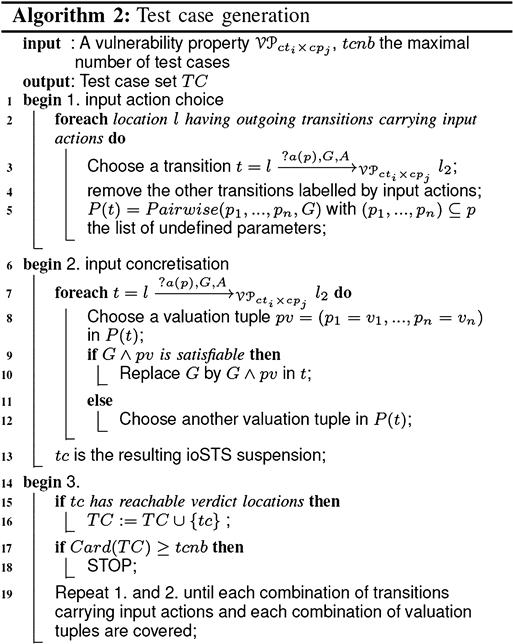

Test cases are achieved with Algorithm 24.2, which performs two main steps. Firstly, it splits a vulnerability property ![]() into several ioSTS. Intuitively, from a location l having k transitions carrying an input action, for example, an intent, k new test cases are constructed to test

into several ioSTS. Intuitively, from a location l having k transitions carrying an input action (for example, an intent), k new test cases are constructed to test ![]() with the k input actions, and so on for each location l having transitions labeled by input actions (lines 1–4). Then, a set of valuation tuples is computed from the undefined parameter list of each input action (line 5). For instance, intents are composed of several variables whose domains are given in guards. These variables have to be concretized before testing; that is, each undefined parameter is assigned a value. Instead of using a Cartesian product to construct the tuples of valuations, we adopted a Pairwise technique [13]. This technique strongly reduces the number of tuples, at the cost of a weaker coverage of the variable domains, since it only guarantees that every combination of values of each pair of parameters is covered. The set of valuation tuples is constructed with the Pairwise procedure, which takes the list of undefined parameters and the transition guard, from which the domain of each parameter is extracted. In the second step (lines 6–13), input actions are concretized. Given a transition t and its set of valuation tuples ![](), this step constructs a new test case for each tuple ![]() by replacing the guard ![]() with ![]() if ![]() is satisfiable. Finally, if the resulting ioSTS suspension ![]() has verdict locations, then ![]() is added to the test case set ![](). Steps 1 and 2 are iteratively applied until each combination of valuation tuples and each combination of transitions carrying input actions are covered. Since the algorithm may produce a large test case set, depending on the number of valuation tuples returned by the Pairwise procedure, the algorithm also ends when the test case set ![]() reaches a cardinality of tcnb (lines 17 and 18).
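The Pairwise procedure itself is not detailed here. As a rough illustration only, the Java sketch below builds an all-pairs covering set of valuation tuples with a naive greedy strategy (repeatedly pick the candidate tuple that covers the most still-uncovered value pairs), then mimics the second step by keeping only tuples that satisfy the guard, stopping at tcnb. This is an assumption for illustration, not the construction of [13] nor APSET's code: the class and method names are hypothetical, domains are assumed to be finite lists of strings already extracted from the guard, and the guard is assumed to be decidable by evaluating a Java predicate on a concrete tuple, whereas APSET relies on the Z3 solver.

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Set;
import java.util.function.Predicate;

/** Hypothetical greedy all-pairs generator; an illustrative sketch, not APSET's code. */
class PairwiseSketch {

    /** Full Cartesian product of finite parameter domains (used only as a candidate pool). */
    static List<List<String>> cartesian(List<List<String>> domains) {
        List<List<String>> result = new ArrayList<>();
        result.add(new ArrayList<>());
        for (List<String> domain : domains) {
            List<List<String>> next = new ArrayList<>();
            for (List<String> prefix : result) {
                for (String value : domain) {
                    List<String> tuple = new ArrayList<>(prefix);
                    tuple.add(value);
                    next.add(tuple);
                }
            }
            result = next;
        }
        return result;
    }

    /** Every (position i, value, position j, value) pair of a tuple, for i < j. */
    static Set<List<Object>> pairsOf(List<String> tuple) {
        Set<List<Object>> pairs = new HashSet<>();
        for (int i = 0; i < tuple.size(); i++) {
            for (int j = i + 1; j < tuple.size(); j++) {
                pairs.add(List.of(i, tuple.get(i), j, tuple.get(j)));
            }
        }
        return pairs;
    }

    /** Greedily picks the candidate covering most uncovered pairs until all pairs are covered. */
    static List<List<String>> pairwise(List<List<String>> domains) {
        List<List<String>> candidates = cartesian(domains);
        if (domains.size() < 2) {
            return candidates; // no pair of parameters: nothing to reduce
        }
        Set<List<Object>> uncovered = new HashSet<>();
        for (List<String> t : candidates) {
            uncovered.addAll(pairsOf(t));
        }
        List<List<String>> selected = new ArrayList<>();
        while (!uncovered.isEmpty()) {
            List<String> best = null;
            int bestGain = -1;
            for (List<String> t : candidates) {
                Set<List<Object>> gain = pairsOf(t);
                gain.retainAll(uncovered);
                if (gain.size() > bestGain) {
                    bestGain = gain.size();
                    best = t;
                }
            }
            selected.add(best);
            uncovered.removeAll(pairsOf(best));
        }
        return selected;
    }

    /** Analogue of step 2: keep tuples satisfying the guard, stopping at tcnb test cases. */
    static List<List<String>> concretize(List<List<String>> tuples,
                                         Predicate<List<String>> guard, int tcnb) {
        List<List<String>> kept = new ArrayList<>();
        for (List<String> t : tuples) {
            if (kept.size() >= tcnb) {
                break;
            }
            if (guard.test(t)) {
                kept.add(t);
            }
        }
        return kept;
    }
}
```

For three Boolean-like parameters, this greedy selection keeps 4 of the 8 Cartesian tuples while still covering all 12 value pairs. Enumerating the whole Cartesian product as a candidate pool is only acceptable for small domains; practical pairwise generators avoid it.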

A test case example, derived from the specification of Figure 24.3 and the vulnerability pattern of Figure 24.1, is depicted in Figure 24.4. It calls Activity A with intents whose extra data parameter contains an SQL injection. Afterward, the data managed by the ContentProvider Contacts must not have been modified; otherwise, the component is vulnerable. If the component crashes, it is vulnerable as well.
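The oracle of this test case can be pictured with the plain-Java sketch below, which replaces the Android runtime with hypothetical stand-ins: a `Map` plays the role of the data managed by the Contacts ContentProvider, and an `IntentTarget` interface plays the role of Activity A receiving an intent extra (the key name `"extra"` is also an assumption). It only illustrates the verdict logic described above; the test cases generated by APSET call real Android components.

```java
import java.util.HashMap;
import java.util.Map;

/** Hypothetical stand-in for a component (e.g. Activity A) that receives intent extras. */
interface IntentTarget {
    void onIntent(Map<String, String> extras);
}

/** Illustrative oracle for the SQL-injection test case; not generated APSET code. */
class InjectionOracleSketch {

    /**
     * Sends one intent whose "extra" value is the injection string, then compares the
     * provider data with a snapshot taken before the call.
     */
    static String run(IntentTarget component, Map<String, String> providerData, String injection) {
        Map<String, String> before = new HashMap<>(providerData); // snapshot of the provider data
        try {
            component.onIntent(Map.of("extra", injection));
        } catch (RuntimeException crash) {
            return "VUL"; // a crash is also considered a vulnerability
        }
        // Any change of the data managed by the provider reveals a vulnerability.
        return before.equals(providerData) ? "NVUL" : "VUL";
    }
}
```

A component that ignores the extra yields NVUL, whereas one that lets the injected string alter the stored data, or that throws an exception, yields VUL.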

A test case constructed with Algorithm 24.2 from a vulnerability property ![]() produces traces that belong to the trace set of ![](). In other words, the test selection algorithm does not add new traces leading to verdict locations. Indeed, a test case is composed of paths of a vulnerability property, starting from its initial location. Each guard ![]() of a test case transition carrying an input action stems from a guard ![]() completed with a tuple of valuations, such that if ![]() is satisfied, then ![]() is also satisfied. This is captured by the following proposition:

Test case execution definition

The test case execution is defined by the parallel composition of the test cases with the implementation under test ![]():

The above proposition leads to the test verdict of a component under test against a vulnerability pattern ![](). Intuitively, it refers to the vulnerability status definition, completed by the detection of incorrect behavior described in the specification with the verdict locations VUL/FAIL and NVUL/FAIL. An inconclusive verdict is also defined when a FAIL verdict location is reached after a test case execution. This verdict means that incorrect actions or data were received. To avoid false positive results, the test is stopped without completely executing the scenario given in the vulnerability pattern.
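One minimal way to encode these verdicts is sketched below with a hypothetical `Verdict` enum; the constant names simply mirror the verdict locations of the text, and `INCONC` is an assumed name for the inconclusive verdict. Each value records whether it signals a vulnerability (VUL or VUL/FAIL) and whether it signals behavior contradicting the specification derived from the Android documentation (the "/FAIL" verdicts).

```java
/** Hypothetical encoding of the test verdicts discussed in the text. */
enum Verdict {
    VUL, NVUL, VUL_FAIL, NVUL_FAIL, INCONC;

    /** VUL and VUL/FAIL report a vulnerability issue. */
    boolean isVulnerable() {
        return this == VUL || this == VUL_FAIL;
    }

    /** The "/FAIL" verdicts report behavior that contradicts the partial specification. */
    boolean violatesSpecification() {
        return this == VUL_FAIL || this == NVUL_FAIL;
    }

    /** Parses labels as written in reports, e.g. "VUL/FAIL". */
    static Verdict fromLabel(String label) {
        return valueOf(label.trim().replace('/', '_'));
    }
}
```

Keeping the two properties separate reflects the fact that a component may comply with the vulnerability pattern (NVUL) while still deviating from the documented behavior (NVUL/FAIL).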

Implementation and experimentation

Methodology implementation

The above security testing method has been implemented in a prototype tool called APSET (Android aPplications SEcurity Testing), publicly available in a GitHub repository.4 As presented in “Security Testing Methodology,” it takes as inputs vulnerability patterns written in dot format5 and an Android application. The ioSTS test cases are converted into JUnit test cases in order to be executed with a test runner (a set of control methods to run tests). Each action or predicate defined in the previous sections has a corresponding function coded in the tool. For instance, the action !Display(A) is coded by the function Display(), which returns true if a screen is displayed. This link between actions and Java code eases the development of the final test cases, which actually call executable sections of Java code. In short, JUnit test cases are constructed as follows: input actions representing component calls are converted into Java code composed of the parameter values given by guards. Output actions are translated into Java code and JUnit assertions composed of verdicts. The actions !ComponentExp and !SystemExp are converted into try/catch statements.
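To picture how an output action becomes an assertion whose failure message is a verdict, the sketch below pairs action labels with Java checks, in the spirit of !Display(A) and Display(). The registry, the `expect` and `callCatching` methods, and the way the verdict travels in the exception message are hypothetical; a plain `AssertionError` stands in for JUnit assertions so that the snippet runs without dependencies.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;
import java.util.function.BooleanSupplier;

/** Hypothetical registry linking ioSTS output actions to executable Java checks. */
class ActionRegistry {
    private final Map<String, BooleanSupplier> checks = new HashMap<>();

    /** Associates an action label such as "!Display(A)" with the code that observes it. */
    void register(String action, BooleanSupplier check) {
        checks.put(action, check);
    }

    /** Output action: the check must hold, otherwise the assertion message carries the verdict. */
    void expect(String action, String verdictIfNotObserved) {
        BooleanSupplier check = checks.get(action);
        if (check == null) {
            throw new IllegalArgumentException("no code registered for " + action);
        }
        if (!check.getAsBoolean()) {
            throw new AssertionError(verdictIfNotObserved);
        }
    }

    /** Exception action (in the spirit of !ComponentExp): a try/catch around the component call. */
    static Optional<String> callCatching(Runnable componentCall, String verdictOnException) {
        try {
            componentCall.run();
            return Optional.empty(); // no exception observed
        } catch (RuntimeException e) {
            return Optional.of(verdictOnException + " " + e.getClass().getName());
        }
    }
}
```

A crashing call thus produces a message such as "VUL/FAIL java.lang.NullPointerException", close in spirit to the content of the XML failure block shown below.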

Afterward, JUnit test cases can be executed on Android emulators or devices by means of the test execution framework depicted in Figure 24.5. This framework is composed of the Android test execution tool provided by Google, enriched with the tool PolideaInstrumentation,6 which yields XML reports.

Test cases are executed on Android devices or emulators by an Android Service component, which returns an XML report directly displayed on the device. (External computers are not required during this step.) The test runner starts ![]() and iteratively executes JUnit test cases in separate processes.

This procedure is required to catch the exceptions raised by the Android system when a component crashes. Once all the test cases are executed, the XML report gathers all the assertion results. In particular, VUL and VUL/FAIL messages indicate the detection of a vulnerability issue.

The following example shows part of an XML report describing the crash of a component. A VUL/FAIL message appears inside a failure XML block; hence, the verdict is VUL/FAIL.

```xml
<testsuite errors="0" failures="1" name="packagename.test.Intent.ContactActivityTest" package="packagename.test.Intent" tests="1" time="0.15" timestamp="2013-02-13T10:05:02">
  <testcase classname="packagename.test.Intent.ContactActivityTest" name="test1" time="0.15">
    <failure>VUL/FAIL
INSTRUMENTATION RESULT: shortMsg=java.lang.NullPointerException
INSTRUMENTATION RESULT: longMsg=java.lang.NullPointerException
INSTRUMENTATION CODE: 0
    </failure>
  </testcase>
</testsuite>
```
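Since the verdict is written at the start of the failure element, a report of this shape can be post-processed with the JDK's built-in DOM parser. The sketch below is an assumption about how one might collect verdicts, not part of APSET; the class name is hypothetical and it only relies on the element and attribute names visible in the report above.

```java
import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.util.LinkedHashMap;
import java.util.Map;
import javax.xml.parsers.DocumentBuilderFactory;
import javax.xml.parsers.ParserConfigurationException;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;
import org.xml.sax.SAXException;

/** Hypothetical post-processing of a report: verdict of each failed test case. */
class ReportVerdicts {

    /** Maps "classname#name" of each failed test case to the first word of its failure text. */
    static Map<String, String> failedVerdicts(String xml) {
        Document doc;
        try {
            doc = DocumentBuilderFactory.newInstance().newDocumentBuilder()
                    .parse(new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)));
        } catch (ParserConfigurationException | SAXException | IOException e) {
            throw new IllegalArgumentException("unreadable report", e);
        }
        Map<String, String> verdicts = new LinkedHashMap<>();
        NodeList cases = doc.getElementsByTagName("testcase");
        for (int i = 0; i < cases.getLength(); i++) {
            Element testCase = (Element) cases.item(i);
            NodeList failures = testCase.getElementsByTagName("failure");
            if (failures.getLength() == 0) {
                continue; // this test case passed
            }
            String text = failures.item(0).getTextContent().trim();
            String verdict = text.split("\\s+", 2)[0]; // e.g. "VUL/FAIL"
            verdicts.put(testCase.getAttribute("classname") + "#" + testCase.getAttribute("name"), verdict);
        }
        return verdicts;
    }
}
```

Applied to the report above, this would map `packagename.test.Intent.ContactActivityTest#test1` to `VUL/FAIL`.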

The guard solving, used in Algorithm 24.2 and in the test execution framework, is performed with the SMT (Satisfiability Modulo Theories) solver Z3,7 which we chose since it allows a direct use of arithmetic formulae. However, at the time, it did not support String variables. Therefore, we extended the Z3 expression language with new predicates, in particular with String-based predicates (e.g., in, streq, contains). A predicate stands for a function over ioSTS internal variables and parameters that returns a Boolean value.
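The definitions of in, streq, and contains are not given here. Assuming their intuitive semantics (membership in a finite set of values, string equality, and substring containment), they can be sketched as Boolean functions evaluated on a concrete valuation of the variables. This hypothetical class only evaluates already concretized values; it does not reproduce how APSET encodes such predicates for Z3.

```java
import java.util.Map;
import java.util.Set;

/** Hypothetical concrete semantics for the String predicates in, streq and contains. */
final class StringPredicates {
    private StringPredicates() {
    }

    /** in(x, S): the value of variable x belongs to the finite set S (e.g. an injection set). */
    static boolean in(Map<String, String> valuation, String x, Set<String> values) {
        String value = valuation.get(x);
        return value != null && values.contains(value);
    }

    /** streq(x, s): the value of variable x equals the string s. */
    static boolean streq(Map<String, String> valuation, String x, String s) {
        return s.equals(valuation.get(x));
    }

    /** contains(x, s): the value of variable x has s as a substring. */
    static boolean contains(Map<String, String> valuation, String x, String s) {
        String value = valuation.get(x);
        return value != null && value.contains(s);
    }
}
```

Under these assumptions, a guard conjunction such as in(extra, Inj) ∧ streq(action, "VIEW") evaluates as `in(v, "extra", inj) && streq(v, "action", "VIEW")`, where the variable names are illustrative.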

Experimentation

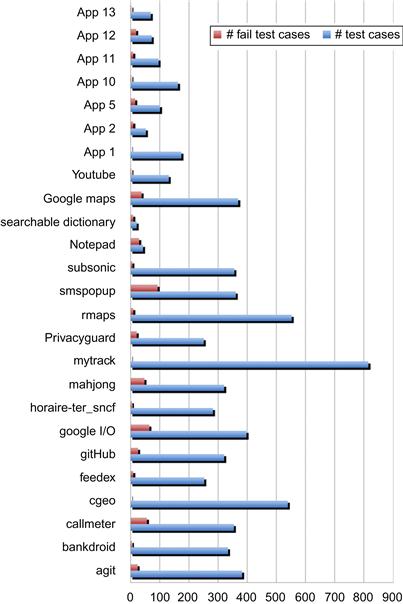

We randomly chose 50 popular applications from the Google Play Store and 20 applications provided by the Openium company. Among these, we kept the 25 applications that include ContentProviders (18 applications from the Android Market and 7 developed by Openium, app 1 up to app 13). We tested them with three vulnerability patterns: ![]() corresponds to the vulnerability pattern taken as an example in this chapter. ![]() checks that an Activity called with intents composed of malicious data cannot change the structure of a database managed by a ContentProvider (modification of attribute names, removal of tables, etc.). ![]() checks that incorrect data, already stored in a database, are not displayed by an Activity after it has been called with intents.

Our tool APSET detected a total of 22 vulnerable applications using only these three vulnerability patterns. Figure 24.6 illustrates the obtained test results. This chart shows the number of test cases executed per application and the number of VUL verdicts. Some applications obtained a high number of VUL verdicts, for example, smspopup with 94 failures. These do not necessarily reflect the number of security defects, though, since several VUL verdicts can arise from the same flaw in the source code.
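One simple heuristic for estimating how many distinct flaws lie behind many VUL verdicts, given here as a hypothetical post-processing step rather than an APSET feature, is to group vulnerable test results by the tested component and the raised exception. The `TestResult` fields are assumed to have been extracted from the XML report beforehand.

```java
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.stream.Collectors;

/** Hypothetical summary of one executed test case, as read from a report. */
final class TestResult {
    final String component;
    final String exception; // empty when no exception was raised
    final String verdict;

    TestResult(String component, String exception, String verdict) {
        this.component = component;
        this.exception = exception;
        this.verdict = verdict;
    }
}

class DefectGrouping {
    /** Counts vulnerable results (VUL or VUL/FAIL) per (component, exception) pair. */
    static Map<String, Long> groupVulnerable(List<TestResult> results) {
        return results.stream()
                .filter(r -> r.verdict.equals("VUL") || r.verdict.equals("VUL/FAIL"))
                .collect(Collectors.groupingBy(r -> r.component + " / " + r.exception,
                        TreeMap::new, Collectors.counting()));
    }
}
```

Each group is only a candidate defect: two groups may still share a root cause, and one group may hide several flaws, so the grouping guides, rather than replaces, the manual analysis of the reports.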

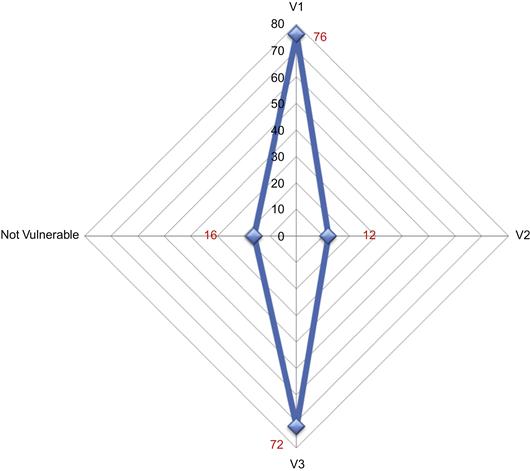

Analyzing XML reports can help developers localize these defects by identifying the faulty components, the raised exceptions, or the actions performed. Figure 24.7 depicts the percentage of applications vulnerable to the vulnerability patterns ![](). Eighty-eight percent of the applications are vulnerable to ![](), ![](), or both, and hence are not protected against SQL injections. Seventy-two percent of the applications display incorrect data on user interfaces (![]()) without checking for consistency.
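Assuming the percentages of Figure 24.7 are computed over the 25 tested applications, they translate back into application counts as follows:

$$
0.88 \times 25 = 22, \qquad 0.72 \times 25 = 18 .
$$

Under that assumption, the 22 applications vulnerable to the two SQL-injection patterns are exactly the 22 vulnerable applications reported by APSET, so every application flagged as vulnerable is vulnerable to at least one of these two patterns, and the 18 applications displaying incorrect data are among them.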

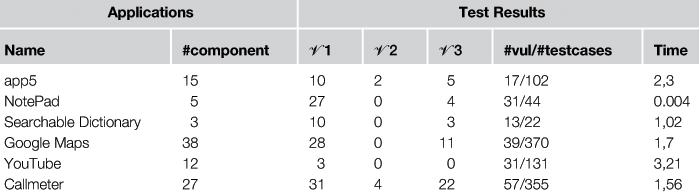

Thereafter, we manually analyzed the test reports and source code of six applications. The test results are given in Table 24.1, which lists, for each application, the number of tested components, the number of issues detected with each vulnerability pattern, and the total number of test cases yielding a vulnerable verdict. For instance, for app5, 102 test cases were generated and 17 revealed vulnerability issues. Ten test cases showed that app5 is vulnerable to ![](). More precisely, one test case showed that personal data can be modified by using malicious intents. App5 crashed with the other test cases, probably because its components handle malicious intents badly. Two test cases revealed that the structure of the database can be modified (![]()). By analyzing the app5 source code, we indeed found that no ContentProvider method was protected against malicious SQL requests. Finally, five test cases revealed the display of incorrect data stored in the database (![]()). This means that the database content is directly displayed in the user interface without validation.

Thirty-nine test cases revealed vulnerability issues in the Google Maps application. Twenty-eight vulnerabilities were detected with ![](): three test cases showed that databases can be updated or modified with incorrect data through the component MapsActivity. One test case revealed the same flaw for the component ResolverActivity. As a consequence, one can conclude that Google Maps is vulnerable to some SQL injections and has integrity issues. The other failed test cases concern MapsActivity crashes. With ![](), the 11 detected defects also correspond to component crashes. For YouTube, we obtained three vulnerability issues corresponding to component crashes, with the exception NullPointerException. No more serious vulnerabilities were detected. Table 24.1 also gives the average test case execution time, measured on a mid-2011 computer with a 2.1 GHz Core i5 CPU. The execution time ranges from a few milliseconds to a few seconds, depending on the number of components and the code of the tested components. These results are consistent with those of other available Android application testing tools [12]. These results, combined with the number of vulnerability issues detected on real applications, tend to show that our tool is effective and substantially improves security vulnerability detection.
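The counts reported for app5 and Google Maps add up consistently; the numbers of crashes below are deduced from the text rather than read from Table 24.1:

$$
\begin{aligned}
\text{app5:}\quad & 10 + 2 + 5 = 17 \text{ of } 102 \text{ test cases } (\approx 16.7\%), \text{ where } 10 = 1 \text{ (data modification)} + 9 \text{ (crashes)},\\
\text{Google Maps:}\quad & 28 + 11 = 39, \text{ where } 28 = 3 + 1 \text{ (data modifications)} + 24 \text{ (MapsActivity crashes)}.
\end{aligned}
$$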

Conclusion

In this chapter, we presented a security testing method for Android applications that aims at detecting data vulnerabilities based on the intent mechanism. The originality of this work resides in the automatic generation of partial specifications, used to generate test cases. These specifications enrich the test verdict with the verdicts NVUL/FAIL and VUL/FAIL, which point out that the component under test does not comply with the recommendations provided in the Android documentation.

They also avoid false positive verdicts, since each component is exclusively tested by means of the vulnerability patterns that share behavior with the component specification. We tested our approach on 25 randomly chosen Android applications. Our tool reported that 22 applications have defects that can be exploited by attackers to crash applications or to extract or modify personal data.

This experimentation first showed that our tool APSET is effective. Its effectiveness could be further improved by using more vulnerability patterns or by generating more test cases per pattern. In comparison to other intent-based testing tools [4,8], APSET is extensible, since existing vulnerability patterns can be modified or new vulnerability patterns can be proposed to meet the testing requirements. The value sets considered for testing (for example, the SQL injection set Inj) and the set of predicates used in guards can be updated as well. Nevertheless, APSET is based only on the intent mechanism. Consequently, it cannot test every kind of vulnerability. For instance, attacks carried out by sequences of user actions performed on the application interface cannot be applied with our tool. APSET does not consider the BroadcastReceiver component type either, although this component type is also vulnerable to malicious intents. These limitations could be explored in future work.

Acknowledgments

This work was undertaken in collaboration with the Openium company.8 We thank them for their valuable feedback and advice on this work.

References

1. IT business: Android security. [Internet]. Retrieved from: http://www.itbusinessedge.com/cm/blogs/weinschenk/google-must-deal-with-android-security-problems-quickly/?cs=49291; 2012 Jun [accessed 02.13].

2. Shabtai A, Fledel Y, Kanonov U, Elovici Y, Dolev S. Google Android: A state-of-the-art review of security mechanisms. CoRR 2009.

3. Felt AP, Chin E, Hanna S, Song D, Wagner D. Android permissions demystified. In: Proceedings of the eighteenth ACM Conference on Computer and Communications Security; 2011. p. 627–38.

4. Chin E, Felt AP, Greenwood K, Wagner D. Analyzing inter-application communication in Android. In: Proceedings of the ninth International Conference on Mobile Systems, Applications, and Services; 2011.

5. Frantzen L, Tretmans J, Willemse T. Test generation based on symbolic specifications. In: Formal Approaches to Software Testing (FATES 2004); 2005. p. 1–17.

6. Android developer. [Internet]. Retrieved from: http://developer.android.com/index.html; 2013 [accessed 02.13].

7. Amalfitano D, Fasolino AR, Tramontana P. A GUI crawling-based technique for Android mobile application testing. In: IEEE Fourth International Conference on Software Testing, Verification and Validation Workshops (ICSTW); 2011. p. 252–61.

8. Kantola D, Chin E, He W, Wagner D. Reducing attack surfaces for intra-application communication in Android. In: Proceedings of the Second ACM Workshop on Security and Privacy in Smartphones and Mobile Devices; 2012. p. 69–80.

9. Zhong J, Huang J, Liang B. Android permission re-delegation detection and test case generation. In: 2012 International Conference on Computer Science and Service System (CSSS); 2012. p. 871–74.

10. Grace M, Zhou Y, Wang Z, Jiang X. Systematic detection of capability leaks in stock Android smartphones. In: Proceedings of the nineteenth Network and Distributed System Security Symposium (NDSS); 2012.

11. Jing Y, Ahn G-J, Hu H. Model-based conformance testing for Android. In: Proceedings of the 7th International Workshop on Security (IWSEC); 2012. p. 1–18.

12. Benli S, Habash A, Herrmann A, Loftis T, Simmonds D. A comparative evaluation of unit testing techniques. In: Proceedings of the 2012 Ninth International Conference on Information Technology; 2012. p. 263–68.

13. Cohen MB, Gibbons PB, Mugridge WB, Colbourn CJ. Constructing test suites for interaction testing. In: Proceedings of the twenty-fifth International Conference on Software Engineering; 2003. p. 38–48.