The new IT products offered in the 1960s radically changed the way business was conducted. Closely linked to this phenomenon was the IBM System/360 (S/360), which was arguably the single most significant event in the advancement of IT in business and society. This new hardware and software architecture produced a truly general-purpose computing system and revolutionized the industry to a degree that is not likely to be seen again.

The popularity of general-purpose computers in the 1960s demonstrated the need for systems management functions addressing batch performance and system availability. During the 1970s and 1980s these functions continued to evolve in both scope and sophistication. As online transaction processing, with its large supporting databases, became more prevalent, functions such as online transaction tuning, database management, and asset security were added to the responsibilities of systems management. The continued refinement and sophistication of the microchip, combined with its plummeting cost, also opened up new hardware architectures that expanded the use of digital computers. This appendix will discuss the evolution of these functions.

If the 1960s were defined as a time of revolution in IT, the 1970s were a time of evolution. The Internet, though developed in the late 1960s, would not see widespread use until the early 1990s. But the decade of the 1970s would witness major changes within IT.

At this point the phenomenal success of the S/360 had stretched the limits of its architecture in ways its designers could not have foreseen. One of the most prevalent of these architectural restrictions was the amount of main memory available for application processing. This operational limitation had effectively limited growth in application sophistication and became a key redesign goal for IBM. As the cost of memory chips fell, the demand for larger amounts of main memory grew. The expensive storage chips of the 1960s—which indirectly led to the Year 2000 (Y2K) problem by prompting software developers to truncate date fields to reduce the use and cost of main memory—were replaced with smaller, faster, and cheaper chips in the 1970s.

Internal addressing and computing inside a digital computer are done using the binary number system. One of the design limitations of the S/360 stemmed from the decision to use addresses of 24 binary digits, or bits, which limited the size of the addressable memory. This architectural limitation had to be resolved before the memory size could be expanded. Briefly, the binary numbering system works as described in the following paragraphs.

A binary (2-valued) digit is either a 0 or a 1. The binary numbering system is also referred to as the base 2 numbering system because only 2 digits exist in the system (0 and 1) and every value is expressed as a sum of powers of 2. In our more familiar decimal (base 10) system, there are 10 distinct digits, 0 through 9, and every value is expressed as a sum of powers of 10. For example, the decimal number 347 is actually:

$$3 \times 10^2 + 4 \times 10^1 + 7 \times 10^0 = 300 + 40 + 7 = 347$$

$10^0$ equals 1 because any nonzero number raised to the 0 power equals 1. Now let’s apply this formula to a base 2 number. Suppose we want to compute the decimal value of the binary number 1101. Applying the previous formula results in:

$$1 \times 2^3 + 1 \times 2^2 + 0 \times 2^1 + 1 \times 2^0 = 8 + 4 + 0 + 1 = 13$$

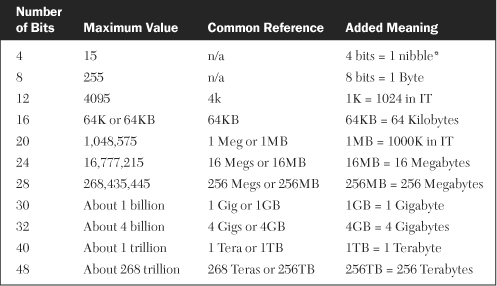

Using the same formula, you should be able to see that the binary number 1111 equals the decimal number 15, binary 11111 equals decimal 31, and so on.
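The positional expansion described above can be sketched in a few lines of Python. This is only an illustration of the arithmetic; the function name `binary_to_decimal` is a hypothetical helper, not something from the original text.

```python
def binary_to_decimal(bits: str) -> int:
    """Sum each binary digit times its power of 2, starting from the rightmost digit."""
    total = 0
    for position, digit in enumerate(reversed(bits)):
        if digit not in ("0", "1"):
            raise ValueError(f"not a binary digit: {digit!r}")
        total += int(digit) * 2 ** position
    return total


# The three examples worked in the text.
for bits in ("1101", "1111", "11111"):
    print(bits, "=", binary_to_decimal(bits))
```

Python's built-in `int("1101", 2)` performs the same conversion; the loop is spelled out here only to mirror the formula.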

The simple formula for computing the maximum decimal number for an n-bit binary number is $2^n - 1$. The maximum decimal value of an 8-bit binary number is:

$$2^8 - 1 = 256 - 1 = 255$$

In a similar manner, the maximum decimal value of a 24-bit binary number is 16,777,215, or approximately 16 million. The maximum number of unique decimal values that could be stored in an 8-bit binary field is actually 256, because 0 counts as a number. Table F-1 shows the maximum decimal values for a variety of n-bit fields, as well as some other common references and additional meanings.*

*Tongue-in-cheek programmers back in the 1960s designated a 4-bit field as a nibble, since it was half of an 8-bit field, which was known universally as a byte. Only a seasoned IT professional can appreciate a programmer’s sense of humor.
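As a quick check on the n-bit limits discussed above, the following Python sketch computes both the largest value and the count of distinct values for a few field sizes. The function names `max_value` and `distinct_values` are hypothetical and chosen only for this illustration.

```python
def max_value(n_bits: int) -> int:
    """Largest unsigned value an n-bit field can hold: 2**n - 1."""
    return 2 ** n_bits - 1


def distinct_values(n_bits: int) -> int:
    """Count of distinct values in an n-bit field, including zero: 2**n."""
    return 2 ** n_bits


# A nibble, a byte, and a 24-bit field.
for n in (4, 8, 24):
    print(f"{n:>2} bits: max {max_value(n):,}, distinct values {distinct_values(n):,}")
```

The count of distinct values is always one more than the maximum because zero occupies one of the bit patterns.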

A digital computer is designed to perform all calculations in binary because most of its circuits are designed to detect only one of two states at a time. All electronic switches and gates inside a computer are designated as being either on (a binary 1) or off (a binary 0). Early computing machines were called digital computers for two reasons:

- They were based on the binary system, which is a two-digit, or digital, numbering scheme.

- The name distinguished them from analog computers, which used continuous values of circuit voltage rather than the discrete values of 1 or 0 employed by their digital counterparts.

But what is the significance of all this discussion about binary numbering systems, the size of fields in bits, and the maximum quantity of unique numbers you can store in a field? Part of the answer lies in the same issue that primarily accounted for the extensive Y2K problem (the so-called millennium bug) in the late 1990s. That root issue was the cost of data storage. In this case, it specifically applied to the cost of main memory.

When IBM engineers first developed the operating system for the S/360, they had to decide how much addressable memory to design into its architecture.

The field in the operating system that would define the maximum memory size would be referenced often in the many routines managing memory and I/O. Making the fields larger than necessary would result in a costly waste of what was then expensive memory.

The expected size of programs and data also entered into the decision about maximum addressable memory. A typical S/360 programming instruction was 4 bytes in length (that is, 32 bits). An unusually large program of several thousand instructions, referencing tens of thousands of bytes of data, would still need only 20 to 30 kilobytes of memory (and that was presuming all of the data would be residing in memory, which normally was not the case).

So when designers finally decided on a 24-bit addressing scheme for the S/360, they expected a maximum addressable memory size of 16 megabytes to more than accommodate memory requirements for a long time to come. But something interesting happened on the way to the 1970s. First, the cost and power of integrated circuits began to change dramatically in the mid-1960s. The price of manufacturing a computer chip plummeted while its relative processing power accelerated. This cost/growth phenomenon became known as Moore’s Law. This growth formula has held true for the past three decades and is forecast to continue for decades to come.

The reduction of computer chip price/performance ratios meant that the cost of using more main memory was also falling. Engineers designing computers in the late 1960s were not as constrained by circuit costs as they had been in the early part of the decade. But taking advantage of inexpensive memory chips by providing vast amounts of main storage meant ensuring that an operating system could address this larger memory.

As we have described, the S/360 had a maximum addressable memory of 16 megabytes. Changing the operating system to support more memory would mean millions of dollars in labor cost to redesign, code, integrate, and test all the necessary routines. Such a shift in strategic direction would require significant business justification.

Part of the justification came from IBM’s customers themselves. The success and popularity of the S/360 caused many users to demand faster computers capable of running more programs concurrently. Companies started seeing the benefit of using more interactive programs that could quickly access records stored in huge corporate databases. These companies were willing to invest more capital in advanced computers as they put more and more of their business online. IBM responded to this need with an evolved version of its S/360 flagship. The new product would be called System/370, or S/370, with its name referencing the decade in which it was introduced.

IBM announced the S/370 in 1970, and the first systems shipped in 1971. Just as S/360 was based on the complex operating system known as OS/360, the new S/370 architecture would be based on the operating system known as OS/370. One of the many unique features of OS/370 was a new memory management system called Multiple Virtual Storage (MVS). Actually, MVS was the final refinement of several prior memory management schemes that included Virtual Storage 1 (VS 1), Single Virtual Storage (SVS), and Virtual Storage 2 (VS 2).

MVS was an ingenious concept that maximized the physical main memory of a computer by extending the apparent, or virtual, size of memory that would be addressable by each program. To accomplish this, MVS developers cleverly based MVS on a common but often overlooked property of most computer programs known as the locality characteristic. The locality characteristic refers to the property of a typical computer program in which the majority of processing time is spent executing a relatively small number of sequential instructions over and over again. Many of the other instructions in the program may be designed to handle input/output (I/O), exception situations, or error conditions and consequently are executed very infrequently.

This means that only a small portion of a program needs to be physically in main memory for the majority of its executing time. Portions of a program not executing, or executing infrequently, could reside on less expensive and more readily available direct access storage devices (DASD). The MVS version of OS/370 utilized these principles of locality to efficiently map a program’s logical, or virtual, memory into a much smaller physical memory.

MVS accomplished this mapping by dividing a program into 4-kilobyte segments called pages. Various algorithms—such as last used, least recently used, and next sequential used—were designed into OS/370 to determine which pages of a program would be written out to DASD and which ones would be read into main memory for execution. In this way, programs could be developed with essentially no memory constraints, because MVS would allow each program to access all of the maximum 16 megabytes of memory virtually, while in reality giving it only a fraction of that amount physically. Program segments infrequently used would be stored out on DASD (said to be paged out), while frequently used segments would reside in main memory (paged in).
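The interaction between locality and paging can be illustrated with a small simulation. The Python sketch below is not MVS's actual algorithm; it assumes a simple least-recently-used replacement policy, and the names `page_number`, `count_page_faults`, and the `frames` parameter are hypothetical choices for this example. It shows how a program with strong locality can run in far less physical memory than its virtual size suggests.

```python
from collections import OrderedDict

PAGE_SIZE = 4096  # 4-kilobyte pages, as described in the text


def page_number(virtual_address: int) -> int:
    """Return the number of the page that contains a virtual address."""
    return virtual_address // PAGE_SIZE


def count_page_faults(addresses, frames: int) -> int:
    """Simulate least-recently-used paging and count the page-ins required.

    `frames` is the number of pages that fit in physical memory at once
    (a hypothetical parameter for this sketch).
    """
    resident = OrderedDict()  # pages in main memory, least recently used first
    faults = 0
    for address in addresses:
        page = page_number(address)
        if page in resident:
            resident.move_to_end(page)  # mark as most recently used
            continue
        faults += 1  # page fault: the page must be read in from DASD
        if len(resident) >= frames:
            resident.popitem(last=False)  # page out the least recently used page
        resident[page] = True
    return faults


# A program with strong locality: a tight loop touching two pages 1,000 times,
# followed by a single call to a rarely used error routine on a distant page.
loop = [0x0000, 0x1000] * 1000
trace = loop + [0x9000]
print(count_page_faults(trace, frames=2))
```

With only two page frames, the 2,001 references in this trace cause just three page faults: one for each loop page and one for the error routine. A trace without locality, one that keeps cycling through more pages than there are frames, would fault on nearly every reference.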

Incidentally, you may think that a software developer who designs even a moderately large program with thousands of lines of code would still need only a few 4-kilobyte pages of memory to execute his or her program. In fact, just about all programs use high-level instructions that represent dozens or even hundreds of actual machine-level instructions for frequently used routines.

In higher-level programming languages such as COBOL or FORTRAN, these instructions are referred to as macros. In lower-level languages such as assembler, these instructions may be machine-level macros such as supervisor calls (SVCs); I/O macros such as READ/WRITE or GET/PUT; or even privileged operating system macros requiring special authorizations.

Suppose, for example, that a programmer were to write a program of 3,000 instructions. Depending on the complexity of the programming logic, this could require up to several weeks of coding and testing. By the time all the high- and low-level macros are expanded, the program could easily grow to 30,000 to 40,000 machine instructions. At roughly 4 bytes per instruction, a 40,000-instruction program would require about 160,000 bytes of memory to run, or about 40 pages of 4 kilobytes each.
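The page count in this example follows from simple arithmetic, sketched here in Python. The constant and function names are hypothetical, and the 4-bytes-per-instruction figure is the rough average used in the text.

```python
import math

BYTES_PER_INSTRUCTION = 4  # rough average from the text
PAGE_SIZE = 4096           # one 4-kilobyte page


def pages_needed(instructions: int) -> int:
    """Number of whole 4 KB pages needed to hold the given number of instructions."""
    program_bytes = instructions * BYTES_PER_INSTRUCTION
    return math.ceil(program_bytes / PAGE_SIZE)


print(40_000 * BYTES_PER_INSTRUCTION)  # program size in bytes
print(pages_needed(40_000))            # pages of 4 KB each
```

The result is rounded up because a partially filled page still occupies a whole page.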

But this is just a single program. Major application development efforts, such as an enterprise-wide payroll system or a manufacturing resource system, could easily have hundreds of programmers working on thousands of programs in total. As online transaction systems began being fed by huge corporate databases, the need for main memory to run dozens of these large applications concurrently became readily apparent. The MVS version of OS/370 addressed this requirement: it enabled multiple applications to run concurrently by accessing large amounts of virtual storage.

While there were many other features of S/370 that contributed to its success, the MVS concept was by far its most prominent. The S/370’s expanded use of memory, which helped proliferate online transaction processing (OLTP) and large databases, gave rise to several more disciplines of systems management.

The impact of the S/370 in general, and of MVS in particular, on systems management disciplines was substantial. Until this time, most of the emphasis with the S/360 was on availability and batch performance. As the S/370 expanded the use of main memory and transaction processing, the emphasis of systems management began shifting from a batch orientation toward online systems.

Several new systems management disciplines were brought about by the proliferation of OLTP systems; the growth of huge databases; and the need to better manage the security, configuration, and changes to these expanding online environments. The online orientation also increased the importance of the already established function of availability.

The discipline of performance and tuning was one of the most obvious changes to systems management. The emphasis on this function in the 1960s centered primarily on batch systems, whereas in the 1970s it shifted significantly to online systems and to databases. Efforts with performance and tuning focused on the online systems and their transactions, on the batch programs used for mass updates, and on the databases themselves. Research in each of these three areas resulted in new processes, tools, and skill sets for this new direction of what was previously a batch-oriented function.

As databases grew in both size and criticality, the emphasis on efficiently managing disk storage space also increased. Primary among database management concerns was the effective backing up and restoring of huge, critical databases. This gave rise to new concepts such as data retention, expiration dates, offsite storage, and generation data groups. Although the price of DASD was falling dramatically during the 1970s, disk space management still needed to be emphasized, as was the case with most IT hardware components. Fragmented files, unused space, and poorly designed indexes and directories were often the expensive result of mismanaged disk storage. All these various factors made storage management a major new function of systems management in the 1970s.

One of the systems management functions that changed the most during the 1970s was—somewhat ironically—change management itself. As we previously discussed, the majority of applications running on mainframe computers during the 1960s were batch-oriented. Changes to these programs could usually be made through a production control function in which analysts would point executable load libraries toward a revised program segment, called a member, instead of toward the original segment. There was nothing very sophisticated, formalized, or particularly risky about this process. If an incorrect member was pointed to, or the revised member processed incorrectly, the production control analyst would simply correct the mistake or point back to the original member and then re-run the job.

This simplicity, informality, and low risk all changed with OLTP. Changes made to OLTP systems increased in frequency, importance, and risk. If a poorly managed change caused an unscheduled outage to a critical online system, particularly during a peak usage period, it could result in hundreds of hours of lost productivity, not to mention the possible risk of corrupted data. So a more sophisticated, formal process for managing changes to production software started to emerge in the mid-to-late 1970s.

Coupled with this more structured approach to change management was a similar evolution for problem management. Help desks became a data center mainstay; these help desks initiated formal logging, tracking, and resolution of problem calls. Procedures involving escalation, prioritization, trending, and root-cause analysis started being developed in support of this process.

As more and more critical corporate data began populating online databases, the need for effective security measures also emerged. The systems management discipline of security was typically implemented at three distinct levels:

• At the operating system level

• At the application level

• At the database level

Later on, network and physical security were added to the mix.

The final systems management discipline that came out of the 1970s was configuration management. As with many of the systems management functions that emerged from this decade, configuration management came in multiple flavors. One was hardware configuration, which involved both the logical and physical interconnection of devices. Another was operating system configuration management, which managed the various levels of the operating systems. A third was application software configuration management, which configured and tracked block releases of application software.

At least four major IT developments occurred during the decade of the 1980s that had substantial influence on the disciplines of systems management:

• The continuing evolution of mainframe computers

• The expanded use of midrange computers

• The proliferation of PCs

• The emergence of client/server systems

A variety of new features were added to the S/370 architecture during the 1980s. These included support for higher-density disk and tape devices, high-speed laser printers, and fiber optic channels. But one of the most significant advances during this decade again involved main memory.

By the early 1980s, corporations of all sizes were running large, mission-critical applications on their mainframe computers. As the applications and their associated databases grew in size and number, they once again bumped up against the memory constraints of the S/370 architecture. Few would have believed back in the early 1970s that the almost unlimited size of virtual storage would prove to be too little. Yet by the early 1980s, that is exactly what happened.

IBM responded by extending its maximum S/370 addressing field from 24 bits to 31 bits (one bit of the 32-bit address word was reserved to indicate the addressing mode). Using the same arithmetic as in Table F-1, this meant that the maximum addressable memory was now extended from 16 megabytes to 2 gigabytes (about 2 billion bytes of memory). This new version of the S/370 operating system was appropriately named Multiple Virtual Storage/Extended Architecture, or MVS/XA.

The demand for more memory was coupled with increased demands for processing power and disk storage. This led to a more formal process for planning the capacity of CPUs, main memory, I/O channels, and disk storage. The large increase in mission-critical applications also led to a more formal process for accepting major application systems into production and for managing changes. Finally, as the availability of online systems became more crucial to the success of a business, the process of disaster recovery became more formalized.

Midrange computers had been in operation for many years prior to the 1980s. But during this decade, these machines flourished even more, in both numbers and importance. In the 1970s, Hewlett-Packard and DEC both thrived in the midrange market, with HP dominating among small businesses and manufacturing companies while DEC carved out an impressive niche in the medical industry.

Contributing to this surge in midrange sales was the introduction of IBM’s Application System/400, or AS/400. As more and more critical applications started running on these midrange boxes, disaster recovery now arose as a legitimate business need for these smaller computer systems; it was a natural extension of mainframe disaster recovery.

The PC, like many technological advancements before it, was born in rather humble surroundings. But what began almost as a hobby in a Los Altos, California, garage in the mid-1970s completely transformed the IT industry during the 1980s. Steve Jobs and Steve Wozniak, cofounders of Apple Computer, envisioned a huge, worldwide market. However, they were initially limited to selling mostly to hobbyists and schools, with many of the academic Apples greatly discounted or donated. Conservative corporate America was not yet ready for what many perceived to be little more than an amateur hobbyist’s invention, particularly if it lacked the IBM trademark.

Eventually IBM came to embrace the PC, and American business followed. Companies began to see the productivity gains of various office automation products such as word processors, spreadsheets, and graphics presentation packages. Engineering and entertainment firms also saw the value in the graphics capabilities of PCs, particularly Apple’s advanced Macintosh model. By the middle of the decade, both Apple and IBM-type PCs were prospering.

As the business value of PCs became more apparent to industry, the next logical extension was to network them together in small local groups to enable the sharing of documents, spreadsheets, and electronic mail (or email). The infrastructure used to connect these small clusters of PCs became known as local area networks (LANs). This growth of LANs laid the groundwork for the evolving systems management discipline of network management. The expansion of LANs also contributed to the expansion of security beyond operating systems, applications, and databases and out onto the network.

Toward the end of the 1980s, a variation of an existing IT architecture began to emerge. The cornerstone of this technology was a transaction-oriented operating system developed first by AT&T Bell Laboratories and later refined by the University of California at Berkeley. It was called UNIX, short for uniplexed information and computing service. The name was selected to distinguish it from the earlier mainframe operating system MULTICS, short for multiplexed information and computing service. Like midrange computers, UNIX had its origins in the late 1960s and early 1970s, but really came into its own in the 1980s.

As mini-computers—later referred to as workstation servers and eventually just servers—became more powerful and PCs became more prevalent, the merging of these two entities into a client/server (PC-server) relationship emerged. One of the outcomes of this successful merger was the need to extend storage management to client/server applications. The problems and solutions of mainframe and midrange backups and restores now extended to client/server environments.

As we have seen, the 1980s ushered in several significant advances in IT. These, in turn, necessitated several new disciplines of systems management. The continuing evolution of the S/370 highlighted the need for disaster recovery, capacity planning, change management, and production acceptance. The proliferation and interconnection of PCs created the need for formal network management.

Just as it is difficult to define the exact date of the origin of the computer, it is similarly difficult to pinpoint the beginning of some of the disciplines of systems management. I believe that the timing of these new systems management disciplines is closely linked to the major events in the progression of IT.

Just as new systems management disciplines resulted from the IT developments of the 1980s, existing systems management processes were impacted by those same IT developments. This included security, which now extended beyond batch applications, online systems, and corporate databases to include the network arena.

It also included the function of storage management, which now extended beyond the mainframe and midrange environments to that of client/server systems. As more and more critical applications began running on these platforms, there was a genuine need to ensure that effective backups and restores were performed on these devices.

The growth of online systems with huge corporate databases, coupled with falling prices of memory chips, exposed the limited memory of the otherwise immensely successful S/360. IBM responded with an evolved architecture called S/370, which featured a memory mapping scheme. The MVS memory mapping scheme essentially gave each application access to the full 16 megabytes of memory available in OS/370.

MVS helped proliferate OLTP systems and extremely large databases. These, in turn, shifted the emphasis of performance and tuning away from a batch orientation to that of online transactions and databases. Five other systems management functions also became prominent during this time:

• Storage management

• Change management

• Problem management

• Security

• Configuration management

Four of the key IT developments of the 1980s that helped advance the field of systems management were the evolution of mainframe computing, the expanded use of midrange computers, the proliferation of PCs, and the emergence of client/server systems.

The continuing evolution of mainframe computers helped formalize capacity planning, production acceptance, change management, and disaster recovery. The expanded use of midrange computers encouraged the refinement of disaster-recovery processes.

The proliferation of PCs brought the discipline of network management into the forefront. Finally, the 1980s saw the coupling of two emerging technologies: the utility of the workstation server merged with the power of the desktop computer to usher in the widespread use of client/server computing. Among the many offshoots of client/server computing was the refinement of the storage management function.