This appendix concludes our historical look at systems management by presenting key IT developments in the decade of the 1990s along with a few predictions for the new millennium. This is worth reviewing because the future direction of IT is where many of us will spend much of our professional lives.

The proliferation of interconnected PCs led to critical emphasis on the performance and reliability of the networks that connected all these various clients to their servers. As a result, capacity planning expanded its discipline into the more widespread area of networks, with multiple distributed computing resources of various architectures.

Undoubtedly the most significant IT advancement in the 1990s was the world-wide explosion of the Internet. In the span of a few short years, companies went from dabbling in limited exposure to the Internet to becoming totally dependent on its information resources. Many firms have spawned their own intranets and extranets. Security, availability, and capacity planning are just some of the disciplines touched by this incredibly potent technology.

No discussion of the new millennium would be complete without mention of the ubiquitous Y2K computer problem (the so-called millennium bug). Many IT observers predicted that this fatal software flaw would bring down virtually every non-upgraded computer at midnight on December 31, 1999. Although the problem was identified and addressed in time to prevent widespread catastrophic impacts, its influence on a few of the systems management disciplines is worth noting.

The 1990s saw the world of mainframes come full circle. During the 1970s, many companies centralized their large processors in what became huge data centers. Favorable economic growth in the 1980s allowed many businesses to expand both regionally and globally. Many of the IT departments in these firms decentralized their data centers to distribute control and services locally. Driven by the need to reduce costs in the 1990s, many of these same companies recentralized into even larger data centers. This is a pattern that continues to repeat every few years as companies grow, split off, merge, acquire, or sell other business entities.

As the mainframe architectures migrated back to major data centers, managers felt increased pressure to improve the overall efficiency of their operating environments. One method employed to accomplish this was to automate parts of the computer operations organization. Terms such as automated tape libraries, automated consoles, and automated monitoring started becoming common in the 1990s.

As data centers gradually began operating more efficiently and more reliably, so did the environmental functions of these centers. Environmental controls for temperature, humidity, and electrical power became more automated and integrated into the overall management of the data center. The function of facilities management had certainly been around in one form or another in prior decades. But during the 1990s it emerged and progressed as a highly refined function for IT operations managers.

Mainframes themselves also went through some significant technical refinements during this time frame. One of the most notable advances again came from IBM. An entirely new architecture that had been in development for over a decade was introduced as System/390 (S/390), with its companion operating system OS/390. Having learned their lessons about memory constraints in years past, IBM engineers planned to stay ahead of the game by designing a 48-bit memory addressing field into the system. This would eventually provide the capability to address up to approximately 281 trillion bytes of memory. Will this finally be enough memory to satisfy the demands of the new millennium? Only time will tell, of course. But there are systems already announced, such as Windows 7, with 64-bit addressing schemes. In all likelihood, the 48-bit memory addressing will not be the next great limiting factor in IT architectures; more probably it will be either the database or network arenas that will apply the brakes.
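The size of an address space follows directly from the width of the address field. The short Python sketch below works through that arithmetic for the 48-bit and 64-bit schemes mentioned above. It assumes byte-addressable memory, and the function name `addressable_bytes` is purely illustrative.

```python
def addressable_bytes(address_bits: int) -> int:
    """Number of distinct byte addresses for a given address width.

    Assumes each address refers to exactly one byte.
    """
    return 2 ** address_bits


for bits in (48, 64):
    # The ',' format specifier inserts thousands separators for readability.
    print(f"{bits}-bit addressing: {addressable_bytes(bits):,} bytes")
```

A 48-bit field yields 281,474,976,710,656 addresses, and each additional address bit doubles that figure.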

IBM’s new architecture also introduced a different process technology for its family of processors. It is based on complementary metal-oxide semiconductor (CMOS) technology. While not as new or even as fast as other circuitry IBM has used in the past, it is relatively inexpensive and extremely low in heat generation. Heat has often plagued IBM and others in their pursuit of the ultimate fast and powerful circuit design. CMOS also lends itself to parallel processing, which more than compensates for its slightly slower execution speeds.

Other features were designed into the S/390 architecture that helped prepare it for some of the emerging technologies of the 1990s. One of these was the more robust use of high-speed, fiber-optic channels for I/O operations. Another was the integrated interface for IBM’s version of UNIX, which it called AIX (for advanced interactive executive). A third noteworthy feature of the S/390 was its increased channel port capacity. This was intended to handle anticipated increases in remote I/O activity primarily due to higher Internet traffic.

The decade of the 1990s saw many companies transforming themselves in several ways. Downsizing and rightsizing became common buzzwords as global competition forced many industries to cut costs and become more productive. Acquisitions, mergers, and even hostile takeovers became more commonplace as industries as diverse as aerospace, communications, and banking endured corporate consolidations.

The push to become more efficient and streamlined drove many companies back to centralizing their IT departments. The process of centralization forced many IT executives to take a long, hard look at the platforms in their shop. This was especially true when merging companies attempted to combine their respective IT shops. This recognition highlighted the need for a global evaluation to identify which types of platforms would work best in a new consolidated environment and which kinds of operational improvements each platform type offered.

By the early 1990s, midrange and client/server platforms both had delivered improvements in two areas:

- The volumes of units shipped, which had more than doubled in the past 10 years.

- The types of applications now running on these platforms.

Companies that had previously run almost all of their mission-critical applications on large mainframes were now migrating many of these systems onto smaller midrange computers, or more commonly onto client/server platforms.

The term server itself was going through a transformation of sorts about this time. As more data became available to clients—both human and machine—for processing, the computers managing and delivering this information started being grouped together as servers, regardless of their size. In some cases, even the mainframe was referred to as a giant server.

As midrange computers became more network-oriented, and as the more traditional application and database servers became more powerful, the differences in terms of managing the two types began to fade. This was especially true in the case of storage management. Midrange and client/server platforms by now were both running mission-critical applications and enterprise-wide systems. This meant the backing up of their data was becoming just as crucial a function as it had been for years on the mainframe side.

The fact that storage management was now becoming so important on such a variety of platforms refined the storage management function of systems management. The discipline evolved into an enterprise-wide process involving products and procedures that no longer applied just to mainframes but to midrange and client/server platforms as well. Later in the decade, Microsoft introduced its new, 32-bit NT architecture that many in the industry presumed stood for New Technology. As NT became prevalent, storage management was again extended and refined to include this new platform offering.

Just as NT began establishing itself as a viable alternative to UNIX, a new operating system arrived on the scene that was radically different in concept and origin. In 1991, Linus Torvalds, a 21-year-old student at the University of Helsinki in Finland, wrote his own operating system (called Linux). It was to be an improved alternative to the well-established but flawed UNIX system and to the unproven and fledgling NT system. More importantly, it would be distributed free over the Internet with all of its source code made available to users for modification. Thus began a major new concept of free, open source code for a mainstream operating system in which users are not only allowed to improve and modify the source code for their own customized use, they are encouraged to do so. As of 2006, less than 2 percent of the current Linux operating system has Torvalds’s original code in it.

By the time the last decade of the 20th century rolled around, PCs and the networks that interconnected them had become an ingrained part of everyday life. Computer courses were being taught in elementary schools for the young, in senior citizen homes for the not so young, and in almost every institution of learning in between.

Not only had the number of PCs grown substantially during these past 10 years, but so had their variety. The processing power of desktop computers changed in both directions. Those requiring extensive capacity for complex graphics and analytical applications evolved into powerful—and expensive—PC workstations. Those needing only minimal processing capability (such as for data entry, data inquiries, or Internet access) were offered as less-expensive network computers.

These two extremes of PC offerings are sometimes referred to as fat clients and thin clients, with a wide variation of choices in between. Desktop models were not the only offering of PCs in the 1990s. Manufacturers offered progressively smaller versions commonly referred to as laptops (for portable use), palmtops (for personal scheduling—greatly popularized by the Personal Digital Assistant Palm Pilot and the BlackBerry, which is shown in Figure G-1), and thumbtops (an even smaller, though less popular offering). These models provided users with virtually any variation of PC they required or desired.

Laptop computers alone had become so popular by the end of the decade that most airlines, hotels, schools, universities, and resorts had changed policies, procedures, and facilities to accommodate the ubiquitous tool. Many graduate schools taught courses that actually required laptops as a prerequisite for the class.

As the number and variety of PCs continued to increase, so also did their dependence on the networks that interconnected them. This focused attention on two important functions of systems management: availability and capacity planning.

Availability now played a critical role in ensuring the productivity of PC users in much the same way as it did during its evolution from mainframe batch applications to online systems. However, the emphasis on availability shifted away from the computers themselves because most desktops had robust redundancy designed into them. Instead, the emphasis shifted to the availability of the LANs that interconnected all these desktop machines.

The second discipline that evolved from the proliferation of networked PCs was capacity planning. It, too, now moved beyond computer capacity to that of network switches, routers, and especially bandwidth.

Among all the numerous advances in the field of IT, none has had more widespread global impact than the unprecedented growth and use of the Internet.

What began as a relatively limited-use tool for sharing research information between Defense Department agencies and universities has completely changed the manner in which corporations, universities, governments, and even families communicate and do business.

The commonplace nature of the Internet in business and in the home generated a need for more stringent control over access. The systems management function of strategic security evolved as a result of this need by providing network firewalls, more secure password schemes, and effective corporate policy statements and procedures.

As the capabilities of the Internet grew during the 1990s, the demand for more sophisticated services intensified. New features such as voice cards, animation, and multimedia became popular among users and substantially increased network traffic. The need to more effectively plan and manage these escalating workloads on the network brought about additional refinements in the functions of capacity planning and network management.

By 1999 there were probably few people on the planet who had not at least heard something about the Y2K computer problem. While many are now at least somewhat aware of what the millennium bug involved, this section describes it once more for readers who may not fully appreciate the source and significance of the problem.

As mentioned earlier, the shortage of expensive data storage, both in main memory and on disk devices, led application software developers in the mid-1960s to conserve on storage space. One of the most common methods used to save storage space was to shorten the length of a date field in a program. This was accomplished by designating the year with only its last two digits. The years 1957 and 1983, for example, would be coded as 57 and 83, respectively. This would reduce the four-byte year field down to two bytes.

Taken by itself, a savings of two bytes of storage may seem relatively insignificant. But date fields were very prevalent in numerous programs of large applications, sometimes occurring hundreds or even thousands of times. An employee personnel file, for example, may contain separate fields for an employee date of birth, company start date, company anniversary date, company seniority date, and company retirement date—all embedded within each employee record. If the company employed 10,000 workers, the firm would store 50,000 date fields in its personnel file. The two-byte savings from the contracted year field would total 100,000 bytes, or about 100 kilobytes of storage for a single employee file.

Consider an automotive or aerospace company that could easily have millions of parts associated with a particular model of a car or an airplane. Each part record may have fields for a date of manufacture, a date of install, a date of estimated life expectancy, and a date of actual replacement. The two-byte reduction of the year field in these inventory files could result in millions of bytes of savings in storage.
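The storage arithmetic in these two examples can be checked with a few lines of Python. The sketch below is illustrative only: the function name `year_field_savings` is hypothetical, and the figure of one million part records is an assumed stand-in for the "millions of parts" described above.

```python
def year_field_savings(records: int, date_fields_per_record: int,
                       bytes_saved_per_field: int = 2) -> int:
    """Total bytes saved by storing a two-digit year instead of four digits."""
    return records * date_fields_per_record * bytes_saved_per_field


# Personnel file: 10,000 employees, five date fields per employee record.
personnel_savings = year_field_savings(records=10_000, date_fields_per_record=5)

# Parts inventory: an assumed 1,000,000 part records, four date fields each.
inventory_savings = year_field_savings(records=1_000_000, date_fields_per_record=4)

print(f"Personnel file savings: {personnel_savings:,} bytes")
print(f"Parts inventory savings: {inventory_savings:,} bytes")
```

Under these assumptions the personnel file saves 100,000 bytes and the parts inventory saves 8,000,000 bytes, which was a meaningful amount when both main memory and disk space were scarce and expensive.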

But a savings in storage was not the only reason that a two-byte year field in a date record was used. Many developers needed to sort dates within their programs. A common scheme used to facilitate sorting was to compose the date in a yy-mm-dd format. The two-digit year gave a consistent, easy-to-read, and easy-to-document form for date fields, not to mention slightly faster sort times due to fewer bytes that must be compared.
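The appeal of the yy-mm-dd layout for sorting can be seen in a small Python sketch. Because every field has a fixed width and the most significant field comes first, a plain character-by-character sort also puts the dates in chronological order, provided all of them fall within the same century. The sample dates and the function name `sort_yymmdd` are illustrative assumptions.

```python
def sort_yymmdd(dates: list[str]) -> list[str]:
    """Sort fixed-width yy-mm-dd strings with an ordinary string comparison."""
    return sorted(dates)


# Sample dates, all assumed to fall in the 1900s.
sample = ["83-07-04", "57-10-04", "83-01-15", "69-07-20"]
print(sort_yymmdd(sample))
```

No date parsing is needed here; the string comparison alone yields 57-10-04, 69-07-20, 83-01-15, 83-07-04.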

Perhaps the most likely reason that programmers were not worried about truncating their year fields to two digits was that few, if any, thought that their programs would still be running in production 20, 30, and even 40 years later. After all, the IT industry was still in its infancy in the 1960s, when many of these programs were first developed. IT was rapidly changing on a year-to-year and even a month-to-month basis. Newer and more improved methods of software development (such as structured programming, relational databases, fourth-generation languages, and object-oriented programming) would surely replace these early versions of production programs.

Of course, hindsight is often 20/20. What we know now but did not fully realize then was that large corporate applications became mission-critical to many businesses during the 1970s and 1980s. These legacy systems grew in such size, complexity, and importance over the years that it became difficult to justify the large cost of their replacements.

The result was that countless numbers of mission-critical programs developed in the 1960s and 1970s with two-byte year fields were still running in production by the late 1990s. This presented the core of the problem, which was that the year 2000 in most of these legacy programs would be interpreted as the year 1900, causing programs to fail, lock up, or otherwise generate unpredictable results.
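A minimal Python sketch illustrates how a two-digit year could misbehave once the calendar rolled over. It does not reproduce any particular legacy program; the function names `elapsed_years` and `expand_legacy_year` and the sample values are hypothetical.

```python
def elapsed_years(start_yy: int, end_yy: int) -> int:
    """Elapsed years computed directly from two-digit years."""
    return end_yy - start_yy


def expand_legacy_year(yy: int) -> int:
    """Expand a two-digit year the way many legacy programs implicitly did."""
    return 1900 + yy


# An employee hired in 1983, with seniority evaluated in 1999 and in 2000.
print(elapsed_years(83, 99))   # 16 years, as expected
print(elapsed_years(83, 0))    # -83 years once the current year is stored as 00
print(expand_legacy_year(0))   # 1900 rather than 2000

# Sorting yy-mm-dd strings also breaks across the century boundary:
# January 1, 2000 now sorts ahead of December 31, 1999.
print(sorted(["99-12-31", "00-01-01"]))
```

Negative intervals and misordered records of this kind are examples of the unpredictable results that remediation efforts had to find and correct.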

A massive remediation and replacement effort began in the late 1990s to address the Y2K computer problem. Some industries, such as banking and airlines, spent up to four years correcting their software to ensure it ran properly on January 1, 2000. These programming efforts were, by and large, successful in heading off any major adverse impacts of the Y2K problem.

There were also a few unexpected benefits from the efforts to address the millennium bug. One was that many companies were forced to conduct a long-overdue inventory of their production application profiles. Numerous stories surfaced about IT departments discovering from these inventories that they were supporting programs no longer needed or not even being used. Not only did application profiles become more accurate and current as a result of Y2K preparations, the methods used to compile these inventories also improved.

A second major benefit of addressing the Y2K problem was the refinements it brought about in two important functions of systems management. The first was in change management. Remedial programming necessitated endless hours of regression testing to ensure that the output of the modified Y2K-compliant programs matched the output of the original programs. Upgrading these new systems into production often resulted in temporary back-outs and then re-upgrading. Effective change management procedures helped to ensure these upgrades were done smoothly and permanently when the modifications were done properly; they also ensured that the back-outs were done effectively and immediately when necessary.

The function of production acceptance was also refined as a result of Y2K. Many old mainframe legacy systems were due to be replaced because they were not Y2K compliant. Most of these replacements were designed to run on client/server platforms. Production acceptance procedures were modified in many IT shops to better accommodate client/server production systems. This was not the first time mission-critical applications were implemented on client/server platforms, but the sheer increase in numbers due to Y2K compliance forced many IT shops to make their production acceptance processes more streamlined, effective, and enforceable.

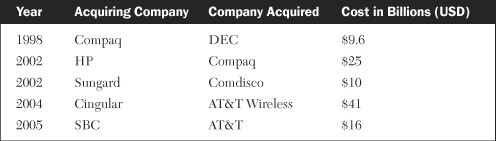

A number of major events helped shape the IT environment during the outset of the new millennium. Some of these events combined the forces of technology, economics, and politics. For example, a number of technology companies purchased other firms in a series of high-profile, mega-billion (U.S. dollar) acquisitions (see Table G-1). Many of these mergers put additional strain on their IT infrastructures in attempting to integrate dissimilar platforms, products, and processes.

Toward the end of the 1990s and beyond, many companies world-wide scrambled to provide a presence on the Web. This resulted in many hastily designed websites with little regard for security. Almost overnight, cyberspace became the playground of hackers and crackers, some merely wanting to pull off pranks but many others with malicious intent. New legislation, prosecutions, and jail time heightened the awareness of how vulnerable the Internet could be and greatly increased the emphasis on network and data security.

Companies also began using the Internet for e-commerce. This impacted not only security but other IT functions, such as availability and capacity. The giant toy retailer Toys-R-Us experienced this first-hand when the capacity of its network and website could not handle the huge amount of pre-Christmas traffic in 1999, leaving many a child without toys on Christmas morning.

The emotional, political, and economic effects of the 9/11 attacks on the World Trade Center are still being felt and assessed. One of the results was greater concern and emphasis on disaster recovery and business continuity. This obviously impacts several other infrastructure processes such as availability, security, and facilities management.

The next 10 to 20 years will be very exciting times for IT infrastructures. A number of currently emerging technologies will be refined and made commonplace. Wireless technology will continue to evolve mobility and portability beyond its present state of emails and the Internet to possibly include full-scale application access and processing. Similarly, biometrics and voice recognition may well advance to such sophisticated levels that identity theft becomes a crime of the past. Researchers are already working on implanted chips that combine personal identification information with DNA characteristics to verify identities.

The manufacturing of computer chips will also drastically change in the next few years. Scientists are now experimenting with re-programmable chips that can re-configure themselves depending on the type of tasks they are required to perform. Improvements in power and miniaturization will continue, enabling tiny devices to process millions of operations almost instantaneously. The integration of technologies will continue accelerating beyond today’s ability to combine the functions of a telephone, camera, laptop computer, music player, geo-positioning satellite, and dozens of other functions yet to be developed. These various functions will all need to be controlled and managed with robust infrastructure processes.

Without question, the Internet will continue expanding and improving in performance and functions. Current concerns about capacity and network bandwidth are already being addressed. Improvements to security are enabling the proliferation of wireless technologies. Researchers are investigating technologies today that will enable tomorrow’s next generation of the Internet to increase its speed, power, and capacity tenfold. This blinding speed could enable companies and users to utilize and integrate more powerful applications on the Web, and will greatly enhance streaming video, Web TV, and large-scale video conferencing.

Regardless of the number of technical advances and the usefulness that they offer, there will always be the need to design their use appropriately, to implement their features properly, and to manage their results responsibly. Issues of security, availability, performance, and business continuity will always be present and need to be addressed. This is where the prudent execution of IT systems management becomes most valuable.

Table G-2 summarizes these timelines and shows for which systems the disciplines emerged and evolved.

The trend to recentralize and optimize the corporate computer centers of the new millennium led to highly automated data centers. This emphasis on process automation led to increased scrutiny of the physical infrastructure of data centers, which, in turn, led to a valuable overhaul of facilities management. As a result, most major computer centers have never been more reliable in availability, nor more responsive in performance. Likewise, most have never been more functional in design nor more efficient in operation.

The 1990s saw the explosive and global growth of the Internet. The widespread use of cyberspace led to improvements in network security and capacity planning. Midrange computers and client/server platforms moved closer to each other, technology-wise, and pushed forward the envelope of enterprise-wide storage management. The popularity of UNIX as the operating system of choice for servers, particularly application and database servers, continued to swell during this decade. IBM adapted its mainframe architecture to reflect the popularity of UNIX and the Internet. Similarly, Microsoft refined its NT operating system and Internet Explorer browser to improve the use of the Internet and to aggressively compete against the incumbent UNIX and the upstart Linux.

PCs proliferated at ever-increasing rates, not only in numbers sold but in the variety of models offered. Client PCs of all sizes were now offered as thin, fat, laptop, palmtop, or even thumbtop. The interconnection of all these PCs with each other and with their servers caused capacity planning and availability for networks to improve as well.

The final decade of the second millennium concluded with a flurry of activity surrounding the seemingly omnipresent millennium bug. As most reputable IT observers correctly predicted, the problem was sufficiently addressed and resolved for most business applications months ahead of the year 2000. The domestic and global impacts of the millennium bug proved to have been relatively negligible. The vast efforts expended in mitigating the impacts of Y2K did help to refine both the production acceptance and the change management functions of systems management.

This appendix and the previous two appendices collectively presented a unique historical perspective of some of the major IT developments that took place during the latter half of the 20th century and the first decade of this century. These developments had a major influence on initiating or refining the disciplines of systems management. Table G-2 summarized a timeline for the 12 functions that are described in detail in Part Two of this book.