The TV picture

When you sit in your living room, watching TV, have you ever wondered how the picture is made up? If you are reading this book, the answer is probably ‘yes’.

How can we send a moving picture down a cable or over the air? Well, we have to break it up into pieces. Think of each picture you see on the screen at any one instant as a frame – like a single slide in a slide show – and TV is just a very fast slide show. Within each frame of the TV picture, there are areas of different brightness (luminance) and colour (chrominance). We call the smallest perceptible block of picture a ‘pixel’ (an abbreviation of picture element).

Now we need to know which parts of the picture are bright and which parts are particular colours. So we divide the frame up into tiny horizontal strips referred to as lines, and each frame of a TV picture is made up of 525 or 625 lines. The number of lines depends on the TV standard used in a particular country – for example, it is 525 lines in the United States and Japan, and 625 lines in Europe. We also need to define how many frames we are going to send each second, and that is either 25 or 30 fps (frames per second). Finally, the lines are ‘interlaced’ within frames – we will see how this works in a minute.

Line and frame structure of TV picture

Colour information

Let us just briefly look at the way the luminance and chrominance information is conveyed to the brain. The eye registers the amount of light coming from a scene, and visible light is made up of differing amounts of the three primary colours of red, green and blue. In terms of TV, the colour white is made up of proportions of red (30%), green (59%) and blue (11%) signals.
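As a rough illustration of those proportions, the short Python sketch below forms a luminance value as a weighted sum of the three primaries. The function name `luminance` and the 0.0 to 1.0 signal range are assumptions made for this example only; the weights are the percentages quoted above.

```python
# Luminance weights quoted in the text: red 30%, green 59%, blue 11%.
LUMA_WEIGHTS = (0.30, 0.59, 0.11)


def luminance(red, green, blue):
    """Return the weighted sum of the three primaries (each from 0.0 to 1.0)."""
    w_r, w_g, w_b = LUMA_WEIGHTS
    return w_r * red + w_g * green + w_b * blue


# Full-strength white uses all three primaries; the saturated colours use one.
for name, rgb in [("white", (1, 1, 1)), ("red", (1, 0, 0)),
                  ("green", (0, 1, 0)), ("blue", (0, 0, 1))]:
    print(f"{name:5s} luminance = {luminance(*rgb):.2f}")
```

Because the three weights add up to 100%, full-strength red, green and blue together give full-strength white, while a pure green area appears much brighter on a monochrome set than a pure blue one.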

As with many aspects of TV technology, the method of transmitting a colour signal is a compromise. Back in the 1950s and 1960s when colour TV was being introduced, both the American (NTSC) and European (PAL) colour systems had to be able to produce a satisfactory black and white signal on the existing monochrome TV sets. Therefore, both colour systems derive a composite luminance signal by ‘matrixing’ (mixing) the red, green and blue signals. The matrix circuitry also produces colour difference signals. Monochrome receivers disregard these when they are transmitted, but colour receivers re-matrix them with the luminance signal to reconstitute the original full colour picture.

Why produce colour difference signals? Why not simply transmit the red, green and blue signals individually? Well, this is a demonstration of the early use of compression in the analogue domain.

Individual red, green and blue signals would each require full bandwidth, and as a video signal requires around 5 MHz of bandwidth for reasonable sharpness, the three colour signals would require 15 MHz in total. The analogue TV signal that is transmitted to the viewer is termed ‘composite’, as it combines the luminance, colour and timing signals into one signal. Embedding the colour information as ‘colour difference’ signals alongside the luminance signal requires only 5.5 MHz of bandwidth.

Capitalizing on the brain’s weaknesses

By relying on the relatively poor colour perception of the human brain, the colour signals can be reduced in bandwidth so that the entire luminance and chrominance signal fits into 5 MHz of bandwidth – a reduction of 3:1. This is achieved by the use of colour difference signals – known as the red difference signal and the blue difference signal – which are essentially signals representing the difference in brightness between the luminance and each colour signal.

The eye is more sensitive to changes in brightness than in colour, i.e. it can more easily see an error when a pixel is too bright or too dark than when it has too much red or too much blue. What happened to the green signal, then? Well, if we have the luminance (which, if you recall, is made up of red, green and blue) and the red and blue difference signals, we can derive the green signal from these three signals by a process of subtraction.
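The recovery of green can be sketched in a few lines of Python. This is only an illustration under stated assumptions: each difference signal is taken as the colour signal minus the luminance (the usual convention, although the text does not fix the sign), the weights are the 30/59/11 proportions quoted earlier, and the names `encode` and `decode` are invented for this example.

```python
W_R, W_G, W_B = 0.30, 0.59, 0.11  # luminance weights quoted earlier in the text


def encode(red, green, blue):
    """Matrix R, G, B into luminance plus the red and blue difference signals."""
    y = W_R * red + W_G * green + W_B * blue
    # Assumed convention: difference = colour signal minus luminance.
    return y, red - y, blue - y


def decode(y, red_diff, blue_diff):
    """Rebuild R, G, B from luminance and the two difference signals."""
    red = red_diff + y
    blue = blue_diff + y
    # Green is the part of the luminance not accounted for by red and blue.
    green = (y - W_R * red - W_B * blue) / W_G
    return red, green, blue


y, red_diff, blue_diff = encode(0.2, 0.7, 0.4)
print(decode(y, red_diff, blue_diff))  # approximately the original values
```

Only three signals are sent, yet all three colours come back, because the luminance already contains a known share of green.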

Frames

We now need to process each frame of the picture. In NTSC, there are 525 lines in every frame, and the frame rate is 30 fps; in PAL, there are 625 lines per frame, and the frame rate is 25 fps.
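To get a feel for what these figures imply, the following Python sketch multiplies lines per frame by frames per second to give the number of lines scanned each second, and from that the time available for each line. It uses only the nominal figures quoted above; the function names are made up for this example.

```python
# (lines per frame, frames per second) as quoted in the text; nominal values.
STANDARDS = {"NTSC": (525, 30), "PAL": (625, 25)}


def lines_per_second(lines_per_frame, frames_per_second):
    """Number of lines that must be scanned every second."""
    return lines_per_frame * frames_per_second


def line_duration_us(lines_per_frame, frames_per_second):
    """Time available for one line, in microseconds."""
    return 1_000_000 / lines_per_second(lines_per_frame, frames_per_second)


for name, (lines, fps) in STANDARDS.items():
    print(f"{name}: {lines_per_second(lines, fps)} lines per second, "
          f"{line_duration_us(lines, fps):.1f} microseconds per line")
```

Although the two systems differ in both line count and frame rate, the products come out very close: 15 750 lines per second for NTSC and 15 625 for PAL, or roughly 64 microseconds per line in each case.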

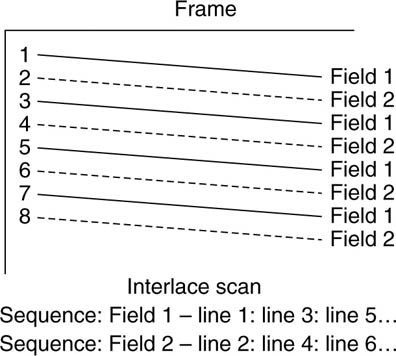

If a video frame is ‘progressively’ scanned, each line is scanned in sequence in the frame, and if this is done (as in PAL) 25 times per second, then a significant amount of bandwidth is required. If the frame is instead scanned in an interlaced structure, each frame is divided into a pair of TV fields, one carrying the odd-numbered lines and the other the even-numbered lines, sent alternately. This both saves bandwidth and gives the appearance that the picture is ‘refreshed’ 50 times per second (in PAL), which looks very smooth to the human eye, and the human brain quickly adapts. Thus, 25/30 frames per second are displayed as 50/60 fields per second, with half the number of lines in each field.
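The splitting of a frame into two fields can be pictured with a small Python sketch. It treats a frame simply as a list of lines numbered from 1 at the top, and the function names `split_into_fields` and `weave` are invented for this example. It also deals only in whole lines, so a 625-line frame splits into 313 and 312 lines, whereas a real system shares the odd line out as 312.5 lines per field.

```python
def split_into_fields(frame_lines):
    """Split a frame (a list of lines, top to bottom) into two interlaced fields.

    Lines are numbered from 1, so the first field holds lines 1, 3, 5, ...
    and the second holds lines 2, 4, 6, ...
    """
    odd_field = frame_lines[0::2]
    even_field = frame_lines[1::2]
    return odd_field, even_field


def weave(odd_field, even_field):
    """Interleave two fields from the same frame back into one full frame."""
    frame = [None] * (len(odd_field) + len(even_field))
    frame[0::2] = odd_field
    frame[1::2] = even_field
    return frame


frame = list(range(1, 626))          # a 625-line frame, lines numbered 1 to 625
odd, even = split_into_fields(frame)
print(odd[:3], even[:3])             # first few lines of each field
print(weave(odd, even) == frame)     # the two fields rebuild the whole frame
```

Sending the two lists one after the other, 25 times each second, gives 50 fields per second while still delivering only 25 complete frames.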

Brightness = luminance

Colour = chrominance

Colour difference = red minus luminance; blue minus luminance

Two fields = one frame

625 or 525 lines = one frame