While neural networks have been around for a number of years, they have grown in popularity due to improved algorithms and more powerful machines. Some companies are building hardware designed specifically to run neural network workloads (https://www.wired.com/2016/05/google-tpu-custom-chips/). The time has come to use this versatile technology to address data science problems.

In this chapter, we will explore the ideas and concepts behind neural networks and then demonstrate their use. Specifically, we will:

- Define and illustrate neural networks

- Describe how they are trained

- Examine various neural network architectures

- Discuss and demonstrate several different neural networks, including:

  - A simple Java example

  - A Multi Layer Perceptron (MLP) network

  - The k-Nearest Neighbor (k-NN) algorithm and others

The idea for an Artificial Neural Network (ANN), which we will call a neural network, originates from the neuron found in the brain. A neuron is a cell that has dendrites connecting it to input sources and other neurons. It receives stimuli from multiple sources through the dendrites. Depending on the sources and the weight allocated to each one, the neuron is activated and fires a signal down its axon to another neuron. A collection of neurons can be trained and will respond to a particular set of input signals.

An artificial neuron is a node that has one or more inputs and a single output. Each input has a weight associated with it. By weighting inputs, we can amplify or attenuate an input.

This is depicted in the following diagram, where the weighted inputs are summed and then sent to an Activation Function that determines the Output.
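The weighted-sum step described above can be sketched in a few lines of Java. This is a minimal illustration, not the chapter's own example: the class name `WeightedSumSketch`, the method `netInput`, and the sample values are all hypothetical.

```java
// Hypothetical helper for illustration only; the names are not from the chapter.
class WeightedSumSketch {

    // Multiplies each input by its weight and adds up the products.
    static double netInput(double[] inputs, double[] weights) {
        if (inputs.length != weights.length) {
            throw new IllegalArgumentException("inputs and weights must have the same length");
        }
        double sum = 0.0;
        for (int i = 0; i < inputs.length; i++) {
            sum += inputs[i] * weights[i];
        }
        return sum;
    }

    public static void main(String[] args) {
        double[] inputs = {1.0, 0.5, 2.0};
        // A weight above 1 amplifies its input; a weight below 1 attenuates it.
        double[] weights = {0.5, 2.0, 0.25};
        System.out.println("Net input: " + netInput(inputs, weights)); // prints "Net input: 2.0"
    }
}
```

The value returned by `netInput` is what would be handed to the activation function.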

The neuron, and ultimately a collection of neurons, operate in one of two modes:

- Training mode - The neuron is trained to fire when a certain set of inputs is received

- Testing mode - Input is provided to the neuron, which responds as trained to a known set of inputs

A dataset is frequently split into two parts. A larger part is used to train a model. The second part is used to test and verify the model.
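One way to perform such a split is to shuffle the data and cut it at a chosen fraction. The following is a rough sketch under assumptions of our own: the class `TrainTestSplitSketch`, the `split` method, the 80/20 ratio, and the fixed seed are illustrative choices, not something the chapter prescribes.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Random;

// Hypothetical helper for illustration only.
class TrainTestSplitSketch {

    // Returns two lists: index 0 holds the training set, index 1 holds the test set.
    static <T> List<List<T>> split(List<T> data, double trainFraction, long seed) {
        if (trainFraction <= 0.0 || trainFraction >= 1.0) {
            throw new IllegalArgumentException("trainFraction must be between 0 and 1");
        }
        // Copy before shuffling so the caller's list is left untouched.
        List<T> shuffled = new ArrayList<>(data);
        Collections.shuffle(shuffled, new Random(seed));

        int trainSize = (int) Math.round(shuffled.size() * trainFraction);
        List<List<T>> parts = new ArrayList<>();
        parts.add(new ArrayList<>(shuffled.subList(0, trainSize)));
        parts.add(new ArrayList<>(shuffled.subList(trainSize, shuffled.size())));
        return parts;
    }

    public static void main(String[] args) {
        List<Integer> samples = new ArrayList<>();
        for (int i = 0; i < 10; i++) {
            samples.add(i);
        }
        List<List<Integer>> parts = split(samples, 0.8, 42L);
        System.out.println("Training samples: " + parts.get(0).size());
        System.out.println("Test samples: " + parts.get(1).size());
    }
}
```

A fixed seed makes the split repeatable, which helps when comparing models trained on the same data.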

The output of a neuron is determined by the sum of the weighted inputs. Whether a neuron fires or not is determined by an activation function. There are several different types of activation functions, including:

- Step function - This threshold function is computed using the summation of the weighted inputs as follows:

Here, f(Net) designates the output of the function. It is 1 if the Net input is greater than the activation threshold. When this happens, the neuron fires. Otherwise, it returns 0 and the neuron doesn't fire. The value is calculated based on all of the dendrite inputs.

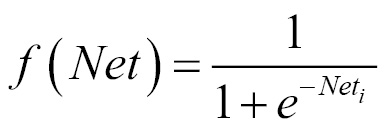

- Sigmoid - This is a nonlinear function that maps the Net input to a value between 0 and 1. It is computed as follows: $f(Net) = \frac{1}{1 + e^{-Net}}$

As the neuron is trained, the weight associated with each input can be adjusted.

In contrast to the step function, which switches abruptly between 0 and 1, the sigmoid function changes smoothly. This better matches some problem domains. We will find the sigmoid function used in multi-layer neural networks.
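The two activation functions can be sketched as follows. This is a minimal illustration: the class `ActivationSketch` and its method names are hypothetical, and the sigmoid shown is the standard logistic function, which we assume is the one intended here.

```java
// Hypothetical helper for illustration only.
class ActivationSketch {

    // Step function: returns 1 (the neuron fires) only when the net input exceeds the threshold.
    static int step(double net, double threshold) {
        return net > threshold ? 1 : 0;
    }

    // Logistic sigmoid: squashes any net input into the open interval (0, 1).
    static double sigmoid(double net) {
        return 1.0 / (1.0 + Math.exp(-net));
    }

    public static void main(String[] args) {
        double threshold = 0.5; // assumed activation threshold for the demo
        for (double net : new double[]{-2.0, 0.0, 0.4, 0.6, 2.0}) {
            System.out.println("net=" + net
                    + " step=" + step(net, threshold)
                    + " sigmoid=" + sigmoid(net));
        }
    }
}
```

Running the loop shows the difference in behavior: the step output jumps from 0 to 1 as the net input crosses the threshold, while the sigmoid output rises gradually.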

There are three basic training approaches:

- Supervised learning - With supervised learning the model is trained with data that matches input sets to output values

- Unsupervised learning - In unsupervised learning, the data does not contain results, but the model is expected to determine relationships on its own

- Reinforcement learning - Similar to supervised learning, but a reward is provided for good results

These approaches differ in the information available during training. Supervised learning data contains the correct output for each set of inputs. Reinforcement learning instead supplies a reward that indicates how good a result was. Unsupervised learning data does not contain correct results.

A neural network learns (at least with supervised learning) by feeding an input into the network and comparing the output, as produced by the activation function, to the expected outcome. If they match, the network is behaving correctly for that input. If they don't match, the network is modified by adjusting its weights.

When we modify the weights, we need to be careful not to change them too drastically. If the change is too large, the output may overshoot the desired value. If the change is too small, training the model will take too long. There are also times when we may not want to change some weights at all.
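To make the compare-and-adjust cycle concrete, here is a sketch that uses the classic perceptron learning rule on a single neuron with a step activation. The rule itself, the fixed threshold of 0.5, the learning rate of 0.1, and the logical AND training data are all assumptions chosen for illustration; the chapter does not commit to a particular update rule at this point.

```java
// Hypothetical single-neuron trainer for illustration only.
class PerceptronTrainingSketch {

    // Step activation applied to the weighted sum of the inputs.
    static int predict(double[] weights, double[] inputs, double threshold) {
        double net = 0.0;
        for (int i = 0; i < weights.length; i++) {
            net += weights[i] * inputs[i];
        }
        return net > threshold ? 1 : 0;
    }

    // Adjusts weights in place. Returns the epoch of the first error-free pass,
    // or -1 if maxEpochs passes were made without one.
    static int train(double[] weights, double[][] inputs, int[] targets,
                     double threshold, double learningRate, int maxEpochs) {
        for (int epoch = 1; epoch <= maxEpochs; epoch++) {
            int errors = 0;
            for (int s = 0; s < inputs.length; s++) {
                // error is 0 on a match, +1 or -1 on a mismatch
                int error = targets[s] - predict(weights, inputs[s], threshold);
                if (error != 0) {
                    errors++;
                    // The learning rate scales each change so weights move in small steps.
                    for (int i = 0; i < weights.length; i++) {
                        weights[i] += learningRate * error * inputs[s][i];
                    }
                }
            }
            if (errors == 0) {
                return epoch;
            }
        }
        return -1;
    }

    public static void main(String[] args) {
        double[][] inputs = {{0, 0}, {0, 1}, {1, 0}, {1, 1}};
        int[] targets = {0, 0, 0, 1}; // logical AND
        double[] weights = {0.0, 0.0};
        int epochs = train(weights, inputs, targets, 0.5, 0.1, 100);
        System.out.println("Epochs until error-free pass: " + epochs);
        for (double[] in : inputs) {
            System.out.println(in[0] + " AND " + in[1] + " -> " + predict(weights, in, 0.5));
        }
    }
}
```

The `learningRate` parameter is the knob discussed above: a larger value changes the weights more on each mismatch, while a smaller value needs more passes over the data.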

A bias unit is a neuron that has a constant output. Its output is always one, and it is sometimes referred to as a fake node. This neuron acts as an offset and is essential for most networks to function properly. You could compare the bias neuron to the y-intercept of a linear function in slope-intercept form. Just as adjusting the y-intercept value changes the location of the line, but not its shape or slope, the bias neuron can change the output values without adjusting the shape or function of the network. You can adjust the outputs to fit the particular needs of your problem.
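The offset effect can be seen in a small sketch. Here the bias unit is modeled as an extra input fixed at 1 with its own weight; the class `BiasSketch`, the method `output`, the choice of a sigmoid activation, and the sample values are assumptions made for illustration.

```java
// Hypothetical helper for illustration only.
class BiasSketch {

    // Neuron output with a bias unit: the bias always contributes 1 * biasWeight to the net input.
    static double output(double[] inputs, double[] weights, double biasWeight) {
        double net = 1.0 * biasWeight; // constant output of the bias unit times its weight
        for (int i = 0; i < inputs.length; i++) {
            net += inputs[i] * weights[i];
        }
        return 1.0 / (1.0 + Math.exp(-net)); // sigmoid activation
    }

    public static void main(String[] args) {
        double[] inputs = {1.0, 2.0};
        double[] weights = {0.5, -0.25}; // left unchanged across all three calls
        for (double biasWeight : new double[]{-2.0, 0.0, 2.0}) {
            System.out.println("biasWeight=" + biasWeight
                    + " output=" + output(inputs, weights, biasWeight));
        }
    }
}
```

The inputs and their weights stay the same in every call; only the bias weight changes, and the output shifts down or up accordingly.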

Neural networks are usually created using a series of layers of neurons. There is typically an Input Layer, one or more middle layers (Hidden Layers), and an Output Layer.

The following is the depiction of a feedforward network:

The number of nodes and layers will vary. A feedforward network moves the information forward, from the input layer toward the output layer. There are also feedback networks where information is passed backwards. Multiple hidden layers are often needed to handle the more complicated processing that many analyses require.
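A forward pass through such a network can be sketched by applying one layer after another. The example below is an illustration under several assumptions: the class `FeedforwardSketch` is hypothetical, a step activation is used, and the weights are hand-picked (not trained) so that a network with two inputs, one hidden layer of two neurons, and one output neuron computes XOR.

```java
// Hypothetical feedforward pass for illustration only; weights are hand-picked, not learned.
class FeedforwardSketch {

    // weights[layer][neuron][input]: hidden neuron 0 acts as OR, hidden neuron 1 acts as AND,
    // and the output neuron fires when OR is on but AND is off.
    static final double[][][] XOR_WEIGHTS = {
        {{1.0, 1.0}, {1.0, 1.0}},
        {{1.0, -1.0}}
    };

    // biases[layer][neuron]: the weight on each neuron's constant bias input.
    static final double[][] XOR_BIASES = {
        {-0.5, -1.5},
        {-0.5}
    };

    // Computes one layer's outputs from the previous layer's outputs.
    static double[] layer(double[] inputs, double[][] weights, double[] biases) {
        double[] outputs = new double[weights.length];
        for (int j = 0; j < weights.length; j++) {
            double net = biases[j];
            for (int i = 0; i < inputs.length; i++) {
                net += weights[j][i] * inputs[i];
            }
            outputs[j] = net > 0.0 ? 1.0 : 0.0; // step activation
        }
        return outputs;
    }

    // Passes the signal forward through every layer in order.
    static double[] forward(double[] inputs, double[][][] weights, double[][] biases) {
        double[] signal = inputs;
        for (int l = 0; l < weights.length; l++) {
            signal = layer(signal, weights[l], biases[l]);
        }
        return signal;
    }

    public static void main(String[] args) {
        double[][] samples = {{0, 0}, {0, 1}, {1, 0}, {1, 1}};
        for (double[] s : samples) {
            double out = forward(s, XOR_WEIGHTS, XOR_BIASES)[0];
            System.out.println(s[0] + " XOR " + s[1] + " -> " + out);
        }
    }
}
```

XOR is a useful test case because a single neuron with a step activation cannot compute it, while one hidden layer is enough.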

We will discuss several architectures and algorithms related to different types of neural networks throughout this chapter. Due to the complexity and length of explanation required, we will only provide in-depth analysis of a few key network types. Specifically, we will demonstrate a simple neural network, MLPs, and Self-Organizing Maps (SOMs).

We will, however, provide an overview of many different options. The type of neural network and algorithm implementation appropriate for any particular model will depend upon the problem being addressed.