MODULE 16

Host Hardening

In the previous module, we discussed some of the various threats that can affect hosts and networks. In this module, as well as the next one, we are going to look at how to protect your hosts. Keep in mind that hosts are pretty much anything that processes data or may be able to acquire an IP address on a network. So the definition of hosts includes not only computers, but also servers, laptops, tablets, and even smartphones. Hosts can also include items that you might not otherwise consider computers; some of these things are even classified in a category known as the Internet of Things. These could be devices that control temperature, humidity, or even electricity usage in the house, for example. Increasingly, more and more devices are connected to the Internet or other networks and are considered hosts. Most of the items we will discuss in this module apply to all of these types of hosts, to varying degrees. And all of these items are vulnerable to some type of threat and must be hardened to protect the host against various threats.

Hardening Hosts

When a host is hardened, it’s considered to be very locked down, to have minimum functionality, to be current with all the latest patches, to have user accounts and other configuration settings properly set, and so on. Hardening a host is really the best way to mitigate against the multitude of vulnerabilities that can affect each host, as well as protect against various threats that exist, including malware, unauthorized access, and so on. That’s what host hardening is about—mitigating threats. In the next few sections, we’ll discuss the different ways to harden a host. We’ll take a look at hardening the operating system itself, the applications that run on the host, and the steps we can take to protect host hardware. Although we also mention host-based firewalls and intrusion detection systems in this module, we’ll cover the networking portion of host hardening in the next module of the book.

Secure Configuration

When an operating system is first installed, it’s not secure at all. The default configuration leaves a wide variety of attack vectors open, since normally users want full functionality out of the box, rather than security. Unfortunately, most users intend to go back later and secure their computers, but this doesn’t always happen, so computers are left in their default configuration states. The following configuration items need to be examined and changed to lock down our systems a bit better than they are when they first come out of the box.

Disabling Unnecessary Services

All hosts run services that perform different functions. Some hosts, however, run services that they don’t actually need. In addition to wasting processing power and resources, running unnecessary services is also a security concern. Services can be vulnerable to attacks for a variety of reasons, including unchecked privilege use, access to sensitive resources, and so on. Adding unnecessary services to a host increases the vulnerabilities present on that host, widening the attack surface someone might use to launch an attack on the host. One of the basic host hardening principles in security is to disable any unnecessary services. For example, a public-facing web server shouldn’t be running any services that could be avenues of attack into the host, or worse, into the internal network; that rules out e-mail, Dynamic Host Configuration Protocol (DHCP), Domain Name System (DNS), and so on. Internal hosts have the same issues; workstations don’t need to be running File Transfer Protocol (FTP) servers or any other service that the user doesn’t explicitly need to perform his or her job functions.

You should take the time to inventory all of the different services that users, workstations, servers, and other devices need to perform their functions, and turn off any that aren’t required. When a device is first installed, it often runs many unnecessary services by default, and these frequently never get disabled. Administrators should take care to disable or uninstall those services, or at least configure them to run more securely. This involves restricting any unnecessary access to resources on the host or network, as well as following the principle of least privilege and configuring those services to run with limited privilege accounts.
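The inventory step described above can be sketched in a few lines of Python. This is a minimal illustration, not a real auditing tool: the role names, the service names, and the `REQUIRED_BY_ROLE` table are all hypothetical, and on a real host the list of running services would come from the operating system's own service manager.

```python
# Hypothetical inventory: which services each host role actually needs.
# Role and service names are illustrative assumptions only.
REQUIRED_BY_ROLE = {
    "web_server": {"httpd", "sshd"},
    "dns_server": {"named", "sshd"},
}


def unnecessary_services(role, running):
    """Return the running services that the given role does not require."""
    required = REQUIRED_BY_ROLE.get(role, set())
    return sorted(set(running) - required)


# A public-facing web server that is also running mail, DHCP, and DNS.
running_now = ["httpd", "sshd", "sendmail", "dhcpd", "named"]
print(unnecessary_services("web_server", running_now))
# ['dhcpd', 'named', 'sendmail']
```

Anything the function returns is a candidate for disabling, uninstalling, or at least reconfiguring to run with a limited privilege account.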

Protecting Management Interfaces and Applications

On any type of host, whether it is a workstation or server, the principles of least privilege also apply to management interfaces and applications. For example, most users should not be able to access certain portions of the computer management console, nor should they be able to access utilities to change security configurations on the system. Administrative access to these utilities may be permissible, but only to people whose job requires them to access these interfaces. Even if the user doesn’t have permission to perform some of the functions in these management interfaces, it’s not a good idea to allow the user to see or access them. It’s best to deny them the ability to be able to execute the utility or interface, through the local security policy on a workstation or group policy in an Active Directory environment.

The same applies to certain applications. Even applications that include user-level functionality should have their management interfaces or privileged functionality hidden from ordinary users and restricted through rights and privilege management. Not only does this reduce the temptation from users to attempt higher level access, but it also denies them the opportunity to see how administrative functions control the system and applications, which might give them insight into bypassing those functions. Restricted or constrained interfaces are generally good security practices for applications or utilities, where the different levels of user privileges can view and access only the certain functions they require for their jobs.

Password Protection

We discuss password protections throughout this book; in this module, the focus is on ensuring that the host is password-protected using standard security best practices, and that applications and utilities with their own authentication mechanisms are protected as well, where necessary and appropriate. For example, even if a user has a valid username and password to access a host, they shouldn’t necessarily be granted credentials to access every application or utility on that host. Password protecting data or applications where possible is not only good security practice, but it also follows the principle of least privilege.

As we mentioned, best practices should always be used in password-protection schemes. Although the use of passwords is one of the weakest forms of authentication available, it’s all too common, and as such should at least be managed in the most secure way possible. Best practices for password protection on a host include the use of long, complex passwords that aren’t dictionary words; password expiration; rules against password sharing; use of individual passwords versus group passwords; limits on reusing previous passwords (password history); and frequent password changes. As a security professional, you should also audit password strength by performing password cracking on certain systems at random intervals to ensure that your users are creating secure passwords. Operating systems can go far in enforcing a secure password policy, and most modern systems support secure password requirements. Figure 16-1 shows an example of a local computer password policy.

Figure 16-1 Local computer password policy
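To make the password best practices above concrete, here is a minimal Python sketch of a policy check. The minimum length, the "three of four character classes" rule, and the function name `policy_violations` are assumptions chosen for illustration; real values come from organizational policy, and a real system would compare password hashes rather than keep earlier passwords in plain text.

```python
import string

MIN_LENGTH = 12  # assumed value; the real minimum is set by policy


def policy_violations(password, previous_passwords=(), dictionary=frozenset()):
    """Return a list of policy problems; an empty list means the password passes."""
    problems = []
    if len(password) < MIN_LENGTH:
        problems.append("too short")
    # Count how many character classes appear in the password.
    classes = [
        any(c.islower() for c in password),
        any(c.isupper() for c in password),
        any(c.isdigit() for c in password),
        any(c in string.punctuation for c in password),
    ]
    if sum(classes) < 3:
        problems.append("not complex enough")
    if password.lower() in dictionary:
        problems.append("dictionary word")
    # Illustration only: real systems store and compare hashes, not plain text.
    if password in previous_passwords:
        problems.append("reused password")
    return problems


print(policy_violations("password", dictionary={"password"}))
# ['too short', 'not complex enough', 'dictionary word']
```

A check like this runs when a password is set; the periodic password-cracking audit mentioned above is a separate control that tests passwords already in use.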

Disabling Unnecessary Accounts

Out-of-the-box operating systems often include default accounts with passwords that are either blank or don’t meet the organization’s password complexity requirements. Some of these default accounts may not even be necessary for the normal day-to-day operation of the host. A good example of such an account is the Windows default guest account. This account may not be required in the environment the host resides in, so it should be disabled immediately after installing the operating system. In most modern versions of Windows, the guest account is usually already disabled; however, earlier versions of Windows were installed with the guest account enabled by default. Linux operating systems are also prone to this issue; many accounts come with a default Linux installation and should be examined to see if they are really needed, and then disabled or deleted if they aren’t necessary for the day-to-day operation of the host.
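As a rough illustration of the account review described above, the following Python sketch lists the accounts in passwd-format text (seven colon-separated fields per line) whose login shell is not one of the usual "no login" shells. The sample data and the `NOLOGIN_SHELLS` set are assumptions; a real review would also look at password status, group membership, and whether each account is actually needed.

```python
# Shells that conventionally prevent interactive login (assumed set).
NOLOGIN_SHELLS = {"/usr/sbin/nologin", "/sbin/nologin", "/bin/false"}


def interactive_accounts(passwd_text):
    """Return account names whose shell would permit an interactive login."""
    accounts = []
    for line in passwd_text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        fields = line.split(":")
        if len(fields) != 7:  # skip malformed entries
            continue
        name, shell = fields[0], fields[6]
        if shell not in NOLOGIN_SHELLS:
            accounts.append(name)
    return accounts


# Hypothetical sample in /etc/passwd format.
SAMPLE = """\
root:x:0:0:root:/root:/bin/bash
daemon:x:1:1:daemon:/usr/sbin:/usr/sbin/nologin
games:x:5:60:games:/usr/games:/bin/sh
alice:x:1000:1000:Alice:/home/alice:/bin/bash
"""
print(interactive_accounts(SAMPLE))
# ['root', 'games', 'alice']
```

In this sample, `games` is the kind of default account worth questioning: it can log in, but it is unlikely to be needed for day-to-day operation.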

Operating System Hardening

Most operating systems are not secure by default. Although this has improved over the years as newer versions of operating systems have been released, there are still issues with default configurations that should be addressed before the host is put into production. User accounts should be configured correctly, configurations should be set up securely, and the operating system should be patched with the latest security updates. All of this ensures that the host is hardened and ready to be plugged into the network. In the next several sections, we will discuss hardening the operating system in depth.

Operating System Security and Settings

Hardening an operating system means several things, but mostly it’s about configuring the operating system and setting security options appropriately. Although each operating system provides different methods and interfaces for configuring security options, we’re not going to focus on one specific operating system in this module. Instead, we’ll focus on several configuration settings that should be considered regardless of operating system.

Regardless of the operating system in use, you should focus on privilege levels and groups (administrative users and regular user accounts); access to storage media, shared files, and folders; and rights to change system settings. Additional settings can include encryption and authentication settings, account settings such as lockout and login configuration, and password settings that control strength and complexity, password usage, and expiration time. Other system settings that directly affect security should be considered as well, including user rights and the ability to affect certain aspects of the system itself, such as network communication. All of these configuration settings contribute to the overall hardening of the operating system.

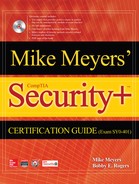

Figure 16-2 shows a sample of some of the different options that can be configured for security in Windows. Figure 16-3 shows other options that are configured in a Linux operating system.

Figure 16-2 Security options in Windows 8

Figure 16-3 Security options in Linux

Patch Management

It’s not unusual for issues to arise occasionally with operating systems, where bugs are discovered in the OS, or functionality needs to be fixed or improved—and, of course, newly discovered vulnerabilities have to be addressed. Operating system vendors release patches and security updates to address these issues. For the most part, modern operating systems are configured to seek out and download patches from their manufacturers automatically, although in some cases this has to be done manually. In a home environment, it’s probably okay for hosts to download and install patches automatically on a regular basis. In an enterprise environment, however, a more formalized patch management process should be in place that is closely related to configuration and change management.

In a patch management process, patches are reviewed for their applicability in the enterprise environment before they are installed. A new patch or update should be researched to discover what functionality it fixes or what security vulnerabilities it addresses. Administrators should then look at the hosts in their environment to see if the patch applies to any of them. If it is determined that the patch is needed, it should be installed on test systems first to see if it causes issues with security or functionality. If it does cause significant issues, the organization may have to determine whether it will accept the risk of breaking functionality or security by installing the new patch, or if it will accept the risk incurred by not installing the patch and correcting any problems or issues the patch is intended for. If there are no significant issues, then there should be a formal procedure or process for installing the patch on the systems within the environment. This may be done automatically through centralized patch management software, or in some cases, manual intervention may be required and the patch may have to be installed individually on each host in the enterprise.

In addition, it may be wise to run a vulnerability scanner on the host both before and after installing the patch to ensure that it addresses the problem as intended. Patches installed on production systems should be documented, so that if there is a problem that isn’t immediately noticed, the patch installation process can be checked to determine if the patch was a factor in the issue.
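The before-and-after scan comparison can be sketched as simple set arithmetic. This is a minimal example under stated assumptions: the finding identifiers are made up, and `compare_scans` is a hypothetical helper, not part of any scanner's API.

```python
def compare_scans(before, after, patch_targets):
    """Compare vulnerability findings from scans run before and after a patch.

    before / after: iterables of finding identifiers reported by each scan.
    patch_targets:  the findings the patch was supposed to fix.
    """
    before, after, patch_targets = set(before), set(after), set(patch_targets)
    return {
        "fixed": sorted(before - after),              # gone after patching
        "still_open": sorted(patch_targets & after),  # patch did not help
        "new": sorted(after - before),                # possibly introduced by the patch
    }


# Hypothetical finding identifiers.
report = compare_scans(
    before=["FINDING-001", "FINDING-002", "FINDING-003"],
    after=["FINDING-002", "FINDING-004"],
    patch_targets=["FINDING-001", "FINDING-002"],
)
print(report)
# {'fixed': ['FINDING-001', 'FINDING-003'], 'still_open': ['FINDING-002'], 'new': ['FINDING-004']}
```

Keeping a report like this with the patch documentation makes it easier to check later whether a patch was a factor in a problem.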

Trusted OS

A trusted OS is a specialized version of an operating system, created and configured for high-security environments. It may require very specific hardware to run on. Trusted operating systems are also evaluated by a strict set of criteria and are usually used in environments such as the US Department of Defense and other government agencies, where multi-level security requirements are in place. An example of the criteria that a trusted OS must meet is the Common Criteria, a set of standards that operating systems and other technologies are evaluated against for security. Trusted operating systems have very restrictive configuration settings and are hardened beyond the level needed for normal business operations in typical security environments. Examples of trusted operating systems include Trusted Solaris, SELinux, and AIX 5. Some operating systems, such as Windows 7 and Windows Server 2008 R2, have been certified as trusted operating systems, with certain configuration changes.

Anti-Malware

In Module 15, we discussed malware and some of the effects it can have on a host. So it makes sense that in any discussion on host security and hardening, we should give some attention to dealing with malware. Any discussion on defending your hosts from malware obviously includes anti-malware products. In earlier years, you sometimes needed to install separate products that focused specifically on antivirus, antispam, anti-spyware, pop-up blockers, and adware. These days, however, most available products tend to cover the entire gamut of “bad-ware”; they also serve as combination firewalls, intrusion detection systems, and overall all-in-one security applications.

While there are definitely advantages to this all-in-one approach, such as integrated threat prevention, central updates for all of your security products, and standardization, in some instances it may be better to have different products that fulfill these security roles on a host. This approach is called “defense diversity” (or diversity of defense) and is often applied to architectural decisions, such as the choice of network-based firewall appliances. The theory is that if you use several similar products from the same manufacturer, they are usually all vulnerable to the same new exploit at the same time, so an attacker could take advantage of this scenario and make it through multiple layers of defenses quickly and easily if those vulnerabilities are not mitigated. In a diversity of defense scenario, you might have two firewalls on your network perimeter, for example, that are from two different manufacturers. That way, a vulnerability found in one will not necessarily be exploitable on the other. Obviously, there are disadvantages to this practice as well, including lack of standardization as well as the logistics involved in maintaining configuration settings, updates, and patches on two separate platforms.

With anti-malware solutions, it’s still probably a good idea to maintain some separate and specialized applications for detecting and eradicating malware from a host, or at least use a combination of programs working together. One reason for this is that all-in-one anti-malware solutions tend not to catch everything that a host could get in terms of the various types of malware. Having a few different products installed, especially at different levels within the infrastructure (such as an antivirus solution on a host, as well as an antispam solution on an e-mail server), increases the likelihood that a piece of malware missed by one solution will be caught by another.

Anti-malware obviously does not do any good if it is not updated with the latest signatures. This is an absolute must, since new malware is released almost every day, and existing malware can change signatures to prevent detection. Anti-malware solutions should be configured to get updates quickly and regularly from the vendor’s site or from a designated repository within the organization if the enterprise centrally manages antivirus solutions. Figure 16-4 shows an example of a common anti-malware all-in-one solution.

Figure 16-4 A common anti-malware all-in-one solution for Windows

Other Host Hardening Measures

Beyond securely configuring the operating system, user accounts, applications, and other configuration settings with a host, you can use other measures to harden and protect systems. Some of these reside on the hosts, while others are network-based; all help protect and monitor hosts for security issues. Others, such as hardware security, involve the physical aspects of protecting the host and its associated hardware. We’ll discuss some of these measures in the upcoming sections.

Whitelisting vs. Blacklisting Applications

Often, security administrators identify software that is harmful to computers or the organization for various reasons. In some cases, software may contain malicious code, or there may just be some reasons why the organization does not want to allow the use of certain applications, such as chat programs, file sharing programs, and so on. You can restrict access to these applications in several different ways, one of which is blacklisting. Blacklisting involves an administrator adding an undesirable piece of software or an application to a list on a content filtering device, in Active Directory group policy, or on another type of mechanism, so that users are not allowed to download, install, or execute these particular applications. This keeps certain applications off the host. Applications may be blacklisted by default until an administrator has made a decision on whether or not to allow them to be used on the host or the network.

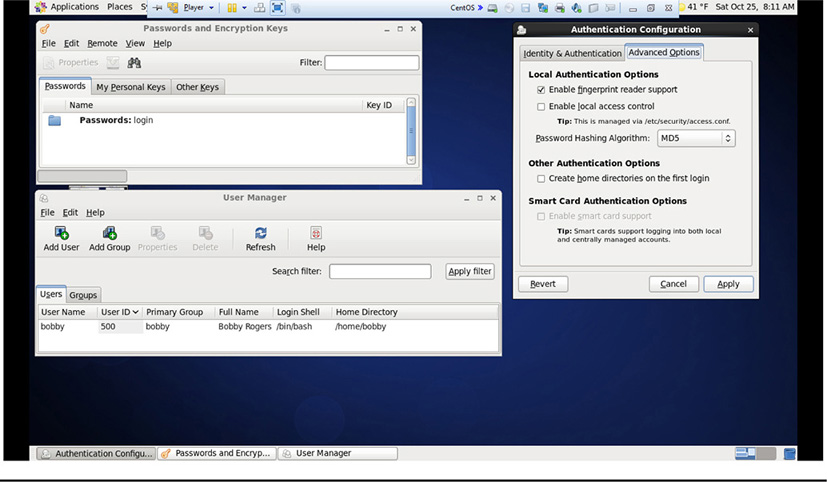

Whitelisting works similarly to blacklisting, except that a whitelist contains applications that are allowed on the network or that are okay to install and use on hosts. A whitelist would be useful in an environment where, by default, applications are not allowed unless approved by an administrator. This ensures that only certain applications can be installed and executed on the host. It also may help ensure that applications are denied by default until they are checked for malicious code or other undesirable characteristics before they are added to the whitelist. Applications and software can be restricted using several different criteria. You could restrict software based upon its publisher, whether or not you trust that publisher, or by file executable. You could also further restrict software by controlling whether certain file types can be executed or used on the host. All of these criteria could be used in a whitelist or blacklist. Figure 16-5 shows an example of how Windows can do very basic white- and blacklisting using different criteria in the security settings on the local machine. When using blacklisting in a larger infrastructure, using Active Directory for example, you have many more options to choose from.

Figure 16-5 Application control in Windows 8
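The difference between the two approaches comes down to the default decision for an application nobody has reviewed yet. The Python sketch below shows this using file hashes as the matching criterion; the function names and the byte strings standing in for executables are hypothetical, and real application control products can also match on publisher or file path.

```python
import hashlib


def sha256_of(data: bytes) -> str:
    """Identify an executable by the SHA-256 hash of its contents."""
    return hashlib.sha256(data).hexdigest()


def allowed_by_blacklist(file_hash, blacklist):
    """Default allow: only applications on the list are blocked."""
    return file_hash not in blacklist


def allowed_by_whitelist(file_hash, whitelist):
    """Default deny: only applications on the list may run."""
    return file_hash in whitelist


# Placeholder byte strings standing in for real executables.
chat_app = sha256_of(b"chat program binary")
office_app = sha256_of(b"office suite binary")
unknown_app = sha256_of(b"never reviewed binary")

blacklist = {chat_app}
whitelist = {office_app}

print(allowed_by_blacklist(unknown_app, blacklist))  # True
print(allowed_by_whitelist(unknown_app, whitelist))  # False
```

The unreviewed application runs under the blacklist but is blocked under the whitelist, which is why whitelisting suits environments where applications are denied until an administrator approves them.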

Host-based Firewalls and Intrusion Detection

In addition to setting up security configurations on a host, you need to protect the host from a variety of network-based threats. Obviously, network-based firewalls and intrusion detection systems work to protect the entire network from malicious traffic. Host-based versions of these devices are usually software-based, installed on the host as an application. Their purpose is to filter traffic coming into the host from the network, based upon rules that inspect the traffic and make decisions about whether to allow or deny it into the host in accordance with those rules. Intrusion detection systems on hosts serve to detect patterns of malicious traffic, such as those that may target certain protocols or services, that appear to cause excessive amounts of traffic, or other types of intrusion.

Host-based firewalls and intrusion detection systems may be included in the operating system, but they can also be independent applications installed on the host. Like their network counterparts, they should be carefully configured to allow only the traffic necessary into the host for it to perform its function. They should also be baselined to the normal types of network traffic that host may experience. Like their network counterparts, they should be updated with new attack signatures as needed and tuned occasionally as the network environment changes to ensure that they are fulfilling their role in protecting the host from malicious traffic, while permitting traffic the users need to do their jobs. Figure 16-6 shows a side-by-side example of both Windows and Linux firewalls.

Figure 16-6 Comparison of Windows and Linux firewalls
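Rule-based filtering on a host can be illustrated with a small Python model. This is a sketch of one common design (first matching rule wins, and anything unmatched is denied), not a description of how the Windows or Linux firewalls are implemented; the `Rule` class and `decide` function are hypothetical names.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Rule:
    action: str          # "allow" or "deny"
    protocol: str        # "tcp" or "udp"
    port: Optional[int]  # None matches any port


def decide(rules, protocol, port):
    """Return the action of the first matching rule; deny if nothing matches."""
    for rule in rules:
        if rule.protocol == protocol and (rule.port is None or rule.port == port):
            return rule.action
    return "deny"  # default deny: only explicitly permitted traffic gets in


# Inbound rules for a host that should only answer name resolution queries.
dns_host_rules = [
    Rule("allow", "udp", 53),
    Rule("allow", "tcp", 53),
]

print(decide(dns_host_rules, "udp", 53))  # allow
print(decide(dns_host_rules, "tcp", 25))  # deny
```

With a default-deny rule set like this, inbound traffic to an unneeded service such as SMTP on TCP port 25 is dropped even if that service is accidentally left running.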

Hardware Security

Never underestimate the value of physical security when securing computers and associated peripherals. While technical security measures, such as locking down operating system configuration, are effective in preventing unauthorized user access to the data on the system, they can’t really do much to help if an unauthorized person has physical access to the host and simply picks it up and walks away with it. They also can’t help if someone takes the computer apart and pulls the hard drive or tries to destroy the computer. That’s where physical security comes in, which is the subject of Module 32. For now, however, we’ll discuss a part of physical security that involves your computer hardware.

Securing hardware can help prevent physical types of attacks, such as theft and destruction of hardware. You can use different methods, and those you actually implement depend upon the physical environment in which your hosts reside. For example, if your hosts reside in a very secure facility, you may not be inclined to use cable locks to secure workstations and laptops to a piece of furniture. However, if your machines aren’t in a physically secure area, this might be a good idea to keep them from walking away with someone.

Another option, especially if you are using mobile devices such as laptops and tablets, is to store them in a safe or locking cabinet when no one is physically in the area to watch over them. Mobile device theft is a huge issue these days, and investing in a safe or secure cabinet is a smart thing to do when you compare the cost of mobile devices (and possibly the sensitive data they contain) to the cost of a good locking cabinet. The same applies to removable media: when not in use, it should be locked up securely.

Hardware security also can be improved by creating policies that apply to the equipment used in the organization. For example, you should have a policy that dictates that a physical inventory of all hardware must be performed periodically, and that hardware must be signed out by the individual to whom it is issued. This ensures accountability and gives people an extra incentive to take care of and protect the devices.

Maintaining a Host Security Posture

Although we discuss the more technical, and some of the physical, aspects of securing hosts in this module, all of these fall under the overall management of hosts and the network infrastructure in the organization. And, from a management perspective, formalized programs should be in place to keep hosts secure, through policies, procedures, and configuration control. Configuration control is used to establish and maintain a baseline operating system and standardized applications and hardware configuration for all of the hosts in the organization. Additionally, it ensures that deviations from this baseline are adjusted and handled as necessary. In the next three sections, we’ll discuss the different aspects of configuration control relating to baseline configurations.

Initial Baseline Configuration

One of the first steps in getting the hosts in your organization under configuration control is to establish a standardized baseline for them. Standardizing configuration settings throughout the infrastructure has several benefits, including more efficient maintenance, easier problem solving when host issues occur, and, of course, better security by conforming to uniform policy requirements for hosts.

Establishing this initial configuration baseline has its challenges. First, if all of the hosts in the organization are not already configured according to some standard procedures, getting this going will be difficult. Reining in “rogue” workstations with different software installed on them, different security settings, and, in some cases, even different operating systems is one of these challenges. Also, deciding exactly what the “standard” configuration should be is a challenge, since one standard likely won’t work for the entire organization. Different user groups will have different software and security requirements, so you may actually have several standard baselines, each of which would apply to a different group. The goal, of course, is to keep these baselines to as few as possible and document good reasons why a different standard baseline is needed for a particular group as well as any other approved deviations to the baseline.

Continuous Security Monitoring

It’s not enough simply to set a baseline, install hosts, and walk away, thinking it will never change. Security baselines require constant monitoring to ensure that the inevitable changes that will happen are discovered and addressed. Changes to the baseline occur for a number of reasons. Whenever new software is installed, changes to the configuration of a host often happen and are often necessary for the software to work properly. The same applies to patches and updates installed on hosts, which change operating system and application settings, as well as security settings for the host. Users are another reason that settings change. If given permission and opportunity to do so, users will often install applications and make changes to the system that cause it to deviate from its standard baseline.

Continuous security monitoring can address some of these issues (and more) by tracking the configuration of the system and alerting administrators to changes that occur, regardless of whether they are a result of innocuous software or patch installations, well-intended user changes, or even malicious changes that result from host attacks. Monitoring (discussed in more depth in Module 23) may use various techniques, including both manual and automated means. Manual means, when necessary, include sitting at the host reviewing log files, running local software to check for system integrity, dumping configuration files for review, and so on. Obviously, this isn’t practical for more than a couple of hosts. Automated means, which use host-based applications and agents that report back to centralized monitoring stations (think use of SNMP as one of many examples), centralized log collection and analysis systems, real-time configuration monitoring, and so on, are much better ways to monitor baseline configurations continuously, especially in a large enterprise environment. We’ll discuss some of these methods in depth later on in the book.
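One simple form of automated configuration monitoring is a file integrity check: record a hash of each monitored file when the baseline is set, then compare later snapshots against it. The Python sketch below shows the idea using only the standard library. The function names are hypothetical, and a production agent would also protect the stored baseline from tampering and report results to a central station.

```python
import hashlib
from pathlib import Path


def snapshot(paths):
    """Map each file path to the SHA-256 hash of its current contents."""
    return {str(p): hashlib.sha256(Path(p).read_bytes()).hexdigest() for p in paths}


def changed_files(baseline, current):
    """Return paths that were modified, added, or removed since the baseline."""
    all_paths = baseline.keys() | current.keys()
    return sorted(p for p in all_paths if baseline.get(p) != current.get(p))
```

In use, an administrator would call `snapshot` once on the list of monitored configuration files when the host is built, store the result, and then periodically call `snapshot` again and pass both results to `changed_files`. Any path it returns is a deviation that needs review, whether it came from a patch, a user, or an attacker.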

Remediation

The objective of continuous monitoring of host configuration baselines is to detect deviations from the baseline. Once any changes to the host configuration are detected, decisions will need to be made about whether they are acceptable changes or not. If they are acceptable, they should be added to the current baseline. If they are not acceptable, remediating them is the next step. Remediation means correcting these unwanted changes and returning the system to the standard configuration baseline. The decision about whether a change to the baseline is acceptable should follow a formal process of configuration or change management, as defined in organizational policy and carried out through a change management process. Obviously, though, until this decision is made on a permanent basis, changes should be reverted to the standard baseline to prevent any potential security or interoperability issues, reducing risk to the organization.
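The remediation decision described above can be sketched as a comparison of three things: the baseline, the host's current settings, and the changes that change management has approved. The setting names and the `plan_remediation` helper below are hypothetical and only illustrate the logic.

```python
def plan_remediation(baseline, current, approved_changes):
    """Sort detected deviations into baseline updates and reversions.

    baseline, current: dicts mapping a setting name to its value.
    approved_changes:  setting values accepted through change management.
    """
    plan = {"update_baseline": {}, "revert": {}}
    for key in sorted(baseline.keys() | current.keys()):
        want, have = baseline.get(key), current.get(key)
        if want == have:
            continue  # no deviation for this setting
        if key in approved_changes and approved_changes[key] == have:
            plan["update_baseline"][key] = have  # accepted: fold into baseline
        else:
            plan["revert"][key] = want  # not accepted: restore baseline value
    return plan


# Hypothetical settings for a developer workstation.
baseline = {"firewall": "on", "guest_account": "disabled", "debugger": "absent"}
current = {"firewall": "off", "guest_account": "disabled", "debugger": "installed"}
approved = {"debugger": "installed"}

print(plan_remediation(baseline, current, approved))
# {'update_baseline': {'debugger': 'installed'}, 'revert': {'firewall': 'on'}}
```

Here the approved debugger install is folded into the baseline, while the unapproved firewall change is scheduled to be reverted.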

Module 16 Questions and Answers

Questions

1. All of the following are characteristics of host hardening, except:

A. Minimum needed functionality

B. Maximum level of privileges for each user

C. Patched and updated

D. Removal of unnecessary applications

2. You have an external DNS server that has been the target of a lot of traffic directed at SMTP lately. The server is required to make and respond to name resolution queries only. You examine the configuration of the server and find that the sendmail utility is configured and running on the host, and the server is also listening for inbound connections on TCP port 25. Which of the following actions should you take?

A. Disable sendmail and any other program using TCP port 25.

B. Configure sendmail to send and receive e-mail from designated hosts only.

C. Disable the name resolution services on the host.

D. Configure DNS service to block inbound e-mail into the host.

3. Which of the following should always be considered when configuring a host?

A. Allowing access to configuration of management interfaces and configuration utilities

B. Maximum functionality for user accounts

C. Principle of least privilege

D. Shared passwords for administrative groups of users

4. Which of the following systems is normally required in high-security environments, and is used to address multilevel security requirements?

A. 256-bit encryption

B. Biometric authentication

D. Trusted operating system

5. On which of the following might an antispam solution be best installed in an enterprise infrastructure?

A. Workstation

B. E-mail server

C. Firewall

D. Proxy server

6. To prevent an application from being installed or executed on a host, __________ should be implemented.

A. blacklisting

B. greylisting

C. whitelisting

D. protected memory execution

7. Which of the following would be considered an effective measure in preventing a removable drive from being stolen when not in use?

A. Encrypting the drive

B. Requiring a strong password to unlock the drive

C. Securing the drive to the device using it with a cable lock

D. Locking the drive in a steel cabinet

8. Which of the following should you do before installing a patch on a production host in an enterprise environment?

A. Install host-based firewall and IDS applications.

B. Test the patch.

C. Determine the urgency of the patch and install it immediately if it is a critical security update.

D. Get approval from the end user.

9. Different groups in your organization require certain applications and security configuration settings that are unique to each group. You install a standard baseline image to the host computers for all the different groups. A developer group now complains that their applications do not work as they require, and that the security settings are too restrictive for them. They are able to document and show the necessity for less-restrictive security settings. Which of the following is your best course of action?

A. Make changes to each individual host within the developer group to accommodate their needs.

B. Withdraw the requirement for a standard baseline since it cannot be enforced without impacting productivity.

C. Require that the developer group change their procedures and applications to fit with the new standard baseline image.

D. Create a specialized baseline image that applies only to the developer group, and ensure that everyone else gets the standard baseline image.

10. All of the following are reasons to implement a continuous monitoring solution, except:

A. Ensure that any changes made are included in the updated standard security baseline.

B. Detect changes to the configuration baseline.

C. Detect security incidents.

D. Ensure that unauthorized changes are reversed.

Answers

1. B. Hosts should be configured with the minimum necessary level of privileges for each user, not the maximum.

2. A. Disabling sendmail and any other program using TCP port 25 is the best option, since the e-mail service is not needed on the host.

3. C. The principle of least privilege should always be considered when configuring a host, regardless of whether it is user accounts, management interfaces, or authentication methods.

4. D. A trusted OS is normally required in high-security environments and is used to address multilevel security requirements.

5. B. An antispam solution should be installed on an e-mail server.

6. A. To prevent an application from being installed or executed on a host, blacklisting should be implemented.

7. D. Locking the drive in a steel cabinet would prevent the drive from being stolen.

8. B. You should always test the patch before installing it on a production host in an enterprise environment.

9. D. Create a specialized baseline image that applies only to the developer group, and ensure that everyone else gets the standard baseline image.

10. A. Ensuring that any changes made are included in the updated standard security baseline is not the purpose of a continuous monitoring program. Decisions on whether these changes are acceptable or not, or if they should be included in the baseline, reside with the change management authority. Continuous monitoring is designed to detect all changes, acceptable or not.