Chapter 2

Competitive Research Techniques and Tools

In This Chapter

- Finding out how to equal your high-ranking competitors

- Calculating what your site needs to gain high ranking

- Running a Page Analyzer

- Using Excel to help analyze your competition

- Discovering other tools for analyzing your competitors

- Diving into SERP research

If you followed our suggestions in Chapter 1 of this minibook, you spent some time finding out who your real competitors are on the web, and you might have discovered that they are quite different from your real-world, brick-and-mortar competitors. You also found out that for each of your main keyword phrases, you probably have a different set of competitors. If you’re starting to feel overwhelmed and thinking that you’ll never be able to compete in such a busy, complicated marketplace, take heart! In this chapter, we show you how to get “under the hood” of your competitors' sites and find out why they rank so well.

Realizing That High Rankings Are Achievable

No matter what type of market your business competes in — whether broad-based or niche, large or small, national or local, corporate or home-based — you can achieve high rankings for your Internet pages by applying a little diligence and proper search engine optimization (SEO) techniques.

Your site may not be coming up at the top of search engine results for a specific keyword (yet), but someone else’s is. The websites that do rank well for your keywords are there for a reason: The search engines find them the most relevant. So in the online world, those pages are your competitors, and you need to find out what you must do to compete with them. What is the barrier to entry into their league? You need a model for what to change, and analyzing the pages that do rank well can start to fill in that model.

Getting All the Facts on Your Competitors

Identifying your competition on the web can be as easy as typing your main keywords into Google and seeing which pages rank above your own. (Note: If you know that your audience uses another search engine heavily, run your search there as well. But with a market share of more than 64 percent in the U.S. and even higher globally, we think Google offers the most efficient research tool.)

You want to know which web pages make it to the first search engine results page. After you weed out the Wikipedia articles and other non-competitive results, what are the top four or five web pages listed? Write down their web addresses (such as www.wiley.com) and keep them handy. Or, if you did more in-depth competition gathering, which we explain in Chapter 1 of this minibook, bring those results along. We’re going to take you on a research trip to find out what makes those sites rank so well for your keywords. What you want to learn about each page falls into three areas:

- On-page elements (such as content and Title tags and metadata)

- Links (incoming links to the page from other web pages, which are called backlinks, as well as outbound links to other pages)

- Site architecture

One basic strategy of SEO is this: Make yourself equal before you set yourself apart. You want to analyze the sites that rank well because they are the least imperfect. You can work to make your site equal to them in all the ranking factors you know about first. When your page can play on a level field with the least imperfect sites, you’ll see your own rankings moving up. After that, you can play with different factors and try to become better than your competition and outrank them. That’s when the fun of SEO really starts! But we’re getting ahead of ourselves.

Calculating the Requirements for Rankings

As you look at your keyword competitors, you need to figure out what it takes to play in their league. What is the bare minimum of effort required in order to rank in the top ten results for this keyword? In some cases, you might decide the effort required is not worth it. However, figuring out what kind of effort is required takes research. You can look at each of the ranking web pages and see them as a human does to get an overall impression. But search engines are your true audience (for SEO, anyway), and they are deaf, dumb, and blind. They can’t physically experience the images, videos, music, tricks, games, bells, and whistles that may be on a site. They can only read the site’s text, count everything that can be boiled down to numbers, and analyze the data. To understand what makes a site rank in a search engine, you need research tools that help you think like a search engine.

Table 2-1 outlines the different research tools and procedures we cover in this chapter for doing competitor research. Although SEO tools abound, you can generally categorize them into several basic types of information-gathering: on-page factors, web server factors, relevancy, and site architecture. For each category of information gathering, we’ve picked out one or two tools and procedures to show you.

Table 2-1 Information-Gathering Tools for Competitor Research

| Tool or Method | Type of Info the Tool Gathers |
| --- | --- |
| Page Analyzer | On-page SEO elements and content |
| Server Response Checker | Web server problems or health |
| Google [link:domain.com] query | Expert relevancy and popularity (how many links a site has) |
| Yahoo Site Explorer | Expert relevancy and popularity |
| View Page Source | Content, HTML (how clean the code is) |
| Google [site:domain.com] query | Site architecture (how many pages are indexed) |
| Microsoft Excel | Not an information-gathering tool, but a handy tool for tracking all the data for analysis and comparison |

Of the three types of information you want to know about your competitors’ web pages — their on-page elements, links, and architecture — a good place to start is the on-page elements. You want to find out what keywords your competitors use and how they’re using them, look at the websites’ content, and analyze their other on-page factors.

Behind every web page’s pretty face is a plain skeleton of black-and-white HTML called source code. You can see a web page’s source code easily by choosing Source or Page Source from your browser’s View menu. If you understand HTML, you can look under the hood of a competitor’s web page. However, you don’t have to understand HTML for this book, or even to do search engine optimization. We’re going to show you a tool that can read and digest a page’s source code for you and then spit out some statistics that you’ll find very useful.

We do recommend that you know at least some HTML or learn it in the future: Your search engine optimization campaign will be a great deal easier for you to manage if you can make the changes to your site on your own. You can check out the many free HTML tutorials online, such as the one by W3Schools (http://www.w3schools.com/html/), if you need a primer on HTML.

Grasping the tools for competitive research: The Single Page Analyzer

The Single Page Analyzer tool tells you what a web page’s keywords are (by identifying every word and phrase that’s used at least twice) and computes their density. Keyword density is a percentage indicating the number of times the keyword occurs compared to the total number of words in the page. We also cover the Single Page Analyzer in Book II, Chapter 5, as it applies to analyzing your own website. When you run a competitor’s page through the Single Page Analyzer, it lets you analyze the on-page factors that help the web page rank well in search engines. Subscribers to SEOToolSet Pro can simply run the Multi Page Analyzer, but for those just using the Single Page Analyzer included with a free subscription to SEOToolSet Lite, we’ve included a step-by-step process to build a comparison tool for yourself.
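To make the keyword density idea concrete, here is a minimal Python sketch of the calculation described above. It is not how the Single Page Analyzer itself works; the function name `keyword_density`, the word-splitting rule, and the sample sentence are our own assumptions, and the sketch counts single words only (not phrases).

```python
import re
from collections import Counter


def keyword_density(text, min_count=2):
    """Return {word: (count, density_percent)} for words used at least min_count times.

    Density is the word's count divided by the total number of words, as a percentage.
    """
    # Assumption: a "word" is any run of letters, digits, or apostrophes.
    words = re.findall(r"[a-z0-9']+", text.lower())
    total = len(words)
    counts = Counter(words)
    return {
        word: (count, round(100.0 * count / total, 2))
        for word, count in counts.items()
        if count >= min_count
    }


# Hypothetical page text, used only for illustration.
sample = "Classic cars for sale. We restore classic cars and custom cars."
density = keyword_density(sample)
for word, (count, percent) in sorted(density.items()):
    print(f"{word}: used {count} times, density {percent}%")
```

In this sample, only the words that appear at least twice are reported, which mirrors the "used at least twice" rule mentioned above.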

Because you’re going to run the Single Page Analyzer report for several of your competitors’ sites and work with some figures, it’s time to grab a pencil and paper. Better yet, open a spreadsheet program such as Microsoft Excel, which is a search engine optimizer’s best friend. Excel comes with most Microsoft Office packages, so if you have Word, chances are you already have Excel, too. Microsoft Excel allows you to arrange and compare data in rows and columns, similar to a paper ledger or accounts book. (We talk about Microsoft Excel, but you might have another spreadsheet program such as Google Sheets, and that's fine, too.)

Here’s how to set up your spreadsheet:

- In Excel, open a new spreadsheet and name it Competitors.

- Type a heading for column A that says URL or something that makes sense to you.

  In this first column, you’re going to list your competitors’ web pages, one per row.

- Under column A’s heading, type the URL (the web page address, such as www.bruceclay.com) for each competing web page (the pages that are ranking well in search results for your keyword phrase), one address per cell.

  You can just copy and paste the URLs individually from the search results page if that’s easier than typing them.

Now you’re ready to run the Single Page Analyzer report for each competitor. You can use the free version of this tool available through our website. Here’s how to run the Page Analyzer:

- Go to www.seotoolset.com/tools/free-tools/.

- In the Single Page Analyzer section, enter a competitor’s URL (such as www.competitor.com) in the Page URL text box.

- Click the Run Page Analyzer button and wait while the report is prepared.

While you run this report for one of your own competitors, we’re going to use a Single Page Analyzer report we ran on a competitor for our classic custom cars website. The whole report contains a lot of useful information (including ideas for keywords you might want to use on your own site), but what we’re trying to gather now are some basic counts of the competitor’s on-page content. So we want you to zero in on a row of data that begins with “Used Words,” about halfway down the report and shown in Figure 2-1, which shows a quick summary of some important page content counts.

Figure 2-1: The summary row of a competitor’s on-page elements from a Single Page Analyzer report.

Next, you’re going to record these summary counts in your spreadsheet. We suggest you create some more column headings in your spreadsheet, one for each of the following seven items (which we also explain here):

- Title: Shows the number of words in the page’s Title tag (which is part of the HTML code that gets read by the search engines).

- Meta Description: Shows the number of words in the description Meta tag (also part of the page’s HTML code).

- Meta Keywords: Shows the number of words in the keywords Meta tag.

- Headings: The number of headings in the text (using HTML heading tags).

- ALT Tags: The number of Alt attributes (descriptive text placed in the HTML for an image file) assigned to images on the page.

- Body Words: The number of words in the page text that’s readable by humans.

- All Words: The total number of words in the page content, including onscreen text plus HTML tags, navigation, and other elements.

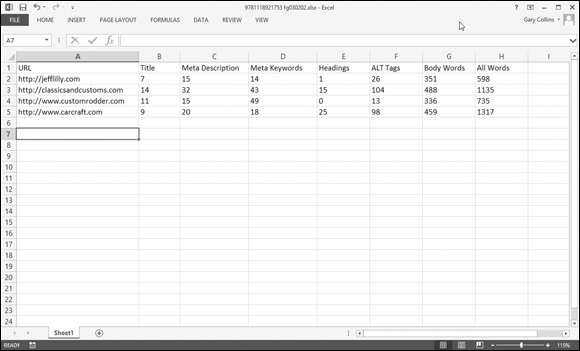

Now that you have the first several columns labeled, start typing in the counts from the report for this competitor. Run the Single Page Analyzer report for each of your other competitors’ URLs. You’re just gathering data at this point, so let yourself get into the rhythm of running the report, filling in the data, and then doing it all over again. After you’ve run the Single Page Analyzer for all your competitors, you should have a spreadsheet that looks something like Figure 2-2.

Figure 2-2: The spreadsheet showing data gathered by running the Single Page Analyzer.

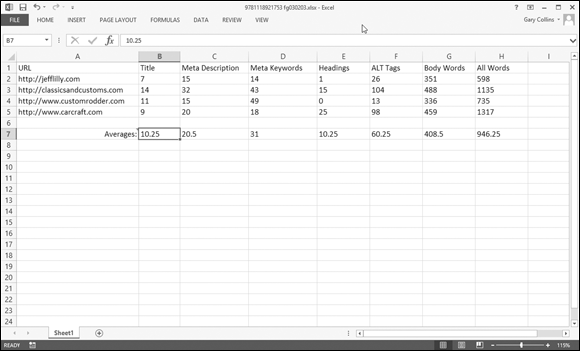

After you gather some raw numbers, what can you do with them? You’re trying to find out what’s “normal” for the sites that are ranking well for your keyword. So far you’ve gathered data on seven different factors that are part of the search engines’ ranking systems. Now it’s just simple math to calculate an average for each factor. You can do it the old-fashioned way, but Excel makes this super-easy if you use the AutoSum feature (found on the Formulas tab). Click to highlight the cell below the column you want to average, click Formulas, click the arrow next to AutoSum, and then choose Average. When you select Average, Excel automatically selects the column of numbers above the cell containing the average calculation, so press Enter to approve the selection. Your average appears in the highlighted cell, as shown in Figure 2-3.

Figure 2-3: Excel’s tools let you compute averages effortlessly.

You can create an average for each of the columns in literally one step. (You can see why we like Excel!) In Figure 2-3, if you look at the outlined cell to the right of the Average cell, notice the slightly enlarged black square in the lower-right corner. Click and drag that little square to the right, all the way across all the columns that have data, and then let go. Averages should now display for each column because you just copied the AutoSum Average function across all the columns where you have data.
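If you prefer a script to a spreadsheet, the same column averages can be sketched in a few lines of Python. Everything in this example is hypothetical: the competitor URLs, the counts, and the `column_averages` helper are made up for illustration, and only two of the seven columns are shown.

```python
def column_averages(rows, columns):
    """Average each named column across all competitor rows."""
    return {
        column: sum(row[column] for row in rows) / len(rows)
        for column in columns
    }


# Hypothetical counts copied from three Single Page Analyzer reports.
competitors = [
    {"url": "www.competitor-a.com", "title": 9, "body_words": 600},
    {"url": "www.competitor-b.com", "title": 11, "body_words": 700},
    {"url": "www.competitor-c.com", "title": 10, "body_words": 650},
]

averages = column_averages(competitors, ["title", "body_words"])
for column, value in averages.items():
    print(f"Average {column}: {value}")
```

The dictionary returned here plays the same role as the Average row at the bottom of the spreadsheet.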

You can next run a Single Page Analyzer on your own website and compare these competitor averages to your own figures to see how far off you are from your target. For now, just keep this spreadsheet handy and know that you’ve taken some good strides down the SEO path of information gathering. In Chapter 3 of this minibook, we go into depth, showing you how to use the data you gathered here, and begin to plan the changes to your website to raise your search engine rankings.

Discovering more tools for competitive research

Beyond the Single Page Analyzer, there are some other tricks that you can use to size up your competition. Some of this may seem a little technical, but we introduce each tool and trick as we come to it. We even explain what you need to look for. Don’t worry: We won’t turn you loose with a bunch of techie reports and expect you to figure out how to read them. In each case, there are specific items you need to look for (and you can pretty much ignore the rest).

Mining the source code

Have you ever looked at the underside of a car? Even if it’s a shiny new luxury model fresh off the dealer’s lot, the underbelly just isn’t very pretty. Yet the car’s real value is hidden there, in its inner workings. And to a trained mechanic’s eye, it can be downright beautiful.

You’re going to look at the underside of your competitors’ websites, their source code, and identify some important elements. Remember that we’re just gathering facts at this point. You want to get a feel for how this web page is put together and notice any oddities. You may find that the page seems to be breaking all the best-practice rules but somehow ranks well anyway — in a case like that, it’s obviously doing something else very right (such as having tons of high-quality backlinks pointing to the page). On the other hand, you might discover that this is a very SEO-savvy competitor that could be hard to beat.

To look at the source code of a web page, do the following:

- View a competitor’s web page (the particular page that ranks well in searches for your keyword, which may or may not be the site’s home page) in your browser.

- From the View menu, choose Source or Page Source (depending on the browser).

Clean source code generally follows a few practices, which you can look for on a competitor’s page:

- Use an external CSS (Cascading Style Sheet) file to control formatting of text and images. Using style sheets eliminates font tags that clutter up the text. Keeping the CSS in an external file gets rid of a whole block of HTML code that could otherwise clog the top section of the web page and slow everything down (search engines especially).

- Keep JavaScript code off the page in an external JS file (for the same clutter-busting reasons).

- Get to the meat in the first hundred lines. The actual text content (the part users read in the Body section) shouldn’t be too far down in the page code. We recommend limiting the amount of code that appears above the first line of user-viewable text.

You want to get a feel for how this web page is put together. Pay attention to issues such as:

- Doctype: Does it show a Doctype at the top? If so, does the Doctype validate with W3C (World Wide Web Consortium) standards? (Note: We explain this in Book IV, Chapter 4 in our recommendations for your own website.)

- Title, description, keywords: Look closely at the Head section (between the opening and closing Head tags). Does it contain the Title, Meta description, and Meta keywords tags? If you ran the Single Page Analyzer for this page, which we describe how to do in the section “Grasping the tools for competitive research: The Single Page Analyzer,” earlier in this chapter, you already know these answers, but now notice how the tags are arranged. The best practice for SEO puts them in this order: title, description, keywords. Does the competitor’s page do that?

- Other Meta tags: Also notice any additional Meta tags (“revisit after” is a popular and perfectly useless one) in the Head section. Webmasters can make up all sorts of creative Meta tags, sometimes with good reasons that may outweigh the cost of expanding the page code. However, if you see that a competitor’s page has a hundred different Meta tags, you can be pretty sure its webmaster doesn't know much about SEO.

- Heading tags: Search engines look for heading tags such as H1, H2, H3, and so forth to confirm what the page is about. It’s logical to assume that a site will make its most important concepts look like headings, so these heading tags help search engines determine the page’s keywords. See whether and how your competitor uses these tags. (We explain the best practices for heading tags in Book IV, Chapter 1, where we cover good SEO-friendly site design.)

- Font tags, JavaScript, CSS: As we mention in the previous set of bullets, if these things show up in the code, the page is weighted down and not very SEO-friendly. Outranking pages with a lot of formatting code might end up being easier than you thought.
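If you save a competitor's page source to a file, a short script can pull out several of the items in the checklist above. The following is a rough sketch using Python's standard `html.parser` module. The `SourceAudit` class and the sample HTML are our own illustration, not part of any SEOToolSet tool, and the sketch only records what it finds rather than judging whether the Doctype validates.

```python
from html.parser import HTMLParser


class SourceAudit(HTMLParser):
    """Collect a few source-code facts: Doctype, Head tag order, headings, clutter."""

    def __init__(self):
        super().__init__()
        self.has_doctype = False
        self.head_order = []     # order in which title / description / keywords appear
        self.headings = []       # heading tags (h1-h6) in document order
        self.font_tags = 0       # count of <font> tags
        self.inline_scripts = 0  # <script> blocks with no external src
        self.inline_styles = 0   # <style> blocks embedded in the page

    def handle_decl(self, decl):
        if decl.lower().startswith("doctype"):
            self.has_doctype = True

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "title":
            self.head_order.append("title")
        elif tag == "meta":
            name = (attributes.get("name") or "").lower()
            if name in ("description", "keywords"):
                self.head_order.append(name)
        elif tag in ("h1", "h2", "h3", "h4", "h5", "h6"):
            self.headings.append(tag)
        elif tag == "font":
            self.font_tags += 1
        elif tag == "script" and "src" not in attributes:
            self.inline_scripts += 1
        elif tag == "style":
            self.inline_styles += 1


# Hypothetical page source, used only for illustration.
sample = """<!DOCTYPE html>
<html><head>
<title>Classic Custom Cars</title>
<meta name="description" content="Restored classic cars.">
<meta name="keywords" content="classic cars, custom cars">
<script>var x = 1;</script>
</head><body>
<h1>Classic Cars</h1>
<font size="2">Welcome</font>
<h2>Custom Work</h2>
</body></html>"""

audit = SourceAudit()
audit.feed(sample)
print("Doctype present:", audit.has_doctype)
print("Head tag order:", audit.head_order)
print("Headings:", audit.headings)
print("Font tags:", audit.font_tags, "| inline scripts:", audit.inline_scripts,
      "| inline styles:", audit.inline_styles)
```

A head order of title, description, keywords matches the best practice described above, and nonzero font, inline script, or inline style counts point to the kind of formatting clutter the last bullet warns about.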

Seeing why server setup makes a difference

Even after you've checked out the source code for your competitor's pages (which we talk about in the preceding section), you're still in information-gathering mode, sizing up everything you can about your biggest competitors for your chosen keywords. The next step isn’t really an on-page element; it’s more the foundation of the site. We’re looking beyond the page now at the actual process that displays the page, which is on the server level. In this step, you find out how a competitor’s server looks to a search engine by running a server response checker utility.

Generally, an SEO-friendly site should be free of server problems such as improper redirects (a command that detours you from one page to another that the search engine either can't follow or is confused by) and other obstacles that can stop a search spider in its tracks. When you run the server response checker utility, it attempts to crawl the site the same way a search engine spider does and then spits out a report. In the case of our tool (available at no charge as part of the SEOToolSet at www.seotoolset.com), the report lists any indexing obstacles it encounters, such as improper redirects, robot disallows, cloaking, virtual IPs, block lists, and more. Even if a page’s content is perfect, a bad server can keep it from reaching its full potential in the search engine rankings.

You can use any server response checker tool you have access to, but we’re going to recommend ours because we know it works, it returns all the information we just mentioned, and it’s free. Here’s how you can run the free SEOToolSet Server Response Checker:

- Go to www.seotoolset.com/tools/free-tools/.

- Under the heading Check Server Page, enter the URL of the site you want to check in the Your URL text box, and then click the Check Response Headers button.

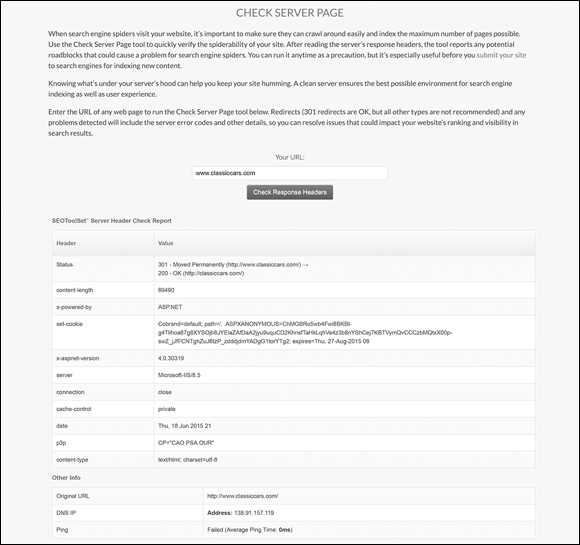

When we run the Check Server Page report for a classic cars competitor site, the report looks like Figure 2-4.

Figure 2-4: The Check Server Page report for a competitor’s web page.

In the report shown in Figure 2-4, you can see that it has a Sitemap.xml file, which serves to direct incoming bots. The more important item to notice, however, is the number 200 that displays in the Header Info section. This is the site’s server status code, and 200 means that its server is A-okay and is able to properly return the page requested.

Table 2-2 explains the most common server status codes. These status codes are standardized as part of the HTTP specification (RFC 2616, published by the Internet Engineering Task Force), so they mean the same thing to everyone. The World Wide Web Consortium (W3C) hosts a copy of the official definitions at http://www.w3.org/protocols/rfc2616/rfc2616-sec10.html if you want to research further. We go into server code standards in greater depth in Book IV. Here, we boil down the technical language into understandable English to show you what each server status code really means to you.

Table 2-2 Server Status Codes and What They Mean

| Code | Description | Definition | What It Means (If It’s on a Competitor’s Page) |
| --- | --- | --- | --- |
| 200 | Okay | The web page appears as expected. | The server and web page have the welcome mat out for the search engine spiders (and users too). This is not-so-good news for you, but it isn’t surprising either because this site ranks well. |
| 301 | Moved Permanently | The web page has been redirected permanently to another web page URL. | When a search engine spider sees this status code, it simply moves to the appropriate other page. |
| 302 | Found (Moved Temporarily) | The web page has been moved temporarily to a different URL. | This status should raise a red flag. Although there are supposedly legitimate uses for a 302 Redirect code, they can cause serious problems with search engines and could even indicate something malicious is going on. Spammers frequently use 302 Redirects. |
| 400 | Bad Request | The server could not understand the request because of bad syntax. | This could be caused by a typo in the URL. Whatever the cause, it means the search engine spider is blocked from reaching the content pages. |
| 401 | Unauthorized | The request requires user authentication. | The server requires a login in order to access the page requested. |
| 403 | Forbidden | The server understood the request, but refuses to fulfill it. | Indicates a technical problem that would cause a roadblock for a search engine spider. (This is all the better for you, although it may be only temporary.) |
| 404 | Not Found | The web page is not available. | You’ve seen this error code; it’s the Page Can Not Be Displayed page that displays when a website is down or nonexistent. Chances are that the web page is down for maintenance or having some sort of problem. |
| 500 and higher | Miscellaneous Server Errors | Individual errors are defined in the report. | The 500–505 status codes indicate that something’s wrong with the server. |
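As a quick reference, the groupings in Table 2-2 can be written as a small lookup function. This is only a sketch: the `spider_view` function and its short labels are our own shorthand for the table, not output from the Check Server Page tool. The official reason phrases come from Python's standard `http.HTTPStatus` enumeration.

```python
from http import HTTPStatus


def spider_view(code):
    """Summarize, following Table 2-2, how a status code looks to a search spider."""
    if code == 200:
        return "ok"
    if code == 301:
        return "permanent redirect"
    if code == 302:
        return "temporary redirect (red flag)"
    if 400 <= code < 500:
        return "blocked"
    if code >= 500:
        return "server error"
    return "other"


for code in (200, 301, 302, 404, 500):
    # HTTPStatus(code).phrase is the standard reason phrase, such as "Not Found".
    print(code, HTTPStatus(code).phrase, "->", spider_view(code))
```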

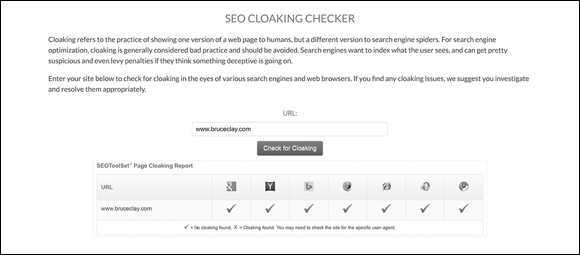

The other thing you want to glean is whether the page is cloaked (the page shows one version of a page’s content to users but a different version to the spiders). Enter the site URL you want to check into the SEO Cloaking Checker tool (also located at www.seotoolset.com/tools/free-tools/). This software tool runs through the site, identifying itself as different services, including Mozilla Firefox, Googlebot, and Bingbot, to ensure that they all match (see Figure 2-5).

Figure 2-5: Cloaking info from the SEO Cloaking Checker report.

To manually detect whether a competitor’s site uses cloaking, you need to compare the spiderable version to the version that you are viewing as a user. So do a search that you know includes that web page in the results set, and click the cached link under that URL when it appears. This shows you the web page as it looked to the search engine the last time it was spidered. Keeping in mind that the current page may have been changed a little in the meantime, compare the two versions. If you see entirely different content, you’re probably looking at cloaking.
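The idea behind a cloaking check can be sketched in Python: request the same page under different browser and spider identities, and then measure how alike the responses are. This is a rough illustration under our own assumptions, not the SEO Cloaking Checker's actual method. The function names are hypothetical, and a site that cloaks by IP address rather than by User-Agent header would not be caught this way.

```python
import difflib
import urllib.request


def fetch_as(url, user_agent):
    """Download a page while sending the given User-Agent header."""
    request = urllib.request.Request(url, headers={"User-Agent": user_agent})
    with urllib.request.urlopen(request, timeout=10) as response:
        return response.read().decode("utf-8", errors="replace")


def similarity(html_a, html_b):
    """Return a ratio from 0.0 (nothing in common) to 1.0 (identical)."""
    return difflib.SequenceMatcher(None, html_a, html_b).ratio()


# Offline illustration with two hypothetical versions of a page.
user_version = "<h1>Classic Cars</h1><p>Browse our restored classics.</p>"
spider_version = "<h1>Classic Cars</h1><p>Browse our restored classics.</p>"
print("Similarity:", similarity(user_version, spider_version))
```

To try it on a live page, you would call `fetch_as` twice with two different User-Agent strings of your choosing and pass both results to `similarity`. A score well below 1.0 is a reason to take a closer look, although small differences (such as ads or timestamps) are normal.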

Tracking down competitor links

So far, we’ve been showing you how to examine your competitors’ on-page elements and their server issues. It’s time to look at another major category that determines search engine relevance: backlinks.

Why do search engines care so much about backlinks? Well, it boils down to the search engines’ eternal quest to find the most relevant sites for their users. They reason that if another web page thinks your web page is worthy of a link, your page must have value. Every backlink to a web page acts as a vote of confidence in that page.

The search engines literally count these “votes.” It’s similar in some ways to an election, but with one major exception: Not every backlink has an equal vote. Backlinks that come from authorities in your subject area will have greater weight than those that do not. For example, if you have a site about search engine optimization, a backlink from Search Engine Land (a popular digital marketing news website) will carry more weight than a backlink from your neighbor's blog about pet care. However, that backlink from the pet care blog would be valuable to a pet store's website. The value of a backlink depends on where it's pointing. You can read more about how linking works in Book VI.

In the search engines’ eyes, the number of mentions and backlinks to a web page increases its expertness factor (and yes, that is a word, because we say so). Lots of backlinks indicate the page’s popularity and make it appear more trustworthy as a relevant source of information on a subject. This alone can cause a page to rank much higher in search engine results when the links come from related sites.

You can find out how many backlinks and mentions your competitors have:

- Using tools: There are a number of paid tools, such as Link Detox (www.linkdetox.com) and Majestic (https://majestic.com), that let you analyze your competitors' links. Majestic also provides a free plug-in (https://majestic.com/majestic-widgets/plugins) that provides backlink data for any page you visit, including the number of backlinks and the quality of those backlinks.

- Using Google: In the regular search box on www.google.com, type the query [“domain.com” -site:domain.com], substituting the competing page’s URL for domain.com, and click the Google Search button. This returns all pages that mention your site, usually as a link (and if it isn't, you can ask the site to make it a link!). You can also use [link:domain.com] but the numbers are less accurate.
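If you have a long list of competitors, typing the mentions query by hand gets tedious. Here is a small Python sketch that builds the query text and a matching search URL for each domain. The helper names are our own, the domains are placeholders, and the sketch only builds strings; it does not run the search or read the result count.

```python
from urllib.parse import quote_plus


def mentions_query(domain):
    """Build the query that finds pages mentioning a domain, excluding the domain itself."""
    return f'"{domain}" -site:{domain}'


def google_search_url(query):
    """Turn a query into a Google search URL you can open in a browser."""
    return "https://www.google.com/search?q=" + quote_plus(query)


# Placeholder domains, used only for illustration.
for domain in ("domain.com", "competitor.com"):
    query = mentions_query(domain)
    print(query, "->", google_search_url(query))
```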

In any event, you want to track your competitors’ backlink counts; this is very useful raw data. We suggest adding more columns to your competitor-data spreadsheet and recording the numbers given by Google and/or tools in columns so that you can compare your competitors’ numbers to your own.

Sizing up your opponent

If you walk onto a battlefield, you want to know how big your opponent is. Are you facing a small band of soldiers or an entire army with battalions of troops and air support? This brings us to the discussion of the website as a whole, and what you can learn about it.

So far we’ve focused a lot on the individual web pages that rank well against yours. But each individual page is also part of a website containing many pages of potentially highly relevant supporting content. If your competition has an army, you need to know.

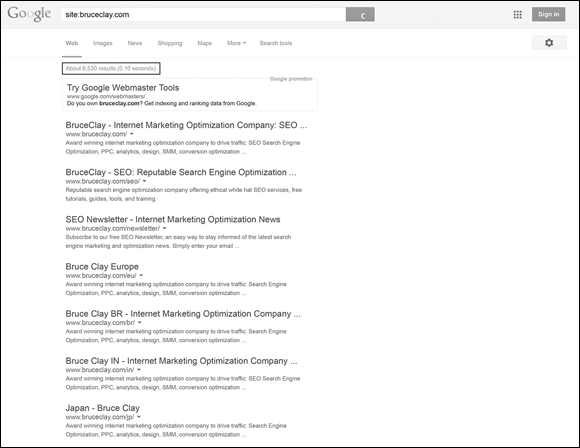

To find out how big a website is, you can use a simple Google search with the site: operator in front of the domain, as shown in Figure 2-6. At Google.com, enter [site:domain.com] in the search box (leaving out the square brackets, and using the competitor’s domain) and then click Google Search. The number of results tells you how many pages on the site have been indexed by Google — and what you're up against. You can also use this exact same process on Bing.

Figure 2-6: By searching for [site:bruceclay.com], you can identify how many pages Google has indexed for a site. In the case of www.bruceclay.com, we see Google has indexed 6,530 pages.

Comparing your content

You’ve been pulling in lots of data, but data does not equal analysis. Now it’s time to run research tools on your own web page and find out how you compare to your competition.

Run a Single Page Analyzer report for your web page, and compare your on-page elements to the figures you collected in your competitor-data spreadsheet (as we describe in the earlier section, “Grasping the tools for competitive research: The Single Page Analyzer”). Next, check your own backlink counts using Google and/or a link analysis tool. (See the earlier section, “Tracking down competitor links,” for details on how to do this.) Record all the numbers with today’s date so that you have a benchmark measurement of the “before” picture before you start doing your SEO.

After you have metrics for the well-ranked pages and your own page, you can tell at a glance how far off your page is from its competitors. The factors in your spreadsheet are all known to be important to search engine ranking, but they aren’t the only factors, not by a long shot. Google has more than 200 factors in its algorithm, and they can change constantly. However, having a few that you can measure and act on gives you a starting place for your search engine optimization project.
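The at-a-glance comparison can also be sketched as a simple subtraction of the competitor averages from your own counts, stored with today's date as the benchmark. All numbers and names in this Python example are hypothetical.

```python
from datetime import date


def gaps_from_average(own_counts, competitor_averages):
    """Return your count minus the competitor average for each factor.

    A negative number means your page is below the norm for that factor.
    """
    return {
        factor: own_counts[factor] - average
        for factor, average in competitor_averages.items()
    }


# Hypothetical figures from the competitor spreadsheet and your own reports.
competitor_averages = {"title": 10.0, "body_words": 650.0, "backlinks": 120.0}
own_counts = {"title": 4, "body_words": 300, "backlinks": 15}

benchmark = {
    "date": date.today().isoformat(),  # the "before" picture
    "gaps": gaps_from_average(own_counts, competitor_averages),
}
for factor, gap in benchmark["gaps"].items():
    print(f"{factor}: {gap:+.1f} compared to the competitor average")
```

Keeping the dated record lets you rerun the same comparison later and see whether the gaps are closing.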

Penetrating the Veil of Search Engine Secrecy

The search engines tell you a lot, but not the whole story. Search engines claim that the secrecy surrounding their algorithms is necessary because of malicious spammers, who would alter their sites deceptively for the sole purpose of higher rankings. It’s in the search engines’ best interests to keep their methods a secret; after all, if they published a list of do’s and don’ts, and just what their limits and boundaries are, then the spammers would know the limits of the search engines' spam catching techniques. Also, secrecy leaves the search engines free to modify things any time they need to. Google changes its algorithm frequently. For instance, Matt Cutts of Google said the search engine makes more than 500 changes to the algorithm per year. No one knows what changed, how big the changes were, or when exactly they occurred. Instead of giving out the algorithm, search engines merely provide guidelines as to their preferences. This is why we say that SEO is an art, not just a science: Too many unknown factors are out of your control, so a lot of finesse and intuition are involved.

Other factors can complicate rankings as well. Here’s a brief list of factors, which have nothing to do with changes on the websites themselves, that can cause search engine rankings to fluctuate:

- The search engine changed its algorithm and now weighs factors differently.

- The search engine may be testing something new (a temporary change).

- The index being queried is coming from a different data center. (Google, for instance, has many data centers in different locations, which may have different versions of the index.)

- The search engine had a technical problem and restored data temporarily from cache or a backup version.

- Data may not be up-to-date (depending on when the search engine last crawled the websites).

Diving into SERP Research

You can use the search engines to help you analyze your competitors in many ways. You’re going to switch roles now and pretend for a moment that the high-ranking site is yours. This helps you better understand the site that is a model for what yours can become.

Start with a competitor’s site that’s ranking high for your keyword in the search engine results pages (SERPs). You want to find out why this web page ranks so well. It may be due to one of the following:

- Backlinks: Find out how many backlinks the web page has. Run a search at Google for ["www.domain.com/page.htm" -site:domain.com], substituting the competitor’s web page URL for www.domain.com/page.htm and its domain for domain.com. The number of results is an indicator of the page’s popularity with other web pages. If it’s high, and especially if the links come from related industry sites with good PageRank themselves, backlinks alone could be why the page tops the list.

- Different URL: Run a search for your keyword on Google to see the results page. Notice the URL that displays for the competitor’s listing. Keeping that URL in mind, click the link to go to the active page. In the address bar, compare the URL showing to the one you remembered. Are they the same? Are they different? If they’re different, how different? Although an automatic redirect from http://domain.com to http://www.domain.com (or vice versa) is normal, other types of swaps may indicate that something fishy is going on. Do the cache check in the next bullet to find out whether the page the search engine sees is entirely different than the one live visitors are shown.

- Cached version: If you’ve looked at the web page and can’t figure out why it would rank well, the search engine may have a different version of the page in its cache (its saved archive version of the page). Whenever the search engine indexes a website, it stores the data in its cache. Note that some pages are not cached: for example, when a site has only just been crawled for the first time, when the spider is told not to cache the page (using the Meta robots noarchive instruction), or when there is an error in the search engine's database.

To see the cached version of a page, follow these steps:

- Run a search on Google for your keyword.

- Click the drop-down icon next to the URL and click Cached.

- View the cached version of the web page.

  At the top of the page, you can read the date and time it was last spidered. You can also easily view how your keywords distribute throughout the page in highlighted colors.

You can also view a cached version of a page on Bing following the same steps.

The top-ranking web pages are not doing things perfectly. That would require that they know and understand every single one of Google's more than 200 ranking signals and are targeting them perfectly, which is highly improbable. However, the websites that rank highly for your keyword are working successfully with the search engines for the keyword you want. The web pages that appear in the search results may not be perfect, but if they rank at the top, they are the least imperfect of all the possible sites indexed for that keyword. They represent a model that you can emulate so that you can join their ranks. To emulate them, you need to examine them closely.

You need to know as much as you can about the web pages that rank well for your keywords. The types of things you need to know about your competitors’ websites can be divided into three categories:

The SEOToolSet Check Server Page tool reads the robots text (