AR applications need trusted markers to show their tricks, like a magician saying, "See that top hat? There will be a rabbit soon!", where the top hat is the reference point. There are several types of reference points, and each has its own advantages and areas of application.

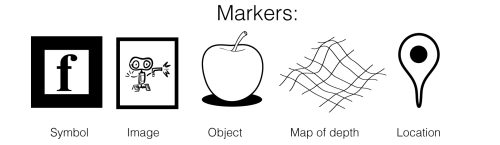

The following figure shows most common forms of markers:

The following are short descriptions of these markers:

- Symbol-based: This is the basic method: the recognition and tracking of special graphic markers, officially known as fiducial markers or fiducials (from the Latin fiducia, meaning trust), but sometimes referred to as AR tags, tracking marks, artificial landmarks, and so on. In some cases, slightly modified QR codes can be used as markers as well.

- Image-based: Graphic images such as photos or illustrations are broken down into reference points (the simplest approach is to find a high-contrast frame around a picture). For instance, some applications use the one-dollar bill as the real-world basis for AR content, arguing that banknotes are the most common printed graphics an ordinary person can get their hands on. This approach is used by programs such as Shimmer Augmented Reality Viewer for iOS (http://ionreality.com/shimmer/), which lets you view 3D models in AR space. When very accurate recognition is the goal, the image-based method may demand a great deal of CPU power, because graphic images are split into thousands of reference points that are tracked in real time. For this reason, a service called Xloudia (http://www.lm3labs.com/xloudia/) performs these calculations in the cloud, recognizing complicated images on the server side.

- Object-based: Special real objects are used as markers. They can be passive (only their geometry and contrasting color are used) or active (they emit signals, such as light). Usually, they come in the shape of small cubes or spheres. There are also applications that track a user's face and use its movements as input data.

- Map-based: A 3D map of the surroundings is created, and elements of the landscape are used as references. For instance, AR applications can use simultaneous localization and mapping (SLAM), a technology also used by autonomous mobile robots. This is one of the most advanced methods of orientation and navigation in space. It lets the application use the real surroundings themselves, so virtual elements can interact with real-world objects in a more natural way.

- Location-based: This approach does not require any graphic markers. It relies on GPS (or a competing satellite navigation system), the compass, and the accelerometer to determine the position and tilt of a device. Usually, such technology is used to attach virtual content to geo-information about real physical objects, such as city landmarks, giving them textual and graphic descriptions, interactive functionality, and so on. There are also some interesting massively multiplayer online game projects based on accurate location detection.
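To make the location-based idea more concrete, here is a minimal sketch of how an app might decide where to draw a landmark's label. It computes the great-circle distance and initial bearing from the device to the landmark (haversine formulas), compares that bearing with the compass heading, and maps the difference onto the screen. The function names, the 60° horizontal field of view, and the 320-pixel screen width are hypothetical choices for illustration, not the API of any SDK listed in this chapter; vertical placement from the accelerometer tilt is omitted, and the angle-to-pixel mapping is a linear approximation.

```python
import math
from typing import Optional, Tuple

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in metres


def distance_and_bearing(lat1: float, lon1: float,
                         lat2: float, lon2: float) -> Tuple[float, float]:
    """Haversine distance (m) and initial bearing (degrees from true north)."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = phi2 - phi1
    d_lambda = math.radians(lon2 - lon1)
    a = (math.sin(d_phi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2)
    distance = 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))
    y = math.sin(d_lambda) * math.cos(phi2)
    x = (math.cos(phi1) * math.sin(phi2)
         - math.sin(phi1) * math.cos(phi2) * math.cos(d_lambda))
    bearing = (math.degrees(math.atan2(y, x)) + 360.0) % 360.0
    return distance, bearing


def label_screen_x(device_lat: float, device_lon: float, heading_deg: float,
                   landmark_lat: float, landmark_lon: float,
                   fov_deg: float = 60.0,        # hypothetical camera FOV
                   screen_width: float = 320.0   # hypothetical screen width
                   ) -> Optional[float]:
    """Horizontal pixel position for a landmark label, or None if off-screen.

    heading_deg is the compass heading the camera is pointing at.
    """
    _, bearing = distance_and_bearing(device_lat, device_lon,
                                      landmark_lat, landmark_lon)
    # Signed angle from the camera axis to the landmark, in [-180, 180).
    offset = (bearing - heading_deg + 180.0) % 360.0 - 180.0
    if abs(offset) > fov_deg / 2:
        return None  # landmark is outside the camera's field of view
    # Linear approximation: map [-fov/2, +fov/2] onto [0, screen_width].
    # A true pinhole projection would use tan(offset) instead.
    return (offset / fov_deg + 0.5) * screen_width


if __name__ == "__main__":
    # Landmark one degree of latitude due north of a device facing north.
    print(label_screen_x(0.0, 0.0, 0.0, 1.0, 0.0))  # 160.0 (screen centre)
```

A landmark that drifts left or right of the camera axis as the user turns simply slides across the screen, and disappears once it leaves the field of view; a real app would also filter the noisy GPS and compass readings.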

AR is based on three fundamental actions: recognition, tracking, and adaptation. The system analyzes an image it gets from the camera and tries to recognize some important reference points (usually up to 10 are used, but advanced AR systems can work with thousands and even tens of thousands of markers). It then calculates how the reference points' coordinates change over a specific period of time; these parameters are tracked in real time. Finally, the virtual elements of the scene must be adapted to those coordinate changes: the general spatial parameters of the virtual objects, such as position, rotation angles, and scale, must be updated to match the new conditions. Since any shift of a reference point is always compensated by a virtual "anti-shift", the subtraction of vectors creates the illusion that the virtual object is physically attached to a point in the real world.
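Real trackers usually estimate a full 3D camera pose from many points. As a deliberately simplified 2D sketch, assuming only two tracked reference points and motion within the image plane, the adaptation step can be written as a similarity transform: whatever translation, rotation, and scale moved the reference points is applied to the virtual object as well, so it stays "attached". Points are written as complex numbers `x + y*1j`, which makes the transform a single multiply-and-add. The function names are hypothetical and do not come from any AR SDK.

```python
import cmath
import math
from typing import List, Tuple


def estimate_similarity(p1: complex, p2: complex,
                        q1: complex, q2: complex) -> Tuple[complex, complex]:
    """Find a, b so that z -> a*z + b maps p1 -> q1 and p2 -> q2.

    p1, p2 are the reference points in the previous frame, q1, q2 in the
    current one. |a| is the scale change, arg(a) the rotation, b the shift.
    """
    if p1 == p2:
        raise ValueError("the two reference points must be distinct")
    a = (q2 - q1) / (p2 - p1)
    b = q1 - a * p1
    return a, b


def adapt(points: List[complex], a: complex, b: complex) -> List[complex]:
    """Apply the reference points' motion to the virtual object's points."""
    return [a * z + b for z in points]


def decompose(a: complex) -> Tuple[float, float]:
    """Return (scale, rotation in degrees) encoded in a."""
    return abs(a), math.degrees(cmath.phase(a))


if __name__ == "__main__":
    # Reference points moved from (0,0),(10,0) to (5,0),(5,20):
    # the marker was scaled by 2, rotated by 90 degrees, and shifted by 5.
    a, b = estimate_similarity(0j, 10 + 0j, 5 + 0j, 5 + 20j)
    print(decompose(a))           # (2.0, 90.0)
    print(adapt([5 + 5j], a, b))  # [(-5+10j)]
```

Because the virtual object's points go through exactly the same transform as the reference points, any shift of the marker is matched by an equal shift of the content, which is the compensation described above.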

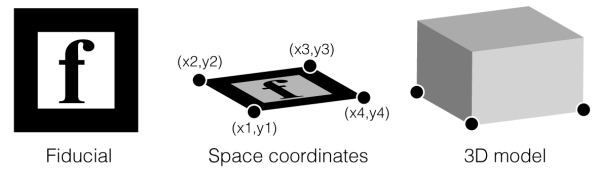

The following image illustrates the process of interpretations of a fiducial marker:
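Marker systems differ in detail: some compare the marker's interior with stored image templates, while others read it as a grid of bits. As a minimal sketch of the second approach, assuming the square border has already been detected and the interior sampled into a 3×3 grid of grayscale averages, the identification step might threshold the cells and then try all four orientations against a dictionary of known codes. The grid size, the threshold of 128, the function names, and the one-entry `MARKERS` dictionary are all hypothetical.

```python
from typing import Dict, Optional, Sequence, Tuple

Grid = Tuple[Tuple[int, ...], ...]


def binarize(cells: Sequence[Sequence[float]], threshold: float = 128) -> Grid:
    """Turn sampled grayscale cell averages into bits (dark = 1, light = 0)."""
    return tuple(tuple(1 if v < threshold else 0 for v in row) for row in cells)


def rotate_cw(grid: Grid) -> Grid:
    """Rotate a square bit grid 90 degrees clockwise."""
    return tuple(zip(*grid[::-1]))


def identify_marker(bits: Grid,
                    dictionary: Dict[int, Grid]) -> Optional[Tuple[int, int]]:
    """Return (marker_id, quarter_turns), or None if no code matches.

    quarter_turns is how many clockwise 90-degree turns bring the observed
    grid into the stored orientation.
    """
    candidate = bits
    for quarter_turns in range(4):
        for marker_id, code in dictionary.items():
            if candidate == code:
                return marker_id, quarter_turns
        candidate = rotate_cw(candidate)
    return None


# Hypothetical one-entry dictionary; its code differs under every rotation.
MARKERS: Dict[int, Grid] = {7: ((1, 0, 0),
                                (1, 1, 0),
                                (0, 0, 0))}

if __name__ == "__main__":
    observed = binarize([[10, 200, 220], [30, 40, 250], [200, 210, 230]])
    print(identify_marker(observed, MARKERS))             # (7, 0)
    print(identify_marker(rotate_cw(observed), MARKERS))  # (7, 3)
```

The number of quarter turns matters as much as the ID: a plain square border looks the same from all four sides, so the interior code is what tells the tracker which edge of the marker is the top.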

The principle is fairly obvious and the mechanics are not complicated. Everything hinges on the precision of the image recognition algorithms, and the smoothness of the illusion depends directly on them. It is not easy to create such algorithms from scratch without expertise in image-processing theory and practice. Nevertheless, there are ready-to-use solutions that iOS developers can use in their applications. Some developers also write their own libraries on top of popular AR solutions such as the open-source ARToolKit (http://www.hitl.washington.edu/artoolkit/). The following is a brief list of development platforms for iOS; many of them can be integrated with popular game development environments such as Unity 3D:

- Metaio SDK (http://www.metaio.com/products/sdk/) from metaio GmbH

- String (http://www.poweredbystring.com/product) by String Labs Ltd

- Vuforia Augmented Reality SDK (https://www.vuforia.com/) by Qualcomm Austria Research Center GmbH

- VYZAR (http://limitlesscomputing.com/SightSpace/custom) from Limitless Computing Inc

- Wikitude SDK (http://www.wikitude.com) from Wikitude GmbH, which has location-based functionality as well

Note

PrimeSense (http://www.primesense.com/) is the company that created the technology behind Microsoft Kinect. One of its newer innovations is Capri™, a sensor capable of creating an accurate 3D depth map of surrounding objects. The prototype is a tiny device attached to a smartphone or tablet. It scans the surrounding environment in real time: rather than drawing the 3D map once, it redraws it constantly and accounts for any momentary changes in the space. It is therefore a real breakthrough for AR applications, because it provides a fairly realistic map of the surroundings. It is accurate, fast, and needs no graphic markers, which makes it a great basis for creative experiments. It is worth noting that PrimeSense was bought by Apple Inc. in November 2013, so it is reasonable to expect depth sensors in future iOS devices.