Blind Signal Separation for Digital Communication Data

Antoine Chevreuil* and Philippe Loubaton†, *ESIEE Paris/UMR 8049 LIGM, 2 bd Blaise Pascal BP 99, 93162 Noisy-le-grand cedex, France, †Université de Paris-Est Marne-la-Vallée, UMR 8049 LIGM, 5 bd Descartes, 77454 Marne-la-Vallée cedex 2, France

Abstract

Blind source separation, often called independent component analysis, is a main field of research in signal processing since the eighties. It consists in retrieving the components, up to certain indeterminacies, of a mixture involving statistically independent signals. Solid theoretical results are known; besides, they have given rise to performance algorithms. There are numerous applications of blind source separation. In this contribution, we particularize the separation of telecommunication sources. In this context, the sources stem from telecommunication devices transmitting at the same time in a given band of frequencies. The received data is a mixed version of all these sources. The aim of the receiver is to isolate (separate) the different contributions prior to estimating the unknown parameters associated with a transmitter. The context of telecommunication signals has the particularity that the sources are not stationary but cyclo-stationary. Now, in general, the standard methods of blind source separation assume the stationarity of the sources. In this contribution, we hence make a survey of the well-known methods and show how the results extend to cyclo-stationary sources.

2.04.1 Introduction

2.04.1.1 Generalities on blind source separation

The goal of blind source separation is to retrieve the components of a mixture of independent signals when no a priori information is available on the mixing matrix. This question was introduced in the eighties in the pioneering works of Jutten and Herault [1]. Since then, Blind Source Separation (BSS), also called independent component analysis, was developed by many research teams in the context of various applicative contexts. The purpose of this chapter is to present BSS methods that have been developed in the past in the context of digital communications. In this case, K digital communication devices sharing the same band of frequencies transmit simultaneously K signals. The receiver is equipped with ![]() antennas, and has to retrieve a part of (or even all) the transmitted signals. The use of BSS techniques appears to be relevant when the receiver has no a priori knowledge on the channels between the transmitters and the receiver. As many digital communication systems use training sequences which allow one to estimate the channels at the receiver side, blind source separation is in general not a very useful tool. However, it appears to be of particular interest in contexts such as spectrum monitoring or passive listening in which it is necessary to characterize unknown transmitters (estimation of technical parameters such as the carrier frequency, symbol rate, symbol constellation,…) interfering in a certain bandwidth. For this, it is reasonable to try to firstly retrieve the transmitted signals, and then to analyze each of them in order to characterize the system it has been generated by. In this chapter, we provide a comprehensive introduction to the blind separation techniques that can be used to achieve the first step.

antennas, and has to retrieve a part of (or even all) the transmitted signals. The use of BSS techniques appears to be relevant when the receiver has no a priori knowledge on the channels between the transmitters and the receiver. As many digital communication systems use training sequences which allow one to estimate the channels at the receiver side, blind source separation is in general not a very useful tool. However, it appears to be of particular interest in contexts such as spectrum monitoring or passive listening in which it is necessary to characterize unknown transmitters (estimation of technical parameters such as the carrier frequency, symbol rate, symbol constellation,…) interfering in a certain bandwidth. For this, it is reasonable to try to firstly retrieve the transmitted signals, and then to analyze each of them in order to characterize the system it has been generated by. In this chapter, we provide a comprehensive introduction to the blind separation techniques that can be used to achieve the first step.

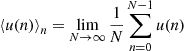

In order to explain the specificity of the problems we address in the following, we first recall what are the most classical BSS methodologies. The observation is a discrete-time M-variate signal ![]() defined as

defined as ![]() where the components of the K-dimensional (

where the components of the K-dimensional (![]() ) time series

) time series ![]() represent K signals which are statistically independent. The signal y is thus an instantaneous mixture of the K independent source signals

represent K signals which are statistically independent. The signal y is thus an instantaneous mixture of the K independent source signals ![]() in the sense that

in the sense that ![]() only depends on the value of s at time n. The signal y is said to be a convolutive mixture of the K independent source signals

only depends on the value of s at time n. The signal y is said to be a convolutive mixture of the K independent source signals ![]() if

if ![]() where

where ![]() represents the transfer function

represents the transfer function ![]() . For the sake of simplicity, we just consider the context of instantaneous mixtures in this introductory section. The goal of blind source separation is to retrieve the signals

. For the sake of simplicity, we just consider the context of instantaneous mixtures in this introductory section. The goal of blind source separation is to retrieve the signals ![]() from the sole knowledge of the observations. Fundamental results of Darmois (see e.g., [2]) show that if the source signals are non Gaussian, then it is possible to achieve the separation of the sources by adapting a matrix

from the sole knowledge of the observations. Fundamental results of Darmois (see e.g., [2]) show that if the source signals are non Gaussian, then it is possible to achieve the separation of the sources by adapting a matrix ![]() in such a way that the components of

in such a way that the components of ![]() are statistically independent. For this, it has been shown that it is sufficient to optimize over G a function, usually called a contrast function, that can be expressed in terms of certain moments of the joint probability distribution of

are statistically independent. For this, it has been shown that it is sufficient to optimize over G a function, usually called a contrast function, that can be expressed in terms of certain moments of the joint probability distribution of ![]() . A number of successful contrast functions have been derived in the case where the signal

. A number of successful contrast functions have been derived in the case where the signal ![]() are stationary sequences [2–5]. However, it will be explained below that in the context of digital communications, the signals

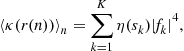

are stationary sequences [2–5]. However, it will be explained below that in the context of digital communications, the signals ![]() are not stationary, but cyclostationary, in the sense that their statistical properties are almost periodic function of the time index. For example, for each k, the sequence

are not stationary, but cyclostationary, in the sense that their statistical properties are almost periodic function of the time index. For example, for each k, the sequence ![]() appears to be a superposition of sinusoids whose frequencies, called cyclic frequencies, depend on the symbol rate of transmitter k, and are therefore unknown at the receiver side. The cyclostationarity of the

appears to be a superposition of sinusoids whose frequencies, called cyclic frequencies, depend on the symbol rate of transmitter k, and are therefore unknown at the receiver side. The cyclostationarity of the ![]() induces specific methodological difficulties that are not relevant in other applications of blind source separation.

induces specific methodological difficulties that are not relevant in other applications of blind source separation.

2.04.1.2 Illustration of the potential of BSS techniques for communication signals

The example we provide is purely academical. We consider the transmission of two BSPK sequences modulated with a Nyquist raised-cosine filter (see Section 2.04.2.1) whose symbol period is ![]() and roll-off factor is fixed to

and roll-off factor is fixed to ![]() . The energy per symbol equals

. The energy per symbol equals ![]() for the first source and

for the first source and ![]() for the second source. The receiver has

for the second source. The receiver has ![]() antennas and the channel between the source no i and the antenna no j is a delay times a real constant

antennas and the channel between the source no i and the antenna no j is a delay times a real constant ![]() . After sampling at

. After sampling at ![]() , the noiseless model of the received data is

, the noiseless model of the received data is ![]() as specified in the introduction, where the component

as specified in the introduction, where the component ![]() of

of ![]() is

is ![]() (more details are provided in Section 2.04.2.3). An additive white noise Gaussian corrupts the model whose variance is

(more details are provided in Section 2.04.2.3). An additive white noise Gaussian corrupts the model whose variance is ![]() where

where ![]() is fixed to 100 dB (we purposely fixed the noise level to a low value in order to show results that can be graphically interpreted). Moreover,

is fixed to 100 dB (we purposely fixed the noise level to a low value in order to show results that can be graphically interpreted). Moreover, ![]() .

.

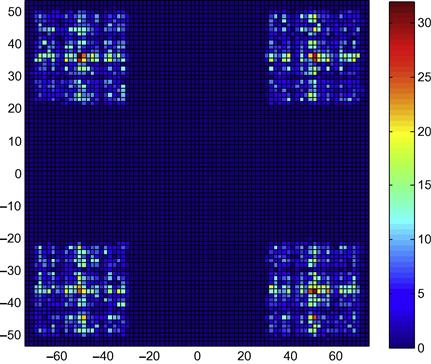

Suppose in a first step that the channel is ideal such that the mixing matrix ![]() is the identity matrix. We may have a look at the eye diagrams of the two components of the received data. We obtain Figure 4.1. This is almost a perfectly opened eye since the noise is negligible. We may also have a look at a 2D-histogram of the data. Notice that the components of

is the identity matrix. We may have a look at the eye diagrams of the two components of the received data. We obtain Figure 4.1. This is almost a perfectly opened eye since the noise is negligible. We may also have a look at a 2D-histogram of the data. Notice that the components of ![]() are not stationary. We hence down-sample these data by a factor 3 in order to have stationary data. We plot the 2D-histogram: see Figure 4.2. As the two components are independent, their joint probability density function (pdf) is separable which seems to be the case in view of the figure.

are not stationary. We hence down-sample these data by a factor 3 in order to have stationary data. We plot the 2D-histogram: see Figure 4.2. As the two components are independent, their joint probability density function (pdf) is separable which seems to be the case in view of the figure.

Let us now consider the case of the channel matrix:

We obtain Figures 4.3 and 4.4 respectively for the eye diagrams and the 2D-histograms. Clearly the channels are severe and close the eyes. Moreover, the pdf is obviously not separable, which attests to the non independency of the two components of ![]() .

.

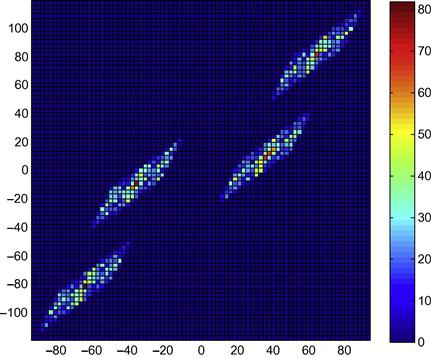

We run the JADE algorithm (see Sections 2.04.3.5 and 2.04.3.8) on the data (the observation duration is fixed to 1000 symbols): we obtain a ![]() matrix G such that, theoretically at least, GH should be diagonal. We form the data

matrix G such that, theoretically at least, GH should be diagonal. We form the data ![]() and plot Figures 4.5 and 4.6. The eyes have been opened and the joint pdf is hence separable. This is not a surprise since we have computed the resulting matrix GH:

and plot Figures 4.5 and 4.6. The eyes have been opened and the joint pdf is hence separable. This is not a surprise since we have computed the resulting matrix GH:

which is close to a diagonal matrix. We need to explain why BSS has been successfully achieved in this simple example and why it can also be achieved in much more difficult contexts.

2.04.1.3 Organisation of the paper

This chapter is organized as follows. In Section 2.04.2, we provide the model of the signals which are supposed to be linear modulations of symbols (Section 2.04.2.1). We discuss the statistics of the sampled versions of the transmitted sources in Section 2.04.2.2: in general, a sampled version is cyclo-stationary and we provide the basic tools and notation used along the paper. The model of the received data is specified in Section 2.04.2.3: (1) If the propagation channel between each transmitter and the receiver is a single path channel, the received signal is an instantaneous mixture of the transmitted signals; (2) if at least one of the propagation channel is a multipath channel, the mixture appears to be convolutive. Besides, we discuss the assumptions under which the received data are stationary. In general, however, the data are cyclo-stationary with unknown cyclic frequencies.

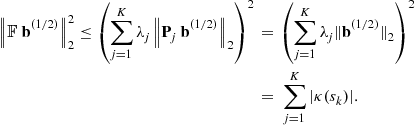

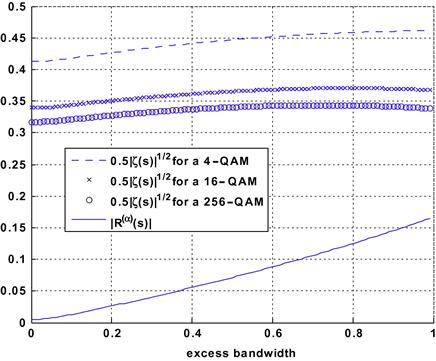

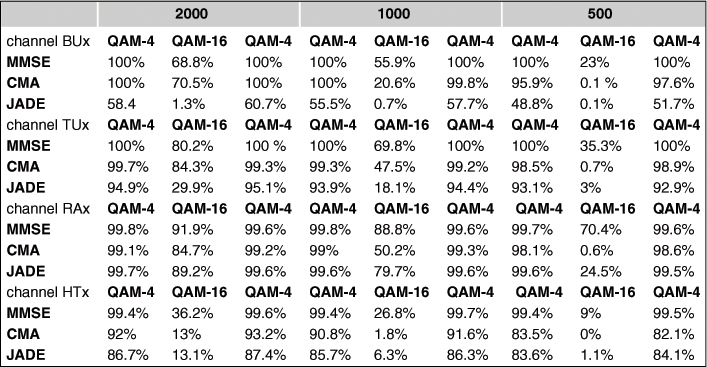

The case of instantaneous mixtures is addressed in Section 2.04.3. When the sources are independent and identically distributed (i.i.d.) (this case is discussed in Section 2.04.2.3), and that strong a priori information on the constellations are known, it is possible to provide algebraic solutions to the BSS problem, e.g., the Iterative Least Squares Projections (ILSP) algorithm or the Algebraic Constant Modulus Algorithms (ACMA): these methods are explained in Section 2.04.3.2. In Section 2.04.3.3, we consider the case of second-order methods (one of the advantages of these latter is that they are robust to the cyclo-stationarities, hence can be applied to general scenarios): the outlines of one of the most popular approach, the Second-Order Blind Identification (SOBI) algorithm, which consists in estimating the mixing matrix from the autocorrelation function of the received signal. This approach is conceptually simple, and the corresponding scheme allows one to identify the mixture and hence, to separate the source signals. That SOBI is rarely considered for BSS of digital communication signals is explained. The subsections that follow cope with BSS methods based on fourth-order cumulants. They are called “direct” BSS methods since they provide estimates of the sources with no prior estimation of the unknown channel matrix. For pedagogical and historical reasons, we firstly cope with the very particular case of stationary signals. One-by-one methods based are explained (Section 2.04.3.4) and are shown to be convergent; the associated deflation procedure is introduced and an improvement is presented. Global methods (also called joint separating methods) aim at separating jointly the K sources: they are depicted in Section 2.04.3.5; these approaches are based on the minimization of well chosen contrast functions over the set of ![]() unitary matrices: the famous Joint Approximate Diagonalization of Eigenmatrices (JADE) algorithm is presented, since it represents a touchstone in the domain of BSS. When the sources are cyclo-stationary, which is really the interesting point for the context of this paper, the preceding “stationary” methods (one-by-one and global) are again considered. The following problem is addressed: do the convergence result still hold when the algorithms are fed by cyclo-stationary data instead of stationary ones? Sufficient conditions are shown to assure the convergence: semi-analytical computations (Section 2.04.3.9) prove that the conditions in question hold true.

unitary matrices: the famous Joint Approximate Diagonalization of Eigenmatrices (JADE) algorithm is presented, since it represents a touchstone in the domain of BSS. When the sources are cyclo-stationary, which is really the interesting point for the context of this paper, the preceding “stationary” methods (one-by-one and global) are again considered. The following problem is addressed: do the convergence result still hold when the algorithms are fed by cyclo-stationary data instead of stationary ones? Sufficient conditions are shown to assure the convergence: semi-analytical computations (Section 2.04.3.9) prove that the conditions in question hold true.

In Sections 2.04.4 and 2.04.5, the case of convolutive mixtures is addressed. In certain particular scenarios, e.g., sparse channels, the gap between the instantaneous case and the convolutive one can be bridged quite directly (Section 2.04.4). More precisely, if the delays of the various multipaths are sufficiently spread out on the one hand and if, on the other hand, the number of antennas of the receiver is large enough, it is still possible to formulate the source separation problem as the separation of a certain instantaneous mixture. If these conditions do not hold, we face a real convolutive mixture, i.e., the received data are the output of a Multi-Input/Multi-Output (MIMO) unknown filter driven by jointly independent (cyclo-) stationary sources. Due to their historical and theoretical importance, we present algebraic methods (Section 2.04.5.1) when the data are stationary. Under this latter assumption, the identification of the unknown transfer function can be achieved using standard methods using the Moving Average (MA) or Auto-Regressive (AR) properties: see Section 2.04.5.2. The famous subspace method, introduced in Section 2.04.5.3, is based on second-order moments and can be used for general cyclo-stationary data; its inherent numerical problems are discussed. In Section 2.04.5.4, global direct methods are evoked (temporal domain and frequency domain) for stationary data. In Section 2.04.5.5, the case of one-to-one methods previously introduced in Section 2.04.3.4 is extended to the convolutive case and positive results for BSS are provided. The results are further extended for the cyclo-stationary case in Section 2.04.5.6 where convergence results are shown.

In Section 2.04.7, we discuss several points that have not been developed in the core of the paper. Further bibliographic entries are provided.

2.04.2 Signals

We have specified in the Introduction that the domain of source separation is not restricted to the context of telecommunication signals. In the following, however, most of the results apply specifically to digital telecommunication signals.

2.04.2.1 Source signals. Basic assumptions

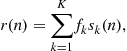

We assume that K digital telecommunication devices simultaneously transmit information in the same band of frequencies. For ![]() , we denote by

, we denote by ![]() the complex envelope of the kth transmitted signal (“the kth source”). The subscript “a” in

the complex envelope of the kth transmitted signal (“the kth source”). The subscript “a” in ![]() underlines that the signal is “analog”. Throughout this contribution,

underlines that the signal is “analog”. Throughout this contribution, ![]() is supposed to stem from a linear modulation of a sequence of symbols. The model is hence:

is supposed to stem from a linear modulation of a sequence of symbols. The model is hence:

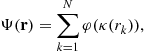

![]() (4.1)

(4.1)

In this latter equation, ![]() is a sequence of symbols belonging to a certain constellation. The function

is a sequence of symbols belonging to a certain constellation. The function ![]() is a shaping function and

is a shaping function and ![]() is the duration of a symbol

is the duration of a symbol ![]() . We denote by

. We denote by ![]() the carrier frequency associated with the kth source. Along this contribution, the following assumptions and notation are adopted.Assumptions on the source signals: For a given index k, the sequence

the carrier frequency associated with the kth source. Along this contribution, the following assumptions and notation are adopted.Assumptions on the source signals: For a given index k, the sequence ![]() is assumed to be independent and identically distributed (i.i.d.). We assume that it has zero mean

is assumed to be independent and identically distributed (i.i.d.). We assume that it has zero mean ![]() . With no restriction at all, the normalization

. With no restriction at all, the normalization ![]() holds. We also suppose that it is second-order complex-circular in the sense that

holds. We also suppose that it is second-order complex-circular in the sense that

![]() (4.2)

(4.2)

It is undoubtedly a restriction to impose the condition (4.2) especially in the telecommunication context of this paper; indeed, the BPSK modulation for instance does not verify (4.2). Some points on the extensions to general non circular mixtures are provided in Section 2.04.7.1.

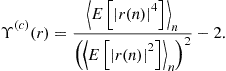

The Kurtosis of the symbol ![]() , is defined as

, is defined as

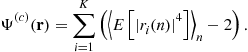

![]()

where the fourth-order cumulant of a complex-valued random variable X is defined when it makes sense, as ![]() : see for instance [6]. Here, by the circularity Assumption 4.2, we have

: see for instance [6]. Here, by the circularity Assumption 4.2, we have

![]()

We assume that we have, for any index k:

![]() (4.3)

(4.3)

This inequality is given as an assumption; is has more the flavor of a result, since we do not know complex-circular constellations such that (4.3) is not satisfied.

We may now come to the key assumption: the sources ![]() are mutually independent.

are mutually independent.

Concerning the shaping filter, ![]() we suppose that

we suppose that ![]() is a square-root raised cosine with excess bandwidth (also called roll-off factor)

is a square-root raised cosine with excess bandwidth (also called roll-off factor) ![]() .

.

2.04.2.2 Cyclo-stationarity of a source

In this short paragraph, we drop the index of the source and ![]() refer to respectively

refer to respectively ![]() . Thanks to Eq. (4.1), it is quite obvious that

. Thanks to Eq. (4.1), it is quite obvious that ![]() and

and ![]() are similarly distributed since

are similarly distributed since ![]() is i.i.d. This simple reasoning applies to any vector

is i.i.d. This simple reasoning applies to any vector ![]() whose distribution equals this of

whose distribution equals this of ![]() . This shows that the process

. This shows that the process ![]() is cyclo-stationary in the strict sense with period T. In particular, its second and fourth-order moments evolve as T-periodic functions of the time. Let us focus on the second-order moments:

is cyclo-stationary in the strict sense with period T. In particular, its second and fourth-order moments evolve as T-periodic functions of the time. Let us focus on the second-order moments: ![]() is hence a periodic function with period T. We let its Fourier expansion be

is hence a periodic function with period T. We let its Fourier expansion be

![]() (4.4)

(4.4)

where ![]() is called “cyclo-correlation” of

is called “cyclo-correlation” of ![]() at cyclic frequency

at cyclic frequency ![]() and time lag

and time lag ![]() . We have the reverse formula:

. We have the reverse formula:

![]()

or

![]()

Generally, we may introduce the cyclo-correlation at any cyclic frequency ![]() :

:

![]()

In the case of ![]() given by Eq. (4.1),

given by Eq. (4.1), ![]() is identically zero for cyclic frequencies

is identically zero for cyclic frequencies ![]() that are not multiples of

that are not multiples of ![]() . In passing, we have the following symmetry:

. In passing, we have the following symmetry:

![]() (4.5)

(4.5)

Let us inspect a bit further a main specificity of linear modulations on the Fourier expansion of Eq. (4.4). In this respect, denote by ![]() the Fourier transform of the cyclo-correlation function

the Fourier transform of the cyclo-correlation function ![]() . We have, after elementary calculus (see [7,8]):

. We have, after elementary calculus (see [7,8]):

![]()

where ![]() is the Fourier transform of

is the Fourier transform of ![]() . This formula is visibly a generalization of the so-called Benett Equality (see [9]Section 4.4.1) that gives the power spectral density of

. This formula is visibly a generalization of the so-called Benett Equality (see [9]Section 4.4.1) that gives the power spectral density of ![]() : indeed, in the above equation, if one takes

: indeed, in the above equation, if one takes ![]() , we obtain

, we obtain ![]() which is the power spectral density. An important consequence was underlined in [8]:

which is the power spectral density. An important consequence was underlined in [8]:

We deduce from what precedes some consequences on the second-order statistics of a sampled version of a source. In this respect, we denote by ![]() any sampling period; the discrete-time signal associated with

any sampling period; the discrete-time signal associated with ![]() is hence

is hence ![]() for

for ![]() . Thanks to Lemma 1, the expansion (4.4) may be re-written as:

. Thanks to Lemma 1, the expansion (4.4) may be re-written as:

![]() (4.6)

(4.6)

where we let ![]() be

be ![]() . We distinguish between three cases:

. We distinguish between three cases:

1. If ![]() is a integer, the three terms of the r.h.s. of (4.6) all aggregate in a single term, making the function

is a integer, the three terms of the r.h.s. of (4.6) all aggregate in a single term, making the function ![]() not depend on the time index n. This is not surprising since the condition

not depend on the time index n. This is not surprising since the condition ![]() where p is a non-null integer corresponds to a strict-sense stationary signal (see the polyphase decomposition in [10]). In particular, if

where p is a non-null integer corresponds to a strict-sense stationary signal (see the polyphase decomposition in [10]). In particular, if ![]() , we have:

, we have:

![]() (4.7)

(4.7)

where ![]() . In the following, we will not study the case

. In the following, we will not study the case ![]() with

with ![]() .

.

2. If ![]() modulo

modulo ![]() : unless

: unless ![]() is rational, it cannot be said that, as a function of

is rational, it cannot be said that, as a function of ![]() is periodic: it is called an almost periodic function [11] and

is periodic: it is called an almost periodic function [11] and ![]() is hence called almost periodically correlated, having

is hence called almost periodically correlated, having ![]() as cyclic-frequencies. We introduce

as cyclic-frequencies. We introduce

![]() (4.8)

(4.8)

where the operator ![]() is the time-averaging one, i.e., for a complex-valued (deterministic) series

is the time-averaging one, i.e., for a complex-valued (deterministic) series ![]()

when the limit makes sense. As the three cyclic-frequencies in the expansion (4.6) are distinct modulo 1 then we obtain

![]()

which reminds us of the Shannon sampling theorem.

3. If ![]() it turns out that, similarly to the previous case, the discrete-time source is cyclo-stationary, having

it turns out that, similarly to the previous case, the discrete-time source is cyclo-stationary, having ![]() as cyclic- frequencies. Moreover

as cyclic- frequencies. Moreover ![]() and

and ![]() .

.

2.04.2.3 Received signals

The receiver is equipped with M antennas, the number of antennas being as big as the number of sources, i.e., ![]() (see section 2.04.2.3.2). We denote by

(see section 2.04.2.3.2). We denote by

the complex envelope of the received ![]() vector computed at a frequency of demodulation denoted by

vector computed at a frequency of demodulation denoted by ![]() . We consider that

. We consider that ![]() obeys the linear model

obeys the linear model

where ![]() is the contribution of the kth source to the observation. We further assume that

is the contribution of the kth source to the observation. We further assume that ![]() stems from delayed/attenuated versions of

stems from delayed/attenuated versions of ![]() . In this respect, we may write

. In this respect, we may write ![]() , the component of

, the component of ![]() associated with the mth sensor, as:

associated with the mth sensor, as:

(4.9)

(4.9)

where the index ![]() represents the path index,

represents the path index, ![]() the number of paths associated with the source no

the number of paths associated with the source no ![]() an attenuation factor and

an attenuation factor and ![]() the delay of the propagation along the path no

the delay of the propagation along the path no ![]() between the source no k and the sensor no m. In this latter equation,

between the source no k and the sensor no m. In this latter equation, ![]() is the complex envelope of the modulated signal

is the complex envelope of the modulated signal ![]() at the demodulation frequency, i.e.,

at the demodulation frequency, i.e., ![]() .

.

2.04.2.3.1 Models of the sampled data

We distinguish between two cases:

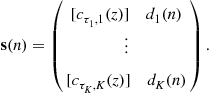

1. Instantaneous mixture: This scenario holds when the signal ![]() evolves in a linear space of dimension 1, that is when the components

evolves in a linear space of dimension 1, that is when the components ![]() in (4.9) do not depend neither on

in (4.9) do not depend neither on ![]() nor on m. This holds when there exists

nor on m. This holds when there exists ![]() such that

such that ![]() for all indices

for all indices ![]() . This happens, for instance, when there is a single path (

. This happens, for instance, when there is a single path (![]() ) and the transmitted signal is narrow-band (

) and the transmitted signal is narrow-band (![]() ). In this case we have

). In this case we have

More compactly, this gives

![]()

where ![]() is a

is a ![]() mixing matrix, and

mixing matrix, and

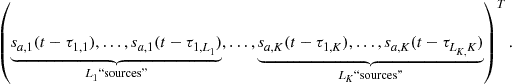

![]() (4.10)

(4.10)

If ![]() is the sampling period of the receiver, it is supposed that all the components of the data are low-pass filtered in the sampling band (the matched-filter cannot be considered since the shaping filters are not supposed to be known to the receiver). Finally, the (noiseless model) of the data is

is the sampling period of the receiver, it is supposed that all the components of the data are low-pass filtered in the sampling band (the matched-filter cannot be considered since the shaping filters are not supposed to be known to the receiver). Finally, the (noiseless model) of the data is

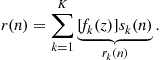

![]() (4.11)

(4.11)

where

![]() (4.12)

(4.12)

Generally speaking, any of the components of the source vector ![]() is cyclo-stationary (see Section 2.04.2.2) hence the model given by (4.11) is a cyclo-stationary one. For simplification, let us suppose in the following that

is cyclo-stationary (see Section 2.04.2.2) hence the model given by (4.11) is a cyclo-stationary one. For simplification, let us suppose in the following that ![]() for all the indices k (this point is discussed in Section 2.04.7). As the original theory of BSS assumed stationary data, we inspect under which conditions the above model can be stationary. A necessary and sufficient condition is that all the components of

for all the indices k (this point is discussed in Section 2.04.7). As the original theory of BSS assumed stationary data, we inspect under which conditions the above model can be stationary. A necessary and sufficient condition is that all the components of ![]() be stationary. As discussed previously, this can happen when all the symbol periods are equal to, say, T and if the sampling period

be stationary. As discussed previously, this can happen when all the symbol periods are equal to, say, T and if the sampling period ![]() . Under these conditions, we even have:

. Under these conditions, we even have:

![]()

where we have set for any delay ![]()

![]() (4.13)

(4.13)

The stationary model can be written as:

![]() (4.14)

(4.14)

where we have set

(4.15)

(4.15)

(note: the notation might be confusing since ![]() was already defined in (4.12): in the following sections, the context is always specified which prevents the confusion) In the literature, it is sometimes required that the sources be i.i.d. In the context of this paper, this i.i.d. condition is fulfilled when the filters

was already defined in (4.12): in the following sections, the context is always specified which prevents the confusion) In the literature, it is sometimes required that the sources be i.i.d. In the context of this paper, this i.i.d. condition is fulfilled when the filters ![]() all have the form of a constant times a delay: in short, this happens when (1) all the transmitted symbols are synchronized (2) the receiver runs a matched filter (square-root Nyquist), and (3) the symbol synchronization is performed at the receiver. In this case we have:

all have the form of a constant times a delay: in short, this happens when (1) all the transmitted symbols are synchronized (2) the receiver runs a matched filter (square-root Nyquist), and (3) the symbol synchronization is performed at the receiver. In this case we have:

(4.16)

(4.16)

The reader may find this set of condition very restrictive in real scenarios. It is indeed; however, the developments of BSS are based on the stationary assumption. Moreover, many interesting methods exploit the i.i.d. condition.

2. Convolutive mixture: This is the general case when multi-paths affect the propagation. We provide the discrete-time version of Eq. (4.9). Let us begin by the general case. In this respect, we assume that the sampling period ![]() verifies the Shannon sampling condition, i.e.,

verifies the Shannon sampling condition, i.e.,

![]()

This is a non-restrictive condition whatever the scenario: a crude prior spectral analysis of the data is simply needed. Provided this condition, the discrete-time signal ![]() , for any indices

, for any indices ![]() , is a filtered version of

, is a filtered version of ![]() . It is hence easy to deduce that the sampled data

. It is hence easy to deduce that the sampled data ![]() follows the equation:

follows the equation:

![]() (4.17)

(4.17)

where ![]() is a vector of mutually independent sources and

is a vector of mutually independent sources and ![]() is certain the

is certain the ![]() transfer function whose kth column is the digital channel between the kth source and the receiver: it depends on the parameters

transfer function whose kth column is the digital channel between the kth source and the receiver: it depends on the parameters ![]() . The above general model is, in general, cyclo-stationary. For simplification, we assume ion the following that

. The above general model is, in general, cyclo-stationary. For simplification, we assume ion the following that ![]() for all indices k. Similarly to the case of instantaneous mixtures, it is instructive to find conditions under which the data are stationary. This occurs when the symbol periods

for all indices k. Similarly to the case of instantaneous mixtures, it is instructive to find conditions under which the data are stationary. This occurs when the symbol periods ![]() all coincide with a certain T, and when the sampling period

all coincide with a certain T, and when the sampling period ![]() equals T. Under all these conditions,

equals T. Under all these conditions, ![]() , the contribution of the kth source to the mixture, can be written as

, the contribution of the kth source to the mixture, can be written as

![]() (4.18)

(4.18)

where ![]() and

and ![]() is a certain

is a certain ![]() unknown filter matrix. This shows that

unknown filter matrix. This shows that ![]() depends on the shaping filters, the steering vectors associated with the paths and their corresponding delays.

depends on the shaping filters, the steering vectors associated with the paths and their corresponding delays.

2.04.2.3.2 Assumptions on the channels

In this paper, we consider over-determined mixtures, that is: mixtures such that the number of sensors exceeds the number of sources (![]() ). This condition is necessary in order to retrieve the vector

). This condition is necessary in order to retrieve the vector ![]() —see model (4.11) (respectively (4.17) )—from the data

—see model (4.11) (respectively (4.17) )—from the data ![]() by means of a

by means of a ![]() constant matrix (respectively a

constant matrix (respectively a ![]() filter). This has to be specified.

filter). This has to be specified.

For instantaneous mixtures, the following condition holds:

![]() (4.19)

(4.19)

Under this assumption, there exist ![]() matrices

matrices ![]() such that

such that ![]() .

.

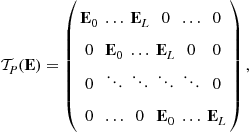

For convolutive mixtures, it is conventional to assume that the components of ![]() are polynomials in

are polynomials in ![]() (this is an approximation that is justified since the shaping filters

(this is an approximation that is justified since the shaping filters ![]() are rapidly vanishing when

are rapidly vanishing when ![]() ). We further assume that

). We further assume that

![]() (4.20)

(4.20)

Under this condition, there exist polynomial matrices ![]() such that

such that ![]() : see for instance [12,13]. The same kind of assumption holds in the stationary case—see the model (4.18): namely,

: see for instance [12,13]. The same kind of assumption holds in the stationary case—see the model (4.18): namely,

![]() (4.21)

(4.21)

At this level, we would like to point out a curiosity. In this respect, we assume further that the excess bandwidth factors of one source—say the first one—equals zero. As the choice ![]() satisfies the Shannon sampling condition, we may write

satisfies the Shannon sampling condition, we may write ![]() where

where

![]()

As ![]() , the first column

, the first column ![]() of

of ![]() can be factored as

can be factored as ![]() . In particular, after the standard FIR approximations, it yields that the condition given in (4.21) is not fulfilled.

. In particular, after the standard FIR approximations, it yields that the condition given in (4.21) is not fulfilled.

2.04.3 Instantaneous mixtures

The model of the data ![]() is given by (4.11). The mixing matrix

is given by (4.11). The mixing matrix ![]() is unknown. BSS can be achieved either by estimating

is unknown. BSS can be achieved either by estimating ![]() —this is the point of Section 2.04.3.3—or by computing directly estimates of the sources (up to indeterminacies).

—this is the point of Section 2.04.3.3—or by computing directly estimates of the sources (up to indeterminacies).

2.04.3.1 Indeterminacies

It is always possible to consider that the sources have equal and normalized power. Indeed, as ![]() where

where ![]() is the square-root of the power of the first source, we suggest to scale the first column of

is the square-root of the power of the first source, we suggest to scale the first column of ![]() by

by ![]() . Repeating this process for all the sources, we have constructed a new matrix

. Repeating this process for all the sources, we have constructed a new matrix ![]() . Eventually denoting

. Eventually denoting ![]() , we obviously show that the data alternatively writes

, we obviously show that the data alternatively writes

![]()

Though apparently innocent, this remark gives precious a priori indications. First of all, it says that the model (4.22) is not uniquely defined. As a consequence, it is always possible to consider, without restricting the model, that the sources have equal power equal to one—this precisely corresponds to the above defined ![]() ; specifically, we will assume in the following that

; specifically, we will assume in the following that

![]() (4.22)

(4.22)

This shows it is beyond a reasonable expectation to retrieve the sources with no scaling ambiguities. Similarly, if ![]() is a permutation matrix,

is a permutation matrix, ![]() , underlining the non-unicity of the model.

, underlining the non-unicity of the model.

With no further assumptions on the sources, the ultimate result that can be achieved is: retrieve the sources up to unknown complex scaling factors (scaling and phase ambiguities) and a permutation.

2.04.3.2 Algebraic methods (i.i.d. scenario)

The model of the data is given by (4.16). We may collect the N available data in a ![]() matrix

matrix ![]() , we have:

, we have: ![]() where

where ![]() . As any entry of

. As any entry of ![]() corresponds to a symbol, associated specificities (e.g., finite alphabet constellations or modulus one symbols) are a priori relations the receiver can make use of. As far as the identifiability is concerned, it is proven in [14] (Lemma 1) that the above factorization is essentially unique for modulus one symbols, at least if the number of snapshots N verifies

corresponds to a symbol, associated specificities (e.g., finite alphabet constellations or modulus one symbols) are a priori relations the receiver can make use of. As far as the identifiability is concerned, it is proven in [14] (Lemma 1) that the above factorization is essentially unique for modulus one symbols, at least if the number of snapshots N verifies ![]() (which is the case in practical contexts). By essentially unique, we mean that the rows of

(which is the case in practical contexts). By essentially unique, we mean that the rows of ![]() may be permuted and/or multiplied by modulus one constants.

may be permuted and/or multiplied by modulus one constants.

Talwar et al. [15,16] propose iterative algorithms that assume known the alphabets of the symbols. Call ![]() an estimate of

an estimate of ![]() at the iteration no

at the iteration no ![]() . The Iterative Least Square with Projection (ILSP) is:

. The Iterative Least Square with Projection (ILSP) is:

1. Take any full rank ![]() for iteration

for iteration ![]()

• ![]() where

where ![]() denotes the pseudo-inverse.

denotes the pseudo-inverse.

• ![]() projection of each component of

projection of each component of ![]() on the corresponding alphabet.

on the corresponding alphabet.

Similar projection-based algorithms that rather take into account the constant modulus property of the entries of ![]() have been considered [17,18]: similarly to the IMSP algorithm, no results on the convergence can be given (how many samples are required? are there local minima the algorithm could be trapped in?). Van der Veen et al. [14] proposes a non-iterative algorithm, called the Algebraic CMA (ACMA): the ACMA provides exactly “the” solution (up to the above mentioned ambiguities) of the factorization of

have been considered [17,18]: similarly to the IMSP algorithm, no results on the convergence can be given (how many samples are required? are there local minima the algorithm could be trapped in?). Van der Veen et al. [14] proposes a non-iterative algorithm, called the Algebraic CMA (ACMA): the ACMA provides exactly “the” solution (up to the above mentioned ambiguities) of the factorization of ![]() —at least if the number of data N exceeds

—at least if the number of data N exceeds ![]() . It is based on a joint diagonalization of a pencil of K matrices.

. It is based on a joint diagonalization of a pencil of K matrices.

Certain BSS methods for convolutive mixtures need, as a final step, to run such algorithms (see e.g., Section 2.04.5.1).

2.04.3.3 Second-order based identification (general cyclo-stationary case)

In this section, we address the “indirect” BSS; by this terminology, we mean that the BSS is achieved in two steps. The first step consists in estimating the unknown mixing matrix H by, say, ![]() . In a second step, the proper separation is carried out. If

. In a second step, the proper separation is carried out. If ![]() is an accurate estimate, then

is an accurate estimate, then ![]() is the natural estimate of the source vector. In general, however, noise is present (estimation noise and additive noise in the observed signals) and other strategies have to be considered: this aspect is not addressed in this paper.

is the natural estimate of the source vector. In general, however, noise is present (estimation noise and additive noise in the observed signals) and other strategies have to be considered: this aspect is not addressed in this paper.

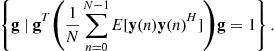

The first point to be addressed in this section is the pre-whitening of the data. We suppose that ![]() (notice: in the non-square case, a principal component analysis is processed). In this respect, we consider the auto-correlation matrix of the data

(notice: in the non-square case, a principal component analysis is processed). In this respect, we consider the auto-correlation matrix of the data ![]() can be written (we recall that the sources are assumed to have equal normalized powers as discussed previously):

can be written (we recall that the sources are assumed to have equal normalized powers as discussed previously):

![]()

Since ![]() is full rank, the above matrix is positive definite and we form the new data

is full rank, the above matrix is positive definite and we form the new data

![]()

We have:

![]()

where ![]() is a unitary matrix.

is a unitary matrix.

The second point concerns the estimation of the unitary matrix U. The data ![]() is cyclo-stationary. As the cyclic-frequencies are not always directly accessible, the identification of the unknown mixing matrix U is done by solely considering the statistics

is cyclo-stationary. As the cyclic-frequencies are not always directly accessible, the identification of the unknown mixing matrix U is done by solely considering the statistics

![]()

which can be expressed as

![]()

This says that the normal matrix ![]() , for any index

, for any index ![]() , is diagonalized in the orthonormal basis formed by the columns of U. For

, is diagonalized in the orthonormal basis formed by the columns of U. For ![]() , this gives

, this gives ![]() and this is clearly not sufficient to identify U! On the contrary, consider that the spectra of the sources

and this is clearly not sufficient to identify U! On the contrary, consider that the spectra of the sources ![]() are all different at least for a frequency

are all different at least for a frequency ![]() . For any unitary matrix

. For any unitary matrix ![]() , the matrix

, the matrix ![]() is diagonal for every indices

is diagonal for every indices ![]() if and only if the columns of

if and only if the columns of ![]() equal these of U up to a modulus one factor and a permutation. This remark was done in [19] and an algorithm (SOBI) was deduced based on a joint diagonalization technique [20].

equal these of U up to a modulus one factor and a permutation. This remark was done in [19] and an algorithm (SOBI) was deduced based on a joint diagonalization technique [20].

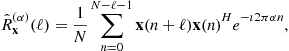

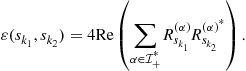

The reader has noticed the suboptimality of the above method when the mixture is cyclo-stationary. The exploited statistics are only the ![]() for certain indices

for certain indices ![]() . In [21], it is suggested to take advantage of the cyclic-statistics of the mixture. In this respect, notice that for any

. In [21], it is suggested to take advantage of the cyclic-statistics of the mixture. In this respect, notice that for any ![]() cyclic frequency of the mixture, we have

cyclic frequency of the mixture, we have

![]()

hence these “new” statistics could be added in the pencil of matrices to be jointly diagonalized. This theoretical appeal is attenuated by the fact that the non null-components of ![]() are numerically inconsistent.

are numerically inconsistent.

At this level, we should emphasize that the statistics ![]() for any

for any ![]() (zero or not) are not accessible to the receiver and should be replaced by the empirical estimate denoted by

(zero or not) are not accessible to the receiver and should be replaced by the empirical estimate denoted by ![]() and defined for

and defined for ![]() as

as

(4.23)

(4.23)

where N is the number of snapshots. This estimate is a consistent estimate of the matrix ![]() . In an ideal scenario where the model

. In an ideal scenario where the model ![]() holds true, it is remarkable that

holds true, it is remarkable that ![]() and the joint diagonalization of the estimated statistics should provide the exact mixing matrix: the algorithm is called deterministic. In a realistic context, however, the data are perturbed by an additive noise term: in this case, the above factorization does not hold true anymore and the joint diagonalization is an approximate joint diagonalization.

and the joint diagonalization of the estimated statistics should provide the exact mixing matrix: the algorithm is called deterministic. In a realistic context, however, the data are perturbed by an additive noise term: in this case, the above factorization does not hold true anymore and the joint diagonalization is an approximate joint diagonalization.

In practice, despite its attractivity, SOBI is seldom used to achieve BSS of digital communication data. Indeed, the condition that there are no two sources whose spectra are identical (up to a multiplicative constant) does not make sense most of the time. Indeed, the transmitted symbols are generally white sequences whose shaping functions are close from to one another. As the spectra are numerically similar, the joint diagonalization approach is bound to suffer from numerical problems.

2.04.3.4 Iterative BSS (stationary case)

As was specified, the stationary scenario assumes that for all indices ![]() , i.e., all the baud-rates are equal, and

, i.e., all the baud-rates are equal, and ![]() . Under these very specific circumstances, the model (4.14) involves a source vector

. Under these very specific circumstances, the model (4.14) involves a source vector ![]() whose components are stationary and mutually independent. We insist on the fact that the components of the source vector are not the i.i.d. symbol sequences but linear processes generated by these symbol sequences as indicated by Eq. (4.15). BSS aims at estimating the sources, not the symbol sequences. Hence, BSS may be seen as a preliminary step before the estimation of the symbols.

whose components are stationary and mutually independent. We insist on the fact that the components of the source vector are not the i.i.d. symbol sequences but linear processes generated by these symbol sequences as indicated by Eq. (4.15). BSS aims at estimating the sources, not the symbol sequences. Hence, BSS may be seen as a preliminary step before the estimation of the symbols.

Contrary to other methods, no pre-processing of the data is necessary (PCA, pre-whitening).

In this section, we firstly design methods able to recover one of the sources (or a scaled version). In a second step, we present the so-called deflation that allows one to run the extraction of another source from a deflated mixture where the contribution of the first estimated source has been removed. The convergence is established: after K such steps, the K sources are expected to be estimated. Convergence properties are discussed.

2.04.3.4.1 Estimation of one source: theoretical considerations

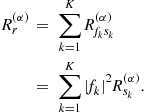

Thanks to the mixing matrix ![]() having full-rank—see condition (4.19)—we know that, for any source index k, there exist column vectors

having full-rank—see condition (4.19)—we know that, for any source index k, there exist column vectors ![]() such that

such that

![]()

Denoting ![]() , we may call this new signal as the reconstructed source since it involves only one source. This new signal is obtained after a so-called spatial filtering of the data. Of course, it is not possible to compute

, we may call this new signal as the reconstructed source since it involves only one source. This new signal is obtained after a so-called spatial filtering of the data. Of course, it is not possible to compute ![]() since H is not accessible. A possible approach hence consists in adapting a spatial filter g that makes

since H is not accessible. A possible approach hence consists in adapting a spatial filter g that makes

![]() (4.24)

(4.24)

resemble one of the sources. This will be done by considering particular statistics of the signal ![]() . We may write this signal under this form

. We may write this signal under this form

(4.25)

(4.25)

where the taps ![]() are the components of the vector

are the components of the vector

![]() (4.26)

(4.26)

The term ![]() in

in ![]() represents the contribution of the kth source to the reconstructed signal

represents the contribution of the kth source to the reconstructed signal ![]() . As may be easily understood, we aim at finding a “good”

. As may be easily understood, we aim at finding a “good” ![]() , i.e., such that f is a vector having a single non-null component.

, i.e., such that f is a vector having a single non-null component.

Evidently, the signal ![]() involves a single source if and only if the composite vector

involves a single source if and only if the composite vector ![]() is separating.

is separating.

We may inspect higher-order statistics and particularly the fourth-order ones. It has been proposed to consider the fourth-order cumulant (see Section 2.04.3.6 for theoretical justifications):

![]()

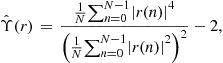

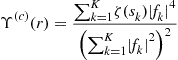

In this respect, we may introduce the following function, called normalized (fourth-order) cumulant:

![]() (4.27)

(4.27)

Thanks to the definition of the cumulants, we have: ![]() . Now, the circularity assumption of the symbol sequences (4.2) implies the circularity of the sources, hence

. Now, the circularity assumption of the symbol sequences (4.2) implies the circularity of the sources, hence

![]()

We re-express ![]() as a function of the moments of

as a function of the moments of ![]() :

:

(4.28)

(4.28)

As a remark, it is instructive, though superfluous in this paper since the digital communication symbols have negative Kurtosis, to address the optimization of ![]() for general distributions of the

for general distributions of the ![]() : the reader may find the details in [5].

: the reader may find the details in [5].

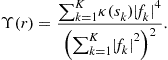

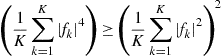

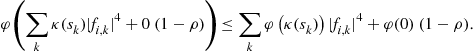

One may inspect the minimum minimorum of ![]() over all the possible constellations. The Jensen inequality (see [22] p. 80) gives:

over all the possible constellations. The Jensen inequality (see [22] p. 80) gives: ![]() for a convex mapping

for a convex mapping ![]() ; the equality is achieved when

; the equality is achieved when ![]() . Taking

. Taking ![]() , we obtain

, we obtain

![]()

and the equality is achieved when ![]() has unit modulus. Of course, this can only happen if one of the sources has a modulus equal to one or, there exists an index k such that

has unit modulus. Of course, this can only happen if one of the sources has a modulus equal to one or, there exists an index k such that ![]() . This does not happen in general, but this remark shows that the minimization of

. This does not happen in general, but this remark shows that the minimization of ![]() tends to make

tends to make ![]() resemble as much as possible a constant modulus sequence. We inspect this point a bit further. A way to measure the distance of

resemble as much as possible a constant modulus sequence. We inspect this point a bit further. A way to measure the distance of ![]() to the modulus one is simply to consider

to the modulus one is simply to consider

![]() (4.30)

(4.30)

This function was originally considered for deconvolution problems [23,24] and then for source separation problems ([25–27] for instance).

We may bridge the gap between ![]() and

and ![]() :

:

As is explained in the next section, the search of a global minimum of ![]() or

or ![]() is done according to a gradient method. It is well known that such an algorithm may be stuck in a local minimum of the function to be minimized. We have the result (see [5], Lemma 1):

is done according to a gradient method. It is well known that such an algorithm may be stuck in a local minimum of the function to be minimized. We have the result (see [5], Lemma 1):

Thanks to the expansion (4.29), and the fact that for all the sources ![]() , the local minima of

, the local minima of ![]() over the unit sphere are the separating vectors (of unit norm). After simple topological considerations, it can even be deduced that:

over the unit sphere are the separating vectors (of unit norm). After simple topological considerations, it can even be deduced that:

As far as the function ![]() is concerned, we have:

is concerned, we have:

2.04.3.4.2 Estimation of one source: practical aspects

Basic algorithms: Two problems arise when one focuses on the implementation of the results presented so forth: the first one concerns the estimation of the cost functions ![]() or

or ![]() , the second one is to choose a method able to find the argument minima of these estimated functions.

, the second one is to choose a method able to find the argument minima of these estimated functions.

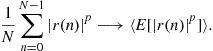

The two functions we have considered involve second and fourth-order moments of the signal ![]() . As the number of available data is finite—say, we observe

. As the number of available data is finite—say, we observe ![]() for

for ![]() —it is not possible to compute any of the moments of

—it is not possible to compute any of the moments of ![]() . However, a version of the law of large numbers allows one to consider estimates of the moments:

. However, a version of the law of large numbers allows one to consider estimates of the moments:

We are in position to estimate both functions ![]() and

and ![]() respectively by

respectively by

(4.31)

(4.31)

(4.32)

(4.32)

Indeed, we have the result:

The functions ![]() and

and ![]() to be minimized are non-convex, and the associated machinery cannot be considered. The functions, however, are regular w.r.t. the parameter

to be minimized are non-convex, and the associated machinery cannot be considered. The functions, however, are regular w.r.t. the parameter ![]() . Hence, we choose to seek the argument minima by means of a gradient method. For instance, consider the minimization of

. Hence, we choose to seek the argument minima by means of a gradient method. For instance, consider the minimization of ![]() . The notation for the gradient of

. The notation for the gradient of ![]() calculated at the point

calculated at the point ![]() being

being ![]() , the gradient algorithm, for a fixed

, the gradient algorithm, for a fixed ![]() , can be written as:

, can be written as:

1. choose an initial vector ![]() and compute

and compute ![]() for all the available data;

for all the available data;

2. at the mth step: compute ![]() and the associated updated signal

and the associated updated signal ![]() ;

;

The same algorithm could be written for the minimization of ![]() . However, the fact this latter function is homogeneous may involve numerical problems (the vector

. However, the fact this latter function is homogeneous may involve numerical problems (the vector ![]() is not bounded). This is why the projected gradient algorithm is prefered: it consists in normalizing at each iteration of the algorithm the updated signal

is not bounded). This is why the projected gradient algorithm is prefered: it consists in normalizing at each iteration of the algorithm the updated signal ![]() , i.e., projecting the current parameter

, i.e., projecting the current parameter ![]() on the set

on the set

Whatever the considered cost function, the parameter ![]() controls the performance. The next section faces the problem of choosing

controls the performance. The next section faces the problem of choosing ![]() .Refinement: choosing a locally optimal

.Refinement: choosing a locally optimal ![]() . For simplicity, the minimization of

. For simplicity, the minimization of ![]() is addressed. The same idea may be considered for the minimization of

is addressed. The same idea may be considered for the minimization of ![]() by means of the projected gradient. In order to boost the speed of convergence, it has been proposed to change

by means of the projected gradient. In order to boost the speed of convergence, it has been proposed to change ![]() at each step of the algorithm: the parameter

at each step of the algorithm: the parameter ![]() is chosen such that the value of the function evaluated at the point

is chosen such that the value of the function evaluated at the point ![]() is minimum. It is easily seen that the function

is minimum. It is easily seen that the function ![]() is a polynomial of degree four. The minimum is hence easily (numerically) computed.Robustness of the algorithms to the presence of local minima: It is well-known that such a gradient algorithm may be trapped in a local minimum: this, in general, is a clear limitation to the use of such an algorithm. Of course, it is not possible to say much on the local minima of the estimated functions

is a polynomial of degree four. The minimum is hence easily (numerically) computed.Robustness of the algorithms to the presence of local minima: It is well-known that such a gradient algorithm may be trapped in a local minimum: this, in general, is a clear limitation to the use of such an algorithm. Of course, it is not possible to say much on the local minima of the estimated functions ![]() and

and ![]() . However, Propositions 6 and 7 indicate that, asymptotically, if the algorithms are trapped in a local minimum, this does not impact the performance since this local minimum is precisely separating. This remark certainly explains why the algorithms show very good performance.

. However, Propositions 6 and 7 indicate that, asymptotically, if the algorithms are trapped in a local minimum, this does not impact the performance since this local minimum is precisely separating. This remark certainly explains why the algorithms show very good performance.

2.04.3.4.3 The deflation step

The algorithms depicted above provide a way to retrieve one of the sources. Of course, we aim at estimating all the sources. An idea hence consists in running again the previous algorithm. However, it is not possible to guarantee that the second extracted source is not the first extracted one. In the literature, three methods have been presented that overcome this major problem.

In the first one [29] it is proposed to penalize the cost function ![]() or

or ![]() by adding to them a positive term that gives a measure of decorrelation between the current signal

by adding to them a positive term that gives a measure of decorrelation between the current signal ![]() and the previously extracted source. It is simple to show that, indeed, the global minimum is achieved if and only if the

and the previously extracted source. It is simple to show that, indeed, the global minimum is achieved if and only if the ![]() is an other source. However, this approach has been noticed to show poor performance. The reason is that the extended cost function, contrary to the original, has many local minima that do not correspond to separating solutions. The algorithms is known to be trapped in such local minima and, in this case, the provided solution is not an estimate of one of the remaining sources.

is an other source. However, this approach has been noticed to show poor performance. The reason is that the extended cost function, contrary to the original, has many local minima that do not correspond to separating solutions. The algorithms is known to be trapped in such local minima and, in this case, the provided solution is not an estimate of one of the remaining sources.

The second one is algebraic: the idea is to estimate the subspace associated with the first estimated source and to run the minimization of ![]() or

or ![]() on the orthogonal complement of the subspace in question: see [5].

on the orthogonal complement of the subspace in question: see [5].

The third is the most popular for source separation [30,31]: it consists in deflating the mixture by subtracting an estimation of the contribution of the extracted source and then to redo the minimization of ![]() or

or ![]() . Ideally, the “new” mixture should not involve the source that has been extracted and the minimization hence allows one to estimate another source. We provide some details.

. Ideally, the “new” mixture should not involve the source that has been extracted and the minimization hence allows one to estimate another source. We provide some details.

Thanks to the previous results, we may suppose that we have ![]() where

where ![]() is an unknown scaling. We have arbitrarily considered that the extracted source was the one numbered “1”: this has of course no impact on the generality. The contribution of the first source in the mixture

is an unknown scaling. We have arbitrarily considered that the extracted source was the one numbered “1”: this has of course no impact on the generality. The contribution of the first source in the mixture ![]() has the form

has the form ![]() where

where ![]() is the first column of the mixing matrix. We adopt a least square approach: the contribution of the first source is estimated as

is the first column of the mixing matrix. We adopt a least square approach: the contribution of the first source is estimated as ![]() where this vector is defined as the minimizer of

where this vector is defined as the minimizer of

Then the “deflated mixture” to be considered is

![]()

Ideally, the deflated mixture should not involve the first source. Hence running the Constant Modulus algorithm on this mixture should provide an estimate ![]() of another source—say

of another source—say ![]() . The deflation is done again: this time

. The deflation is done again: this time ![]() is an estimation of the contribution of the second source. The deflated mixture is

is an estimation of the contribution of the second source. The deflated mixture is

![]()

And so forth until all the source are estimated. Notice that, asymptotically (when ![]() ) the deflation procedure is convergent: in K steps the K sources are estimated.

) the deflation procedure is convergent: in K steps the K sources are estimated.

2.04.3.4.4 Improving the deflation

Though its inherent advantages (simplicity, convergence of the algorithm of extraction), the above approach is supposed to suffer from the K deflation steps. Indeed, the deflation is expected to increase, step after step, the noise level, impinging dramatically the extraction of the “last” source. This aspect has already been addressed and partially got round: we shortly address the re-initialization procedure introduced in [32].

Consider the extraction of the “second” source and apply the deflation technique. The source extraction algorithm is run on the deflated mixture and is likely to provide a spatial filter ![]() . We have, up to a scaling factor:

. We have, up to a scaling factor:

which provides the approximation:

![]()

where ![]() . We hence have computed a spatial filter g that is close to a separating filter w.r.t. the initial mixture. The idea is hence the following: run the algorithm of minimization on the initial mixture, taking g as an initial point. As g is close to a filter that is a local minimum of the function to minimize (see Propositions 6, 7), the computed spatial filter hence obtained after convergence is likely to separate

. We hence have computed a spatial filter g that is close to a separating filter w.r.t. the initial mixture. The idea is hence the following: run the algorithm of minimization on the initial mixture, taking g as an initial point. As g is close to a filter that is a local minimum of the function to minimize (see Propositions 6, 7), the computed spatial filter hence obtained after convergence is likely to separate ![]() from the initial mixture. This procedure can be iterated: at each step, the separation is processed on the initial

from the initial mixture. This procedure can be iterated: at each step, the separation is processed on the initial ![]() and not on a deflated mixtures. Though simple, this procedure considerably enhances the performance.

and not on a deflated mixtures. Though simple, this procedure considerably enhances the performance.

2.04.3.4.5 Extensions

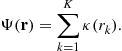

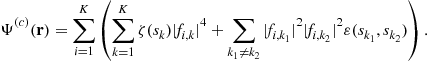

Many contributions in BSS consider such functions as

![]() (4.33)

(4.33)

over the unit sphere ![]() where

where ![]() is any continuous function on

is any continuous function on ![]() such that

such that ![]() and

and ![]() is strictly monotone over

is strictly monotone over ![]() and

and ![]() . The most common choices are

. The most common choices are ![]() and

and ![]() and

and ![]() . It quite simple to prove the following result:

. It quite simple to prove the following result:

2.04.3.5 Global BSS (stationary mixture)

In this section, we present global methods, i.e., methods that “invert” the system in one shot. Assuming that ![]() , it is possible to linearly transform the data such as in Section 2.04.3.3. The “new” data can be written as

, it is possible to linearly transform the data such as in Section 2.04.3.3. The “new” data can be written as

![]()

where U is a unitary ![]() matrix. A global BSS method hence aims at determining a unitary matrix

matrix. A global BSS method hence aims at determining a unitary matrix ![]() such that the components of

such that the components of

![]()

correspond to the sources up to modulus one scalings and a permutation. In this respect, we suggest to take profit of certain results of Section 2.04.3.4.

2.04.3.5.1 First result

Denote by ![]() the kth component of

the kth component of ![]() . Due to the pre-whitening, we have:

. Due to the pre-whitening, we have: ![]() , hence

, hence ![]() . This later can be seen as a function of the kth row of the matrix

. This later can be seen as a function of the kth row of the matrix ![]() . We have shown that

. We have shown that ![]() is minimum if

is minimum if ![]() corresponds to one of the sources up to a modulus one scaling, i.e., if the kth row of

corresponds to one of the sources up to a modulus one scaling, i.e., if the kth row of ![]() is separating, its non-zero component being located at an index corresponding to the sources that have the smallest Kurtosis. The idea is hence to form the function

is separating, its non-zero component being located at an index corresponding to the sources that have the smallest Kurtosis. The idea is hence to form the function

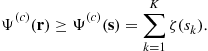

(4.34)

(4.34)

Obviously, we have ![]() . Conversely, this lower bound cannot be achieved in general: assume for instance that

. Conversely, this lower bound cannot be achieved in general: assume for instance that ![]() is reached once: say, the first source. The above lower bound is achieved only if

is reached once: say, the first source. The above lower bound is achieved only if ![]() is the matrix having non-zero components on the first column only. This of course violates the constraint that

is the matrix having non-zero components on the first column only. This of course violates the constraint that ![]() is unitary. We have the following tight result:

is unitary. We have the following tight result:

In practice, the function ![]() given by Eq. (4.34)cannot be computed. As the data are supposed to be complex-circular at the second-order, we have

given by Eq. (4.34)cannot be computed. As the data are supposed to be complex-circular at the second-order, we have

![]()

A consistent estimate of ![]() is hence

is hence

(4.37)

(4.37)

The minimization of this function is carried out over the space of unitary matrices. This can be done by using a Jacobi-like algorithm: unitary matrices are parametrized by means of the Given angles. The reader may find details in [2]. Notice that no results concerning the convergence of the algorithm can be said, since it has not been shown that the local minima of the “true” function ![]() are “good ones”, i.e., achieve the BSS.

are “good ones”, i.e., achieve the BSS.

2.04.3.5.2 Generalization: notion of contrast function

We introduce the function

(4.38)

(4.38)

where ![]() is a function to be specified. Rather than minimizing

is a function to be specified. Rather than minimizing ![]() , we address its maximization. If the maximum is attained when (and only when) BSS is achieved,

, we address its maximization. If the maximum is attained when (and only when) BSS is achieved, ![]() is called a contrast function. As seen previously, the choice

is called a contrast function. As seen previously, the choice ![]() makes

makes ![]() be a contrast function. We have, more generally:

be a contrast function. We have, more generally:

The standard choice for ![]() is

is ![]() . In this case, is enlightening to notice that maximizing

. In this case, is enlightening to notice that maximizing ![]() is equivalent to minimizing:

is equivalent to minimizing:

![]() (4.39)

(4.39)

which is clearly a measure of independence (up to the fourth-order).

2.04.3.5.3 A popular algorithm: JADE

If the indices ![]() are fixed, it may be noticed that the matrix

are fixed, it may be noticed that the matrix ![]() whose entry

whose entry ![]() is given by

is given by ![]() admits the factorization

admits the factorization

![]()

where ![]() is diagonal; the entry

is diagonal; the entry ![]() is

is ![]() . Otherwise stated, U diagonalizes the normal matrix

. Otherwise stated, U diagonalizes the normal matrix ![]() whatever the indices

whatever the indices ![]() . Introducing the

. Introducing the ![]() operator that sums all the entries of a matrix except the ones located on the main diagonal, this says that

operator that sums all the entries of a matrix except the ones located on the main diagonal, this says that

![]() (4.40)

(4.40)

is minimum, equal to zero, when ![]() essentially equals the identity matrix. Conversely, it can be shown that (4.40) can be written as (4.39). Hence a minimizer of the function given in (4.40) is a maximizer of

essentially equals the identity matrix. Conversely, it can be shown that (4.40) can be written as (4.39). Hence a minimizer of the function given in (4.40) is a maximizer of ![]() with

with ![]() . Now, Proposition 12 with the fact that, for any index

. Now, Proposition 12 with the fact that, for any index ![]() proves that the maximizers of

proves that the maximizers of ![]() are such that F is essentially equal to the identity matrix. This trick of algebra allows one to achieve the maximization of

are such that F is essentially equal to the identity matrix. This trick of algebra allows one to achieve the maximization of ![]() thanks to a joint diagonalization of a set of normal matrices. It has been proposed by Cardoso [4] The algorithm associated with this approach is called JADE. It is very popular since efficient algorithms of joint diagonalization are known [20].

thanks to a joint diagonalization of a set of normal matrices. It has been proposed by Cardoso [4] The algorithm associated with this approach is called JADE. It is very popular since efficient algorithms of joint diagonalization are known [20].

Notice that the pencil of matrices is a pencil of normal matrices hence the matrix U is searched in the set of unitary matrices. Recently, Yeredor et al. [34] suggest to relax the unitary constraint. These authors even suggest to skip the pre-whitening of the data: they argue that the pre-whitening may limit the attainable performance as Cardoso pointed it out in [35]. With no whitening of the data, the matrices of cumulants are still jointly diagonalized; if the channel matrix H is square, the columns of this latter form a basis for the diagonalization. The converse is not clear on the one hand and, on the other hand, the case of tall matrices H remains to be addressed.

2.04.3.6 Generalizations

We have presented ad’hoc BSS methods, whose theoretical foundations are solid and whose good performance is well-known. In the literature, however, many other methods can be found. They stem from considerations of information theory. We provide some key ideas and related bibliographical references.

After pre-whitening, we recall that the received data is ![]() (we have dropped the time index): on the one hand, U is unitary and on the other hand, the component of the random variables in s are mutually independent. The idea of independent Component Analysis (ICA) is hence to exhibit matrices V such that the components of