SAR Interferometry and Tomography: Theory and Applications

Gianfranco Fornaro* and Vito Pascazio†, ‡, *Istituto per il Rilevamento Elettromagnetico Ambientale (IREA), Consiglio Nazionale delle Ricerche (CNR), Napoli, Italy, †Dipartimento di Ingegneria, Università degli Studi di Napoli Parthenope, Napoli, Italy, ‡Laboratorio Nazionale di Comunicazioni Multimediali, CNIT Complesso Universitario di Monte S. Angelo, Edificio Centri Comuni, Napoli, Italy

Abstract

Synthetic Aperture Radar (SAR) is among the most widely used remote sensing systems for Earth observation and has wide application in security in both marine and terrestrial environments. The last decade has been a period of extraordinary development of SAR systems, with an impressive growth in the number of launches and operational deployments of spaceborne SAR remote sensing systems. The advent of several very high resolution spaceborne SARs, such as TerraSAR-X/TanDEM-X and the COSMO-SkyMed constellation, is enabling an extensive range of new applications. Very fine details of the Earth’s surface are provided on a regular basis by data acquired and processed by those sensors. A significant contribution to the desire to field such systems has been the development of coherent processing techniques, in particular interferometry, that have dominated SAR applications since their first demonstration in the late 70s and early 80s. Evidence of the importance and versatility of radar interferometry is its application to such diverse areas as the monitoring of volcanoes, earthquakes, landslides, ice sheet motion, and anthropogenic sources such as ground pumping of water and oil. The development of innovative processing techniques, like permanent scatterer interferometry, polarimetric interferometry, and tomography, has expanded the number of applications and data sets that can be successfully exploited. For example, permanent scatterer interferometry and tomography have revolutionized what can be done by SARs in urban environments. In this article we aim to provide a description of some of the major developments in SAR interferometry and SAR tomography, with particular emphasis on the digital signal processing aspects. We illustrate SAR tomography using urban and infrastructure applications, although it has other applications, such as in forest and ice structure.
Examples of applications of interferometry and tomography are provided to demonstrate the practical usefulness of the technological advances in both SAR systems and data processing. With respect to other published tutorials on interferometry, we focus on the development of the multibaseline/multipass coherent processing approach from a signal processing perspective, with the aim of providing readers with a comprehensive description of the topics, while deferring deeper investigations to the reference bibliography.

Keywords

Synthetic Aperture Radars (SAR); Radar imaging; SAR Interferometry (InSAR); SAR Tomography (TomoSAR); Differential Interferometry (DinSAR); 3D-SAR imaging; 4D-SAR imaging

Acknowledgments

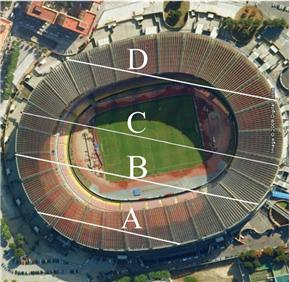

The Authors wish to thank the anonymous Reviewers for their comments, which helped to improve the quality of the paper, and Prof. Gilda Schirinzi, Prof. Alessandra Budillon, Prof. Giampaolo Ferraioli, and Dr. Fabio Baselice, of the Università di Napoli Parthenope, Italy, and Dr. Diego Reale of IREA-CNR, Napoli, Italy, for the valuable discussions about the main topics of the paper. Moreover, the Authors wish to thank Prof. Richard Bamler of DLR and Technical University of Munich, Germany, Dr. Michael Eineder of DLR, Germany, and Dr. Alessandro Ferretti of Telerilevamento Europa (TRE), Italy, for providing some of the images included in this paper. The Authors also wish to thank Prof. Fabrizio Lombardini of the University of Pisa for providing the Capon results relevant to the San Paolo Stadium data set, and Dr. Nicola D’Agostino of the Istituto Nazionale di Geofisica e Vulcanologia for providing the GPS data used in Figure 20.25.

2.20.1 Introduction

During the 19th century, the theory of electromagnetic fields became a firmly established science: Maxwell’s equations accurately described the propagation of the fields, and Marconi’s wireless experiments demonstrated the possibility of wireless communication at large distances. Even at that early time, it was evident that electromagnetic waves could be used not only for communication but also to obtain information, or better to “sense” the environment and the objects in it without being in contact with them.

Remote sensing is today well established and intensively used for acquiring information about the Earth’s surface [1]: among the most used remote sensing systems, active microwave sensors, and particularly Synthetic Aperture Radar (SAR), have gained increasing interest from both a scientific and an industrial viewpoint. This success is a consequence of the capability of the sensor to operate independently of an external illumination source (day and night) and in almost any meteorological condition.

2.20.1.1 Microwave high resolution imaging

Active sensors make use of radars, typically installed on spacecraft, on aircraft, or even on the ground. They transmit a coherent (i.e., well controlled at the level of a single oscillation) signal and record the echoes scattered back to the sensor from the observed area. Accordingly, they are independent of any external illumination source: this peculiarity, together with the fact that they work at wavelengths that are, unlike those of optical and infrared sensors, almost immune to the presence of clouds and fog, allows the system to operate day and night and also in adverse weather conditions.

Modern SAR sensors transmit signals whose bandwidth is of the order of tens to hundreds of MHz, thus leading to a spatial resolution along the range direction (typically called the across-track direction) of the order of meters or fractions of a meter.

For comparable optical aperture and antenna size, the spatial resolution along the flight track of images acquired by microwave sensors would be several orders of magnitude worse than that of optical images. This drawback is, however, overcome by the possibility of synthesizing a very large antenna (of the order of a few kilometers) by moving a much smaller real antenna along a straight trajectory corresponding to the platform flight track. This possibility, first postulated by Wiley [2] with the Doppler beam sharpening concept, is a direct consequence of the coherent nature of the system.

The operation of synthesizing a large antenna is today typically carried out off-line, after the downlink to a ground station, via a digital processing operation, usually referred to as SAR focusing, that coherently combines on a 2D domain the echoes received by the radar at different positions. The obtained images are characterized by a resolution along the direction of array synthesis (typically referred to as the along-track direction) of the order of the physical antenna length, independently of the wavelength and the height of the platform. Depending on the operative mode (scan, strip, and spot mode), and because the platform motion can be disturbed by turbulence, as in the airborne case, this operation can in some cases be more problematic.

SAR images are complex entities where the intensity basically measures the energy backscattered by the ground targets toward the sensor, which depends on the geometric (shape, roughness, and slope) and on physical (conductivity and permittivity) properties of the observed scene.

SAR sensors provide information about the observed scene complementary to that provided by optical systems. SAR images are nowadays used in many areas of interest: In glaciology they are used for glaciers monitoring and snow mapping, in agriculture for crop classification and soil moisture monitoring, in forestry for biomass estimation, etc. They are also used in environment monitoring for the detection of oil spills, flooding, as well as to monitor the urban growth or moving targets.

The technique that has probably opened the widest range of applications is SAR Interferometry [3,4].

As in any coherent electromagnetic system, the phase information is related to the travelled path, that is, the target-to-sensor distance (range). Radar measurements therefore embed distance information with extremely high accuracy, on the order of a fraction of the wavelength; due to the randomness of the scattering mechanism, however, this information can be extracted only as a relative measurement between different images. SAR Interferometry (InSAR) is a technique that, by exploiting at least two SAR images acquired from slightly different angles, allows retrieving the topography of the observed scene. A single SAR image measures the backscattering property of the scene only on a 2D domain, i.e., by performing a projection onto the plane containing the flight direction and the radar line of sight. Similarly to the human visual system, height sensitivity can be achieved by combining two images of the same area acquired from two slightly different positions. The key principle of SAR interferometry is the use of the phase difference between SAR images for the accurate measurement of the distances of a target from two sensors displaced in location in order to create a parallax.

The two images can be acquired simultaneously if two antennas are present at the same time on the platform (single-pass interferometry) or through different passes of the same antenna (repeat-pass interferometry). In the latter case, changes of the scene backscattering properties and variations of the phase delay contribution from the atmosphere may strongly impair the accuracy of the results. The accuracy of the topography estimation depends on the component of the antenna separation vector orthogonal to the line of sight, commonly referred to as the (spatial) baseline: for this reason this technique is also referred to as across-track interferometry.

As an alternative to topographic mapping, when the two antennas are present on the same platform and are separated along the flight direction, they acquire images of the same scene with a time lag of a few milliseconds. This is the case of along-track interferometry, which allows monitoring fast movements of targets on the ground. Applications include, for example, the estimation of ocean currents, as well as moving target detection and velocity estimation [5].

An interesting extension of across- and along-track InSAR is Differential SAR Interferometry (DInSAR): by exploiting the phase difference of images acquired at different times (epochs), typically separated by some days, it allows accurate monitoring of slow displacements over the epoch sequence. Differential interferometric data can be acquired by radar observations separated in time either from a single radar on one platform (e.g., ERS-1, JERS-1, ENVISAT, TerraSAR-X) or from multiple radars on different platforms, provided the radars have similar operating and viewing parameters (e.g., the COSMO-SkyMed constellation). Since the precision of radar in estimating distance is on the order of a fraction of the wavelength, DInSAR can estimate movements with sub-centimetric accuracy using L-, C-, or X-band radars.
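
As a rough illustration of the sub-centimetric sensitivity mentioned above, the following sketch converts a differential interferometric phase into a line-of-sight displacement through the standard two-way relation d = λΔφ/(4π). The function name, the sign convention, the nominal band wavelengths, and the 10° phase uncertainty are assumptions made for the example; they are not taken from a specific mission or from the chapter.

```python
import math

# Nominal wavelengths in meters (assumed, typical values for each band).
NOMINAL_WAVELENGTH_M = {"L": 0.236, "C": 0.0566, "X": 0.031}


def los_displacement(delta_phase_rad, wavelength_m):
    """Line-of-sight displacement for a differential phase (two-way path).

    A full 2*pi phase cycle corresponds to half a wavelength of motion.
    """
    return wavelength_m * delta_phase_rad / (4.0 * math.pi)


if __name__ == "__main__":
    phase_error = math.radians(10.0)  # hypothetical phase uncertainty
    for band, wavelength in NOMINAL_WAVELENGTH_M.items():
        fringe_mm = 1e3 * los_displacement(2.0 * math.pi, wavelength)
        error_mm = 1e3 * los_displacement(phase_error, wavelength)
        print(f"{band}-band: one fringe = {fringe_mm:.1f} mm, "
              f"10 deg of phase = {error_mm:.2f} mm")
```

Under these assumptions, a phase uncertainty of a few degrees maps to a displacement uncertainty well below one centimeter in all three bands, while the longer wavelengths tolerate larger motions before the phase wraps.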

Major applications of this technique are in the natural hazard and security areas. Moreover, by exploiting archives of past images, multipass techniques are also extremely useful for retrospective monitoring.

Interferometry applications have dramatically increased the use of microwave remote sensing for environmental monitoring. This is also testified by the growing interest of the major international space agencies in the development and launch of spaceborne SAR sensors. The twin satellites ERS-1 and ERS-2 [6] of the European Space Agency (ESA), operative since 1992 and 1996, respectively, each with a revisit time of 35 days, were characterized by the possibility of acquiring pairs of tandem images, i.e., interferometric images separated by only one day. From the nineties to the first decade of the 2000s, the ERS sensors were the very first systems used for the operational demonstration and routine application of interferometry. Their acquisitions have been deeply exploited for years to develop most of the interferometric processing algorithms currently used and to demonstrate the potential of SAR Interferometry in several natural risk areas. Recently, the Italian COSMO-SkyMed [7] and German TerraSAR-X [8] missions improved the quality of SAR products by providing images with spatial resolution up to one meter. Together with its twin satellite TanDEM-X, TerraSAR-X is going to provide the most accurate Digital Elevation Model (DEM), i.e., the topography, of the Earth on a global scale, with a relative accuracy of 2 m for slopes lower than 20° and 4 m for higher slopes, on a spatial grid of 12 m. On the other hand, the Italian COSMO-SkyMed mission [7] is, worldwide, the only constellation of more than two SAR sensors also exploited for civilian applications. It is composed of four medium-size satellites, each equipped with an X-band high-resolution SAR system, allowing images of the same area to be acquired every 4 days on average, thus both reducing the effects of decorrelation and allowing more frequent imaging, which is useful for interferometric applications in cases of emergency.

Polarimetry [9–16] and Polarimetric SAR interferometry [17,18] are techniques that use multi-polarization channels to extract further information on the scattering mechanism. Polarimetric information allows separating different scattering mechanisms. Whereas SAR polarimetry is a technique that uses single-antenna data, Polarimetric SAR interferometry uses data acquired by two antennas. The former is widely used in the field of classification; the latter allows generating interferograms corresponding to different scattering mechanisms and finds application in forest height retrieval and biomass estimation.

The advances in SAR hardware have made it possible to reach very high imaging resolutions at microwaves, on the order of a meter, and have in parallel stimulated the development of advanced processing techniques able to extract from the data the highest possible information content.

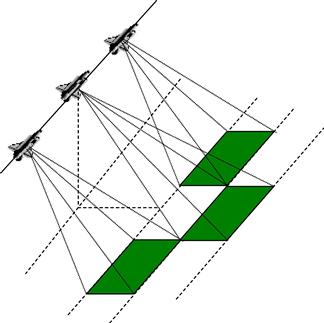

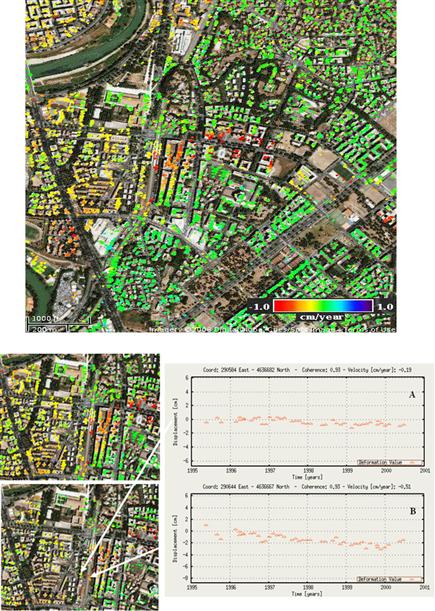

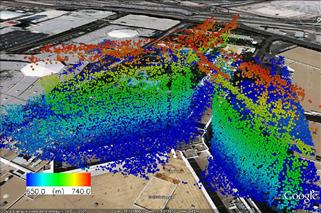

One of the most important and recent innovations in SAR processing is associated with the extension of the imaging process from a 2D domain to a multi-dimensional domain. The so-called SAR Tomography has been among the first examples giving SAR the ability to reconstruct images of the backscattering property of the scene also along the direction (elevation) orthogonal to the two classical dimensions (azimuth and range). The key aspect of this technique is the possibility of synthesizing, similarly to what is done along the flight direction (azimuth), an array also along the “height” direction, to sharpen and steer the beam in such a way as to measure the backscattering characteristics of the scene along the elevation direction and hence to generate full 3D images.

SAR Tomography allows vertical profiling (3D imaging) of the backscattering to detect targets that interfere in the same pixel of a single SAR image and even, with the extension of the imaging properties to the time direction (4D imaging), monitoring their individual deformation. Besides the application to distributed scenarios such as forests, where the scattering is distributed along the height, the tomographic technique also provides significant advances in the imaging and monitoring of areas characterized by a high density of scatterers, such as urban areas, opening the possibility of achieving dense imaging and monitoring of single buildings and individual structures from space, for the first time comparable to what is obtainable with in situ systems like laser scanners [19,20].

Polarimetric SAR tomography [21–24] takes benefits of both polarimetry and tomography: by accessing the multibaseline information on different polarization channels, it allows retrieving scattering profiles along the elevation direction associated with different scattering mechanisms such as single bounce, double bounce and volume scattering. This work concentrates on the development of SAR interferometry (including multipass Differential SAR Interferometry) and Tomography for 3D reconstruction and target deformation monitoring.

2.20.2 Basic concepts in SAR imaging and SAR interferometry

2.20.2.1 High resolution image formation

Among the several parameters characterizing an image, resolution certainly plays a major role. In the radar case the resolution along the range coordinate depends on the system bandwidth [25,26]. Large bandwidths are obtained, with simplified (i.e., low peak power) hardware, by transmitting long-duration linear frequency modulated (chirp) pulses which are, after echo reception, compressed (typically on the ground) via correlation techniques: this operation is commonly referred to as range pulse compression or range focusing (see Figure 20.1).

The transmitted chirp pulse has, in complex baseband form, the following expression:

$$s(t) = \mathrm{rect}\left[\frac{t}{\tau}\right]\exp\left(j\pi\alpha t^{2}\right) \tag{20.1}$$

wherein $\mathrm{rect}[\cdot]$ is the window function, $\tau$ is the pulse duration, and $\alpha$ is the chirp rate, so that the pulse spans a bandwidth $B = \alpha\tau$. The correlation of the response of a target at range $r$ with the transmitted pulse replica provides the expression of the range impulse response function (IRF), also known as range Point Spread Function (PSF):

$$g(r' - r) \simeq \mathrm{sinc}\left[\frac{2B}{c}\left(r' - r\right)\right] \tag{20.2}$$

with $r'$ being the range coordinate of the image, $\mathrm{sinc}(x) = \sin(\pi x)/(\pi x)$, $c$ being the speed of light, and $B$ the bandwidth of the transmitted pulse. The (3 dB) range resolution is numerically given by [27]

$$\Delta r = \frac{c}{2B} \tag{20.3}$$
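
The range compression step can be checked numerically. The sketch below, which is only an illustration and not the processing chain of any specific sensor, builds a sampled complex baseband chirp, correlates it with its own replica, and converts the 3 dB width of the compressed peak into a slant range distance to compare it with c/(2B). The function names, the oversampling factor, and the 100 MHz, 1 µs pulse are assumptions chosen for the example.

```python
import cmath
import math

C = 299_792_458.0  # speed of light in m/s


def chirp(bandwidth_hz, duration_s, fs_hz):
    """Sampled complex baseband linear FM pulse exp(j*pi*alpha*t^2)."""
    alpha = bandwidth_hz / duration_s  # chirp rate in Hz/s
    n = int(round(duration_s * fs_hz))
    samples = []
    for k in range(n):
        t = (k - (n - 1) / 2.0) / fs_hz  # time axis centered on the pulse
        samples.append(cmath.exp(1j * math.pi * alpha * t * t))
    return samples


def compress(pulse, max_lag):
    """Magnitude of the correlation of the pulse with its replica,
    evaluated for lags from -max_lag to +max_lag samples."""
    n = len(pulse)
    profile = []
    for lag in range(-max_lag, max_lag + 1):
        acc = 0j
        for k in range(max(0, -lag), min(n, n - lag)):
            acc += pulse[k + lag] * pulse[k].conjugate()
        profile.append(abs(acc))
    return profile


def measured_range_resolution(bandwidth_hz, duration_s, oversampling=8):
    """3 dB width of the compressed pulse, expressed in slant range."""
    fs = oversampling * bandwidth_hz
    pulse = chirp(bandwidth_hz, duration_s, fs)
    profile = compress(pulse, max_lag=4 * oversampling)
    peak = max(profile)
    samples_above = sum(1 for v in profile if v >= peak / math.sqrt(2.0))
    width_s = samples_above / fs  # quantized to the sampling step
    return C * width_s / 2.0      # two-way delay converted to range


if __name__ == "__main__":
    bandwidth, duration = 100e6, 1e-6  # hypothetical pulse parameters
    print("c/(2B)   =", C / (2.0 * bandwidth), "m")
    print("measured =", measured_range_resolution(bandwidth, duration), "m")
```

The measured value is quantized by the sampling step and is expected to come out slightly below c/(2B), since the 3 dB width of a sinc main lobe is a little narrower than its first-null distance.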

Such a resolution value is also referred to as slant range resolution to highlight that it refers to the line-of-sight (LOS) direction. Scaling factors derived by standard trigonometry should be applied to achieve the resolution along the main scene direction, for instance along the direction corresponding to the projection of range onto the local cartographic reference system usually referred to as ground range resolution:

$$\Delta r_g = \frac{\Delta r}{\sin\theta_i} \tag{20.4}$$

where $\theta_i$ is the so-called incident angle, defined as the angle between the radar LOS and the local normal to the surface at the point of reflection on the ground (see Figure 20.2), which therefore depends on the terrain slope $\beta$. The ground range resolution is, of course, coarser than the slant range resolution.

Figure 20.2 SAR range resolution. $\Delta r$ is the slant range resolution, $\Delta r_g$ is the ground range resolution, $\theta$ is the look angle, $\theta_i$ is the incidence angle, and $\beta$ is the local terrain slope. In absence of topography ($\beta = 0$), it results that $\theta_i = \theta$.

Note that in the absence of terrain slope, the incidence angle is equal to the angle $\theta$ (known as look angle and defined with respect to the nadir direction) only when the Earth curvature can be neglected, as in the case of airborne sensors operating at low altitude.

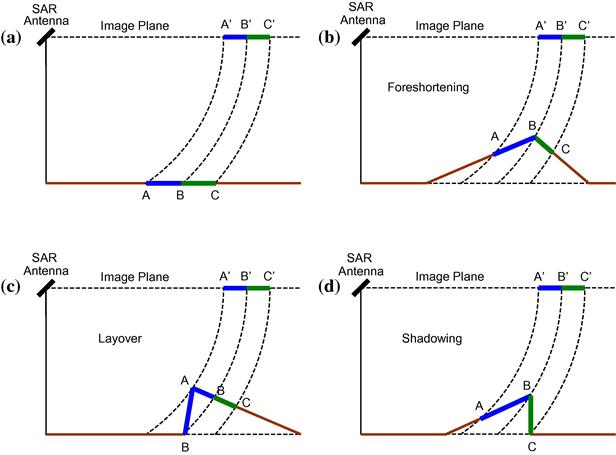

In the case of flat ground, and if the SAR antenna beamwidth in the range direction is not too large, as usually occurs, for instance, for new generation X-band SAR sensors, the ground resolution is almost constant along the footprint. In the case of non-flat ground, instead, as shown in Figure 20.3, the ground resolution can change significantly as an effect of topography, giving rise to the well-known effects of foreshortening, layover, and shadowing. Under foreshortening conditions, different resolution cells can contain contributions from ground areas of very different size (see Figure 20.3b). Layover lies beyond the limit case just described: points that are more distant on the ground appear closer to the SAR sensor and are therefore mapped erroneously in the SAR image (see Figure 20.3c). Such an effect is very common in mountainous areas with steep slopes and in urban areas [4]. The shadowing effect occurs when ground areas are masked by reliefs, as in Figure 20.3d. In this last case, a slant range resolution cell does not map any ground area (the ground area BC in Figure 20.3d is not seen from the SAR antenna). As a result of these geometrical distortions, SAR images of mountainous and urban areas can look very different from optical images.

Figure 20.3 SAR distortion effects: (a) normal conditions, (b) foreshortening, (c) layover, and (d) shadowing.

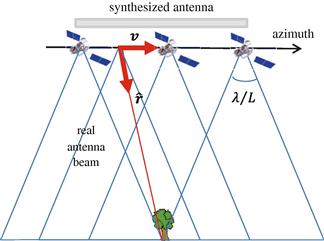
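
The dependence of the ground range resolution and of the distortion regimes on the terrain slope can be sketched with a few lines of code. The example below assumes a flat-Earth geometry, in which the local incidence angle is the look angle minus the terrain slope (slope taken positive when the terrain is tilted toward the radar); the function names and the threshold conventions are assumptions introduced for illustration only.

```python
import math


def local_incidence_deg(look_deg, slope_deg):
    """Local incidence angle under a flat-Earth assumption.

    slope_deg is positive for terrain tilted toward the radar.
    """
    return look_deg - slope_deg


def classify_geometry(look_deg, slope_deg):
    """Label the imaging regime of a terrain facet."""
    if slope_deg >= look_deg:
        return "layover"         # facet steeper than the look angle
    if slope_deg <= look_deg - 90.0:
        return "shadow"          # back slope hidden from the radar
    if slope_deg > 0.0:
        return "foreshortening"  # facet compressed in slant range
    return "normal"


def ground_range_resolution(slant_res_m, look_deg, slope_deg=0.0):
    """Ground range resolution as slant resolution over sin(local incidence)."""
    if classify_geometry(look_deg, slope_deg) in ("layover", "shadow"):
        raise ValueError("ground range resolution undefined in layover or shadow")
    incidence = math.radians(local_incidence_deg(look_deg, slope_deg))
    return slant_res_m / math.sin(incidence)


if __name__ == "__main__":
    look = 23.0  # hypothetical look angle in degrees
    for slope in (-70.0, -10.0, 0.0, 15.0, 30.0):
        regime = classify_geometry(look, slope)
        if regime in ("layover", "shadow"):
            print(f"slope {slope:6.1f} deg: {regime}")
        else:
            res = ground_range_resolution(1.5, look, slope)
            print(f"slope {slope:6.1f} deg: {regime}, ground res = {res:.2f} m")
```

With a steep look angle, even moderate slopes facing the radar fall into the layover regime, which is consistent with the frequent occurrence of layover in mountainous and urban scenes.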

In the azimuth direction the focusing operation is necessary to synthesize a long antenna with higher resolution capabilities, that is for achieving the beam sharpening.

With reference to Figure 20.4, where the system imaging geometry is represented in the flight direction, the system “senses” the scene by transmitting pulses at regular time instants, regulated by the pulse repetition frequency. The echoes collected at each position may be coherently processed in such a way as to synthesize (digitally) an antenna whose dimension is equal to the footprint ($X$) of the real antenna [25,28]:

$$X = \frac{\lambda}{L}\,r \tag{20.5}$$

where $\lambda$ is the wavelength, $L$ is the azimuth length of the real antenna, and $r$ is the range of the target. Note that $\lambda/L$ is the angular aperture of the real SAR antenna in the azimuth direction. The final resolution of the image, provided by the synthetic antenna, is [25]:

$$\Delta x = \frac{\lambda}{2X}\,r = \frac{L}{2} \tag{20.6}$$

where the resolution gain factor 2 in the first equality is associated with the capability of the array to transmit and receive the radiation from each position of the real antenna during the synthetic antenna formation.

Figure 20.4 System geometry in the azimuth direction. The movement of the platform allows a larger antenna to be synthesized, thus sharpening the beam of the real antenna.
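
To give a feeling for the numbers involved in Eqs. (20.5) and (20.6), the following sketch computes the synthetic antenna length and the resulting azimuth resolution. The parameter values (a C-band wavelength, a 10 m antenna, and an 850 km range) are hypothetical figures chosen as plausible for a spaceborne sensor, and the function names are introduced only for this example.

```python
def synthetic_aperture_length(wavelength_m, antenna_length_m, slant_range_m):
    """Azimuth footprint of the real antenna, i.e., the synthetic antenna length."""
    return wavelength_m * slant_range_m / antenna_length_m


def azimuth_resolution(wavelength_m, slant_range_m, aperture_length_m):
    """Resolution of an aperture used in transmit and receive (factor 2)."""
    return wavelength_m * slant_range_m / (2.0 * aperture_length_m)


if __name__ == "__main__":
    wavelength, antenna, slant_range = 0.0566, 10.0, 850e3  # hypothetical values
    aperture = synthetic_aperture_length(wavelength, antenna, slant_range)
    print("real-aperture footprint / synthetic length [m]:", aperture)
    print("synthetic-aperture azimuth resolution [m]:",
          azimuth_resolution(wavelength, slant_range, aperture))
```

Under these assumptions the real antenna footprint is several kilometers wide, whereas the focused resolution is half the physical antenna length, independently of wavelength and range.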

The time interval in which the scatterer is illuminated is referred to as integration time: for a standard operating mode, as that illustrated in Figure 20.4 (referred to as stripmap mode), it is trivially given by the ratio between the real antenna footprint and the platform velocity (v).

A dual approach to the computation of the azimuth resolution of the focused image is provided by the so-called Doppler analysis, which states that when either the transmitter or the receiver is subject to a uniform motion, the received radiation is subject to a frequency shift (called Doppler shift) equal, for the two-way radar path, to $f_D = (2/\lambda)\,\mathbf{v}\cdot\hat{\mathbf{r}}$, with $\mathbf{v}$ being the velocity vector (in our case of the platform) and $\hat{\mathbf{r}}$ being the unit vector of the received radiation (in our case the direction locating the scatterer from the platform). It is therefore clear that, during the integration interval, the direction of the echo from the target sweeps, with respect to broadside, an angular interval from $-\lambda/(2L)$ to $\lambda/(2L)$, which corresponds to a Doppler frequency interval from $-v/L$ to $v/L$. Accordingly, the Doppler bandwidth amounts to:

$$B_D = \frac{2v}{L} \tag{20.7}$$

Such a bandwidth is able to provide pulses with a time duration equal to $L/(2v)$, which corresponds to a spatial extent of about $L/2$. Unfortunately, the relative motion between the sensor and the target also introduces linear (phase) distortions as well as motion through resolution cells. Therefore, to provide short-duration pulses, the phase distortions affecting the available bandwidth must be compensated at the azimuth focusing level with the use of filters that are intrinsically 2D and, for rectilinear tracks, space variant only with range. The SAR focusing topic is beyond the scope of this work; readers can refer to [25,28].
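
The Doppler argument can be verified numerically by evaluating the two-way Doppler shift of a point target at the two edges of the real antenna footprint. The sketch below is only illustrative: it assumes a rectilinear track, a target at along-track position zero, and hypothetical spaceborne parameters; the function names are not taken from the chapter.

```python
import math


def doppler_shift(x_platform_m, wavelength_m, velocity_mps, closest_range_m):
    """Two-way Doppler shift (2/lambda) * v . r_hat for a target at
    along-track position 0 seen from a platform at x_platform_m."""
    slant = math.hypot(closest_range_m, x_platform_m)
    # Along-track component of the unit vector pointing from platform to target.
    r_hat_along = -x_platform_m / slant
    return 2.0 * velocity_mps * r_hat_along / wavelength_m


def doppler_bandwidth(wavelength_m, velocity_mps, closest_range_m, antenna_length_m):
    """Doppler excursion while the target crosses the azimuth footprint."""
    footprint = wavelength_m * closest_range_m / antenna_length_m  # Eq. (20.5)
    f_enter = doppler_shift(-footprint / 2.0, wavelength_m, velocity_mps, closest_range_m)
    f_exit = doppler_shift(+footprint / 2.0, wavelength_m, velocity_mps, closest_range_m)
    return f_enter - f_exit


if __name__ == "__main__":
    wavelength, velocity, slant_range, antenna = 0.0566, 7500.0, 850e3, 10.0
    print("numerical Doppler bandwidth [Hz]:",
          doppler_bandwidth(wavelength, velocity, slant_range, antenna))
    print("2v/L [Hz]:", 2.0 * velocity / antenna)
```

The two values agree up to the small-angle approximation implicit in the closed-form expression, and the Doppler shift is positive while the platform approaches the target and negative while it recedes.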

2.20.2.2 Operational modes

The classical operational mode of a SAR system considers the antenna pointing with a fixed offset from the flight direction, not necessarily orthogonal to it (i.e., broadside): this is referred to as Stripmap mode to highlight the fact that the scene is illuminated along a strip. The stripmap imaging mode geometry is depicted in Figures 20.4 and 20.5a.

In this way the integration time for forming the image of a target is, as discussed in the previous section, limited by the ratio between the real antenna azimuth footprint dimension and the platform velocity. This poses a limitation on the maximum achievable resolution. Another limitation of this imaging mode is associated with the coverage of the imaged strip in the slant range direction, which is in this case provided by the ground range extent of the real antenna footprint [25]. Slant range coverage and imaging resolution can be traded off by steering the beam during the acquisition. Besides the classical stripmap mode, the two best-known operational modes are the spotlight and scan modes.

In the Spotlight mode the antenna beam is steered backward with respect to the antenna flight direction in such a way to collect data from a fixed area on ground on a longer (compared with the classical stripmap mode) flight segment [26,29,30]. The spotlight imaging mode geometry is depicted in Figure 20.5b.

In particular, whereas in the stripmap mode (Figure 20.5) the beam orientation is fixed, in the spotlight mode what is fixed is the illuminated area: this spotlight configuration is usually referred to as staring spotlight mode. A configuration that allows obtaining illumination intervals, and hence resolutions, between those of the stripmap and staring spotlight modes is the so-called sliding spotlight, in which the angular beam steering rate is reduced in such a way as to allow the footprint to slide on the ground [31,32]. With respect to the staring spotlight, the resolution loss is compensated by an increase of the azimuth coverage.

A mode complementary to the spotlight is the ScanSAR mode [33], whose geometry is shown in Figure 20.6. In this case the antenna is steered in the range direction to increase the range coverage. During the aperture synthesis in azimuth, the beam is regularly steered in range to sweep among a fixed number (typically 2–4) of adjacent range subswaths. The sweep mechanism is carried out in such a way as to avoid gaps along the azimuth direction over the subswaths, i.e., to avoid the presence of areas that are not illuminated in the azimuth direction. The data collected in an illumination sub-interval for a generic subswath is called a burst. In the stripmap case each target is imaged by the whole antenna beam and therefore the radiometric accuracy is preserved, that is, homogeneous areas are imaged with a constant (average) backscattering level. In the ScanSAR case, as the target is seen only by one or a few small portions of the azimuth beam during the burst acquisition, not only is the azimuth resolution reduced (this is the price for the increase in range coverage), but different areas can also be seen by different portions of the azimuth antenna beam. The latter effect produces radiometric losses seen as stripes along the azimuth (scalloping): homogeneous areas are imaged with a variable backscattering level. A mitigation of the scalloping problem is achieved by adopting the TOPS (literally the reverse of SPOT) acquisition mode [34]. In this case, in addition to the range steering, a steering in azimuth with a forward rotation (i.e., opposite to that of the SPOT mode) is carried out to allow the azimuth beam to run forward, faster than the platform, in such a way that almost all scatterers in azimuth are imaged by the highest possible beam portion.

The azimuth resolution for the different modes can be evaluated by referring to the Doppler bandwidth, evaluated in (20.7), which can be written as the product of the Doppler rate $f_R$ and the integration time $T$:

$$B_D = f_R\,T \tag{20.8}$$

Equation (20.8) follows directly from the fact that the signal collected along the azimuth direction (slow time) is, to a good approximation, a linear frequency modulated pulse; the associated Doppler rate $f_R$ equals, in absolute value:

$$f_R = \frac{2v^{2}}{\lambda r} \tag{20.9}$$

In the Stripmap case the integration time is fixed by the real antenna beamwidth:

$$T = \frac{X}{v} = \frac{\lambda r}{L v} \tag{20.10}$$

By substituting Eqs. (20.10) and (20.9) in Eq. (20.8), Eq. (20.7) is obtained. In the ScanSAR and Spotlight cases, due to the range or azimuth antenna sweep, the integration time is set to a value that is, respectively, lower and higher than the limit in (20.10), in order to select the desired azimuth resolution.
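
The trade-off between integration time and azimuth resolution across the acquisition modes can be sketched as follows. The code assumes the usual expressions for the magnitude of the Doppler rate, 2v²/(λr), and for the stripmap integration time, λr/(Lv), and takes the azimuth resolution as the platform velocity divided by the Doppler bandwidth. The scaling factors used for the ScanSAR-like and spotlight-like cases (one quarter and three times the stripmap integration time) are purely hypothetical, as are the function names and the sensor parameters.

```python
def doppler_rate(velocity_mps, wavelength_m, slant_range_m):
    """Magnitude of the azimuth Doppler rate in Hz/s."""
    return 2.0 * velocity_mps ** 2 / (wavelength_m * slant_range_m)


def stripmap_integration_time(wavelength_m, slant_range_m, antenna_length_m, velocity_mps):
    """Time needed by the real antenna footprint to cross a target."""
    return wavelength_m * slant_range_m / (antenna_length_m * velocity_mps)


def azimuth_resolution_for_time(velocity_mps, wavelength_m, slant_range_m, integration_time_s):
    """Azimuth resolution v / B_D, with B_D = Doppler rate * integration time."""
    bandwidth = doppler_rate(velocity_mps, wavelength_m, slant_range_m) * integration_time_s
    return velocity_mps / bandwidth


if __name__ == "__main__":
    v, wavelength, r, antenna = 7500.0, 0.0566, 850e3, 10.0  # hypothetical values
    t_strip = stripmap_integration_time(wavelength, r, antenna, v)
    for label, t in (("ScanSAR-like (T/4)", t_strip / 4.0),
                     ("stripmap (T)", t_strip),
                     ("spotlight-like (3T)", 3.0 * t_strip)):
        res = azimuth_resolution_for_time(v, wavelength, r, t)
        print(f"{label}: integration time {t:.3f} s, resolution {res:.2f} m")
```

In the stripmap case the result reduces to half the antenna length, while shorter or longer integration times degrade or improve the resolution proportionally.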

It is also important to point out that, while in the Stripmap case the spectral properties of the received signal are invariant along the azimuth, in the case of ScanSAR and Spotlight acquisitions, due to the antenna steering, the spectral properties show an azimuth space variance. For instance, in the spotlight mode, and particularly in the sliding spotlight configuration, due to the difference between the platform and footprint velocities, the angular view of the system to the scene is azimuth dependent and, accordingly, the received Doppler bandwidth is progressively translated from positive to negative frequencies. All these aspects must be accounted for during post-processing, for instance in image resampling and/or image filtering, as in the case of SAR interferometry [35].

2.20.3 SAR interferometry

A SAR image is a 2D complex signal, resulting from the coherent processing of raw data acquired by the synthetic antenna [26]. The amplitude of a SAR image represents the reflectivity of the ground area under view, while the phase is randomly distributed [26,36]. In addition to the electromagnetic scattering properties of the targets, the phase also embeds very important geometrical measurements.

Such information can be extracted by exploiting two [37–39] (or more than two [40–42]) SAR complex images in the framework of SAR interferometry. In particular, the term SAR Interferometry (InSAR) refers to all methods that employ at least two complex SAR images, exploiting mainly their phases, in order to derive more information about a ground scene than that provided by a single SAR image. The additional information is provided when at least one of the key acquisition parameters of the SAR system differs from acquisition to acquisition.

There exist two main possible configurations of SAR interferometry: across-track interferometry [38] and along-track interferometry [43]. In the across-track configuration, two (or more) SAR sensors fly on parallel flight lines and look at the ground from slightly different look angles. In the along-track configuration, two (or more) sensors fly on the same path, looking at the scene from the same position but with a very small temporal gap. The across-track InSAR configuration allows recovering the height profile of the ground area under observation, while the along-track InSAR configuration is mainly used for the measurement of fast displacements such as ocean currents [44] and for moving target detection and velocity estimation [45,46].

In all interferometric processing the starting point is the set of SAR complex images, which can be obtained by means of a two-dimensional (2D) processing of the raw data acquired by the SAR sensors [47]. The SAR complex images z(x,r) are representative of the reflectivity of the ground scene, in the sense that they are 2D discrete complex signals of the azimuth (x) and range (r) coordinates, where each sample (an image pixel) embeds the mean reflectivity characteristics of a sampling cell of the ground scene.

2.20.3.1 Across-track SAR interferometry for measuring the surface topography

The regular and controlled oscillation of coherent radiation used in SAR systems allows determining with high accuracy the variations of the propagation distance: such a basic property is the key principle of interferometric techniques.

A single SAR image provides measurements of the backscattering properties of the scene along two directions: the target range (i.e., the distance of the target from the illumination track) and the position of the target along the track (the azimuth direction). Hence, no information is provided on the angle under which the target is imaged (look angle). Knowledge of the latter completes the set of coordinates in a cylindrical reference system with the axis coincident with the track, thus allowing a full localization of the scatterers in 3D and therefore an estimation of the topography.

Similar to the mechanism used by the human visual system for the determination of depth, SAR interferometry exploits the parallax in the view of the scene to extend the capability of a single SAR system to the reconstruction of the scene elevation profile. The mechanism is, however, slightly different, as SAR is sensitive to distance whereas the visual system is sensitive to angles.

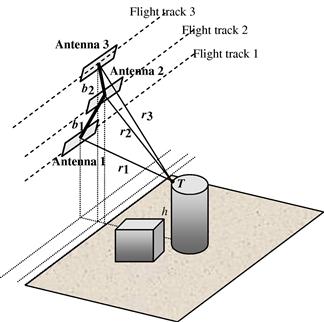

Figure 20.7 shows the geometry of a basic (two-antenna) interferometric acquisition in the plane orthogonal to the flight track. By measuring the range of the target with a single (master) SAR system (say the blue line) it is not possible to uniquely localize the scatterer, because all of the points distributed on an equi-range curve (the blue one) within the elevation beam (dashed line) are located at the same range.

By using a second (slave) antenna that images the scene from a different look angle, the system is also able to measure the range from a second location [48]: there is then only one point (the intersection of the two equi-range curves, i.e., the blue and red lines in Figure 20.7) that satisfies the pair of distance measurements. The larger the separation between the two antennas, the sharper the crossing and hence the higher the height accuracy. As in the visual system, where the 3D sensitivity is given by the difference in the location of the object in the images at the two eyes [37], the accuracy of the stereometric system in Figure 20.7 is related to the variation of the distance of the target from one antenna to the other (range difference). To measure such a range variation with sufficient accuracy, SAR interferometry uses the phase difference between the two SAR images: the path difference is hence measured to an accuracy which is a fraction of the wavelength (centimeters at microwaves).

Specifically, the term that plays the key role in the determination of the height is the path difference $\delta r$. In particular, at large distances it can be shown that the (variation of the) path difference (with respect to a reference point, for instance located on a plane) is [37,39]:

$$\delta r \simeq b_\perp\,\delta\vartheta = \frac{b_\perp}{r\sin\vartheta}\,h$$ (20.11)

where (see Figure 20.7) $\delta\vartheta$ is the variation of the look angle between the master and slave antenna, $b_\perp$ is the orthogonal baseline component, that is the component orthogonal to the (master) line of sight of the vector (baseline) connecting the two satellites, and $\vartheta$ is the incidence angle, that is the angle between the vertical direction related to the target and the direction of the incoming radiation (line of sight); $r$ is the master range and $h$ is the target height. It is however important to note that Eq. (20.11) represents a simplification of the true scenario, which is useful to understand the key principles of across-track interferometry. In reality, the height is referred to a geographic or cartographic reference system and determined by measuring $\delta r$ and by knowing the orbital state vectors [49].

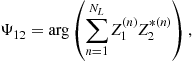

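In SAR interferometry the path difference $\delta r$ is measured to an accuracy of the order of a fraction of the wavelength by using the phase difference signal:

$$\varphi = \frac{4\pi}{\lambda}\,\delta r$$ (20.12)

where $\lambda$ is the radar wavelength.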
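As a rough numerical illustration of Eqs. (20.11) and (20.12), the sketch below converts a target height into a path difference and then into an interferometric phase. It assumes the common far-field form $\delta r \simeq b_\perp h/(r\sin\vartheta)$ and a $4\pi/\lambda$ phase factor; the function names and the ERS-like parameter values are illustrative assumptions, not values taken from the text.

```python
import math


def path_difference(height_m, b_perp_m, range_m, incidence_rad):
    """Far-field path-difference variation: b_perp * h / (r * sin(theta))."""
    return b_perp_m * height_m / (range_m * math.sin(incidence_rad))


def interferometric_phase(delta_r_m, wavelength_m):
    """Phase difference for a given path difference, assuming a 4*pi/lambda factor."""
    return 4.0 * math.pi * delta_r_m / wavelength_m


# Illustrative, ERS-like values (assumptions)
wavelength = 0.0566              # radar wavelength [m]
slant_range = 850e3              # master-to-target range [m]
incidence = math.radians(23.0)   # incidence angle [rad]
b_perp = 100.0                   # orthogonal baseline [m]
height = 50.0                    # target height above the reference plane [m]

delta_r = path_difference(height, b_perp, slant_range, incidence)
phase = interferometric_phase(delta_r, wavelength)
print(f"path difference: {delta_r * 100:.2f} cm, phase: {phase:.2f} rad")
```

With these numbers a height of tens of meters maps to a path difference of the order of centimeters, which is why a phase-based measurement is needed.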

For application to topographic mapping the two interferometric images can, or better should, be acquired at the same time by two antennas on the same platform (bistatic system). This is because changes in the scattering properties, as well as differences in the propagation phase delay through the atmosphere, strongly impact the quality of the retrieved DEM. In the case of the Shuttle Radar Topography Mission (SRTM), flown in 2000, an extensible boom 60 m long was mounted on board the Shuttle to separate the slave antenna from the master antenna located in the fuselage [50]. The German TanDEM-X mission of 2010 is instead the first example of a bistatic system composed of two twin satellites (TerraSAR-X and TanDEM-X) orbiting in a close formation (500 m one behind the other) [51].

2.20.3.2 Statistical characterization of across-track SAR interferometric signals

As mentioned before, an across-track SAR interferometric (InSAR) system is used to reconstruct the Earth topography, providing high precision Digital Elevation Models (DEMs) of the Earth surface. The geometry of an InSAR system has already been shown in Figure 20.7, where two SAR systems look at the scene from two slightly different tracks. As already introduced, the distance $b$ between the two SAR tracks is called the baseline; its component orthogonal to the look direction, $b_\perp$, is the orthogonal baseline, while its component parallel to the look direction (to the slant range), $b_\parallel$, is the parallel baseline.

In order to understand how an InSAR system works, consider the distance $r_1$ between the first SAR antenna and a point target T on the ground, and the distance $r_2$ between the second SAR antenna and the same point target T, as shown in Figure 20.7, while $\vartheta_1$ and $\vartheta_2$ denote the angles at which the two antennas look at the point target on the ground (slightly different from each other).

Consider now the two complex (envelope of the) images $z_1(n,m)$ and $z_2(n,m)$ obtained by processing the raw data collected by the two SAR sensors, where (n,m) are the discrete coordinates corresponding to the continuous azimuth and range coordinates (x,r). Such images can be considered as random processes whose expression is [52]:

$$z_k(n,m) = \gamma(n,m)\,e^{j\varphi_k(n,m)}\,w_k(n,m), \quad k = 1, 2$$ (20.13)

where $\gamma(n,m)$ is the complex envelope of the (deterministic) ground reflectivity function (which, to a first approximation, can be assumed to be constant with the antenna position k = 1, 2, since, as commented above, the view angles change only slightly from one position to another), $e^{j\varphi_k(n,m)}$ are phase factors related to the different propagation paths between the two antenna positions and the point target, and

$$w_k(n,m) = |w_k(n,m)|\,e^{j\varphi_{w_k}(n,m)}$$ (20.14)

is the random process representing the multiplicative speckle noise at the kth antenna, typical of any coherent system, which is commonly assumed to be a complex Gaussian correlated process with zero mean and unit variance [53]. Of course, $z_k(n,m)$ are also random processes.

As a result of the SAR signal model given by (20.13) and (20.14), the phase of a SAR image pixel (n,m) is given by three main contributions:

• a first term $\varphi_\gamma(n,m)$, representing the phase shift induced by the scattering mechanism; it is deterministic, and it is the same for the two antennas;

• a second term $\varphi_k(n,m)$, representing the phase shift due to the different propagation paths; it is deterministic, and it depends on the antenna;

• a third term $\varphi_{w_k}(n,m)$, induced by the coherent nature of the SAR processing; it is random, and it depends on the antenna.

Other phase terms related to geometrical uncertainties, to random propagation effects, or to the changes of the scattering mechanism between the two SAR image acquisitions (for instance, due to the time delay between the two acquisitions) can be also present in Eq. (20.13).

After a processing step called image registration, which aims to locate the response of a target in the same azimuth-range pixel of the two images [37,54], the two SAR images $z_1$ and $z_2$ are used to build the so-called multi-look SAR interferogram:

$$\phi = \arg\left(\sum_{l=1}^{L} z_1^{(l)}\,z_2^{(l)*}\right)$$ (20.15)

where $\arg(\cdot)$ denotes the principal value of the phase, $L$ is the number of looks [26], the superscript $(l)$ indexes the looks, and the explicit dependence on (n,m) has been omitted (the same will be done in the following). Equation (20.15) represents, for homogeneous targets, the Maximum Likelihood Estimator (MLE) of the interferometric phase [39]. In the following, we will consider the single look case, with $L = 1$.
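The multi-look estimate of Eq. (20.15) can be sketched in a few lines of plain Python. The sketch assumes that the looks are provided as two equally long lists of co-registered complex samples; the function name and the toy data are illustrative. Note that `cmath.phase` returns values in $[-\pi, \pi]$, which is a different (but equivalent) principal-value convention from the interval $[0, 2\pi)$ used later in the text.

```python
import cmath


def multilook_phase(z1_looks, z2_looks):
    """Phase of the sum over the looks of z1 * conj(z2)."""
    if len(z1_looks) != len(z2_looks):
        raise ValueError("both images must provide the same number of looks")
    accumulated = sum(a * b.conjugate() for a, b in zip(z1_looks, z2_looks))
    return cmath.phase(accumulated)


# Toy data (assumption): four looks sharing a 0.8 rad phase offset,
# with different amplitudes and no noise.
true_phase = 0.8
amplitudes = [1.0, 0.5, 2.0, 1.5]
z2 = [complex(a, 0.0) for a in amplitudes]
z1 = [a * cmath.exp(1j * true_phase) for a in amplitudes]

estimated_phase = multilook_phase(z1, z2)
print(f"estimated interferometric phase: {estimated_phase:.3f} rad")
```

Because the products are summed before taking the argument, looks with larger amplitude weigh more in the estimate, which is the behavior expected from a coherent average.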

From (20.12) and (20.13), it is easy to show that the interferometric phases are related to the observed scene height profile through the well known mapping [3,53]:

$$\phi = \left\langle \frac{4\pi}{\lambda}\,\frac{b_\perp}{r\sin\vartheta}\,h + \varphi_w \right\rangle_{2\pi}$$ (20.16)

where $\langle\cdot\rangle_{2\pi}$ denotes the modulo $2\pi$ operation and

$$\varphi_w = \varphi_{w_1} - \varphi_{w_2}$$ (20.17)

is the decorrelation phase noise related to the phase differences of the speckle. In Eq. (20.16) it has been assumed, as commented before, that the scattering phase term $\varphi_\gamma$ is the same in the two SAR images, so that its difference vanishes.

The problem to be solved in across-track InSAR consists of estimating the height values $h(n,m)$, starting from the measured (hence noisy) wrapped phases $\phi(n,m)$. This problem is widely known as the phase unwrapping problem, as it amounts to finding the unwrapped phase $\psi$ (not constrained to belong to the interval $[0, 2\pi)$) corresponding to the measured wrapped phase $\phi$ (constrained to belong to the interval $[0, 2\pi)$) [55]:

$$\psi = \phi + 2\pi k, \quad k \in \mathbb{Z}$$ (20.18)

The unwrapped phase will be proportional to an estimate $\hat{h}$ of the height $h$, according to the model given by Eq. (20.12):

$$\psi = \frac{4\pi}{\lambda}\,\frac{b_\perp}{r\sin\vartheta}\,\hat{h}$$ (20.19)

Once the phase unwrapping problem (20.18) has been solved, an estimate of the height profile can be obtained from Eq. (20.19).
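The chain "wrapped phase, unwrapped phase, height" can be illustrated with a minimal noise-free 1D sketch. It uses the simplest possible unwrapping rule (integration of wrapped phase differences along a line), which is only valid when the true phase changes by less than $\pi$ between adjacent samples; it is not one of the methods discussed in the following sections. The phase-to-height factor $4\pi b_\perp/(\lambda r\sin\vartheta)$ and all numerical values are assumptions chosen for illustration.

```python
import math

TWO_PI = 2.0 * math.pi


def wrap(phase):
    """Wrap a phase value into the interval [0, 2*pi)."""
    return phase % TWO_PI


def unwrap_1d(wrapped):
    """Integrate wrapped phase differences along a line of samples."""
    unwrapped = [wrapped[0]]
    for previous, current in zip(wrapped, wrapped[1:]):
        # Fold the sample-to-sample difference into [-pi, pi)
        step = (current - previous + math.pi) % TWO_PI - math.pi
        unwrapped.append(unwrapped[-1] + step)
    return unwrapped


def phase_to_height(psi, wavelength, b_perp, slant_range, incidence):
    """Invert psi = 4*pi*b_perp*h / (lambda * r * sin(theta)) for h."""
    return psi * wavelength * slant_range * math.sin(incidence) / (4.0 * math.pi * b_perp)


# Illustrative, ERS-like geometry (assumptions)
wavelength, b_perp = 0.0566, 100.0
slant_range, incidence = 850e3, math.radians(23.0)

true_heights = [10.0 * i for i in range(21)]  # a 0 m to 200 m ramp
scale = 4.0 * math.pi * b_perp / (wavelength * slant_range * math.sin(incidence))
wrapped = [wrap(scale * h) for h in true_heights]

recovered = [phase_to_height(p, wavelength, b_perp, slant_range, incidence)
             for p in unwrap_1d(wrapped)]
print(max(abs(a - b) for a, b in zip(recovered, true_heights)))
```

In the presence of noise or steep topography the sample-to-sample difference can exceed $\pi$, and this simple integration fails; that is the situation the dedicated unwrapping methods are meant to handle.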

Equation (20.19) shows how sensitive the unwrapped phase is to the height. Considering a fixed geometry for the satellite (or airplane) carrying the SAR antennas ($r$ and $\vartheta$ are fixed), it is easy to understand that the larger the orthogonal baseline and the higher the frequency, the more sensitive the SAR interferometer is. In other words, if we want to measure the height profile h, it could seem more convenient to use a larger baseline and a higher frequency, because for a given variance of the phase noise, the corresponding height variance (inaccuracy) decreases (see Eq. (20.19)). However, an increase of the baseline value may contribute to decorrelating the two speckle phase contributions ($\varphi_{w_1}$ and $\varphi_{w_2}$), thus increasing the interferometric phase noise (geometrical or spatial decorrelation) [56,57]. For distributed scattering the correlation between the two speckle contributions decreases linearly with the increase of the baseline [37,39]; for a point scatterer the decorrelation disappears because such targets are not affected by speckle. The difference between these two scattering mechanisms also influences the multipass interferometric processing chains (see Section 2.20.4). In any case, an increase in the baseline also impacts the complexity of the phase unwrapping step described in the following sections.
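The baseline trade-off can be made concrete with a short sketch. Under the assumed linear model $\psi = 4\pi b_\perp h/(\lambda r\sin\vartheta)$, a phase standard deviation maps into a height standard deviation that scales as $1/b_\perp$, and the height change corresponding to one $2\pi$ phase cycle (often called the height of ambiguity, a term not used in the text) shrinks in the same way, which makes unwrapping harder. Function names and values are illustrative assumptions.

```python
import math


def height_of_ambiguity(wavelength, b_perp, slant_range, incidence):
    """Height change producing a full 2*pi phase cycle (4*pi/lambda model)."""
    return wavelength * slant_range * math.sin(incidence) / (2.0 * b_perp)


def height_std(phase_std, wavelength, b_perp, slant_range, incidence):
    """Height standard deviation for a given phase standard deviation [rad]."""
    return (phase_std * wavelength * slant_range * math.sin(incidence)
            / (4.0 * math.pi * b_perp))


# Illustrative, ERS-like geometry (assumptions)
wavelength = 0.0566
slant_range = 850e3
incidence = math.radians(23.0)
phase_std = 0.5  # assumed phase noise standard deviation [rad]

for b_perp in (50.0, 100.0, 200.0, 400.0):
    h_amb = height_of_ambiguity(wavelength, b_perp, slant_range, incidence)
    sigma_h = height_std(phase_std, wavelength, b_perp, slant_range, incidence)
    print(f"b_perp = {b_perp:5.0f} m: height of ambiguity = {h_amb:6.1f} m, "
          f"height std = {sigma_h:5.2f} m")
```

The sketch keeps the phase noise fixed while the baseline changes; in practice the phase noise itself grows with the baseline because of the geometric decorrelation just described.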

Before describing, in the following sub-section, the several methods that have been proposed in the scientific literature to solve the phase unwrapping problem [42,58–63], it is important to describe the random nature of the SAR complex signal and of the SAR phase terms.

Consider the random terms $w_k$ given by (20.14), representing the multiplicative speckle noise present in the SAR complex signals $z_k$ given by (20.13). Such random terms can be modeled as zero mean, mutually correlated Gaussian complex variables with unit variance, which assume uncorrelated values in adjacent pixels, and have real and imaginary parts mutually uncorrelated [52]. Omitting, for the sake of simplicity of notation, the dependence on the discrete coordinates n and m, we can consider the vector:

$$\mathbf{w} = [w_{c1},\, w_{s1},\, w_{c2},\, w_{s2}]^T$$ (20.20)

whose (real valued) elements $w_{ck}$ and $w_{sk}$, with k = 1, 2, denoting the cosine and sine components of the speckle signal $w_k$, are zero mean Gaussian random variables. The assumed statistical model implies that [52]:

Note that the independence of $w_{ck}$ and $w_{sk}$ holds if the speckle band-pass spectrum is Hermitian with respect to the central frequency, an assumption which can be considered always satisfied since the speckle vector $\mathbf{w}$ is related to the complex envelope of a modulated real signal [64]. Moreover, from the assumption (b), it stems that ![]() .

Under these assumptions, the probability density function of the vector $\mathbf{w}$ is given by [65]:

$$f(\mathbf{w}) = \frac{1}{(2\pi)^2\,|\mathbf{C}|^{1/2}}\exp\left(-\frac{1}{2}\,\mathbf{w}^T\mathbf{C}^{-1}\mathbf{w}\right)$$ (20.21)

where $|\mathbf{C}|$ denotes the determinant of $\mathbf{C}$, and $\mathbf{C}$ is the covariance matrix given by:

(20.22)

where $\rho$ is the correlation coefficient of $w_{c1}$ and $w_{c2}$, which by virtue of assumption (b) assumes the same value as the correlation coefficient of $w_{s1}$ and $w_{s2}$, given by:

$$\rho = \frac{E[w_{c1}\,w_{c2}]}{\sqrt{E[w_{c1}^2]\,E[w_{c2}^2]}}$$ (20.23)

where $E[\cdot]$ denotes expectation. Note that in Eq. (20.23) the terms at the denominator are equal to one, as they represent the unit variance of the considered processes, so that their explicit presence would not be necessary. Nonetheless, we use this definition, as it is also valid in the case of non-normalized processes.

We note that, according to the assumptions (a) and (b), ![]() given by (20.23) is equal to the interferometric coherence usually employed in InSAR systems [57], defined starting from complex signals [3]:

given by (20.23) is equal to the interferometric coherence usually employed in InSAR systems [57], defined starting from complex signals [3]:

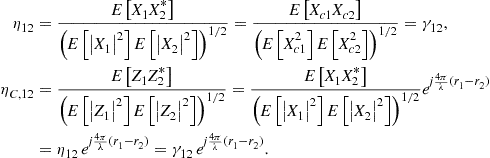

(20.24)

(20.24)

Note that first result of Eq. (20.24)![]() implies that the coherence

implies that the coherence ![]() of the (complex) speckle noise is real valued, due to the above assumptions (a) and (b). Note also that the module of the coherence

of the (complex) speckle noise is real valued, due to the above assumptions (a) and (b). Note also that the module of the coherence ![]() of the complex received signals

of the complex received signals ![]() and

and ![]() is, in module, equal to the coherence

is, in module, equal to the coherence ![]() of the (complex) speckle noise.

of the (complex) speckle noise.
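A small Monte Carlo sketch can illustrate these two remarks. It simulates two unit-variance circular complex Gaussian speckle sequences with a prescribed real coherence and estimates the complex coherence as a normalized sample cross-correlation. The mixing rule used to generate correlated speckle and the estimator name are illustrative assumptions; the estimator replaces the expectations of the definition with sample averages.

```python
import cmath
import math
import random


def simulate_speckle_pair(rho, n_samples, seed=0):
    """Two unit-variance circular Gaussian sequences with real coherence rho."""
    rng = random.Random(seed)
    sigma = math.sqrt(0.5)  # each real component carries half of the unit variance

    def circular_gaussian():
        return complex(rng.gauss(0.0, sigma), rng.gauss(0.0, sigma))

    w1, w2 = [], []
    for _ in range(n_samples):
        a, b = circular_gaussian(), circular_gaussian()
        w1.append(a)
        # Mixing a with an independent term b yields E[w1 * conj(w2)] = rho
        w2.append(rho * a + math.sqrt(1.0 - rho * rho) * b)
    return w1, w2


def sample_coherence(z1, z2):
    """Normalized sample cross-correlation of two complex sequences."""
    numerator = sum(a * b.conjugate() for a, b in zip(z1, z2))
    denominator = math.sqrt(sum(abs(a) ** 2 for a in z1) * sum(abs(b) ** 2 for b in z2))
    return numerator / denominator


w1, w2 = simulate_speckle_pair(rho=0.7, n_samples=20000, seed=1)
speckle_coherence = sample_coherence(w1, w2)

# A deterministic phase factor on one channel changes the phase of the
# coherence but not its modulus.
z1 = [a * cmath.exp(0.5j) for a in w1]
signal_coherence = sample_coherence(z1, w2)

print(speckle_coherence, abs(signal_coherence))
```

The estimated speckle coherence has a negligible imaginary part, while the coherence of the phase-shifted signals keeps the same modulus and acquires the phase of the deterministic factor.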

Starting from Eq. (20.21), it is possible to derive, by a change of variable from Cartesian to polar (from ![]() to

to ![]() , and following integration with respect to

, and following integration with respect to ![]() and

and ![]() , the pdf of the single-look speckle phase difference

, the pdf of the single-look speckle phase difference ![]() [53]:

[53]:

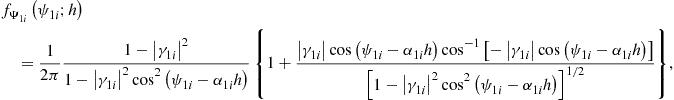

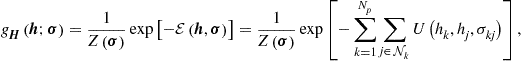

(20.25)

(20.25)

where the dependence of phase difference ![]() and

and ![]() on (n,m) has been, as before, understood.

on (n,m) has been, as before, understood.

The coherence coefficient $\rho$ is influenced by all factors that cause differences between the two complex speckle images $w_1$ and $w_2$. The larger these differences, the smaller the coherence coefficient’s value. A coherence reduction can be induced by actual physical changes occurring between the acquisition times of the two data sets (temporal decorrelation) and/or by changes of the ground reflectivity when it is seen from different angles (spatial decorrelation) [57]. Note that the coherence is also a function of the ground coordinate pair (n,m), so that it may change across the image.

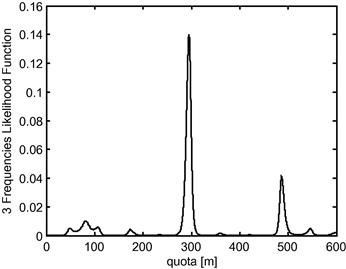

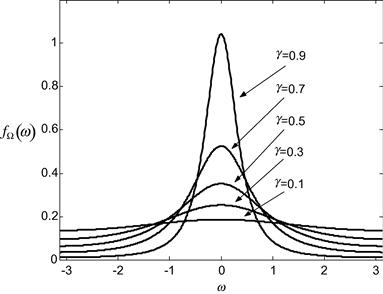

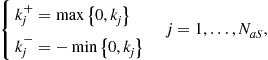

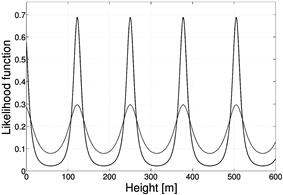

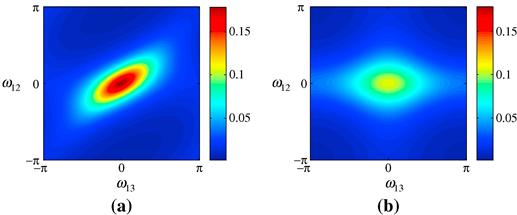

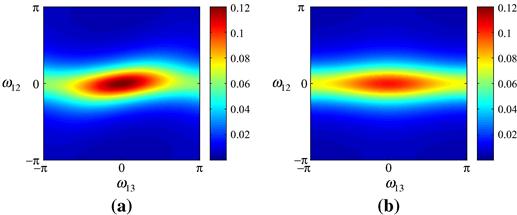

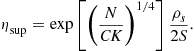

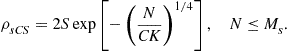

The plot of the speckle phase difference pdf (20.25) for different values of the coherence coefficient is given in Figure 20.8. It has to be noted that the pdf becomes less peaked as the coherence decreases: the smaller the coherence value, the larger the variance of the speckle phase noise.

Figure 20.8 Interferometric phase pdf plotted for different coherence values (0.01, 0.1, 0.25, 0.5, 0.7, and 0.9).
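The qualitative behavior shown in Figure 20.8 can be reproduced with a short sketch. It assumes the closed-form expression of the single-look phase-difference pdf commonly reported in the InSAR literature, $f(\varphi) = \frac{1-\rho^2}{2\pi}\,\frac{1}{1-\beta^2}\left[1 + \frac{\beta\arccos(-\beta)}{\sqrt{1-\beta^2}}\right]$ with $\beta = \rho\cos(\varphi-\varphi_0)$; this form is taken here as an assumption and should be checked against Eq. (20.25). The function names and the centering parameter `phi0` are illustrative.

```python
import math


def phase_difference_pdf(phi, rho, phi0=0.0):
    """Assumed single-look phase-difference pdf for coherence 0 <= rho < 1."""
    beta = rho * math.cos(phi - phi0)
    one_minus_beta2 = 1.0 - beta * beta
    bracket = 1.0 + beta * math.acos(-beta) / math.sqrt(one_minus_beta2)
    return (1.0 - rho * rho) / (2.0 * math.pi) * bracket / one_minus_beta2


def integrate_pdf(rho, n_points=4000):
    """Midpoint-rule integral of the pdf over one 2*pi period."""
    step = 2.0 * math.pi / n_points
    return step * sum(phase_difference_pdf(-math.pi + (i + 0.5) * step, rho)
                      for i in range(n_points))


for rho in (0.01, 0.1, 0.25, 0.5, 0.7, 0.9):
    peak = phase_difference_pdf(0.0, rho)
    print(f"rho = {rho:4.2f}: peak value = {peak:.3f}, integral = {integrate_pdf(rho):.6f}")
```

For very low coherence the pdf approaches the uniform value $1/(2\pi)$, while for high coherence it concentrates around the center value, consistent with the description of the figure.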

The pdf $f(\phi \mid h)$ of the interferometric phase can be obtained from the pdf of the speckle phase difference $\varphi_w$ given by Eq. (20.25) and plotted in Figure 20.8, exploiting the random variable transformation given by Eq. (20.16), which leads to:

$$f(\phi \mid h) = f_{\varphi_w}\!\left(\left\langle \phi - \frac{4\pi}{\lambda}\,\frac{b_\perp}{r\sin\vartheta}\,h \right\rangle_{2\pi}\right)$$ (20.26)

where $f_{\varphi_w}(\cdot)$ denotes the pdf of Eq. (20.25) and the dependence of $\phi$ and $h$ on (n,m) has again been omitted. The pdfs (20.26) have the same shape as the pdf of Eq. (20.25), but they are centered on the value $\frac{4\pi}{\lambda}\frac{b_\perp}{r\sin\vartheta}h$.

This family of pdfs, which is parametrized by the height h, will be used as the starting point of some of the phase unwrapping methods described in the following section.

The pdf (20.26) of the interferometric phase is strongly influenced by the coherence. This parameter can be written as the product of four main contributions [3,39,57]:

$$\rho = \rho_{\mathrm{SNR}}\,\rho_{\mathrm{geom}}\,\rho_{\mathrm{vol}}\,\rho_{\mathrm{temp}}$$ (20.27)

where $\rho_{\mathrm{SNR}}$ represents the influence of thermal noise in the receiver, $\rho_{\mathrm{geom}}$ represents the decorrelation effects due to the different SAR acquisition view angles, depending upon the spatial baseline, $\rho_{\mathrm{vol}}$ represents the decorrelation effects due to volume scattering mechanisms, and $\rho_{\mathrm{temp}}$ represents the so-called temporal decorrelation effects [3,4].

The first factor in Eq. (20.27) can be computed starting from the circular Gaussian and independent nature of thermal noise and is given by:

$$\rho_{\mathrm{SNR}} = \frac{1}{\sqrt{\left(1 + \mathrm{SNR}_1^{-1}\right)\left(1 + \mathrm{SNR}_2^{-1}\right)}}$$ (20.28)

where $\mathrm{SNR}_1$ and $\mathrm{SNR}_2$ are the signal to noise ratios on the two receiving SAR interferometric antennas [4,53,57].
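The thermal-noise factor can be evaluated with the short sketch below. It assumes the expression commonly used for additive, mutually independent thermal noise on the two channels, $1/\sqrt{(1+\mathrm{SNR}_1^{-1})(1+\mathrm{SNR}_2^{-1})}$, with the signal-to-noise ratios expressed in linear units; the function names and the dB values are illustrative.

```python
import math


def db_to_linear(value_db):
    """Convert a power ratio from decibels to linear units."""
    return 10.0 ** (value_db / 10.0)


def thermal_coherence(snr1, snr2):
    """Coherence loss due to thermal noise; SNRs are linear power ratios."""
    return 1.0 / math.sqrt((1.0 + 1.0 / snr1) * (1.0 + 1.0 / snr2))


for snr_db in (0.0, 5.0, 10.0, 20.0):
    snr = db_to_linear(snr_db)
    print(f"SNR = {snr_db:4.1f} dB on both channels: "
          f"thermal coherence = {thermal_coherence(snr, snr):.3f}")
```

With equal signal-to-noise ratios on the two channels the expression reduces to $\mathrm{SNR}/(1+\mathrm{SNR})$, so the thermal factor approaches one only for strong returns.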

The second factor in Eq. (20.27) is the so-called geometric coherence, also referred to as angular or baseline coherence; it is present in all scattering situations and depends on the system parameters and on the overall observation geometry, including the different SAR acquisition view angles and hence the spatial baseline.

Geometric coherence values can be easily computed in the case of flat terrain and for a white scattering process, leading to [3]:

$$\rho_{\mathrm{geom}} = 1 - \frac{b_\perp}{b_{\perp c}}$$ (20.29)

where $b_\perp$ is the orthogonal baseline and $b_{\perp c}$ is the orthogonal critical baseline given by:

![]() (20.30)

where all symbols in Eq. (20.30) have been previously introduced.
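The linear loss of geometric coherence with the baseline can be sketched as follows. The critical baseline is computed with the standard flat-terrain expression $\lambda r B\tan\vartheta / c$, where $B$ is the transmitted range bandwidth and $c$ the speed of light; this expression is an assumption introduced here for illustration and is not necessarily the form of Eq. (20.30). The clipping to zero beyond the critical baseline, the function names, and the ERS-like values are also assumptions.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # [m/s]


def critical_baseline(wavelength, slant_range, bandwidth, incidence):
    """Assumed flat-terrain critical orthogonal baseline [m]."""
    return wavelength * slant_range * bandwidth * math.tan(incidence) / SPEED_OF_LIGHT


def geometric_coherence(b_perp, b_crit):
    """Linear coherence loss with baseline, clipped to zero beyond b_crit."""
    return max(0.0, 1.0 - abs(b_perp) / b_crit)


# Illustrative, ERS-like parameters (assumptions)
b_crit = critical_baseline(wavelength=0.0566, slant_range=850e3,
                           bandwidth=15.55e6, incidence=math.radians(23.0))
print(f"critical baseline: {b_crit:.0f} m")

for b_perp in (0.0, 100.0, 300.0, 600.0, 1200.0):
    print(f"b_perp = {b_perp:6.0f} m: "
          f"geometric coherence = {geometric_coherence(b_perp, b_crit):.2f}")
```

With these values the critical baseline comes out at roughly one kilometer, the same order as the figure quoted below for the ERS and ASAR-ENVISAT sensors.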

Geometric decorrelation effects, for flat terrain geometry, can also be explained in terms of the so-called spectral shift effect [55]. This interpretation also allows deriving a filtering strategy for the interferometric channels aimed at mitigating such decorrelation. This processing is called “common band filtering” [55,66] because, in order to maximize the geometric coherence, it retains only the common (overlapping) part of the spectra of the two interferometric signals. The larger the baseline and the larger the terrain slope, the smaller the common part of the two spectra and the larger the decorrelation effects. For a non-flat topography the approach in [67] can be adopted. For the ERS and ASAR-ENVISAT sensors, the critical baseline is about 1100 m for ![]() , while for the latest generation of high resolution systems, such as COSMO-SkyMed and TerraSAR-X, this value is significantly larger. Therefore, for these new generation sensors, thanks to the fact that the distribution of the baseline values is bounded by an “orbital tube” significantly smaller than the critical baseline, such common band filtering is typically not required.

Similar effects can also be induced in the azimuth direction by variations of the azimuth antenna pointing [67]. This effect can be critical in some acquisition modes where antenna steering is present, such as the ScanSAR and Spotlight modes. Also in this case, the larger the geometric diversity of the acquisitions, the smaller the spectral overlap and the larger the decorrelation effects.

The third factor in Eq. (20.27), $\rho_{\mathrm{vol}}$, is the volume coherence; it is due to volume scattering, that is, to the effect of the scattering layer, which increases the size of the projected range cell and, consequently, decreases the correlation distance. As in the case of $\rho_{\mathrm{geom}}$, it is dependent on the spatial baseline.

The last factor in Eq. (20.27), $\rho_{\mathrm{temp}}$, represents the so-called temporal decorrelation [57], due to the instability of the scattering mechanisms between the two acquisition times, as the structure of the scatterer can change in the meantime. This effect can be very important when the two SAR images are acquired several days or months apart over vegetated or agricultural areas, or under different climatic conditions.

2.20.3.3 The differential SAR interferometry technique for measuring displacements

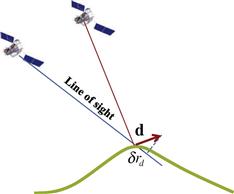

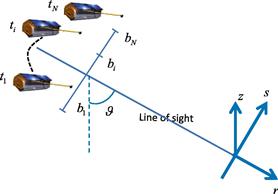

Differential Interferometry (DInSAR) is a particular configuration of SAR interferometry. The reference geometry is the same as in the classical InSAR case, but the target on the ground is allowed to move, displacing by, say, $\mathbf{d}$, between the two successive passes (see Figure 20.9).

In the following, for sake of simplicity, we indicate deterministic and stochastic terms all with non-capital symbols: the nature is specified whenever ambiguous. In this case, the interferometric phase is composed by three main factors:

![]() (20.31)

(20.31)

where ![]() is the measured range variation that, in the far-field observation approximation, is equal to the component of the displacement along the line of sight, ![]() is the phase contribution corresponding to the target height as in Eq. (20.12), ![]() is a stochastic term associated with the variation, between the two passes, of the wave propagation delay through the atmosphere, and ![]() is the phase noise, which in this case also includes the temporal decorrelation effects in addition to the decorrelation noise due to the speckle (![]() in Section 2.20.3.2). When the topographic contribution is limited, that is, if the baseline is negligible and/or an external DEM is available to compute and cancel out ![]() from Eq. (20.31), and when the deformation component is predominant and/or the atmospheric effects are limited, displacements can be measured with an accuracy on the order of the wavelength. Using this classical two-pass DInSAR configuration, scientists have been able to capture the surface deformation field generated by major earthquakes and to highlight deformation associated with volcanic activity.
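As a minimal sketch of how a differential phase maps to line-of-sight displacement, the snippet below assumes the standard two-way repeat-pass relation, phase = (4π/λ) × displacement, with the topographic, atmospheric, and noise terms already removed. The function names and the nominal wavelengths (about 5.6 cm for C-band, about 3.1 cm for X-band) are illustrative assumptions, not values taken from Eq. (20.31).

```python
import math


def los_displacement(phase_rad, wavelength_m):
    """Convert an unwrapped differential phase to line-of-sight displacement.

    Assumes the two-way relation phase = (4*pi/wavelength) * displacement,
    i.e., topography, atmosphere and noise have already been removed.
    """
    return wavelength_m * phase_rad / (4.0 * math.pi)


def displacement_per_fringe(wavelength_m):
    """Line-of-sight displacement corresponding to one full 2*pi phase cycle."""
    return los_displacement(2.0 * math.pi, wavelength_m)


# Nominal wavelengths (assumed values for illustration only).
c_band_fringe = displacement_per_fringe(0.056)  # half of 5.6 cm
x_band_fringe = displacement_per_fringe(0.031)  # half of 3.1 cm

print(f"C-band: {c_band_fringe * 100:.2f} cm per color cycle")
print(f"X-band: {x_band_fringe * 100:.2f} cm per color cycle")
```

Under these assumptions one color cycle corresponds to half a wavelength, which is why a shorter wavelength yields denser fringes for the same displacement.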

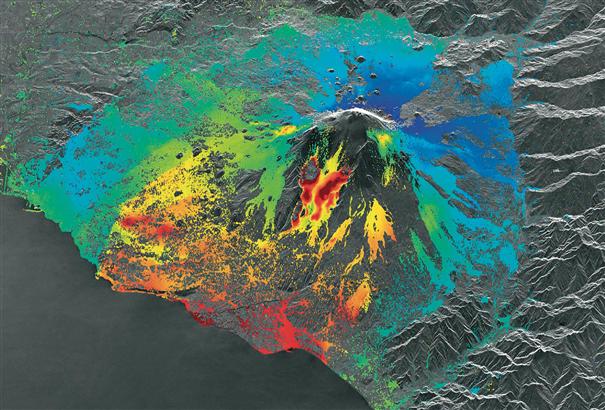

The idea of mapping ground deformation via the interference of signals acquired by SAR systems was demonstrated for airborne systems in [43] and, for the very first time using real data from the European Remote Sensing Satellite (ERS), in keystone experiments by [68], for ice-stream velocity measurements in Antarctica, and by [69], for the co-seismic deformation field generated by the Landers earthquake (CA, USA). The Landers result made the cover of Nature (vol. 364, 8 July 1993, Issue No. 6433) with the title “The image of an Earthquake”, which conveys the importance of the achievement and of the DInSAR technology for seismic applications and for geo-hazards in general.

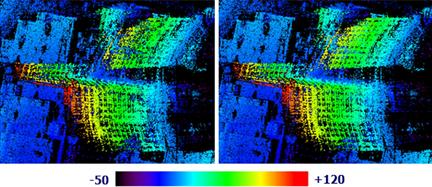

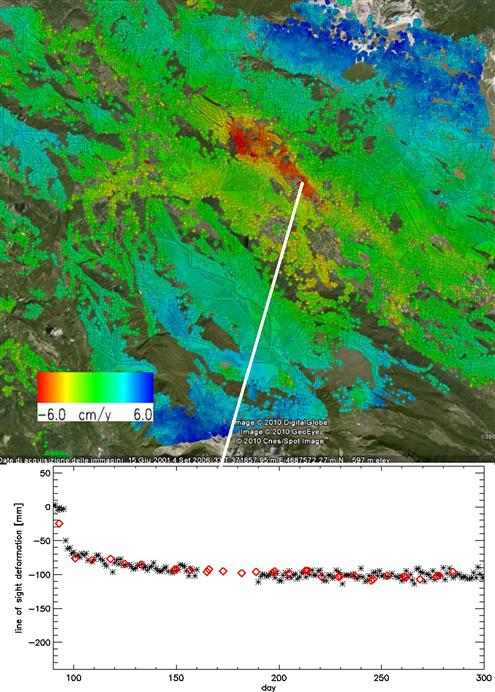

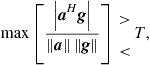

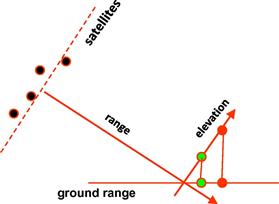

Today, with many SAR sensors with interferometric capabilities orbiting the Earth, co-seismic DInSAR data, i.e., data acquired before and after main seismic events (see Figure 20.10), are analyzed almost routinely by scientists to study the displacements induced by the known and unknown geological faults that cause catastrophic events all over the world.

Figure 20.10 Co-seismic interferogram of the Bam earthquake obtained by a combination of an Envisat Advanced Synthetic Aperture Radar (ASAR) Wide Swath Mode (WSM) image with an Image Mode (IM) image. Polimi/Poliba.

An example of a DInSAR co-seismic displacement measurement is reported to give an idea of the power of this technology, with reference to the Mw 6.6 earthquake that struck the city of Bam, Iran, in 2003. Figure 20.10 shows the co-seismic interferogram obtained by the interferometric combination of the SCANSAR (Wide Swath Mode) acquisition of September 24th, 2003 and the STRIPMAP (Image Mode) acquisition of December 3rd, 2003. The coherence of the data, and therefore the quality of the interferogram, is very high due to the arid nature of the region; each color cycle corresponds to a 2.8 cm displacement in the line of sight.

A key factor in such applications is the revisit time. The retired satellites ERS-1, ERS-2, and ENVISAT of the European Space Agency were the first on which repeat-pass interferometry was tested. In normal operations these satellites were characterized by a swath width of approximately 100 km in the east-west (EW) direction and a revisit time (i.e., the time necessary to repeat approximately the same orbit) of 35 days. The revisit time sets the minimum number of days needed to generate an interferogram. The new generation of sensors, such as TerraSAR-X and Tandem-X, which operate at slightly lower orbits than ERS and ENVISAT, reduces the revisit time to 11 days. The Italian COSMO-SkyMed (CSK) constellation is formed by four SAR satellites that acquire data suitable for interferometric use regardless of the specific satellite. This peculiarity allows CSK to provide the highest revisit rate over an area of interest: one acquisition every 4 days (on average) for the whole constellation, instead of one acquisition every 16 days for a single satellite. CSK and TerraSAR-X provide a spatial resolution (between ![]() and ![]()) one order of magnitude better than that of the previously available C-band satellite SAR data.

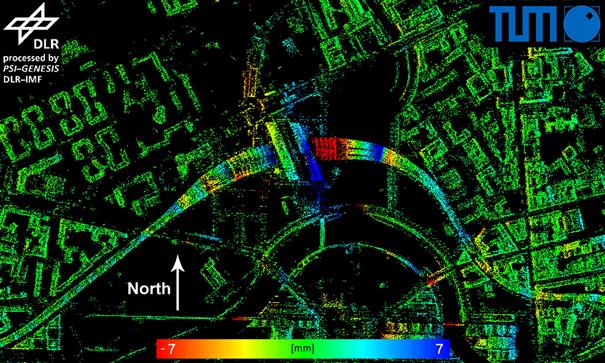

These systems operate in X-band and are characterized by a higher spatial resolution than the past ESA C-band satellites; the price of these advantages is, however, a reduced swath coverage, which in the classical stripmap imaging mode shrinks to 40 km in the EW direction. An example of a co-seismic interferogram obtained from COSMO-SkyMed data with an 8-day temporal baseline is discussed in the following. On 6 April 2009 the Mw 6.3 L’Aquila earthquake occurred in the Central Apennines (Italy), causing extensive damage to the town of L’Aquila and killing 300 inhabitants. The epicenter was located a few kilometers southwest of the town of L’Aquila; the main shock nucleated at a depth of about 9 km, was preceded by a preseismic sequence whose largest shock had an ML 4 magnitude, and was followed by a vigorous aftershock sequence. Figure 20.11 shows the interferogram obtained from the CSK pair of April 4th and April 12th: the activated fault is oriented NW-SE and emerges to the right of the area of dense fringes; each color cycle corresponds to 1.55 cm in the line of sight.

Figure 20.11 Co-seismic interferogram of the 6 April 2009 Mw 6.3 L’Aquila earthquake in Italy, from COSMO-SkyMed acquisitions of 4 April 2009 and 12 April 2009.

Since then, many experiments have shown the potential of the technique for detecting deformation phenomena associated not only with earthquakes [70] but also with volcanic areas [71,72] and glaciers [73].

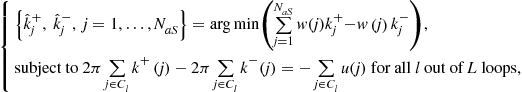

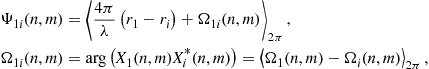

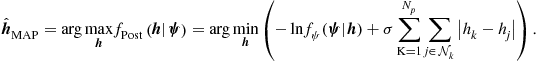

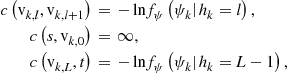

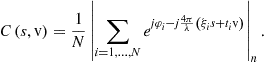

However, two or a few images are typically not sufficient to fully exploit the potential of SAR technology for measuring deformation with centimetric or millimetric accuracy. At this accuracy level the atmospheric component and additional disturbing contributions, such as orbital inaccuracies, cannot in fact be neglected. For the sake of simplicity we still denote by ![]() the differential interferometric phase obtained after subtracting the contribution associated with the DEM (differential interferogram). We have therefore:

![]() (20.32)

where ![]() is associated with orbital inaccuracies, which affect the computation of the phase contribution associated with the topography (baseline error). As in Eq. (20.31), the noise term accounts for decorrelation of the response due not only to the variation of the speckle contribution caused by the angular imaging diversity (spatial baseline decorrelation), but also to changes of the backscattering response over time (temporal decorrelation). The availability of on-board GPS systems significantly mitigates the effects of orbital errors; for airborne systems, which are subject to trajectory deviations due to turbulence, the GPS must be integrated with accurate inertial navigation systems. Due to the DEM subtraction, ![]() is now the residual target height, i.e., the height of the target with respect to the reference DEM. Accordingly, measuring small deformation components requires an accurate external DEM as well as small baseline separations. In any case the atmospheric component plays a major role, because it introduces spatially correlated errors that may therefore be confused with possible deformations.

The atmospheric contribution is typically separated into two components: a turbulent component, which is associated with air inhomogeneity and causes a spatial variation of the Atmospheric Phase Delay (APD), and a stratified component, which is associated with the vertical stratification of the atmosphere. Both terms originate in the lower part of the atmosphere, the troposphere, whereas contributions from the upper part of the atmosphere mainly vary very slowly in space and can be misinterpreted as orbital inaccuracies. The former is commonly referred to as the wet component and depends on the relative humidity; the latter, also referred to as the hydrostatic or, improperly, the dry component, is responsible for a contribution that is highly correlated with the topography and is therefore almost negligible over quasi-flat areas. A model that describes the statistical behavior of the turbulent component is due to Kolmogorov. In this model the turbulence is assumed to be spatially stationary and isotropic. The refractivity, i.e., the excess in parts per million of the refractive index with respect to the vacuum, which determines the increase in the path length due to the crossing of the atmosphere, can be modeled in terms of the variogram, i.e., the variance of the difference of the refractivity contributions between two points. For separations below the order of a kilometer, the variance of the difference of the refractivity between two points is small and increases with the 2/3 power of the distance. A more thorough characterization and analysis of the tropospheric contribution can be found in [74].
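The 2/3 power law of the turbulent variogram can be illustrated with a short sketch. The function name `turbulence_variogram` and the `scale` constant are hypothetical; only the exponent comes from the Kolmogorov model recalled above, and the law is meant to hold only for separations below roughly a kilometer.

```python
import numpy as np


def turbulence_variogram(distance_m, scale=1.0, exponent=2.0 / 3.0):
    """Power-law variogram of the refractivity between two points.

    `scale` is a hypothetical strength constant (arbitrary units);
    `exponent` is the 2/3 value of the Kolmogorov turbulence model.
    """
    distance_m = np.asarray(distance_m, dtype=float)
    return scale * distance_m ** exponent


# Separations below about one kilometer, doubling at each step.
distances = np.array([100.0, 200.0, 400.0, 800.0])
variogram = turbulence_variogram(distances)

# Each doubling of the separation multiplies the variogram by 2**(2/3).
ratios = variogram[1:] / variogram[:-1]
print(ratios)
```

Nearby points therefore experience nearly the same refractivity, which is why the turbulent term appears as a spatially correlated error rather than as pixel-to-pixel noise.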

The temporal correlation of the atmosphere is, however, typically low: this means that APD contributions over different epochs can be averaged together to reduce their contribution to the path difference. Therefore, to measure small displacements (down to millimeters per year) and to monitor ground scatterers at high resolution, techniques based on the use of several images acquired over the same scene have been developed. In fact, by exploiting a higher-dimensional acquisition space, the “phase signatures” of the different components, such as DEM error, displacement, and APD, can be deterministically or stochastically characterized and estimated directly from the received data. This topic is treated in more detail in Section 2.20.5.
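The benefit of averaging temporally uncorrelated APD contributions can be checked numerically. The sketch below is a toy simulation under strong assumptions: the APD is drawn as independent zero-mean Gaussian noise at every epoch and pixel, spatial correlation is ignored, and the number of epochs and the per-epoch standard deviation are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

n_epochs, n_pixels = 25, 20000
apd_std = 1.0  # rad, hypothetical per-epoch APD standard deviation

# One row per epoch; epochs are assumed mutually uncorrelated.
apd = rng.normal(0.0, apd_std, size=(n_epochs, n_pixels))

# Averaging over the epochs attenuates the residual APD.
averaged = apd.mean(axis=0)
measured_std = averaged.std()
expected_std = apd_std / np.sqrt(n_epochs)

print(f"measured {measured_std:.3f} rad, expected {expected_std:.3f} rad")
```

Under these assumptions the residual standard deviation falls as the inverse square root of the number of epochs, which is one motivation for using many acquisitions.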

2.20.3.4 Phase unwrapping

Unwrapping aims at reconstructing an unrestricted (absolute) signal starting from a measured, wrapped version of the signal that is restricted to a reference interval. In the case of interferometry, the phase is intrinsically wrapped in the $(-\pi, \pi)$ interval, being extracted from complex values. Accordingly, we have:

![]() (20.33)

where ![]() and ![]() are the restricted (wrapped) and unrestricted (absolute) phase. Phase unwrapping is a step necessary to reconstruct a phase signal ![]() which is an estimate of ![]().

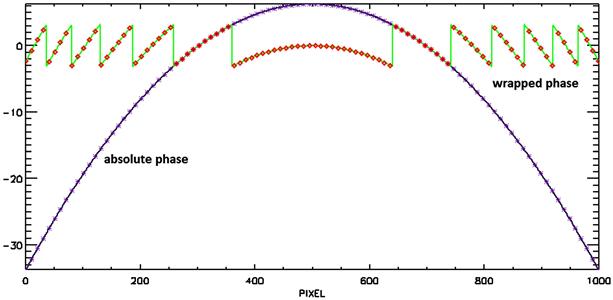

Figure 20.12 reports an example in a 1D case: phase unwrapping aims to estimate the absolute phase (shown in blue) starting from the measured restricted phase (shown in red).

It should also be noted that the term “absolute” phase in the interferometric context is typically used to refer to the phase corresponding to ![]() after it has been corrected by an offset that accounts for the number of global cycles lost due to the wrapping operator and for the timing errors. In SAR interferometry this offset is commonly evaluated after phase unwrapping by using one or more reference points with known topography.

The unwrapping problem is ambiguous, as it admits in principle infinitely many solutions (the wrapped phase can itself be a possible absolute phase); a reasonable solution can be obtained by imposing a certain degree of continuity: the absolute phase in Figure 20.12 is, among all possible absolute phase functions corresponding to the measured wrapped phase, the continuous one. Unfortunately, in real cases, in addition to the noise, the problem is further complicated by the finite sampling rate: see the measured red diamond samples in Figure 20.12 and the black star samples corresponding to the absolute phase. Moreover, the actual solution may be locally discontinuous.
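The role of continuity and of the sampling rate can be illustrated in the 1D case. The sketch below is not one of the algorithms discussed in this chapter: it is the elementary approach of integrating wrapped sample-to-sample differences, which is correct only when the absolute phase changes by less than π between adjacent samples. The quadratic test phase and all names are hypothetical.

```python
import numpy as np


def wrap(phase):
    """Wrap phase values into the interval [-pi, pi)."""
    return (phase + np.pi) % (2.0 * np.pi) - np.pi


def unwrap_1d(wrapped):
    """Unwrap a 1D phase by integrating wrapped sample-to-sample differences.

    Valid only if adjacent samples of the absolute phase differ by less
    than pi (continuity assumption with adequate sampling).
    """
    wrapped = np.asarray(wrapped, dtype=float)
    increments = wrap(np.diff(wrapped))
    return wrapped[0] + np.concatenate(([0.0], np.cumsum(increments)))


# Hypothetical smooth absolute phase, densely sampled.
x = np.linspace(0.0, 1.0, 200)
absolute = 12.0 * np.pi * x**2
measured = wrap(absolute)
recovered = unwrap_1d(measured)
print(np.allclose(recovered, absolute))  # dense sampling: recovered

# Same phase, one sample out of 40: some jumps now exceed pi.
coarse = absolute[::40]
coarse_recovered = unwrap_1d(wrap(coarse))
print(np.allclose(coarse_recovered, coarse))  # undersampled: not recovered
```

With dense sampling the continuous solution is recovered exactly, whereas the undersampled case converges to a different, equally admissible absolute phase. NumPy's `np.unwrap` applies the same principle along one axis.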

In the following, some popular approaches to solve the phase unwrapping problem and to recover the height profile of the ground scene will be described.

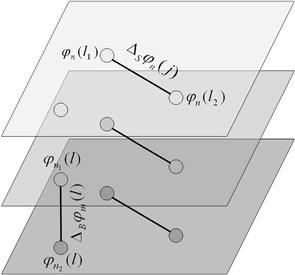

2.20.3.4.1 Residue cut algorithms for PhU