Through-the-Wall Radar Imaging: Theory and Applications

Moeness G. Amin* and Fauzia Ahmad†, *Center for Advanced Communications, Villanova University, Villanova, PA, USA, †Radar Imaging Lab, Center for Advanced Communications, Villanova University, Villanova, PA, USA

Abstract

Through-the-wall radar imaging (TWRI) is emerging as a viable technology for providing high quality imagery of enclosed structures. TWRI makes use of electromagnetic waves below the S-band to penetrate through building wall materials. The indoor scene can be illuminated from each antenna and reconstructed using the data from the receive antennas. Owing to this “see-through” ability, TWRI has attracted much attention in the last decade with a variety of important civilian and military applications. For instance, this technology is employed for surveillance and detection of humans and interior objects in urban environments, and for search and rescue operations in military situations. In this chapter, we cover signal processing algorithms that proved valuable in allowing proper imaging and image recovery in the presence of high clutter, caused by front walls, and multipath, caused by reflections from internal walls. Specifically, we focus on ground-based imaging systems, which are relatively mature, and discuss wall mitigation techniques, multipath exploitation methods, change detection of moving targets, and compressive sensing for fast data acquisition.

Keywords

Through-the-wall radar; Urban sensing; Compressive sensing; Multipath exploitation; Clutter mitigation; Moving target indication; Beamforming; Backprojection

2.17.1 Introduction

The field of remote sensing has developed a range of interesting imaging approaches for a variety of applications. Through-the-wall sensing is a relatively new area that addresses the desire to see inside buildings for various purposes, including determining the room layouts, discerning the building intent and nature of activities, locating the occupants, and even identifying and classifying objects within the building. Through-the-wall sensing is highly desired by police, fire and rescue, emergency relief workers, and military operations. Accurate sensing and imaging can allow a police force to obtain an accurate description of a building in a hostage crisis, or allow firefighters to locate people trapped inside a burning structure. In essence, the goals of through-the-wall sensing technology are to provide vision into otherwise obscured areas [1].

Each remote sensing application area has driven different sensing modalities and imaging algorithm development based upon propagation characteristics, sensor positioning, and safety issues. Traditional optical, radar, and sonar image processing all begin with basic wave physics equations to provide focusing to individual points. In many radar applications, for example, data sampled from many sensors are mathematically integrated to provide equivalent focusing using free-space propagation assumptions. Free-space imaging is commonly seen in synthetic aperture radar (SAR) techniques since atmospheric distortions are often negligible and can be safely ignored in first-order calculations [2].

Conventional imaging approaches exploit the wave equation to compute the expected phase at each point in space and time over which the data are collected. The complex phase front is similar to the spatial representation of the wavefront captured by a hologram [3]. The complex returns can then be compared against the predicted returns from points in the imaging target space to focus on each point in that space. The focusing is analogous to image reconstruction in holography, where a spatial pattern is projected back into the originating target image space. In true free-space conditions, this focusing approach represents a mathematically accurate way to perform imaging. More sophisticated approaches extend beyond free-space assumptions to allow for more complicated propagation effects, such as adaptive optics [4], atmospheric correction for radar [2], and matched field processing for sonar [5]. Correction approaches range from simple wavefront calibration to more sophisticated volumetric propagation corrections.

Free-space propagation does not apply to several applications where transmission through scattering media is encountered, including many modern imaging approaches such as geophysical sensing, medical imaging, and more recently through-the-wall imaging and sensing. In these applications, propagating signals diffract through a volume. As examples, geophysical imaging techniques generally measure seismic propagation through the earth to look for discontinuities that are often indicators of oil, gas, water, or mineral deposits [6]. In medical imaging, ultrasound tomographic approaches account for propagation through different tissue classes [7].

Non-free-space scattering applications are more representative of the through-the-wall sensing problem, albeit each has its own distinct challenges and approaches [1]. In geophysical and medical applications, the propagation medium is discontinuous but still respectively fills the sensing volume of the earth and tissue. To better propagate into the volume, sensors are placed in direct contact with the medium (e.g., seismometers for geophysical sensing, ultrasound transducers for medical imaging). In through-the-wall sensing, there are many air-material interfaces that dramatically change the wavefront. Through-the-wall sensors may be placed against the front walls or located some distance away from the structure. In either case, attenuation is largely seen only in the building materials and contents rather than in the large volumes of air that occupy most of the space outside or within a building. The rich through-the-wall scattering environment makes volumetric tomographic imaging approaches most relevant for through-the-wall sensing. Rather than using free-space focusing assumptions, correcting for propagation effects may greatly improve the imaging solution [8–10].

Through-the-wall sensing is best motivated by examining the applications primarily driving its development. Through-the-wall sensing grew from the application of ground-penetrating radar systems to walls, with specific applications documented in the literature since the late 1990s showing abilities to sense beyond a single wall from near range [11–25]. Applications can be divided based upon whether information is sought on motions within a structure or on imaging the structure and its stationary contents.

The need to detect motion is highly desired in situations like firefighters finding a child in a burning building or law enforcement officers locating hostages and their captors. Such applications can resort to Doppler discrimination of movement from background clutter [26–31]. Motion detection and localization can be decomposed into zero (0-D), one (1-D), two (2-D), or three-dimensional (3-D) systems. A 0-D system is simply a motion detector and will report any motion in the scene. Such systems may be useful to detect whether a room is occupied or not, often useful in monitoring applications such as security systems or intruder detection. Interior motion may be ample information for a firefighter to decide whether to enter a room or building. Since range or angle is not required, 0-D systems may use continuous wave (CW) tones as waveforms. While such systems have exquisite sensitivity, it is difficult to confine the sensitivity to desired areas, so care must be taken to prevent undesired detections beyond the intended range or even from the system operator himself due to multipath reflections. 0-D systems are not particularly useful in cases where other moving individuals may be within the sensor field of view.

One-dimensional systems provide a range to a target but not an angle. The extra dimension provides the ability to separate and possibly discriminate multiple targets. The range information can help bound whether a detection is in the adjacent room, or perhaps deeper within the building. The systems can gate out detections from ranges beyond the ranges of interest, and may more easily discriminate desired target motion from the motion of the operator. The systems often consist of a single receiving antenna in addition to a transmitting antenna (although sometimes a single antenna can be used for both functions). Motion of the operator can be gated out in range, or a reference antenna pointed towards the radar operator can be used for cancellation. Ranging is historically one of the principal reasons for the development of radar technology, but the short stand-off ranges of most through-the-wall systems pose some particular challenges. Three methods are often employed to obtain target range, namely, ultrawideband pulses [22,32–36], dual frequencies [28,37–39], or stepped CW [11,20].

Two-dimensional systems provide a slice through the scene in the range and angle dimensions [11,22,23]. The 2-D representation provides better localization of the mover, at the expense of a larger antenna array whose elements are collinear. 2-D systems will be subject to layover of objects, meaning that objects out of plane will appear to be rotated to the imaging plane, which can lead to distortion of the field of view. 3-D systems attempt to provide a volumetric representation of the field of view in the range, azimuth, and elevation dimensions [40]. The third dimension avoids the layover issue of targets being projected into a 2-D plane, at the expense of a 2-D array and higher processing requirements. Potentially, the third dimension can provide additional localization for identification of targets. Height information may allow discrimination between people and animals such as household pets, since radar cross-section alone can be unreliable in the presence of through-the-wall multipath and other issues. The ultimate goal is higher resolution for even better moving target classification. Both 2-D and 3-D processing techniques have generally used either multilateration [8,17] or SAR techniques [41,42], with either ultrawideband pulses or stepped CW radars, to localize features in the scene.

Imaging of structural features and contents of buildings requires at least 2-D (and preferably 3-D) systems. It cannot rely on Doppler processing for separation of desired features, so multilateration or SAR approaches have been the most commonly used. The general idea behind multilateration is to correlate range measurements from multiple sensors to specific points in the image. With sufficient spatial diversity from a large set of transmit/receive combinations, specific reflection points will start to integrate above the background interference. However, ambiguities will arise as the number of reflection points increases, which can provide an over-determined system relative to the transmit/receive signal pairs and can detract from image quality. SAR-based systems can be thought of as a coherent extension of the multilateration concept. Instead of incoherent combinations of range returns from multiple transmit/receive pairs, coherent algorithms are used to provide a complex matched filter to specific points in the target space. SAR algorithms are well established for stand-off imaging applications [2]. Stand-off applications generally assume free-space propagation to each point in the target scene, although platform motion compensation and atmospheric effects are removed with autofocusing algorithms.

Most of the SAR and multilateration techniques usually neglect propagation distortions such as those encountered by signals passing through walls and objects. Distortions degrade the performance and can lead to ambiguities in target and wall localizations. Free-space assumptions no longer apply after the electromagnetic waves propagate through the first wall. As a result, free-space approximations may carry imaging systems through to the first wall, but propagation effects will then affect further imaging results. Shadowing, attenuation, multipath, reflection, refraction, diffraction, and dispersion, all play a role in how the signals will propagate after the first interface. Without factoring in propagation effects, imaging of contents within buildings will be severely impacted. As such, image formation methods, array processing techniques, target detection, image sharpening, and clutter and multipath identification and rejection paradigms must work in concert and be reexamined in view of the nature and specificities of the underlying sensing problem.

A common case of incorrect localization occurs when objects outside the building are illuminated by the reflection off the first wall and subsequently create an ambiguous image that appears inside the building. Moreover, the strong front wall reflection causes nearby weak indoor targets to go undetected. Multipath propagation introduces ghosts or false targets in the image. Uncompensated refraction through walls can lead to localization or focusing errors, leading to offsets and blurring of imaged targets [41,43–45]. Bragg scattering off repeating structural elements, such as rebar in concrete walls or repetitive voids in concrete block walls, can cause image ambiguities and modulation of subsequent wavefronts. Some of the wall propagation effects can be compensated for using image-focusing techniques incorporating proper wavefront corrections [41,44]. SAR techniques and tomographic algorithms, specifically tailored for through-the-wall imaging, are capable of making some of the adjustments for wave propagation through solid materials. While such approaches are well suited for shadowing, attenuation, and refraction effects, they do not account for multipath, Bragg scattering, or strong reflections from the front wall.

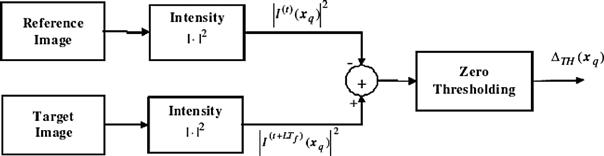

In this chapter, we consider the recent algorithmic advances that address some of the aforementioned unique challenges in through-the-wall sensing operations. More specifically, Section 2.17.2 deals with techniques for the mitigation of the wall clutter. Front wall reflections are often stronger than target reflections, and they tend to persist over a long duration of time. Therefore, weak and close by targets behind walls become obscured and invisible in the image. Approaches based on both electromagnetic modeling and signal processing are advocated to significantly mitigate the front wall clutter. Section 2.17.3 presents an approach to exploit the rich indoor multipath environment for improved target detection. It uses a ray tracing model to implement a multipath exploitation algorithm, which maps each multipath ghost to its corresponding true target location. In doing so, the algorithm improves the radar system performance by aiding in ameliorating the false positives in the original SAR image as well as increasing the SNR at the target locations, culminating in enhanced behind the wall target detection and localization. Section 2.17.4 discusses a change detection approach to moving target indication for through-the-wall applications. Change detection is used to mitigate the heavy clutter that is caused by strong reflections from exterior and interior walls. Both coherent and noncoherent change detection techniques are examined and their performance is compared using real data collected in a semi-controlled laboratory environment. Section 2.17.5 deals with fast data acquisition schemes to provide timely actionable intelligence in through-the-wall operations. The demand for high degree of situational awareness to be provided by through-the-wall radar systems requires the use of wideband signals and large antenna arrays. As a result, large amounts of data are generated, which presents challenges in both data acquisition and processing. 
The emerging field of compressive sensing is employed to provide the means to circumvent possible logistic difficulties in collecting measurements in time and space and provide fast data acquisition and scene reconstruction for moving target indication.

It is noted that the chapter provides a signal processing perspective to through-the-wall radar imaging. Outside the scope of this chapter, there has been significant work performed for TWRI applications in the areas of antenna and system design [36,46–48], wall attenuation and dispersion [49–51], electromagnetic modeling [52–55], inverse scattering approaches [24,56,57], and polarization exploitation [58–60].

The progress reported in this chapter is substantial and noteworthy. However, many challenging scenarios and situations remain unresolved using the current techniques and, as such, further research and development is required. With the advent of technology that brings about better hardware and improved system architectures, opportunities for handling more complex building scenarios will increase.

2.17.2 Wall clutter mitigation

Scattering from the exterior front wall is typically stronger than that from targets of interest, such as a human or a rifle, which have relatively small radar cross sections (RCS). This makes imaging of stationary targets behind walls difficult and challenging. The problem is further compounded when targets are in close vicinity of walls of either a high dielectric constant or with layered structures. In particular, hollow cinder block walls contain an air-gap void within the cinder block with disparate dielectric constants. This establishes a periodic structure resonance cavity that traps electromagnetic (EM) modes. The consequence of this layered composite structure on radar target imaging is to introduce long time constant relaxations on target detections in radar range profiles. Therefore, weak and close by targets behind walls become obscured and sometimes totally invisible in the image. Thus, wall reflections should be suppressed, or significantly mitigated, prior to applying image formation methods. One of the simple but effective methods is based on background subtraction. If the received signals can be approximated as the superposition of the wall and the target reflections, then subtracting the raw complex data without target (empty scene) from that with the target would remove the wall contributions and eliminate its potential overwhelming signature in the image. Availability of the empty scene, however, is not possible in many applications, and one must resort to other means to deal with strong and persistent wall reflections.
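As a minimal sketch of the background subtraction idea, assume the data are stored as a complex array with one row per antenna position and one column per frequency (a layout chosen here only for illustration). The empty-scene measurement is then subtracted sample by sample from the measurement taken with the target present. The function name and the toy wall and target values are hypothetical.

```python
import numpy as np

def background_subtract(scene, empty):
    """Subtract empty-scene data from target-scene data (complex, same shape)."""
    scene = np.asarray(scene, dtype=complex)
    empty = np.asarray(empty, dtype=complex)
    if scene.shape != empty.shape:
        raise ValueError("scene and empty-scene data must have the same shape")
    return scene - empty

# Toy data (illustrative values only): N antenna positions, Q frequencies.
rng = np.random.default_rng(0)
N, Q = 8, 16
# Strong wall return, identical at every antenna position.
wall_row = 10.0 * np.exp(1j * rng.uniform(0, 2 * np.pi, size=(1, Q)))
wall = np.repeat(wall_row, N, axis=0)
# Weak target return that differs from antenna to antenna.
target = 0.1 * np.exp(1j * rng.uniform(0, 2 * np.pi, size=(N, Q)))

residual = background_subtract(wall + target, wall)
print(np.max(np.abs(residual - target)))  # residual error after subtraction
```

The subtraction recovers the target return here only because the toy wall return is identical in both measurements, which is the superposition assumption stated above.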

In the past few years, a number of approaches have been proposed to mitigate the front wall returns and effectively increase the signal-to-clutter ratio. For example, the wall reflections can be gated out, the corresponding parameters can be estimated, and then used to model and subtract the wall contributions from the received data [44]. This wall-dependent technique is effective, but its performance is subject to wall estimation and modeling errors. Another method is proposed in [42], which employs three parallel antenna arrays at different heights. The difference between the received signals at two different arrays is used for imaging. Due to the receiver symmetry with respect to the transmitter, a simple subtraction of the radar returns leads to wall reflection attenuation and image improvement. However, this technique requires two additional arrays, and the effect of the subtraction operation on the target reflection is unknown and cannot be controlled. In [54], the wall reflections are eliminated by operating the imaging radar in cross-polarization, as the cross-polarization returns from a planar interface such as the wall surface are theoretically zero, unlike the returns from humans. However, the cross-polarization returns from the targets behind walls are often very weak compared to their co-polarization counterparts, and as such, the radar performance may be limited by noise.

An eigen-structure technique is developed in [61] to decompose the received radar signal into three subspaces: clutter (including the front wall), target, and noise. Singular value decomposition (SVD) is applied to the data matrix to extract the target signatures. SVD has also been used as a wall clutter reduction method for TWRI in [62,63]. Another approach for wall clutter mitigation, proposed in [64], is based on spatial filtering. It utilizes the strong similarity between wall EM responses, as viewed by different antennas along the entire physical or synthesized array aperture. The idea is that under monostatic radar operation, the wall returns approximately assume equal values and identical signal characteristics across the array elements, provided that the extent of the wall is much greater than the antenna beamwidth. On the other hand, returns from targets with limited spatial extent, such as humans, vary from sensor to sensor. The dc component corresponding to the constant-type return, which is typical of walls, can be significantly reduced while preserving the target returns by applying an appropriate spatial filter along the array axis. However, care must be exercised in the choice of the spatial filter so that its characteristics cause minimum distortion to the target returns. The spatial-domain preprocessing scheme is analogous, in its objective, to the moving target indication (MTI) clutter filter operation in the time domain.
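The eigen-structure idea can be illustrated with a short sketch. It assumes, purely for illustration, that the wall return dominates the data matrix and is captured by the largest singular component, so that zeroing this component suppresses the wall. The number of components assigned to clutter, the matrix layout (antennas by frequencies), and the function name are assumptions; the subspace selection rules of [61–63] are not reproduced here.

```python
import numpy as np

def svd_clutter_removal(data, n_clutter=1):
    """Zero the n_clutter largest singular components of the data matrix.

    Assumption: the wall return occupies the dominant singular components.
    """
    u, s, vh = np.linalg.svd(data, full_matrices=False)
    s_kept = s.copy()
    s_kept[:n_clutter] = 0.0          # discard the assumed clutter subspace
    return (u * s_kept) @ vh          # rebuild the matrix from what remains

# Toy data (illustrative values only).
rng = np.random.default_rng(1)
n_ant, n_freq = 8, 16
# Rank-one wall return: same frequency response at every antenna.
wall = 10.0 * np.ones((n_ant, 1)) * np.exp(1j * rng.uniform(0, 2 * np.pi, (1, n_freq)))
# Weak return that varies across antennas and frequencies.
target = 0.1 * (rng.standard_normal((n_ant, n_freq))
                + 1j * rng.standard_normal((n_ant, n_freq)))
data = wall + target

cleaned = svd_clutter_removal(data)
s_before = np.linalg.svd(data, compute_uv=False)
s_after = np.linalg.svd(cleaned, compute_uv=False)
print(s_before[:3], s_after[:3])
```

In this toy case the dominant singular value is removed and the remaining singular values are unchanged. With real data, part of the target energy may also lie in the discarded component.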

In this section, we present in detail two of the aforementioned methods, namely, the wall parameter estimation method and the spatial filtering technique. For the spatial filtering technique, we consider two different types of filters, namely, the moving average subtraction filter and the infinite impulse response (IIR) notch filter, and compare their effects on the target image.

2.17.2.1 Spatial filtering

2.17.2.1.1 Characteristic of the wall reflection

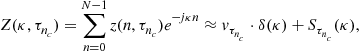

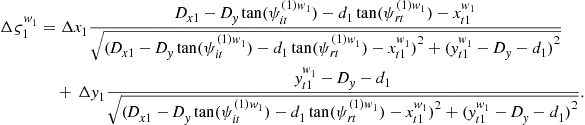

Assume a synthetic aperture radar (SAR) is used in which a single antenna at one location transmits and receives the radar signal, then moves to the next location, and repeats the same operation along the axis parallel to the wall. Assume N antenna locations. With the wall reflection, the received signal at the ![]() th antenna due to a single point target of complex reflectivity ![]() is given by

![]() (17.1)

where ![]() is the signal reflected from the wall, ![]() is the two-way traveling time of the signal from the nth sensor to the wall, ![]() is the transmitted signal convolved with the two-way transmission transfer function of the wall, and ![]() is the two-way traveling time between the ![]() th antenna and the target. Note that ![]() is assumed to be independent of frequency and ![]() does not vary with the sensor location since the wall is parallel to the array. Furthermore, if the wall is homogeneous and much larger than the beamwidth of the antenna, the wall reflection ![]() will be the same for all antenna locations. This implies that the first term in (17.1) assumes the same value across the array aperture. When the wall is not parallel to the array axis, ![]() should be calculated for each sensor location. Compensations for signal attenuations due to differences in the two-way traveling distances for different antenna positions should be performed prior to filtering.

Unlike ![](), the time delay ![]() in (17.1) changes with each antenna location, since the signal path from the antenna to the target is different. For ![]() and ![](), the received signal is

![]() (17.2)

for ![](). Since the time t is fixed, the received signal is now a function of n via the variable ![](). We can rewrite (17.2) as a discrete function of n such that

![]() (17.3)

where ![]() and

and ![]() . Since the delay

. Since the delay ![]() is not linear in

is not linear in ![]() for

for ![]() is a nonuniformly sampled version of

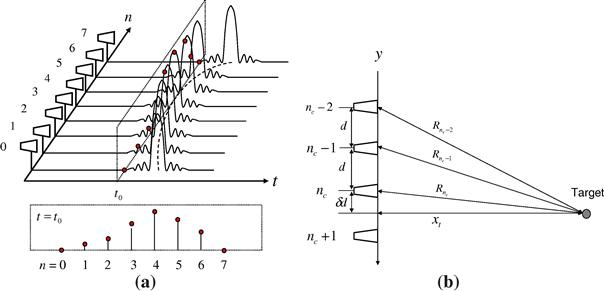

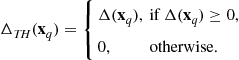

is a nonuniformly sampled version of ![]() . Figure 17.1a shows the signals received by the N antennas at a given time instant. For example, suppose that the

. Figure 17.1a shows the signals received by the N antennas at a given time instant. For example, suppose that the ![]() th sensor is the closest to the target (see Figure 17.1b). If the wall diffraction is negligible, then

th sensor is the closest to the target (see Figure 17.1b). If the wall diffraction is negligible, then

(17.4)

(17.4)

where ![]() . In most cases, the target’s range

. In most cases, the target’s range ![]() is much larger than the antenna spacing d. Then,

is much larger than the antenna spacing d. Then, ![]() . Using Taylor series expansion, we can approximate (17.4) as,

. Using Taylor series expansion, we can approximate (17.4) as,

(17.5)

(17.5)

Figure 17.1 Received signals using SAR data collection scheme. (a) Array signal at ![]() . (b) Range between antenna locations and the target.

. (b) Range between antenna locations and the target.

Therefore,

![]() (17.6)

The received signal at ![]() is given by

(17.7)

The spatial frequency transform of (17.7) is given by

(17.8)

where ![]() denotes the spatial frequency, and ![]() is the target spatial signature at ![]() such as

(17.9)

The above analysis shows that separating wall reflections from target reflections amounts to basically separating constant (zero-frequency signal) from nonconstant valued signals across antennas, which can be performed by applying a proper spatial filter.
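The separation described above can be checked numerically with a small sketch. All values are hypothetical: the antenna count and spacing, the target range, a free-space two-way delay (wall refraction is ignored), and a Gaussian-envelope pulse standing in for the transmitted signal. The wall term is modeled as the same complex value at every antenna, and the target term as the pulse sampled at delays that vary with antenna position.

```python
import numpy as np

C = 3e8                      # propagation speed in air, m/s
N, d, R = 64, 0.05, 4.0      # hypothetical: antennas, spacing (m), target range (m)

x = (np.arange(N) - (N - 1) / 2) * d        # antenna positions along the array
tau_n = 2.0 * np.sqrt(R**2 + x**2) / C      # two-way delay; wall refraction ignored

def pulse(t, fc=2.5e9, width=1e-9):
    """Hypothetical transmitted pulse: Gaussian envelope on a complex carrier."""
    return np.exp(-(t / width) ** 2) * np.exp(2j * np.pi * fc * t)

t0 = 2.0 * R / C + 0.2e-9                   # fixed time instant (fixed down-range)
wall_part = np.full(N, 5.0 + 0.0j)          # identical wall return at every antenna
target_part = 0.5 * pulse(t0 - tau_n)       # pulse sampled nonuniformly across n

wall_spec = np.fft.fft(wall_part)           # spatial spectrum of the wall term
target_spec = np.fft.fft(target_part)       # spatial spectrum of the target term

# Energy away from the zero spatial frequency bin.
wall_off_dc = np.sum(np.abs(wall_spec[1:]) ** 2)
target_off_dc = (np.sum(np.abs(target_spec[1:]) ** 2)
                 / np.sum(np.abs(target_spec) ** 2))
print(f"wall energy away from zero frequency: {wall_off_dc:.2e}")
print(f"fraction of target energy away from zero frequency: {target_off_dc:.2f}")
```

Under these assumptions the wall term occupies only the zero spatial frequency bin, while most of the target energy lies at nonzero spatial frequencies, which is what makes a spatial notch at zero frequency attractive.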

2.17.2.1.2 Target spatial signature

In the previous section, it was shown that the target signal across the antennas at a fixed time (fixed down-range) is a nonuniformly sampled version of the target reflection. In order to minimize the effect of the wall removal, which is done by spatial filtering, on the target signal, it is necessary to find the target spatial frequency bandwidth as viewed by the array aperture. This is a critical factor in designing the proposed spatial filters for the purpose of wall reflection removal. The target spatial frequency bandwidth depends on the target down-range, as shown in (17.9), and is also inversely proportional to the extent of the target signal return along the array. At any given time, the latter depends on the array length, the target down-range, and the target signal temporal duration.

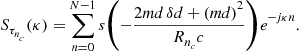

Considering a point target, which reflects the radar signal without modification, the target spatial signature can be analytically computed. The minimum bandwidth occurs when the extent of the target return is maximum. Two different cases can be identified depending on the target location in cross-range relative to the edge of the array. Figure 17.2a shows the target signal return in the time-space domain when the target is located within the array aperture, whereas Figure 17.2b shows the case in which the target is outside the array aperture. In these figures, L represents the array length, ![]() is the signal temporal duration, and R is the target range. Consider the case of Figure 17.2a, where ![]() is the cross-range of the target location from the edge of the array. Without loss of generality, assume that ![]() is larger than or equal to ![](). The maximum width of the target return across the array for the case of Figure 17.2a is [64]

![]() (17.10)

and for the case of Figure 17.2b,

![]() (17.11)

Figure 17.2 Target return signal in the time-space domain. (a) Target is within the array aperture. (b) Target is outside the array aperture.

In the above equations, ![]() is the radar down-range resolution and

![]() (17.12)

The maximum width is achieved when the target is located along the centerline of the array

![]() (17.13)

Define ![]() as the effective width. Then, the maximum effective width is

![]() (17.14)

and the corresponding minimum frequency bandwidth is given by

![]() (17.15)

Note that ![]() decreases as R increases. This implies that the spatial filter should have a narrower stopband to process the more distant target.

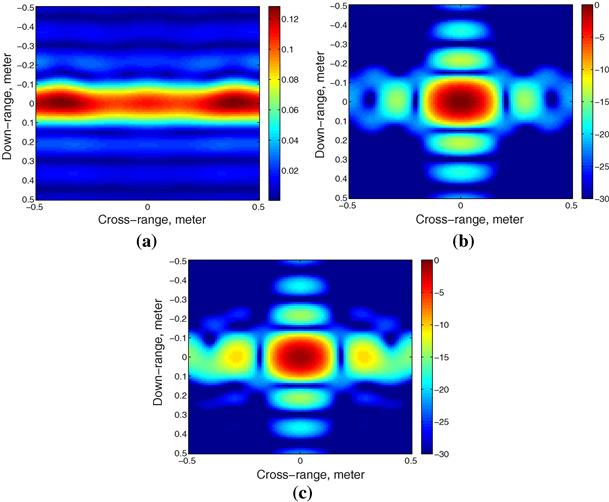

2.17.2.1.3 Moving average wall removal

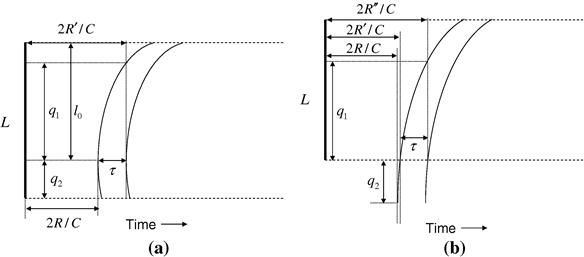

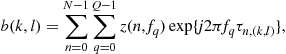

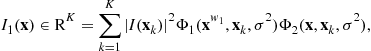

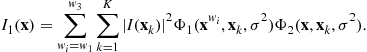

Moving average (MA) filter background subtraction is a noncausal finite impulse response (FIR) filter, which notches out the zero spatial frequency component. The MA subtraction method has been effectively used for removal of the ground reflections in ground penetrating radar [65]. The effect of the MA on the target image can be viewed in the context of the corresponding change in the point spread function (PSF). With the transmitted signal chosen as a stepped-frequency waveform consisting of Q narrowband signals, let ![]() be the signal received at the nth antenna at frequency ![](). Let ![]() be the signal after MA subtraction. That is

![]() (17.16)

(17.17)

where 2D + 1 is the filter length. When the sum is taken over the entire length of the array, the moving average becomes just an average over the entire aperture and 2D + 1 = N. The klth pixel value of the image obtained by applying delay-and-sum (DS) beamforming to the received data ![]() is given by

(17.18)

where ![]() is the two-way traveling time, through the air and the wall, between the nth antenna and the klth pixel location. The new DS beamforming image after filtering is given by

(17.19)

The second term in the above equation is due to the average subtraction. This additional term depends on the signal waveform, target location, and the antenna positions, implying that the effect of average subtraction varies according to these parameters. The PSF is the DS image of a point source as a function of the down-range and the cross-range. Figure 17.3a shows the image of the second term in (17.19) when a point target is located 6 m away from the center of a 3 m long antenna array. In this case, the maximum value is about 0.13 (the maximum value of the PSF is one). The signal is a stepped-frequency waveform covering the 2–3 GHz band with 5 MHz steps. It is noted that the magnitude of this image assumes a high value over a large cross-range area, whereas the down-range spread of the function is small. Figure 17.3b is the PSF without spatial filtering. The modified PSF of the spatially filtered signal, which is the difference between Figure 17.3a and b, is shown in Figure 17.3c. We observe that the size of the mainlobe of the modified PSF remains unchanged, with about 0.8 dB loss in the maximum value. However, the sidelobes are higher compared to the original PSF. Although the average subtraction seems to work well in this simulation, the limits of using this subtraction method when handling distant targets can be shown as follows.
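Before turning to that analysis, DS beamforming of stepped-frequency data can be sketched as follows. This is an illustration under stated assumptions rather than the exact form of (17.18): the delays are free-space two-way delays (the wall is ignored), the received phase convention is taken as exp(-j2πfτ), and the image is normalized by the number of antenna-frequency pairs. The 31-element sampling of the 3 m aperture, the pixel grid, and the function names are hypothetical; the band, step size, array length, and target range follow the simulation described above.

```python
import numpy as np

C = 3e8  # propagation speed in air, m/s

def two_way_delays(antennas, points):
    """Free-space two-way delays (s) from each antenna to each point.

    antennas: (N, 2), points: (K, 2), as (cross-range, down-range) in m.
    Assumption: wall refraction and slowing are ignored in this sketch.
    """
    diff = antennas[:, None, :] - points[None, :, :]
    return 2.0 * np.linalg.norm(diff, axis=2) / C

def ds_image(z, freqs, delays):
    """Delay-and-sum image from stepped-frequency data.

    z: (N, Q) complex data, freqs: (Q,) in Hz, delays: (N, K) in s.
    Returns (K,) complex pixel values, normalized by N * Q.
    """
    n_ant, n_freq = z.shape
    image = np.zeros(delays.shape[1], dtype=complex)
    for n in range(n_ant):
        # Undo the assumed propagation phase exp(-j 2 pi f tau) at each frequency.
        steer = np.exp(2j * np.pi * freqs[:, None] * delays[n][None, :])  # (Q, K)
        image += z[n] @ steer
    return image / (n_ant * n_freq)

freqs = np.linspace(2.0e9, 3.0e9, 201)                      # 2-3 GHz, 5 MHz steps
antennas = np.column_stack([np.linspace(-1.5, 1.5, 31), np.zeros(31)])  # 3 m array
target = np.array([[0.0, 6.0]])                             # 6 m from array center

# Ideal unit point-target data under the assumed phase convention.
z = np.exp(-2j * np.pi * freqs[None, :] * two_way_delays(antennas, target))

# Hypothetical pixel grid around the target.
xs, ys = np.linspace(-1.0, 1.0, 11), np.linspace(5.0, 7.0, 21)
X, Y = np.meshgrid(xs, ys)
pixels = np.column_stack([X.ravel(), Y.ravel()])

psf = ds_image(z, freqs, two_way_delays(antennas, pixels))
peak = pixels[np.argmax(np.abs(psf))]
print(peak, np.abs(psf).max())
```

With this normalization the image of a unit point target peaks at one at the true target location, matching the PSF normalization quoted above.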

Figure 17.3 Modification in the PSF due to MA subtraction. (a) Second term in (17.19). (b) PSF without filtering. (c) PSF after MA subtraction.

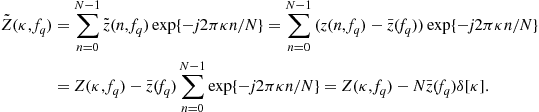

The discrete Fourier transform of ![]() is

(17.20)

Note that

(17.21)

Therefore,

(17.22)
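The property stated by (17.20) to (17.22) can be checked numerically. The following is a minimal NumPy sketch, not the chapter's implementation: the array size `N`, the constant `wall` level, and the random test signal `z` are assumptions introduced only for illustration. It subtracts the aperture average from every antenna output and confirms that only the zero spatial frequency bin of the DFT is altered.

```python
import numpy as np

rng = np.random.default_rng(0)
N = 67                       # number of antenna positions (assumed)
wall = 5.0                   # wall return, identical at every antenna (assumed)
z = wall + rng.standard_normal(N) + 1j * rng.standard_normal(N)

z_filt = z - z.mean()        # average subtraction over the entire aperture

Z = np.fft.fft(z)            # spatial DFT before subtraction
Z_filt = np.fft.fft(z_filt)  # spatial DFT after subtraction

# The dc bin is removed; every other spatial frequency bin is left untouched.
dc_removed = bool(np.isclose(Z_filt[0], 0.0, atol=1e-9))
others_unchanged = bool(np.allclose(Z_filt[1:], Z[1:]))
print(dc_removed, others_unchanged)
```

Any target energy that happens to fall in the dc bin is removed together with the wall return, which is the limitation discussed next.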

As shown above, the subtraction removes the single spatial frequency component ![]() without changing other components. The actual spatial frequency band that is filtered out is always ![](), where L is the array length. When ![]() is equal to L, the target bandwidth is ![](). In this case, most of the target signal return will be removed by the process of the average subtraction. The range at which ![]() is

![]() (17.23)

For example, for L = 2 m and ![]() (2 GHz bandwidth), ![]() is equal to 13.33 m, implying that when the target’s down-range is larger than 13.33 m, the MA subtraction method will eliminate most of the target signature, which is undesirable. Thus, MA subtraction is simple, but its fixed spatial filter characteristics may not suit all wall types and target locations.

2.17.2.1.4 Notch filtering

Zero-phase spatial IIR filters, such as notch filters, are good candidates for wall clutter mitigation. The IIR filter provides a flexible design. It overcomes the problem of the fixed MA (average) filter characteristics that could be unsuitable for various wall and target types and locations. Although the spatial extent of the IIR filter is truncated because of the finite number of antennas, the filter is still capable of delivering good wall suppression performance without a significant change in the target signal when the number of antennas is moderate. A simple IIR notch filter that rejects the zero-frequency component is given by

![]() (17.24)

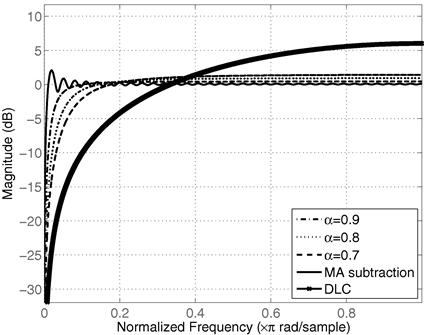

The positive parameter ![]() determines the width of the filter notch. Figure 17.4 shows the frequency response of the filter for various values of ![]() and that of MA subtraction with 67 antenna locations. Note the difference between the notch filters and MA. The notch filter does not have ripples and can change its stopband depending on the parameter ![](). In the underlying problem, ![]() is the spatial frequency and the parameter ![]() provides a compromise between wall suppression and target signal conservation. This flexibility is an advantage of notch filtering over the MA subtraction method. As shown in the figure, as ![]() moves closer to one, the stopband becomes narrower such that most of the signal except dc will pass through the filter.

Figure 17.4 Frequency response of the notch filter for various ![](), DLC with two pulses, and MA subtraction with 67 antennas.
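The trade-off between notch width and ripple can be reproduced with a short numerical sketch. Since (17.24) is not reproduced above, the code assumes a common first-order dc notch, H(ω) = (1 − e^{−jω}) / (1 − α e^{−jω}), which may differ in detail from the filter used in the chapter; the function names and the −3 dB edge criterion are likewise illustrative choices. The MA response is computed for a symmetric window of 2D + 1 = 67 positions.

```python
import numpy as np

def notch_response(omega, alpha):
    """Assumed first-order dc notch: H = (1 - e^{-jw}) / (1 - alpha * e^{-jw})."""
    e = np.exp(-1j * omega)
    return (1.0 - e) / (1.0 - alpha * e)

def ma_subtraction_response(omega, D):
    """Response of x[n] - mean(x[n-D], ..., x[n+D]), window length 2D + 1."""
    m = np.arange(-D, D + 1)
    return 1.0 - np.exp(-1j * np.outer(omega, m)).sum(axis=1) / (2 * D + 1)

omega = np.linspace(0.0, np.pi, 1001)    # normalized spatial frequency (rad/sample)

def stopband_edge(alpha):
    """First frequency on the grid where the notch gain reaches -3 dB."""
    mag = np.abs(notch_response(omega, alpha))
    return omega[np.argmax(mag >= 1.0 / np.sqrt(2.0))]

for alpha in (0.5, 0.9, 0.99):
    print(f"alpha = {alpha}: -3 dB edge at {stopband_edge(alpha):.4f} rad/sample")

ma = np.abs(ma_subtraction_response(omega, D=33))   # 67 antenna positions
print("MA gain at dc:", ma[0], "| largest MA gain:", ma.max())
```

Under this assumed form, the stopband edge moves toward dc as α approaches one, while the MA response is fixed by the aperture length and exhibits passband ripple.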

The spatial spectrum of wall reflections may have a nonzero width due to an unstable antenna path and local inhomogeneities. In this case, a wider filter stopband should be applied to remove most of the wall reflections around dc, but it would also reduce the target reflection. Therefore, the spatial filter should be adjusted to the environment. It is noted that the frequency spectrum of the target reflection depends on the range to the target, the transmitted waveform, and the distance between antenna locations. These parameters determine the sampling point of the received signal and, subsequently, the spatial frequency characteristics of the target. If the sampled target signal does not have a dc component, it will not be severely affected by the spatial filter and, as such, the target image after notch filtering will remain approximately unaltered. It is noted that the desire to have the target spatial spectrum least attenuated by the spatial filtering could play an important role in designing signal waveforms for TWRI.

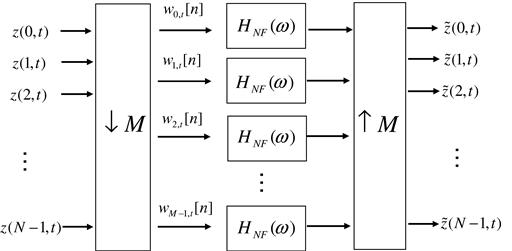

The filter zero-phase property, which is required for focused image formation, can be achieved by applying the notch filter twice, in the forward and backward directions [66]. By two-way filtering, group delay can be removed. One way to avoid the loss of the target signal returns by filtering, particularly if these returns vary slowly across the array aperture antennas, is to increase the delay between antenna outputs. This is true whether the MA, the notch filter, or any other filter is employed. The increased delay can be achieved by downsampling of sensors without making the sensor spacing so large as to cause aliasing. When the antenna positions are downsampled by M, there will be M different sets with ![]() antennas. These M sets are filtered independently and the results are used by the delay-and-sum beamforming. Figure 17.5 shows the diagram of notch filter processing. The downsampled signal ![]() for ![]() and ![]()

![]() (17.25)

The effective filter length of the IIR filter should be considered when downsampling since the length of the downsampled signal is now ![](). A highly truncated IIR will lead to undesired filter characteristics.
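The forward and backward filtering of the M downsampled antenna sets can be sketched as follows. This is an illustrative NumPy sketch under stated assumptions: the first-order recursion y[n] = x[n] − x[n−1] + α y[n−1] stands in for (17.24), the start-up state is initialised as if x[−1] = x[0] so that a constant input produces no transient, and all names and data values are hypothetical.

```python
import numpy as np

def notch_forward(x, alpha):
    """One causal pass of y[n] = x[n] - x[n-1] + alpha * y[n-1] along axis 0.

    Assumption: the state starts as if x[-1] = x[0], so a constant input
    yields an all-zero output without a start-up transient.
    """
    y = np.zeros_like(x)
    prev_x, prev_y = x[0], np.zeros_like(x[0])
    for n in range(x.shape[0]):
        y[n] = x[n] - prev_x + alpha * prev_y
        prev_x, prev_y = x[n], y[n]
    return y

def notch_zero_phase(x, alpha):
    """Forward pass followed by a backward pass over the antenna index."""
    y = notch_forward(x, alpha)
    return notch_forward(y[::-1], alpha)[::-1]

def filter_downsampled(z, alpha, M):
    """Filter each of the M interleaved antenna subsets independently."""
    out = np.empty_like(z)
    for m in range(M):
        out[m::M] = notch_zero_phase(z[m::M], alpha)
    return out

# Synthetic data: N antennas by T time samples (illustrative values only).
N, T, M = 64, 8, 2
n = np.arange(N)[:, None]
wall = 3.0 * np.ones((N, T))                              # same at every antenna
target = np.cos(2 * np.pi * 0.2 * n) * np.ones((1, T))    # varies across the array

wall_only = filter_downsampled(wall, alpha=0.9, M=M)
filtered = filter_downsampled(wall + target, alpha=0.9, M=M)
print(np.abs(wall_only).max(), np.abs(filtered).max())
```

With M = 2, each subset contains only 32 antenna positions, so the truncation of the IIR response becomes more pronounced, in line with the caution above.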

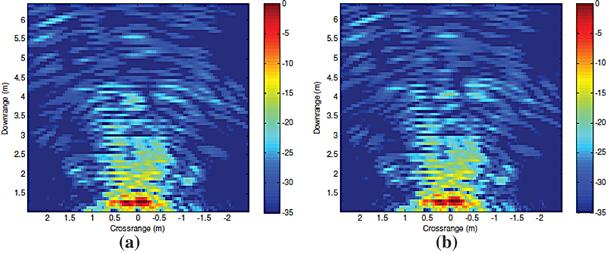

2.17.2.1.5 Imaging results

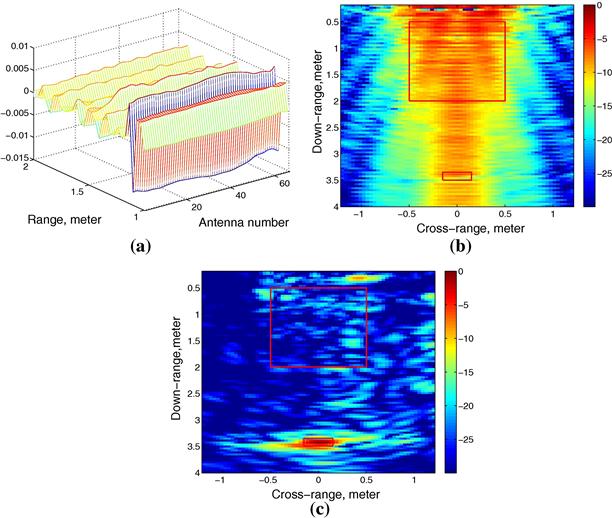

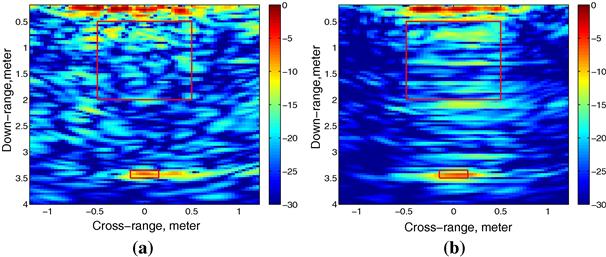

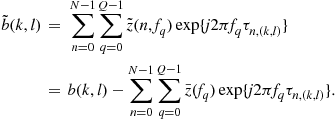

A through-the-wall SAR system was set up in the Radar Imaging Lab at Villanova University. A line array of length 1.2446 m with 0.0187 m inter-element spacing was synthesized, parallel to a 0.14 m thick solid concrete wall, at a standoff distance of 1.01 m. The back and the side walls of the room were covered with RF absorbing material to reduce clutter. A stepped-frequency signal covering the 1–3 GHz band with a 2.75 MHz frequency step was employed. A vertical dihedral was used as the target and was placed 3.04 m away on the other side of the front wall. Each face of the dihedral measures 0.39 m by 0.28 m. The empty scene without the dihedral target present was also measured for comparison.

First, we examine the validity of the wall EM return assumption that the spatial filtering approach is based on. Figure 17.6a shows the signal reflected from the concrete wall. It clearly shows the first few reflections from the wall and as expected, the wall return assumes almost constant values across the antenna positions. In order to quantify the performance of the images, we apply the target-to-clutter ratio (TCR) which is commonly adopted in SAR image evaluations [67,68]. TCR is similar to the MTI improvement factor, except that the latter is for the time-domain, whereas TCR is for the image domain. TCR is calculated as

(17.26)

where ![]() is the target area, ![]() is the clutter area, and ![]() is the number of pixels in the clutter area. TCR, in essence, is the ratio of the maximum pixel value in the target area to the average pixel value in the clutter region. The clutter region is the area where no target is present and the wall reflection is severe. The clutter area is manually selected in close vicinity to the wall, where wall reflections are most pronounced. The rectangles depicted in the figures represent the clutter area and the target area in our example. Figure 17.6b shows the image without any preprocessing; the target is masked by the wall response. Figure 17.6c shows the result of applying background subtraction, and the target is clearly visible. Figure 17.7a and b demonstrate that the spatial filtering approach is effective in reducing the wall reflections without significantly compromising the target image. Figure 17.7a shows the DS image after MA subtraction, and Figure 17.7b shows the DS image after the notch filtering. TCRs in these figures are 2.2 dB (with wall), 26.2 dB (background subtraction), 13.4 dB (notch filtering), and 15.3 dB (MA subtraction). The background subtracted image provides the highest TCR, while MA subtraction and notch filtering provide comparable performance.
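The TCR described above, the peak target pixel over the mean clutter pixel, can be computed with a few lines of NumPy. The sketch below is illustrative only: the function name, the boolean masks, and the toy image are hypothetical, and the conversion to decibels with 20 log10 of pixel magnitudes is an assumption, since the exact scaling of (17.26) is not reproduced here.

```python
import numpy as np

def target_to_clutter_ratio_db(image, target_mask, clutter_mask):
    """Peak target magnitude over mean clutter magnitude, in dB.

    Assumption: pixel magnitudes are compared and converted with 20*log10.
    """
    mag = np.abs(image)
    peak_target = mag[target_mask].max()
    mean_clutter = mag[clutter_mask].mean()    # sum over clutter pixels / N_c
    return 20.0 * np.log10(peak_target / mean_clutter)

# Toy 6 x 6 image: uniform clutter in the rows nearest the wall, one bright target.
image = np.zeros((6, 6), dtype=complex)
image[0:2, :] = 0.1            # clutter region (rows 0 and 1)
image[4, 3] = 1.0              # target peak

clutter_mask = np.zeros(image.shape, dtype=bool)
clutter_mask[0:2, :] = True
target_mask = np.zeros(image.shape, dtype=bool)
target_mask[3:6, 2:5] = True

tcr = target_to_clutter_ratio_db(image, target_mask, clutter_mask)
print(f"TCR = {tcr:.1f} dB")
```

In practice the two masks would correspond to the manually selected rectangles shown in the figures.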

2.17.2.2 Wall parameter estimation, modeling, and subtraction

2.17.2.2.1 Approach

In this approach, the effect of the wall on EM wave propagation is removed in three steps. First, the wall parameters are accurately estimated. Then, the reflected signal from the wall is properly modeled. Finally, the modeled signal is subtracted from the measured signal.

Building walls, such as brick, adobe, and poured concrete walls, can be modeled by homogeneous dielectric slabs. Using the geometric optics approach, the reflection coefficient ![]() for vertically and horizontally polarized incident waves through the wall is given by [44,69]

(17.27)

where ![]() for horizontal polarization and ![]() for vertical polarization of the incident fields. In (17.27), ![]() is the relative dielectric constant, d is the thickness of the wall, and ![]() and ![]() are the normal components of the propagation constants in air and in the dielectric, respectively. The expressions for the vertical/transverse magnetic and horizontal/transverse electric unit vectors are given in [69].

The wall parameters can be estimated from the time-domain backscatter at a given location [44,70]. Basically, the response of the wall is gated from the total backscatter signal and transformed to the frequency domain. The mean-squared error between the calculated reflection coefficient ![]() of (17.27) and the measured reflection coefficient ![]() given by

(17.28)

is computed, and its minimum is sought over the wall thickness and permittivity [44,71]. In (17.28), ![]() is the number of frequency points and ![]() is the nth frequency.
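The search for the minimum of the mean-squared error can be illustrated with a simple grid search. The sketch below is not the estimator of [44,71]: it substitutes the standard normal-incidence reflection coefficient of a lossless dielectric slab in air for (17.27), uses a synthetic noise-free "measurement", and all function names and grid limits are hypothetical.

```python
import numpy as np

C = 3e8  # speed of light in free space (m/s)

def slab_reflection(freqs, eps_r, d):
    """Normal-incidence reflection coefficient of a lossless slab in air.

    Standard single-slab result, used here as a stand-in for (17.27).
    """
    n = np.sqrt(eps_r)
    r = (1.0 - n) / (1.0 + n)                                 # air-to-slab interface
    phase = np.exp(-2j * (2 * np.pi * freqs / C) * n * d)     # round trip in slab
    return r * (1.0 - phase) / (1.0 - r**2 * phase)

def estimate_wall(freqs, gamma_meas, eps_grid, d_grid):
    """Grid search for the (eps_r, d) pair that minimises the mean-squared error."""
    best = (np.inf, None, None)
    for eps_r in eps_grid:
        for d in d_grid:
            err = slab_reflection(freqs, eps_r, d) - gamma_meas
            mse = np.mean(np.abs(err) ** 2)
            if mse < best[0]:
                best = (mse, eps_r, d)
    return best

freqs = np.arange(1.0e9, 2.5e9 + 1, 12.5e6)               # 1 to 2.5 GHz, 12.5 MHz steps
gamma_meas = slab_reflection(freqs, eps_r=5.7, d=0.20)     # synthetic measurement

eps_grid = np.linspace(3.0, 9.0, 61)                       # steps of 0.1
d_grid = np.linspace(0.10, 0.30, 21)                       # steps of 1 cm
mse, eps_hat, d_hat = estimate_wall(freqs, gamma_meas, eps_grid, d_grid)
print(f"eps_r = {eps_hat:.2f}, d = {d_hat:.2f} m, mse = {mse:.2e}")
```

A lossy wall would require a complex permittivity and hence a third search dimension; a gradient-based refinement around the best grid point is a common alternative to a finer grid.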

The estimated wall parameters are then used to compute the wall EM reflection. This could be accomplished either numerically using EM modeling software or, in the case of far-field conditions, analytically using the following expression [44]

![]() (17.29)

where ![]() is the standoff distance of the radar from the wall, ![]() is the wavelength, G is the antenna gain, ![]() is the nth wave constant, c is the speed of light in free space, and ![]() is the reflectivity matrix from the wall at normal incidence angle and is given by,

(17.30)

In (17.30), the subscripts “v” and “h,” respectively, denote the vertical and horizontal polarization and ![]() is given by (17.27). This estimated wall reflection should be coherently subtracted from the total received signal in order to isolate the signature of the target on the other side of the wall.

The isolated target return can then be processed to generate an image of the scene. The effects of the wall transmissivity on the target image are the following: (1) displacement of the target from its actual position in range and (2) significant degradation in cross-range resolution [41,44]. To compensate for the effects of transmission through the wall, a compensation factor proportional to the inverse of the square of the wall transmissivity can be used in the conventional free-space image formation methods. In cases where transmissivity is low and the signal is noisy, division by a small noise-affected number may cause significant distortion. In such cases, one may use only the phase of the transmission coefficients as a compensation factor [44].
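The difference between the two compensation options can be seen in a one-sample numerical sketch. The function name and all numerical values below are hypothetical and chosen only to show the noise amplification that occurs when the transmission coefficient is small.

```python
import numpy as np

def compensate_transmission(signal, T, phase_only=False):
    """Undo two-way propagation through the wall for one frequency sample.

    T is the complex one-way transmission coefficient. With phase_only=True,
    only the two-way phase is removed and the amplitude is left untouched.
    """
    if phase_only:
        return signal * np.exp(-2j * np.angle(T))
    return signal / T**2

T = 0.05 * np.exp(1j * 0.3)    # weak transmission (illustrative value)
clean = T**2                   # unit-reflectivity return seen through the wall
noise = 1e-3 * (1 + 1j)        # small additive noise (illustrative value)

full = compensate_transmission(clean + noise, T)
phase = compensate_transmission(clean + noise, T, phase_only=True)

# Error after full inversion versus error after phase-only compensation.
print(abs(full - 1.0), abs(phase - abs(T) ** 2))
```

Full inversion restores the amplitude but scales the noise by 1/|T|², whereas phase-only compensation leaves the noise level unchanged at the cost of an uncorrected amplitude.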

2.17.2.2.2 Imaging results

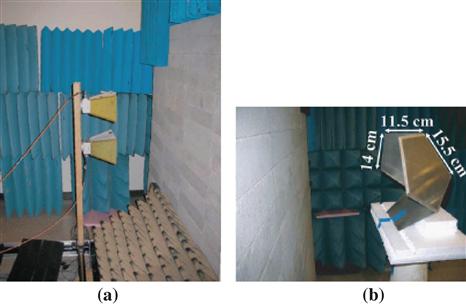

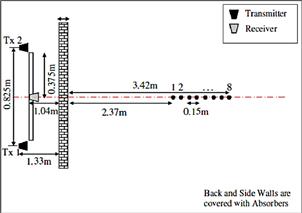

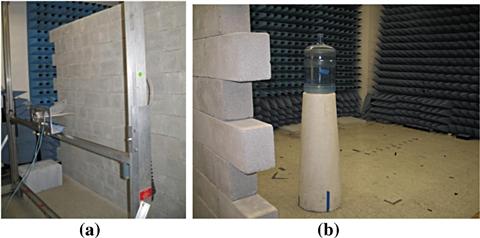

An experimental study was conducted at the Radiation Laboratory of the University of Michigan. The measurement setup consists of an HP8753D vector network analyzer, two double ridge horn antennas with an operational frequency range of 1–18 GHz, an XY table along with a control unit, and a personal computer. The vertically aligned antennas, one for transmission and the other for reception, are mounted on a vertical wooden rod (along the z-axis), which is attached to the carriage of the XY table. A 2.37 m × 1.77 m wall composed of poured concrete blocks is made on top of a layer of cinder blocks inside the laboratory. The underlying cinder block layer is used to line up the antennas in height at approximately the middle of the concrete block wall. Figure 17.8a shows the side view of the measurement setup. A small trihedral corner reflector with pentagonal panel geometry is used as a point target behind the wall, as shown in Figure 17.8b. The back corner of the trihedral (scattering phase center) is at x = −0.71 m and at the same height as that of the receiver antenna, i.e., 1.28 m above the ground plane. The transmitting antenna is attached about 0.25 m below the receiving antenna on the wooden rod. The antennas are moved along a scan line of length 95.88 cm, parallel to the wall, with a spacing of 2.04 cm. The apertures of both antennas are about 0.45 m away from the wall. The frequency of operation is from 1 to 2.5 GHz and the frequency step is 12.5 MHz. It is noted that the antennas are in the far-field region of the target.

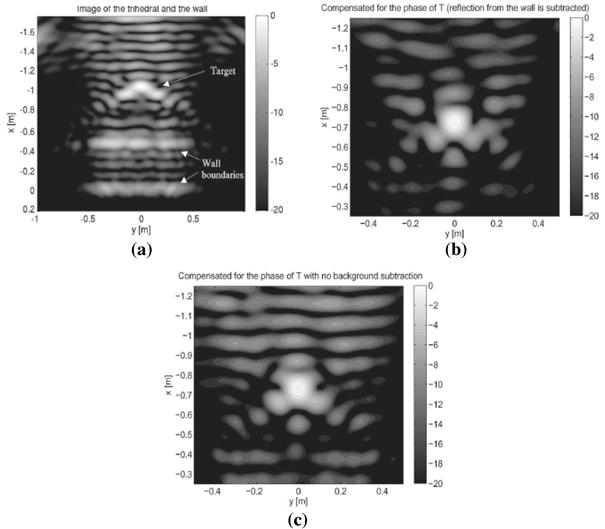

An image is first constructed under the assumption of free-space propagation by using the total received signal, and is shown in Figure 17.9a. Here, in addition to the target, the wall is also imaged, primarily as two parallel lines showing the front and back boundaries. Since backscatter from the wall is very strong, the sidelobes generated by image formation spill over into the desired image domain, which is manifested as multiple lines parallel to the wall surfaces. Also, the target appears blurred and its location is biased. Figure 17.9b shows the image after the estimate, model, and subtract approach was applied. The wall thickness and the permittivity were estimated using (17.28) as 20 cm and 5.7 + j0.6, respectively. The wall reflection was computed using (17.29) and subtracted from the total received signal, and the resulting signal was used for image formation. For comparison, Figure 17.9c shows the image formed from the signal prior to wall return subtraction, with compensation applied for the effect of transmission through the wall. Although the imaged target is at the correct location and is refocused in Figure 17.9c, the wall sidelobes are still evident in the image. By comparing Figure 17.9b and c, a substantial improvement in clutter rejection is observed after subtraction of the modeled wall return.

2.17.3 Multipath exploitation

The existence of targets inside a room or in an enclosed structure introduces multipath in the radar return, which results in false targets or ghosts in the radar images. These ghosts lie on or near the back and side walls, leading to a cluttered image with several false positives. Without a reasonable through-the-wall multipath model, it becomes difficult to associate a ghost with a particular target. Identifying the nature of the targets in the image and tagging the ghosts with their respective targets according to a developed multipath model, although important for reducing false alarms, is not the final goal of a high-performance through-the-wall imaging system. Since each multipath provides some information about the target, it becomes prudent to utilize the multipath, once identified, rather than ignore it. The utilization or exploitation of the multipath to one’s advantage represents a paradigm shift when compared to the classical approach of either ignoring or mitigating it.

For through-the-wall radar imaging applications, the existence of multipath has been recently demonstrated for stationary targets in [52,72,73]. In [72], the authors use distributed fusion to remove the false targets caused by either multipath or target interactions for stationary scenes after suitable image registration. In [52], time reversal techniques are used to alleviate ghosts and clutter from the target scene, and in [73], a synthetic aperture radar (SAR) based image of a human inside a room is shown along with possible multipath ghosts. However, rigorous multipath modeling and analysis are not presented in [52,72,73] or the references therein.

In this section, we derive a model for the multipath in an enclosed room of four walls. The model considers propagation through a front wall and specular reflections at interior walls. A SAR system is considered and stationary or slow moving targets are assumed. Although the multipath model presented deals with walls, reflections from the ceiling and floor can be readily handled. We demonstrate analytically that the multipath as seen by each sensor is displaced, and, therefore, we derive the actual focusing positions of the multipath ghosts in downrange and crossrange. The multipath model permits an implementation of a multipath exploitation algorithm, which associates as well as maps each target ghost back to its corresponding true target location. In so doing, the exploitation algorithm improves the radar system performance by ameliorating the false positives in the original SAR image as well as increasing the signal-to-clutter ratio (SCR) at the target locations, culminating in enhanced behind the wall target detection and localization. It is noted that the exploitation algorithm only maps back target-wall interactions; target-target interactions are left untreated. The multipath exploitation algorithm is inspired by the work in [74], wherein the shadowed regions of a target are revealed via its multipath returns. The difference, however, is that we are not striving to reveal the shadowed regions of the target. In our case, we deal with targets with arbitrary dielectric constants, and wish to associate and map each multipath to its true target location.

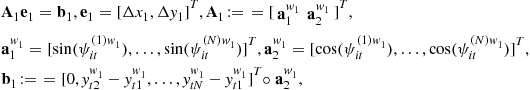

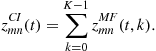

2.17.3.1 Image formation algorithm

In this section, we describe the through-the-wall delay-and-sum beamforming approach [40,41,75,76]. We consider a SAR system in which a single antenna at one location transmits a wideband signal and receives the radar return, and then moves to the next location and repeats the same operation along the axis parallel to the front wall. Assume N monostatic antenna locations. The setup is as depicted in Figure 17.10. Consider a single point target, located at ![](). At each antenna location, the radar transmits a pulsed waveform ![](), where “t” indexes the time within the pulse, and measures the reflected signal. The target return at the ![]()th antenna location is given by,

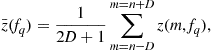

![]() (17.31)

where ![]() represents the target reflectivity, ![]() is the complex amplitude associated with the one-way propagation through a dielectric wall for the ![]()th antenna location [77], and ![]() represents the one-way through-the-wall propagation delay from the ![]()th antenna location to the target.

The scene of interest comprises several pixels indexed by the downrange and the crossrange. The complex composite return from the ![]()th pixel location ![]() is obtained by applying time delays to the N received signals, followed by weighting and summing the results. That is,

(17.32)

The signal ![]() is passed through a matched filter, with impulse response ![](). The complex amplitude assumed by the pixel ![]() in the image ![]() is obtained by sampling the matched filter output at time ![](),

![]() (17.33)

where “∗” denotes the convolution operation. Equations (17.32) and (17.33) describe the standard beamforming approach in through-the-wall radar imaging. It is noted that if the imaged pixel is in the vicinity of or at the true target location, then the complex amplitude in (17.33) assumes a high value as given by the system’s point spread function. The process described in (17.32) and (17.33) is carried out for all pixels in the scene of interest to generate the image of the scene.
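The delay, weight, and sum procedure can be illustrated with a compact simulation. The sketch below makes several simplifying assumptions that are not part of the chapter: propagation is in free space (no wall refraction), the waveform is stepped-frequency so that the delay compensation and matched filtering reduce to a phase correction per frequency, the weights are unity, and all names, grids, and geometry values are hypothetical.

```python
import numpy as np

C = 3e8  # speed of light (m/s)

def two_way_delay(ant_x, px, py):
    """Two-way free-space delay between antennas on y = 0 and pixel (px, py)."""
    return 2.0 * np.hypot(px - ant_x, py) / C

def ds_image(data, freqs, ant_x, xs, ys):
    """Delay-and-sum image from stepped-frequency data of shape (N, Q)."""
    image = np.zeros((len(ys), len(xs)), dtype=complex)
    for iy, py in enumerate(ys):
        for ix, px in enumerate(xs):
            tau = two_way_delay(ant_x, px, py)                   # shape (N,)
            steer = np.exp(2j * np.pi * np.outer(tau, freqs))     # undo the delays
            image[iy, ix] = np.mean(data * steer)                 # unit weights
    return image

ant_x = np.linspace(-0.6, 0.6, 25)            # N = 25 antenna positions (m)
freqs = np.arange(1.0e9, 3.0e9 + 1, 50e6)     # Q = 41 frequency steps (Hz)
target = (0.2, 3.0)                           # point target (x, y) in metres

# Simulated unit-reflectivity return: exp(-j 2 pi f tau_n) at each antenna.
tau_t = two_way_delay(ant_x, *target)
data = np.exp(-2j * np.pi * np.outer(tau_t, freqs))

xs = np.linspace(-1.0, 1.0, 21)               # crossrange grid, 0.1 m spacing
ys = np.linspace(2.0, 4.0, 21)                # downrange grid, 0.1 m spacing
image = ds_image(data, freqs, ant_x, xs, ys)
iy, ix = np.unravel_index(np.argmax(np.abs(image)), image.shape)
print(f"peak at x = {xs[ix]:.1f} m, y = {ys[iy]:.1f} m")
```

The image magnitude around the peak is the point spread function of this simulated system; replacing `two_way_delay` with a through-the-wall delay would account for refraction at the front wall.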

The beamforming approach as described above is pertinent to a point target in a two-dimensional (2D) scene. Extensions to three-dimensional (3D) scenes and spatially extended targets are discussed in [40,78]. It is noted that, in the above beamforming description, we have not considered the multipath returns; neither have we addressed the specificities of calculating the delays, ![](), which will be treated in detail in the following sections. Without loss of generality, we now assume that the weights ![]() and the target has unit reflectivity.

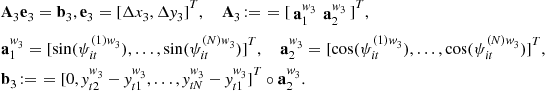

2.17.3.2 Multipath model

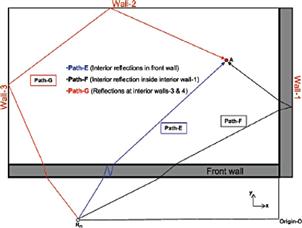

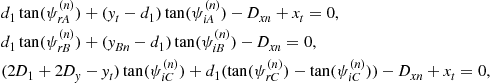

Consider a room under surveillance using a SAR system. A priori knowledge of the room layout, i.e., wall locations and material properties, is assumed. The scene being observed relative to the ![]()th sensor location is as shown in Figure 17.11. The origin is marked as point “O” in the figure, and the standard convention for the positive x- and y-axes is assumed. The ![]()th sensor location is given by ![](). The front wall has a thickness ![]() and dielectric constant ![](). For notational simplicity, the back and the side walls are also assumed to have ![]() as the dielectric constant. The side walls, labeled as wall-1 and wall-3, have a length ![](), whereas the front wall and the back wall (wall-2) have a length ![](). The target is stationary and at location ![](). The standoff distance from the front wall is constant for each sensor location constituting the synthetic aperture, and is denoted as ![](). In the figure, we consider the direct path, referred to as path-A, and three additional paths, namely, paths-B, C, and D, which correspond to single-bounce multipath. In general, there exist other paths which can contribute to the multipath returns; these include multiple bounces from the back and side walls as well as paths that include multiple reflections within each of the four walls themselves. Examples of the former and the latter are provided in Figure 17.12. Such paths are defined as higher-order multipath. Hence, in Figure 17.11, we have considered only first-order multipath. It is noted that higher-order multipath are in general weaker compared to the first-order multipath due to the secondary reflections and refractions at the various air-wall interfaces and high attenuation in the wall material. Therefore, we choose to exclude these paths from the model.

The walls are assumed smooth with specular reflections. The smoothness assumption is valid, since for through-the-wall radars, the wavelength of operation is much larger than the roughness of the walls. Specular reflections are a direct consequence of the wall smoothness, and necessitate that the angle of incidence be equal to the angle of reflection. Note that, in general, the back and side walls may each be of a different material (interior or exterior grade), and thus may assume different values for the wall thickness and dielectric constant. If interior reflections inside these walls are considered, then the thickness of these walls is required [52,73,74]. However, since we have ignored such higher-order paths, we only require the dielectric constant of the back and side walls to be known. As the EM wave propagates through the front wall, it bends at the medium discontinuity as dictated by Snell’s law, i.e., each of the paths, as seen in Figure 17.11, has an associated angle of incidence and an angle of refraction. For example, the angles of incidence and refraction for path-B are denoted as ![]() and ![](), respectively. Similar nomenclature follows for the remaining paths.

Let the reflection points on wall-1, 2, and 3 be denoted by ![](), and ![](), with respective position vectors ![](), and ![](). It is clear that these position vectors are dependent on the sensor location. The one-way path delays for the four paths, with the antenna at the ![]()th location, are denoted by ![](), and can be derived from the geometry as

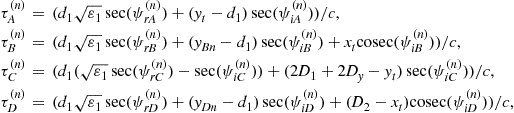

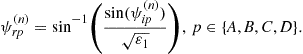

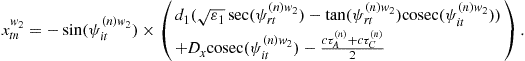

(17.34)


with c being the speed of light and the coordinates of ![](), and ![]() given by

(17.35)


Equations (17.34) and (17.35) depend on the angles of incidence and refraction. Since the standoff distance is a known constant, by projecting the various paths to the x-axis, we obtain the following equations, which are useful in calculating the various angles.

(17.36)


![]()

The angles of refraction can be determined from Snell’s law as,

(17.37)


Substituting the angles of refraction from (17.37) in (17.36), and using (17.35), we obtain a set of equations that can be solved numerically for the angles of incidence by using the Newton method. The angles of refraction can then be obtained using (17.37).
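As an illustration of this numerical step, the following sketch solves for the angle of incidence of the direct path only, under an assumed geometry that is not taken from (17.36): a single homogeneous front wall of thickness `wall_thickness` and relative permittivity `eps_r`, a total air depth `air_depth` (standoff distance plus target depth behind the wall), and a lateral antenna-to-target offset `dx`. All function and parameter names are hypothetical. The ray is assumed to satisfy Snell's law, sin(theta_i) = sqrt(eps_r) sin(theta_r), and the projection condition air_depth tan(theta_i) + wall_thickness tan(theta_r) = dx.

```python
import math

C = 299_792_458.0  # speed of light in vacuum, m/s


def solve_incidence_angle(dx, air_depth, wall_thickness, eps_r,
                          tol=1e-12, max_iter=50):
    """Newton iteration for the incidence angle of a ray crossing one slab.

    Assumed geometry (illustrative, not the chapter's exact equations):
    the ray travels a total depth `air_depth` in air and `wall_thickness`
    in the wall, and must cover the lateral offset `dx`.
    Returns (theta_i, theta_r) in radians.
    """
    sqrt_eps = math.sqrt(eps_r)
    # Initial guess: straight line from antenna to target (no refraction).
    theta = math.atan2(dx, air_depth + wall_thickness)
    for _ in range(max_iter):
        s = math.sin(theta)
        cos_r = math.sqrt(1.0 - (s / sqrt_eps) ** 2)   # cos(theta_r) via Snell
        tan_r = (s / sqrt_eps) / cos_r
        # Residual of the lateral projection condition.
        f = air_depth * math.tan(theta) + wall_thickness * tan_r - dx
        # Analytic derivative of the residual with respect to theta_i.
        df = (air_depth / math.cos(theta) ** 2
              + wall_thickness * math.cos(theta) / (sqrt_eps * cos_r ** 3))
        step = f / df
        theta -= step
        if abs(step) < tol:
            break
    theta_r = math.asin(math.sin(theta) / sqrt_eps)
    return theta, theta_r


def one_way_delay(theta_i, theta_r, air_depth, wall_thickness, eps_r):
    """One-way delay: air segments at c, wall segment at c / sqrt(eps_r)."""
    air_len = air_depth / math.cos(theta_i)
    wall_len = wall_thickness / math.cos(theta_r)
    return (air_len + math.sqrt(eps_r) * wall_len) / C


theta_i, theta_r = solve_incidence_angle(dx=2.0, air_depth=4.0,
                                         wall_thickness=0.2, eps_r=6.0)
tau = one_way_delay(theta_i, theta_r, 4.0, 0.2, 6.0)
print(math.degrees(theta_i), math.degrees(theta_r), tau)
```

The multipath paths would need the same kind of iteration with the reflection geometry folded into the projection condition; the sketch only shows the structure of the Newton update.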

We are now in a position to write the radar signal return from the single target scene as a superposition of the direct path and the multipath returns. For the ![]()th sensor location, we obtain

(17.38)


where ![]() is the complex amplitude associated with reflection and transmission coefficients for the one-way propagation along path-p, and depends on the angles of incidence, the angles of refraction, and dielectric properties of the walls [77]. In (17.38), the first summation captures the two-way returns along the direct path and each multipath. More specifically, the signal propagates along a particular path-p, ![](), reaches the target and retraces the same path back to the radar, i.e., path-p. The multipath returns due to the combination paths are captured by the second summation, i.e., the wave initially travels to the target via path-p and returns to the radar through a different path-q, ![]().
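The two-summation structure described above can be sketched numerically. The example below is only an illustration under stated assumptions: it uses a single-tone (stepped-frequency) phase model, in which a round trip with total delay tau contributes exp(-j 2 pi f tau), and it takes the amplitude of a round trip out via path-p and back via path-q to be the product of the two one-way amplitudes. The function name `multipath_return` and its arguments are hypothetical and do not come from (17.38).

```python
import numpy as np


def multipath_return(freqs, tau, amp):
    """Split a single-target return into same-path and combination-path parts.

    freqs : (K,) frequencies in Hz (assumed stepped-frequency model)
    tau   : (P,) one-way delays for the direct path and each multipath, s
    amp   : (P,) complex one-way amplitudes for the same paths
    Returns (same_path, combination), each of shape (K,).
    """
    freqs = np.asarray(freqs, dtype=float)[:, None, None]
    tau = np.asarray(tau, dtype=float)
    amp = np.asarray(amp, dtype=complex)

    # Round-trip delay and amplitude for every ordered pair (p, q):
    # out along path-p, back along path-q.
    pair_delay = tau[:, None] + tau[None, :]
    pair_amp = amp[:, None] * amp[None, :]
    terms = pair_amp * np.exp(-2j * np.pi * freqs * pair_delay)  # (K, P, P)

    same_path = np.trace(terms, axis1=1, axis2=2)      # p == q terms
    combination = terms.sum(axis=(1, 2)) - same_path   # p != q terms
    return same_path, combination


# Four paths (A, B, C, D) with made-up delays and amplitudes.
freqs = np.linspace(1e9, 3e9, 5)
tau = np.array([12e-9, 15e-9, 18e-9, 16e-9])
amp = np.array([1.0, 0.4, 0.3, 0.35])
same_path, combination = multipath_return(freqs, tau, amp)
total_return = same_path + combination
```

Each unordered pair of distinct paths appears twice in the combination term (out via p and back via q, and the reverse), and both orderings share the same total delay.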

From Figure 17.11 and (17.35), we observe that the coordinates of the points of reflection at the back and side walls are sensor dependent, implying that the multipath presents itself at different locations to the different sensors. Location of the multipath as seen by each sensor and the actual focusing position of the resulting ghost in the image are discussed next.

2.17.3.2.1 Multipath locations

For simplicity of analysis, consider the scenario in Figure 17.11, but without the front wall. We first focus on the multipath originating from wall-1, i.e., the signal traveling to the target via path-A, and following path-B back to the radar or vice versa. The goal is to find the location of the multipath corresponding to the above combination path as seen by each of the N sensors. Let the point ![](), represented by the vector ![](), denote the multipath location as seen by the ![]()th sensor. The superscript “![]()” stresses that the multipath is associated with the reflection at wall-1 and the subscript “t” indicates that the multipath is due to the target located at ![](). Reflecting path-B about wall-1 yields an alternate radar-target geometry, as shown in Figure 17.13. We obtain the virtual target denoted by point ![]() with coordinates ![]() such that the distance ![](), which implies that

![]() (17.39)


In other words, the combination path comprising path-A and path-B has the same length as path-A and the path connecting the ![]()th sensor to the virtual target at ![](). Due to the monostatic nature of the radar system, this combination path appears to the ![]()th sensor as the traditional two-way path of length ![](). Therefore, we have

![]() (17.40)


Additionally, we obtain the virtual radar at ![]() on the other side of the wall, as shown in Figure 17.13. Considering Figure 17.13, it is readily observed that the first-order multipath has the same time delay as a bistatic configuration, comprising the radar and the virtual radar. That is, in terms of range, we have

![]() (17.41)


where ![]() represents the bistatic configuration. In the bistatic case, the constant range contour corresponding to the multipath is an ellipse, which has foci at the radar and virtual radar and passes through the target and the virtual target locations. On the other hand, the monostatic measurement scenario described by (17.40) has a circular constant range contour, centered at the radar. Since the two measurements are equivalent, the location of the multipath corresponds to the point of intersection of the bistatic elliptical and the monostatic circular constant range contours. The equations for the ellipse and circle are given by

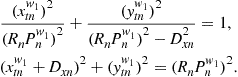

(17.42)


Solving (17.42) for an intersection point, we can readily see that ![]() is the only solution. In other words, regardless of the location of the target, its first-order multipath always falls on the wall. The y-coordinate of ![]() can then be derived as

(17.43)
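The free-space argument can be checked numerically with a short sketch. It rests on assumptions that are not taken from the figures: wall-1 is modeled as the vertical line x = `x_wall`, the sensor and target lie on the same side of it, and there is no front wall. The function name `wall1_multipath` is hypothetical. A point at the monostatic range r from the radar that also lies on the ellipse with foci at the radar and the virtual radar (distance sum 2r) must be at range r from the virtual radar as well, so it is equidistant from the two foci and therefore lies on the wall.

```python
import numpy as np


def wall1_multipath(sensor, target, x_wall):
    """Free-space multipath location for the path-A / path-B combination.

    Assumes wall-1 is the line x = x_wall (illustrative geometry only).
    Returns (location, reflection_point, virtual_target, apparent_range).
    """
    sensor = np.asarray(sensor, dtype=float)
    target = np.asarray(target, dtype=float)

    # Mirror the target about the wall to get the virtual target.
    virtual_target = np.array([2.0 * x_wall - target[0], target[1]])

    # Specular reflection point: where the sensor-to-virtual-target
    # line crosses the wall.
    frac = (x_wall - sensor[0]) / (virtual_target[0] - sensor[0])
    reflection_point = sensor + frac * (virtual_target - sensor)

    len_a = np.linalg.norm(target - sensor)                      # path-A
    len_b = (np.linalg.norm(reflection_point - sensor)
             + np.linalg.norm(target - reflection_point))        # path-B

    # The monostatic radar interprets the combination path as range r.
    apparent_range = 0.5 * (len_a + len_b)

    # Intersection of the circle of radius r with the wall; keep the
    # root in front of the array (larger y).
    dy = np.sqrt(apparent_range ** 2 - (x_wall - sensor[0]) ** 2)
    location = np.array([x_wall, sensor[1] + dy])
    return location, reflection_point, virtual_target, apparent_range


target = (1.0, 3.0)
x_wall = 2.5
for x_s in (-0.5, 0.0, 0.5):
    loc, refl, vt, r = wall1_multipath((x_s, 0.0), target, x_wall)
    print(x_s, loc, r)
```

Running the loop shows a different y-coordinate on the wall for each sensor position, which is the sensor dependence that the closed-form expression describes.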


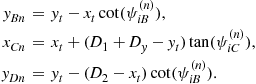

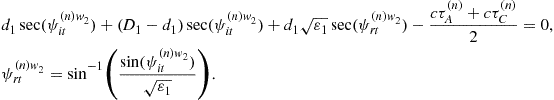

In (17.43), the positive y-coordinate is the desired value, as the other solution lies behind the radar. It is clear from (17.43) that the multipath location is sensor dependent. Therefore, the locations of the target multipath corresponding to the various sensor positions will be displaced from one another. In the presence of the front wall, the multipath remains on the wall, but the equations for the ellipse and circle differ from those presented in (17.42). Hence, for the scenario comprising the front wall and wall-1, the multipath appears on wall-1, with its y-coordinate given by

(17.44)


where ![]() and ![]() are, respectively, the angles of incidence and refraction for the ghost. The solution in (17.44) depends on the angle ![](), which can be obtained by solving

(17.45)


So far, we have considered the multipath corresponding to wall-1; there exist two other multipath returns tagged to the remaining walls, namely, wall-2 and wall-3. We can readily show, using similar analysis, that the multipath associated with wall-2 appears on wall-2, i.e., ![](), at an x-coordinate given by

(17.46)


The angles in the above equation are determined by solving the equations,

(17.47)


Likewise, the multipath associated with wall-3 appears at wall-3, i.e., ![](), and at a y-coordinate given by

(17.48)


The respective angles can be obtained by solving,

(17.49)


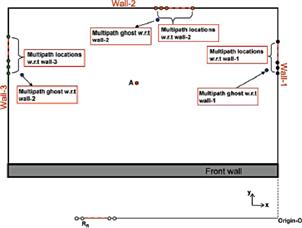

From the above equations, it is again observed that the N sensors view the multipath, resulting from the combination paths associated with a particular wall, at different locations on that wall. That is, the multipath may be regarded as a moving target. As a result, when applying beamforming, the multipath ghost appears at a different pixel in the vicinity of the true multipath locations. We note that the multipath ghost will lie inside the room except when the target is near the corners of the room. In this case, as the multipath corresponding to the N sensors may appear along an extrapolation of the wall, the multipath ghost may appear outside the room. We further note that the virtual target corresponds to two-way propagation along the single-bounce multipath, and is readily seen to lie outside the room perimeter. The multipath focusing pixel analysis for the combination paths is discussed next.

2.17.3.2.2 Multipath focusing analysis

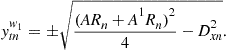

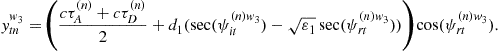

Consider Figure 17.14, which shows the multipath locations with respect to walls 1–3, and the focusing pixels with respect to these walls. Consider the multipath locations associated with wall-1; further assume that the focused multipath ghost appears at a pixel location given by

![]() (17.50)


where ![]() is the true multipath location corresponding to the first sensor position. Using a first-order Taylor series expansion, which is valid under conditions of a small aperture [79] and when the ghost is in the vicinity of the true multipath locations, we obtain the difference in propagation path length between the multipath ghost location and the true multipath location with respect to the first sensor position as

(17.51)


Following the analysis in [79], (17.51) can be expressed as

![]() (17.52)


In general, for the ![]()th sensor, we have

![]() (17.53)


For the multipath to focus at the location ![](), we must have

![]() (17.54)


This yields a least squares (LS) formulation, given by

(17.55)


where “![]()” denotes the Hadamard or element-wise product. The solution of (17.55) is readily obtained by ![]().

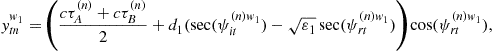

Now, considering the multipath with respect to wall-2, we have the following LS formulation for the focused ghost pixel:

(17.56)


![]()

Similarly, for the multipath from wall-3, we have

(17.57)

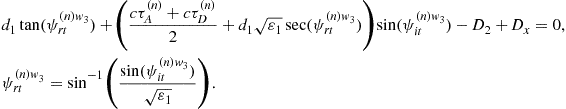


The formulations in (17.56)–(17.58) assume that the sensor position increases from left to right. In other words, sensor-1 is at the far left of the array whereas sensor-N is at the far right.

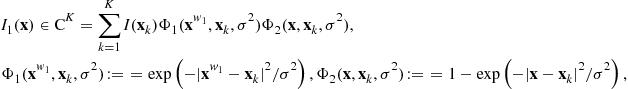

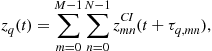

2.17.3.3 Multipath exploitation algorithm

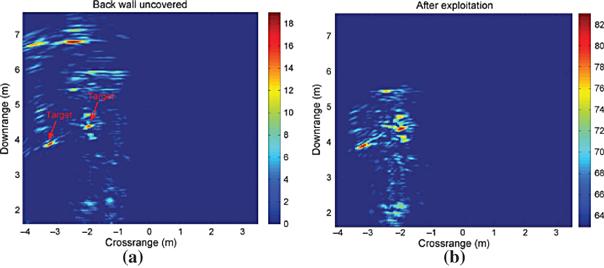

Noting that the multipath ghosts exist due to the presence of the target, we state our objective as follows. Given the beamformed image ![]() , our aim is to associate each multipath ghost with the respective target via the model developed in Section 2.17.3.2. The principle is captured in Figure 17.15, which consists of two targets and six false positives or multipath ghosts. We desire to associate and map these ghosts to their respective true target locations. The main advantages of such an association or mapping are reduction in false positives in the original beamformed image, and an increase in the SCR at the true target coordinates. Note that the first advantage is directly implied in Figure 17.15, whereas the second advantage is explained as follows.

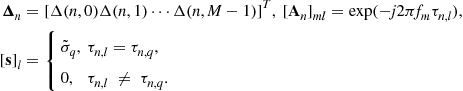

, our aim is to associate each multipath ghost with the respective target via the model developed in Section 2.17.3.2. The principle is captured in Figure 17.15, which consists of two targets and six false positives or multipath ghosts. We desire to associate and map these ghosts to their respective true target locations. The main advantages of such an association or mapping are reduction in false positives in the original beamformed image, and an increase in the SCR at the true target coordinates. Note that the first advantage is directly implied in Figure 17.15, whereas the second advantage is explained as follows.