2.07.4.2 Decentralized observations with centralized estimation

In many cases, the observations are gathered in distributed form, through sensors deployed over a certain area, but the decision (either estimation or detection) is carried out in a central fusion center. In this section, we review some of the problems related to distributed estimation, with centralized decision. In such a case, the measurements gathered by the sensors are sent to a fusion center through rate-constrained physical channels. The question is how to design the quantization step in each sensor in order to optimize some performance metric related to the estimation of the parameter of interest. Let us start with an example, to introduce the basic issues.

Let us consider a network of $N$ sensors, each observing a value $x_k$ containing a deterministic parameter $\theta$, corrupted by additive noise $n_k$, i.e.,

$$x_k = \theta + n_k, \quad k = 1, \ldots, N \tag{7.99}$$

The noise variables $n_k$ are assumed to be zero-mean, spatially uncorrelated random variables with variance $\sigma_k^2$. Suppose that the sensors transmit their observations via some orthogonal multiple access scheme to a control center, which wishes to estimate the unknown signal $\theta$ by minimizing the estimation mean square error (MSE) $E[(\hat{\theta} - \theta)^2]$. In the ideal case, where the observations are unquantized and received by the control center without distortion, the control center can compute the best linear unbiased estimator (BLUE), and the estimate $\hat{\theta}$ is given by

$$\hat{\theta} = \left(\sum_{k=1}^{N} \frac{1}{\sigma_k^2}\right)^{-1} \sum_{k=1}^{N} \frac{x_k}{\sigma_k^2} \tag{7.100}$$

with MSE given by $E[(\hat{\theta} - \theta)^2] = \left(\sum_{k=1}^{N} 1/\sigma_k^2\right)^{-1}$. This estimator coincides with the maximum likelihood estimator when the noise variables are jointly Gaussian and uncorrelated.
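The BLUE in (7.100) is an inverse-variance weighted average of the observations. A minimal Python sketch, assuming scalar observations and known noise variances (the function name `blue_estimate` is hypothetical, chosen for illustration):

```python
def blue_estimate(x, sigma2):
    """BLUE of a scalar parameter from x_k = theta + n_k, as in (7.100).

    x      : observations x_1, ..., x_N
    sigma2 : noise variances sigma_1^2, ..., sigma_N^2 (all > 0)
    Returns (theta_hat, mse) with mse = (sum_k 1/sigma_k^2)^(-1).
    """
    if not x or len(x) != len(sigma2):
        raise ValueError("x and sigma2 must be non-empty and of equal length")
    weights = [1.0 / s for s in sigma2]              # inverse-variance weights
    total = sum(weights)
    theta_hat = sum(w * xk for w, xk in zip(weights, x)) / total
    return theta_hat, 1.0 / total


# Three sensors: the most reliable one (smallest variance) dominates the estimate.
theta_hat, mse = blue_estimate([1.2, 0.8, 1.0], [0.5, 0.5, 0.1])
print(theta_hat, mse)
```

Here the weights are 2, 2 and 10, so the estimate is 1.0 and the MSE is 1/14.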

Let us now consider the realistic case, where each sensor quantizes the observation $x_k$ to generate a discrete message $m_k(x_k)$ of $L_k$ bits. Assuming error-free transmission, the fusion center must then provide an estimate $\tilde{\theta}$ of the true parameter, based on the messages $m_1(x_1), \ldots, m_N(x_N)$ transmitted by all the nodes. More specifically, assuming a uniform quantizer that generates unbiased message functions, the estimator at the control center performs a linear combination of the received messages. Let us suppose that the unknown signal to be estimated belongs to the range $[-W, W]$ and that each sensor uniformly divides the range $[-W, W]$ into $2^{L_k}$ intervals of length $\Delta_k = 2W/2^{L_k}$, rounding $x_k$ to the midpoint of these intervals. In this case, the quantized value $m_k(x_k)$ at the $k$th sensor can be written as $m_k(x_k) = \theta + n_k + v_k$, where the quantization noise $v_k$ is independent of $\theta$ and $n_k$. It can be proved that $m_k(x_k)$ is an unbiased estimator of $\theta$ with

$$E\left[(m_k(x_k) - \theta)^2\right] \le \sigma_k^2 + \delta_k^2 \tag{7.101}$$

where $\delta_k^2$ denotes an upper bound on the quantization noise variance and is given by

![]() (7.102)

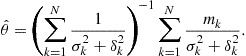

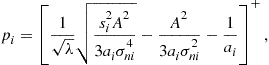

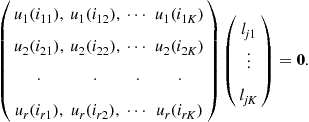
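The midpoint quantizer described above can be sketched in a few lines of Python. This is an illustration under stated assumptions: the signal range is $[-W, W]$, a sensor using $L$ bits splits it into $2^{L}$ intervals of length $\Delta = 2W/2^{L}$, inputs outside the range are clipped, and the helper name `midpoint_quantize` is hypothetical. Because every input is rounded to the midpoint of its own interval, the quantization error never exceeds $\Delta/2$, so for this quantizer the error variance is at most $\Delta^2/4$.

```python
def midpoint_quantize(x, W, L):
    """Quantize x in [-W, W] with L bits: 2**L intervals of length 2W/2**L,
    each input rounded to the midpoint of its interval.

    Returns (index, value): the L-bit message index and the reconstructed midpoint.
    """
    n_int = 2 ** L
    delta = 2.0 * W / n_int
    x = min(max(x, -W), W)                        # clip to the assumed signal range
    idx = min(int((x + W) // delta), n_int - 1)   # x == W goes to the last interval
    return idx, -W + (idx + 0.5) * delta


W, L = 1.0, 3
delta = 2 * W / 2 ** L
grid = [i / 1000 - 1 for i in range(2001)]        # dense grid over [-1, 1]
errors = [abs(midpoint_quantize(x, W, L)[1] - x) for x in grid]
print(max(errors) <= delta / 2 + 1e-12)           # error never exceeds Delta/2
```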

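A linear unbiased estimator of $\theta$ is [49]

$$\tilde{\theta} = \left(\sum_{k=1}^{N} \frac{1}{\sigma_k^2 + \delta_k^2}\right)^{-1} \sum_{k=1}^{N} \frac{m_k(x_k)}{\sigma_k^2 + \delta_k^2} \tag{7.103}$$

This estimate yields the MSE upper bound

$$E\left[(\tilde{\theta} - \theta)^2\right] \le \left(\sum_{k=1}^{N} \frac{1}{\sigma_k^2 + \delta_k^2}\right)^{-1} \tag{7.104}$$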

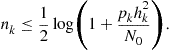
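The fusion rule (7.103) and the bound (7.104) are again an inverse-variance weighting, now with the inflated variances $\sigma_k^2 + \delta_k^2$. A minimal Python sketch, assuming the quantization-noise bounds $\delta_k^2$ are supplied by the caller (the name `fuse_quantized` is hypothetical):

```python
def fuse_quantized(m, sigma2, delta2):
    """Linear unbiased fusion of quantized messages, as in (7.103)-(7.104).

    m      : received messages m_k(x_k)
    sigma2 : observation-noise variances sigma_k^2
    delta2 : upper bounds delta_k^2 on the quantization-noise variances
    Returns (theta_tilde, mse_bound).
    """
    weights = [1.0 / (s + d) for s, d in zip(sigma2, delta2)]
    total = sum(weights)
    theta_tilde = sum(w * mk for w, mk in zip(weights, m)) / total
    return theta_tilde, 1.0 / total


# The coarser sensor (larger delta_k^2) receives a smaller weight.
est, bound = fuse_quantized([0.9, 1.1], [0.1, 0.1], [0.3, 0.1])
print(est, bound)
```

Setting all $\delta_k^2$ to zero recovers the ideal BLUE of (7.100).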

As mentioned before, the previous strategy assumes that there are no transmission errors. This condition can be approached arbitrarily closely by enforcing the transmission rate of sensor $k$ to be strictly less than the capacity of the channel from sensor $k$ to the fusion center. If we denote by $p_k$ the transmit power of sensor $k$, by $h_k$ the channel coefficient between sensor $k$ and the control node, and by $\sigma_r^2$ the noise variance at the control node receiver, the bound on the transmit rate guaranteeing an arbitrarily small error probability is

(7.105)

The problem is then how to allocate power and bits over each channel in order to fulfill some optimality criterion dictated by the estimation problem. This problem was tackled in [49], where the authors proposed to minimize the Euclidean norm of the transmit power vector under the constraints that the estimation variance be upper bounded by a given quantity and that the number of bits per symbol be less than the channel capacity. Here we formulate the problem as the minimization of the total transmit power under the constraint that the final MSE be upper bounded by a given quantity $D$. From (7.105), defining ![](), we can derive the number of quantization levels as a function of the transmit power,⁶

![]() (7.106)

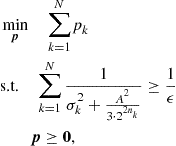
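To make the rate/power link concrete, the sketch below assumes (as an illustrative model, not a restatement of (7.105)) an AWGN link whose capacity is $\log_2(1 + |h_k|^2 p_k/\sigma_r^2)$ bits per symbol, where $\sigma_r^2$ is the receiver noise variance. Under that assumption, `max_bits` returns the largest integer number of bits strictly below capacity, and `min_power` returns the power at which capacity equals a given $L_k$, i.e., the value obtained when the rate bound is treated as an equality, as in the relaxation with real-valued $L_k$. Both helper names are hypothetical.

```python
import math


def max_bits(p, h2, noise_var):
    """Largest integer L with L < log2(1 + h2 * p / noise_var) (assumed AWGN capacity)."""
    cap = math.log2(1.0 + h2 * p / noise_var)
    return max(math.ceil(cap) - 1, 0)              # strictly below capacity


def min_power(L, h2, noise_var):
    """Power at which the assumed capacity equals L bits/symbol."""
    return (2.0 ** L - 1.0) * noise_var / h2


# With |h|^2 = 1 and sigma_r^2 = 1, p = 7 gives exactly 3 bits/symbol of capacity,
# so only 2 bits satisfy the strict inequality.
print(max_bits(7.0, 1.0, 1.0), min_power(3, 1.0, 1.0))
```

Treating the bound as an equality yields `min_power`; an actual system would need slightly more power (or one bit fewer) to remain strictly below capacity.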

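Our aim is to minimize the sum of the powers transmitted by all the sensors under the constraint

$$\left(\sum_{k=1}^{N} \frac{1}{\sigma_k^2 + \delta_k^2}\right)^{-1} \le D \tag{7.107}$$

Denoting by $\mathbf{p} = [p_1, \ldots, p_N]^T$ the power vector, the optimization problem can be formulated as

$$\begin{aligned} \min_{\mathbf{p}} \quad & \sum_{k=1}^{N} p_k \\ \text{s.t.} \quad & \left(\sum_{k=1}^{N} \frac{1}{\sigma_k^2 + \delta_k^2}\right)^{-1} \le D, \\ & p_k \ge 0, \quad k = 1, \ldots, N \end{aligned} \tag{7.108}$$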

where $\delta_k^2$ depends on $p_k$ through $L_k$, as in (7.105). In practice, the values $L_k$ are integers. However, searching for the optimal integer values $L_k$ leads to an integer programming problem. To relax the problem, we assume that the variables $L_k$ are real. Then, by using (7.106), the optimization problem in (7.108) can be formulated as

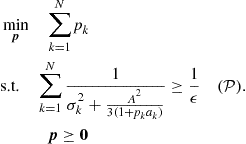

(7.109)

Problem (7.109) is indeed a convex optimization problem, and it is feasible if $D > \left(\sum_{k=1}^{N} 1/\sigma_k^2\right)^{-1}$, i.e., if the target MSE is larger than the MSE of the ideal BLUE.

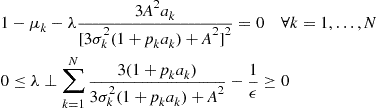

The optimal solution of the convex problem (7.109) can be found by imposing its KKT conditions, i.e.,

(7.110)

![]()

where ![]() and

and ![]() denote the Lagrangian multipliers associated to the

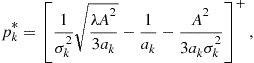

denote the Lagrangian multipliers associated to the ![]() constraints. The solution for the optimal powers turns out to be

constraints. The solution for the optimal powers turns out to be

(7.111)

where ![]() and ![]() is found by imposing the MSE constraint to hold with equality.

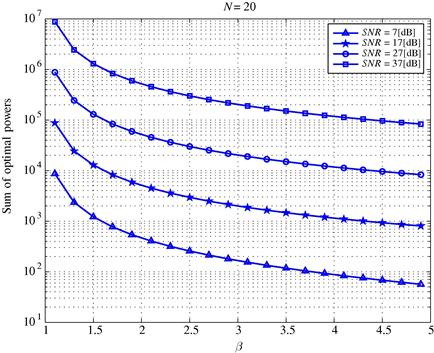

It is now useful to present some numerical results. To guarantee the existence of a solution, we set the bound ![]() with ![]() and ![](). In Figure 7.13 we report the sum of the optimal transmit powers vs. ![](), for different SNR values. The number of sensors is ![](). We can notice that the minimum transmit power increases for smaller values of ![](), i.e., when we require the realistic system to perform closer and closer to the ideal communication case.

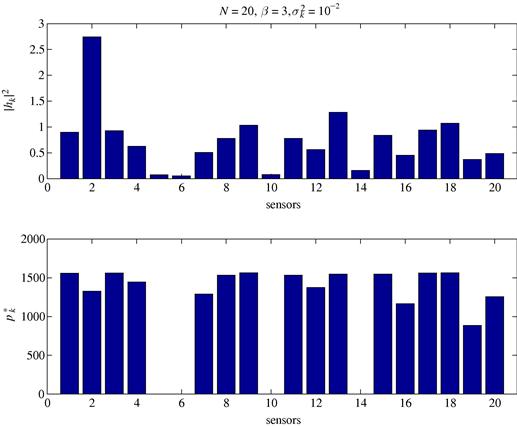

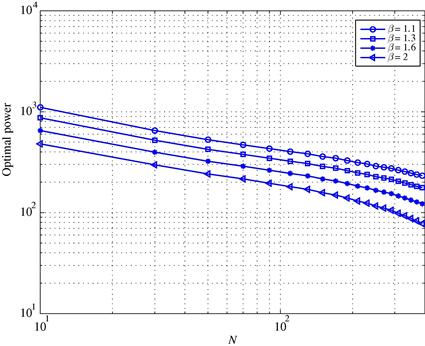

In the bottom subplot of Figure 7.14 we report an example of optimal power allocation obtained by solving problem (7.109), corresponding to the channel realization shown in the top subplot, assuming a constant observation noise variance ![](). We can observe that, in the optimal solution, only the nodes with the best channel coefficients are allowed to transmit. Finally, in Figure 7.15 we plot the sum of the optimal transmit powers versus the number of sensors $N$, for different values of ![](). We can see that, as $N$ increases, less power is needed to achieve the desired estimation variance, as expected.
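The relaxed problem (7.109) is solved in the text through its KKT conditions. As a purely illustrative alternative for the original integer problem, the sketch below uses a greedy marginal-return heuristic (a swapped-in technique, not the solution (7.111) and not guaranteed to be optimal): starting from silent sensors, it repeatedly grants one extra bit to the sensor offering the largest increase of the sum in (7.104) per unit of additional power, until (7.107) holds. It relies on two hypothetical modeling assumptions: $\delta_k^2 = W^2/4^{L_k}$ (the $\Delta_k^2/4$ bound of a midpoint quantizer with $2^{L_k}$ intervals over $[-W, W]$) and the AWGN power law $p_k = (2^{L_k}-1)\,\sigma_r^2/|h_k|^2$, with $\sigma_r^2$ the receiver noise variance. The name `greedy_bit_loading` is hypothetical.

```python
def greedy_bit_loading(sigma2, h2, W, D, noise_var=1.0, max_bits=16):
    """Hypothetical greedy integer bit loading (a heuristic, not Eq. (7.111)).

    Assumptions: delta_k^2 = W**2 / 4**L_k and p_k = (2**L_k - 1) * noise_var / h2[k].
    Returns (bits, powers) such that the MSE bound (7.104) is <= D.
    """
    n = len(sigma2)
    bits = [0] * n

    def info(k, L):
        # Term 1/(sigma_k^2 + delta_k^2) of (7.104); a silent sensor contributes nothing.
        return 0.0 if L == 0 else 1.0 / (sigma2[k] + W ** 2 / 4.0 ** L)

    def power(k, L):
        return (2.0 ** L - 1.0) * noise_var / h2[k]

    target, total = 1.0 / D, 0.0
    while total < target:
        best, best_ratio = None, 0.0
        for k in range(n):
            if bits[k] >= max_bits:
                continue
            gain = info(k, bits[k] + 1) - info(k, bits[k])   # inverse-MSE increase
            cost = power(k, bits[k] + 1) - power(k, bits[k])  # extra power needed
            if gain / cost > best_ratio:
                best, best_ratio = k, gain / cost
        if best is None:
            raise ValueError("MSE target D not reachable with max_bits per sensor")
        total += info(best, bits[best] + 1) - info(best, bits[best])
        bits[best] += 1
    return bits, [power(k, bits[k]) for k in range(n)]


# Four sensors with equal observation noise and decreasing channel gains |h_k|^2.
bits, powers = greedy_bit_loading([0.1] * 4, [4.0, 1.0, 0.25, 0.01], W=1.0, D=0.05)
print(bits, sum(powers))
```

In this toy example the sensor with the weakest channel receives no bits and stays silent, which is qualitatively the behavior described for Figure 7.14.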

2.07.5 Distributed detection

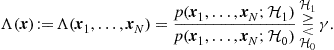

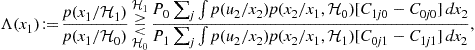

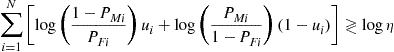

The distributed detection problem is, in general, more difficult to handle than the estimation problem. There is an extensive literature on distributed detection, but a number of problems are still open. According to decision theory, an ideal centralized detector having error-free access to all the measurements collected by a set of nodes should form the likelihood ratio and compare it with a suitable threshold [5]. Denoting by $H_0$ and $H_1$ the two alternative hypotheses, i.e., absence or presence of the event of interest, and by $p(\mathbf{x}|H_i)$ the joint probability density function of the whole set of observed data $\mathbf{x}$ under hypothesis $H_i$, many decision tests can be cast as threshold strategies where the likelihood ratio (LR) is compared with a threshold $\gamma$, which depends on the decision criterion. This is true, for example, for two important formulations, the Bayes approach and the Neyman-Pearson criterion, the only difference between the two being the value assumed by the threshold $\gamma$. The detection rule decides for $H_1$ if the threshold is exceeded, or for $H_0$ otherwise. In formulas,

$$\Lambda(\mathbf{x}) \triangleq \frac{p(\mathbf{x}|H_1)}{p(\mathbf{x}|H_0)} \underset{H_0}{\overset{H_1}{\gtrless}} \gamma \tag{7.112}$$

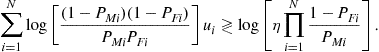
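For a concrete instance of (7.112), assume, purely for illustration, i.i.d. Gaussian observations with mean $\mu_0$ under $H_0$, mean $\mu_1$ under $H_1$, and common variance $\sigma^2$. Working with logarithms, the test compares $\ln\Lambda(\mathbf{x})$ with $\ln\gamma$. For the Bayes criterion with single-detector costs $C_{ij}$ (deciding $H_i$ when $H_j$ is true) and priors $P_0, P_1$, the standard threshold is $\gamma = P_0(C_{10}-C_{00})/\left(P_1(C_{01}-C_{11})\right)$; under the Neyman-Pearson criterion, $\gamma$ would instead be set from the target false alarm probability. All function names are hypothetical.

```python
import math


def gaussian_log_lr(x, mu0, mu1, sigma2):
    """ln Lambda(x) for i.i.d. samples x_n ~ N(mu_i, sigma2) under H_i."""
    return sum(((xn - mu0) ** 2 - (xn - mu1) ** 2) / (2.0 * sigma2) for xn in x)


def bayes_threshold(P0, P1, C00=0.0, C01=1.0, C10=1.0, C11=0.0):
    """gamma = P0 (C10 - C00) / (P1 (C01 - C11)); Cij = cost of deciding H_i when H_j holds."""
    return P0 * (C10 - C00) / (P1 * (C01 - C11))


def lr_test(x, mu0, mu1, sigma2, gamma):
    """Return 1 (decide H_1) if Lambda(x) > gamma, else 0 (decide H_0), as in (7.112)."""
    return 1 if gaussian_log_lr(x, mu0, mu1, sigma2) > math.log(gamma) else 0


gamma = bayes_threshold(0.5, 0.5)      # equal priors and 0/1 costs give gamma = 1
print(lr_test([0.9, 1.2, 0.7], 0.0, 1.0, 1.0, gamma))
```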

Ideally, with no communication constraints, every node should then send its observation vector $\mathbf{x}_i$, with $i = 1, \ldots, N$, to the fusion center, which should then use all the received vectors to implement the LR test, as in (7.112). In reality, there are intrinsic limitations, namely: (a) the finite number of bits with which every sensor has to encode its measurements before transmission; (b) the maximum latency with which the decision has to be taken; (c) the finite capacity of the channel between the sensors and the fusion center. The challenging problem is then how to devise an optimum decentralized detection strategy that takes into account the limitations imposed by communication over realistic channels. In its most general setting, this problem is still open, but many works in the literature address specific cases. The interested reader may check the book [50] or the excellent tutorial reviews given in [51–53]. The situation becomes more complicated when we explicitly take into account the capacity bound imposed by the communication channel and look for the number of bits to be used to quantize the local observations before transmitting them to the fusion center. This problem was addressed in [54,55], where it was shown that binary quantization is optimal for detecting deterministic signals in Gaussian noise and for detecting signals in Gaussian noise using a square-law detector. The interesting indication, in these contexts, is that the gain offered by having more sensor nodes exceeds the benefit of getting more detailed (nonbinary) information from each sensor. A general framework for the decentralized detection problem is the one where the topology describing the exchange of information among sensing nodes is not simply a tree, with all nodes sending data to a fusion center, but a general graph. Each node is assumed to transmit a finite-alphabet symbol to its neighbors, and the problem is how to find the encoding (quantization) rule at each node.

A class of problems admitting a message passing algorithm with provable convergence properties was proposed in [10]. The solution is a sort of distributed fusion protocol that explicitly takes into account the limits on the communication resources. An interesting and well-motivated observation model is a correlated random field: in many applications, the observations concern physical quantities, like temperature or pressure, which, being subject to diffusion processes, are spatially and temporally correlated. One of the first works addressing the detection of a known signal embedded in correlated Gaussian noise was [56]. Using large deviations theory, the authors of [57] study the impact of node density, assuming that observations become increasingly correlated as sensors get closer to each other. More recently, the detection of a Gauss-Markov random field (GMRF) with nearest-neighbor dependency was studied in [8]. Scaling laws for the energy consumption of optimal and sub-optimal fusion policies were then presented in [9]. The problem of energy-efficient routing of sensor observations from a Markov random field was analyzed in [58].


A classification of the various detection algorithms depends on the adopted criterion. A first important classification is the following:

1. Global decision is taken at the fusion center

a. Nodes send data to the fusion center (FC); FC takes global decision

b. Nodes send local decisions to FC; FC fuses local decisions

2. Every node is able to take a global decision

In the first case, the observation is distributed across the nodes, but the decision is centralized. This case has received most of the attention. The interested reader may check, for example, the book [50] or the tutorial reviews given in [51–53]. In the second case, the decision is also decentralized. This case has been considered only relatively recently; see, for example, [59–65].

An alternative classification is between

1. Fixed sample size detection

2. Sequential detection

In the first case, the network collects a given amount of data over the time and space domains and then takes a decision. In the second case, the number of observations, either in time or in terms of number of involved sensors, is not decided a priori, but it is updated at every new measurement. The network stops collecting information only when some performance criterion is satisfied (typically, on the false alarm and detection probabilities) [66–68].
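Sequential detection is classically associated with Wald's sequential probability ratio test (SPRT). As an illustrative sketch under assumptions not taken from the text (i.i.d. Gaussian samples, a single observer), the code below accumulates the log-likelihood ratio and stops as soon as it crosses one of Wald's approximate thresholds $\ln\frac{1-\beta}{\alpha}$ and $\ln\frac{\beta}{1-\alpha}$, where $\alpha$ and $\beta$ are the target false alarm and miss probabilities. The function name `sprt` is hypothetical.

```python
import math


def sprt(samples, mu0, mu1, sigma2, alpha=0.01, beta=0.01):
    """Wald's SPRT for i.i.d. N(mu0, sigma2) vs N(mu1, sigma2) samples.

    Returns (decision, n_used): decision is 1 (H_1), 0 (H_0), or None if the
    samples run out before either threshold is crossed.
    """
    upper = math.log((1.0 - beta) / alpha)     # crossing above -> decide H_1
    lower = math.log(beta / (1.0 - alpha))     # crossing below -> decide H_0
    llr = 0.0
    for n, x in enumerate(samples, start=1):
        # log-likelihood ratio increment contributed by one Gaussian sample
        llr += ((x - mu0) ** 2 - (x - mu1) ** 2) / (2.0 * sigma2)
        if llr >= upper:
            return 1, n
        if llr <= lower:
            return 0, n
    return None, len(samples)


print(sprt([1.0] * 30, 0.0, 1.0, 1.0))
```

The number of samples used is random: it adapts to how quickly the evidence accumulates, which is the defining feature of the sequential approach.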

One of the major difficulties in distributed detection comes from establishing the optimal decision thresholds at the local and global levels. The main problem is how to optimize the local decisions, taking into account that the final decision will be the result of the interaction among the nodes. Taking a local decision can be interpreted as a form of source coding: simple (binary) hypothesis testing can in fact be seen as a form of binary coding. Whenever the observations are conditionally independent, given each hypothesis, the likelihood ratio test at the sensor nodes is indeed optimal [69]. However, finding the optimal quantization levels is a difficult task. Even when the observations are i.i.d., assuming identical decision rules is very common and apparently well justified. Nevertheless, there are counterexamples showing that nonidentical decision rules can be optimal [69]. In the i.i.d. case, identical decision rules turn out to be optimal only asymptotically, as the number of nodes tends to infinity [70].
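When the nodes send binary local decisions to the FC and, as is common, all nodes apply the same local rule to i.i.d. observations, a simple fusion rule is the k-out-of-N counting rule: decide $H_1$ if at least $k$ nodes report $u_i = 1$. This rule is not discussed in the text and is used here only as an illustration. Under conditional independence, the global false alarm and detection probabilities follow from the binomial distribution, as in the sketch below (helper names are hypothetical).

```python
from math import comb


def k_out_of_n(p_local, n, k):
    """P(at least k of n independent nodes decide 1), each with probability p_local."""
    return sum(comb(n, m) * p_local ** m * (1 - p_local) ** (n - m)
               for m in range(k, n + 1))


def counting_rule_performance(pf, pd, n, k):
    """Global (P_FA, P_D) of the k-out-of-n rule with identical local (pf, pd)."""
    return k_out_of_n(pf, n, k), k_out_of_n(pd, n, k)


# Majority vote over 5 nodes, each with local P_FA = 0.1 and P_D = 0.8.
print(counting_rule_performance(0.1, 0.8, 5, 3))
```

In this example the majority vote lowers the false alarm probability to about 0.0086 while raising the detection probability to about 0.942.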

A simple example may be useful to grasp some of the difficulties associated with distributed detection. For this purpose, we briefly recall the seminal work of Tenney and Sandell [71]. Let us consider two sensors, each measuring a real quantity $y_i$, with $i = 1, 2$. Based on its observation $y_i$, sensor $i$ decides whether the phenomenon of interest is present or not. In the first case, it sets the decision variable $u_i = 1$; otherwise, it sets $u_i = 0$. The question is how to implement the decision strategy according to some optimality criterion. The approach proposed in [71] is Bayesian: the goal of each sensor is to minimize the Bayes risk, which can be made explicit by introducing the cost coefficients and the observation probability model. Let us denote by $C_{ijk}$ the cost of detector 1 deciding on $H_i$ and detector 2 deciding on $H_j$, when the true hypothesis is $H_k$. Denoting by $P_k$ the prior probability of event $H_k$ and by $p(u_1, u_2, y_1, y_2, H_k)$ the joint pdf of having $H_k$, observing the pair $(y_1, y_2)$, and deciding for the pair $(u_1, u_2)$, the average risk can be written as

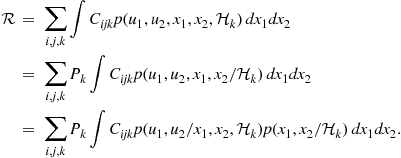

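$$\bar{R} = \sum_{i=0}^{1}\sum_{j=0}^{1}\sum_{k=0}^{1} \int\!\!\int C_{ijk}\, p(u_1 = i, u_2 = j, y_1, y_2, H_k)\, dy_1\, dy_2 \tag{7.113}$$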

In this case, each node observes only its own variable and takes a decision independently of the other node. Hence, we can set

$$p(u_1, u_2, y_1, y_2, H_k) = P_k\, p(u_1|y_1)\, p(u_2|y_2)\, p(y_1, y_2|H_k) \tag{7.114}$$

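Expanding the right-hand side by explicitly summing over index $i$, we get

$$\bar{R} = \sum_{j=0}^{1}\sum_{k=0}^{1} \int\!\!\int P_k\, p(u_2 = j|y_2)\, p(y_1, y_2|H_k) \left[C_{0jk}\, p(u_1 = 0|y_1) + C_{1jk}\, p(u_1 = 1|y_1)\right] dy_1\, dy_2 \tag{7.115}$$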

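Considering that $p(u_1 = 0|y_1) = 1 - p(u_1 = 1|y_1)$ and collecting in the constant $c$ all the terms which do not contain $p(u_1 = 1|y_1)$, we get

$$\bar{R} = c + \int p(u_1 = 1|y_1) \sum_{j=0}^{1}\sum_{k=0}^{1} P_k \left(C_{1jk} - C_{0jk}\right) \int p(u_2 = j|y_2)\, p(y_1, y_2|H_k)\, dy_2\, dy_1 \tag{7.116}$$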

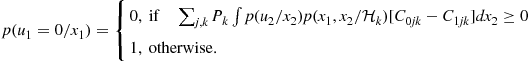

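The average risk is minimized if $p(u_1 = 1|y_1)$ is chosen as follows:

$$p(u_1 = 1|y_1) = \begin{cases} 1, & \text{if } \displaystyle\sum_{j=0}^{1}\sum_{k=0}^{1} P_k \left(C_{1jk} - C_{0jk}\right) \int p(u_2 = j|y_2)\, p(y_1, y_2|H_k)\, dy_2 < 0, \\ 0, & \text{otherwise}. \end{cases} \tag{7.117}$$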

This expression shows that the optimal local decision rule is a deterministic rule. After a few algebraic manipulations, (7.117) can be rewritten, equivalently, as [50]

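$$\Lambda(y_1) \underset{u_1=0}{\overset{u_1=1}{\gtrless}} t_1(y_1) \triangleq \frac{P_0 \sum_{j=0}^{1} \left(C_{1j0} - C_{0j0}\right) \int p(u_2 = j|y_2)\, p(y_2|y_1, H_0)\, dy_2}{P_1 \sum_{j=0}^{1} \left(C_{0j1} - C_{1j1}\right) \int p(u_2 = j|y_2)\, p(y_2|y_1, H_1)\, dy_2} \tag{7.118}$$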

where $\Lambda(y_1) = p(y_1|H_1)/p(y_1|H_0)$ is the LR at node 1. Eq. (7.118) has the structure of an LRT. However, note that the threshold on the right-hand side of (7.118) depends on the observation $y_1$ through the term $p(y_2|y_1, H_k)$, which incorporates the statistical dependency between the observations $y_1$ and $y_2$. Hence, Eq. (7.118) is not a proper LRT.

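The situation simplifies if the observations are conditionally independent, i.e., $p(y_1, y_2|H_k) = p(y_1|H_k)\, p(y_2|H_k)$, $k = 0, 1$, so that $p(y_2|y_1, H_k) = p(y_2|H_k)$. In such a case, the threshold $t_1$ on the right-hand side of (7.118) no longer depends on $y_1$ and can be simplified into

$$t_1 = \frac{P_0 \sum_{j=0}^{1} \left(C_{1j0} - C_{0j0}\right) \int p(u_2 = j|y_2)\, p(y_2|H_0)\, dy_2}{P_1 \sum_{j=0}^{1} \left(C_{0j1} - C_{1j1}\right) \int p(u_2 = j|y_2)\, p(y_2|H_1)\, dy_2} \tag{7.119}$$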

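Since $\int p(u_2 = j|y_2)\, p(y_2|H_k)\, dy_2 = P(u_2 = j|H_k)$, (7.119) can be rewritten as

$$t_1 = \frac{P_0 \sum_{j=0}^{1} \left(C_{1j0} - C_{0j0}\right) P(u_2 = j|H_0)}{P_1 \sum_{j=0}^{1} \left(C_{0j1} - C_{1j1}\right) P(u_2 = j|H_1)} \tag{7.120}$$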

Hence, the threshold $t_1$ to be used at node 1 is a function of $P(u_2 = j|H_k)$, i.e., of the decision rule adopted by node 2. At the same time, the threshold $t_2$ to be used by node 2 will depend on the decision rule followed by node 1. This means that, even if the observations are conditionally independent and the decisions are taken autonomously by the two nodes, the decisions are still coupled through the thresholds. This simple example shows how the detection problem can be rather complicated, even under a very simple setting.

In the special case where $C_{000} = C_{111} = 0$, $C_{010} = C_{100} = C_{011} = C_{101} = 1$, and $C_{110} = C_{001} = 2$, i.e., there is no penalty if both decisions are correct, the penalty is 1 when there is one error, and the penalty is 2 when there are two errors, the threshold simplifies into

$$t_1 = \frac{P_0}{P_1} \tag{7.121}$$

Hence, in this special case, the threshold of each node no longer depends on the decision rule of the other node, and the two detectors become decoupled.
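The coupling expressed by (7.120) is easy to explore numerically. The Python sketch below evaluates $t_1$ from the costs $C_{ijk}$, the priors, and node 2's conditional decision probabilities $P(u_2 = j|H_k)$, which are assumed given (function and cost-table names are hypothetical). With the costs of (7.121), the result equals $P_0/P_1$ whatever node 2 does; with an illustrative "team" cost that charges 1 whenever at least one node errs (a choice made here, not taken from the text), the threshold moves with node 2's rule.

```python
def threshold_node1(C, P0, P1, pu2_H0, pu2_H1):
    """Threshold t_1 of (7.120) for conditionally independent observations.

    C[i][j][k] : cost of node 1 deciding H_i and node 2 deciding H_j when H_k is true
    pu2_Hk[j]  : P(u_2 = j | H_k), j = 0, 1 (node 2's current decision rule)
    """
    num = P0 * sum((C[1][j][0] - C[0][j][0]) * pu2_H0[j] for j in (0, 1))
    den = P1 * sum((C[0][j][1] - C[1][j][1]) * pu2_H1[j] for j in (0, 1))
    return num / den


def make_costs(table):
    """Build the nested list C[i][j][k] from a dict {(i, j, k): cost}."""
    return [[[table.get((i, j, k), 0.0) for k in (0, 1)] for j in (0, 1)] for i in (0, 1)]


triples = [(i, j, k) for i in (0, 1) for j in (0, 1) for k in (0, 1)]
# Costs of (7.121): 0 if both decisions are correct, 1 per wrong decision.
C_sum = make_costs({(i, j, k): float((i != k) + (j != k)) for i, j, k in triples})
# Illustrative "team" cost: 1 if at least one node errs.
C_team = make_costs({(i, j, k): float(i != k or j != k) for i, j, k in triples})

pu2_H0, pu2_H1 = [0.9, 0.1], [0.2, 0.8]
print(threshold_node1(C_sum, 0.5, 0.5, pu2_H0, pu2_H1))   # equals P0/P1
print(threshold_node1(C_team, 0.5, 0.5, pu2_H0, pu2_H1))  # depends on node 2's rule
```

Alternating such updates between the two nodes is one way to search for thresholds satisfying both coupled conditions; the sketch evaluates a single update only.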

After having pointed out some of the problems related to distributed detection through a simple example, we now consider in more detail the cases where the nodes send their (possibly encoded) data to the FC or take local decisions first and send them to the FC. In both situations, there are two extreme cases: (a) there is only one FC; (b) every node is a potential FC, as it is able to take a global decision.

2.07.5.1 Nodes send data to decision center

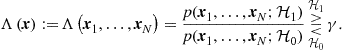

Let us consider, for simplicity, the simple (binary) hypothesis testing problem. Given a set of vector observations $\mathbf{x} = \{\mathbf{x}_1, \ldots, \mathbf{x}_N\}$, where $\mathbf{x}_i$ is the vector collected by node $i$, the optimal decision rule for the simple hypothesis testing problem, under a variety of optimality criteria, amounts to computing the likelihood ratio (LR) $\Lambda(\mathbf{x}) = p(\mathbf{x}|H_1)/p(\mathbf{x}|H_0)$ and comparing it with a threshold. In formulas,

$$\Lambda(\mathbf{x}) = \frac{p(\mathbf{x}_1, \ldots, \mathbf{x}_N|H_1)}{p(\mathbf{x}_1, \ldots, \mathbf{x}_N|H_0)} \underset{H_0}{\overset{H_1}{\gtrless}} \gamma \tag{7.122}$$

In words, the detector decides for $H_1$ if the LR exceeds the threshold; otherwise, it decides for $H_0$. In general, what distinguishes the distributed detection problem from standard centralized detection is that the data are sent to the decision center after source encoding into a discrete alphabet. The simplest form of encoding is quantization, but taking local decisions can also be interpreted as a form of binary coding. Clearly, source coding is going to affect the detection performance. It is then useful to show, through a simple example, how local quantization affects the final detection performance and how the theoretical analysis can be used to optimize the number of bits allocated to the quantization step.

2.07.5.1.1 Centralized detection of deterministic signal embedded in additive noise

Let us consider the detection of a deterministic (known) signal embedded in additive noise. In this section, we consider the case where the decision is taken at a FC, after having collected the data sent by the sensors. This case could refer for example to the detection of undesired resonance phenomena in buildings, bridges, etc. The form of the resonance is known. However, the measurements taken by the sensors are affected by noise and then it is of interest to check the performance as a function of the signal to noise ratio.

The measurement vector is $\mathbf{y} = [y_1, \ldots, y_N]^T$, where $y_i$ is the measurement taken by node $i$. Let us denote by $\mathbf{s}$ the known deterministic signal. The observation can be modeled as

$$\begin{aligned} H_0 &: \quad \mathbf{y} = \mathbf{w} + \mathbf{v} \\ H_1 &: \quad \mathbf{y} = \mathbf{s} + \mathbf{w} + \mathbf{v} \end{aligned} \tag{7.123}$$

where $\mathbf{w}$ is the background noise, whereas $\mathbf{v}$ is the quantization noise. We assume the background noise to be Gaussian with zero mean and (spatial) covariance matrix $\mathbf{C}_w$, i.e., $\mathbf{w} \sim \mathcal{N}(\mathbf{0}, \mathbf{C}_w)$. To improve mathematical tractability, we consider a dithered quantization, so that the quantization error can be modeled as a random process statistically independent of the noise. We may also reasonably assume that, after dithering, the quantization noise variables at different sensors are statistically independent. Hence, the quantization noise vector $\mathbf{v}$ has zero mean and a diagonal covariance matrix $\mathbf{C}_v = \mathrm{diag}(\sigma_{v_1}^2, \ldots, \sigma_{v_N}^2)$. If the amplitude of the useful signal spans the dynamic range $[-A, A]$ and the number of bits used by node $i$ is $b_i$, the quantization step is $\Delta_i = 2A/2^{b_i}$, so that the quantization noise variance at node $i$ is

$$\sigma_{v_i}^2 = \frac{\Delta_i^2}{12} = \frac{A^2}{3 \cdot 2^{2 b_i}} \tag{7.124}$$

The overall noise then has zero mean and covariance matrix $\mathbf{C} = \mathbf{C}_w + \mathbf{C}_v$.
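The independence and the $\Delta_i^2/12$ variance of the quantization error can be checked by simulation. The sketch below assumes subtractive dithering (the dither is known at both ends and removed after quantization) and no overload; with this scheme the error is uniform over one quantization step and independent of the input. The step uses the example values $A = 1$, $b_i = 3$, and the name `dithered_quantize` is hypothetical.

```python
import random


def dithered_quantize(x, delta, rng):
    """Subtractively dithered uniform quantizer with step delta (no overload assumed)."""
    d = rng.uniform(-delta / 2, delta / 2)       # dither shared by sensor and FC
    return delta * round((x + d) / delta) - d    # quantize, then subtract the dither


rng = random.Random(0)                            # fixed seed for reproducibility
delta = 2 * 1.0 / 2 ** 3                          # Delta_i = 2A / 2**b_i with A = 1, b_i = 3
xs = [rng.uniform(-1.0, 1.0) for _ in range(100_000)]
errs = [dithered_quantize(x, delta, rng) - x for x in xs]
mean = sum(errs) / len(errs)
var = sum((e - mean) ** 2 for e in errs) / len(errs)
print(var, delta ** 2 / 12)                       # empirical vs. theoretical variance
```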

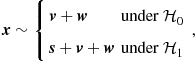

If the quantization noise is negligible, the Neyman-Pearson criterion applied to this case leads to the following linear detector

(7.125)

where $\mathrm{Re}\{x\}$ denotes the real part of $x$, and the detection threshold $\gamma'$ is computed in order to guarantee the desired false alarm probability $P_{FA}$. Unfortunately, since the quantization noise is not Gaussian, the composite noise $\mathbf{w} + \mathbf{v}$ is not Gaussian, and the detection rule in (7.125) is no longer optimal. Nevertheless, the rule in (7.125) is still meaningful, as it maximizes the signal-to-noise ratio (SNR). Hence, it is of interest to look at the performance of this detector in the presence of quantization noise. The exact computation of the detection probability is not easy, at least in closed form, because it requires the pdf of the test statistic on the left-hand side of (7.125). Nevertheless, when the number of nodes is sufficiently high (a few tens can be sufficient to get a good approximation), we can invoke the central limit theorem to state that the test statistic is approximately Gaussian. Using this approximation, the detection probability can be written in closed form for any fixed $P_{FA}$, following standard derivations (see, e.g., [5]), as

![]() (7.126)

(7.126)

This formula is useful to assess the detection probability as a function of the bits allocated to each transmission. At the same time, (7.126) can also be used to find the bit allocation that maximizes the detection probability. This approach establishes an interesting link between the communication and detection aspects. In practice, encoded data are transmitted over finite-capacity channels. Hence, it is useful to relate the number of quantization bits used by each node to the capacity of the channel between that node and the FC. For simplicity, we consider the optimization problem under the assumption of spatially uncorrelated noise, i.e., ![]() . The problem we wish to solve is the maximization of the detection probability, for a given false alarm rate and a maximum global transmit power. To guarantee an arbitrarily low transmission error rate, Shannon's channel coding theorem requires the number of bits per symbol to be less than the channel capacity. Denoting with ![]() the power transmitted by node i and assuming a flat fading channel with channel coefficient ![]() , the capacity is given by (7.105). From (7.126), maximizing $P_D$ is equivalent to maximizing ![]() . Hence, using (7.124), the maximum $P_D$, for a given $P_{FA}$ and a given global transmit power ![]() , can be achieved by finding the power vector ![]() that solves the following constrained problem

![]() (7.127)

![]() (7.128)

It is straightforward to check that this is a convex problem. Imposing the Karush-Kuhn-Tucker conditions, the optimal powers can be expressed in closed form as:

![]() (7.129)

where the Lagrange multiplier ![]() associated with the sum-power constraint is determined as the value that makes ![]() hold.
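The sketch below makes the bit/power trade-off concrete under assumptions that are ours, not the chapter's. We assume a real-valued signal $s_i$ at sensor i, uncorrelated observation noise with variances $\sigma_{w_i}^2$, and the statistic $T=\sum_i s_i \tilde y_i/\sigma_{w_i}^2$, which is a real-valued, diagonal-covariance counterpart of (7.125). We also assume a step $2A/(2^{b_i}-1)$ and bits equal to a capacity of the form $b_i=\log_2(1+|h_i|^2p_i/\sigma_n^2)$, treated as a real number. With these choices, $2^{b_i}-1=|h_i|^2p_i/\sigma_n^2$. The CLT approximation then gives $P_D = Q\big(Q^{-1}(P_{FA}) - \mu/\sigma_T\big)$, where $\mu=\sum_i s_i^2/\sigma_{w_i}^2$ and $\sigma_T^2=\sum_i s_i^2(\sigma_{w_i}^2+\sigma_{q_i}^2)/\sigma_{w_i}^4$. Maximizing $P_D$ therefore reduces to minimizing $\sum_i c_i/p_i^2$ subject to $\sum_i p_i = P_T$, with $c_i = s_i^2A^2\sigma_n^4/(3\sigma_{w_i}^4|h_i|^4)$. The KKT conditions give $p_i = P_T\,c_i^{1/3}/\sum_j c_j^{1/3}$. This is an illustrative derivation and need not match (7.127)-(7.129) term by term. All names and numbers are hypothetical.

```python
import math
from statistics import NormalDist

_STD_NORMAL = NormalDist()  # standard Gaussian, used for Q(x) and its inverse


def q_func(x):
    """Gaussian tail function Q(x) = 1 - Phi(x)."""
    return 1.0 - _STD_NORMAL.cdf(x)


def q_inv(p):
    """Inverse tail function: Q^{-1}(p) = Phi^{-1}(1 - p)."""
    return _STD_NORMAL.inv_cdf(1.0 - p)


def detection_probability(s, var_w, var_q, p_fa):
    """CLT approximation of P_D for T = sum_i s_i * y_i / var_w[i] (real-valued model)."""
    terms = [(si, vw, vq) for si, vw, vq in zip(s, var_w, var_q) if si != 0.0]
    mu = sum(si ** 2 / vw for si, vw, _ in terms)                       # mean shift of T
    var_t = sum(si ** 2 * (vw + vq) / vw ** 2 for si, vw, vq in terms)  # variance of T
    return q_func(q_inv(p_fa) - mu / math.sqrt(var_t))


def quant_var(p, h, A, noise_var):
    """sigma_q^2 = A^2 / (3 (2^b - 1)^2), with b = log2(1 + |h|^2 p / noise_var)."""
    snr = abs(h) ** 2 * p / noise_var        # equals 2^b - 1 when b equals the capacity
    return math.inf if snr == 0.0 else A ** 2 / (3.0 * snr ** 2)


def allocate_power(s, var_w, h, A, noise_var, p_total):
    """Minimize sum_i c_i / p_i^2 s.t. sum_i p_i = p_total; KKT gives p_i ~ c_i^(1/3)."""
    c = [si ** 2 * A ** 2 * noise_var ** 2 / (3.0 * vw ** 2 * abs(hi) ** 4)
         for si, vw, hi in zip(s, var_w, h)]
    roots = [ci ** (1.0 / 3.0) for ci in c]
    return [p_total * ri / sum(roots) for ri in roots]


# Hypothetical setup: N sensors along a bridge observing half a sine mode.
N, p_fa, A, noise_var, p_total = 8, 1e-2, 1.0, 1.0, 20.0
s = [0.5 * math.sin(math.pi * (i + 0.5) / N) for i in range(N)]
var_w = [0.1] * N
h = [1.0, 0.4, 0.9, 1.2, 0.3, 1.1, 0.7, 0.5]

p_opt = allocate_power(s, var_w, h, A, noise_var, p_total)
p_uni = [p_total / N] * N
pd_opt = detection_probability(
    s, var_w, [quant_var(p, hi, A, noise_var) for p, hi in zip(p_opt, h)], p_fa)
pd_uni = detection_probability(
    s, var_w, [quant_var(p, hi, A, noise_var) for p, hi in zip(p_uni, h)], p_fa)
pd_ideal = detection_probability(s, var_w, [0.0] * N, p_fa)   # unquantized benchmark
bits = [math.log2(1.0 + abs(hi) ** 2 * p / noise_var) for p, hi in zip(p_opt, h)]
print(f"P_D: uniform {pd_uni:.3f}, optimized {pd_opt:.3f}, unquantized {pd_ideal:.3f}")
```

In this model, weak channels receive more power. After the capacity mapping, however, strong channels get more bits: for equal $s_i$, the number of levels grows like $|h_i|^{2/3}$. Sensors with large $|s_i|$ also get more bits. This is qualitatively in line with the behavior described for Figure 7.17.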

A numerical example is useful to grasp some of the properties of the proposed algorithm. Let us consider a series of sensors placed along a bridge of length L. The purpose of the network is to detect one possible spatial resonance, which we represent as the signal ![]() , where

, where ![]() denotes the spatial coordinate. The sensors are uniformly spaced along the bridge, at positions

denotes the spatial coordinate. The sensors are uniformly spaced along the bridge, at positions ![]() , with

, with ![]() . Every sensor measures a shift

. Every sensor measures a shift ![]() , affected by the error

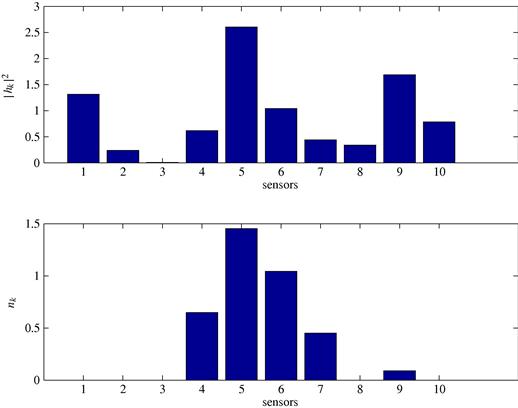

, affected by the error ![]() . To communicate its own measurement to the FC, every sensor has to quantize the measurement first. The optimal number of bits to be used by every sensor can be computed by using the previous theory. In this case, in Figure 7.16 we report the detection probability vs. the sum power

. To communicate its own measurement to the FC, every sensor has to quantize the measurement first. The optimal number of bits to be used by every sensor can be computed by using the previous theory. In this case, in Figure 7.16 we report the detection probability vs. the sum power ![]() available to the whole set of sensors, for different numbers N of sensors. As expected, as the total transmit power increases,

available to the whole set of sensors, for different numbers N of sensors. As expected, as the total transmit power increases, ![]() increases because more bits per symbol can be transmitted and then the quantization errors become negligible. It is also important to notice how, increasing the number of sensors, the detection probability improves, for any given transmit power. Furthermore, in Figure 7.17 we can see the optimal per channel bit allocation (bottom), together with the channels profiles

increases because more bits per symbol can be transmitted and then the quantization errors become negligible. It is also important to notice how, increasing the number of sensors, the detection probability improves, for any given transmit power. Furthermore, in Figure 7.17 we can see the optimal per channel bit allocation (bottom), together with the channels profiles ![]() (top). Interestingly, we can see that the method allocates more bits in correspondence with the best channels and the central elements of the array, where the useful signal is expected to have the largest variations.

(top). Interestingly, we can see that the method allocates more bits in correspondence with the best channels and the central elements of the array, where the useful signal is expected to have the largest variations.

2.07.5.1.2 Decentralized detection under conditionally independent observations

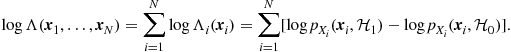

Let us now consider the case where the globally optimal decision can be taken, in principle, by any node. To enable this possibility, every node must be able to implement the statistical test (7.122). If the measurements collected by the sensors are conditionally independent, the logarithm of the likelihood ratio (LR) can be written as

$$\ln\frac{p(\mathbf{y}\mid H_1)}{p(\mathbf{y}\mid H_0)} = \sum_{i=1}^{N}\ln\frac{p(y_i\mid H_1)}{p(y_i\mid H_0)}$$ (7.130)

This formula shows that, in the conditionally independent case, running a consensus algorithm is sufficient to enable every node to compute the global LR. It is only required that every sensor initializes its own state with its local log-LR $\ln[p(y_i\mid H_1)/p(y_i\mid H_0)]$ and then runs the consensus iterations. If the network is connected, every node will end up with the average of the local log-LRs, i.e., the global log-LR in (7.130) divided by the number of nodes N. In practice, every node must quantize its local log-LR before sending it, so we need a consensus algorithm that copes with quantization errors. As shown in Section 2.07.3.2, the consensus iterations can be suitably modified to make the algorithm robust against several impairments of communication over realistic channels, e.g., random packet drops and quantization. Hence, a properly modified consensus algorithm can enable every node to compute the global LR with controllable error.
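The sketch below illustrates the procedure under several assumptions. Observations are Gaussian with a known mean shift m, so the local log-LR is $(m y_i - m^2/2)/\sigma^2$. The nodes form a ring and use Metropolis weights, meaning each node averages its own state with those of its two neighbors, with weight 1/3 each. After the iterations, every node multiplies its state by N to recover (7.130) and compares the result with a threshold. The threshold $\ln\eta = 0$ and all numbers are hypothetical, and quantization is ignored here.

```python
import math
import random


def local_llr(y, m, sigma2):
    """Local log-LR for H1: y ~ N(m, sigma2) vs H0: y ~ N(0, sigma2) (assumed model)."""
    return (m * y - m * m / 2.0) / sigma2


def ring_consensus(x0, iterations):
    """Average consensus on a ring with Metropolis weights (1/3 to self and each neighbor)."""
    x = list(x0)
    n = len(x)
    for _ in range(iterations):
        x = [(x[(i - 1) % n] + x[i] + x[(i + 1) % n]) / 3.0 for i in range(n)]
    return x


rng = random.Random(1)
N, m, sigma2 = 10, 0.5, 1.0
y = [m + rng.gauss(0.0, math.sqrt(sigma2)) for _ in range(N)]   # data drawn under H1
llr = [local_llr(yi, m, sigma2) for yi in y]                    # initial states
states = ring_consensus(llr, iterations=500)
global_llr = sum(llr)                          # centralized value of (7.130)
estimates = [N * xi for xi in states]          # each node rescales its average by N
decisions = [int(e > 0.0) for e in estimates]  # threshold ln(eta) = 0, i.e., eta = 1
print(f"global log-LR {global_llr:.4f}, node decisions {decisions}")
```

Because the weight matrix is doubly stochastic, the sum of the states is preserved at every iteration. This is why the common limit is exactly the average of the local log-LRs.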

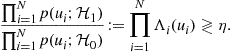

2.07.5.2 Nodes send local decisions to fusion center

Consider now the case where each node i takes a local decision, according to a locally optimal criterion, and encodes the decision into the binary variable $u_i \in \{0, 1\}$. Then, the node sends $u_i$ to the fusion center, which must take a global decision on the basis of the vector $\mathbf{u} = [u_1, \ldots, u_N]^T$ containing all local decisions. For simplicity, let us consider a binary hypothesis test. This problem was considered in [50], and we now review the basic results. It differs from the case studied in the previous section because here the local decision thresholds are optimized according to a detection criterion, whereas in standard quantization the decision thresholds are not optimized.

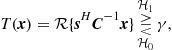

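Under both the Bayesian and the Neyman-Pearson (NP) formulations, the optimal test amounts to a likelihood ratio test (LRT) based on $\mathbf{u}$, i.e.,

$$\Lambda(\mathbf{u}) = \frac{P(\mathbf{u}\mid H_1)}{P(\mathbf{u}\mid H_0)} \underset{H_0}{\overset{H_1}{\gtrless}} \eta$$ (7.131)

where the threshold $\eta$ depends on the adopted criterion. In the case of conditionally independent local decisions, the LRT becomes

$$\prod_{i=1}^{N}\frac{P(u_i\mid H_1)}{P(u_i\mid H_0)} \underset{H_0}{\overset{H_1}{\gtrless}} \eta$$ (7.132)

Since each variable $u_i$ can only assume the values 0 or 1, we can group all the variables into two subsets: the subset $\mathcal{S}_1$ containing all variables with $u_i = 1$ and the subset $\mathcal{S}_0$ containing all variables with $u_i = 0$, thus yielding

$$\prod_{i\in\mathcal{S}_1}\frac{P(u_i=1\mid H_1)}{P(u_i=1\mid H_0)}\prod_{i\in\mathcal{S}_0}\frac{P(u_i=0\mid H_1)}{P(u_i=0\mid H_0)} \underset{H_0}{\overset{H_1}{\gtrless}} \eta$$ (7.133)

Denoting with $P_{M_i} = P(u_i = 0\mid H_1)$ and $P_{F_i} = P(u_i = 1\mid H_0)$ the probability of miss and the probability of false alarm of node i, respectively, (7.133) can be rewritten as

$$\prod_{i\in\mathcal{S}_1}\frac{1-P_{M_i}}{P_{F_i}}\prod_{i\in\mathcal{S}_0}\frac{P_{M_i}}{1-P_{F_i}} \underset{H_0}{\overset{H_1}{\gtrless}} \eta$$ (7.134)

Taking the logarithm of both sides and reintroducing the variables $u_i$, the fusion rule becomes

$$\sum_{i=1}^{N}\left[u_i\ln\frac{1-P_{M_i}}{P_{F_i}} + (1-u_i)\ln\frac{P_{M_i}}{1-P_{F_i}}\right] \underset{H_0}{\overset{H_1}{\gtrless}} \ln\eta$$ (7.135)

or, equivalently,

$$\sum_{i=1}^{N} u_i\ln\frac{(1-P_{M_i})(1-P_{F_i})}{P_{M_i}P_{F_i}} \underset{H_0}{\overset{H_1}{\gtrless}} \ln\eta + \sum_{i=1}^{N}\ln\frac{1-P_{F_i}}{P_{M_i}}$$ (7.136)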

The optimal fusion rule is then a simple weighted sum of the local decisions, compared with a threshold, where the weights depend on the reliability of the local decisions: larger weights are assigned to the more reliable nodes.
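The fusion rule (7.136) takes only a few lines. The sketch below uses function names of our own and hypothetical miss and false-alarm probabilities. It computes the weights and the threshold, then cross-checks the weighted sum against the logarithm of the product form (7.134) for every decision pattern.

```python
import math
from itertools import product


def cv_weights(p_miss, p_fa):
    """Weights of (7.136): ln[(1 - PM)(1 - PF) / (PM * PF)] for each node."""
    return [math.log((1 - pm) * (1 - pf) / (pm * pf)) for pm, pf in zip(p_miss, p_fa)]


def cv_threshold(p_miss, p_fa, eta=1.0):
    """Right-hand side of (7.136): ln(eta) + sum_i ln[(1 - PF_i) / PM_i]."""
    return math.log(eta) + sum(math.log((1 - pf) / pm) for pm, pf in zip(p_miss, p_fa))


def fuse(u, p_miss, p_fa, eta=1.0):
    """Global decision (1 = H1, 0 = H0) from binary local decisions u_i in {0, 1}."""
    stat = sum(ui * wi for ui, wi in zip(u, cv_weights(p_miss, p_fa)))
    return int(stat > cv_threshold(p_miss, p_fa, eta))


def product_form_llr(u, p_miss, p_fa):
    """Logarithm of the left-hand side of (7.134), used as a cross-check."""
    total = 0.0
    for ui, pm, pf in zip(u, p_miss, p_fa):
        total += math.log((1 - pm) / pf) if ui == 1 else math.log(pm / (1 - pf))
    return total


pm, pf = [0.01, 0.3, 0.3], [0.01, 0.3, 0.3]   # one reliable node, two poor ones
w, thr = cv_weights(pm, pf), cv_threshold(pm, pf)
for u in product([0, 1], repeat=3):
    # With eta = 1, (7.136) minus its threshold equals the log of (7.134).
    assert abs(sum(ui * wi for ui, wi in zip(u, w)) - thr - product_form_llr(u, pm, pf)) < 1e-9
    print(u, fuse(u, pm, pf))
```

With these values, the reliable node alone overrides the other two. With three identical nodes, the rule instead reduces to a majority vote.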

If, instead of having a single FC, we wish to enable every node to implement the decision fusion rule described above, running a consensus algorithm again suffices. In fact, if each local state variable is initialized with the value $u_i\ln\frac{(1-P_{M_i})(1-P_{F_i})}{P_{M_i}P_{F_i}}$, running a consensus algorithm allows every node to compute the decision statistic in (7.136), up to the factor N. The only constraint is, as always, network connectivity. The drawback of this simple approach is that this consensus algorithm requires the transmission of real variables, rather than the binary variables $u_i$. In fact, even if the local decision $u_i$ is binary, the coefficient multiplying $u_i$ is a real variable, which must be quantized before transmission over a realistic channel. Again, the consensus algorithm can be made robust against quantization errors by using dithered quantization and a decreasing step size, as shown in Section 2.07.3.2. However, we cannot claim any optimality for this kind of distributed decision. In principle, when the nodes exchange their decisions with their neighbors, the decision thresholds should be adjusted according to some optimality criterion. This is an interesting, yet still open, research topic.

2.07.6 Beyond consensus: distributed projection algorithms

In many applications, the field to be reconstructed by a sensor network is a smooth function of the spatial coordinates. This happens, for example, in the reconstruction of the spatial distribution of the power radiated by a set of transmitters. The problem is that local measurements may be corrupted by local noise or fading effects. An important application of this scenario is given by cognitive networks. In such a case, a secondary node needs to know the channel occupation across space in order to find unoccupied channels within the area of interest. This requires some form of spectrum sensing over a localized area. The difficulty is that wireless propagation is typically affected by fading or shadowing, so that a sensor in a shadowed location might indicate that a channel is unoccupied when it is not. To avoid this kind of error, which would lead to undue channel occupation by opportunistic users, it is useful to resort to cooperative sensing, in which nearby nodes exchange local measurements to counteract the effect of shadowing.

The challenge with local averaging is that it should reduce the effect of fading without destroying valuable spatial variations. In the following, we recall a distributed algorithm proposed in [72] to recover a spatial map of a field using local weighted averages, where the weights are chosen to mitigate local noise or fading effects without destroying the spatial variation of the useful signal.

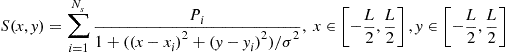

Let us consider a network composed of N sensors located at positions $\mathbf{p}_i$, $i = 1, \ldots, N$, and denote the measurement collected by the ith sensor by $g_i = s(\mathbf{p}_i) + v_i$, where $s(\mathbf{p}_i)$ represents the useful field while $v_i$ is the observation error. Let us also denote by $\psi_m(\mathbf{p})$, $m = 1, \ldots, r$, a set of linearly independent spatial functions defining a basis for the useful signal. The useful signal can then be represented through the basis expansion model

$$s(\mathbf{p}) = \sum_{m=1}^{r} \alpha_m\, \psi_m(\mathbf{p})$$ (7.137)

In vector notation, introducing the N-size column vector $\mathbf{g} = [g_1, \ldots, g_N]^T$ and, similarly, the vector $\mathbf{v} = [v_1, \ldots, v_N]^T$, we may write

$$\mathbf{g} = \mathbf{U}\boldsymbol{\alpha} + \mathbf{v} = \mathbf{s} + \mathbf{v}$$ (7.138)

where $\mathbf{U}$ is the $N \times r$ matrix whose mth column is $\mathbf{u}_m = [\psi_m(\mathbf{p}_1), \ldots, \psi_m(\mathbf{p}_N)]^T$, $\boldsymbol{\alpha} = [\alpha_1, \ldots, \alpha_r]^T$ is an r-size vector of coefficients, and $\mathbf{s} = \mathbf{U}\boldsymbol{\alpha}$ is the useful signal. The spatial smoothness of the useful signal field may be captured by choosing the functions $\psi_m(\mathbf{p})$ to be the low-frequency components of the Fourier basis or low-order 2D polynomials. For instance, if the space under monitoring is a square of side L, we may choose the set

![]() (7.139)

In practice, the dimension r of the useful signal subspace is typically much smaller than the dimension N of the observation space, i.e., the number of sensors. We can exploit this property to devise a distributed denoising algorithm.

If we use a Minimum Mean Square Error (MMSE) strategy, the goal is to find the coefficient vector $\boldsymbol{\alpha}$ that minimizes the mean square error

$$\|\mathbf{g} - \mathbf{U}\boldsymbol{\alpha}\|^2$$ (7.140)

The solution is well known and is given by [44]:

$$\hat{\boldsymbol{\alpha}} = (\mathbf{U}^T\mathbf{U})^{-1}\mathbf{U}^T\mathbf{g}$$ (7.141)

Our goal is actually to recover the vector $\mathbf{s}$, rather than $\boldsymbol{\alpha}$. In such a case, the estimate of $\mathbf{s}$ is

$$\hat{\mathbf{s}} = \mathbf{U}\hat{\boldsymbol{\alpha}} = \mathbf{U}(\mathbf{U}^T\mathbf{U})^{-1}\mathbf{U}^T\mathbf{g}$$ (7.142)

The operation performed in (7.142) corresponds to projecting the observation vector onto the subspace spanned by the columns of $\mathbf{U}$. Assuming, without loss of generality (w.l.o.g.), the columns of $\mathbf{U}$ to be orthonormal, the projector simplifies and

$$\hat{\mathbf{s}} = \mathbf{U}\mathbf{U}^T\mathbf{g}$$ (7.143)

The centralized solution to this problem is then very simple: the fusion center collects all the measurements $g_i$, computes $\mathbf{U}^T\mathbf{g}$, and then recovers $\hat{\mathbf{s}}$ from (7.143).

The previous approach is well known. The interesting point is that the MMSE solution can be achieved with a totally decentralized approach, where every sensor interacts only with its neighbors, with no need to send any data to a fusion center. The proposed approach is based on a very simple iterative procedure. Each node initializes a state variable with its local measurement, say $x_i[0] = g_i$, and then updates its own state by taking a linear combination of its neighbors' states. This is similar to consensus algorithms, but the coefficients are computed to solve the new problem.

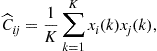

More specifically, denoting by $\mathbf{x}[k]$ the N-size vector containing the states of all the nodes at iteration k, and by $\mathbf{g}$ the vector containing the initial measurements collected by all the nodes, the vector $\mathbf{x}[k]$ evolves according to the following linear state equation:

$$\mathbf{x}[k+1] = \mathbf{W}\,\mathbf{x}[k], \qquad \mathbf{x}[0] = \mathbf{g}$$ (7.144)

where $\mathbf{W}$ is typically a sparse (not necessarily symmetric) matrix. The network topology is reflected in the sparsity of $\mathbf{W}$. In particular, the number of nonzero entries of, say, the ith row is equal to the number of neighbors of node i plus one (the coefficient weighting the node's own state). In a WSN, the neighbors of a node are the nodes falling within its coverage area, i.e., within a circle centered on the node, with radius dictated by the node's transmit power and by the power attenuation law. Our goal is to find the nonzero coefficients of $\mathbf{W}$ that guarantee the convergence of $\mathbf{x}[k]$ to the vector $\hat{\mathbf{s}}$ given in (7.143). In general, not every network topology admits a solution to this problem. In the following, we will show that a solution exists only if each node has a number of neighbors at least equal to the dimension r of the useful signal subspace.

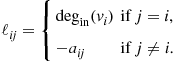

Let us denote by $\mathbf{P}_{\mathbf{U}}$ the orthogonal projector onto the r-dimensional subspace $\mathcal{R}(\mathbf{U})$ of $\mathbb{R}^N$ spanned by the columns of $\mathbf{U}$, where $\mathcal{R}(\cdot)$ denotes the range space operator and $\mathbf{U}$ is a full-column-rank matrix, assumed, w.l.o.g., to be semi-unitary. System (7.144) converges to the desired orthogonal projection of the initial value vector $\mathbf{x}[0]$ onto $\mathcal{R}(\mathbf{U})$, for any given $\mathbf{x}[0]$, if and only if

$$\lim_{k\to\infty}\mathbf{W}^k = \mathbf{P}_{\mathbf{U}}$$ (7.145)

i.e.,

$$\lim_{k\to\infty}\mathbf{W}^k = \mathbf{U}\mathbf{U}^T$$ (7.146)

Resorting to basic algebraic properties of discrete-time systems, it is possible to derive immediately some basic properties of $\mathbf{W}$. In particular, denoting with OUD the Open Unit Disk, i.e., the set $\{\lambda \in \mathbb{C} : |\lambda| < 1\}$, a matrix $\mathbf{W}$ is semistable if its spectrum $\sigma(\mathbf{W})$ satisfies $\sigma(\mathbf{W}) \subseteq \mathrm{OUD} \cup \{1\}$ and, if $1 \in \sigma(\mathbf{W})$, then 1 is semisimple, i.e., its algebraic and geometric multiplicities coincide. If $\mathbf{W}$ is semistable, then [73, p. 447]

$$\lim_{k\to\infty}\mathbf{W}^k = \mathbf{I} - (\mathbf{I} - \mathbf{W})(\mathbf{I} - \mathbf{W})^{\#}$$ (7.147)

where $(\cdot)^{\#}$ denotes the group generalized inverse [73, p. 228]. Furthermore, setting, without loss of generality, the matrix $\mathbf{W}$ in the form $\mathbf{W} = \mathbf{I} - \mathbf{L}$, (7.147) can be rewritten as

$$\lim_{k\to\infty}\mathbf{W}^k = \mathbf{I} - \mathbf{L}\mathbf{L}^{\#}$$ (7.148)

But $\mathbf{I} - \mathbf{L}\mathbf{L}^{\#}$ is the projector onto the null space of $\mathbf{L}$ along the range space of $\mathbf{L}$. Hence, we can state the following:

Alternatively, the previous conditions can be rewritten equivalently in the following form

The previous conditions do not make any explicit reference to the sparsity of matrix $\mathbf{W}$. However, when we consider a sparse matrix reflecting the network topology, additional conditions are necessary to make sure that the previous conditions are satisfied. In other words, not every network topology is able to guarantee the asymptotic projection onto a prescribed signal subspace. A basic question is then which network topologies can guarantee convergence to a prescribed projector. We now provide the conditions on the sparsity of $\mathbf{W}$, or equivalently $\mathbf{L}$, guaranteeing the desired convergence.

From condition (i) of Proposition 1, given the matrix $\mathbf{U}$, $\mathbf{L}$ must satisfy the equation $\mathbf{L}\mathbf{U} = \mathbf{0}$. Let us assume that every row of $\mathbf{L}$ has K nonzero entries, and let us indicate with $\mathcal{S}_j$ the set of the column indices corresponding to the nonzero entries of the jth row of $\mathbf{L}$. Denoting by $\boldsymbol{\ell}_j$ the K-size vector collecting these nonzero entries and by $\mathbf{U}_{\mathcal{S}_j}$ the $K \times r$ submatrix of $\mathbf{U}$ formed by the rows indexed by $\mathcal{S}_j$, every row of $\mathbf{L}$ must satisfy the following equation

$$\mathbf{U}_{\mathcal{S}_j}^T\,\boldsymbol{\ell}_j = \mathbf{0}$$ (7.149)

To guarantee the existence of a nontrivial solution to (7.149), the matrix on the left-hand side must have a kernel of dimension at least one. Since $\mathbf{U}_{\mathcal{S}_j}^T$ has size $r \times K$, this requires K to be strictly greater than r, the dimension of the signal subspace. The number of nonzero entries of, say, the jth row of $\mathbf{L}$ is equal to the number of neighbors of node j plus one (the coefficient multiplying the state of node j itself). Hence, each node must have at least r neighbors. Of course, this condition is necessary but not sufficient. It is also necessary to check that the sparse matrix $\mathbf{L}$ built with rows satisfying (7.149), for $j = 1, \ldots, N$, has rank $N - r$. This depends on the location of the nodes and on the specific choice of the orthogonal basis.
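The sketch below builds a sparse $\mathbf{L}$ for a simple case of our own choosing: N = 8 equally spaced sensors on a line and r = 2, with the basis spanned by the constant and linear functions (low-order polynomials). On each window of r + 1 = 3 consecutive nodes, the normalized second difference solves (7.149). Rather than using these vectors directly as rows, we form the symmetric matrix $\mathbf{L} = \sum_j \boldsymbol{\ell}_j\boldsymbol{\ell}_j^T$. This matrix satisfies $\mathbf{L}\mathbf{U} = \mathbf{0}$ and $\mathbf{U}^T\mathbf{L} = \mathbf{0}$ and has rank N − r. It only couples nodes at most two positions apart, so every node has at least r = 2 neighbors. Setting $\mathbf{W} = \mathbf{I} - \epsilon\mathbf{L}$, with a Gershgorin-based step $\epsilon$, makes $\mathbf{W}$ semistable, and (7.144) then converges to the centralized projection (7.143). This symmetric design is an illustrative assumption and is not meant to reproduce the coefficient design of [72]. The noise values are hypothetical.

```python
import math


def build_L(n):
    """Symmetric L = sum_j l_j l_j^T, where l_j is the normalized second difference
    [1, -2, 1]/sqrt(6) on nodes (j, j+1, j+2). Each l_j is orthogonal to the samples
    of 1 and t on its window, as required by (7.149), so L U = 0 and U^T L = 0."""
    c = 1.0 / math.sqrt(6.0)
    window = [c, -2.0 * c, c]
    L = [[0.0] * n for _ in range(n)]
    for j in range(n - 2):
        for a in range(3):
            for b in range(3):
                L[j + a][j + b] += window[a] * window[b]
    return L


def build_W(L):
    """W = I - eps L, with eps = 1 / (max absolute row sum of L); by Gershgorin,
    every eigenvalue of the symmetric W then lies in [0, 1]."""
    n = len(L)
    eps = 1.0 / max(sum(abs(v) for v in row) for row in L)
    return [[(1.0 if i == k else 0.0) - eps * L[i][k] for k in range(n)] for i in range(n)]


def run(W, x0, iterations):
    """x[k+1] = W x[k], as in (7.144). Dense loop for brevity: W[i][k] is nonzero
    only for |i - k| <= 2, i.e., node i only combines its neighbors' states."""
    x = list(x0)
    for _ in range(iterations):
        x = [sum(w * xk for w, xk in zip(row, x)) for row in W]
    return x


def line_fit(t, g):
    """Centralized reference U U^T g for U spanning {1, t}: least-squares line fit."""
    n = len(t)
    tm, gm = sum(t) / n, sum(g) / n
    slope = (sum((ti - tm) * (gi - gm) for ti, gi in zip(t, g))
             / sum((ti - tm) ** 2 for ti in t))
    return [gm + slope * (ti - tm) for ti in t]


n = 8
t = [float(i) for i in range(n)]                       # equally spaced positions
noise = [-0.4, 0.5, -0.3, 0.2, 0.6, -0.5, 0.1, -0.2]   # hypothetical observation errors
g = [1.0 + 0.3 * ti + ni for ti, ni in zip(t, noise)]  # initial states x[0] = g
L = build_L(n)
x_dist = run(build_W(L), g, iterations=20000)
x_cent = line_fit(t, g)
print(max(abs(a - b) for a, b in zip(x_dist, x_cent)))
```

Symmetry is convenient here because it makes the limit an orthogonal projector rather than an oblique one. With a nonsymmetric sparse $\mathbf{W}$, the range condition $\mathbf{U}^T\mathbf{L} = \mathbf{0}$ must be enforced separately.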

An example can be useful to illustrate the benefits achievable with the proposed technique. We consider the case where the observation is corrupted by multiplicative, spatially uncorrelated noise, which models, for example, a fading effect. Let us denote with ![]() the measurement carried out by node i, located at the point of coordinates ![]() , where ![]() denotes the useful field, whereas ![]() represents fading. We consider, for instance, a useful signal generated by ![]() transmitters and we assume a polynomial power attenuation, so that the useful signal measured at the point of coordinates ![]() is

![]() (7.150)

where ![]() is the power emitted by source i, located at ![]() , and ![]() specifies the spatial spread of the power. Fading is modeled as spatially uncorrelated multiplicative noise. The sensor network is composed of 2500 nodes uniformly distributed over a 2D grid. All the transmitters use the same power, i.e., ![]() in (7.150), and the noise has zero mean and variance ![]() .

In this case, it is useful to apply homomorphic filtering to the measured field. In particular, we take the logarithm of the measurement, thus getting ![]() , which turns the multiplicative fading into an additive term. To smooth out the undesired effect of fading, we assume a signal model composed of the superposition of 2D sinusoids, so that the columns of the matrix $\mathbf{U}$ in (7.138) are composed of signals of the form ![]() and ![]() , with ![]() . We set the initial value of the state of each node equal to ![]() and run the distributed projection algorithm described above. After convergence, we simply take the exponential of the result.
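The homomorphic chain (logarithm, projection, exponential) can be sketched in 1D. The assumptions are as follows. There are two equal-power sources with a Lorentzian-like attenuation profile, and the fading is log-normal, so that its logarithm is zero-mean Gaussian. The basis consists of the first r cosines on the interval, orthonormalized by Gram-Schmidt. For brevity, the centralized projection (7.143) is used; under the conditions discussed above, the distributed iteration (7.144) converges to the same result. Source positions, spread, fading level, and r are all hypothetical.

```python
import math
import random


def orthonormal_basis(columns):
    """Modified Gram-Schmidt: orthonormal vectors spanning the same subspace."""
    basis = []
    for v in columns:
        w = list(v)
        for q in basis:
            proj = sum(wi * qi for wi, qi in zip(w, q))
            w = [wi - proj * qi for wi, qi in zip(w, q)]
        norm = math.sqrt(sum(wi * wi for wi in w))
        basis.append([wi / norm for wi in w])
    return basis


def project(basis, g):
    """s_hat = U U^T g, as in (7.143), with the columns of U stored in `basis`."""
    coeffs = [sum(qi * gi for qi, gi in zip(q, g)) for q in basis]      # U^T g
    return [sum(c * q[i] for c, q in zip(coeffs, basis)) for i in range(len(g))]


rng = random.Random(7)
N, side, r = 200, 1.0, 9
pos = [(i + 0.5) * side / N for i in range(N)]
sources = [(0.3, 1.0), (0.75, 1.0)]   # hypothetical (location, power) pairs
spread = 0.3                          # hypothetical spatial spread
field = [sum(P / (1.0 + ((x - x0) / spread) ** 2) for x0, P in sources) for x in pos]
fading = [math.exp(rng.gauss(0.0, 0.5)) for _ in pos]    # assumed log-normal fading
measured = [f * a for f, a in zip(field, fading)]

U = orthonormal_basis([[math.cos(math.pi * k * x / side) for x in pos] for k in range(r)])
log_hat = project(U, [math.log(m) for m in measured])    # denoise in the log domain
recon = [math.exp(v) for v in log_hat]                   # back to the power domain


def log_mse(est):
    """Mean squared error in the log domain with respect to the true field."""
    return sum((math.log(e) - math.log(f)) ** 2 for e, f in zip(est, field)) / N


print(f"log-domain MSE: measured {log_mse(measured):.4f}, reconstructed {log_mse(recon):.4f}")
```

Taking the logarithm is what makes a linear projector appropriate: the fading becomes an additive disturbance, most of whose energy lies outside the low-dimensional signal subspace.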

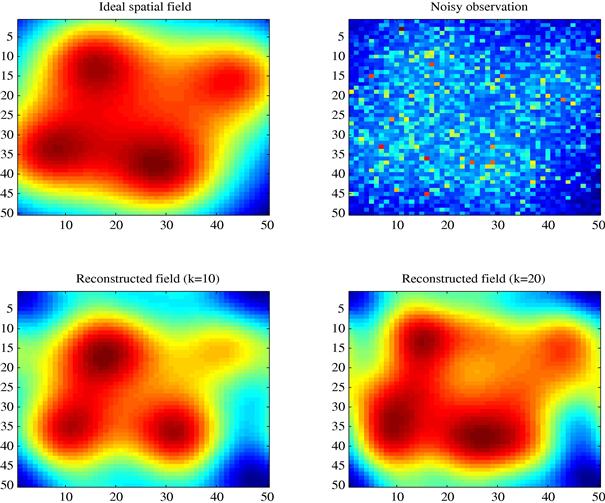

Figure 7.18 shows an example of application. In particular, the spatial behavior of the useful signal power is shown in the top-left plot, while the observation corrupted by fading is reported in the top-right plot. Note that, in the example at hand, the useful signal would require a Fourier series expansion with an infinite number of terms to null the modeling error. In our example, instead, we used two different finite orders, ![]() and ![]() . The corresponding reconstructions are shown in the bottom plots. Figure 7.18 shows that the proposed distributed approach significantly attenuates the fading phenomenon without destroying valuable signal variations.

Figure 7.18 Example of field reconstruction in the presence of fading: ideal spatial field (top left); measured field (top right); field reconstructed with order ![]() (bottom left) and ![]() (bottom right) [75].

2.07.7 Minimum energy consensus

Although distributed consensus algorithms have received a lot of attention because they can reach optimal decisions without the need of a fusion center, the price paid for this simplicity is that consensus algorithms are inherently iterative. As a consequence, the repeated exchange of data among the nodes might cause excessive energy consumption. Hence, to make consensus algorithms really appealing in practical applications, it is necessary to minimize the energy needed to reach consensus. The network topology plays a fundamental role in determining the convergence rate [74]: as the network connectivity increases, so does the convergence rate. However, a highly connected network entails a high power consumption to guarantee reliable direct links between the nodes. On the other hand, if the network is minimally connected, with only neighboring nodes connected to each other, little power is spent to maintain the few short-range links, but, at the same time, a long convergence time is required. Since what really matters in a WSN is the overall energy spent to achieve consensus, the problem of finding the network topology that minimizes the overall energy consumption, taking into account convergence time and transmit powers jointly, was considered in [76,77]. More specifically, [77] proposes a method for optimizing the network topology and the power allocation across every link in order to minimize the energy necessary to achieve consensus. Two different types of networks are considered: a) deterministic topologies, where node positions are arbitrary but known; b) random geometries, where the unknown node locations are modeled as random variables. We now review the methodology used in both cases.
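The sketch below shows the trade-off numerically using a simplified proxy that is our own assumption, not the metric (7.151) introduced in the next subsection. We place N nodes on a line with unit spacing and take a per-link power proportional to $d^{\alpha}$, where $\alpha$ is a hypothetical path-loss exponent. The number of iterations is taken proportional to $1/\lambda_2$, where $\lambda_2$ is the second-smallest eigenvalue of the graph Laplacian and governs the slowest mode of continuous-time consensus. Only the two extreme topologies are compared, because for them $\lambda_2$ is known in closed form: $2 - 2\cos(\pi/N)$ for the nearest-neighbor chain and $N$ for the complete graph.

```python
import math


def chain_energy(n, alpha):
    """Nearest-neighbor chain: 2(n - 1) directed links of unit length (cost 1 each,
    for any alpha); lambda_2 = 2 - 2 cos(pi / n)."""
    power = 2.0 * (n - 1)
    lam2 = 2.0 - 2.0 * math.cos(math.pi / n)
    return power / lam2


def complete_energy(n, alpha):
    """All-to-all links: directed link i -> j costs |i - j|**alpha; lambda_2 = n."""
    power = sum(abs(i - j) ** alpha for i in range(n) for j in range(n) if i != j)
    return power / n


for alpha in (2, 4):
    print(f"alpha={alpha}: chain {chain_energy(10, alpha):.1f}, "
          f"complete {complete_energy(10, alpha):.1f}")
```

With N = 10, the complete graph is slightly cheaper for $\alpha = 2$ but far more expensive for $\alpha = 4$. Neither extreme is always best, which is why the topology must be optimized by jointly accounting for convergence time and transmit power.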

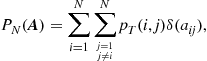

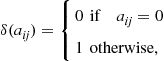

2.07.7.1 Optimization criterion

By considering only the power spent to enable wireless communications, the overall energy consumed to reach consensus can be written as the product of the total power $P_{tot}$ needed to establish the communication links among the nodes and the number of iterations $N_{it}$ needed to achieve consensus. The exchange of information among the nodes is assumed to take place in a slotted system, with a medium access control (MAC) mechanism that prevents packet collisions. The number of iterations can be approximated as $N_{it} \approx T_c/T_s$, where $T_s$ denotes the duration of a time slot and

$$T_c = \frac{1}{\lambda_2(\mathbf{L})}$$

is the convergence time, defined as the time necessary for the slowest mode of the dynamical system (7.23) to be reduced by a factor $e$. The total power spent by the network in each iteration is then $P_{tot} = \sum_{i=1}^{N}\sum_{j=1}^{N} a_{ij}\,p_{ij}$, where the coefficient $p_{ij}$ denotes the power transmitted by node i to node j, while the binary coefficients $a_{ij}$ indicate the presence ($a_{ij}=1$) or absence ($a_{ij}=0$) of a link between nodes i and j. Our goal is to minimize the energy consumption expressed by the following metric:

$$\mathcal{E}(\mathbf{p},\mathbf{a}) = K\,\frac{\sum_{i=1}^{N}\sum_{j=1}^{N} a_{ij}\,p_{ij}}{\lambda_2(\mathbf{L}(\mathbf{a}))} \qquad (7.151)$$

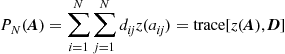

where $K$ incorporates all irrelevant constants, N is the number of sensors, and $\mathbf{L}(\mathbf{a})$ is the Laplacian matrix, which depends on the vector $\mathbf{a}$ containing all the coefficients $a_{ij}$. More specifically, we aim to find the set of active links, i.e., the nonzero coefficients $a_{ij}$, and the powers $p_{ij}$ that minimize the energy consumption (7.151), under the constraint of guaranteeing network connectivity, i.e., enforcing $\lambda_2(\mathbf{L}(\mathbf{a})) > 0$. The problem can be formulated as follows [77]:

$$[\mathrm{P.0}]\qquad \min_{\mathbf{p},\,\mathbf{a}}\; \frac{\sum_{i=1}^{N}\sum_{j=1}^{N} a_{ij}\,p_{ij}}{\lambda_2(\mathbf{L}(\mathbf{a}))} \quad \text{s.t.}\quad \lambda_2(\mathbf{L}(\mathbf{a})) \ge \epsilon,\;\; a_{ij}\in\{0,1\},\;\; p_{ij} \ge 0 \qquad (7.152)$$

where ![]() is an arbitrarily small positive constant used to ensure network connectivity and

is an arbitrarily small positive constant used to ensure network connectivity and ![]() is the vector with entries

is the vector with entries ![]() . Since the topology coefficients are binary variables, [P.0] is a combinatorial problem, with complexity increasing with the size N of the network as

. Since the topology coefficients are binary variables, [P.0] is a combinatorial problem, with complexity increasing with the size N of the network as ![]() . In [77] we have modified [P.0] in order to convert it into a convex problem, with negligible performance losses. A first simplification comes from observing that the coefficients

. In [77] we have modified [P.0] in order to convert it into a convex problem, with negligible performance losses. A first simplification comes from observing that the coefficients ![]() and

and ![]() are dependent of each other through the radio propagation model so that the set of unknowns can be reduced to the set of powers

are dependent of each other through the radio propagation model so that the set of unknowns can be reduced to the set of powers ![]() . More specifically, by assuming flat fading channel, we can assume that the power

. More specifically, by assuming flat fading channel, we can assume that the power ![]() received by node j when node i transmits is given by

received by node j when node i transmits is given by

![]() (7.153)

(7.153)

where ![]() is the distance between nodes i and

is the distance between nodes i and ![]() is the path loss exponent, and the parameter

is the path loss exponent, and the parameter ![]() corresponds to the so called Fraunhofer distance. We have included in the denominator the unitary term to avoid the unrealistic situation in which the received power could be greater than the transmitted one. Given the propagation model (7.153), the relation between the power coefficients

corresponds to the so called Fraunhofer distance. We have included in the denominator the unitary term to avoid the unrealistic situation in which the received power could be greater than the transmitted one. Given the propagation model (7.153), the relation between the power coefficients ![]() and the topology coefficients

and the topology coefficients ![]() is then

is then

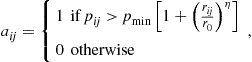

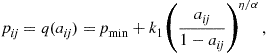

(7.154)

(7.154)

where ![]() is the minimum power needed at the receiver side to establish a communication. In [77] we have shown how to relax this relation in order to simplify the solution of the optimal topology control problem considering both the deterministic and random topology.

is the minimum power needed at the receiver side to establish a communication. In [77] we have shown how to relax this relation in order to simplify the solution of the optimal topology control problem considering both the deterministic and random topology.
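The quantities in (7.151)-(7.154) can be combined in a few lines. The sketch below is a minimal illustration, assuming NumPy, symmetric links (both directions of an active link are counted in the power sum), and every active link driven at the smallest power allowed by (7.154); the function names and the default values of $K$, $P_{\min}$, $d_0$ and $\eta$ are hypothetical.

```python
import numpy as np

def min_link_power(d, p_min, d0, eta):
    """Smallest transmit power satisfying (7.154): p_ij >= P_min * (1 + (d_ij/d0)**eta)."""
    return p_min * (1.0 + (d / d0) ** eta)

def lambda2(adj):
    """Second-smallest eigenvalue of the Laplacian L = D - A (assumes symmetric adjacency)."""
    lap = np.diag(adj.sum(axis=1)) - adj
    return np.linalg.eigvalsh(lap)[1]

def consensus_energy(pos, adj, p_min=1.0, d0=1.0, eta=2.0, K=1.0):
    """Energy metric (7.151) for node positions `pos` (N x 2) and 0/1 adjacency `adj`.

    Every active link uses its minimum power from (7.154); both directions of a
    symmetric link contribute to the power sum.
    """
    d = np.linalg.norm(pos[:, None, :] - pos[None, :, :], axis=-1)  # distances d_ij
    p = min_link_power(d, p_min, d0, eta)
    lam2 = lambda2(adj)
    if lam2 <= 1e-12:
        return np.inf  # disconnected graph: consensus is never reached
    return K * np.sum(adj * p) / lam2

# Usage: energy of the complete graph on 8 random nodes in a 5 x 5 area.
rng = np.random.default_rng(0)
pos = rng.uniform(0.0, 5.0, size=(8, 2))
full = np.ones((8, 8)) - np.eye(8)
print(consensus_energy(pos, full, eta=2.0))
```

Driving each active link at its minimum power is the natural choice here: for a fixed binary topology $\mathbf{a}$, $\lambda_2(\mathbf{L}(\mathbf{a}))$ does not depend on $\mathbf{p}$, so only the numerator of (7.151) can be reduced.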

2.07.7.2 Optimal topology and power allocation for arbitrary networks

When the distances between the nodes are known, finding the optimal solution of problem [P.0] requires a combinatorial search, which makes the problem numerically very hard to solve. In [77], we relaxed problem [P.0] so that, instead of requiring $a_{ij}$ to be binary, we let $a_{ij}$ be a real variable belonging to the interval $[0,1]$. This relaxation is the first step in transforming the previous problem into a convex one. More specifically, we introduced the following relationship between the coefficients $a_{ij}$ and the distances $d_{ij}$:

![]() (7.155)

where $\beta$ is a positive coefficient and $r_{ij}$ is the coverage radius, which depends on the transmit power. According to (7.155), $a_{ij}$ is close to one when node j is within the coverage radius of node i, i.e., $d_{ij} < r_{ij}$, whereas $a_{ij}$ is close to zero when $d_{ij} > r_{ij}$. The switch from zero to one can be made steeper by increasing $\beta$. In [77] we expressed the coefficients $a_{ij}$ as a function of $p_{ij}$:

(7.156)

with ![](). Consequently, we can reduce the set of variables to the power vector $\mathbf{p}$ alone, and problem [P.0] can be relaxed into the following problem:

(7.157)

The first important result proved in [77] is that problem [P.1] is a convex-concave fractional problem if ![](), so that any of the methods for solving quasi-convex optimization problems can be used; see, e.g., [78,79]. In [77] we used the nonlinear parametric formulation proposed in [79]. Hence, we further converted the convex-concave fractional problem [P.1] into the following equivalent parametric problem in terms of the vector $\mathbf{p}$:

(7.158)

where ![]() and the parameter $\delta$ controls the trade-off between total transmit power and convergence time.

The optimization problem [P.2] is a convex parametric problem [77], so an optimal solution can be found with efficient numerical tools. Furthermore, using Dinkelbach's algorithm [79], we can also find the optimal value of the parameter $\delta$ in [P.2].
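Dinkelbach's method [79] is easy to state: for $\min_x f(x)/g(x)$ with $g(x)>0$, it repeatedly solves the parametric problem $\min_x f(x) - \delta\,g(x)$ and updates $\delta$ to the ratio attained by the minimizer, stopping when the parametric optimum reaches zero. In the setting above, $f$ would play the role of the total transmit power and $g$ that of $\lambda_2$. The following Python sketch is generic and takes the inner solver as a hypothetical callable; the toy problem $\min_{x>0}(x^2+1)/x$, whose minimum value 2 is attained at $x=1$, is chosen only because its parametric subproblem has the closed-form minimizer $x=\delta/2$.

```python
def dinkelbach(numer, denom, solve_inner, x0, tol=1e-10, max_iter=100):
    """Dinkelbach's method for min_x numer(x) / denom(x), assuming denom(x) > 0.

    solve_inner(delta) must return a minimizer of numer(x) - delta * denom(x).
    The optimal ratio is the root of F(delta) = min_x [numer(x) - delta * denom(x)].
    """
    x = x0
    delta = numer(x) / denom(x)              # start from the ratio at a feasible point
    for _ in range(max_iter):
        x = solve_inner(delta)
        f_val = numer(x) - delta * denom(x)  # F(delta) <= 0 since delta is an attained ratio
        if abs(f_val) < tol:
            break                            # F(delta) = 0: delta is the optimal ratio
        delta = numer(x) / denom(x)          # ratio attained by the new minimizer
    return x, delta

# Toy problem: minimize (x**2 + 1) / x over x > 0.
def numer(x):
    return x ** 2 + 1.0

def denom(x):
    return x

def solve_inner(delta):
    return delta / 2.0  # minimizer of x**2 + 1 - delta * x

x_opt, ratio = dinkelbach(numer, denom, solve_inner, x0=3.0)
print(x_opt, ratio)
```

The iteration converges superlinearly, which is why only a handful of parametric subproblems typically need to be solved.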

2.07.7.3 Numerical examples

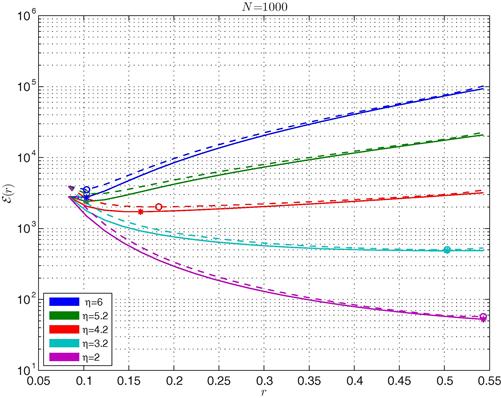

Since our optimization procedure is based on a relaxation technique, we have evaluated the impact of the relaxation on the final topology and performance.

More specifically, the topology coefficients ![]() obtained by solving [P.2] are real variables belonging to the interval

obtained by solving [P.2] are real variables belonging to the interval ![]() , so that, to obtain the network topology, it is necessary a quantization step to convert them into binary values, 1 or 0, by comparing each

, so that, to obtain the network topology, it is necessary a quantization step to convert them into binary values, 1 or 0, by comparing each ![]() with a threshold

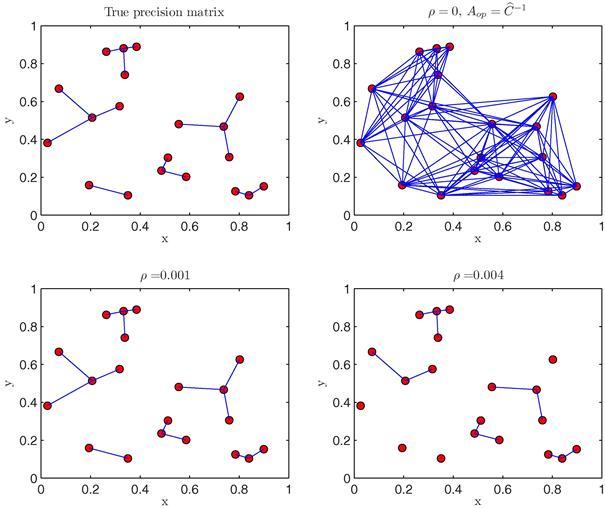

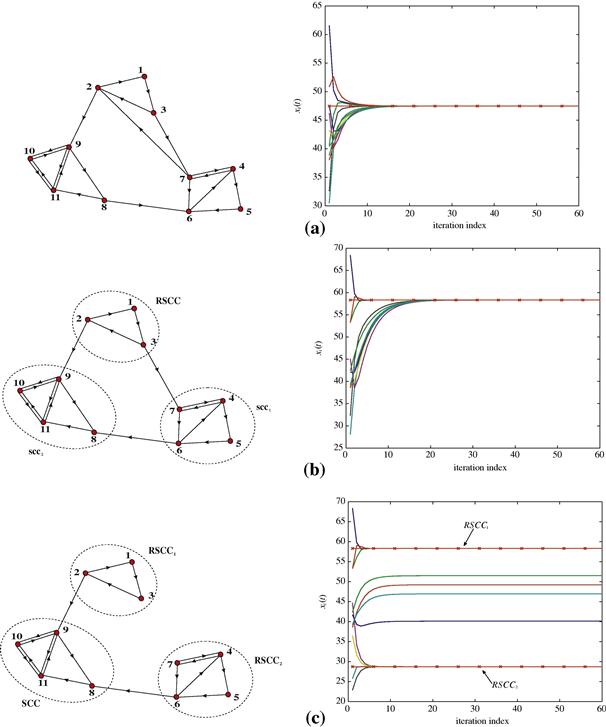

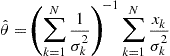

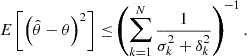

with a threshold ![]() . It has been shown that the loss in terms of optimal energy due to the relaxation of the original problem is negligible. To evaluate the impact of thresholding operation, in Figure 7.19 we show the topologies obtained by solving problem [P.2], for a network composed of

. It has been shown that the loss in terms of optimal energy due to the relaxation of the original problem is negligible. To evaluate the impact of thresholding operation, in Figure 7.19 we show the topologies obtained by solving problem [P.2], for a network composed of ![]() nodes, using different values of

nodes, using different values of ![]() and assuming

and assuming ![]() . Comparing the four cases reported in Figure 7.19, we can note that for a large range of values of

. Comparing the four cases reported in Figure 7.19, we can note that for a large range of values of ![]() , the final topology is practically the same, while only for very low values of the threshold (i.e., case (d)), we can observe a sensitive change of topology. This means that the relaxation method is robust against the choice of the final threshold.

, the final topology is practically the same, while only for very low values of the threshold (i.e., case (d)), we can observe a sensitive change of topology. This means that the relaxation method is robust against the choice of the final threshold.
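The thresholding step can be sketched as follows, assuming NumPy and a symmetric matrix of relaxed coefficients. The matrix below is made up for illustration and is not a solution of [P.2]; `quantize_topology` and `is_connected` are hypothetical helper names.

```python
import numpy as np

def quantize_topology(a_relaxed, gamma):
    """Binary topology from relaxed coefficients in [0, 1]: a_ij -> 1 if a_ij >= gamma, else 0."""
    adj = (a_relaxed >= gamma).astype(float)
    np.fill_diagonal(adj, 0.0)  # no self-loops
    return adj

def is_connected(adj, tol=1e-9):
    """Connectivity test via lambda_2 of the Laplacian (assumes symmetric adjacency)."""
    lap = np.diag(adj.sum(axis=1)) - adj
    return bool(np.linalg.eigvalsh(lap)[1] > tol)

# Hypothetical relaxed coefficients: near 1 on the path 0-1-2-3, near 0 elsewhere.
a_relaxed = np.array([
    [0.00, 0.97, 0.02, 0.01],
    [0.97, 0.00, 0.95, 0.03],
    [0.02, 0.95, 0.00, 0.96],
    [0.01, 0.03, 0.96, 0.00],
])

for gamma in (0.9, 0.5, 0.1, 0.015):
    adj = quantize_topology(a_relaxed, gamma)
    print(gamma, int(adj.sum()) // 2, "links, connected:", is_connected(adj))
```

With these values, any threshold above 0.03 and up to 0.95 yields the same path 0-1-2-3, while a very low threshold such as 0.015 also activates the links (0,2) and (1,3), mirroring the qualitative behavior described for case (d).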

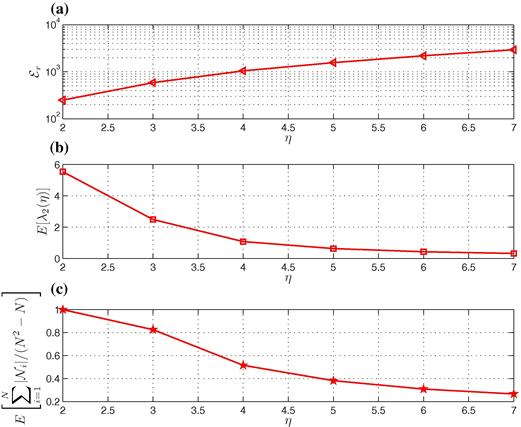

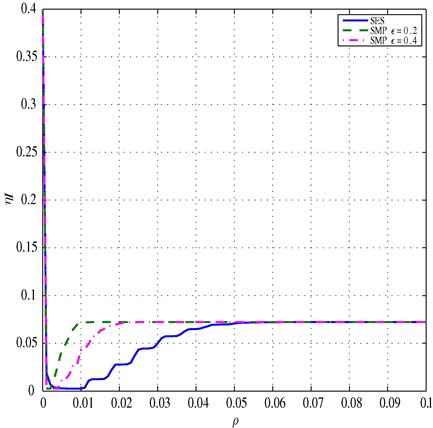

The previous results pertain to a specific realization of the node locations. To provide results of more general validity, Figure 7.20 reports the average values of (a) the energy ![](), (b) ![](), and (c) the fraction of active links ![](), as a function of the path loss exponent $\eta$, setting ![](). From Figure 7.20, we observe that when the attenuation is high (i.e., $\eta$ is large), reducing the number of links (making the topology sparser) is more important than reducing the convergence time. Conversely, when the attenuation is low (i.e., $\eta$ is small), increasing network connectivity is more important than reducing power consumption.
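The effect of the path loss exponent can be reproduced qualitatively on a toy example that is not the setting of Figure 7.20: five nodes equally spaced on a line, compared under a nearest-neighbor chain and a complete topology, with the energy (7.151) evaluated for $K=1$, $P_{\min}=1$, $d_0=1$ and minimum link powers from (7.154). All of these values are assumptions chosen for illustration, and the sketch assumes NumPy.

```python
import numpy as np

def energy(adj, pos, eta, p_min=1.0, d0=1.0):
    """Energy metric (7.151) with K = 1 and minimum link powers from (7.154); nodes on a line."""
    d = np.abs(pos[:, None] - pos[None, :])      # pairwise distances d_ij
    p = p_min * (1.0 + (d / d0) ** eta)          # minimum power per directed link
    lap = np.diag(adj.sum(axis=1)) - adj
    return np.sum(adj * p) / np.linalg.eigvalsh(lap)[1]

n = 5
pos = np.arange(n, dtype=float)                  # unit spacing, equal to d0
chain = np.diag(np.ones(n - 1), 1)
chain = chain + chain.T                          # nearest-neighbor links only
full = np.ones((n, n)) - np.eye(n)               # every pair linked

for eta in (2.0, 3.0, 4.0):
    print(f"eta={eta}: chain {energy(chain, pos, eta):.1f}, complete {energy(full, pos, eta):.1f}")
```

For $\eta=2$ the complete graph needs less energy (24 versus about 41.9) because its much larger $\lambda_2$ outweighs the cost of the long links; for $\eta=4$ the long links become so expensive that the sparse chain wins (about 41.9 versus 192). The chain's energy does not change with $\eta$ here because all of its links have length $d_0$.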

2.07.7.4 Minimization of the energy consumption over random geometric graphs

Let us now consider the problem of minimizing the energy consumption of a sensor network modeled as a random geometric graph (RGG). We use the symbol $\mathcal{G}(N,r)$ to indicate an RGG composed of N points with coverage radius r.
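An RGG on the unit torus is straightforward to simulate. The sketch below, assuming NumPy, draws an RGG with N points and coverage radius r using wrap-around distances, and compares the empirical mean degree with $(N-1)\pi r^2$, the expected degree of any node when $r<1/2$ (each of the other $N-1$ points falls within distance r with probability $\pi r^2$). The function name, the seed, and the values of N and r are arbitrary choices for illustration.

```python
import numpy as np

def rgg_torus(n, r, rng):
    """Adjacency of an RGG: n points uniform on the unit torus [0, 1)^2,
    linked when their wrap-around (toroidal) distance is below r."""
    pts = rng.random((n, 2))
    diff = np.abs(pts[:, None, :] - pts[None, :, :])
    diff = np.minimum(diff, 1.0 - diff)          # shortest way around each coordinate
    dist = np.sqrt((diff ** 2).sum(axis=-1))
    adj = (dist < r).astype(float)
    np.fill_diagonal(adj, 0.0)                   # remove self-loops
    return adj

rng = np.random.default_rng(1)
n, r = 1000, 0.08
adj = rgg_torus(n, r, rng)
deg = adj.sum(axis=1)
expected = (n - 1) * np.pi * r ** 2              # valid for r < 1/2
print(f"mean degree {deg.mean():.2f}, expected {expected:.2f}, "
      f"relative spread {deg.std() / deg.mean():.3f}")
```

Rerunning with larger N (and r scaled to keep the graph connected) is a simple way to observe how the degrees concentrate around their mean.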

In [74], it has been shown that the degree of an RGG $\mathcal{G}(N,r)$ of points uniformly distributed over a two-dimensional unit torus⁷ is equal to

![]() (7.159)

with high probability, i.e., with probability ![](), if the radius r scales as in (7.2). This implies that if the coverage radius is chosen so as to guarantee connectivity with high probability, an RGG tends to behave, asymptotically, as a regular graph. In order to calculate the convergence rate, we have to derive the second eigenvalue of the Laplacian, $\lambda_2(\mathbf{L})$, where