Multitarget Multisensor Tracking

X. Chen, R. Tharmarasa and T. Kirubarajan, ECE Department, McMaster University, Hamilton, Ontario, Canada

Abstract

Multitarget-Multisensor tracking is a category of widely used techniques that are applicable to fields like air traffic control, air/ground/maritime surveillance, transportation, video monitoring and biomedical imaging/signal processing. In this chapter, various multitarget-multisensor tracking algorithms to handle state estimation, data association, track initialization, spatial clutter intensity estimation, debaising, and multisensor fusion in centralized/distributed/decentralized architecture are discussed in detail, including their quantitative and qualitative merits. In addition, several evaluation metrics are presented to measure the performance of different multitarget-multisensor tracking systems. Various combinations of these algorithms and performance evaluation metrics will provide a complete tracking and fusion framework for multisensor networks with application to civilian as well as military problems. The application of some of these algorithms and performance evaluation metrics is demonstrated on a representative real scenario, where several closely spaced targets are tracked using a radar system.

Keywords

Multitarget tracking; Multisensor fusion; Bayesian filtering; Clutter estimation; Tracklet; Data association

3.15.1 Introduction

Multisensor-multitarget tracking is an emerging technology in which measurements from several sensors are combined such that resulting tracks are significantly better than that obtained when these devices operate individually. Recent advances in sensor technologies, signal processing techniques and improved processor capabilities make it possible for large amounts of data to be fused in real-time. These technical advancements allow the use of many sophisticated algorithms and robust mathematical techniques in multisensor-multitarget tracking. Furthermore, multisensor-multitarget tracking has received significant attention for military applications. Such applications involve a wide range of expertise including filtering, tracking initialization and maintenance, data association, and performance evaluation.

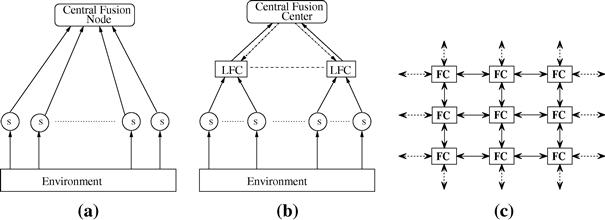

Three major types of architecture, namely, centralized, distributed and decentralized, are commonly used in multisensor-multitarget tracking applications [9,62,92,126]. In the centralized architecture, there are several sensors monitoring the region of interest with only one fusion center. All sensors report their measurements to the fusion center. It is the fusion center’s responsibility to process all acquired measurements and update the tracks. The single sensor-multitarget tracking problem can be considered as a special case of the centralized architecture, where only one sensor is deployed to observe the region of interest. In the distributed multisensor-multitarget tracking architecture, there are several fusion centers. One of them is the Central Fusion Center (CFC) and the remaining ones are Local Fusion Centers (LFCs). Measurements generated by the sensors are first processed by the LFCs and local tracks are updated inside each LFC. Then, local tracks from each LFC are reported to the CFC and the track-to-track fusion is accomplished by the CFC to form the global track set. In decentralized tracking architecture, each fusion center (FC) can be considered as a combination of LFC and CFC. Each FC is connected with several sensors and measurements reported by those sensors are used to update the track state inside the FC. Furthermore, each FC will also do track-to-track fusion whenever it receives additional information from its neighboring FCs. Usually, without a major modification, the algorithms developed for the distributed tracking architecture can be used to handle the decentralized tracking architecture. Regardless whether the sensor measurements are processed in the centralized or the distributed architecture, the data of each sensor has to be converted to a common coordinate system before multisensor-multitarget tracking, i.e., sensor registration and data alignment [39,54,74].

Filtering plays a vital role in multitarget tracking by obtaining the state estimate from the measurements received from one or more sensors. Tracking filters [10,31] can be broadly categorized as either linear or nonlinear. The Kalman filter [10,53] is a widely known recursive filter that is most suited for linear Gaussian systems. However, most systems are inherently nonlinear. The extensions of Kalman filter, such as extended Kalman filter (EKF) [10] and unscented Kalman filter (UKF) [52,105,128] are applicable to nonlinear systems. Both EKF and UKF are restricted in that the resulting probability densities are approximated as Gaussian. When the system is nonlinear and non-Gaussian, particle filters (or sequential Monte Carlo methods) [3,33,38,100] provide better estimates than many other filtering algorithms. In general, a tracking filter requires a model for target dynamics, and a model mismatch would diminish the performance of the filter. Thus, different models may be required to describe the target dynamics accurately at different times, especially in the case of maneuvering targets, whose kinematic models may evolve in a time-varying manner. Multiple model tracking algorithms such as the Interacting Multiple Model (IMM) [1,10,14–16,18] estimator, which contains a bank of models matched to different modes of possible target dynamics, would perform better in such situations.

Data association [9,13,113] is an essential component in multisensor-multitarget tracking due to the uncertainty in the data origin. Data association refers to the methodology of correctly associating the measurements to tracks, measurements to measurements [58,106] or tracks to tracks [2,6,8,9,19,22,127], depending on the fusion architecture. To address data association, a number of techniques have been developed and two widely used are single-frame assignment algorithm [11,95] and the multi-frame assignment algorithm [11,17,27,32,59,63,95].

Many algorithms have been proposed for the single sensor-multitarget tracking problem, such as the Probability Data Association (PDA) algorithm and the Joint Probability Data Association (JPDA) algorithm [9], the Multiple Hypotheses Tracking (MHT) algorithm [12], and the Probability Hypothesis Density (PHD) filter [78]. In PDA and JPDA algorithms, for each scan the track-to-measurement association events are enumerated and combined probabilistically, while in the MHT algorithm the track-to-measurement association history over several scans are enumerated and updated. In PHD filter, the track-to-measurement association events are not explicitly constructed. Although these algorithms are originally proposed to handle the single sensor-multitarget tracking problem, they are also widely used as the backbone for the multisensor-multitarget tracking.

In many scenarios, after the signal detection process, clutter points provided by the sensor (e.g., sonar, infrared sensor, radar) are not distributed uniformly in the surveillance region as assumed by most tracking algorithms. On the other hand, in order to obtain accurate results, the target tracking filter requires information about clutter’s spatial intensity. Thus, nonhomogeneous clutter spatial intensity has to be estimated from the measurement set and the tracking filter’s output. Also, in order to take advantage of existing tracking algorithms, it is desirable for the clutter estimation method to be integrated into the tracker itself.

Performance evaluation is very important for multisensor-multitarget tracking problem, especially when the performance of different tracking algorithms needs to be compared. Many measures of performance have been proposed in multisensor-multitarget tracking literatures. These measures can be divided into two different classes: sensor-related measures and tracker-related measures [40]. Sensor-related measures are independent of the tracking algorithm, therefore, most of them are not useful for the performance evaluation of multiple trackers. One exception is the Posterior Cramér-Rao Lower Bound (PCRLB) of tracking [10,44,46], which provides a minimum bound of any tracking estimation. On the other hand, tracker-related measures have been widely used in the tracker performance evaluation. For general multitarget tracking problem, tracker-related measures have been defined in terms of cardinality, time and accuracy measures [66,103]. To evaluate a general multitarget tracking problem, a combination of tracker-related measures should be used, because as shown in [29], it has been observed that the tracker may have provided inaccurate results while some measures show correct and satisfactory performances.

In this chapter, various multisensor-multitarget tracking architectures, estimators for spatial clutter intensity, filters for linear and nonlinear systems, algorithms for data associations and multitarget tracking, techniques used in centralized and distributed track-to-track fusion are discussed in detail. In addition, their quantitative and qualitative merits are discussed. Various combinations of these algorithms will provide a complete tracking framework for multisensor networks with application to civilian as well as military problems. For example, the tracking and fusion techniques discussed here are applicable to fields like air traffic control, air/ground/maritime surveillance, mobile communication, transportation, video monitoring and biomedical imaging/signal processing. The tracker performance evaluation, including its guiding principle and several measures of performance, is also discussed in this chapter. A challenging scenario with many closely-spaced targets is used to compare several multitarget tracking algorithms.

3.15.2 Formulation of multisensor-multitarget tracking problems

In a multisensor surveillance systems, several sensors such as radar, infrared (IR), and sonar, report their measurements to the tracker at regular intervals of time (scans or data frames). However, not all measurements are originated from the targets of interest; some measurements may come from physical background objects such as clutter, and others may be generated by thermal noise. In other words, there is a measurement origin ambiguity. Furthermore, in some scans, the target of interest does not produce any measurements at all (i.e., the probability of detection is less than unity). The objective of a typical multisensor-multitarget tracking system is to first partition all sensors’ measurements into sets, such that all observations in one set are produced by the same object (i.e., data-association process); then the measurements corresponding to the same object are processed in order to estimate the state of the object (i.e., filtering process) [9,12].

To handle the multisensor-multitarget tracking problem, usually the Bayesian approach is applied. In the Bayesian approach, the final goal is to construct the posterior probability density function (pdf) of the multitarget state given all the received measurements so far. Since this pdf contains all available statistical information, it is the complete solution to the multisensor-multitarget tracking problem. In principle, given a cost function, it is always possible to obtain the optimal estimate under the given cost function from the posterior probability. Note that, the state of the multitarget system should be a combination of the number of the targets and the state of each target, because in a real scenario both of them are random and unknown [78,120].

To distinguish the target-originated measurements from the clutter and estimate the state of the multitarget system, the following three models, namely, the target dynamic model, the sensor model, and the clutter model are crucial for all multisensor-multitarget tracking systems.

3.15.2.1 Target dynamic models

Target dynamic model, which is also known as system model, describes the evolution of the state with time, and is given by

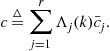

![]() (15.1)

(15.1)

where ![]() is, in general, a nonlinear function,

is, in general, a nonlinear function, ![]() is the state of the target, and

is the state of the target, and ![]() is the process noise, which is usually assumed to be Gaussian. The covariance of the process noise multiplied by the gain

is the process noise, which is usually assumed to be Gaussian. The covariance of the process noise multiplied by the gain ![]() is

is

![]() (15.2)

(15.2)

The following models are widely used by the multitarget tracker as the target dynamic model: [10]

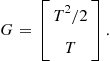

• Constant velocity:

The state vector in one generic coordinate is

![]() (15.3)

(15.3)

The ![]() and

and ![]() in one generic coordinate are

in one generic coordinate are

(15.4)

(15.4)

(15.5)

(15.5)

The process noise standard deviation ![]() is

is

![]() (15.6)

(15.6)

where ![]() is the scaling factor and

is the scaling factor and ![]() is the maximum acceleration.

is the maximum acceleration.

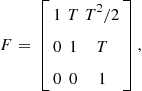

• Constant acceleration:

The state vector in one generic coordinate is

![]() (15.7)

(15.7)

The ![]() and

and ![]() in one generic coordinate are

in one generic coordinate are

(15.8)

(15.8)

(15.9)

(15.9)

The process noise standard deviation ![]() is

is

![]() (15.10)

(15.10)

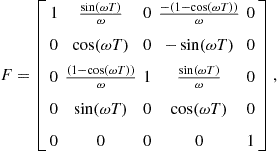

• Coordinated turn:

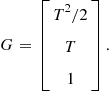

The state vector is

![]() (15.11)

(15.11)

The ![]() and

and ![]() are

are

(15.12)

(15.12)

(15.13)

(15.13)

The process noise standard deviations ![]() and

and ![]() are

are

![]() (15.14)

(15.14)

![]() (15.15)

(15.15)

where ![]() and

and ![]() are the scaling factors for velocity and turn rate, respectively,

are the scaling factors for velocity and turn rate, respectively, ![]() is the maximum turn rate change in unit time.

is the maximum turn rate change in unit time.

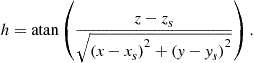

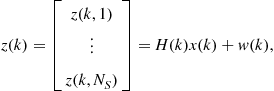

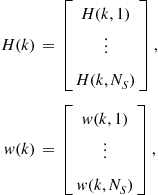

3.15.2.2 Sensor models

Sensor model, which is also known as measurement model, is a model relating the noisy measurements to the state and given by

![]() (15.16)

(15.16)

where ![]() is, in general, nonlinear functions,

is, in general, nonlinear functions, ![]() is the state of the target,

is the state of the target, ![]() is the measurement vector, and

is the measurement vector, and ![]() is the measurement noise at measurement time

is the measurement noise at measurement time ![]() , which is usually assumed to be Gaussian. The following measurement models are typical for the multisensor-multitarget tracking system:

, which is usually assumed to be Gaussian. The following measurement models are typical for the multisensor-multitarget tracking system:

![]() (15.17)

(15.17)

![]() (15.18)

(15.18)

![]() (15.19)

(15.19)

![]() (15.20)

(15.20)

For 2D tracking, the terms related to ![]() must be deleted.

must be deleted.

![]() (15.21)

(15.21)

For 2D tracking, the terms related to ![]() must be deleted.

must be deleted.

![]() (15.22)

(15.22)

![]() (15.23)

(15.23)

(15.24)

(15.24)

(15.25)

(15.25)

![]() (15.26)

(15.26)

![]() (15.27)

(15.27)

In (15.27),

![]()

3.15.2.3 A clutter model

For a sensor with ![]() resolution cells, sometimes detections will be declared in those cells that are pointed to a region without any targets of interest. The detection, which is not produced by any targets of interest, is know as a false alarm (i.e., clutter). Assume:

resolution cells, sometimes detections will be declared in those cells that are pointed to a region without any targets of interest. The detection, which is not produced by any targets of interest, is know as a false alarm (i.e., clutter). Assume:

• The events of detection in each cell is independent of each other.

• The probability of false alarm is equal to ![]() in each cell and

in each cell and ![]() .

.

Then the probability mass function (pmf) of the number of false alarm in these ![]() resolution cells,

resolution cells, ![]() , is approximately following the Poisson distribution [9]

, is approximately following the Poisson distribution [9]

![]() (15.28)

(15.28)

Furthermore, the spatial distribution of the false alarm is uniform based on the above three assumptions. Thus, if the granularity due to the size of the resolution cells can be neglected, the pdf of a false measurement, i.e., the clutter spatial intensity normalized by the expected number of clutter in the measurement space, is [9]

![]() (15.29)

(15.29)

where ![]() represents the volume of the sensor’s measurement space. The un-normalized clutter spatial intensity is

represents the volume of the sensor’s measurement space. The un-normalized clutter spatial intensity is

![]() (15.30)

(15.30)

3.15.2.4 Spatial clutter intensity estimation

Many target tracking algorithms assume that the clutter background is known or at least homogeneous. However, in real tracking problems, the distribution of clutter is often unknown and spatially non-homogeneous. Thus, there is usually a mismatch between the true spatial distribution of clutter points and the spatial distribution model used in the tracking filter. This mismatch may result in a high false track acceptance rate or a long delay of track initialization. Therefore, it is desirable for the tracking filter to estimate the spatial intensity of clutter from the measurement set. Also, due to the fact that target-originated measurement points and clutter points are indistinguishable before data association in the tracker, the output of tracking filter should also be used in order to get an unbiased estimate of clutter spatial intensity. Furthermore, estimation methods for clutter spatial distribution should be compatible with the existing target tracking algorithms, otherwise their application range would be limited.

One way to estimate clutter spatial intensity is to assume that clutter points are uniformly distributed in the validation gate and then use the sample spatial intensity as the estimate of clutter’s spatial intensity [9]. However, this method is based on the current measurement set alone and its performance relies on the volume of validation gate. For example, if the gate is so small that there are only a few measurements falling in it, the estimate of clutter’s spatial intensity may suffer from a large variance; on the other hand, if the gate is too large, then the uniform distribution assumption of clutter points may no longer hold. Also, this estimation method is biased, since it does not take into account target-originated measurements in the gate. In [67], in order to obtain an unbiased estimator of clutter’s spatial intensity, “track perceivability,” the probability that the target exists at the current time given all previous measurement [68], was used to handle target-originated measurements in the current measurement set.

In [43,87], the surveillance region was divided into sectors and clutter points in each sector were assumed to follow Poisson point processes. Based on the Poisson point processes assumption, three clutter spatial intensity estimators were discussed: the first one was based on the number of measurements falling in each sector, the second was based on each sector’s nearest neighbor measurement distance, which is equal to the distance from the center of the sector to its nearest measurement point, while the third was based on the inter-arrival time between two consecutive measurements falling in the same sector. In all three estimators, after obtaining the clutter intensity estimate based on the current measurement set, a time-averaging filter was used to smooth the clutter intensity estimate over time.

In [81,82], it was assumed that there are several unknown targets, called clutter generators, in a space which is disjoint from both the state space and the measurement space. All clutter points are generated by the clutter generator and an approximated Bayesian estimation method for the density of the clutter generator and the clutter is proposed. However, the proposed method is intractable and no practical implementation method was given in [81,82].

In [23], based on Poisson point processes, two methods for joint non-homogeneous clutter background estimation and multitarget tracking were presented. In that paper, non-homogeneous Poisson point processes, whose intensity function are assumed to be a mixture of Gaussian functions, were used to model clutter points. Based on this model, a recursive maximum likelihood method and an approximated Bayesian method using Normal-Wishart conjugate prior-posterior pair were proposed to estimate the non-homogeneous clutter spatial intensity. Both clutter estimation methods were integrated into the Probability Hypothesis Density (PHD) filter, which itself also uses the Poisson point process assumption. The mean and the covariance of each Gaussian function were estimated and used to calculate the clutter density in the update equation of the PHD filter. Simulation results showed that both methods were able to improve the performance of the PHD filter in the presence of slowly time varying non-homogeneous clutter background.

3.15.3 Filters

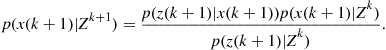

Filtering is the estimation of the state of a dynamic system from noisy data, based on the predefined target dynamic model and the sensor model. In recursive filtering, the received measurements are processed sequentially rather than as a batch so that it is neither necessary to store the complete measurement set nor to reprocess existing measurement if a new measurement becomes available. The Bayesian recursive filter is widely used in the multisensor-multitarget tracking area and such a filter consists of two stages: prediction and update.

The prediction stage uses the system model to predict the state pdf forward from one measurement time to the next. Suppose that the required pdf ![]() at measurement time

at measurement time ![]() is available, where

is available, where ![]() . The prediction stage involves using the system model (15.1) to obtain the prior pdf of the state at measurement time

. The prediction stage involves using the system model (15.1) to obtain the prior pdf of the state at measurement time ![]()

![]() (15.31)

(15.31)

The update stage uses the latest measurement ![]() to update the prior via Bayes’ formula

to update the prior via Bayes’ formula

(15.32)

(15.32)

The above recursive propagation of the posterior density is only a conceptual solution. Analytical formulas of the posterior density exist only in a restrictive set of cases.

3.15.3.1 Kalman filter

The Kalman filter assumes that the state and measurement models are linear, i.e., ![]() . Also, in the Kalman filter, the initial state error and all the noises entering into the system are assumed to be Gaussian, i.e.,

. Also, in the Kalman filter, the initial state error and all the noises entering into the system are assumed to be Gaussian, i.e., ![]() is white and Gaussian with zero mean and covariance

is white and Gaussian with zero mean and covariance ![]() , and

, and ![]() is white and Gaussian with zero mean and covariance

is white and Gaussian with zero mean and covariance ![]() . Under the above assumptions, if

. Under the above assumptions, if ![]() is Gaussian, it can be proved that

is Gaussian, it can be proved that ![]() is also Gaussian, which can be parameterized by a mean and covariance [10].

is also Gaussian, which can be parameterized by a mean and covariance [10].

The Kalman filter algorithm consists of the following recursive relationship [10]:

![]() (15.33)

(15.33)

![]() (15.34)

(15.34)

![]() (15.35)

(15.35)

![]() (15.36)

(15.36)

![]() (15.37)

(15.37)

![]() (15.38)

(15.38)

where

![]() (15.39)

(15.39)

The Kalman filter is the optimal solution to the tracking problem if the above assumptions hold because it provides the posterior probability density of targets state.

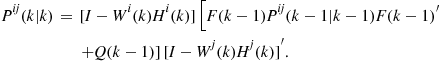

3.15.3.2 Extended Kalman filter (EKF)

While the Kalman filter assumes linearity, most of the real world problems are nonlinear. The extended Kalman filter is a suboptimal state estimation algorithm for nonlinear systems. In EKF, local linearizations of the equations are used to describe the nonlinearity,

![]() (15.40)

(15.40)

![]() (15.41)

(15.41)

The EKF assumes that ![]() can be approximated by a Gaussian. Then the equations of the Kalman filter can be used with this approximation and the linearized functions, except the state and measurement prediction are performed using the original nonlinear functions

can be approximated by a Gaussian. Then the equations of the Kalman filter can be used with this approximation and the linearized functions, except the state and measurement prediction are performed using the original nonlinear functions

![]() (15.42)

(15.42)

![]() (15.43)

(15.43)

The above is a first-order EKF based on the first-order series expansion of the nonlinearities. There are several error reduction methods to improve the performance of the EKF [10]. One of them is using the second-order series expansion of the nonlinearities, i.e., higher-order EKFs, but the additional complexity and little or no benefit has prohibited its widespread use. For continuous-time nonlinear systems, numerical integration on the continuous-time stochastic differential equation of the state from ![]() to

to ![]() can be used to obtain a better predicted state. The third approach to improve the EKF is using an iteration to compute the updated state as a maximum a posterior (MAP) estimate, rather than an approximate conditional mean. This type of EKF is called the Iterated extended Kalman filter (IEKF). The iteration used by the IEKF amounts to relinearize the measurement equation around the updated state rather than relying only on the predicted state. If the measurement model fully observes the state, then the IEKF is able to handle the non-linear measurement model better than the EKF [61].

can be used to obtain a better predicted state. The third approach to improve the EKF is using an iteration to compute the updated state as a maximum a posterior (MAP) estimate, rather than an approximate conditional mean. This type of EKF is called the Iterated extended Kalman filter (IEKF). The iteration used by the IEKF amounts to relinearize the measurement equation around the updated state rather than relying only on the predicted state. If the measurement model fully observes the state, then the IEKF is able to handle the non-linear measurement model better than the EKF [61].

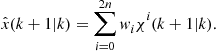

3.15.3.3 Unscented Kalman filter (UKF)

When the state transition and observation models are highly nonlinear, the EKF may perform poorly. The unscented Kalman filter does not approximate the nonlinear functions of state and measurement models as required by the EKF. Instead, the UKF uses a deterministic sampling technique known as the unscented transform to pick a minimal set of sample points called sigma points around the mean. Here, the propagated mean and covariance are calculated from the transformed samples [52]. In some UKF implementations, the state random variable is augmented as the concatenation of the original state and noise variables [123]. The steps of UKF are described below.

3.15.3.3.1 Sigma point generation

The state vector ![]() with mean

with mean ![]() and covariance

and covariance ![]() is approximated by

is approximated by ![]() weighted sigma points, where

weighted sigma points, where ![]() is the dimension of the state vector, as

is the dimension of the state vector, as

![]() (15.44)

(15.44)

![]() (15.45)

(15.45)

![]() (15.46)

(15.46)

where ![]() is the weight associated with the ith point,

is the weight associated with the ith point, ![]() is a scaling parameter,

is a scaling parameter, ![]() , and

, and ![]() is the ith row or column of the matrix square root of

is the ith row or column of the matrix square root of ![]() .

.

3.15.3.3.2 Recursion

1. Find the predicted target state ![]() and corresponding covariance

and corresponding covariance ![]() :

:

a. Transform the Sigma points using the system model

![]() (15.47)

(15.47)

(15.48)

(15.48)

c. Find the predicted covariance

(15.49)

(15.49)

2. Find the predicted measurement ![]() and the corresponding covariance

and the corresponding covariance ![]() :

:

a. Regenerate the Sigma points ![]() using the mean

using the mean ![]() and covariance

and covariance ![]() in order to incorporate the effect of

in order to incorporate the effect of ![]() . If

. If ![]() is zero, the resulting

is zero, the resulting ![]() will be the same as in (15.47). If the process noise is correlated with the state, then the noise vector must be stacked with the state vector

will be the same as in (15.47). If the process noise is correlated with the state, then the noise vector must be stacked with the state vector ![]() before generating the sigma points [52].

before generating the sigma points [52].

b. Find the predicted measurement mean ![]()

(15.50)

(15.50)

where

![]() (15.51)

(15.51)

c. Find the innovation covariance ![]() and gain

and gain ![]()

(15.52)

(15.52)

3. Update the state ![]() and corresponding covariance

and corresponding covariance ![]() using (15.37) and (15.38), respectively.

using (15.37) and (15.38), respectively.

3.15.3.4 Particle filter

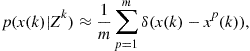

If the true density is substantially non-Gaussian, then a Gaussian model as in the case of the Kalman filter will not yield accurate estimates. In such cases, particle filters will yield an improvement in performance in comparison to the EKF or UKF. The particle filter provides a mechanism for representing the density, ![]() of the state vector

of the state vector ![]() at time epoch

at time epoch ![]() as a set of random samples

as a set of random samples ![]() , with associated weights

, with associated weights ![]() . That is, the particle filter attempts to represent an arbitrary density function using a finite number of points, instead of a pair of mean vector and covariance matrix that is sufficient for Gaussian distributions. Several variations of particle filters are available and the reader is referred to [3] for detailed description. The Sampling Importance Resampling (SIR) type particle filter, which is arguably the most common technique to implement particle filters, is discussed below. In general, the particles are sampled either from the prior density or likelihood function. Taking the prior as the importance density, the method of SIR is used to produce a set of equally weighted particles that approximates

. That is, the particle filter attempts to represent an arbitrary density function using a finite number of points, instead of a pair of mean vector and covariance matrix that is sufficient for Gaussian distributions. Several variations of particle filters are available and the reader is referred to [3] for detailed description. The Sampling Importance Resampling (SIR) type particle filter, which is arguably the most common technique to implement particle filters, is discussed below. In general, the particles are sampled either from the prior density or likelihood function. Taking the prior as the importance density, the method of SIR is used to produce a set of equally weighted particles that approximates ![]() , i.e.,

, i.e.,

(15.53)

(15.53)

where ![]() is the Dirac Delta function. The prediction and update steps of the particle filter recursion are given below.

is the Dirac Delta function. The prediction and update steps of the particle filter recursion are given below.

Prediction: Take each existing sample, ![]() and generate a sample

and generate a sample ![]() , using the system model. The set

, using the system model. The set ![]() provides an approximation of the prior,

provides an approximation of the prior, ![]() , at time

, at time ![]() .

.

Update: At each measurement epoch, to account for the fact that the samples, ![]() are not drawn from

are not drawn from ![]() , the weights are modified using the principle of importance sampling. When using the prior as the importance density, it can be shown that the weights are given by

, the weights are modified using the principle of importance sampling. When using the prior as the importance density, it can be shown that the weights are given by

![]() (15.54)

(15.54)

A common problem with the above recursion is the degeneracy phenomenon, whereby the particle set quickly collapses to just a single particle. To overcome this problem a regularization can be imposed via reselection as follows.

Reselection: Resample (with replacement) from ![]() , using the weights,

, using the weights, ![]() , to generate a new sample,

, to generate a new sample, ![]() , then set

, then set ![]() for

for ![]() .

.

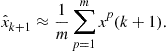

The mean of the posterior distribution is used to estimate, ![]() of the target state,

of the target state, ![]() , i.e.,

, i.e.,

(15.55)

(15.55)

The accuracy of the particle filter based estimate (15.53) depends on the number of particles employed. A more accurate state estimates can be obtained at the expense of extra computation. The extension of particle filters allows them to be applicable to multitarget tracking problems [60].

3.15.3.5 Interacting multiple-model estimator

Tracking maneuvering targets is a very important task for almost all practical systems. Several schemes and methods have been proposed to track maneuvering targets [69–73]. One of widely used method is the Multiple Mode (MM) approach [10]. In MM approach, the target system is assumed to follow one of predetermined models (or mods) and a number of filters operate in parallel. Thus, the system has both continuous uncertainties brought by the noise, and discrete uncertainties brought by the model uncertainty. Starting from the prior distribution of the target state and the prior probability that the system is in a particular mode, the goal of the MM approach is to obtain the posterior distribution of the target state and posterior mode probability.

Depending on whether mode jumping is allowed, there are static MM estimator and dynamic MM estimator. In static MM algorithms, it is assumed that there is no model switching from one mode to another during the whole estimation process and this assumption is not realistic for many real scenarios. On the other hand, in dynamic MM algorithms, the target is allowed to switch from one mode to another according to a Markov chain. Optimal dynamic MM estimator is infeasible since it needs to carry all the mode sequence hypotheses and the total number of the mode sequence hypotheses increases exponentially with time. To get a feasible dynamic MM suboptimal estimator, the mode sequences that only differ in “older” modes are combined and the mode sequences are kept to depth ![]() in the Generalized Pseudo-Bayesian (GPB) approaches [9,10]. A GPB algorithm of depth

in the Generalized Pseudo-Bayesian (GPB) approaches [9,10]. A GPB algorithm of depth ![]() (

(![]() ) requires

) requires ![]() filters in its bank, where

filters in its bank, where ![]() is the number of models.

is the number of models.

The Interacting Multiple Model (IMM) estimator [9,10,15,16], which mixes the mode sequence hypotheses with depth ![]() at the beginning of each filtering cycle, requires only

at the beginning of each filtering cycle, requires only ![]() number of filters to operate in parallel, (i.e., same as

number of filters to operate in parallel, (i.e., same as ![]() estimator) but is able to perform nearly as well as

estimator) but is able to perform nearly as well as ![]() . Thus, the IMM estimator is very cost-efficient. The main contributor for the cost-efficiency of the IMM estimator is the “mixing/interaction” between its “mode-matched” base state filtering modules at the beginning of each cycle. It has been shown in [7], the same feature is exactly what the IMM has in common with the optimal estimator for dynamic MM systems. Besides its cost-efficiency, another advantage of the IMM estimator is that it does not require maneuver detection decision as in the case of Variable State Dimension (VSD) filter [10] algorithms and undergoes a soft switching between models based on the updated mode probabilities.

. Thus, the IMM estimator is very cost-efficient. The main contributor for the cost-efficiency of the IMM estimator is the “mixing/interaction” between its “mode-matched” base state filtering modules at the beginning of each cycle. It has been shown in [7], the same feature is exactly what the IMM has in common with the optimal estimator for dynamic MM systems. Besides its cost-efficiency, another advantage of the IMM estimator is that it does not require maneuver detection decision as in the case of Variable State Dimension (VSD) filter [10] algorithms and undergoes a soft switching between models based on the updated mode probabilities.

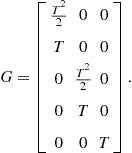

3.15.3.5.1 Modeling assumptions in dynamic multiple model approach

Base state model:

![]() (15.56)

(15.56)

![]() (15.57)

(15.57)

where ![]() denotes the mode in effect during the sampling period ending at

denotes the mode in effect during the sampling period ending at ![]() .

.

Mode (“model state”)—among the possible ![]() modes:

modes:

![]() (15.58)

(15.58)

The structure of the system and/or the statistics of the noises can differ from mode to mode:

![]() (15.59)

(15.59)

![]() (15.60)

(15.60)

Mode jump process: Markov chain with known transition probabilities

![]() (15.61)

(15.61)

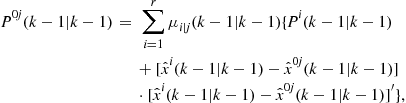

3.15.3.5.2 The IMM estimation algorithm

• Interaction: Mixing of the previous cycle mode-conditioned state estimates and covariance, using the mixing probabilities ![]() , to initialize the current cycle of each mode-conditioned filter. For filter

, to initialize the current cycle of each mode-conditioned filter. For filter ![]() , there is:

, there is:

![]() (15.62)

(15.62)

(15.63)

(15.63)

where

![]() (15.64)

(15.64)

![]() (15.65)

(15.65)

• Mode-conditioned filtering: Calculation of the state estimates and covariances conditioned on a mode being in effect. The KF matched to ![]() (filter

(filter ![]() ) uses

) uses ![]() to yield

to yield ![]() and

and ![]() . Likelihood function corresponding to filter

. Likelihood function corresponding to filter ![]() is

is ![]() .

.

• Probability evaluation: Computation of the mixing and the updated mode probabilities. For the mode probabilities of the jth mode (![]() )

)

![]() (15.66)

(15.66)

(15.67)

(15.67)

• Overall state estimate and covariance (for output only): Combination of the latest mode-conditioned state estimates and covariances

(15.68)

(15.68)

(15.69)

(15.69)

The IMM estimation algorithm has a modular structure.

For non-maneuvering targets, unnecessarily using multiple-model algorithms is not optimal because it might diminish the performance level of the tracker and increase the computational load. A multiple-model approach is only necessary for targets with high maneuverability. Typically, the decision to use a multiple-model estimator should be based on the maneuvering index [10], which quantifies the maneuverability of the target in terms of the process noise, sensor measurement noise and sensor revisit interval. In [56], a study was presented to compare the IMM estimator with the Kalman filter based on the maneuvering index.

3.15.4 Filter initialization

Track initiation is an essential component of all tracking algorithms. Two major types of initialization techniques, namely, the Single-Point (SP) method and the Two-Point-Difference (TPD) method, are commonly used in multisensor-multitarget tracking applications [9,10,84].

3.15.4.1 Single-point track initialization

In SP track initialization, every detection (measurement), which is unassociated to any track, is an “initiator.” The unassociated measurement is first converted into Cartesian space through the unbiased conversion method [10] and then a tentative track is declared. In SP algorithm, the position component of the tentative track is initialized using the position component of the converted measurement and the velocity components is set to zero. To compensate the zero velocity assumption made for the tentative track, when initializing the covariance matrix of the tentative track, the maximum possible speed of the target is often used as the standard deviation of the velocity component of the tentative track. For the position component, the variance of the converted measurement is used. Furthermore, for the newly declared tentative track, its velocity and position along different coordinates are usually assumed to be independent. A Kalman filter is then used for subsequent processing of the tentative track.

3.15.4.2 Two-point difference track initialization

In TPD track initialization, every unassociated detection (measurement) is also an “initiator,” but does not immediately yield a tentative track. At the sampling time (scan or frame) following the detection of an initiator, a gate is set up around the initiator based on the assumed maximum and minimum target speed as well as the measurement noise intensities. Thus, it is reasonable to assume that if the initiator is from a target, then the measurement from that target in the second scan (if detected) will fall inside the gate with nearly unity probability. A tentative track will be declared only if there is at least one detection falling in the gate. Since now each tentative track has two measurements, a straight line extrapolation is used to obtain its speed and the covariance matrix. A Kalman filter is then used for the subsequent processing.

3.15.4.3 Issues related to track initialization

It has been demonstrated numerically that the SP method has a smaller mean square error matrix than the TPD method for a 3D radar target tracking problem. Also, it has been analytically shown that, if the process noise approaches zero and the maximum speed of a target used to initialize the velocity variance approaches infinity, then the SP algorithm reduces to the TPD algorithm [84].

In many multi-sensor multitarget tracking algorithms, the track initialization and the track maintenance phases are treated as two independent (and consecutive) stages. However, in a real tracking problems, targets can enter and leave the surveillance region at any time. As a result, track initiation has to be considered at every sampling time. That is, track initialization occurs even after the first few scan. Similarly, the fact that track maintenance stage has been activated does not obviate the need for further track initiations. Both have to be carried out simultaneously throughout the entire tracking interval. Because of this, the track initialization module needs to take into account the number, states and qualities of the established tracks being retained by the track maintenance module. Otherwise, spurious tracks and track seduction will ensue, damaging the overall quality of the tracker [42].

3.15.5 Data association

In Section 3.15.3, it has been implicitly assumed that there is no measurement origin ambiguity. However, the crux of the multitarget problem is to carry out the association process for measurements whose origins are uncertain due to

• random false alarms in the detection process,

• clutter due to spurious reflectors or radiators near the target of interest,

Furthermore, the probability of obtaining a measurement from a target—the target detection probability—is usually less than unity.

Data association problems may be categorized according to the pairs of information that are associated together:

• measurement-to-track association—track maintenance or updating,

• measurement-to-measurement association—parallel updating for centralized tracking,

• track-to-track association—track fusion (for distributed or decentralized tracking).

In this subsection, measurement-to-track association and measurement-to-measurement association techniques are discussed. The track-to-track association is discussed in Section 3.15.9.

3.15.5.0.1 Measurement-to-track association

A multidimensional gate is set up in the measurement space around the predicted measurement in order to avoid searching for the measurement from the target of interest in the entire measurement space. A measurement in the gate, while not guaranteed to have originated from the target the gate pertains to, is a valid association candidate. Thus the name validation region or association region. If there is more than one measurement in the gate, this leads to an association uncertainty.

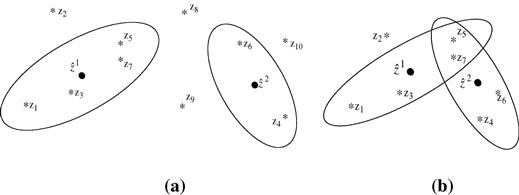

Figure 15.1a and b illustrates the gating for two well-separated and closely-spaced targets respectively. In the figures, “![]() ” indicates the expected measurement and the “

” indicates the expected measurement and the “![]() ” indicates the received measurement.

” indicates the received measurement.

If the true measurement conditioned on the past is normally (Gaussian) distributed with its probability density function given by

![]() (15.70)

(15.70)

then the true measurement will be in the following region:

![]() (15.71)

(15.71)

with probability determined by the gate threshold ![]() . The region defined by (15.71) is called gate or validation region.

. The region defined by (15.71) is called gate or validation region.

Some well-known approaches for data association in the presence of well-separated targets, where no measurement origin uncertainties exist are discussed below.

Nearest Neighbor (NN): This is the simplest possible approach and uses the measurement nearest to the predicted measurement, assuming that the nearest one is the correct one. The nearest measurement to the predicted measurement is determined according to the distance measure (norm of the innovation squared),

![]() (15.72)

(15.72)

Strongest Neighbor (SN): Select the strongest measurement (in terms of signal intensity) among the validated ones—this assumes that signal intensity information is available.

2-D Assignment: This technique is also known as the Global Nearest Neighbor (GNN) method. The fundamental idea behind 2-D assignment is that the measurements from the scan list ![]() at time

at time ![]() are deemed to have come from the tracks in list

are deemed to have come from the tracks in list ![]() at time

at time ![]() . To find the best match between

. To find the best match between ![]() and

and ![]() , a constrained global optimization problem has to be solved. The optimization is carried out to minimize the “cost” of associating the measurements in

, a constrained global optimization problem has to be solved. The optimization is carried out to minimize the “cost” of associating the measurements in ![]() to tracks predicted from

to tracks predicted from ![]() .

.

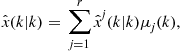

To present the 2-D assignment, define a binary assignment variable ![]() such that

such that

(15.73)

(15.73)

where ![]() is the time stamp of the mth measurement from scan or frame

is the time stamp of the mth measurement from scan or frame ![]() .

.

A set of complete assignments, which consists of the associations of all the measurements in ![]() and the tracks in

and the tracks in ![]() , is denoted by

, is denoted by ![]() , i.e.,

, i.e.,

![]() (15.74)

(15.74)

where ![]() and

and ![]() are the cardinalities of the measurement and track sets, respectively. The indices

are the cardinalities of the measurement and track sets, respectively. The indices ![]() and

and ![]() correspond to the non-existent (or “dummy”) measurement and track. The “dummy” notation is used to formulate the assignment problem in a uniform manner, where the non-association possibilities are also considered, making it computer-solvable.

correspond to the non-existent (or “dummy”) measurement and track. The “dummy” notation is used to formulate the assignment problem in a uniform manner, where the non-association possibilities are also considered, making it computer-solvable.

The objective of the assignment is to find the optimal assignment ![]() , which minimizes the global cost of association

, which minimizes the global cost of association

(15.75)

(15.75)

where ![]() is the cost of the assignment

is the cost of the assignment ![]() . That is,

. That is,

![]() (15.76)

(15.76)

The costs ![]() are the negative of the logarithm of the dimensionless likelihood ratio of the measurement-to-track associations, namely,

are the negative of the logarithm of the dimensionless likelihood ratio of the measurement-to-track associations, namely,

![]() (15.77)

(15.77)

where

(15.78)

(15.78)

are the following likelihood ratios:

1. that measurement ![]() came from track

came from track ![]() for

for ![]() with the association likelihood function being the probability density function of the corresponding innovation,

with the association likelihood function being the probability density function of the corresponding innovation, ![]() versus from an extraneous source whose spatial density is

versus from an extraneous source whose spatial density is ![]() ,

,

2. that measurement ![]() came from none of the tracks (i.e., from the dummy track

came from none of the tracks (i.e., from the dummy track ![]() ) versus from an extraneous source. Note that, if measurement

) versus from an extraneous source. Note that, if measurement ![]() came from none of the tracks, then that measurement must be generated by an extraneous source, Thus, the likelihood ratio must be unity in this case,

came from none of the tracks, then that measurement must be generated by an extraneous source, Thus, the likelihood ratio must be unity in this case,

3. that the measurement from track ![]() is not in

is not in ![]() , i.e., track

, i.e., track ![]() is associated with the dummy measurement—the cost of not associating any measurement to a track amounts to the miss probability

is associated with the dummy measurement—the cost of not associating any measurement to a track amounts to the miss probability ![]() , where the nominal target detection probability is denoted by

, where the nominal target detection probability is denoted by ![]() .

.

The 2-D assignment is subject to the following constraints.Validation: A measurement is assigned only to one of the tracks that validated it.One-to-one constraint: Each track is assigned at most one measurement. The only exception is the dummy track ![]() , which can be associated with any number of measurements. Similarly, a measurement is assigned to at most one track. The dummy measurement

, which can be associated with any number of measurements. Similarly, a measurement is assigned to at most one track. The dummy measurement ![]() can be assigned to multiple tracks.Non-empty association: The association cannot be empty, i.e., the dummy measurement cannot be assigned to the dummy track.

can be assigned to multiple tracks.Non-empty association: The association cannot be empty, i.e., the dummy measurement cannot be assigned to the dummy track.

The modified auction algorithm can solve the above constrained optimization problem and that algorithm runs in quasi-polynomial time [94,95].

Multidimensional (S-D) Assignments: In 2-D assignment only the latest scan is used and information about target evolution through multiple scans is lost. Also it is not possible to change an association later in light of subsequent measurements. A data-association algorithm may perform better when a number of past scans are utilized. This corresponds to multidimensional assignment for data association. In S-D assignment the latest ![]() scans of measurements are associated with the established track list (from time

scans of measurements are associated with the established track list (from time ![]() , where

, where ![]() is the current time, i.e., with a sliding window of time depth

is the current time, i.e., with a sliding window of time depth ![]() ) in order to update the tracks.

) in order to update the tracks.

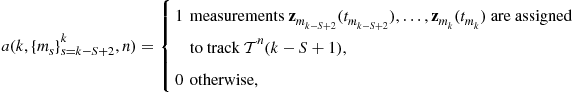

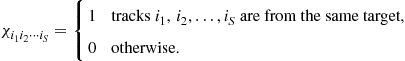

Similarly to the 2-D assignment, define a binary assignment variable ![]() such that

such that

(15.79)

(15.79)

which is the general version of (15.73). The cost associated with (15.79) is denoted as

![]() (15.80)

(15.80)

and ![]() is the likelihood ratio that the S-1-tuple of measurements, which is given by

is the likelihood ratio that the S-1-tuple of measurements, which is given by ![]() , originated from the target represented by track

, originated from the target represented by track ![]() versus some measurements

versus some measurements ![]() being extraneous.

being extraneous.

The objective of the S-D assignment is to find the S-tuples of measurement-to-track associations ![]() , which minimize the global cost of association given by

, which minimize the global cost of association given by

(15.81)

(15.81)

where ![]() is the number of measurements in scan

is the number of measurements in scan ![]() and

and ![]() is the complete set of associations analogous to that defined in (15.74) for the 2-D assignment. The association likelihoods are given by

is the complete set of associations analogous to that defined in (15.74) for the 2-D assignment. The association likelihoods are given by

(15.82)

(15.82)

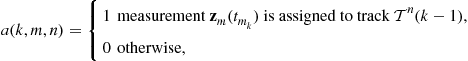

where ![]() is a binary function such that

is a binary function such that

(15.83)

(15.83)

and ![]() is the filter-calculated innovation pdf if the (kinematic) measurement

is the filter-calculated innovation pdf if the (kinematic) measurement ![]() is associated with track

is associated with track ![]() continued with the (kinematic) measurements

continued with the (kinematic) measurements ![]() .

.

The association costs are given to the generalized S-D assignment algorithm, which uses Lagrangian relaxation, as described in [32,95] to solve the assignment problem in quasi-polynomial time. The feasibility constraints are similar to those from the 2-D assignment.

3.15.5.0.2 Measurement-to-measurement association

Measurement-to-measurement association is the most important step in the parallel updating scheme for the centralized tracking. Without any major modification, multidimensional (S-D) Assignments and 2-D Assignment technique are commonly used for measurement-to-measurement association. A good example of using S-D assignment technique to solve the measurement-to-measurement association problem in a multisensor-multitarget tracking problem is given in [9].

3.15.6 Multitarget tracking algorithms

Three widely used multitarget tracking algorithms, namely, the Probabilistic Data Association (PDA) and Joint Probabilistic Data Association (JPDA) algorithm, the Multiple Hypothesis Tracker (MHT) algorithm, and the Probability Hypothesis Density (PHD) algorithm, are discussed in this section.

3.15.6.1 Probabilistic data association (PDA) and joint probabilistic data association (JPDA)

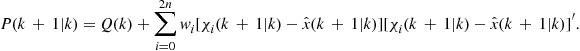

PDA algorithm is a Bayesian approach that probabilistically associates all the validated measurements to the target of interest [9]. The state update equation of the PDA filter is

![]() (15.84)

(15.84)

where

(15.85)

(15.85)

![]() (15.86)

(15.86)

![]() is the number of validated measurements and

is the number of validated measurements and

![]() (15.87)

(15.87)

is the conditional probability of the event that the ith validated measurement is correct.

The covariance associated with the updated state is

![]() (15.88)

(15.88)

where ![]() is the conditional probability of the event that none of the measurements is correct and the covariance of the state updated with the correct measurement is

is the conditional probability of the event that none of the measurements is correct and the covariance of the state updated with the correct measurement is

![]() (15.89)

(15.89)

In equation (15.89), the gain matrix ![]() and the innovation matrix

and the innovation matrix ![]() are calculated using the standard Kalman filter equation (15.36), (15.39). The spread of the innovations term in (15.88) is

are calculated using the standard Kalman filter equation (15.36), (15.39). The spread of the innovations term in (15.88) is

(15.90)

(15.90)

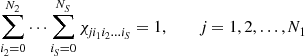

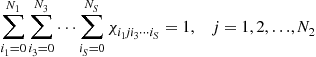

The association of measurements in a multitarget environment with closely-spaced targets must be done while simultaneously considering all the targets. Thus, the Joint Probabilistic Data Association (JPDA) is proposed as an extension of the PDA method to handle the scenario with closely-spaced targets [9]. For a known number of targets, JPDA evaluates the measurement-to-target association events probabilities (for the latest set of measurements) and combines them into the corresponding state estimates.

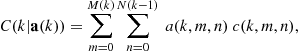

The JPDA algorithm includes the following steps:

• A validation matrix that indicates all the possible sources of each measurement is set up.

• From the validation matrix, all the feasible joint association events are constructed by the JPDA tracker according to the following two rules:

• One measurement must be originated from one target or a false alarm.

• One target can only generate one measurement at most.

• The probabilities of these joint events are evaluated according to the following assumptions:

• Target-originated measurements are Gaussian distributed around the predicted location of the corresponding target’s measurement.

• False alarms are distributed in the surveillance region according to a Poisson point process model.

• Marginal (individual measurement-to-target) association probabilities are obtained from the joint association probabilities.

• The target states are estimated by separate (uncoupled) PDA filters using these marginal probabilities.

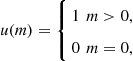

An extension of the PDA algorithm, the Integrated Probabilistic Data Association (IPDA) algorithm is proposed in [85]. The main idea behind IPDA tracker is to introduce the track existence probability, a measure of the quality of the track, to the PDA algorithm. Like PDA tracker, IPDA track can only be used to track well-separated targets in clutter. To handle the multitarget environment with closely-spaced targets, the Joint Integrated Probabilistic Data Association (JIPDA) tracker is proposed in [86], which is actually a JPDA with the track existence probability.

3.15.6.2 Multiple Hypothesis Tracker (MHT)

Multiple Hypothesis Tracking (MHT) is an efficient algorithm for tracking multitargets in a cluttered environment. The algorithm is capable of initiating tracks, accounting false or missing reports, and processing sets of dependent reports. As each measurement is received, probabilities are calculated for the hypotheses that the measurement came from previously existing target, or from a new target, or that the measurement is a false alarm. Target states are estimated from each such data-association hypothesis with a certain filter (e.g., Kalman Filter). As more measurements are received, the probabilities of joint hypotheses are calculated recursively using all available information such as the density of unknown target ![]() , the density of false targets

, the density of false targets ![]() and the probability of detection

and the probability of detection ![]() . This branching technique allows the correlation of a measurement with its source based on subsequent, as well as previous, data. To keep the number of hypotheses reasonable, unlikely hypotheses are eliminated and hypotheses with similar target estimates are combined. To minimize computational requirements, clustering and pruning techniques are embedded in the MHT tracker. Note that MHT strictly follows the one-to-one assumption between measurements and targets. There are two types of implementation for MHT, the hypothesis-oriented MHT (HOMHT), which is also known as the measurement-oriented MHT (MOMHT), and the track-oriented MHT (TOMHT).

. This branching technique allows the correlation of a measurement with its source based on subsequent, as well as previous, data. To keep the number of hypotheses reasonable, unlikely hypotheses are eliminated and hypotheses with similar target estimates are combined. To minimize computational requirements, clustering and pruning techniques are embedded in the MHT tracker. Note that MHT strictly follows the one-to-one assumption between measurements and targets. There are two types of implementation for MHT, the hypothesis-oriented MHT (HOMHT), which is also known as the measurement-oriented MHT (MOMHT), and the track-oriented MHT (TOMHT).

3.15.6.2.1 Hypothesis-oriented MHT (HOMHT)

In HOMHT, hypotheses are composed of sets of compatible tracks. Multiple tracks are compatible if they have no measurement in common. At every scan, each hypothesis carried over from the previous scan (i.e., parent hypothesis) is expanded into a set of new hypotheses (i.e., offspring hypothesis) by considering all possible track-to-measurement associations for the tracks within the parent hypothesis [9]. The HOMHT includes the following steps:

• Initialization: The measurements received at the first scan ![]() have two possible origination: (1) new target with probability

have two possible origination: (1) new target with probability ![]() ; (2) false alarm with probability

; (2) false alarm with probability ![]() . Therefore, the total number of possible hypotheses is

. Therefore, the total number of possible hypotheses is ![]() where

where ![]() is the number of measurements received at scan

is the number of measurements received at scan ![]() . The probability of each hypothesis is proportional to

. The probability of each hypothesis is proportional to

![]() (15.91)

(15.91)

where ![]() and

and ![]() denote the number of measurements that are assigned as new targets and the number of measurements that are assigned as false alarms give the jth valid hypothesis

denote the number of measurements that are assigned as new targets and the number of measurements that are assigned as false alarms give the jth valid hypothesis ![]() . Then, select the top

. Then, select the top ![]() hypotheses based on their probabilities

hypotheses based on their probabilities ![]() . These

. These ![]() hypotheses

hypotheses ![]() are parent hypotheses for the next scan, and their probabilities are normalized at the end of this scan.

are parent hypotheses for the next scan, and their probabilities are normalized at the end of this scan.

• Update of hypotheses: In this step, every parent hypothesis will be used to generate a set of offspring hypotheses. The collection of all the offspring hypotheses forms the current set of hypotheses. Then, top ![]() hypotheses will be selected from the current set of hypotheses, and their probabilities will be normalized.

hypotheses will be selected from the current set of hypotheses, and their probabilities will be normalized.

• Prune hypotheses: It is infeasible to keep all the hypotheses because the number of hypotheses grows exponentially. Several pruning techniques are embedded to the HOMHT tracker to maintain the number of hypotheses in a suitable level. The first pruning method removes hypothesis whose probability is less than a predefined threshold ![]() . The second pruning method keeps only the top

. The second pruning method keeps only the top ![]() hypotheses with the greatest probabilities. The third method discards the set of hypotheses with the smallest probabilities such that the total probability of discarded hypotheses does not exceed a user defined threshold

hypotheses with the greatest probabilities. The third method discards the set of hypotheses with the smallest probabilities such that the total probability of discarded hypotheses does not exceed a user defined threshold ![]() . For example, the probabilities of all the current hypotheses are

. For example, the probabilities of all the current hypotheses are ![]() . Assume that

. Assume that ![]() and

and ![]() . Then, the third method removes the

. Then, the third method removes the ![]() th to nth hypotheses where

th to nth hypotheses where ![]() and

and ![]() .

.

• Track management: In HOMHT tracker, two kinds of track management systems are available. The first is based on ![]() logic and the second relies on track qualities. Note that, the HOMHT tracker does not probabilistically combine the update states, which are calculated conditioning on the measurement-to-track association events. Thus the equations that used in JIPDA tracker can not be applied here. One method to compute the track quality in the MHT framework is proposed in [88].

logic and the second relies on track qualities. Note that, the HOMHT tracker does not probabilistically combine the update states, which are calculated conditioning on the measurement-to-track association events. Thus the equations that used in JIPDA tracker can not be applied here. One method to compute the track quality in the MHT framework is proposed in [88].

• Find the best hypothesis and output: The last step of a HOMHT loop for a scan is to find the best current hypothesis and then output it to the user.

3.15.6.2.2 Track oriented MHT (TOMHT)

The track-oriented MHT constructs a target tree for each potential (or postulated) target according to the measurements, and the branches represent the different measurements with which the target may be associated. A trace of successive branches from the root to a leaf of the tree represents a potential measurement history generated by the target. Conventionally, the target trees are referred to as track hypotheses, and a collection of compatible tracks is referred to as global hypothesis. Unlike the HOMHT in which three possible originalities of measurement are considered, usually the TOMHT treats a measurement as either originated from an existing target or a new one [13]. The TOMHT includes the following steps:

• Initialize tree: The target trees are initialized on the receipt of the first set of measurements. The root of each tree is a measurement.

• Build tree: In this step, the set of trees of previous scan are updated. More specifically, (1) the depth of each previous tree is increased by one and each branch grows to several new branches to account for all possible target-to-measurement associations; (2) each measurement also is used to initiate a new target tree where the measurement is the root of the tree. In addition, the compatibility relation has to be updated in order to find the global hypothesis.

• Track management: The track management techniques used for TOMHT are the same as that used for HOMHT.

• Track level pruning: The purpose of this step is to remove the branches of low probabilities so that the computation load for finding the global hypothesis is remained in a reasonable level. Two pruning methods can be embedded into the HOMHT: (1) limit the number of branches per tree below ![]() , which is defined by user as the maximum number of branches per tree; (2) discard the branches whose probability is lower than a predefined threshold

, which is defined by user as the maximum number of branches per tree; (2) discard the branches whose probability is lower than a predefined threshold ![]() .

.

• Find clusters: All the branches, i.e., the node or potential track, belong to the same target tree are within the same cluster because they share the same root. Therefore, the clustering procedure for TOMHT is done in the tree-to-measurement level. The algorithm and code for HOMHT clustering can be reused for TOMHT by just replacing the track by tree and measurement associated with track by measurements associated with target tree.

• Find global hypothesis: This step is used to find the best global hypothesis, which is a collection of compatible trees from all the target trees. Enumeration is the basic method for finding the best global hypothesis. In enumeration method, all valid global hypotheses are constructed and then the best one is chosen according to the costs of hypotheses. However, the total number of valid global hypotheses grows exponentially with respect to the number of trees. For example, assume that ![]() trees exist and there are

trees exist and there are ![]() branches for each tree. Then, the total number of global hypotheses is

branches for each tree. Then, the total number of global hypotheses is ![]() . Although some of the invalid hypotheses will be removed from this

. Although some of the invalid hypotheses will be removed from this ![]() hypotheses, the remaining part is still a large amount. Therefore, the pruning method must be used to limit the number of valid hypotheses and then an approximate best global hypothesis is found.

hypotheses, the remaining part is still a large amount. Therefore, the pruning method must be used to limit the number of valid hypotheses and then an approximate best global hypothesis is found.

Due to the requirement of node compatibility (or track compatibility), one and only one branch must be selected per tree to form a possible valid global hypothesis. Note that the selection of dummy node means no track is selected from this tree in the global hypothesis.

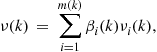

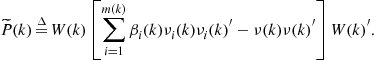

3.15.6.3 Probability hypothesis density (PHD) method

In tracking multiple targets, if the number of targets is unknown and varying with time, it is not possible to compare states with different dimensions using ordinary Bayesian statistics. However, the problem can be addressed using Finite Set Statistics (FISST) [75] to incorporate comparisons of states of different dimensions. FISST facilitates the construction of “multitarget densities” from multiple target transition functions into computing set derivatives of belief-mass functions [75], which makes it possible to combine states of different dimensions. The main practical difficulty with this approach is that the dimension of state space becomes large when many targets are present, which increases the computational load exponentially with the number of targets. Since the PHD is defined over the state space of one target in contrast to the full posterior distribution, which is defined over the state space of all the targets, the computation cost of propagating the PHD over time is much lower than propagating the full posterior density. A comparison in terms of computation and estimation accuracy of multitarget filtering using FISST particle filter and PHD particle filter is given in [112].

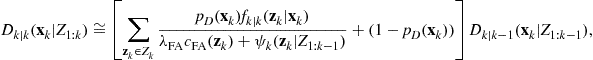

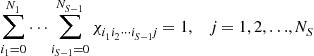

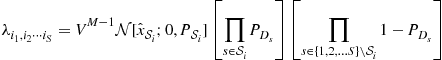

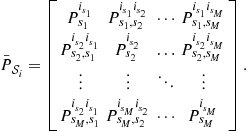

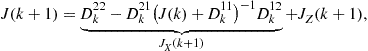

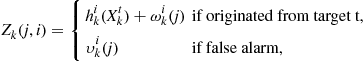

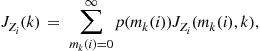

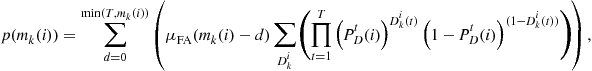

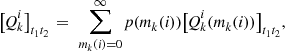

Assume a sensor has monitored an area since time ![]() and at time

and at time ![]() , the measurement set

, the measurement set ![]() is provided by that sensor. From time

is provided by that sensor. From time ![]() to time

to time ![]() , the union of all available measurement sets from that sensor are

, the union of all available measurement sets from that sensor are ![]() . By definition, the PHD

. By definition, the PHD ![]() , with argument single-target state vector

, with argument single-target state vector ![]() and all available measurement sets up to time step

and all available measurement sets up to time step ![]() , is the density whose integral on any region

, is the density whose integral on any region ![]() of the state space is the expected number of targets

of the state space is the expected number of targets ![]() contained in

contained in ![]() . That is,

. That is,

![]() (15.92)

(15.92)

Since this property uniquely characterizes the PHD and since the first order statistical moment of the full target posterior distribution possesses this property, the first order statistical moment of the full target posterior is indeed the PHD. The first moment of the full target posterior or the PHD, given all the measurement sets ![]() up to time step

up to time step ![]() , is given by [76]

, is given by [76]

![]() (15.93)

(15.93)

where ![]() is the multitarget state. The approximate expected target states are given by the local maxima of the PHD. The following section demonstrates the prediction and update steps of one cycle of the PHD filter.

is the multitarget state. The approximate expected target states are given by the local maxima of the PHD. The following section demonstrates the prediction and update steps of one cycle of the PHD filter.

Prediction: In a general scenario of interest, there are target disappearances, target spawning and entry of new targets. We denote the probability that a target with state ![]() at time step

at time step ![]() will survive at time step

will survive at time step ![]() by

by ![]() , the PHD of spawned targets at time step

, the PHD of spawned targets at time step ![]() from a target with state

from a target with state ![]() by

by ![]() and the PHD of newborn spontaneous targets at time step

and the PHD of newborn spontaneous targets at time step ![]() by

by ![]() . Then, the predicted PHD is given by

. Then, the predicted PHD is given by

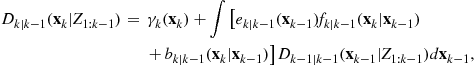

(15.94)

(15.94)

where ![]() denotes the single-target Markov transition density. The prediction Eq. (15.94) is lossless since there are no approximations.

denotes the single-target Markov transition density. The prediction Eq. (15.94) is lossless since there are no approximations.

Update: The predicted PHD can be updated with measurement set ![]() at time step

at time step ![]() to get the posterior PHD. Assume that the number of false alarms is Poisson-distributed with the average number

to get the posterior PHD. Assume that the number of false alarms is Poisson-distributed with the average number ![]() and that the probability density of the spatial distribution of false alarms is

and that the probability density of the spatial distribution of false alarms is ![]() . Let the detection probability of a target with state

. Let the detection probability of a target with state ![]() at time step

at time step ![]() be

be ![]() . Then, the updated PHD at time step

. Then, the updated PHD at time step ![]() is given by

is given by

(15.95)

(15.95)

where the likelihood function ![]() is given by

is given by

![]() (15.96)

(15.96)

and ![]() denotes the single-sensor/single-target likelihood. The update Eq. (15.95) is not lossless since to derive a closed-formula for the update step, it is necessary to assume that the predicted multitarget target state

denotes the single-sensor/single-target likelihood. The update Eq. (15.95) is not lossless since to derive a closed-formula for the update step, it is necessary to assume that the predicted multitarget target state ![]() is approximately a Poisson point process, where the physical distribution of targets is independent and identically distributed (I.I.D.) with a single probability density

is approximately a Poisson point process, where the physical distribution of targets is independent and identically distributed (I.I.D.) with a single probability density ![]() and the target number follows a Poisson distribution. The PHD filter can be implemented thorough the sequential Monte Carlo method [120] or the Gaussian mixture method [121].

and the target number follows a Poisson distribution. The PHD filter can be implemented thorough the sequential Monte Carlo method [120] or the Gaussian mixture method [121].

A generalization of PHD filter, so called Cardinalized PHD (CPHD) filter is proposed in [79]. Besides the target PHD function, the CPHD filter also propagates the entirely probability distribution on target number. The core difference between the CPHD filter and the PHD filter is that in the CPHD filter, it is assumed that the predicted multitarget target state ![]() is approximately an I.I.D. cluster point process, where the physical distribution of targets is still I.I.D. with a single probability density

is approximately an I.I.D. cluster point process, where the physical distribution of targets is still I.I.D. with a single probability density ![]() but the target number now can follow any arbitrary distribution

but the target number now can follow any arbitrary distribution ![]() . A polynomial running time implementation of the CPHD filer using the Gaussian mixture technique has been proposed in [122].

. A polynomial running time implementation of the CPHD filer using the Gaussian mixture technique has been proposed in [122].