Space-Time Adaptive Processing for Radar

William L. Melvin, Georgia Institute of Technology, Atlanta, GA, USA

Abstract

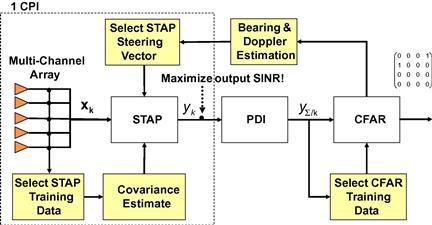

Space-time adaptive processing (STAP) is an important radar technology. It is a cornerstone in the design of modern moving target indication and imaging radar systems. Specifically, STAP is a multidimensional filtering technique that mitigates the influence of clutter or radio frequency interference on principal radar products, viz. radar detections or images. This work serves as a tutorial reference of fundamental STAP concepts and techniques. The interested reader will encounter the basic STAP formulation, common STAP performance metrics, signal models, interference mitigation approaches, an application example corresponding to Doppler spread clutter mitigation in airborne radar systems, current challenges, and some implementation issues. The fundamentals presented herein are extensible to a range of radar signal processing problems.

Keywords

Space-time adaptive processing (STAP); Radar signal processing; Array processing; Radar detection; Radar clutter

2.12.1 Introduction

Space-time adaptive processing (STAP) is a two-dimensional, adaptive filtering technique foundational to modern radar system design and implementation. Generally, spatial sampling is given by the outputs of an array of antenna elements (sometimes called “channels”); the Fourier transform of space yields angle. There are essentially two time measurements in radar: fast-time and slow-time. Fast-time samples correspond to outputs of the analog-to-digital converter, sampled at or above the Nyquist rate of the reflected radar waveform, and map to radar range; the Fourier transform of fast-time yields radio frequency (RF). The processor collects slow-time voltage samples at a given range realization from distinct, reflected, pulsed transmissions; the Fourier transform of slow-time yields Doppler frequency. Depending on the statistical properties of the interference source, either slow-time or fast-time adaptivity, combined with spatial adaptivity, is applicable. For example, the adaptive suppression of ground clutter received by airborne or spaceborne radar requires joint spatial and slow-time adaptivity, since clutter returns are correlated in the angle-Doppler domain but uncorrelated in fast-time. Fast-time adaptive degrees of freedom play a role in wideband and terrain-bounce interference mitigation in both aerospace and surface-based radar systems.

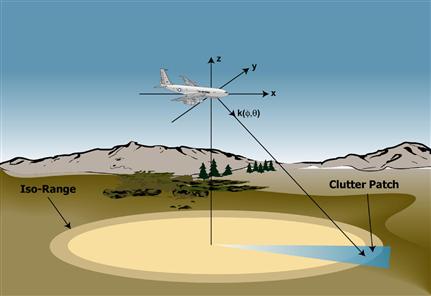

Consider the adaptive mitigation of Doppler-spread ground clutter returns in further detail. This problem arises in airborne and spaceborne radar systems searching for moving targets. The clutter returns are generally much stronger than the target signal, in many cases by orders of magnitude, thereby rendering detection in certain regions of the range-Doppler map virtually impossible using conventional antenna beamforming and Doppler processing techniques. Spatial and Doppler beamforming represent one-dimensional matched filtering operations. The matched filter maximizes signal-to-noise ratio (SNR), thereby maximizing probability of detection for a fixed false alarm rate for uncorrelated, circular Gaussian disturbance [1,2]. STAP is needed when clutter and radio frequency interference (RFI), both forms of colored noise, are present; STAP applies a space-time weighting incorporating estimated characteristics of the interference environment to asymptotically maximize signal-to-interference-plus-noise ratio (SINR), a sufficient condition to maximize the probability of detection for a fixed false alarm rate under the assumption the disturbance is circular Gaussian [3].

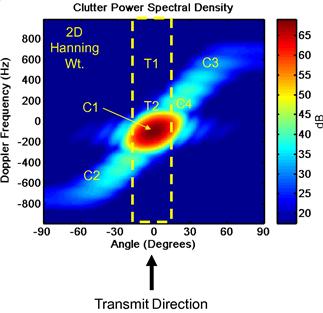

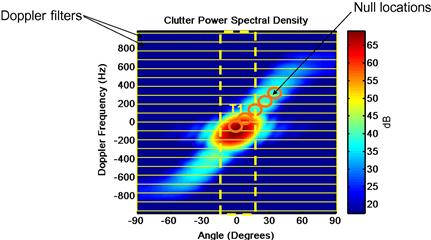

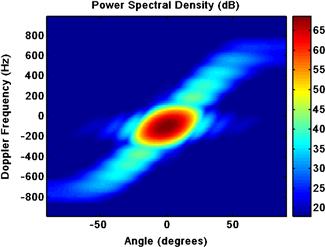

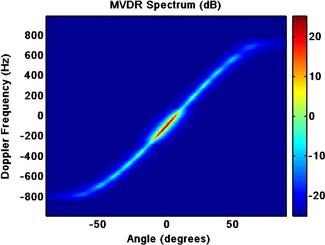

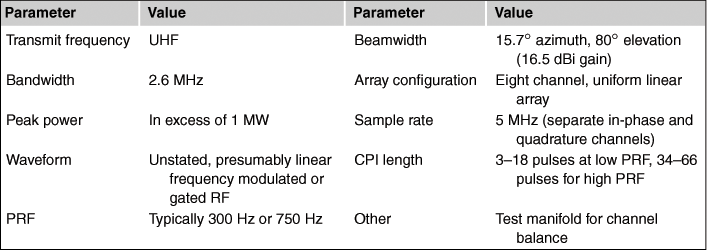

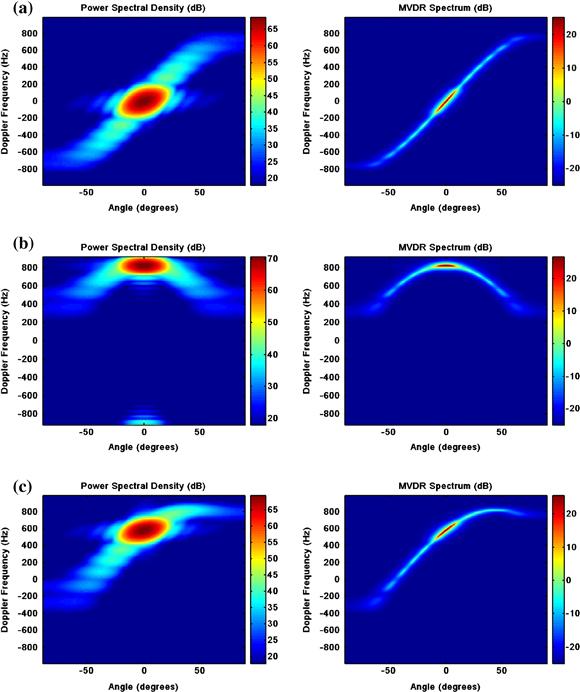

Fixed points on the Earth’s surface exhibit a Doppler frequency shift consistent with their angular displacement from the platform velocity vector, $\mathbf{v}_p$. In turn, this angular displacement corresponds to a specific angle of arrival at the antenna array. Thus, given a particular azimuth and elevation angle in the antenna coordinate system, the clutter Doppler frequency is precisely specified, and vice versa. The clutter angle-Doppler region of support is described as a ridge in airborne and spaceborne radar. This ridge is a line in side-looking radar, opens up into an ellipse for varying degrees of platform yaw, and becomes a circle for the forward-looking array. Figure 12.1 provides example power spectral density (PSD) and minimum variance distortionless response (MVDR) super-resolution plots of the clutter angle-Doppler region of support for the side-looking array radar (SLAR), a forward-looking array radar (FLAR), and an array with 45° of yaw. Typical radar parameters are used in this example and will be described in further detail in subsequent sections.

Figure 12.1 Clutter angle-Doppler region of support for three array configurations. (a) PSD (left) and super-resolution clutter image (right) for side-looking array, (b) PSD (left) and super-resolution clutter image (right) for forward-looking array, and (c) PSD (left) and super-resolution clutter image (right) for array with 45° of yaw.
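The line, ellipse, and circle shapes of the clutter ridge follow directly from projecting the look direction onto the array axis (spatial frequency) and onto the velocity vector (Doppler). The sketch below assumes a simplified geometry that the text does not specify: a linear array along the x-axis, a single clutter range ring at a fixed depression angle, and a horizontal platform velocity rotated from the array axis by a yaw angle (0° for side-looking, 90° for forward-looking). The function `clutter_ridge` and its parameters (`d_over_lambda` for element spacing in wavelengths, `vp_tr_over_lambda` for distance traveled per PRI in wavelengths) are hypothetical illustrations.

```python
import numpy as np

def clutter_ridge(yaw_deg, depression_deg=10.0, d_over_lambda=0.5,
                  vp_tr_over_lambda=0.25, n=360):
    # Hypothetical geometry: array axis along x, horizontal platform velocity rotated by
    # yaw_deg from the array axis, one clutter ring at a fixed depression angle.
    phi = np.linspace(0.0, 2.0 * np.pi, n, endpoint=False)   # azimuth from the array axis
    el = np.deg2rad(depression_deg)
    yaw = np.deg2rad(yaw_deg)
    # Normalized spatial frequency: look direction projected onto the array axis
    theta_s = d_over_lambda * np.cos(el) * np.cos(phi)
    # Normalized Doppler f_d * T_r: look direction projected onto the velocity vector
    omega = 2.0 * vp_tr_over_lambda * np.cos(el) * np.cos(phi - yaw)
    return theta_s, omega

r = 0.5 * np.cos(np.deg2rad(10.0))          # ridge "radius" for the default parameters
th_side, om_side = clutter_ridge(0.0)       # side-looking array
th_fwd, om_fwd = clutter_ridge(90.0)        # forward-looking array
th_yaw, om_yaw = clutter_ridge(45.0)        # 45 degrees of yaw
c = np.cos(np.deg2rad(45.0))

print(np.allclose(om_side, th_side))                                   # True: a line
print(np.allclose(th_fwd**2 + om_fwd**2, r**2))                        # True: a circle
print(np.allclose(th_yaw**2 + om_yaw**2 - 2 * c * th_yaw * om_yaw,
                  (r * np.sin(np.deg2rad(45.0)))**2))                  # True: an ellipse
```

With these defaults the side-looking ridge is the diagonal `omega = theta_s`; in general its slope is $2v_pT_r/d$, and when the two normalizations differ the forward-looking ridge becomes an ellipse in these coordinates rather than a circle.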

The PSD plots are a result of classical, two-dimensional, Fourier analysis. Resolution is limited in Fourier analysis by roughly the wavelength of the signal divided by the aperture length; to resolve more closely spaced signals, either the wavelength must be decreased for a fixed aperture size, or the physical aperture must be increased for a fixed wavelength. Super-resolution spectral estimators overcome the diffraction limitations of Fourier-based methods, oftentimes providing resolution enhancement by a factor of two to five, or even more. It turns out that STAP is intimately related to the MVDR spectrum, as discussed in Section 2.12.2.2.2. Hence, STAP likewise exhibits super-resolution properties, enabling it to detect slow moving targets buried in mainbeam clutter or targets closely spaced to other interference sources.

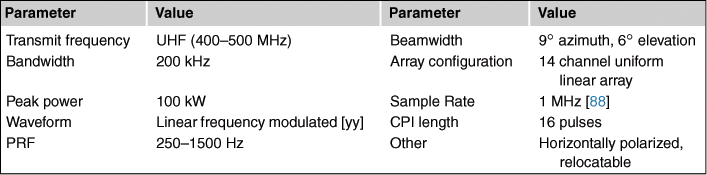

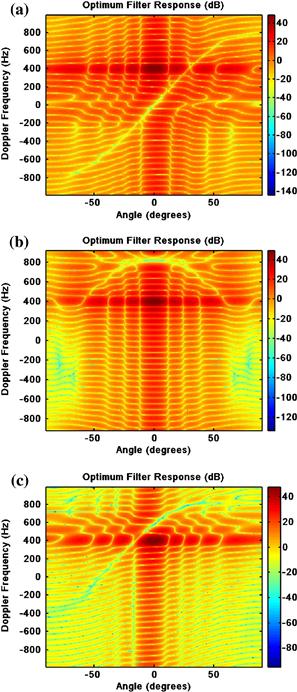

As mentioned, STAP coherently combines spatial and temporal voltage samples from a particular range realization using a data-dependent weighting to maximize SINR. The corresponding adaptive filter frequency response exhibits sharp nulls along the clutter region of support while maximizing the gain at another, specified angle-Doppler location where a potential target might exist. A target with a Doppler frequency outside of the mainlobe clutter Doppler spread (given as the highest power, circular region in the PSD) is called exoclutter, whilst targets within the mainlobe clutter spread are endoclutter. The STAP frequency response null is the inverse of the super-resolution contour shown in Figure 12.1. Hence, STAP itself exhibits super-resolution properties, enabling detection of targets separated from clutter by less than the diffraction-limited resolution of the traditional space-time aperture. This important characteristic makes STAP invaluable on airborne and spaceborne platforms, enabling drastic reduction in antenna aperture length for a desired minimum detectable velocity (MDV). The asymptotic STAP frequency responses for each of the PSDs in Figure 12.1 are given in Figure 12.2. (The asymptotic STAP response is optimal, since this is the weighting that precisely maximizes SINR.) The filter is tuned to broadside and 400 Hz Doppler.

Figure 12.2 Comparison of STAP frequency response for the three scenarios in Figure 12.1. (a) Side-looking array, (b) forward-looking array, and (c) array with 45° of yaw.

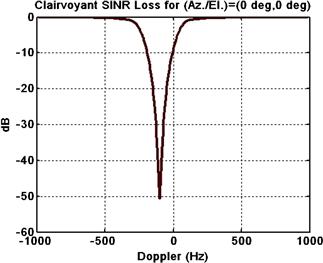

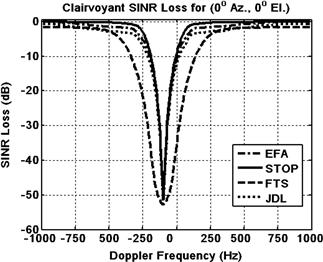

The MDV is the lowest target range-rate at which sufficient SINR exists to permit a specified probability of detection for an acceptable probability of false alarm. Equivalently, the MDV occurs at those points where the loss in SINR due to clutter is maximally acceptable. Figure 12.3 provides an example of the loss in SINR due to clutter for the radar array configuration of Figures 12.1 and 12.2. SINR loss will be described further in Section 2.12.2. Noise-limited performance is at 0 dB. The depth of the loss null is related to the clutter-to-noise ratio (CNR).
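The qualitative shape of an SINR loss curve can be reproduced with a simple model. The sketch below is an illustration under stated assumptions, not the model behind Figure 12.3: a side-looking uniform linear array with half-wavelength spacing, an isotropic element pattern, clutter patches spread evenly in azimuth across the forward hemisphere with normalized Doppler locked to normalized spatial frequency, unit-power thermal noise, and clairvoyant SINR loss taken as $\mathbf{s}^H\mathbf{R}^{-1}\mathbf{s}/\mathbf{s}^H\mathbf{s}$ for unit noise power (SINR loss is treated more fully in Section 2.12.2). The helpers `st_steer`, `sidelooking_clutter_cov`, and `sinr_loss_db` are hypothetical.

```python
import numpy as np

def st_steer(M, N, theta_s, omega):
    # Space-time steering vector, pulse-major (temporal kron spatial)
    a = np.exp(2j * np.pi * theta_s * np.arange(M))   # spatial, uniform linear array
    b = np.exp(2j * np.pi * omega * np.arange(N))     # temporal, constant PRI
    return np.kron(b, a)

def sidelooking_clutter_cov(M, N, cnr_db, n_patches=181):
    # Hypothetical model: patches uniform in azimuth over the forward hemisphere,
    # half-wavelength spacing, Doppler locked to angle (omega = theta_s), unit noise
    R = np.eye(M * N, dtype=complex)
    per_patch = 10 ** (cnr_db / 10) / n_patches
    for az in np.linspace(-np.pi / 2, np.pi / 2, n_patches):
        th = 0.5 * np.sin(az)
        v = st_steer(M, N, th, th)
        R += per_patch * np.outer(v, v.conj())
    return R

def sinr_loss_db(R, s):
    # Clairvoyant SINR loss for unit noise power, s^H R^{-1} s / s^H s, in dB
    return 10 * np.log10(np.vdot(s, np.linalg.solve(R, s)).real / np.vdot(s, s).real)

M, N = 8, 16
R40 = sidelooking_clutter_cov(M, N, cnr_db=40)
dopplers = np.linspace(-0.5, 0.5, 101)            # normalized Doppler f_d * T_r
loss = np.array([sinr_loss_db(R40, st_steer(M, N, 0.0, f)) for f in dopplers])

print(loss.max() <= 1e-9)                         # True: never better than noise-limited
print(dopplers[np.argmin(loss)])                  # notch at the broadside clutter Doppler
```

Adding clutter can only increase the covariance in the positive semidefinite sense, so the loss never exceeds 0 dB; raising `cnr_db` deepens the notch, in line with the dependence on CNR noted above.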

2.12.1.1 Historical overview

Historical overviews of STAP are given in [4,5]. Reed [4] describes the earlier history of adaptive arrays, whilst Klemm [5] covers more contemporary developments in STAP. Additionally, Entzminger et al. [6] discuss the history and future of Joint STARS and ground moving target indication (GMTI), identifying the central role of STAP in the success of both.

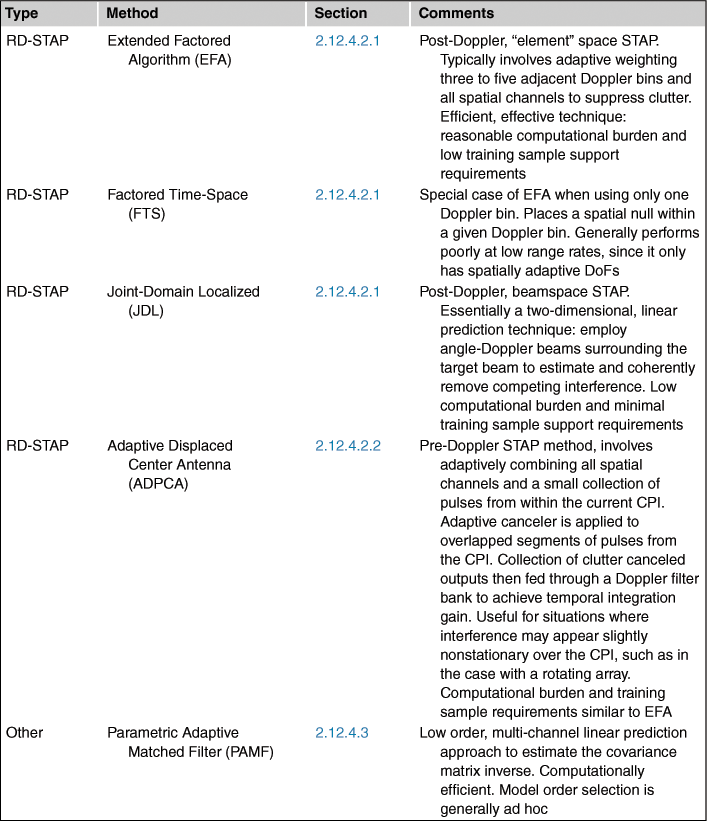

Table 12.1 is a historical accounting of STAP from the author’s perspective, supplemented by information derived from [4–6]. As such, it attempts to convey some key developments and general trends, but is necessarily incomplete. (Note: references to specific works are provided throughout the remainder of this text. Additionally, Klemm [5] provides an excellent historical accounting and perspective, and is highly recommended to the interested reader.)

Table 12.1

Some Significant Events in the History of STAP

| Year | Topic |

| 1957 | Paul Howells of General Electric, Syracuse (now Lockheed-Martin), develops technique to electronically scan antenna null in direction of a jammer |

| 1959 | Howells receives US Patent, “Intermediate frequency side-lobe canceller” |

| 1962 | Howells and Sidney Applebaum successfully test five-loop side-lobe canceller |

| 1963 | Howells and Applebaum, at Syracuse University Research Corp. (then Syracuse Research Corp., now SRC) investigate application of adaptive techniques to radar, including OTHR and BMD radar |

| 1965 (1976) | Applebaum publishes “Adaptive Arrays,” SURC TR-66–001, later available in IEEE Transactions on Antennas and Propagation in 1976 |

| 1969 | Lloyd Griffiths publishes an adaptive algorithm for wideband antennas in Proceedings of the IEEE |

| 1969 | V. Anderson, H. Cox and N. Owsley separately publish works on adaptive arrays for sonar |

| 1971 | R.T. Compton describes the application of adaptive arrays for communications systems in Ohio State University Quarterly Rept. 3234–1, December 1971 |

| 1972 | O.L. Frost publishes an adaptive algorithm for antenna arrays incorporating constraints |

| 1972 | Howells and Applebaum investigate adaptive radar for Airborne Early Warning (AEW) radar |

| 1973 | Larry Brennan and Irving Reed publish the seminal paper, “Theory of adaptive radar,” in IEEE Trans. AES |

| 1974 | Reed, John Mallett and Brennan publish a paper describing the Sample Matrix Inverse (SMI) method for adaptive arrays in IEEE Transactions on Aerospace and Electronic Systems |

| 1976 | “Adaptive antenna systems,” published by Bernard Widrow et al. in IEEE Proceedings |

| 1980 | R.A. Monzingo and T.W. Miller publish the book Adaptive Arrays |

| 1983 | Rule for calculating clutter subspace dimension proposed by Richard Klemm in IEEE Proceedings Part F, Vol 130, February 1983 |

| 1991 | Joint STARS prototypes deploy to Gulf War using adaptive clutter suppression methods (1978, Pave Mover was pre-cursor) |

| 1992 | Klemm proposes spatial transform techniques for dimensionality reduction in paper on antenna design for STAP |

| 1992 | Real-time STAP implementation by Alfonso Farina et al. |

| 1992 | Bob DiPietro presents post-Doppler STAP algorithm at Asilomar Conference on Signals, Systems and Computers |

| 1994 | Post-Doppler, beamspace method proposed by Wang and Cai in IEEE Transactions on Aerospace and Electronic Systems |

| 1994 | Jim Ward of MIT Lincoln Lab describes STAP techniques in ESC-TR-94–109, Space–Time Adaptive Processing for Airborne Radar |

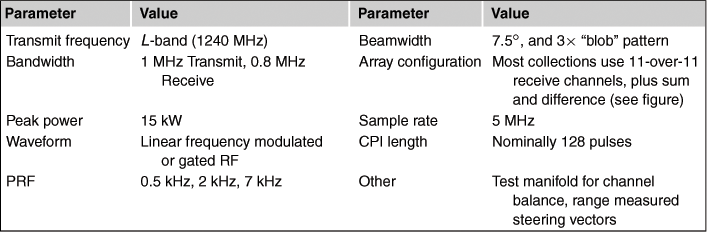

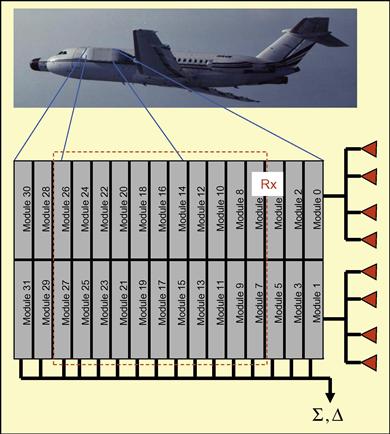

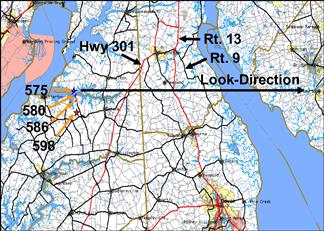

| 1995 | Air Force Rome Laboratory (now Air Force Research Laboratory) and Westinghouse (now Northrop Grumman) collect twenty-four channel airborne radar data for STAP research and development under the Multichannel Airborne Radar Measurements (MCARM) program |

| 1998 | Richard Klemm publishes first STAP text book, Space-Time Adaptive Processing: Principles and Applications |

| 1999 | IEE Electronics and Communications Engineering Journal (ECEJ) Special Issue on STAP (Klemm, Ed.) |

| 1999 | STAP techniques for space-based radar, by Rabideau and Kogon, presented at IEEE Radar Conference |

| 1999 | 3-D STAP for hot and cold clutter mitigation appears in IEEE Trans. AES by Techau, Guerci, Slocumb and Griffiths |

| 2000 | Bistatic STAP techniques appear in literature (numerous authors) |

| 2000 | IEEE Transactions on Aerospace and Electronic Systems Special Section on STAP (William Melvin, Ed.) |

| 2002 | DARPA’s Knowledge-Aided Sensor Signal Processing & Expert Reasoning (KASSPER) commences under leadership of Joseph Guerci |

| 2003 | Guerci publishes the book Space-Time Adaptive Processing for Radar |

| 2004 | IEE publishers release the book The Applications of Space-Time Processing (Klemm, Ed.) |

| c. 2005 | Multi-Input, Multi-Output (MIMO) adaptive systems (numerous authors) |

| 2006 | IEEE Transactions on Aerospace and Electronic Systems, Special Section on Knowledge-Aided Signal and Data Processing (Melvin, Guerci, Ed.) |

| 2009 | Cognitive Radar (Guerci) |

2.12.1.2 Organization

We organize the remaining discussion as follows:

• Section 2.12.2 describes basic concepts, including target detection; different space-time optimal filter formulations, including the maximum SINR filter, the minimum variance beamformer, and the generalized sidelobe canceler; the sample matrix inversion (SMI) approach to adaptive filtering; and key performance metrics, such as SINR loss.

• Section 2.12.3 discusses clutter, RFI, target, and receiver space-time signal models.

• Section 2.12.4 discusses different adaptive filter topologies, including several reduced-rank methods, reduced-dimension STAP, and parametric adaptive matched filtering.

• Section 2.12.5 describes the utility of STAP to clutter suppression in airborne radar, as an example. This section also includes benchmark results for some of the algorithms discussed in Section 2.12.4.

• Much of current STAP research focuses on extending textbook discussion to real-world application. Section 2.12.6 describes various STAP challenges and research areas, including the impact of heterogeneous or nonstationary clutter on detection performance and mitigating techniques, application to airborne bistatic and conformal array radar, and knowledge-aided STAP. Each of these challenge areas predominantly focuses on issues surrounding effective estimation of the interference covariance matrix.

• A description of some additional, practical issues in STAP implementation is given in Section 2.12.7, including maximum likelihood angle estimation and adaptive matched filter normalization.

• In Section 2.12.8 we briefly discuss some multichannel radar data collection efforts.

• Summary comments are given in Section 2.12.9, along with notation and acronym lists in the appendices.

2.12.1.3 Key points

STAP is a key component of modern radar. Some important points are given below:

• STAP is really a catch-all phrase for a variety of weight calculation schemes and adaptive architectures.

• Radar terminology usually includes two time measurements: fast-time, corresponding to a sample rate consistent with the analog-to-digital converter clock speed and effectively measuring range; and, slow-time, where the pulse repetition interval (PRI) defines the sample rate and separating targets and clutter in Doppler frequency is the primary motivation. Space and slow-time are used to mitigate ground clutter returns in aerospace radar, whereas space and fast-time are used to cope with wideband RFI or multipath signals in aerospace or surface radar systems.

• Herein, we focus on space and slow-time adaptive processing for clutter suppression. The ideas discussed are easily extended to other radar degrees of freedom (DoFs), including fast-time, polarization, and multiple passes.

• Implementation of the optimal processor requires precise knowledge of the null-hypothesis covariance matrix and the target space-time steering vector. The adaptive filter is an approximation to the optimal processor, since neither the covariance matrix nor the steering vector is known in practice. There are two primary factors leading to differences between adaptive and optimal filter outputs: covariance matrix estimation error, and an unknown array manifold due to amplitude and phase errors as a function of frequency. Adaptive SINR loss describes the impact of both of these errors on detection performance potential.

• Current STAP research focuses on coupling basic STAP theory, physics-based models, and the realities of the real-world operating environment to construct practical detection architectures.

• STAP is applied at the coherent processing interval (CPI) level, typically prior to noncoherent (post-detection) integration. Maximizing SINR is always effective at improving detection performance, since the separation between the null and alternative hypothesis output probability density functions increases.

• In many respects, STAP development is in its early stages, at least from an implementation perspective. It has been only recently that embedded computing power has improved to the point where more flexible, real-time STAP implementation is feasible.

2.12.2 Basic concepts

In this section we discuss radar space-time detection and several approaches to calculate the optimal space-time weight vector: the maximum SINR filter, the minimum variance beamformer, the generalized sidelobe canceler, and use of the Rayleigh quotient and generalized eigen-analysis. We introduce the sample matrix inverse adaptive filter as the most common of the various adaptive implementations used in radar application. We also discuss several important STAP metrics, such as clairvoyant and adaptive SINR loss and improvement factor.

2.12.2.1 Detection

Suppose we collect M spatial samples across the antenna array for range realization k and each of the N received pulses. These voltages are organized in a vector as

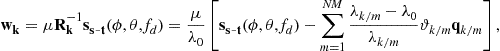

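$$\mathbf{x}_k = \begin{bmatrix} \mathbf{x}_{s,k}(1) \\ \mathbf{x}_{s,k}(2) \\ \vdots \\ \mathbf{x}_{s,k}(N) \end{bmatrix} \tag{12.1}$$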

where $\mathbf{x}_k \in \mathbb{C}^{NM\times 1}$ is the space-time snapshot and $\mathbf{x}_{s,k}(n) \in \mathbb{C}^{M\times 1}$ is the spatial snapshot for the nth pulse. Two hypotheses correspond to (12.1): the null hypothesis, $\mathbf{x}_{k/H_0}$, where only additive interference and noise are present; and the alternative hypothesis, $\mathbf{x}_{k/H_1}$, which includes target presence in addition to the components of the null hypothesis. Defining $\mathbf{c}_k$ as the clutter space-time snapshot, $\mathbf{j}_k$ as the RFI space-time snapshot, $\mathbf{n}_k$ as the uncorrelated noise space-time snapshot, and $\mathbf{s}_k$ as the target space-time snapshot, we have

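$$\mathbf{x}_{k/H_0} = \mathbf{c}_k + \mathbf{j}_k + \mathbf{n}_k, \qquad \mathbf{x}_{k/H_1} = \mathbf{s}_k + \mathbf{c}_k + \mathbf{j}_k + \mathbf{n}_k \tag{12.2}$$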

The radar detection problem is to decide, given $\mathbf{x}_k$, which of the two hypotheses is more probable. It is shown in [3] that maximizing SINR is equivalent to maximizing probability of detection for a fixed false alarm rate when $\mathbf{x}_{k/H_0}$ is jointly complex normal with zero mean and covariance matrix $\mathbf{R}_k$, viz. $\mathbf{x}_{k/H_0} \sim \mathcal{CN}(\mathbf{0}, \mathbf{R}_k)$. We wish to combine the elements of $\mathbf{x}_k$ using a linear, finite impulse response (FIR) filter, and compare the resulting scalar to a threshold, $\eta$, to decide which hypothesis is the better fit,

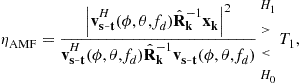

(12.3)

(12.3)

where ![]() is the space-time weight vector.

is the space-time weight vector.

Figure 12.4 shows probability of detection, $P_D$, as a function of output SINR for varying probability of false alarm, $P_{FA}$. The curves correspond to nonfluctuating and Swerling 1 target types for two values of $P_{FA}$. As seen, $P_D$ increases monotonically with SINR. Also, for reasonably high $P_D$, we generally need higher SINR to detect a Swerling 1 target at the same rate as the nonfluctuating target, due to fading; the SINR difference between Swerling 1 and nonfluctuating detection curves is called fluctuation loss.
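The trends in Figure 12.4 can be checked by simulation. The sketch below assumes a single complex filter output normalized so the interference-plus-noise power is one, a square-law test of $|y_k|^2$ against a threshold set for the desired $P_{FA}$, a constant target amplitude for the nonfluctuating case, and a complex Gaussian amplitude for Swerling 1. Under that Swerling 1 model the detection probability has the closed form $P_D = P_{FA}^{1/(1+\mathrm{SINR})}$, which the Monte Carlo estimate should reproduce. The function names, trial count, and $P_{FA}$ value are illustrative choices, not values taken from the figure.

```python
import numpy as np

def pd_swerling1(sinr, pfa):
    # Closed form for one complex Gaussian sample with a square-law detector
    return pfa ** (1.0 / (1.0 + sinr))

def pd_monte_carlo(sinr, pfa, swerling1, trials=200_000, seed=0):
    # Filter output y = a + n with n ~ CN(0, 1), so SINR = E|a|^2
    rng = np.random.default_rng(seed)
    eta = -np.log(pfa)                          # P(|n|^2 > eta) = exp(-eta) = pfa
    n = (rng.standard_normal(trials) + 1j * rng.standard_normal(trials)) / np.sqrt(2)
    if swerling1:
        # Swerling 1: a ~ CN(0, SINR), drawn independently on each trial
        a = np.sqrt(sinr / 2) * (rng.standard_normal(trials) + 1j * rng.standard_normal(trials))
    else:
        # Nonfluctuating: constant amplitude with |a|^2 = SINR
        a = np.sqrt(sinr)
    return np.mean(np.abs(a + n) ** 2 > eta)

pfa = 1e-4                                      # illustrative false alarm probability
for sinr_db in (5, 10, 15, 20):
    sinr = 10 ** (sinr_db / 10)
    print(sinr_db, pd_monte_carlo(sinr, pfa, False),
          pd_monte_carlo(sinr, pfa, True), pd_swerling1(sinr, pfa))
```

At high SINR the nonfluctuating column approaches one faster than the Swerling 1 columns; the horizontal gap between the two curves at a given $P_D$ is the fluctuation loss.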

2.12.2.2 Space-time filter formulations

2.12.2.2.1 Maximum SINR filter

A primary objective in STAP is to approximate as closely as possible the optimal weighting that maximizes SINR. In attempting to maximize output SINR, STAP seeks to maximize the probability of detection for a fixed false alarm rate, as discussed in the prior section. We now formulate the optimal weight vector.

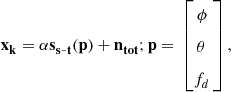

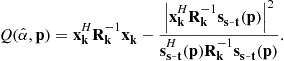

The target snapshot is of the form $\mathbf{s}_k = a_t\,\mathbf{s}_{s-t}(\phi,\theta,f_d)$, where $a_t$ is a complex gain term proportional to the square root of the target cross section, $\mathbf{s}_{s-t}(\phi,\theta,f_d)$ is the space-time steering vector of dimension NM-by-one, $\phi$ is azimuth, $\theta$ is elevation, and $f_d$ is Doppler frequency. In the nonfluctuating target case, $a_t$ is a constant, whereas in the Swerling 1 case, $a_t \sim \mathcal{CN}(0, \sigma_s^2)$, where $\sigma_s^2$ is the target signal variance [1,7,8]. The space-time steering vector is the Kronecker product of the temporal steering vector, $\mathbf{s}_t(f_d)$, and the spatial steering vector, $\mathbf{s}_s(\phi,\theta)$, i.e., $\mathbf{s}_{s-t}(\phi,\theta,f_d) = \mathbf{s}_t(f_d) \otimes \mathbf{s}_s(\phi,\theta)$, an ordering that matches the pulse-by-pulse stacking of spatial snapshots in (12.1). Generally, the spatial steering vector describes the phase variation among the spatial antenna channels (of common size and gain) to a signal with a direction of arrival of $(\phi,\theta)$, whereas the temporal steering vector describes the phase variation over the slow-time aperture to a signal with a particular range-rate [9–11]. If the aperture in either space or time is uniformly sampled, the corresponding steering vector will be Vandermonde.
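A minimal NumPy sketch of the steering-vector construction, assuming a uniformly spaced linear array and a constant PRI so that both factors are Vandermonde. The parameters `theta_s` (normalized spatial frequency) and `omega` (Doppler divided by the PRF) are hypothetical stand-ins for $(\phi,\theta)$ and $f_d$. The Kronecker product is ordered temporal-then-spatial, so reshaping the NM-by-one vector into N rows recovers one spatial snapshot per pulse.

```python
import numpy as np

def spatial_steering(M, theta_s):
    # Phase progression across M equally spaced channels (Vandermonde)
    return np.exp(2j * np.pi * theta_s * np.arange(M))

def temporal_steering(N, omega):
    # Phase progression across N pulses at a constant PRI; omega = f_d / PRF
    return np.exp(2j * np.pi * omega * np.arange(N))

def space_time_steering(M, N, theta_s, omega):
    # Temporal (x) spatial ordering: element n*M + m belongs to pulse n, channel m
    return np.kron(temporal_steering(N, omega), spatial_steering(M, theta_s))

M, N = 4, 3
s = space_time_steering(M, N, theta_s=0.1, omega=0.25)
snapshots = s.reshape(N, M)   # row n: spatial steering vector times pulse n's Doppler phase

print(s.shape)                                                               # (12,)
print(np.allclose(snapshots[2],
                  temporal_steering(N, 0.25)[2] * spatial_steering(M, 0.1)))  # True
```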

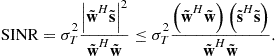

The output SINR of the FIR filter with weight vector, ![]() , is the ratio of target power,

, is the ratio of target power, ![]() , to interference-plus-noise power,

, to interference-plus-noise power, ![]() , given as

, given as

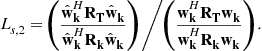

(12.4)

(12.4)

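Factoring the covariance matrix as $\mathbf{R}_k = \mathbf{R}_k^{1/2}\mathbf{R}_k^{1/2}$, where $\mathbf{R}_k^{1/2}$ is the Hermitian square root of the positive definite matrix $\mathbf{R}_k$, the Schwarz inequality lets us write (12.4) as

$$\mathrm{SINR} = \frac{\sigma_s^2\left|\mathbf{a}^H\mathbf{b}\right|^2}{\mathbf{w}^H\mathbf{R}_k\mathbf{w}} \le \frac{\sigma_s^2\left\|\mathbf{a}\right\|^2\left\|\mathbf{b}\right\|^2}{\mathbf{w}^H\mathbf{R}_k\mathbf{w}} \tag{12.5}$$

In this case, $\mathbf{a} = \mathbf{R}_k^{1/2}\mathbf{w}$ and $\mathbf{b} = \mathbf{R}_k^{-1/2}\mathbf{s}_{s-t}(\phi,\theta,f_d)$. Because $\|\mathbf{a}\|^2 = \mathbf{w}^H\mathbf{R}_k\mathbf{w}$, the upper bound equals $\sigma_s^2\|\mathbf{b}\|^2$ and does not depend on $\mathbf{w}$. By inspection, the left side of the inequality attains this upper bound when $\mathbf{a} = \beta\,\mathbf{b}$, i.e., $\mathbf{R}_k^{1/2}\mathbf{w} = \beta\,\mathbf{R}_k^{-1/2}\mathbf{s}_{s-t}(\phi,\theta,f_d)$, thereby providing the solution for the optimal weight vector. Through substitution, it follows that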

$$\mathbf{w}_k = \beta\,\mathbf{R}_k^{-1}\mathbf{s}_{s-t}(\phi,\theta,f_d) \tag{12.6}$$

for arbitrary scalar, $\beta$, is the weighting that yields maximal output SINR. When the disturbance is uncorrelated noise, $\mathbf{R}_k = \sigma_n^2\mathbf{I}_{NM}$, where $\sigma_n^2$ is the noise power; then, (12.6) becomes the space-time matched filter, viz. $\mathbf{w}_k = \mathbf{s}_{s-t}(\phi,\theta,f_d)$ (choosing $\beta = \sigma_n^2$).

Inserting the optimal weight vector, $\mathbf{w}_k$, into (12.4) yields the maximum output SINR,

$$\mathrm{SINR}_{\max} = \sigma_s^2\,\mathbf{s}_{s-t}^H(\phi,\theta,f_d)\,\mathbf{R}_k^{-1}\,\mathbf{s}_{s-t}(\phi,\theta,f_d) \tag{12.7}$$

For simplicity, and without loss in meaning, in subsequent sections we use $\beta = 1$.
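The following sketch checks (12.4), (12.6), and (12.7) numerically for a hypothetical interference environment: unit-power white noise plus a single strong interferer whose space-time steering vector lies near the target's. The helper names and all numerical values are illustrative assumptions. It confirms that $\mathbf{R}_k^{-1}\mathbf{s}_{s-t}$ attains the bound in (12.7), that the scale factor $\beta$ does not affect SINR, and that the space-time matched filter, optimal only in white noise, falls short when colored interference is present.

```python
import numpy as np

def st_steer(M, N, theta_s, omega):
    # Hypothetical ULA / constant-PRI space-time steering vector (temporal kron spatial)
    a = np.exp(2j * np.pi * theta_s * np.arange(M))
    b = np.exp(2j * np.pi * omega * np.arange(N))
    return np.kron(b, a)

def output_sinr(w, s, R, sigma_s2=1.0):
    # Eq. (12.4): sigma_s^2 |w^H s|^2 / (w^H R w); np.vdot conjugates its first argument
    return sigma_s2 * np.abs(np.vdot(w, s)) ** 2 / np.vdot(w, R @ w).real

M, N = 6, 8
s = st_steer(M, N, 0.0, 0.2)                      # target: broadside, normalized Doppler 0.2
v = st_steer(M, N, 0.05, 0.15)                    # hypothetical interferer near the target
R = np.eye(M * N) + 1e3 * np.outer(v, v.conj())   # unit noise plus a 30 dB interferer

w_opt = np.linalg.solve(R, s)                     # Eq. (12.6) with beta = 1
sinr_max = np.vdot(s, w_opt).real                 # Eq. (12.7) with sigma_s^2 = 1

print(np.isclose(output_sinr(w_opt, s, R), sinr_max))          # True
print(np.isclose(output_sinr(3.7j * w_opt, s, R), sinr_max))   # True: beta does not matter
print(output_sinr(s, s, R) < sinr_max)                         # True: matched filter falls short
```

`np.linalg.solve` is used instead of forming $\mathbf{R}_k^{-1}$ explicitly, which is the usual numerically preferable choice.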

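The maximum SINR weight vector has the following interpretation. If $\mathbf{R}_k = \mathbf{R}_k^{1/2}\mathbf{R}_k^{1/2}$, then $\tilde{\mathbf{x}}_k = \mathbf{R}_k^{-1/2}\mathbf{x}_k$ is the whitened data vector, where $E\left[\tilde{\mathbf{x}}_{k/H_0}\tilde{\mathbf{x}}_{k/H_0}^H\right] = \mathbf{I}_{NM}$ and $\mathbf{I}_{NM}$ is the NM-by-NM identity matrix. This results because

$$E\left[\tilde{\mathbf{x}}_{k/H_0}\tilde{\mathbf{x}}_{k/H_0}^H\right] = \mathbf{R}_k^{-1/2}E\left[\mathbf{x}_{k/H_0}\mathbf{x}_{k/H_0}^H\right]\mathbf{R}_k^{-1/2} = \mathbf{R}_k^{-1/2}\mathbf{R}_k\mathbf{R}_k^{-1/2} = \mathbf{I}_{NM} \tag{12.8}$$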

Then, the filter employing the maximum SINR weight vector is seen as a cascade of whitening and warped matched filtering operations,

$$y_k = \mathbf{w}_k^H\mathbf{x}_k = \mathbf{s}_{s-t}^H(\phi,\theta,f_d)\,\mathbf{R}_k^{-1/2}\,\mathbf{R}_k^{-1/2}\mathbf{x}_k = \tilde{\mathbf{s}}_{s-t}^H\tilde{\mathbf{x}}_k \tag{12.9}$$

where $\tilde{\mathbf{s}}_{s-t} = \mathbf{R}_k^{-1/2}\mathbf{s}_{s-t}(\phi,\theta,f_d)$ is the warped matched filter accounting for the linear transformation of the target signal through the whitening process and $\mathbf{s}_{s-t}(\phi,\theta,f_d)$ is the original matched filter weight vector.
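A quick numerical check of the whitening interpretation, assuming a randomly generated Hermitian positive definite matrix as a stand-in for $\mathbf{R}_k$ and an arbitrary phase-ramp steering vector; neither comes from the text. The helper `inv_sqrt_hermitian` (a hypothetical name) forms $\mathbf{R}_k^{-1/2}$ from the eigendecomposition so that it is Hermitian, which is the property (12.8) and (12.9) rely on.

```python
import numpy as np

def inv_sqrt_hermitian(R):
    # Hermitian R^{-1/2} = U diag(lambda^{-1/2}) U^H from the eigendecomposition of R
    lam, U = np.linalg.eigh(R)
    return (U / np.sqrt(lam)) @ U.conj().T

rng = np.random.default_rng(0)
NM = 12
A = rng.standard_normal((NM, NM)) + 1j * rng.standard_normal((NM, NM))
R = A @ A.conj().T + np.eye(NM)                  # hypothetical stand-in for R_k
s = np.exp(2j * np.pi * 0.1 * np.arange(NM))     # hypothetical steering vector
x = rng.standard_normal(NM) + 1j * rng.standard_normal(NM)  # an arbitrary snapshot

R_mhalf = inv_sqrt_hermitian(R)
print(np.allclose(R_mhalf @ R @ R_mhalf, np.eye(NM)))   # True, as in (12.8)

y_direct = np.vdot(np.linalg.solve(R, s), x)     # (R^{-1} s)^H x
y_cascade = np.vdot(R_mhalf @ s, R_mhalf @ x)    # whiten, then warped matched filter (12.9)
print(np.isclose(y_direct, y_cascade))           # True
```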

2.12.2.2.2 Minimum variance beamformer

The minimum variance (MV) space-time beamformer is another common formulation leading to the adaptive processor [12,13]. The MV beamformer employs a weight vector yielding minimal output power subject to a linear constraint on the desired target spatial and temporal response:

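$$\min_{\mathbf{w}}\ \mathbf{w}^H\mathbf{R}_k\mathbf{w} \quad \text{subject to} \quad \mathbf{w}^H\mathbf{s}_{s-t}(\phi,\theta,f_d) = g \tag{12.10}$$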

where $g$ is a complex scalar. We can determine the weight vector satisfying the problem statement in (12.10) using the method of Lagrange multipliers. Thus, define the following cost function

$$J = \mathbf{w}^H\mathbf{R}_k\mathbf{w} + \alpha\left(\mathbf{w}^H\mathbf{s}_{s-t} - g\right) + \alpha^*\left(\mathbf{s}_{s-t}^H\mathbf{w} - g^*\right) \tag{12.11}$$

where $\alpha$ is a complex Lagrangian multiplier and $\mathbf{s}_{s-t} = \mathbf{s}_{s-t}(\phi,\theta,f_d)$. Taking the gradient of (12.11) with respect to the conjugate of the weights and setting it to zero gives

$$\nabla_{\mathbf{w}^*}J = \mathbf{R}_k\mathbf{w} + \alpha\,\mathbf{s}_{s-t} = \mathbf{0} \tag{12.12}$$

Solving (12.12) for the weight vector yields

$$\mathbf{w} = -\alpha\,\mathbf{R}_k^{-1}\mathbf{s}_{s-t} \tag{12.13}$$

Next, we find by applying the constraint in (12.10) that

$$\mathbf{w}^H\mathbf{s}_{s-t} = -\alpha^*\,\mathbf{s}_{s-t}^H\mathbf{R}_k^{-1}\mathbf{s}_{s-t} = g \tag{12.14}$$

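Solving (12.14) for $\alpha$ (the quadratic form $\mathbf{s}_{s-t}^H\mathbf{R}_k^{-1}\mathbf{s}_{s-t}$ is real and positive) then gives

$$\alpha = -\frac{g^*}{\mathbf{s}_{s-t}^H\mathbf{R}_k^{-1}\mathbf{s}_{s-t}} \tag{12.15}$$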

The minimum variance weight vector follows from (12.13) and (12.15) as

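$$\mathbf{w}_{MV} = \frac{g^*\,\mathbf{R}_k^{-1}\mathbf{s}_{s-t}}{\mathbf{s}_{s-t}^H\mathbf{R}_k^{-1}\mathbf{s}_{s-t}} \tag{12.16}$$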

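Equation (12.16) abides by the form $\mathbf{w}_k = \beta\,\mathbf{R}_k^{-1}\mathbf{s}_{s-t}(\phi,\theta,f_d)$ of (12.6), where

$$\beta = \frac{g^*}{\mathbf{s}_{s-t}^H\mathbf{R}_k^{-1}\mathbf{s}_{s-t}} \tag{12.17}$$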

Hence, (12.16) likewise yields maximal SINR.
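To see the constrained optimization at work, the sketch below builds the MV weight of (12.16) for an arbitrary complex constraint value $g$ and a hypothetical low-rank-plus-noise covariance. It then verifies that the constraint in (12.10) holds and that moving anywhere else within the constraint set (adding any component orthogonal to $\mathbf{s}_{s-t}$) never lowers the output power. The random covariance and phase-ramp steering vector are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(1)
NM = 10
A = rng.standard_normal((NM, 3)) + 1j * rng.standard_normal((NM, 3))
R = 100 * A @ A.conj().T + np.eye(NM)            # hypothetical rank-3 interference plus unit noise
s = np.exp(2j * np.pi * 0.2 * np.arange(NM))     # hypothetical steering vector
g = 0.5 - 0.25j                                  # arbitrary complex constraint value

Rinv_s = np.linalg.solve(R, s)
w_mv = np.conj(g) * Rinv_s / np.vdot(s, Rinv_s).real      # Eq. (12.16)
p_mv = np.vdot(w_mv, R @ w_mv).real                       # minimized output power

print(np.isclose(np.vdot(w_mv, s), g))                    # True: w^H s = g, as in (12.10)

# Other feasible weights: add any component orthogonal to s (w^H s stays equal to g)
for _ in range(5):
    z = rng.standard_normal(NM) + 1j * rng.standard_normal(NM)
    z -= s * np.vdot(s, z) / np.vdot(s, s)                # remove the component along s
    w_alt = w_mv + z
    assert np.isclose(np.vdot(w_alt, s), g)
    assert np.vdot(w_alt, R @ w_alt).real >= p_mv         # never below the MV output power
```

The cross term between $\mathbf{w}_{MV}$ and any such perturbation vanishes because $\mathbf{R}_k\mathbf{w}_{MV}$ is proportional to $\mathbf{s}_{s-t}$, so the output power can only grow.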

The choice $g = 1$ is known as the distortionless response, and the corresponding weight vector yields the minimum variance distortionless response (MVDR) beamformer. The output power of the MVDR beamformer is given as

$$P_{MVDR}(\phi,\theta,f_d) = \mathbf{w}_{MVDR}^H\mathbf{R}_k\mathbf{w}_{MVDR} = \frac{1}{\mathbf{s}_{s-t}^H(\phi,\theta,f_d)\,\mathbf{R}_k^{-1}\,\mathbf{s}_{s-t}(\phi,\theta,f_d)} \tag{12.18}$$

The MVDR spectrum, as given in Figure 12.1, follows from (12.18) by scanning the space-time steering vector in the denominator over the angle and Doppler locations of interest.
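Scanning (12.18) over a grid takes only a few lines. The sketch below assumes the same hypothetical ULA / constant-PRI steering model with normalized spatial frequency and normalized Doppler, and a covariance containing a single 20 dB point source plus unit noise in place of the clutter scenarios of Figure 12.1. The grid spacing and source location are arbitrary choices.

```python
import numpy as np

def st_steer(M, N, theta_s, omega):
    # Hypothetical ULA / constant-PRI space-time steering vector (temporal kron spatial)
    return np.kron(np.exp(2j * np.pi * omega * np.arange(N)),
                   np.exp(2j * np.pi * theta_s * np.arange(M)))

def mvdr_spectrum(R, M, N, theta_grid, omega_grid):
    # Eq. (12.18) on a grid: rows index Doppler, columns index spatial frequency
    Rinv = np.linalg.inv(R)
    P = np.empty((len(omega_grid), len(theta_grid)))
    for i, om in enumerate(omega_grid):
        for j, th in enumerate(theta_grid):
            s = st_steer(M, N, th, om)
            P[i, j] = 1.0 / np.vdot(s, Rinv @ s).real
    return P

M, N = 8, 8
grid = np.linspace(-0.5, 0.5, 40, endpoint=False)   # normalized frequencies, step 0.025
th0, om0 = grid[24], grid[12]                       # hypothetical source at (0.1, -0.2)
v = st_steer(M, N, th0, om0)
R = np.eye(M * N) + 100.0 * np.outer(v, v.conj()) # unit noise plus one 20 dB source

P_db = 10 * np.log10(mvdr_spectrum(R, M, N, grid, grid))
i, j = np.unravel_index(np.argmax(P_db), P_db.shape)
print(grid[j], grid[i])                             # spatial frequency and Doppler of the peak
```

Substituting a clutter covariance (for example, a sum of outer products along a clutter ridge) for `R` would produce ridge-shaped images of the kind shown in Figure 12.1.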

2.12.2.2.3 Generalized sidelobe canceler

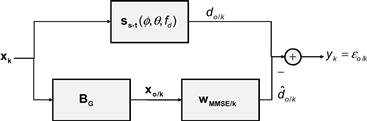

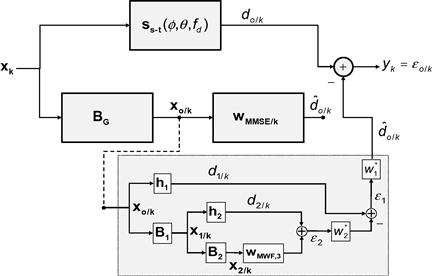

The generalized sidelobe canceler (GSLC) is a formulation that conveniently converts the minimum variance constrained beamformer described in the preceding section into an unconstrained form [12,14]. Many prefer the GSLC interpretation of STAP over the whitening filter-warped matched filter interpretation of Section 2.12.2.2.1; since the GSLC implements the MV beamformer for the single linear constraint [14], this structure likewise can be shown to maximize output SINR.

Figure 12.5 provides a block diagram of the GSLC. The top leg of the GSLC generates a quiescent response by forming a beam at the angle and Doppler of interest. A blocking matrix, $\mathbf{B}$, prevents the target signal from entering the lower leg; essentially, the blocking matrix forms a notch filter tuned to $\mathbf{s}_{s-t}(\phi,\theta,f_d)$. With the desired signal absent from the lower leg, the processor tunes the weight vector to provide a minimum mean square error (MMSE) estimate of the interference in the top leg. In the final step, the processor subtracts the lower-leg estimate, $\hat{d}_k$, from the desired signal, $d_k$, to form the filter output, $y_k = d_k - \hat{d}_k$. Ideally, any large residual at the output corresponds to an actual target.

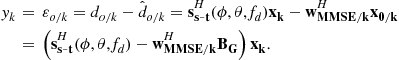

The desired signal is given as

$$d_k = \mathbf{s}_{s-t}^H\mathbf{x}_k \tag{12.19}$$

The signal vector in the lower leg is

$$\mathbf{x}_{0/k} = \mathbf{B}\,\mathbf{x}_k \tag{12.20}$$

Forming the quiescent response of (12.19) uses a single degree of freedom (DoF), resulting in the reduced dimensionality $\mathbf{x}_{0/k} \in \mathbb{C}^{(NM-1)\times 1}$; accordingly, $\mathbf{B}$ is an $(NM-1)$-by-$NM$ matrix satisfying $\mathbf{B}\,\mathbf{s}_{s-t} = \mathbf{0}$. By weighting the signal vector in the lower leg, the GSLC forms an MMSE estimate of $d_k$ as

$$\hat{d}_k = \mathbf{w}_0^H\mathbf{x}_{0/k} \tag{12.21}$$

where the MMSE weight vector follows from the well-known Wiener-Hopf equation [12,13],

$$\mathbf{w}_0 = \mathbf{R}_{x_0}^{-1}\mathbf{r}_{x_0 d} \tag{12.22}$$

The lower leg covariance matrix is

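$$\mathbf{R}_{x_0} = E\left[\mathbf{x}_{0/k}\mathbf{x}_{0/k}^H\right] = \mathbf{B}\,\mathbf{R}_k\mathbf{B}^H \tag{12.23}$$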

whilst the cross-correlation between lower and upper legs is

$$\mathbf{r}_{x_0 d} = E\left[\mathbf{x}_{0/k}\,d_k^*\right] = \mathbf{B}\,\mathbf{R}_k\mathbf{s}_{s-t} \tag{12.24}$$

The GSLC filter output is then

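$$y_k = d_k - \hat{d}_k = \mathbf{s}_{s-t}^H\mathbf{x}_k - \mathbf{w}_0^H\mathbf{B}\,\mathbf{x}_k = \left(\mathbf{s}_{s-t} - \mathbf{B}^H\mathbf{w}_0\right)^H\mathbf{x}_k \tag{12.25}$$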

Comparing (12.25) and (12.3), we identify

$$\mathbf{w}_{GSLC} = \mathbf{s}_{s-t} - \mathbf{B}^H\mathbf{w}_0 \tag{12.26}$$

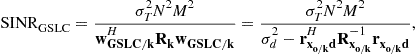

We compute the output SINR as the ratio of output signal power to interference-plus-noise power, as in (12.4). The signal-only output power of the GSLC is

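$$P_s = E\left[\left|\mathbf{w}_{GSLC}^H\mathbf{s}_k\right|^2\right] = \sigma_s^2\left|\mathbf{s}_{s-t}^H\mathbf{s}_{s-t} - \mathbf{w}_0^H\mathbf{B}\,\mathbf{s}_{s-t}\right|^2 = \sigma_s^2\left|\mathbf{s}_{s-t}^H\mathbf{s}_{s-t}\right|^2 \tag{12.27}$$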

and the output interference-plus-noise power is

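$$P_{i+n} = E\left[\left|\mathbf{w}_{GSLC}^H\mathbf{x}_{k/H_0}\right|^2\right] = \mathbf{s}_{s-t}^H\mathbf{R}_k\mathbf{s}_{s-t} - \mathbf{w}_0^H\mathbf{r}_{x_0 d} - \mathbf{r}_{x_0 d}^H\mathbf{w}_0 + \mathbf{w}_0^H\mathbf{R}_{x_0}\mathbf{w}_0 \tag{12.28}$$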

The ratio of (12.27) and (12.28) is

(12.29)

(12.29)

where ![]() . We arrive at the denominator in (12.29) by using (12.22)–(12.24),

. We arrive at the denominator in (12.29) by using (12.22)–(12.24),

(12.30)

(12.30)
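The GSLC result (12.26), together with the denominator identity (12.30), can be checked numerically. The NumPy sketch below makes several assumptions. A random Hermitian positive-definite matrix stands in for $\mathbf{R}_k$. The steering vector is a hypothetical unit-modulus vector. The blocking matrix is built from an SVD, which is one common construction among several. The helper names `blocking_matrix`, `gslc_weights` and `sinr` are illustrative and do not come from the text.

```python
import numpy as np

def blocking_matrix(s):
    """(NM-1) x NM matrix with orthonormal rows and B @ s = 0.

    Rows 2..NM of V^H from the SVD of the 1 x NM row s^H span the
    orthogonal complement of s (one common construction among several).
    """
    _, _, vh = np.linalg.svd(s.conj()[None, :])
    return vh[1:]

def gslc_weights(R, s):
    """Direct-form weight w = s - B^H w_o implied by the GSLC, (12.22)-(12.26)."""
    B = blocking_matrix(s)
    R_o = B @ R @ B.conj().T            # lower-leg covariance, (12.23)
    r_od = B @ R @ s                    # lower/upper-leg cross-correlation, (12.24)
    w_o = np.linalg.solve(R_o, r_od)    # Wiener-Hopf solution, (12.22)
    return s - B.conj().T @ w_o         # (12.26)

def sinr(w, R, s, sigma_s2=1.0):
    """Output SINR: sigma_s^2 |w^H s|^2 / (w^H R w)."""
    return sigma_s2 * abs(np.vdot(w, s)) ** 2 / np.real(np.vdot(w, R @ w))

rng = np.random.default_rng(0)
NM = 8
s = np.exp(2j * np.pi * 0.1 * np.arange(NM))              # hypothetical steering vector
A = rng.standard_normal((NM, NM)) + 1j * rng.standard_normal((NM, NM))
R = A @ A.conj().T / NM + np.eye(NM)                       # hypothetical Hermitian PD R_k

w_gslc = gslc_weights(R, s)
w_opt = np.linalg.solve(R, s)                              # R^{-1} s
scale = np.vdot(w_opt, w_gslc) / np.vdot(w_opt, w_opt)     # best-fit complex scalar

# Denominator of (12.29) via (12.30): sigma_do^2 - r^H R_o^{-1} r
B = blocking_matrix(s)
r_od = B @ R @ s
den_1230 = np.real(np.vdot(s, R @ s)
                   - np.vdot(r_od, np.linalg.solve(B @ R @ B.conj().T, r_od)))
```

The GSLC weight comes out collinear with $\mathbf{R}_k^{-1}\mathbf{s}_{s-t}$. The GSLC therefore reaches the maximum SINR and is a different factorization of the same optimal filter.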

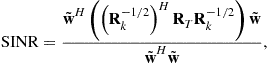

2.12.2.2.4 Rayleigh quotient

The optimal weight vector in Section 2.12.2.2.1 maximizes SINR. As seen from (12.4), for weight vector $\mathbf{w}_k$, SINR is given as the ratio of quadratics, $\mathrm{SINR} = \left(\mathbf{w}_k^H\mathbf{R}_s\mathbf{w}_k\right)/\left(\mathbf{w}_k^H\mathbf{R}_k\mathbf{w}_k\right)$, where $\mathbf{R}_s$ is the target signal correlation matrix. Thus, our problem is to find the weight vector that maximizes this function. In accord with [15], and similar to our discussion in Section 2.12.2.2.1, SINR is written as

$$\mathrm{SINR} = \frac{\tilde{\mathbf{w}}_k^H\,\mathbf{R}_k^{-1/2}\mathbf{R}_s\mathbf{R}_k^{-1/2}\,\tilde{\mathbf{w}}_k}{\tilde{\mathbf{w}}_k^H\tilde{\mathbf{w}}_k} \tag{12.31}$$

where $\tilde{\mathbf{w}}_k = \mathbf{R}_k^{1/2}\mathbf{w}_k$ and $\mathbf{R}_k^{1/2}$ is the Hermitian square root of $\mathbf{R}_k$. The weight vector, $\tilde{\mathbf{w}}_k$, that maximizes this Rayleigh quotient form is known to be proportional to the eigenvector $\mathbf{u}_{\max}$ associated with the largest eigenvalue $\lambda_{\max}$ of the matrix in the numerator [13],

$$\mathbf{R}_k^{-1/2}\mathbf{R}_s\mathbf{R}_k^{-1/2}\,\mathbf{u}_{\max} = \lambda_{\max}\,\mathbf{u}_{\max} \tag{12.32}$$

and so

$$\mathbf{w}_k = \beta\,\mathbf{R}_k^{-1/2}\,\mathbf{u}_{\max} \tag{12.33}$$

for an arbitrary nonzero scalar $\beta$. Take the case where $\mathbf{R}_s = \sigma_s^2\,\mathbf{s}_{s-t}\mathbf{s}_{s-t}^H$, as previously introduced in Section 2.12.2.2.1. The maximum eigenvector in (12.32) must abide by

$$\sigma_s^2\,\mathbf{R}_k^{-1/2}\mathbf{s}_{s-t}\mathbf{s}_{s-t}^H\mathbf{R}_k^{-1/2}\,\mathbf{u}_{\max} = \lambda_{\max}\,\mathbf{u}_{\max} \tag{12.34}$$

The solution for $\mathbf{u}_{\max}$ in (12.34) is thus seen to be, to within a scalar,

$$\mathbf{u}_{\max} = \mathbf{R}_k^{-1/2}\mathbf{s}_{s-t} \tag{12.35}$$

and so, as expected,

$$\mathbf{w}_k = \beta\,\mathbf{R}_k^{-1/2}\mathbf{R}_k^{-1/2}\mathbf{s}_{s-t} = \beta\,\mathbf{R}_k^{-1}\mathbf{s}_{s-t} \tag{12.36}$$

(Note: inserting (12.35) in (12.34) gives $\mathbf{R}_k^{-1/2}\mathbf{s}_{s-t}$ times a scalar on each side of the equation, thereby satisfying the eigen-relation.)

By plugging $\mathbf{w}_k$ found in (12.33) into (12.4), it is straightforward to show that the maximum SINR is equal to $\lambda_{\max}$. In this vein, using (12.35) in (12.34) gives

$$\sigma_s^2\left(\mathbf{s}_{s-t}^H\mathbf{R}_k^{-1}\mathbf{s}_{s-t}\right)\mathbf{R}_k^{-1/2}\mathbf{s}_{s-t} = \lambda_{\max}\,\mathbf{R}_k^{-1/2}\mathbf{s}_{s-t} \;\Rightarrow\; \lambda_{\max} = \sigma_s^2\,\mathbf{s}_{s-t}^H\mathbf{R}_k^{-1}\mathbf{s}_{s-t} \tag{12.37}$$

which is the desired result and matches the expression given in (12.7).
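The Rayleigh-quotient route of (12.31)–(12.37) can be traced numerically. The steps are to whiten with a Hermitian $\mathbf{R}_k^{-1/2}$, take the dominant eigenvector of the numerator matrix, and map the result back. The NumPy sketch below uses a hypothetical random covariance and steering vector. The helper `herm_inv_sqrt` is our own illustrative name.

```python
import numpy as np

def herm_inv_sqrt(R):
    """Hermitian inverse square root R^{-1/2} from the eigendecomposition of R."""
    lam, V = np.linalg.eigh(R)
    return (V / np.sqrt(lam)) @ V.conj().T

rng = np.random.default_rng(1)
NM, sigma_s2 = 6, 2.0
s = np.exp(2j * np.pi * 0.2 * np.arange(NM))               # hypothetical steering vector
A = rng.standard_normal((NM, NM)) + 1j * rng.standard_normal((NM, NM))
R = A @ A.conj().T / NM + np.eye(NM)                        # hypothetical R_k
Rs = sigma_s2 * np.outer(s, s.conj())                       # R_s = sigma_s^2 s s^H

Ri_half = herm_inv_sqrt(R)
C = Ri_half @ Rs @ Ri_half                  # numerator matrix of (12.31), Hermitian
lam, U = np.linalg.eigh(C)                  # eigenvalues in ascending order
lam_max, u_max = lam[-1], U[:, -1]          # dominant eigenpair, (12.32)
w = Ri_half @ u_max                         # (12.33) with beta = 1

w_opt = np.linalg.solve(R, s)               # (12.36): R^{-1} s
sinr_max = sigma_s2 * np.real(np.vdot(s, w_opt))   # (12.37): sigma_s^2 s^H R^{-1} s
```

The recovered `w` differs from $\mathbf{R}_k^{-1}\mathbf{s}_{s-t}$ only by a complex scalar, and `lam_max` equals the maximum SINR.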

2.12.2.2.5 Generalized eigen-analysis

The second approach, described in [13], begins by noting that the solution to maximizing SINR is equivalent to finding the maximum eigenvalue and associated eigenvector for the generalized eigenproblem

$$\mathbf{R}_s\,\mathbf{w}_k = \lambda\,\mathbf{R}_k\,\mathbf{w}_k \tag{12.38}$$

where $\mathbf{R}_s$ is the target signal correlation matrix. This can be solved as an ordinary eigen-equation by writing it as

$$\mathbf{R}_k^{-1}\mathbf{R}_s\,\mathbf{w}_k = \lambda\,\mathbf{w}_k \tag{12.39}$$

Then, the weight vector that maximizes (12.4) is proportional to the eigenvector $\mathbf{e}_{\max}$ associated with the largest eigenvalue $\lambda_{\max}$ of $\mathbf{R}_k^{-1}\mathbf{R}_s$,

$$\mathbf{R}_k^{-1}\mathbf{R}_s\,\mathbf{e}_{\max} = \lambda_{\max}\,\mathbf{e}_{\max} \tag{12.40}$$

The maximum eigenvalue of $\mathbf{R}_k^{-1}\mathbf{R}_s$ is the maximum achievable SINR in (12.4).

If we again consider the case where $\mathbf{R}_s = \sigma_s^2\,\mathbf{s}_{s-t}\mathbf{s}_{s-t}^H$, the generalized eigen-analysis equation of (12.39) becomes

$$\sigma_s^2\,\mathbf{R}_k^{-1}\mathbf{s}_{s-t}\mathbf{s}_{s-t}^H\,\mathbf{e}_{\max} = \lambda_{\max}\,\mathbf{e}_{\max} \tag{12.41}$$

which, we find, is satisfied when $\mathbf{e}_{\max} = \mathbf{R}_k^{-1}\mathbf{s}_{s-t}$. Substituting this eigenvector into (12.41) gives

$$\sigma_s^2\left(\mathbf{s}_{s-t}^H\mathbf{R}_k^{-1}\mathbf{s}_{s-t}\right)\mathbf{R}_k^{-1}\mathbf{s}_{s-t} = \lambda_{\max}\,\mathbf{R}_k^{-1}\mathbf{s}_{s-t} \tag{12.42}$$

from which it is seen that the maximum eigenvalue, $\lambda_{\max} = \sigma_s^2\,\mathbf{s}_{s-t}^H\mathbf{R}_k^{-1}\mathbf{s}_{s-t}$, is identical to the result in (12.37) and also the same as the maximum SINR expression found in Section 2.12.2.2.1.
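The generalized eigen-analysis of (12.38)–(12.42) avoids the explicit matrix square root. In the minimal NumPy sketch below, built on a hypothetical covariance and steering vector, the non-Hermitian matrix $\mathbf{R}_k^{-1}\mathbf{R}_s$ is passed to `np.linalg.eig`. SciPy's `scipy.linalg.eigh(a, b)` could solve the Hermitian-definite pencil (12.38) directly, but the sketch sticks to NumPy.

```python
import numpy as np

rng = np.random.default_rng(1)
NM, sigma_s2 = 6, 2.0
s = np.exp(2j * np.pi * 0.2 * np.arange(NM))               # hypothetical steering vector
A = rng.standard_normal((NM, NM)) + 1j * rng.standard_normal((NM, NM))
R = A @ A.conj().T / NM + np.eye(NM)                        # hypothetical R_k
Rs = sigma_s2 * np.outer(s, s.conj())                       # R_s = sigma_s^2 s s^H

# (12.39)-(12.40): ordinary eigenproblem for R^{-1} R_s (not Hermitian in general)
lam, E = np.linalg.eig(np.linalg.solve(R, Rs))
i = np.argmax(lam.real)
lam_max, e_max = lam[i].real, E[:, i]

def sinr(w):
    """Ratio of quadratics w^H R_s w / w^H R w from (12.4)."""
    return np.real(np.vdot(w, Rs @ w)) / np.real(np.vdot(w, R @ w))
```

Because $\mathbf{R}_s$ has rank one here, only one eigenvalue is nonzero. That eigenvalue is the maximum SINR, and its eigenvector is proportional to $\mathbf{R}_k^{-1}\mathbf{s}_{s-t}$.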

2.12.2.2.6 Summary

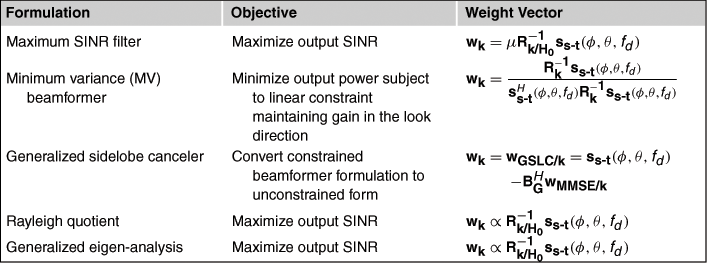

Table 12.2 summarizes the weight vector formulation given in the prior sections.

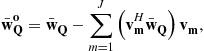

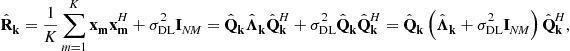

2.12.2.3 Sample matrix inversion

All of the previously described approaches to filter design presume the availability of the interference-plus-noise covariance matrix for the kth realization, $\mathbf{R}_k$, and a perfectly known target space-time steering vector, $\mathbf{s}_{s-t}$. In practice, neither $\mathbf{R}_k$ nor $\mathbf{s}_{s-t}$ is known. The disturbance covariance matrix, $\mathbf{R}_k$, changes as look-angle, platform location, and platform attitude change. Errors in $\mathbf{s}_{s-t}$ generally result from straddling the precise target spatial or temporal frequency when searching over angle and Doppler, as well as from system hardware errors that most greatly affect the spatial component, $\mathbf{s}_s$. These system errors generally manifest as spatially varying, complex gain errors due to factors such as spatial variation in element gain patterns, varying line lengths, radome and airframe interactions, etc.

A number of techniques are available to adapt the weight vector, including least means square (LMS) and recursive least squares (RLS) formulations [12]. However, the sample matrix inversion (SMI) method is by far the most popular choice for radar application for two primary reasons: (1) convergence of the SMI approach depends only on the number of samples used to estimate the unknown interference-plus-noise covariance matrix, regardless of environmental conditions as long as the data are independent and identically distributed (IID), for a system of specified DoFs; and, (2) prior to processing, radar systems generally collect their data in blocks, called coherent processing intervals (CPIs), and so a batch processing strategy using SMI is fully acceptable. In the SMI approach, the optimal weight vector,

![]() (12.43)

(12.43)

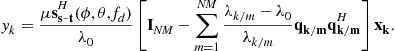

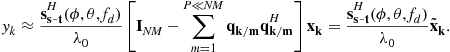

is simply replaced with the adaptive weight vector,

![]() (12.44)

(12.44)

where ![]() is a scalar that often depends on estimated quantities,

is a scalar that often depends on estimated quantities, ![]() is the interference-plus-noise covariance matrix estimate, and

is the interference-plus-noise covariance matrix estimate, and ![]() is the hypothesized space-time steering vector. In the absence of straddle error, system errors dominate the error between

is the hypothesized space-time steering vector. In the absence of straddle error, system errors dominate the error between ![]() and

and ![]() . Generally, array errors are explicitly modeled as

. Generally, array errors are explicitly modeled as

![]() (12.45)

(12.45)

where ![]() is the spatially-varying error between the ideal and actual array manifold, and

is the spatially-varying error between the ideal and actual array manifold, and ![]() and

and ![]() are Kronecker (tensor) and Hadamard (element-wise) products, respectively.

are Kronecker (tensor) and Hadamard (element-wise) products, respectively.

In practice, ![]() is “calibrated out” of the system using a variety of techniques, including array tuning on an antenna range or in situ using the clutter background and navigation data to estimate differences between ideal and actual multi-channel antenna responses [16]. Correspondingly, the hypothesized space-time steering vector is then

is “calibrated out” of the system using a variety of techniques, including array tuning on an antenna range or in situ using the clutter background and navigation data to estimate differences between ideal and actual multi-channel antenna responses [16]. Correspondingly, the hypothesized space-time steering vector is then

![]() (12.46)

(12.46)

where ![]() is an estimate of the array error vector, and

is an estimate of the array error vector, and ![]() and

and ![]() are otherwise the hypothesized temporal and spatial steering vectors accounting for potential straddle. Temporal errors due to system non-ideality are commonly very small for the typical STAP CPI, and thus usually are not considered further.

are otherwise the hypothesized temporal and spatial steering vectors accounting for potential straddle. Temporal errors due to system non-ideality are commonly very small for the typical STAP CPI, and thus usually are not considered further.
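The Kronecker/Hadamard structure of (12.45)–(12.46) is easy to express directly. The NumPy sketch below makes the following assumptions:

* a uniform linear array with normalized spatial frequency `theta`;
* Doppler normalized by the PRF;
* illustrative channel gain and phase errors.

The helpers `steering` and `match` are hypothetical names. The sketch compares an uncalibrated hypothesis with a perfectly calibrated one, $\hat{\boldsymbol{\varepsilon}}_s = \boldsymbol{\varepsilon}_s$.

```python
import numpy as np

def steering(N, M, theta, fd, eps=None):
    """Space-time steering vector s_t ⊗ (s_s ⊙ eps), as in (12.45)/(12.46).

    Hypothetical ULA: theta = element spacing * sin(angle) / wavelength,
    fd = Doppler / PRF. eps=None means an ideal, error-free array.
    """
    s_s = np.exp(2j * np.pi * theta * np.arange(N))   # spatial steering vector
    s_t = np.exp(2j * np.pi * fd * np.arange(M))      # temporal steering vector
    if eps is not None:
        s_s = s_s * eps                               # Hadamard product with array errors
    return np.kron(s_t, s_s)                          # Kronecker product

def match(v, s):
    """Normalized match |v^H s|^2 / (|v|^2 |s|^2); 1 means no steering mismatch."""
    return abs(np.vdot(v, s)) ** 2 / (np.real(np.vdot(v, v)) * np.real(np.vdot(s, s)))

rng = np.random.default_rng(3)
N, M, theta, fd = 8, 16, 0.1, 0.2
# Hypothetical channel errors: about 1 dB amplitude and 6 degrees phase (illustrative)
eps = (1 + 0.12 * rng.standard_normal(N)) * np.exp(1j * np.deg2rad(6) * rng.standard_normal(N))
s_true = steering(N, M, theta, fd, eps)              # actual target response
v_ideal = steering(N, M, theta, fd)                  # uncalibrated hypothesis
v_cal = steering(N, M, theta, fd, eps)               # perfect calibration, eps_hat = eps
```

`match(v_ideal, s_true)` falls below one, while the calibrated hypothesis recovers a perfect match.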

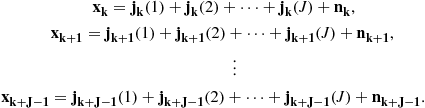

The complex baseband, pulse compressed, space-time snapshots comprise the voltage vectors for the pth CPI,

$$\mathbf{X}_p = \left[\mathbf{x}_1 \;\; \mathbf{x}_2 \;\; \cdots \;\; \mathbf{x}_L\right] \tag{12.47}$$

where $L$ is the number of range cells in the CPI. A particular realization, $\mathbf{x}_k$, chosen from amongst the space-time vectors in (12.47) for filtering and detection thresholding is called the cell-under-test (CUT); multiple CUTs form the primary data set. The remaining vectors in (12.47) are available to estimate the unknown interference-plus-noise covariance matrix and are called training or secondary data. The primary data set can be as small as a single CUT with several adjacent realizations serving as guard cells; the purpose of the guard cell region is to prevent any target energy from leaking into the training interval. Let $G$ be the number of guard cells on either side of the primary data region. Then, for a single CUT with $P$ training cells split evenly on either side, define the training set as

$$\mathbf{X}_{T/k} = \left[\mathbf{x}_{k-G-P/2} \;\cdots\; \mathbf{x}_{k-G-1} \;\; \mathbf{x}_{k+G+1} \;\cdots\; \mathbf{x}_{k+G+P/2}\right] \tag{12.48}$$

It is shown in [17] that if the data vectors comprising the training interval (the columns of (12.48)) are IID with respect to the null-hypothesis of the CUT, a maximum likelihood estimate for $\mathbf{R}_k$ is

$$\hat{\mathbf{R}}_k = \frac{1}{P}\sum_{m=1}^{P}\mathbf{x}_m\mathbf{x}_m^H = \frac{1}{P}\,\mathbf{X}_{T/k}\mathbf{X}_{T/k}^H \tag{12.49}$$

where $\mathbf{x}_m$ denotes the mth column of $\mathbf{X}_{T/k}$. A fundamental question centers on how many training samples, $P$, lead to an acceptable covariance matrix estimate. This matter was addressed in [17] and is considered in further detail in Section 2.12.2.4.2.
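The training-set construction and the SMI weight of (12.44) and (12.49) can be sketched as follows. This is a minimal NumPy illustration, assuming a single CUT, a symmetric training window with $G$ guard cells per side, and simulated IID complex Gaussian snapshots drawn from a hypothetical true covariance. `training_indices` and `smi_weight` are illustrative names, and $\hat{\beta} = 1$.

```python
import numpy as np

def training_indices(k, num_cells, G, P):
    """Hypothetical symmetric training window for a single CUT k: P//2 cells on
    each side, skipping G guard cells on either side (cf. (12.48)).
    Cells falling outside [0, num_cells) are simply dropped."""
    half = P // 2
    left = range(k - G - half, k - G)
    right = range(k + G + 1, k + G + 1 + half)
    return [i for i in (*left, *right) if 0 <= i < num_cells]

def smi_weight(X, k, G, P, v):
    """SMI weight R_hat^{-1} v, with R_hat the sample covariance (12.49)."""
    Z = X[:, training_indices(k, X.shape[1], G, P)]
    R_hat = Z @ Z.conj().T / Z.shape[1]
    return np.linalg.solve(R_hat, v)

rng = np.random.default_rng(4)
NM, L = 16, 200                                            # DoFs, range cells in the CPI
A = rng.standard_normal((NM, NM)) + 1j * rng.standard_normal((NM, NM))
R = A @ A.conj().T / NM + np.eye(NM)                       # hypothetical true R_k
C = np.linalg.cholesky(R)
W = (rng.standard_normal((NM, L)) + 1j * rng.standard_normal((NM, L))) / np.sqrt(2)
X = C @ W                                                  # IID CN(0, R) snapshots, cf. (12.47)

s = np.exp(2j * np.pi * 0.05 * np.arange(NM))              # hypothetical steering vector
k, G, P = 100, 2, 5 * NM                                   # CUT, guard cells, training size
w_hat = smi_weight(X, k, G, P, s)
sinr_hat = abs(np.vdot(w_hat, s)) ** 2 / np.real(np.vdot(w_hat, R @ w_hat))
loss = sinr_hat / np.real(np.vdot(s, np.linalg.solve(R, s)))   # adaptive SINR loss
```

Evaluating the adaptive weight against the true covariance gives the adaptive SINR loss discussed in Section 2.12.2.4.2.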

2.12.2.4 Metrics

In radar, performance is generally measured according to the specific goals of the collection and processing mode. In moving target indication (MTI) radar, probability of detection, ![]() , and false alarm rate (FAR)—or probability of false alarm,

, and false alarm rate (FAR)—or probability of false alarm, ![]() —are the primary measures of performance. As discussed in Section 2.12.2.1, the probability of detection is a monotonic function of output SINR for a fixed false alarm rate. Thus, measures of SINR are key to understanding achievable system performance. Among these measures, SINR loss metrics are preferable, since they characterize performance over certain independent variables—usually range rate or Doppler frequency, as shown in Figure 12.3—and are not tied to a specific radar cross section, thus folding into traditional link budget analysis. Specifically, given SNR calculated from the radar range equation [1,7,8] or measured in the field, the output SINR is

—are the primary measures of performance. As discussed in Section 2.12.2.1, the probability of detection is a monotonic function of output SINR for a fixed false alarm rate. Thus, measures of SINR are key to understanding achievable system performance. Among these measures, SINR loss metrics are preferable, since they characterize performance over certain independent variables—usually range rate or Doppler frequency, as shown in Figure 12.3—and are not tied to a specific radar cross section, thus folding into traditional link budget analysis. Specifically, given SNR calculated from the radar range equation [1,7,8] or measured in the field, the output SINR is

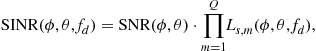

(12.50)

(12.50)

where ![]() is the mth SINR loss term and

is the mth SINR loss term and ![]() . (N.b., the term “SINR loss” is widely used, even though such losses are negative valued on a decibel scale. This oftentimes contradicts typical radar systems engineering usage. However, the losses are numerator terms in the full link calculation.) In general, SINR losses vary with angle and Doppler, since the interference PSD likewise varies.

. (N.b., the term “SINR loss” is widely used, even though such losses are negative valued on a decibel scale. This oftentimes contradicts typical radar systems engineering usage. However, the losses are numerator terms in the full link calculation.) In general, SINR losses vary with angle and Doppler, since the interference PSD likewise varies.
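In decibels, (12.50) becomes simple link-budget bookkeeping: the output SINR is the SNR plus a sum of nonpositive SINR-loss terms. The Python sketch below is minimal. The function name and the SNR and loss values are hypothetical and serve only as an illustration.

```python
import math

def output_sinr_db(snr_db, losses_db):
    """(12.50) in decibels: SINR = SNR * prod(L_m) becomes a sum of dB terms.

    Each SINR loss L_m lies in (0, 1], so its dB value is <= 0.
    """
    if any(l > 0 for l in losses_db):
        raise ValueError("SINR losses must be <= 0 dB")
    return snr_db + sum(losses_db)

# Hypothetical numbers: 20 dB SNR, 2 dB clairvoyant loss, 1 dB adaptive loss
sinr_db = output_sinr_db(20.0, [-2.0, -1.0])
sinr_lin = 10 ** (20.0 / 10) * 10 ** (-2.0 / 10) * 10 ** (-1.0 / 10)   # linear product
sinr_db_check = 10 * math.log10(sinr_lin)                             # back to dB
```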

In imaging radar, terrain-to-noise ratio (TNR) is a primary metric and is a function of multiplicative noise ratio and SINR loss. We focus our attention specifically on MTI radar, but the basic metrics are adaptable.

2.12.2.4.1 Clairvoyant SINR loss

Clairvoyant SINR loss is the ratio of output SINR for a filter implementation, ![]() , where all parameters are known precisely, to the maximum SNR. Clairvoyant SINR is given as

, where all parameters are known precisely, to the maximum SNR. Clairvoyant SINR is given as

(12.51)

(12.51)

A few cases are worth considering:

Case 1—Noise-Limited Condition: In this case, ![]() , where

, where ![]() is the noise power. Then, the maximum SINR weight vector,

is the noise power. Then, the maximum SINR weight vector,

![]() (12.52)

(12.52)

and so

(12.53)

(12.53)

As expected, the optimal weighting defaults to the matched filter and there is no loss relative to the bound defined by the uncorrelated noise.

Case 2—Color-Limited Condition, Matched Filter Weights: Clairvoyant loss characterizes the impact of interference on detection performance. Consider the case where the weight vector is set to the space-time matched filter, ![]() . The clairvoyant SINR loss follows from (12.51) as

. The clairvoyant SINR loss follows from (12.51) as

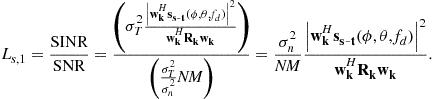

(12.54)

(12.54)

where ![]() is the power spectral density (PSD), equal to the two-dimensional Fourier transform of the space-time covariance matrix [12]; Figure 12.1 provides example clutter-plus-noise PSD plots. Equation (12.54) shows the impact of interference on performance relative to the noise-limited case. Since the PSD is diffraction-limited (the mainlobe is determined by the size of the space-time aperture), (12.54) characterizes the performance impacts of using the deterministic matched filter. At those angles and Doppler frequencies away from the clutter angle-Doppler region of support, the clairvoyant SINR loss approaches the noise-limited case.

is the power spectral density (PSD), equal to the two-dimensional Fourier transform of the space-time covariance matrix [12]; Figure 12.1 provides example clutter-plus-noise PSD plots. Equation (12.54) shows the impact of interference on performance relative to the noise-limited case. Since the PSD is diffraction-limited (the mainlobe is determined by the size of the space-time aperture), (12.54) characterizes the performance impacts of using the deterministic matched filter. At those angles and Doppler frequencies away from the clutter angle-Doppler region of support, the clairvoyant SINR loss approaches the noise-limited case.

Case 3—Color-Limited Condition, Optimal Weights: Using the maximum SINR weight vector, ![]() , in (12.51), gives

, in (12.51), gives

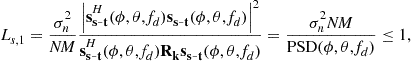

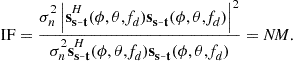

(12.55)

(12.55)

where ![]() is a sample of the MVDR spectrum given in (12.18). Considering the MVDR plots in Figure 12.1, (12.55) suggests regions of loss confined to the sharp, super-resolution contours of the clutter MVDR response (note the inverse in (12.55)). For this reason, STAP is able to detect targets in close proximity to the center of the clutter angle-Doppler region of support. In contrast, (12.54) suggests that SINR loss extends to the full width of the diffraction-limited spectrum when using nonadaptive weights. Figure 12.25, given in Section 2.12.5.3, compares SINR loss for adaptive and nonadaptive solutions, confirming these observations.

is a sample of the MVDR spectrum given in (12.18). Considering the MVDR plots in Figure 12.1, (12.55) suggests regions of loss confined to the sharp, super-resolution contours of the clutter MVDR response (note the inverse in (12.55)). For this reason, STAP is able to detect targets in close proximity to the center of the clutter angle-Doppler region of support. In contrast, (12.54) suggests that SINR loss extends to the full width of the diffraction-limited spectrum when using nonadaptive weights. Figure 12.25, given in Section 2.12.5.3, compares SINR loss for adaptive and nonadaptive solutions, confirming these observations.
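Cases 2 and 3 can be compared on a toy clutter model. The NumPy sketch below makes several simplifying assumptions:

* a side-looking, half-wavelength uniform linear array;
* a clutter ridge whose normalized Doppler equals its spatial frequency;
* equal patch powers, no antenna pattern, and no range ambiguities.

It evaluates (12.51) along a broadside Doppler cut, once for the matched filter and once for the optimal weights. All names are illustrative.

```python
import numpy as np

N, M = 4, 8                          # hypothetical channels and pulses
NM = N * M
sigma_n2, cnr_patch = 1.0, 1.0       # noise power and per-patch CNR (linear)

def st_steer(theta, fd):
    """s_t(fd) ⊗ s_s(theta) for a hypothetical ULA; normalized frequencies."""
    return np.kron(np.exp(2j * np.pi * fd * np.arange(M)),
                   np.exp(2j * np.pi * theta * np.arange(N)))

# Hypothetical side-looking clutter ridge: normalized Doppler equals spatial
# frequency (slope of one), equal patch powers, no antenna pattern weighting.
R = sigma_n2 * np.eye(NM, dtype=complex)
for phi in np.linspace(-np.pi / 2, np.pi / 2, 181):
    th = 0.5 * np.sin(phi)           # half-wavelength element spacing
    c = st_steer(th, th)
    R += cnr_patch * sigma_n2 * np.outer(c, c.conj())

def clairvoyant_loss(w, s):
    """(12.51): SINR(w) / SNR_max for unit-modulus s (so s^H s = NM)."""
    return sigma_n2 * abs(np.vdot(w, s)) ** 2 / (NM * np.real(np.vdot(w, R @ w)))

fds = np.linspace(-0.5, 0.5, 101)    # normalized Doppler cut at broadside (theta = 0)
L_mf = np.array([clairvoyant_loss(st_steer(0.0, f), st_steer(0.0, f)) for f in fds])
L_opt = np.array([clairvoyant_loss(np.linalg.solve(R, st_steer(0.0, f)), st_steer(0.0, f))
                  for f in fds])
```

`L_opt` never exceeds one and never falls below `L_mf`. Both losses are deep at the mainlobe clutter Doppler (index 50, zero Doppler).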

2.12.2.4.2 Adaptive SINR loss

Adaptive SINR loss is the ratio of the output SINR of the filter using estimated quantities, $\hat{\mathbf{w}}_k$, to the output SINR of the optimal filter, $\mathbf{w}_k$, for which all parameters are clairvoyantly known, viz.

$$L_{s,2} = \frac{\mathrm{SINR}(\hat{\mathbf{w}}_k)}{\mathrm{SINR}(\mathbf{w}_k)} \tag{12.56}$$

Observe that when $\hat{\mathbf{w}}_k = \mathbf{w}_k$, $L_{s,2} = 1$. Moreover, $0 \le L_{s,2} \le 1$, since $\mathbf{w}_k$ yields the maximum output SINR and so the denominator in (12.56) will always be greater than or equal to the numerator.

Substituting (12.43) and (12.44) into (12.56) yields

$$L_{s,2} = \frac{\sigma_s^2\left|\mathbf{v}_{s-t}^H\hat{\mathbf{R}}_k^{-1}\mathbf{s}_{s-t}\right|^2}{\left(\mathbf{v}_{s-t}^H\hat{\mathbf{R}}_k^{-1}\mathbf{R}_k\hat{\mathbf{R}}_k^{-1}\mathbf{v}_{s-t}\right)\mathrm{SINR}_{\max}} \tag{12.57}$$

where $\mathrm{SINR}_{\max} = \sigma_s^2\,\mathbf{s}_{s-t}^H\mathbf{R}_k^{-1}\mathbf{s}_{s-t}$ is given by (12.7). Assuming $\hat{\mathbf{R}}_k$ uses (12.49) in its calculation, as (12.57) suggests, determining $P$ is critical. This important matter was addressed in [17], where it is shown that (12.57) is Beta distributed (for a perfectly matched steering vector and IID Gaussian training data), with mean value

$$E\left[L_{s,2}\right] = \frac{P + 2 - NM}{P + 1} \tag{12.58}$$

Setting (12.58) equal to $1/2$ (or, 3 dB of loss) and solving for $P$ yields

$$P = 2NM - 3 \tag{12.59}$$

It is popular to refer to the result in (12.59), where setting $P$ roughly equal to twice the processor’s DoFs yields 3 dB of loss, as the Reed-Mallett-Brennan (RMB) rule, after its originators. In practice, 3 dB of loss is substantial, and so choosing $P$ to be at least five times the processor’s DoFs is desirable.

The IID assumption inherent in the calculation of (12.57) sets the bound on performance for the adaptive processor given $P$ homogeneous training samples. We address the impact of non-IID clutter conditions in further detail in Section 2.12.5.
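A short Monte Carlo run illustrates the RMB result of (12.58)–(12.59). The NumPy sketch below rests on these assumptions:

* IID circular complex Gaussian training data drawn from a hypothetical covariance;
* a steering vector known exactly, $\mathbf{v}_{s-t} = \mathbf{s}_{s-t}$;
* $\hat{\beta} = 1$.

The function names are illustrative. With $P = 2NM - 3$ samples, the average adaptive SINR loss should sit near one half.

```python
import numpy as np

def rmb_mean_loss(P, NM):
    """Mean adaptive SINR loss (12.58) for P IID training samples and NM DoFs."""
    return (P + 2 - NM) / (P + 1)

def rmb_training_for_3db(NM):
    """Training size (12.59) giving an average 3 dB adaptive SINR loss."""
    return 2 * NM - 3

rng = np.random.default_rng(2)
NM = 8
P = rmb_training_for_3db(NM)                                # 13 samples
A = rng.standard_normal((NM, NM)) + 1j * rng.standard_normal((NM, NM))
R = A @ A.conj().T / NM + np.eye(NM)                        # hypothetical true R_k
C = np.linalg.cholesky(R)
s = np.exp(2j * np.pi * 0.15 * np.arange(NM))               # v_{s-t} = s_{s-t}: no mismatch
sinr_opt = np.real(np.vdot(s, np.linalg.solve(R, s)))

losses = []
for _ in range(2000):                                       # Monte Carlo trials
    W = (rng.standard_normal((NM, P)) + 1j * rng.standard_normal((NM, P))) / np.sqrt(2)
    X = C @ W                                               # IID CN(0, R) training data
    R_hat = X @ X.conj().T / P                              # (12.49)
    w_hat = np.linalg.solve(R_hat, s)                       # (12.44) with beta_hat = 1
    sinr_hat = abs(np.vdot(w_hat, s)) ** 2 / np.real(np.vdot(w_hat, R @ w_hat))
    losses.append(sinr_hat / sinr_opt)                      # (12.57); sigma_s^2 cancels
mean_loss = float(np.mean(losses))
```

Raising `P` toward `5 * NM` moves the predicted mean loss to roughly 0.8, or about 1 dB.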

2.12.2.4.3 Improvement factor

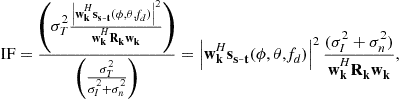

Improvement factor (IF) is given as the ratio of the output SINR to the input (element-level) SINR [18,19]. IF is given as

(12.60)

(12.60)

where ![]() is the input (element-level) interference power.

is the input (element-level) interference power.

Case 1—Noise-Limited Condition: In the noise-limited case, ![]() and

and ![]() , and considering the matched filter,

, and considering the matched filter, ![]() , then

, then

(12.61)

(12.61)

As expected, the improvement factor equals the space-time integration gain in this instance.

Case 2—Clutter-Limited Condition, Matched Filter Weights: In the clutter-limited case, with ![]() , the improvement factor is

, the improvement factor is

![]() (12.62)

(12.62)

The presence of interference in the PSD degrades the improvement factor. If we let ![]() and

and ![]() in (12.62), we arrive at (12.61).

in (12.62), we arrive at (12.61).

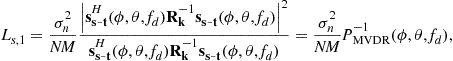

Case 3—Clutter-Limited Condition, Optimal Weights: Using the maximum SINR weight vector, ![]() , in (12.60), gives

, in (12.60), gives

(12.63)

(12.63)

This expression indicates reduced improvement for those angle-Doppler locations aligning with the clutter, and otherwise good performance in proximity to the clutter response owing to the super-resolution characteristic of ![]() (see Figure 12.1).

(see Figure 12.1).
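The three improvement-factor cases can be reproduced with a single function implementing (12.60). The NumPy sketch below assumes unit-modulus steering vectors, so the element-level interference power equals the source power. It uses one hypothetical interference source in place of clutter. `improvement_factor` is an illustrative name.

```python
import numpy as np

def improvement_factor(w, R, s, sigma_in2):
    """(12.60): output SINR over element-level input SINR.

    sigma_in2 = sigma_i^2 + sigma_n^2 is the element-level interference-plus-noise
    power; the target power sigma_s^2 cancels in the ratio.
    """
    return sigma_in2 * abs(np.vdot(w, s)) ** 2 / np.real(np.vdot(w, R @ w))

NM, sigma_n2 = 32, 2.0
s = np.exp(2j * np.pi * 0.3 * np.arange(NM))                # hypothetical target response
# Case 1: noise only, matched filter; IF should equal NM, (12.61)
if_noise = improvement_factor(s, sigma_n2 * np.eye(NM), s, sigma_n2)

# Cases 2 and 3: one hypothetical interference source with unit-modulus response c
sigma_i2 = 1e3
c = np.exp(2j * np.pi * 0.25 * np.arange(NM))
R = sigma_n2 * np.eye(NM) + sigma_i2 * np.outer(c, c.conj())
if_mf = improvement_factor(s, R, s, sigma_i2 + sigma_n2)                       # (12.62)
if_opt = improvement_factor(np.linalg.solve(R, s), R, s, sigma_i2 + sigma_n2)  # (12.63)
```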

2.12.2.4.4 Optimal and adaptive filter patterns

The filter gain pattern follows directly by evaluating the filter response over the angles (or spatial frequencies) and Doppler frequencies of interest. Define the space-time steering matrix,

$$\mathbf{S} = \left[\mathbf{s}_{s-t}(\phi_1, f_{d,1}) \;\; \mathbf{s}_{s-t}(\phi_2, f_{d,1}) \;\; \cdots \;\; \mathbf{s}_{s-t}(\phi_{N_\phi}, f_{d,N_f})\right] \tag{12.64}$$

for all $\phi_i$, $i = 1, \ldots, N_\phi$, and $f_{d,j}$, $j = 1, \ldots, N_f$. The optimal gain pattern is

$$\mathbf{g}_k = \left|\mathbf{S}^H\mathbf{w}_k\right|^2 \tag{12.65}$$

where $|\cdot|^2$ is applied element-wise and $\mathbf{w}_k$ is the optimal weighting given by (12.43). The adaptive gain pattern follows similarly as

$$\hat{\mathbf{g}}_k = \left|\mathbf{S}^H\hat{\mathbf{w}}_k\right|^2 \tag{12.66}$$

with (12.44) yielding $\hat{\mathbf{w}}_k$.

Example gain patterns corresponding to the clutter environments shown in Figure 12.1 are given in Figure 12.2. These gain patterns correspond to (12.65).
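Evaluating (12.64)–(12.66) amounts to stacking steering vectors over an angle-Doppler grid and applying the weight to all of them at once. The NumPy sketch below makes these assumptions:

* a uniform linear array with normalized frequencies;
* a look direction of broadside at a normalized Doppler of 0.25;
* a single hypothetical 40 dB interferer standing in for clutter.

The interferer is used so that the adaptive null is easy to see. All names are illustrative.

```python
import numpy as np

N, M = 4, 8
NM = N * M
thetas = np.linspace(-0.5, 0.5, 61)          # normalized spatial frequencies
fds = np.linspace(-0.5, 0.5, 81)             # normalized Doppler frequencies

def st_steer(theta, fd):
    """s_t(fd) ⊗ s_s(theta) for a hypothetical ULA."""
    return np.kron(np.exp(2j * np.pi * fd * np.arange(M)),
                   np.exp(2j * np.pi * theta * np.arange(N)))

# (12.64): one steering-vector column per (Doppler, angle) grid point
S = np.stack([st_steer(th, f) for f in fds for th in thetas], axis=1)

def gain_pattern(w):
    """(12.65)/(12.66): |w^H s|^2 over the grid, shaped (Doppler, angle)."""
    return (np.abs(S.conj().T @ w) ** 2).reshape(len(fds), len(thetas))

s_tgt = st_steer(0.0, 0.25)                   # hypothetical look direction
s_int = st_steer(0.1, 0.25)                   # hypothetical strong interferer, 40 dB INR
R = np.eye(NM) + 1e4 * np.outer(s_int, s_int.conj())

G_mf = gain_pattern(s_tgt)                    # nonadaptive (matched) pattern
w_opt = np.linalg.solve(R, s_tgt)             # optimal weight, (12.43) with beta = 1
G_opt = gain_pattern(w_opt)                   # optimal pattern, (12.65)
null_depth = abs(np.vdot(w_opt, s_int)) ** 2 / abs(np.vdot(w_opt, s_tgt)) ** 2
```

Passing an SMI weight to `gain_pattern` instead of `w_opt` gives the adaptive pattern of (12.66).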

2.12.3 Signal models

In this section we consider basic space-time signal and covariance models.

2.12.3.1 Clutter

A plethora of reflected signals from the Earth’s surface comprise what is known as radar ground clutter. Ground clutter is a primary impediment to the detection of moving targets. The clutter signal is predominantly made up of signal reflections from distributed objects, such as returns from soil, forests, fields, etc. Discrete clutter sources occur less frequently; they are spatially distributed with a particular density as a function of RCS and produce strong, point-like returns. Since clutter discrete returns occur relatively infrequently, they are poorly represented in training data, so their suppression is more challenging and they tend to drive false alarm rate. Many discrete returns result from manmade objects, like buildings, water towers, utility poles, etc. Fence lines, train tracks, and power lines are examples of extended sources of discrete clutter.

We discuss basic distributed and discrete clutter models.

2.12.3.1.1 Distributed clutter

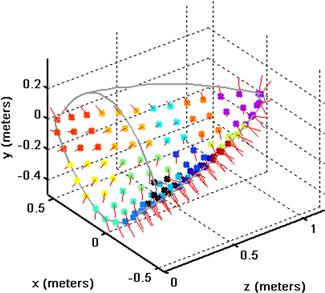

Distributed clutter returns result from the integrated response of all scatterers within the range resolution cell. A model for distributed clutter involves discretizing the range resolution cell into fine angle bins called clutter patches. The location of the angle bin relative to the platform velocity vector determines the clutter patch Doppler frequency. The clutter snapshot then follows as the sum of the returns from each of the individual patches.

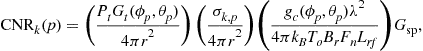

Figure 12.6 depicts the discretized clutter patch model for distributed clutter. The antenna gain varies around the range resolution annulus on the Earth’s surface in accordance with the array normal and steering direction. It is common to model the clutter complex voltage as a Gaussian random variable due to the constructive and destructive sum of the returns from the sub-scatterers comprising each resolution cell. The patch clutter-to-noise ratio (CNR) at the channel-level (assuming matched channels) is

$$\xi_{k,p} = \frac{P_t\,G_t(\phi_p,\theta_p)\,g(\phi_p,\theta_p)\,\lambda_o^2\,\sigma_{k,p}\,G_{sp}}{(4\pi)^3\,r_k^4\,k_B T_o B_r F_n L_s} \tag{12.67}$$

where $P_t$ is the transmit power, $G_t(\phi_p,\theta_p)$ is the transmit gain in the pth direction of interest, $r_k$ is the slant range, $\sigma_{k,p}$ is the pth clutter patch radar cross section, $g(\phi_p,\theta_p)$ is the receive channel gain, $\lambda_o$ is the center wavelength, $k_B$ is Boltzmann’s constant, $T_o$ is standard operating temperature, $B_r$ is the receiver bandwidth, $F_n$ is noise figure, $L_s$ is RF system loss, and $G_{sp}$ is signal processing gain (the pulse compression ratio, in this case) [1,7,8]. The clutter RCS is a function of the patch reflectivity, $\sigma_o$, and area, $A_{k,p}$, where the area is a fraction of the resolution cell,

$$\sigma_{k,p} = \sigma_o(\psi_{k,p})\,A_{k,p}, \qquad A_{k,p} = r_k\,\Delta\phi\,\Delta r\,\sec\psi_{k,p} \tag{12.68}$$

with $\Delta\phi$ the azimuth extent of the patch and $\Delta r$ the slant-range resolution. The constant gamma model is a common choice used to model reflectivity and is given as $\sigma_o(\psi_{k,p}) = \gamma\sin\psi_{k,p}$, where $\gamma$ is a normalized reflectivity term dependent on the terrain type and $\psi_{k,p}$ is the grazing angle to the clutter patch [20]. The clutter patch power then follows by multiplying (12.67) by the receiver noise power, $\sigma_n^2 = k_B T_o B_r F_n$. The clutter voltage for the kth range bin and pth patch is then

$$\mathbf{c}_{k,p} = \sqrt{\sigma_n^2\,\xi_{k,p}}\;\alpha_{k,p}\;\mathbf{s}_t(f_{d,p})\otimes\mathbf{s}_s(\phi_p,\theta_p) \tag{12.69}$$

where $\alpha_{k,p} \sim \mathcal{CN}(0,1)$ [9–11,18,19]. (If the response is non-fluctuating, as in the case of a clutter discrete subsequently discussed, then $\alpha_{k,p}$ is a complex scalar with unity magnitude and uniformly distributed phase.)
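The patch CNR of (12.67)–(12.68) with constant-gamma reflectivity is a one-line radar-equation calculation. The Python sketch below uses the flat-earth patch-area approximation, which is an assumption. All parameter values are hypothetical and purely illustrative. `patch_cnr` is our own helper name.

```python
import math

K_B = 1.380649e-23     # Boltzmann's constant, J/K
T_O = 290.0            # standard temperature, K

def patch_cnr(Pt, Gt, g, wavelength, r, gamma, graze, dphi, dr, Br, Fn, Ls, Gsp):
    """Patch CNR per (12.67)-(12.68) with constant-gamma reflectivity (linear units).

    graze is the grazing angle (rad), dphi the patch azimuth width (rad) and
    dr the slant-range resolution (m); the area formula is a flat-earth
    approximation and is an assumption of this sketch.
    """
    sigma0 = gamma * math.sin(graze)                   # constant gamma model
    area = r * dphi * dr / math.cos(graze)             # patch area, m^2
    rcs = sigma0 * area                                # patch RCS, m^2
    noise = K_B * T_O * Br * Fn                        # receiver noise power, W
    return (Pt * Gt * g * wavelength ** 2 * rcs * Gsp) / ((4 * math.pi) ** 3 * r ** 4 * noise * Ls)

# Hypothetical X-band example (all values illustrative)
params = dict(Pt=1e3, Gt=10 ** 3.0, g=10 ** 1.0, wavelength=0.03, gamma=10 ** -1.0,
              graze=math.radians(5), dphi=2 * math.pi / 360, dr=15.0, Br=10e6,
              Fn=10 ** 0.3, Ls=10 ** 0.4, Gsp=100.0)
cnr_db = 10 * math.log10(patch_cnr(r=20e3, **params))
```

If the grazing angle is held fixed, the patch area grows as $r$ while spreading loss goes as $r^{-4}$, so CNR falls as $r^{-3}$.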

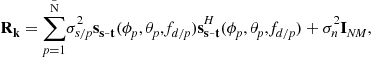

Figure 12.6 Illustration of space-time clutter patch calculation (after [9]). © 2003 IEEE

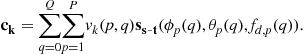

The patch voltage in (12.69) varies over the space-time aperture: there is a phase change from channel-to-channel due to direction of arrival, described by the spatial steering vector; and, a phase change from pulse-to-pulse due to the change in range-rate between the clutter patch and the radar platform, described by the temporal steering vector. The clutter space-time snapshot then follows by summing the voltages over the P patches for each of Q range ambiguities, where ![]() is the first (unambiguous) range return

is the first (unambiguous) range return

(12.70)

(12.70)

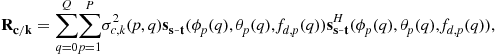

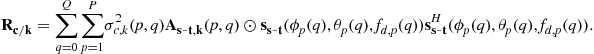

It is common to assume each patch is statistically independent, in which case the clutter covariance matrix corresponding to (12.70) is

(12.71)

(12.71)

where ![]() is the clutter patch power and follows from (12.69). Equations (12.70) and (12.71) form the basic ground clutter models.

is the clutter patch power and follows from (12.69). Equations (12.70) and (12.71) form the basic ground clutter models.

The clutter voltage decorrelates, mainly in time, due to intrinsic clutter motion (ICM). Windswept vegetation and moving water are two cases where ICM results. Two basic models describing clutter temporal decorrelation are the Billingsley model [21] and the Gaussian model [7,10]. The Billingsley model is most common for overland surveillance; it allows a certain amount of the clutter power to decorrelate, say due to leaves fluctuating in the breeze, whilst some of the clutter power is persistent, thus modeling the tree trunk, for example. The Billingsley model leads to an exponential autocorrelation function. In contrast, the Gaussian power spectrum of [7] leads to a Gaussian autocorrelation; the Gaussian model leads to complete decorrelation over a specified time interval and is most appropriate in marine or riverine environments. Dispersion (group delay) across the array is the common source of spatial decorrelation; a sinc autocorrelation model is commonly chosen to model this effect, since it is the inverse transform of a rectangular function in the frequency domain [22,23].

Given the aforementioned discussion, define the length-NM space-time correlation taper as $\mathbf{a}_k = \mathbf{a}_t \otimes \mathbf{a}_s$, with correlation matrix $\mathbf{R}_a = E\left[\mathbf{a}_k\mathbf{a}_k^H\right] = \mathbf{R}_{a_t} \otimes \mathbf{R}_{a_s}$. $\mathbf{R}_{a_t}$ is the temporal correlation matrix and $\mathbf{R}_{a_s}$ is the spatial correlation matrix. Using the notation of (12.71), the resulting clutter covariance matrix is

$$\mathbf{R}_{c/k} = \sum_{q=1}^{Q}\sum_{p=1}^{P}\sigma_{c/k_q,p}^2\left(\mathbf{s}_{s-t/q,p}\,\mathbf{s}_{s-t/q,p}^H\right)\odot\mathbf{R}_a \tag{12.72}$$

Generating space-time clutter snapshots exhibiting the correlation described by $\mathbf{R}_a$ typically involves shaping white noise. The matrix square roots of $\mathbf{R}_{a_t}$ and $\mathbf{R}_{a_s}$ multiply random vectors of the appropriate length (N or M), whose elements are uncorrelated, zero mean, unity variance Gaussian variates.
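The tapered clutter covariance (12.72) and the noise-shaping recipe for snapshots can be sketched together. The NumPy code below makes several assumptions:

* a Gaussian ICM model with a hypothetical spectral spread;
* no spatial decorrelation (narrowband);
* a side-looking ridge of equal-power patches with a single range ambiguity.

The helpers `gaussian_temporal_corr`, `psd_factor` and `clutter_snapshot` are illustrative names. The random taper $(\mathbf{F}_t\mathbf{z}_t)\otimes(\mathbf{F}_s\mathbf{z}_s)$ reproduces the second-order statistics of $\mathbf{R}_a$.

```python
import numpy as np

N, M = 4, 8
NM = N * M

def st_steer(theta, fd):
    """s_t(fd) ⊗ s_s(theta) for a hypothetical ULA."""
    return np.kron(np.exp(2j * np.pi * fd * np.arange(M)),
                   np.exp(2j * np.pi * theta * np.arange(N)))

def gaussian_temporal_corr(M, sigma_v):
    """Hypothetical Gaussian ICM model: Gaussian clutter spectrum with standard
    deviation sigma_v (normalized by the PRF) -> rho(m) = exp(-2 (pi sigma_v m)^2)."""
    lag = np.arange(M)[:, None] - np.arange(M)[None, :]
    return np.exp(-2.0 * (np.pi * sigma_v * lag) ** 2)

R_at = gaussian_temporal_corr(M, 0.01)       # temporal correlation matrix
R_as = np.ones((N, N))                       # no spatial decorrelation (narrowband)
R_a = np.kron(R_at, R_as)                    # taper correlation R_at ⊗ R_as

# Hypothetical side-looking ridge with equal patch powers (Q = 1, no ambiguities)
patches = [st_steer(0.5 * np.sin(phi), 0.5 * np.sin(phi))
           for phi in np.linspace(-np.pi / 2, np.pi / 2, 91)]
powers = [10.0] * len(patches)

R_c = sum(p * np.outer(v, v.conj()) for p, v in zip(powers, patches)) * R_a   # (12.72)

def psd_factor(C):
    """F with F F^H = C (eigen-based, tolerates rank deficiency)."""
    lam, V = np.linalg.eigh(C)
    return V * np.sqrt(np.clip(lam, 0.0, None))

F_t, F_s = psd_factor(R_at), psd_factor(R_as)

def clutter_snapshot(rng):
    """One snapshot with covariance R_c, built from a random taper per patch."""
    c = np.zeros(NM, dtype=complex)
    for p, v in zip(powers, patches):
        z_t = (rng.standard_normal(M) + 1j * rng.standard_normal(M)) / np.sqrt(2)
        z_s = (rng.standard_normal(N) + 1j * rng.standard_normal(N)) / np.sqrt(2)
        a = np.kron(F_t @ z_t, F_s @ z_s)    # E[a a^H] = R_at ⊗ R_as
        c += np.sqrt(p) * a * v              # Hadamard taper on the patch response
    return c

x = clutter_snapshot(np.random.default_rng(5))
```

Because the Hadamard product distributes over the sum, the taper can be applied once to the summed patch covariance. The Schur product theorem keeps the result positive semidefinite.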

2.12.3.1.2 Discrete clutter

Clutter discretes are strong returns that occur relatively infrequently and correspond to stationary, point-like objects, such as parked vehicles, utility poles, water towers, etc. Generally, the RCS of the clutter discrete is large relative to the RCS of a typical, resolved clutter patch. In some cases, the discrete clutter can appear extended, such as in the case of a fence line or train track.

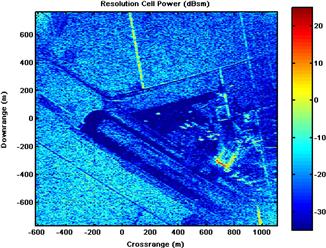

Figure 12.7 shows an example of discrete-like returns in a spotlight synthetic aperture radar (SAR) image of the Mojave Desert Airport. The hangar edges and fence lines, as well as some aircraft on the tarmac, are evident in the figure. Figure 12.8 shows an exceedance plot characterizing the typical impact of clutter discretes on detection performance. (EFA is a post-Doppler STAP method described in Section 2.12.4 and AMF refers to a STAP normalization discussed in Section 2.12.7.) In a homogeneous environment, the output of the STAP is well-behaved and the cumulative distribution follows the expected exponentially-shaped exceedance; in contrast, with discretes present, the tails of the exceedance plot are extended, indicating a requirement to raise the detection threshold to maintain a constant false alarm rate.

Figure 12.7 Spotlight SAR image of Mojave Desert Airport at 1 m resolution ([24]). © 2004 IEEE

A plausible discrete model involves laying out the spatial locations of discrete clutter of varying densities. One way to do this is to specify the density per km² for a particular discrete RCS and employ a Poisson distribution to identify the random clutter discrete locations. An example of a range-angle seeding of discrete clutter of varying RCS from 20 dBsm to 60 dBsm is shown in Figure 12.9.
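The Poisson seeding just described can be sketched as follows. For each RCS class, the sketch draws a Poisson count from density times area and places the discretes uniformly over a range-azimuth sector. This NumPy code assumes flat-earth sector geometry and per-class densities. The densities shown are made up for illustration, and `seed_discretes` is a hypothetical name.

```python
import numpy as np

def seed_discretes(rng, densities, r_min_km, r_max_km, az_min, az_max):
    """Hypothetical discrete-clutter seeding over a range-azimuth sector.

    densities maps RCS (dBsm) -> mean number of discretes per km^2. The count
    in each RCS class is Poisson; locations are uniform over the sector area.
    """
    area = 0.5 * (az_max - az_min) * (r_max_km ** 2 - r_min_km ** 2)   # km^2
    out = []
    for rcs_dbsm, rho in densities.items():
        n = rng.poisson(rho * area)
        u = rng.uniform(size=n)
        r = np.sqrt(r_min_km ** 2 + u * (r_max_km ** 2 - r_min_km ** 2))  # uniform in area
        az = rng.uniform(az_min, az_max, size=n)
        out.extend((float(ri), float(ai), rcs_dbsm) for ri, ai in zip(r, az))
    return out

# Illustrative densities only: rarer discretes at higher RCS
densities = {20: 1.0, 30: 0.3, 40: 0.1, 50: 0.03, 60: 0.01}
discretes = seed_discretes(np.random.default_rng(6), densities,
                           20.0, 40.0, -np.pi / 6, np.pi / 6)
```

Drawing range through a square root keeps the placement uniform per unit area, rather than per unit range.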

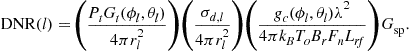

Since discrete clutter is stationary, the angular position uniquely specifies Doppler frequency. In other words, clutter discretes reside along the angle-Doppler region of support corresponding to stationary objects. As in the case of distributed clutter, the discrete-to-noise ratio (DNR) follows in a form similar to (12.67) for the lth discrete at range, ![]() , and angle

, and angle ![]() , as

, as

(12.73)

(12.73)

As the discrete is considered point-like, the RCS is taken as nonfluctuating, whereas the phase is uniformly distributed. The corresponding discrete space-time snapshot is

$$\boldsymbol{\chi}_{k_l/d} = \sqrt{\sigma_n^2\,DNR_l}\;e^{j2\pi u}\;\mathbf{s}_{s-t}(\phi_l,\theta_l,f_{d,l}) \qquad (12.74)$$

where u is a uniformly distributed random variable between 0 and 1, $f_{d,l}$ is the Doppler frequency of a stationary scatterer at $(\phi_l,\theta_l)$, and $k_l$ is the range realization for the lth clutter discrete. The discrete clutter is additive. The corresponding covariance matrix for each discrete is

$$\mathbf{R}_{k_l/d} = \sigma_n^2\,DNR_l\;\mathbf{s}_{s-t}(\phi_l,\theta_l,f_{d,l})\,\mathbf{s}_{s-t}^H(\phi_l,\theta_l,f_{d,l}) \qquad (12.75)$$
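A minimal NumPy sketch of the point-discrete model follows. It assumes a uniform linear array with uniform PRI, a space-time steering vector formed as the Kronecker product of temporal and spatial steering vectors (pulse-major stacking), normalized spatial frequency `f_sp`, and normalized Doppler `f_dT` (Doppler times PRI). The helper names are hypothetical, and the stationary-scatterer mapping f_dT = β·f_sp with β = 2v_pT/d is an assumption that holds for an ideal sidelooking ULA.

```python
import numpy as np

def space_time_steering(f_sp, f_dT, M, N):
    """s_{s-t} = s_t kron s_s; f_sp is normalized spatial frequency, f_dT = f_d * T."""
    s_s = np.exp(2j * np.pi * f_sp * np.arange(M))   # channel-to-channel phase progression
    s_t = np.exp(2j * np.pi * f_dT * np.arange(N))   # pulse-to-pulse phase progression
    return np.kron(s_t, s_s)                          # N stacked length-M blocks

def discrete_snapshot(dnr, f_sp, f_dT, M, N, noise_power, rng):
    """Point discrete per (12.74): nonfluctuating amplitude, phase exp(j*2*pi*u)."""
    u = rng.uniform(0.0, 1.0)
    amp = np.sqrt(noise_power * dnr)
    return amp * np.exp(2j * np.pi * u) * space_time_steering(f_sp, f_dT, M, N)

def discrete_covariance(dnr, f_sp, f_dT, M, N, noise_power):
    """Rank-one covariance per (12.75)."""
    s = space_time_steering(f_sp, f_dT, M, N)
    return noise_power * dnr * np.outer(s, s.conj())

# Usage: sidelooking ULA with beta = 1 (assumed), so the discrete's f_dT = beta * f_sp
rng = np.random.default_rng(1)
M, N, beta, f_sp = 4, 8, 1.0, 0.2
x_d = discrete_snapshot(1e3, f_sp, beta * f_sp, M, N, 1.0, rng)
R_d = discrete_covariance(1e3, f_sp, beta * f_sp, M, N, 1.0)
```

Every element of the snapshot has magnitude sqrt(σ_n²·DNR), and the covariance is Hermitian with rank one, as expected for a single point scatterer.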

2.12.3.2 Radio frequency interference

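Typically, RFI appears as a noise-like, in-band signal source. The RFI may be intentional (jamming) or unintentional. In the narrowband case, the kth spatial snapshot for the ith RFI source and nth pulse is

$$\mathbf{x}_{k,n/i} = a_i(t_k + nT)\,\mathbf{s}_s(\phi_i,\theta_i) \qquad (12.76)$$

where $a_i(t)$ is an uncorrelated source waveform, $t_k$ is the fast-time sample instant of the kth range, $\mathbf{s}_s(\phi_i,\theta_i)$ is the spatial steering vector toward the ith source, and T is the PRI. The waveform, $a_i(t)$, is generally uncorrelated at nonzero lags, viz.,

$$E\left[a_i(t_k + nT)\,a_i^*(t_k + pT)\right] = \sigma_i^2\,\delta(n-p) \qquad (12.77)$$

where $\sigma_i^2$ is the source power and δ(·) is the Kronecker delta,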

but otherwise fully correlated for very short time lags corresponding to propagation across the multichannel array, as (12.76) indicates (thus, $a_i(t - \tau_m) \approx a_i(t)$ for interchannel delays $\tau_m \ll 1/B$, where B is the waveform bandwidth, in the narrowband case). The corresponding spatial covariance matrix is

$$\mathbf{R}_{s/i} = E\left[\mathbf{x}_{k,n/i}\,\mathbf{x}_{k,n/i}^H\right] = \sigma_i^2\,\mathbf{s}_s(\phi_i,\theta_i)\,\mathbf{s}_s^H(\phi_i,\theta_i) \qquad (12.78)$$

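As a result of (12.77), the space-time covariance matrix is block diagonal,

$$\mathbf{R}_i = \mathbf{I}_N \otimes \mathbf{R}_{s/i} \qquad (12.79)$$

where $\mathbf{I}_N$ is the $N \times N$ identity matrix and ⊗ denotes the Kronecker product. Thus, a spatial null is sufficient to remove narrowband RFI, as no pulse-to-pulse (slow-time) correlation is present.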

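Each RFI source is considered statistically independent, so that for J sources, $\mathbf{R}_{rfi} = \sum_{i=1}^{J}\mathbf{R}_i$, where $\boldsymbol{\chi}_{k/i} = \left[\mathbf{x}_{k,1/i}^T\;\;\mathbf{x}_{k,2/i}^T\;\;\cdots\;\;\mathbf{x}_{k,N/i}^T\right]^T$ is the space-time snapshot and $\mathbf{R}_i = E\left[\boldsymbol{\chi}_{k/i}\,\boldsymbol{\chi}_{k/i}^H\right]$.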
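The structure in (12.78) and (12.79) can be checked numerically. The sketch below assumes a ULA, two hypothetical narrowband sources with made-up powers and normalized spatial frequencies, and unit noise. It confirms that J sources occupy J·N dimensions of the MN-dimensional space-time domain, and that a purely spatial minimum-variance beamformer (the same weights applied on every pulse) already nulls both sources.

```python
import numpy as np

def spatial_steering(f_sp, M):
    """ULA steering vector for normalized spatial frequency f_sp (assumed model)."""
    return np.exp(2j * np.pi * f_sp * np.arange(M))

def rfi_covariance(powers, f_sps, M, N):
    """Space-time covariance of J independent narrowband RFI sources, (12.78)-(12.79)."""
    R = np.zeros((M * N, M * N), dtype=complex)
    for p, f in zip(powers, f_sps):
        s = spatial_steering(f, M)
        R_s = p * np.outer(s, s.conj())    # rank-one spatial covariance (12.78)
        R += np.kron(np.eye(N), R_s)       # no slow-time correlation (12.79)
    return R

M, N = 6, 4
R_rfi = rfi_covariance([1e4, 1e3], [0.3, -0.15], M, N)

# Purely spatial adaptive beamformer: first pulse block plus unit noise
R_s_total = R_rfi[:M, :M] + np.eye(M)
w_s = np.linalg.solve(R_s_total, spatial_steering(0.0, M))   # look direction f_sp = 0
```

Because every M×M diagonal block of (12.79) is identical, the spatial weights computed from one pulse apply unchanged to all pulses; no temporal taps are needed for narrowband RFI.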

As the fractional bandwidth of the received signal (the ratio of waveform bandwidth to center frequency) increases, dispersion occurs. Dispersion decorrelates the RFI across the receive array; in this case, a single RFI signal appears as multiple, closely spaced sources in angle [23]. In general, wideband RFI suppression is handled much like the narrowband case, either through subband filtering or through the use of fast-time taps. In the former case, subbanding is commonly implemented using polyphase filtering, allowing the processor to apply the narrowband canceler in each subband prior to recombining [25].

2.12.3.3 Receiver noise

Uncorrelated noise sources, such as receiver noise or sky noise, are modeled as a white Gaussian noise (WGN) disturbance, $n_k(m,n)$, where m and n are channel and pulse indices, respectively, and $n_k(m,n) \sim \mathcal{CN}(0,\sigma_n^2)$. The waveform samples are assumed uncorrelated such that

$$E\left[n_k(m,n)\,n_k^*(p,q)\right] = \sigma_n^2\,\delta(m-p)\,\delta(n-q) \;\;\Rightarrow\;\; \mathbf{R}_n = \sigma_n^2\,\mathbf{I}_{MN} \qquad (12.80)$$

where $\sigma_n^2$ is the channel noise power and $\mathbf{R}_n$ is the space-time noise covariance matrix. This noise source is also independent and identically distributed over the range dimension.
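Since the noise is i.i.d. over range, averaging outer products of noise-only snapshots from many range realizations converges to (12.80). The sketch below draws circular complex Gaussian samples (power split equally between I and Q) and forms that sample average; the noise power, dimensions, and number of realizations are arbitrary assumptions.

```python
import numpy as np

def wgn_snapshots(M, N, K, noise_power, rng):
    """K i.i.d. space-time noise snapshots (columns), each MN x 1 and CN(0, noise_power)."""
    scale = np.sqrt(noise_power / 2.0)    # half the power in I, half in Q
    shape = (M * N, K)
    return scale * (rng.standard_normal(shape) + 1j * rng.standard_normal(shape))

rng = np.random.default_rng(7)
M, N, K = 4, 8, 20000
X = wgn_snapshots(M, N, K, noise_power=2.0, rng=rng)
R_hat = X @ X.conj().T / K               # sample covariance over range realizations
```

With K = 20000 realizations, the estimate lies within a few hundredths of 2·I on every entry.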

2.12.3.4 Target

The target snapshot is generally modeled using both Swerling 1 and Swerling 2 statistics [1,7,8]. Swerling 1 and Swerling 2 targets each exhibit voltages corresponding to a circular Gaussian distribution; the Gaussian nature of the voltage distribution models target fading effects. The target voltage is assumed perfectly correlated within the CPI, in accord with the Swerling 1 model, and uncorrelated from CPI to CPI, according to the Swerling 2 model. Frequency hopping from CPI to CPI is a common cause of target voltage decorrelation and is used deliberately to minimize the impact of target fading on detection performance. Because STAP is a coherent signal processing technique, it is applied on a CPI basis; noncoherent addition (NCA) of the STAP output from CPI to CPI then mitigates target fading effects.

Using the Swerling 1 target model, the target snapshot at the kth range, Doppler frequency, $f_{d,t}$, and angle, $(\phi_t,\theta_t)$, is

$$\boldsymbol{\chi}_{k/t} = \alpha_t\,\mathbf{s}_{s-t}(\phi_t,\theta_t,f_{d,t}) \qquad (12.81)$$

where $\alpha_t \sim \mathcal{CN}(0,\,\sigma_n^2\,SNR)$ and SNR is the single-channel, single-pulse signal-to-noise ratio. The random variable, $\alpha_t$, models target fading resulting from subscatterers adding in and out of phase. As indicated, to handle fading, it is common to frequency hop from CPI to CPI, in which case target voltages appear decorrelated (Swerling 2).

Nonfluctuating, point-target analysis employs (12.81) with $\alpha_t = \sqrt{\sigma_n^2\,SNR}\,e^{j2\pi u}$, where u is uniformly distributed between 0 and 1. Although the nonfluctuating model is sometimes convenient in analysis, the Swerling 2 model is the preferred choice, with the Swerling 1 model applicable at the fixed-frequency, CPI level.
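The three amplitude models for α_t in (12.81) differ only in how the complex voltage is drawn across CPIs. The sketch below is one way to generate them; the model labels, the function name, and the choice to hold a single Swerling 1 draw fixed across all CPIs (modeling a fixed-frequency dwell) are assumptions for illustration.

```python
import numpy as np

def target_amplitudes(model, n_cpi, snr, noise_power, rng):
    """Per-CPI target voltage alpha_t for the models discussed around (12.81).

    'swerling1': one CN(0, noise_power*snr) draw held fixed across CPIs
                 (assumed fixed-frequency dwell, fully correlated).
    'swerling2': an independent CN draw per CPI (e.g., frequency hopping).
    'nonfluct' : constant amplitude, phase exp(j*2*pi*u) with u ~ U(0, 1).
    """
    sigma2 = noise_power * snr

    def cn(size):
        return np.sqrt(sigma2 / 2) * (rng.standard_normal(size) + 1j * rng.standard_normal(size))

    if model == "swerling1":
        return np.full(n_cpi, cn(1)[0])
    if model == "swerling2":
        return cn(n_cpi)
    if model == "nonfluct":
        return np.sqrt(sigma2) * np.exp(2j * np.pi * rng.uniform(0.0, 1.0, n_cpi))
    raise ValueError(f"unknown model: {model}")

rng = np.random.default_rng(3)
a1 = target_amplitudes("swerling1", 8, 10.0, 1.0, rng)
a2 = target_amplitudes("swerling2", 200000, 10.0, 1.0, rng)
a3 = target_amplitudes("nonfluct", 16, 10.0, 1.0, rng)
```

Noncoherently adding |α_t|² over several Swerling 2 CPIs averages out deep fades, which is the motivation for frequency hopping plus NCA described above.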

2.12.3.5 The space-time snapshot

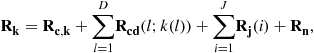

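The space-time snapshot for realization k is given by (12.2) with the addition of clutter discretes; allowing for the possibility of a single target, it is

$$\boldsymbol{\chi}_k = \boldsymbol{\chi}_{k/t} + \boldsymbol{\chi}_{k/c} + \sum_{i=1}^{J}\boldsymbol{\chi}_{k/i} + \sum_{l=1}^{D}\boldsymbol{\chi}_{k_l/d} + \boldsymbol{\chi}_{k/n} \qquad (12.82)$$

where the terms are, respectively, the target, distributed clutter, RFI, discrete, and noise components, D is the total number of discrete scatterers, and the snapshot terms, $\boldsymbol{\chi}_{k_l/d}$, are only added when $k_l = k$. The null-hypothesis (target-absent) covariance matrix corresponding to (12.82) is

$$\mathbf{R}_k = \mathbf{R}_{k/c} + \sum_{i=1}^{J}\mathbf{R}_i + \sum_{l=1}^{D}\mathbf{R}_{k_l/d} + \sigma_n^2\,\mathbf{I}_{MN} \qquad (12.83)$$

where, as expected, $\mathbf{R}_{k_l/d}$ is included only for those terms where $k_l = k$.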

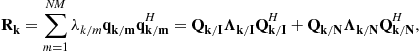

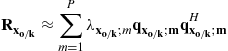

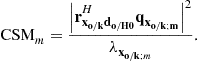
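Because discretes enter (12.83) only at their own range realization, a covariance model has to gate them by range index while the clutter, RFI, and noise terms are always present. A small sketch follows, with hypothetical names and toy identity-scaled matrices standing in for real component covariances.

```python
import numpy as np

def null_hypothesis_covariance(k, R_clutter, R_rfi_list, discretes, noise_power):
    """Assemble R_k per (12.83) for range realization k.

    R_clutter: distributed-clutter covariance at range k (MN x MN).
    R_rfi_list: covariances of independent RFI sources.
    discretes: (k_l, R_l) pairs; R_l is added only when k_l == k.
    """
    MN = R_clutter.shape[0]
    R = R_clutter + noise_power * np.eye(MN)
    for R_i in R_rfi_list:           # independent RFI sources add
        R = R + R_i
    for k_l, R_l in discretes:
        if k_l == k:                 # a discrete contaminates only its own range bin
            R = R + R_l
    return R

# Usage with toy 4 x 4 matrices (values are arbitrary)
I4 = np.eye(4)
disc = [(3, 5.0 * I4), (4, 7.0 * I4)]
R_at_3 = null_hypothesis_covariance(3, np.zeros((4, 4)), [2.0 * I4], disc, 1.0)
R_at_5 = null_hypothesis_covariance(5, np.zeros((4, 4)), [2.0 * I4], disc, 1.0)
```

The range-dependent discrete term is what makes training data heterogeneous: a cell containing a discrete has a different covariance than its neighbors.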

It is common to envision the collection of space-time snapshots organized as space-time data matrices,

$$\mathbf{X}_k = \left[\mathbf{x}_{k,1}\;\;\mathbf{x}_{k,2}\;\;\cdots\;\;\mathbf{x}_{k,N}\right] \in \mathbb{C}^{M\times N} \qquad (12.84)$$

The spatial snapshot for pulse n is denoted as $\mathbf{x}_{k,n}$; the data matrix $\mathbf{X}_k$ results from partitioning $\boldsymbol{\chi}_k$ into N length-M segments and stacking them side by side. The pictorial of (12.84) is given in Figure 12.10 and is known as the radar datacube. Generally, the STAP operates on each space-time data matrix, or slice, of the cube in Figure 12.10, while using slices at other ranges (or realizations) for training. We subsequently describe various adaptive implementations. (Note: space/fast-time processing uses slices along the pulse/fast-time domain and can train over the pulse dimension. As previously mentioned, we focus on space/slow-time adaptivity, but the basic formulations of the next section generally apply to any appropriately formatted data with the corresponding restrictions of each method.)

Figure 12.10 Radar datacube (after [9]). © 2003 IEEE
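The relation between the stacked snapshot χ_k and the data matrix X_k in (12.84), and the idea of training on slices at other ranges, can be sketched with NumPy reshapes. The random data, the dimensions, and the guard-cell exclusion in `training_set` are illustrative assumptions; the text only states that slices at other ranges are used for training.

```python
import numpy as np

M, N, K = 3, 4, 5                        # channels, pulses, range realizations
rng = np.random.default_rng(0)
# Snapshots chi_k stacked pulse by pulse: chi_k = [x_{k,1}; x_{k,2}; ...; x_{k,N}]
chi = rng.standard_normal((K, M * N)) + 1j * rng.standard_normal((K, M * N))

# (12.84): reshape each snapshot to (N, M) so row n is the nth length-M segment,
# then transpose so each segment becomes a column of the M x N slice X_k.
cube = chi.reshape(K, N, M).transpose(0, 2, 1)    # datacube, shape (K, M, N)

def training_set(cube, k, guard=1):
    """Hypothetical helper: vectorized slices at other ranges, skipping k and guard cells."""
    idx = [j for j in range(cube.shape[0]) if abs(j - k) > guard]
    # Transposing back and flattening row-major recovers chi_j from X_j
    return np.stack([cube[j].T.reshape(-1) for j in idx], axis=1)

T_k = training_set(cube, k=2, guard=1)            # columns are chi_0 and chi_4
```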

2.12.4 Adaptive filter implementations

Section 2.12.2.2 describes space-time filter formulations. As seen from that discussion, all solutions are similar: each involves a space-time weighting formed from a covariance inverse and a steering vector, which whitens the interference and then implements the matched filter.

We now discuss three basic paradigms to implement the weighting strategies discussed in Section 2.12.2.2: reduced-rank STAP (RR-STAP), reduced-dimension STAP (RD-STAP), and parametric STAP.

2.12.4.1 Reduced-rank STAP

As seen from (12.78), each narrowband RFI source is spatially rank-one (and therefore, by (12.79), of rank N in the MN-dimensional space-time domain). Distributed clutter is oftentimes of rank significantly less than the dimensionality of $\mathbf{R}_k$, viz. $\mathrm{rank}(\mathbf{R}_{k/c}) \ll MN$. Through empirical analysis, the clutter rank for a sidelooking radar with minimal yaw is approximated as

$$\mathrm{rank}(\mathbf{R}_{k/c}) \approx \left\lfloor M + \beta\,(N-1) \right\rfloor, \qquad \beta = \frac{2\,v_p\,T}{d} \qquad (12.85)$$

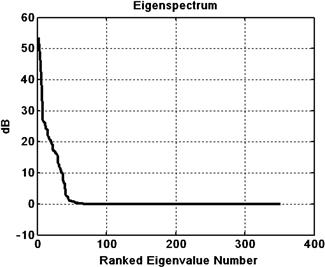

where $v_p$ is the platform along-track velocity, T is the PRI, and d is the separation between channels of a uniform linear array (ULA) [10]. The expression in (12.85) is known as Brennan's Rule; a related rule is given by Klemm [26]. Brennan's rule is closely related to the number of independent antenna channel positions during the collection interval; redundancy in the measurements lowers the rank and, in fact, leads to coloration of the clutter return. The idea behind RR-STAP is essentially to project the interference subspace (the eigenspaces corresponding to eigenvalues above the noise floor) out of the space-time snapshot.
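Brennan's rule can be checked against an eigenvalue count. The sketch below assumes an idealized sidelooking ULA with clutter patches spread uniformly in normalized spatial frequency over one full period, each stationary patch having normalized Doppler β·f_sp. The platform parameters are hypothetical and chosen so that β = 1, where the phase of element (m, n) depends only on m + n, giving exactly M + N − 1 distinct virtual positions.

```python
import numpy as np

def brennan_rank(M, N, v_p, T, d):
    """Brennan's rule (12.85): floor(M + beta*(N - 1)), beta = 2*v_p*T/d."""
    beta = 2.0 * v_p * T / d
    return int(np.floor(M + beta * (N - 1)))

def sidelooking_clutter_cov(M, N, beta, num_patches=360, cnr=1e4):
    """Idealized clutter covariance for a sidelooking ULA (hypothetical helper)."""
    m, n = np.arange(M), np.arange(N)
    R = np.zeros((M * N, M * N), dtype=complex)
    for f_sp in np.arange(num_patches) / num_patches - 0.5:
        # Stationary patch: normalized Doppler is tied to spatial frequency by beta
        v = np.kron(np.exp(2j * np.pi * beta * f_sp * n), np.exp(2j * np.pi * f_sp * m))
        R += (cnr / num_patches) * np.outer(v, v.conj())
    return R

M, N = 8, 16
r_brennan = brennan_rank(M, N, v_p=100.0, T=1e-3, d=0.2)       # beta = 1
lam = np.linalg.eigvalsh(sidelooking_clutter_cov(M, N, beta=1.0) + np.eye(M * N))
r_eig = int(np.sum(lam > 10.0))          # eigenvalues well above the unit noise floor
```

Both counts equal 23 here, far below MN = 128. For non-integer β the rule is only approximate, and the eigenvalue spectrum shows a gradual rather than abrupt roll-off into the noise floor.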

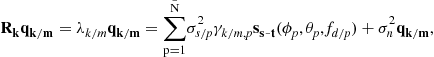

The eigendecomposition of the space-time covariance matrix yields

$$\mathbf{R}_k = \mathbf{Q}\boldsymbol{\Lambda}\mathbf{Q}^H = \sum_{m=1}^{MN}\lambda_m\,\mathbf{q}_m\mathbf{q}_m^H \qquad (12.86)$$

where $\lambda_m$ is the mth eigenvalue corresponding to eigenvector $\mathbf{q}_m$, $\mathbf{Q} = \left[\mathbf{q}_1\;\cdots\;\mathbf{q}_{MN}\right]$, $\boldsymbol{\Lambda} = \mathrm{diag}(\lambda_1,\ldots,\lambda_{MN})$, $\lambda_1 \geq \cdots \geq \lambda_r > \sigma_n^2$ represents the interference subspace eigenvalues for an interference rank r, and $\lambda_{r+1} = \cdots = \lambda_{MN} = \sigma_n^2$ characterizes the noise subspace eigenvalues. The interference eigenvectors have the special property that they collectively span the same subspace as the interference steering vectors. Moreover, $\mathbf{Q}$ is unitary, so

$$\mathbf{Q}^H\mathbf{Q} = \mathbf{Q}\mathbf{Q}^H = \mathbf{I}_{MN}, \qquad \mathbf{R}_k^{-1} = \mathbf{Q}\boldsymbol{\Lambda}^{-1}\mathbf{Q}^H = \sum_{m=1}^{MN}\frac{1}{\lambda_m}\,\mathbf{q}_m\mathbf{q}_m^H \qquad (12.87)$$

We can write the distributed clutter-plus-noise part of (12.83) in a generic, simplified form

$$\mathbf{R}_k = \sum_{p=1}^{P}\sigma_p^2\,\mathbf{v}_p\mathbf{v}_p^H + \sigma_n^2\,\mathbf{I}_{MN} \qquad (12.88)$$

where P is the number of signal sources, $\sigma_p^2$ is the power for the pth signal source, and $\mathbf{v}_p$ is its space-time steering vector. While this simplified covariance form suggests a model for clutter-plus-noise, the subsequent results are extensible to other correlated sources. Then, from (12.88),

$$\mathbf{R}_k\mathbf{q}_m = \sum_{p=1}^{P}\sigma_p^2\,c_{p,m}\,\mathbf{v}_p + \sigma_n^2\,\mathbf{q}_m = \lambda_m\,\mathbf{q}_m \qquad (12.89)$$

where

$$c_{p,m} = \mathbf{v}_p^H\mathbf{q}_m \qquad (12.90)$$

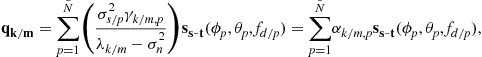

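Solving (12.89) for the mth eigenvector, $\mathbf{q}_m$,

$$\mathbf{q}_m = \sum_{p=1}^{P}\gamma_{p,m}\,\mathbf{v}_p \qquad (12.91)$$

where in this case

$$\gamma_{p,m} = \frac{\sigma_p^2\,c_{p,m}}{\lambda_m - \sigma_n^2} \qquad (12.92)$$

Naturally, these equations are only valid for the dominant subspace, $\lambda_m > \sigma_n^2$, since (12.92) is otherwise undefined. Equation (12.91) shows $\mathbf{q}_m \in \mathrm{span}\{\mathbf{v}_1,\ldots,\mathbf{v}_P\}$; that is, each dominant eigenvector is a linear combination of the interference steering vectors.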
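The span property in (12.91) and (12.92) is easy to confirm numerically. The sketch below builds (12.88) from three hypothetical sources with random unit-modulus steering vectors and assumed powers, then rebuilds each dominant eigenvector from the steering vectors using the coefficients of (12.90) and (12.92).

```python
import numpy as np

rng = np.random.default_rng(2)
MN, P, noise_power = 16, 3, 1.0
V = np.exp(2j * np.pi * rng.uniform(size=(MN, P)))       # columns are v_p (assumed)
powers = np.array([100.0, 30.0, 10.0])                    # sigma_p^2 (assumed)
R = (V * powers) @ V.conj().T + noise_power * np.eye(MN)  # (12.88)

lam, Q = np.linalg.eigh(R)                 # ascending eigenvalues
lam, Q = lam[::-1], Q[:, ::-1]             # descending order, as in (12.86)

q_rebuilt = []
for m in range(P):                                   # dominant subspace only
    c = V.conj().T @ Q[:, m]                         # c_{p,m} = v_p^H q_m   (12.90)
    gamma = powers * c / (lam[m] - noise_power)      # gamma_{p,m}           (12.92)
    q_rebuilt.append(V @ gamma)                      # sum_p gamma_{p,m} v_p (12.91)
q_rebuilt = np.stack(q_rebuilt, axis=1)
```

The remaining MN − P eigenvalues sit exactly at the noise floor, where (12.92) would divide by zero.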

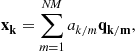

Also, it is known that any zero-mean, wide-sense stationary (WSS) process with covariance matrix, $\mathbf{R}_k$, can be written as a linear combination of the eigenvectors of $\mathbf{R}_k$, via what is known as the Karhunen-Loève Transform (KLT),

$$\boldsymbol{\chi}_k = \sum_{m=1}^{MN} a_m\,\mathbf{q}_m, \qquad a_m = \mathbf{q}_m^H\boldsymbol{\chi}_k \qquad (12.93)$$

where $a_m$ are the Karhunen-Loève (KL) coefficients [12]. The KL coefficients are mutually uncorrelated, with $E\left[a_m a_p^*\right] = \lambda_m\,\delta(m-p)$.
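A short numerical illustration of (12.93) follows: draw snapshots with a known covariance, project them onto the eigenvectors, and check that the expansion is exact and that the KL coefficients are (approximately, given finite samples) uncorrelated with variances equal to the eigenvalues. The low-rank-plus-noise covariance and the sample size are assumptions chosen for the example.

```python
import numpy as np

rng = np.random.default_rng(5)
MN, K = 8, 100000
# Hypothetical covariance: rank-2 interference plus unit noise
A = rng.standard_normal((MN, 2)) + 1j * rng.standard_normal((MN, 2))
R = 10.0 * A @ A.conj().T + np.eye(MN)
lam, Q = np.linalg.eigh(R)

# K zero-mean snapshots with covariance R: chi = L w, where R = L L^H and E[w w^H] = I
L = np.linalg.cholesky(R)
w = (rng.standard_normal((MN, K)) + 1j * rng.standard_normal((MN, K))) / np.sqrt(2)
chi = L @ w

a = Q.conj().T @ chi            # KL coefficients a_m = q_m^H chi   (12.93)
chi_rebuilt = Q @ a             # chi = sum_m a_m q_m
C_hat = a @ a.conj().T / K      # sample covariance of the KL coefficients
```

The reconstruction is exact because Q is unitary, and the sample covariance of the coefficients approaches diag(λ_1, ..., λ_MN), which is the compaction property RR-STAP exploits.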

The prior expressions, (12.86)–(12.93), provide necessary mathematical background for our subsequent discussion on RR-STAP methods.

Motivation for RR-STAP includes the following:

• Clutter and jamming tend to be of low numerical rank and the processor explicitly removes only those signal subspaces corresponding to interference.

• The eigendecomposition maximally compresses the interference into the fewest basis vectors [12].

• The RMB rule applies to the RR-STAP case, with rank replacing DoFs in the formulation, viz. training over twice the rank yields roughly 3 dB loss on average [27].

In effect, RR-STAP is a weight calculation strategy. Implementing RR-STAP poses challenges, including high computational burden and difficulty in determining the rank. Nevertheless, RR-STAP methods offer meaningful insight and, in some cases, are useful in weight vector determination.
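To illustrate the idea of projecting the dominant subspace out, the sketch below uses one common RR-STAP weighting, often called the principal-components (eigen-projection) method, w = (I − Q_r Q_r^H)s. It is offered as a generic example of the concept, not as the specific method developed later in this chapter. The rank r is selected by thresholding eigenvalues at an assumed multiple of a known noise floor; the threshold factor, function name, and test scenario are hypothetical.

```python
import numpy as np

def pc_weight(R_hat, s, noise_floor, factor=10.0):
    """Principal-components RR-STAP weight w = (I - Q_r Q_r^H) s.

    Eigenvectors whose eigenvalues exceed factor * noise_floor are treated
    as the interference subspace (the threshold rule is an assumption).
    """
    lam, Q = np.linalg.eigh(R_hat)
    Q_r = Q[:, lam > factor * noise_floor]        # dominant (interference) subspace
    w = s - Q_r @ (Q_r.conj().T @ s)              # project interference out of s
    return w, Q_r.shape[1]

# Example: two strong interferers plus unit noise
MN = 16
n = np.arange(MN)
v1 = np.exp(2j * np.pi * 0.21 * n)
v2 = np.exp(-2j * np.pi * 0.33 * n)
R = 1e3 * np.outer(v1, v1.conj()) + 1e2 * np.outer(v2, v2.conj()) + np.eye(MN)
s = np.ones(MN, dtype=complex)                    # hypothetical target steering vector
w, r = pc_weight(R, s, noise_floor=1.0)
```

The weight is exactly orthogonal to both interference steering vectors, while retaining most of the target gain because the target steering vector overlaps only weakly with the interference subspace.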

2.12.4.1.1 Adaptive beamformer pattern synthesis

It is shown in [28] that (12.43), a weight of the form $\mathbf{R}_k^{-1}\mathbf{s}_{s-t}$ to within a scalar, can be written

$$\mathbf{w}_k \propto \frac{1}{\lambda_{\min}}\left[\mathbf{s}_{s-t} - \sum_{m=1}^{MN}\left(\frac{\lambda_m - \lambda_{\min}}{\lambda_m}\right)b_m\,\mathbf{q}_m\right] \qquad (12.94)$$

where $\lambda_{\min}$ is the noise-floor eigenvalue level and $b_m = \mathbf{q}_m^H\mathbf{s}_{s-t}$ is the projection of the mth eigenvector onto the quiescent pattern. As seen from (12.94), the STAP response appears as a notching of the space-time beampattern given by $\mathbf{s}_{s-t}$ by the weighted interference eigenvectors. When $\lambda_m = \lambda_{\min}$, no subtraction occurs, since the corresponding eigenvector lies in the noise subspace.
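Expression (12.94) follows from (12.87) by writing the identity as the sum of all q_m q_m^H, and it can be verified numerically against a direct solve of R^{-1}s. The interference scenario and the all-ones quiescent steering vector below are assumptions for illustration only.

```python
import numpy as np

MN = 12
n = np.arange(MN)
# Hypothetical interference: two rank-one sources plus unit noise
v1 = np.exp(2j * np.pi * 0.25 * n)
v2 = np.exp(-2j * np.pi * 0.1 * n)
R = 500.0 * np.outer(v1, v1.conj()) + 50.0 * np.outer(v2, v2.conj()) + np.eye(MN)
s = np.ones(MN, dtype=complex)              # assumed quiescent steering vector

lam, Q = np.linalg.eigh(R)
lam_min = lam.min()                          # noise-floor eigenvalue level
b = Q.conj().T @ s                           # b_m = q_m^H s
notch = Q @ ((lam - lam_min) / lam * b)      # weighted interference eigenvectors
w_eig = (s - notch) / lam_min                # (12.94)
w_direct = np.linalg.solve(R, s)             # R^{-1} s
```

Noise-subspace eigenvectors receive (numerically) zero weight in the subtraction, so the quiescent pattern is altered only along the interference eigenvectors, producing the deep notch toward the strong source.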