Most of an application's functionality can be discovered just by navigating through it, but as you have seen throughout this book, some features are hidden in the requests and responses. For this reason, using a spidering tool during navigation is essential. Essentially all the HTTP proxies we covered in Chapter 8, Top Bug Bounty Hunting Tools, include a spidering tool. The basic idea of spidering is to extract all the links to internal or external resources from the requests and responses in order to discover sections, entry points, and hidden resources that could be in scope.

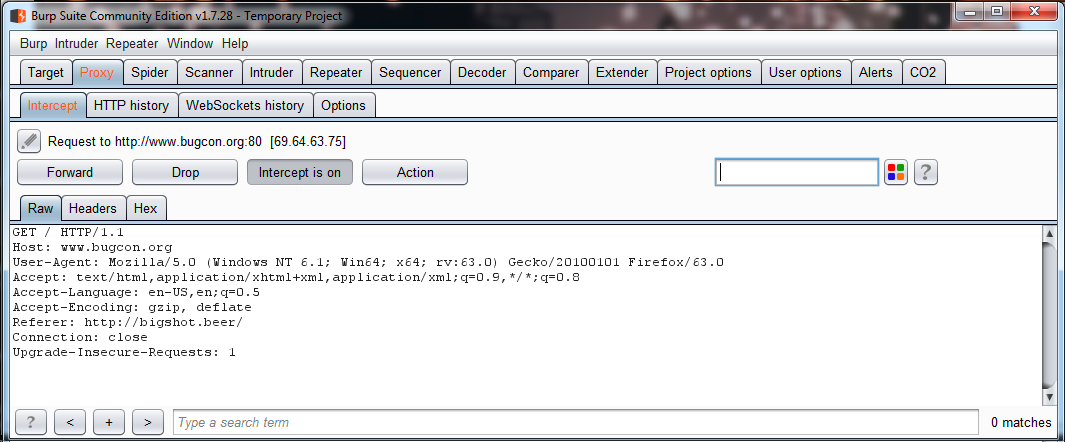
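To make the link-extraction idea concrete, here is a minimal sketch in Python that uses only the standard library's `html.parser` and `urllib.parse`. It is not how Burp or any other proxy implements spidering: the attribute list (`href`, `src`, `action`), the `extract_links` helper, and the hosts such as `shop.example.com` are hypothetical, and real spiders also parse scripts, headers, and other content types.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlsplit

# Attributes that commonly carry links (an assumed, non-exhaustive list).
LINK_ATTRS = {"href", "src", "action"}


class LinkExtractor(HTMLParser):
    """Collects candidate links from one HTML response body."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = set()

    def handle_starttag(self, tag, attrs):
        for name, value in attrs:
            if name in LINK_ATTRS and value:
                # Resolve relative paths such as "/admin" or "api/v1" against the page URL.
                absolute = urljoin(self.base_url, value.strip())
                # Keep only web links (drops mailto:, javascript:, etc.) and strip fragments.
                if urlsplit(absolute).scheme in ("http", "https"):
                    self.links.add(absolute.split("#", 1)[0])


def extract_links(base_url, html):
    """Return (internal, external) link sets found in one response body."""
    parser = LinkExtractor(base_url)
    parser.feed(html)
    host = urlsplit(base_url).hostname
    internal = {u for u in parser.links if urlsplit(u).hostname == host}
    return internal, parser.links - internal


if __name__ == "__main__":
    page = """
    <a href="/account/settings">Settings</a>
    <form action="api/v1/search"></form>
    <script src="https://cdn.example.net/app.js"></script>
    <a href="mailto:security@shop.example.com">Contact</a>
    """
    internal, external = extract_links("https://shop.example.com/index.html", page)
    print(sorted(internal))
    print(sorted(external))
```

Running it reports the two `shop.example.com` paths as internal and the CDN script as external, while the `mailto:` link is ignored.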

Let's look at how to use a spidering tool. In this example, I am going to use Burp Suite's Spider, but you can use any other proxy you prefer, as long as it works in a similar fashion. To use Burp Suite's Spider, you need to access the application with a browser preconfigured to use Burp as its proxy; then, you can intercept a request. I recommend intercepting the initial request, which is the first request you make to the application:

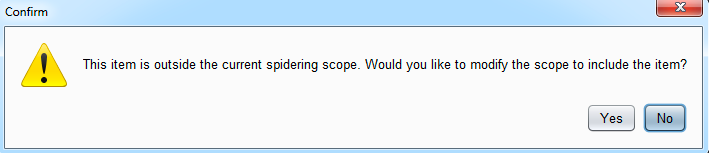
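The same setup works for scripted traffic, not only for the browser. A minimal sketch, assuming Burp's proxy listener is running on its default address, `127.0.0.1:8080`; the helper name `build_burp_opener` is hypothetical. Any request sent through the returned opener passes through Burp, just like the preconfigured browser's requests.

```python
import urllib.request

# Assumption: Burp's proxy listener is on its default address.
BURP_PROXY = "http://127.0.0.1:8080"


def build_burp_opener(proxy=BURP_PROXY):
    """Return a urllib opener that routes HTTP and HTTPS traffic through Burp."""
    handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
    return urllib.request.build_opener(handler)
```

For example, `build_burp_opener().open("http://shop.example.com/", timeout=10)` would send a request for a hypothetical target through the proxy, where it shows up alongside the browser's traffic. For HTTPS targets, the client must also trust Burp's CA certificate; otherwise, certificate verification fails.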

Then, as with all the options in Burp, right-click the request and select Send to Spider. Because this is the first request you have added to the Burp project, a warning message is displayed. This message means that Burp Suite will add this application to the scope.

What is the scope? All HTTP proxies let you limit the analysis to a single domain (application) or to multiple domains. This is useful when you are assessing applications that share the same parent domain but the bounty program covers only some of them. For example, the following applications could be included:

- shop.google.com

- applications.google.com

- dev.google.com

The following are not included:

- *.google.com

- mail.google.com

- music.google.com
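In practice, a scope boils down to include and exclude rules over hostnames. The following sketch models the example above in Python; the rule lists, the `in_scope` helper, and the use of shell-style wildcards through `fnmatch` are assumptions for illustration, not the scope format of any particular proxy.

```python
from fnmatch import fnmatchcase
from urllib.parse import urlsplit

# Hypothetical scope mirroring the example: only these hosts are in scope,
# and no blanket *.google.com wildcard is granted.
IN_SCOPE = ["shop.google.com", "applications.google.com", "dev.google.com"]
OUT_OF_SCOPE = ["mail.google.com", "music.google.com"]


def in_scope(url, include=IN_SCOPE, exclude=OUT_OF_SCOPE):
    """Return True if the URL's host matches an include rule and no exclude rule."""
    host = (urlsplit(url).hostname or "").lower()
    # Exclusions are checked first, so they win over broader include patterns.
    if any(fnmatchcase(host, pattern) for pattern in exclude):
        return False
    return any(fnmatchcase(host, pattern) for pattern in include)


if __name__ == "__main__":
    print(in_scope("https://shop.google.com/cart"))  # True
    print(in_scope("https://mail.google.com/inbox"))  # False: explicitly excluded
    print(in_scope("https://maps.google.com/"))  # False: not listed, no wildcard granted
```

Checking exclusions first means that if a program does grant a wildcard such as `*.dev.google.com`, a specific excluded host still stays out of scope.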

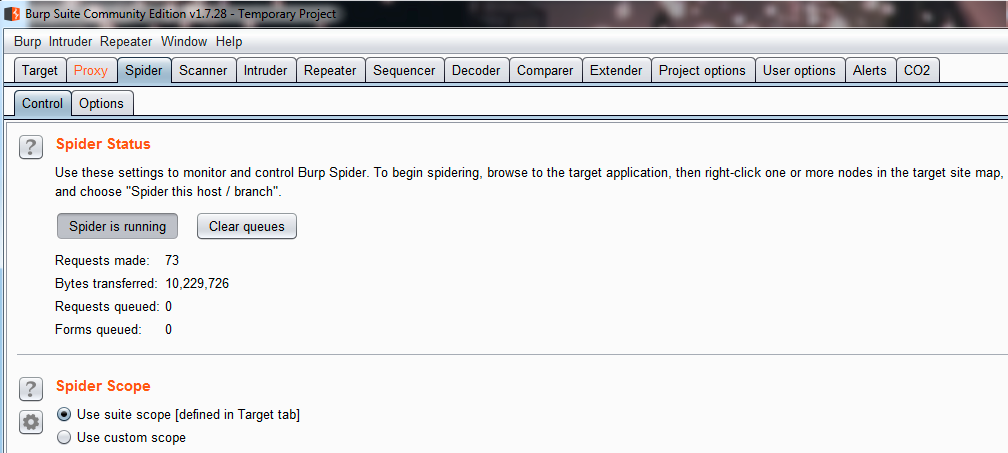

Once you accept the warning message, the domain is added to the scope. From this point on, all the requests and responses made during your navigation will be spidered. This means that the proxy extracts all the links, redirects, and paths to other resources and maps them. In the following screenshot, you can see how the proxy monitors the spidering, showing how many requests and responses have been analyzed:

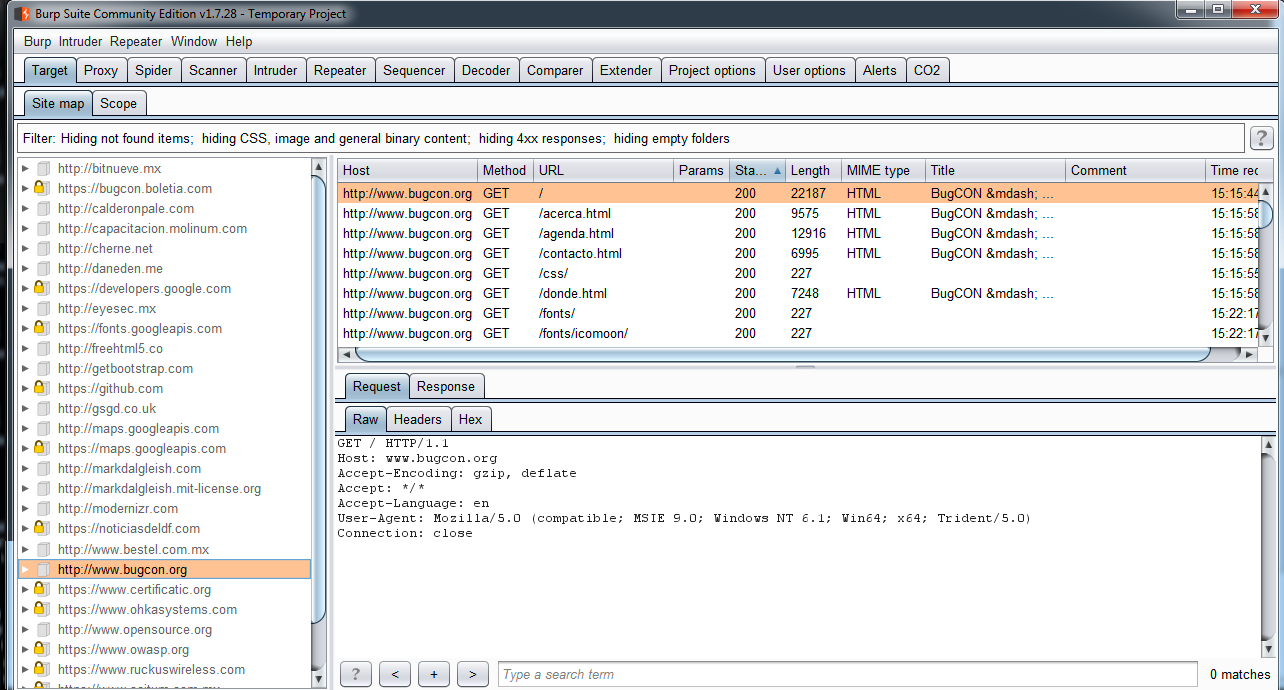
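Conceptually, this passive mapping can be sketched as follows. This Python sketch is a hypothetical model, not Burp's implementation: the `PassiveSpider` class, its methods, and the `example.com` hosts are illustrative, and it assumes links have already been extracted from each response body (for example, with an HTML parser). It counts analyzed responses, follows redirects through the `Location` header, builds a host-to-paths map, and lists both in-scope URLs that were discovered but never requested and hosts that were seen only in links.

```python
from collections import defaultdict
from urllib.parse import urljoin, urlsplit


class PassiveSpider:
    """Hypothetical sketch: map resources seen in traffic passing through a proxy."""

    def __init__(self, scope_hosts):
        self.scope_hosts = set(scope_hosts)
        self.responses_analyzed = 0
        self.visited = set()              # URLs the browser actually requested
        self.discovered = set()           # URLs found in links and redirects
        self.site_map = defaultdict(set)  # host -> paths (visited or discovered)

    def _map(self, url):
        parts = urlsplit(url)
        self.site_map[parts.hostname].add(parts.path or "/")

    def record(self, url, status, headers, links=()):
        """Feed one request/response pair; `links` were already extracted from the body."""
        self.responses_analyzed += 1
        self.visited.add(url)
        self._map(url)
        found = list(links)
        # A 3xx response points at another resource through its Location header.
        location = next((v for k, v in headers.items() if k.lower() == "location"), None)
        if 300 <= status < 400 and location:
            found.append(location)
        for link in found:
            absolute = urljoin(url, link).split("#", 1)[0]
            if urlsplit(absolute).scheme in ("http", "https"):
                self.discovered.add(absolute)
                self._map(absolute)

    def pending(self):
        """In-scope URLs that were discovered but never requested."""
        return sorted(u for u in self.discovered - self.visited
                      if urlsplit(u).hostname in self.scope_hosts)

    def unvisited_hosts(self):
        """Hosts that appear only in links, never browsed; worth a scope check."""
        browsed = {urlsplit(u).hostname for u in self.visited}
        return sorted(set(self.site_map) - browsed)


if __name__ == "__main__":
    spider = PassiveSpider({"shop.example.com"})
    spider.record("https://shop.example.com/", 302, {"Location": "/login"})
    spider.record("https://shop.example.com/login", 200, {},
                  links=["/reset-password", "https://cdn.example.net/app.js"])
    print(spider.responses_analyzed)  # 2
    print(spider.pending())           # ['https://shop.example.com/reset-password']
    print(spider.unvisited_hosts())   # ['cdn.example.net']
```

Keeping discovered and visited URLs in separate sets is what makes unexplored sections stand out: anything discovered but not yet visited is a candidate for manual navigation.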

Now, you just need to navigate the application and explore each section while the proxy collects information in the background. Sometimes you will see messages about sections that could not be accessed because of permissions. If you are lucky, the proxy may ask you for credentials when it finds a login form. In the Target tab, you can see the map of the application and its resources; here, you need to review the paths and domains of any links that you have not seen before: