Integrated Sensor Systems and Data Fusion for Homeland Protection

Alfonso Farina*, Luciana Ortenzi*, Branko Ristic† and Alex Skvortsov†, *SELEX Electronic Systems, Rome, Italy, †DSTO, Melbourne, Vic. 3207, Australia

Abstract

This chapter addresses the application of data and information fusion to the design of integrated systems in the Homeland Protection (HP) domain. HP is a wide and complex domain: systems in this domain are large (in terms of size and scope), integrated (no subsystem can be considered in isolation) and different in purpose. Such systems require a multidisciplinary approach for their design and analysis, and they must necessarily provide data and information fusion in the most general sense. The first data fusion algorithms employed in real radar systems go back to the early seventies. Since then, new concepts have been developed and applied to very complex systems, with the aim of achieving the highest possible level of intelligence and, ideally, of supporting decision making. Data fusion aims to enhance situation awareness and decision making by combining information and data obtained from networks of homogeneous and/or heterogeneous sensors. The aim of this chapter is to give an overview of the approaches that can be followed to design and analyze systems for homeland protection. Different fusion architectures can be defined on the basis of the algorithms employed, and this chapter analyzes them under several aspects. Case studies applied to real-world homeland protection problems are also provided.

Keywords

Data fusion; Information fusion; Homeland protection; Sensor network; Collaborative signal and information processing; Self-organizing sensor network; Cooperative sensor network; Network centric operations; Sensor deployment; Tracking; Classification; Situation awareness

Acknowledgments

The first two authors wish to warmly thank Prof. F. Zirilli (Univ. of Rome) for his contribution to Section 2.22.6.1, Dr. S. Gallone (SELEX Sistemi Integrati) for contributing to Section 2.22.8.2, and Dr. A. Graziano (SELEX Sistemi Integrati) for years of continuous and fruitful cooperation on many of the topics described in this chapter.

2.22.1 Introduction

In his bestseller “Megatrends” [1], published in 1982, John Naisbitt wrote about the new trends and directions transforming our lives: “We are drowning in information but starved for knowledge. This level of information is clearly impossible to be handled by present means. Uncontrolled and unorganized information is no longer a resource in an information society, instead it becomes the enemy.” This well-known statement can be taken as a formulation of the information fusion problem: how can knowledge, awareness and decision-making capability be achieved starting from the available information?

This chapter is an attempt at a technical and mathematical answer to that question. In particular, it addresses the application of data and information fusion to the design of integrated systems in the Homeland Protection (HP) domain. HP refers to the broad civilian and military effort made by a country to protect its territory (including citizens, assets and activities that are vital to its growth and prosperity) against internal and external hazards, and to reduce its vulnerability to attacks, whatever their origin, as well as to natural disasters. HP is therefore a wide and complex domain. Systems in this domain are large, meaning that their size and scope are considerable and that their boundaries may not be easy to identify. They are integrated, meaning that it is generally not sufficient to study each subsystem in isolation. They also differ in purpose and require a multidisciplinary approach to their design and analysis.

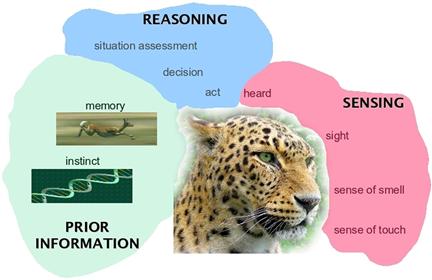

Systems intended to operate in such scenarios must necessarily provide data and information fusion in the most general sense. Information fusion is about combining, or fusing, information from different sources to provide knowledge that is not evident from any individual source. Numerous real-world problems benefit from the combination of heterogeneous information sources. For instance, as depicted in Figure 22.1, animals and humans naturally perform multi-sensor data fusion to assess the surrounding environment more accurately and to identify threats or food, thereby improving their chances of survival. Information fusion is commonly characterized as a multidisciplinary research area that includes and/or overlaps with a number of other areas. At the sensor level it includes signal processing, and at the data level it includes data processing. At the meta-data level it overlaps with knowledge representation, and at the decision level it involves decision making. Data fusion has been defined in [2] as “the process of combining evidence to support intelligence generation.” The methods employed to achieve this goal can be divided into two general classes: quantitative and qualitative. The former are based on numerical techniques, the latter on a symbolic representation of information.

Figure 22.1 Why information fusion. (Kindly provided by Dr. A. Benavoli—IDSIA, Istituto Dalle Molle di Studi sull’Intelligenza Artificiale, Switzerland.)

Examples of quantitative methods can be found in stochastic estimation theory, which aims to estimate the state of a system using all available information and to characterize the fusion uncertainty within the framework of probability theory. Most of the algorithms developed for quantitative fusion are based on the Bayes filter [3], such as the Kalman filter [4] and the information filter [5], or on neural networks. These algorithms are usually employed to perform multi-target, multi-sensor tracking. The newer-generation methods follow a qualitative approach and rely on a symbolic representation of information. They are still based on mathematical models and produce numerical output, but they can be employed to model qualitative information (e.g., fuzzy information). They include expert systems and heuristic, behavioral and structural modeling. Qualitative methods are based on artificial intelligence techniques such as fuzzy logic [6], Dempster-Shafer theory [7,8], Dezert-Smarandache theory [9,10] and rule-based methods.
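In its simplest linear-Gaussian form, Bayes-filter fusion reduces to the Kalman measurement update. The sketch below is a minimal illustration and not a tracking filter. It fuses one reading from each of two hypothetical sensors observing the same scalar quantity. The prior, the readings and the noise variances are invented for the example.

```python
def kalman_update(mean, var, z, r):
    """Scalar Kalman measurement update with unit observation model (H = 1).

    Prior N(mean, var); measurement z with noise variance r."""
    gain = var / (var + r)
    new_mean = mean + gain * (z - mean)   # move the prior toward the measurement
    new_var = (1.0 - gain) * var          # uncertainty can only shrink
    return new_mean, new_var


# Hypothetical example: a vague prior on a target coordinate (metres) and
# one reading from each of two sensors with different accuracies.
mean, var = 0.0, 100.0
for z, r in [(10.0, 4.0), (12.0, 1.0)]:   # (reading, noise variance) per sensor
    mean, var = kalman_update(mean, var, z, r)

print(round(mean, 3), round(var, 3))   # 11.508 0.794
```

Processing the two readings in either order gives the same result. That result coincides with the information-filter (inverse-variance) combination, in which the fused variance is 1/(1/100 + 1/4 + 1/1).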

The first data fusion algorithms employed in real radar systems go back to the early seventies, when they were developed for multi-radar tracking (MRT) with netted sensors. In the late 1970s and early 1980s these new algorithms were delivered. They came together with the means to mitigate the unavoidable sources of error found in practice, such as time synchronization, radar alignment to North, and inaccurate knowledge of the radar site coordinates. Probably one of the first MRT systems for Air Traffic Control (ATC) ever installed was the system operating in central and southern Italy [11]. Once expertise in tracking had matured, it was collected in a new book on radar data processing [12,13], which was also translated into Russian and Chinese.
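Two of the error sources mentioned above, radar alignment to North and site coordinates, can be illustrated by registering plots from two radars into a common frame. The sketch assumes a flat local east/north frame and ignores earth curvature and time alignment. It also assumes a known 2° alignment error on one hypothetical radar. Without the correction, the same aircraft appears as two plots almost half a kilometre apart, which can be enough to break plot-to-track association.

```python
import math


def polar_plot(site, target, bias_deg=0.0):
    """Synthesize the (range, azimuth) a radar at `site` reports for `target`.

    Azimuth is measured clockwise from North; `bias_deg` simulates a North-alignment error."""
    d_east, d_north = target[0] - site[0], target[1] - site[1]
    return math.hypot(d_east, d_north), math.degrees(math.atan2(d_east, d_north)) + bias_deg


def to_common_frame(range_m, az_deg, site, north_bias_deg=0.0):
    """Register a plot into a shared flat east/north frame (metres), removing a known azimuth bias."""
    az = math.radians(az_deg - north_bias_deg)
    return site[0] + range_m * math.sin(az), site[1] + range_m * math.cos(az)


target = (10_000.0, 10_000.0)
site_a, site_b = (0.0, 0.0), (20_000.0, 0.0)
plot_a = polar_plot(site_a, target, bias_deg=2.0)   # radar A is misaligned by 2 degrees
plot_b = polar_plot(site_b, target)                 # radar B is correctly aligned

raw_a = to_common_frame(*plot_a, site_a)                        # bias left uncorrected
fixed_a = to_common_frame(*plot_a, site_a, north_bias_deg=2.0)  # bias removed
fixed_b = to_common_frame(*plot_b, site_b)

print(round(math.dist(raw_a, fixed_b)))   # 494 (spurious separation in metres)
```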

Later, in the 1990s, further advances were made in multi-sensor fusion for Airborne Early Warning (AEW) systems. An algorithm suite was set up to track targets on the basis of the data provided by a surveillance radar, an Identification Friend or Foe (IFF) system, Electronic Support Measures (ESM) and data links. Algorithms were also conceived to track targets from the angle and identification measurements provided by ESM on a moving platform, and data fusion of active and passive tracks was then provided [14–17].
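A single ESM bearing gives direction but no range. Motion of the platform supplies the baseline needed to locate an emitter. The sketch below uses a simple least-squares intersection of bearing lines, assumed here for illustration. It is not the ESM tracking algorithm of [14–17], which estimates the full kinematic state of possibly moving targets. Here the emitter is assumed stationary and the bearings noise-free.

```python
import math


def triangulate(positions, bearings_deg):
    """Least-squares intersection of bearing lines taken from several platform positions.

    Each bearing (degrees clockwise from North) defines a line through the platform
    position; the emitter is assumed stationary during the observation interval."""
    a11 = a12 = a22 = b1 = b2 = 0.0
    for (p_east, p_north), bearing in zip(positions, bearings_deg):
        t = math.radians(bearing)
        n_e, n_n = math.cos(t), -math.sin(t)   # unit normal to the line of sight
        c = n_e * p_east + n_n * p_north       # line equation: n . x = n . p
        a11 += n_e * n_e
        a12 += n_e * n_n
        a22 += n_n * n_n
        b1 += n_e * c
        b2 += n_n * c
    det = a11 * a22 - a12 * a12
    if abs(det) < 1e-12:
        raise ValueError("bearings are (nearly) parallel: position is not observable")
    return (a22 * b1 - a12 * b2) / det, (a11 * b2 - a12 * b1) / det


# Hypothetical geometry: the platform flies east while the ESM measures the emitter bearing.
emitter = (4_000.0, 20_000.0)
track = [(0.0, 0.0), (5_000.0, 0.0), (10_000.0, 0.0)]
bearings = [math.degrees(math.atan2(emitter[0] - e, emitter[1] - n)) for e, n in track]
estimate = triangulate(track, bearings)
```

With a single position the normal equations are singular, so the function refuses to produce a fix. This is the same loss of observability that makes platform manoeuvres important in passive tracking.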

Today these concepts have been developed further and applied to very complex systems, with the aim of achieving the highest possible level of intelligence and, ideally, of supporting decision making. Data fusion aims to enhance situation awareness and decision making by combining information and data obtained from networks of homogeneous and/or heterogeneous sensors. A sensor network offers several advantages over a single sensor, since it supplies both redundant and complementary information. Redundant information makes the system robust to failures. Because information about the same environment can be obtained from different sources, a malfunction of one entity only degrades performance rather than causing the complete failure of the system. Thanks to the frequency and spatial diversity of the sensors, greater robustness can also be achieved against interference, both intentional and unintentional. Complementary information builds up a more complete picture of the observed system. For example, sensors distributed over large regions provide diverse viewing angles of the observed phenomenon, and different technologies can be employed in the same application to improve system performance.
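The graceful-degradation argument can be quantified under simple assumptions: identical sensors with independent errors and independent failures. In the sketch below, each failure only widens the fused error, while total loss requires every sensor to fail at once. All numbers are hypothetical.

```python
import math


def fused_std(sigma, working):
    """Standard deviation of the mean of `working` independent readings of accuracy `sigma`."""
    if working == 0:
        return math.inf   # nothing left to fuse: complete loss of the function
    return sigma / math.sqrt(working)


# Hypothetical figures: 4 redundant sensors, 50 m accuracy, 5% independent failure probability.
sigma, n_sensors, p_fail = 50.0, 4, 0.05
# Fused accuracy as sensors fail one by one (4 working, 3, 2, 1, 0)
degradation = [fused_std(sigma, k) for k in range(n_sensors, -1, -1)]
p_total_loss = p_fail ** n_sensors   # all sensors must fail simultaneously
```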

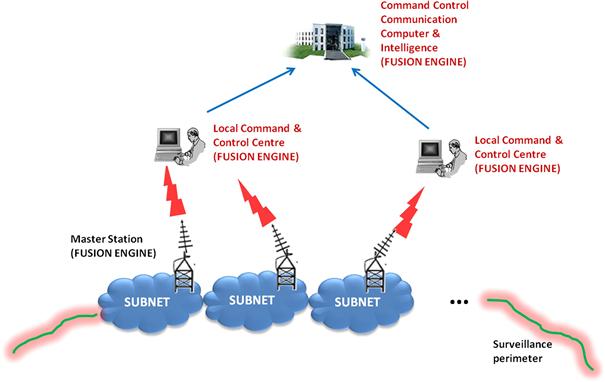

A large number of applications, algorithms and architectures have been developed to exploit these advantages. Examples can be found in robotics, military applications, Homeland Protection and the management of large, complex critical infrastructures. The specific nature of each problem differs, but the final goal, from the point of view of the sensed information, is always the same: using all the available data to better understand the phenomena under investigation. The aim of this chapter is to give an overview of the approaches that can be followed to design and analyze systems for Homeland Protection. Different fusion architectures can be defined on the basis of the algorithms employed. Following this approach, three general categories can be identified in the literature [18,19]: centralized, hierarchical, and decentralized/netcentric.

The traditional architecture is centralized: several sensing devices are connected to a central component, the fusion node. For example, in a sensor network employed for the surveillance of an area, information usually flows from the sensor nodes to a single sink node called the information fusion center. On the basis of the information received from the sensors, the fusion center monitors the area where the sensors are deployed and decides which actions to take. Conceptually, the algorithms employed in this case are relatively simple and resource allocation is straightforward, because the central component has an overall view of the whole system. This architecture, however, has several drawbacks: a high computational load, the possibility of catastrophic failure when the fusion node goes down, and a lack of flexibility to changes in the system and its sensor entities. The approach therefore remains valid when the number of sensors whose information is fused is limited, regardless of the size of the monitored area, and when the relationships and interconnections among sensors are also limited.

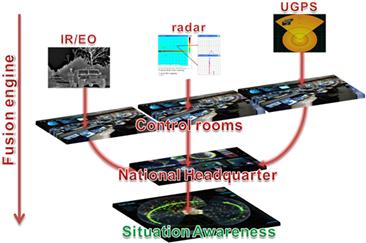

In hierarchical architectures there are several fusion nodes, where intermediate fusion processes are performed, and a final central fusion node. The principle of a hierarchy is to reduce the communication and computational loads of centralized systems by distributing data fusion tasks among a hierarchy of sensor entities. However, a hierarchy still has a central component acting as fusion center. Entities acting as local fusion centers process information locally and send it to the central fusion node. This approach is commonly used in robotics and surveillance applications. Although this architecture reduces the computational and communication loads, it retains some of the drawbacks of the centralized model. It also suffers from difficulties in balancing resource allocation and from vulnerability to communication bottlenecks.
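The communication saving of a hierarchy can be made explicit with the information form, under idealized assumptions: independent, unbiased Gaussian readings of one scalar quantity and no prior. In this case each local fusion node can compress its cluster into two numbers and lose nothing relative to centralized fusion. With correlated errors, or when local nodes exchange tracks rather than raw measurements, this exact equivalence no longer holds and the common information must be handled explicitly.

```python
def information_pair(readings):
    """Compress independent scalar readings (value, variance) into information form."""
    info = sum(1.0 / var for _, var in readings)              # total information
    info_state = sum(value / var for value, var in readings)  # information-weighted sum
    return info, info_state


def centralized(readings):
    """Every sensor sends its raw reading to the single fusion center."""
    info, info_state = information_pair(readings)
    return info_state / info, 1.0 / info, len(readings)   # estimate, variance, messages


def hierarchical(clusters):
    """Each local fusion node forwards one (info, info_state) pair to the central node."""
    messages = [information_pair(cluster) for cluster in clusters]
    info = sum(m[0] for m in messages)
    info_state = sum(m[1] for m in messages)
    return info_state / info, 1.0 / info, len(messages)


# Hypothetical readings (value, variance) grouped by local fusion node
clusters = [[(10.2, 4.0), (9.8, 4.0), (10.5, 9.0)],
            [(9.9, 1.0), (10.1, 2.0)]]
flat = [reading for cluster in clusters for reading in cluster]

est_c, var_c, msgs_c = centralized(flat)
est_h, var_h, msgs_h = hierarchical(clusters)
```

Both architectures return the same estimate and variance. The central node, however, receives two messages instead of five.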

In certain cases the traditional data fusion algorithms may still be valid. In other cases, however, the great variety of subsystems and the complexity of their interconnections may require new approaches. Most of the drawbacks of centralized and hierarchical architectures can be overcome by decentralized architectures. The trend in surveillance today is towards Network Centric Operation (NCO) [20]. The vision for NCO is to provide seamless access to timely information to all operators (e.g., soldier, officer) and decision-makers at every echelon in the military hierarchy. The goal is to enable all elements, including individual infantry soldiers, ground vehicles, command centers, aircraft and naval vessels, to share the collected information and to combine it into a coherent, accurate picture of the battlefield.

The same approach can be followed in the organization of a sensor network. In recent years, decreasing sensor costs and advances in telecommunication technology have made it possible to deploy networks with a huge number of sensors, and in this case the use of information fusion centers is impractical. Consequently, a new class of sensor networks has emerged, whose mode of operation is called network centric. These networks have no fusion center, and their operation is based on information exchange between nearby sensors. Under this approach, information can be considered a property of the network rather than of the individual sensor. This solution is strongly advocated for its robustness and ease of implementation, but it may suffer when the number of sensors becomes very large. It has a broad range of potential applications in Homeland Protection: habitat surveillance and environmental monitoring, structural monitoring (e.g., of bridges), contaminant monitoring, smart roads, intruder detection and battlefield surveillance. It is complementary to classical surveillance based on a few large, costly sensors organized hierarchically.
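Information exchange between nearby sensors can be illustrated with the standard linear average-consensus iteration. It is used here as a generic example and is not necessarily the algorithm adopted later in this chapter. Each node repeatedly nudges its value toward those of its neighbours. On a connected network, all nodes converge to the global average without any fusion center. The network and readings are hypothetical.

```python
def average_consensus(values, neighbors, step, iterations):
    """Linear average consensus: x_i <- x_i + step * sum over neighbours j of (x_j - x_i).

    Converges to the network-wide mean on a connected, undirected graph
    when 0 < step < 1 / (maximum node degree)."""
    x = list(values)
    for _ in range(iterations):
        # Synchronous update: the comprehension reads only the previous iterate
        x = [xi + step * sum(x[j] - xi for j in neighbors[i]) for i, xi in enumerate(x)]
    return x


# Hypothetical 5-node chain: each sensor exchanges data only with its adjacent sensors.
neighbors = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2, 4], 4: [3]}
readings = [21.0, 19.5, 23.0, 20.0, 22.5]
state = average_consensus(readings, neighbors, step=0.3, iterations=200)
```

Every node ends up holding the mean of all five readings (21.2), although no node ever communicates beyond its immediate neighbours.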

A network of numerous sensors and communication nodes (for instance, a peer-to-peer network) allows modularity, robustness and flexibility. Its link topology, however, may vary with time because of natural interference, electromagnetic propagation masked by the terrain, meteorological conditions, and dust and smoke present in the environment. Such networks should be designed to be resilient to Electronic Counter Measures (ECM) and cyber attacks, and they should be able to manage an increasing and highly variable flow of data. These demanding requirements must be met within limited resources such as energy, bandwidth and node complexity, which can be achieved by borrowing several mechanisms from biological systems. For example, bio-inspired sensor networks make decentralized decisions through the self-synchronization mechanism observed in nature. This mechanism drives every node of the network to the globally optimal decision without the need for any fusion center. These architectures also have drawbacks. In fully decentralized systems, communication issues are more complex and depend on the network topology, and communication overheads are generally higher than in centralized systems.
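One way to model self-synchronization is with coupled phase oscillators of the Kuramoto type, one per node. In the sketch below, the sinusoidal coupling, the gain and the weights are assumptions for illustration. Each node's oscillator runs at its own local estimate g_i and is pulled toward its neighbours. Once the network locks, every node ticks at the common frequency Σc_i g_i / Σc_i. If c_i are chosen as inverse noise variances, this is the weighted estimate a fusion center would compute for independent Gaussian readings, yet no node ever sees global information.

```python
import math


def self_synchronize(g, c, neighbors, coupling=5.0, dt=0.01, steps=3000):
    """Euler integration of coupled phase oscillators, one per sensor node:

        dtheta_i/dt = g_i + (coupling / c_i) * sum_{j in N(i)} sin(theta_j - theta_i)

    g_i is the node's local estimate, c_i its weight (e.g. inverse noise variance).
    Returns each node's instantaneous frequency after `steps` iterations."""
    def rates(theta):
        return [g[i] + coupling / c[i] * sum(math.sin(theta[j] - theta[i]) for j in neighbors[i])
                for i in range(len(theta))]

    theta = [0.0] * len(g)
    for _ in range(steps):
        theta = [t + dt * r for t, r in zip(theta, rates(theta))]
    return rates(theta)


# Hypothetical 4-node ring; local estimates g and weights c = 1 / noise variance.
g = [1.0, 1.2, 0.8, 1.4]
c = [1.0, 2.0, 1.0, 2.0]
ring = {0: [1, 3], 1: [0, 2], 2: [1, 3], 3: [2, 0]}
freqs = self_synchronize(g, c, ring)
weighted_mean = sum(ci * gi for ci, gi in zip(c, g)) / sum(c)   # 7/6
```

The locked frequency is the weighted mean 7/6, not the unweighted mean 1.1. The more reliable nodes therefore pull the consensus toward their own readings.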

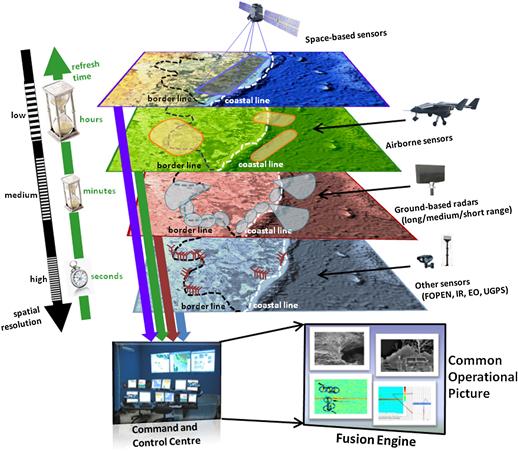

In this chapter these aspects are investigated in depth for networks of homogeneous and of heterogeneous sensors, respectively, with case studies applied to real-world Homeland Protection problems. In particular, netting sensors with different characteristics of domain, coverage, frequency and resolution enables a multi-scale¹ approach. This approach is particularly suitable for the surveillance of wide areas such as national borders or critical strategic regions.

The chapter is organized as follows. Section 2.22.2 illustrates the Homeland Protection domain and highlights some characteristics of systems in this domain. Section 2.22.3 briefly reviews the development of data fusion and gives references to new emerging trends in high-level data fusion. Section 2.22.4 gives a broad, general description of the basic categories of intelligence that are the source of the data and information employed in the fusion process. Sections 2.22.5 and 2.22.6 tackle different aspects of homogeneous sensor networks. The former discusses several issues from a theoretical point of view. Alongside traditional approaches, it illustrates the new trends of Collaborative Signal and Information Processing (CSIP) and of self-organizing and self-synchronizing sensor networks. Section 2.22.5 also offers some remarks about real applications and about the need to rethink some mathematical algorithms to suit the network-centric approach. The latter, Section 2.22.6, presents three real case studies where the novel approaches give significant results. Likewise, Section 2.22.7 tackles heterogeneous sensor networks, dealing with the problems of deployment, behavior assignment and coordination of the different sensors. Here too, special attention is paid to the mathematical issues raised by these new approaches. Real applications of this kind of sensor network are described in Sections 2.22.8 and 2.22.9, for border control and for the forecasting and estimation of an epidemic, respectively. Finally, Section 2.22.10 presents the concluding remarks.

2.22.2 The problem of homeland protection

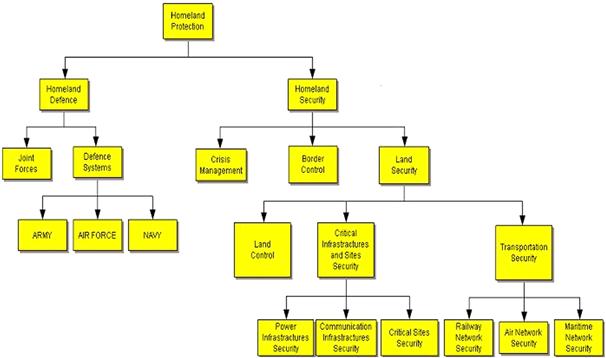

The diagram of Figure 22.2 provides a decomposition of the Homeland Protection domain: the two main sub-domains are Homeland Defense (HD) and Homeland Security (HS) [21].

Figure 22.2 Homeland Protection domain. (From [21], reprinted with permission.)

HD includes the typical duties and support systems of joint military forces and of the single armed forces. HD systems are usually strictly military. They are employed by military personnel only, satisfy specific technical requirements, operational needs and environmental scenarios, and in most cases are designed to face only military threats. The new trend is to employ military surveillance systems in combined military and civil operations, especially against terrorism [22]. In recent years the military domain has also been swept by the NCO paradigm. NCO calls for a tighter coupling among forces, especially in the cognitive domain, to achieve synchronization, agility and decision superiority, and it is a strong driver in the transformation from a platform-centric to a network-centric force [20].

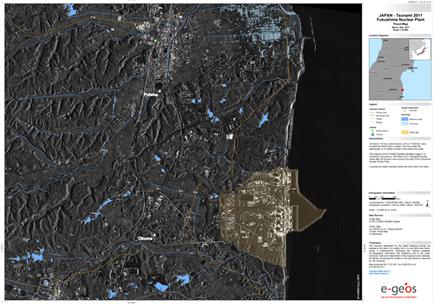

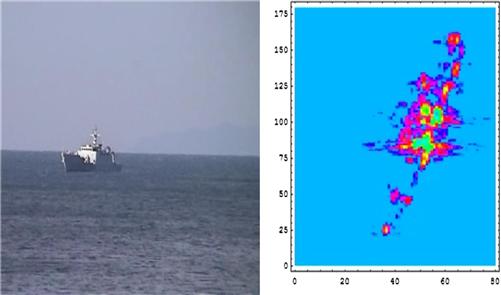

HS is a very broad and complex domain that requires coordinated action among national and local governments, the private sector and concerned citizens across a country. It covers issues such as crisis management, border control, critical infrastructure protection and transportation security [23,24]. Crisis management is the ability to identify and assess a crisis, plan a response, and act to resolve the crisis situation. Border control aims to build a smart protection belt all around a country to counter terrorism and illegal activities. Border control alone cannot be conclusive, however, for three reasons: the difficulty of controlling the country's borders along their full and varied extent, the not necessarily physical nature of attacks in the current information age, and threats that often arise within the country itself. HS also includes land security, which is particularly critical because of its complexity and strategic importance. The security of critical assets, such as electric power plants, communication infrastructures, strategic areas and railway networks, must be ensured continuously in space and time [25–27]. Recent terrorist attacks have shown the vulnerability of national critical infrastructures [28] and have made the world aware of the possibility of large-scale terrorist offensives against civil society. The September 11th, 2001 attack on the World Trade Center in New York City is the most dramatic example of this new terrorism. The main emphasis has been placed on the terrorist threat, but what emerges is the fragility and vulnerability of modern society to both deliberate threats and natural disasters. Figure 22.3 shows a Synthetic Aperture Radar (SAR) image, collected by a satellite of the Italian CosmoSkyMed constellation, of the area of the Fukushima nuclear plant hit by the 2011 tsunami.

Figure 22.3 A CosmoSkyMed SAR image of the Fukushima nuclear plant zone after the 2011 tsunami, showing the flooded areas. (Courtesy of E-geos, a Telespazio Company.)

The HP domain also includes the protection of a country's commercial activities against deliberate attacks, including attacks carried out beyond the national territory. This protection extends to territorial waters and the Exclusive Economic Zone (EEZ). Seaborne piracy against transport vessels remains a significant issue, with estimated worldwide losses of US$13–16 billion per year. It is concentrated in the waters between the Red Sea and the Indian Ocean, off the Somali coast, and in the Strait of Malacca and Singapore, which are navigated by over 50,000 commercial ships a year [29,30].

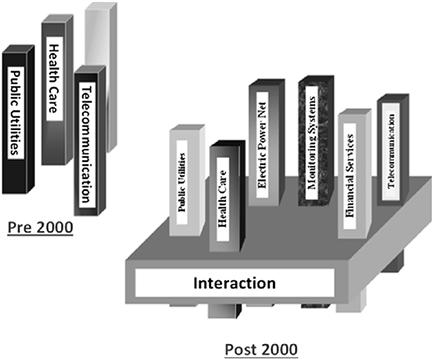

Globalization, the pervasiveness of information technologies and the transformation of the industrial sector and of civil society have created new vulnerabilities in the system as a whole. This has happened without a corresponding effort to increase the system's robustness and security. For example, individual infrastructure networks grew independently over the years, creating autonomous “vertical” systems with limited points of contact. Around the year 2000, following a change of trend in the socio-technical scenario, infrastructures began to share services and thus to form interconnected and interdependent systems. Nowadays infrastructures are interconnected and mutually dependent in a complex way. A phenomenon that affects one infrastructure can have a direct or indirect impact on other infrastructures, spreading over a wide geographical area and affecting several sectors of citizens' lives. This is represented schematically in Figure 22.4 [31,32].
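The way a local event propagates across interdependent infrastructures can be sketched as a breadth-first traversal of a dependency graph. The graph and the all-or-nothing failure rule are hypothetical simplifications. Real interdependency models also account for partial dependence, buffers such as backup generators, repair times and geographic extent.

```python
from collections import deque


def cascade(dependents, initial_failure):
    """Breadth-first spread of a failure through infrastructure dependencies.

    Toy rule (deliberately pessimistic): any node depending on a failed node fails too.
    Returns the failed nodes in the order in which the failure reaches them."""
    failed = {initial_failure}
    order = [initial_failure]
    queue = deque([initial_failure])
    while queue:
        node = queue.popleft()
        for dependent in dependents.get(node, []):
            if dependent not in failed:
                failed.add(dependent)
                order.append(dependent)
                queue.append(dependent)
    return order


# Hypothetical supplier -> dependents map
dependents = {
    "gas": ["power"],
    "power": ["telecom", "water", "rail"],
    "telecom": ["finance", "rail"],
    "water": ["healthcare"],
}
print(cascade(dependents, "power"))
# ['power', 'telecom', 'water', 'rail', 'finance', 'healthcare']
```

A failure in the water network stays local, whereas a failure in power (or in gas, which feeds power) reaches every sector in the map.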

Figure 22.4 Interdependencies between present infrastructures. (From [31], reprinted with permission.)

Besides the physical protection of territory, citizens, critical assets and activities, the security of information and computer systems is one of the greatest challenges for a country. Information and communication technologies have enhanced the efficiency and comfort of civil society on the one hand, but have added complexity and vulnerability on the other. Cyber security consists of protecting information and property from hackers, corruption or natural disaster, while keeping them accessible and productive for their intended users. This problem is pervasive in nearly all the systems supporting a nation: finance, energy, healthcare and transportation. The trend toward mobile communications is revealing new cyber vulnerabilities. For instance, the sheer number of mobile endpoints gives more cover to hackers launching a cyber attack from a mobile device. The mobile infrastructure is therefore becoming a critical infrastructure as well [33].

Today the challenge is to understand this new scenario and to apply new and efficient information fusion algorithms to large integrated systems [34]. Integrating such heterogeneous information requires new data and information fusion algorithms capable of producing an operational picture. In a scenario where attacks can be carried out in unconventional ways, information from heterogeneous sources may appear uncorrelated, yet it can be related and hence exploited through fusion. Particular attention is therefore due to the information sources. Section 2.22.4 is devoted to this aspect of the problem and gives an overview of the sensors and systems that traditionally provide information.

2.22.3 Definitions and background

Before addressing data fusion for Homeland Protection in more detail, it is useful to briefly review the evolution of data fusion. It is also useful to review the more recent definition of new paradigms, including high-level data fusion and information fusion.

A definition of data fusion is provided in [35]: “Data fusion is a process that combines data and knowledge from different sources with the aim of maximizing the useful information content, for improved reliability or discriminant capability, while minimizing the quantity of data ultimately retained.” Another definition comes from the Joint Directors of Laboratories (JDL) Data Fusion Subpanel (DFS). In the latest revision of the JDL data fusion model, Steinberg and Bowman [36] settle on the following short definition: “Data fusion is the process of combining data or information to estimate or predict entity states.” Owing to its generality, the JDL definition encompasses the previous one. One aspect of the data fusion process is not included in the first definition and is only implicit in the second. This aspect is process refinement, i.e., the improvement of the data fusion process and of data acquisition. Many authors consider process refinement and data fusion to be so closely coupled that process refinement should be regarded as part of the data fusion process. Data fusion is not a new technique in itself. Rather, it is a framework for incorporating reasoning and learning with perceived information into systems, using both traditional and new areas of research. These areas include decision theory, management of uncertainty, digital signal processing, and computer science. The data fusion process comprises techniques for data reduction, data association, resource management, and fusion of uncertain, incomplete, and contradictory information.

In 1986 an effort to standardize the terminology related to data fusion began, and the JDL data fusion working group was established. The result of that effort was a process model for data fusion and a data fusion lexicon. The so-called JDL fusion model [37] is a functional model, developed to overcome potential confusion in the community and to improve communication among military researchers and system developers. The model provides a common frame of reference for fusion discussions and helps in understanding and recognizing the problems to which data fusion is applicable. The first issue of the model, dated 1988, provided four fusion levels:

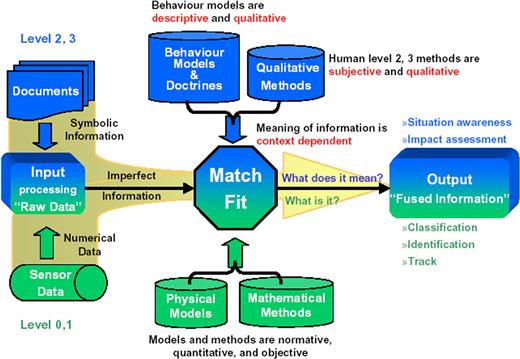

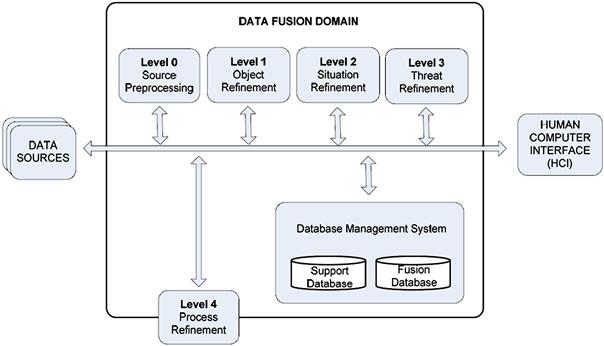

In 1998 Steinberg et al. [38] revised and expanded the JDL model to broaden the functional model and the related taxonomy beyond the original military focus. They introduced a level 0 for the estimation and prediction of signal/object observable states on the basis of pixel/signal-level data association and characterization. They also suggested renaming and reinterpreting levels 2 and 3 so that they focus on understanding the external world, beyond military situation and threat. Figure 22.5 reports a block diagram of this functional model. Although originally developed for military applications, the model is generally applicable. Furthermore, the model does not assume that its functions are automated; they could equally well be performed by humans. Hence, the model is both general and flexible. The levels of the revised JDL model specify logical separations in the data fusion process. They divide information into different levels of abstraction according to the kind of information each level produces: the lower levels yield more specific information, and the higher levels more general information. The model is divided into the following five levels [18]:

• Level 0—sub-object assessment: covers pre-detection activities such as pixel or signal processing and spatial or temporal registration. Level 0 deals with the estimation and prediction of signal/object observable states on the basis of pixel/signal-level data association and characterization.

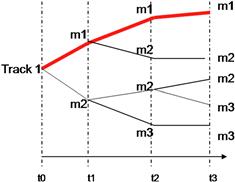

• Level 1—object assessment: is concerned with the estimation and prediction of target location, behavior or identity. This level is sometimes referred to as multi-sensor data fusion or multi-sensor integration. Here, data are combined to assign dynamic features (e.g., velocity) as well as static features (e.g., identity) to objects, hence adding semantic labels to data. This level includes techniques for data association and object management, including the creation and deletion of hypothesized objects and the update of their states. Level 1 addresses the following functions: data alignment, data/object correlation, object positional/kinematic/attribute estimation, object identity estimation.

• Level 2—situation assessment: investigates the relations among entities, such as force structure and communication roles. This level involves the aggregation of level 1 entities into higher-level, more abstract entities, and the relations between entities. An entity at this level might be a pattern of connected level 1 objects. Input data are assessed with respect to the environment, the relationships among level 1 entities, and entity patterns in space and time. Level 2 addresses the following functions: object aggregation, contextual interpretation/fusion, event/activity aggregation, multi-perspective assessment.

• Level 3—impact assessment: outlines sets of possible courses of action and their effects on the current situation. Impact assessment is sometimes called significance estimation or threat refinement. It estimates and predicts the combined effects of system control plans and of the level 2 entities on system objectives, possibly including estimated or predicted plans of other agents in the environment. Level 3 addresses the following functions: estimate/aggregate force capabilities, predict enemy intent, identify threat opportunities, estimate implications, multi-perspective assessment.

• Level 4—process refinement: is an element of Resource Management used to close the loop by re-tasking resources to support the objectives of the mission. Process refinement evaluates the performance of the data fusion process during its operation and encompasses everything that refines it. Examples include acquisition of more relevant data, selection of more suitable fusion algorithms, and optimization of resource usage with respect to, for instance, electrical power consumption. Process refinement is sometimes called process adaptation to emphasize that it is dynamic and should be able to evolve with respect to both its internal properties and the surrounding environment. In some literature the function of this level is handled by a so-called meta-manager or meta-controller. It is also instructive to compare level 4 fusion with the concept of covert attention in biological vision, which involves, e.g., sifting through an abundance of visual information and selecting the properties to extract. Level 4 addresses the following functions: evaluation (real-time control/long-term improvement), fusion control, source requirements, mission management.
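The level 1 tasks of data association and object management can be illustrated with a global nearest-neighbour (GNN) assignment. GNN is one classical technique among several; others include JPDA and multiple-hypothesis tracking. The isotropic innovation variance, the 99% gate and the brute-force search are simplifying assumptions. A measurement left unassigned would typically seed a tentative new track, while a track that repeatedly receives no update would eventually be deleted.

```python
import itertools

GATE = 9.21  # assumed gate: 99% chi-square threshold for 2 degrees of freedom


def normalized_distance2(pred, meas, var):
    """Squared Mahalanobis distance, assuming an isotropic innovation variance `var`."""
    return ((pred[0] - meas[0]) ** 2 + (pred[1] - meas[1]) ** 2) / var


def gnn_associate(predictions, measurements, var, gate=GATE):
    """Brute-force global nearest-neighbour association with gating.

    Returns ({track index: measurement index or None}, unassigned measurement indices).
    Exhaustive search is only practical for a handful of tracks and measurements."""
    choices = [None] + list(range(len(measurements)))
    best, best_cost = None, float("inf")
    for combo in itertools.product(choices, repeat=len(predictions)):
        used = [m for m in combo if m is not None]
        if len(used) != len(set(used)):
            continue                      # a measurement may update at most one track
        cost = 0.0
        for t, m in enumerate(combo):
            if m is None:
                cost += gate              # penalty for leaving a track without an update
                continue
            d2 = normalized_distance2(predictions[t], measurements[m], var)
            if d2 > gate:
                break                     # pairing falls outside the validation gate
            cost += d2
        else:                             # runs only if no pairing was rejected
            if cost < best_cost:
                best, best_cost = combo, cost
    assigned = {m for m in best if m is not None}
    unassigned = [m for m in range(len(measurements)) if m not in assigned]
    return dict(enumerate(best)), unassigned


tracks = [(0.0, 0.0), (10.0, 0.0)]                 # predicted positions (hypothetical)
plots = [(0.5, 0.2), (9.6, -0.3), (30.0, 30.0)]    # new measurements (hypothetical)
assignment, leftovers = gnn_associate(tracks, plots, var=1.0)
```

Each track takes its nearby plot, and the distant third plot is left over as a candidate for track initiation.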

Figure 22.5 JDL model. (From [40], reprinted with permission.)

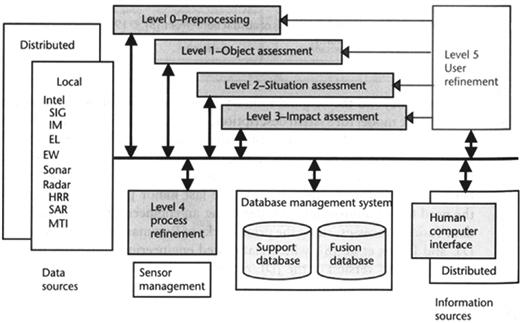

The 1998 revised JDL fusion model recognized the original level 4 Process Refinement function as a Resource Management function. In 2002 a level 5, named User Refinement, was added to the JDL model [39,40] to support a user's trust, workload, attention, and situation awareness. Level 5 was added mainly to distinguish machine-process refinement from user refinement, whether of human control actions or of the user's cognitive model. In many cases the data fusion process is focused on the machine point of view. Full advantage can be taken, however, by also considering the human factor, both as a qualified expert who refines the fusion process and as a customer for whom the fusion system is designed. Figure 22.6, taken from [40], shows the JDL fusion model including level 5.
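Closing the loop through Resource Management can be pictured with a toy level 4 rule. A single sensor with one look per cycle is re-tasked, greedily, to the track whose uncertainty it would reduce the most. The scalar random-walk model and the greedy criterion are assumptions for illustration. With equally accurate looks this reduces to observing the most uncertain track, whereas operational schedulers also weigh threat priority, sensor cost and power.

```python
def posterior_var(prior_var, meas_var):
    """Scalar Kalman variance after one measurement of variance `meas_var`."""
    return prior_var * meas_var / (prior_var + meas_var)


def greedy_tasking(track_vars, meas_var, process_noise, looks):
    """Greedy sensor re-tasking: at each look, observe the track whose variance drops most.

    Between looks every track's variance grows by `process_noise` (random-walk model)."""
    v = list(track_vars)
    plan = []
    for _ in range(looks):
        v = [x + process_noise for x in v]                    # prediction step for all tracks
        gains = [x - posterior_var(x, meas_var) for x in v]   # variance reduction per choice
        k = max(range(len(v)), key=gains.__getitem__)
        v[k] = posterior_var(v[k], meas_var)                  # update only the observed track
        plan.append(k)
    return plan, v


plan, final_vars = greedy_tasking([4.0, 25.0, 9.0], meas_var=4.0, process_noise=1.0, looks=3)
print(plan)   # [1, 2, 0]
```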

Figure 22.6 JDL model including level 5. (From [40], reprinted with permission.)

Later, a level 6, Mission Management, was also added [41]; this level tackles the adaptive determination of the spatial-temporal control of assets (e.g., airspace operations), and route planning and goal determination to support team decision making and actions (e.g., theater operations), subject to social, economic, and political constraints.

Figure 22.7 shows a multi-sensor data fusion architecture with a representation of the levels involved in each data fusion process. Level 0 and level 1 concern the combination of data from different sensors, while level 2 and level 3 are often referred to as information fusion. Under the proposed partitioning scheme, the same entity can simultaneously be the subject of level 0, 1, 2, and 3 fusion processes. Entity features can be estimated from one or more entity signal observations (e.g., pixel intensities, emitter pulse streams) via a level 0 data preparation/association/estimation process. The identity, location, track, and activity state of an entity (whether it be a man, a vehicle, or a military formation) can be estimated on the basis of attributes inferred from one or more observations, i.e., via a level 1 data preparation/association/estimation process. The same entity's compositional or relational state (e.g., its role within a larger structure and its relations with other elements of that structure) can be inferred via level 2 processes. Thus, a single entity (anything with internal structure, whether man, machine, or mechanized infantry brigade) can be treated either as an individual, subject to level 1 observation and state estimation, or as a “situation,” subject to compositional analysis via level 2 entity/entity association and aggregate state estimation. The impact of a signal, entity, or situation on the user's goal or mission can then be predicted via a level 3 process, based upon an association of these to alternative courses of action for each entity.

Other fusion models have also been developed from different perspectives, including purely computational ones and ones based on human information processing. An overview of these models follows [42].

The DIKW (Data, Information, Knowledge, and Wisdom) hierarchy [43] organizes data, information, knowledge, and wisdom in layers with an increasing level of abstraction and added knowledge, starting from the bottommost data layer. The hierarchy can be considered similar to the JDL data fusion model, because both start from raw transactional data and yield knowledge at increasing levels of abstraction.

The JDL model and many other computational models do not simulate the complex human cognitive process that leads a person to “become aware,” because they do not model the fusion process from a human perspective. In 1988, Endsley defined situation awareness as “the perception of the elements in the environment within a volume of time and space, the comprehension of their meaning, and the projection of their state in the near future” [44]. In [45,46] she identified three levels of situation awareness, namely perception, comprehension, and projection, which parallel the corresponding levels in the JDL model. Therefore, the levels in the JDL model can be considered as processes producing results that help a human operator become aware of the situation. In addition to the three aspects identified by Endsley, the model in [47] also includes “intention” (i.e., the understanding of one's own options and courses of action relative to one's own goals) and “metacognition” (i.e., accounting for how reliable one's own situation awareness is likely to be). These levels reflect the fact that situation awareness requires understanding information, events, and the impact of one's own actions on one's own goals and objectives. This process involves several capabilities, such as learning, detection of anomalies, prediction of future behaviors, managing uncertainty, and analysis of heterogeneous sources.

The OODA (Observe-Orient-Decide-Act) loop, developed by Boyd in 1987 [48], is one of the first C4I (Command, Control, Communications, Computers, and Intelligence) architectures and it represents the classic decision-support mechanism in military information operations. Because decision-support systems for situational awareness are tightly coupled with fusion systems, the OODA loop has also been used for sensor fusion [49]. Observations in OODA refer to scanning the environment and gathering information from it; orientation is the use of the information to form a mental image of the circumstances; decision is considering options and selecting a subsequent course of action; and action refers to carrying out the conceived decision. Bedworth and O’Brien [50] report a comparison of the OODA loop to the levels of the JDL model.

Human information processing can be modeled by the Rasmussen model [51,52]. It is composed of three layers, namely skill-based, rule-based, and knowledge-based processing. The input of the process is a perception (e.g., the detection of a target by a sensor) and the output is an action. An example of a result at the first level is the automatic identification of a tank by processing raw sensor data; at the next level, the composition of an enemy unit can be identified on the basis of the number and relative locations of its elements. Knowledge-based behavior represents the most complex cognitive processing, used to handle novel, complex situations for which no routine or rule is available. An example of this type of processing is the interpretation of unusual behavior and the consequent generation of a course of action based on enemy unit size and behavior.

The Generic Error Modelling System (GEMS) [53] is an extension of Rasmussen's approach that describes the competencies needed by workers to perform their roles in complex systems. GEMS describes three major categories of errors: skill-based slips and lapses, rule-based mistakes, and knowledge-based mistakes.

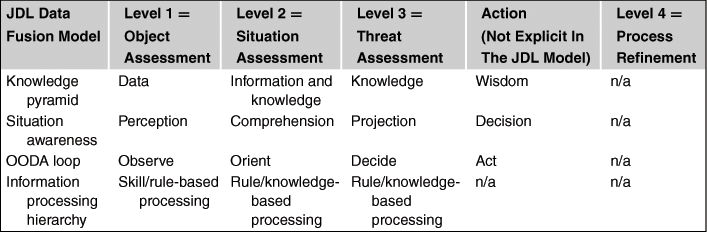

Table 22.1, from [42], shows a correspondence (not a comparison) among the levels and layers of the various models presented above. The table is intended as a guide to identifying the components of a data fusion architecture, and the separation between its columns is not sharp. Notice that the JDL model does not explicitly model the action consequent to threat assessment as a level. Action, in the sense of a reaction, is only partly included in process refinement level 4; for this reason the column “action” has been inserted in the table, to allow a clearer correspondence with the other models, which explicitly account for the reaction. The JDL model is the one that allows the most global view of the data fusion process from an operational perspective: none of the other models has a counterpart to JDL level 4.

2.22.4 The information sources

This section gives a broad, general description of the basic categories of intelligence that are the sources of the data/information employed in the fusion process. The USAF (United States Air Force) in 1998 and later the ODNI (Office of the Director of National Intelligence) in 2008 identified six basic intelligence categories [54,55]:

• Signals Intelligence (SIGINT),

• Imagery Intelligence (IMINT),

• Measurement and Signature Intelligence (MASINT),

• Human Intelligence (HUMINT),

• Open-Source Intelligence (OSINT),

• Geospatial Intelligence (GEOINT).

In addition, there is also Scientific and Technical (S&T) Intelligence, resulting from the analysis of foreign scientific and technical information. An overview of each category follows.

SIGINT is obtained by the interception/detection of electromagnetic (em) emissions. SIGINT includes Electronic Intelligence (ELINT) and Communications Intelligence (COMINT). The former derives from the processing and analysis of em radiation from non-communication emitters (in most cases radars), excluding nuclear detonations and radioactive sources. An emitter may be closely related to a specific threat. The information that can be obtained by a typical ESM (Electronic Support Measures) device consists of an estimate of the emitter category and location (with a certain accuracy) and of various electronic attributes, such as frequency and pulse duration. This information can be employed in a high-level fusion process. COMINT derives from the processing and analysis of intercepted communications from emitters. The communications may be encrypted and may take several forms, such as voice, e-mail, fax, and the like.

IMINT is obtained by sensors working in several frequency bands that are able to produce a view of the scenario or of a specific target: electro-optical sensors, infrared, radar (e.g., Synthetic Aperture Radar (SAR), Inverse SAR (ISAR), and Moving Target Indicator (MTI)), laser, laser radar (LADAR), and multi-spectral sensors. Each sensor has unique capabilities: some work in all weather conditions, some also work at night, and some produce high-quality images with detectable signatures.

MASINT is obtained by collecting and analyzing data from many heterogeneous sensors and instruments, usually working in different regions of the em spectrum or in other domains, such as infrared or magnetic fields. MASINT includes Radar Intelligence (RADINT), Nuclear Intelligence (NUCINT), Laser Intelligence (LASINT), and Chemical and Biological Intelligence (CBINT). RADINT is a specialized form of ELINT that categorizes and locates targets through the active or passive collection of energy reflected from them.

HUMINT is the collection of information derived from human contact. Information of interest might include target name, size, location, time, movement, and intent. HUMINT typically includes structured text (e.g., tables, lists), annotated imagery, and free text (e.g., sentences, paragraphs). HUMINT provides comprehension of adversary actions, capability and capacity, plans and intentions, decisions, research goals, and strategies.

OSINT is publicly available information appearing either in print or in electronic form, including radio, television, newspapers, journals, the Internet, commercial databases, videos, graphics, and drawings. OSINT can be considered a complement to the other intelligence categories and can be used to fill gaps and improve the accuracy of, and confidence in, classified information. The Internet deserves special mention: with its blogs, e-mails, videos, messages, and mobile systems, it favors ever greater interaction among users. Moreover, notice that there is little overall planning in the development of the World Wide Web, but rather a myriad of initiatives by individuals or small groups. Governments have always tried to use telephone tapping, surveillance, and files, i.e., intelligence. Now this is possible on a different scale, given the technical possibilities offered by satellites, mobile phones, credit card management systems, information storage, etc. From the topological point of view, the Internet is a scale-free complex network with a power-law degree distribution of its nodes [56]; this technical remark should be considered in the data exploitation analysis.
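The scale-free remark can be illustrated with a preferential-attachment model (Barabási–Albert style), one standard generative mechanism that yields a power-law degree distribution. The sketch below is an illustration under that assumption, not a model of the actual Internet: each new node attaches to `m` existing nodes chosen with probability proportional to their current degree. The function names, node count, `m`, and seed are hypothetical.

```python
import random
from collections import Counter

def preferential_attachment_graph(n_nodes, m, seed=0):
    """Grow an undirected graph in which each new node links to m distinct
    existing nodes chosen with probability proportional to their degree."""
    rng = random.Random(seed)
    # Start from a small complete graph on m + 1 nodes.
    edges = [(i, j) for i in range(m + 1) for j in range(i + 1, m + 1)]
    # Each node appears here once per incident edge, so a uniform draw
    # from this list is a degree-proportional draw over nodes.
    endpoints = [v for e in edges for v in e]
    for new in range(m + 1, n_nodes):
        targets = set()
        while len(targets) < m:
            targets.add(rng.choice(endpoints))
        for t in targets:
            edges.append((new, t))
            endpoints.extend((new, t))
    return edges

def degree_counts(edges):
    """Degree of every node appearing in the edge list."""
    deg = Counter()
    for u, v in edges:
        deg[u] += 1
        deg[v] += 1
    return deg

edges = preferential_attachment_graph(5000, m=2, seed=42)
deg = degree_counts(edges)
mean_degree = sum(deg.values()) / len(deg)
print(f"nodes={len(deg)} mean degree={mean_degree:.2f} max degree={max(deg.values())}")
```

A few early nodes tend to become hubs with degrees far above the mean, which is the practical point for data exploitation: information tends to concentrate on, and flow through, a small number of highly connected nodes.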

GEOINT is the analysis and visual representation of security-related activities on the Earth, based on data from space-based sensors (radar, optical, IR, multispectral). GEOINT is obtained through the integration of imagery, imagery intelligence, and geospatial information.

2.22.5 Homogeneous sensor networks

Stand-alone sensors usually provide a fragmentary view of a complex situation of interest. A significant enhancement of performance can therefore be achieved by combining networked sensors in close vicinity to the region of interest. Using efficient methods of centralized or decentralized multiple sensor fusion, the quality of the produced situation picture can be significantly improved. In practice, improvements in the following aspects are of interest:

• production of accurate and continuous tracks (e.g., objects, persons, single vehicles, group objects),

• system reaction rates (e.g., track extraction, detection of target maneuvers, track monitoring),

• sustainment of reconnaissance capabilities in case of either system or network failures (e.g., graceful degradation),

• system robustness against jamming and deception,

• compensation of degradation effects (e.g., sensor misalignment, limited sensor resolution),

• robustness against sub-optimal real-time realizations of sensor data fusion algorithms,

• processing of possibly delayed sensor data (e.g., out-of-sequence measurements).

In the following, several sections tackle different aspects related to homogeneous sensor networks.

2.22.5.1 Sensor configuration

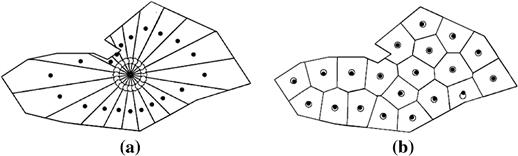

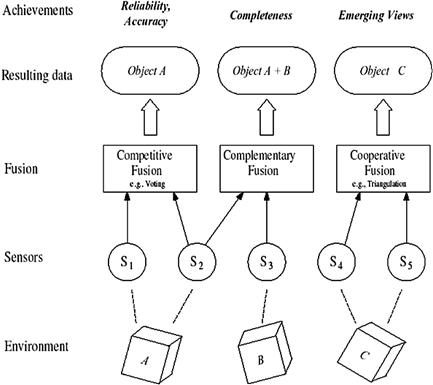

Sensor fusion networks can be categorized according to the type of sensor configuration. Durrant-Whyte distinguishes three types of sensor configuration as schematized in Figure 22.8 [57,58].

Figure 22.8 Sensors configuration (from [57], reprinted with permission).

Competitive sensor data fusion: Sensors are in a competitive configuration if each sensor delivers independent measurements of the same property. The sensor data represent the same attribute, and the aim of fusion is to reduce uncertainty and resolve conflicts. A competitive sensor configuration is also called a redundant configuration. Sensors S1 and S2 in Figure 22.8 represent a competitive configuration, where both sensors redundantly observe the same property of an object in the environment space.
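For the competitive (redundant) configuration, the following is a minimal sketch of the two fusion goals named above, uncertainty reduction and conflict detection, assuming independent, unbiased measurements with known variances. Inverse-variance weighting is used here as one common choice, not as the method of [57,58]; the 3-sigma gate in the hypothetical helper `in_conflict` is likewise an illustrative assumption.

```python
import math

def fuse_competitive(measurements, variances):
    """Inverse-variance weighted fusion of independent, unbiased measurements
    of the same scalar property. Returns (estimate, fused variance)."""
    if not measurements or len(measurements) != len(variances):
        raise ValueError("need one variance per measurement")
    weights = [1.0 / v for v in variances]
    total = sum(weights)
    estimate = sum(w * z for w, z in zip(weights, measurements)) / total
    return estimate, 1.0 / total

def in_conflict(z1, v1, z2, v2, gate=3.0):
    """Flag two readings whose difference exceeds `gate` standard deviations
    of the difference; such pairs should not be blindly averaged."""
    return abs(z1 - z2) / math.sqrt(v1 + v2) > gate

# Hypothetical range readings (m) and variances (m^2) from S1 and S2.
print(fuse_competitive([100.0, 104.0], [4.0, 4.0]))   # (102.0, 2.0)
print(in_conflict(100.0, 4.0, 104.0, 4.0))            # False
print(in_conflict(100.0, 4.0, 130.0, 4.0))            # True
```

With two equally accurate sensors the fused variance is half that of either sensor; with unequal accuracies the estimate leans toward the more accurate one.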

Complementary sensor data fusion: A sensor configuration is called complementary if the sensors do not directly depend on each other but can be combined to give a more complete image of the phenomenon under observation. Fusion of the sensor data provides an overall, complete model. Examples of a complementary configuration are the employment of multiple cameras, each observing a disjoint part of a room, the use of multiple spectral signatures to identify a land cover type, or the use of different waveforms to identify an aircraft type. Sensors S2 and S3 in Figure 22.8 represent a complementary configuration, since each sensor observes a different part of the environment space.

In both competitive and complementary sensor configurations, data fusion improves the accuracy with which target characteristics are estimated. In their seminal work, H. Cramér and C.R. Rao showed how to compute the best theoretical accuracy that can be achieved by an estimator: the mean square error (more precisely, the error covariance matrix) of any unbiased estimator is bounded from below by the inverse of the so-called Fisher Information Matrix (FIM). The computation of the CRLB (Cramér-Rao Lower Bound) applies to problems involving the maximum likelihood estimation of unknown constant parameters from noisy measurements [59]. The best achievable improvement of target location and track accuracy can be quantified by the reduction of the CRLB resulting from track fusion. In [60] this computation is reported for the fusion of data from two sensors with an ideal unit detection probability. In [61,62] the same computation has been proposed for a detection probability less than one and a false alarm probability greater than zero.
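The additive structure of the Fisher information is what makes the CRLB reduction easy to quantify. The sketch below assumes two independent sensors that each deliver a linear, Gaussian 2-D position fix with unit detection probability and no false alarms (the simplest case, in the spirit of [60] but not its derivation): the fused FIM is the sum of the individual FIMs, and the fused bound is its inverse. The geometry (orthogonal lines of sight, 5 m range and 50 m cross-range errors) and the helper `position_covariance` are hypothetical.

```python
import numpy as np

def position_covariance(los_angle, sigma_range, sigma_cross):
    """Covariance of a 2-D position fix whose error is sigma_range along the
    line of sight (angle los_angle, radians) and sigma_cross across it."""
    c, s = np.cos(los_angle), np.sin(los_angle)
    rot = np.array([[c, -s], [s, c]])
    return rot @ np.diag([sigma_range**2, sigma_cross**2]) @ rot.T

R1 = position_covariance(0.0, sigma_range=5.0, sigma_cross=50.0)
R2 = position_covariance(np.pi / 2, sigma_range=5.0, sigma_cross=50.0)

# For independent Gaussian sensors the Fisher information matrices add.
J1, J2 = np.linalg.inv(R1), np.linalg.inv(R2)
crlb_1 = np.linalg.inv(J1)
crlb_2 = np.linalg.inv(J2)
crlb_fused = np.linalg.inv(J1 + J2)

# The trace of a bound is a lower bound on the total mean square error.
print("RMS bound, sensor 1 alone:", np.sqrt(np.trace(crlb_1)))
print("RMS bound, sensor 2 alone:", np.sqrt(np.trace(crlb_2)))
print("RMS bound, fused         :", np.sqrt(np.trace(crlb_fused)))
```

With orthogonal lines of sight, each sensor's accurate range direction covers the other's poor cross-range direction, so the fused RMS bound drops from about 50 m to about 7 m.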

Cooperative sensor data fusion: A cooperative sensor network uses the information provided by two independent sensors to derive information that would not be available from the single sensors. An example of a cooperative sensor configuration is stereoscopic vision: by combining two-dimensional images from two cameras at slightly different viewpoints, a three-dimensional image of the observed scene is derived. Cooperative sensor fusion is the most difficult to design, because the resulting data are sensitive to the inaccuracies of all the individual participating sensors. Thus, in contrast to competitive fusion, cooperative sensor fusion generally decreases accuracy and reliability. Sensors S4 and S5 in Figure 22.8 represent a cooperative configuration: both sensors observe the same object, but the measurements are used to form an emerging view of object C that could not have been derived from the measurements of S4 or S5 alone.
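Stereoscopic vision also shows why cooperative fusion is sensitive to every participating sensor. For a rectified stereo pair under the pinhole camera model (an assumption; real systems also need calibration and feature matching), depth is Z = f·B/d, where f is the focal length, B the baseline, and d the disparity between the two image coordinates; a disparity error in either camera therefore propagates to a depth error of roughly Z²/(f·B) per pixel. The focal length, baseline, and pixel values below are hypothetical.

```python
def stereo_depth(x_left, x_right, focal_px, baseline_m):
    """Depth of a point from a rectified stereo pair (pinhole model):
    Z = f * B / d, with disparity d = x_left - x_right in pixels."""
    disparity = x_left - x_right
    if disparity <= 0:
        raise ValueError("point must have positive disparity")
    return focal_px * baseline_m / disparity

def depth_sensitivity(depth_m, focal_px, baseline_m, disparity_error_px):
    """First-order depth error caused by a disparity error in either image:
    |dZ| ~= Z**2 / (f * B) * |dd|."""
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_error_px

f, B = 800.0, 0.5                          # hypothetical focal length (px), baseline (m)
z = stereo_depth(420.0, 400.0, f, B)       # disparity 20 px
print(z, depth_sensitivity(z, f, B, 1.0))  # 20.0 1.0
```

Doubling the range from 20 m to 40 m quadruples the depth error produced by the same one-pixel error (from 1 m to 4 m), and that error can originate in either camera.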

These three categories of sensor configuration are not mutually exclusive. Many applications implement aspects of more than one of the three types. An example of such a hybrid architecture is the use of multiple cameras to monitor a given area. In regions covered by two or more cameras, the sensor configuration can be competitive or cooperative; in regions observed by only one camera, it is complementary.

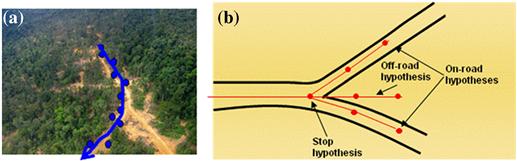

2.22.5.2 Classical approach to surveillance

Sensor networks have countless applications: for example, in computer science and telecommunications; in biology, where they can be used to monitor the behavior of animal species such as birds or fish; and in habitat monitoring, where they can be used to provide real-time rainfall and water level information to evaluate the possibility of flooding. In the field of Homeland Protection, one of the main tasks assigned to a sensor network is surveillance in its most general sense. Automatic surveillance is the process of monitoring the behavior of selected objects (targets and/or anomalies) inside a specific area by means of sensors. A target is generally an object (e.g., a tank close to a land border or a rubber boat approaching the coast) whose presence and characteristics can be detected and estimated by the sensor; an anomaly is an unusual behavior (e.g., a jeep moving off-road, an increase in the radioactivity level within an area) that can be revealed by the sensor. Sensors typically provide the following functions:

• detection of targets or anomalies inside the surveillance area,

• estimation of target position or the anomaly localization and extension,

• monitoring of the target kinematics (tracking) or of the anomaly behavior.

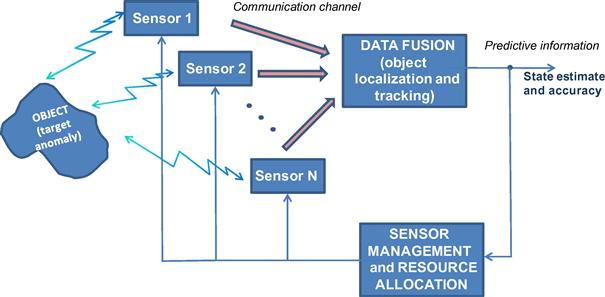

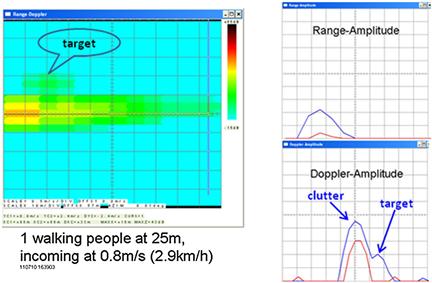

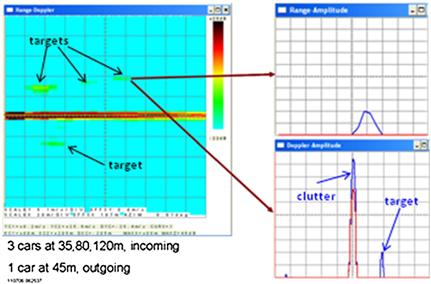

To perform these functions, the sensors can be organized according to several approaches. The classical approach to the surveillance of wide areas is based on the use of a single sensor or a few sensors with long-range capabilities. The signal received by each sensor is processed by suitable digital signal processing subsystems. In this case the sensors are costly, with adequate computation and communication capabilities. Sensors are normally located at properly selected sites to mitigate terrain masking problems; nevertheless, their performance varies with the location of the target inside the surveillance area. Typical sensors are radars (ground-based, airborne, shipborne, or space-based), infrared or TV cameras, and seismic, acoustic, and radiation sensors. Usually in this kind of network, as represented in Figure 22.9, the information traffic flows from the sensor nodes to a single sink node, called the information fusion center, which performs target localization and tracking. On the basis of the information received from the sensors, the fusion center monitors the area where the sensors are deployed and decides which actions to take on the basis of the state estimates and their accuracy (e.g., a covariance matrix for a Kalman filter or a particle cloud for a particle filter).
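The fusion-center role described above can be sketched with a linear Kalman filter that sequentially processes the position reports of two sensors at each scan. This is a minimal sketch under stated assumptions: a 1-D constant-velocity target, synchronous reports, known measurement variances (the `radar` and `camera` values are hypothetical), and perfect association; references [63,64] are not claimed to use this exact filter. The covariance `P` is the accuracy information on which the fusion center would base its decisions.

```python
import numpy as np

def kf_predict(x, P, F, Q):
    """Propagate the state estimate and covariance one scan ahead."""
    return F @ x, F @ P @ F.T + Q

def kf_update(x, P, z, H, R):
    """Standard Kalman measurement update with one sensor report."""
    S = H @ P @ H.T + R                      # innovation covariance
    K = P @ H.T @ np.linalg.inv(S)           # Kalman gain
    x = x + K @ (z - H @ x)
    P = (np.eye(len(x)) - K @ H) @ P
    return x, P

dt = 1.0
F = np.array([[1.0, dt], [0.0, 1.0]])                  # constant velocity
Q = 0.01 * np.array([[dt**3 / 3, dt**2 / 2], [dt**2 / 2, dt]])
H = np.array([[1.0, 0.0]])                             # position-only reports
R = {"radar": np.array([[4.0]]), "camera": np.array([[1.0]])}  # hypothetical

rng = np.random.default_rng(0)
x_true = np.array([0.0, 2.0])                          # truth: no process noise
x_est, P = np.array([0.0, 0.0]), np.diag([100.0, 10.0])
for scan in range(20):
    x_true = F @ x_true
    x_est, P = kf_predict(x_est, P, F, Q)
    for Rs in R.values():                              # reports reach the center
        z = H @ x_true + rng.normal(0.0, np.sqrt(Rs[0, 0]), size=1)
        x_est, P = kf_update(x_est, P, z, H, Rs)
print("truth", x_true, "estimate", x_est, "1-sigma", np.sqrt(np.diag(P)))
```

Processing the two reports one after the other is equivalent, for independent sensor noises, to a single update with both measurements stacked; the sequential form is simply easier to extend to asynchronous sensors.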

In [63] an example of a network of high-performance radars for Homeland Security applications with a centralized data fusion process is described. The same classical approach is presented in [64], where this kind of sensor network is employed for natural resource management and bird aircraft strike hazard (BASH) applications.

However, if an intruder reaches and neutralizes the fusion center, communication among the network nodes is interrupted, and the whole network risks becoming useless as a network even if the individual sensors are all still working.

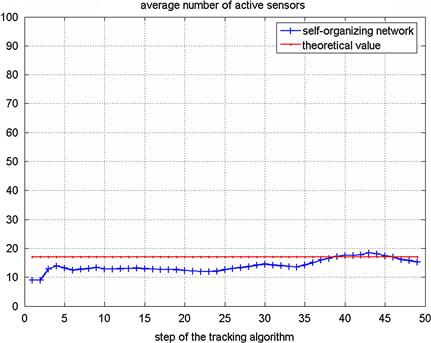

2.22.5.3 Collaborative signal and information processing (CSIP)

Nowadays, a novel approach to automatic surveillance has been adopted, based on the use of many sensors with short-range capabilities, low cost, and limited computation and communication capabilities. With a huge number of sensors, the use of information fusion centers is impractical, and the network's operation relies on information exchange between “near-by” sensors. The sensors can be placed at fixed positions in the territory, but they could also be deployed adaptively as the scenario changes. There are several approaches: for example, the sensors can be randomly distributed inside the surveillance area and, if their number is high, the performance of the surveillance system can be considered independent of the location of the targets; the signal received by each sensor is then processed using the computational capabilities of a sub-portion of the sensor system and employed to reorganize the network dynamically. Sensors may be agile in a variety of ways, e.g., in the ability to reposition, point an antenna, or choose a sensing mode or waveform. Notice that the number of potential taskings of the network grows exponentially with the number of sensors. The goal of sensor management in a large network is to choose actions for individual sensors dynamically so as to maximize overall network utility. This process is called Collaborative Signal and Information Processing (CSIP) [65]. One of the central issues for CSIP to address is energy-constrained dynamic sensor collaboration: how to dynamically determine who should sense, what needs to be sensed, and to whom the information must be passed. This kind of processing limits power consumption.
By applying a surveillance strategy that accounts for the target tracking accuracy and the random sensor locations, only a limited number of sensors are awake and follow/anticipate the target movement; thus, the network self-organizes to detect and track the target, which is efficient from the energy point of view, since sensor prime power is limited and only a reduced number of sensors work at any time in the whole network. For example, in [66], instead of requesting data from all the sensors, the fusion center iteratively selects sensors for target localization: first, a small number of anchor sensors send their data to the fusion center to obtain a coarse location estimate; then, at each step, a few non-anchor sensors are activated to send their data to the fusion center to refine the location estimate iteratively. Moreover, the possibility of actively probing certain nodes allows multiple interpretations of an event to be disambiguated.
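The iterative scheme of [66] can be illustrated with range-only localization. The sketch below is an illustration under assumptions, not the algorithm of [66]: the fusion center computes a coarse fix from the anchor sensors by linear least-squares trilateration, then at each step wakes the `per_step` idle sensors closest to the current estimate (a hypothetical selection rule) and refines the fix. Only the activated sensors are queried, which is where the energy saving comes from; the deployment, noise level, and function names are hypothetical.

```python
import numpy as np

def trilaterate(sensors, ranges):
    """Linear least-squares position fix from range measurements, obtained
    by subtracting the first sensor's range equation from the others."""
    s0, r0 = sensors[0], ranges[0]
    A = 2.0 * (sensors[1:] - s0)
    b = (np.sum(sensors[1:] ** 2, axis=1) - np.sum(s0 ** 2)
         - ranges[1:] ** 2 + r0 ** 2)
    x, *_ = np.linalg.lstsq(A, b, rcond=None)
    return x

def iterative_localization(sensors, measure, anchors, per_step=2, steps=3):
    """Coarse fix from the anchors, then repeatedly wake the `per_step`
    idle sensors closest to the current estimate and refine the fix."""
    ranges = {i: measure(i) for i in anchors}     # only queried nodes transmit
    active = list(anchors)
    x = trilaterate(sensors[active], np.array([ranges[i] for i in active]))
    for _ in range(steps):
        idle = [i for i in range(len(sensors)) if i not in ranges]
        if not idle:
            break
        idle.sort(key=lambda i: np.linalg.norm(sensors[i] - x))
        for i in idle[:per_step]:
            ranges[i] = measure(i)
            active.append(i)
        x = trilaterate(sensors[active], np.array([ranges[i] for i in active]))
    return x, active

rng = np.random.default_rng(1)
sensors = rng.uniform(0.0, 100.0, size=(30, 2))        # random deployment
target = np.array([40.0, 60.0])
noisy = lambda i: np.linalg.norm(sensors[i] - target) + rng.normal(0.0, 0.5)
x_hat, used = iterative_localization(sensors, noisy, anchors=[0, 1, 2])
print("estimate", x_hat, "sensors queried", len(used), "of", len(sensors))
```

Here 9 of the 30 sensors are queried; a real scheme would also decide when to stop, for example once the estimated accuracy is sufficient.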

In [67] the technique of information-driven dynamic sensor collaboration is introduced. In this case, an information utility measure is defined in terms of statistical entropy and is exploited to evaluate the benefit of employing a part of the network, which is reorganized accordingly. Other cost/utility functions can be employed as criteria to dynamically reorganize the sensor network, as described in [68,69].
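Information-driven collaboration in the sense of [67] can be sketched for a Gaussian belief, whose entropy is 0.5·log((2πe)^n·det P). The code assumes a 2-D position belief, range sensors with known noise, and a first-order (linearized) measurement model; it greedily selects the sensor whose measurement would most reduce the entropy. The function names and the example geometry are hypothetical.

```python
import numpy as np

def gaussian_entropy(P):
    """Differential entropy (nats) of a Gaussian with covariance P."""
    n = P.shape[0]
    return 0.5 * np.log((2.0 * np.pi * np.e) ** n * np.linalg.det(P))

def posterior_cov_range(P, x_hat, sensor, sigma):
    """Covariance after fusing one linearized range measurement."""
    d = x_hat - sensor
    H = (d / np.linalg.norm(d)).reshape(1, 2)       # range Jacobian at x_hat
    return np.linalg.inv(np.linalg.inv(P) + H.T @ H / sigma ** 2)

def select_next_sensor(P, x_hat, sensors, sigmas):
    """Index of the sensor whose measurement most reduces belief entropy,
    together with the expected entropy reduction of every candidate."""
    h0 = gaussian_entropy(P)
    gains = [h0 - gaussian_entropy(posterior_cov_range(P, x_hat, s, sg))
             for s, sg in zip(sensors, sigmas)]
    return int(np.argmax(gains)), gains

P0 = np.diag([100.0, 1.0])                          # belief poor along x
x0 = np.array([0.0, 0.0])
candidates = [np.array([-50.0, 0.0]), np.array([0.0, -50.0])]
best, gains = select_next_sensor(P0, x0, candidates, [1.0, 1.0])
print(best, np.round(gains, 3))
```

The sensor on the x axis is chosen because the belief is most uncertain along x; a sensor that only reduces the already small y uncertainty yields a gain of just 0.5·ln 2 ≈ 0.35 nats. Under the linear Gaussian approximation the posterior covariance does not depend on the measurement value, which is why the utility can be evaluated before the sensor is queried.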

Several analytical efforts have been made to evaluate the performance of such networks in terms of tracking accuracy. As usual, the CRLB has been taken as the reference for the best achievable accuracy; in particular, a new concept of conditional PCRLB (Posterior Cramér-Rao Lower Bound) is proposed and derived in [70]. This quantity depends on the actual observation data up to the current time and implicitly on the underlying system state. Therefore, it adapts to the particular realization of the underlying system state and provides a more accurate and effective online indication of the estimation performance than the unconditional PCRLB. In [71,72] the PCRLB is proposed as a criterion to dynamically select a subset of sensors over time within the network to optimize tracking performance in terms of mean square error. In [73] the same criterion is proposed as a framework for the systematic management of multiple sensors in the presence of clutter.
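For a linear Gaussian model, the unconditional PCRLB obeys the information recursion J(k+1) = (Q + F·J(k)^-1·F^T)^-1 + H^T·R^-1·H, which coincides with the information form of the Kalman filter covariance recursion. The sketch uses it as a greedy, one-sensor-per-step selection criterion in the spirit of [71,72]; the conditional PCRLB of [70] and the clutter case of [73] are not implemented. The constant-velocity model, noise levels, and the two hypothetical sensors (one observing x, one observing y) are assumptions.

```python
import numpy as np

def pcrlb_step(J, F, Q, H, R):
    """One step of the linear-Gaussian PCRLB information recursion:
    J+ = (Q + F J^-1 F^T)^-1 + H^T R^-1 H."""
    J_pred = np.linalg.inv(Q + F @ np.linalg.inv(J) @ F.T)
    return J_pred + H.T @ np.linalg.inv(R) @ H

def select_sensor_pcrlb(J, F, Q, candidates):
    """Index of the (H, R) pair minimizing the trace of the next bound,
    i.e., the predicted lower bound on the total mean square error."""
    bounds = [np.trace(np.linalg.inv(pcrlb_step(J, F, Q, H, R)))
              for H, R in candidates]
    return int(np.argmin(bounds)), bounds

dt, q = 1.0, 0.1
F = np.kron(np.eye(2), np.array([[1.0, dt], [0.0, 1.0]]))   # state [x, vx, y, vy]
Q = q * np.kron(np.eye(2), np.array([[dt**3 / 3, dt**2 / 2], [dt**2 / 2, dt]]))
candidates = [
    (np.array([[1.0, 0.0, 0.0, 0.0]]), np.array([[1.0]])),  # hypothetical x sensor
    (np.array([[0.0, 0.0, 1.0, 0.0]]), np.array([[1.0]])),  # hypothetical y sensor
]
J = np.diag([100.0, 100.0, 0.01, 0.01])    # precise in x, poor in y
choices = []
for k in range(6):
    best, _ = select_sensor_pcrlb(J, F, Q, candidates)
    J = pcrlb_step(J, F, Q, *candidates[best])
    choices.append(best)
print(choices)
```

Starting from a belief that is precise in x but poor in y, the criterion first tasks the y sensor; later choices depend on how the uncertainty grows in each coordinate.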

2.22.5.4 Self-Organizing Sensor Networks

Self-organization can be defined as the spontaneous set-up of a globally coherent pattern out of local interactions among initially independent components. Sensors are randomly spread out over a two-dimensional surveillance area. In a self-organized system, each element affects only nearby elements; distant parts of the system are basically unaffected. Control is distributed, i.e., all the elements contribute to the fulfillment of the task. The system is relatively insensitive to perturbations or errors and has a strong capacity to restore itself. Initially independent components form a coherent whole able to efficiently fulfill a particular function [74]. Flocks of birds, shoals of fish, and swarms of bees are examples of self-organizing systems; they move together in an elegantly synchronized manner without a leader that coordinates them and decides their movement. It has been shown that flocks of birds self-organize into V-formations when they need to travel long distances, saving energy by taking advantage of the upwash generated by neighboring birds. Cattivelli and Sayed [75] propose a model for the upwash generated by a flying bird and show that a flock of birds is able to self-organize into a V-formation as if every bird processed spatial and network information by means of an adaptive diffusion process. This result has interesting implications. First, a simple diffusion algorithm is able to account for the self-organization of birds. Second, according to the model, birds can self-organize on the basis of the upwash generated by the other birds. Third, some information is necessarily shared among birds to reach the optimal flight formation. The paper also proposes a modification to the algorithm that allows birds to reorganize, starting from a V-formation, into a U-formation, leading to an equalization effect in which every bird in the flock experiences approximately the same upwash.
The same bird-flight algorithm is extended in [76] to the problem of distributed detection, where a set of sensors/nodes is required to decide between two hypotheses on the basis of the collected measurements. Each node makes individual real-time decisions and communicates only with its immediate neighbors, so that no fusion center is necessary. The proposed distributed detection algorithms are based on the diffusion strategies described in [77–79], and their performance is evaluated in terms of the classical probabilities of detection and false alarm.

These diffusion detection schemes are attractive in the context of wireless and sensor networks thanks to their intrinsic adaptability, scalability, improved robustness to node and link failure as compared to centralized schemes, and their potential to save energy and communication resources.
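A minimal sketch of diffusion-based distributed detection, assuming Gaussian measurements with a known mean shift `mu` under the signal hypothesis, a ring topology with equal (Metropolis) combination weights, and a decision threshold of zero on the averaged log-likelihood ratio (LLR). Each node first adapts its statistic toward its newest local LLR and then combines only with its two neighbours; this is a simplified adapt-then-combine recursion inspired by [76–79], not their exact algorithms, and `step` is a hypothetical tuning parameter.

```python
import numpy as np

def ring_metropolis_weights(n):
    """Combination matrix for a ring of n >= 3 nodes: every node has degree 2,
    so Metropolis weights give 1/3 to itself and to each neighbour."""
    A = np.zeros((n, n))
    for k in range(n):
        for l in (k - 1, k, k + 1):
            A[k, l % n] = 1.0 / 3.0
    return A

def diffusion_detection(samples, mu, sigma, A, step=0.02):
    """samples[t, k] is the measurement of node k at time t, modelled as
    N(0, sigma^2) under H0 and N(mu, sigma^2) under H1. Returns per-node
    decisions (1 = H1) and the final per-node statistics."""
    stat = np.zeros(samples.shape[1])
    for y in samples:
        llr = (mu * y - mu ** 2 / 2.0) / sigma ** 2    # local log-likelihood ratios
        psi = stat + step * (llr - stat)               # adapt with local data
        stat = A @ psi                                 # combine with neighbours
    return (stat > 0.0).astype(int), stat

rng = np.random.default_rng(7)
A = ring_metropolis_weights(10)
present = 1.0 + rng.normal(size=(500, 10))      # signal present at every node
absent = rng.normal(size=(500, 10))             # noise only
print(diffusion_detection(present, 1.0, 1.0, A)[0])
print(diffusion_detection(absent, 1.0, 1.0, A)[0])
```

Because every node converges to a noisy version of the network-wide average LLR, all nodes tend to reach the same decision without any fusion center; a smaller `step` averages over more samples at the cost of slower reaction to a change of hypothesis.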

2.22.5.5 Self-synchronization mechanism applied to sensor network

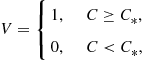

Several studies have shown how a simple self-synchronization mechanism, borrowed from biological systems, can form the basic tool for achieving globally optimal distributed decisions in a wireless sensor network with no need for a fusion center. Self-synchronization is a phenomenon first observed by Christiaan Huygens in 1665 between pendulum clocks hanging from the same wooden beam. Since then, self-synchronization has been observed in a myriad of natural phenomena, from flashing fireflies in South East Asia to singing crickets, from cardiac pacemaker or neuron cells to the menstrual cycles of women living in close contact with each other [80]. The goal of these studies is to find a strategy of interaction among the sensors/nodes that allows them to reach globally optimal decisions, in terms of a “consensus” value, in a totally decentralized manner. Distributed consensus algorithms are indeed techniques widely studied in distributed computing [81,82]. The approaches suggested in [83,84] achieve a form of consensus through self-synchronization that may prove critical in wide-area networks, where propagation delays might induce an ambiguity problem. This problem is overcome in [85–87], where a model of the network and of the sensors is also proposed. Each of the N nodes composing the network is equipped with four basic components: (1) a transducer that senses the physical parameter of interest (e.g., temperature, concentration of contaminants, radiation, etc.); (2) a local processing unit that provides a function of the measurements; (3) a dynamical system, initialized with the local measurements, whose state evolves as a function of its own measurement and of the state of nearby sensors; (4) a radio interface that makes the interactions among the sensors possible. The criterion for reaching a consensus value is the asymptotic convergence of all the state derivatives toward a common value, for any set of initial conditions and for any bounded set of measurements. This condition makes convergence to the final consensus independent of the network graph topology. However, the topology has an impact on several aspects: the overall energy necessary to achieve the consensus and the convergence time. In general, there is a trade-off between the local power transmitted by each sensor and the convergence time, depending on the algebraic connectivity of the network graph, as shown in [88]. In practical applications these aspects cannot be neglected; for instance, the design of a network should account for the precision to be achieved and the time needed to reach the consensus value at the given precision, versus such constraints as the energy limitations of the sensors. A global overview of the problem is given in [89].
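The self-synchronization criterion (all state derivatives converging to a common value) can be illustrated with a simplified model that is an assumption here, not the exact model of [85–87]: node i holds a local value g_i computed from its measurement and a state x_i(t) obeying dx_i/dt = g_i + K Σ_j a_ij (x_j − x_i), with linear coupling and a symmetric adjacency matrix a_ij. Under these assumptions the derivatives converge to the average of the g_i, and the slowest error mode decays at a rate set by K times the algebraic connectivity λ2 (the second-smallest Laplacian eigenvalue). The topologies, readings, and step size are hypothetical.

```python
import numpy as np

def laplacian(adj):
    """Graph Laplacian L = D - A of a symmetric adjacency matrix."""
    return np.diag(adj.sum(axis=1)) - adj

def self_synchronize(g, adj, coupling=1.0, dt=0.01, n_steps=5000):
    """Forward-Euler integration of dx_i/dt = g_i + K * sum_j a_ij (x_j - x_i),
    i.e., dx/dt = g - K L x, starting from x(0) = g. Returns the final state
    derivatives, which approach mean(g) at every node."""
    L = laplacian(adj)
    x = g.copy()
    for _ in range(n_steps):
        x = x + dt * (g - coupling * (L @ x))
    return g - coupling * (L @ x)

n = 10
ring = np.zeros((n, n))
for i in range(n):
    ring[i, (i + 1) % n] = ring[(i + 1) % n, i] = 1.0
complete = np.ones((n, n)) - np.eye(n)
rng = np.random.default_rng(3)
g = rng.uniform(20.0, 30.0, size=n)     # e.g., local temperature readings

for name, adj in (("ring", ring), ("complete", complete)):
    lam2 = np.linalg.eigvalsh(laplacian(adj))[1]   # algebraic connectivity
    err = np.max(np.abs(self_synchronize(g, adj) - g.mean()))
    print(f"{name}: lambda2 = {lam2:.3f}, max |dx/dt - mean(g)| = {err:.1e}")
```

The complete graph has a much larger λ2 than the ring (10 versus about 0.38), so it synchronizes faster, but it requires every node to reach every other node, which illustrates the power/convergence-time trade-off discussed in [88].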

2.22.5.6 From theory to real application problems

Moving from the functional model to a working implementation in a real environment involves a number of design considerations, including which information sources to use, which fusion architecture to employ, communication protocols, etc.

Admittedly, data fusion is decoupled from the actual number of information sources and, hence, does not necessarily require multiple sensors: fusion may also be performed on a temporal sequence of data generated by a single information source (e.g., a fusion algorithm may be applied to a sequence of images produced by a single camera). However, employing a number of sensors provides many advantages, as explained in the previous sections. Unsurprisingly, there are also difficulties associated with the use of multiple sensors.
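Fusion of a temporal sequence from a single source can be as simple as recursive averaging of co-registered frames of a static scene. The sketch assumes zero-mean noise that is independent between frames and no scene motion (both hypothetical simplifications); under those assumptions, averaging N frames reduces the noise standard deviation by about √N. The scene size, noise level, and function name are hypothetical.

```python
import numpy as np

def temporal_fusion(frames):
    """Recursive running mean of co-registered frames from one sensor:
    m_k = m_{k-1} + (f_k - m_{k-1}) / k (returns None for no frames)."""
    mean = None
    for k, frame in enumerate(frames, start=1):
        frame = np.asarray(frame, dtype=float)
        mean = frame.copy() if mean is None else mean + (frame - mean) / k
    return mean

rng = np.random.default_rng(11)
scene = rng.uniform(0.0, 255.0, size=(64, 64))            # static scene
frames = (scene + rng.normal(0.0, 10.0, size=(64, 64)) for _ in range(100))
fused = temporal_fusion(frames)
print(np.std(fused - scene))   # roughly 10 / sqrt(100) = 1
```

The recursive form needs only the current mean in memory, so frames can be fused as they arrive rather than stored.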

Incorrect sensor registration (misregistration) may cause a failure in the correct association between signals or features of different measurements. This problem, like the similar data association problem, is very important and also applies to single sensor data processing. To perform data registration, the relative locations of the sensors, the relationship between their coordinate systems, and any timing errors must be known, or estimated, and accounted for; otherwise a mismatch between the compiled picture and the truth may result. An overstated confidence in the accuracy of the fused output may also appear, together with inconsistencies between track databases, such as multiple tracks corresponding to a single target. Misregistration can result from location and orientation errors of the sensor relative to its supporting platform, or of the platform relative to the Earth, such as a bearing measurement with an incorrect North alignment. Errors may also be present in the time stamping of the data, and numerical errors may occur when transforming data from one coordinate system to another. Automatic sensor registration can correct for these problems by estimating the measurement bias together with the kinematics of the target. However, the errors in sensor registration still need to be known and accounted for [90]. In [91] an exact maximum likelihood (EML) algorithm for registration is presented, based on a recursive two-step optimization that involves a modified Gauss-Newton procedure to ensure fast convergence. In [92] joint sensor association, registration, and fusion are performed by combining the expectation-maximization (EM) algorithm with the linear Kalman filter (KF) to give simultaneous state and parameter estimates. The same approach can also be followed with nonlinear filtering techniques such as the Extended KF (EKF) and the Unscented KF (UKF), as proposed in [93], where the performance is also evaluated by means of the posterior Cramér-Rao lower bound (PCRLB).
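The idea of estimating the measurement bias together with the target kinematics can be sketched with an augmented-state Kalman filter. This is a minimal illustration under stated assumptions, not the EML or EM-based algorithms of [91]-[93]: a hypothetical one-dimensional target moving at constant velocity is observed by a well-registered reference sensor and by a second sensor with an unknown constant additive offset. The state is [position, velocity, bias], and the two scalar measurements are processed sequentially at each time step. Without the reference sensor the bias would not be observable, since it could not be separated from the target position.

```python
import random


def mat_mul(A, B):
    """Product of two matrices stored as lists of rows."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]


def transpose(A):
    return [list(row) for row in zip(*A)]


def predict(x, P, dt, q):
    """Constant-velocity prediction; the bias state is modeled as constant."""
    F = [[1.0, dt, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    x = [x[0] + dt * x[1], x[1], x[2]]
    P = mat_mul(mat_mul(F, P), transpose(F))
    P[1][1] += q  # process noise on velocity only
    return x, P


def scalar_update(x, P, h, z, r):
    """Kalman update for a scalar measurement z = h . x + noise with variance r."""
    n = len(x)
    Ph = [sum(P[i][k] * h[k] for k in range(n)) for i in range(n)]  # P h^T
    s = sum(h[i] * Ph[i] for i in range(n)) + r                     # innovation variance
    K = [v / s for v in Ph]                                          # Kalman gain
    innovation = z - sum(h[i] * x[i] for i in range(n))
    x = [x[i] + K[i] * innovation for i in range(n)]
    P = [[P[i][j] - K[i] * Ph[j] for j in range(n)] for i in range(n)]
    return x, P


def estimate_bias(true_bias=5.0, sigma=1.0, steps=200, dt=1.0, seed=0):
    """Jointly estimate [position, velocity, bias] from a reference and a biased sensor."""
    rng = random.Random(seed)
    x = [0.0, 0.0, 0.0]
    P = [[100.0 if i == j else 0.0 for j in range(3)] for i in range(3)]
    r = sigma ** 2
    for k in range(1, steps + 1):
        x, P = predict(x, P, dt, q=1e-4)
        true_pos = 1.0 * k * dt  # hypothetical target moving at 1 unit per time step
        z_ref = true_pos + rng.gauss(0.0, sigma)                 # well-registered sensor
        z_biased = true_pos + true_bias + rng.gauss(0.0, sigma)  # misregistered sensor
        x, P = scalar_update(x, P, [1.0, 0.0, 0.0], z_ref, r)     # measures position
        x, P = scalar_update(x, P, [1.0, 0.0, 1.0], z_biased, r)  # measures position + bias
    return x, P


state, cov = estimate_bias()
print("estimated bias: %.2f (true 5.0)" % state[2])
```

Because the bias enters the state vector, the filter's covariance also reports how well the offset is known, which is the kind of information needed to avoid the overstated confidence in the fused output described above.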

Besides spatial sensor registration, temporal alignment cannot be neglected either. For instance, a critical aspect of a sensor network is its vulnerability to nodes that are temporarily asleep because of duty-cycling for battery recharge, to permanent node failures, and even to intentional attacks.

Other realistic problems, such as conflicting information and the validity of noise model assumptions, also affect which fusion techniques can be used. Noisy input data sometimes yield conflicting observations, a problem that has to be addressed and that does not arise in single sensor data processing. Moreover, the operation of multiple sensors has to be coordinated, and information must be shared among them.

2.22.5.7 Rethinking mathematical algorithms for net-centric approaches

Most optimization algorithms have been developed in a centralized framework, i.e., they were conceived to perform a centralized data fusion process. In recent years the trend has been to employ network centric approaches, and mathematical optimization algorithms must be able to support them. In the following, an example of the adaptation of a “centrally conceived” algorithm to this trend is presented.

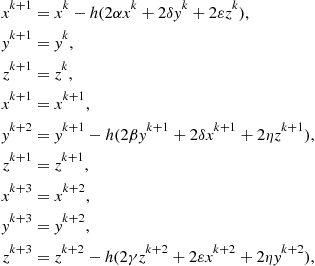

Consider the following minimization problem:

![]() (22.1)

where ![]() are real positive values and the function ![]() represents an ellipsoid function whose axes do not coincide with the reference frame axes if ![]() . The problem of Eq. (22.1) can be solved by the steepest descent method within a centralized fusion process; hence, this approach will be called “centralized steepest descent.” When used to solve minimization problems, the steepest descent method is an iterative procedure that, starting from an initial guess, updates at every iteration the current approximation of the minimizer with a step in the direction opposite to the gradient of the function being minimized. In a network centric approach, the problem may instead be solved by applying the Jacobi method², usually employed for the iterative solution of systems of linear equations.

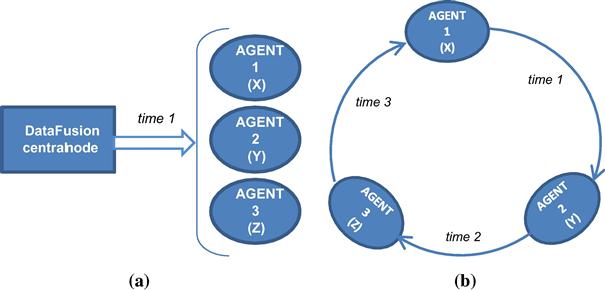

Consider three agents (agents 1, 2, and 3) controlling the three variables ![]() . In the centralized data fusion process, represented in Figure 22.10a, all communication between the three agents takes place at the same instant of time; in the network centric case this does not happen. Consider the model of Figure 22.10b with the following communication scheme:

moreover, the communications among agents are not instantaneous but take place in succession over time.

Figure 22.10 (a) centralized data fusion process model; (b) network centric data fusion process model.

The centralized steepest descent method applied to the function ![]() , given a starting point ![]() , is based on the following iterations:

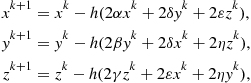

(22.2)

where ![]() and ![]() represents the step employed in the steepest descent method.
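Because the exact form of Eq. (22.1) is not recoverable here, the following sketch assumes a hypothetical tilted ellipsoid written as the quadratic f(x) = 0.5·xᵀAx − bᵀx, with A symmetric positive definite and nonzero off-diagonal entries (so the ellipsoid axes differ from the x, y, z axes). Its gradient is Ax − b, and the centralized update is x ← x − mu·(Ax − b) with a fixed step mu. For a quadratic, a fixed step with 0 < mu < 2/λ_max(A) guarantees convergence. The values of A, b, and mu are illustrative assumptions.

```python
def gradient(A, b, x):
    """Gradient of f(x) = 0.5 x^T A x - b^T x for symmetric A, i.e. A x - b."""
    n = len(x)
    return [sum(A[i][j] * x[j] for j in range(n)) - b[i] for i in range(n)]


def centralized_steepest_descent(A, b, x0, mu, tol=1e-10, max_iter=10_000):
    """Fixed-step steepest descent; every update needs the full current state."""
    x = list(x0)
    path = [list(x)]
    for _ in range(max_iter):
        g = gradient(A, b, x)
        if max(abs(gi) for gi in g) < tol:
            break
        x = [xi - mu * gi for xi, gi in zip(x, g)]  # step opposite to the gradient
        path.append(list(x))
    return x, path


# Hypothetical tilted ellipsoid (off-diagonal terms rotate its axes)
A = [[4.0, 1.0, 0.5],
     [1.0, 3.0, 0.5],
     [0.5, 0.5, 2.0]]
b = [1.0, 2.0, 3.0]
x_min, path = centralized_steepest_descent(A, b, [0.0, 0.0, 0.0], mu=0.2)
print(x_min, len(path))
```

Here mu = 0.2 is safely below 2/5.5, where 5.5 is a Gershgorin bound on the largest eigenvalue of A. The list `path` plays the role of the black trajectory in the comparison figure: each step moves all three coordinates at once, which requires a single node that sees the whole state.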

A network centric steepest descent method can be derived from the communication scheme represented in Figure 22.10b and described above. Given the starting point ![]() , the following iterations can be performed:

(22.3)

where ![]() and

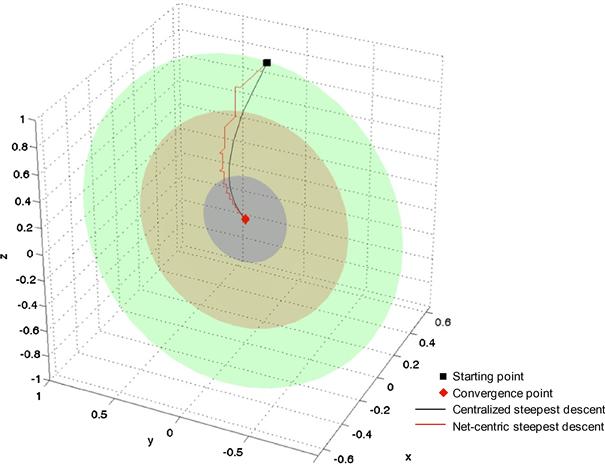

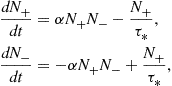

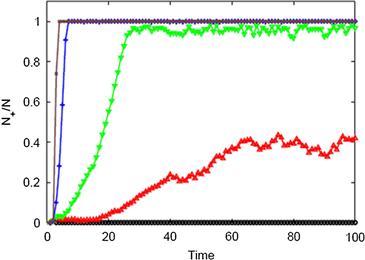

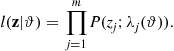

and ![]() represents the step employed in the steepest descent method. Figure 22.11 shows the comparison of the two methods for the previous model. Note that the three agents in the net-centric approach are those looking at the function to be minimized along the x, y, and z axes respectively. The black square and the red diamond in the curves represent respectively the starting point of the iteration and the final position. The black solid line shows the trajectory described by the variables

represents the step employed in the steepest descent method. Figure 22.11 shows the comparison of the two methods for the previous model. Note that the three agents in the net-centric approach are those looking at the function to be minimized along the x, y, and z axes respectively. The black square and the red diamond in the curves represent respectively the starting point of the iteration and the final position. The black solid line shows the trajectory described by the variables ![]() obtained by the application of the centralized steepest descent method; the red solid line shows the behavior of the variables obtained by the net-centric steepest descent method. Note that the red line approaches the minimum by moving along the x, y, and z axes separately. The ellipsoids of Figure 22.11 represent the iso-level surfaces of the objective function. Notice that the telecommunication network modeled for the net-centric steepest descent determines the usual Jacobi iteration employed for the solution of linear systems associated to minimization problems [94–96]. In the following Section 2.22.6.1 this approach is applied to reach the optimal deployment of a sensor network.

obtained by the application of the centralized steepest descent method; the red solid line shows the behavior of the variables obtained by the net-centric steepest descent method. Note that the red line approaches the minimum by moving along the x, y, and z axes separately. The ellipsoids of Figure 22.11 represent the iso-level surfaces of the objective function. Notice that the telecommunication network modeled for the net-centric steepest descent determines the usual Jacobi iteration employed for the solution of linear systems associated to minimization problems [94–96]. In the following Section 2.22.6.1 this approach is applied to reach the optimal deployment of a sensor network.
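The link between per-agent descent and the Jacobi iteration can be sketched as follows, again assuming a hypothetical quadratic f(x) = 0.5·xᵀAx − bᵀx in place of Eq. (22.1), and not reproducing the exact communication scheme of Figure 22.10b. Each agent owns one coordinate and takes a gradient step of size 1/A[i][i] along its own axis, using the values the other agents communicated at the end of the previous sweep. With this step size the update becomes x_i ← (b_i − Σ_{j≠i} A_ij·x_j)/A_ii, which is the classical Jacobi iteration for Ax = b. The matrix is chosen strictly diagonally dominant, a sufficient condition for Jacobi convergence.

```python
def jacobi_agents(A, b, x0, sweeps=200):
    """Agent i updates only x[i] with a step of 1/A[i][i] along its own axis,
    using the other agents' values exchanged after the previous sweep.
    For f(x) = 0.5 x^T A x - b^T x this is the Jacobi iteration for A x = b."""
    n = len(x0)
    x = list(x0)
    for _ in range(sweeps):
        received = list(x)  # snapshot communicated over the network
        for i in range(n):
            # Partial derivative of f along agent i's axis
            grad_i = sum(A[i][j] * received[j] for j in range(n)) - b[i]
            x[i] = received[i] - grad_i / A[i][i]
    return x


def residual(A, b, x):
    """Largest absolute component of A x - b (zero at the minimizer)."""
    n = len(x)
    return max(abs(sum(A[i][j] * x[j] for j in range(n)) - b[i]) for i in range(n))


# Hypothetical strictly diagonally dominant matrix (tilted ellipsoid)
A = [[4.0, 1.0, 0.5],
     [1.0, 3.0, 0.5],
     [0.5, 0.5, 2.0]]
b = [1.0, 2.0, 3.0]
x_net = jacobi_agents(A, b, [0.0, 0.0, 0.0])
print(x_net, residual(A, b, x_net))
```

No agent ever needs the full gradient: each one evaluates only its own partial derivative from the values it has received, which is what makes the scheme compatible with a network without a fusion center.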

2.22.6 Real study cases: novel approaches to sensor networks

This section presents several study cases of sensor networks that employ novel approaches. Section 2.22.6.1 proposes an optimization method, formulated in the network centric framework, to obtain the optimal deployment of a cooperative sensor network; Section 2.22.6.2 describes how to employ so-called bio-inspired models of dynamic sensor collaboration in a chemical sensor network to detect a chemical pollutant; finally, Section 2.22.6.3 describes the typical problem of detecting radioactive sources.

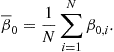

2.22.6.1 A cooperative sensor network: optimal deployment and functioning

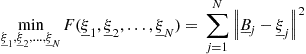

This section presents a mathematical model for the deployment of a sensor network and for the creation of consensus values from the noisy measured data, together with a statistical methodology to detect local anomalies in these data. A local anomaly in the data is associated with the presence of an intruder. The sensor network model presented here is characterized by the absence of a fusion center. In other words, the deployment, the construction of the consensus values, and the detection of local anomalies in the data all result from local interactions between sensors. Nevertheless, these local interactions lead to a global solution of the problem considered. This is an example of a network centric sensor network model. The sensors are assumed to be identical, and they measure a quantity that pertains to the properties of the area under surveillance and is able to reveal the presence of an intruder. In the proposed study case the sensors measure the temperature of the territory at the position, or in the “area,” where they are located; in the absence of anomalies the temperature is uniform over the territory where the sensors are deployed. The sensor measurements are noisy and can be considered synchronous. This measurement process is repeated periodically with a given frequency. From these measurements, a “consensus” temperature pertaining to the territory where the sensors are deployed is deduced, together with an estimate of the magnitude of the noise contained in the data. Finally, using these consensus values as reference values, the individual sensors detect local anomalies. In the following we give some analytical details of the consensus method [97].
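The workflow just described (consensus temperature, consensus noise level, then a local test at each sensor) can be sketched without a fusion center. This is only an illustration under hypothetical assumptions, not the statistical methodology of [97]: the sensors form a ring in which each one talks to two neighbors, a linear average-consensus update is run separately on the readings and on their squares to obtain the mean and the spread, and each sensor flags its own reading when it deviates from the consensus mean by more than k = 3 estimated standard deviations.

```python
import math
import random


def ring_neighbors(n):
    """Hypothetical topology: each sensor talks only to its two ring neighbors."""
    return [[(i - 1) % n, (i + 1) % n] for i in range(n)]


def average_consensus(values, neighbors, eps, iters):
    """Linear average consensus; requires 0 < eps < 1/max_degree (here 1/2)."""
    x = list(values)
    for _ in range(iters):
        x = [xi + eps * sum(x[j] - xi for j in neighbors[i]) for i, xi in enumerate(x)]
    return x


def detect_local_anomalies(z, neighbors, eps=0.3, iters=2000, k=3.0):
    """Each sensor obtains consensus estimates of the mean temperature and of the
    noise spread, then flags its own reading if it deviates by more than k sigma."""
    mean = average_consensus(z, neighbors, eps, iters)
    mean_sq = average_consensus([zi * zi for zi in z], neighbors, eps, iters)
    flags = []
    for zi, m, m2 in zip(z, mean, mean_sq):
        sigma = math.sqrt(max(m2 - m * m, 0.0))  # local estimate of the spread
        flags.append(abs(zi - m) > k * sigma)
    return mean, flags


rng = random.Random(1)
n = 20
z = [20.0 + rng.gauss(0.0, 0.5) for _ in range(n)]  # uniform temperature plus noise
z[7] += 10.0                                         # hypothetical intruder near sensor 7
mean, flags = detect_local_anomalies(z, ring_neighbors(n))
print([i for i, f in enumerate(flags) if f])
```

One limitation of this naive choice: the anomalous reading also inflates the consensus spread, and with the population standard deviation no reading can deviate by more than sqrt(n − 1) sigma. With few sensors a k-sigma test may therefore never fire, which is one reason a more careful statistical test is needed in practice.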

Let ![]() be a bounded connected polygonal domain in two dimensional real Euclidean space R2. The domain

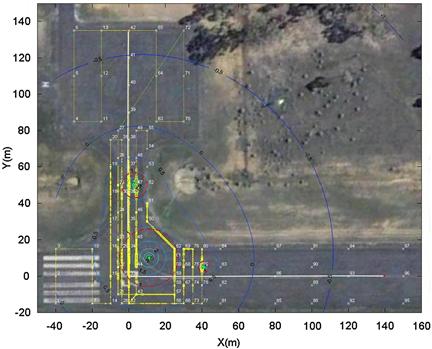

be a bounded connected polygonal domain in two dimensional real Euclidean space R2. The domain ![]() represents the territory where the sensor network must be deployed; in our case the downtown part of the Italian city of Urbino, shown in Figure 22.12. Let

represents the territory where the sensor network must be deployed; in our case the downtown part of the Italian city of Urbino, shown in Figure 22.12. Let ![]() denote the Euclidean norm of in R2. Consider N sensors

denote the Euclidean norm of in R2. Consider N sensors ![]() , located respectively, in the points

, located respectively, in the points ![]() , assumed to be distinct. To the sensor network deployed in the points

, assumed to be distinct. To the sensor network deployed in the points ![]() corresponds a graph whose nodes are the sensors location and whose edges join the sensors able to communicate between themselves. This graph is assumed to be connected and can be imagined as laid on the territory. The assumption that the graph is connected is equivalent to assuming that the sensors constitute a network. For

corresponds a graph whose nodes are the sensors location and whose edges join the sensors able to communicate between themselves. This graph is assumed to be connected and can be imagined as laid on the territory. The assumption that the graph is connected is equivalent to assuming that the sensors constitute a network. For ![]() , a polygonal region

, a polygonal region ![]() is associated to each sensor

is associated to each sensor ![]() ; this region is defined by the condition that the points belonging to

; this region is defined by the condition that the points belonging to ![]() are closest to the sensor

are closest to the sensor ![]() , that is they are closest to

, that is they are closest to ![]() , than to any other of the remaining sensors

, than to any other of the remaining sensors ![]() located in

located in ![]() . It follows:

. It follows:

![]() (22.4)

When, for a given ![]() , the minimizer of the function ![]() is not unique, we attribute ![]() to ![]() , where i is the smallest among the indices that minimize the function f.

The collection of subsets ![]() defined in Eq. (22.4) and further specified by the condition above is a partition of ![]() ; it is the Voronoi partition of ![]() associated with the Voronoi centers ![]() , as represented in Figure 22.13 [98], where the sets ![]() are the Voronoi cells. The sensor ![]() is located at ![]() , with ![]() , and monitors the sub-region ![]() of ![]() . Note that a Voronoi partition of ![]() is associated with each choice of the Voronoi centers ![]() ; together with the graph that defines the communication between the sensors, it constitutes a deployment of the sensors ![]() on the territory ![]() .
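Eq. (22.4) and the tie-breaking rule translate directly into a nearest-sensor assignment. The sketch below assumes a hypothetical discretized domain (cell midpoints of a grid on the unit square instead of the Urbino polygon) and hypothetical sensor positions. It builds the Voronoi cells as lists of grid points and computes each cell's center of mass, the quantity that the deployment criterion of this section compares with the sensor positions.

```python
from math import dist  # Python 3.8+


def voronoi_owner(p, sensors):
    """Index of the sensor nearest to point p, as in Eq. (22.4).

    min() returns the first index attaining the minimum, which implements
    the rule that ties are assigned to the smallest index."""
    return min(range(len(sensors)), key=lambda i: dist(p, sensors[i]))


def voronoi_partition(points, sensors):
    """Group the domain points into one Voronoi cell per sensor."""
    cells = [[] for _ in sensors]
    for p in points:
        cells[voronoi_owner(p, sensors)].append(p)
    return cells


def center_of_mass(cell):
    """Centroid of a (non-empty) discretized cell with uniform density."""
    n = len(cell)
    return (sum(px for px, _ in cell) / n, sum(py for _, py in cell) / n)


def grid(nx, ny):
    """Hypothetical discretization: midpoints of an nx-by-ny grid on the unit square."""
    return [((i + 0.5) / nx, (j + 0.5) / ny) for i in range(nx) for j in range(ny)]


sensors = [(0.25, 0.5), (0.75, 0.5), (0.5, 0.9)]  # hypothetical sensor positions
cells = voronoi_partition(grid(40, 40), sensors)
for s, cell in zip(sensors, cells):
    print(s, len(cell), center_of_mass(cell))
```

A real polygonal territory would replace `grid` with points sampled inside the polygon; the assignment rule itself does not change.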

After the definition of a Voronoi partition of ![]() , we want to determine the optimal one with respect to a pre-specified criterion, that in this study case is the fact that the Voronoi centers

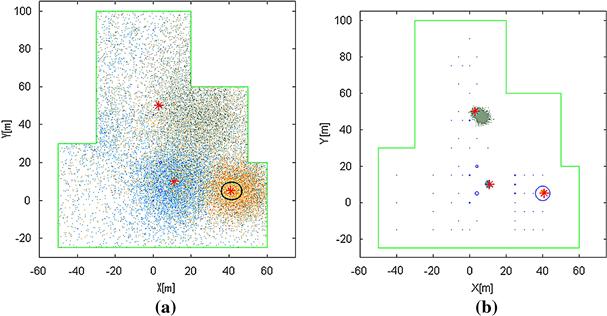

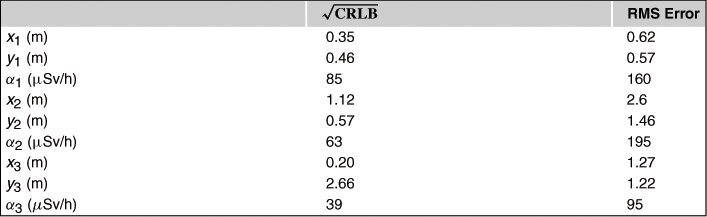

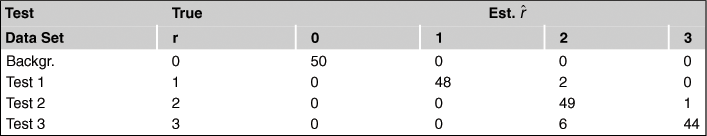

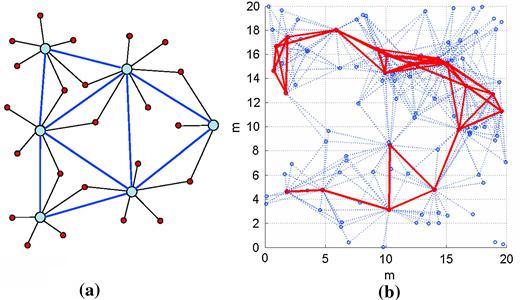

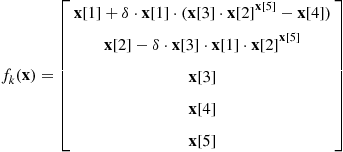

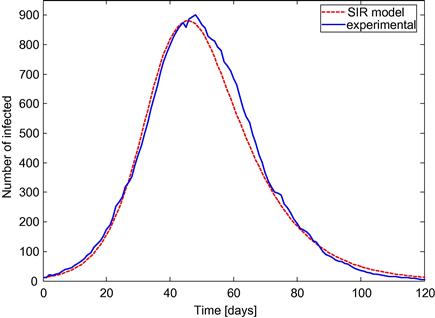

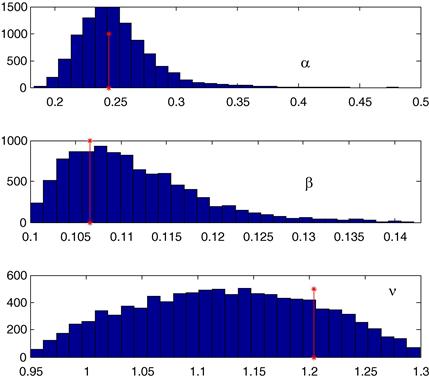

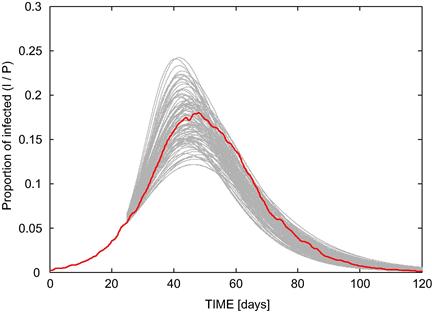

, we want to determine the optimal one with respect to a pre-specified criterion, that in this study case is the fact that the Voronoi centers ![]() should coincide (as much as possible) with the centers of mass of the corresponding Voronoi cells