As repmgr is a client/server PostgreSQL management suite, we need at least two nodes involved before we're really using it. We can perform the tasks outlined in this recipe as many times as we wish, creating several clones and registering them with repmgr. Of course, this book is for demonstration purposes, so we'll leave the larger clusters to you. With multiple nodes involved, the chances of data loss or system outages decline, which is excellent for our goal of high availability.

This recipe will focus on the process necessary to add a node to an existing repmgr cluster. The existing cluster in our case is the one that we established on pg-primary in the previous recipe.

This recipe depends on repmgr being installed on both a primary server and the clone that we will use. Please follow the Installing and configuring repmgr recipe before continuing.

For the purposes of this recipe, pg-primary will remain our master node, and the replica will be pg-clone. As always, the /db/pgdata path will be our default data directory. Be sure to have the password for the postgres system user ready.

All of these commands should be executed from pg-clone. Follow these steps to produce a fully functional repmgr replica:

- As the

postgresuser, generate an RSA key pair and send it topg-primarywith these commands:ssh-keygen -t rsa -N '' ssh-copy-id postgres@pg-primary

- Clone the data on

pg-primarywith the following command as thepostgresuser:repmgr -D /db/pgdata standby clone pg-primary - Start the new clone as the

postgresuser withpg_ctl:pg_ctl -D /db/pgdata start - Execute this command to find the binary path to PostgreSQL tools:

pg_config --bindir - Create a file named

/etc/repmgr.confand enter the following contents:cluster=pgnet node=2 node_name=child1 conninfo='host=pg-clone dbname=postgres' pg_bindir=[value from step 4]

- Register

pg-clonewithpg-primaryas thepostgresuser:repmgr -f /etc/repmgr.conf standby register - Start the

repmgrddaemon with the following command as a root-level user:sudo service repmgr start - Connect to the

postgresdatabase and view the status of repmgr with this SQL statement:SELECT standby_node, standby_name, replication_lagFROM repmgr_pgnet.repl_status;

Because the replica is based on the primary, much of the preliminary work we performed in the previous recipe is inherited. One thing we can't avoid is creating an SSH key for direct server-to-server communication. Any time we create a new clone, it's a good practice to generate a key with ssh-keygen and copy that key to the current primary server.

Tip

In fact, every server should have the postgres SSH key for every other server. In situations where any server in the cluster can be promoted to be the new primary, this ensures repmgr commands always work as expected. We strongly recommend that you use system automation tools such as Ansible, Chef, or Puppet to manage these keys.

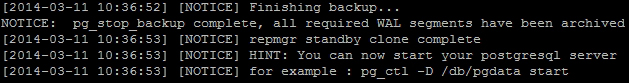

With the SSH key established, we can clone pg-primary with the repmgr command. Because no PostgreSQL instance exists on pg-clone yet, we can't use our configuration file just yet. Instead, we specify -D to define the path to the database. Assuming that there were no errors, the command should produce a lot of extremely verbose output, with this at the end:

If we follow the advice in the last line and start PostgreSQL with pg_ctl, the clone should immediately connect to pg-primary and begin replication. We can do this because repmgr knows all of the connection information necessary to establish a streaming replication connection with pg-primary. During the cloning process, it automatically created a recovery.conf file suitable to start directly in replication mode.

Now, we must configure repmgr to recognize the clone. When we create /etc/repmgr.conf, we need to use the same cluster name as we used on pg-primary. We also tell repmgr that this is node 2, and it should be named child1. The conninfo value should always reflect the connection string necessary for repmgr to connect to PostgreSQL on the named node. As we did earlier, we set pg_bindir to avoid encountering possible repmgr bugs.

With the configuration file in place, we can register the new clone similarly to the process that we used to register the primary. By calling the repmgr command with -f and the full path to the configuration file, there are several operations we can invoke. For now, we will settle with standby register to tell repmgr that it should track pg-clone as part of the pgnet cluster.

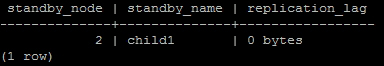

Once we start the repmgrd daemon, all nodes are aware of each other and the current status of each. We can confirm this by checking the repl_status view on any node. If we execute the supplied SQL statement, we should see this:

The repl_status view has other useful columns, but for now we can see that the cluster considers child1 the only standby node, and it's not lagging behind the primary at all.

Tip

If you are using Version 2.0 of repmgr, this view will be empty unless the repmgrd daemon is launched with the --monitoring-history parameter. The authors of repmgr claim that the view is no longer necessary for operation, but we feel more comfortable knowing that we can check the status of the cluster via SQL at any time. As such, the default for our included repmgr initialization script sets this option.

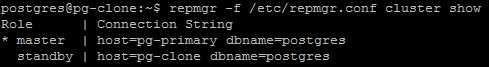

There is another way to obtain cluster status. The repmgr command can also report how it perceives the cluster from any active node, given the cluster show parameter. Here is the entire command:

repmgr -f /etc/repmgr.conf cluster show

The result of this command as executed on pg-clone is as follows:

- Though the process that we used differs slightly from the repmgr documentation, it is fully viable. If you would like to see the entire process in greater detail, repmgr documentation is available at https://github.com/2ndQuadrant/repmgr.