In this chapter, we have chosen to represent database shards as PostgreSQL schema names. So, if our basic schema is named myapp, shard 1 would be myapp1, shard 15 would be myapp15, and so on. This is what we call the logical shard name.

Beyond this, shards should be independent of each other such that they can be relocated to another PostgreSQL server arbitrarily. However, if shards can be moved at will, how do we find them? Much like LVM has a physical drive, logical shards have a corresponding physical shard. The physical shard is the server where the logical shard currently resides. Think of it like this diagram:

Elements such as clients, products, and vendors are shared resources that all PostgreSQL shard servers can use. This is where our foreign tables would be beneficial. The logical shards (schemas) myapp1 through myapp4 all reside on PG Server 1, and myapp5 through myapp8 live on PG Server 2. In this architecture, we have eight logical shards distributed to two physical servers.

In this recipe, we will explore various techniques to preserve and decode the logical to physical mapping necessary to interact with the correct data.

This recipe depends on the work we performed in the Creating a scalable nextval replacement recipe. Please review that part of this chapter before continuing.

All SQL statements in this recipe should be executed by the postgres database user. Follow these steps to build a table to map logical shards to their physical locations:

- Execute this SQL statement to create the shard-mapping table:

CREATE TABLE shard.shard_map ( map_id SERIAL PRIMARY KEY, shard_id INT NOT NULL, source_schema VARCHAR NOT NULL, shard_schema VARCHAR NOT NULL, server_name VARCHAR NOT NULL, UNIQUE (shard_id, source_schema) );

- Create a shard and register it with the shard map with this SQL:

CREATE SCHEMA myapp1; INSERT INTO shard.shard_map (shard_id, source_schema, shard_schema, server_name) VALUES (1, 'myapp', 'myapp1', 'pg-primary'),

- Repeat the previous step to create a second shard:

CREATE SCHEMA myapp2; INSERT INTO shard.shard_map (shard_id, source_schema, shard_schema, server_name) VALUES (2, 'myapp', 'myapp2', 'pg-primary'),

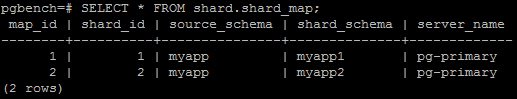

- View the current status of our shard mappings:

SELECT * FROM shard.shard_map;

If you wish, you can view this as another primer on preparing a shard-management API. Our first step towards this goal is to create a table to store the logical to physical location mappings necessary to locate a specific shard. At minimum, this table needs to track the shard ID (shard_id), the skeleton schema the shard is based on (source_schema), the shard name itself (shard_schema), and the server where the shard resides (server_name).

Tip

Some readers may wonder where the shard_map table should reside. There's a reason we introduced the shared PostgreSQL server in the introduction to this recipe. Metadata should be stored on that central server. A combination of foreign tables and materialized views will ensure that all servers have immediate access to its contents if necessary.

Next, we should create and save the location of two new shards for illustrative purposes. For our shard names, we chose to simply append the shard name to the source schema name. In addition, we created both shards on the pg-primary server we used in various chapters of this book. This kind of naming scheme makes it simple to locate and interact with any particular shard in our cluster.

The final step is to visualize the data we stored regarding our logical to physical mapping. On our test server, the mappings are as follows:

Notice that the shard_map table is designed in such a way that we can create mappings for any number of schemas. Any schema can have all 2048 shards, and we can find the physical location for any of them based on this table.

While the mapping is an important step, we still need two things to really make use of the mapping. Let's see what they are.

In modern applications, it is becoming increasingly common to inject a secondary cache layer between the application and database. This layer stores commonly retrieved data in memory for immediate use. This layer might be composed of memcached or a NoSQL database such as CouchDB, MongoDB, or Redis.

Once such a layer exists, it's important that the shard_map table is one of the first tables copied there. It has very few rows, and storing it in memory removes the relatively expensive round-trip to the database. With this mapping in memory, the application will always know which physical server it should be connected to as long as it also knows which shard it is using.

How does an application know which shard it should use in any particular situation? This answer requires one more modifications to the table structure our application uses. Our last decision involves adding a shard_id column to one table. This table can be anything but should be some central value that all data can eventually be traced to.

A good choice for this is a customer table. In an order system, all interaction is eventually driven by customer activity. If we assign a customer a specific shard ID, all of their order data will be stored in that shard. As the application likely has the customer row information available at all times, it should also know the associated shard and, hence, which server to store that data.

As a consequence, customer data should also be stored in the shared PostgreSQL instance that other shard servers can see. Customer data is relatively sparse compared to high volumes of order, image, or other types of activity a customer can generate. If the customer table is too large to cache directly, we could create a customer_shard table in the shared database instead.