Creating a clone can be surprisingly dangerous. When using a utility such as rsync, accidentally transposing the source and target can result in erasing the source PostgreSQL data directory. This is especially true when swapping from one node to another and then reversing the process. It's all too easy to accidentally invoke the wrong script when the source and target are so readily switched.

We've already established how repmgr can ease the process of clone creation; now it's time to discuss node promotion. This recipe answers two questions. How do we swap from one active PostgreSQL node to another? How do we then reactivate the original node without risking our data? The second question is perhaps the more important one, because we are at reduced capacity after a node is deactivated.

Let's explore how to keep our database available through multiple node swaps.

This recipe depends on repmgr being installed on both a primary server and at least one clone. Please follow the Installing and configuring repmgr and Cloning a database with repmgr recipes before continuing.

For the purposes of this recipe, pg-primary will remain our master node, and the replica will be pg-clone. As always, the /db/pgdata path will be our default data directory.

Follow these steps to promote pg-clone to be the new cluster master:

- Stop the PostgreSQL service on the pg-primary node with pg_ctl:

  pg_ctl -D /db/pgdata stop -m fast

- As the postgres user on pg-clone, execute this command to promote it from standby status to primary:

  repmgr -f /etc/repmgr.conf standby promote

- View the status of the cluster with this command as postgres on pg-clone:

  repmgr -f /etc/repmgr.conf cluster show
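The promotion steps above can be wrapped in a small guard so that pg-clone is never promoted while the old master is still answering. This is a minimal sketch, not part of repmgr: the function name promote_if_primary_down and the PRIMARY_HOST variable are hypothetical, and it assumes the pg_isready utility (shipped with PostgreSQL 9.3 and later) is available on pg-clone.

```shell
#!/bin/sh
# Sketch only: PRIMARY_HOST and promote_if_primary_down are hypothetical names.
PRIMARY_HOST="${PRIMARY_HOST:-pg-primary}"
REPMGR_CONF="${REPMGR_CONF:-/etc/repmgr.conf}"

# Promote the local standby, but only when the old master no longer
# accepts connections. pg_isready exits 0 when the server is accepting
# connections; any non-zero status is treated here as "down".
promote_if_primary_down() {
    if pg_isready -q -h "$PRIMARY_HOST"; then
        echo "Refusing to promote: $PRIMARY_HOST still accepts connections" >&2
        return 1
    fi
    # Same two commands as in the recipe: promote, then show the cluster.
    repmgr -f "$REPMGR_CONF" standby promote &&
        repmgr -f "$REPMGR_CONF" cluster show
}
```

Run as the postgres user on pg-clone, calling promote_if_primary_down after stopping pg-primary would perform the last two steps in one go.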

Follow these steps to rebuild pg-primary (while logged into pg-primary) to be the new cluster standby:

- Clone the data on pg-clone with the following command as the postgres user:

  repmgr -D /db/pgdata --force standby clone pg-clone

- Start the PostgreSQL service as the postgres user with pg_ctl:

  pg_ctl -D /db/pgdata start

- Start the repmgrd daemon with the following command as a root-level user:

  sudo service repmgr start

- View the status of the cluster with this command as postgres:

  repmgr -f /etc/repmgr.conf cluster show
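Because a forced clone overwrites the local data directory, it may be worth scripting the rebuild with a safety check in front of it. The following is a sketch under stated assumptions: rebuild_as_standby is a hypothetical helper name, the script is run on the node being rebuilt by a user allowed to run both the postgres commands and sudo, and the commands themselves are the ones from the steps above.

```shell
#!/bin/sh
# Sketch only: rebuild_as_standby is a hypothetical helper name.
PGDATA="${PGDATA:-/db/pgdata}"
REPMGR_CONF="${REPMGR_CONF:-/etc/repmgr.conf}"

# rebuild_as_standby NEW_MASTER
# Re-clone the local node from NEW_MASTER and rejoin the cluster.
rebuild_as_standby() {
    new_master="$1"
    if [ -z "$new_master" ]; then
        echo "usage: rebuild_as_standby NEW_MASTER" >&2
        return 2
    fi
    # pg_ctl status exits 0 when a server is running in the data directory.
    # A running server suggests we are on the wrong node, so stop here
    # rather than overwrite live data.
    if pg_ctl -D "$PGDATA" status >/dev/null 2>&1; then
        echo "Refusing to clone: PostgreSQL is still running in $PGDATA" >&2
        return 1
    fi
    # The four recipe steps, each run only if the previous one succeeded.
    repmgr -D "$PGDATA" --force standby clone "$new_master" &&
        pg_ctl -D "$PGDATA" start &&
        sudo service repmgr start &&
        repmgr -f "$REPMGR_CONF" cluster show
}
```

On pg-primary, rebuild_as_standby pg-clone would then mirror this recipe; the status check is the only addition, and it guards against the transposed source and target described at the start of this recipe.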

To start the process, we simulate a failure of the pg-primary PostgreSQL node. The simplest way to do this is to stop the PostgreSQL service. After the database stops serving requests, repmgr will detect that pg-primary is no longer active. If we tried the next step before stopping the existing master node, repmgr would refuse to honor the request. After all, we can't promote a standby when there's already a functional master.

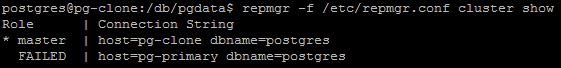

Next, we invoke the repmgr tool from pg-clone with standby promote. This tells repmgr that this node should be the new master. This is necessary because repmgr allows several nodes to act as standby systems, and any could be a candidate for promotion. Following this action, it's a good idea to check the status of the repmgr cluster to ensure that it shows the correct status. We expect pg-clone to be the new master, as seen here:

We can also see that repmgr has properly detected pg-primary as FAILED. However, this is not desirable long term. If we ever want to switch back to pg-primary, or our architecture works best with two active nodes, we need to restart the old master node as the new standby. Once again, we turn to the repmgr command-line utility.

If we log in to pg-primary as the postgres user, we can clone the standby the same way we initially provisioned the data on pg-clone. This means that we call repmgr once again with the standby clone parameter, except this time we are cloning pg-clone, as it is the new data master. There is also another important addition: the --force parameter. Unless we explicitly ask repmgr to overwrite the existing data on pg-primary, it will refuse. When the operation is forced, repmgr copies only the data that differs between pg-clone and pg-primary.

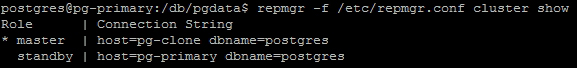

After the data is copied, PostgreSQL should be ready to start on pg-primary, which we do with pg_ctl as usual. With PostgreSQL running, we can safely launch the daemon to reintegrate pg-primary into the repmgr cluster as a standby node. Once again, we can invoke repmgr with cluster show to verify this has occurred:

We can repeat this recipe as many times as we wish. Following it again with the two host names swapped would revert the cluster to its original layout, with pg-primary as the master node and pg-clone as the standby.
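After several swaps it is easy to lose track of which node currently holds which role. As a cross-check that does not depend on repmgr, each node can be asked directly whether it is in recovery. This is a minimal sketch: node_role is a hypothetical helper, and it assumes the machine running it can connect to both nodes as the postgres database user without a password prompt.

```shell
#!/bin/sh
# Sketch only: node_role is a hypothetical helper name.

# node_role HOST
# Print "master" or "standby" depending on pg_is_in_recovery() on HOST.
node_role() {
    # -A and -t strip alignment and headers, so the output is just t or f.
    in_recovery=$(psql -h "$1" -U postgres -d postgres -Atc 'SELECT pg_is_in_recovery()') || {
        echo "unreachable"
        return 1
    }
    case "$in_recovery" in
        f) echo "master" ;;
        t) echo "standby" ;;
        *) echo "unknown"; return 1 ;;
    esac
}
```

Calling node_role for pg-primary and then for pg-clone should report exactly one master; two masters or none would mean the swap did not complete as intended.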

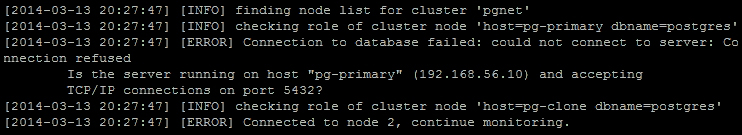

Remember that we mentioned the possibility of multiple nodes acting as standby. As a test, we created another clone using the process described in the Cloning a database with repmgr recipe. Then, we followed the recipes in this section and stopped pg-primary before promoting pg-clone. What do you think we saw while examining the repmgr logfile on the second standby node? This:

Notice how the other standby started checking known repmgr cluster nodes to find a new master to follow. Once we promoted pg-clone, the second standby had a new target. If this doesn't happen automatically, you may have to bootstrap the process by running this command on any standby that didn't transition properly:

repmgr -f /etc/repmgr.conf standby follow
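With more than a couple of standby nodes, running that command by hand on each one becomes tedious. The loop below is one possible way to do it, offered as a sketch: follow_new_master is a hypothetical helper, the host names passed to it are whatever standbys failed to transition, and it assumes passwordless SSH access as the postgres user on each of them.

```shell
#!/bin/sh
# Sketch only: follow_new_master is a hypothetical helper name.
REPMGR_CONF="${REPMGR_CONF:-/etc/repmgr.conf}"

# follow_new_master HOST...
# Run "standby follow" on every listed standby; return 1 if any failed.
follow_new_master() {
    failed=0
    for host in "$@"; do
        if ssh "postgres@$host" repmgr -f "$REPMGR_CONF" standby follow; then
            echo "$host: now following the new master"
        else
            echo "$host: standby follow failed" >&2
            failed=1
        fi
    done
    return "$failed"
}
```

The function keeps going after a failure so that one unreachable standby does not prevent the others from being redirected.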

- At the time of writing this book, the repmgr documentation has not been fully updated to reflect the functionality changes in version 2.0. As such, we refer you to it with some trepidation. Regardless, we based our recipes on what we found at https://github.com/2ndQuadrant/repmgr.