2

Manufacturing History

2.1 Toolmaking Humans

In a classic science fiction film from half a century ago, ‘2001: A Space Odyssey’, there is a short scene that captures the essence of the following discussion. It occurs when what may be an early hominid, specifically a hominin, throws a bone into the air in triumph after discovering its usefulness as a weapon. As it spins upwards, the camera transforms it into a rotating toroidal space station ‘waltzing’ to the strains of ‘The Blue Danube’. Whether or not the ape‐like creature is a valid representation of early man, the imagery is powerful. From the first use of simple primitive tools as weapons, humans have developed the manufacturing technology and culture of today. In fact, some sources suggest the word ‘tool’ comes from the Old Norse ‘tol’ meaning ‘weapon’.

Although this scene is poetically inspiring, it also highlights the fact that humans are unique in the animal kingdom in their ability to design and make tools. Humans progressed from the selective phase, in which a natural object is simply picked from the environment and used as a tool, just as a chimpanzee selects a twig to poke into anthills to catch ants or a fish uses a stone to crack a snail shell. They then passed through the adaptive phase, in which a stone was chipped to create a sharp cutting edge. Now the inventive phase has been reached, in which an object not found in nature is conceived and manufactured; for example, a bow and arrow or a supersonic aircraft.

An early manlike creature, Australopithecus, is thought to have made a simple cutting and chipping tool by striking the edge of one stone against another to produce a sharp edge. Since these creatures lacked dexterity, the tool would have been held crudely, with the thick blunt end pressed against the palm while being used to cut meat; see Figure 2.1. Australopithecus lived in the open in Africa from about four million years ago, and these creatures probably cooperated in hunting. However, stone tools are not likely to have been generally used until around two or three million years ago.

Figure 2.1 How one of the first stone tools may have been used.

Peking man, a form of Homo erectus, possibly lived as long as 750 000 years ago. They were hunters and travellers but built camps and primitive huts for shelter. Many stone ‘chopper’‐type tools of varying size and shape have been found from this period, though they do not differ much from those used during the previous two million years. There is evidence, however, that fire was used to harden the tips of sharpened wooden tools and weapons.

Between 70 000 and 40 000 years ago there lived Neanderthal man, sometimes classed as an early member of Homo sapiens. The average size of his brain was probably slightly larger than that of modern humans, and there is disputed evidence that he had a primitive religion. He was probably a well‐organised hunter, working in groups to ambush animals or drive them over cliffs. The tools and weapons he used were still of fire‐hardened wood and shaped stone, although they were now more elegant in design.

2.2 The New Stone Age

Modern man, Homo sapiens, appears just as Neanderthal man becomes extinct, though the two may have coexisted for a period. The use of stone tools continued into the New Stone Age. This was the Neolithic period, which lasted in south‐west Asia from about 9000 to 6000 BC, and in Europe from about 4000 to 2400 BC. Europe developed later because the last ice age ended there only 10 000 years ago. During the Neolithic period, man made the change from hunter‐gatherer to farmer. Crop growing and cattle rearing were started. Tools had now become quite sophisticated, although they were still made of wood, bone and types of stone such as flint. Flint tools were often the master tools from which others could be made. Stone‐headed axes with bone handles, and knives and arrowheads of flint, were common. Wooden hoes, and sickles originally made by setting flints into straight or curved bones, were used for cultivation. Clay pots were made and clothing was woven using a primitive loom. The sling and the bow and arrow were early inventions and, by the end of this period, man had learned to make as well as use fire and had produced the lever, the wedge and the wheel. Since Neolithic society was relatively peaceful, most weapons were probably used for hunting.

At this stage we can see the beginning of the primary industries. With the advent of agriculture, man would be able to produce more food than his immediate community needed. This would create the opportunity to trade some of that food for other items, perhaps dried fish from a tribe that lived by a river or the sea. Appropriate tools would allow him to increase the amount of food grown, and so he would be encouraged to improve those tools. Some tribes may have lived near sources of flint. They would become adept at fashioning tools and a ‘manufacturing’ industry would arise. These tools would be traded with the fishers and farmers. The toolmakers were adding value to the flint by working it into shape, the fishers were adding value by drying the fish to prevent it from spoiling, and the farmers were adding value to their produce by harvesting it and transporting it to where it could be traded. These principles are unchanged today, except that money is now used to facilitate the sale and purchase of labour and goods.

In the following sections, it should be remembered that the dates given for both the Bronze and Iron Ages are generalisations, since their start points depended on geographical location.

2.3 The Bronze Age

Technological progress does not really begin to accelerate until about 5000 BC, when we begin to move out of prehistory. The first urban civilisations arose along fertile riverbanks: the Sumerians in the valleys of the Tigris and Euphrates rivers in Mesopotamia, the Egyptians along the Nile, the Indus Valley civilisation in the Indian subcontinent and the Chinese along the banks of the Yellow River. It is around this time that metal first began to be worked, and it was metal that allowed manufacturing industry to have such an influence on society.

Originally copper was used; it was probably obtained in its pure, native form and beaten into shape. Being relatively soft in this state, it had limited use for tools and was used mostly for ornaments. However, it was soon found that when melted it could be alloyed with tin to produce a much stronger and harder material – bronze. The Bronze Age lasted from its beginnings with the Egyptians and Sumerians around 3500 BC to the start of the Iron Age around 1200 BC. Europe was about 1000 years behind Asia Minor in using bronze, with the Bronze Age in Britain lasting roughly from 2000 to 500 BC.

As pure copper became scarce, it became necessary to obtain or ‘win’ the metal by smelting copper‐rich ores. Excavations have been made of copper smelting furnaces built into the ground in beds of sand. Stones of varying size were used to support the internal walls of the structure, and burning charcoal was used as a source of heat. The furnace pit was lined with mortar and had clay tubes set into the side through which the bellows blew air. The bellows provided a forced draught to increase the furnace temperature. About 10 cm above the base of the furnace there was a tapping hole that led downwards into a tapping pit. As the ore was smelted, the impurities would rise to the top of the melt and eventually be drawn off through the tapping hole, leaving the copper in the bottom of the furnace. Later it could be refined and alloyed by re‐melting in crucibles. Objects were made by casting in moulds and by beating the material into shape. Hammering the metal also increased its hardness, enabling it to be used for items such as nails.

The Sumerians and Egyptians used war captives as slave labour. This allowed some of their own workers to have the time to develop their skills as craftsmen in the manufacture of bronze artefacts. Ploughs, hoes and axes for farming; saw blades, chisels and bradawls for carpentry; helmets, spearheads and daggers for war; and ornaments are just some of the items that were made out of bronze. Even after the use of iron became widespread, bronze remained popular, helped by experience gained in alloying it with elements such as zinc and antimony.

As civilisation spread throughout the Middle East, craftsmen such as metalworkers, potters, builders, carpenters, masons, tanners, weavers and dyers were all to be found. The more skilled artisans, for reasons of economy and supply, lived in the larger towns and cities. They usually lived in their own identifiable areas and later, in the Middle Ages, they would organise into craft guilds that became powerful political groups.

2.4 The Iron Age

Iron, which is harder and tougher than bronze, was first used in its naturally occurring form. Before smelting was feasible, the original source was fallen meteorites, the ancient Egyptians calling it ‘the metal from heaven’. The iron would be broken off, heated, then hammered into shape. The Hittites, who occupied Anatolia (part of present‐day Turkey), were the main workers in iron up to about 1200 BC. It is likely that they smelted their iron from bog ore, which occurs freely in marshy ground and can be obtained simply by sieving it out of the water. The ore is then smelted and the resulting iron ‘bloom’ hammered to remove the impurities, termed ‘slag’, before quenching in water. After the heating, hammering and quenching process has been repeated several times, an iron of useful strength is obtained. However, widespread use of iron did not occur until the Hittite Empire was destroyed and its ironsmiths dispersed, taking their skills with them. The design and use of tools in general continued in parallel with metallurgical developments. For example, primitive manually powered wooden lathes were in use around 700 BC for making items such as food bowls.

Iron took longer to come into use than copper because of the difference in melting temperatures. Copper melts at 1083°C and iron at 1539°C, so much hotter and more efficient furnaces were needed for smelting and casting iron. Eventually a primitive form of steel was produced by hammering charcoal into heated iron. The first iron ploughshare possibly came from Palestine around 1100 BC, and by the time of Christ the use of iron was widespread.

In Europe, iron mining and smelting grew up in areas where the ore could be mined and there was a plentiful supply of wood from local forests. One of the main reasons for the Roman invasion of Britain in 55 BC was the attractiveness of the iron and tin mines. Iron was used for farm implements, armour, horseshoes, hand tools and a wide variety of weapons. Wrought iron beams had also been used in structural work by both the Greeks and the Romans. With an increasing population, the need for manufactured items was also increasing, so there was now a need for the ‘mass production’ of some products. The Romans, for example, had ‘factories’ for mass producing glassware, but these were very labour intensive and had low production rates compared with today's expectations.

We now take a time jump through the Dark and Middle Ages to around the beginning of the Renaissance period. The quality of iron had remained relatively poor, and it was not until about the fifteenth century that furnaces using water‐powered bellows were hot enough to smelt iron satisfactorily. This allowed better control of the material properties of the finished castings. In cannon and mortar manufacture, for example, the iron had previously been so brittle that cannon often blew up in their users' faces; the higher‐quality iron that could now be produced made this far less likely. In the sixteenth century, water power was also used for other metalworking processes, and rolling mills were developed for producing the metal strips from which coins could be stamped.

As well as technology for manufacture, the organisation of manpower is important, and progress also occurred here. Leonardo da Vinci, who lived between 1452 and 1519, apparently found time to turn his attention to the manner in which work is carried out. He made a study of how a man shovelled earth and worked out the time it should take to move a specified amount of material using the observed method and size of shovel. This was an early example of the scientific analysis of work, which is still important to the efficiency of modern manufacturing systems. Although Leonardo da Vinci and many others of this period were part of the great rebirth of interest in the arts and scientific discovery, it is not until the beginning of the eighteenth century that we find the manufacturing and engineering discoveries that so changed the quality of life for the ordinary person.

The beginnings of the factory system were appearing in Europe during the seventeenth century in the woollen trade and cloth making. The ‘factory system’ is typified by the ‘specialisation’, or ‘division’, of labour, in which individual workers specialise in one aspect of the total work required to complete a product. The ‘commission system’ had also arisen during this period. A ‘commission merchant’ was someone who bought a product from a manufacturer and then sold that product to an end user. For instance, he would buy wool from a sheep farmer and pay for it to be delivered to the wool cleaner. He would pay the cleaner, then pay for transport to the comber and pay him. This was repeated through the subsequent stages of carding, spinning and weaving. The commission merchant then paid for transport of the finished cloth to the market, either at home or abroad. The merchant financed his operation by borrowing money from a ‘capitalist’, whose wealth grew considerably from this type of investment. Initially, these woolworkers were unlikely to be all together in one ‘factory’ and were probably operating as individual ‘outworkers’ in their own homes. The division of labour could also be seen in woodworking; rather than general woodworkers, we find carpenters, wheelwrights and cabinet makers beginning to appear.

It should be remembered that agriculture was still the main source of employment at this time. For example, it has been estimated that in England in 1688 there were 4.6 million people employed in agriculture and only 246 000 in manufacturing. Between 1750 and 1850 the population of Britain trebled, yet it remained self‐sufficient in food, even though the number of farm workers had dropped to around 1.5 million by 1841. This was due to the ‘agricultural revolution’, brought about by new techniques such as planned crop selection and rotation, scientific animal breeding and the development of new farming tools. Probably the most important invention of this time was the mechanical seed drill, devised by Jethro Tull around 1701, which increased farming productivity. This was a good example of a primary industry benefiting from the product of a secondary, or manufacturing, industry. The mechanisation of farming would eventually lead to massive reductions in the number of labourers required, and these labourers were to provide a pool of workers for the blossoming Industrial Revolution.

2.5 The Industrial Revolution

A number of factors brought about what is sometimes called today the ‘first’ Industrial Revolution, which is generally accepted as having begun in Britain during the course of the eighteenth century. One of these factors, the division of labour within a factory system of working, already existed. Three other contributing factors were cheap and efficient power in the form of steam engines; large supplies of good‐quality iron with which to make these engines and other products; and the development of transport systems. The transport systems initially appeared in response to the economic stimulus of the other factors, but they in turn assisted further industrial development. A growing canal network and rail system facilitated trade and transport at home, and increasing sea transport provided the essential ability to sell manufactured goods overseas.

Iron had always been produced in relatively small quantities, but in 1709 at Coalbrookdale, England, Abraham Darby began to use coke to smelt large quantities of ore. He produced good‐quality cast iron that was suitable for forging as well as for casting. Soon iron was to replace copper, bronze and brass for items such as pots and pans and other domestic goods.

Manufacturing industry at this time still relied on water power, even though Thomas Savery had installed a steam engine to raise water from a mine in 1698 and an ‘atmospheric’ engine had been developed by Thomas Newcomen and John Calley in 1705. Many of the improvements in manufacturing technology during this period were concerned with the textile industry. In 1733 John Kay invented the ‘flying shuttle’, which greatly speeded up weaving and helped to pave the way for powered looms, and in 1770 the ‘Spinning Jenny’ was patented by James Hargreaves. Replacing the spinning wheel, the Jenny was a frame containing a number of parallel spindles made to revolve by a hand‐powered wheel and pulley belt. Where previously only one thread would have been spun by hand, eight could now be spun, and this was later increased to 20 or 30.

The Industrial Revolution really got under way in 1776 when James Watt produced a much improved version of the steam engine. This was made commercially viable by the use of a boring machine made by John Wilkinson to produce the cylinders for the engine. Despite this, until the beginning of the nineteenth century the relatively cheap Savery and Newcomen engines were often used in preference to the more expensive Watt engines, either on their own or as a supplement to water power.

Also at this time, the basis of modern economics was laid down by Adam Smith in his book The Wealth of Nations, published in 1776. In it he further developed the concept of the division of labour as a means of improving productivity and stimulating economic progress. The general adoption of this principle contributed to the success of the Industrial Revolution.

In 1784 Edmund Cartwright built a loom capable of being driven by steam power, and in 1788 James Watt devised the centrifugal governor for automatically controlling the speed of a steam engine. Thus we have the beginnings of manufacturing automation. Textile mills had originally been sited in hilly areas close to fast‐flowing water, where they made use of water power and the same type of equipment as corn mills; hence the name ‘mills’. With the advent of steam power they were relocated to areas where coal was plentiful.

Since the source of power for the early machines was a large, relatively expensive steam engine, it was natural that all machinery driven by the engine should be located as close to it as possible. This also applied to associated activities. It was therefore now convenient for all workers to work within a short distance of each other, probably under the same roof. This was one factor that contributed to the rise of the factory. The other was social. People had been used to working to agricultural rhythms, but the new systems of work demanded tight organisation, with workers expected to be available at specific times. Thus the time was ripe for the factory, for a combination of technological, social and organisational reasons. The factory was to peak in the early to mid‐twentieth century, with some employing around 70 000 people.

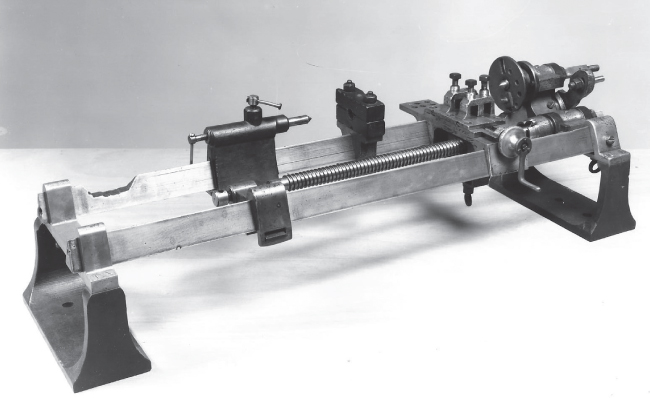

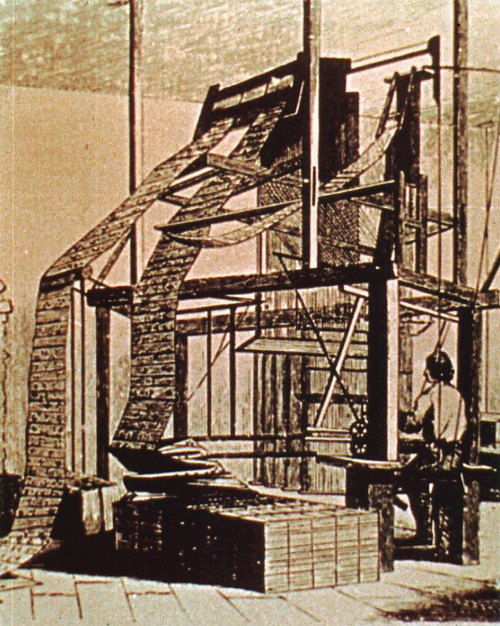

In 1794 in the UK Henry Maudslay created the first all‐metal screwcutting lathe (see Figure 2.2). Meanwhile, in the USA in 1793, Eli Whitney had produced the cotton gin, which greatly increased the productivity of the southern cotton workers, who at that time were predominantly slaves. In 1796 in Britain Joseph Bramah developed the hydraulic press, which provided a means of greatly increasing the force a manual worker was able to exert. In 1804 in France, Joseph Marie Jacquard introduced automatic control of a loom by the use of punched cards; see Figure 2.3. Punched cards were still being used to store information for computers and for automatic machine control more than 160 years later.

Figure 2.2 Maudslay's all‐metal screwcutting lathe 1794.

Figure 2.3 Jacquard punch card controlled loom 1804.
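The principle of punched‐card control described above can be illustrated with a short Python sketch. It is a deliberately simplified, hypothetical model rather than a description of Jacquard's mechanism: each card is treated as a row of positions in which a hole lets a hook rise and lift the corresponding warp thread, while a blank position leaves that thread down. The names `lifted_threads` and `weave`, the 0/1 encoding and the text picture of the cloth are all assumptions made for illustration.

```python
from typing import List

# Hypothetical encoding: 1 = hole punched (hook rises, warp thread lifted),
# 0 = no hole (hook blocked, warp thread stays down).
Card = List[int]


def lifted_threads(card: Card) -> List[int]:
    """Return the indices of the warp threads lifted by one card."""
    return [i for i, hole in enumerate(card) if hole == 1]


def weave(chain: List[Card], picks: int) -> List[str]:
    """Simulate `picks` passes of the shuttle.

    One card is read per pick, and the chain of cards is joined in a loop,
    so the pattern repeats. Each returned row shows '#' where a warp
    thread was lifted and '.' where it stayed down.
    """
    rows = []
    for pick in range(picks):
        card = chain[pick % len(chain)]  # loop back to the first card
        lifted = set(lifted_threads(card))
        rows.append("".join("#" if i in lifted else "." for i in range(len(card))))
    return rows


# A chain of four cards that produces a simple diagonal pattern.
chain = [
    [1, 0, 0, 0, 1, 0, 0, 0],
    [0, 1, 0, 0, 0, 1, 0, 0],
    [0, 0, 1, 0, 0, 0, 1, 0],
    [0, 0, 0, 1, 0, 0, 0, 1],
]

if __name__ == "__main__":
    for row in weave(chain, 6):
        print(row)
```

In this model, changing the woven pattern only requires a different chain of cards; the loom itself is unchanged. That separation of the instructions from the machine is the idea that later carried over to computers and automatic machine control.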

We have already seen the emergence of two of the main ingredients of modern manufacturing: the ‘factory’ as a central manufacturing unit, and the specialisation of labour. At this point, at the beginning of the nineteenth century, we see the appearance of three further major concepts: mass production, standardisation and interchangeability.

One of the first instances of the manufacture of interchangeable components was in the USA in 1798, when Eli Whitney used filing jigs to produce parts for a contract for 10 000 army muskets. Although the parts were almost identical, their manufacture involved much manual labour in filing the components to size.

The first manufacture of components using mechanised mass production techniques took place in 1803 at Portsmouth Naval Dockyard in Britain. The components were pulley blocks for the Royal Navy. The machines for producing the blocks had been designed by the famous engineer Marc Isambard Brunel and manufactured by Henry Maudslay. There were 45 machines, powered by two steam engines. Eventually, productivity improved so much that 10 unskilled workers could produce as many blocks as had previously been produced by 110 craftsmen. By 1808 the production rate had risen to 130 000 blocks per year.
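Assuming the same total output of blocks in both cases (the symbol Q for that output is introduced here purely for illustration), the comparison between 10 unskilled workers and 110 craftsmen corresponds to an elevenfold increase in output per worker:

```latex
\[
\frac{\text{output per worker after}}{\text{output per worker before}}
  = \frac{Q/10}{Q/110}
  = \frac{110}{10}
  = 11
\]
```

This ratio counts direct labour only. It leaves out the labour and capital that went into the 45 machines and two steam engines, so it should be read as a gain in labour productivity rather than in overall efficiency.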

In the USA, two of the innovators in using machinery to make the close‐toleranced components necessary for interchangeability were Samuel Colt and his chief engineer Elisha Root. In the Colt armoury in Connecticut they designed a turret lathe to facilitate the production of identical turned parts for the Colt revolver produced in 1835.

To achieve interchangeability of components not produced in the same factory, methods of standardisation had to be adopted for commonly used parts. One of the pioneers in this area was the British engineer Joseph Whitworth. Around 1830 he began developing precision measuring techniques and produced a measuring machine that used a large micrometer screw. Accurate measuring techniques and equipment of this kind were necessary for inspecting components intended to be interchangeable. In 1841 he proposed a standard thread form and a series of standard sizes for screw threads, which were eventually adopted in Britain. Other countries began to adopt their own national standards, but these were not necessarily compatible on a worldwide basis. Even today, much effort still goes into achieving international standards for manufactured items.

In the textile industry, which was the first industry to experience the effects of the Industrial Revolution on a large scale, workers began to be displaced from their jobs by encroaching mechanisation. This was so resented that the Luddite movement was formed in England in 1811. The Luddites set out to destroy the machinery, and they caused considerable damage to equipment, although they generally refrained from violence against people. Repressive measures, including the shooting of a band of Luddites in 1812, did not put an end to the movement, which appeared in waves in various textile manufacturing areas. However, as the prosperity of individuals continued to increase, resistance to the machinery died out.

The repeal of the Combination Acts in 1824 opened up greater possibilities for improving working conditions in Britain. The repeal allowed trade unions (combinations of workers) to be formed, providing the opportunity for organised resistance to exploitation. In the years 1833–1840, Industrial Commissioners were appointed to investigate poor social conditions. These measures were necessary as there were many major problems to overcome.

Large numbers of people were now coming to work in factories in close proximity to each other. Because a 12‐hour working day was then normal, they had to live near the factory. Thus factory towns and cities increased in size and population density. Congested housing, often in the type of buildings known as tenements, forced men, women and children to live in very poor conditions. Rooms were cramped, poorly lit and poorly ventilated, and lacked privacy. Many workers were also subject to a ‘ticket’ system, under which they received tickets for their labour in lieu of money. To obtain the essentials of food and clothing, they had to redeem these tickets at the company store. The company thus had a monopoly and was able to charge the highest prices for the poorest goods.

In the factories themselves, where many women and children were employed, working conditions were often poor. Low wages and long hours contributed to accidents, inefficient working and poor health. The ‘piecework’ system also caused hardship. Under this system, workers in their own homes, and eventually in what became known as ‘sweatshops’, worked on jobs sent out from the factories. They were paid per ‘piece’ produced and, as they were forced to work at very low rates, their working conditions were often even worse than in the factories.

Returning to the engineering aspects, simple steam locomotives had been doing useful work in coal mines between 1813 and 1820, but it was not until 1825 that the first public railway to use steam locomotives was opened, between Stockton and Darlington in England. Improvements in manufacturing equipment and techniques were leading to stronger boilers and better‐fitting cylinders and pistons. Thus, as the efficiency and power of steam engines improved, so did their ability to transport materials, products and people over greater distances at higher speeds. By 1848 there were 5000 miles of railway track laid in Britain. Europe's first commercially successful steamboat had been built on the banks of the River Clyde in Scotland in 1812. This was the ‘Comet’, and it was the forerunner of ships such as the ‘Sirius’ and the ‘Great Western’, which began regular transatlantic service in 1838.

In his book Days at the Factories, published in 1843, George Dodd remarked:

The bulk of the inhabitants of a great city, such as London, have very indistinct notions of the means whereby the necessaries, the comforts, or the luxuries of life are furnished. The simple fact that he who has money can command every variety of exchangeable produce seems to act as a veil which hides the producer from the consumer

Much the same could be said today. Dodd differentiates between ‘mere handicrafts carried on by individuals of whom each makes the complete article’ and the work that involves ‘the investment of capital and the division of labour incident to a factory’. He then goes on to describe the operation of various factories in London in the early to mid‐nineteenth century. These were apparently well organised, with relatively sophisticated cost control systems, machines utilising steam power and a variety of skilled, semi‐skilled and unskilled workers.

The confluence of improvements in iron and steel production, the development of precision machinery, mechanised processes, division of labour, mass production techniques, interchangeability of components, standardisation, the application of steam to transportation giving access to many new markets, and the beginnings of improvements in factory conditions led into what has been termed the ‘later factory period’, beginning around 1850; see Figure 2.4.

Figure 2.4 The pen‐nib slitting room in the Hinks, Wells & Co. factory, Birmingham UK around 1850. Note the specialisation of labour.

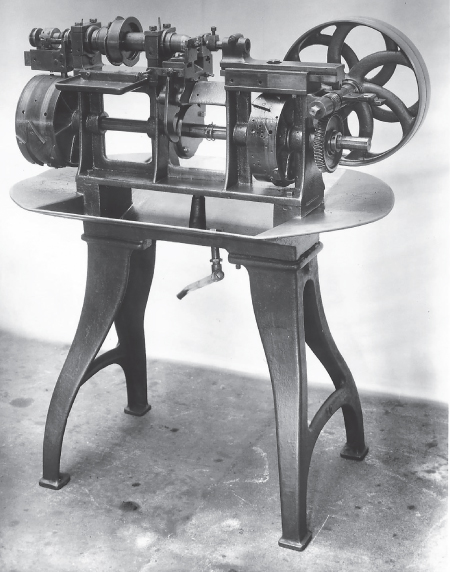

The acceleration in industrial development during the second half of the nineteenth century can be seen by considering the production of iron and the expansion of the rail network in Britain. The annual production of pig iron had risen from just over 17 000 tons in 1740 to 1.25 million tons in 1840, and by 1906 it had reached more than 10 million tons. In 1850 there were 6500 miles of railway in England; by 1900 this had risen to over 25 000 miles. Working conditions were improving, and by 1872 a basic nine‐hour working day had been agreed in Britain, although the working week was still six days. Manufacturing equipment continued to improve in quality and capability. For example, in 1873 a fully automatic lathe was produced by Christopher Spencer; see Figure 2.5. The movements of the machine elements were controlled by drum cams, or ‘brain wheels’ as they were called, which were forerunners of today's computer control systems. By 1880 electric motors were being used to drive some machines. Together with the advent of the internal combustion engine at the end of the century, this meant that factories and other power‐reliant establishments would eventually be able to spread out and away from a central steam power source.

Figure 2.5 Spencer's automatic lathe 1873.

Source: Photo Courtesy of the Birmingham Museums Trust.

2.6 The Twentieth Century

Many of the advances made in science, medicine and engineering have been possible because of parallel advances in manufacturing technology. Understanding of how best to organise and manage people at work has also increased. Towards the end of the nineteenth and the beginning of the twentieth century, great progress was made in the realm of ‘scientific management’. This was concerned with finding the best ways of doing work and became known as ‘work study’, which comprised ‘work measurement’ and ‘method study’. As well as Leonardo da Vinci, mentioned earlier, others had made contributions in this field. For example, around 1750 in France Jean R. Perronet carried out a detailed study of the method of manufacture of pins. Also Charles Babbage, the British inventor of the mechanical digital computer, published a book in 1832 titled On the Economy of Machinery and Manufactures, in which he considered how best to organise people for improved productivity. However, the most widely recognised contributors to work study are Frederick Winslow Taylor and Frank and Lillian Gilbreth, who were active at the end of the nineteenth and beginning of the twentieth centuries. Their work has provided the basis for modern work organisation, which is described more fully later.

Whereas in the eighteenth and nineteenth centuries the textile industry had been a spur to, and a beneficiary of, many of the advances in manufacturing technology, at the beginning of the twentieth century this role was taken over by the motor car industry.

One of the earliest examples of an assembly line was at the Olds Motor Works in Detroit, USA. In ‘line production’ the division of labour is fully utilised, in that work is transported from worker to worker in a linear fashion, thus reducing work in progress and increasing productivity. In the Olds factory, which was rebuilt after a fire destroyed the original in 1901, the work was wheeled from worker to worker. But it is Henry Ford who is seen as the major pioneer of modern mass production techniques, and it is at this point that the ‘second’ Industrial Revolution is sometimes said to have occurred.

Ford seized on the assembly line concept and, by combining interchangeable mass‐produced components with a production line using conveyors and specially designed assembly equipment, he made a tremendous contribution to the efficiency of manufacturing industry. Although the motor car engine was not made in a practical form until 1887, it has been estimated that Ford's Model T would have taken 27 days to build using the technology and techniques available at the time of the American Civil War (1861–1865). By 1914 Ford was producing a Model T in just over an hour and a half. By 1918 he had cut the price from 850 dollars to 400 dollars; this, of course, increased sales, which in turn created a demand for higher productivity, and by the 1920s the production time for the Model T had been reduced to 27 minutes.

Laws were passed in the early twentieth century to ensure tolerable working conditions in industry. In the UK, for example, there were laws governing hours worked, the minimum age of workers, the floor and air space per worker, and heating and lighting conditions. Laws on machine safety were also introduced; many machines used dangerous belt‐and‐shaft transmission systems, and these had to be guarded. Employers were also required to pay compensation for any injuries suffered by workers while carrying out their work.

The First World War of 1914–1918 stimulated technological progress, and by the 1930s significant advances were being made in materials and manufacturing technology. For example, the quality of steel was improving, tungsten carbide was being used for cutting tools, and new casting and extrusion processes were developed. Plastics such as polyvinyl chloride (PVC) and polyethylene were created. Automatic machines for making screws and other items, and transfer machines capable of mechanically transporting parts from workstation to workstation, were also in use.

During the Second World War of 1939–1945 industrial progress was rapid as the demand for manufactured goods for the armed forces mushroomed. For example, stick electrode welding was used for the first time on a large scale to fabricate ships. Production rates, and the quality and reliability of components, had to be maximised.

The integration of electronic control techniques with hydraulic mechanisms for gun and radar control was also to provide the foundation for the post‐war automated machine tool industry. By 1947 the General Electric Company (GEC) in the USA had demonstrated how a machine tool could be controlled using magnetic tape. This was termed the ‘record playback control’ system. The movements of the machine elements needed to produce an initial workpiece were recorded on the magnetic tape; when the tape was played back, a servo control system duplicated these movements, so that further workpieces could be produced automatically. Around this time J.T. Parsons, again in the USA, devised a method of controlling a milling machine using punched cards. The coded pattern of holes on the cards was read automatically and the information fed to a controller that drove the milling cutter along the desired path. Through collaboration with the US Air Force and the Massachusetts Institute of Technology (MIT), the concept was developed further to produce a prototype three‐axis milling machine in 1952. In the same year the GEC method was improved by MIT and launched on the market by the Giddings and Lewis Machine Tool Company; this was the first commercial numerically controlled machine tool system. At about the same time, Ferranti in Britain also developed a three‐axis machine controlled by magnetic tape.

In the technical journals of this time there was much talk of the ‘automatic factory’ as the possibilities of combining mechanisation with electronic control began to be understood. By 1954 the first large‐scale automated production line had been built. Named ‘Project Tinkertoy’, it was used to produce and assemble electronic products. The first industrial robot, made in the USA by Unimation, was installed in 1961, see Figure 2.6a. These early examples of automation were not the computer‐controlled systems of today. They used either mechanical devices or ‘hard‐wired’ electronics to effect control and were therefore not easily reprogrammed to cope with changes of work. Easy reprogramming only became possible with the advent of integrated electronic circuits.

Figure 2.6 (a) ‘Unimate’; the first industrial robot in 1961. (b) Cincinnati Milacron T3, the first microprocessor‐controlled robot in 1973. Both were hydraulically powered.

The first large‐scale integrated circuit, containing hundreds of electronic components, was manufactured in 1967, and the ‘third’ Industrial Revolution is sometimes suggested to have started around this time. By 1971 Intel had developed the ‘microprocessor’, a single electronic chip capable of interpreting and carrying out logical instructions. The cost of computing power fell sharply and ‘minicomputers’ could now be used for machine control; for example, the first computer‐controlled industrial robot was produced by Cincinnati Milacron in 1973, see Figure 2.6b. Today, microprocessor‐based control, industrial robots and other forms of programmable automation are ubiquitous throughout manufacturing industry.

The ‘unmanned factory’ became a popular concept again in the 1980s, and some fully automated factories were built, but few of these exist today because they are hard to justify economically. Nevertheless, many unmanned manufacturing cells and larger computer‐controlled units have been created, such as the robot assembly system shown in Figure 2.7, even though removing people from the factory entirely is still not economically feasible. Indeed, in many countries where wage rates for unskilled and semi‐skilled labour are relatively low, very large numbers of people are still employed in high‐volume assembly work. This is particularly so where designs change frequently, as in mobile phone production.

Figure 2.7 A modern fully automated area within a factory where industrial robots build wrist units for more industrial robots.

Source: Image Courtesy of FANUC Europe.

2.7 The Twenty‐First Century

Towards the end of the twentieth century the Internet began to appear in its modern form, and in the first quarter of the twenty‐first century it has transformed much of the way we work and communicate. Digital communication networks of all kinds, whether industrial, governmental, commercial, domestic or social, can now interact with one another. This interconnection, together with the advent of the World Wide Web and progress in advanced robotics, machine vision systems and artificial intelligence, has given rise to the ‘Internet of Things’ and what is now called the ‘fourth’ Industrial Revolution. The impact of these factors on manufacturing industry is discussed later in the book.

The history of man's use of tools shows how manufacturing industry has shaped our society. These first two chapters have attempted to show the significance of manufacturing. The following chapters now describe the composition and operation of the manufacturing industry of today.

Review Questions

- 1 What feature made Homo sapiens different from its precursors, and how was this advantageous?

- 2 Why did Europe lag behind Asia in the development of metalworking?

- 3 Why did the full use of iron take longer than that of copper?

- 4 Why were improvements in the quality of items such as cannon barrels possible in the fifteenth century?

- 5 Explain the features that produced the ‘factory system’ of working in the seventeenth century.

- 6 Describe four factors whose combination produced the Industrial Revolution.

- 7 What was the principal reason for ‘the factory’ emerging as a central manufacturing unit?

- 8 Name and describe three major concepts in manufacturing that appeared during the initial years of the nineteenth century.

- 9 Briefly describe the production method, originally used by Olds, which Ford exploited to revolutionise car manufacture at the beginning of the twentieth century.

- 10 What device led to the emergence of ‘reprogrammable automation’ and why is this type of automation important in today's economy?