Chapter 6. Memory-Restricted Search

This chapter studies different algorithms that explore the middle ground between linear-space algorithms like IDA* and memory-sensitive algorithms like A*. It considers transposition tables for full and lossy/lossless sparse state set representations, as well as omission schemes for frontier and visited state sets.

Keywords: transposition table, fringe search, iterative threshold search, SMAG, enforced hill-climbing, weighted A*, overconsistent A*, anytime repairing A*, k-best-first search, beam search, partial A*, partial IDA*, frontier search, sparse-memory graph search, breadth-first heuristic search, locality, beam-stack search, partial expansion A*, two-bit breadth-first search

In the previous chapter, we saw instances of search graphs that were so large that they inherently call for algorithms capable of running under limited memory resources. So far, we have restricted the presentation to algorithms whose memory consumption scales at most linearly with the search depth. By virtue of its lower memory requirements, IDA* can solve problems that A* cannot. On the other hand, it cannot avoid revisiting nodes. Thus, there are many problems that neither A* nor IDA* can solve, because A* runs out of memory and IDA* takes too long. Several solutions have been proposed that use the entire amount of main memory more effectively to store more information on potential duplicates. One problem of introducing memory for duplicate removal is a possible interaction with depth-bounded search: we observe an anomaly in which goals are not found even if they have smaller costs than the imposed threshold.

We can coarsely classify the attempts by denoting whether or not they sacrifice completeness or optimality, and if they prune the Closed list, the Open list, or both. A broad class of algorithms that we focus on first uses all the memory that is available to temporarily store states in a cache. This reduces the number of reexpansions. We start with fixed-size hash tables in depth-bounded and iterative-deepening search. Next we consider memory-restricted state-caching algorithms that dynamically extend the search frontier. They have to decide which state to retain in memory and which one to delete.

If we are willing to sacrifice optimality or completeness, then there are algorithms that can obtain good solutions faster. This class includes exploration approaches that strengthen the influence of search heuristics in best-first searches and search algorithms that have limited coverage and look only at some parts of the search space. Approaches that are complete but not optimal are useful mainly for overcoming inadmissibilities in the heuristic evaluation function; others additionally sacrifice completeness.

Another class of algorithms reduces the set of expanded nodes, as full information might not be needed to avoid redundant work. Such a reduction is effective for problems that induce a small search frontier. In most cases, a regular structure of the search space (e.g., an undirected or acyclic graph structure) is assumed. As states on solution paths might no longer be present, after a goal has been found, the according paths have to be reconstructed.

The last class of algorithms applies a reduction to the set of search frontier nodes. The general assumption is that the search frontier is large compared to the set of visited nodes. In some cases, the storage of all successors is avoided. Another important observation is that the breadth-first search frontier is smaller than the best-first search frontier, leading to cost-bounded BFS. We present algorithms closer to A* than to IDA*, which are not guaranteed to always find the optimal solution within the given memory limit, but significantly improve the memory requirements.

6.1. Linear Variants Using Additional Memory

A variety of algorithms have been proposed that are guaranteed to find optimal solutions, and that can exploit additionally available memory to reduce the number of expansions and hence the running time.

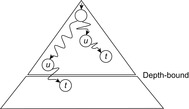

To emphasize the problems that can arise when introducing space for duplicate detection, let us introduce a Closed list in DFS. Unfortunately, bounding the depth to some value d (e.g., to improve some previously encountered goal) does not necessarily imply that every state reachable at a search depth less than d will eventually be visited. To see this, consider depth-bounded DFS as applied in the search tree of Figure 6.1.
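The anomaly can be reproduced on a very small example. The following is a minimal sketch, not the graph of Figure 6.1: the four-node graph `GRAPH`, the function name `bounded_dfs`, and the `reopen` flag are assumptions made for illustration. Node b is first reached at depth 2 (where the bound stops the search) and stored in Closed, so the later, shallower visit at depth 1 is pruned and the goal t at depth 2 is missed.

```python
def bounded_dfs(graph, u, goal, bound, closed, depth=0, reopen=False):
    """Depth-bounded DFS with a Closed dictionary (node -> depth at which it was stored)."""
    if u == goal:
        return True
    closed[u] = depth
    if depth == bound:
        # Successors would lie beyond the depth bound, so u is not expanded.
        return False
    for v in graph[u]:
        seen = closed.get(v)
        if seen is not None and not (reopen and depth + 1 < seen):
            # Duplicate: prune it, unless reopening is enabled and the new path is shallower.
            continue
        if bounded_dfs(graph, v, goal, bound, closed, depth + 1, reopen):
            return True
    return False


# Hypothetical graph: b is reachable at depth 2 via a and at depth 1 directly from s.
GRAPH = {"s": ["a", "b"], "a": ["b"], "b": ["t"], "t": []}

missed = bounded_dfs(GRAPH, "s", "t", bound=2, closed={})
found = bounded_dfs(GRAPH, "s", "t", bound=2, closed={}, reopen=True)
```

With plain duplicate pruning the goal is missed although it lies within the bound; allowing a node to be reopened when it is reached on a shallower path repairs the search in this example.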

The anomaly can be avoided by either reopening expanded nodes if reached on a smaller g-value or by applying an iterative-deepening strategy, which has searched for a low-cost solution before larger thresholds are applied. Table 6.1 shows the execution of such depth-first iterative-deepening exploration together with full duplicate detection for the example of Figure 2.1 (see also Figure 5.3 in Ch. 5).

| Step | Iteration | Selection | Open | Closed | U | U′ | Remarks |
|---|---|---|---|---|---|---|---|
| 1 | 1 | {} | {a} | {} | 0 | ∞ | |
| 2 | 1 | a | {} | {a} | 0 | 2 | g(b) |
| 3 | 2 | {} | {a} | {} | 2 | ∞ | New iteration starts |
| 4 | 2 | a | {b} | {a} | 2 | 6 | g(c) and g(d) > U |
| 5 | 2 | b | {} | {a, b} | 2 | 6 | |
| 6 | 3 | {} | {a} | {} | 6 | ∞ | New iteration starts |
| 7 | 3 | a | {b, c} | {a} | 6 | 10 | g(d) > U |
| 8 | 3 | b | {e, f, c} | {a, b} | 6 | 10 | |
| 9 | 3 | e | {f, c} | {a, b, e} | 6 | 10 | Duplicate |
| 10 | 3 | f | {c} | {a, b, e, f} | 6 | 10 | Duplicate |
| 11 | 3 | c | {} | {a, b, e, f, c} | 6 | 9 | g(d) |
| 12 | 4 | {} | {a} | {} | 9 | ∞ | New iteration starts |
| 13 | 4 | a | {b, c} | {a} | 9 | 10 | g(d) > U |
| 14 | 4 | b | {e, f, c} | {a, b} | 9 | 10 | |
| 15 | 4 | e | {f, c} | {a, b, e} | 9 | 10 | Duplicate |
| 16 | 4 | f | {c} | {a, b, e, f} | 9 | 10 | Duplicate |
| 17 | 4 | c | {d} | {a, b, e, f, c} | 9 | 10 | d Duplicate |
| 18 | 4 | d | {} | {a, b, e, f, c} | 9 | 10 | |
| 19 | 5 | {} | {a} | {} | 10 | ∞ | New iteration starts |
| 20 | 5 | a | {b, c, d} | {a} | 10 | ∞ | |
| 21 | 5 | b | {e, f, c, d} | {a, b} | 10 | ∞ | |
| 22 | 5 | e | {f, c, d} | {a, b, e} | 10 | ∞ | Duplicate |
| 23 | 5 | f | {c, d} | {a, b, e, f} | 10 | ∞ | Duplicate |
| 24 | 5 | c | {d} | {a, b, e, f, c} | 10 | ∞ | |
| 25 | 5 | d | {} | {a, b, e, f, c, d} | 10 | 14 | g(g) |
| 26 | 6 | {} | {a} | {} | 14 | ∞ | New iteration starts |
| 27 | 6 | a | {b, c, d} | {a} | 14 | ∞ | g(d) > U |
| 28 | 6 | b | {e, f, c, d} | {a, b} | 14 | ∞ | |
| 29 | 6 | e | {f, c, d} | {a, b, e} | 14 | ∞ | Duplicate |
| 30 | 6 | f | {c, d} | {a, b, e, f} | 14 | ∞ | Duplicate |
| 31 | 6 | c | {d} | {a, b, e, f, c} | 14 | ∞ | |
| 32 | 6 | d | {g} | {a, b, e, f, c, d} | 14 | ∞ | |
| 33 | 6 | g | {} | {a, b, e, f, c, d} | 14 | ∞ | Goal reached |

6.1.1. Transposition Tables

The storage technique of transposition tables is inherited from the domain of two-player games; the name stems from duplicate game positions that can be reached by performing the same moves in a different order. Especially for single-agent searches, the name transposition table is unfortunate, as transposition tables are fully flexible dictionaries that detect duplicates, even if not generated by move transpositions.

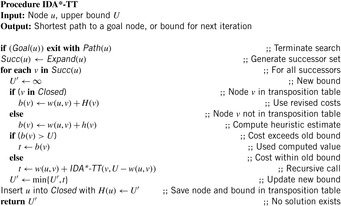

At least for problems with fast successor generators (like the (n² − 1)-Puzzle), the construction and maintenance of the generated search space can be a time-consuming task compared to depth-first search approaches like IDA*. Transposition tables implemented as hash dictionaries (see Ch. 3) preserve a high performance. They store visited states u together with a cost value H(u) that is updated during the search.

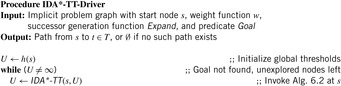

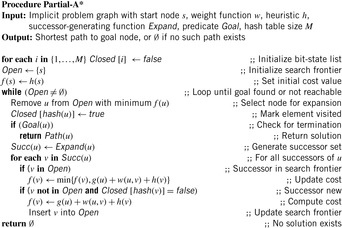

We present the use of transposition tables here as a variant of IDA* (compare Alg. 5.8 on page 205). We assume the implementation of a top-level driver routine (see Alg. 6.1), which matches the one of IDA* except that Closed is additionally initialized to the empty set. Furthermore, we see that Algorithm 6.2 returns the threshold U′ for the next iteration. Closed stores previously explored nodes u, together with a threshold H(u), such that the cost of a path from the root via u to a descendant of u is g(u) + H(u). Each newly generated node v is first tested against Closed; if it is found there, then the stored value H(v) is a tighter bound than h(v).

The application of the algorithm to the example problem is shown in Table 6.2. We see that the (remaining) upper bound decreases. The approach applies one step less than the original IDA*, since node d is not considered twice.

| Step | Iteration | Selection | Open | Closed | U | U′ | Remarks |
|---|---|---|---|---|---|---|---|
| 1 | 1 | {} | {a} | {} | 11 | ∞ | h(a) |
| 2 | 1 | a | {} | {a} | 11 | 14 | b(b), b(c), and b(d) exceed U |
| 3 | 2 | {} | {a} | {(a, 14)} | 14 | ∞ | New iteration starts |
| 4 | 2 | a | {c} | {(a, 14)} | 14 | ∞ | b(b) and b(d) exceed U |
| 5 | 2 | c | {d} | {(a, 14)} | 8 | ∞ | b(a) exceeds U |
| 6 | 2 | d | {g} | {(a, 14)} | 5 | ∞ | b(a) exceeds U |
| 7 | 2 | g | {} | {(a, 14)} | 0 | | Goal found |
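The idea of storing a backed-up bound H(u) can be sketched in a few lines. This is not Algorithm 6.1 or 6.2 but a simplified illustration under stated assumptions: edge costs are strictly positive, h is admissible, the table is never pruned, and the names `ida_star_tt`, `estimate`, `GRAPH`, and `H` are hypothetical. After a subtree below u has been exhausted for threshold U, the smallest f-value that exceeded U, minus g(u), is stored as an improved lower bound on the remaining cost from u.

```python
import math


def ida_star_tt(start, is_goal, successors, h):
    """IDA* that keeps backed-up lower bounds H(u) in an unbounded transposition table."""
    table = {}  # u -> H(u), a lower bound on the remaining cost from u

    def estimate(u):
        # Use the stored bound when it is tighter than the heuristic.
        return max(h(u), table.get(u, 0))

    def search(u, g, U):
        f = g + estimate(u)
        if f > U:
            return None, f            # cut off; f is a candidate for the next threshold
        if is_goal(u):
            return g, U
        next_U = math.inf
        for v, w in successors(u):
            cost, t = search(v, g + w, U)
            if cost is not None:
                return cost, t
            next_U = min(next_U, t)
        table[u] = next_U - g         # back up the improved bound for u
        return None, next_U

    U = estimate(start)
    while U < math.inf:
        cost, U = search(start, 0, U)
        if cost is not None:
            return cost, table
    return None, table


# Hypothetical weighted graph with an admissible heuristic.
GRAPH = {"s": [("a", 1), ("b", 4)], "a": [("b", 1), ("g", 5)], "b": [("g", 1)], "g": []}
H = {"s": 2, "a": 2, "b": 1, "g": 0}

COST, TABLE = ida_star_tt("s", lambda u: u == "g", lambda u: GRAPH[u], H.get)
```

Because this sketch never evicts entries, its memory use grows with the number of expanded nodes.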

Of course, if we store all expanded nodes in the transposition table, we would end up with the same memory requirement as A*, contrary to the original intent of IDA*. One solution to this problem is to embed a replacement strategy into the algorithm. One candidate would be to organize the table in the form of a first-in first-out queue. In the worst case, this will not provide any acceleration. By an adversary strategy argument, it may always happen that nodes just deleted are being requested.

Stochastic node caching can effectively reduce the number of revisits. While transposition tables always cache as many expanded nodes as possible, stochastic node caching stores expanded nodes only with a certain probability. Whenever a node is expanded, we decide whether to keep the node in memory by flipping a (possibly biased) coin. This selective caching allows storing, with high probability, only nodes that are visited most frequently. The algorithm takes an additional parameter p, which is the probability of a node being cached every time it is expanded. It follows that the overall probability of a node being stored after it is expanded t times is $1 - (1 - p)^t$; the more frequently the same node is expanded, the higher the probability of it being cached becomes.
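A small sketch of the caching rule and of the resulting probability follows. The function names `cache_probability` and `maybe_cache`, and the use of a set as the cache, are assumptions for illustration only.

```python
import random


def cache_probability(p, t):
    """Probability that a node is cached after t expansions: 1 - (1 - p)^t."""
    return 1.0 - (1.0 - p) ** t


def maybe_cache(cache, node, p, rng):
    """Flip a biased coin on each expansion; keep the node with probability p."""
    if node not in cache and rng.random() < p:
        cache.add(node)
    return node in cache


rng = random.Random(0)           # seeded only to make runs repeatable
cache = set()
for _ in range(5):               # the same node is expanded five times
    maybe_cache(cache, "u", 0.2, rng)
```

For p = 0.5, a node expanded three times is cached with probability 1 − 0.5³ = 0.875, whereas a node expanded once is cached with probability 0.5.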

6.1.2. Fringe Search

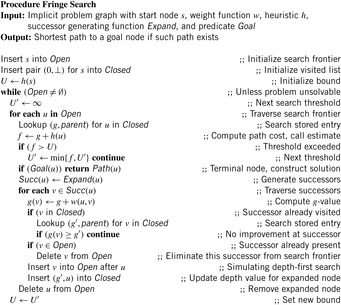

Fringe search also reduces the number of revisits in IDA*. Regarded as a variant of A*, the key idea in fringe search is that Open does not need to be fully sorted, avoiding access to complex data structures. The essential property that guarantees optimal solutions is the same as in IDA*: A state with an f-value exceeding the largest f-value expanded so far must not be expanded, unless there is no state in Open with a smaller f-value.

Fringe search iterates over the frontier of the search tree. The data structure consists of two plain lists: $Open_t$ for the current iteration and $Open_{t+1}$ for the next iteration; $Open_0$ is initialized with the initial node s and $Open_1$ is initialized to the empty set.

Unless the goal is found, the algorithm simulates IDA*. The first node u in $Open_t$ (the head) is examined. If f(u) > U, then u is removed from $Open_t$ and inserted into $Open_{t+1}$ (at the end). Node u is only generated but not expanded in this iteration, so we save it for the next iteration. If f(u) ≤ U, then we generate its successors and insert them into $Open_t$ (at the front), after which u is discarded. When a goal has not been found and the iteration completes, the search threshold is increased. Moreover, $Open_{t+1}$ becomes $Open_t$, and $Open_{t+2}$ is set to empty. It is not difficult to see that fringe search expands nodes in the exact same order as IDA*.

Compared to A*, fringe search may visit nodes that are irrelevant for the current iteration, whereas A* must insert nodes into a priority queue structure (imposing some overhead). On the other hand, A*'s ordering means that it is more likely to find a goal sooner.

Algorithm 6.3 displays the pseudo code. The implementation is a little tricky, as it embeds $Open_t$ and $Open_{t+1}$ in one list structure. All nodes before the currently expanded node belong to the next search frontier $Open_{t+1}$, whereas all nodes after the currently expanded node belong to the current search frontier $Open_t$. Nodes u that are simply passed by executing the continue statement in case f(u) > U move from one list to the other. All other expanded nodes are deleted since they do not belong to the next search frontier. Successors are generated and checked as to whether or not they are duplicates of already expanded or generated nodes. If one matches a state, then the one with the best g-value (which matches the best f-value, as the h-values are the same) will survive. If not refuted, the new state is inserted directly after the expanded node as still to be processed. The order of insertion is chosen such that the expansion order matches the depth-first strategy.
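The two-list scheme can be sketched as follows. This is not Algorithm 6.3: instead of one embedded list it uses two explicit deques (`now` and `later`, hypothetical names), and it handles duplicates by keeping the best g-value per state and skipping stale entries. An admissible heuristic and nonnegative edge costs are assumed; `GRAPH` and `H` are illustrative data.

```python
import math
from collections import deque


def fringe_search(start, is_goal, successors, h):
    """Fringe search with two lists: `now` (current iteration) and `later` (next one)."""
    best_g = {start: 0}
    parent = {start: None}
    now, later = deque([(start, 0)]), deque()
    U = h(start)
    while now:
        next_U = math.inf
        while now:
            u, g = now.popleft()            # examine the head of the current list
            if g > best_g[u]:
                continue                    # stale entry: a cheaper path is known
            f = g + h(u)
            if f > U:
                next_U = min(next_U, f)
                later.append((u, g))        # only generated; keep for next iteration
                continue
            if is_goal(u):
                path = []
                while u is not None:
                    path.append(u)
                    u = parent[u]
                return g, path[::-1]
            # Insert successors at the front, in reverse, to keep depth-first order.
            for v, w in reversed(list(successors(u))):
                if g + w < best_g.get(v, math.inf):
                    best_g[v] = g + w
                    parent[v] = u
                    now.appendleft((v, g + w))
        now, later = later, deque()         # the next frontier becomes the current one
        U = next_U                          # raise the threshold
    return None


GRAPH = {"s": [("a", 1), ("b", 4)], "a": [("b", 1), ("g", 5)], "b": [("g", 1)], "g": []}
H = {"s": 2, "a": 2, "b": 1, "g": 0}
RESULT = fringe_search("s", lambda u: u == "g", lambda u: GRAPH[u], H.get)
```

Neither list is ever sorted; the threshold alone decides whether a node is expanded now or deferred.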

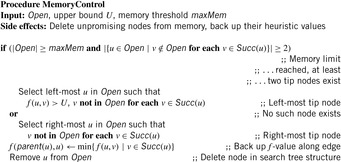

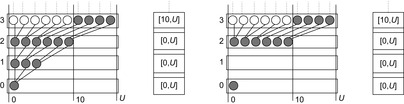

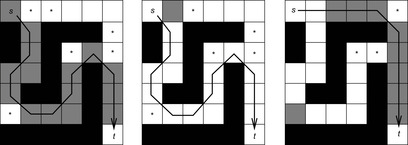

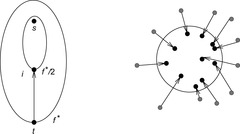

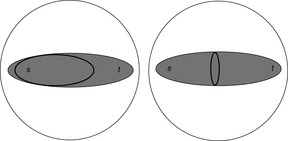

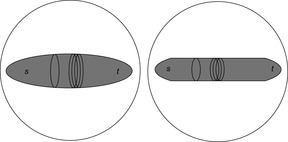

An illustration comparing fringe search (right) with IDA* (left) is given in Figure 6.2. The heuristic estimate is the distance to a leaf (height of the node). Both algorithms start with an initial threshold of h(s) = 3. Before the algorithm proves the problem to be unsolvable with a cost threshold of 3, two nodes are expanded and two nodes are generated. An expanded node has its children generated. A generated node is one where no search is performed because the f-value exceeds the threshold. In the next iteration, the threshold is increased to 4.

Figure 6.2

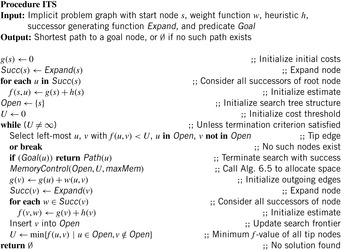

6.1.3. *Iterative Threshold Search

The memory-restricted iterative threshold search algorithm (ITS) also closely resembles IDA*. Similar to fringe search, its exploration order is depth-first and not best-first. In contrast to fringe search, which assumes enough memory to be available and which does not need a complex data structure to support its search, ITS requires some tree data structure to retract nodes when running out of memory.

Compared to the previous approaches, ITS provides a strategy to replace elements in memory by their cost value. One particular feature is that it maintains information not only for nodes, but also for edges. The value f(u, v) stores a lower-bound estimate of a solution path using edge (u, v). Node v does not have to be generated—it suffices to know the operator leading to it without actually applying it. When a node u is created for the first time, all estimates f(u, v) are initialized to the usual bound f(u) = g(u) + h(u) (to deal with the special case that u has no successors, a dummy node d is assumed with f(u, d) = ∞; for brevity, this is not shown in the pseudo code). An edge (u, v), where v has not been created, is called a tip edge; a tip node is a node of which all outgoing edges are tip edges.

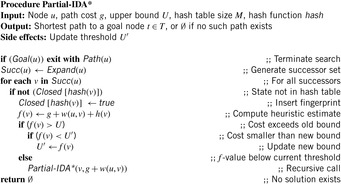

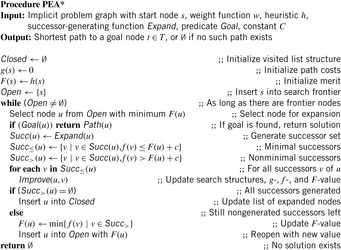

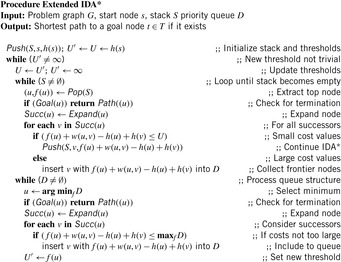

An implementation of the approach is shown in Algorithm 6.4 and Algorithm 6.5. The similarity of the algorithm to IDA* is slightly obscured by the fact that it is formulated in an iterative rather than recursive way; however, this simplifies its exposition. Note that IDA* uses a node ordering that is implicitly defined by the arbitrary but fixed sequence in which successors are generated. It is the same order that we refer to in the following when speaking, for example, of a left-most or right-most tip node.

As before, an upper threshold U bounds the depth-first search stage. The search tree is expanded until a solution is found, or all tip nodes exceed the threshold. Then the threshold is increased by the smallest possible increment to include a new edge, and a new iteration starts. If ITS is given no more memory than (plain) IDA*, every node generated by ITS is also generated by IDA*. However, when additional memory is available, ITS reduces the number of node expansions by storing part of the search tree and backing up heuristic values as more informed bounds.

The inner search loop always selects the left-most tip branch of which the f-value is at most U. The tail of the edge is expanded; that is, its g-value and f-value are computed, and its successor structures are initialized with this value. Finally, it is inserted into the search tree.

Global memory consumption is limited by a threshold maxMem. If this limit is reached, the algorithm first tries to select a node for deletion of which all the successor edges exceed the upper bound. From several such nodes, the left-most one with this condition is chosen. Otherwise, the right-most tip node is chosen. Before dropping the node, the minimum f-value over its successors is backed up to its parent to improve the latter one's estimate and hence reduce the number of necessary reexpansions. Thus, even though the actual nodes are deleted, the heuristic information gained from their expansion is saved.

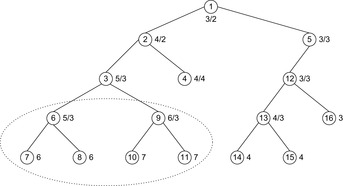

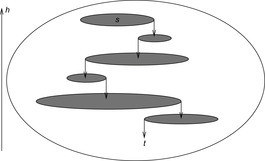

An example of a tree-structured problem graph for applying IDA* (top) and ITS (bottom) is provided in Figure 6.3. The tree is searched with the trivial heuristic (h ≡ 0). The initial node is the root and a single goal node is located at the bottom of the tree. After three iterations (left) all nodes in the top half of the search tree have been traversed by the algorithm. Since the edges to the left of the solution path have not led to a goal, they are assigned cost ∞. As a result, ITS avoids revisits of the nodes in the subtrees below. In contrast, IDA* will reexplore these nodes several times. In particular, in the last iteration (right), IDA* revisits several nodes of the top part.

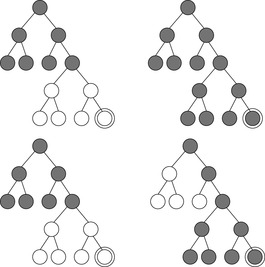

6.1.4. MA*, SMA*, and SMAG

The original algorithm MA* underwent several improvements, during the course of which the name changed to SMA* and later to SMAG. We will restrict ourselves to describing the latter.

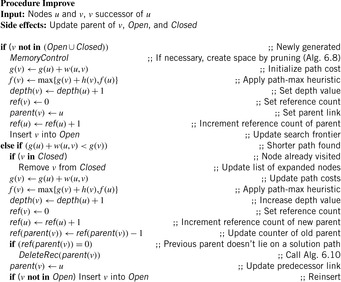

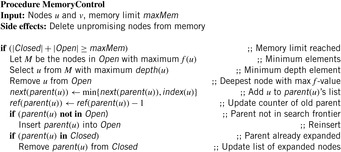

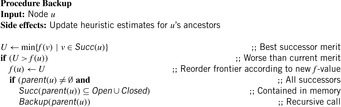

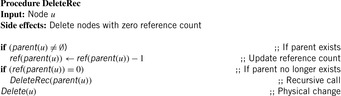

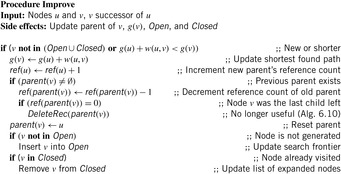

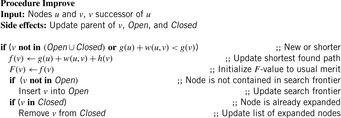

Contrary to A*, SMAG (see Alg. 6.6) generates one successor at a time. Compared to ITS, its cache decisions are based on problem graph nodes rather than on problem graph edges. Memory restoration is based on maintaining reference count(er)s. If a counter becomes 0, the node no longer needs to be stored. The algorithm assumes a fixed upper bound on the number of allocated edges in Open ∪ Closed. When this limit is reached, space is reassigned by dynamically deleting one previously expanded node at a time, and if necessary, moving its parent back to Open such that it can be regenerated. A least-promising node (i.e., one with the maximum f-value) is replaced. If there are several nodes with the same maximum f-value, then the shallowest one is taken. Nodes with the minimum f-value are selected for expansion; correspondingly, the tie-breaking rule prefers the deepest one in the search tree.

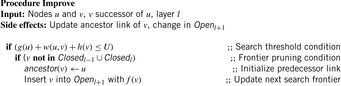

The update procedure Improve is shown in Algorithm 6.7. Here, reference counts and depth values are adapted. If the reference count (at some parent place) decreases to zero, a (possibly recursive) node delete procedure for unused nodes is invoked. The working of the function is close to the one of a garbage collector for dynamic memory regions in some programming languages like Java. In the assignment to ref(parent(v)) nothing will happen if v is equal to the initial node s.

Thus, the Open list contains partially expanded nodes. It would be infeasible to keep track of all nodes storing pointers to all successors; instead we assume that each node keeps track of an iterator index next indicating the smallest unexamined child. The information about forgotten nodes is preserved by backing up the minimum f-value of descendants in a completely expanded subtree (see Alg. 6.9). Because f(u) is an estimate of the least-cost solution path through u, and the solution path is restricted to pass one of u's successors, we can obtain a better estimate from $\max\{f(u), \min\{f(v) \mid v \in Succ(u)\}\}$.

If all successors of u have been dropped, we will not know which way to go from u, but we still have an idea of how worthwhile it is to go anywhere from u. The backed-up values provide a more informed estimate.

When regenerating forgotten nodes, to reduce the number of repeated expansions we would also like to use the most informed estimate. Unfortunately, the estimates of individual paths are lost. One considerable improvement is the so-called path-max heuristic (as introduced in Sec. 2.2.1): If the heuristic is at least admissible, since a child's goal distance can only be smaller than the parent's by the edge cost, it is valid to apply the bound max{f(v), f(u)}, where v ∈ Succ(u).
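Both value updates are simple enough to state as code. The sketch below is illustrative only: the function names are hypothetical, the backup rule max{f(u), min f(v)} is the usual form of backing up the minimum successor value and is assumed here, and a node without successors is treated as a dead end with value ∞.

```python
import math


def backed_up_f(f_u, successor_f_values):
    """Back up the minimum f-value of the successors, never lowering f(u).

    Assumption: a node with no successors is a dead end and gets the value infinity.
    """
    return max(f_u, min(successor_f_values, default=math.inf))


def path_max(f_parent, f_child):
    """Path-max: a child's f-value is raised to at least its parent's f-value."""
    return max(f_parent, f_child)


# Illustrative numbers: a parent with f = 20 and two forgotten successors.
improved = backed_up_f(20, [30, 25])
corrected = path_max(20, 15)
```

The first update keeps information about forgotten successors at the parent; the second repairs a nonmonotone f-value when a node is generated.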

One complication of the algorithm is the need to prune a node from the Closed list if it does not occur on the best path to any fringe node. Since these nodes are essentially useless, they can cause memory leaks. The problem can be solved by introducing a reference counter for each node that keeps track of the number of successor nodes of which the parent pointer refers to them. When this count goes to zero, the node can be deleted; moreover, this might give rise to a chain of ancestor deletions as sketched in Algorithm 6.10.
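The chain of ancestor deletions can be sketched with explicit reference counters. This is not Algorithm 6.10; the class `Node`, the function `release`, and the dictionary `closed` are hypothetical names, and the counter of a node counts the stored nodes whose parent pointer refers to it.

```python
class Node:
    """A stored search node with a parent pointer and a reference counter."""

    def __init__(self, name, parent=None):
        self.name = name
        self.parent = parent
        self.ref = 0                      # number of stored children pointing here
        if parent is not None:
            parent.ref += 1


def release(node, closed):
    """Delete `node` if unreferenced and cascade to ancestors whose counter drops to zero."""
    deleted = []
    while node is not None and node.ref == 0:
        closed.pop(node.name, None)
        deleted.append(node.name)
        parent = node.parent
        if parent is not None:
            parent.ref -= 1               # one fewer child refers to the parent
        node = parent
    return deleted


# Tree a -> b -> c and a -> d: dropping c frees b as well, but a is still needed by d.
a = Node("a")
b = Node("b", a)
c = Node("c", b)
d = Node("d", a)
closed = {n.name: n for n in (a, b, c, d)}
removed = release(c, closed)
```

The loop replaces the recursion mentioned in the text; each iteration handles one ancestor.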

Since the algorithm requires the selection of both the minimum and the maximum f-value, the implementation needs a refined data structure. For example, we could use two heaps or a balanced tree. To select a node according to its depth, a tree of trees could also be employed.

As an example of state generation in SMAG, we take a search tree with six nodes as shown in Figure 6.4. Let the memory limit be assigned to store at most three nodes. Initially, node a is stored in memory with a cost of 20, then nodes b and c are generated next with costs of 30 and 25, respectively. Now a node has to be deleted to continue exploration. We take node c because it has the highest cost. Node a is annotated with a cost of 30, which is the lowest cost for a deleted child. Successor node d of node b is generated with a cost of 45. Since node d is not a solution it is deleted and node b is annotated with 45. The next child of b, node e, is then generated. Since node e is not a solution either, node e is deleted and node b is regenerated, because node b is the node with the next best cost. After node b is regenerated, node c is deleted, so that goal node f with zero cost is found.

6.2. Nonadmissible Search

In Section 2.2.1 we have seen that the use of an admissible heuristic guarantees that algorithm A* will find an optimal solution. However, because problem graphs can be huge, waiting for the algorithm to terminate may be unacceptable, if the algorithm can be carried out at all given the limits of main memory. Therefore, variants of heuristic search algorithms were developed that do not insist on the optimal solution, but on a good solution in feasible time and space. Some strategies even sacrifice completeness and may fail to find a solution of a solvable problem instance. These algorithms usually come with strategies that decrease the likelihood of such errors. Moreover, they are able to obtain optimal solutions to problems where IDA* and A* fail.

6.2.1. Enforced Hill-Climbing

Hill-climbing is a greedy search engine that selects the best successor node under evaluation function h, and commits the search to it. Then the successor serves as the actual node, and the search continues. Of course, hill-climbing does not necessarily find optimal solutions. Moreover, it can be trapped in state space problem graphs with dead-ends. On the other hand, the method proves to be extremely efficient for some problems, especially the easiest ones.

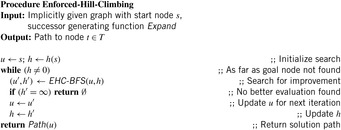

A more stable version is enforced hill-climbing. It picks a successor node, only if it has a strictly better evaluation than the current node. Since this node might not be in the immediate neighborhood of the current node, enforced hill-climbing searches for that node in a breadth-first manner. We have depicted the pseudo code for the driver in Algorithm 6.11. The BFS procedure is shown in Algorithm 6.12. We assume a proper heuristic with h(t) = 0, if and only if t is a goal. An example is provided in Figure 6.5.
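The interplay of the driver and the breadth-first phase can be sketched as follows. This is an illustration rather than Algorithms 6.11 and 6.12: the function names, the unweighted example graph `GRAPH`, and the heuristic table `H` are assumptions, and h(t) = 0 is taken to hold exactly for goal nodes as stated above.

```python
from collections import deque


def improve_by_bfs(u, successors, h):
    """Breadth-first search from u for a node with a strictly smaller h-value.

    Returns the path segment after u up to that node, or None if none is reachable.
    """
    parent = {u: None}
    queue = deque([u])
    while queue:
        x = queue.popleft()
        for v in successors(x):
            if v in parent:
                continue
            parent[v] = x
            if h(v) < h(u):
                segment = []
                while v != u:
                    segment.append(v)
                    v = parent[v]
                return segment[::-1]
            queue.append(v)
    return None


def enforced_hill_climbing(start, successors, h):
    """Repeatedly commit to the first strictly better node found by BFS."""
    path = [start]
    while h(path[-1]) != 0:
        segment = improve_by_bfs(path[-1], successors, h)
        if segment is None:
            return None                   # stuck, for example in a dead end
        path.extend(segment)
    return path


GRAPH = {"s": ["a", "b"], "a": ["c"], "b": ["d"], "c": ["g"], "d": [], "g": []}
H = {"s": 3, "a": 3, "b": 3, "c": 1, "d": 5, "g": 0}
PLAN = enforced_hill_climbing("s", lambda u: GRAPH[u], H.get)
```

In this example the first BFS has to look two steps ahead, because both successors of s are on a plateau with the same h-value as s.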

Theorem 6.1

(Completeness Enforced Hill-Climbing) If the state space graph contains no dead-ends then Algorithm 6.12 will find a solution.

Proof

There is only one case in which the algorithm does not find a solution; that is, for some intermediate node u, no better evaluated node v can be found. Since BFS is a complete search method, it will find a node on a solution path with better evaluation. In fact, if it were not terminated in the case of h(v) < h(u), but in the case of h(v) = 0, it would find a full solution path.

If we have an undirected problem graph, then it contains no dead-ends. Moreover, any complete algorithm can be used instead of BFS. However, there is no performance guarantee on the solution path obtained. An illustration of the search plateaus generated by the enforced hill-climbing algorithm is provided in Figure 6.6. The plateaus do not have to be disjoint, as intermediate nodes in one layer can exceed the h-value for which the BFS search was invoked.

Figure 6.6

Besides being incomplete in directed graphs the algorithm has other drawbacks. There is evidence that when the heuristic estimation is not very accurate, enforced hill-climbing easily suffers from stagnation or is led astray.

6.2.2. Weighted A*

We often have that the heuristic h drastically underestimates the true distance, so that we can obtain a more realistic estimate by scaling up its influence with regard to some parameter. Although this compromises optimality, it can lead to a significant speedup; this is an appropriate choice when searching under time or space constraints.

If we parameterize $f_l(u) = l \cdot h(u) + (1 - l) \cdot g(u)$ with $l \in [0, 1]$, we obtain a continuous range of best-first search variants $A_l$, also denoted as weighted A*. For l = 0, we simulate a breadth-first traversal of the problem space; for l = 1, we have greedy best-first search. Algorithm $A_{0.5}$ selects nodes in the same order as original A*.
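The effect of the parameter can be tried out with a small best-first search. The sketch assumes the evaluation function f_l(u) = l · h(u) + (1 − l) · g(u) with l between 0 and 1, which is consistent with the three special cases named above; the function name `weighted_best_first`, the graph `GRAPH`, and the heuristic `H` are hypothetical.

```python
import heapq
import math


def weighted_best_first(start, is_goal, successors, h, l):
    """Best-first search ordered by f_l(u) = l * h(u) + (1 - l) * g(u)."""
    best_g = {start: 0}
    parent = {start: None}
    counter = 0                                   # tie-breaker: insertion order
    heap = [(l * h(start), counter, start, 0)]
    while heap:
        _, _, u, g = heapq.heappop(heap)
        if g > best_g[u]:
            continue                              # outdated queue entry
        if is_goal(u):
            path = []
            while u is not None:
                path.append(u)
                u = parent[u]
            return g, path[::-1]
        for v, w in successors(u):
            if g + w < best_g.get(v, math.inf):
                best_g[v] = g + w
                parent[v] = u
                counter += 1
                heapq.heappush(heap, (l * h(v) + (1 - l) * (g + w), counter, v, g + w))
    return None


GRAPH = {"s": [("a", 1), ("b", 1)], "a": [("g", 10)], "b": [("c", 1)],
         "c": [("g", 1)], "g": []}
H = {"s": 3, "a": 2, "b": 2, "c": 1, "g": 0}


def run(l):
    return weighted_best_first("s", lambda u: u == "g", lambda u: GRAPH[u], H.get, l)


astar_like = run(0.5)     # same expansion order as A*
greedy = run(1.0)         # greedy best-first search: ignores g entirely
```

On this graph the setting l = 0.5 returns the cheapest path, while l = 1 commits to the first goal it reaches and returns a more expensive one.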

If we choose l appropriately, the monotonicity of f is preserved.

Lemma 6.1

For l ≤ 0.5 and a consistent estimate h, $f_l$ is monotone.

Proof

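Since h is consistent we have f monotone; that is, f(v) ≥ f(u) for all pairs (u, v) on a solution path. Consequently, $f_l(v) = l \cdot h(v) + (1 - l) \cdot g(v) \ge l \cdot (h(u) - w(u, v)) + (1 - l) \cdot (g(u) + w(u, v)) = f_l(u) + (1 - 2l) \cdot w(u, v) \ge f_l(u)$, since $l \le 0.5$ and $w(u, v) \ge 0$.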

Let us now relax the restrictions on l to obtain more efficient, though nonadmissible, algorithms. The quality of the solution can still be bounded in the following sense.

Definition 6.1

(ϵ-Optimality) A search algorithm is ϵ-optimal if it terminates with a solution of maximum cost $(1 + \epsilon) \cdot \delta(s, T)$, where $\delta(s, T)$ is the optimal solution cost and ϵ denotes an arbitrarily small positive constant.

Lemma 6.2

A* where $f(u) = g(u) + (1 + \epsilon) \cdot h(u)$ for an admissible estimate h is ϵ-optimal.

Proof

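For nodes u in Open that satisfy invariant (I) (Lemma 2.2) we have $g(u) = \delta(s, u)$ and $f(u) = g(u) + (1 + \epsilon) \cdot h(u)$ due to the reweighting process. Therefore, $f(u) \le \delta(s, u) + (1 + \epsilon) \cdot \delta(u, T) \le (1 + \epsilon) \cdot \delta(s, T)$. Thus, if a node t ∈ T is selected we have $g(t) = f(t) \le f(u) \le (1 + \epsilon) \cdot \delta(s, T)$.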

ϵ-Optimality allows for more liberal selection of nodes for expansion.

Lemma 6.3

Let $Focal = \{u \in Open \mid f(u) \le (1 + \epsilon) \cdot \min_{u' \in Open} f(u')\}$. Then any selection of a node in Focal yields an ϵ-optimal algorithm.

Proof

Let u be the node in invariant (I) (Lemma 2.2) with $g(u) = \delta(s, u)$ and let v be the node with the minimal f-value in Open. Then f(v) ≤ f(u), and for a goal t selected from Focal we have $f(t) \le (1 + \epsilon) \cdot f(v) \le (1 + \epsilon) \cdot f(u) \le (1 + \epsilon) \cdot \delta(s, T)$.

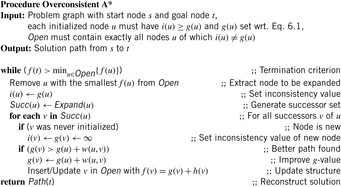

6.2.3. Overconsistent A*

We can reformulate A* search to reuse the search results of its previous executions. To do this, we define the notion of an inconsistent node and then formulate A* search as the repeated expansion of inconsistent nodes. This formulation can reuse the results of previous executions simply by identifying all the nodes that are inconsistent.

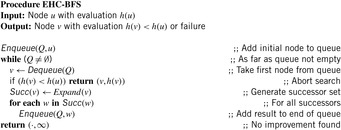

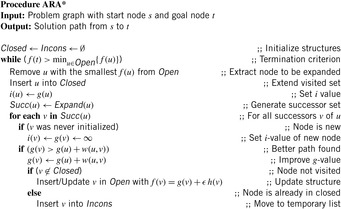

We will first introduce a new variable, called i. Intuitively, these i-values will be estimates of start distances, just like g-values. However, although g(u) is always the cost of the best path found so far from s to u, i(u) will always be equal to the cost of the best path from s to u found at the time of the last expansion of u. If u has never been expanded then i(u) is set to ∞. Thus, every i-value is initially set to ∞ and then it is always reset to the g-value of the node when the node is expanded. The pseudo code of A* that maintains these i-values is given in Algorithm 6.13.

Since we set i(u) to g(u) at the beginning of the expansion of u and we assume nonnegative edge costs, i(u) remains equal to g(u) while u is being expanded. Therefore, setting g(v) to g(u) + w(u, v) is equivalent to setting g(v) to i(u) + w(u, v). As a result, one benefit of introducing i-values is the following invariant that A* always maintains: For every node v ∈ S,

$$g(v) = \begin{cases} 0 & \text{if } v = s, \\ \min_{u \in Pred(v)} \{ i(u) + w(u, v) \} & \text{otherwise.} \end{cases} \tag{6.1}$$

More importantly, however, it turns out that Open contains exactly all the nodes u visited by the search for which $i(u) \ne g(u)$. This is the case initially, when all nodes except for s have both i- and g-values infinite and Open only contains s, which has i(s) = ∞ and g(s) = 0. Afterward, every time a node is being selected for expansion it is removed from Open and its i-value is set to its g-value on the very next line. Finally, whenever the g-value of any node is modified it has been decreased and is thus strictly less than the corresponding i-value. After each modification of the g-value, the node is made sure to be in Open.

Let us call a node u with $i(u) \ne g(u)$ inconsistent and a node with i(u) = g(u) consistent. Thus, Open always contains exactly those nodes that are inconsistent. Consequently, since all the nodes for expansion are chosen from Open, A* search expands only inconsistent nodes.
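The claim that Open coincides with the set of inconsistent nodes can be checked mechanically. The following sketch is not Algorithm 6.13: it keeps Open as a plain set, selects the minimum by scanning, and asserts the property at the start of every iteration. The names `astar_with_i_values`, `GRAPH`, and `H` are hypothetical, and nodes are assumed to be comparable so that ties are broken deterministically.

```python
import math


def astar_with_i_values(start, goal, successors, h, nodes):
    """A* that records, in i(u), the g-value u had when it was last expanded."""
    g = {u: math.inf for u in nodes}
    i = {u: math.inf for u in nodes}
    g[start] = 0
    open_set = {start}
    while open_set:
        # Open holds exactly the inconsistent nodes, that is, those with i(u) != g(u).
        assert open_set == {u for u in nodes if i[u] != g[u]}
        u = min(open_set, key=lambda x: (g[x] + h(x), x))
        if u == goal:
            return g[u], g, i
        open_set.remove(u)
        i[u] = g[u]                       # expanding u makes it consistent
        for v, w in successors(u):
            if g[v] > i[u] + w:
                g[v] = i[u] + w           # lowering g(v) makes v inconsistent
                open_set.add(v)
    return math.inf, g, i


GRAPH = {"s": [("a", 1), ("b", 4)], "a": [("b", 1), ("g", 5)], "b": [("g", 1)], "g": []}
H = {"s": 2, "a": 2, "b": 1, "g": 0}
COST, G, I = astar_with_i_values("s", "g", lambda u: GRAPH[u], H.get, list(GRAPH))
```

On termination the goal is still inconsistent, since it was selected but not expanded, while every expanded node has equal i- and g-values.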

An intuitive explanation of the operation of A* in terms of inconsistent node expansions is as follows. Since at the time of expansion a node is made consistent by setting its i-value equal to its g-value, a node becomes inconsistent as soon as its g-value is decreased and remains inconsistent until the next time the node is expanded. That is, suppose that a consistent node u is the best predecessor for some node v: $g(v) = \min_{u' \in Pred(v)} \{ i(u') + w(u', v) \} = i(u) + w(u, v) = g(u) + w(u, v)$. Then, if g(u) decreases we get $i(u) > g(u)$ and therefore $g(v) > g(u) + w(u, v)$. In other words, the decrease in g(u) introduces an inconsistency between the g-value of u and the g-values of its successors. Whenever u is expanded, on the other hand, this inconsistency is corrected by reevaluating the g-values of the successors of u. This in turn makes the successors of u inconsistent. In this way the inconsistency is propagated to the children of u via a series of expansions. Eventually the children no longer rely on u, none of their g-values are lowered, and none of them are inserted into the Open list.

The operation of this new formulation of A* search is identical to the original version of A* search. The variable i just makes it easy for us to identify all the nodes that are inconsistent: These are all the nodes u with i(u) ≠ g(u). In fact, in this version of the subroutine, the g-values only decrease, and since the i-values are initially infinite, all inconsistent nodes have i(u) > g(u). We will call such nodes overconsistent, and nodes u with i(u) < g(u) are called underconsistent.

In the versions of A* just presented, all nodes had their g-values and i-values initialized at the outset. We set the i-values of all nodes to infinity, we set the g-values of all nodes except for s to infinity, and we set g(s) to 0. We now remove this initialization step and the only restriction we make is that no node is underconsistent and all g-values satisfy equation 6.1 except for g(s), which is equal to zero. This arbitrary overconsistent initialization will allow us to reuse previous search results when running multiple searches.

The pseudo code under this initialization is shown in Algorithm 6.14. The only change necessary for the arbitrary overconsistent initialization is the terminating condition of the while loop. For the sake of simplicity we assume a single goal node t ∈ T. The loop now terminates as soon as f(t) becomes less than or equal to the key of the node to be expanded next; that is, the smallest key in Open (we assume that the min operator on an empty set returns ∞). The reason for this addition is that under the new initialization t may never be expanded if it was already correctly initialized. For instance, if all nodes are initialized in such a way that all of them are consistent, then Open is initially empty, and the search terminates without a single expansion. This is correct, because when all nodes are consistent and g(s) = 0, then for every node u ≠ s, $g(u) = \min_{v \in \mathit{Pred}(u)} (i(v) + w(v, u)) = \min_{v \in \mathit{Pred}(u)} (g(v) + w(v, u))$, which means that the g-values are equal to the corresponding start distances and no search is necessary: the solution path from s to t is an optimal solution.

Just like the original A* search, for consistent heuristics, at the time the test of the while loop is being executed, for any node u with h(u) < ∞ and f(u) ≤ f(v) for all v in Open, it holds that the extracted solution path from s to u is optimal.

Given this property and the terminating condition of the algorithm, it is clear that after the algorithm terminates the returned solution is optimal. In addition, this property leads to the fact that no node is expanded more than once if heuristics are consistent: Once a node is expanded its g-value is optimal and can therefore never decrease afterward. Consequently, the node is never inserted into Open again. These properties are all similar to the ones A* search maintains. Unlike A* search, however, the Improve function does not expand all the nodes relevant to the computation of the shortest path. It does not expand nodes whose i-values were already equal to the corresponding start distances. This property can result in substantial computational savings when using it for repeated search. A node u is expanded at most once during the execution of the Improve function and only if i(u) was not equal to the start distance of u before invocation.
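The behavior described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a transcription of Algorithm 6.14: the function name `improve`, the adjacency-list layout `succ`, and the toy graph are hypothetical. The sketch rebuilds Open from the inconsistent nodes, stops as soon as f(t) is no larger than the smallest key, and shows that a second call on the same g- and i-values performs no expansions.

```python
import heapq
from math import inf

def improve(succ, h, t, g, i):
    """Expand inconsistent nodes until f(t) <= the smallest key in Open.

    g and i are dicts that are updated in place; any overconsistent
    initialization (i[u] >= g[u] for all u) is accepted.
    Returns the list of expanded nodes in expansion order.
    """
    def key(u):
        return g[u] + h[u]

    # Open holds exactly the inconsistent nodes (i[u] != g[u]).
    open_heap = [(key(u), u) for u in g if i[u] != g[u]]
    heapq.heapify(open_heap)
    expanded = []
    while open_heap:
        k, u = open_heap[0]
        if k != key(u) or i[u] == g[u]:
            heapq.heappop(open_heap)      # outdated heap entry, skip it
            continue
        if key(t) <= k:                   # f(t) <= min key in Open: stop
            break
        heapq.heappop(open_heap)
        i[u] = g[u]                       # expansion makes u consistent
        expanded.append(u)
        for v, w in succ[u]:
            if g[u] + w < g[v]:           # g(v) decreases: v becomes inconsistent
                g[v] = g[u] + w
                heapq.heappush(open_heap, (key(v), v))
    return expanded

# Hypothetical toy graph: node -> list of (successor, edge weight).
succ = {"s": [("a", 1), ("b", 4)], "a": [("b", 1), ("t", 5)],
        "b": [("t", 1)], "t": []}
h = {"s": 3, "a": 2, "b": 1, "t": 0}      # consistent heuristic
g = {u: inf for u in succ}
g["s"] = 0
i = {u: inf for u in succ}

first = improve(succ, h, "t", g, i)       # search from scratch
second = improve(succ, h, "t", g, i)      # reuses g and i from the first call
```

In this toy run the first call expands s, a, and b, while the second call finds f(t) already no larger than the smallest key and returns immediately.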

6.2.4. Anytime Repairing A*

In many domains A* search with inflated heuristics (i.e., A* search with f-values f = g + ϵ · h for ϵ ≥ 1) can drastically reduce the number of nodes it has to examine before it produces a solution. While the path the search returns can be suboptimal, the search also provides a bound on the suboptimality, namely, the ϵ by which the heuristic is inflated. Thus, setting ϵ to 1 results in standard A* with an uninflated heuristic and the resulting path is guaranteed to be optimal. For ϵ > 1 the length of the found path is no larger than ϵ times the length of the optimal path, while the search can often be much faster than its version with uninflated heuristics.

To construct an anytime algorithm with suboptimality bounds, we could run a succession of these A* searches with decreasing inflation factors. This naive approach results in a series of solutions, each one with a suboptimality factor equal to the corresponding inflation factor. This approach has control over the suboptimality bound, but wastes a lot of computation since each search iteration duplicates most of the efforts of the previous searches. In the following we explain the ARA* (anytime repairing A*) algorithm, which is an efficient anytime heuristic search that also runs A* with inflated heuristics in succession but reuses search efforts from previous executions in such a way that the suboptimality bounds are still satisfied. As a result, a substantial speedup is achieved by not recomputing the node values that have been correctly computed in the previous iterations.

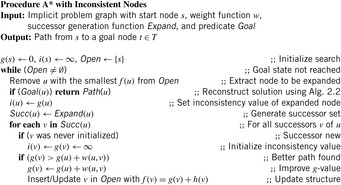

Anytime repairing A* works by executing A* multiple times starting with a large ϵ and decreasing ϵ prior to each execution until ϵ = 1. As a result, after each search a solution is guaranteed to be within a factor ϵ of optimal. ARA* uses the overconsistent A* subroutine to reuse the results of the previous searches and therefore can be drastically more efficient.

The only complication is that the heuristics inflated with ϵ may no longer be consistent. It turns out that the same function applies equally well when heuristics are inconsistent. Moreover, in general, when consistent heuristics are inflated the nodes in A* search may be reexpanded multiple times. However, if we restrict the expansions to no more than one per node, then A* search is still complete and possesses ϵ-suboptimality: the cost of the found solution is no worse than ϵ times the cost of an optimal solution.

The same holds true for the subroutine as well. We therefore restrict the expansions in the function (see Alg. 6.15) using the set Closed: Initially, Closed is empty; afterward, every node that is being expanded is added to it, and no node that is already in Closed is inserted into Open to be considered for expansion. Although this restricts the expansions to no more than one per node, Open may no longer contain all inconsistent nodes. In fact, Open contains only the inconsistent nodes that have not yet been expanded. We need, however, to keep track of all inconsistent nodes since they will be the starting points for the inconsistency propagation in the future search iterations. We do this by maintaining the set Incons of all the inconsistent nodes that are not in Open in Algorithm 6.15. Thus, the union of Incons and Open is exactly the set of all inconsistent nodes, and can be used to reset Open before each call to the subroutine.

The main function of ARA* (see Alg. 6.16) performs a series of search iterations. It does initialization, including setting ϵ to a large value ϵ0, and then repeatedly calls the subroutine with a series of decreasing values of ϵ. Before each call to the subroutine, however, a new Open list is constructed by moving to it the contents of the set Incons. Consequently, Open contains all inconsistent nodes before each call to the subroutine. Since the Open list has to be sorted by the current f-values of nodes, it is reordered. After each call to the function, ARA* publishes a solution that is suboptimal by at most a factor of ϵ. More generally, for any node u with an f-value smaller than or equal to the minimum f-value in Open, we have computed a solution path from s to u that is within a factor of ϵ of optimal.

Each execution of the subroutine terminates when f(t) is no larger than the minimum key in Open. This means that the extracted solution path is within a factor ϵ of optimal. Since before each iteration ϵ is decreased, ARA* gradually decreases the suboptimality bound and finds new solutions to satisfy the bound.
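The interplay of Open, Closed, and Incons across iterations can be sketched as follows. This is a simplified Python illustration, not the book's Algorithms 6.15 and 6.16: the name `ara_star`, the parameters `eps0` and `step`, and the toy graph are assumptions, and Open is kept as a plain set with a linear scan for the minimum so that reordering after a change of ϵ comes for free.

```python
from math import inf

def ara_star(succ, h, s, t, eps0=2.5, step=0.5):
    """Return a list of (eps, g(t)) pairs, one per search iteration."""
    g = {u: inf for u in succ}
    g[s] = 0
    open_set, incons = {s}, set()
    eps = eps0
    results = []
    while True:
        closed = set()                    # Closed is reset for every iteration

        def f(u):
            return g[u] + eps * h[u]      # inflated f-value for the current eps

        # Subroutine: expand each node at most once per iteration.
        while open_set:
            u = min(open_set, key=f)      # linear scan keeps the sketch short
            if f(t) <= f(u):              # f(t) <= min key in Open: stop
                break
            open_set.remove(u)
            closed.add(u)
            for v, w in succ[u]:
                if g[u] + w < g[v]:
                    g[v] = g[u] + w
                    # Expanded nodes wait in Incons until the next iteration.
                    (incons if v in closed else open_set).add(v)
        results.append((eps, g[t]))
        if eps <= 1:
            return results
        eps = max(1.0, eps - step)        # tighten the suboptimality bound
        open_set |= incons                # Open now holds all inconsistent nodes
        incons = set()

# Hypothetical toy graph; the optimal s-t path costs 4 (via b).
succ = {"s": [("a", 1), ("b", 3)], "a": [("t", 4)], "b": [("t", 1)], "t": []}
h = {"s": 2, "a": 1, "b": 1, "t": 0}
published = ara_star(succ, h, "s", "t")
```

On this graph the first two iterations publish the path of cost 5 through a, and the iteration with ϵ = 1.5 repairs it to the optimal cost 4 by expanding only b.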

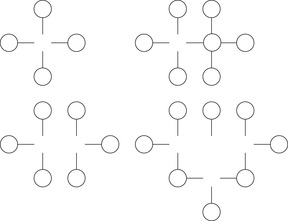

Figure 6.7 shows the operation of ARA* in a simple maze example. Nodes that are inconsistent at the end of an iteration are shown with an asterisk. Although the first call (ϵ = 2.5) is identical to the weighted A* call with the same ϵ, the second call (ϵ = 1.5) expands only one cell. This is in contrast to the large number of cells expanded by A* search with the same ϵ. For both searches the suboptimality factor, ϵ, decreases from 2.5 to 1.5. Finally, the third call with ϵ set to 1 expands only nine cells.

Figure 6.7

ARA* gains efficiency due to the following two properties. First, each search iteration is guaranteed not to expand nodes more than once. Second, it does not expand nodes whose i-values have already been correctly computed by some previous search iteration before the subroutine is called.

Each solution ARA* publishes comes with a suboptimality equal to ϵ. In addition, an often tighter suboptimality bound can be computed as the ratio between g(t), which gives an upper bound on the cost of an optimal solution, and the minimum unweighted f-value of an inconsistent node, which gives a lower bound on the cost of an optimal solution. This is a valid suboptimality bound as long as the ratio is larger than or equal to one. Otherwise, g(t) is already equal to the cost of an optimal solution. Thus, the actual suboptimality bound, ϵ′, for each solution ARA* publishes can be computed as the minimum between ϵ and this new bound:

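$$\epsilon' = \min\left(\epsilon,\ \frac{g(t)}{\min_{u \in \mathit{Open} \cup \mathit{Incons}} \bigl(g(u) + h(u)\bigr)}\right) \qquad (6.2)$$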
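As a small worked illustration of this bound, the following Python sketch computes ϵ′ from the g-values, the heuristic, and the set of inconsistent nodes. The function name `suboptimality_bound` and the sample values are assumptions; the clamp to 1 reflects the case in which the ratio drops below one and g(t) is already optimal, and g(t) is assumed to be finite.

```python
from math import inf

def suboptimality_bound(eps, g, h, t, inconsistent):
    """Minimum of eps and g(t) / (smallest unweighted f among inconsistent nodes)."""
    lower = min((g[u] + h[u] for u in inconsistent), default=inf)
    if lower <= 0:
        return eps                  # lower bound carries no information
    ratio = g[t] / lower
    if ratio < 1:
        return 1.0                  # g(t) is already the optimal cost
    return min(eps, ratio)

# Hypothetical values after a search with eps = 2.5 that found g(t) = 5.
g = {"b": 3, "t": 5}
h = {"b": 1, "t": 0}
bound = suboptimality_bound(2.5, g, h, "t", {"b", "t"})
no_inconsistent = suboptimality_bound(2.5, g, h, "t", set())
```

Here the smallest unweighted f-value is 4, so the published solution of cost 5 is within a factor of 1.25 of optimal, which is considerably tighter than ϵ = 2.5.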

The anytime behavior of ARA* strongly relies on the properties of the heuristics it uses. In particular, it relies on the assumption that a sufficiently large inflation factor ϵ substantially expedites the search process. Although in many domains this assumption is true, this is not guaranteed. In fact, it is possible to construct pathological examples where the best-first nature of searching with a large ϵ can result in much longer processing times.

In general, the key to obtaining anytime behavior in ARA* is finding heuristics for which the difference between the heuristic values and the true distances these heuristics estimate is a function with only shallow local minima. Note that this is not the same as just the magnitude of the differences between the heuristic values and the true distances. Instead, the difference will have shallow local minima if the heuristic function has a shape similar to the shape of the true distance function. For example, in the case of robot navigation a local minimum can be a U-shaped obstacle placed on the straight line connecting a robot to its goal (assuming the heuristic function is Euclidean distance). The size of the obstacle determines how many nodes weighted A*, and consequently ARA*, will have to visit before getting out of the minimum. The conclusion is that with ARA*, the task of developing an anytime planner for various hard search domains becomes the problem of designing a heuristic function that results in shallow local minima. In many cases (although certainly not always) the design of such a heuristic function can be a much simpler task than the task of designing a whole new anytime search algorithm for solving the problem at hand.

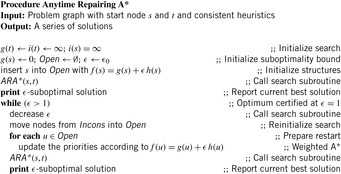

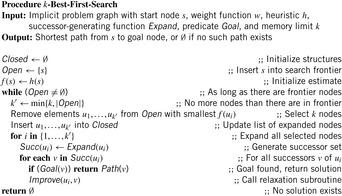

6.2.5. k-Best-First Search

A very different nonoptimal search strategy modifies the selection condition in the priority-queue data structure by considering larger sets of nodes without destroying its internal f-order. The algorithm k-best-first search is a generalization of best-first search in that each cycle expands the best k nodes from Open instead of the first best node only. Successors are not examined until the rest of the previous k best nodes are expanded. A pseudo-code implementation is provided in Algorithm 6.17.

In light of this algorithm, best-first search can be regarded as 1-best-first search, and breadth-first search as ∞-best-first search, since in each expansion cycle, all nodes in Open are expanded.

The rationale of the algorithm is that if the level of impreciseness in a nonadmissible heuristic function increases, k-best-first search avoids running in the wrong direction and temporarily abandoning overestimated, optimal solution paths. It has been shown to outperform best-first search in a number of domains.

On the other hand, it will not be advantageous in conjunction with admissible, monotonic heuristics, since in this case all nodes that have a cost less than the optimal solution must be expanded anyway. However, when suboptimal solutions are affordable, k-best-first search can be a simple yet sufficiently powerful choice. From this point of view, k-best-first search is a natural competitor not for A*, but for weighted A* with l > 0.5.
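The expansion cycle of k-best-first search can be sketched as follows. This is a hedged Python illustration rather than a copy of Algorithm 6.17: the name `k_best_first`, the choice to order Open by h alone, the goal test at generation time, and the toy domain (reach 10 from 1 using the moves n + 1 and 2n) are all assumptions.

```python
import heapq

def k_best_first(succ, h, start, is_goal, k):
    """Expand the k best Open nodes per cycle; return a path or None."""
    if is_goal(start):
        return [start]
    parent = {start: None}
    open_heap = [(h(start), start)]
    while open_heap:
        # Remove the k best nodes first; their successors join Open afterward.
        batch = [heapq.heappop(open_heap)
                 for _ in range(min(k, len(open_heap)))]
        for _, u in batch:
            for v in succ(u):
                if v in parent:           # already generated
                    continue
                parent[v] = u
                if is_goal(v):
                    path = [v]
                    while parent[path[-1]] is not None:
                        path.append(parent[path[-1]])
                    return path[::-1]
                heapq.heappush(open_heap, (h(v), v))
    return None

# Hypothetical toy domain.
def succ(n):
    return [n + 1, 2 * n]

def h(n):
    return abs(10 - n)

def is_goal(n):
    return n == 10

greedy = k_best_first(succ, h, 1, is_goal, k=1)        # 1-best-first search
layered = k_best_first(succ, h, 1, is_goal, k=10**9)   # expands all of Open per cycle
```

With k = 1 the search follows the heuristic through 8 and 9, while the very large k behaves like a breadth-first search and finds the shorter path through 5.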

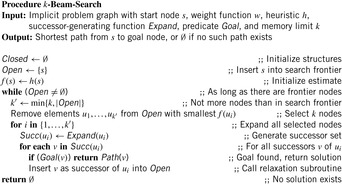

6.2.6. Beam Search

A variation of k-best-first search is k-beam search (see Algorithm 6.18). The former keeps all nodes in the Open list, and the latter discards all but the best k nodes before each expansion step. The parameter k is also known as the beam width and can scale close to the limits of main memory. Different from k-best-first search, beam search makes local decisions and does not move to another part of the search tree.

Restricted to blind breadth-first search exploration, only the most promising nodes at each level of the problem graph are selected for further branching with the other nodes pruned off permanently. This pruning rule is inadmissible; that is, it does not preserve the optimality of the search algorithm. The main motivation to sacrifice optimality and to restrict the beam width is the limit of main memory. By varying the beam width, it is possible to change the search behavior; with width 1 it corresponds to a greedy search behavior, with no limits on width to a complete search using A*. By bounding the width, the complexity of the search becomes linear in the depth of the search instead of exponential. More precisely, the time and memory complexity of beam search is O(kd), where d is the depth of the search tree. Iterative broadening (a.k.a. iterative weakening) performs a sequence of beam searches in which a weaker pruning rule is used in each iteration. This strategy is iterated until a solution of sufficient quality has been obtained, and is illustrated in Figure 6.8 (left).
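A layered beam search of this kind can be sketched as follows. The code is a minimal Python illustration under assumptions, not Algorithm 6.18: the name `beam_search`, the `max_depth` cutoff, the ranking by h alone, and the toy domain (reach 10 from 1 using the moves n + 1 and 2n) are hypothetical. Each beam entry stores its own path, so at most k paths of length at most d are kept, in line with the O(kd) memory bound.

```python
import heapq

def beam_search(succ, h, start, is_goal, k, max_depth=50):
    """Breadth-first search that keeps only the k best nodes per level."""
    if is_goal(start):
        return [start]
    beam = [(start,)]                         # each entry is a path (tuple of states)
    for _ in range(max_depth):
        candidates = {}                       # state -> first path found in this level
        for path in beam:
            for v in succ(path[-1]):
                if is_goal(v):
                    return list(path) + [v]
                candidates.setdefault(v, path + (v,))
        if not candidates:
            return None
        # Inadmissible pruning: everything outside the beam is discarded for good.
        beam = heapq.nsmallest(k, candidates.values(), key=lambda p: h(p[-1]))
    return None

# Hypothetical toy domain.
def succ(n):
    return [n + 1, 2 * n]

def h(n):
    return abs(10 - n)

def is_goal(n):
    return n == 10

narrow = beam_search(succ, h, 1, is_goal, k=1)   # greedy behavior
wide = beam_search(succ, h, 1, is_goal, k=3)
```

In this example width 1 prunes the state 5 and returns a path with five moves, whereas width 3 retains it and finds the four-move path, which illustrates how the beam width trades memory for solution quality.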

6.2.7. Partial A* and Partial IDA*

During the study of partial hash functions such as bit-state hashing, double bit-state hashing, and hash compact (see Ch. 3), we have seen that the sizes of the hash tables can be decreased considerably. This is paid for by giving up search optimality, since some states can no longer be disambiguated. As we have seen, partial hashing is a compromise on the space requirements of full state storage algorithms and can be cast as a nonadmissible simplification of traditional heuristic search algorithms. In the extreme case, partial search algorithms are not even complete, since they can miss an existing goal state due to wrong pruning. The probability can be reduced either by enlarging the number of bits in the remaining vector or by reinvoking the algorithm with different hash functions (see Fig. 6.8, right).

Partial A* applies bit-state hashing for A*'s Closed list. The hash table degenerates to a bit array without any collision strategy (we write Closed[i] to highlight the difference). Note that partial A* is applied without reopening, even if the estimate is not admissible, since the resulting algorithm cannot guarantee optimal solutions anyway. The effect of partial state storage is illustrated in Figure 6.9. If only parts of states are stored, more states fit into main memory.

Figure 6.9

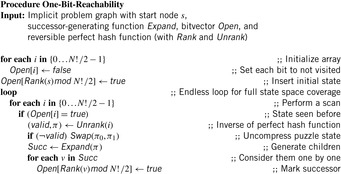

To analyze the consequences of applying nonreversible compression methods, we concentrate on bit-state hashing. Our focus on this technique is also motivated by the fact that bit-state hashing compresses states drastically down to one or a few bits emphasizing the advantages of depth-first search algorithms. Algorithm 6.19 depicts the A* search algorithm with (single) bit-state hashing.

Given M bits of memory, single bit-state hashing is able to store M states. This saves memory by a factor of Ω(lg|S|), since the space requirements for an explicit state are at least lg|S| bits. For large state spaces and less efficient state encodings the gains in state space coverage for bit-state hashing are considerable.
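A bit-array Closed list without collision handling can be sketched as follows. This Python code is an illustration under assumptions, not Algorithm 6.19: the names `BitStateClosed` and `partial_a_star`, the use of Python's built-in `hash`, and the chain-shaped toy graph are hypothetical. The search returns only the solution cost, since no predecessor links are stored.

```python
import heapq

class BitStateClosed:
    """Closed set as a bit array with one hash function and no collision handling."""

    def __init__(self, m_bits):
        self.m = m_bits
        self.bits = bytearray((m_bits + 7) // 8)

    def _index(self, state):
        return hash(state) % self.m           # assumption: built-in hash as hash function

    def add(self, state):
        idx = self._index(state)
        self.bits[idx >> 3] |= 1 << (idx & 7)

    def __contains__(self, state):
        idx = self._index(state)
        return bool(self.bits[idx >> 3] & (1 << (idx & 7)))

def partial_a_star(succ, h, start, goal, m_bits):
    """A* without reopening; returns the cost of the found path or None."""
    closed = BitStateClosed(m_bits)
    open_heap = [(h(start), 0, start)]        # entries are (f, g, state)
    while open_heap:
        _, g, u = heapq.heappop(open_heap)
        if u in closed:                       # true duplicate or hash collision
            continue
        closed.add(u)
        if u == goal:
            return g
        for v, w in succ(u):
            if v not in closed:
                heapq.heappush(open_heap, (g + w + h(v), g + w, v))
    return None

# Hypothetical chain 0 -> 1 -> ... -> 9 with unit edge costs.
def succ(n):
    return [(n + 1, 1)] if n < 9 else []

def h(n):
    return 9 - n

cost_large_table = partial_a_star(succ, h, 0, 9, m_bits=64)
cost_small_table = partial_a_star(succ, h, 0, 9, m_bits=4)
```

With 64 bits every state of the chain maps to its own bit and the goal is reached at cost 9. With only 4 bits, state 4 collides with state 0, is taken for a Closed node, and the search terminates without a solution, which shows how partial state storage can sacrifice completeness.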

Several obstacles limit these gains in practice. First of all, states in the search frontier can hardly be compressed. Second, it is often necessary to keep track of the path that leads to each state. An additional observation is that many heuristic functions and algorithms must access the length (or cost) of the optimal path through which the state was reached.

There are two solutions to these problems: either information is recomputed by traversing the path that leads to the state, or it is stored together with the state. The first so-called state reconstruction method increases time complexity, and the second one increases the memory requirements. Still, state reconstruction needs to store a predecessor link, which on a W-bit processor typically requires W bits.

It is not trivial to analyze the amount of information needed to store the set Open, especially considering that problem graphs are not regular. However, experimental results show that the search frontier frequently grows exponentially with the search depth, such that compressing the set of closed states does not help much. Hence, applying bit-state hashing for search algorithms such as BFS is not as effective as it is in DFS.

Nonadmissible bit-state hashing can also be used in combination with linear IDA* search. The implementation of Partial IDA* is shown in Algorithm 6.20. Bit-state hashing can be combined with transposition table updates propagating f-values or h-values back to the root, but as the pruning technique is incomplete and annotating any information at a partially stored state is memory intensive, it is simpler to initialize the hash table in each iteration.

Refreshing large bitvector tables is fast in practice, but for shallow searches with a small number of expanded nodes this scheme can be improved by invoking ordinary IDA* with transposition table updates for smaller thresholds and by applying bitvector exploration in large depths only.

6.3. Reduction of the Closed List

When searching tree-structured state spaces, the Closed list is usually much smaller than the Open list, since the number of generated nodes is exponentially growing with search depth. However, in some problem domains its size might actually dominate the overall memory requirements. For example, in the Gridworld problem, the Closed list is roughly described as an area of quadratic size, and the Open list of linear size. We will see that search algorithms can be modified such that, when running out of space during the search, much or all of the Closed list can be temporarily discarded and only later be partially reconstructed to obtain the solution path.

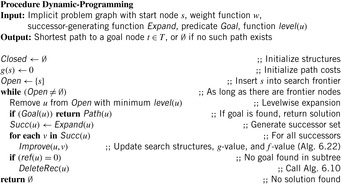

6.3.1. Dynamic Programming in Implicit Graphs

The main precondition of most dynamic programming algorithms is that the search graph has to be acyclic. This ensures a topological order ≼ on the nodes such that u ≼ v whenever u is an ancestor of v.

For example, this is the case for the rectangular lattice of the Multiple Sequence Alignment problem. Typically, the algorithm is described as explicitly filling out the cells of a fixed-size, preallocated matrix. However, we can equivalently transfer the representation to implicitly defined graphs in a straightforward way by modifying Dijkstra's algorithm to use the level of a node as the heap key instead of its g-value. This might save us space, in case we can prune the computation to only a part of the grid. A topological sorting can be partitioned into levels $\mathit{level}_i$ by forming disjoint, exhaustive, and contiguous subsequences of the node ordering. Alignments can be computed by proceeding in rows, columns, antidiagonals, and many more possible partitions.

When dynamic programming traverses a k-dimensional lattice in antidiagonals, the Open list consists of at most k levels (e.g., for k = 2, the parents to the left and top of a cell u at level ℓ are at level ℓ − 1, and the diagonal parent to the top-left at level ℓ − 2); thus, it is of order $O(kN^{k-1})$, one dimension smaller than the search space $O(N^k)$.

The only reason to store the Closed list is for tracing back the solution path once the target has been reached. A means to reduce the number of nodes that have to be stored for path reconstruction is to associate, as in Section 6.1.4, a reference count with each node that maintains the number of children on whose optimal path it lies. The pseudo code is shown in Algorithm 6.21 and the corresponding node relaxation step in Algorithm 6.22, where procedure DeleteRec is the same as in Algorithm 6.10.

In general, reference counting has been experimentally shown to be able to drastically reduce the size of the stored Closed list.

6.3.2. Divide-and-Conquer Solution Reconstruction

Hirschberg first noticed that when we are only interested in determining the cost of an optimal alignment, it is not necessary to store the whole matrix; instead, when proceeding by rows, for example, it suffices to keep track of only k of them at a time, deleting each row as soon as the next one is completed. This reduces the space requirement by one dimension, from $O(N^k)$ to $O(kN^{k-1})$, a considerable improvement for long sequences. Unfortunately, this method doesn't provide us with the actual solution path. To recover it after termination of the search, recomputation of the lost cell values is needed. The solution is to apply the algorithm twice to half the grid each, once in a forward direction and once in a backward direction, meeting at some intermediate relay layer. By adding the corresponding forward and backward distances, the cell lying on an optimal path can be recovered. This cell essentially splits the problem into two smaller subproblems, one starting at the top-left corner and the other at the bottom-right corner; they can be recursively solved using the same method. Since in two dimensions, solving a problem of half the dimension is roughly four times easier, the overall computation time is at most double that of storing the full Closed list; the overhead reduces even more in higher dimensions. Further refinements of Hirschberg's algorithm exploit additionally available memory to store more than one node on an optimal path, thereby reducing the number of recomputations.
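For two sequences, the divide-and-conquer scheme can be sketched as follows. This Python code is a minimal illustration, not the book's formulation: the function names, the cost model (match 0, mismatch 1, gap 2), and the sample strings are assumptions. `last_row` is the cost-only pass that keeps two rows, and `hirschberg` uses one forward and one backward pass to find the relay cell in the middle row before recursing on both halves.

```python
def last_row(a, b, sub, gap):
    """Cost-only DP with two rows: cost of aligning a with every prefix of b."""
    prev = [j * gap for j in range(len(b) + 1)]
    for i in range(1, len(a) + 1):
        cur = [i * gap]
        for j in range(1, len(b) + 1):
            cur.append(min(prev[j - 1] + sub(a[i - 1], b[j - 1]),  # (mis)match
                           prev[j] + gap,                          # gap in b
                           cur[j - 1] + gap))                      # gap in a
        prev = cur                     # the older row is dropped
    return prev

def hirschberg(a, b, sub, gap):
    """Return an optimal alignment as a list of pairs, '-' marking a gap."""
    if not a:
        return [("-", y) for y in b]
    if not b:
        return [(x, "-") for x in a]
    if len(a) == 1:
        j = min(range(len(b)), key=lambda idx: sub(a[0], b[idx]))
        if sub(a[0], b[j]) + (len(b) - 1) * gap <= (len(b) + 1) * gap:
            return ([("-", y) for y in b[:j]] + [(a[0], b[j])]
                    + [("-", y) for y in b[j + 1:]])
        return [(a[0], "-")] + [("-", y) for y in b]
    mid = len(a) // 2                  # relay layer: the middle row
    fwd = last_row(a[:mid], b, sub, gap)
    bwd = last_row(a[mid:][::-1], b[::-1], sub, gap)
    # The relay cell minimizes forward plus backward distance.
    split = min(range(len(b) + 1), key=lambda j: fwd[j] + bwd[len(b) - j])
    return (hirschberg(a[:mid], b[:split], sub, gap)
            + hirschberg(a[mid:], b[split:], sub, gap))

def alignment_cost(pairs, sub, gap):
    return sum(gap if "-" in (x, y) else sub(x, y) for x, y in pairs)

def sub(x, y):
    return 0 if x == y else 1          # assumed cost model

GAP = 2
A, B = "TGACTGC", "ACGAGAT"            # sample input
alignment = hirschberg(A, B, sub, GAP)
optimal_cost = last_row(A, B, sub, GAP)[-1]
```

The cost of the recovered alignment can be compared with `optimal_cost` from a single cost-only pass as a sanity check; at no point does the sketch hold more than two rows of the matrix per pass.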

6.3.3. Frontier Search

Frontier search is motivated by the attempt of generalizing the space reduction for the Closed list achieved by Hirschberg's algorithm to a general best-first search. It mainly applies to problem graphs that are undirected or acyclic but has been extended to more general graph classes. It is especially effective if the ratio of Closed to Open list sizes is large. Figure 6.10 illustrates a frontier search in an undirected Gridworld. All generated nodes as well as tags for the used incoming operators prevent reentering the set of expanded nodes, which initially consists of the start state.

Figure 6.10

In directed acyclic graphs a frontier search is even more apparent. Figure 6.11 schematically depicts a snapshot during a two-dimensional alignment problem, where all nodes with an f-value no larger than the current fmin have been expanded. Since the accuracy of the heuristic decreases with the distance to the goal, the typical onion-shaped distribution results, with the bulk being located closer to the start node, and tapering out toward higher levels.

However, in contrast to the Hirschberg algorithm, A* still stores all the explored nodes in the Closed list. As a remedy, we obtain two new algorithms.

Divide-and-conquer bidirectional search performs a bidirectional breadth-first search with Closed lists omitted. When the two search frontiers meet, an optimal path has been found, as well as a node on it in the intersection of the search frontiers. At this point, the algorithm is recursively called for the two subproblems: one from the start node to the middle node, and the other from the middle node to the target.

Divide-and-conquer forward frontier search searches only in a forward direction, without the Closed list. In the first phase, a goal t with optimal cost f* is searched. In the second phase the search is reinvoked with a relay layer at about f*/2. When a node on a relay layer is encountered, all its children store it as their parent. Subsequently, every node past the relay layer saves its respective ancestor on the relay layer that lies on its shortest path from the start node. When the search terminates, the stored node in the relay layer is an intermediate node roughly halfway on an optimal solution path. This intermediate state i from s to t is detected; in the last phase the algorithm is recursively called for the two subproblems from s to i, and from i to t. Figure 6.12 depicts the recursion step (left) and the problem in directed graphs of falling back behind the current search frontier (right), if the width of the search frontier is too small. For this case, several duplicates are generated.

Figure 6.12

Apart from keeping track of the solution path, A* uses the stored Closed list to prevent the search from leaking back, in the following sense. A consistent heuristic ensures that (as in the case of Dijkstra's algorithm) at the time a node is expanded, its g-value is optimal, and hence it is never expanded again. However, if we try to delete the Closed nodes, then there can be topologically smaller nodes in Open with a higher f-value; when those are expanded at a later stage, they can lead to the regeneration of the node at a nonoptimal g-value, since the first instantiation is no longer available for duplicate checking. In Figure 6.11, nodes that might be subject to spurious reexpansion are marked “X.” The problem of the search frontier “leaking back” into previously expanded Closed nodes is the main obstacle for Closed list reduction in a best-first search.

One suggested workaround is to save, with each state, a list of move operators describing forbidden moves leading to Closed nodes. However, this implies that the node representation cannot be constant, but grows exponentially with the problem dimension. Another way out is to insert all possible parents of an expanded node into Open specially marked as not yet reached. However, this inflates the Open list and is incompatible with many other pruning schemes.

6.3.4. *Sparse Memory Graph Search

The reduction of frontier search has inspired most of the upcoming algorithms. A promising attempt at memory reduction is sparse memory graph search (SMGS). It is based on a compressed representation of the Closed list that allows the removal of many, but not all, nodes. Compared to frontier search it describes an alternative scheme of dealing with back leaks.

Let Pred(v) denote the set of predecessors for node v; that is, Pred(v) = {u | (u, v) ∈ E}. The kernel K(Closed) of the set of visited nodes Closed is defined as the set of nodes for which all predecessors are already contained in Closed:

$$K(\mathit{Closed}) = \{v \in \mathit{Closed} \mid \forall u \in \mathit{Pred}(v)\colon u \in \mathit{Closed}\}$$

The rest of the Closed nodes are called the boundary B(Closed):

$$B(\mathit{Closed}) = \mathit{Closed} \setminus K(\mathit{Closed})$$

The Closed nodes form a volume in the search space enclosing the start node; nodes outside this volume cannot reach any node inside it without passing through the boundary. Thus, storing the boundary is sufficient to avoid back leaks.
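The split of Closed into kernel and boundary can be illustrated with a short Python sketch. The function name `kernel_and_boundary` and the 5 × 5 undirected grid, in which the predecessors of a cell are its four neighbors, are assumptions made for the example.

```python
def kernel_and_boundary(closed, pred):
    """Kernel: Closed nodes with all predecessors in Closed; boundary: the rest."""
    kernel = {v for v in closed if all(u in closed for u in pred(v))}
    return kernel, closed - kernel

N = 5  # hypothetical 5 x 5 undirected grid

def neighbors(v):
    x, y = v
    cand = [(x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)]
    return [(a, b) for a, b in cand if 0 <= a < N and 0 <= b < N]

# Closed: all cells within Manhattan distance 2 of the start cell (2, 2).
closed = {(x, y) for x in range(N) for y in range(N)
          if abs(x - 2) + abs(y - 2) <= 2}
kernel, boundary = kernel_and_boundary(closed, neighbors)

# Cells outside Closed that touch the kernel directly (there should be none).
leaks = [v for x in range(N) for y in range(N)
         for v in [(x, y)]
         if v not in closed and any(u in kernel for u in neighbors(v))]
```

In this example the kernel consists of the five cells within distance 1 of the start, and the eight cells at distance 2 form the boundary that shields the kernel from the rest of the grid.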

A sparse solution path is an ordered list $(v_0, \ldots, v_d)$ with d ≥ 1 and $v_i$ an ancestor of $v_{i+1}$ for all $i \in \{0, \ldots, d-1\}$; that is, it consists of a sequence of ancestor nodes on an optimal path where $v_i$ doesn't necessarily have to be a direct parent of $v_{i+1}$. All Closed nodes can be deleted except boundary nodes and relay nodes, that is, nodes that are used to reconstruct the corresponding solution path from the sparse representation. SMGS tries to make maximum use of available memory by lazily deleting nodes only if necessary because the algorithm's memory consumption approaches the computer's limit.

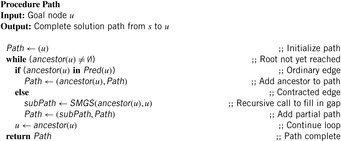

The algorithm SMGS assumes that the in-degree |Pred(v)| of each node v can be computed. Moreover, the heuristic h must be consistent, h(u) ≤ w(u, v) + h(v) for each edge (u, v), so that no reopening can take place. SMGS is very similar to the standard algorithm, except for the reconstruction of the solution path, which is shown in Algorithm 6.23. Starting from a goal node, we follow the ancestor pointers as usual. However, if we encounter a gap, the problem is dealt with by a recursive call of the search procedure. Two successive nodes on the sparse path are taken as start and goal node. Note that these decomposed problems are by far smaller and easier to solve than the original one.

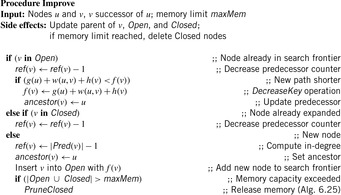

Algorithm 6.24 illustrates the edge relaxation step for SMGS. Each generated and stored node u keeps track of the number of unexpanded predecessors in a variable ref(u). It is initialized with the in-degree of the node minus one, accounting for its parent. During expansion the ref-value is appropriately decremented; kernel nodes can then be easily recognized by ref(u) = 0.

The pruning procedure (see Alg. 6.25) prunes nodes in two steps. Before deleting a kernel node, it updates the ancestor pointers of the node's boundary successors to the next higher boundary node. Further pruning of the resulting relay nodes is prevented by setting their ref-values to infinity.

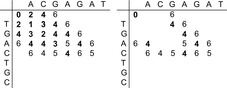

Figure 6.13 gives a small example for the algorithm in the context of the multiple sequence alignment problem. The input consists of the two strings TGACTGC and ACGAGAT, assuming that a match incurs no cost, a mismatch introduces cost 1, and a gap corresponds to cost 2.

Figure 6.13

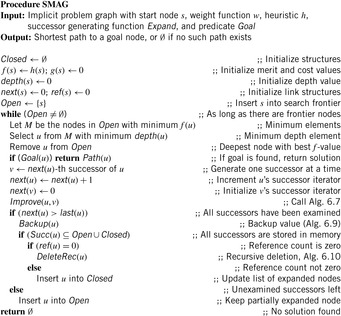

6.3.5. Breadth-First Heuristic Search

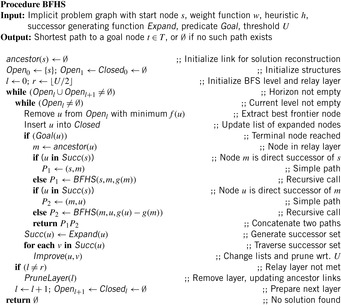

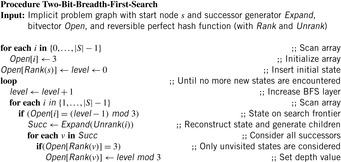

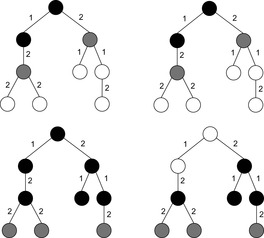

The term breadth-first heuristic search is short for the sparse-memory algorithm breadth-first branch-and-bound search with layered duplicate detection. It is based on the observation that the storage of nodes serves two purposes. First, duplicate detection allows recognition of states that are reached along a different path. Second, it allows reconstruction of the solution path after finding the goal using the links to the predecessors. IDA* can be seen as a method that gives up duplicate detection, and breadth-first heuristic search gives up solution reconstruction.

Breadth-first search divides the problem graph into layers of increasing depth. If the graph is unit cost, then all nodes in one layer have the same g-value. Moreover, as shown for frontier search at least for regular graphs, we can omit the Closed list and reconstruct the solution path based on an existing relay layer kept in main memory.

Subsequently, breadth-first heuristic search combines breadth-first search with an upper-bound pruning scheme (that allows the pruning of frontier nodes according to the combined cost function f = g + h) together with frontier search to eliminate already expanded nodes. The assumption is that the sizes of the search frontiers for breadth-first and best-first search differ and that using divide-and-conquer solution reconstruction is more memory efficient for breadth-first search.

Instead of maintaining used operator edges together with each problem graph node, the algorithm maintains a set of layers of parent nodes. In undirected graphs two parent layers are sufficient, as a successor of a node that is a duplicate has to appear either in the current layer or in the previous layer. More formally, assume that the levels $\mathit{Open}_0, \ldots, \mathit{Open}_{i-1}$ have already been computed correctly. We consider a successor v of a node $u \in \mathit{Open}_{i-1}$: The distance from s to v is at least i − 2 because otherwise the distance of u would be less than i − 1. Thus, $v \in \mathit{Open}_{i-2} \cup \mathit{Open}_{i-1} \cup \mathit{Open}_i$. Therefore, we can correctly subtract $\mathit{Open}_{i-1}$ and $\mathit{Open}_{i-2}$ from the set of all successors of $\mathit{Open}_{i-1}$ to build the duplicate-free search frontier $\mathit{Open}_i$ for the next layer.
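The layer subtraction argued above can be sketched in a few lines. The following is a minimal illustration, assuming an undirected, unit-cost graph given as a hypothetical adjacency dictionary `neighbors`; the function name `bfs_layers` is likewise an assumption made for this example. Only the current and the previous layer are held by the generator at any time.

```python
def bfs_layers(neighbors, start):
    """Yield the BFS layers of an undirected graph while storing only two layers."""
    previous, current = set(), {start}
    while current:
        yield current
        # All successors of the layer that has just been completed.
        successors = {v for u in current for v in neighbors[u]}
        # Duplicates can only occur in the current or the previous layer,
        # so subtracting these two sets yields the next duplicate-free layer.
        next_layer = successors - current - previous
        previous, current = current, next_layer
```

For a ring of six nodes searched from node 0, the generator yields the layers {0}, {1, 5}, {2, 4}, and {3}, and then stops because every successor of node 3 lies in the previous layer.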

Suppose that an upper bound U on the optimal solution cost f* is known. Then node expansion can immediately discard successor nodes whose f-values are larger than U. The pseudo-code implementation, which uses two backup BFS layers for storing states and assumes an undirected graph, is shown in Algorithm 6.26 and Algorithm 6.27. The lists Open and Closed are partitioned along the nodes' depth values. The relay layer r is initially set to $\lfloor U/2 \rfloor$. During the processing of layer l the elements are moved from $\mathit{Open}_l$ to $\mathit{Closed}_l$ and new elements are inserted into $\mathit{Open}_{l+1}$. After a level is completed, l increases. The Closed list for layer l and the Open list for layer l + 1 are initialized to the empty set. In case a solution is found, the algorithm is invoked recursively to enable divide-and-conquer solution reconstruction from s to m and from m to the established goal u, where m is the node in relay layer r that realizes minimum costs with regard to u. Node m is found using the link ancestor(u), which, in case a previous layer is deleted, is updated as follows. For all nodes u below the relay layer we set ancestor(u) to s. For all nodes above the relay layer we set ancestor(u) to ancestor(ancestor(u)) unless we encounter a node in the relay layer. (The implementation of DeleteLayer is left as an exercise.)

If all nodes were stored in the main memory, breadth-first heuristic search would usually traverse more nodes than A*. However, like sparse-memory graph search, the main impact of breadth-first heuristic search lies in its combination with divide-and-conquer solution reconstruction. We have already encountered the main obstacle for this technique in heuristic search algorithms, the problem of back leaks: to avoid node regeneration, a boundary between the frontier and the interior of the explicitly generated search graph has to be maintained, which dominates the algorithm's space requirements. The crucial observation is that this boundary can be expected to be much smaller for breadth-first search than for best-first search; an illustration is given in Figure 6.14. Essentially, a fixed number of layers suffices to isolate the earlier layers. In addition, the implementation is easier.

Figure 6.14

As noted, breadth-first heuristic search (BFHS) assumes an upper bound U on the optimal solution cost f* as an input. There are different strategies to find U. One option is to use approximate algorithms like hill-climbing or weighted A* search. Alternatively, we can use an iterative-deepening approach as in IDA*, starting with U ← h(s) and successively increasing the bound. Since the underlying search strategy is BFS, the algorithm has been called breadth-first iterative deepening.
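The interplay of upper-bound pruning and an iteratively increased bound can be sketched as follows. This is a simplified illustration, not Algorithm 6.26: it assumes an undirected, unit-cost graph and a consistent heuristic, returns only the solution cost (relay layers and solution reconstruction are omitted), and raises the bound by one per iteration. The names `bfhs_cost`, `breadth_first_iterative_deepening`, `neighbors`, and `max_bound` are hypothetical.

```python
def bfhs_cost(neighbors, h, start, goal, upper):
    """Breadth-first branch-and-bound; return the goal depth or None if it exceeds `upper`."""
    if h(start) > upper:
        return None
    previous, current, depth = set(), {start}, 0
    while current:
        if goal in current:
            return depth
        next_layer = set()
        for u in current:
            for v in neighbors[u]:
                # Layered duplicate detection with two stored layers.
                if v in current or v in previous:
                    continue
                # Upper-bound pruning on f = g + h.
                if depth + 1 + h(v) > upper:
                    continue
                next_layer.add(v)
        previous, current, depth = current, next_layer, depth + 1
    return None


def breadth_first_iterative_deepening(neighbors, h, start, goal, max_bound):
    """Start with U = h(s) and increase U until a solution is found."""
    bound = h(start)
    while bound <= max_bound:
        cost = bfhs_cost(neighbors, h, start, goal, bound)
        if cost is not None:
            return cost
        bound += 1  # unit-cost simplification; other increments are possible
    return None
```

With a bound that is too small, every node of the last layer is pruned, the next layer stays empty, and the search reports failure, which triggers the next iteration with a larger bound.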

Algorithm 6.27. Update of a problem graph edge in Algorithm 6.26.

6.3.6. Locality

The number of layers that suffices for full duplicate detection depends, in general, on a property of the search graph called locality.

Definition 6.2

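(Locality) For a weighted problem graph G the locality is defined as

$$\mathit{locality}_G = \max\{\delta(s, u) - \delta(s, v) + w(u, v) \mid v \in \mathit{Succ}(u)\},$$

where the maximum is taken over all nodes u and their successors v.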

For undirected and unweighted graphs we have w ≡ 1. Moreover, δ(s, u) and δ(s, v) differ by at most 1, so that the locality turns out to be 2. The locality determines the thickness of the search frontier needed to prevent duplicates in the search. Note that the layer that is currently expanded is included in the computation of the locality but the layer that is currently generated is not.
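For small explicit graphs the locality can be computed directly. The sketch below assumes that the locality is the maximum of δ(s, u) − δ(s, v) + w(u, v) over all edges (u, v) reachable from s, and it obtains the shortest path distances with Dijkstra's algorithm. The graph encoding `succ` (a dictionary mapping each node to a list of `(successor, weight)` pairs) and the function names are hypothetical.

```python
import heapq


def shortest_distances(succ, start):
    """Dijkstra's algorithm; returns delta(start, u) for every reachable node u."""
    dist = {start: 0}
    heap = [(0, start)]
    while heap:
        d, u = heapq.heappop(heap)
        if d > dist[u]:
            continue  # outdated queue entry
        for v, w in succ[u]:
            if v not in dist or d + w < dist[v]:
                dist[v] = d + w
                heapq.heappush(heap, (d + w, v))
    return dist


def locality(succ, start):
    """Maximum of delta(s, u) - delta(s, v) + w(u, v) over all reachable edges."""
    dist = shortest_distances(succ, start)
    return max(dist[u] - dist[v] + w for u in dist for v, w in succ[u])
```

For an undirected, unit-cost ring of six nodes the function returns 2, in line with the remark above. For an undirected triangle with edge weights 1, 1, and 5 it returns 7, caused by the expensive edge leading back to the start node.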

While the locality depends on the graph, the duplicate detection scope also depends on the search algorithm applied. We call a search graph a g-ordered best-first search graph if each node is maintained in a bucket matching its g-value. For breadth-first search, the search tree is generated with increasing path lengths, whereas for weighted graphs the search tree is generated with increasing path cost (this corresponds to Dijkstra's exploration strategy in a 1-Level Bucket data structure).

Theorem 6.2

(Locality Determines Boundary) The number of buckets of a g-ordered best-first search graph that need to be retained to prevent a duplicate search effort is equal to the locality of the search graph.

Proof

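Let us consider two nodes u and v, with v ∈ Succ(u). Assume that u has been expanded for the first time, generating the successor v, which has already appeared in the layers $0, \ldots, \delta(s, u) - \mathit{locality}_G$, implying $\delta(s, v) \le \delta(s, u) - \mathit{locality}_G$. We have

$$\mathit{locality}_G \ge \delta(s, u) - \delta(s, v) + w(u, v) \ge \delta(s, u) - (\delta(s, u) - \mathit{locality}_G) + w(u, v) = \mathit{locality}_G + w(u, v).$$

This is a contradiction to w(u, v) > 0.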

To determine the number of shortest path layers prior to the search, it is important to establish sufficient criteria for the locality of a search graph. However, the quantity $\delta(s, u) - \delta(s, v) + w(u, v)$ maximized over all nodes u and v ∈ Succ(u) is not a property that can be easily checked before the search. So the question is, can we find a sufficient condition or upper bound for it? The following theorem proves the existence of such a bound.

Theorem 6.3

(Upper Bound on Locality) The locality of a problem graph can be bounded by the minimal distance to get back from a successor node v to u, maximized over all u, plus w(u, v).

Proof

For any nodes s, u, v in a graph, the triangular property of shortest paths $\delta(s, u) \le \delta(s, v) + \delta(v, u)$ is satisfied, in particular for v ∈ Succ(u). Therefore, $\delta(v, u) \ge \delta(s, u) - \delta(s, v)$ and

$$\max\{\delta(v, u) \mid v \in \mathit{Succ}(u)\} \ge \max\{\delta(s, u) - \delta(s, v) \mid v \in \mathit{Succ}(u)\}.$$

In positively weighted graphs, we have $\delta(v, u) \ge 0$, such that $\max\{\delta(v, u) + w(u, v) \mid v \in \mathit{Succ}(u)\}$ is at least as large as the locality.

Theorem 6.4

(Upper Bounds in Weighted Graphs) For undirected graphs with maximum edge weight C we have $\mathit{locality}_G \le 2C$.

Proof

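For undirected graphs with maximum edge cost C we have

$$\mathit{locality}_G = \max\{\delta(s, u) - \delta(s, v) + w(u, v)\} \le \max\{\delta(v, u) + w(u, v)\} \le \max\{w(v, u) + w(u, v)\} \le 2C,$$

where each maximum is taken over all nodes u and v ∈ Succ(u). The second inequality holds because in an undirected graph every edge (u, v) can be traversed backward, so that $\delta(v, u) \le w(v, u) = w(u, v) \le C$.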

6.4. Reduction of the Open List

In this section we analyze different strategies that reduce the number of nodes in the search frontier. First we look at different traversal policies that can be combined with a branch-and-bound algorithm.

Even if the number of stored layers k is less than the locality of the graph, the number of times a node can be reopened in breadth-first heuristic search is only linear in the depth of the search. This contrasts with the exponential number of possible reopenings for linear-space depth-first search strategies.

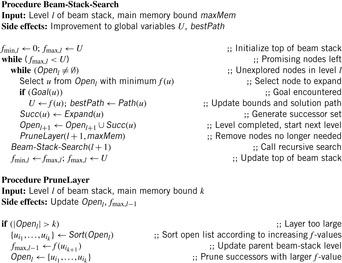

6.4.1. Beam-Stack Search

We have seen that beam search accelerates a search by maintaining in each layer not just one node, but a fixed number, maxMem, of nodes. No layer of the search graph is allowed to grow larger than the beam width, so that the least-promising nodes are pruned from a layer when the memory is full. Unfortunately, this inadmissible pruning scheme means that the algorithm is not guaranteed to terminate with an optimal solution.

Beam-stack search is a generalization of beam search that essentially turns it into an admissible algorithm. It first finds the same solution as beam search, but then continues to backtrack to pruned nodes to improve the solution over time. It can also be seen as a modification of branch-and-bound search, and includes depth-first branch-and-bound search and breadth-first branch-and-bound search as special cases. In the first case the beam width is 1, and in the second case, the beam width is greater than or equal to the size of the largest layer.

The first step toward beam-stack search is divide-and-conquer beam search. It limits the layer width of breadth-first heuristic search to the amount of memory available. For undirected search spaces, divide-and-conquer beam search stores three layers for duplicate detection and one relay layer for solution reconstruction. The difference from traditional beam search is that it uses divide-and-conquer solution reconstruction to reduce memory. Divide-and-conquer beam search has been shown to outperform, for example, weighted A* in planning problems. Unfortunately, as with beam search, it is neither complete nor optimal. An illustration of this strategy is provided in Figure 6.15. To the left we see the four layers (including the relay layer) currently stored by breadth-first heuristic search. To the right we see that divide-and-conquer beam search explores a smaller corridor, leading to fewer nodes to be stored in main memory.

Figure 6.15

The beam-stack search algorithm utilizes a specialized data structure called the beam stack, a generalization of an ordinary stack used in DFS. In addition to the nodes, it contains one record for each layer of the breadth-first search graph. To allow backtracking, the beam stack exploits the fact that the nodes can be sorted by their cost function f. We assume that the costs are unique, and that ties are broken by refining the cost function with some secondary comparison criterion. On the stack, for each layer a half-open interval $[f_{\min}, f_{\max})$ is stored, such that all nodes u with $f(u) < f_{\min}$ are pruned and all nodes u with $f(u) \ge f_{\max}$ are eliminated. All layers are initialized to [0, U) with U being the current upper bound.
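The role of the interval stored for each layer can be illustrated with a small sketch. It is not the complete beam-stack search algorithm, only the admission step for a single layer plus the interval shift on backtracking, under the assumptions that each record is a half-open interval [f_min, f_max), that f-values are unique, and that a layer that overflows the beam width lowers f_max to the smallest f-value among the pruned nodes. The names `admit_layer`, `next_interval`, `candidates`, and `width` are hypothetical.

```python
def admit_layer(candidates, interval, width):
    """Keep at most `width` nodes whose f-value lies in [f_min, f_max).

    `candidates` maps each generated node to its (unique) f-value.
    Returns the kept nodes and the possibly tightened interval.
    """
    f_min, f_max = interval
    inside = sorted((f, node) for node, f in candidates.items() if f_min <= f < f_max)
    kept = inside[:width]
    if len(inside) > width:
        # Remember where pruning started: the smallest f-value that did not fit.
        f_max = inside[width][0]
    return [node for _, node in kept], (f_min, f_max)


def next_interval(interval, upper):
    """On backtracking, shift the interval to the pruned range [f_max, U).

    Returns None if nothing was pruned in this layer, so that the record
    can be popped from the beam stack.
    """
    _, f_max = interval
    if f_max < upper:
        return (f_max, upper)
    return None
```

With three hypothetical successors of f-values 1, 2, and 3, a beam width of 2, and the initial interval [0, ∞), the two best nodes are kept and the interval becomes [0, 3). Once an upper bound of 8 is known, backtracking to this layer continues with the interval [3, 8), which admits exactly the node that was pruned before.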

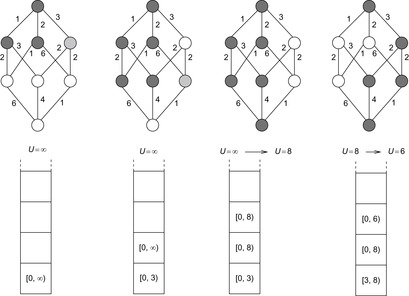

An illustration of beam-stack search is provided in Figure 6.16. The algorithm is invoked with a beam width of 2 and an initial upper bound of ∞. The problem graph is shown at the top of the figure. Nodes currently under consideration are shaded. Light shading indicates that a node cannot be stored because of the memory restriction. Below the graphs, the current value for U and the contents of the beam stack are provided. We have highlighted four iterations. The first iteration expands the start node and generates two of three successors for the next search depth. As all weights are integers, the next possible value 3 is stored as the upper bound for the first layer. When expanding the second layer, again one node does not fit within the beam width. In the next iteration we arrive at the goal on two possible paths, suggesting a minimal solution of 8. The value overwrites the initial upper bound and is propagated bottom-up such that 8 is the next upper bound to start with. As we have searched all paths corresponding to a solution value smaller than 3, the lower bound is set to 3. The last step shows the situation for searching the graph with the solution interval [3, 8]. It illustrates that beam-stack search eventually finds the optimal solution of value 6, which in turn is assigned to U and reported at the root node.

Figure 6.16