Chapter 13. Constraint Search

This chapter gives a general introduction to constraint satisfaction. It discusses traditional constraint satisfaction problems, temporal constraint networks, and critical path scheduling, and turns to different NP-hard optimization problems. It also discusses recent results on searching backbones and backdoors as well as path and soft constraints

Keywords: consistency, arc consistency, bounds consistency, path consistency, backtracking, backjumping, backmarking, Boolean satisfiability, number partition, bin packing, rectangle packing, vertex cover, independent set, clique, graph partition, temporal constraint network, simple temporal network, PERT scheduling, formula progression, automata encoding, soft constraints, preference constraints, path constraints.

Constraint technology has evolved into one of the most effective search options, which is understandable because its declarative formulation makes it easy to use. The technology is open and extensible, because it differentiates among branching, propagation, and search. Constraint search has been integrated (e.g., in the form of a library) to many existing programming languages and is effective in many practical applications, especially in time tabling, logistics, and scheduling domains.

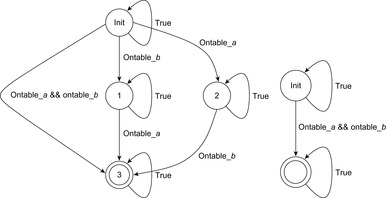

A search constraint is a restriction on the set of possible solutions to a search problem. For goal constraints (the standard setting in state space search), we specify goal states, and these incorporate constraints on the goal. In this case, constraints refer to the end of solution paths, denoting the restriction on the set of possible terminal states. For path constraints, constraints refer to the path as a whole. They are expressed in temporal logic, a common formalism for the specification of desired properties of software systems. Examples are conditions that have to be satisfied always, or achieved at least sometimes during the execution of a solution path.

In constraint modeling we have to decide about variables, their domains, and constraints. There are many different options to encode the same problem, and a good encoding may be essential for an efficient solution process.

Constraints can be of very different kinds; special cases are binary and Boolean constraints. The former ones include at most two constraint variables in each constraint to feature efficient propagation rules, and the latter ones refer to exactly two possible assignments (true and false) to the constraint variables and are known as satisfiability problems.

We further distinguish between hard constraints that have to be satisfied, and soft constraints, the satisfaction of which is preferred but not mandatory. The computational challenge with soft constraints is that they can be contradicting. In such cases, we say that the problem is oversubscribed. We consider soft constraints to be evaluated in a linear objective function with coefficients measuring their desirability.

Constraints express incomplete information such as properties and relations over unknown state variables. Search is needed to restrict the set of possible value assignments. The search process that assigns values to variables is called labeling. Any assignment corresponds to imposing a constraint on the chosen variable. Alternatively, general branching rules that introduce additional constraints may be used to split the search space.

The process to tighten and extend the constraint set is called constraint propagation. In constraint search, labeling and constraint propagation are interleaved. As the most important propagation techniques we exploit arc consistency and path consistency. Specialized consistency rules further enhance the propagation effectiveness. As an example, we explain insights to the inference based on the all-different constraint that requires all variable assignments to be mutually different.

Search heuristics determine an order on how to traverse the search tree. They can be used either to enhance pruning or to improve success rates, for example, by selecting the more promising nodes for a feasible solution. Different from the observation in previous chapters, for constraint search, diverse search paths turn out to be essential. Consequently, as one heuristic search option, we control the search by the number of discrepancies to the standard successor generation module.

Most of the text is devoted to strategies for solving constraint satisfaction problems, asking for satisfying assignments to a set of finite-domain variables. We also address the more general setting of solving constraint optimization problems, which asks for an optimal value assignment with respect to an additionally given objective function. For example, problems with soft constraints are modeled as a constraint optimization problem by introducing additional state variables that fine the violation of preference constraints. We will see how search heuristics in the form of lower bounds can be included into the constraint optimization, and how more general search heuristics apply.

In later parts of the chapter, we subject the search to solving some well-known NP-hard problems with specialized constraint solvers. We will consider instances of SAT, Number Partition, Bin Packing, and Rectangle Packing, as well as graph problems like Graph Partition and Vertex Cover. We present heuristic estimates and further search refinements to enhance the search for (optimal) solutions.

Temporal constraints are ones that restrict the set of possible time points; for example, to wake up between 7 a.m and 8 a.m. For this case variable domains are infinite. We introduce two algorithmic approaches that can deal with temporal constraints.

13.1. Constraint Satisfaction

Constraint satisfaction is a technology for modeling and solving combinatorial problems. Its main parts are domain filtering and local consistency rules together with refined search techniques to traverse the resulting state space. Constraint satisfaction relies on a declarative problem description that consists of a set of variables together with their respective domains. Each domain itself is composed of a set of possible values. Constraints restrict the set of possible combinations of the variables.

Constraints are often expressed in the form of arithmetic (in)equalities over a set of unknown variables; for example, the unary integer constraints X ≥ 0 and X ≤ 9 denote that the value X consists of one digit. Combining a set of constraints can exploit information and yield a set of new constraints. Arithmetic linear constraints, such as X + Y = 7 and X − Y = 5, can be simplified to the constraints: X = 6 and Y = 1. In constraint solving practice, elementary calculus is often not sufficient to determine the set of feasible solutions. In fact, most constraint satisfaction domains we consider are NP-hard.

Definition 13.1

(CSP, Constraint, Solution) A constraint satisfaction problem (CSP) consists of a finite set of variables  of finite domains

of finite domains  , and a finite set of constraints, where a constraint is a(n arbitrary) relation over the set of variables. Constraints can be extensional in the form of a set of compatible tuples or intentional in the form of a formula. A solution to a CSP is a complete assignment of values to variables satisfying all the constraints.

, and a finite set of constraints, where a constraint is a(n arbitrary) relation over the set of variables. Constraints can be extensional in the form of a set of compatible tuples or intentional in the form of a formula. A solution to a CSP is a complete assignment of values to variables satisfying all the constraints.

For the sake of simplicity this definition rules out continuous variables. Examples for extending this class are considered later in this chapter in the form of temporal constraints.

A binary constraint is a constraint involving only two variables. A binary CSP is a CSP with only binary constraints. Unary constraints can be compiled into binary ones, for example, by adding constraint variables with only assignment 0.

Any CSP is convertible to a binary CSP via its dual encoding, where the roles of variables and constraints are exchanged. The constraint variables are encapsulated, meaning one has assigned a domain that is a Cartesian product of the domains of individual variables. The valuation of original variables can to be extracted from the valuation of encapsulated variables. As an example, take the original (non-binary) CSP: X + Y = Z, X < Y, with  ,

,  , and

, and  . The equivalent binary CSP consists of the two encapsulated variables

. The equivalent binary CSP consists of the two encapsulated variables  and

and  together with their domains

together with their domains  and

and  . The binary constraints between V and W require that the components that refer to the same original variables match; for example, the first component in V (namely X) is equal to the first component in W and the second component in V(namely Y) is equal to the second component in W.

. The binary constraints between V and W require that the components that refer to the same original variables match; for example, the first component in V (namely X) is equal to the first component in W and the second component in V(namely Y) is equal to the second component in W.

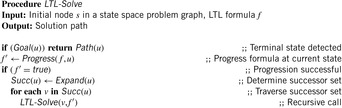

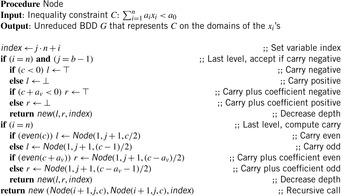

One example is the Eight Queens problem (see Fig. 13.1). The task is to place eight queens on a chess board, but with at most one queen in the same row, column, or diagonal. If variable Vi denotes the column of the queen in row i,  , we have that

, we have that  . An assignment to one variable will restrict the set of possible assignments to other ones. The constraints that induce no conflict are Vi ≠ Vj (vertical threat) and

. An assignment to one variable will restrict the set of possible assignments to other ones. The constraints that induce no conflict are Vi ≠ Vj (vertical threat) and  (diagonal threat) for all 1 ≤ i ≠ j ≤ 8. (Horizontal threats are already taken care of in the constraint model.)

(diagonal threat) for all 1 ≤ i ≠ j ≤ 8. (Horizontal threats are already taken care of in the constraint model.)

Such a problem formulation calls for an efficient search algorithm to find a feasible variable assignment representing valid placements of the queens on the board. A naive strategy considers all 88 possible assignments, which can easily be reduced to 8!. A refined approach maintains a vector for a partial assignment in a vector, which grows with increasing depth and shrinks with each backtrack. To limit the branching during the search, we additionally maintain a global data structure to mark all places that are in conflict with the current assignment.

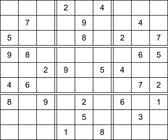

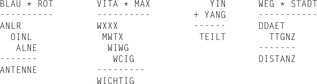

A Sudoku (see Fig. 13.2) is a puzzle with rising popularity in the United States, but with a longer tradition in Asia and Europe. The rules are simple: fill all empty squares with numbers from  such that in each column, in each row, and in each 3 × 3 block, all numbers from 1 to 9 are selected exactly once. If variable Vi,j with

such that in each column, in each row, and in each 3 × 3 block, all numbers from 1 to 9 are selected exactly once. If variable Vi,j with  denotes the number assigned to cell (i, j), where

denotes the number assigned to cell (i, j), where  , as constraints we have

, as constraints we have

•  for all

for all  ,

,  (vertical constraints)

(vertical constraints)

•  for all

for all  ,

,  (horizontal constraints)

(horizontal constraints)

•  for all

for all  ,

,  and

and  (subsquare constraints)

(subsquare constraints)

|

| Figure 13.2 |

As another typical CSP example, we consider a Cryptarithm (a.k.a crypto-arithmetic puzzle or alphametic), in which we have to assign numbers to each individual variable, so that the equation SEND + MORE = MONEY becomes true. The set of variables is {S, E, N, D, M, O, R, Y}. Each variable is an integer from 0 to 9, and leading characters and S and M cannot be assigned 0. The problem is to assign pairwise different values to the variables. It is not difficult to check that the (unique) solution to the problem is given by the assignments [S, E, N, D, M, O, R, Y] = [9, 5, 6, 7, 1, 0, 8, 2] (vector notation).

Since we only consider problems with decimal digits, there are at most 10! different assignments of the digits to variables. So a Cryptarithm is a finite-state space problem, but generalizations to bases other than decimals are provably hard (NP-complete).

Another famous CSP problem is the Lonely Eight problem. The task is to determine all wild cards in the division that is shown in Figure 13.3. For a human using basic calculus and exclusions it is not difficult to obtain the only solution  . However, since no specialized constraints can be used, CSP solvers are often confronted with considerable work.

. However, since no specialized constraints can be used, CSP solvers are often confronted with considerable work.

13.2. Consistency

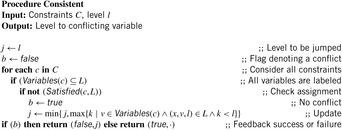

Consistency is an inference mechanism to rule out certain variable assignments, which in turn enhances the search. The simplest consistency check tests a current assignment against the set of constraints. Such a simple consistency algorithm for a set of assigned variables and a set of constraints is provided in Algorithm 13.1. We use Variables(c) to denote the set of variables that are mentioned in the constraint c, and Satisfied(c, L) to denote if the constraint c is satisfied by the current label set L(assignment of values to variables).

In the following we introduce more powerful inference methods like arc consistency and path consistency, and discuss specialized consistency techniques like the all-different constraint.

13.2.1. Arc Consistency

Arc consistency is one of the most powerful propagation techniques for binary constraints. For every value of a variable in the constraint we search for a supporting value to be assigned to the other variable. If there is none, the value can safely be eliminated. Otherwise, the constraint is arc consistent.

Definition 13.2

(Arc Consistency) The pair (X, Y) of constraint variables is arc consistent if for each value  there exists a value

there exists a value  such that the assignments X = x and Y = y satisfy all binary constraints between X and Y. A CSP is arc consistent if all variable pairs are arc consistent.

such that the assignments X = x and Y = y satisfy all binary constraints between X and Y. A CSP is arc consistent if all variable pairs are arc consistent.

Consider a simple CSP with the variables A and B subject to their respective domains  and

and  , as well as the binary constraint A < B. We see that value 1 can be safely removed from DB based on constraint and the restriction we have on A.

, as well as the binary constraint A < B. We see that value 1 can be safely removed from DB based on constraint and the restriction we have on A.

In general, constraints are used actively to remove inconsistencies from the problem. An inconsistency arises if we arrive at a value that cannot be contained in any solution. To abstract from different inference mechanisms we assume a procedure Revise, to be attached to each constraint, which governs the propagation of the domain restrictions.

AC-3 and AC-8

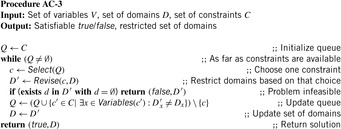

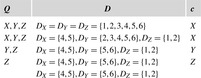

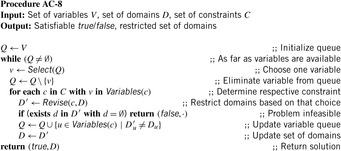

Algorithm AC-3 is one option to organize and perform constraint reasoning for arc consistency. The input of the algorithm are the set of variables V, the set of domains D, and the set of constraints C.

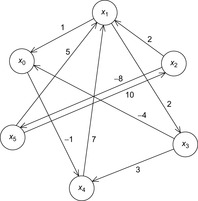

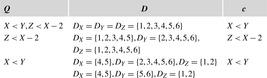

In the algorithm, a queue of constraints is frequently revised. Each time, when the domain of a variable changes, all constraints over this variable are enqueued. The pseudo code of the approach is shown in Algorithm 13.2. As a simple example, take the following CSP with the three variables  , subject to the binary constraints X < Y and Z < X − 2. As X < Y, we have

, subject to the binary constraints X < Y and Z < X − 2. As X < Y, we have  ,

,  , and

, and  . Since Z < X − 2, we infer that

. Since Z < X − 2, we infer that  ,

,  , and

, and  . Now we take constraint X < Y again to find the arc consistent set

. Now we take constraint X < Y again to find the arc consistent set  ,

,  , and

, and  . Snapshots of the algorithms after selecting variable c are provided in Figure 13.4.

. Snapshots of the algorithms after selecting variable c are provided in Figure 13.4.

|

| Figure 13.4 |

An alternative to AC-3 is to use a queue of variables instead of a queue of constraints. The modified algorithm is referred to as AC-8. It assumes that a user will specify, for each constraint, when constraint revision should be executed. The pseudo code of the approach is shown in Algorithm 13.3 and a step-by-step example is given in Figure 13.5.

13.2.2. Bounds Consistency

Arc consistency works well in binary CSPs. However, if we are confronted with constraints that involve more than two variables (e.g., X = Y + Z), the application of arc consistency is limited. Unfortunately, hyperarc consistency techniques are involved, and similar to the complexity of Set Covering and Graph Coloring-NP-hard for n ≥ 3. The problem is that we must determine which values of the variables are legitimate and which is a nontrivial problem.

The trick is to use an approximation of the set of possible assignments in the form of an interval. A domain range  denotes the set of integers

denotes the set of integers  with minD = a and maxD = b.

with minD = a and maxD = b.

For bounds consistency we only look at arithmetic CSPs that range over finite-domain variables for which all constraints are arithmetic expressions. A primitive constraint is bounds consistent if for each variable X that is in the constraint there is an assignment for all other variables (in their domain range) that is compatible with setting X to minD and X to maxD. An arithmetic CSP is bounds consistent if each primitive constraint is bounds consistent.

Consider the constraint X = Y + Z and rewrite it as X = Y + Z, Y = X − Z, and Z = X − Y. Reasoning about minimum and maximum values on the right side, we establish the following six necessary conditions:  ,

,  ,

,  ,

,  ,

,  , and

, and  . For example, the domains

. For example, the domains  ,

,  , and

, and  are refined to

are refined to  ,

,  , and

, and  without missing any solution.

without missing any solution.

13.2.3. *Path Consistency

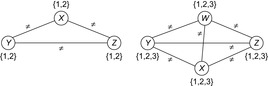

The good news about arc consistency is that it is fast in practice. The bad news is that arc consistency does not detect all inconsistencies. As a simple example consider the CSP shown in Figure 13.6 (left) with the three variables X, Y, Z with  subject to X ≠ Y, Y ≠ Z, and X ≠ Z. The CSP is arc consistent but not solvable. Therefore, we introduce a stronger form of consistency.

subject to X ≠ Y, Y ≠ Z, and X ≠ Z. The CSP is arc consistent but not solvable. Therefore, we introduce a stronger form of consistency.

|

| Figure 13.6 |

Definition 13.3

(Path Consistency) A path  is path consistent if, for all x in the domain of V0, and for all y in the domain of Vm satisfying all binary constraints on V0 and Vm, there exists an assignment to

is path consistent if, for all x in the domain of V0, and for all y in the domain of Vm satisfying all binary constraints on V0 and Vm, there exists an assignment to  , s.t. all binary constraints between Vi and Vi+1 for

, s.t. all binary constraints between Vi and Vi+1 for  are satisfied.

are satisfied.

A CSP is path consistent, if every path is consistent.

This definition is long but not difficult to decipher. On top of binary constraints between two variables, path consistency certifies binary consistency between the variables on a path. It is not difficult to see that path consistency implies arc consistency. An example to show that path consistency is still incomplete is provided in Figure 13.6 (right).

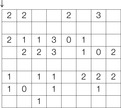

For restricting the computational efforts, it is sufficient to explore paths of length two only (see Exercises). To come up with a path consistency algorithm, we consider the following example, consisting of the three variables A, B, C with DA = DB = DC = {1, 2, 3}, subject to B > 1, A < C, A = B, and B > C − 2. Each constraint can be expressed in a (Boolean) matrix, denoting whether a variable combination is possible or not: Let Ri,j be the matrix entry for the constraint between variable i and j; Rk,k models the domain of k. Then consistency on the path (i, k, j) can be recursively determined by applying the equation

Let Ri,j be the matrix entry for the constraint between variable i and j; Rk,k models the domain of k. Then consistency on the path (i, k, j) can be recursively determined by applying the equation The concatenations correspond to Boolean matrix multiplications, such as the scalar product of the rows and columns in the matrix. The final conjunction is element-by-element.

The concatenations correspond to Boolean matrix multiplications, such as the scalar product of the rows and columns in the matrix. The final conjunction is element-by-element.

For the example  , we establish the following equation:

, we establish the following equation: We observe that path consistency restricts the set of possible instantiations in the constraint.

We observe that path consistency restricts the set of possible instantiations in the constraint.

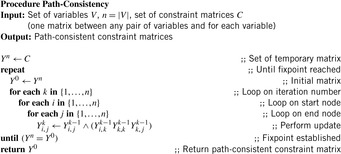

For a path-consistent CSP, we have to repeat the earlier revisions of paths. The pseudo code is shown in Algorithm 13.4. It is a straightforward extension to the All-Pairs Shortest Paths algorithm of Floyd and Warshall (see Ch. 2). Mathematically spoken, it is the same algorithm applied to a different semiring, where minimization is substituted by conjunction and addition is substituted by multiplication.

13.2.4. Specialized Consistency

As path consistency is comparably slow with respect to arc consistency, it is not always the best solution for overall CSP solving. In some cases specialized constraints (together with effective propagation rules) are often more effective.

One important specialized constraint is the all-different constraint (AD) that we already came across in the Sudoku and Cryptarithm domains at the beginning of this chapter.

Definition 13.4

(All-Different Constraint) The all-different constraint covers a set of binary inequality constraints among all variables X1 ≠ X2, X1 ≠ X3, …, Xk−1 ≠ Xk:

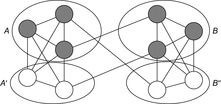

Propagating the all-different constraint, we achieve strong pruning. Its efficient implementation is based on matching bipartite graphs, where the set of nodes V is partitioned into two disjoint sets V′ and V′′: for each edge its source and target nodes are contained in a different set, and a matching is a node-disjoint selection of edges.

The assignment graph for the all-different constraint consists of two sets. On one hand, we have the variables, and, on the other hand, we have the values that are in the domain of at least one of the variables. Any assignment to the variables that satisfies the all-different constraint is a maximum matching. The running time for solving bipartite matching problems in graph G = (V, E) is  (using maximum flow algorithms). An example for the propagation of the all-different constraint is provided in Figure 13.7.

(using maximum flow algorithms). An example for the propagation of the all-different constraint is provided in Figure 13.7.

13.3. Search Strategies

As with path consistency, even strong propagation techniques are often incomplete. Search is needed for resolving the set of remaining uncertainties for the current variable assignments.

The most important way to span a search tree is labeling, which assigns different values to variables. In a more general view, the search for solving constraint satisfaction problems resolves disjunctions. For example, an assignment not only assigns variables on one branch but also denotes that this value is no longer available on other branches of the search tree.

This observation leads to very different branching rules to generate a CSP search tree. They define the shape of the search tree. As an example, we can produce a binary search tree, by setting  for one particular value x and branch on only these two constraints. Another important branching rule is domain splitting, which also generates a binary search tree. An example is to split the search tree according to the disjunction

for one particular value x and branch on only these two constraints. Another important branching rule is domain splitting, which also generates a binary search tree. An example is to split the search tree according to the disjunction  . The next option is disjunctions on the variable ordering, such as

. The next option is disjunctions on the variable ordering, such as  .

.

Walking along this line, we see that each search tree node can be viewed as a set of constraints, indicating which knowledge on the set of variables is currently known for solving the problem. For example, assigning a value x to a variable X for generating a current search tree node u adds the constraint X = x to the constraint set of the predecessor node parent(u).

In the following we concentrate on labeling and on the selection of the variable to be labeled next. One often applied search heuristic is first-fail. It prefers the variable, the instantiation of which will lead to a failure with the highest probability. The intuition behind the strategy is to work on the simpler problems first. A consequent rule for this policy is to test variables with the smallest domain first. Alternatively, we may select most constrained variables first.

For value selection, the succeed-first principle has shown good performance. It prefers the values that might belong to the solution with the highest probability. Value selection criteria define the order of branches to be explored and are often problem-dependent.

13.3.1. Backtracking

The labeling process is combined with consistency techniques that prune the search space. For each search tree node we propagate constraints to make the problem locally consistent, which in turn reduces the options for labeling. The labeling procedure will backtrack upon failure, and continue with a search tree node that has not yet resolved completely.

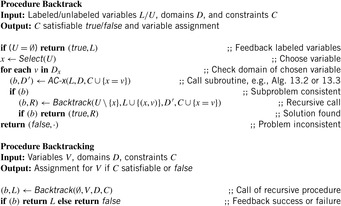

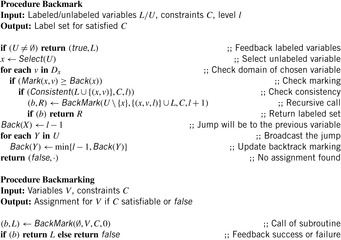

The pseudo code for such a backtracking approach is shown in Algorithm 13.5. In the initial call of the recursive subprocedure Backtrack, the variable assignment set V is divided into the set of already labeled variables L and the set of yet unlabeled variables U. If all variables are successfully assigned the problem is solved and the assignment can be returned. As with the consistency algorithm an additional flag is attached to the return value to distinguish between success and failure.

There is a trade-off between the time spent for search and the time spent for propagation. The consistency call matches the parameters of the arc consistency algorithms AC-3 and AC-8. It is not difficult to include more powerful consistency methods like path consistency. On the other hand, more aggressive consistency mechanisms only check if the current assignment leads to no contradiction with the current set of constraints. Such a pure backtracking algorithm is shown in Algorithm 13.6. Other consistency techniques remove incompatible values only from connected but currently unlabeled variables. This technique is called forward checking. Forward checking is cheap. It does not increase the time complexity of pure backtracking, as the checks are only drawn earlier in the search process.

13.3.2. Backjumping

One weakness of the backtrack procedure is that it throws away the reason for the conflict. Suppose that we are given constraint variables A, B, C, D with  and a constraint A > D. Backtracking starts with labeling A = 1, and then tries all the assignments for B and C before finding that A has to be larger than 1. A better option is to jump back to A at the first time D is labeled, since this is the source of the conflict.

and a constraint A > D. Backtracking starts with labeling A = 1, and then tries all the assignments for B and C before finding that A has to be larger than 1. A better option is to jump back to A at the first time D is labeled, since this is the source of the conflict.

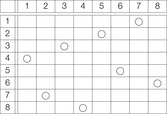

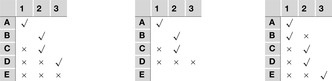

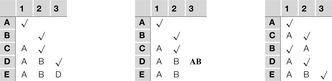

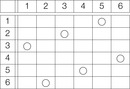

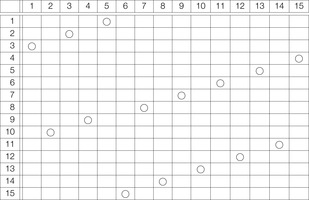

We explain the working of the backjumping algorithm for the example with variables A, B, C, D, E, all of domain {1, 2, 3}, and constraints A ≠ C, A ≠ D, A ≠ E, B ≠ D, E ≠ B, and E ≠ D. The according constraint graph is shown in Figure 13.8. Some snapshots of the backjumping algorithm for this example are provided in Figure 13.9, where the variables are plotted against their possible value assignments following the order of labeling.

|

| Figure 13.9 Conflict matrix evolution for backjumping in the example of Figure 13.8; matrix entries denote impossible assignment, and check denotes current assignment. Value assignment until first backtrack at E (left). After backtracking from E to the previous level, there is no possible variable assignment (middle). Backjumping to variable B, since this is the source of the conflict, eventually finds a satisfying assignment (right). |

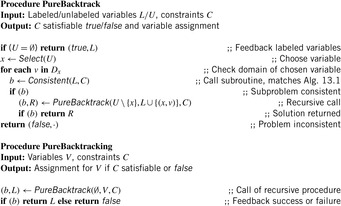

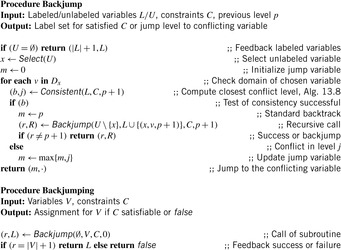

The pseudo code for the backjumping procedure is shown in Algorithm 13.7. The additional parameter for the algorithm is the previous level from which the procedure is invoked. The return value includes the jump level instead of simply denoting success or failure. The values  are the otherwise impossible jump values chosen for success. The implementation is tricky. It invokes a variant of the (simple) consistency check, for which the closest conflicting level is computed, in addition to the test of satisfaction of the constraints. The pseudo code is shown in Algorithm 13.8. Its parameters are the currently label set L, the constraint set C, and the backjump level l. The implementation of Algorithm 13.8 is not much different from Algorithm 13.1 in the sense that it returns a Boolean value, denoting if the consistency check was successful and the level j on which the conflict has been detected. The update of j takes the current label set L into account, which now consists of triples with the third component being the level to which a variable is assigned. This value j then determines the return value in Algorithm 13.7 and the level m for the next backjump.

are the otherwise impossible jump values chosen for success. The implementation is tricky. It invokes a variant of the (simple) consistency check, for which the closest conflicting level is computed, in addition to the test of satisfaction of the constraints. The pseudo code is shown in Algorithm 13.8. Its parameters are the currently label set L, the constraint set C, and the backjump level l. The implementation of Algorithm 13.8 is not much different from Algorithm 13.1 in the sense that it returns a Boolean value, denoting if the consistency check was successful and the level j on which the conflict has been detected. The update of j takes the current label set L into account, which now consists of triples with the third component being the level to which a variable is assigned. This value j then determines the return value in Algorithm 13.7 and the level m for the next backjump.

The reassignment of variable C that is in between B and D is actually not needed. This brings us to the next search strategy.

13.3.3. Dynamic Backtracking

A refinement to backjumping is dynamic backtracking. It handles the problem of losing the in-between assignments when jumping back. Dynamic backtracking remembers the source of the conflict, monitors the source of the conflict, and changes the order of variables.

Consider again the example of Figure 13.8. The iterations shown in Figure 13.10 illustrate how the sources of conflicts are maintained together with the assignments to the variables and how this information eventually allows the change of variable ordering. if we assign A to 1 and B to 2 we need no conflict information. When assigning C to 2 we store variable A at C as the source of a conflict of choosing 1. Setting D to 3 leads to the memorization of conflict with A(value 1) and B(value 2). Now E has no further assignment (left) so that we jump back to D but carry the conflict source AB from variable E to D(middle). This leads to another jump from D to C and a change of order of the variable B and C(right). A final assignment is A to 1, C to 2 (with conflict source A), B to 1 (with conflict source A), D to 2 (with conflict source A), and E to 3 (with conflict sources A and B). In difference to backjumping, vertex C is not reassigned.

|

| Figure 13.10 Conflict matrix evolution for dynamic backtracking in the example of Figure 13.8; matrix entries denote source of conflict for chosen assignments, check denotes current assignment, bold variables are transposed. |

13.3.4. Backmarking

Another problem for backtracking is redundant work for which unnecessary constraint checks are repeated. For example, consider A, B, C, D with  with the constraints A + 8 < C, B = 5D. Consider the search tree generated by labeling A, B, C, D (in this order). There is much redundant computation in different subtrees when labeling variable C (after setting B = 1, B = 2, …, B = 10). The reason is that the change in B does not influence variable C at all. Therefore, we aim at removing redundant constraint checks.

with the constraints A + 8 < C, B = 5D. Consider the search tree generated by labeling A, B, C, D (in this order). There is much redundant computation in different subtrees when labeling variable C (after setting B = 1, B = 2, …, B = 10). The reason is that the change in B does not influence variable C at all. Therefore, we aim at removing redundant constraint checks.

The proposed solution is to remember previous (either good or no-good) assignments. This so-called backmarking algorithm removes redundant constraint checks by memorizing negative and positive tests. It maintains the values

• Mark(x,v), for the farthest (instantiated) variable x in conflict with the current assignment v (conflict marking).

• Back(x), for the farthest variable to which we backtracked since the last attempt to instantiate x (backtrack marking).

Constraint checks can be omitted for the case Mark(x,v) < Back(x). An illustration is given in Figure 13.11. We detect that the assignment from X to 1 on the left branch on the tree is inconsistent with the assignment of Y to 1, but consistent with all other variables above X. The assignment from X to 1 on the right branch on the tree is still inconsistent with the assignment of Y to 1 and does not need to be checked again.

The pseudo-code implementation is provided in Algorithm 13.9 as an extension to the pure backtracking algorithm. We see that checking if the conflict marking is larger than or equal to the backtrack marking is performed before every consistency check. The algorithm also illustrates how the value Back is updated. The conflict marking is assumed to be stored together with each assignment. Note that as with backjumping (see Alg. 13.8), the consistency procedure is assumed to compute the conflict level together with each call.

The backmarking algorithm is illustrated for the Eight Queens problem of Figure 13.1 in Figure 13.12. The farthest conflict queen (the conflict marking) is denoted on the board, and the backtrack marking is written to the right of the board. The sixth queen cannot be allocated, despite the assignment to the fifth queen, such that all further assignments to the fifth queen are discarded. It is not difficult to observe that backmarking can be combined with backjumping.

|

| Figure 13.12 |

13.3.5. Search Strategies

In practical application of constraint satisfaction for real-life problems we frequently encounter that search spaces are so huge that they cannot be fully explored.

This immediately suggests heuristics to guide the search process into the direction of an assignment that satisfies the constraints and optimizes the objective function. In constraint satisfaction search heuristics are often encoded to recommend a value for an assignment in a labeling algorithm. This approach often leads to a fairly good solution on the early trials.

Backtracking mainly takes care of the bottom part of the search tree. It repairs later assignments rather than earliest ones. Consequently, backtracking search relies on the fact that search heuristics guide well in the top part of the search tree. As a drawback, backtracking is less reliable in the earlier parts of the search tree. This is due to the fact that, as the search process proceeds, more and more information is available and the number of violations to a search heuristic is small in practice.

Limited Discrepancy Search

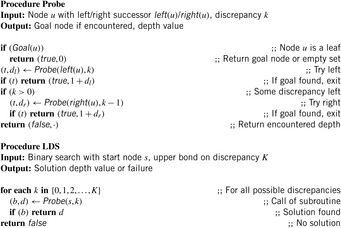

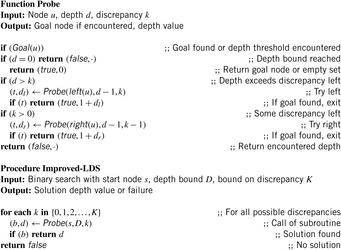

Errors in the heuristic values have also been examined in the context of limited discrepancy search (LDS). It can be seen as a modification of depth-first search. On hard combinatorial problems like Number Partition (see later) it outperforms traditional depth-first search.

Given a heuristic estimate, it would be most beneficial to order successors of a node according to their h-value, and then to choose the left one for expansion first. A search discrepancy means to stray from this heuristic preference at some node, and instead examine some other node that was not suggested by the heuristic estimate.

For ease of exposition, we assume binary search trees (i.e., two successors per node expansion). In fact, binary search trees are the only case that has been considered in literature and extensions to multi-ary trees are not obvious. A discrepancy corresponds to a right branch in an ordered tree. LDS performs a series of depth-first searches up to a maximum depth d. In the first iteration, it first looks at the path with no discrepancies, the left-most path, then at all paths that take one right branch, then with two right branches, and so forth. In Figure 13.13 paths with zero (first path), one (next three paths), two (next three paths), and three discrepancies (last path) in a binary tree are shown.

To measure the time complexity of LDS, we count the number of explored leaves.

Theorem 13.1

(Complexity LDS) The number of leaves generated in limited discrepancy search in a complete binary tree of depth d is (d + 2)2d − 1.

The pseudo code for LDS is provided in Algorithm 13.10. Figure 13.14 visualizes the branches selected (bold lines) in different iterations of linear discrepancy search.

|

| Figure 13.14 |

An obvious drawback of this basic scheme is that the i th iteration generates all paths with i discrepancies or less, hence it replicates the work of the previous iteration. In particular, to explore the right-most path in the last iteration, LDS regenerates the entire tree. LDS has been improved later using an upper bound on the maximum depth of the tree. In the i th iteration, it visits the leaf at the depth limit with exactly i discrepancies. The modified pseudo code for improved LDS is shown in Algorithm 13.11. An example is provided in Figure 13.15. This modification saves a factor of (d + 2)/2.

Theorem 13.2

(Complexity-Improved LDS) The number of leaves generated in improved limited discrepancy search in a complete binary tree of depth d is 2d.

Proof

Since each iteration of improved LDS generates those paths with exactly k discrepancies, each leaf is generated exactly once for a total of 2d leaf nodes.

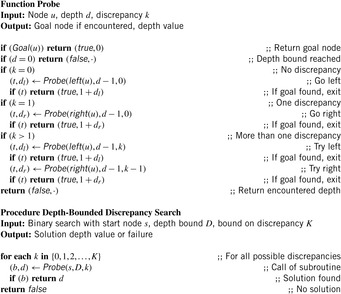

A slightly different strategy, called depth-bounded discrepancy search, biases the search toward discrepancies high up in the search tree by means of an iteratively increasing depth bound. In the i th iteration, depth-bounded discrepancy explores those branches on which discrepancies occur at depth i or less. Algorithm 13.12 shows the pseudo code of depth-bounded discrepancy search. For the sake of simplicity, again we consider the traversal in binary search trees only.

Compared to improved LDS, depth-bounded LDS explores more discrepancies at the top of the search tree (see Fig. 13.16). While improved discrepancy search on a binary tree of depth d explores in its first iteration branches with at most one discrepancy, depth-bounded discrepancy search explores some branches with up to lgd discrepancies.

13.4. NP-Hard Problem Solving

For an NP-complete problem L we require

NP-containment a nondeterministic Turing machine M that recognizes L in polynomial time, and

NP-hardness a polynomial-time transformation f, one for each problem L′ in NP, such that  if and only if

if and only if  .

.

The running time of M is defined as the length of the shortest path to a final state. A deterministic Turing machine may simulate all possible computations of M in exponential time. Therefore, NP problems are state space problems with a state space of configurations of M, operators are transitions to successor configurations, the initial state is the start configuration of M, and the goal is defined by its final configuration(s).

NP-completeness makes scaling successful approaches difficult. Nonetheless, hard problems are frequent for search practice and for hundreds of thousands of problem instances that have been classified.

13.4.1. Boolean Satisfiability

Boolean CSPs are CSPs in which all variable domains are Boolean. Hence, the only two assignments that are allowed are true and false. If we are looking at variables of finite domains only, any CSP is convertible to a Boolean CSP, via an encoding of the variable domains. In such an encoding we impose an assignment of the form X = x for each variable X and each value  .

.

Let a literal be a positive or negated Boolean variable, and a clause be a disjunction of literals. We use the truth values true/false and their numerical equivalent 0/1 interchangeably.

Definition 13.5

(Satisfiability Problem) In Satisfiability (SAT) we are given a formula f as a conjunction of clauses over the literals  . The task is to search for an assignment

. The task is to search for an assignment  for

for  , so that f(a) = true.

, so that f(a) = true.

By setting x3 to false the example function  is satisfiable.

is satisfiable.

Theorem 13.3

(Complexity SAT) SAT is NP-complete.

Proof

We only give the proof idea. The containment of SAT in NP is trivial since we can test a nondeterministically chosen assignment in linear time. To show that for all L ∈ NP we have polynomial reduction to SAT, the computation of a nondeterministic Turing machine for L is simulated with (polynomially many) clauses.

Definition 13.6

(k-Satisfiability) In k-SAT the SAT instances consist of clauses of the form

Dealing with clauses of length smaller than k is immediate. For example, by adding the redundant literal  to the second clause, f is converted to 3-SAT notation. Even if k-SAT instances are simpler than general SAT problems, for k ≥ 3 the k-SAT problem is still intractable.

to the second clause, f is converted to 3-SAT notation. Even if k-SAT instances are simpler than general SAT problems, for k ≥ 3 the k-SAT problem is still intractable.

Theorem 13.4

(Complexity k-SAT) k-SAT is NP-hard for k ≥ 3.

Proof

The proof applies a simple local replacement strategy. For each clause C in the original formula,  extra variables are introduced for linking shorter clauses together. For example, satisfiability of

extra variables are introduced for linking shorter clauses together. For example, satisfiability of  is equivalent to satisfiability of the 3-SAT formula

is equivalent to satisfiability of the 3-SAT formula  .

.

It is known that 2-SAT is in P. Since  is equivalent to

is equivalent to  and

and  , respectively, we construct a graph Gf with nodes

, respectively, we construct a graph Gf with nodes  and edges that correspond to the induced implications. It is not difficult to see that f is not satisfiable, if and only if Gf has a cycle that contains the same variable, once positive and once negative.

and edges that correspond to the induced implications. It is not difficult to see that f is not satisfiable, if and only if Gf has a cycle that contains the same variable, once positive and once negative.

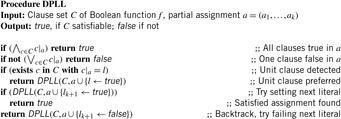

David-Putnam Logmann-Loveland Algorithm

The most popular methods to solve Boolean satisfiability problems are refinements to the Davis-Putnam Logmann-Loveland algorithm (DPLL). DPLL (as shown in Alg. 13.13) is a depth-first variable labeling strategy with unit (clause) propagation. Unit propagation detects literals l in clauses c that have a forced assignment, since all other literals in the clause are false. For a given assignment a we write  for this case. The DPLL algorithm incrementally constructs an assignment and backtracks if this partial assignment already implies that the formula is not satisfiable. Refined implementations of the DPLL algorithm simplify the clauses parallel to the elimination of variables, that proved to be not satisfiable. Moreover, if a clause with only one literal is generated, the literal is preferred and its satisfaction can be propagated through the formula, which can lead to an early backtracking.

for this case. The DPLL algorithm incrementally constructs an assignment and backtracks if this partial assignment already implies that the formula is not satisfiable. Refined implementations of the DPLL algorithm simplify the clauses parallel to the elimination of variables, that proved to be not satisfiable. Moreover, if a clause with only one literal is generated, the literal is preferred and its satisfaction can be propagated through the formula, which can lead to an early backtracking.

There are so many refinements to the basic algorithms that we can only discuss a few. First, we can preprocess the input to allow the algorithms to operate without branching as far as possible. Moreover, preprocessing can help to learn conflicts during the search.

DPLL is sensitive to the selection of the next variable. Many search heuristics have been applied that aim at a compromise between the efficiency to compute the heuristic and the informedness to guide the search process. As a thumb rule, it is preferable not to change the strategy too often during the search and to choose variables that appear often in the formula. For unit propagation we have to know how many literals are not false already. The update of these numbers can be time consuming. Instead, two literals in each clause are marked as observed literals. For each variable x, a list of clauses in which x is true and a list of clauses in which x is false are maintained. If x is assigned to a value, all clauses in its list are verified and another variable in each of these clauses is observed. The main advantage of the approach is that the lists of observables are not updated during backtracking.

Conflicts can be analyzed to determine when and to which depth to backtrack. Such a backjump is a nonchronological backtrack that forgets about the variable assignments that are not in the current conflict set, and has shown consistent performance gains.

As said, the running times of DPLL algorithms depend on the choice/ordering of branching variables in the top level of the search tree. A restart is a reinvocation of the algorithm after spending some fixed time without finding a solution. For each restart, a different set of branching variables can be chosen, leading to a completely different search tree. Often only a good choice of a few variable assignments are needed to show satisfiability or unsatisfiability. This is the reason why rapid restarts are often more effective than continuing the search.

An alternative for solving large satisfiability problems is GSAT, an algorithm that performs a randomized local search. The algorithm throws a coin and performs some variable flips to improve the number of satisfied clauses. If different variables are equally good one is chosen by random. Such and more advanced random strategies are considered in Chapter 14.

Phase Transition

One observation is that many randomly generated formulas are either trivially satisfiable or trivially nonsatisfiable. This reflects a fundamental problem to the complexity analysis of NP-hard problems. Even if the worst case may be hard, many instances can be very simple. As this observation is encountered in several other NP-hard problems, researchers have started to analyze the average case complexity with results much closer to practical problem solving. Another option is to separate problem instances into those that are hard and those that are trivial, and study problem parameters at which (randomly chosen) instances turn from simple to hard or from hard to simple. The change is also known as phase transition. In other words, these instances can be viewed as witnesses for the problem's (NP) hardness. Often it is the case that the phase transition is empirically studied. In some domains, however, theoretical results in the form of upper and lower bounds for the parameters are available.

For Satisfiability, a phase transition effect has been analyzed by looking at the ratio of the number of clauses m and the number of variables n.

The generation of m = αn random formulas in 3-SAT is simple, for example, by a

• random choice of variables, followed by a

• random choice of their signs (positive or negated).

In such random 3-SAT samples, empirically the hard problems have been detected at  . Moreover, the complexity peak (measured in medium computational costs) appeared to be independent to the algorithms chosen. Problems with a ratio smaller than α are underconstrained and easy to satisfy, and problems with a ratio larger than 4.3 are overconstrained and easily shown to be nonsatisfiable.

. Moreover, the complexity peak (measured in medium computational costs) appeared to be independent to the algorithms chosen. Problems with a ratio smaller than α are underconstrained and easy to satisfy, and problems with a ratio larger than 4.3 are overconstrained and easily shown to be nonsatisfiable.

A simple bound for unsatisfiability is derived as follows. A random clause with three (different) literals according to a fixed assignment a is satisfied with probability 7/8 (only one assignment fails the formula). Therefore, for a fixed assignment a, the entire formula is satisfied with probability  . Given that 2n is the number of different assignments, this implies that the formula is satisfiable with probability

. Given that 2n is the number of different assignments, this implies that the formula is satisfiable with probability  and unsatisfiable with probability

and unsatisfiable with probability  . Subsequently, if

. Subsequently, if  , then the probability for unsatisfiability is larger than 50%. Similar observations have been made for many other problems.

, then the probability for unsatisfiability is larger than 50%. Similar observations have been made for many other problems.

Backbones and Backdoors

The backbone of an SAT problem is the set of literals that are true in every satisfying truth assignment. If a problem has a large backbone, then there are many options to choose an incorrect assignment to the critical variables. Search cost correlates with the backbone size. If the wrong value is assigned to such a critical backbone variable early during the search, the correction of this mistake is very costly. Backbone variables are hard to find.

Theorem 13.5

(Hardness of Finding Backbones) If P ≠ NP, no algorithm can return a backbone literal of a (nonempty) SAT backbone in polynomial time for all formulas.

Proof

Suppose there is such an algorithm. It returns a backbone literal, if the backbone is nonempty. We set this literal to true and simplify the formula. We then call the procedure and repeat. This procedure will find a satisfying assignment if one exists in polynomial time, contradicting the assumption that SAT is NP-hard.

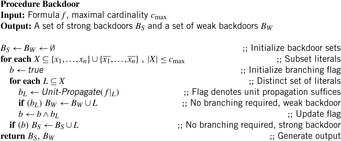

A backdoor into an SAT instance is a set of variables that eases solving the instance. It is weak if the set of variables defines a polynomially satisfiable formula, given a satisfiable instance. It is strong if it gives a polynomially tractable formula for a satisfiable or unsatisfiable problem. For example, there are strong 2-SAT or Horn backdoors. In general, backdoors depend on the algorithm applied, but might be strengthened to the condition that unit propagation will directly solve the remaining problem.

It is not hard to see that that backbones and backdoors are not strongly correlated. There are problems, in which the backbone and backdoor variables are disjoint. However, statistical connections seem to exist. Hard combinatorial SAT problems appear to have large strong backdoors and backbones. Spoken otherwise, the sizes of strong backdoors and backbones are good predictors for the hardness of the problem.

A simple algorithm to calculate backdoors simply tests every combination of literals up to a fixed cardinality. Algorithm 13.14 has the advantage that for small problems, every weak and strong backdoor up to the given size is generated. However, this procedure can only be used for small problems.

13.4.2. Number Partition

The problem of dividing a set of numbers into two parts of equal sums is defined as follows.

Definition 13.7

Let  be a set of numbers, and

be a set of numbers, and  . In Number Partition we search for an index set

. In Number Partition we search for an index set  , so that

, so that  .

.

As an example take a = (4, 5, 6, 7, 8). A possible index set is I = {1, 2, 3} since 4 + 5 + 6 = 7 + 8. The problem is solvable only if  is even. The problem is NP-complete, so that we cannot expect a polynomial time algorithm for solving it.

is even. The problem is NP-complete, so that we cannot expect a polynomial time algorithm for solving it.

Theorem 13.6

(Complexity Number Partition) Number Partition is NP-hard.

Proof

Number Partition can be reduced from the following (specialized) knapsack problem: Given  and an integer A, decide whether there is a set

and an integer A, decide whether there is a set  for a

for a  . (Knapsack itself can be shown to be NP-hard by a reduction from SAT.) Given

. (Knapsack itself can be shown to be NP-hard by a reduction from SAT.) Given  as an input for knapsack an instance of Number Partition can be derived as

as an input for knapsack an instance of Number Partition can be derived as  . If I is a solution to knapsack then

. If I is a solution to knapsack then  is a solution of Number Partition, since

is a solution of Number Partition, since  .

.

To improve the trivial 2n algorithm for enumerating all possible partitions, we arbitrarily divide the original set of n elements into two of size  and

and  . Then, all subset sums of these smaller sets are computed and sorted. Finally, the two lists are combined in a parallel scan to find value

. Then, all subset sums of these smaller sets are computed and sorted. Finally, the two lists are combined in a parallel scan to find value  .

.

For the example, we generate the lists (4, 6, 8) and (5, 7). The sorted subset sums are (0, 4, 6, 8, 10, 12, 14, 18) and (0, 5, 7, 12) with target value 15. The algorithm takes two pointers i and j; the first one starts at the beginning of the first list and is monotonic increasing, while the second one starts at the end of the second list and is monotonic decreasing. For i = 1 and j = 4 we have 0 + 12 = 12 < 15. Increasing i yields 4 + 12 = 16 > 15, which is slightly too large. Now we decrease j for 4 + 7 = 11 < 15, and increase i twice in turn for 6 + 7 = 13 < 15 and 8 + 7 = 15, yielding the solution to the problem.

Generating all subset sums can be done in time  by full enumeration of all subsets. They can be sorted in time

by full enumeration of all subsets. They can be sorted in time  using any efficient sorting algorithm (see Ch. 3). Scanning the two lists can be performed in linear time, for the overall time complexity of

using any efficient sorting algorithm (see Ch. 3). Scanning the two lists can be performed in linear time, for the overall time complexity of  . The running time can be improved to time

. The running time can be improved to time  by applying a refined sorting strategy (see Exercises).

by applying a refined sorting strategy (see Exercises).

Heuristics

We introduce two heuristics for this problem: Greedy and Karmakar-Karp. The first heuristic sorts the numbers in a in decreasing order, and successively places the largest number in the smaller subset. For the example we get the subset sums (8, 0), (8, 7), (8, 13), (13, 13), and (13, 17) for a final difference of 4. The algorithm takes  to sort and O(n) to assign them for a total of

to sort and O(n) to assign them for a total of  .

.

The Karmakar-Karp heuristic also sorts the numbers in a in decreasing order. It successively takes the two largest numbers and computes their difference, which is reinserted into the sorted order of the remaining list of numbers. In the example, the sorted list is (8, 7, 6, 5, 4) and 8 and 7 are taken. Their difference is 1, which is reinserted for the remaining list (6, 5, 4, 1). Now 6 and 5 are selected yielding (4, 1, 1). The next step gives (3, 1) and the final difference 2.

To compute the actual partition, the algorithm builds a tree with one node for each original number. Each operation adds an edge between these nodes. The larger of the nodes represents the difference, so it remains active for subsequent computation. In the example, we have  , with 8 representing difference 1;

, with 8 representing difference 1;  , with 6 representing difference value 1;

, with 6 representing difference value 1;  , with 4 representing the difference; and

, with 4 representing the difference; and  with 3 representing the difference. The edges inserted are (8, 7), (6, 5), (4, 8), and (6, 4). The resulting graph is a (spanning) tree on the set of nodes. This tree is to be two-colored to determine the actual partition. Using a simple DFS a two-coloring is available in O(n) time. Therefore, due to the sorting requirement the total time for the Karmakar-Karp heuristic is

with 3 representing the difference. The edges inserted are (8, 7), (6, 5), (4, 8), and (6, 4). The resulting graph is a (spanning) tree on the set of nodes. This tree is to be two-colored to determine the actual partition. Using a simple DFS a two-coloring is available in O(n) time. Therefore, due to the sorting requirement the total time for the Karmakar-Karp heuristic is  as in the previous case.

as in the previous case.

Complete Algorithms

The complete greedy algorithm (CGA) generates a binary search tree as follows. The left branch assigns the next number to one subset and the right one assigns it to the other one. If the difference of the two sides of the equation  at a leaf is zero, a solution has been established. The algorithm produces the greedy solution first and continuous to search for better solutions. At any node, where the difference between the current subset sums is greater than or equal to the sum of all remaining unassigned numbers, the remaining numbers are placed into the smaller subset. An optimization is that whenever the two subset sums are equal we only assign a number to one of the lists.

at a leaf is zero, a solution has been established. The algorithm produces the greedy solution first and continuous to search for better solutions. At any node, where the difference between the current subset sums is greater than or equal to the sum of all remaining unassigned numbers, the remaining numbers are placed into the smaller subset. An optimization is that whenever the two subset sums are equal we only assign a number to one of the lists.

The complete Karmakar-Karp algorithm (CKKA) builds a binary tree from left to right, where at each node we replace the two largest of the remaining numbers. The left branch replaces them by their difference, the right branch replaces them by their sum. The difference is added to the list, as seen before, and the sum is added to the head of the list. Consequently, the first solution corresponds to the Karmakar-Karp heuristic, and the algorithm continues to find better partitions until a solution is found and verified. Similar pruning rules apply as in CGA. If the largest number at a node is greater than the subset sum of the others, it can be safely pruned.

If there is no solution, both algorithms have to traverse the entire tree, such that the algorithms perform equally bad in the worst case. However, CKKA produces better heuristic values and better partitions. Moreover, the pruning rule in CKKA is more effective. For example, in CKKA (4, 1, 1) and (11, 4, 1) are the successors of (6, 5, 4, 1), and the largest number is greater than the sum of the others, so that both branches are pruned. In CGA, the two children of the subtrees with difference 5 and difference 7 have to be expanded.

13.4.3. *Bin Packing

Definition 13.8

(Bin Packing) Given n objects of size  the task in Bin Packing is to distribute them among the bins of size b in such a way that a minimal number of bins is used. The corresponding decision problem is to find a mapping

the task in Bin Packing is to distribute them among the bins of size b in such a way that a minimal number of bins is used. The corresponding decision problem is to find a mapping  for a given k such that for each j the sum of all objects ai with

for a given k such that for each j the sum of all objects ai with  is not larger than b.

is not larger than b.

Here we see that optimization problems are identified with corresponding decision problems, thresholding the objective to be optimized to some fixed value.

Theorem 13.7

(Complexity of Bin Packing) The decision problem for Bin Packing is NP-hard.

Proof

NP-hardness can be shown using a polynomial reduction from Number Partition. Let  be the input of Number Partition. The input for Bin Packing is n objects of size

be the input of Number Partition. The input for Bin Packing is n objects of size  and

and  . If

. If  is odd, then Number Partition is not solvable. If

is odd, then Number Partition is not solvable. If  is even and the original problem is solvable, then the objects have a perfect fit into the two bins of size A.

is even and the original problem is solvable, then the objects have a perfect fit into the two bins of size A.

There are polynomial-time approximation algorithms for Bin Packing like first fit (best fit and worst fit), which incrementally searches for the first (best, worst) placement of an object in the bins (the quality of the fit is measured in terms of the remaining space). First fit and best fit are known to have an asymptotic worst-case approximation ratio of 1.7, by means that they cannot generally produce solutions that are better than 1.7 times the optimum in the limit for large optimization values.

The modifications first fit decreasing and best fit decreasing presort the objects according to their size. The rationale is that starting with the larger objects first will be better than having them be placed at the end. Postponing the smaller ones, we can expect a better fit. Indeed using a decreasing order of object sizes together with either strategy first fit or best fit can be shown to guarantee solutions that are at most a factor of 11/9 off from the optimal one. Both algorithms run in  time.

time.

Bin Completion

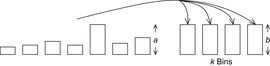

The algorithm for optimal Bin Packing is based on depth-first branch-and-bound (see Ch. 5). The objects are first sorted in decreasing order. The algorithm then computes an approximate solution as initial upper bound, using the best solution among first fit, best fit, and worst fit decreasing. The algorithm next branches on the different bins in which an object can be placed.

The bin completion strategy is based on feasible sets of objects with a sum that fits with respect to the bin capacity. Rather than assigning objects one at a time to bins, it branches on the different feasible sets that can be used to complete each bin. Each node of the search tree, except the root node, represents a complete assignment of objects to a particular bin. The children of the root represent different ways of completing the bin containing the largest object. The nodes at the next level represent different feasible sets that include the largest remaining object. The depth of any branch of the tree is the number of bins in the corresponding solution.

The key property that makes bin completion more efficient is a dominance condition on the feasible completions of a bin. For example, let A = {20, 30, 40} and B = {5, 10, 10, 15, 15, 25} be two feasible sets. Now partition B into the subsets {5, 10}, {25}, and {10, 15, 15}. Since 5 + 10 ≤ 20, 25 ≤ 30, and 10 + 15 + 15 ≤ 40, set A dominates set B.

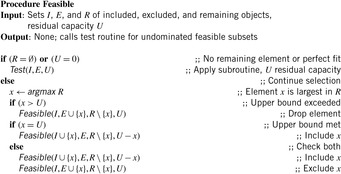

To generate the nondominated feasible sets efficiently we use a recursive strategy illustrated in Algorithm 13.15. The algorithm generates feasible sets and immediately tests them for dominance, so it never stores multiple dominated sets. The inputs are sets of included, excluded, and remaining objects that are adjusted in the different recursive calls. In the initial call (not shown) the set of remaining elements is the set of all objects, while the other two sets are both empty. The cases are as follows. If all elements have been selected or rejected or we have a perfect fit, we continue with testing for dominance, otherwise we select the largest remaining object. If it is oversized with respect to remaining space we reject it. If it has a perfect fit we immediately include it (the best fit for the bin has been obtained) and continue with the rest; in the other cases we check for both inclusion and exclusion. Procedure Test checks dominance by comparing subset sums of included elements to excluded elements rather than comparing pairs of sets for dominance. The worst-case running time of procedure Feasible is exponential, since by reducing set R we obtain the recurrence relation  .

.

Improvements

To improve the algorithm there are different options. The first idea is forced placements that reduce the branching. If only one more object can be added to a bin, which is easy to be checked by scanning through the set of remaining elements, then we only add the largest of such objects to it, and if only two more objects can be added, we generate all undominated two-element completions in linear time.

For pruning the search space, we consider the following strategy: Given a node with more than one child, when searching the subtree of any child but the first, we do not need to consider bin assignments that assign to the same bin all the objects used to complete the current bin in a previously explored child node. One implementation of this rule propagates a list of no-good sets along the tree. After generating the undominated completions for a given bin, we check each one to see if it contains any current no-good sets as a subset. If it does, we ignore that bin completion. The list of no-goods is pruned as follows. Whenever there is a no-good set, but the no-good set is not a subset of the bin completion, we remove that no-good set from the list that is passed down to the children of that bin completion. The reason is that by including at least one but not all the objects in the no-good set, we guarantee that it cannot be a subset of any bin completion below that node in the search tree.

13.4.4. *Rectangle Packing

Rectangle Packing considers packing a set of rectangles into an enclosing rectangle. It is not difficult to devise a binary CSP for the problem. There is a variable for each rectangle, the legal values of which are the positions it could occupy without exceeding the boundaries of the enclosing rectangle. Additionally, we have a binary constraint between each pair of rectangles that they cannot overlap.

Definition 13.9

(Rectangle Packing) In the decision variant of the Rectangle Packing problem we are given a set of rectangles ri of width wi and height hi,  and an enclosing rectangle of width W and height H. The task is to find an assignment to all the left upper corner coordinates

and an enclosing rectangle of width W and height H. The task is to find an assignment to all the left upper corner coordinates  of all rectangles ri such that

of all rectangles ri such that

• each rectangle is entirely contained in the enclosing rectangle; that is, for all  we have

we have  ,

,  ,

,  , and

, and  .

.

• no two rectangles ri and rj with  overlap; that is,

overlap; that is,

The optimization variant of the rectangle problem asks the smallest enclosing rectangle for which an assignment to the variable is possible.

When placing unoriented rectangles, both orientations are to be considered.

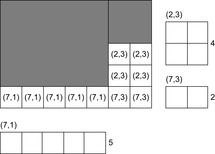

Rectangle Packing is important for VLSI and scheduling applications. Consider n jobs, where each job i requires a number of machines mi and a specific processing time di,  . Finding a minimal-cost schedule is equivalent to Rectangle Packing with

. Finding a minimal-cost schedule is equivalent to Rectangle Packing with  and

and  for

for  .

.

Theorem 13.8

(Complexity Rectangle Packing) The decision problem for Rectangle Packing is NP-hard.

Proof

Rectangle Packing can be polynomially reduced from Number Partition as follows. Assume we have an instance of number partition . Now we create an instance for Rectangle Packing as follows. First we choose an enclosing rectangle with width W and height

. Now we create an instance for Rectangle Packing as follows. First we choose an enclosing rectangle with width W and height  . If

. If  is odd then there is no possible solution to Number Partition. (W is chosen small enough to disallow changing orientation of the rectangle.) The rectangles to be placed have width W ∕ 2 and height ai. Since the entire space of

is odd then there is no possible solution to Number Partition. (W is chosen small enough to disallow changing orientation of the rectangle.) The rectangles to be placed have width W ∕ 2 and height ai. Since the entire space of  cells has to be covered, any solution to the Rectangle Packing problem immediately provides a solution to the Number Partition. If we do not find a solution to the rectangle packing problem it is clear that there is no partitioning of a into two sets of equal sum.

cells has to be covered, any solution to the Rectangle Packing problem immediately provides a solution to the Number Partition. If we do not find a solution to the rectangle packing problem it is clear that there is no partitioning of a into two sets of equal sum.

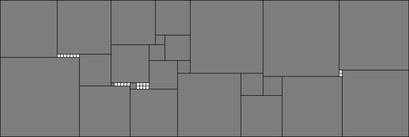

In the following we concentrate on rectangles of integer size. As an example, Figure 13.18 shows the smallest enclosing rectangle for 1 × 1 to 25 × 25. This suggests an alternative CSP encoding based on cells. Each cell cij with  and

and  corresponds to a finite-domain variable

corresponds to a finite-domain variable  , which denotes if the cell cij is free (0) or the index of the rectangle that is placed on it. To check for overlapping rectangles, a two-dimensional array representing the actual layout of cells is used. When placing a new rectangle we only need to check if all cells on the boundary of the new rectangle are occupied.

, which denotes if the cell cij is free (0) or the index of the rectangle that is placed on it. To check for overlapping rectangles, a two-dimensional array representing the actual layout of cells is used. When placing a new rectangle we only need to check if all cells on the boundary of the new rectangle are occupied.

Wasted Space Computation

As rectangles are placed, the remaining empty space gets chopped up into smaller irregular regions. Many of these regions cannot accommodate any of the remaining rectangles and must remain empty. The challenge is to efficiently bound the amount in a partial solution.

A first option for computing wasted space is to perform horizontal and vertical slices of the empty space. Consider the example of Figure 13.19. Suppose that we have to pack one (1 × 1) and two (2 × 2) rectangles into the empty area. Looking on the vertical strips we find that there are five squares that can only accommodate the squares (1 × 1), such that 5 − 1 = 4 squares have to remain empty. On the other hand, 11 − 4 = 7 squares are not sufficient to accommodate the three rectangles of total size 9.

The key idea to improve the wasted space calculation is to consider the vertical and horizontal dimensions together rather than performing separate calculations in the two dimensions and taking the maximum. When packing oriented rectangles we have at least different choices for computing wasted space. One is to use our new lower bound that integrates both dimensions but using the minimum dimension of each rectangle to determine where it can fit. Another option is to use the new bound but use both the height and width of each empty rectangle.

For each empty cell, we store the width of the empty row and the height of the empty column it occupies. In an area of free cells, empty cells are grouped together if both values match. We refer to these values as the maximum width and height of the group of empty cells. A rectangle cannot occupy any of a group of empty cells if its width or height is greater than the maximum width or height of the group, respectively. This results in a Bin Packing problem (see Fig. 13.20). There is one bin for each group of empty cells with the same maximum height and width. The capacity of each bin is the number of empty cells in the group. There is one element for each rectangle to be placed, the size of which is the area of the rectangle. There is a bipartite relation between the bins and the elements, specifying which elements can be placed in which bins, based on their heights and widths. These additional constraints simplify the Bin Packing problem. For example, if any rectangle can only be placed in one bin, and the capacity of that bin is smaller than the area of the rectangle, then the problem is unsolvable. If any rectangle can only be placed in one bin, and the capacity of the bin is sufficient to accommodate it, then the rectangle is placed in the bin, eliminated from the problem, and the capacity of the bin is decreased by the area of the rectangle. If any bin can only contain a single rectangle, and its capacity is greater than or equal to the area of the rectangle, the rectangle is eliminated from the problem, and the capacity of the bin is reduced by the area of the rectangle. If any bin can only contain a single rectangle, and its capacity is less than the area of the rectangle, then the bin is eliminated from the problem, and the remaining area of the rectangle is reduced by the capacity of the bin.

Considering again the example of packing one (1 × 1) and two (2 × 2) rectangles, we immediately see that the first block can accommodate one (2 × 2) rectangle, but the second (2 × 2) rectangle has no fit.

Applying any of these simplifying rules may lead to further simplifications. When the remaining problem cannot be simplified further, we compute a lower bound on the wasted space. We identify a bin for which the total area of the rectangles it could contain is less than the capacity of the bin. The excess capacity is wasted space. The bin and the rectangles involved are eliminated from the problem. We then look for another bin with this property. Note that the order of bins can affect the total amount of wasted space computed.

Dominance Conditions

The largest rectangle is placed first in the top-left corner of the enclosing rectangle. Its next position will be one unit down. This leaves an empty strip one unit high above the rectangle. While this strip may be counted as wasted space, if the area of the enclosing rectangle is large relative to that of the rectangles to be packed, this partial solution may not be pruned based on wasted space. Partial solutions that leave empty strips to the left, of or above rectangle placements are often dominated by solutions that do not leave such strips, and hence can be pruned from considerations (see Fig. 13.21).

A simple dominance condition applies whenever there is a perfect rectangle of empty space of the same width immediately above a placed rectangle, with solid boundaries above, to the left, and to the right. The boundaries may consist of other rectangles or the boundary of the enclosing rectangle. Similarly, it also applies to a perfect rectangle of empty space of the same height immediately to the left of a placed rectangle.

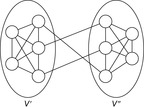

13.4.5. *Vertex Cover, Independent Set, Clique

For the next set of NP-hard problems we are given an undirected graph  . The problems Vertex Cover, Clique, and Independent Set are closely related.

. The problems Vertex Cover, Clique, and Independent Set are closely related.

Definition 13.10

(Vertex Cover, Clique, Independent Set) In Vertex Cover we are asked to find a node subset V′ such that for all edges in E at least one of the end nodes is contained in V′. Given G and k, Clique decides, whether or not there is a subset  such that for all

such that for all  we have

we have  . Clique is the dual to the Independent Set problem, which searches for a set of k nodes that contains no edge connecting any two of them.

. Clique is the dual to the Independent Set problem, which searches for a set of k nodes that contains no edge connecting any two of them.

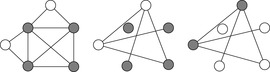

An illustration for the three problems is provided in Figure 13.22.

|

| Figure 13.22 |

Theorem 13.9

(Complexity Vertex, Cover, Clique, Independent Set) Vertex Cover, Clique, and Independent Set are NP-hard.

Proof

For Clique and Independent Set, we simply have to invert edges to convert the problem instances. To reduce Independent Set to Vertex Cover, we take an instance  and k to Independent Set and let the Vertex Cover algorithm run on the same graph with bound n − k. An independent set with k nodes implies that all other n − k nodes supervise all edges. Otherwise, if n − k nodes cover the edge set, then all other k nodes build an independent set.