Chapter 8. External Search

This chapter studies the integration of disk space into the search, trying to minimize the number of block accesses. It introduces the theory of external memory algorithms and studies disk-based search with the delayed detection of duplicates and its variants.

Keywords: virtual memory, external scanning, external sorting, external priority queue, external explicit graph depth-first search, external explicit graph breadth-first search, delayed duplicate detection, external breadth-first branch-and-bound, external enforced hill-climbing, external A*, hash-based duplicate detection, structured duplicate detection, pipelining, external iterative-deepening search, external pattern databases, external value iteration, flash-memory search

Often search spaces are so large that even in compressed form they fail to fit into the main memory. During the execution of a search algorithm, only a part of the graph can be processed in the main memory at a time; the remainder is stored on a disk.

It has been observed that the law of Intel cofounder Gordon Moore moves toward external devices. His prediction, popularly known as Moore's Law, states that the number of transistors on a chip doubles about every two years. The costs for large amounts of disk space have decreased considerably. But hard disk operations are about  to

to  times slower than main memory accesses and technological progress yields annual rates of about 40% increase in processor speeds, while disk transfers improve only by about 10%. This growing disparity has led to a growing attention to the design of I/O-efficient algorithms in recent years.

times slower than main memory accesses and technological progress yields annual rates of about 40% increase in processor speeds, while disk transfers improve only by about 10%. This growing disparity has led to a growing attention to the design of I/O-efficient algorithms in recent years.

Most modern operating systems hide secondary memory accesses from the programmer, but offer one consistent address space of virtual memory that can be larger than internal memory. When the program is executed, virtual addresses are translated into physical addresses. Only those portions of the program currently needed for the execution are copied into main memory. Caching and prefetching heuristics have been developed to reduce the number of page faults (the referenced page does not reside in the cache and has to be loaded from a higher memory level). By their nature, however, these methods cannot always take full advantage of the locality inherent in algorithms. Algorithms that explicitly manage the memory hierarchy can lead to substantial speedups, since they are more informed to predict and adjust future memory access.

We first give an introduction to the more general topic of I/O-efficient algorithms. We introduce the most widely used computation model, which counts inputs/outputs (I/Os) in terms of block transfers of fixed-size records to and from secondary memory. As external memory algorithms often run for weeks and months, a fault-tolerant hardware architecture is needed and discussed in the text. We describe some basic external memory algorithms like scanning and sorting, and introduce data structures relevant to graph search.

Then we turn to the subject of external memory graph search. In this part we are mostly concerned with breadth-first and Single-Source Shortest Path search algorithms that deal with graphs stored on disk, but we also provide insights on external (memory) depth-first search. The complexity for the general case is improved by exploiting properties of certain graph classes.

For state space search, we adapt external breadth-first search to implicit graphs. As the use of early duplicate pruning in a hash table is limited, in external memory search the pruning concept has been coined to the term delayed duplicate detection for frontier search. The fact that no external access to the adjacency list is needed reduces the I/O complexity to the minimum possible.

External breadth-first branch-and-bound conducts a cost-bounded traversal of the state space for more general cost functions. Another impact of external breadth-first search is that it serves as a subroutine in external enforced hill-climbing. Next, we show how external breadth-first search can be extended to feature A*. As external A* operates on sets of states, it shares similarities with the symbolic implementation of A*, introduced in Chapter 7.

We discuss different sorts of implementation refinements; for example, we improve the I/O complexity for regular graphs and create external pattern databases to compute better search heuristics. Lastly, we turn to the external memory algorithm for nondeterministic and probabilistic search spaces. With external value iteration we provide a general solution for solving Markov decision process problems on disk.

8.1. Virtual Memory Management

Modern operating systems provide a general-purpose mechanism for processing data larger than available main memory called virtual memory. Transparent to the program, swapping moves parts of the data back and forth from disk as needed. Usually, the virtual address space is divided into units calledpages; the corresponding equal-size units in physical memory are called page frames. A page table maps the virtual addresses on the page frames and keeps track of their status (loaded/absent). When a page fault occurs (i.e., a program tries to use an unmapped page), the CPU is interrupted; the operating system picks a rarely picked page frame and writes its contents back to the disk. It then fetches the referenced page into the page frame just freed, changes the map, and restarts the trapped instruction. In modern computers memory management is implemented on hardware with a page size commonly fixed at 4,096 bytes.

Various paging strategies have been explored that aim at minimizing page faults. Belady has shown that an optimal offline page exchange strategy deletes the page that will not be used for a long time. Unfortunately, the system, unlike possibly the application program itself, cannot know this in advance. Several different online algorithms for the paging problem have been proposed, such as last-in-first-out (LIFO), first-in-first-out (FIFO), least-recently-used (LRU), and least-frequently-used (LFU). Despite that Sleator and Tarjan proved that LRU is the best general online algorithm for the problem, we reduce the number of page faults by designing data structures that exhibit memory locality, such that successive operations tend to access nearby memory cells.

Sometimes it is even desirable to have explicit control of secondary memory manipulations. For example, fetching data structures larger than the system page size may require multiple disk operations. A file buffer can be regarded as a kind of software paging that mimics swapping on a coarser level of granularity. Generally, an application can outperform the operating system's memory management because it is well informed to predict future memory access.

Particularly for search algorithms, system paging often becomes the major bottleneck. This problem has been experienced when applying A* to the domain of route planning. Moreover, A* does not respect memory locality at all; it explores nodes in the strict order of f-values, regardless of their neighborhood, and hence jumps back and forth in a spatially unrelated way.

8.2. Fault Tolerance

External algorithms often run for a long time and have to be robust with respect to the reliability of existing hardware. Unrecoverable error rates on hard disks happen at a level of about 1 in  bits. If such an error occurs in critical system areas, the entire file system is corrupted. In conventional usage, such errors happen every 10 years. However, in extensive usage with file I/O in the order of terabytes per second, such a worst-case scenario may happen every week. As one solution to the problem, a redundant array of inexpensive disks (RAID) 1 is appropriate. Some of its levels are: 0 (striping: efficiency improvement for the exploration due to multiple disk access without introducing redundancy); 1 (mirroring: reliability improvement for the search due to option of recovering data); and 5 (performance and parity: reliability and efficiency improvement for the search, automatically recovers from one-bit disk failures).

bits. If such an error occurs in critical system areas, the entire file system is corrupted. In conventional usage, such errors happen every 10 years. However, in extensive usage with file I/O in the order of terabytes per second, such a worst-case scenario may happen every week. As one solution to the problem, a redundant array of inexpensive disks (RAID) 1 is appropriate. Some of its levels are: 0 (striping: efficiency improvement for the exploration due to multiple disk access without introducing redundancy); 1 (mirroring: reliability improvement for the search due to option of recovering data); and 5 (performance and parity: reliability and efficiency improvement for the search, automatically recovers from one-bit disk failures).

Another problem with long-term experiments are environmental faults that lead to switched-down power supply. Even if data is stored on disk, it is not certain that all data remains accessible in case of a failure. As hard disks may have individual reading and writing buffers, disk access is probably not under full control by the application program or the operating system. Therefore, it can happen that a file is deleted when the file reading buffer is still unprocessed. One solution to this problem is uninteruptable power supplies (UPSs) that assist in writing volatile data to disk. In many cases like external breadth-first search it continues the search from some certified flush like the last layer fully expanded.

8.3. Model of Computation

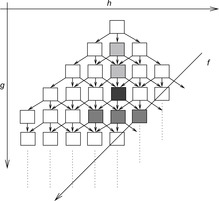

Recent developments of hardware significantly deviate from the von Neumann architecture; for example, the next generation of processors has multicore processors and several processor cache levels (see Fig. 8.1). Consequences like cache anomalies are well known; for example, recursive programs like Quicksort perform unexpectedly well in practice when compared to other theoretically stronger sorting algorithms.

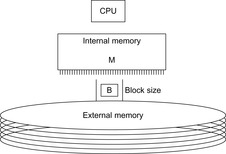

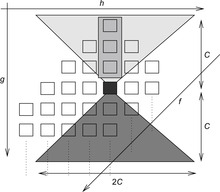

The commonly used model for comparing the performances of external algorithms consists of a single processor, small internal memory that can hold up to M data items, and unlimited secondary memory. The size of the input problem (in terms of the number of records) is abbreviated by N. Moreover, the block size B governs the bandwidth of memory transfers. It is often convenient to refer to these parameters in terms of blocks, so we define  and

and  . It is usually assumed that at the beginning of the algorithm, the input data is stored in contiguous blocks on external memory, and the same must hold for the output. Only the number of block read and writes are counted, and computations in internal memory do not incur any cost (see Fig. 8.2). An extension of the model considers D disks that can be accessed simultaneously. When using disks in parallel, the technique of disk striping can be employed to essentially increase the block size by a factor of D. Successive blocks are distributed across different disks. Formally, this means that if we enumerate the records from zero, the i th block of the j th disk contains record number

. It is usually assumed that at the beginning of the algorithm, the input data is stored in contiguous blocks on external memory, and the same must hold for the output. Only the number of block read and writes are counted, and computations in internal memory do not incur any cost (see Fig. 8.2). An extension of the model considers D disks that can be accessed simultaneously. When using disks in parallel, the technique of disk striping can be employed to essentially increase the block size by a factor of D. Successive blocks are distributed across different disks. Formally, this means that if we enumerate the records from zero, the i th block of the j th disk contains record number  through

through  . Usually, it is assumed that

. Usually, it is assumed that  and

and  .

.

We distinguish two general approaches of external memory algorithms: either we can devise algorithms to solve specific computational problems while explicitly controlling secondary memory access, or we can develop general-purpose external memory data structures, such as stacks, queues, search trees, priority queues, and so on, and then use them in algorithms that are similar to their internal memory counterparts.

8.4. Basic Primitives

It is often convenient to express the complexity of external memory algorithms using two frequently occurring primitive operations. These primitives, together with their complexities, are summarized in Table 8.1. The simplest operation is external scanning, which means reading a stream of records stored consecutively on secondary memory. In this case, it is trivial to exploit disk and block parallelism. The number of I/Os is  .

.

Sorting is a fundamental problem that arises in almost all areas of computer science. For the heuristic search exploration, sorting is essential to arrange similar states together, for example, to find duplicates. For this purpose, sorting is useful to eliminate I/O accesses. The proposed external sorting algorithms fall into two categories: those based on the merging paradigm, and those based on the distribution paradigm.

| Operation | Complexity | Optimality Achieved By |

|---|---|---|

| Trivial sequential access | ||

| merge or distribution sort |

External Mergesort converts the input into a number of elementary sorted sequences of length M using internal memory sorting. Subsequently, a merging step is applied repeatedly until only one run remains. A set of k sequences  can be merged into one run with

can be merged into one run with  I/O operations by reading each sequence in a blockwise manner. In internal memory, k cursors

I/O operations by reading each sequence in a blockwise manner. In internal memory, k cursors  are maintained for each of the sequences; moreover, it contains one buffer block for each run, and one output buffer. Among the elements pointed to by the

are maintained for each of the sequences; moreover, it contains one buffer block for each run, and one output buffer. Among the elements pointed to by the  , the one with the smallest key, say

, the one with the smallest key, say  , is selected; the element is copied to the output buffer, and

, is selected; the element is copied to the output buffer, and  is incremented. Whenever the output buffer reaches the block size B, it is written to disk and emptied; similarly, whenever a cached block for an input sequence has been fully read, it is replaced with the next block of the run in external memory. When using one internal buffer block per sequence and one output buffer, each merging phase uses

is incremented. Whenever the output buffer reaches the block size B, it is written to disk and emptied; similarly, whenever a cached block for an input sequence has been fully read, it is replaced with the next block of the run in external memory. When using one internal buffer block per sequence and one output buffer, each merging phase uses  operations. The best result is achieved when k is chosen as big as possible,

operations. The best result is achieved when k is chosen as big as possible,  . Then sorting can be accomplished in

. Then sorting can be accomplished in  phases, resulting in the overall optimal complexity.

phases, resulting in the overall optimal complexity.

On the other hand, External Quicksort partitions the input data into disjoint sets  ,

,  , such that the key of each element in

, such that the key of each element in  is smaller than that of any element in

is smaller than that of any element in  , if

, if  . To produce this partition, a set of splitters

. To produce this partition, a set of splitters  is chosen, and

is chosen, and  is defined to be the subset of elements

is defined to be the subset of elements  with

with  . The splitting can be done I/O-efficiently by streaming the input data through an input buffer, and using an output buffer. Then each subset

. The splitting can be done I/O-efficiently by streaming the input data through an input buffer, and using an output buffer. Then each subset  is recursively processed, unless its size allows sorting in internal memory. The final output is produced by concatenating all the elementary sorted subsequences. Optimality can be achieved by a good choice of splitters, such that

is recursively processed, unless its size allows sorting in internal memory. The final output is produced by concatenating all the elementary sorted subsequences. Optimality can be achieved by a good choice of splitters, such that  . It has been proposed to calculate the splitters in linear time based on the classic internal memory selection algorithm to find the k-smallest element. We note that although we will be concerned only with the case of a single disk (

. It has been proposed to calculate the splitters in linear time based on the classic internal memory selection algorithm to find the k-smallest element. We note that although we will be concerned only with the case of a single disk ( ), it is possible to make optimal use of multiple disks with

), it is possible to make optimal use of multiple disks with

I/Os. Simple disk striping, however, does not lead to optimal external sorting. It has to be ensured that each read operation brings in

I/Os. Simple disk striping, however, does not lead to optimal external sorting. It has to be ensured that each read operation brings in  blocks, and each write operation must store

blocks, and each write operation must store  blocks on disk. For External Quicksort, the buckets have to be hashed to the disks almost uniformly. This can be achieved using a randomized scheme.

blocks on disk. For External Quicksort, the buckets have to be hashed to the disks almost uniformly. This can be achieved using a randomized scheme.

8.5. External Explicit Graph Search

External explicit graphs are problem graphs that are stored on disk. Examples are large maps for route planning systems. Under external explicit graph search, we understand search algorithms that operate in explicitly specified directed or undirected graphs that are too large to fit in main memory. We distinguish between assigning BFS or DFS numbers to nodes, assigning BFS levels to nodes, or computing the BFS or DFS tree edges. However, for BFS in undirected graphs it can be shown that all these formulations are reducible to each other in  I/Os, where V and E are the sets of nodes and edges of the input graph (see Exercises).

I/Os, where V and E are the sets of nodes and edges of the input graph (see Exercises).

The input graph consists of two arrays, one that contains all edges sorted by the start node, and one array of size  that stores, for each vertex, its out-degree and offset into the first array.

that stores, for each vertex, its out-degree and offset into the first array.

8.5.1. *External Priority Queues

External priority queues for general weights are involved. An I/O-efficient algorithm for the Single-Source Shortest Paths problem simulates Dijkstra's algorithm by replacing the priority queue with the Tournament Tree data structure. It is a priority queue data structure that was developed with the application to graph search algorithms in mind; it is similar to an external heap, but it holds additional information. The tree stores pairs  , where

, where  identifies the element, and y is called the key. The Tournament Tree is a complete binary tree, except for possibly some right-most leaves missing. It has

identifies the element, and y is called the key. The Tournament Tree is a complete binary tree, except for possibly some right-most leaves missing. It has  leaves. There is a fixed mapping of elements to the leaves, namely, IDs in the range from

leaves. There is a fixed mapping of elements to the leaves, namely, IDs in the range from  through the

through the  map to the i th leaf. Each element occurs exactly once in the tree. Each node has an associated list of

map to the i th leaf. Each element occurs exactly once in the tree. Each node has an associated list of  to M elements, which are the smallest ones among all descendants. Additionally, it has an associated buffer of size M. Using an amortization argument, it can be shown that a sequence of k Update, Delete, or DeleteMin operations on a tournament tree containing N elements requires at most

to M elements, which are the smallest ones among all descendants. Additionally, it has an associated buffer of size M. Using an amortization argument, it can be shown that a sequence of k Update, Delete, or DeleteMin operations on a tournament tree containing N elements requires at most  accesses to external memory.

accesses to external memory.

The Buffered Repository Tree is a variant of the Tournament Tree that provides two operations: Insert  inserts element x under key y, where several elements can have the same key. ExtractAll

inserts element x under key y, where several elements can have the same key. ExtractAll  returns and removes all elements that have key y. As in a Tournament Tree, keys come from a key set

returns and removes all elements that have key y. As in a Tournament Tree, keys come from a key set  , and the leaves in the static height-balanced binary tree are associated with the key ranges in the same fixed way. Each internal node stores elements in a buffer of size B, which is recursively distributed to its two children when it becomes full. Thus, an Insert operation needs

, and the leaves in the static height-balanced binary tree are associated with the key ranges in the same fixed way. Each internal node stores elements in a buffer of size B, which is recursively distributed to its two children when it becomes full. Thus, an Insert operation needs  I/O amortized operations. An ExtractAll operation requires

I/O amortized operations. An ExtractAll operation requires  accesses to secondary memory, where the first term corresponds to reading all buffers on the path from the root to the correct leaf, and the second term reflects reading the x reported elements from the leaf. Moreover, a Buffered Repository Tree T is used to remember nodes that were encountered earlier. When v is extracted, each incoming edge

accesses to secondary memory, where the first term corresponds to reading all buffers on the path from the root to the correct leaf, and the second term reflects reading the x reported elements from the leaf. Moreover, a Buffered Repository Tree T is used to remember nodes that were encountered earlier. When v is extracted, each incoming edge  is inserted into T under key u. If at some later point u is extracted, then ExtractAll

is inserted into T under key u. If at some later point u is extracted, then ExtractAll  on T yields a list of edges that should not be traversed because they would lead to duplicates. The algorithm takes

on T yields a list of edges that should not be traversed because they would lead to duplicates. The algorithm takes  I/Os to access adjacency lists. The

I/Os to access adjacency lists. The  operations on the priority queues take at most

operations on the priority queues take at most  times, leading to a cost of

times, leading to a cost of  . Additionally, there are

. Additionally, there are  Insert and

Insert and  ExtractAll operations on T, which add up to

ExtractAll operations on T, which add up to  I/Os; this term also dominates the overall complexity of the algorithm.

I/Os; this term also dominates the overall complexity of the algorithm.

More efficient algorithms can be developed by exploiting properties of particular classes of graphs. In the case of directed acyclic graphs (DAGs) like those induced in Multiple Sequence Alignment problems, we can solve the shortest path problem following a topological ordering, in which for each edge  the index of u is smaller than that of v. The start node has index 0. Nodes are processed in this order. Due to the fixed ordering, we can access all adjacency lists in

the index of u is smaller than that of v. The start node has index 0. Nodes are processed in this order. Due to the fixed ordering, we can access all adjacency lists in  time. Since this procedure involves

time. Since this procedure involves  priority queue operations, the overall complexity is

priority queue operations, the overall complexity is  .

.

It has been shown that the Single-Source Shortest Paths problem can be solved with  I/Os for many subclasses of sparse graphs; for example, for planar graphs that can be drawn in a plane in the natural way without having edges cross between nodes. Such graphs naturally decompose the plane into faces. For example, route planning graphs without bridges and tunnels are planar. As most special cases are local, virtual intersections may be inserted to exploit planarity.

I/Os for many subclasses of sparse graphs; for example, for planar graphs that can be drawn in a plane in the natural way without having edges cross between nodes. Such graphs naturally decompose the plane into faces. For example, route planning graphs without bridges and tunnels are planar. As most special cases are local, virtual intersections may be inserted to exploit planarity.

We next consider external DFS and BFS exploration for more general graph classes.

8.5.2. External Explicit Graph Depth-First Search

External DFS relies on an external stack data structure. The search stack is often small compared to the overall search but in the worst-case scenario it can become large. For an external stack, the buffer is just an internal memory array of 2B elements that at any time contains the  elements most recently inserted. We assume that the stack content is bounded by at most N elements. A pop operation incurs no I/O, except for the case when the buffer has run empty, where

elements most recently inserted. We assume that the stack content is bounded by at most N elements. A pop operation incurs no I/O, except for the case when the buffer has run empty, where  I/O to retrieve a block of B elements is sufficient. A push operation incurs no I/O, except for the case the buffer has run full, where

I/O to retrieve a block of B elements is sufficient. A push operation incurs no I/O, except for the case the buffer has run full, where  I/O is needed to retrieve a block of B elements. Insertion and deletion take

I/O is needed to retrieve a block of B elements. Insertion and deletion take  I/Os in the amortized sense.

I/Os in the amortized sense.

The I/O complexity for external DFS for explicit (possibly directed) graphs is  . There are

. There are  phases where the internal buffer for the visited state set becomes full, in which case it is flushed. Duplicates are eliminated not via sorting (as in the case of external BFS) but by removing marked states from the external adjacency list representation by a file scan. This is possible as the adjacency is explicitly represented on disk and done by generating a simplified copy of the graph and writing it to disk. Successors in the unexplored adjacency lists that are visited are marked not to be generated again, such that all states in the internal visited list can be eliminated. As with external BFS in explicit graphs,

phases where the internal buffer for the visited state set becomes full, in which case it is flushed. Duplicates are eliminated not via sorting (as in the case of external BFS) but by removing marked states from the external adjacency list representation by a file scan. This is possible as the adjacency is explicitly represented on disk and done by generating a simplified copy of the graph and writing it to disk. Successors in the unexplored adjacency lists that are visited are marked not to be generated again, such that all states in the internal visited list can be eliminated. As with external BFS in explicit graphs,  I/Os are due to the unstructured access to the external adjacency list. Computing strongly connected components in explicit graphs also takes

I/Os are due to the unstructured access to the external adjacency list. Computing strongly connected components in explicit graphs also takes  I/Os.

I/Os.

Dropping the term of  I/O as with external BFS, however, is a challenge. For implicit graphs, no access to an external adjacency list is possible, so that we cannot access the search graph that has not been seen so far. Therefore, the major problem for external DFS exploration in implicit graphs is that adjacencies defining the successor relation cannot be filtered out as done for explicit graphs.

I/O as with external BFS, however, is a challenge. For implicit graphs, no access to an external adjacency list is possible, so that we cannot access the search graph that has not been seen so far. Therefore, the major problem for external DFS exploration in implicit graphs is that adjacencies defining the successor relation cannot be filtered out as done for explicit graphs.

8.5.3. External Explicit Graph Breadth-First Search

Recall the standard internal memory BFS algorithm, visiting each reachable node of the input problem graph G one by one utilizing a FIFO queue. After a node is extracted, its adjacency list (the sets of successors in G) is examined, and those that haven't been visited so far are inserted into the queue in turn. Naively running the standard internal BFS algorithm in the same way in external memory will result in  I/Os for unstructured accesses to the adjacency lists, and

I/Os for unstructured accesses to the adjacency lists, and  I/Os to check if successor nodes have already been visited. The latter task is considerably easier for undirected graphs, since duplicates are constrained to be located in adjacent levels.

I/Os to check if successor nodes have already been visited. The latter task is considerably easier for undirected graphs, since duplicates are constrained to be located in adjacent levels.

The algorithm of Munagala and Ranade improves I/O complexity for the case of undirected graphs, in which duplicates are constrained to be located in adjacent levels.

The algorithm builds  from

from  as follows: Let

as follows: Let  be the multiset of successors of nodes in

be the multiset of successors of nodes in  ;

;  is created by concatenating all adjacency lists of nodes in

is created by concatenating all adjacency lists of nodes in  . Then the algorithm removes duplicates by external sorting followed by an external scan. Since the resulting list

. Then the algorithm removes duplicates by external sorting followed by an external scan. Since the resulting list  is still sorted, filtering out the nodes already contained in the sorted lists

is still sorted, filtering out the nodes already contained in the sorted lists  or

or  is possible by parallel scanning. This completes the generation of

is possible by parallel scanning. This completes the generation of  . Set U maintains all unvisited nodes necessary to be looked at when the graph is not completely connected. Algorithm 8.1 provides the implementation of the algorithm of Munagala and Ranade in pseudo code. The algorithm can record the nodes' BFS level in additional

. Set U maintains all unvisited nodes necessary to be looked at when the graph is not completely connected. Algorithm 8.1 provides the implementation of the algorithm of Munagala and Ranade in pseudo code. The algorithm can record the nodes' BFS level in additional  time using an external array.

time using an external array.

Theorem 8.1

(Efficiency Explicit Graph External BFS) On an undirected explicit problem graph, the algorithm of Munagala and Ranade requires at most  I/Os to compute the BFS level for each state.

I/Os to compute the BFS level for each state.

Proof

For the correctness argument, we assume that the state levels  have already been assigned to the correct BFS level. Now we consider a successor v of a node

have already been assigned to the correct BFS level. Now we consider a successor v of a node  : The distance from s to v is at least

: The distance from s to v is at least  because otherwise the distance of u would be less than

because otherwise the distance of u would be less than  . Thus,

. Thus,  . Therefore, we can correctly assign

. Therefore, we can correctly assign  to

to  .

.

For the complexity argument we assume that after preprocessing, the graph is stored in adjacency-list representation. Hence, successor generation takes  I/Os. Duplicate elimination within the successor set takes

I/Os. Duplicate elimination within the successor set takes  I/Os. Parallel scanning can be done using

I/Os. Parallel scanning can be done using  I/Os. Since

I/Os. Since  and

and  , the execution of external BFS requires

, the execution of external BFS requires  time, where

time, where  is due to the external representation of the graph and the initial reconfiguration time to enable efficient successor generation.

is due to the external representation of the graph and the initial reconfiguration time to enable efficient successor generation.

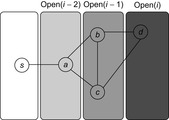

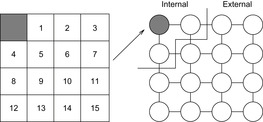

An example is provided in Figure 8.3. When generating  we unify

we unify  with

with  . Removing the duplicates in

. Removing the duplicates in  yields set

yields set  . Removing

. Removing  reduces the set to

reduces the set to  ; omitting

; omitting  results in the final node set

results in the final node set  .

.

The bottleneck of the algorithm are the  unstructured accesses to adjacency lists. The following refinement of Mehlhorn and Meyer consists of a preprocessing and a BFS phase, arriving at a complexity of

unstructured accesses to adjacency lists. The following refinement of Mehlhorn and Meyer consists of a preprocessing and a BFS phase, arriving at a complexity of  I/Os.

I/Os.

The preprocessing phase partitions the graph into K disjoint subgraphs  with small internal shortest path distances; the adjacency lists are accordingly partitioned into consecutively stored sets

with small internal shortest path distances; the adjacency lists are accordingly partitioned into consecutively stored sets  as well. The partitions are created by choosing seed nodes independently with uniform probability

as well. The partitions are created by choosing seed nodes independently with uniform probability  . Then K BFS are run in parallel, starting from the seed nodes, until all nodes of the graph have been assigned to a subgraph. In each round, the active adjacency lists of nodes lying on the boundary of their partition are scanned; the requested destination nodes are labeled with the partition identifier, and are sorted (ties between partitions are arbitrarily broken). Then, a parallel scan of the sorted requests and the graph representation can extract the unvisited part of the graph, as well as label the new boundary nodes and generate the active adjacency lists for the next round. The expected I/O bound for the graph partitioning is

. Then K BFS are run in parallel, starting from the seed nodes, until all nodes of the graph have been assigned to a subgraph. In each round, the active adjacency lists of nodes lying on the boundary of their partition are scanned; the requested destination nodes are labeled with the partition identifier, and are sorted (ties between partitions are arbitrarily broken). Then, a parallel scan of the sorted requests and the graph representation can extract the unvisited part of the graph, as well as label the new boundary nodes and generate the active adjacency lists for the next round. The expected I/O bound for the graph partitioning is  ; the expected shortest path distance between any two nodes within a subgraph is

; the expected shortest path distance between any two nodes within a subgraph is  . The main idea of the second phase is to replace the nodewise access to adjacency lists by a scanning operation on a file H that contains all

. The main idea of the second phase is to replace the nodewise access to adjacency lists by a scanning operation on a file H that contains all  in sorted order such that the current BFS level has at least one node in

in sorted order such that the current BFS level has at least one node in  . All subgraph adjacency lists in

. All subgraph adjacency lists in  are merged with H completely, not node by node. Since the shortest path within a partition is of order

are merged with H completely, not node by node. Since the shortest path within a partition is of order  , each

, each  stays in H accordingly for at most

stays in H accordingly for at most  levels. The second phase uses

levels. The second phase uses  I/Os in total; choosing

I/Os in total; choosing  , we arrive at a complexity of

, we arrive at a complexity of  I/Os. An alternative to the randomized strategy of generating the partition described here is a deterministic variant using a Euler tour around a minimum spanning tree. Thus, the bound also holds in the worst case.

I/Os. An alternative to the randomized strategy of generating the partition described here is a deterministic variant using a Euler tour around a minimum spanning tree. Thus, the bound also holds in the worst case.

8.6. External Implicit Graph Search

An implicit graph is a graph that is not residing on disk but generated by successively applying a set of actions to nodes selected from the search frontier. The advantage in implicit search is that the graph is generated by a set of rules, and hence no disk accesses for the adjacency lists are required.

Considering the I/O complexities, bounds like those that include  are rather misleading, since we are often trying to avoid generating all nodes. Hence, value

are rather misleading, since we are often trying to avoid generating all nodes. Hence, value  is needed only to derive worst-case bounds. In almost all cases,

is needed only to derive worst-case bounds. In almost all cases,  can safely be substituted by the number of expanded nodes.

can safely be substituted by the number of expanded nodes.

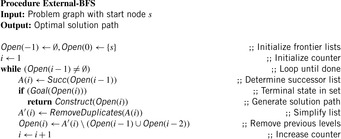

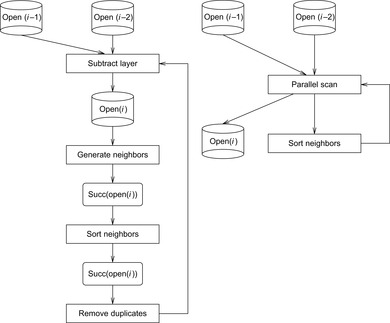

8.6.1. Delayed Duplicate Detection for BFS

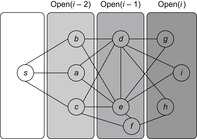

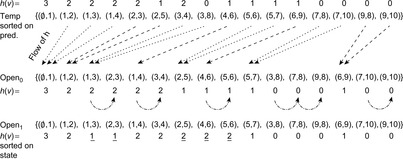

A variant of Munagala and Ranade's algorithm for BFS in implicit graphs has been coined with the term delayed duplicate detection for frontier search. Let s be the initial node, and  be the implicit successor generation function. The algorithm maintains BFS layers on disk. Layer

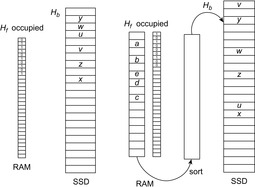

be the implicit successor generation function. The algorithm maintains BFS layers on disk. Layer  is scanned and the set of successors are put into a buffer of a size close to the main memory capacity. If the buffer becomes full, internal sorting followed by a duplicate elimination phase generates a sorted duplicate-free node sequence in the buffer that is flushed to disk. The outcome of this phase are k presorted files. Note that delayed internal duplicate elimination can be improved by using hash tables for the blocks before being flushed to disk. Since the node set in the hash table has to be stored anyway, the savings by early duplicate detection are often small.

is scanned and the set of successors are put into a buffer of a size close to the main memory capacity. If the buffer becomes full, internal sorting followed by a duplicate elimination phase generates a sorted duplicate-free node sequence in the buffer that is flushed to disk. The outcome of this phase are k presorted files. Note that delayed internal duplicate elimination can be improved by using hash tables for the blocks before being flushed to disk. Since the node set in the hash table has to be stored anyway, the savings by early duplicate detection are often small.

In the next step, external merging is applied to unify the files into  by a simultaneous scan. The size of the output files is chosen such that a single pass suffices. Duplicates are eliminated. Since the files were presorted, the complexity is given by the scanning time of all files. We also have to eliminate

by a simultaneous scan. The size of the output files is chosen such that a single pass suffices. Duplicates are eliminated. Since the files were presorted, the complexity is given by the scanning time of all files. We also have to eliminate  and

and  from

from  to avoid recomputations; that is, nodes extracted from the external queue are not immediately deleted, but kept until the layer has been completely generated and sorted, at which point duplicates can be eliminated using a parallel scan. The process is repeated until

to avoid recomputations; that is, nodes extracted from the external queue are not immediately deleted, but kept until the layer has been completely generated and sorted, at which point duplicates can be eliminated using a parallel scan. The process is repeated until  becomes empty, or the goal has been found.

becomes empty, or the goal has been found.

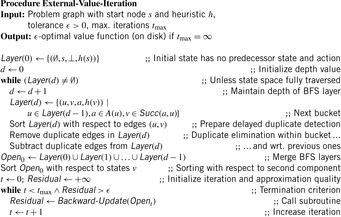

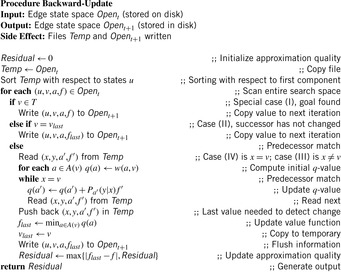

The corresponding pseudo code is shown in Algorithm 8.2. Note that the explicit partition of the set of successors into blocks is implicit. Termination is not shown, but imposes no additional implementation problem.

Theorem 8.2

(Efficiency Implicit External BFS) On an undirected implicit problem graph, external BFS with delayed duplicate elimination requires at most  I/Os.

I/Os.

Proof

The proof for implicit problem graphs is essentially the same for explicit problem graphs with the exception that the access to the external device for generating the successors of a state resulting in at most  I/Os is not needed.

I/Os is not needed.

As with the algorithm of Munagala and Ranade, delayed duplicate detection applies  I/Os. As no explicit access to the adjacency list is needed, by

I/Os. As no explicit access to the adjacency list is needed, by  and

and  , the total execution time is

, the total execution time is  I/Os.

I/Os.

In search problems with a bounded branching factor we have  , and thus the complexity for implicit external BFS reduces to

, and thus the complexity for implicit external BFS reduces to  I/Os. If we keep each

I/Os. If we keep each  in a separate file for sparse problem graphs (e.g., simple chains), file opening and closing would accumulate to

in a separate file for sparse problem graphs (e.g., simple chains), file opening and closing would accumulate to  I/Os. The solution for this case is to store the nodes in

I/Os. The solution for this case is to store the nodes in  ,

,  , and so forth, consecutively in internal memory. Therefore, I/O is needed, only if a level has at most B nodes.

, and so forth, consecutively in internal memory. Therefore, I/O is needed, only if a level has at most B nodes.

The algorithm shares similarities with the internal frontier search algorithm (see Ch. 6) that was used for solving the Multiple Sequence Alignment problem. In fact, the implementation has been applied to external memory search with considerable success. The BFS algorithm extends to graphs with bounded locality. For this case and to ease the description of upcoming algorithms, we assume to be given a general file subtraction procedure as implemented in Algorithm 8.3.

In an internal, not memory-restricted setting, a plan is constructed by backtracking from the goal node to the start node. This is facilitated by saving with every node a pointer to its predecessor. For memory-limited frontier search, a divide-and-conquer solution reconstruction is needed for which certain relay layers have to be stored in the main memory. In external search divide-and-conquer solution reconstruction and relay layers are not needed, since the exploration fully resides on disk.

There is one subtle problem: predecessors of the pointers are not available on disk. This is resolved as follows. Plans are reconstructed by saving the predecessor together with every state, by scanning with decreasing depth the stored files, and by looking for matching predecessors. Any reached node that is a predecessor of the current node is its predecessor on an optimal solution path. This results in a I/O complexity that is at most linear to scanning time  .

.

Even if conceptually simpler, there is no need to store the Open list in different files  ,

,  . We may store successive layers appended in one file.

. We may store successive layers appended in one file.

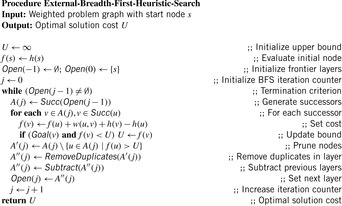

8.6.2. * External Breadth-First Branch-and-Bound

In weighted graphs, external BFS with delayed duplicate detection does not guarantee an optimal solution. A natural extension of BFS is to continue the search when a goal is found and keep on searching until a better goal is found or the search space is exhausted. In searching with nonadmissible heuristics we hardly can prune states with an evaluation larger than the current one. Essentially, we are forced to look at all states. However, if  with monotone heuristic function h we can prune the exploration.

with monotone heuristic function h we can prune the exploration.

For the domains where cost  is monotonically increasing, external breadth-first branch-and-bound (external BFBnB) with delayed duplicate detection does not prune away any node that is on the optimal solution path and ultimately finds the optimal solution. In Algorithm 8.4, the algorithm is presented in pseudo code. The sets Open denote the BFS layers and the sets A,

is monotonically increasing, external breadth-first branch-and-bound (external BFBnB) with delayed duplicate detection does not prune away any node that is on the optimal solution path and ultimately finds the optimal solution. In Algorithm 8.4, the algorithm is presented in pseudo code. The sets Open denote the BFS layers and the sets A,  ,

,  are temporary variables to construct the search frontier for the next iteration. States with

are temporary variables to construct the search frontier for the next iteration. States with  are pruned and states with

are pruned and states with  lead to a bound that is updated.

lead to a bound that is updated.

Theorem 8.3

(Cost-Optimality External BFBnB with Delayed Duplicate Detection) For a state space with  , where g denotes the depth and h is a consistent estimate, external BFBnB with delayed duplicate detection terminates with the optimal solution.

, where g denotes the depth and h is a consistent estimate, external BFBnB with delayed duplicate detection terminates with the optimal solution.

Proof

In BFBnB with cost function  , where g is the depth of the search and h a consistent search heuristic, every duplicate node with a smaller depth has been explored with a smaller f-value. This is simple to see as the h-values of the query node and the duplicate node match, and BFS generates a duplicate node with a smaller g-value first. Moreover, u is safely pruned if

, where g is the depth of the search and h a consistent search heuristic, every duplicate node with a smaller depth has been explored with a smaller f-value. This is simple to see as the h-values of the query node and the duplicate node match, and BFS generates a duplicate node with a smaller g-value first. Moreover, u is safely pruned if  exceeds the current threshold, as an extension of the path to u to a solution will have a larger f-value. Since external BFBnB with delayed duplicate detection expands all nodes u with

exceeds the current threshold, as an extension of the path to u to a solution will have a larger f-value. Since external BFBnB with delayed duplicate detection expands all nodes u with  the algorithm terminates with the optimal solution.

the algorithm terminates with the optimal solution.

Furthermore, we can easily show that if there exists more than one goal node in the state space with a different solution cost, then external BFBnB with delayed duplicate detection will explore less nodes than a complete external BFS with delayed duplicate detection.

Theorem 8.4

(Gain of External BFBnB wrt. External BFS) If  is the number of nodes expanded by external BFBnB with delayed duplicate detection for

is the number of nodes expanded by external BFBnB with delayed duplicate detection for  , and

, and  the number of nodes expanded by a complete run of external BFS with delayed duplicate detection, then

the number of nodes expanded by a complete run of external BFS with delayed duplicate detection, then  .

.

Proof

External BFBnB does not change the order in which nodes are looked at during a complete external BFS. There can be two cases. In the first case, there exists just one goal node t, which is also the last node in a BFS tree. For this case, clearly  . If there exists more than one goal node in the search tree, let

. If there exists more than one goal node in the search tree, let  be the two goal nodes with

be the two goal nodes with  and

and  . Since

. Since  will be expanded first,

will be expanded first,  will be used as the pruning value for all the next iterations. In this case, there does not exist any node u in the search tree between

will be used as the pruning value for all the next iterations. In this case, there does not exist any node u in the search tree between  and

and  with

with  ,

,  , otherwise

, otherwise  .

.

Table 8.2 gives an impression of cost-optimal search in a selected optimization problem, reporting the number of nodes in each layer obtained after refinement with respect to the previous layers. An entry in the goal cost column corresponds to the best goal cost found in that layer.

For unit cost search graphs the external branch-and-bound algorithm simplifies to external breadth-first heuristic search (see Ch. 6).

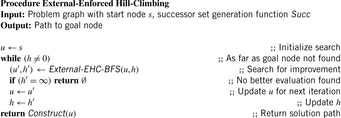

8.6.3. * External Enforced Hill-Climbing

In Chapter 6 we have introduced enforced hill-climbing (a.k.a. iterative improvement) as a more conservative form of hill-climbing search. Starting from a start state, a (breadth-first) search for a successor with a better heuristic value is started. As soon as such a successor is found, the hash tables are cleared and a fresh search is started. The process continues until the goal is reached. Since the algorithm performs a complete search on every state with a strictly better heuristic value, it is guaranteed to find a solution in directed graphs without dead-ends.

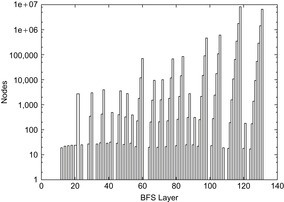

Having external BFS in hand, an external algorithm for enforced hill-climbing can be constructed by utilizing the heuristic estimates. In Algorithm 8.5 we show the algorithm in pseudo-code format. The externalization is embedded in the subprocedure Algorithm 8.6 that performs external BFS for a state that has an improved heuristic estimate. Figure 8.5 shows parts of an exploration for solving a action planning instance. It provides a histogram (logarithmic scale) on the number of nodes in BFS layers for external enforced hill-climbing in a selected planning problem.

Theorem 8.5

(Complexity External Enforced Hill-Climbing) Let  be the heuristic estimate of the initial state. External enforced hill-climbing with delayed duplicate elimination in a problem graph with bounded locality requires at most

be the heuristic estimate of the initial state. External enforced hill-climbing with delayed duplicate elimination in a problem graph with bounded locality requires at most  I/Os.

I/Os.

Enforced hill-climbing has one important drawback: its results are not optimal. Moreover, in directed search spaces with unrecognized dead-ends it can be trapped, without finding a solution to a solvable problem.

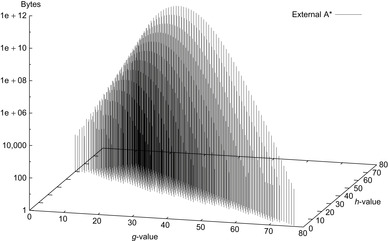

8.6.4. External A*

In the following we study how to extend external breadth-first exploration in implicit graphs to an A*-like search. If the heuristic is consistent, then on each search path, the evaluation function f is nondecreasing. No successor will have a smaller f-value than the current one. Therefore, the A* algorithm, which traverses the node set in f-order, expands each node at most once. Take, for example, a sliding-tile puzzle. As the Manhattan distance heuristic is consistent, for every two successive nodes u and v the difference of the according estimate evaluations  is either −1 or 1. By the increase in the g-value, the f-values remain either unchanged, or

is either −1 or 1. By the increase in the g-value, the f-values remain either unchanged, or  .

.

As earlier, external A* maintains the search frontier on disk, possibly partitioned into main memory–size sequences. In fact, the disk files correspond to an external representation of a bucket implementation of a priority queue data structure (see Ch. 3). In the course of the algorithm, each bucket addressed with index i contains all nodes u in the set Open that have priority  . An external representation of this data structure will memorize each bucket in a different file.

. An external representation of this data structure will memorize each bucket in a different file.

We introduce a refinement of the data structure that distinguishes between nodes with different g-values, and designates bucket  to all nodes u with path length

to all nodes u with path length  and heuristic estimate

and heuristic estimate  . Similar to external BFS, we do not change the identifier Open to separate generated from expanded nodes. In external A* (see Alg. 8.7), bucket

. Similar to external BFS, we do not change the identifier Open to separate generated from expanded nodes. In external A* (see Alg. 8.7), bucket  refers to nodes that are in the current search frontier or belong to the set of expanded nodes. During the exploration process, only nodes from one currently active bucket

refers to nodes that are in the current search frontier or belong to the set of expanded nodes. During the exploration process, only nodes from one currently active bucket  , where

, where  , are expanded, up to its exhaustion. Buckets are selected in lexicographic order for

, are expanded, up to its exhaustion. Buckets are selected in lexicographic order for  ; then, the buckets

; then, the buckets  with

with  and

and  are closed, whereas the buckets

are closed, whereas the buckets  with

with  or with

or with  and

and  are open. Depending on the actual node expansion progress, nodes in the active bucket are either open or closed.

are open. Depending on the actual node expansion progress, nodes in the active bucket are either open or closed.

To estimate the maximum number of buckets once more we consider Figure 7.14 as introduced in the analysis of the number of iteration in Symbolic A* (see Ch. 7), in which the g-values are plotted with respect to the h-values, such that nodes with the same  value are located on the same diagonal. For nodes that are expanded in

value are located on the same diagonal. For nodes that are expanded in  the successors fall into

the successors fall into  ,

,  , or

, or  . The number of naughts for each diagonal is an upper bound on the number of buckets that are needed. In Chapter 7, we have already seen that the number is bounded by

. The number of naughts for each diagonal is an upper bound on the number of buckets that are needed. In Chapter 7, we have already seen that the number is bounded by  .

.

By the restriction for f-values in the (n2 − 1)-Puzzle only about half the number of buckets have to be allocated. Note that  is not known in advance, so that we have to construct and maintain the files on-the-fly.

is not known in advance, so that we have to construct and maintain the files on-the-fly.

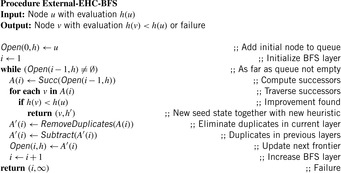

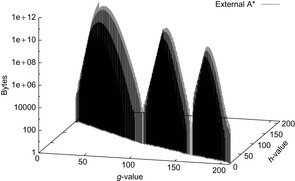

Figure 8.5 shows the memory profile of external A* on a Thirty-Five-Puzzle instance (with 14 tiles permuted). The exploration started in bucket  and terminated while expanding bucket

and terminated while expanding bucket  . Similar to external BFS but in difference to ordinary A*, external A* terminates while generating the goal, since all states in the search frontier with a smaller g-value have already been expanded. For this experiment three disjoint 3-tile and three disjoint 5-tile pattern databases were loaded, which, together with the buckets for reading and flushing, consumed about 4.9 gigabytes of RAM. The total disk space taken was 1,298,389,180,652 bytes, or 1.2 terabytes, with a state vector of

. Similar to external BFS but in difference to ordinary A*, external A* terminates while generating the goal, since all states in the search frontier with a smaller g-value have already been expanded. For this experiment three disjoint 3-tile and three disjoint 5-tile pattern databases were loaded, which, together with the buckets for reading and flushing, consumed about 4.9 gigabytes of RAM. The total disk space taken was 1,298,389,180,652 bytes, or 1.2 terabytes, with a state vector of  bytes: 32 bytes for the state vector plus information for incremental heuristic evaluation; 1 byte for each value stored, multiplied by six sets of at most 12 pattern databases plus 1 value each for their sum. Factor 2 is due to symmetry lookups. The exploration took about two weeks.

bytes: 32 bytes for the state vector plus information for incremental heuristic evaluation; 1 byte for each value stored, multiplied by six sets of at most 12 pattern databases plus 1 value each for their sum. Factor 2 is due to symmetry lookups. The exploration took about two weeks.

The following result restricts duplicate detection to buckets of the same h-value.

Lemma 8.1

In external A* for all  with

with  we have

we have  .

.

Proof

As in the algorithm of Munagala and Ranade, we can exploit the observation that in an undirected problem graph, duplicates of a node with BFS level i can at most occur in levels i,  , and

, and  . In addition, since h is a total function, we have

. In addition, since h is a total function, we have  if

if  .

.

For ease of describing the algorithm, we consider each bucket for the Open list as a different file. Very sparse graphs can lead to bad I/O performance, because they may lead to buckets that contain by far less than B elements and dominate the I/O complexity. For the following, we generally assume large graphs for which  and

and  .

.

Algorithm 8.7 depicts the pseudo code of the external A* algorithm for consistent estimates and unit cost and undirected graphs. The algorithm maintains the two values  and

and  to address the currently considered buckets. The buckets of

to address the currently considered buckets. The buckets of  are traversed for increasing

are traversed for increasing  up to

up to  . According to their different h-values, successors are arranged into three different frontier lists:

. According to their different h-values, successors are arranged into three different frontier lists:  ,

,  , and

, and  ; hence, at each instance only four buckets have to be accessed by I/O operations. For each of them, we keep a separate buffer of size

; hence, at each instance only four buckets have to be accessed by I/O operations. For each of them, we keep a separate buffer of size  ; this will reduce the internal memory requirements to M. If a buffer becomes full then it is flushed to disk. As in BFS it is practical to presort buffers in one bucket immediately by an efficient internal algorithm to ease merging, but we could equivalently sort the unsorted buffers for one bucket externally.

; this will reduce the internal memory requirements to M. If a buffer becomes full then it is flushed to disk. As in BFS it is practical to presort buffers in one bucket immediately by an efficient internal algorithm to ease merging, but we could equivalently sort the unsorted buffers for one bucket externally.

There can be two cases that can give rise to duplicates within an active bucket (see Fig. 8.6, black bucket): two different nodes of the same predecessor bucket generating a common successor, and two nodes belonging to different predecessor buckets generating a duplicate. These two cases can be dealt with by merging all the presorted buffers corresponding to the same bucket, resulting in one sorted file. This file can then be scanned to remove the duplicate nodes from it. In fact, both the merging and duplicates removal can be done simultaneously.

Another special case of the duplicate nodes exists when the nodes that have already been evaluated in the upper layers are generated again (see Fig. 8.6). These duplicate nodes have to be removed by a file subtraction process for the next active bucket  by removing any node that has appeared in

by removing any node that has appeared in  and

and  (buckets shaded in light gray). This file subtraction can be done by a mere parallel scan of the presorted files and by using a temporary file in which the intermediate result is stored. It suffices to remove duplicates only in the bucket that is expanded next,

(buckets shaded in light gray). This file subtraction can be done by a mere parallel scan of the presorted files and by using a temporary file in which the intermediate result is stored. It suffices to remove duplicates only in the bucket that is expanded next,  . The other buckets might not have been fully generated, and hence we can save the redundant scanning of the files for every iteration of the innermost while loop.

. The other buckets might not have been fully generated, and hence we can save the redundant scanning of the files for every iteration of the innermost while loop.

When merging the presorted sets  with the previously existing Open buckets (both residing on disk), duplicates are eliminated, leaving the sets Open

with the previously existing Open buckets (both residing on disk), duplicates are eliminated, leaving the sets Open  , Open

, Open  , and Open

, and Open  duplicate free. Then the next active bucket Open

duplicate free. Then the next active bucket Open  is refined not to contain any node in Open

is refined not to contain any node in Open  or Open

or Open  . This can be achieved through a parallel scan of the presorted files and by using a temporary file in which the intermediate result is stored, before Open

. This can be achieved through a parallel scan of the presorted files and by using a temporary file in which the intermediate result is stored, before Open

is updated. It suffices to perform file subtraction lazily only for the bucket that is expanded next.

is updated. It suffices to perform file subtraction lazily only for the bucket that is expanded next.

Theorem 8.6

(Optimality of External A*) In a unit cost graph external A* is complete and optimal.

Proof

Since external A* simulates A* and only changes the order of expanded nodes that have the same f-value, completeness and optimality are inherited from the properties shown for A*.

Theorem 8.7

(I/O Performance of External A* in Undirected Graphs) The complexity for external A* in an implicit unweighted and undirected graph with a consistent estimate is bounded by  I/Os.

I/Os.

Proof

By simulating internal A*, delayed duplicate elimination ensures that each edge in the problem graph is looked at at most once. Similar to the analysis for external implicit BFS  , I/Os are needed to eliminate duplicates in the successor lists. Since each node is expanded at most once, this adds

, I/Os are needed to eliminate duplicates in the successor lists. Since each node is expanded at most once, this adds  I/Os to the overall runtime. Filtering, evaluating nodes, and merging lists is available in scanning time of all buckets in consideration. During the exploration, each bucket Open will be referred to at most six times, once for expansion, at most three times as a successor bucket, and at most two times for duplicate elimination as a predecessor of the same h-value as the currently active bucket. Therefore, evaluating, merging, and file subtraction add

I/Os to the overall runtime. Filtering, evaluating nodes, and merging lists is available in scanning time of all buckets in consideration. During the exploration, each bucket Open will be referred to at most six times, once for expansion, at most three times as a successor bucket, and at most two times for duplicate elimination as a predecessor of the same h-value as the currently active bucket. Therefore, evaluating, merging, and file subtraction add  I/Os to the overall runtime. Hence, the total execution time is

I/Os to the overall runtime. Hence, the total execution time is  I/Os.

I/Os.

If we additionally have  , the complexity reduces to

, the complexity reduces to  I/Os. We next generalize the result to directed graphs with bounded locality.

I/Os. We next generalize the result to directed graphs with bounded locality.

Theorem 8.8

(I/O Performance of External A* in Graphs with Bounded Locality) The complexity for external A* in an implicit unweighted problem graph with bounded locality and consistent estimate is bounded by  I/Os.

I/Os.

Proof

Consistency implies that we do not have successors with an f-value that is smaller than the current minimum. If we subtract a bucket  from

from  with

with  and

and  being smaller than the locality l, then we arrive at full duplicate detection. Consequently, during the exploration each problem graph node and edge is considered at most once. The efforts due to removing nodes in each bucket individually accumulate to at most

being smaller than the locality l, then we arrive at full duplicate detection. Consequently, during the exploration each problem graph node and edge is considered at most once. The efforts due to removing nodes in each bucket individually accumulate to at most  I/Os, and subtraction adds

I/Os, and subtraction adds  I/Os to the overall complexity.

I/Os to the overall complexity.

Internal costs have been neglected in this analysis. Since each node is considered only once for expansion, the internal costs are  times the time

times the time  for successor generation, plus the efforts for internal duplicate elimination and sorting. By setting the weight of all edges

for successor generation, plus the efforts for internal duplicate elimination and sorting. By setting the weight of all edges  to

to  for a consistent heuristic h, A* can be cast as a variant of Dijkstra's algorithm that requires internal costs of

for a consistent heuristic h, A* can be cast as a variant of Dijkstra's algorithm that requires internal costs of  ,

,  successor of

successor of  on a bucket-based priority queue. Due to consistency we have

on a bucket-based priority queue. Due to consistency we have  , so that, given

, so that, given  , internal costs are bounded by

, internal costs are bounded by  , where

, where  refers to the total internal sorting efforts.

refers to the total internal sorting efforts.

To reconstruct a solution path, we store predecessor information with each node on disk (thus doubling the state vector size), and apply backward chaining, starting with the target node. However, this is not strictly necessary: For a node in depth g, we intersect the set of possible predecessors with the buckets of depth  . Any node that is in the intersection is reachable on an optimal solution path, so that we can iterate the construction process. The time complexity is bounded by the scanning time of all buckets in consideration, namely by

. Any node that is in the intersection is reachable on an optimal solution path, so that we can iterate the construction process. The time complexity is bounded by the scanning time of all buckets in consideration, namely by  I/Os.

I/Os.

Up to this point, we have made the assumption of unit cost graphs; in the rest of this section, we generalize the algorithm to small integer weights in  . Due to consistency of the heuristic, it holds for every node u and every successor v of u that

. Due to consistency of the heuristic, it holds for every node u and every successor v of u that  . Moreover, since the graph is undirected, we equally have

. Moreover, since the graph is undirected, we equally have  , or

, or  ; hence,

; hence,  . This means that the successors of the nodes in the active bucket are no longer spread across three, but over

. This means that the successors of the nodes in the active bucket are no longer spread across three, but over  buckets. In Figure 8.7, the region of successors is shaded in dark gray, and the region of predecessors is shaded in light gray.

buckets. In Figure 8.7, the region of successors is shaded in dark gray, and the region of predecessors is shaded in light gray.

For duplicate reduction, it is sufficient to subtract the  buckets

buckets  from the active bucket

from the active bucket  prior to the expansion of its nodes (indicated by the shaded rectangle in Fig. 8.7). We assume

prior to the expansion of its nodes (indicated by the shaded rectangle in Fig. 8.7). We assume  I/Os for accessing the files is negligible.

I/Os for accessing the files is negligible.

Theorem 8.9

(I/O Performance of External A* in Nonunit Cost Graphs) The I/O complexity for external A* in an implicit undirected unit cost graph, where the weights are in  , with a consistent estimate, is bounded by

, with a consistent estimate, is bounded by  .

.

Proof

It can be shown by induction over  that no duplicates exist in smaller buckets. The claim is trivially true for

that no duplicates exist in smaller buckets. The claim is trivially true for  . In the induction step, assume to the contrary that for some node

. In the induction step, assume to the contrary that for some node  ,

,  contains a duplicate

contains a duplicate  with

with  ; let

; let  be the predecessor of v. Then, by the undirected graph structure, there must be a duplicate

be the predecessor of v. Then, by the undirected graph structure, there must be a duplicate  . But since

. But since  , this is a contradiction to the induction hypothesis.

, this is a contradiction to the induction hypothesis.

The derivation of the I/O complexity is similar to the unit cost case; the difference is that each bucket is referred to at most  times for bucket subtraction and expansion. Therefore, each edge in the problem graph is considered at most once.

times for bucket subtraction and expansion. Therefore, each edge in the problem graph is considered at most once.

If we do not impose a bound C on the maximum integer weight, or if we allow directed graphs, the runtime increases to  I/Os. For larger edge weights and

I/Os. For larger edge weights and  -values, buckets become sparse and should be handled more carefully.

-values, buckets become sparse and should be handled more carefully.

Let us consider how to externally solve Fifteen-Puzzle problem instances that cannot be solved internally with A* and the Manhattan distance estimate. Internal sorting is implemented by applying Quicksort. The external merge is performed by maintaining the file pointers for every flushed buffer and merging them into a single sorted file. Since we have a simultaneous file pointers capacity bound imposed by the operating system, a two-phase merging applies. Duplicate removal and bucket subtraction are performed on single passes through the bucket file. As said, the successor's f-value differs from the parent node by exactly 2.

In Table 8.3 we show the diagonal pattern of nodes that is developed during the exploration for a simple problem instance. Table 8.4 illustrates the impact of duplicate removal ( ) and bucket subtraction (

) and bucket subtraction ( ) for problem instances of increasing complexity. In some cases, the experiment is terminated because of the limited hard disk capacity.

) for problem instances of increasing complexity. In some cases, the experiment is terminated because of the limited hard disk capacity.

One interesting feature of our approach from a practical point of view is the ability to pause and resume the program execution in large problem instances. This is desirable, for example, in the case when the limits of secondary storage are reached, because we can resume the execution with more disk space.

8.6.5. * Lower Bound for Delayed Duplicate Detection

Is the complexity for external A* I/O-optimal?

Recall the definition of big-oh notation in Chapter 1: We say  if there are two constants

if there are two constants  and c, such that for all

and c, such that for all  we have

we have  . To devise lower bounds for external computation, the following variant of the big-oh notation is appropriate: We say

. To devise lower bounds for external computation, the following variant of the big-oh notation is appropriate: We say  if there is a constant c, such that for all M and B there is a value

if there is a constant c, such that for all M and B there is a value  , such that for all

, such that for all  we have

we have  . The classes

. The classes  and

and  are defined analogously. The intuition for universally quantifying M and B is that the adversary first chooses the machine, and then we, as the good guys, evaluate the bound.

are defined analogously. The intuition for universally quantifying M and B is that the adversary first chooses the machine, and then we, as the good guys, evaluate the bound.

External sorting in this model has the aforementioned complexity of  I/Os. As internal set inequality, set inclusion, and set disjointness require at least

I/Os. As internal set inequality, set inclusion, and set disjointness require at least  comparisons, the lower bounds on the number of I/Os for these problems are also

comparisons, the lower bounds on the number of I/Os for these problems are also  .

.

For the internal duplicate elimination problem the known lower bound on the number of comparisons needed is  , where

, where  is the multiplicity of record i. The main argument is that after the duplicate removal, the total order of the remaining records is known. This result can be lifted to external search and leads to an I/O complexity of at most

is the multiplicity of record i. The main argument is that after the duplicate removal, the total order of the remaining records is known. This result can be lifted to external search and leads to an I/O complexity of at most for external delayed duplicate detection. For the sliding-tile puzzle with two preceding buckets and a branching factor

for external delayed duplicate detection. For the sliding-tile puzzle with two preceding buckets and a branching factor  we have

we have  . For general consistent estimates in unit cost graphs we have

. For general consistent estimates in unit cost graphs we have  , with c being an upper bound on the maximal branching factor.

, with c being an upper bound on the maximal branching factor.

Theorem 8.10

(I/O Performance Optimality for External A*) If  , delayed duplicate bucket elimination in an implicit unweighted and undirected graph A* search with consistent estimates that need at least

, delayed duplicate bucket elimination in an implicit unweighted and undirected graph A* search with consistent estimates that need at least  I/O operations.

I/O operations.

Proof

Since each node gives rise to at most c successors and there are at most three preceding buckets in A* search with consistent estimates in a unit cost graph, given that previous buckets are mutually duplicate free, we have at most  nodes that are the same. Therefore, all sets

nodes that are the same. Therefore, all sets  are bounded by

are bounded by  . Since k is bounded by N we have that

. Since k is bounded by N we have that  is bounded by

is bounded by  . Therefore, the lower bound for duplicate elimination for N nodes is

. Therefore, the lower bound for duplicate elimination for N nodes is  .

.

A related lower bound also applicable to the multiple disk model establishes that solving the duplicate elimination problem with N elements having P different values needs at least  I/Os, since the depth of any decision tree for the duplicate elimination problem is at least

I/Os, since the depth of any decision tree for the duplicate elimination problem is at least  . For a search with consistent estimates and a bounded branching factor, we assume to have

. For a search with consistent estimates and a bounded branching factor, we assume to have  , so that the I/O complexity reduces to

, so that the I/O complexity reduces to  .

.

8.7. * Refinements

As an additional feature, external sorting can be avoided to some extent, by a single or a selection of hash functions that splits larger files into smaller pieces until they fit into main memory. As with the h-value in the preceding case a node and its duplicate will have the same hash address.

8.7.1. Hash-Based Duplicate Detection

Hash-based duplicate detection is designed to avoid the complexity of sorting. It is based on either one or two orthogonal hash functions. The primary hash function distributes the nodes to different files. Once a file of successors has been generated, duplicates are eliminated. The assumption is that all nodes with the same primary hash address fit into the main memory. The secondary hash function (if available) maps all duplicates to the same hash address. This approach can be illustrated by sorting a card deck of 52 cards. If we have only 13 internal memory places the best strategy is to hash cards to different files based on their suit in one scan. Next, we individually read each of the files to the main memory to sort the cards or search for duplicates.

The idea goes back to Bucket Sort. In its first phase, real numbers  are thrown into n different buckets