Chapter 11. Real-Time Search

Sven Koenig

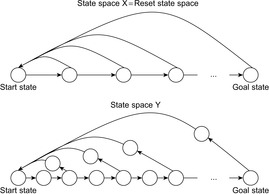

The on-line search algorithms in this chapter interleave searches and action executions. They decide on a local search space, search it, and determine which actions to execute within it. Then, they execute these actions and repeat the process from their new state until they reach a goal state.

Keywords: agent-centered search, convergence detection and speed-up, coverage, edge counting, fast h-value updates, fast learning and converging search (FALCONS), goal-directed navigation (in known and unknown terrain), learning real-time A* (LRTA*), localization, local search space, minimal lookahead, min-max learning real-time A* (min-max LRTA*), node counting, non-deterministic state space, on-line search, probabilistic learning real-time A* (probabilistic LRTA*), probabilistic state space, real-time A* (RTA*), real-time adaptive A* (RTAA*), real-time (heuristic) search, robot navigation, safely-explorable state space

A real-time search method that does not satisfy our definition but only needs a constant search time before the first action execution and between action executions, for example, has been described by Parberry (1995). Moreover, there is a line of work done by Carlos Hernndez and Pedro Meseguer on real-time search with significant improvements to LRTA* (Hernández and Meseguer, 2005, 2007a,b).

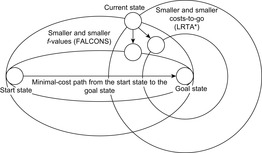

In this chapter, we describe real-time (heuristic) search and illustrate it with examples. Real-time search is sometimes used to describe search methods that only need a constant search time between action executions. However, we use a more restrictive definition of real-time search in this chapter, namely that of real-time search as a variant of agent-centered search. Interleaving or overlapping searches and action executions often has advantages for intelligent systems (agents) that interact directly with the world. Agent-centered search restricts the search to the part of the state space around the current state of the agent; for example, the current position of a mobile robot or the current board position of a game. The part of the state space around the current state of the agent is the part of the state space that is immediately relevant for the agent in its current situation (because it contains the states that the agent will soon be in) and sometimes might be the only part of the state space that the agent knows about. Agent-centered search usually does not search all the way from the start state to a goal state. Instead, it decides on the local search space, searches it, and determines which actions to execute within it. Then, it executes these actions (or only the first action) and repeats the process from its new state until it reaches a goal state.

The best-known example of agent-centered search is probably game playing, such as playing Chess (as studied in Chapter 12). In this case, the states correspond to board positions and the current state corresponds to the current board position. Game-playing programs typically perform a minimax search with a limited lookahead (i.e., search horizon) around the current board position to determine which move to perform next. The reason for performing only a limited local search is that the state spaces of realistic games are too large to perform complete searches in a reasonable amount of time and with a reasonable amount of memory. The future moves of the opponent cannot be predicted with certainty, which makes the search tasks nondeterministic. This results in an information limitation that can only be overcome by enumerating all possible moves of the opponent, which results in large search spaces. Performing agent-centered search allows game-playing programs to choose a move in a reasonable amount of time while focusing on the part of the state space that is the most relevant to the next move decision.

Traditional search methods, such as A*, first determine minimal-cost paths and then follow them. Thus, they are offline search methods. Agent-centered search methods, on the other hand, interleave search and action execution and are thus online search methods. They can be characterized as revolving search methods with greedy action-selection steps that solve suboptimal search tasks. They are suboptimal since they are looking for any path (i.e., sequence of actions) from the start state to a goal state. The sequence of actions that agent-centered search methods execute is such a path. They are revolving, because they repeat the same process until they reach a goal state. Agent-centered search methods have the following two advantages:

Time constraints: Agent-centered search methods can execute actions in the presence of soft or hard time constraints since the sizes of their local search spaces are independent of the sizes of the state spaces and can thus remain small. The objective of the search in this case is to approximately minimize the execution cost subject to the constraint that the search cost (here: runtime) between action executions is bounded from above, for example, in situations where it is more important to act reasonably in a timely manner than to minimize the execution cost after a long delay. Examples include steering decisions when driving a car or movement decisions when crossing a busy street.

Sum of search and execution cost: Agent-centered search methods execute actions before their complete consequences are known and thus are likely to incur some overhead in terms of the execution cost, but this is often outweighed by a decrease in the search cost, both because they trade off the search and execution cost and because they allow agents to gather information early in nondeterministic state spaces, which reduces the amount of search they have to perform for unencountered situations. Thus, they often decrease the sum of the search and execution cost compared to search methods that first determine minimal-cost paths and then follow them, which can be important for search tasks that need to be solved only once.

Agent-centered search methods have to ensure that they do not cycle without making progress toward a goal state. This is a potential problem since they execute actions before their consequences are completely known. Agent-centered search methods have to ensure both that it remains possible to achieve the goal and that they eventually do so. The goal remains achievable if no actions exist of which the execution makes it impossible to reach a goal state, if the agent-centered search methods can avoid the execution of such actions in case they do exist, or if the agent-centered search methods have the ability to reset the agent into the start state. Real-time search methods are agent-centered search methods that store a value, called h-value, in memory for each state that they encounter during the search and update them as the search progresses, both to focus the search and avoid cycling, which accounts for a large chunk of their search time per search episode.

11.1. LRTA*

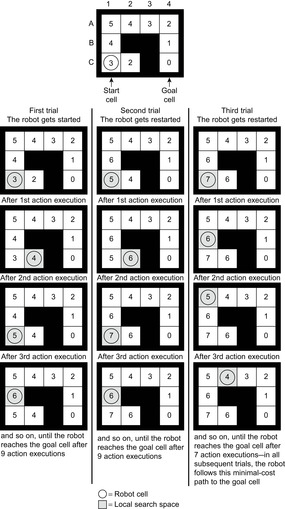

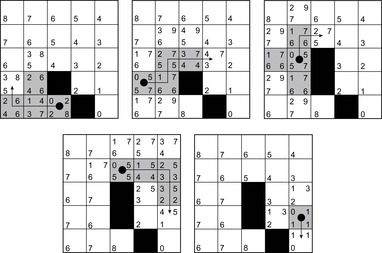

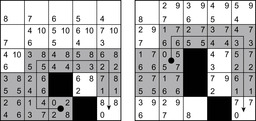

Learning real-time A* (LRTA*) is probably the most popular real-time search method, and we relate all real-time search methods in this chapter to it. The h-values of LRTA* approximate the goal distances of the states. They can be initialized using a heuristic function. They can be all zero if more informed h-values are not available. Figure 11.1 illustrates the behavior of LRTA* using a simplified goal-directed navigation task in known terrain without uncertainty about the start cell. The robot can move one cell to the north, east, south, or west (unless that cell is blocked). All action costs are one. The task of the robot is to navigate to the given goal cell and then stop. In this case, the states correspond to cells, and the current state corresponds to the current cell of the robot. We assume that there is no uncertainty in actuation and sensing. The h-values are initialized with the Manhattan distances. A robot under time pressure could reason as follows: Its current cell C1 is not a goal cell. Thus, it needs to move to one of the cells adjacent to its current cell to get to a goal cell. The cost-to-go of an action is its action cost plus the estimated goal distance of the successor state reached when executing the action, as given by the h-value of the successor state. If it moves to cell B1, then the action cost is one and the estimated goal distance from there is four as given by the h-value of cell B1. The cost-to-go of moving to cell B1 is thus five. Similarly, the cost-to-go of moving to cell C2 is three. Thus, it looks more promising to move to cell C2. Figure 11.2 visualizes the h-value surface formed by the initial h-values. However, a robot does not reach the goal cell if it always executes the move with the minimal cost-to-go and thus performs steepest descent on the initial h-value surface. It moves back and forth between cells C1 and C2 and thus cycles forever due to the local minimum of the h-value surface at cell C2.

|

| Figure 11.1 |

|

| Figure 11.2 Initial h-value surface, by Koenig (2001). |

We could avoid this problem by randomizing the action-selection process slightly, possibly together with resetting the robot into a start state (random restart) after the execution cost has become large. LRTA*, however, avoids this problem by increasing the h-values to fill the local minima in the h-value surface. Figure 11.3 shows how LRTA* performs a search around the current state of the robot to determine which action to execute next if it breaks ties among actions in the following order: north, east, south, and west. It operates as follows:

Local Search Space–Generation Step: LRTA* decides on the local search space. The local search space can be any set of nongoal states that contains the current state. We say that a local search space is minimal if and only if it contains only the current state. We say that it is maximal if and only if it contains all nongoal states. In Figure 11.1, for example, the local search spaces are minimal. In this case, LRTA* can construct a search tree around the current state. The local search space consists of all nonleaves of the search tree. Figure 11.3 shows the search tree for deciding which action to execute in the start cell C1.

Value-Update Step: LRTA* assigns each state in the local search space its correct goal distance under the assumption that the h-values of the states just outside of the local search space correspond to their correct goal distances. In other words, it assigns each state in the local search space the minimum of the execution cost for getting from it to a state just outside of the local search space, plus the estimated remaining execution cost for getting from there to a goal state, as given by the h-value of the state just outside of the local search space. The reason for this is that the local search space does not include any goal state. Thus, a minimal-cost path from a state in the local search space to a goal state has to contain a state just outside of the local search space. Thus, the smallest estimated cost of all paths from the state via a state just outside of the local search space to a goal state is an estimate of the goal distance of the state. Since this lookahead value is a more accurate estimate of the goal distance of the state in the local search space, LRTA* stores it in memory, overwriting the existing h-value of the state.

In the example, the local search space is minimal and LRTA* can simply update the h-value of the state in the local search space according to the following rule provided that it ignores all actions that can leave the current state unchanged. LRTA* first assigns each leaf of the search tree the h-value of the corresponding state. The leaf that represents cell B1 is assigned an h-value of four, and the leaf that represents cell C2 is assigned an h-value of two. This step is marked in Figure 11.3. The new h-value of the root of the search tree, that represents cell C1, then is the minimum of the costs-to-go of the actions that can be executed in it, that is, the minimum of five and three. ②. This h-value is then stored in memory for cell C1 . Figure 11.4 shows the result of one value-update step for a different example where the local search space is nonminimal.

Action-Selection Step: LRTA* selects an action for execution that is the beginning of a path that promises to minimize the execution cost from the current state to a goal state (ties can be broken arbitrarily). In the example, LRTA* selects the action with the minimal cost-to-go. Since moving to cell B1 has cost-to-go five and moving to cell C2 has cost-to-go three, LRTA* decides to move to cell C2.

Action-Execution Step: LRTA* executes the selected action and updates the state of the robot, and repeats the process from the new state of the robot until the robot reaches a goal state. If its new state is outside of the local search space, then it needs to repeat the local search space–generation and value-update steps. Otherwise, it can repeat these steps or proceed directly to the action-selection step. Executing more actions before generating the next local search space typically results in a smaller search cost (because LRTA* needs to search less frequently); executing fewer actions typically results in a smaller execution cost (because LRTA* selects actions based on more information).

|

| Figure 11.3 Illustration of LRTA*, by Koenig (2001). |

|

| Figure 11.4 LRTA* with a larger local search space, by Koenig (2001). |

The left column of Figure 11.1 shows the result of the first couple of steps of LRTA* for the example. The values in grey cells are the new h-values calculated by the value-update step because the corresponding cells are part of the local search space. The robot reaches the goal cell after nine action executions; that is, with execution cost nine. We assumed here that the terrain is known and the reason for using real-time search is time pressure. Another reason for using real-time search could be missing knowledge of the terrain and the resulting desire to restrict the local search spaces to the known parts of the terrain.

We now formalize LRTA*, using the following assumptions and notation for deterministic and nondeterministic search tasks, which will be used throughout this chapter: S denotes the finite set of states of the state space,  the start state, and

the start state, and  the set of goal states.

the set of goal states.  denotes the finite, nonempty set of (potentially nondeterministic) actions that can be executed in state

denotes the finite, nonempty set of (potentially nondeterministic) actions that can be executed in state  .

.  denotes the action cost that results from the execution of action

denotes the action cost that results from the execution of action  in state

in state  . We assume that all action costs are one unless stated otherwise.

. We assume that all action costs are one unless stated otherwise.  denotes the minimal action cost of any action.

denotes the minimal action cost of any action.  denotes the set of successor states that can result from the execution of action

denotes the set of successor states that can result from the execution of action  in state

in state  .

.  denotes the state that results from an actual execution of action

denotes the state that results from an actual execution of action  in state

in state  . In deterministic state spaces,

. In deterministic state spaces,  contains only one state, and we use

contains only one state, and we use  also to denote this state. Thus,

also to denote this state. Thus,  in this case. An agent starts in the start state and has to move to a goal state. The agent always observes what its current state is and then has to select and execute its next action, which results in a state transition to one of the possible successor states. The search task is solved when the agent reaches a goal state. We denote the number of states by

in this case. An agent starts in the start state and has to move to a goal state. The agent always observes what its current state is and then has to select and execute its next action, which results in a state transition to one of the possible successor states. The search task is solved when the agent reaches a goal state. We denote the number of states by  and the number of state-action pairs (loosely called actions) by

and the number of state-action pairs (loosely called actions) by  ; that is, an action that is applicable in more than one state counts more than once. Moreover, δ (u, T) ≥ 0 denotes the goal distance of state

; that is, an action that is applicable in more than one state counts more than once. Moreover, δ (u, T) ≥ 0 denotes the goal distance of state  ; that is, the minimal execution cost with which a goal state can be reached from state u. The depth d of the state space is its maximal goal distance,

; that is, the minimal execution cost with which a goal state can be reached from state u. The depth d of the state space is its maximal goal distance,  . The expression

. The expression  returns one of the elements

returns one of the elements  that minimize f (x); that is, one of the elements from the set

that minimize f (x); that is, one of the elements from the set  . We assume that all search tasks are deterministic unless stated otherwise, and discuss nondeterministic state spaces in 11.5.7 and 11.6.4.

. We assume that all search tasks are deterministic unless stated otherwise, and discuss nondeterministic state spaces in 11.5.7 and 11.6.4.

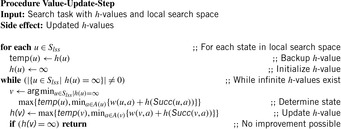

Algorithm 11.1 shows pseudo code for LRTA* in deterministic state spaces. It associates a nonnegative h-value h (u) with each state  . In practice, they are not initialized upfront but rather can be initialized as needed. LRTA* consists of a termination-checking step, a local search space–generation step, a value-update step, an action-selection step, and an action-execution step. LRTA* first checks whether it has already reached a goal state and thus can terminate successfully. If not, it generates the local search space

. In practice, they are not initialized upfront but rather can be initialized as needed. LRTA* consists of a termination-checking step, a local search space–generation step, a value-update step, an action-selection step, and an action-execution step. LRTA* first checks whether it has already reached a goal state and thus can terminate successfully. If not, it generates the local search space  . Although we require only that

. Although we require only that  and

and  , in practice LRTA* often uses forward search (that searches from the current state to the goal states) to select a continuous part of the state space around the current state u of the agent. LRTA* could determine the local search space, for example, with a breadth-first search around state u up to a given depth or with a (forward) A* search around state u until a given number of states have been expanded (or, in both cases, a goal state is about to be expanded). The states in the local search space correspond to the nonleaves of the corresponding search tree, and thus are all nongoal states. In Figure 11.1, a (forward) A* search that expands two states picks a local search space that consists of the expanded states C1 and C2. LRTA* then updates the h-values of all states in the local search space. Based on these h-values, LRTA* decides which action to execute next. Finally, it executes the selected action, updates its current state, and repeats the process.

, in practice LRTA* often uses forward search (that searches from the current state to the goal states) to select a continuous part of the state space around the current state u of the agent. LRTA* could determine the local search space, for example, with a breadth-first search around state u up to a given depth or with a (forward) A* search around state u until a given number of states have been expanded (or, in both cases, a goal state is about to be expanded). The states in the local search space correspond to the nonleaves of the corresponding search tree, and thus are all nongoal states. In Figure 11.1, a (forward) A* search that expands two states picks a local search space that consists of the expanded states C1 and C2. LRTA* then updates the h-values of all states in the local search space. Based on these h-values, LRTA* decides which action to execute next. Finally, it executes the selected action, updates its current state, and repeats the process.

Algorithm 11.2 shows how LRTA* updates the h-values in the local search space using a variant of Dijkstra's algorithm. It assigns each state its goal distance under the assumption that the h-values of all states in the local search space do not overestimate the correct goal distances and the h-values of all states outside of the local search space correspond to their correct goal distances. It does this by first assigning infinity to the h-values of all states in the local search space. It then determines a state in the local search space of which the h-value is still infinity and which minimizes the maximum of its previous h-value and the minimum over all actions in the state of the cost-to-go of the action. This value then becomes the h-value of this state, and the process repeats. The way the h-values are updated ensures that the states in the local search space are updated in the order of their increasing new h-values. This ensures that the h-value of each state in the local search space is updated at most once. The method terminates when either the h-value of each state in the local search space has been assigned a finite value or an h-value would be assigned the value infinity. In the latter case, the h-values of all remaining states in the local search space would be assigned the value infinity as well, which is already their current value.

Lemma 11.1

For all times t = 0, 1, 2,… (until termination): Consider the (t + 1) st value-update step of LRTA* (i.e., call to procedure Value-Update-Step inAlgorithm 11.1). Let  refer to its local search space. Let

refer to its local search space. Let  and

and  refer to the h-values immediately before and after, respectively, the value-update step. Then, for all states

refer to the h-values immediately before and after, respectively, the value-update step. Then, for all states  , the value-update step terminates with

, the value-update step terminates with

Proof

by induction on the number of iterations within the value-update step (see Exercises).

Lemma 11.2

Admissible initial h-values (that is, initial h-values that are lower bounds on the corresponding goal distances) remain admissible after every value-update step of LRTA* and are monotonically nondecreasing. Similarly, consistent initial h-values (i.e., initial h-values that satisfy the triangle inequality) remain consistent after every value-update step of LRTA* and are monotonically nondecreasing.

Proof

by induction on the number of value-update steps, using Lemma 11.1 (see Exercises).

After the value-update step has updated the h-values, LRTA* greedily chooses the action  in the current nongoal state u with the minimal cost-to-go

in the current nongoal state u with the minimal cost-to-go  for execution to minimize the estimated execution cost to a goal state. Ties can be broken arbitrarily, although we explain later that tie breaking can be important. Then, LRTA* has a choice. It can generate another local search space, update the h-values of all states that it contains, and select another action for execution. This version of LRTA* results when the repeat-until loop in Algorithm 11.1 is executed only once, which is why we refer to this version as LRTA* without repeat-until loop. If the new state is still part of the local search space (the one that was used to determine the action of which the execution resulted in the new state), LRTA* can also select another action for execution based on the current h-values. This version of LRTA* results when the repeat-until loop in Algorithm 11.1 is executed until the new state is no longer part of the local search space (as shown in the pseudo code), which is why we refer to this version as LRTA* with repeat-until loop. It searches less often and thus tends to have smaller search costs but larger execution costs. We analyze LRTA* without repeat-until loop, which is possible because LRTA* with repeat-until loop is a special case of LRTA* without repeat-until loop. After LRTA* has used the value-update step on some local search space, the h-values do not change if LRTA* uses the value-update step again on the same local search space or a subset thereof. Whenever LRTA* repeats the body of the repeat-until loop, the new current state is still part of the local search space Slss and thus not a goal state. Consequently, LRTA* could now search a subset of Slss that includes the new current state, for example, using a minimal local search space, which does not change the h-values and thus can be skipped.

for execution to minimize the estimated execution cost to a goal state. Ties can be broken arbitrarily, although we explain later that tie breaking can be important. Then, LRTA* has a choice. It can generate another local search space, update the h-values of all states that it contains, and select another action for execution. This version of LRTA* results when the repeat-until loop in Algorithm 11.1 is executed only once, which is why we refer to this version as LRTA* without repeat-until loop. If the new state is still part of the local search space (the one that was used to determine the action of which the execution resulted in the new state), LRTA* can also select another action for execution based on the current h-values. This version of LRTA* results when the repeat-until loop in Algorithm 11.1 is executed until the new state is no longer part of the local search space (as shown in the pseudo code), which is why we refer to this version as LRTA* with repeat-until loop. It searches less often and thus tends to have smaller search costs but larger execution costs. We analyze LRTA* without repeat-until loop, which is possible because LRTA* with repeat-until loop is a special case of LRTA* without repeat-until loop. After LRTA* has used the value-update step on some local search space, the h-values do not change if LRTA* uses the value-update step again on the same local search space or a subset thereof. Whenever LRTA* repeats the body of the repeat-until loop, the new current state is still part of the local search space Slss and thus not a goal state. Consequently, LRTA* could now search a subset of Slss that includes the new current state, for example, using a minimal local search space, which does not change the h-values and thus can be skipped.

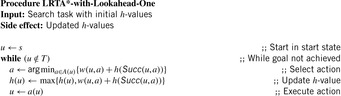

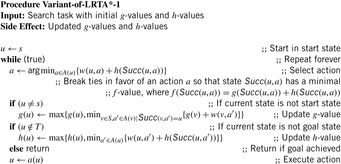

11.2. LRTA* with Lookahead One

We now state a variant of LRTA* with minimal local search spaces, the way it is often stated in the literature. Algorithm 11.3 shows the pseudo code of LRTA* with lookahead one. Its action-selection step and value-update step can be explained as follows. The action-selection step greedily chooses the action  in the current nongoal state u with the minimal cost-to-go

in the current nongoal state u with the minimal cost-to-go  for execution to minimize the estimated execution cost to a goal state. (The cost-to-go

for execution to minimize the estimated execution cost to a goal state. (The cost-to-go  is basically the f-value of state

is basically the f-value of state  of an A* search from u toward the goal states.) The value-update step replaces the h-value of the current state with a more accurate estimate of the goal distance of the state based on the costs-to-go of the actions that can be executed in it, which is similar to temporal difference learning in reinforcement learning. If all h-values are admissible, then both h (u) and the minimum of the costs-to-go of the actions that can be executed in state u are lower bounds on its goal distance, and the larger of these two values is the more accurate estimate. The value-update step then replaces the h-value of state u with this value. The value-update step of LRTA* is sometimes stated as

of an A* search from u toward the goal states.) The value-update step replaces the h-value of the current state with a more accurate estimate of the goal distance of the state based on the costs-to-go of the actions that can be executed in it, which is similar to temporal difference learning in reinforcement learning. If all h-values are admissible, then both h (u) and the minimum of the costs-to-go of the actions that can be executed in state u are lower bounds on its goal distance, and the larger of these two values is the more accurate estimate. The value-update step then replaces the h-value of state u with this value. The value-update step of LRTA* is sometimes stated as  . Our slightly more complex variant guarantees that the h-values are nondecreasing. Since the h-values remain admissible and larger admissible h-values tend to guide the search better than smaller admissible h-values, there is no reason to decrease them. If the h-values are consistent then both value-update steps are equivalent. Thus, LRTA* approximates Bellman's optimal condition for minimal-cost paths in a way similar to Dijkstra's algorithm. However, the h-values of LRTA* with admissible initial h-values approach the goal distances from below and are monotonically nondecreasing, while the corresponding values of Dijkstra's algorithm approach the goal distances from above and are monotonically nonincreasing.

. Our slightly more complex variant guarantees that the h-values are nondecreasing. Since the h-values remain admissible and larger admissible h-values tend to guide the search better than smaller admissible h-values, there is no reason to decrease them. If the h-values are consistent then both value-update steps are equivalent. Thus, LRTA* approximates Bellman's optimal condition for minimal-cost paths in a way similar to Dijkstra's algorithm. However, the h-values of LRTA* with admissible initial h-values approach the goal distances from below and are monotonically nondecreasing, while the corresponding values of Dijkstra's algorithm approach the goal distances from above and are monotonically nonincreasing.

LRTA* with lookahead one and LRTA* with minimal local search spaces behave identically in state spaces where the execution of all actions in nongoal states necessarily results in a state change, that is, it cannot leave the current state unchanged. In general, actions that are not guaranteed to result in a state change can safely be deleted from state spaces because there always exists a path that does not include them (such as the minimal-cost path), if there exists a path at all. LRTA* with arbitrary local search spaces, including minimal and maximal local search spaces, never executes actions whose execution can leave the current state unchanged but LRTA* with lookahead one can execute them. To make them identical, one can change the action-selection step of LRTA* with lookahead one to “ . However, this is seldom done in the literature.

. However, this is seldom done in the literature.

In the following, we refer to LRTA* as shown in Algorithm 11.1 and not to LRTA* with lookahead one as shown in Algorithm 11.3 when we analyze the execution cost of LRTA*.

11.3. Analysis of the Execution Cost of LRTA*

A disadvantage of LRTA* is that it cannot solve all search tasks. This is so because it interleaves searches and action executions. All search methods can solve only search tasks for which the goal distance of the start state is finite. Interleaving searches and action executions limits the solvable search tasks because actions are executed before their complete consequences are known. Thus, even if the goal distance of the start state is finite, it is possible that LRTA* accidentally executes actions that lead to a state with infinite goal distance, such as actions that “blow up the world," at which point the search task becomes unsolvable for the agent. However, LRTA* is guaranteed to solve all search tasks in safely explorable state spaces. State spaces are safely explorable if and only if the goal distances of all states are finite; that is, the depth is finite. (For safely explorable state spaces where all action costs are one, it holds that  .) To be precise: First, all states of the state space that cannot possibly be reached from the start state, or can be reached from the start state only by passing through a goal state, can be deleted. The goal distances of all remaining states have to be finite. Safely explorable state spaces guarantee that LRTA* is able to reach a goal state no matter which actions it has executed in the past. Strongly connected state spaces (where every state can be reached from every other state), for example, are safely explorable. In state spaces that are not safely explorable, LRTA* either stops in a goal state or reaches a state with goal distance infinity and then executes actions forever. We could modify LRTA* to use information from its local search spaces to detect that it can no longer reach a goal state (e.g., because the h-values have increased substantially) but this is complicated and seldom done in the literature. In the following, we assume that the state spaces are safely explorable.

.) To be precise: First, all states of the state space that cannot possibly be reached from the start state, or can be reached from the start state only by passing through a goal state, can be deleted. The goal distances of all remaining states have to be finite. Safely explorable state spaces guarantee that LRTA* is able to reach a goal state no matter which actions it has executed in the past. Strongly connected state spaces (where every state can be reached from every other state), for example, are safely explorable. In state spaces that are not safely explorable, LRTA* either stops in a goal state or reaches a state with goal distance infinity and then executes actions forever. We could modify LRTA* to use information from its local search spaces to detect that it can no longer reach a goal state (e.g., because the h-values have increased substantially) but this is complicated and seldom done in the literature. In the following, we assume that the state spaces are safely explorable.

LRTA* always reaches a goal state with a finite execution cost in all safely explorable state spaces, as can be shown by contradiction (the cycle argument ). If LRTA* did not reach a goal state eventually, then it would cycle forever. Since the state space is safely explorable, there must be some way out of the cycle. We show that LRTA* eventually executes an action that takes it out of the cycle, which is a contradiction: If LRTA* did not reach a goal state eventually, it would execute actions forever. In this case, there is a time t after which LRTA* visits only those states again that it visits infinitely often; it cycles in part of the state space. The h-values of the states in the cycle increase beyond any bound, since LRTA* repeatedly increases the h-value of the state with the minimal h-value in the cycle by at least the minimal action cost wmin of any action. It gets into a state u with the minimal h-value in the cycle and all of its successor states then have h-values that are no smaller than its own h-value. Let h denote the h-values before the value-update step and h′ denote the h-values after the value-update step. According to Lemma 11.1, the h-value of state u is then set to

. In particular, the h-values of the states in the cycle rise by arbitrarily large amounts above the h-values of all states that border the cycle. Such states exist since a goal state can be reached from every state in safely explorable state spaces. Then, however, LRTA* is forced to visit such a state after time t and leave the cycle, which is a contradiction.

. In particular, the h-values of the states in the cycle rise by arbitrarily large amounts above the h-values of all states that border the cycle. Such states exist since a goal state can be reached from every state in safely explorable state spaces. Then, however, LRTA* is forced to visit such a state after time t and leave the cycle, which is a contradiction.

The performance of LRTA* is measured by its execution cost until it reaches a goal state. The complexity of LRTA* is its worst-case execution cost over all possible topologies of state spaces of the same sizes, all possible start and goal states, and all tie-breaking rules among indistinguishable actions. We are interested in determining how the complexity scales as the state spaces get larger. We measure the sizes of state spaces as nonnegative integers and use measures x, such as x = nd, the product of the number of states and the depth. An upper complexity bound Ox has to hold for every x that is larger than some constant. Since we are mostly interested in the general trend (but not outliers) for the lower complexity bound, a lower complexity bound Ω (x) has to hold only for infinitely many different x. Furthermore, we vary only x. If x is a product, we do not vary both of its factors independently. This is sufficient for our purposes. A tight complexity bound  means both Oxand

means both Oxand  . To be able to express the complexity in terms of the number of states only, we often make the assumption that the state spaces are reasonable. Reasonable state spaces are safely explorable state spaces with

. To be able to express the complexity in terms of the number of states only, we often make the assumption that the state spaces are reasonable. Reasonable state spaces are safely explorable state spaces with  (or, more generally, state spaces of which the number of actions does not grow faster than the number of states squared). For example, safely explorable state spaces where the execution of different actions in the same state results in different successor states are reasonable. For reasonable state spaces where all action costs are one, it holds that

(or, more generally, state spaces of which the number of actions does not grow faster than the number of states squared). For example, safely explorable state spaces where the execution of different actions in the same state results in different successor states are reasonable. For reasonable state spaces where all action costs are one, it holds that  and

and  . We also study Eulerian state spaces. A Eulerian state space is a state space where there are as many actions that leave a state as there are actions that enter it. For example, undirected state spaces are Eulerian. An undirected state space is a state space where every action in a state u of which the execution results in a particular successor state v has a unique corresponding action in state v of which the execution results in state u.

. We also study Eulerian state spaces. A Eulerian state space is a state space where there are as many actions that leave a state as there are actions that enter it. For example, undirected state spaces are Eulerian. An undirected state space is a state space where every action in a state u of which the execution results in a particular successor state v has a unique corresponding action in state v of which the execution results in state u.

11.3.1. Upper Bound on the Execution Cost of LRTA*

In this section, we provide an upper bound on the complexity of LRTA* without repeat-until loop. Our analysis is centered around the invariant from Lemma 11.3. The time superscript t refers to the values of the variables immediately before the (t + 1) st value-update step of LRTA*. For instance, u0 denotes the start state s and  the action executed directly after the (t + 1) st value-update step. Similarly,

the action executed directly after the (t + 1) st value-update step. Similarly,  denotes the h-values before the (t + 1) st value-update step and

denotes the h-values before the (t + 1) st value-update step and  denotes the h-values after the

denotes the h-values after the  st value-update step. In the following, we prove an upper bound on the execution cost after which LRTA* is guaranteed to reach a goal state in safely explorable state spaces.

st value-update step. In the following, we prove an upper bound on the execution cost after which LRTA* is guaranteed to reach a goal state in safely explorable state spaces.

Lemma 11.3

For all times t = 0,1,2,… (until termination) it holds that the execution cost of LRTA* with admissible initial h-values h0 at time t is at most  . (Sums have a higher precedence than other operators. For example,

. (Sums have a higher precedence than other operators. For example,  .)

.)

Proof

by induction: The h-values are admissible at time t according to Lemma 11.2. Thus, they are bounded from above by the goal distances, which are finite since the state space is safely explorable. For t = 0, the execution cost and its upper bound are both zero, and the lemma thus holds. Now assume that the theorem holds at time t. The execution cost increases by  and the upper bound increases by

and the upper bound increases by and the lemma thus continues to hold.

and the lemma thus continues to hold.

Theorem 11.1 uses Lemma 11.3 to derive an upper bound on the execution cost.

Theorem 11.1

(Completeness of LRTA*) LRTA* with admissible initial h-values h0 reaches a goal state with an execution cost of at most  .

.

Proof

Since the goal distances are finite in safely explorable state spaces and the minimal action cost wmin of any action is strictly positive, Theorem 11.1 shows that LRTA* with admissible initial h-values reaches a goal state after a bounded number of action executions in safely explorable state spaces; that is, it is complete. More precisely: LRTA* reaches a goal state with an execution cost of at most  , and thus after at most

, and thus after at most  action executions. Thus, it reaches a goal state with finite execution cost in safely explorable state spaces. One consequence of this result is that search tasks in state spaces where all states are clustered around the goal states are easier to solve with LRTA* than search tasks in state spaces that do not possess this property. Consider, for example, sliding-tile puzzles, which are sometimes considered to be hard search tasks because they have a small goal density. The Eight-Puzzle has 181,440 states but only one goal state. However, the average goal distance of the Eight-Puzzle (with the goal configuration where the tiles form a ring around the center) is only 21.5 and its maximal goal distance is only 30. This implies that LRTA* never moves far away from the goal state even if it makes a mistake and executes an action that does not decrease the goal distance. This makes the Eight-Puzzle easy to search with LRTA* relative to other state spaces with the same number of states.

action executions. Thus, it reaches a goal state with finite execution cost in safely explorable state spaces. One consequence of this result is that search tasks in state spaces where all states are clustered around the goal states are easier to solve with LRTA* than search tasks in state spaces that do not possess this property. Consider, for example, sliding-tile puzzles, which are sometimes considered to be hard search tasks because they have a small goal density. The Eight-Puzzle has 181,440 states but only one goal state. However, the average goal distance of the Eight-Puzzle (with the goal configuration where the tiles form a ring around the center) is only 21.5 and its maximal goal distance is only 30. This implies that LRTA* never moves far away from the goal state even if it makes a mistake and executes an action that does not decrease the goal distance. This makes the Eight-Puzzle easy to search with LRTA* relative to other state spaces with the same number of states.

11.3.2. Lower Bound on the Execution Cost of LRTA*

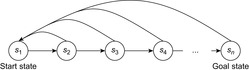

LRTA* reaches a goal state with an execution cost of at most  , and it holds that

, and it holds that  in safely explorable state spaces where all action costs are one. Now assume that LRTA* with minimal local search spaces is zero-initialized, which implies that it is uninformed. In the following, we show that the upper complexity bound is then tight for infinitely many n. Figure 11.5 shows an example for which the execution cost of zero-initialized LRTA* with minimal local search spaces is

in safely explorable state spaces where all action costs are one. Now assume that LRTA* with minimal local search spaces is zero-initialized, which implies that it is uninformed. In the following, we show that the upper complexity bound is then tight for infinitely many n. Figure 11.5 shows an example for which the execution cost of zero-initialized LRTA* with minimal local search spaces is  in the worst case until it reaches a goal state. The upper part of the figure shows the state space. All action costs are one. The states are annotated with their goal distances, their initial h-values, and their names. The lower part of the figure shows the behavior of LRTA*. On the right, the figure shows the state space with the h-values after the value-update step but before the action-execution step. The current state is shown in bold. On the left, the figure shows the searches that resulted in the h-values shown on the right. Again, the states are annotated with their h-values after the value-update step but before the action-execution step. The current state is on top. Ellipses show the local search spaces, and dashed lines show the actions that LRTA* is about to execute. For the example search task, after LRTA* has visited a state for the first time, it has to move through all previously visited states again before it is able to visit another state for the first time. Thus, the execution cost is quadratic in the number of states. If LRTA* breaks ties in favor of successor states with smaller indices, then its execution cost f (n) until it reaches the goal state satisfies the recursive equations

in the worst case until it reaches a goal state. The upper part of the figure shows the state space. All action costs are one. The states are annotated with their goal distances, their initial h-values, and their names. The lower part of the figure shows the behavior of LRTA*. On the right, the figure shows the state space with the h-values after the value-update step but before the action-execution step. The current state is shown in bold. On the left, the figure shows the searches that resulted in the h-values shown on the right. Again, the states are annotated with their h-values after the value-update step but before the action-execution step. The current state is on top. Ellipses show the local search spaces, and dashed lines show the actions that LRTA* is about to execute. For the example search task, after LRTA* has visited a state for the first time, it has to move through all previously visited states again before it is able to visit another state for the first time. Thus, the execution cost is quadratic in the number of states. If LRTA* breaks ties in favor of successor states with smaller indices, then its execution cost f (n) until it reaches the goal state satisfies the recursive equations  and

and  . Thus, its execution cost is

. Thus, its execution cost is  (for

(for  ). The execution cost equals exactly the sum of the goal distances because LRTA* was zero-initialized and its final h-values are equal to the goal distances. For example,

). The execution cost equals exactly the sum of the goal distances because LRTA* was zero-initialized and its final h-values are equal to the goal distances. For example,  for n = 5. In this case, LRTA* follows the path s1, s2, s1, s3, s2, s1, s4, s3, s2, s1, and s5.

for n = 5. In this case, LRTA* follows the path s1, s2, s1, s3, s2, s1, s4, s3, s2, s1, and s5.

|

| Figure 11.5 LRTA* in a state space with worst-case execution cost, by Koenig (2001). |

Overall, the previous section showed that the complexity of zero-initialized LRTA* with minimal local search spaces is  over all state spaces where all actions costs are one, and the example in this section shows that it is

over all state spaces where all actions costs are one, and the example in this section shows that it is  . Thus, the complexity is

. Thus, the complexity is  over all state spaces where all actions costs are one and

over all state spaces where all actions costs are one and  over all safely explorable state spaces where all actions costs are one (see Exercises).

over all safely explorable state spaces where all actions costs are one (see Exercises).

11.4. Features of LRTA*

In this section, we explain the three key features of LRTA*.

11.4.1. Heuristic Knowledge

LRTA* uses heuristic knowledge to guide its search. The larger its initial h-values, the smaller the upper bound on its execution cost provided by Theorem 11.1. For example, LRTA* is fully informed if and only if its initial h-values equal the goal distances of the states. In this case, Theorem 11.1 predicts that the execution cost is at most  until LRTA* reaches a goal state. Thus, its execution cost is worst-case optimal and no other search method can do better in the worst case. In general, the execution cost is the smaller the more informed the initial h-values are although this correlation is not guaranteed to be perfect.

until LRTA* reaches a goal state. Thus, its execution cost is worst-case optimal and no other search method can do better in the worst case. In general, the execution cost is the smaller the more informed the initial h-values are although this correlation is not guaranteed to be perfect.

11.4.2. Fine-Grained Control

LRTA* allows for fine-grained control over how much search to perform between action executions by varying the sizes of its local search spaces. For example, LRTA* with repeat-until loop and maximal or, more generally, sufficiently large local search spaces performs a complete search without interleaving searches and action executions, which is slow but produces minimal-cost paths and thus minimizes the execution cost. On the other hand, LRTA* with minimal local search spaces performs almost no searches between action executions. There are several advantages to this fine-grained control.

In the presence of time constraints, LRTA* can be used as an anytime contract algorithm for determining which action to execute next, which allows it to adjust the amount of search performed between action executions to the search and execution speeds of robots or the time a player is willing to wait for a game-playing program to make a move. Anytime contract algorithms are search methods that can solve search tasks for any given bound on their search cost, so that their solution quality tends to increase with the available search cost.

The amount of search between action executions does not only influence the search cost but also the execution cost and thus also the sum of the search and execution cost. Typically, reducing the amount of search between action executions reduces the (overall) search cost but increases the execution cost (because LRTA* selects actions based on less information), although theoretically the search cost could also increase if the execution cost increases sufficiently (because LRTA* performs more searches). The amount of search between action executions that minimizes the sum of the search and execution cost depends on the search and execution speeds of the agent.

Fast-acting agents: A smaller amount of search between action executions tends to benefit agents of which the execution speed is sufficiently fast compared to their search speed since the resulting increase in execution cost is small compared to the resulting decrease in search cost, especially if heuristic knowledge focuses the search sufficiently well. For example, the sum of the search and execution cost approaches the search cost as the execution speed increases, and the search cost can often be reduced by reducing the amount of search between action executions. When LRTA* is used to solve search tasks offline, it only moves a marker within the computer (that represents the state of a fictitious agent), and thus action execution is fast. Small local search spaces are therefore optimal for solving sliding-tile puzzles with the Manhattan distance.

Slow-acting agents: A larger amount of search between action executions is needed for agents of which the search speed is sufficiently fast compared to their execution speed. For example, the sum of the search and execution cost approaches the execution cost as the search speed increases, and the execution cost can often be reduced by increasing the amount of search between action executions. Most robots are examples of slow-acting agents.

We will discuss later in this chapter that larger local search spaces sometimes allow agents to avoid executing actions from which they cannot recover in state spaces that are not safely explorable. On the other hand, larger local search spaces might not be practical in state spaces that are not known in advance and need to get learned during execution since there can be an advantage to restricting the search to the known part of the state space.

11.4.3. Improvement of Execution Cost

If the initial h-values are not completely informed and the local search spaces are small, then it is unlikely that the execution cost of LRTA* is minimal. In Figure 11.1, for example, the robot could reach the goal cell in seven action executions. However, LRTA* improves its execution cost, although not necessarily monotonically, as it solves search tasks with the same set of goal states (even with different start states) in the same state spaces until its execution cost is minimal; that is, until it has converged. Thus, LRTA* can always have a small sum of search and execution cost and still minimize the execution cost in the long run in case similar search tasks unexpectedly repeat, which is the reason for the “learning” in its name. Assume that LRTA* solves a series of search tasks in the same state space with the same set of goal states. The start states need not be identical. If the initial h-values of LRTA* are admissible for the first search task, then they are also admissible for the first search task after LRTA* has solved the search task and are statewise at least as informed as initially. Thus, they are also admissible for the second search task and LRTA* can continue to use the same h-values across search tasks. The start states of the search tasks can differ since the admissibility of h-values does not depend on them. This way, LRTA* can transfer acquired knowledge from one search task to the next one, thereby making its h-values more informed. Ultimately, more informed h-values result in an improved execution cost, although the improvement is not necessarily monotonic. (This also explains why LRTA* can be interrupted at any state and resume execution at a different state.) The following theorems formalize this knowledge transfer in the mistake-bounded error model. The mistake-bounded error model is one way of analyzing learning methods by bounding the number of mistakes that they make. Here, it counts as one mistake when LRTA* reaches a goal state with an execution cost that is larger than the goal distance of the start state and thus does not follow a minimal-cost path from the start state to a goal state.

Theorem 11.2

(Convergence of LRTA*) Assume that LRTA* maintains h-values across a series of search tasks in the same safely explorable state space with the same set of goal states. Then, the number of search tasks for which LRTA* with admissible initial h-values reaches a goal state with an execution cost of more than δ (s, T) (where s is the start state of the current search task) is bounded from above.

Proof

It is easy to see that the agent follows a minimal-cost path from the start state to a goal state if it follows a path from the start state to a goal state where the h-value of every state is equal to its goal distance. If the agent does not follow such a path, then it transitions at least once from a state u of which the h-value is not equal to its goal distance to a state v of which the h-value is equal to its goal distance, since it reaches a goal state and the h-value of the goal state is zero since the h-values remain admissible according to Lemma 11.2. Let a denote the action of which the execution in state u results in state v. Let h denote the h-values before the value-update step and h′ denote the h-values after the value-update step. According to Lemma 11.1, the h-value of state u is set to

. Thus,

. Thus,  since the h-values cannot become inadmissible according to Lemma 11.2. After the h-value of state u is set to its goal distance, the h-value can no longer change since it could only increase according to Lemma 11.2 but would then make the h-values inadmissible, which is impossible according to the same lemma. Since the number of states is finite, it can happen only a bounded number of times that the h-value of a state is set to its goal distance. Thus, the number of times that the agent does not follow a minimal-cost path from the start state to a goal state is bounded.

since the h-values cannot become inadmissible according to Lemma 11.2. After the h-value of state u is set to its goal distance, the h-value can no longer change since it could only increase according to Lemma 11.2 but would then make the h-values inadmissible, which is impossible according to the same lemma. Since the number of states is finite, it can happen only a bounded number of times that the h-value of a state is set to its goal distance. Thus, the number of times that the agent does not follow a minimal-cost path from the start state to a goal state is bounded.

Assume that LRTA* solves the same search task repeatedly from the same start state, and that the action-selection step always breaks ties among the actions in the current state according to a fixed ordering of the actions. Then, the h-values no longer change after a finite number of searches, and LRTA* follows the same minimal-cost path from the start state to a goal state during all future searches. (If LRTA* breaks ties randomly, then it eventually discovers all minimal-cost paths from the start state to the goal states.) Figure 11.1 (all columns) illustrates this aspect of LRTA*. In the example, LRTA* with minimal local search spaces breaks ties among successor cells in the following order: north, east, south, and west. Eventually, the robot always follows a minimal-cost path to the goal cell. Figure 11.6 visualizes the h-value surface formed by the final h-values. The robot now reaches the goal cell on a minimal-cost path if it always moves to the successor cell with the minimal h-value (and breaks ties in the given order) and thus performs steepest descent on the final h-value surface.

|

| Figure 11.6 h-value surface after convergence, by Koenig (2001). |

11.5. Variants of LRTA*

We now discuss several variants of LRTA*.

11.5.1. Variants with Local Search Spaces of Varying Sizes

LRTA* with small local search spaces executes a large number of actions to escape from depressions (that is, valleys) in the h-value surface (see Exercises). It can avoid this by varying the sizes of its local search spaces during a search, namely by increasing the sizes of its local search spaces in depressions. For example, LRTA* can use minimal local search spaces until it reaches the bottom of a depression. It can detect this situation because then the h-value of its current state is smaller than the costs-to-go of all actions that can be executed in it (before it executes the value-update step). In this case, it determines the local search space that contains all states that are part of the depression by starting with its current state and then repeatedly adding successor states of states in the local search space to the local search space so that, once a successor state is added, the h-value of each state in the local search space is less than the cost-to-go of all actions that can be executed in it and result in successor states outside of the local search space. The local search space is complete when no more states can be added. In Figure 11.1 (left), for example, LRTA* picks the minimal local search space that contains only state C1 when it is in state C1. It notices that it has reached the bottom of a depression and picks a local search space that consists of the states B1, C1, and C2 when it is in state C2. Its value-update step then sets the h-values of B1, C1, and C2 to 6, 7, and 8, respectively, which completely eliminates the depression.

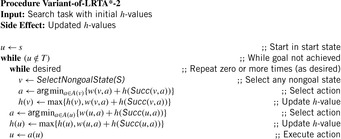

11.5.2. Variants with Minimal Lookahead

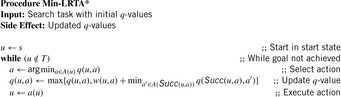

LRTA* needs to predict the successor states of actions (i.e., the successor states resulting from their execution). We can decrease its lookahead further if we associate the values with state-action pairs rather than states. Algorithm 11.4 shows pseudo code for Min-LRTA*, which associates a q-value  with each action

with each action  that can be executed in state

that can be executed in state  . The q-values are similar to the signs used by SLRTA* and the state-action values of reinforcement-learning methods, such a Q-Learning, and correspond to the costs-to-go of the actions. The q-values are updated as the search progresses, both to focus the search and avoid cycling. Min-LRTA* has minimal lookahead (essentially lookahead zero) because it uses only the q-values local to the current state to determine which action to execute. Thus, it does not even project one action execution ahead. This means that it does not need to learn an action model of the state space, which makes it applicable to situations where the action model is not known in advance, and thus the agent cannot predict the successor states of actions before it has executed them at least once. The action-selection step of Min-LRTA* always greedily chooses an action with the minimal q-value in the current state. The value-update step of Min-LRTA* replaces

. The q-values are similar to the signs used by SLRTA* and the state-action values of reinforcement-learning methods, such a Q-Learning, and correspond to the costs-to-go of the actions. The q-values are updated as the search progresses, both to focus the search and avoid cycling. Min-LRTA* has minimal lookahead (essentially lookahead zero) because it uses only the q-values local to the current state to determine which action to execute. Thus, it does not even project one action execution ahead. This means that it does not need to learn an action model of the state space, which makes it applicable to situations where the action model is not known in advance, and thus the agent cannot predict the successor states of actions before it has executed them at least once. The action-selection step of Min-LRTA* always greedily chooses an action with the minimal q-value in the current state. The value-update step of Min-LRTA* replaces  with a more accurate lookahead value. This can be explained as follows: The q-value

with a more accurate lookahead value. This can be explained as follows: The q-value  of any state-action pair is the cost-to-go of action a′ in state v and thus a lower bound on the goal distance if one starts in state v, executes action a′, and then behaves optimally. Thus,

of any state-action pair is the cost-to-go of action a′ in state v and thus a lower bound on the goal distance if one starts in state v, executes action a′, and then behaves optimally. Thus,  is a lower bound on the goal distance of state v, and

is a lower bound on the goal distance of state v, and  is a lower bound on the goal distance if one starts in state u, executes action a, and then behaves optimally. Min-LRTA* always reaches a goal state with a finite execution cost in all safely explorable state spaces, as can be shown with a cycle argument in a way similar to the proof of the same property of LRTA*.

is a lower bound on the goal distance if one starts in state u, executes action a, and then behaves optimally. Min-LRTA* always reaches a goal state with a finite execution cost in all safely explorable state spaces, as can be shown with a cycle argument in a way similar to the proof of the same property of LRTA*.

Theorem 11.3

(Execution Cost of Real-Time Search with Minimal Lookahead) The complexity of every real-time search method that cannot predict the successor states of actions before it has executed them at least once is Ω (ed) over all state spaces where all action costs are one. Furthermore, the complexity is Ω (n3) over all reasonable state spaces where all action costs are one.

Proof

Figure 11.7 shows a complex state space, which is a reasonable state space in which all states (but the start state) have several actions that lead back toward the start state. All action costs are one. Every real-time search method that cannot predict the successor states of actions before it has executed them at least once has to execute Ω (ed) or, alternatively, Ω (n3) actions in the worst case to reach a goal state in complex state spaces. It has to execute each of the  actions in nongoal states that lead away from the goal state at least once in the worst case. Over all of these cases, it has to execute

actions in nongoal states that lead away from the goal state at least once in the worst case. Over all of these cases, it has to execute  actions on average to recover from the action, for a total of

actions on average to recover from the action, for a total of  actions. In particular, it can execute at least

actions. In particular, it can execute at least  actions before it reaches the goal state (for

actions before it reaches the goal state (for  ). Thus, the complexity is

). Thus, the complexity is  and

and  since

since  (for

(for  ) and

) and  (for

(for  ).

).

Theorem 11.3 provides lower bounds on the number of actions that zero-initialized Min-LRTA* executes. It turns out that these lower bounds are tight for zero-initialized Min-LRTA* over all state spaces where all action costs are one and remain tight over all undirected state spaces and Eulerian state spaces where all action costs are one (see Exercises).

11.5.3. Variants with Faster Value Updates

Real-time adaptive A* (RTAA*) is a real-time search method that is similar to LRTA* but its value-update step is much faster. Assume that we have to perform several (forward) A* searches with consistent h-values in the same state space and with the same set of goal states but possibly different start states. Assume that v is a state that was expanded during such an A* search. The distance from the start state s to any goal state via state v is equal to the distance from the start state s to state v plus the goal distance δ (v, T) of state v. It clearly cannot be smaller than the goal distance δ (s, T)of the start state s. Thus, the goal distance δ (v, T) of state v is no smaller than the goal distance δ (s, T) of the start state s(i.e., the f-value  of the goal state

of the goal state  that was about to be expanded when the A* search terminates) minus the distance from the start state s to state v(i.e., the g-value g (v) of state v when the A* search terminates):

that was about to be expanded when the A* search terminates) minus the distance from the start state s to state v(i.e., the g-value g (v) of state v when the A* search terminates):

Consequently,  provides a nonoverestimating estimate of the goal distance δ (v, T) of state v and can be calculated quickly. More informed consistent h-values can be obtained by calculating and assigning this difference to every state that was expanded during the A* search and thus is in the closed list when the A* search terminates. We now use this idea to develop RTAA*, which reduces to the case above if its local search spaces are maximal.

provides a nonoverestimating estimate of the goal distance δ (v, T) of state v and can be calculated quickly. More informed consistent h-values can be obtained by calculating and assigning this difference to every state that was expanded during the A* search and thus is in the closed list when the A* search terminates. We now use this idea to develop RTAA*, which reduces to the case above if its local search spaces are maximal.

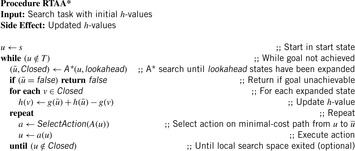

Algorithm 11.5 shows pseudo code for RTAA*, which follows the pseudo code of LRTA*. The h-values of RTAA* approximate the goal distances of the states. They can be initialized using a consistent heuristic function. We mentioned earlier that LRTA* could use (forward) A* searches to determine the local search spaces. RTAA* does exactly that. The (forward) A* search in the pseudo code is a regular A* search that uses the current h-values to search from the current state of the agent toward the goal states until a goal state is about to be expanded or lookahead > 0 states have been expanded. After the A* search, we require  to be the state that was about to be expanded when the A* search terminated. We denote this state consistently with

to be the state that was about to be expanded when the A* search terminated. We denote this state consistently with  . We require that

. We require that  if the A* search terminated due to an empty open list, in which case it is impossible to reach a goal state with finite execution cost from the current state and RTAA* thus returns failure. Otherwise,

if the A* search terminated due to an empty open list, in which case it is impossible to reach a goal state with finite execution cost from the current state and RTAA* thus returns failure. Otherwise,  is either a goal state or the state that the A* search would have been expanded as the (lookahead + 1) st state. We require the closed list Closed to contain the states expanded during the A* search and the g-value g (v) to be defined for all generated states v, including all expanded states. We define the f-values

is either a goal state or the state that the A* search would have been expanded as the (lookahead + 1) st state. We require the closed list Closed to contain the states expanded during the A* search and the g-value g (v) to be defined for all generated states v, including all expanded states. We define the f-values  for these states v. The expanded states v form the local search space, and RTAA* updates their h-values by setting

for these states v. The expanded states v form the local search space, and RTAA* updates their h-values by setting  . The h-values of the other states remain unchanged. We give an example of the operation of RTAA* in Section 11.6.2. For example, consider Figure 11.16. The states in the closed list are shown in gray, and the arrows point to the states

. The h-values of the other states remain unchanged. We give an example of the operation of RTAA* in Section 11.6.2. For example, consider Figure 11.16. The states in the closed list are shown in gray, and the arrows point to the states  .

.

RTAA* always reaches a goal state with a finite execution cost in all safely explorable state spaces no matter how it chooses its values of lookahead and whether it uses the repeat-until loop or not, as can be shown with a cycle argument in a way similar to the proof of the same property of LRTA*. We now prove several additional properties of RTAA* without repeat-until loop. We make use of the following known properties of A* searches with consistent h-values. First, they expand every state at most once. Second, the g-values of every expanded state v and state  are equal to the distance from the start state to state v and state

are equal to the distance from the start state to state v and state  , respectively, when the A* search terminates. Thus, we know minimal-cost paths from the start state to all expanded states and state

, respectively, when the A* search terminates. Thus, we know minimal-cost paths from the start state to all expanded states and state  . Third, the f-values of the series of expanded states over time are monotonically nondecreasing. Thus,

. Third, the f-values of the series of expanded states over time are monotonically nondecreasing. Thus,  for all expanded states v and

for all expanded states v and  for all generated states v that remained unexpanded when the A* search terminates.

for all generated states v that remained unexpanded when the A* search terminates.

|

| Figure 11.16 |

Lemma 11.4

Consistent initial h-values remain consistent after every value-update step of RTAA* and are monotonically nondecreasing.

Proof

We first show that the h-values are monotonically nondecreasing. Assume that the h-value of a state v is updated. Then, state v was expanded and it thus holds that  . Consequently,

. Consequently,  and the value-update step cannot decrease the h-value of state v since it changes the h-value from h (v) to

and the value-update step cannot decrease the h-value of state v since it changes the h-value from h (v) to  . We now show that the h-values remain consistent by induction on the number of A* searches. The initial h-values are provided by the user and consistent. It thus holds that

. We now show that the h-values remain consistent by induction on the number of A* searches. The initial h-values are provided by the user and consistent. It thus holds that  for all goal states v. This continues to hold since goal states are not expanded and their h-values thus not updated. (Even if RTAA* updated the h-value of state

for all goal states v. This continues to hold since goal states are not expanded and their h-values thus not updated. (Even if RTAA* updated the h-value of state  , it would leave the h-value of that state unchanged since

, it would leave the h-value of that state unchanged since  . Thus, the h-values of the goal states would remain zero even in that case.) It also holds that

. Thus, the h-values of the goal states would remain zero even in that case.) It also holds that  for all nongoal states v and actions a that can be executed in them, since the h-values are consistent. Let h denote the h-values before the value-update step and h′ denote the h-values after the value-update step. We distinguish three cases for all nongoal states v and actions a that can be executed in them:

for all nongoal states v and actions a that can be executed in them, since the h-values are consistent. Let h denote the h-values before the value-update step and h′ denote the h-values after the value-update step. We distinguish three cases for all nongoal states v and actions a that can be executed in them:

• Second, state v was expanded but state  was not, which implies that

was not, which implies that  and

and  . Also,

. Also,  for the same reason as in the first case, and

for the same reason as in the first case, and  since state

since state  was generated but not expanded. Thus,

was generated but not expanded. Thus,

.

.

• Third, state v was not expanded, which implies that  . Also,

. Also,  since the h-values of the same state are monotonically nondecreasing over time. Thus,

since the h-values of the same state are monotonically nondecreasing over time. Thus,  .

.

Thus,  in all three cases and the h-values thus remain consistent.

in all three cases and the h-values thus remain consistent.

Theorem 11.4

(Convergence of RTAA*) Assume that RTAA* maintains h-values across a series of search tasks in the same safely explorable state space with the same set of goal states. Then, the number of search tasks for which RTAA* with consistent initial h-values reaches a goal state with an execution cost of more than δ (s, T) (where s is the start state of the current search task) is bounded from above.

Proof

The proof is identical to the proof of Theorem 11.2, except for the part where we prove that the h-value of state v is set to its goal distance if the agent transitions from a state v of which the h-value is not equal to its goal distance to a state w of which the h-value is equal to its goal distance. When the agent executes some action  in state v and transitions to state w, then state v is a parent of state w in the search tree produced during the last call of A* and it thus holds that (1) state v was expanded during the last call of A*, (2) either state w was also expanded during the last call of A* or

in state v and transitions to state w, then state v is a parent of state w in the search tree produced during the last call of A* and it thus holds that (1) state v was expanded during the last call of A*, (2) either state w was also expanded during the last call of A* or  , and (3)

, and (3)  . Let h denote the h-values before the value-update step and h′ denote the h-values after the value-update step. Then,

. Let h denote the h-values before the value-update step and h′ denote the h-values after the value-update step. Then,  and

and  . The last equality holds because we assumed that the h-value of state w was equal to its goal distance and thus can no longer change since it could only increase according to Lemma 11.4 but would then make the h-values inadmissible and thus inconsistent, which is impossible according to the same lemma. Consequently,

. The last equality holds because we assumed that the h-value of state w was equal to its goal distance and thus can no longer change since it could only increase according to Lemma 11.4 but would then make the h-values inadmissible and thus inconsistent, which is impossible according to the same lemma. Consequently,  , proving that

, proving that  since a larger h-value would make the h-values inadmissible and thus inconsistent, which is impossible according to Lemma 11.4. Thus, the h-value of state v is indeed set to its goal distance.

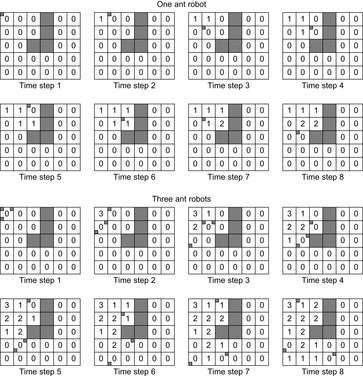

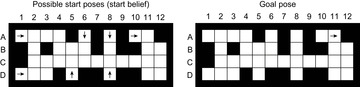

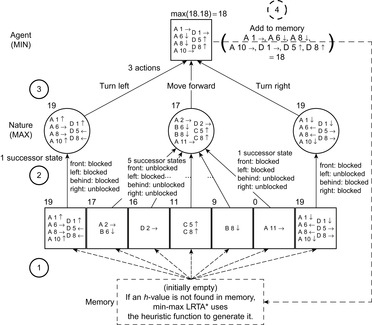

since a larger h-value would make the h-values inadmissible and thus inconsistent, which is impossible according to Lemma 11.4. Thus, the h-value of state v is indeed set to its goal distance.