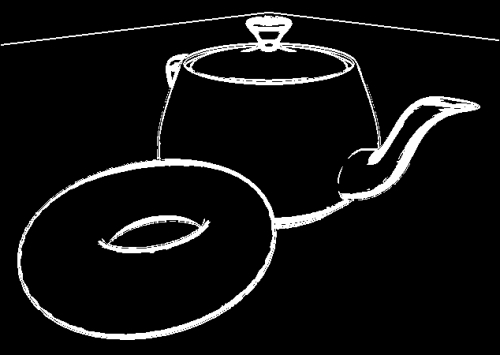

Edge detection is an image processing technique that identifies regions where there is a significant change in the brightness of the image. It provides a way to detect the boundaries of objects and changes in the topology of the surface. It has applications in the fields of computer vision, image processing, image analysis, and image pattern recognition. It can also be used to create some visually interesting effects. For example, it can make a 3D scene look similar to a 2D pencil sketch, as shown in the image above. To create this image, a teapot and a torus were rendered normally, and then an edge detection filter was applied in a second pass.

The edge detection filter that we'll use here is based on a convolution filter, or convolution kernel (also called a filter kernel). A convolution filter is a matrix that defines how to transform a pixel: the pixel is replaced with the sum of the products of the values of nearby pixels and a set of predetermined weights. As a simple example, consider a 3 x 3 filter superimposed over a hypothetical grid of pixels.

The filter holds the kernel values (weights), and the grid holds the pixel values. The pixel values could represent grayscale intensity or the value of one of the RGB components. Applying the filter to the pixel under its center involves multiplying each weight by the pixel value beneath it and summing the results. The sum becomes the new value of the center pixel (currently 25). In this example, the center weight is 2, the pixels with values 17, 19, 31, and 33 have a weight of 1, and all other weights are 0, so the new value is 17 + 19 + 2 * 25 + 31 + 33 = 150.
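To make the arithmetic concrete, here is a minimal C++ sketch of applying a 3 x 3 kernel to a single pixel. The `Mat3` alias and `applyKernel` function are hypothetical names used only for illustration. The check below assumes the four weight-1 cells are the corners of the kernel and fills the zero-weight cells with made-up values; only 17, 19, 25, 31, and 33 come from the example.

```cpp
#include <array>

// A 3 x 3 neighborhood or kernel, stored row by row (row 0 is the top row).
using Mat3 = std::array<std::array<float, 3>, 3>;

// Multiplies each pixel in the neighborhood by the matching kernel weight
// and sums the products. The sum is the new value for the center pixel.
float applyKernel(const Mat3& kernel, const Mat3& pixels) {
    float sum = 0.0f;
    for (int row = 0; row < 3; ++row)
        for (int col = 0; col < 3; ++col)
            sum += kernel[row][col] * pixels[row][col];
    return sum;
}
```

With the kernel `{{1,0,1},{0,2,0},{1,0,1}}` and the neighborhood `{{17,18,19},{24,25,26},{31,32,33}}`, `applyKernel` returns 150. Because the zero-weight cells contribute nothing, their (assumed) values do not affect the result.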

Of course, to apply a convolution filter, we need access to the pixels of the original image and a separate buffer to store the results of the filter. We'll achieve this here with a two-pass algorithm. In the first pass, we'll render the image to a texture; in the second pass, we'll apply the filter by reading from the texture and sending the filtered results to the screen.
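The need for a separate buffer can be seen in a CPU analogy: if filtered values were written back into the image being read, later pixels would read neighbors that had already been filtered. The following C++ sketch reads only from `src` and writes only to a new image, which is what the two passes achieve on the GPU. The `Image` struct and `applyFilter` function are hypothetical, and clamping reads at the borders to the nearest edge pixel is an assumption of this sketch.

```cpp
#include <algorithm>
#include <vector>

// A grayscale image stored row by row (row 0 is the top row).
struct Image {
    int width = 0, height = 0;
    std::vector<float> data;

    // Reads a pixel, clamping out-of-range coordinates to the nearest
    // edge pixel (an assumption made for this sketch).
    float at(int x, int y) const {
        x = std::clamp(x, 0, width - 1);
        y = std::clamp(y, 0, height - 1);
        return data[y * width + x];
    }
};

// Applies a 3 x 3 kernel to every pixel. All reads come from `src` and all
// writes go to a separate image, so no pixel ever sees a filtered neighbor.
Image applyFilter(const Image& src, const float kernel[3][3]) {
    Image dst{src.width, src.height, std::vector<float>(src.data.size())};
    for (int y = 0; y < src.height; ++y)
        for (int x = 0; x < src.width; ++x) {
            float sum = 0.0f;
            for (int ky = -1; ky <= 1; ++ky)
                for (int kx = -1; kx <= 1; ++kx)
                    sum += kernel[ky + 1][kx + 1] * src.at(x + kx, y + ky);
            dst.data[y * src.width + x] = sum;
        }
    return dst;
}
```

For example, with a kernel that copies each pixel's left neighbor (only `kernel[1][0]` set to 1), the row {1, 2, 3} becomes {1, 1, 2}. Filtering in place would instead yield {1, 1, 1}, because the third pixel would read the already overwritten second pixel.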

One of the simplest convolution-based techniques for edge detection is the so-called Sobel operator. The Sobel operator is designed to approximate the gradient of the image intensity at each pixel. It does so by applying two 3 x 3 filters, whose results are the horizontal and vertical components of the gradient. We can then use the magnitude of the gradient as our edge trigger: when the magnitude of the gradient is above a certain threshold, we assume that the pixel is on an edge.

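The 3 x 3 filter kernels used by the Sobel operator, with the row order and signs matching the fragment shader in this recipe, are shown in the following equation:

$$
S_x = \begin{bmatrix} 1 & 0 & -1 \\ 2 & 0 & -2 \\ 1 & 0 & -1 \end{bmatrix}, \qquad
S_y = \begin{bmatrix} 1 & 2 & 1 \\ 0 & 0 & 0 \\ -1 & -2 & -1 \end{bmatrix}
$$

Negating either kernel, as some references do, does not change the gradient magnitude.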

If the result of applying $S_x$ is $s_x$ and the result of applying $S_y$ is $s_y$, then an approximation of the magnitude of the gradient is given by the following equation:

$$
g = \sqrt{s_x^2 + s_y^2}
$$

If the value of g is above a certain threshold, we consider the pixel to be an edge pixel, and we highlight it in the resulting image.
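The two Sobel kernels and the magnitude formula translate directly into code. This C++ sketch applies both kernels to a 3 x 3 block of luminance values, using the same row order (row 0 on top) and signs as the fragment shader in this recipe. The names `Sx`, `Sy`, `applyKernel`, `Gradient`, and `sobel` are hypothetical.

```cpp
#include <array>
#include <cmath>

// A 3 x 3 block stored row by row (row 0 is the top row, column 0 the left).
using Mat3 = std::array<std::array<float, 3>, 3>;

// Sobel kernels: Sx responds to horizontal change, Sy to vertical change.
const Mat3 Sx = {{{1, 0, -1}, {2, 0, -2}, {1, 0, -1}}};
const Mat3 Sy = {{{1, 2, 1}, {0, 0, 0}, {-1, -2, -1}}};

// Weighted sum of the block, as for any 3 x 3 convolution filter.
float applyKernel(const Mat3& k, const Mat3& p) {
    float sum = 0.0f;
    for (int r = 0; r < 3; ++r)
        for (int c = 0; c < 3; ++c)
            sum += k[r][c] * p[r][c];
    return sum;
}

struct Gradient { float sx, sy, g; };

// Gradient components and magnitude g = sqrt(sx^2 + sy^2) at the center pixel.
Gradient sobel(const Mat3& lum) {
    float sx = applyKernel(Sx, lum);
    float sy = applyKernel(Sy, lum);
    return {sx, sy, std::sqrt(sx * sx + sy * sy)};
}
```

For a vertical edge with luminance 1 in the left two columns and 0 in the right column, `sobel` gives sx = 4, sy = 0, and g = 4; a uniform block gives g = 0, so only the first would pass a positive threshold.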

In this example, we'll implement this filter as the second pass of a two-pass algorithm. In the first pass, we'll render the scene using an appropriate lighting model, but we'll send the result to a texture. In the second pass, we'll render the entire texture as a screen-filling quad, and apply the filter to the texture.

Set up a framebuffer object (refer to the Rendering to a texture recipe in Chapter 4, Using Textures) that has the same dimensions as the main window. Connect the first color attachment of the FBO to a texture object in texture unit zero. During the first pass, we'll render directly to this texture. Make sure that the mag and min filters for this texture are set to GL_NEAREST. We don't want any interpolation for this algorithm.

Provide vertex positions in vertex attribute zero, normals in vertex attribute one, and texture coordinates in vertex attribute two.

The following uniform variables need to be set from the OpenGL application:

- Width: This is used to set the width of the screen window in pixels

- Height: This is used to set the height of the screen window in pixels

- EdgeThreshold: This is the minimum value of g squared required to be considered "on an edge"

- RenderTex: This is the texture associated with the FBO

Any other uniforms associated with the shading model should also be set from the OpenGL application.

To create a shader program that applies the Sobel edge detection filter, use the following steps:

- Use the following code for the vertex shader:

```glsl
#version 430

layout (location = 0) in vec3 VertexPosition;
layout (location = 1) in vec3 VertexNormal;

out vec3 Position;
out vec3 Normal;

uniform mat4 ModelViewMatrix;
uniform mat3 NormalMatrix;
uniform mat4 ProjectionMatrix;
uniform mat4 MVP;

void main()
{
    Normal = normalize( NormalMatrix * VertexNormal);
    Position = vec3( ModelViewMatrix * vec4(VertexPosition,1.0) );

    gl_Position = MVP * vec4(VertexPosition,1.0);
}
```

- Use the following code for the fragment shader:

```glsl
#version 430   // GLSL 4.20+ is needed for layout(binding = ...)

in vec3 Position;
in vec3 Normal;

// The texture containing the results of the first pass
layout( binding=0 ) uniform sampler2D RenderTex;

uniform float EdgeThreshold; // The squared threshold

// This subroutine is used for selecting the functionality
// of pass1 and pass2.
subroutine vec4 RenderPassType();
subroutine uniform RenderPassType RenderPass;

// Other uniform variables for the Phong reflection model
// can be placed here…

layout( location = 0 ) out vec4 FragColor;

const vec3 lum = vec3(0.2126, 0.7152, 0.0722);

vec3 phongModel( vec3 pos, vec3 norm )
{
    // The code for the basic ADS shading model goes here…
}

// Approximates the brightness of an RGB value.
float luminance( vec3 color )
{
    return dot(lum, color);
}

subroutine (RenderPassType)
vec4 pass1()
{
    return vec4(phongModel( Position, Normal ),1.0);
}

subroutine( RenderPassType )
vec4 pass2()
{
    ivec2 pix = ivec2(gl_FragCoord.xy);

    // sRC: row R (0 = top, 2 = bottom), column C (0 = left, 2 = right)
    float s00 = luminance(
        texelFetchOffset(RenderTex, pix, 0, ivec2(-1,1)).rgb);
    float s10 = luminance(
        texelFetchOffset(RenderTex, pix, 0, ivec2(-1,0)).rgb);
    float s20 = luminance(
        texelFetchOffset(RenderTex, pix, 0, ivec2(-1,-1)).rgb);
    float s01 = luminance(
        texelFetchOffset(RenderTex, pix, 0, ivec2(0,1)).rgb);
    float s21 = luminance(
        texelFetchOffset(RenderTex, pix, 0, ivec2(0,-1)).rgb);
    float s02 = luminance(
        texelFetchOffset(RenderTex, pix, 0, ivec2(1,1)).rgb);
    float s12 = luminance(
        texelFetchOffset(RenderTex, pix, 0, ivec2(1,0)).rgb);
    float s22 = luminance(
        texelFetchOffset(RenderTex, pix, 0, ivec2(1,-1)).rgb);

    // Left column minus right column, and top row minus bottom row
    float sx = s00 + 2.0 * s10 + s20 - (s02 + 2.0 * s12 + s22);
    float sy = s00 + 2.0 * s01 + s02 - (s20 + 2.0 * s21 + s22);

    float g = sx * sx + sy * sy;  // Squared gradient magnitude

    if( g > EdgeThreshold )
        return vec4(1.0);
    else
        return vec4(0.0,0.0,0.0,1.0);
}

void main()
{
    // This will call either pass1() or pass2()
    FragColor = RenderPass();
}
```
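Because shaders are awkward to debug, it can help to run the same arithmetic on the CPU, for example to choose a value for EdgeThreshold. The following C++ sketch is a hypothetical port of pass2; the `Texture` struct, its `fetchOffset` method, and the border clamping are assumptions, not part of the recipe. Like gl_FragCoord, it treats row 0 as the bottom row, so an offset of (0, 1) selects the pixel above. The clamp matters only on the CPU: in GLSL, texelFetch with out-of-range coordinates returns undefined values, so the shader's output along the window border may be unreliable.

```cpp
#include <algorithm>
#include <array>
#include <vector>

using RGB = std::array<float, 3>;

// Same weights as the `lum` constant in the fragment shader.
float luminance(const RGB& c) {
    return 0.2126f * c[0] + 0.7152f * c[1] + 0.0722f * c[2];
}

// Hypothetical stand-in for RenderTex; row 0 is the bottom row.
struct Texture {
    int width, height;
    std::vector<RGB> texels;

    // Mimics texelFetchOffset, clamping to the nearest edge texel.
    RGB fetchOffset(int x, int y, int dx, int dy) const {
        x = std::clamp(x + dx, 0, width - 1);
        y = std::clamp(y + dy, 0, height - 1);
        return texels[y * width + x];
    }
};

// CPU port of pass2() for pixel (x, y); returns true for an edge pixel.
bool pass2(const Texture& tex, int x, int y, float edgeThreshold) {
    auto s = [&](int dx, int dy) { return luminance(tex.fetchOffset(x, y, dx, dy)); };
    float s00 = s(-1, 1), s10 = s(-1, 0), s20 = s(-1, -1);
    float s01 = s(0, 1),                  s21 = s(0, -1);
    float s02 = s(1, 1),  s12 = s(1, 0),  s22 = s(1, -1);

    float sx = s00 + 2 * s10 + s20 - (s02 + 2 * s12 + s22);
    float sy = s00 + 2 * s01 + s02 - (s20 + 2 * s21 + s22);
    float g = sx * sx + sy * sy;  // squared gradient magnitude
    return g > edgeThreshold;
}
```

With the left half of a 4 x 3 texture white and the right half black, the pixels on either side of the boundary are reported as edges, while pixels in the flat regions are not.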

In the render function of your OpenGL application, follow these steps for pass #1:

- Select the framebuffer object (FBO), and clear the color/depth buffers.

- Select the pass1 subroutine function (refer to the Using subroutines to select shader functionality recipe in Chapter 2, The Basics of GLSL Shaders).

- Set up the model, view, and projection matrices, and draw the scene.

For pass #2, carry out the following steps:

- Deselect the FBO (revert to the default framebuffer), and clear the color/depth buffers.

- Select the pass2 subroutine function.

- Set the model, view, and projection matrices to the identity matrix.

- Draw a single quad (or two triangles) that fills the screen (-1 to +1 in x and y), with texture coordinates that range from 0 to 1 in each dimension.
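One possible vertex layout for the screen-filling quad is sketched below in C++. The `QuadVertex` struct and `kFullScreenQuad` array are hypothetical; uploading them to a buffer and binding the positions to attribute zero and the texture coordinates to attribute two is left to your existing setup. Because the matrices are the identity in pass #2, the positions pass through unchanged and cover the whole viewport. (The fragment shader in this recipe indexes the texture with gl_FragCoord, so pass2 does not actually read these texture coordinates.)

```cpp
#include <array>

// Hypothetical interleaved vertex: position (x, y, z) then texture coords (s, t).
struct QuadVertex { float x, y, z, s, t; };

// Two counter-clockwise triangles spanning -1 to +1 in x and y, with
// texture coordinates running from 0 to 1 in each dimension.
constexpr std::array<QuadVertex, 6> kFullScreenQuad = {{
    {-1.0f, -1.0f, 0.0f, 0.0f, 0.0f},
    { 1.0f, -1.0f, 0.0f, 1.0f, 0.0f},
    { 1.0f,  1.0f, 0.0f, 1.0f, 1.0f},
    {-1.0f, -1.0f, 0.0f, 0.0f, 0.0f},
    { 1.0f,  1.0f, 0.0f, 1.0f, 1.0f},
    {-1.0f,  1.0f, 0.0f, 0.0f, 1.0f},
}};
```

Each texture coordinate is simply (position + 1) / 2, so a texel lookup with these coordinates lines up with the pixel being shaded.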

The first pass renders all of the scene's geometry, sending the output to a texture. We select the subroutine function pass1, which simply computes and applies the Phong reflection model (refer to the Implementing per-vertex ambient, diffuse, and specular (ADS) shading recipe in Chapter 2, The Basics of GLSL Shaders).

In the second pass, we select the subroutine function pass2 and render only a single quad that covers the entire screen. The purpose of this is to invoke the fragment shader once for every pixel in the image. In the pass2 function, we fetch the eight pixels surrounding the current pixel from the texture containing the results of the first pass, and compute their brightness by calling the luminance function. The horizontal and vertical Sobel filters are then applied, and the results are stored in sx and sy.

We then compute the square of the gradient magnitude (to avoid taking a square root) and store the result in g. If the value of g is greater than EdgeThreshold, we consider the pixel to be on an edge and output a white pixel. Otherwise, we output a solid black pixel.
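Skipping the square root is safe because, for non-negative values, g > t holds exactly when g² > t². A small C++ sketch with hypothetical function names makes the relationship explicit; it also shows that EdgeThreshold must be set to the square of the intended magnitude threshold, for example 0.25 for a threshold of 0.5.

```cpp
#include <cmath>

// Edge test as in pass2(): squared magnitude against a squared threshold.
bool isEdgeSquared(float sx, float sy, float edgeThresholdSquared) {
    return sx * sx + sy * sy > edgeThresholdSquared;
}

// Equivalent test on the magnitude itself, which costs a square root.
bool isEdgeMagnitude(float sx, float sy, float threshold) {
    return std::sqrt(sx * sx + sy * sy) > threshold;
}
```

For sx = 3 and sy = 4 (magnitude 5), both tests report an edge for a threshold of 4.5 (squared: 20.25) and no edge for 5.5 (squared: 30.25).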

The Sobel operator is somewhat crude and tends to be sensitive to high-frequency variations in intensity. A quick look at Wikipedia will guide you to a number of other edge detection techniques that may be more accurate. It is also possible to reduce the amount of high-frequency variation by adding a "blur pass" between the render and edge detection passes. The blur pass smooths out high-frequency fluctuations and may improve the results of the edge detection pass.
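The recipe does not specify a particular blur. As one possible choice (an assumption, not the author's method), the following C++ sketch applies a 3 x 3 binomial kernel, 1 2 1 / 2 4 2 / 1 2 1 divided by 16, which is a common small approximation of a Gaussian blur. On the GPU, this would be an extra pass that renders into a second texture before the edge detection pass reads it. The `blur3x3` function and its border clamping are hypothetical.

```cpp
#include <algorithm>
#include <vector>

// Blurs a row-major grayscale image with a 3 x 3 binomial kernel.
// Reads only from `src` and returns a new image.
std::vector<float> blur3x3(const std::vector<float>& src, int width, int height) {
    static const float w[3][3] = {{1, 2, 1}, {2, 4, 2}, {1, 2, 1}};  // sums to 16
    std::vector<float> dst(src.size());
    for (int y = 0; y < height; ++y)
        for (int x = 0; x < width; ++x) {
            float sum = 0.0f;
            for (int ky = -1; ky <= 1; ++ky)
                for (int kx = -1; kx <= 1; ++kx) {
                    // Clamp to the nearest edge pixel at the image borders.
                    int nx = std::clamp(x + kx, 0, width - 1);
                    int ny = std::clamp(y + ky, 0, height - 1);
                    sum += w[ky + 1][kx + 1] * src[ny * width + nx];
                }
            dst[y * width + x] = sum / 16.0f;  // normalize so flat areas keep their value
        }
    return dst;
}
```

A uniform region is left unchanged, while a single bright pixel of value 16 in a 3 x 3 image is spread out to a peak of 4, with 2 at its edge-adjacent neighbors and 1 at its diagonal neighbors. Isolated spikes like this are the kind of high-frequency variation that would otherwise trigger spurious edges.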

The technique discussed here requires eight texture fetches. Texture accesses can be somewhat slow, and reducing the number of accesses can result in substantial speed improvements. Chapter 24 of GPU Gems: Programming Techniques, Tips and Tricks for Real-Time Graphics, edited by Randima Fernando (Addison-Wesley Professional 2004), has an excellent discussion of ways to reduce the number of texture fetches in a filter operation by making use of so-called "helper" textures.

- D. Ziou and S. Tabbone (1998), Edge detection techniques: An overview, International Journal of Computer Vision, Vol 24, Issue 3

- Frei-Chen edge detector: http://rastergrid.com/blog/2011/01/frei-chen-edge-detector/

- The Using subroutines to select shader functionality recipe in Chapter 2, The Basics of GLSL Shaders

- The Rendering to a texture recipe in Chapter 4, Using Textures

- The Implementing per-vertex ambient, diffuse, and specular (ADS) shading recipe in Chapter 2, The Basics of GLSL Shaders