We will now see how to do area filtering, that is, 2D image convolution, to implement effects such as sharpening, blurring, and embossing. There are several ways to perform image convolution in the spatial domain. The simplest approach is to use a loop that iterates over a window around each pixel and computes the sum of the products of the image intensities with the convolution kernel coefficients. A more efficient approach is separable convolution, which breaks the 2D convolution into two 1D convolutions, so that an n x n kernel needs 2n lookups per pixel instead of n x n. However, it works only for separable kernels (those that can be written as the outer product of two 1D kernels), and it requires an additional pass.
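To make the efficiency argument concrete, here is a small CPU sketch in C++ (not the recipe's shader code) that compares a direct 3 x 3 convolution with the separable version for a kernel built as the outer product of two 1D kernels. The `Image` struct, the clamp-to-edge addressing, and the function names are illustrative assumptions. The direct loop does 9 multiply-adds per pixel, while the two 1D passes do 3 each.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <vector>

// Grayscale image stored row-major (illustrative type for this sketch).
struct Image {
    int width;
    int height;
    std::vector<float> data;

    // Clamp-to-edge lookup: coordinates outside the image reuse the border pixel.
    float at(int x, int y) const {
        x = x < 0 ? 0 : (x >= width ? width - 1 : x);
        y = y < 0 ? 0 : (y >= height ? height - 1 : y);
        return data[static_cast<std::size_t>(y) * width + x];
    }
};

// Direct 2D convolution with a 3 x 3 kernel h, where h(s,t) is stored at
// h[(t + 1) * 3 + (s + 1)]: 9 multiply-adds per pixel.
Image convolve2D(const Image& in, const std::array<float, 9>& h) {
    assert(in.data.size() == static_cast<std::size_t>(in.width) * in.height);
    Image out{in.width, in.height, std::vector<float>(in.data.size())};
    for (int y = 0; y < in.height; ++y)
        for (int x = 0; x < in.width; ++x) {
            float sum = 0.0f;
            for (int t = -1; t <= 1; ++t)
                for (int s = -1; s <= 1; ++s)
                    sum += h[(t + 1) * 3 + (s + 1)] * in.at(x - s, y - t);
            out.data[static_cast<std::size_t>(y) * in.width + x] = sum;
        }
    return out;
}

// One 1D convolution pass along x (horizontal) or y: 3 multiply-adds per pixel.
Image convolve1D(const Image& in, const std::array<float, 3>& k, bool horizontal) {
    Image out{in.width, in.height, std::vector<float>(in.data.size())};
    for (int y = 0; y < in.height; ++y)
        for (int x = 0; x < in.width; ++x) {
            float sum = 0.0f;
            for (int s = -1; s <= 1; ++s)
                sum += k[s + 1] * (horizontal ? in.at(x - s, y) : in.at(x, y - s));
            out.data[static_cast<std::size_t>(y) * in.width + x] = sum;
        }
    return out;
}

// Separable convolution: a horizontal pass followed by a vertical pass.
// On the GPU, each pass would be a separate rendering pass.
Image convolveSeparable(const Image& in, const std::array<float, 3>& kx,
                        const std::array<float, 3>& ky) {
    return convolve1D(convolve1D(in, kx, true), ky, false);
}
```

With kx = ky = {1, 2, 1}, the 3 x 3 kernel h = outer(ky, kx) is the classic binomial blur, and both functions produce the same image; the separable path simply reaches it with fewer operations per pixel.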

This recipe builds on the image loading recipe from the first chapter. If you feel a bit lost, we suggest skimming through that recipe first to get on the same page with us. The code for this recipe is in the Chapter3/Convolution directory. Most of the work for this recipe takes place in the fragment shader.

Let us get started with the recipe as follows:

- Create a simple pass-through vertex shader that outputs the clip space position and the texture coordinates which are to be passed into the fragment shader for texture lookup.

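```glsl
#version 330 core
in vec2 vVertex;   // quad vertex position in the [0,1] range
out vec2 vUV;      // texture coordinates for the fragment shader

void main()
{
    // Map [0,1] to clip space [-1,1]
    gl_Position = vec4(vVertex*2.0 - 1.0, 0.0, 1.0);
    // Reuse the [0,1] position as the texture coordinate
    vUV = vVertex;
}
```

- In the fragment shader, we declare a constant array called `kernel`, which stores our convolution kernel. Changing the `kernel` values changes the output of the convolution. The default `kernel`, together with the final step that adds the result back to the original pixel, sets up a sharpening filter. Refer to `Chapter3/Convolution/shaders/shader_convolution.frag` for details.

```glsl
const float kernel[] = float[9](-1.0, -1.0, -1.0,
                                -1.0,  8.0, -1.0,
                                -1.0, -1.0, -1.0);
```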

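- In the fragment shader, we run a nested loop over the current pixel's neighborhood, multiply each `kernel` value with the value of the corresponding neighboring pixel, and accumulate the products. The loop covers an n x n neighborhood, where n is the width/height of the `kernel` (3 for the default kernel). Here, `delta` is the size of one texel in texture coordinates, and `index` walks through `kernel` backwards.

```glsl
vec2 delta = 1.0/textureSize(textureMap, 0); // size of one texel in texture coordinates
vec4 color = vec4(0.0);                      // convolution sum
int index = 8;                               // last kernel entry; read backwards
for(int j=-1;j<=1;j++) {
    for(int i=-1;i<=1;i++) {
        // Weight the neighbor at offset (i,j) by the matching kernel coefficient
        color += kernel[index--] * texture(textureMap, vUV+(vec2(i,j)*delta));
    }
}
```

- After the nested loops, we divide the color value by the total number of values in the `kernel`. For a 3 x 3 `kernel`, there are nine values. Finally, we add the convolved color value to the current pixel's value.

```glsl
color /= 9.0;
vFragColor = color + texture(textureMap, vUV);
```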
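To check the shader's arithmetic off the GPU, the steps above can be mirrored on the CPU. This is a C++ sketch under stated assumptions: a grayscale image instead of an RGBA texture, nearest-texel lookups, and clamp-to-edge addressing at the borders. The names `texel` and `applyConvolutionShader` are hypothetical helpers, not part of the recipe's code.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <vector>

// The recipe's default kernel, in the same order as the shader's constant array.
const std::array<float, 9> kKernel = {-1.0f, -1.0f, -1.0f,
                                      -1.0f,  8.0f, -1.0f,
                                      -1.0f, -1.0f, -1.0f};

// Nearest-texel lookup with clamp-to-edge addressing (an assumption; the
// shader's behavior at the borders depends on the texture's wrap mode).
float texel(const std::vector<float>& img, int w, int h, int x, int y) {
    x = x < 0 ? 0 : (x >= w ? w - 1 : x);
    y = y < 0 ? 0 : (y >= h ? h - 1 : y);
    return img[static_cast<std::size_t>(y) * w + x];
}

// Runs the fragment shader's per-pixel logic for every pixel of the image.
std::vector<float> applyConvolutionShader(const std::vector<float>& img, int w, int h,
                                          const std::array<float, 9>& kernel) {
    assert(img.size() == static_cast<std::size_t>(w) * h);
    std::vector<float> out(img.size());
    for (int y = 0; y < h; ++y)
        for (int x = 0; x < w; ++x) {
            float color = 0.0f;
            int index = 8;  // read the kernel backwards, like kernel[index--]
            for (int j = -1; j <= 1; ++j)
                for (int i = -1; i <= 1; ++i)
                    color += kernel[index--] * texel(img, w, h, x + i, y + j);
            color /= 9.0f;  // divide by the number of kernel values
            // Add the filtered value to the original pixel.
            out[static_cast<std::size_t>(y) * w + x] = color + texel(img, w, h, x, y);
        }
    return out;
}
```

Because the default kernel's coefficients sum to zero, flat regions come out unchanged, while a bright pixel gets brighter and its neighbors darker, which is the sharpening effect. On the GPU, values written to a normalized 8-bit back buffer are clamped to [0, 1]; this sketch keeps the raw floats so the response is visible.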

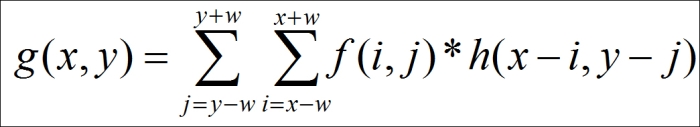

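For a 2D digital image f(x,y), the processed image g(x,y), after convolution with a kernel h(x,y) of size (2a+1) x (2b+1), is defined mathematically as follows:

$$g(x,y) = h(x,y) * f(x,y) = \sum_{s=-a}^{a}\sum_{t=-b}^{b} h(s,t)\,f(x-s,\,y-t)$$

For the 3 x 3 kernel used in this recipe, a = b = 1.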

For each pixel, we simply sum the products of the image pixel values in its neighborhood with the corresponding kernel coefficients. For details about the kernel coefficients, we refer the reader to any standard text on digital image processing, such as the one listed in the See also section.
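The f(x-s, y-t) term means that convolution flips the kernel, which matches the shader's loop reading `kernel` backwards with `index--`. The following C++ sketch makes the flip visible by convolving a unit impulse with an asymmetric kernel: convolution reproduces the kernel as stored, whereas correlation (no flip) reproduces it rotated by 180 degrees. The 5 x 5 grid, zero padding outside the image, and all names are illustrative assumptions.

```cpp
#include <array>
#include <cassert>

constexpr int N = 5;                     // illustrative 5 x 5 image
using Grid = std::array<float, N * N>;   // row-major grayscale pixels
using Kernel3 = std::array<float, 9>;    // h(s,t) stored at [(t + 1) * 3 + (s + 1)]

// Zero padding outside the image, chosen here only to keep the output easy to read.
float pixel(const Grid& f, int x, int y) {
    if (x < 0 || x >= N || y < 0 || y >= N) return 0.0f;
    return f[y * N + x];
}

// A unit impulse: 1 at (x0, y0), 0 elsewhere.
Grid impulse(int x0, int y0) {
    assert(x0 >= 0 && x0 < N && y0 >= 0 && y0 < N);
    Grid f{};
    f[y0 * N + x0] = 1.0f;
    return f;
}

// Convolution, as in the equation: g(x,y) = sum_s sum_t h(s,t) f(x-s, y-t).
Grid convolve(const Grid& f, const Kernel3& h) {
    Grid g{};
    for (int y = 0; y < N; ++y)
        for (int x = 0; x < N; ++x)
            for (int t = -1; t <= 1; ++t)
                for (int s = -1; s <= 1; ++s)
                    g[y * N + x] += h[(t + 1) * 3 + (s + 1)] * pixel(f, x - s, y - t);
    return g;
}

// Correlation, for comparison: the kernel is not flipped, f(x+s, y+t).
Grid correlate(const Grid& f, const Kernel3& h) {
    Grid c{};
    for (int y = 0; y < N; ++y)
        for (int x = 0; x < N; ++x)
            for (int t = -1; t <= 1; ++t)
                for (int s = -1; s <= 1; ++s)
                    c[y * N + x] += h[(t + 1) * 3 + (s + 1)] * pixel(f, x + s, y + t);
    return c;
}
```

For symmetric kernels, such as the recipe's default sharpening kernel, convolution and correlation give identical results, so the flip only matters for asymmetric kernels like emboss filters.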

The overall algorithm works like this. We set up a framebuffer object (FBO) for offscreen rendering and render our image to the FBO's color attachment instead of the back buffer, so the attachment now stores our image. In the second pass, we bind that attachment (the output of the first pass) as the input texture of the convolution shader, render a full-screen quad to the back buffer, and apply the convolution shader to it. This performs convolution on the input image. Finally, we swap the front and back buffers to show the result on the screen.

After the image is loaded and an OpenGL texture has been generated, we render a screen-aligned quad so that the fragment shader runs for every pixel on the screen. For the current fragment, the fragment shader iterates over its neighborhood and accumulates the product of each kernel entry with the corresponding texture lookup. After the loop finishes, the sum is divided by the total number of kernel coefficients. Finally, the convolution sum is added to the current pixel's value. There are several different kinds of kernels. We list the ones we will use in this recipe in the following table.

Tip

The convolution result near the image borders depends on the wrapping mode set for the texture through glTexParameteri (GL_TEXTURE_WRAP_S and GL_TEXTURE_WRAP_T). With GL_CLAMP_TO_EDGE, which replaces the legacy GL_CLAMP mode in a core profile, lookups that fall outside the image return the nearest edge pixel, so the border pixels are effectively repeated outward. With GL_REPEAT, the texture coordinates wrap around, so the out-of-image pixels are fetched from the opposite edge of the image.
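A one-dimensional C++ sketch shows how the two modes change the result at the borders. `wrapIndex` and `filterRow` are hypothetical helpers that mimic, on integer texel indices with nearest sampling, what GL_CLAMP_TO_EDGE and GL_REPEAT do to out-of-range coordinates; real texture sampling works on normalized coordinates and may also filter linearly.

```cpp
#include <array>
#include <cassert>
#include <vector>

enum class Wrap { ClampToEdge, Repeat };

// Maps an integer texel index into [0, n) the way the wrap mode would
// (nearest-texel sampling assumed for simplicity).
int wrapIndex(int i, int n, Wrap mode) {
    if (mode == Wrap::ClampToEdge)
        return i < 0 ? 0 : (i >= n ? n - 1 : i);  // reuse the border texel
    int r = i % n;                                  // wrap to the opposite edge
    return r < 0 ? r + n : r;
}

// 3-tap convolution along one row, reading out-of-range neighbors via the wrap mode.
std::vector<float> filterRow(const std::vector<float>& row,
                             const std::array<float, 3>& k, Wrap mode) {
    const int n = static_cast<int>(row.size());
    assert(n > 0);
    std::vector<float> out(row.size());
    for (int x = 0; x < n; ++x) {
        float sum = 0.0f;
        for (int s = -1; s <= 1; ++s)
            sum += k[s + 1] * row[wrapIndex(x - s, n, mode)];
        out[x] = sum;
    }
    return out;
}
```

With the row {9, 0, 0, 0} and an unnormalized box kernel {1, 1, 1}, clamping counts the bright left border pixel twice at x = 0 (result 18) and leaves the right end at 0, whereas repeating makes the bright pixel bleed into the right edge (result 9 at x = 3). Interior pixels are identical in both modes.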

We have only touched on the topic of digital image convolution here. For details, we refer the reader to the See also section. In the demo application, the user can set the required kernel and then press the Space bar to see the filtered image. Pressing the Space bar again shows the normal, unfiltered image.

- Digital Image Processing, Third Edition, Rafael C. Gonzalez and Richard E. Woods, Prentice Hall

- FBO tutorial by Song Ho Ahn: http://www.songho.ca/opengl/gl_fbo.html