In this recipe, we will learn how to implement simple global illumination using spherical harmonics. Spherical harmonics (SH) methods approximate a function defined on a sphere as a weighted sum of basis functions, so the function is described by a small set of coefficients. Rather than calculating the lighting contribution by evaluating the bidirectional reflectance distribution function (BRDF), this method uses lighting information captured in special HDR/RGBE images. The only per-vertex attribute the method requires is the normal, which is combined with the spherical harmonics coefficients extracted from the HDR/RGBE images.

The RGBE image format was invented by Greg Ward. Each pixel stores three bytes for the RGB value (one byte each for the red, green, and blue channels) and an additional byte that stores an exponent shared by all three channels. This gives these files a much wider range of values than a conventional 8-bit-per-channel image while still using only four bytes per pixel. For details about the theory behind the spherical harmonics method and the RGBE format, refer to the references in the See also section of this recipe.
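To make the shared-exponent idea concrete, the following is a minimal sketch of decoding one RGBE pixel to floating-point RGB. The function name `decodeRGBE` is hypothetical and is not part of the recipe's code; the conversion follows the commonly used convention in which the exponent byte is biased by 128 and a zero exponent byte means black.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <cstdint>

// Hypothetical helper (not from the recipe's source): converts one 4-byte
// RGBE pixel (R, G, B mantissas followed by the shared exponent) to floats.
std::array<float, 3> decodeRGBE(const std::uint8_t* rgbe) {
    assert(rgbe != nullptr);
    // A zero exponent byte encodes a black pixel.
    if (rgbe[3] == 0) {
        return {0.0f, 0.0f, 0.0f};
    }
    // The exponent is biased by 128; the extra 8 scales the 8-bit
    // mantissas by 1/256.
    const float scale = std::ldexp(1.0f, static_cast<int>(rgbe[3]) - (128 + 8));
    return {rgbe[0] * scale, rgbe[1] * scale, rgbe[2] * scale};
}
```

With this convention, the bytes (128, 128, 128, 129) decode to a value of 1.0 in each channel, and raising the exponent byte by one doubles all three channels at once.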

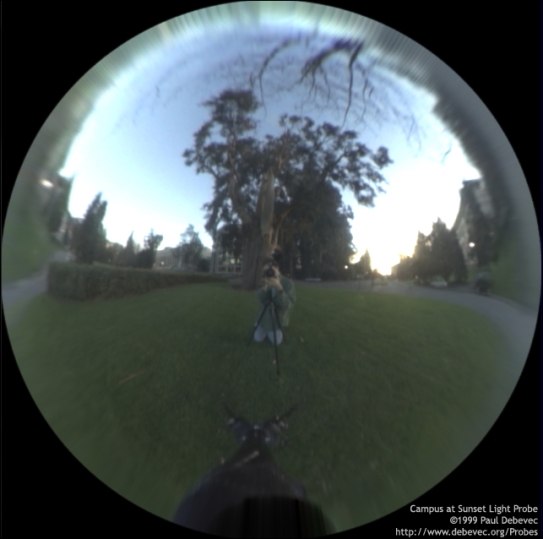

To give an overview of the recipe: the nine SH lighting coefficients (L00 to L22) are estimated by projecting the probe image onto the spherical harmonics basis. Details of the projection method are given in the references in the See also section. For most of the commonly used HDR light probes, these coefficients are already documented, and we use the documented values as constants in our vertex shader. The constants C1 to C5 in the shader are fixed numerical factors of the lighting formula and do not depend on the probe.

The code for this recipe is contained in the Chapter6/SphericalHarmonics directory. For this recipe, we will be using the Obj mesh loader discussed in the previous chapter.

Let us start this recipe by following these simple steps:

- Load an obj mesh using the ObjLoader class and fill the OpenGL buffer objects and the OpenGL textures, using the material information loaded from the file, as in the previous recipes.

- In the vertex shader that is used for the mesh, perform the lighting calculation using spherical harmonics. The vertex shader is detailed as follows:

```glsl
#version 330 core

layout(location = 0) in vec3 vVertex;
layout(location = 1) in vec3 vNormal;
layout(location = 2) in vec2 vUV;

smooth out vec2 vUVout;
smooth out vec4 diffuse;

uniform mat4 P;
uniform mat4 MV;
uniform mat3 N;

const float C1 = 0.429043;
const float C2 = 0.511664;
const float C3 = 0.743125;
const float C4 = 0.886227;
const float C5 = 0.247708;
const float PI = 3.1415926535897932384626433832795;

//Old town square probe
const vec3 L00  = vec3( 0.871297,  0.875222,  0.864470);
const vec3 L1m1 = vec3( 0.175058,  0.245335,  0.312891);
const vec3 L10  = vec3( 0.034675,  0.036107,  0.037362);
const vec3 L11  = vec3(-0.004629, -0.029448, -0.048028);
const vec3 L2m2 = vec3(-0.120535, -0.121160, -0.117507);
const vec3 L2m1 = vec3( 0.003242,  0.003624,  0.007511);
const vec3 L20  = vec3(-0.028667, -0.024926, -0.020998);
const vec3 L21  = vec3(-0.077539, -0.086325, -0.091591);
const vec3 L22  = vec3(-0.161784, -0.191783, -0.219152);

const vec3 scaleFactor = vec3(0.161784/(0.871297+0.161784),
                              0.191783/(0.875222+0.191783),
                              0.219152/(0.864470+0.219152));

void main()
{
    vUVout = vUV;
    vec3 tmpN = normalize(N*vNormal);
    vec3 diff = C1 * L22 * (tmpN.x*tmpN.x - tmpN.y*tmpN.y) +
                C3 * L20 * tmpN.z*tmpN.z +
                C4 * L00 -
                C5 * L20 +
                2.0 * C1 * L2m2*tmpN.x*tmpN.y +
                2.0 * C1 * L21*tmpN.x*tmpN.z +
                2.0 * C1 * L2m1*tmpN.y*tmpN.z +
                2.0 * C2 * L11*tmpN.x +
                2.0 * C2 * L1m1*tmpN.y +
                2.0 * C2 * L10*tmpN.z;
    diff *= scaleFactor;
    diffuse = vec4(diff, 1);
    gl_Position = P*(MV*vec4(vVertex,1));
}
```

- The per-vertex color calculated by the vertex shader is interpolated by the rasterizer and then the fragment shader sets the color as the current fragment color.

```glsl
#version 330 core

uniform sampler2D textureMap;
uniform float useDefault;

smooth in vec4 diffuse;
smooth in vec2 vUVout;

layout(location=0) out vec4 vFragColor;

void main()
{
    vFragColor = mix(texture(textureMap, vUVout)*diffuse, diffuse, useDefault);
}
```

Spherical harmonics lighting approximates the incoming light using a small set of coefficients and the spherical harmonics basis functions. The coefficients are precomputed from an HDR/RGBE image file that captures the lighting of an environment. This allows us to light the scene as if it were placed in that environment, which makes the rendering feel more immersive.

The method reproduces the diffuse reflection using information extracted from an HDR/RGBE light probe. The light probe itself is not accessed in the code. Instead, the spherical harmonics coefficients are extracted from the original light probe by projecting it onto the spherical harmonics basis. Since this is a mathematically involved process, we refer interested readers to the references in the See also section. The code for generating the spherical harmonics coefficients is available online. We used this code to generate the coefficients for the shader.
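As a rough illustration of what projection means here, the sketch below integrates a radiance function against the first nine real spherical harmonics basis functions using a simple midpoint rule over the sphere. It is not the online tool mentioned above: the names `shBasis` and `projectToSH` are hypothetical, the radiance is supplied as an analytic function rather than read from a probe image, and only a single color channel is handled.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <functional>

// Real SH basis for bands 0..2 at a unit direction (x, y, z).
// Order: L00, L1m1, L10, L11, L2m2, L2m1, L20, L21, L22.
std::array<double, 9> shBasis(double x, double y, double z) {
    return {
        0.282095,
        0.488603 * y,
        0.488603 * z,
        0.488603 * x,
        1.092548 * x * y,
        1.092548 * y * z,
        0.315392 * (3.0 * z * z - 1.0),
        1.092548 * x * z,
        0.546274 * (x * x - y * y)
    };
}

// Hypothetical projection routine: approximates the integral of
// radiance(x, y, z) * Y_lm over the sphere for each of the nine basis
// functions, using a midpoint rule in spherical angles (theta, phi).
std::array<double, 9> projectToSH(
    const std::function<double(double, double, double)>& radiance,
    int thetaSteps = 64, int phiSteps = 128) {
    assert(thetaSteps > 0 && phiSteps > 0);
    const double pi = 3.14159265358979323846;
    const double dTheta = pi / thetaSteps;
    const double dPhi = 2.0 * pi / phiSteps;
    std::array<double, 9> coeffs{};
    for (int i = 0; i < thetaSteps; ++i) {
        const double theta = (i + 0.5) * dTheta;
        for (int j = 0; j < phiSteps; ++j) {
            const double phi = (j + 0.5) * dPhi;
            const double x = std::sin(theta) * std::cos(phi);
            const double y = std::sin(theta) * std::sin(phi);
            const double z = std::cos(theta);
            // Solid angle covered by this sample cell.
            const double solidAngle = std::sin(theta) * dTheta * dPhi;
            const std::array<double, 9> basis = shBasis(x, y, z);
            const double value = radiance(x, y, z);
            for (int k = 0; k < 9; ++k) {
                coeffs[k] += value * basis[k] * solidAngle;
            }
        }
    }
    return coeffs;
}
```

For a constant radiance of 1, only the first coefficient is significantly non-zero, which matches the intuition that L00 captures the average brightness of the environment. A real tool would run the same sum over the pixels of the light probe, once per color channel, to produce the vec3 constants used in the shader.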

Spherical harmonics provide a frequency-space representation of a function defined on a sphere, such as the lighting stored in a light probe. As shown by Ramamoorthi and Hanrahan, the first nine spherical harmonic coefficients are enough to give a reasonable approximation of the diffuse reflection component of a surface. With these nine coefficients, the diffuse component is a quadratic polynomial in the surface normal: it combines constant, linear, and quadratic terms in the components of the normal, each weighted by one of the coefficients. The result is then multiplied by a per-channel scale factor, which the shader defines as a constant computed from the magnitudes of the L00 and L22 coefficients, as shown in the following code snippet:

```glsl
vec3 tmpN = normalize(N*vNormal);
vec3 diff = C1 * L22 * (tmpN.x*tmpN.x - tmpN.y*tmpN.y) +
            C3 * L20 * tmpN.z*tmpN.z +
            C4 * L00 -
            C5 * L20 +
            2.0 * C1 * L2m2*tmpN.x*tmpN.y +
            2.0 * C1 * L21*tmpN.x*tmpN.z +
            2.0 * C1 * L2m1*tmpN.y*tmpN.z +
            2.0 * C2 * L11*tmpN.x +
            2.0 * C2 * L1m1*tmpN.y +
            2.0 * C2 * L10*tmpN.z;
diff *= scaleFactor;
```
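When debugging, it can help to evaluate the same polynomial on the CPU and compare it with the shader output. The following is a minimal sketch under that assumption; `SHCoefficients`, `oldTownSquare`, and `evalDiffuseSH` are hypothetical names, the constants are copied from the vertex shader above, and the final multiplication by scaleFactor is left out.

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Vec3 = std::array<double, 3>;

// Constants copied from the recipe's vertex shader.
const double C1 = 0.429043, C2 = 0.511664, C3 = 0.743125,
             C4 = 0.886227, C5 = 0.247708;

// Nine SH lighting coefficients of one probe, one RGB triple each.
struct SHCoefficients {
    Vec3 L00, L1m1, L10, L11, L2m2, L2m1, L20, L21, L22;
};

// Old town square probe values, copied from the shader.
SHCoefficients oldTownSquare() {
    return SHCoefficients{
        Vec3{ 0.871297,  0.875222,  0.864470},
        Vec3{ 0.175058,  0.245335,  0.312891},
        Vec3{ 0.034675,  0.036107,  0.037362},
        Vec3{-0.004629, -0.029448, -0.048028},
        Vec3{-0.120535, -0.121160, -0.117507},
        Vec3{ 0.003242,  0.003624,  0.007511},
        Vec3{-0.028667, -0.024926, -0.020998},
        Vec3{-0.077539, -0.086325, -0.091591},
        Vec3{-0.161784, -0.191783, -0.219152}};
}

// Hypothetical CPU mirror of the shader expression; expects a unit normal
// and returns the unscaled diffuse color (before "diff *= scaleFactor").
Vec3 evalDiffuseSH(const SHCoefficients& sh, const Vec3& n) {
    const double x = n[0], y = n[1], z = n[2];
    assert(std::fabs(x * x + y * y + z * z - 1.0) < 1e-6);
    Vec3 diff{};
    for (int c = 0; c < 3; ++c) {
        diff[c] = C1 * sh.L22[c] * (x * x - y * y)
                + C3 * sh.L20[c] * z * z
                + C4 * sh.L00[c]
                - C5 * sh.L20[c]
                + 2.0 * C1 * (sh.L2m2[c] * x * y + sh.L21[c] * x * z + sh.L2m1[c] * y * z)
                + 2.0 * C2 * (sh.L11[c] * x + sh.L1m1[c] * y + sh.L10[c] * z);
    }
    return diff;
}
```

For a normal pointing along +z, every term containing x or y vanishes, so only the L00, L20, and L10 coefficients contribute. This makes +z a convenient direction for a quick sanity check.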

The obtained per-vertex diffuse component is then forwarded through the rasterizer to the fragment shader, where it is multiplied by the color sampled from the surface texture.

```glsl
vFragColor = mix(texture(textureMap, vUVout)*diffuse, diffuse, useDefault);
```
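The role of useDefault follows from how the GLSL mix function blends its first two arguments. The sketch below mirrors the blend on the CPU; `shadeFragment` is a hypothetical name used only for illustration.

```cpp
#include <array>
#include <cassert>

using Color = std::array<float, 4>;

// Hypothetical CPU version of the fragment shader's blend.
// texel is the sampled texture color, diffuse the interpolated SH color.
Color shadeFragment(const Color& texel, const Color& diffuse, float useDefault) {
    assert(useDefault >= 0.0f && useDefault <= 1.0f);
    Color out{};
    for (int i = 0; i < 4; ++i) {
        const float textured = texel[i] * diffuse[i];
        // GLSL mix(a, b, t) returns a * (1 - t) + b * t.
        out[i] = textured * (1.0f - useDefault) + diffuse[i] * useDefault;
    }
    return out;
}
```

With useDefault set to 0, the fragment receives the texture color modulated by the spherical harmonics lighting; with useDefault set to 1, the texture is ignored and the lighting color is output on its own.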

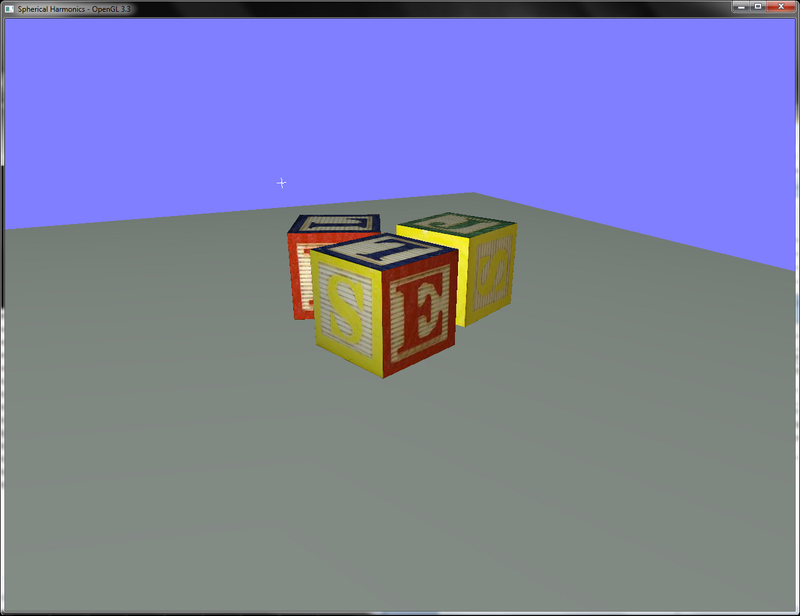

The demo application implementing this recipe renders the same scene as in the previous recipes, as shown in the following figure. We can rotate the camera view using the left mouse button, whereas the point light source can be rotated using the right mouse button. Pressing the Space bar toggles the use of spherical harmonics. When spherical harmonics lighting is on, we get the following result:

Without the spherical harmonics lighting, the result is as follows:

The probe image used for this rendering is shown in the following figure:

Note that this method approximates global illumination by modifying the diffuse component using the spherical harmonics coefficients. We can also add the conventional Blinn-Phong lighting model on top of it. For that, we would only need to evaluate the Blinn-Phong model using the normal and the light position, as we did in the previous recipes.

- Ravi Ramamoorthi and Pat Hanrahan, An Efficient Representation for Irradiance Environment Maps: http://www1.cs.columbia.edu/~ravir/papers/envmap/index.html

- Randi J. Rost, Bill M. Licea-Kane, Dan Ginsburg, John M. Kessenich, Barthold Lichtenbelt, Hugh Malan, Mike Weiblen, OpenGL Shading Language, Third Edition, Section 12.3, Lighting and Spherical Harmonics, Addison-Wesley Professional

- Kelly Dempski and Emmanuel Viale, Advanced Lighting and Materials with Shaders, Chapter 8, Spherical Harmonic Lighting, Jones & Bartlett Publishers

- The RGBE image format specifications: http://www.graphics.cornell.edu/online/formats/rgbe/

- Paul Debevec HDR light probes: http://www.pauldebevec.com/Probes/

- Spherical harmonics lighting tutorial: http://www.paulsprojects.net/opengl/sh/sh.html