Now that we have introduced the basics of GLSL using a simple example, we will incorporate further complexity to provide a complete framework that enables users to modify any part of the rendering pipeline in the future.

The code in this framework is divided into smaller modules that handle the shader programs (shader.cpp and shader.hpp), texture mapping (texture.cpp and texture.hpp), and user inputs (controls.cpp and controls.hpp). First, we will reuse the mechanism for loading shader programs in OpenGL introduced previously and incorporate new shader programs for our purpose. Next, we will introduce the steps required for texture mapping. Finally, we will describe the main program, which integrates all the logical pieces and prepares the final demo. In this section, we will show how to load an image and convert it into a texture object to be rendered in OpenGL. With this framework in mind, we will further demonstrate how to render a video in the next section.

To avoid redundancy here, we will refer readers to the previous section for part of this demo (in particular, shader.cpp and shader.hpp).

First, we aggregate all the common libraries used in our program into the common.h header file. The common.h file is then included in shader.hpp, controls.hpp, texture.hpp, and main.cpp:

```cpp
#ifndef COMMON_H
#define COMMON_H

#include <stdlib.h>
#include <string.h>
#include <stdio.h>
#include <string>
#include <GL/glew.h>
#include <GLFW/glfw3.h>

using namespace std;

#endif
```

We previously implemented a mechanism to load the vertex and fragment shader programs from files, and we will reuse that code here (shader.cpp and shader.hpp). However, we will modify the actual vertex and fragment shader programs as follows.

For the fragment shader (texture.frag), we will implement the following:

```glsl
#version 150

in vec2 UV;

out vec4 color;

uniform sampler2D textureSampler;

void main(){
    //look up the texel at the interpolated UV coordinate
    color = texture(textureSampler, UV).rgba;
}
```

For the vertex shader (transform.vert), we will implement the following:

```glsl
#version 150

in vec3 vertexPosition_modelspace;
in vec2 vertexUV;

out vec2 UV;

uniform mat4 MVP;

void main(){
    //position of the vertex in clip space
    gl_Position = MVP * vec4(vertexPosition_modelspace, 1);
    //pass the UV coordinates through to the fragment shader
    UV = vertexUV;
}
```

For the texture objects, in texture.cpp, we provide a mechanism to load images or video streams into texture memory. We also take advantage of the SOIL library for simple image loading and the OpenCV library for more advanced video stream handling and filtering (refer to the next section).

In texture.cpp, we will implement the following:

- Include the texture.hpp header and the SOIL library header for simple image loading:

```cpp
#include "texture.hpp"
#include <SOIL.h>
```

- Define the initialization of texture objects and set up all parameters:

```cpp
GLuint initializeTexture(const unsigned char *image_data, int width, int height, GLenum format){
    GLuint textureID = 0;
    //create and bind one texture element
    glGenTextures(1, &textureID);
    glBindTexture(GL_TEXTURE_2D, textureID);
    //pixel rows are tightly packed (no padding to 4-byte boundaries)
    glPixelStorei(GL_UNPACK_ALIGNMENT, 1);
    /* Specify the target texture. The parameters describe the
       format and type of the image data */
    glTexImage2D(GL_TEXTURE_2D, 0, GL_RGBA, width, height, 0, format, GL_UNSIGNED_BYTE, image_data);
    /* Set the wrap parameter for texture coordinates s & t to
       GL_CLAMP_TO_EDGE, so lookups outside [0, 1] return the edge
       texels instead of repeating the image */
    glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_S, GL_CLAMP_TO_EDGE);
    glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_T, GL_CLAMP_TO_EDGE);
    /* Set the magnification method to linear, which returns the weighted
       average of the four texture elements closest to the center of the pixel */
    glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_LINEAR);
    /* Choose the two mipmaps that most closely match the size of the pixel
       being textured, sample each one with GL_LINEAR, and blend the two results */
    glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_LINEAR_MIPMAP_LINEAR);
    //build the mipmap chain used by the minification filter
    glGenerateMipmap(GL_TEXTURE_2D);
    return textureID;
}
```

- Define the routine to update the texture memory:

```cpp
void updateTexture(const unsigned char *image_data, int width, int height, GLenum format){
    /* Update the currently bound texture in place; the region must fit
       inside the texture allocated by initializeTexture */
    glTexSubImage2D(GL_TEXTURE_2D, 0, 0, 0, width, height, format, GL_UNSIGNED_BYTE, image_data);
    /* Set the wrap parameter for texture coordinates s & t to
       GL_CLAMP_TO_EDGE, so lookups outside [0, 1] return the edge texels */
    glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_S, GL_CLAMP_TO_EDGE);
    glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_T, GL_CLAMP_TO_EDGE);
    /* Set the magnification method to linear, which returns the weighted
       average of the four texture elements closest to the center of the pixel */
    glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_LINEAR);
    /* Choose the two mipmaps that most closely match the size of the pixel
       being textured, sample each one with GL_LINEAR, and blend the two results */
    glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_LINEAR_MIPMAP_LINEAR);
    //rebuild the mipmaps from the new image data
    glGenerateMipmap(GL_TEXTURE_2D);
}
```

- Finally, implement the texture-loading mechanism for images. The function takes the image path, converts the image into the RGBA format, and creates a texture object from it:

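```cpp
GLuint loadImageToTexture(const char *imagepath, int *width, int *height){
    int channels;
    GLuint textureID = 0;
    //load the image and convert it to the RGBA format
    unsigned char *image = SOIL_load_image(imagepath, width, height, &channels, SOIL_LOAD_RGBA);
    if(!image){
        printf("Failed to load image %s\n", imagepath);
        return textureID;
    }
    printf("Loaded Image: %d x %d - %d channels\n", *width, *height, channels);
    textureID = initializeTexture(image, *width, *height, GL_RGBA);
    //the pixels now live in texture memory, so free the CPU copy
    SOIL_free_image_data(image);
    return textureID;
}
```

Note that loadImageToTexture reports the image dimensions through its width and height arguments, which main.cpp uses to compute the aspect ratio of the image. The declaration in texture.hpp must match this signature.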
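The parameters set in initializeTexture determine what the texture() call in the fragment shader returns between texel centers and beyond the edges of the image. The GPU performs this filtering in hardware, so the sketch below is only a CPU-side illustration. It applies GL_CLAMP_TO_EDGE wrapping and GL_LINEAR filtering to a hypothetical single-channel GrayTexture. Mipmapping, which GL_LINEAR_MIPMAP_LINEAR adds when the image is minified, is left out:

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical single-channel texture used only for this illustration.
// Texels are stored row by row, and row 0 corresponds to t = 0,
// as with the data passed to glTexImage2D.
struct GrayTexture {
    int width;
    int height;
    std::vector<float> texels;  // width * height values

    // GL_CLAMP_TO_EDGE: texel indices outside the image are
    // replaced by the index of the nearest edge texel.
    float fetch(int x, int y) const {
        x = std::max(0, std::min(x, width - 1));
        y = std::max(0, std::min(y, height - 1));
        return texels[static_cast<std::size_t>(y) * width + x];
    }
};

// GL_LINEAR: weighted average of the 2 x 2 texels whose centers
// surround the normalized texture coordinate (u, v).
float sampleBilinear(const GrayTexture& tex, float u, float v) {
    // Texel centers sit at (i + 0.5) / size, so shift by half a texel.
    float x = u * tex.width - 0.5f;
    float y = v * tex.height - 0.5f;
    int x0 = static_cast<int>(std::floor(x));
    int y0 = static_cast<int>(std::floor(y));
    float fx = x - x0;  // weight of column x0 + 1
    float fy = y - y0;  // weight of row y0 + 1
    float row0 = tex.fetch(x0, y0) * (1.0f - fx) + tex.fetch(x0 + 1, y0) * fx;
    float row1 = tex.fetch(x0, y0 + 1) * (1.0f - fx) + tex.fetch(x0 + 1, y0 + 1) * fx;
    return row0 * (1.0f - fy) + row1 * fy;
}
```

Consider the 2 x 2 texture {0, 1, 2, 3}:

- Sampling at the center (0.5, 0.5) averages all four texels to 1.5.
- Sampling at a texel center such as (0.75, 0.25) returns that texel (1) unchanged.
- Sampling at the corner (1, 1) returns the edge value 3, because the out-of-range neighbors are clamped.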

On the controller front, we capture the arrow keys and modify the camera model parameters in real time. This allows us to move the camera forward and backward as well as change its angle of view (field of view). In controls.cpp, we implement the following:

- Include the GLM library headers and the controls.hpp header for the projection matrix and view matrix computations:

```cpp
#define GLM_FORCE_RADIANS
#include <glm/glm.hpp>
#include <glm/gtc/matrix_transform.hpp>
#include <glm/gtc/constants.hpp> //glm::pi
#include "controls.hpp"
```

- Define global variables (camera parameters as well as view and projection matrices) to be updated after each frame:

```cpp
//initial position of the camera
glm::vec3 g_position = glm::vec3(0, 0, 2);
const float speed = 3.0f; // 3 units / second
float g_initial_fov = glm::pi<float>()*0.4f;
//the view matrix and projection matrix
glm::mat4 g_view_matrix;
glm::mat4 g_projection_matrix;
```
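In the computeViewProjectionMatrices function below, speed is expressed in units per second and is multiplied by delta_time, the time elapsed since the previous frame. The following sketch checks that arithmetic using a hypothetical accumulate helper. Holding a key for one second moves the camera by speed units, or changes the field of view by 0.1 * speed = 0.3 radians, whatever the frame rate:

```cpp
#include <cassert>
#include <cmath>

// Hypothetical helper: total change produced by adding
// rate_per_second * delta_time once per frame, for `frames` equal frames
// that together span `seconds`.
float accumulate(float rate_per_second, float seconds, int frames) {
    float delta_time = seconds / frames;  // time between consecutive frames
    float total = 0.0f;
    for (int i = 0; i < frames; ++i) {
        total += rate_per_second * delta_time;
    }
    return total;
}
```

Without the delta_time factor, the same key press would move the camera twice as far at 60 frames per second as at 30.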

- Create helper functions to return the most updated view matrix and projection matrix:

```cpp
glm::mat4 getViewMatrix(){
    return g_view_matrix;
}

glm::mat4 getProjectionMatrix(){
    return g_projection_matrix;
}
```

- Compute the view matrix and projection matrix based on the user input:

```cpp
void computeViewProjectionMatrices(GLFWwindow* window){
    static double last_time = glfwGetTime();
    //compute the time difference between the current and last frame
    double current_time = glfwGetTime();
    float delta_time = float(current_time - last_time);
    int width, height;
    glfwGetWindowSize(window, &width, &height);
    //direction vector for movement
    glm::vec3 direction(0, 0, -1);
    //up vector
    glm::vec3 up = glm::vec3(0, -1, 0);
    if (glfwGetKey(window, GLFW_KEY_UP) == GLFW_PRESS){
        g_position += direction * delta_time * speed;
    }
    else if (glfwGetKey(window, GLFW_KEY_DOWN) == GLFW_PRESS){
        g_position -= direction * delta_time * speed;
    }
    else if (glfwGetKey(window, GLFW_KEY_RIGHT) == GLFW_PRESS){
        g_initial_fov -= 0.1f * delta_time * speed;
    }
    else if (glfwGetKey(window, GLFW_KEY_LEFT) == GLFW_PRESS){
        g_initial_fov += 0.1f * delta_time * speed;
    }
    /* update the projection matrix: field of view, aspect ratio,
       display range: 0.1 unit <-> 100 units */
    g_projection_matrix = glm::perspective(g_initial_fov, (float)width/(float)height, 0.1f, 100.0f);
    //update the view matrix
    g_view_matrix = glm::lookAt(
        g_position,           //camera position
        g_position+direction, //point the camera looks at
        up                    //up direction
    );
    last_time = current_time;
}
```
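The up vector passed to glm::lookAt is (0, -1, 0) rather than the usual (0, 1, 0). This rotates the view by 180 degrees about the viewing direction. It appears intended to cancel the orientation produced by the UV map in main.cpp, which puts u = 1 on the left edge of the quad and v = 0 on its bottom edge. Images loaded with SOIL store the top row first, while glTexImage2D treats the first row as t = 0.

The LEFT and RIGHT keys act as a zoom, because a narrower field of view spreads the same scene over more of the screen.

The sketch below reproduces both effects in plain C++ instead of GLM. The helper names are hypothetical, and the sketch assumes GLM's default right-handed convention with a [-1, 1] depth range:

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Vec3 = std::array<float, 3>;

Vec3 sub(const Vec3& a, const Vec3& b) { return {a[0] - b[0], a[1] - b[1], a[2] - b[2]}; }
float dot(const Vec3& a, const Vec3& b) { return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]; }
Vec3 cross(const Vec3& a, const Vec3& b) {
    return {a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0]};
}
Vec3 normalize(const Vec3& a) {
    float len = std::sqrt(dot(a, a));
    return {a[0] / len, a[1] / len, a[2] / len};
}

// World space -> view space with the right-handed look-at construction
// (the camera looks down its own -z axis).
Vec3 toViewSpace(const Vec3& p, const Vec3& eye, const Vec3& center, const Vec3& up) {
    Vec3 f = normalize(sub(center, eye));  // forward
    Vec3 s = normalize(cross(f, up));      // camera right
    Vec3 u = cross(s, f);                  // camera up
    Vec3 d = sub(p, eye);
    return {dot(s, d), dot(u, d), -dot(f, d)};
}

// View space -> normalized device coordinates: the OpenGL perspective
// matrix followed by the perspective divide by w = -z.
Vec3 viewToNdc(const Vec3& v, float fovy, float aspect, float near_plane, float far_plane) {
    float f = 1.0f / std::tan(fovy / 2.0f);
    float x_clip = v[0] * f / aspect;
    float y_clip = v[1] * f;
    float z_clip = v[2] * (far_plane + near_plane) / (near_plane - far_plane)
                 + 2.0f * far_plane * near_plane / (near_plane - far_plane);
    float w_clip = -v[2];
    return {x_clip / w_clip, y_clip / w_clip, z_clip / w_clip};
}
```

Take eye = (0, 0, 2), looking toward (0, 0, 1), with up = (0, -1, 0). The world point (1, 0, 0) then lands at view-space x = -1, on the left side of the screen. Shrinking fovy from 0.5 * pi to 0.4 * pi pushes the same view-space point further from the center of the screen.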

In main.cpp, we will use the various previously defined functions to complete the implementation:

- Include the GLFW and GLM libraries as well as our helper functions, which are stored in separate files inside a folder called common:

```cpp
#define GLM_FORCE_RADIANS
#include <stdio.h>
#include <stdlib.h>
#include <GL/glew.h>
#include <GLFW/glfw3.h>
#include <glm/glm.hpp>
#include <glm/gtc/matrix_transform.hpp>
#include <glm/gtc/constants.hpp> //glm::pi
using namespace glm;
#include <common/shader.hpp>
#include <common/texture.hpp>
#include <common/controls.hpp>
#include <common/common.h>
```

- Define all global variables for the setup:

```cpp
GLFWwindow* g_window;
const int WINDOWS_WIDTH = 1280;
const int WINDOWS_HEIGHT = 720;
float aspect_ratio = 3.0f/2.0f;
float z_offset = 2.0f;
float rotateY = 0.0f;
float rotateX = 0.0f;

//our vertices: two triangles forming a rectangle
static const GLfloat g_vertex_buffer_data[] = {
    -aspect_ratio, -1.0f, z_offset,
     aspect_ratio, -1.0f, z_offset,
     aspect_ratio,  1.0f, z_offset,
    -aspect_ratio, -1.0f, z_offset,
     aspect_ratio,  1.0f, z_offset,
    -aspect_ratio,  1.0f, z_offset
};

//UV map for the vertices
static const GLfloat g_uv_buffer_data[] = {
    1.0f, 0.0f,
    0.0f, 0.0f,
    0.0f, 1.0f,
    1.0f, 0.0f,
    0.0f, 1.0f,
    1.0f, 1.0f
};
```

- Define the keyboard callback function:

```cpp
static void key_callback(GLFWwindow* window, int key, int scancode, int action, int mods)
{
    if (action != GLFW_PRESS && action != GLFW_REPEAT)
        return;
    switch (key)
    {
        case GLFW_KEY_ESCAPE:
            glfwSetWindowShouldClose(window, GL_TRUE);
            break;
        case GLFW_KEY_SPACE:
            //reset the rotation
            rotateX = 0;
            rotateY = 0;
            break;
        case GLFW_KEY_Z:
            rotateX += 0.01;
            break;
        case GLFW_KEY_X:
            rotateX -= 0.01;
            break;
        case GLFW_KEY_A:
            rotateY += 0.01;
            break;
        case GLFW_KEY_S:
            rotateY -= 0.01;
            break;
        default:
            break;
    }
}
```

- Initialize the GLFW library with the OpenGL core profile enabled:

```cpp
int main(int argc, char **argv)
{
    //initialize GLFW
    if(!glfwInit()){
        fprintf(stderr, "Failed to initialize GLFW\n");
        exit(EXIT_FAILURE);
    }
    //request 4x multisample anti-aliasing
    glfwWindowHint(GLFW_SAMPLES, 4);
    //specify the client API version (OpenGL 3.2 matches #version 150 in the shaders)
    glfwWindowHint(GLFW_CONTEXT_VERSION_MAJOR, 3);
    glfwWindowHint(GLFW_CONTEXT_VERSION_MINOR, 2);
    //make the GLFW context forward compatible
    glfwWindowHint(GLFW_OPENGL_FORWARD_COMPAT, GL_TRUE);
    //enable the OpenGL core profile for GLFW
    glfwWindowHint(GLFW_OPENGL_PROFILE, GLFW_OPENGL_CORE_PROFILE);
```

- Set up the GLFW window and keyboard input handlers:

```cpp
    //create a GLFW window object
    g_window = glfwCreateWindow(WINDOWS_WIDTH, WINDOWS_HEIGHT, "Chapter 4 - Texture Mapping", NULL, NULL);
    if(!g_window){
        fprintf(stderr, "Failed to open GLFW window. Check that your GPU and driver support OpenGL 3.2.\n");
        glfwTerminate();
        exit(EXIT_FAILURE);
    }
    /* make the context of the specified window current for the calling thread */
    glfwMakeContextCurrent(g_window);
    //wait for one screen refresh before swapping buffers (vsync)
    glfwSwapInterval(1);
    glewExperimental = true; //needed for the core profile
    if (glewInit() != GLEW_OK) {
        fprintf(stderr, "Failed to initialize GLEW\n");
        glfwTerminate();
        exit(EXIT_FAILURE);
    }
    //keyboard input callback
    glfwSetInputMode(g_window, GLFW_STICKY_KEYS, GL_TRUE);
    glfwSetKeyCallback(g_window, key_callback);
```

- Set a black background and enable alpha blending for various visual effects:

```cpp
    glClearColor(0.0f, 0.0f, 0.0f, 1.0f);
    glEnable(GL_BLEND);
    glBlendFunc(GL_SRC_ALPHA, GL_ONE_MINUS_SRC_ALPHA);
```
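With blending enabled, each incoming fragment is combined with the color already in the framebuffer. Consider glBlendFunc(GL_SRC_ALPHA, GL_ONE_MINUS_SRC_ALPHA) with the default GL_FUNC_ADD blend equation. Every channel, including alpha, becomes source * source alpha + destination * (1 - source alpha). The sketch below spells out this arithmetic with a hypothetical blendSrcAlpha helper:

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <cstddef>

using Rgba = std::array<float, 4>;

// Hypothetical helper: result of glBlendFunc(GL_SRC_ALPHA, GL_ONE_MINUS_SRC_ALPHA)
// with the default GL_FUNC_ADD equation, applied to every channel:
//   result = src * src_alpha + dst * (1 - src_alpha)
Rgba blendSrcAlpha(const Rgba& src, const Rgba& dst) {
    const float src_alpha = src[3];
    Rgba result{};
    for (std::size_t i = 0; i < result.size(); ++i) {
        result[i] = src[i] * src_alpha + dst[i] * (1.0f - src_alpha);
    }
    return result;
}
```

Fully opaque texels (alpha = 1) simply replace the background. Translucent texels let part of the background show through.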

- Load the vertex shader and fragment shader:

```cpp
    GLuint program_id = LoadShaders("transform.vert", "texture.frag");
```

- Load an image file into the texture object using the SOIL library:

```cpp
    //use the image given on the command line, or texture.png by default
    const char *filepath = (argc < 2) ? "texture.png" : argv[1];
    int width;
    int height;
    //load the texture from the image with SOIL
    GLuint texture_id = loadImageToTexture(filepath, &width, &height);
    if(!texture_id){
        //0 means that no texture was created
        glfwTerminate();
        exit(EXIT_FAILURE);
    }
    aspect_ratio = (float)width/(float)height;
```

- Get the locations of the specific variables in the shader programs:

```cpp
    //get the location of our "MVP" uniform variable
    GLint matrix_id = glGetUniformLocation(program_id, "MVP");
    //get the location of our "textureSampler" uniform
    GLint texture_sampler_id = glGetUniformLocation(program_id, "textureSampler");
    //attribute locations of the vertex shader inputs
    GLint attribute_vertex, attribute_uv;
    attribute_vertex = glGetAttribLocation(program_id, "vertexPosition_modelspace");
    attribute_uv = glGetAttribLocation(program_id, "vertexUV");
```

- Define our vertex array object (VAO):

```cpp
    GLuint vertex_array_id;
    glGenVertexArrays(1, &vertex_array_id);
    glBindVertexArray(vertex_array_id);
```

- Define our vertex buffer objects (VBOs) for the vertices and UV mapping:

```cpp
    //initialize the vertex buffer memory
    GLuint vertex_buffer;
    glGenBuffers(1, &vertex_buffer);
    glBindBuffer(GL_ARRAY_BUFFER, vertex_buffer);
    glBufferData(GL_ARRAY_BUFFER, sizeof(g_vertex_buffer_data), g_vertex_buffer_data, GL_STATIC_DRAW);
    //initialize the UV buffer memory
    GLuint uv_buffer;
    glGenBuffers(1, &uv_buffer);
    glBindBuffer(GL_ARRAY_BUFFER, uv_buffer);
    glBufferData(GL_ARRAY_BUFFER, sizeof(g_uv_buffer_data), g_uv_buffer_data, GL_STATIC_DRAW);
```
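g_vertex_buffer_data is a global that is initialized before main() runs. The later assignment aspect_ratio = (float)width/(float)height therefore does not change the quad uploaded to vertex_buffer, and the quad keeps its initial 3:2 shape. One way to make the quad follow the loaded image is to generate the vertex data after the image is loaded and upload that instead. The sketch below does this with a hypothetical buildQuadVertices helper:

```cpp
#include <array>
#include <cassert>

// Hypothetical helper: the two triangles of the textured quad, in the same
// vertex order as g_vertex_buffer_data, for a given aspect ratio.
// In main.cpp it could replace the static array, e.g. (not compiled here):
//   std::array<float, 18> quad = buildQuadVertices(aspect_ratio, z_offset);
//   glBufferData(GL_ARRAY_BUFFER, quad.size() * sizeof(float), quad.data(), GL_STATIC_DRAW);
std::array<float, 18> buildQuadVertices(float aspect_ratio, float z_offset) {
    return {
        -aspect_ratio, -1.0f, z_offset,  // first triangle
         aspect_ratio, -1.0f, z_offset,
         aspect_ratio,  1.0f, z_offset,
        -aspect_ratio, -1.0f, z_offset,  // second triangle
         aspect_ratio,  1.0f, z_offset,
        -aspect_ratio,  1.0f, z_offset,
    };
}
```

Keeping the same vertex order means g_uv_buffer_data can be reused unchanged.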

- Use the shader program and bind all texture units and attribute buffers:

```cpp
    glUseProgram(program_id);
    //bind our texture in texture unit 0
    glActiveTexture(GL_TEXTURE0);
    glBindTexture(GL_TEXTURE_2D, texture_id);
    glUniform1i(texture_sampler_id, 0);
    //1st attribute buffer: vertex positions
    glEnableVertexAttribArray(attribute_vertex);
    glBindBuffer(GL_ARRAY_BUFFER, vertex_buffer);
    glVertexAttribPointer(attribute_vertex, 3, GL_FLOAT, GL_FALSE, 0, (void*)0);
    //2nd attribute buffer: UV mapping
    glEnableVertexAttribArray(attribute_uv);
    glBindBuffer(GL_ARRAY_BUFFER, uv_buffer);
    glVertexAttribPointer(attribute_uv, 2, GL_FLOAT, GL_FALSE, 0, (void*)0);
```

- In the main loop, clear the color and depth buffers:

```cpp
    //time-stamping for performance measurement
    double previous_time = glfwGetTime();
    do{
        //clear the screen with the black background color set earlier
        glClear(GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT);
```

- Compute the transforms and store the information in the shader variables:

```cpp
        //compute the view and projection matrices from the keyboard input
        computeViewProjectionMatrices(g_window);
        //obtain the projection and view matrices for rendering
        glm::mat4 projection_matrix = getProjectionMatrix();
        glm::mat4 view_matrix = getViewMatrix();
        //the model matrix applies the rotations set in key_callback
        glm::mat4 model_matrix = glm::mat4(1.0);
        model_matrix = glm::rotate(model_matrix, glm::pi<float>() * rotateY, glm::vec3(0.0f, 1.0f, 0.0f));
        model_matrix = glm::rotate(model_matrix, glm::pi<float>() * rotateX, glm::vec3(1.0f, 0.0f, 0.0f));
        glm::mat4 mvp = projection_matrix * view_matrix * model_matrix;
        //send our transformation to the currently bound shader
        //in the "MVP" uniform variable
        glUniformMatrix4fv(matrix_id, 1, GL_FALSE, &mvp[0][0]);
```

- Draw the elements and flush the screen:

```cpp
        //draw the rectangle (two triangles, six vertices)
        glDrawArrays(GL_TRIANGLES, 0, 6);
        //swap buffers
        glfwSwapBuffers(g_window);
        glfwPollEvents();
```

- Finally, define the conditions to exit the main loop and clear all the memory to exit the program gracefully:

```cpp
    } //check whether the ESC key was pressed or the window was closed
    while(!glfwWindowShouldClose(g_window) && glfwGetKey(g_window, GLFW_KEY_ESCAPE) != GLFW_PRESS);

    glDisableVertexAttribArray(attribute_vertex);
    glDisableVertexAttribArray(attribute_uv);
    //clean up the VBOs, shader program, texture, and VAO
    glDeleteBuffers(1, &vertex_buffer);
    glDeleteBuffers(1, &uv_buffer);
    glDeleteProgram(program_id);
    glDeleteTextures(1, &texture_id);
    glDeleteVertexArrays(1, &vertex_array_id);
    //close the OpenGL window and terminate GLFW
    glfwDestroyWindow(g_window);
    glfwTerminate();
    exit(EXIT_SUCCESS);
}
```
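The keyboard callback changes rotateX and rotateY by 0.01 per key event, and the main loop multiplies them by pi. Each press, or key-repeat event, therefore turns the quad by 0.01 * pi radians (1.8 degrees). glm::rotate multiplies its input matrix by the new rotation on the right, so model_matrix equals Ry * Rx. As a result, a vertex is rotated about the x-axis first and then about the y-axis. The sketch below applies the same two rotations to a single vertex with plain trigonometry; the applyModelRotation name is hypothetical:

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Vec3 = std::array<float, 3>;

// Hypothetical helper: rotates a model-space vertex the same way as
// model_matrix = rotate(rotate(I, pi * rotate_y, Y), pi * rotate_x, X),
// that is, first about the x-axis and then about the y-axis (right-handed).
Vec3 applyModelRotation(const Vec3& v, float rotate_x, float rotate_y) {
    const float pi = 3.14159265358979f;
    float ax = pi * rotate_x;
    float ay = pi * rotate_y;
    // rotation about the x-axis
    float x1 = v[0];
    float y1 = v[1] * std::cos(ax) - v[2] * std::sin(ax);
    float z1 = v[1] * std::sin(ax) + v[2] * std::cos(ax);
    // rotation about the y-axis
    float x2 = x1 * std::cos(ay) + z1 * std::sin(ay);
    float z2 = -x1 * std::sin(ay) + z1 * std::cos(ay);
    return {x2, y1, z2};
}
```

For example, 50 presses of the A key give rotateY = 0.5, a quarter turn that carries the vertex (1, 0, 0) to (0, 0, -1).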

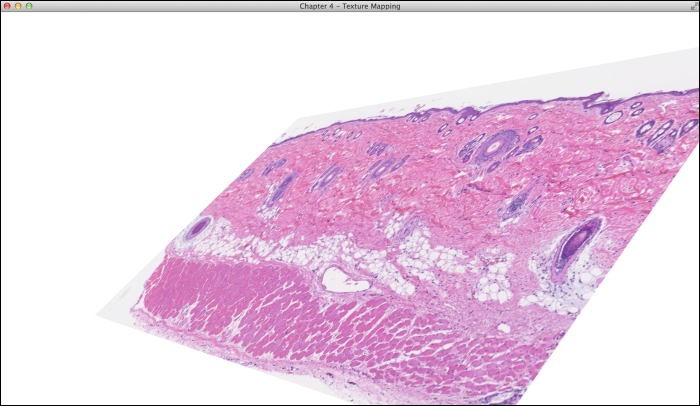

To demonstrate the use of the framework for data visualization, we will apply it to the visualization of a histology slide (an H&E cross-section of a skin sample), as shown in the following screenshot:

An important difference between this demo and the previous one is that here, we actually load an image into the texture memory (texture.cpp). To facilitate this task, we use the SOIL library call (SOIL_load_image) to load the histology image in the RGBA format (GL_RGBA) and the glTexImage2D function call to generate a texture image that can be read by shaders.

Another important difference is that we can now dynamically recompute the view (g_view_matrix) and projection (g_projection_matrix) matrices to enable interactive visualization of an image in 3D space. Note that the GLM library header is included to facilitate the matrix computations. Using the keyboard inputs defined in controls.cpp with the GLFW library calls, we can zoom in and out of the slide by moving the camera (up and down keys) and adjust the field of view (left and right keys). This gives an interesting perspective of the histology image in the 3D virtual space. Here is a screenshot of the image viewed from a different perspective:

Yet another unique feature of the current OpenGL-based framework is illustrated by the following screenshot. It was generated with a new image filter, implemented in the fragment shader, that highlights the edges in the image. Because such filters run per pixel on the GPU as part of the OpenGL rendering pipeline, 2D images can be processed and visualized interactively in real time without burdening the CPU. The filter implemented here will be discussed in the next section.