To make 3D scenes look more realistic, we add lighting. OpenGL's fixed function pipeline provides per-vertex lighting, but that pipeline was deprecated in OpenGL v3.0 and is not available in the core profile of OpenGL v3.3 and above. Using shaders, we can not only replicate the per-vertex lighting of the fixed function pipeline but also go a step further by implementing per-fragment lighting. Per-vertex lighting is also known as Gouraud shading, and per-fragment lighting is known as Phong shading. So, without further ado, let's get started.

In this recipe, we will render many cubes and a sphere. All of these objects are generated and stored in the buffer objects. For details, refer to the CreateSphere and CreateCube functions in Chapter4/PerVertexLighting/main.cpp. These functions generate both vertex positions as well as per-vertex normals, which are needed for the lighting calculations. All of the lighting calculations take place in the vertex shader of the per-vertex lighting recipe (Chapter4/PerVertexLighting/), whereas, for the per-fragment lighting recipe (Chapter4/PerFragmentLighting/) they take place in the fragment shader.

Let us start our recipe by following these simple steps:

- Set up the vertex shader that performs the lighting calculation in the view/eye space. This generates the color after the lighting calculation.

```glsl
#version 330 core

layout(location=0) in vec3 vVertex;   // per-vertex position
layout(location=1) in vec3 vNormal;   // per-vertex normal

uniform mat4 MVP;             // combined modelview projection matrix
uniform mat4 MV;              // modelview matrix
uniform mat3 N;               // normal matrix
uniform vec3 light_position;  // light position in object space
uniform vec3 diffuse_color;
uniform vec3 specular_color;
uniform float shininess;

smooth out vec4 color;        // shaded color passed to the fragment shader

// the camera sits at the origin in eye space
const vec3 vEyeSpaceCameraPosition = vec3(0,0,0);

void main()
{
    // bring the light position, vertex position, and normal into eye space
    vec4 vEyeSpaceLightPosition = MV*vec4(light_position,1);
    vec4 vEyeSpacePosition      = MV*vec4(vVertex,1);
    vec3 vEyeSpaceNormal        = normalize(N*vNormal);

    vec3 L = normalize(vEyeSpaceLightPosition.xyz-vEyeSpacePosition.xyz);
    vec3 V = normalize(vEyeSpaceCameraPosition.xyz-vEyeSpacePosition.xyz);
    vec3 H = normalize(L+V);

    float diffuse  = max(0.0, dot(vEyeSpaceNormal, L));
    // clamp before pow(): GLSL leaves pow() undefined for a negative base
    float specular = pow(max(0.0, dot(vEyeSpaceNormal, H)), shininess);

    color = diffuse*vec4(diffuse_color,1) + specular*vec4(specular_color, 1);
    gl_Position = MVP*vec4(vVertex,1);
}
```

- Set up a fragment shader that takes the shaded color from the vertex shader, interpolated by the rasterizer, and sets it as the current output color.

```glsl
#version 330 core

layout(location=0) out vec4 vFragColor;
smooth in vec4 color;

void main()
{
    vFragColor = color;
}
```

- In the rendering code, set the shader and render the objects by passing their modelview/projection matrices to the shader as shader uniforms.

```cpp
shader.Use();

// draw the eight cubes, placed on a circle of the given radius
glBindVertexArray(cubeVAOID);
for(int i=0;i<8;i++)
{
    float theta = (float)(i/8.0f*2*M_PI);
    glm::mat4 T = glm::translate(glm::mat4(1),
                                 glm::vec3(radius*cos(theta), 0.5, radius*sin(theta)));
    glm::mat4 M = T;
    glm::mat4 MV = View*M;
    glm::mat4 MVP = Proj*MV;
    glUniformMatrix4fv(shader("MVP"), 1, GL_FALSE, glm::value_ptr(MVP));
    glUniformMatrix4fv(shader("MV"), 1, GL_FALSE, glm::value_ptr(MV));
    glUniformMatrix3fv(shader("N"), 1, GL_FALSE,
                       glm::value_ptr(glm::inverseTranspose(glm::mat3(MV))));
    glUniform3fv(shader("diffuse_color"), 1, &(colors[i].x));
    glUniform3fv(shader("light_position"), 1, &(lightPosOS.x));
    glDrawElements(GL_TRIANGLES, 36, GL_UNSIGNED_SHORT, 0);
}

// draw the sphere in the middle
glBindVertexArray(sphereVAOID);
glm::mat4 T = glm::translate(glm::mat4(1), glm::vec3(0,1,0));
glm::mat4 M = T;
glm::mat4 MV = View*M;
glm::mat4 MVP = Proj*MV;
glUniformMatrix4fv(shader("MVP"), 1, GL_FALSE, glm::value_ptr(MVP));
glUniformMatrix4fv(shader("MV"), 1, GL_FALSE, glm::value_ptr(MV));
glUniformMatrix3fv(shader("N"), 1, GL_FALSE,
                   glm::value_ptr(glm::inverseTranspose(glm::mat3(MV))));
glUniform3f(shader("diffuse_color"), 0.9f, 0.9f, 1.0f);
glUniform3fv(shader("light_position"), 1, &(lightPosOS.x));
glDrawElements(GL_TRIANGLES, totalSphereTriangles, GL_UNSIGNED_SHORT, 0);

shader.UnUse();
glBindVertexArray(0);

// draw the reference grid
grid->Render(glm::value_ptr(Proj*View));
```

We can perform the lighting calculations in any coordinate space we wish, that is, object space, world space, or eye/view space. Similar to the lighting in the fixed function OpenGL pipeline, in this recipe we also do our calculations in the eye space. The first step in the vertex shader is to obtain the vertex position and light position in the eye space. This is done by multiplying the current vertex and light position with the modelview (MV) matrix.

```glsl
vec4 vEyeSpaceLightPosition = MV*vec4(light_position,1);
vec4 vEyeSpacePosition = MV*vec4(vVertex,1);
```
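A side effect of working in eye space is that the camera itself ends up at the origin, which is why the shader can hard-code the eye space camera position as (0,0,0). The following C++ sketch illustrates this on the CPU. It is only an illustration under stated assumptions: the `Vec4`/`Mat4` aliases and the `mul` and `translation` helpers are hypothetical stand-ins for GLM, and the camera is assumed to have no rotation, so its view matrix is a pure translation.

```cpp
#include <array>

using Vec4 = std::array<double, 4>;
using Mat4 = std::array<Vec4, 4>;  // column-major like GLM: m[col][row]

// Matrix * column vector, the same operation as MV*vec4(...) in the shader.
Vec4 mul(const Mat4& m, const Vec4& v) {
    Vec4 r{};  // zero-initialized
    for (int col = 0; col < 4; ++col)
        for (int row = 0; row < 4; ++row)
            r[row] += m[col][row] * v[col];
    return r;
}

// Translation matrix; the offset lives in the fourth column.
Mat4 translation(double tx, double ty, double tz) {
    return {{ {1.0, 0.0, 0.0, 0.0},
              {0.0, 1.0, 0.0, 0.0},
              {0.0, 0.0, 1.0, 0.0},
              {tx,  ty,  tz,  1.0} }};
}
```

For an assumed camera at world position (0,2,5), the view matrix is `translation(0, -2, -5)`. Applying it to the camera's own position yields (0,0,0,1), and a vertex at the world origin lands at (0,-2,-5,1) in eye space.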

Similarly, we transform the per-vertex normals to eye space, but this time we transform them with the inverse transpose of the modelview matrix, which is stored in the normal matrix (N).

```glsl
vec3 vEyeSpaceNormal = normalize(N*vNormal);
```

Tip

In OpenGL versions prior to v3.0, the normal matrix was available in the gl_NormalMatrix shader uniform, which holds the inverse transpose of the upper-left 3x3 part of the modelview matrix. Normals are transformed differently from positions because a non-uniform scaling in the modelview matrix would skew them so that they are no longer perpendicular to the surface. Multiplying the normals with the inverse transpose of the modelview matrix keeps them perpendicular to the transformed surface. It does not guarantee unit length when the matrix contains scaling, which is why the shader normalizes the result.
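The role of the inverse transpose can be checked with a few lines of C++. The sketch below is a hypothetical CPU-side illustration, not code from the recipe: `Vec3`, `Mat3`, `dot`, `mul`, and `inverseTranspose` are names made up here (the recipe itself uses `glm::inverseTranspose`), and the matrix is stored row-major for readability.

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Vec3 = std::array<double, 3>;
using Mat3 = std::array<Vec3, 3>;  // row-major: m[row][col]

double dot(const Vec3& a, const Vec3& b) {
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
}

// Matrix * column vector.
Vec3 mul(const Mat3& m, const Vec3& v) {
    return { dot(m[0], v), dot(m[1], v), dot(m[2], v) };
}

// Inverse transpose of a 3x3 matrix: the cofactor matrix divided by the determinant.
Mat3 inverseTranspose(const Mat3& m) {
    Mat3 c;
    c[0] = { m[1][1] * m[2][2] - m[1][2] * m[2][1],
             m[1][2] * m[2][0] - m[1][0] * m[2][2],
             m[1][0] * m[2][1] - m[1][1] * m[2][0] };
    c[1] = { m[0][2] * m[2][1] - m[0][1] * m[2][2],
             m[0][0] * m[2][2] - m[0][2] * m[2][0],
             m[0][1] * m[2][0] - m[0][0] * m[2][1] };
    c[2] = { m[0][1] * m[1][2] - m[0][2] * m[1][1],
             m[0][2] * m[1][0] - m[0][0] * m[1][2],
             m[0][0] * m[1][1] - m[0][1] * m[1][0] };
    double det = dot(m[0], c[0]);  // expansion along the first row
    assert(std::fabs(det) > 1e-12 && "matrix must be invertible");
    for (auto& row : c)
        for (auto& x : row) x /= det;
    return c;
}
```

Take a non-uniform scale of 2 along x, a surface tangent (1,1,0), and a normal (1,-1,0). The scaled tangent is (2,1,0). Transforming the normal with the scale matrix itself gives (2,-1,0), whose dot product with the tangent is 3, so it is no longer perpendicular. Transforming it with `inverseTranspose` gives (0.5,-1,0), whose dot product with the tangent is 0.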

Next, we obtain the vector from the position of the vertex in eye space to the position of the light in eye space, normalize it, and take its dot product with the eye space normal. Clamping the result to zero gives us the diffuse component.

```glsl
vec3 L = normalize(vEyeSpaceLightPosition.xyz-vEyeSpacePosition.xyz);
float diffuse = max(0.0, dot(vEyeSpaceNormal, L));
```
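The diffuse term is simply the clamped cosine of the angle between the normal and the direction toward the light. As a rough CPU-side sketch of the same arithmetic (the `Vec3` struct and the `diffuseTerm` helper are hypothetical names introduced here, not part of the recipe):

```cpp
#include <algorithm>
#include <cmath>

struct Vec3 { double x, y, z; };

Vec3 operator-(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
double dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

Vec3 normalize(Vec3 v) {
    double len = std::sqrt(dot(v, v));
    return {v.x / len, v.y / len, v.z / len};
}

// Clamped cosine between the surface normal and the direction
// from the surface point toward the light.
double diffuseTerm(Vec3 position, Vec3 normal, Vec3 lightPosition) {
    Vec3 L = normalize(lightPosition - position);
    return std::max(0.0, dot(normalize(normal), L));
}
```

A light straight along the normal gives 1, a light at 45 degrees gives about 0.707, and a light behind the surface is clamped to 0.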

We also calculate two additional vectors, the view vector (V) and the half-way vector (H) between the light and the view vector.

```glsl
vec3 V = normalize(vEyeSpaceCameraPosition.xyz-vEyeSpacePosition.xyz);
vec3 H = normalize(L+V);
```

These are used for the specular component calculation in the Blinn-Phong lighting model. The specular component is obtained using pow(max(0, dot(N,H)), σ), where σ is the shininess value; the larger the shininess, the more focused the specular highlight. The dot product is clamped before it is raised to the power because GLSL leaves pow() undefined for a negative base.

```glsl
float specular = pow(max(0.0, dot(vEyeSpaceNormal, H)), shininess);
```
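The effect of the shininess exponent is easy to check on the CPU. In the following C++ sketch, `specularFromCosine` is a hypothetical helper that takes an already computed dot(N,H) value. It clamps the cosine before exponentiating, which is the order that stays well defined in GLSL when the cosine is negative.

```cpp
#include <algorithm>
#include <cmath>

// Blinn-Phong specular factor from the cosine between the normal
// and the half-way vector; negative cosines contribute nothing.
double specularFromCosine(double nDotH, double shininess) {
    return std::pow(std::max(0.0, nDotH), shininess);
}
```

For a cosine of 0.5, a shininess of 2 gives 0.25 while a shininess of 8 gives roughly 0.0039, so the highlight falls off much faster away from its peak. At the peak itself (cosine of 1) the factor stays at 1 for any exponent.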

The final color is then obtained by multiplying the diffuse value with the diffuse color and the specular value with the specular color.

```glsl
color = diffuse*vec4(diffuse_color,1) + specular*vec4(specular_color, 1);
```

The fragment shader in the per-vertex lighting simply outputs the per-vertex color interpolated by the rasterizer as the current fragment color.

```glsl
smooth in vec4 color;

void main() {
    vFragColor = color;
}
```

Alternatively, if we move the lighting calculations to the fragment shader, we get a more pleasing rendering result at the expense of increased processing overhead. Specifically, the vertex shader transforms the per-vertex position and normal to eye space and passes them on to the fragment shader, as follows:

```glsl
#version 330 core

layout(location=0) in vec3 vVertex;
layout(location=1) in vec3 vNormal;

uniform mat4 MVP;
uniform mat4 MV;
uniform mat3 N;

smooth out vec3 vEyeSpaceNormal;
smooth out vec3 vEyeSpacePosition;

void main()
{
    vEyeSpacePosition = (MV*vec4(vVertex,1)).xyz;
    vEyeSpaceNormal = N*vNormal;
    gl_Position = MVP*vec4(vVertex,1);
}
```

In the fragment shader, the rest of the calculation, including the diffuse and specular component contributions, is carried out.

```glsl
#version 330 core

layout(location=0) out vec4 vFragColor;

uniform vec3 light_position;  // light position in object space
uniform vec3 diffuse_color;
uniform vec3 specular_color;
uniform float shininess;
uniform mat4 MV;

smooth in vec3 vEyeSpaceNormal;
smooth in vec3 vEyeSpacePosition;

// the camera sits at the origin in eye space
const vec3 vEyeSpaceCameraPosition = vec3(0,0,0);

void main() {
    vec3 vEyeSpaceLightPosition = (MV*vec4(light_position,1)).xyz;
    // the interpolated normal is not unit length, so renormalize it
    vec3 N = normalize(vEyeSpaceNormal);
    vec3 L = normalize(vEyeSpaceLightPosition-vEyeSpacePosition);
    vec3 V = normalize(vEyeSpaceCameraPosition.xyz-vEyeSpacePosition.xyz);
    vec3 H = normalize(L+V);
    float diffuse = max(0.0, dot(N, L));
    // clamp before pow(): GLSL leaves pow() undefined for a negative base
    float specular = pow(max(0.0, dot(N, H)), shininess);
    vFragColor = diffuse*vec4(diffuse_color,1) + specular*vec4(specular_color, 1);
}
```

We will now dissect the per-fragment lighting fragment shader line by line. We first calculate the light position in eye space and normalize the interpolated normal. Then we calculate the vector from the fragment to the light in eye space. We also calculate the view vector (V) and the half-way vector (H).

```glsl
vec3 vEyeSpaceLightPosition = (MV * vec4(light_position,1)).xyz;
vec3 N = normalize(vEyeSpaceNormal);
vec3 L = normalize(vEyeSpaceLightPosition-vEyeSpacePosition);
vec3 V = normalize(vEyeSpaceCameraPosition.xyz-vEyeSpacePosition.xyz);
vec3 H = normalize(L+V);
```
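The normal is normalized again in the fragment shader because linearly interpolating two unit vectors generally produces a shorter vector. The following C++ sketch shows the effect; `Vec3`, `mixVec`, `length`, and `normalize` are hypothetical helpers written for this illustration, with `mixVec` standing in for the interpolation the rasterizer applies to a `smooth` varying.

```cpp
#include <cmath>

struct Vec3 { double x, y, z; };

// Linear interpolation between a and b, with t in [0,1].
Vec3 mixVec(Vec3 a, Vec3 b, double t) {
    return { a.x + t * (b.x - a.x),
             a.y + t * (b.y - a.y),
             a.z + t * (b.z - a.z) };
}

double length(Vec3 v) {
    return std::sqrt(v.x * v.x + v.y * v.y + v.z * v.z);
}

Vec3 normalize(Vec3 v) {
    double len = length(v);
    return { v.x / len, v.y / len, v.z / len };
}
```

Halfway between the unit normals (1,0,0) and (0,1,0), the interpolated vector is (0.5,0.5,0) with a length of about 0.707. Using it directly would darken both the diffuse and the specular terms, so it is normalized first.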

Next, the diffuse component is calculated using the dot product of the light vector with the normalized eye space normal.

```glsl
float diffuse = max(0.0, dot(N, L));
```

The specular component is calculated as in the per-vertex case, clamping the dot product before it is raised to the shininess power.

```glsl
float specular = pow(max(0.0, dot(N, H)), shininess);
```

Finally, the combined color is obtained by summing the diffuse and specular contributions. The diffuse contribution is obtained by multiplying the diffuse color with the diffuse component and the specular contribution is obtained by multiplying the specular component with the specular color.

```glsl
vFragColor = diffuse*vec4(diffuse_color,1) + specular*vec4(specular_color, 1);
```

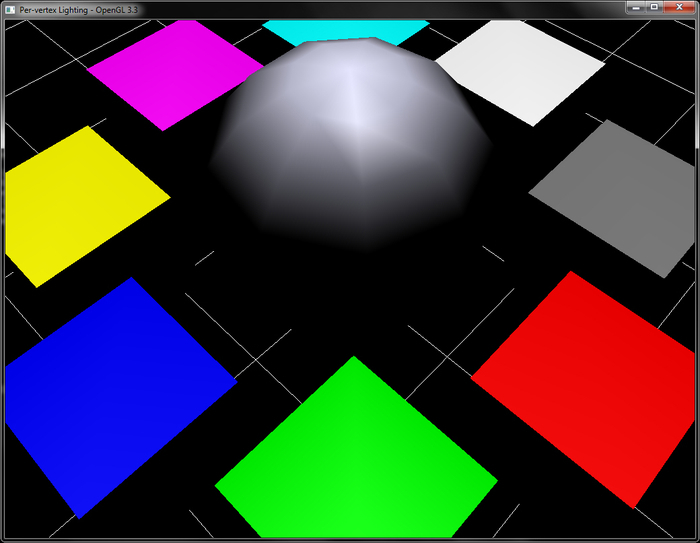

The output from the demo application for this recipe renders a sphere with eight cubes moving in and out, as shown in the following screenshot. The following figure shows the result of the per-vertex lighting. Note the ridge lines clearly visible on the middle sphere, which represents the vertices where the lighting calculations are carried out. Also note the appearance of the specular, which is predominantly visible at vertex positions only.

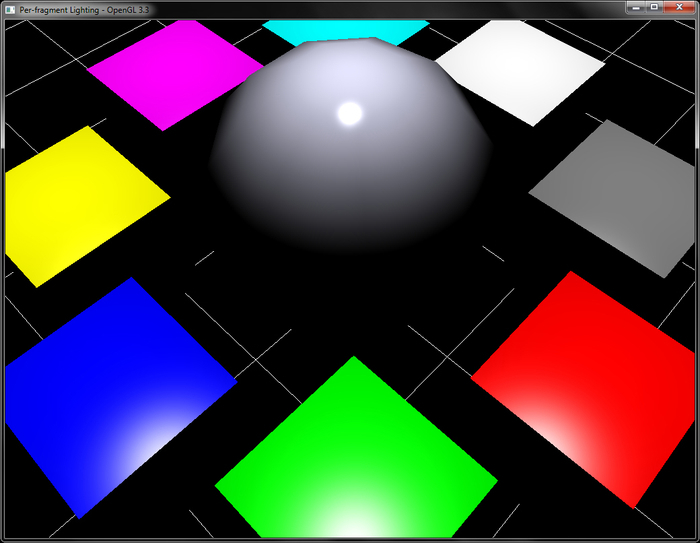

Now, let us see the result of the same demo application implementing per-fragment lighting:

Note how the per-fragment lighting gives a smoother result compared to the per-vertex lighting. In addition, the specular component is clearly visible.
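The difference between the two results can also be reproduced numerically. The following C++ sketch is a hypothetical CPU-side illustration, not code from the demo: it evaluates the Blinn-Phong specular term at the midpoint of a single edge, once by lighting the two vertices and interpolating the results (`gouraudMidpoint`) and once by interpolating position and normal and then lighting (`phongMidpoint`). All names are made up here, the camera is at the eye space origin, and plain linear interpolation at the midpoint is assumed.

```cpp
#include <algorithm>
#include <cmath>

struct Vec3 { double x, y, z; };

Vec3 operator+(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
Vec3 operator-(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
Vec3 operator*(double s, Vec3 v) { return {s * v.x, s * v.y, s * v.z}; }
double dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
Vec3 normalize(Vec3 v) { return (1.0 / std::sqrt(dot(v, v))) * v; }

// Blinn-Phong specular term in eye space (camera at the origin).
double specular(Vec3 position, Vec3 normal, Vec3 light, double shininess) {
    Vec3 L = normalize(light - position);
    Vec3 V = normalize(Vec3{0.0, 0.0, 0.0} - position);
    Vec3 H = normalize(L + V);
    return std::pow(std::max(0.0, dot(normalize(normal), H)), shininess);
}

// Per-vertex: light both vertices, then interpolate the two results.
double gouraudMidpoint(Vec3 pA, Vec3 nA, Vec3 pB, Vec3 nB,
                       Vec3 light, double shininess) {
    return 0.5 * (specular(pA, nA, light, shininess) +
                  specular(pB, nB, light, shininess));
}

// Per-fragment: interpolate position and normal, then light the result.
double phongMidpoint(Vec3 pA, Vec3 nA, Vec3 pB, Vec3 nB,
                     Vec3 light, double shininess) {
    Vec3 p = 0.5 * (pA + pB);
    Vec3 n = 0.5 * (nA + nB);
    return specular(p, n, light, shininess);
}
```

As an example, take vertices at (-1,0,-5) and (1,0,-5) with normals (-1,0,1) and (1,0,1), a light at the eye space origin, and a shininess of 32. The highlight then peaks exactly between the two vertices: `phongMidpoint` evaluates to 1, while `gouraudMidpoint` stays below one millionth because neither vertex sees the highlight. This is the same effect that makes the specular highlight appear only near vertices in the per-vertex screenshot.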

Learning Modern 3D Graphics Programming, Section III, Jason L. McKesson: http://www.arcsynthesis.org/gltut/Illumination/Illumination.html