When rendering for most output devices (such as monitors or televisions), the device typically supports a color precision of only 8 bits per color component, or 24 bits per pixel. Therefore, for a given color component, we're limited to a range of intensities between 0 and 255. Internally, OpenGL uses floating-point values for color intensities, providing a wide range of both values and precision. These are eventually converted to 8-bit values by mapping the floating-point range [0.0, 1.0] to the range of an unsigned byte [0, 255] before rendering.
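As a rough illustration of that final conversion, the following C++ sketch clamps a floating-point intensity to [0.0, 1.0] and scales it to a byte. The function name `toByte` is hypothetical, and rounding to the nearest integer is an assumption; the exact rounding behavior is up to the OpenGL implementation.

```cpp
#include <algorithm>
#include <cmath>
#include <cstdint>

// Hypothetical helper: map a floating-point intensity to an 8-bit value.
// Values outside [0, 1] are clamped first, then scaled to [0, 255].
// Rounding to nearest is an assumption made for this sketch.
inline std::uint8_t toByte(float intensity) {
    float clamped = std::min(std::max(intensity, 0.0f), 1.0f);
    return static_cast<std::uint8_t>(std::lround(clamped * 255.0f));
}
```

Note that every intensity above 1.0 produces the same byte value, 255, which is the limitation that the rest of this recipe works around.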

Real scenes, however, have a much wider range of luminance. For example, light sources that are visible in a scene, or direct reflections of them, can be hundreds to thousands of times brighter than the objects that are illuminated by the source. When we're working with 8 bits per channel, or the floating-point range [0.0, 1.0], we can't represent this range of intensities. If we decide to use a larger range of floating-point values, we can do a better job of internally representing these intensities, but in the end, we still need to compress down to the 8-bit range.

The process of computing the lighting/shading using a larger dynamic range is often referred to as High Dynamic Range rendering (HDR rendering). Photographers are very familiar with this concept. When a photographer wants to capture a larger range of intensities than would normally be possible in a single exposure, they might take several images with different exposures to capture a wider range of values. This concept, called High Dynamic Range imaging (HDR imaging), is very similar in nature to the concept of HDR rendering. A post-processing pipeline that includes HDR is now considered an essential part of any game engine.

Tone mapping is the process of taking a wide dynamic range of values and compressing them into a smaller range that is appropriate for the output device. In computer graphics, generally, tone mapping is about mapping to the 8-bit range from some arbitrary range of values. The goal is to maintain the dark and light parts of the image so that both are visible, and neither is completely "washed out".

For example, a scene that includes a bright light source might cause our shading model to produce intensities that are greater than 1.0. If we were to simply send that to the output device, anything greater than 1.0 would be clamped to 255, and would appear white. The result might be an image that is mostly white, similar to a photograph that is overexposed. Or, if we were to linearly compress the intensities to the [0, 255] range, the darker parts might be too dark, or completely invisible. With tone mapping, we want to maintain the brightness of the light source, and also maintain detail in the darker areas.
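To make the two failure modes concrete, here is a small C++ sketch that compares clamping with linear compression. All of the names (`quantize`, `clampToDisplay`, `compressLinearly`) are hypothetical and exist only for this illustration.

```cpp
#include <algorithm>
#include <cmath>
#include <vector>

// Hypothetical helper: quantize an intensity to 0..255 after clamping to [0, 1].
inline int quantize(float v) {
    float clamped = std::min(std::max(v, 0.0f), 1.0f);
    return static_cast<int>(std::lround(clamped * 255.0f));
}

// Option 1: send the HDR values straight to the display.
// Everything above 1.0 saturates to 255.
inline std::vector<int> clampToDisplay(const std::vector<float>& hdr) {
    std::vector<int> out;
    for (float v : hdr) out.push_back(quantize(v));
    return out;
}

// Option 2: divide by the brightest value so that the whole range fits
// into [0, 1]. Dark values are pushed toward zero.
inline std::vector<int> compressLinearly(const std::vector<float>& hdr) {
    if (hdr.empty()) return {};
    float maxVal = *std::max_element(hdr.begin(), hdr.end());
    std::vector<int> out;
    for (float v : hdr) out.push_back(maxVal > 0.0f ? quantize(v / maxVal) : 0);
    return out;
}
```

For the intensities 0.05, 0.4, 2.0, and 500.0, clamping yields 13, 102, 255, 255 (the two bright values become indistinguishable), while linear compression yields 0, 0, 1, 255 (the dark values all but vanish).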

The mathematical function used to map from one dynamic range to a smaller range is called the Tone Mapping Operator (TMO). These generally come in two "flavors", local operators and global operators. A local operator determines the new value for a given pixel by using its current value and perhaps the value of some nearby pixels. A global operator needs some information about the entire image, in order to do its work. For example, it might need to have the overall average luminance of all pixels in the image. Other global operators use a histogram of luminance values over the entire image to help fine-tune the mapping.

In this recipe, we'll use a simple global operator that is described in the book Real-Time Rendering. This operator uses the log-average luminance of all pixels in the image. The log-average is determined by taking the logarithm of the luminance and averaging those values, then converting back, as shown in the following equation:

$$\bar{L}_w = \exp\left(\frac{1}{N}\sum_{x,y}\ln\left(0.0001 + L_w(x, y)\right)\right)$$

Here, $N$ is the number of pixels in the image.

$L_w(x, y)$ is the luminance of the pixel at $(x, y)$. The 0.0001 term is included in order to avoid taking the logarithm of zero for black pixels. This log-average, $\bar{L}_w$, is then used as part of the tone mapping operator shown as follows:

$$L(x, y) = \frac{a}{\bar{L}_w} L_w(x, y)$$

The $a$ term in this equation is the key. It acts in a similar way to the exposure level in a camera. Typical values for $a$ range from 0.18 to 0.72. Since this tone mapping operator compresses the dark and light values a bit too much, we'll use a modification of the previous equation that doesn't compress the dark values as much, and includes a maximum luminance ($L_{white}$), a configurable value that helps to reduce some of the extremely bright pixels:

$$L_d(x, y) = \frac{L(x, y)\left(1 + \dfrac{L(x, y)}{L_{white}^2}\right)}{1 + L(x, y)}$$

Here, $L(x, y)$ is the scaled luminance produced by the previous equation.

This is the tone mapping operator that we'll use in this example. We'll render the scene to a high-resolution buffer, compute the log-average luminance, and then apply the previous tone-mapping operator in a second pass.
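The two pieces described above, the log-average luminance and the modified operator, can be sketched in plain C++ as follows. This is a minimal illustration rather than the recipe's actual code: the function names and the `delta` parameter are hypothetical, and the input is assumed to be a list of luminance values that have already been computed.

```cpp
#include <cmath>
#include <vector>

// Log-average luminance: exp of the mean of log(delta + Lw).
// delta keeps the logarithm finite for black pixels.
inline float logAverageLuminance(const std::vector<float>& lum,
                                 float delta = 0.0001f) {
    if (lum.empty()) return 0.0f;
    double sum = 0.0;
    for (float l : lum) sum += std::log(static_cast<double>(delta) + l);
    return static_cast<float>(std::exp(sum / lum.size()));
}

// Modified operator: scale the luminance by a / logAve, then compress it
// using the white point Lwhite.
inline float toneMapLuminance(float Lw, float logAve, float a, float Lwhite) {
    float L = (a / logAve) * Lw;
    return L * (1.0f + L / (Lwhite * Lwhite)) / (1.0f + L);
}
```

Two properties are worth noting. For the luminances 1 and 100, the log-average is about 10, whereas the arithmetic mean is 50.5, so a few very bright pixels have far less influence on the result. Also, a scaled luminance equal to `Lwhite` maps to exactly 1.0, which is why `Lwhite` behaves like the smallest luminance that is displayed as pure white.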

However, there's one more detail that we need to deal with before we can start implementing. The previous equations all deal with luminance. Starting with an RGB value, we can compute its luminance, but once we modify the luminance, how do we modify the RGB components to reflect the new luminance, but without changing the hue (or chromaticity)?

The solution involves switching color spaces. If we convert the scene to a color space that separates the luminance from the chromaticity, then we can change the luminance value independently. The CIE XYZ color space has just what we need: it was designed so that the Y component describes the luminance of the color, and the chromaticity can be determined by two derived parameters (x and y). The derived color space is called the CIE xyY space. Its Y component contains the luminance, and its x and y components contain the chromaticity. By converting to the CIE xyY space, we factor out the luminance from the chromaticity, which allows us to change the luminance without affecting the perceived color.

So the process involves converting from RGB to CIE XYZ, then converting to CIE xyY, modifying the luminance, and reversing the process to get back to RGB. The conversion from RGB to CIE XYZ (and vice versa) can be described as a transformation matrix (refer to the code or the See also section for the matrix).

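The conversion from XYZ to xyY involves the following (the Y component carries over unchanged):

$$x = \frac{X}{X + Y + Z}, \qquad y = \frac{Y}{X + Y + Z}$$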

Finally, converting from xyY back to XYZ is done using the following equations:

$$X = \frac{Y}{y}\,x, \qquad Z = \frac{Y}{y}\,(1 - x - y)$$

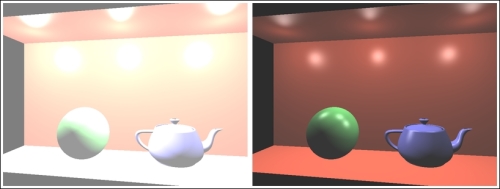

The following images show an example of the results of this tone mapping operator. The left image shows the scene rendered without any tone mapping. The shading was deliberately calculated with a wide dynamic range using three strong light sources. The scene appears "blown out" because any values that are greater than 1.0 simply get clamped to the maximum intensity. The image on the right uses the same scene and the same shading, but with the previous tone mapping operator applied. Note the recovery of the specular highlights from the "blown-out" areas on the sphere and teapot.

The steps involved are the following:

1. Render the scene to a high-resolution texture.

2. Compute the log-average luminance (on the CPU).

3. Render a screen-filling quad to execute the fragment shader for each screen pixel. In the fragment shader, read from the texture created in step 1, apply the tone mapping operator, and send the results to the screen.

To get set up, create a high-res texture (using GL_RGB32F or similar format) attached to a framebuffer with a depth attachment. Set up your fragment shader with a subroutine for each pass. The vertex shader can simply pass through the position and normal in eye coordinates.

To implement HDR tone mapping, we'll use the following steps:

1. In the first pass, we just want to render the scene to the high-resolution texture. Bind the framebuffer that has the texture attached and render the scene normally. Apply whatever shading equation strikes your fancy.

2. Compute the log-average luminance of the pixels in the texture. To do so, we'll pull the data from the texture and loop through the pixels on the CPU side. We do this on the CPU for simplicity; a GPU implementation, perhaps with a compute shader, would be faster.

   ```cpp
   // Read the HDR texture back to the CPU as tightly packed RGB floats
   GLfloat *texData = new GLfloat[width*height*3];
   glActiveTexture(GL_TEXTURE0);
   glBindTexture(GL_TEXTURE_2D, hdrTex);
   glGetTexImage(GL_TEXTURE_2D, 0, GL_RGB, GL_FLOAT, texData);

   // Sum the logarithm of each pixel's luminance; the small offset
   // avoids taking the logarithm of zero for black pixels
   float sum = 0.0f;
   int size = width*height;
   for( int i = 0; i < size; i++ ) {
     float lum = computeLuminance(
       texData[i*3+0], texData[i*3+1], texData[i*3+2]);
     sum += logf( lum + 0.00001f );
   }
   delete [] texData;

   // Convert the mean logarithm back to a luminance
   float logAve = expf( sum / size );
   ```

3. Set the `AveLum` uniform variable using `logAve`. Switch back to the default framebuffer, and draw a screen-filling quad. In the fragment shader, apply the tone mapping operator to the values from the texture produced in step 1.

   ```glsl
   // Retrieve high-res color from texture
   vec4 color = texture( HdrTex, TexCoord );

   // Convert to XYZ
   vec3 xyzCol = rgb2xyz * vec3(color);

   // Convert to xyY
   float xyzSum = xyzCol.x + xyzCol.y + xyzCol.z;
   vec3 xyYCol = vec3(0.0);
   if( xyzSum > 0.0 ) // Avoid divide by zero
     xyYCol = vec3( xyzCol.x / xyzSum, xyzCol.y / xyzSum, xyzCol.y );

   // Apply the tone mapping operation to the luminance
   // (xyYCol.z or xyzCol.y)
   float L = (Exposure * xyYCol.z) / AveLum;
   L = (L * ( 1.0 + L / (White * White) )) / ( 1.0 + L );

   // Using the new luminance, convert back to XYZ
   if( xyYCol.y > 0.0 ) {
     xyzCol.x = (L * xyYCol.x) / (xyYCol.y);
     xyzCol.y = L;
     xyzCol.z = (L * (1.0 - xyYCol.x - xyYCol.y)) / xyYCol.y;
   }

   // Convert back to RGB and send to output buffer
   FragColor = vec4( xyz2rgb * xyzCol, 1.0 );
   ```

In the first step, we render the scene to an HDR texture. In step 2, we compute the log-average luminance by retrieving the pixels from the texture and doing the computation on the CPU (that is, in the application code rather than in a shader).

In step 3, we render a single screen-filling quad to execute the fragment shader for each screen pixel. In the fragment shader, we retrieve the HDR value from the texture and apply the tone mapping operator. There are two "tunable" variables in this calculation. The variable `Exposure` corresponds to the $a$ term in the tone mapping operator, and the variable `White` corresponds to $L_{white}$. For the previous image, we used values of 0.35 and 0.928, respectively.

Tone mapping is not an exact science. Often, it is a process of experimenting with the parameters until you find something that works well and looks good.

We could improve the efficiency of the previous technique by implementing step 2 on the GPU using compute shaders (refer to Chapter 10, Using Compute Shaders) or some other clever technique. For example, we could write the logarithms to a texture, then iteratively downsample the full frame to a 1 x 1 texture. The final result would be available in that single pixel. However, with the flexibility of the compute shader, we could optimize this process even more.
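The downsampling idea can be prototyped on the CPU before moving it to the GPU. The sketch below is only an illustration of the reduction itself, not a GPU implementation: the function names are hypothetical, and it assumes a square image whose side length is a power of two.

```cpp
#include <cmath>
#include <vector>

// Halve a square image (side length `size`, assumed to be a power of two)
// by averaging each 2x2 block of values.
inline std::vector<float> downsample2x2(const std::vector<float>& src, int size) {
    int half = size / 2;
    std::vector<float> dst(half * half);
    for (int y = 0; y < half; ++y) {
        for (int x = 0; x < half; ++x) {
            float a = src[(2 * y) * size + 2 * x];
            float b = src[(2 * y) * size + 2 * x + 1];
            float c = src[(2 * y + 1) * size + 2 * x];
            float d = src[(2 * y + 1) * size + 2 * x + 1];
            dst[y * half + x] = 0.25f * (a + b + c + d);
        }
    }
    return dst;
}

// Write the logarithms to a buffer, reduce it to 1 x 1, then convert back.
inline float logAverageByDownsampling(std::vector<float> lum, int size,
                                      float delta = 0.0001f) {
    for (float& l : lum) l = std::log(l + delta);
    while (size > 1) {
        lum = downsample2x2(lum, size);
        size /= 2;
    }
    return std::exp(lum[0]);
}
```

Since every level averages equally sized blocks, the single remaining value is the mean of all the logarithms, so the result matches the direct loop over all pixels up to floating-point error.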

- Bruce Justin Lindbloom has provided a useful web resource for conversion between color spaces. It includes among other things the transformation matrices needed to convert from RGB to XYZ. Visit: http://www.brucelindbloom.com/index.html?Eqn_XYZ_to_RGB.html.

- The Rendering to a texture recipe in Chapter 4, Using Textures.