CHAPTER 35

Windows Server Virtualization

IN THIS CHAPTER

Understanding Microsoft’s Virtualization Strategy

What’s New in Windows Server 2016 Hyper-V

Introduction to Docker and Containers

Planning Your Implementation of Hyper-V

Installing the Microsoft Hyper-V Role

Becoming Familiar with the Hyper-V Administrative Console

Installing a Guest Operating System Session

Modifying Guest Session Configuration Settings

Launching a Hyper-V Guest Session

Using Snapshots of Guest Operating System Sessions

Quick Migration and Live Migration

Hyper-V within Windows Server 2012 underwent a series of significant improvements until it met and exceeded the feature and function capabilities of what used to be the top virtualization technologies in the market. Now stand as far back from the server as possible because Hyper-V on Windows Server 2016 rocks.

Hyper-V on Windows Server 2016 supports up to 240 virtual CPUs and 12TB of RAM per generation 2 virtual machine (64 virtual CPUs and 1TB for generation 1). It also has built-in host-to-host failover clustering, and it can do site-to-site failover in an extremely efficient manner. All of this is built on the base Windows Server 2016 operating system that network administrators already know how to configure and operate. In just a few short years, Microsoft not only jumped into the virtual server marketplace but also invested in and expanded the capabilities of Hyper-V with each release. Now, with Hyper-V in Windows Server 2016, Microsoft has not only surpassed the competition in the features, functions, and ease of implementation of core high-availability, scalability, and disaster recovery technologies; it has also surpassed itself.

We begin this chapter with some history and background on Windows Server virtualization. Then we get into the specifics of Windows Server 2016 support for Hyper-V and the new support for Docker and containers.

Understanding Microsoft’s Virtualization Strategy

Server virtualization is the ability of a single system to host multiple guest operating system sessions, taking advantage of the processing capabilities of a very powerful server. Just a couple of years ago, most servers in datacenters were running below 5% to 10% processor utilization, so significant capacity on those servers went unused. By consolidating multiple servers as guest sessions on a single virtual server host, organizations can now use more of a server’s capacity. Even with server virtualization, however, organizations are still only pushing their servers to 40% to 50% utilization, leaving half or more of each server’s capacity unused.

The key to pushing utilization to 70% or 80% or more lies in virtualization technology that better supports redundancy, failover, capacity utilization, monitoring, and automated management. This is what Microsoft has added to Hyper-V in Windows Server 2016. It is complemented by even more enhanced monitoring and management from Microsoft’s recently released System Center 2016 family of products (covered in Chapter 36, “Integrating System Center Operations Manager 2016 with Windows Server 2016”). With the core technology improvements in Windows Server 2016 Hyper-V, organizations can safely push server utilization to higher limits. This includes the ability to build in failover redundancy and capacity management for a more efficiently managed and maintained virtual server environment.

History of Windows Virtualization

Before the release of the previous version of Windows Server (Windows Server 2012 R2), Microsoft’s position in the virtualization marketplace wasn’t that of a vendor with a bad product. However, Microsoft had jumped into the server virtualization space only a couple of years before the release of Windows Server 2008 R2. It was therefore a relative newcomer, and its product still needed to mature.

Acquisition of Virtual PC

Microsoft jumped into the virtualization marketplace in 2003 by acquiring a company called Connectix. At the time of the acquisition, Connectix’s Virtual PC provided a virtual session of Windows on either a Windows system or a Macintosh computer system. Virtual PC was used largely by organizations testing server software or demonstrating Windows systems on desktop and laptop systems. Virtual PC for the Mac also let Macintosh users run Windows on their Macintosh computers.

Microsoft later dropped the development of Virtual PC for the Mac; however, it continued to develop virtualization for Windows systems with the release of Virtual PC 2007. Virtual PC 2007 enabled users running Windows XP or Vista to install, configure, and run virtual guest sessions of Windows or even non-Windows operating systems.

Microsoft Virtual Server

Virtual PC was designed to run under an operating system that was typically optimized for personal or individual applications. As a result, Virtual PC did not scale for a datacenter that wanted to run four, eight, or more sessions on a single system. At the time of the acquisition, Connectix was developing a virtual server solution that ran virtualization technologies on a Windows Server 2003 host system.

Because a Windows Server 2003 system provided more RAM availability, supported multiple processors, and generally had more capacity and capabilities than a desktop client system, Microsoft Virtual Server provided organizations with more capabilities for server-based virtualization in a production environment.

Virtual Server 2005

Although the initial Virtual Server acquired from Connectix provided basic server virtualization capabilities, Virtual Server 2005 was Microsoft’s first internally developed product. Virtual Server 2005 provided better support for and integration into a Windows Server 2003 environment, better support for multiprocessor systems and systems with more RAM, and better integration with other Microsoft server products.

In just two years, Microsoft went from having no virtual server technologies to a second-generation virtual server product; however, even with Virtual Server 2005, Microsoft was still very far behind its competitors.

Virtual Server 2005 R2

Over the subsequent two years, Microsoft released two major updates to Virtual Server 2005: an R2 edition of the product and a service pack for the R2 edition. Virtual Server 2005 R2 Service Pack 1 provided the following capabilities:

Virtual Server host clustering—This technology allowed an organization to cluster host systems to one another, thus allowing guest sessions to have higher redundancy and reliability.

x64 host support—x64 host support meant that organizations could use the 64-bit version of Windows Server 2003 as the host operating system, which provided better support for the larger memory and system capacity of x64 systems. Guest operating systems, however, were still limited to x86 platforms.

Hardware-assisted virtualization—Virtual Server supported the virtualization extensions in newer Intel (Intel VT) and AMD (AMD-V) processors, which provided better distribution of processor resources to virtual guest sessions.

iSCSI support—This technology allowed virtual guest sessions to connect to iSCSI storage systems, thus providing better storage management and storage access for the guest sessions running on a virtual server host.

Support for more than 16GB virtual disk sizes—Virtual disks could now reach 2TB in size, so organizations could run guest sessions with extremely large storage capacity.

These capabilities—among other capabilities of the latest Virtual Server 2005 product—brought Microsoft a little closer to its competition in the area of server virtualization.

Hyper-V in Windows Server 2008 and Windows Server 2008 R2

Microsoft did not truly have a server virtualization offering until the release of Windows Server 2008, just a few short years ago. Microsoft knew it had to make significant investments in Hyper-V to be taken seriously in the fast-growing server virtualization marketplace. With the release of Windows Server 2008 and Hyper-V, Microsoft finally had a contender. Over the subsequent two years, Microsoft released major updates to Hyper-V in Windows Server 2008 R2 and Windows Server 2008 R2 SP1.

Major enhancements in Hyper-V in the Windows Server 2008 family of operating systems include the following:

Support for 64-bit guest sessions—This was critical, because until Hyper-V, Microsoft supported only 32-bit guest sessions. By 2008, servers were 64-bit, so Microsoft needed to support 64-bit guest sessions, and Hyper-V delivered that capability.

Guest sessions with up to 64GB memory—With support for 64-bit server guest sessions, Microsoft had to support more than 16GB or 32GB of RAM. With each subsequent release of Hyper-V, Microsoft expanded its support for more RAM in each guest session.

Ability to support four virtual CPUs per virtual guest session—Physical hardware now offered 8-, 16-, and 32-core processors. Microsoft provided support for up to four virtual CPUs per virtual guest session so that the processing capacity within each guest session could scale.

Built-in Live Migration high availability—As organizations put multiple server workloads onto a single physical server, the failure of that server could take down several guest sessions at once. Microsoft added Live Migration to Hyper-V in Windows Server 2008 R2. It moves one or more guest sessions from one Hyper-V host server to another, typically with no interruption to the application or to the users accessing it. With Live Migration, organizations could fail guest sessions over from host to host and have redundancy in case a host server failed.

All of these capabilities finally put Microsoft into contention in the virtual server marketplace. For small businesses and relatively basic application workloads, Hyper-V was an excellent solution. Hyper-V was included in Windows Server licensing, it used a familiar Windows interface for administration and management, and it worked on any hardware platform that Windows Server 2008/2008 R2 worked on. It simply provided server virtualization without special hardware, configuration, or complexity. However, Hyper-V was still a step (or two) behind its biggest rival, VMware, in core scalability, high availability, and functionality. With Windows Server 2016, that has changed, and Microsoft has now met and exceeded the capabilities of VMware.

Integration of Hypervisor Technology in Windows Server

To leap beyond its competition in server virtualization, Microsoft had to make some significant changes to the operating system that hosted its next-generation virtual server technology. Starting with the original development of Hyper-V in Windows Server 2008, Microsoft laid the foundation for integrated server virtualization right within the core Windows Server operating system. The core technology, commonly known as the hypervisor, is a thin layer between the hardware and the operating systems, and it provides better support for guest operating system sessions. As already noted in this chapter, Microsoft calls this hypervisor-based technology Hyper-V.

Before Hyper-V was included in Windows Server 2008, the virtualization “stack” sat on top of the host operating system. All guest operating systems had to share system resources such as network communications, video processing, and memory allocation. If a host component such as the network adapter driver failed, all guest sessions would lose network communication at the same time.

Technologies such as VMware ESX, Citrix XenServer, and Hyper-V leverage a hypervisor-based technology that allows the guest operating systems to effectively bypass the host operating system and communicate directly with system resources. In some instances, the hypervisor manages shared guest session resources, and in other cases guest sessions bypass the hypervisor and process requests directly to the hardware layer of the system. By providing better independence of systems communications, the hypervisor-supported environment provides organizations better scalability, better performance, and, ultimately, better reliability of the core virtual host environment.

Hyper-V is available right within the Windows Server Standard and Datacenter editions.

NOTE

Hyper-V in Windows Server 2016 is supported only on x64 systems that have hardware-assisted virtualization support. CPUs must support Intel VT or AMD-V, second-level address translation (SLAT), and hardware-enforced Data Execution Prevention (DEP). These features must also be enabled in the computer BIOS or UEFI firmware. Fortunately, almost all servers purchased in recent years include these capabilities.
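You can check whether a prospective host meets these requirements before adding the role. The following is a sketch, assuming Windows PowerShell 5.1 or later, where `Get-ComputerInfo` exposes the Hyper-V requirement properties. Once a hypervisor is already running, those requirement fields are no longer reported reliably, so the script checks for that case first.

```powershell
# Gather every Hyper-V-related property (wildcard match on property names)
$info = Get-ComputerInfo -Property "HyperV*"

if ($info.HyperVisorPresent) {
    # With a hypervisor loaded, the individual requirement fields aren't meaningful
    "A hypervisor is already running on this system."
} else {
    # Each requirement should read True: VM monitor mode extensions (Intel VT/AMD-V),
    # SLAT, virtualization enabled in firmware, and hardware-enforced DEP
    $info | Format-List -Property HyperVRequirement*
}
```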

Windows Server Hyper-V

As mentioned, significant improvements in Hyper-V in Windows Server finally make Hyper-V not only a comparative contender in the server virtualization marketplace, but now a leader that has raised the bar on what virtual server technologies need to be and do for an organization. There are many long-awaited features and technologies built in to Hyper-V. These can be broken down into three major categories: increased host and guest session capacity, integrated high-availability technologies, and enhanced manageability.

Increased Host and Guest Session Capacity

Microsoft did more than improve host and guest session capacity. It blew the roof off the amount of memory supported within a virtual guest session and the number of virtual CPUs supported per guest session, along with other core guest session capabilities. Table 35.1 compares Hyper-V in Windows Server 2016 with Hyper-V in Windows Server 2008 R2 and Windows Server 2012, and with Microsoft’s biggest competitor, VMware.

TABLE 35.1 Hyper-V Capacity Comparison

| Capability | Hyper-V (Windows Server 2008 R2) | VMware ESXi 5.0/vSphere 5.0 Enterprise Plus | Hyper-V (Windows Server 2012) | Hyper-V (Windows Server 2016) |
| --- | --- | --- | --- | --- |
| Logical processors (per host) | 64 | 160/160 | 320 | 512 |
| Physical memory (per host) | 1TB | 32GB/2TB | 4TB | 24TB |
| Virtual CPUs per virtual guest session | 4 | 8/32 | 64 | 64 (generation 1)/240 (generation 2) |
| Memory per guest session | 64GB | 32GB/1TB | 1TB | 1TB (generation 1)/12TB (generation 2) |
| Active virtual guests per host | 384 | 512/512 | 1,024 | 1,024 |
| Maximum nodes in a cluster | 16 | NA/32 | 64 | 64 |

Integrated High-Availability Technologies

Hyper-V in Windows Server 2012 also greatly improved the high availability of Hyper-V hosts and guest sessions. The improvements range from zero-downtime patching and updating of hosts and guest sessions to server-to-server and site-to-site failover, and Windows Server 2016 carries them forward. Specifically, the integrated technologies in Hyper-V for high availability include the following:

Live migration failover (no SAN required)—Live migration moves a guest session from one Hyper-V host server to another without the end users connected to the guest session losing connectivity to their application. When Live Migration was introduced in Windows Server 2008 R2 Hyper-V, it required a storage-area network (SAN) as the shared storage for the guest session failover. This made Live Migration expensive and less flexible for smaller businesses or for the smaller sites of large organizations. With Hyper-V in Windows Server 2016, live migrations of guest sessions can be done with just a basic Windows Server 2016 file server as the shared storage for the cluster failover.

Zero downtime patching/updating—Another challenge with server virtualization is the reliance on one host server to run several (sometimes a dozen or more) live guest sessions. When the host operating system has to be patched or updated, all the virtual guest sessions must be brought down or live migrated to other servers. Windows Server 2016 includes Cluster-Aware Updating (CAU), a feature that automatically updates the nodes of a cluster (such as Hyper-V cluster nodes) without interruption to end users. Before patching each node, CAU moves that node’s workloads to other cluster nodes. You can find more information about CAU in Chapter 28, “System-Level Fault Tolerance (Clustering/Network Load Balancing).”

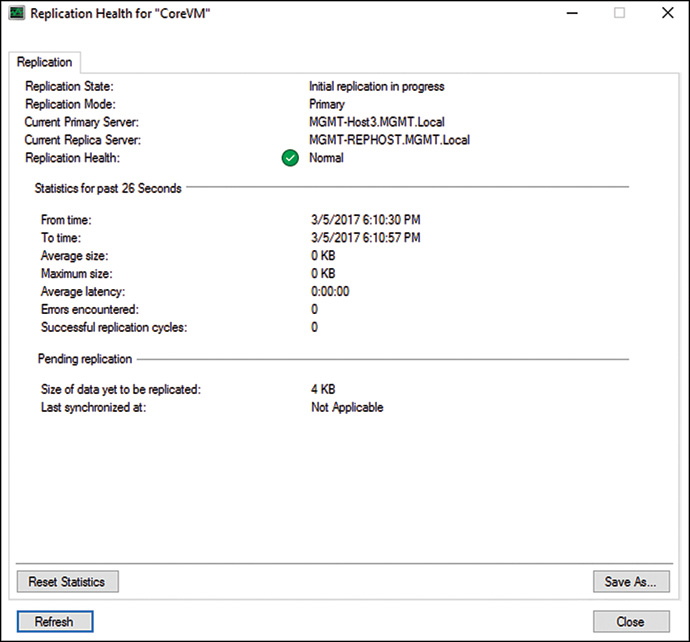

Integrated site-to-site replication—Failover of guest sessions has been supported since Windows Server 2008 Hyper-V, but failing guest sessions over between sites had not been a strength of Hyper-V. Windows Server 2016 Hyper-V includes a technology called Hyper-V Replica that replicates virtual guest session data between sites. If a site fails, another site can come online with replicated copies of the guest session systems. You can find more about site-to-site replication in the section “Utilizing Hyper-V Replica for Site-to-Site Redundancy.”

Built-in NIC teaming—Network interface card (NIC) teaming lets multiple network adapters in a virtual server host system share the network communications load. It is nothing new in the industry; hardware vendors like Hewlett-Packard, Dell, and IBM have long provided drivers and support for it. The significance of NIC teaming in Windows Server 2016 is that it is now built into the operating system. Suppose NIC aggregation or NIC separation is configured on a Hyper-V host for performance or redundancy, and that host fails over to another host server. Windows Server 2016 Hyper-V understands the underlying networking operations needed to support the failover. There’s no more finger-pointing between driver vendors about functionality because the technology is now core to Windows Server 2016.
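Each of the high-availability features in the list above can also be driven from PowerShell. This is a minimal sketch, not a complete runbook, and it rests on several assumptions:

- The host, cluster, VM, and adapter names (HV02, HVCluster01, SQL01, FS01, hv-dr01.contoso.com, NIC1, NIC2) and the D:\Replica path are hypothetical placeholders.
- The Hyper-V, FailoverClusters, ClusterAwareUpdating, and NetLbfo PowerShell modules are installed.
- The hosts are domain-joined, so Kerberos can authenticate replication.
- The Replica lines use standalone (non-clustered) primary and replica hosts.

```powershell
# --- Live migration: move a running clustered guest session to another node ---
Move-ClusterVirtualMachineRole -Name "SQL01" -Node "HV02" -MigrationType Live

# --- Cluster-Aware Updating: one updating run across the Hyper-V cluster ---
# CAU drains each node in turn (moving its clustered VMs), patches it, then resumes it
Invoke-CauRun -ClusterName "HVCluster01" -CauPluginName "Microsoft.WindowsUpdatePlugin" `
    -MaxFailedNodes 1 -MaxRetriesPerNode 3 -RequireAllNodesOnline -Force

# --- Hyper-V Replica, step 1: run on the replica (recovery-site) server ---
Set-VMReplicationServer -ReplicationEnabled $true `
    -AllowedAuthenticationType Kerberos `
    -ReplicationAllowedFromAnyServer $true `
    -DefaultStorageLocation "D:\Replica"
# Kerberos-authenticated replication travels over HTTP, so open the in-box listener rule
Enable-NetFirewallRule -DisplayName "Hyper-V Replica HTTP Listener (TCP-In)"

# --- Hyper-V Replica, step 2: run on the primary server for each guest session ---
Enable-VMReplication -VMName "FS01" -ReplicaServerName "hv-dr01.contoso.com" `
    -ReplicaServerPort 80 -AuthenticationType Kerberos
Start-VMInitialReplication -VMName "FS01"

# --- Built-in NIC teaming: team two physical adapters on the host ---
New-NetLbfoTeam -Name "HostTeam" -TeamMembers "NIC1", "NIC2" `
    -TeamingMode SwitchIndependent -LoadBalancingAlgorithm Dynamic
```

Replicating a clustered VM needs additional setup (the Hyper-V Replica Broker cluster role), which this sketch leaves out.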

Enhanced Manageability

Core to consolidating physical servers to virtual guest sessions on a limited number of physical host servers is the ability to more easily manage and support the guest sessions. Without manageability, “server sprawl” exists, where it is so easy to spin up a server that organizations do so without realizing it. Eventually, the organization has a lot more servers than it needs, doesn’t have an easy way to manage or administer the servers, and spends more time managing the guest sessions than it did before with physical servers.

Key to Windows Server 2016 is its ability to more easily manage and support systems (physical and virtual). Key improvements in manageability include the following:

Server Manager console—Windows Server 2016 Server Manager is a centralized server management console that enables an administrator to virtually see, organizationally group, and centrally manage systems, whether physical or virtual. Because an administrator can see, manage, and administer groups of systems at a time, a simple configuration or update can be applied once to many systems simultaneously. Other virtual server technologies focus just on the ability to create more virtual guest sessions. Windows Server 2016 with Hyper-V provides not only a better way of spinning up guest sessions but also a better way of managing them. Server Manager and Windows systems management are covered in Chapter 17, “Windows Server 2016 Administration.”

IP address mobility—During a failover from one datacenter to another, one of the biggest challenges for organizations is the need to change IP addresses based on the subnet and configuration of network resources after the site failover. Windows Server 2016 enables you to make IP addresses, including Dynamic Host Configuration Protocol (DHCP)-issued addresses, portable between sites. Upon failover, address tables are automatically updated, and issued IP addresses are available in the redundant datacenter for immediate operations with no need for IT to readdress systems in the surviving datacenter. You can find more information about IP address mobility in Chapter 10, “DHCP, IPv6, IPAM.”

BitLocker encryption of hosts and guests—Virtual guest sessions are spinning up virtually everywhere in an enterprise, even in small and remote sites, so better security of hosts and guest sessions becomes critical for the security of the enterprise. Windows Server 2016 supports BitLocker encryption of both hosts and guest sessions, including local disk storage, failover cluster disks, and cluster shared volumes. This helps an organization improve the security of Hyper-V hosts and guests. You can find more about BitLocker encryption in Chapter 12, “Server-Level Security.”

NOTE

Hyper-V can host guest operating systems for Windows servers, client systems, and non-Windows systems. Management of virtual hosts and guest sessions can be further enhanced with additional tools in Microsoft System Center 2016 Virtual Machine Manager (VMM).

VMM enables you to do physical-to-virtual image creation and virtual-to-virtual image copying and extends beyond just virtual guest and host management to also include the management of the “fabric” of a network. The fabric of a network includes the management of SANs, creation of virtual local-area networks (VLANs), and the automatic creation of entire two-tier and three-tier bundles of servers.

System Center 2016 is complementary to the management and administration of Hyper-V hosts. The entire System Center 2016 suite of products is covered in the Sams Publishing book System Center 2016 Unleashed, which addresses not only VMM for automating the process of spinning up guest session environments, but also covers Configuration Manager for patching and managing host servers and virtual guest sessions, Data Protection Manager for backing up hosts and guests, and the other components in the System Center family.

Microsoft Hyper-V Server as a Role in Windows Server 2016

Hyper-V is added as a server role, just as the Windows Server 2016 Remote Desktop Services, DNS Server, or Active Directory Domain Services roles are added to a server.
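On a server with the required processor features, the role can be added from PowerShell as well as from Server Manager. A minimal sketch; the remote server name HV01 is a hypothetical placeholder:

```powershell
# Add the Hyper-V role plus its management tools (Hyper-V Manager and the
# Hyper-V PowerShell module). -Restart reboots the server, which is needed
# to load the hypervisor.
Install-WindowsFeature -Name Hyper-V -IncludeManagementTools -Restart

# The same installation, targeting a remote server
Install-WindowsFeature -Name Hyper-V -ComputerName "HV01" -IncludeManagementTools -Restart

# Confirm that the role and its tools are installed
Get-WindowsFeature -Name Hyper-V*
```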

New and Improved Windows Server 2016 Hyper-V

This section describes what’s new and updated in Hyper-V on Windows Server 2016 and Microsoft Hyper-V Server 2016. Suppose a virtual machine was created with Windows Server 2012 R2 and then moved or imported to a server that runs Hyper-V on Windows Server 2016. To use the new features on that virtual machine, you’ll need to manually upgrade its configuration version.
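Here is a sketch of that upgrade in PowerShell. It assumes a VM named WebVM01 (a hypothetical name) imported from a Windows Server 2012 R2 host. Windows Server 2012 R2 VMs report configuration version 5.0, and VMs created on Windows Server 2016 use version 8.0. The upgrade is one-way: afterward, the VM can no longer run on a Windows Server 2012 R2 host.

```powershell
# List each VM's configuration version
Get-VM | Format-Table Name, State, Version

# The VM must be off before its configuration version can be upgraded
Stop-VM -Name "WebVM01"

# Upgrade to the highest version this host supports (-Force skips the prompt)
Update-VMVersion -Name "WebVM01" -Force

Start-VM -Name "WebVM01"
```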

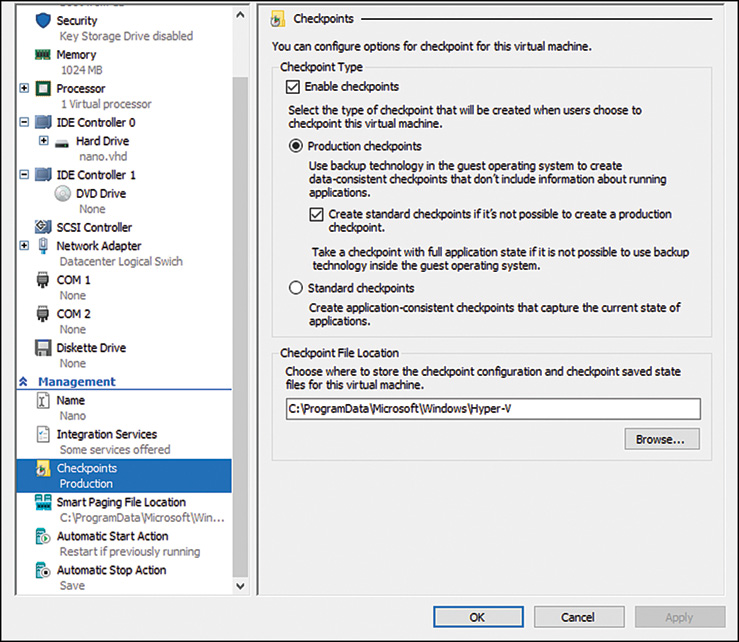

Production checkpoints

Virtual machine checkpoints (called virtual machine snapshots in older versions) were a great way to capture and save the state of a virtual machine. You could then make some changes, and if something failed, simply revert to the point at which you took the checkpoint. Checkpoints were not supported in production, however, because many applications could not cope with being rolled back this way. Microsoft has now changed that behavior and fully supports checkpoints in production environments.

To make this possible, production checkpoints use the Volume Shadow Copy Service (VSS) in Windows guests, instead of the saved state, to create the checkpoint. Restoring a production checkpoint is therefore just like restoring a system from a backup. For the user, everything works as before, and you take a checkpoint the same way. Production checkpoints are enabled by default (see Figure 35.1), but you can change back to the old behavior if you need to. Other challenges of using checkpoints, such as growing .avhdx files, still apply.
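The checkpoint type is a per-VM setting with these values:

- Production falls back to a standard checkpoint if a production checkpoint can’t be taken.
- ProductionOnly fails instead of falling back.
- Standard restores the pre-2016 behavior.

The following sketch assumes a hypothetical VM named AppVM01. It selects production checkpoints, then walks through taking a checkpoint, rolling back to it, and cleaning up.

```powershell
# Use production (VSS-based) checkpoints, falling back to standard if needed
Set-VM -Name "AppVM01" -CheckpointType Production

# Take a checkpoint before a risky change
Checkpoint-VM -Name "AppVM01" -SnapshotName "Before app upgrade"

# Rollback path: a restored production checkpoint leaves the VM turned off,
# just like a system restored from backup, so start it afterward
Restore-VMSnapshot -VMName "AppVM01" -Name "Before app upgrade" -Confirm:$false
Start-VM -Name "AppVM01"

# Delete the checkpoint once it's no longer needed, so Hyper-V merges the
# .avhdx differencing disk back into the parent virtual disk
Remove-VMSnapshot -VMName "AppVM01" -Name "Before app upgrade"
```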

Host Resource Protection

Host Resource Protection is a technology that was initially built and designed for Microsoft’s hyperscale public cloud, Azure, and is now making its way into private cloud environments with Windows Server 2016. Malware, ransomware, and other malicious activities are becoming the norm in both public and private cloud environments. Host Resource Protection aims to identify abnormal patterns of access by using a heuristics-based approach to dynamically detect malicious code. When an issue is identified, the VM’s performance is throttled back so as not to affect the performance of the other VMs on the Hyper-V host.

This monitoring and enforcement is off by default. Use Windows PowerShell to turn it on or off. To turn it on for a virtual machine, run this command:

Set-VMProcessor -VMName <VMName> -EnableHostResourceProtection $true
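Because the setting is applied per VM, a pipeline is a convenient way to cover a whole host. This sketch assumes you want every VM on the host protected (filter the Get-VM output to target fewer), and VM01 is a placeholder.

```powershell
# Enable Host Resource Protection on every VM on this host.
# If a running VM rejects the change, apply it while that VM is shut down.
Get-VM | Set-VMProcessor -EnableHostResourceProtection $true

# Confirm the setting per VM
Get-VM | Get-VMProcessor | Select-Object VMName, EnableHostResourceProtection

# Turn it back off for a single VM
Set-VMProcessor -VMName "VM01" -EnableHostResourceProtection $false
```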

Hot add and remove for network adapters and memory

Windows Server 2016 gives you the ability to add and remove network adapters on the fly, without downtime, although the VM must be a generation 2 VM. You can also adjust the memory of a running VM. This works on generation 1 and generation 2 VMs, and it doesn’t even require dynamic memory to be enabled for that VM.
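A minimal sketch of both operations against a running VM follows. The VM name, switch name, adapter name, and memory size are placeholders. Runtime memory resize also assumes a guest running Windows Server 2016 or Windows 10.

```powershell
# Hot-add a network adapter to a running generation 2 VM
Add-VMNetworkAdapter -VMName "VM01" -SwitchName "External" -Name "Backup NIC"

# Hot-remove the same adapter
Remove-VMNetworkAdapter -VMName "VM01" -Name "Backup NIC"

# Resize memory on a running VM that uses static (non-dynamic) memory
Set-VMMemory -VMName "VM01" -StartupBytes 8GB
```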

Discrete device assignment

Discrete device assignment lets you take some of the PCI Express devices in a host and pass them directly through to a VM. This performance-enhancing feature gives the VM direct access to the PCI device, bypassing the virtualization stack. For example, you may want to get the most out of Photoshop or another application that needs a graphics processor, or GPU. If the host has GPUs that aren’t needed by the Windows management OS, you can dismount them and pass them through to a guest VM.
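The dismount-and-assign sequence can be sketched in PowerShell as follows. This is a hypothetical example: the friendly-name filter and the VM name GPU-VM01 are assumptions to adjust for your hardware. Some GPUs also need additional memory-mapped I/O settings on the VM, which are not shown here.

```powershell
# Identify the device to pass through (the "*NVIDIA*" filter is an assumption)
$device = Get-PnpDevice -Class Display -FriendlyName "*NVIDIA*" | Select-Object -First 1
$locationPath = (Get-PnpDeviceProperty -InstanceId $device.InstanceId -KeyName DEVPKEY_Device_LocationPaths).Data[0]

# Disable the device on the host, then dismount it from the management OS
Disable-PnpDevice -InstanceId $device.InstanceId -Confirm:$false
Dismount-VMHostAssignableDevice -LocationPath $locationPath -Force

# A VM with an assigned device must turn off (not save) when the host stops;
# the VM must be off while the device is added
Set-VM -Name "GPU-VM01" -AutomaticStopAction TurnOff
Add-VMAssignableDevice -LocationPath $locationPath -VMName "GPU-VM01"
```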

Nested virtualization

Nested virtualization provides the ability to run a virtualization environment inside a virtual machine (VM). Why would you do that? One of the most common uses for nested virtualization is in lab and training environments, because it reduces the number of physical servers needed to run hypervisors for training users.

In short, nested virtualization allows you to install the Hyper-V role on a physical server, create a VM that executes in the Hyper-V hypervisor, install and run the Hyper-V role in that VM, and create a new VM inside the original.
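The host-side preparation for the steps above can be sketched in PowerShell. This assumes a VM named NestedHost (a placeholder) that is turned off. On Windows Server 2016, dynamic memory is not supported for a VM that exposes virtualization extensions, so the sketch assigns static memory.

```powershell
# Run on the physical Hyper-V host while "NestedHost" is turned off
Set-VMProcessor -VMName "NestedHost" -ExposeVirtualizationExtensions $true

# Give the outer VM static memory
Set-VMMemory -VMName "NestedHost" -DynamicMemoryEnabled $false -StartupBytes 8GB

# Let nested guests reach the network through the outer VM's adapter
Get-VMNetworkAdapter -VMName "NestedHost" | Set-VMNetworkAdapter -MacAddressSpoofing On

Start-VM -Name "NestedHost"
# Then, inside NestedHost: Install-WindowsFeature -Name Hyper-V -IncludeManagementTools -Restart
```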

Windows PowerShell Direct

PowerShell Direct lets you connect remotely to a virtual machine running on a Hyper-V host without any network connection inside the virtual machine. PowerShell Direct uses the Hyper-V VMBus to connect to the virtual machine. This feature is handy for automating and configuring virtual machines, or if, for example, you misconfigured the network inside the virtual machine and you don’t have console access.

To get started, open PowerShell on your Hyper-V host and run Enter-PSSession -VMName followed by the name of the VM as it appears in Hyper-V. When prompted, enter the credentials of an administrator account on the virtual machine. After you’ve connected, you can run any cmdlet inside the virtual machine.
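A short sketch of both an interactive session and a one-off command over PowerShell Direct follows. VM01 is a placeholder, and the guest is assumed to run Windows 10 or Windows Server 2016, which PowerShell Direct requires.

```powershell
# Run from an elevated PowerShell session on the Hyper-V host
$cred = Get-Credential   # an administrator account inside the guest

# Interactive session over the VMBus (no guest network needed)
Enter-PSSession -VMName "VM01" -Credential $cred

# Or run a single script block, for example to inspect a broken network configuration
Invoke-Command -VMName "VM01" -Credential $cred -ScriptBlock {
    Get-NetIPConfiguration
}
```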

Linux Secure Boot

Microsoft added a secure boot option for Hyper-V virtual machines with Windows Server 2012 R2, but the option wasn’t available for Linux VMs. In Windows Server 2016, administrators can enable secure boot for VMs running a variety of Linux operating systems.

Secure boot is a signature-checking process that occurs during OS startup. It ensures that only approved OS components are loaded during boot, which prevents malicious code from running under the security context of the system account and gaining access to OS components.

To enable secure boot using Hyper-V Manager, open the settings of a Linux VM, select Security, check the Enable Secure Boot check box in the right pane, and then select Microsoft UEFI Certificate Authority from the Template drop-down list.
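The same setting can be applied from PowerShell. This sketch assumes a generation 2 VM named LinuxVM01 (a placeholder) that is turned off.

```powershell
# Enable secure boot with the template that trusts Linux boot loaders
Set-VMFirmware -VMName "LinuxVM01" -EnableSecureBoot On -SecureBootTemplate MicrosoftUEFICertificateAuthority

# Verify the result
Get-VMFirmware -VMName "LinuxVM01" | Select-Object VMName, SecureBoot, SecureBootTemplate
```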

Shared virtual hard disks

Shared VHDX was first introduced in Windows Server 2012 R2. It provides shared storage for use by virtual machines without having to “break through” the virtualization layer. However, shared VHDX has some limitations:

Resizing and migrating a shared VHDX is not supported.

Making a backup or a replica of a shared VHDX is not supported.

Windows Server 2016 introduces a new approach based on VHD Sets, which do not have these limitations. However, VHD Sets are available only for Windows Server 2016 guest operating systems.

Shared VHDX is still available in Windows Server 2016. The benefit is that you are not forced to upgrade your Windows Server 2012 R2 guest clusters when you move them to Windows Server 2016 Hyper-V cluster hosts.

To create a VHD Set, you can use the graphical user interface (GUI) or PowerShell cmdlets. From the GUI, open Hyper-V Manager, select New, Hard Disk, and then choose VHD Set as the disk format.
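From PowerShell, the .vhds file extension is what tells New-VHD to create a VHD Set. The path, size, and guest-cluster node names below are placeholders in this sketch.

```powershell
# Create a VHD Set on cluster shared storage
$path = "C:\ClusterStorage\Volume1\Shared\Data.vhds"
New-VHD -Path $path -SizeBytes 100GB -Dynamic

# Attach it to each guest-cluster node on a SCSI controller as shared storage
foreach ($node in "GuestNode1", "GuestNode2") {
    Add-VMHardDiskDrive -VMName $node -ControllerType SCSI -Path $path -SupportPersistentReservations
}
```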

Hyper-V Manager Improvements

Alternate credentials support—You can now use a different set of credentials in Hyper-V Manager when you connect to another Windows Server 2016 or Windows 10 remote host. You can also save these credentials to make it easier to log on again.

Manage earlier versions—With Hyper-V Manager in Windows Server 2016 and Windows 10, you can manage computers running Hyper-V on Windows Server 2012, Windows 8, Windows Server 2012 R2, and Windows 8.1.

Updated management protocol—Hyper-V Manager now communicates with remote Hyper-V hosts using the WS-MAN protocol, which permits CredSSP, Kerberos, or NTLM authentication. When you use CredSSP to connect to a remote Hyper-V host, you can do a live migration without enabling constrained delegation in Active Directory. The WS-MAN-based infrastructure also makes it easier to enable a host for remote management. WS-MAN connects over port 80, which is open by default.
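A hedged sketch of preparing a remote host and a management computer for CredSSP connections from Hyper-V Manager follows. The host name hv02.contoso.com is a placeholder. The TrustedHosts entry is typically needed only when the computers are not in the same domain.

```powershell
# On the remote Hyper-V host:
Enable-PSRemoting -Force
Enable-WSManCredSSP -Role Server -Force

# On the management computer running Hyper-V Manager:
# (this overwrites the existing TrustedHosts list; add -Concatenate to append instead)
Set-Item WSMan:\localhost\Client\TrustedHosts -Value "hv02.contoso.com" -Force
Enable-WSManCredSSP -Role Client -DelegateComputer "hv02.contoso.com" -Force
```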

New Storage Quality of Service (QoS)

In Windows Server 2016, Storage QoS can centrally manage and monitor storage performance for Hyper-V servers and the virtual machines they host. Storage QoS is built into the Hyper-V role in Windows Server 2016, and it can be used with either a Scale-Out File Server or traditional block storage in the form of CSV. Storage QoS is represented as a cluster resource in Failover Cluster Manager and is managed directly by the failover cluster. As soon as virtual machines use the Scale-Out File Server or CSV, Storage QoS monitors and tracks their storage flows.
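Policies are created in the cluster and then assigned to individual virtual disks. This is a minimal sketch: the policy name, IOPS limits, and the VM name SQL01 are placeholders. It assumes you run it on the cluster node that currently hosts SQL01.

```powershell
# Each disk assigned to "Gold" gets at least 500 and at most 5,000 normalized IOPS
$gold = New-StorageQosPolicy -Name "Gold" -PolicyType Dedicated -MinimumIops 500 -MaximumIops 5000

# Apply the policy to every virtual disk of SQL01
Get-VM -Name "SQL01" | Get-VMHardDiskDrive | Set-VMHardDiskDrive -QoSPolicyID $gold.PolicyId

# Monitor current storage flows, busiest first
Get-StorageQosFlow | Sort-Object StorageNodeIOPS -Descending | Select-Object -First 10
```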

Integration services delivered through Windows Update

In Windows Server 2016, Integration Services updates are delivered to guests through Windows Update, which makes it easier to keep more VMs running the latest components. This also gives tenants or workload owners control over the update process, so they no longer depend on the infrastructure team.

The vmguest.iso image file is no longer needed, so it isn’t included with Hyper-V on Windows Server 2016.

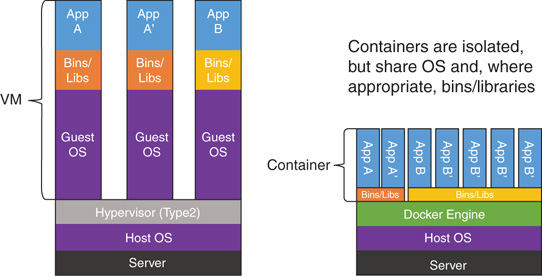

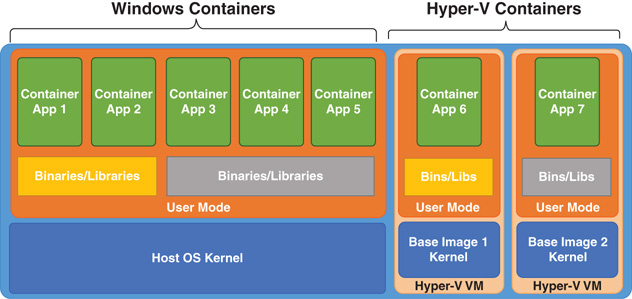

Windows Containers and Hyper-V Containers in Windows Server 2016

Containers are a very popular technology in the Linux world today as they solve a number of challenges related to application deployment.

Containers package the complete set of dependencies for an application, including middleware, runtimes, libraries, and even the OS requirements. Each of these dependencies, or layers, is packaged up and runs in its own user-mode container, isolated from other applications, which avoids problems with applications being incompatible with each other. Applications running in containers have their own view of the file system, registry, and even network addresses.

We will return to more on containers later in this chapter.

Planning Your Implementation of Hyper-V

For the organization that chooses to leverage the capabilities of Windows Server 2016 virtualization, a few moments should be spent determining the proper size, capacity, and capabilities of the host server. Many server applications get installed with little assessment of their resource requirements, because most servers in a datacenter run at less than 10% utilization, so there is plenty of excess capacity to absorb workload demands.

With Hyper-V, however, because each guest session is a discretely running operating system, installing as few as three or four high-performance guest sessions could quickly bring a server to 60% or 70% of its performance limits. Although you want host servers running at 60% to 80% utilization, balancing the server load and optimizing utilization is the key. So, the planning phase is an important step in a Hyper-V implementation.

Sizing Your Windows Server 2016 Server to Support Virtualization

The minimum server requirements for Windows Server 2016 apply, but because server virtualization is the focus of this system, those minimum requirements will not be sufficient to run Hyper-V virtualization.

In addition, although Windows Server 2016 supports up to 512 logical processors, 24TB of RAM, and 1,024 concurrently running virtual machines per host, the practical scaling of Windows virtualization comes down to the raw network I/O that can be driven from a single host server. In many environments where virtualized guest systems have relatively low system utilization and network traffic demand, a single host system could easily support a dozen, two dozen, or more guest sessions. In other environments where virtualized guest sessions have extremely high system utilization, lots of disk I/O, and significant network I/O, the organization might find that a single host server reaches its capacity with as few as seven or eight guest sessions.

RAM for the Host Server

The rule of thumb for memory of a Windows Server 2016 server running Hyper-V is to have 2GB of RAM for the host server, plus enough memory for each guest session. Therefore, if a guest session needs to have 2GB of RAM, and there are three such guest sessions running on the host system, the host system should be configured with at least 8GB of RAM. If a guest session requires 8GB of memory and three of those systems are running on the system, the server should be configured with 24GB of memory to support the three guest sessions, plus at least 2GB of memory for the host system itself.
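The rule of thumb reduces to simple arithmetic: the host reserve plus the number of guests times the RAM per guest. The function below is a hypothetical helper, not part of any Microsoft module. It is a sketch that assumes every guest needs the same amount of RAM.

```powershell
# Hypothetical helper: host reserve (2GB by default) + guest count x RAM per guest
function Get-HostRamRequirementGB {
    param(
        [Parameter(Mandatory)] [int]$GuestCount,
        [Parameter(Mandatory)] [double]$RamPerGuestGB,
        [double]$HostReserveGB = 2
    )
    return $HostReserveGB + ($GuestCount * $RamPerGuestGB)
}

Get-HostRamRequirementGB -GuestCount 3 -RamPerGuestGB 2   # 8
Get-HostRamRequirementGB -GuestCount 3 -RamPerGuestGB 8   # 26
```

For mixed workloads, call the function once per group of similar guests and subtract the extra host reserves, or pass -HostReserveGB 0 for all but one group.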

Processors for the Host Server

The host server itself in Windows Server 2016 virtualization has very modest processor requirements. In the virtualized environment, the processor demands of each guest session dictate how much processing capacity the server needs. If a guest session requires two cores to support the processing requirements of the application, and seven guest sessions are running on the system, the server should have at least 15 cores available (14 for the guests, plus headroom for the host). With quad-core processors, the system would need four physical processors; with dual-core processors, it would need at least eight. Because Windows Server 2016 is licensed per physical core rather than per socket, with a minimum of 8 core licenses per processor and 16 per server, fewer processors with more cores each is usually the more economical choice. Processor density is the practice of packing as many cores as possible into as few sockets as possible.
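The core and socket arithmetic above can be captured in a small hypothetical helper. It assumes one core is reserved for the host, which is how the 15-core figure is reached, and that all guests need the same number of cores.

```powershell
# Hypothetical helper: cores = guests x cores per guest + host reserve,
# sockets = cores needed divided by cores per processor, rounded up
function Get-HostProcessorPlan {
    param(
        [Parameter(Mandatory)] [int]$GuestCount,
        [Parameter(Mandatory)] [int]$CoresPerGuest,
        [Parameter(Mandatory)] [int]$CoresPerSocket,
        [int]$HostReserveCores = 1
    )
    $cores = $GuestCount * $CoresPerGuest + $HostReserveCores
    [pscustomobject]@{
        CoresNeeded = $cores
        Sockets     = [int][math]::Ceiling($cores / $CoresPerSocket)
    }
}

Get-HostProcessorPlan -GuestCount 7 -CoresPerGuest 2 -CoresPerSocket 4   # 15 cores, 4 sockets
Get-HostProcessorPlan -GuestCount 7 -CoresPerGuest 2 -CoresPerSocket 2   # 15 cores, 8 sockets
```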

With Windows Server 2016 virtualization, each host server can have up to 512 logical processors, so processing capacity can be distributed either equally or as necessary to meet the performance demands of the organization. By sharing cores among several virtual machines that have low processing needs, an organization can more fully utilize its investment in hardware.

Disk Storage for the Host Server

A host server typically runs the base Windows Server 2016 operating system, and the guest sessions’ virtual disks either share the same disk as the host or are stored on a SAN or another form of external storage.

Each Windows Server 2016 guest session takes up at least 32GB of disk space, the minimum that Windows Server 2016 requires for its system partition. For guest sessions running databases or other storage-intensive configurations, the guest image can grow well beyond that. When planning disk storage for the virtual server system, plan to have enough disk space to support the host operating system files (the same 32GB minimum, plus space for the pagefile) and then disk space available to support the guest sessions.
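When sizing storage for an existing host, it helps to compare how much space each virtual disk uses today with how large it is allowed to grow. This sketch reports both. It assumes all attached disks are VHD or VHDX files, because pass-through disks have no file path for Get-VHD to read.

```powershell
# Report each VM's virtual disks: current file size on disk vs. maximum size
Get-VM | Get-VMHardDiskDrive | ForEach-Object {
    $vhd = Get-VHD -Path $_.Path
    [pscustomobject]@{
        VM        = $_.VMName
        Path      = $vhd.Path
        Type      = $vhd.VhdType
        FileGB    = [math]::Round($vhd.FileSize / 1GB, 1)
        MaxSizeGB = [math]::Round($vhd.Size / 1GB, 1)
    }
} | Format-Table -AutoSize
```

Dynamically expanding disks can grow up to MaxSizeGB, so plan capacity against that figure rather than the current file size.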

Running Other Services on the Hyper-V System

On a system running Hyper-V, an organization typically would not run other services on the host system, such as making the host server also a file and print server, a SharePoint server, and so on. A server running virtualization is typically already going to maximize the memory, processor, and disk storage capabilities of the system. So, instead of impacting the performance of all the guest sessions by running a system-intensive application like SharePoint on the host system, organizations choose to dedicate servers running virtualization solely to the operation of virtualized guest sessions.

Of course, exceptions apply to this general recommendation. If a system will be used for demonstration purposes, frequently the host system is set up to run Active Directory Domain Services, DNS, DHCP, and other domain utility services. So, effectively, the host server is the Active Directory system. Then, the guest sessions are created to run things like Microsoft Exchange, SharePoint, or other applications in the guest sessions that connect back to the host for directory services.

Other organizations might choose to not make the host system the Active Directory server, but instead put the global catalog functions in yet another guest session and keep the host server dedicated to virtualization.

Planning for the Use of Snapshots on the Hyper-V System

A technology built in to Hyper-V is the concept of a snapshot. A snapshot uses the Microsoft Volume Shadow Copy Service (VSS) to make a duplicate copy of a file; however, in the case of virtualization, the file is the virtual server guest virtual disk.

The first time a snapshot is taken, the snapshot contains a compressed copy of the contents of RAM on the system along with a bitmap of the virtual disk image of the guest session. If the original guest image is 8GB in size, the snapshot will be significantly smaller in size; however, the server storage system still needs to have additional disk space to support both the original disk image, plus the amount of disk space needed for the contents of the snapshot image.

Subsequent snapshots can be taken of the same guest session. Because of the way VSS works, each additional snapshot identifies only the bits that differ from the original snapshot, so the disk space needed for each additional snapshot is just the incremental difference between the original snapshot and the current one. This difference might be just megabytes in size.

The use of snapshots in a Windows virtualization environment is covered in more detail later in this chapter in the section “Using Snapshots of Guest Operating System Sessions.”

Installing the Microsoft Hyper-V Role

With the basic concepts of Windows virtualization covered so far in this chapter, and the background on sizing and planning for server capacity and storage, this section now focuses on the installation of the Microsoft Hyper-V Server role on a Windows Server 2016 system.

Installing Windows Server 2016 as the Host Operating System

The first step is to install Windows Server 2016 with Hyper-V as the host operating system. The step-by-step guidance to install the Windows operating system is covered in Chapter 3, “Installing Windows Server 2016 and Server Core.” Typically, the installation of a Windows Server 2016 to run the Hyper-V role is a new clean server installation, so the “Installing a Clean Version of Windows Server 2016 Operating System” section in Chapter 3 is the section to follow to set up Windows Server 2016 for virtualization.

Running Server Manager to Add the Hyper-V Role

After the base image of Windows Server 2016 has been installed, some basic initial tasks should be completed, as noted in Chapter 3. The basic tasks are as follows:

1. Rename the server to the name you want the virtual host server to have.

2. Configure the server to have a static IP address.

3. Join the server to an Active Directory domain (assuming the server will be part of a managed Active Directory environment with centralized administration).

4. Run Windows Update to confirm that all patches and updates have been installed and applied to the server.
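On a server being built repeatedly, the first three tasks can be scripted. This is a minimal sketch in which the adapter alias, IP addresses, domain, and server name are all placeholders. Step 4 is not covered because Windows Server 2016 has no built-in Windows Update cmdlet; use Settings or sconfig for it.

```powershell
# Step 2: static IP address and DNS server
New-NetIPAddress -InterfaceAlias "Ethernet" -IPAddress 192.168.1.20 -PrefixLength 24 -DefaultGateway 192.168.1.1
Set-DnsClientServerAddress -InterfaceAlias "Ethernet" -ServerAddresses 192.168.1.10

# Steps 1 and 3: rename the server and join the domain in one operation, then restart
Add-Computer -DomainName "contoso.com" -NewName "HV01" -Credential (Get-Credential) -Restart
```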

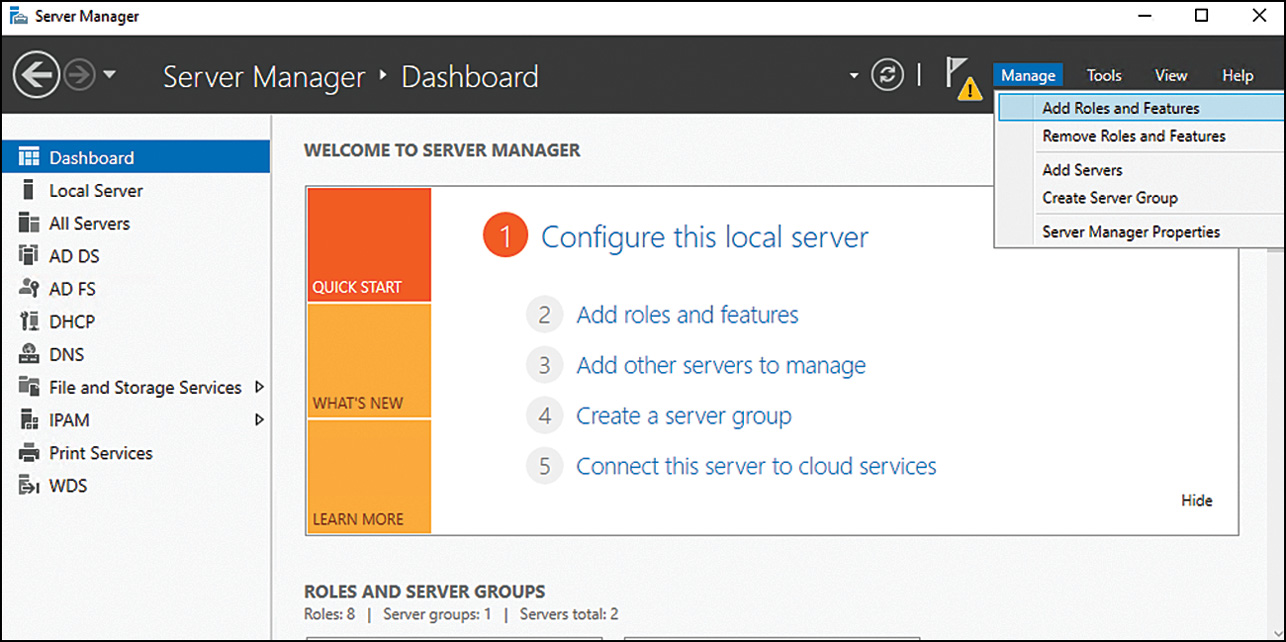

After these basic tasks have been completed, the next step is to add the Hyper-V role to the server system. Do the following to add the server role to the system:

1. Make sure you are logged on to the server with local administrator or domain admin privileges.

2. Start the Server Manager console if it is not already running on the system.

3. Click Manage in the upper-right side of the console and select Add Roles and Features, as shown in Figure 35.2.

4. After the Add Roles Wizard loads, click Next to continue past the Welcome screen.

5. On the Select Installation Type page, select Role-Based or Feature-Based Installation, and then click Next.

6. On the Select Destination Server page, choose Select a Server from the Server Pool, confirm that the server you are on is highlighted, and then click Next.

7. On the Select Server Roles page, select the Hyper-V role, and then click Next.

NOTE

Hyper-V requires a supported version of hardware-assisted virtualization. Both Intel VT and AMD-V chipsets are supported by Hyper-V. In addition, virtualization must be enabled in the BIOS. Check your server documentation for details on how to enable this setting.

8. When prompted to Add Features that include the Remote Server Administration Tools and Hyper-V Management Tools, click Add Features, and then click Next.

9. On the Select features page, just click Next because you are not adding any new features beyond the Hyper-V role and features.

10. On the Hyper-V page, just click Next.

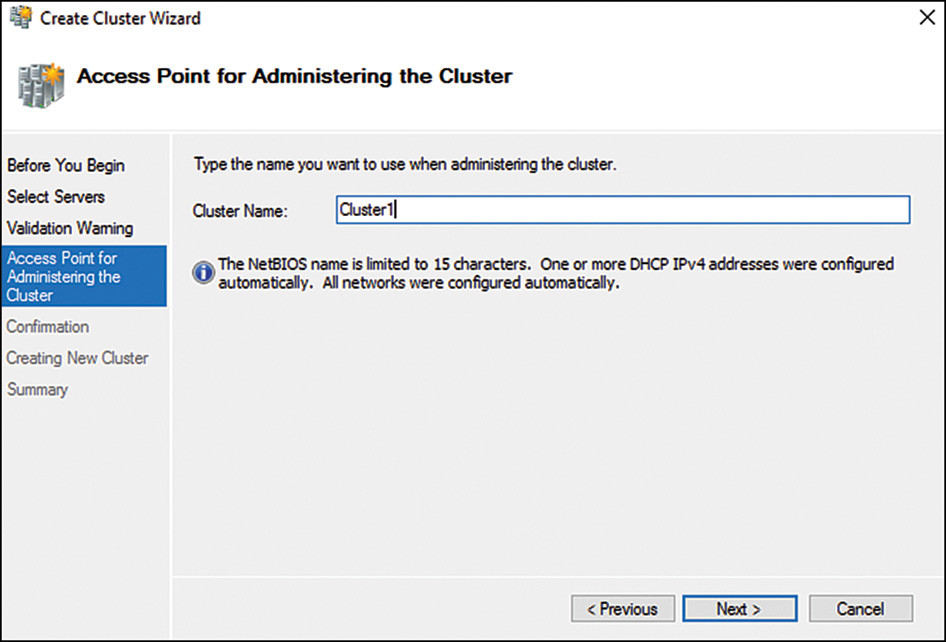

11. On the Create Virtual Switches page, select the LAN adapters you want to have shared with guest sessions. Click Next to continue.

NOTE

It is recommended that you reserve one network adapter for remote access to the host server. To reserve an adapter, do not select it for use by a virtual switch.

12. When prompted about whether you want to Allow This Server to Send and Receive Live Migrations of Virtual Machines, if you plan to use this machine for failover of guest sessions between hosts, check the check box; if not, leave it unchecked, and then click Next.

NOTE

If you choose not to select the send and receive live migration option at this time, it can be configured later from the Hyper-V Manager console (Actions, Hyper-V Settings, Live Migrations).

13. For the default stores, choose where you want the VHDX virtual server files and configuration files for the guest sessions stored by default, and then click Next.

14. On the Confirm Installation Selections page, review the selections made, and then click Install.

NOTE

On the Confirm Installation Selections page, checking the Restart the Destination Server Automatically if Required check box will reboot the server upon completion. This is usually preferred because the server will need to be rebooted; it might as well do it automatically upon completion.

15. After the server restarts, log on to the server with local administrator or domain admin privileges.

16. After logging on, the installation and configuration will continue for a few more moments. When complete, the Installation Results page will be displayed. Review the results on the page and confirm that the Windows Hyper-V role has been installed successfully. Click Close.

NOTE

The server’s network configuration will change when virtual networking is installed. When network adapters are used in virtual networks, the physical network adapter becomes a Microsoft virtual switch and a new virtual network adapter will be created. By default, this virtual network adapter is shared between the host and the guest VMs.
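After the wizard finishes, a quick check from PowerShell confirms what it configured. This read-only sketch uses only Get- cmdlets, so it makes no changes to the host.

```powershell
# Confirm the role and management tools are installed
Get-WindowsFeature -Name Hyper-V, Hyper-V-PowerShell, Hyper-V-Tools

# Review the virtual switches the wizard created (step 11)
Get-VMSwitch | Select-Object Name, SwitchType, NetAdapterInterfaceDescription, AllowManagementOS

# Review the default storage paths (step 13) and the live migration setting (step 12)
Get-VMHost | Select-Object VirtualHardDiskPath, VirtualMachinePath, VirtualMachineMigrationEnabled
```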

Installing the Hyper-V Role Using PowerShell

Another option for installing the Hyper-V Server role is to use PowerShell. PowerShell is convenient because a server can be built with just a handful of commands. This simplifies the installation process and lets organizations build servers more consistently, since the installation commands can simply be copied and pasted or run as a .ps1 PowerShell script.

To install the Hyper-V role using PowerShell, do the following:

1. From Server Manager, click the Tools option in the upper right of the console and choose Windows PowerShell to launch PowerShell.

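2. In PowerShell, type Install-WindowsFeature -Name Hyper-V -IncludeManagementTools and press Enter. Add -Restart to the command to reboot the server automatically when the installation completes.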
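The install can be combined with a prerequisite check and can also target a remote server. In this sketch, HV02 is a placeholder. Get-ComputerInfo ships with PowerShell 5.1 and reports the hardware-virtualization requirements described in the NOTE earlier in this section. Those requirement fields are empty if a hypervisor is already running.

```powershell
# Optional pre-check of virtualization support and firmware settings
Get-ComputerInfo -Property "HyperV*"

# Install the role and tools locally, restarting automatically when done
Install-WindowsFeature -Name Hyper-V -IncludeManagementTools -Restart

# The same command can target a remote server
Install-WindowsFeature -Name Hyper-V -ComputerName HV02 -IncludeManagementTools -Restart
```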

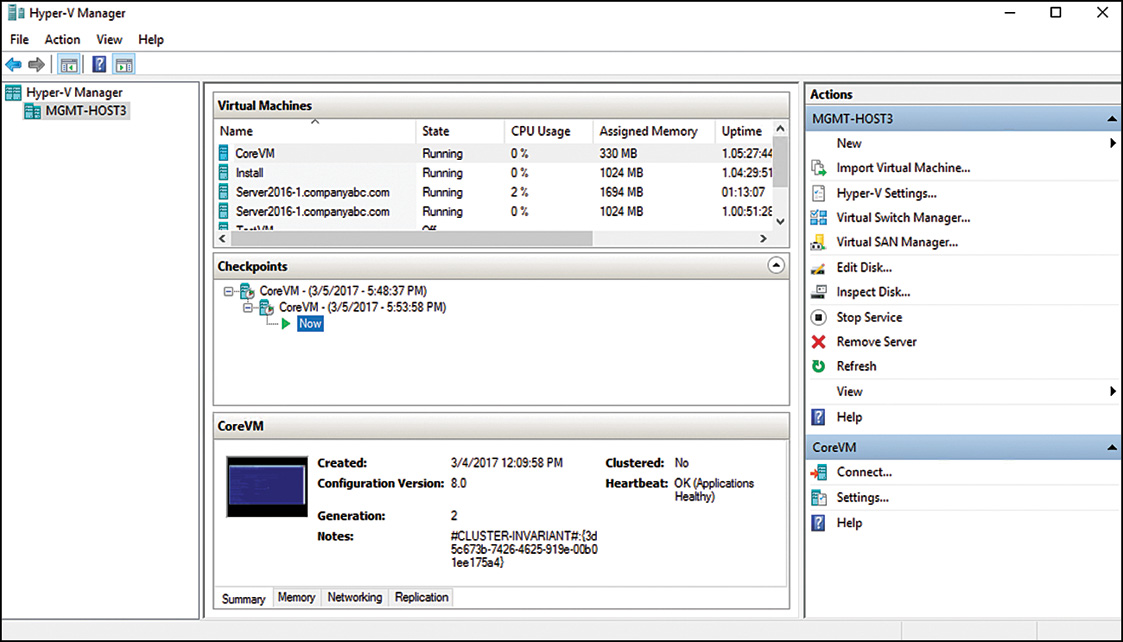

Becoming Familiar with the Hyper-V Administrative Console

After Hyper-V has been installed, the next step is to install guest images that will run on the virtual server. However, before you jump into the installation of guest images, here is a quick guide on navigating the Hyper-V administrative console and the virtual server settings available to be configured that apply to all guest sessions on the server.

Launching the Hyper-V Administrative Console

There are (at least) two ways to open the Hyper-V administrative console and access the server’s configuration options. One way is to use the Server Manager console and launch the Hyper-V Manager from the Tools option, and the other option is to launch the Hyper-V Manager straight from the Administrative Tools of the host server.

NOTE

In earlier versions of Windows Server, Server Manager provided the ability to run administrative functions from within the Server Manager console. With Windows Server 2016, Server Manager allows systems to be centrally viewed and tools to be launched, but the actual administrative console for Hyper-V is the separate Hyper-V Manager tool.

To launch the Hyper-V Manager from within the Server Manager console, follow these steps:

1. Click Tools in the upper-right corner of Server Manager and choose Hyper-V Manager.

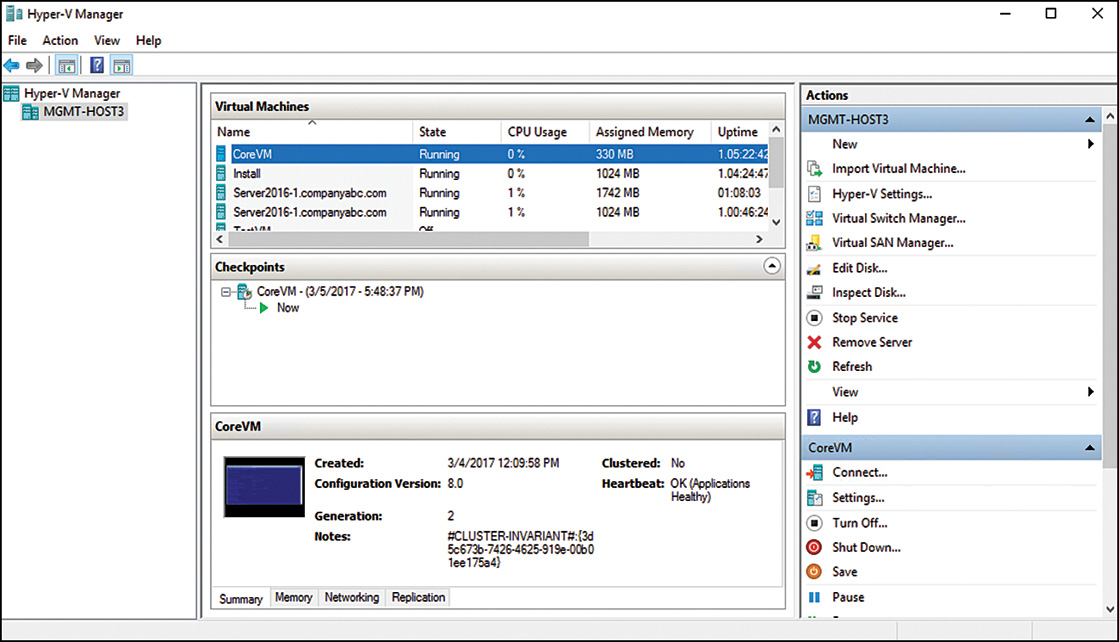

2. Click the name of one of the virtual hosts, and then select one of the virtual machines listed to see details and actions available for the guest system. By default, the Hyper-V Manager will have the local virtual server system listed, as shown in Figure 35.3.

Connecting to a Remote Hyper-V Host

If you want to administer or manage a remote Hyper-V host system, you can connect to that server using the Hyper-V Manager. To connect to a remote virtual server, follow these steps:

1. From within the Hyper-V Manager Console, click the Hyper-V Manager object in the left pane.

2. In the actions pane, click Connect to Server.

3. Select Another Computer and either enter the name of the server and click OK, or click Browse to search Active Directory for the name of the Hyper-V server you want to remotely monitor and administer.

4. When the server appears in the Hyper-V Manager Console, click to select the server to see the actions available for administering and managing that server.
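The Hyper-V cmdlets can reach a remote host directly through their -ComputerName parameter, which is handy for a quick status check without opening the console. HV02 is a placeholder in this sketch.

```powershell
# List the VMs and their state on a remote Hyper-V host
Get-VM -ComputerName HV02 | Select-Object Name, State, CPUUsage, MemoryAssigned, Uptime

# Host-level details for the same server
Get-VMHost -ComputerName HV02 | Select-Object Name, LogicalProcessorCount, MemoryCapacity
```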

Navigating and Configuring Host Server Settings

Once in the Hyper-V Manager, there are host server settings you’d want to set, such as specifying where virtual guest session images are stored, networking configuration settings, and the like. When you click the virtual server system you want to administer, action settings become available. These action settings appear on the right side of the Hyper-V console.

Hyper-V Settings

When you select the Hyper-V Settings action item in the actions pane, you have access to configure default paths and remote control keyboard settings. Specifics on these settings are as follows:

Virtual Hard Disks and Virtual Machines—This option enables you to set the drive path for the location where virtual hard disk files and virtual machine configuration files are stored. This might be on the local C: volume of the server system or could be stored on an external SAN or storage system.

Physical GPUs—This option allows for the enabling of physical graphics processing units (GPUs) that are used for RemoteFX when the Hyper-V host server is used as a Remote Desktop Server (RDS) or for Virtual Desktop Infrastructure (VDI) guest sessions. If you are using the Hyper-V host for remote guest session access for client systems and want to improve video rendering and processing, a GPU video card (frequently used for online gaming) can drastically improve the graphical experience of guest sessions connected to the Hyper-V host.

NUMA Spanning—This option is enabled by default and allows more virtual machines to run at the same time, but NUMA spanning does decrease the performance of the virtual guest sessions. Non-Uniform Memory Access (NUMA) allocates memory per CPU in the system based on the architecture of the system motherboard. If the system motherboard has two CPU sockets, each running a quad-core processor, with eight memory sockets, four memory sockets are often allocated to each CPU, or effectively one memory socket per core. The relationship between CPU cores and memory is defined by the NUMA boundaries. Enabling NUMA spanning allows guest sessions to cross those boundaries, which provides a broader distribution of guest sessions on a host system, although a slight performance degradation occurs as more guest sessions cross the NUMA boundaries during execution.

Live Migrations—Enabling incoming and outgoing live migrations allows the Hyper-V host server to move guest sessions to and from other Hyper-V host servers. Live migrations are covered later in this chapter in the “Live Migrations” section.

Storage Migrations—Storage migrations are the ability to move the VHDs of guest sessions from one host server to another as a method of redundancy. By default, a Hyper-V host can migrate two guest session VHDs at the same time. This number can be increased. Increasing the number impacts disk and LAN performance for other Hyper-V functions during the migration process.

Replication Configuration—Enabling replication allows Hyper-V guest sessions to be replicated between host servers, typically across a wide-area network to a different datacenter site. You can find more on Hyper-V replication in the section “Utilizing Hyper-V Replica for Site-to-Site Redundancy.”

Keyboard—This option specifies where special Windows key combinations (for example, Alt+Tab and the Windows key) are sent. These keys can always be sent to the virtual machine, the host machine, or the virtual machine only when it is running in full screen.

Mouse Release Key—By default, the key combination that releases the guest session so the administrator can gain keyboard control back to the host console is Ctrl+Alt+left arrow. The Remote Control/Release Key option allows for the selection of other key options.

Reset Check Boxes—Selecting to reset this option returns Hyper-V confirmation messages and wizard pages back to default so that pages and messages are not hidden.
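Most of the host-level settings above map to Set-VMHost and related cmdlets. In this sketch, the paths and migration counts are placeholders. A NUMA spanning change takes effect only after the Hyper-V Virtual Machine Management service (vmms) restarts; restarting that service does not stop running VMs.

```powershell
# Default storage locations for virtual hard disks and VM configuration files
Set-VMHost -VirtualHardDiskPath "D:\Hyper-V\Virtual Hard Disks" -VirtualMachinePath "D:\Hyper-V"

# Disable NUMA spanning so each VM stays within one NUMA node
Set-VMHost -NumaSpanningEnabled $false
Restart-Service -Name vmms

# Allow live migrations, and set concurrent live and storage migrations (default is 2 each)
Enable-VMMigration
Set-VMHost -MaximumVirtualMachineMigrations 2 -MaximumStorageMigrations 4
```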

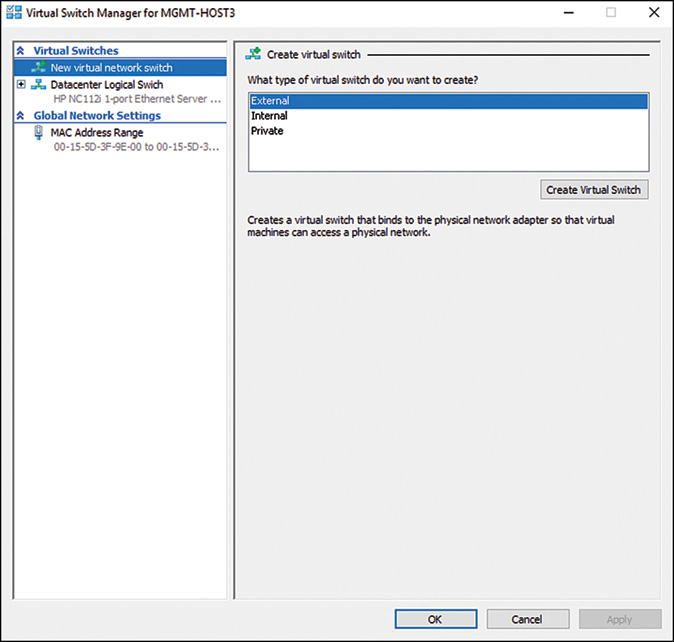

Virtual Switch Manager

Selecting the Virtual Switch Manager action item gives you access to the virtual network switch configuration, as shown in Figure 35.4. This is where you configure the LAN and WAN connections available to the guest sessions on the virtual server host.

Configuration settings include the following:

Create Virtual Switch—This configuration option adds a new external, internal, or private network segment for use by the guest sessions. An external network binds to the physical network so the virtual machines can access the physical network just like any other host on the network. An internal network segment exists solely within the virtual server system; you might use one, for example, to set up a VLAN in which the guest sessions on a system can talk to each other and to the host, but not to the physical network. A private network segment can be used only by the virtual machines running on that host. These virtual machines are completely isolated and cannot even communicate directly with the host server.

NOTE

The Allow Management Operating System to Share This Network Adapter option for external networks, when checked, simplifies communications by sending both the traffic from virtual guest sessions and the Hyper-V management traffic across a single network adapter. Deselecting this option isolates the management operating system from communications between virtual machines and other computers on the physical network, which improves security by separating Hyper-V management from normal Hyper-V communications traffic.

Here, the administrator can also configure a VLAN identification (VLAN ID) for the management operating system. This tags the management operating system's traffic through this virtual switch with the specified VLAN.

Virtual Switches—If the system you are managing already has virtual switches configured, they are listed individually in the left pane of the Virtual Switch Manager dialog box. By selecting an existing virtual switch, you can rename it, change its connection type (external, internal, or private), or remove it altogether.

MAC Address Range—Every virtual network adapter must have a unique Media Access Control (MAC) address to communicate on an Ethernet network. The administrator can define the range of MAC addresses that can be assigned dynamically to these adapters.
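
To make the switch types and the MAC address range concrete, here is a small Python model. `can_communicate` encodes who can reach whom on each switch type as described above (including the effect of clearing the "share this network adapter" option), and `MacPool` hands out unique addresses from an administrator-defined range. This is an illustrative sketch only: the enum, the function and class names, the endpoint labels `"vm"`, `"host"`, and `"physical"`, and the sample range are all hypothetical, and the model ignores VLANs, firewalls, and routing.

```python
from enum import Enum


class SwitchType(Enum):
    EXTERNAL = "external"  # bound to the physical network
    INTERNAL = "internal"  # guests and host only
    PRIVATE = "private"    # guests only


def can_communicate(switch: SwitchType, a: str, b: str,
                    host_shares_adapter: bool = True) -> bool:
    """True if endpoints a and b ('vm', 'host', 'physical') can talk over the switch."""
    reachable = {
        SwitchType.EXTERNAL: {"vm", "host", "physical"},
        SwitchType.INTERNAL: {"vm", "host"},
        SwitchType.PRIVATE: {"vm"},
    }[switch]
    if switch is SwitchType.EXTERNAL and not host_shares_adapter:
        # Clearing "share this network adapter" takes the management OS off the switch.
        reachable = reachable - {"host"}
    return a in reachable and b in reachable


class MacPool:
    """Hands out unique MAC addresses from an inclusive range such as 00-15-5D-01-02-00..FF."""

    def __init__(self, first: str, last: str):
        self._next = self._to_int(first)
        self._last = self._to_int(last)
        if self._next > self._last:
            raise ValueError("range start must not exceed range end")

    @staticmethod
    def _to_int(mac: str) -> int:
        return int(mac.replace("-", ""), 16)

    @staticmethod
    def _to_str(value: int) -> str:
        raw = f"{value:012X}"  # 12 hex digits = 48 bits
        return "-".join(raw[i:i + 2] for i in range(0, 12, 2))

    def allocate(self) -> str:
        if self._next > self._last:
            raise RuntimeError("MAC address range exhausted")
        mac = self._to_str(self._next)
        self._next += 1  # each adapter gets the next unused address
        return mac


if __name__ == "__main__":
    print(can_communicate(SwitchType.INTERNAL, "vm", "physical"))  # False
    pool = MacPool("00-15-5D-01-02-00", "00-15-5D-01-02-FF")
    print(pool.allocate(), pool.allocate())
```

Handing out addresses sequentially from a fixed range is the simplest way to guarantee uniqueness on one host; the practical consequence is that two hosts sharing a LAN should be given non-overlapping ranges.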

Virtual SAN Manager

Hyper-V also supports the concept of a virtual Fibre Channel SAN, a feature first introduced in Windows Server 2012 Hyper-V. A virtual SAN groups physical host bus adapter (HBA) ports together so that virtual Fibre Channel adapters can be added to a virtual machine and connected to a SAN.

Edit Disk

The Edit Disk option enables you to modify an existing virtual hard disk (VHD) image. Specifically, the options are as follows:

Compact—This option reduces the size of a virtual hard disk file by removing the portions of the disk image file that are unused. It is commonly used before a disk image is archived and stored, when the smallest possible disk image file is preferred.

Convert—This option converts a virtual hard disk to VHD format (which supports disks up to 2TB) or to VHDX format (which supports disks up to 64TB). In addition, the converted disk can be set to either a fixed size or a dynamically expanding size.

Expand—This option enables you to grow the size of a dynamic disk image. For example, you might have initially created the disk image with a maximum size of only 8GB, and now that you’ve added many applications to the guest image, you are running out of space in the VHD file. Expanding the image file lets you add more applications and data to the guest session without having to re-create the guest session from scratch.

Merge—This option allows you to merge changes stored in a differencing disk into the parent disk or another disk.

Shrink—This option allows you to reduce the storage capacity of a virtual hard disk.
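
The Edit Disk operations differ mainly in which of two numbers they change: the disk's maximum capacity (what the guest sees) or the size of the image file on the host. The Python model below tracks both for a dynamically expanding disk, using the 8GB scenario from the Expand description. It is a simplified illustration, not how Hyper-V stores data internally; the class and method names are hypothetical, and real Shrink is limited by where data physically sits on the disk, not just by how much is stored. The VHD limit used here is 2,040GB, which is the roughly 2TB figure quoted above.

```python
from dataclasses import dataclass

# Maximum capacities in GB: VHD tops out at 2,040 GB (roughly 2TB), VHDX at 64TB.
FORMAT_LIMIT_GB = {"VHD": 2040, "VHDX": 64 * 1024}


@dataclass
class DynamicDisk:
    """Simplified dynamically expanding disk; all sizes in GB."""
    fmt: str
    max_size: float   # capacity the guest operating system sees
    file_size: float  # space the image file occupies on the host
    used_data: float  # space actually holding guest data

    def write(self, gb: float) -> None:
        # New guest data grows the file, but never past the disk's capacity.
        if self.used_data + gb > self.max_size:
            raise OSError("disk full: expand the disk first")
        self.used_data += gb
        self.file_size = max(self.file_size, self.used_data)

    def delete(self, gb: float) -> None:
        # Deleting data inside the guest does not shrink the file by itself.
        self.used_data = max(0.0, self.used_data - gb)

    def compact(self) -> None:
        # Compact: reclaim unused space in the file; capacity is unchanged.
        self.file_size = self.used_data

    def expand(self, new_max: float) -> None:
        # Expand: raise capacity, within the format's limit.
        if new_max <= self.max_size:
            raise ValueError("new size must be larger than the current size")
        if new_max > FORMAT_LIMIT_GB[self.fmt]:
            raise ValueError(f"{self.fmt} cannot exceed {FORMAT_LIMIT_GB[self.fmt]} GB")
        self.max_size = new_max

    def shrink(self, new_max: float) -> None:
        # Shrink: lower capacity, but (in this model) not below the data in use.
        if new_max < self.used_data:
            raise ValueError("cannot shrink below the space in use")
        self.max_size = new_max
        self.file_size = min(self.file_size, new_max)

    def convert(self, new_fmt: str) -> "DynamicDisk":
        # Convert: copy into the other format if its limit can hold the capacity.
        if self.max_size > FORMAT_LIMIT_GB[new_fmt]:
            raise ValueError(f"{new_fmt} cannot hold a {self.max_size} GB disk")
        return DynamicDisk(new_fmt, self.max_size, self.used_data, self.used_data)


if __name__ == "__main__":
    disk = DynamicDisk("VHD", max_size=8, file_size=1, used_data=1)
    disk.write(6.5)   # nearly full at 7.5 of 8 GB
    disk.expand(40)   # grow capacity instead of rebuilding the guest
    disk.delete(3)
    disk.compact()    # file drops to the 4.5 GB still in use
    print(disk)
```

Keeping capacity and file size separate makes it clear why Compact is the archiving tool while Expand and Shrink change what the guest operating system sees.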

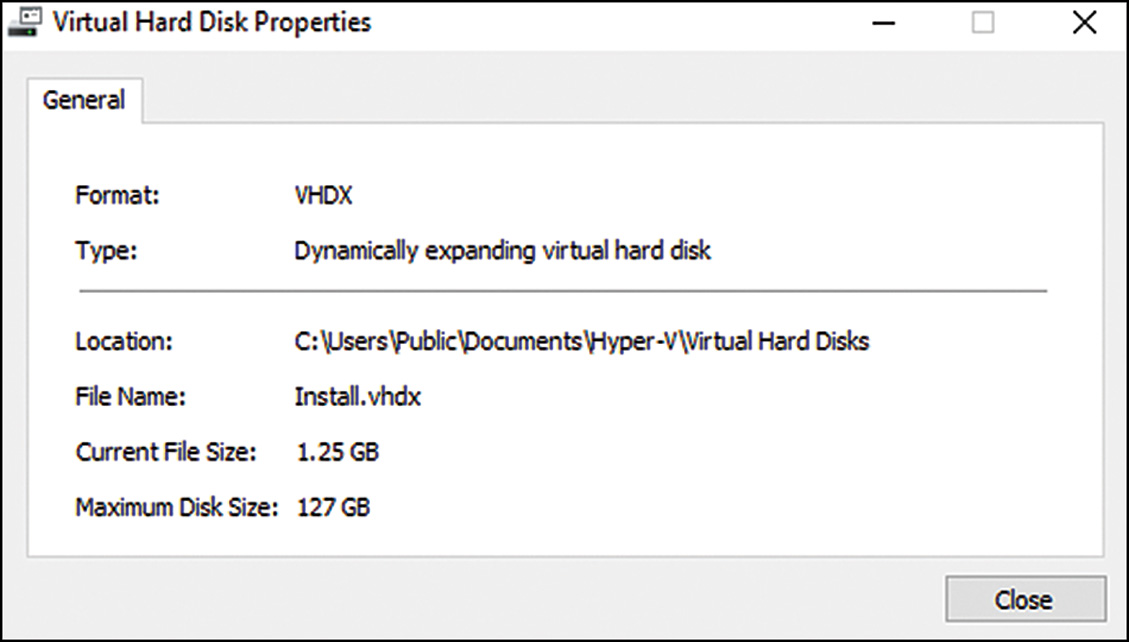

Inspect Disk

The Inspect Disk option in the Hyper-V Manager actions pane enables you to view the settings of an existing virtual disk image file. In the example shown in Figure 35.5, the disk image is currently 4MB in size, can dynamically grow up to a maximum of 127GB, and is located on the local hard drive in the directory C:VMS1.

Stop Service

The Stop Service option in the Hyper-V Manager actions pane stops the Hyper-V Virtual Machine Management service on the Hyper-V host being managed. You might stop the service to perform maintenance or as part of shutting down a managed system.

New Configuration Wizard

One of the options listed in the Hyper-V Manager actions pane (in fact, at the top of the Actions list) is a wizard that allows for the creation of new virtual machines, hard disks, and floppy disks. Configuration options are as follows:

New–Virtual Machine—This option creates a new virtual guest session. Because the whole purpose of running Windows virtualization is to run virtual guest sessions, this is the option that lets you build them.

New–Hard Disk—This option creates a new virtual hard disk (VHD/VHDX) image. Creating a new virtual machine with the first option includes creating a hard disk image for the operating system; however, some servers need additional virtual hard disks. This wizard walks you through the configuration of a new virtual hard disk image.

New–Floppy Disk—This option enables you to take an existing floppy disk and create a virtual floppy disk image from the physical disk. You might use it to image a bootable floppy disk for later use in configuring or managing a guest image, or to image a disk containing drivers or utilities that will be used in a virtual guest session.

Installing a Guest Operating System Session

One of the key tasks noted in the previous section is creating a new guest operating system session. The guest operating system installation is wizard-driven: it enables the administrator to configure settings for the guest session and then begin installing the guest operating system software itself. A guest session could be a server-based session running something like Windows Server 2008 or Windows Server 2016, a client-based session running Windows 8 or Windows 7, or a session running a non-Windows operating system.

Gathering the Components Needed for a Guest Session

When creating a guest operating system, the administrator needs to make sure they have all the components needed to begin the installation. The components needed are as follows:

Operating system media—A copy of the operating system installation media is required for the installation of the guest image. The media could be either a DVD or an ISO image of the media disc itself.

License key—If the operating system installation normally prompts you to enter a license key, have a copy of the license key available.

Other things you should do before starting to install a guest operating system on the virtual server system include the following:

Guest session configuration settings—You will be prompted to answer several core guest session configuration questions, such as how much RAM to allocate to the guest session and how much disk space to allocate to the guest image. Either jump ahead to the next section, “Beginning the Installation of the Guest Session,” to gather the information you will need, or be prepared to answer the questions during the installation process.

Host server readiness—If you are planning your answers in advance, make sure that the host system has enough RAM, disk space, and other resources to support the addition of your guest session to the virtual server system. If your requirements exceed the physical capacity of the server, stop and add more resources (memory, disk space, and so on) to the server before beginning the installation of the guest operating system.
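
The host readiness check in the list above comes down to simple arithmetic: add the new guest's requested RAM and disk to what existing guests already use, and compare the total with the host's physical capacity. The Python sketch below assumes you have gathered these numbers yourself. The `Resources` and `readiness_gaps` names are hypothetical, and so is the idea of a reserve held back for the management operating system; Hyper-V does not report or prescribe these values in this form.

```python
from dataclasses import dataclass


@dataclass
class Resources:
    ram_gb: float
    disk_gb: float


def readiness_gaps(host_total: Resources, committed: Resources,
                   new_guest: Resources, host_reserve: Resources) -> dict:
    """Return the shortfall per resource; an empty dict means the host is ready.

    committed    = resources already allocated to existing guest sessions
    host_reserve = hypothetical amount kept back for the management OS
    """
    gaps = {}
    for field in ("ram_gb", "disk_gb"):
        available = (getattr(host_total, field)
                     - getattr(host_reserve, field)
                     - getattr(committed, field))
        needed = getattr(new_guest, field)
        if needed > available:
            gaps[field] = needed - available  # how much to add before installing
    return gaps


if __name__ == "__main__":
    host = Resources(ram_gb=64, disk_gb=2000)
    existing = Resources(ram_gb=40, disk_gb=900)
    reserve = Resources(ram_gb=4, disk_gb=100)
    guest = Resources(ram_gb=24, disk_gb=200)
    print(readiness_gaps(host, existing, guest, reserve))  # {'ram_gb': 4}
```

In this example only 20GB of RAM is left after the reserve and existing guests, so the 24GB guest is 4GB short. That is the "stop and add more resources" case described above.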

Beginning the Installation of the Guest Session

When you are ready to begin the installation of the guest operating system, launch the New Virtual Machine Wizard as follows:

1. From the actions pane, choose New, Virtual Machine. The New Virtual Machine Wizard will launch.

2. Click Next to continue past the initial Before You Begin screen.

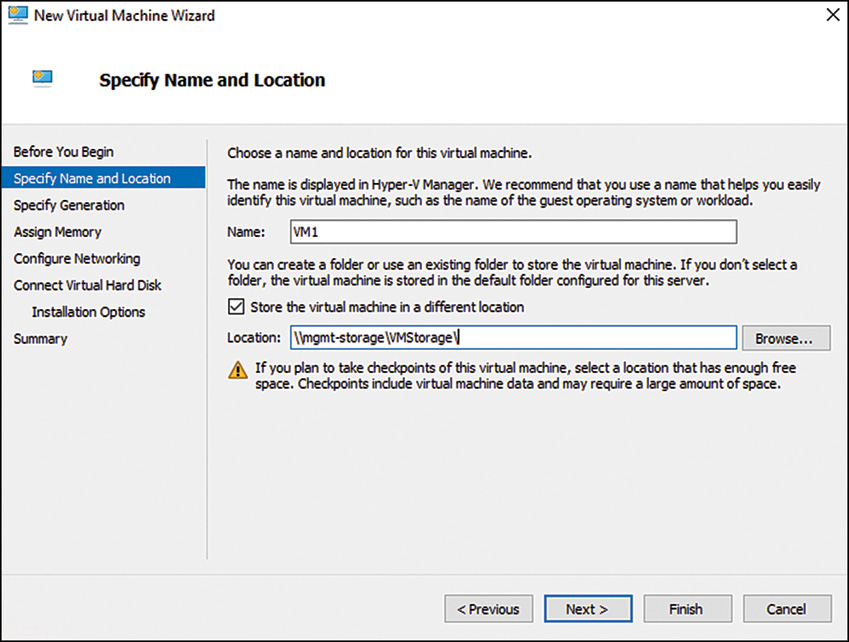

3. Give your virtual machine a name that describes the guest session you are creating, such as AD Global Catalog Server, Exchange 2010 Client Access Server 1, or SharePoint Frontend.

4. If you set a default virtual machine folder location for guest images, the new image for this virtual machine will be placed in a subfolder of that default folder. To store the image files somewhere else, select Store the Virtual Machine in a Different Location, and then click Browse to choose an existing directory or to create a new directory for this guest session’s files. Click Next to continue.