CHAPTER 3

TOWARD A NEW FRAMEWORK FOR INFORMATION SECURITY*

Donn B. Parker, CISSP

3.1 PROPOSAL FOR A NEW INFORMATION SECURITY FRAMEWORK

3.2 SIX ESSENTIAL SECURITY ELEMENTS

3.2.1 Loss Scenario 1: Availability

3.2.2 Loss Scenario 2: Utility

3.2.3 Loss Scenario 3: Integrity

3.2.4 Loss Scenario 4: Authenticity

3.2.5 Loss Scenario 5: Confidentiality

3.2.6 Loss Scenario 6: Possession

3.2.7 Conclusions about the Six Elements

3.3 WHAT THE DICTIONARIES SAY ABOUT THE WORDS WE USE

3.4 COMPREHENSIVE LISTS OF SOURCES AND ACTS CAUSING INFORMATION LOSSES

3.4.1 Complete List of Information Loss Acts

3.4.2 Examples of Acts and Suggested Controls

3.4.3 Physical Information and Systems Losses

3.4.4 Challenge of Complete Lists

3.5 FUNCTIONS OF INFORMATION SECURITY

3.6 SELECTING SAFEGUARDS USING A STANDARD OF DUE DILIGENCE

3.7 THREATS, ASSETS, VULNERABILITIES MODEL

3.1 PROPOSAL FOR A NEW INFORMATION SECURITY FRAMEWORK.

Information security, historically, has been limited by the lack of a comprehensive, complete, and analytically sound framework for analysis and improvement. The classic CIA triad (confidentiality, integrity, availability) persists, yet it is inadequate to describe what security practitioners include and implement when doing their jobs. We need a new information security framework that is complete, correct, and consistent to express, in practical language, the means for information owners to protect their information from any adversaries and vulnerabilities.

The current focus on computer systems security is attributable to the understandable tendency of computer technologists to protect what they know best—the computer and network systems rather than the application of those systems. With a technological hammer in hand, everything looks like a nail. The primary security challenge comes from people misusing or abusing information, and often—but not necessarily—using computers and networks. Yet the individuals who currently dominate the information security folk art are neither criminologists nor computer application specialists.

This chapter presents a comprehensive new information security framework that resolves the problems of the existing models. The chapter demonstrates the need for six security elements—availability, utility, integrity, authenticity, confidentiality, and possession—to replace incomplete CIA security (which does not even seem to include security for information that is not confidential) in the new security framework. This new framework is used to list all aspects of security at a basic level. The framework is also presented in another form, the Threats, Assets, Vulnerabilities Model, which includes detailed descriptors for each topic in the model. This model supports the new security framework, demonstrating its contribution to advance information security from its current technological stage, and as a folk art, into the basis for an engineering and business art in cyberspace.

The new security framework model incorporates six essential parts:

- Security elements of information to be preserved are:
  - Availability
  - Utility
  - Integrity
  - Authenticity
  - Confidentiality
  - Possession

- Sources of loss of these security elements of information:
  - Abusers and misusers
  - Accidental occurrences
  - Natural physical forces

- Acts that cause loss:
  - Destruction
  - Interference with use
  - Use of false data
  - Modification or replacement
  - Misrepresentations or repudiation
  - Misuse or failure to use
  - Location
  - Disclosure
  - Observation
  - Copying
  - Taking
  - Endangerment

- Safeguard functions to protect information from these acts:
  - Audit
  - Avoidance
  - Deterrence
  - Detection
  - Prevention
  - Mitigation
  - Transference
  - Investigation
  - Sanctions and rewards
  - Recovery

- Methods of safeguard selection:
  - Use due diligence
  - Comply with regulations and standards
  - Enable business
  - Meet special needs

- Objectives to be achieved by information security:
  - Avoid negligence
  - Meet requirements of laws and regulations
  - Engage in successful commerce
  - Engage in ethical conduct
  - Protect privacy
  - Minimize impact of security on performance
  - Advance an orderly and protected society

In summary, this model is based on the goal of meeting owners' needs to protect the desired security elements of their information from sources of loss that engage in harmful acts and events by applying safeguard functions that are selected by accepted methods to achieve desired objectives. The sections of the model are explained next. It is important to note that security risk, return on security investment (ROSI), and net present value (NPV) based on unknown future losses and enemies and their intentions are not identified in this model since they are not measurable and, hence, not manageable.
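The six parts of the model can be held in a small data structure so that a policy can be checked against them. The following is a minimal Python sketch, not part of the model itself: the dictionary keys and the helper name `unaddressed` are assumptions chosen for illustration.

```python
# Illustrative only: the six parts of the framework model as plain data.
# The key names and the helper function are assumptions made for this sketch.
FRAMEWORK = {
    "security_elements": [
        "availability", "utility", "integrity",
        "authenticity", "confidentiality", "possession",
    ],
    "sources_of_loss": [
        "abusers and misusers", "accidental occurrences",
        "natural physical forces",
    ],
    "acts_that_cause_loss": [
        "destruction", "interference with use", "use of false data",
        "modification or replacement", "misrepresentations or repudiation",
        "misuse or failure to use", "location", "disclosure",
        "observation", "copying", "taking", "endangerment",
    ],
    "safeguard_functions": [
        "audit", "avoidance", "deterrence", "detection", "prevention",
        "mitigation", "transference", "investigation",
        "sanctions and rewards", "recovery",
    ],
    "selection_methods": [
        "use due diligence", "comply with regulations and standards",
        "enable business", "meet special needs",
    ],
    "objectives": [
        "avoid negligence", "meet requirements of laws and regulations",
        "engage in successful commerce", "engage in ethical conduct",
        "protect privacy", "minimize impact of security on performance",
        "advance an orderly and protected society",
    ],
}


def unaddressed(part, covered):
    """Return the entries of one framework part that a policy does not cover."""
    covered_set = {c.lower() for c in covered}
    return [item for item in FRAMEWORK[part] if item not in covered_set]


# A policy limited to CIA leaves three of the six elements unaddressed.
cia_only = ["confidentiality", "integrity", "availability"]
print(unaddressed("security_elements", cia_only))
# ['utility', 'authenticity', 'possession']
```

The same helper can be applied to the other parts, for example to see which acts a list of adverse incidents omits.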

3.2 SIX ESSENTIAL SECURITY ELEMENTS.

Six security elements in the proposed framework model are essential to information security. If any one of them is omitted, information security is deficient in protecting information owners. Six scenarios of information losses, all derived from real cases, are used to demonstrate this contention. We show how each scenario involves violation of one, and only one, element of information security. Thus, if we omit that element from information security, we also remove that scenario from the concerns of information security, which would be unacceptable. It is likely that information security professionals will agree that all of these scenarios fall well within the range of the abuse and misuse that we need to protect against.

3.2.1 Loss Scenario 1: Availability.

A rejected contract programmer, intent on sabotage, removed the name of a data file from the file directories in a credit union's computer. Users of the computer and the data file no longer had the file available to them because the computer operating system recognizes the existence of information available for users only if it is named in the file directories. The credit union was shut down for two weeks while another programmer was brought in to find and correct the problem so that the file would be available. The perpetrator was eventually convicted of computer crime.

Except for availability, the other elements of information security—utility, integrity, authenticity, confidentiality, and possession—do not address this loss, and their state does not change in the scenario. The owner of the computer (the credit union) retained possession of the data file. Only the availability of the information was lost, but it is a loss that clearly should have been prevented by information security. Thus, the preservation of availability must be accepted as a purpose of information security.

It is true that good security practice might have prevented the disgruntled programmer from having use of the credit union application system, and credit union management could have monitored his work more carefully. They should not have depended on the technical capabilities and knowledge of only one person, and they should have employed several controls to preserve or restore the availability of data files in the computer, such as by maintaining a backup directory with the names of erased files and pointers to their physical location. The loss might have been prevented, or minimized, through good backup practices, good usage controls for computers and specific data files, use of more than one name to identify and find a file, and the availability of utility programs to search for files by content or to mirror file storage. These safeguards would at least have made the attack more difficult and would have confronted the programmer with the malfeasance of his act.
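The idea of a backup directory that keeps the names of erased files and pointers to their physical location can be sketched in a few lines of Python. This is a hypothetical illustration only: the class `FileDirectory`, its method names, and the sample file name and location are assumptions, and a real operating system directory is far more involved.

```python
# Hypothetical sketch: a file directory that remembers erased names.
class FileDirectory:
    def __init__(self):
        self.entries = {}  # file name -> physical location
        self.erased = {}   # backup directory: erased name -> last known location

    def add(self, name, location):
        self.entries[name] = location

    def remove(self, name):
        # Keep the name and its pointer instead of discarding them.
        self.erased[name] = self.entries.pop(name)

    def restore(self, name):
        # Re-list an erased file so that users can find it again.
        self.entries[name] = self.erased.pop(name)


directory = FileDirectory()
directory.add("member_accounts", "volume 2, block 4096")

directory.remove("member_accounts")             # the act in the scenario
print("member_accounts" in directory.entries)   # False: no longer available

directory.restore("member_accounts")
print(directory.entries["member_accounts"])     # volume 2, block 4096
```

With such a record in place, restoring availability becomes a lookup rather than a two-week search.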

The severity of availability loss can vary considerably. A perpetrator may destroy copies of a data file in a manner that eliminates any chance of recovery. In other situations, the data file may be partially usable, with recovery possible for a moderate cost, or the user may experience inconvenient or delayed use of the file for some period of time, followed by complete recovery.

3.2.2 Loss Scenario 2: Utility.

In this case, an employee routinely encrypted the only copy of valuable information stored in his organization's computer and then accidentally erased the encryption key. The usefulness of the information was lost and could be restored only through difficult cryptanalysis.

Although this scenario can be described as a loss of availability or authenticity of the encryption key, the loss focuses on the usefulness of the information rather than on the key, since the only purpose of the key was to facilitate encryption. The information in this scenario is available, but in a form that is not useful. Its integrity, authenticity, and possession are unaffected, and its confidentiality, unfortunately, is greatly improved.

To preserve utility of information in this case, management should require mandatory backup copies of all critical information and should control the use of powerful protective mechanisms such as cryptography. Management should require security walk-through tests during application development to limit unusable forms of information. It should minimize the adverse effects of security on information use and should control the types of activities that enable unauthorized persons to reduce the usefulness of information.
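A rule such as "no encryption of critical information without a usable backup copy" can be expressed as a simple check before a protective mechanism is applied. The sketch below is a hypothetical illustration in Python: the `Asset` record, its fields, and the function `may_encrypt` are assumptions for this example, and "usable" is taken to mean a copy that does not depend on the same key.

```python
from dataclasses import dataclass


@dataclass
class Asset:
    name: str
    critical: bool
    usable_backup_copies: int = 0  # copies that do not depend on the same key


def may_encrypt(asset: Asset) -> bool:
    """Allow encryption of critical information only if its usefulness
    could still be restored from a backup copy should the key be lost."""
    if not asset.critical:
        return True
    return asset.usable_backup_copies > 0


only_copy = Asset("pricing model", critical=True)
backed_up = Asset("pricing model", critical=True, usable_backup_copies=1)

print(may_encrypt(only_copy))   # False: losing the key would destroy utility
print(may_encrypt(backed_up))   # True
```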

The loss of utility can vary in severity. The worst-case scenario would be the total loss of usefulness of the information, with no possibility of recovery. Less severe cases may involve a partially useful state with the potential for full restoration of usefulness at moderate cost.

3.2.3 Loss Scenario 3: Integrity.

In this scenario, a software distributor purchased a copy (on DVD) of a program for a computer game from an obscure publisher. The distributor made copies of the DVD and removed the name of the publisher from the DVD copies. Then, without informing the publisher or paying any royalties, the distributor sold the DVD copies in a foreign country. Unfortunately, the success of the program sales was not deterred by the lack of an identified publisher on the DVD or in the product promotional materials.

Because the DVD copies of the game did not identify the publisher that created the program, the copies lacked integrity. (“Integrity” means a state of completeness, wholeness, and soundness, or adhering to a code of moral values.) However, the copies did not lack authenticity, since they contained the genuine game program and only lacked the identity of the publisher, which was not necessary for the successful use of the product. Information utility of the DVD was maintained, and confidentiality and availability were not at issue. Possession also was not at issue, since the distributor bought the original DVD. But copyright protection was violated as a consequence of the loss of integrity and unauthorized copying of the otherwise authentic program.

Several controls can be applied to prevent the loss of information integrity, including using and checking sequence numbers, checksums, and/or hash totals to ensure completeness and wholeness for a series of items. Other controls include performing manual and automatic text checks for required presence of records, subprograms, paragraphs, or titles, and testing to detect violations of specified controls.
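The completeness checks named above (sequence numbers, checksums, and hash totals) can be illustrated with a short Python sketch. The record layout, field names, and sample values are assumptions for this example; the sketch also assumes a nonempty batch, and it uses a SHA-256 digest in the role of the checksum.

```python
import hashlib


def missing_sequence_numbers(records):
    """Return sequence numbers absent from a batch expected to be contiguous."""
    seen = {r["seq"] for r in records}
    return [n for n in range(min(seen), max(seen) + 1) if n not in seen]


def hash_total(records, field):
    """Sum a numeric field across the batch, for comparison with the
    total computed independently by the sender."""
    return sum(r[field] for r in records)


def checksum(data: bytes) -> str:
    """Digest of the content; any change to the content changes the digest."""
    return hashlib.sha256(data).hexdigest()


batch = [
    {"seq": 1, "account": 1001},
    {"seq": 2, "account": 1002},
    {"seq": 4, "account": 1004},
]
print(missing_sequence_numbers(batch))  # [3]
print(hash_total(batch, "account"))     # 3007

# Removing the publisher's name changes the digest of the title text.
original = b"Game program, published by Obscure Publisher"
stripped = b"Game program"
print(checksum(original) == checksum(stripped))  # False
```

A hash total has no meaning of its own; it is useful only because the receiver can recompute it and compare it with the value the sender recorded.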

The severity of information integrity loss also varies. Significant parts of the information can be missing or misordered (but still available), with no potential for recovery. Or missing or misordered information can be restored, with delay and at moderate cost. In the least severe cases, an owner can recover small amounts of misordered or mislocated information in a timely manner at low cost.

3.2.4 Loss Scenario 4: Authenticity.

In a variation of the preceding scenario, another software distributor obtained the program (on DVD) for a computer game from an obscure publisher. The distributor changed the name of the publisher on the DVD and in title screens to that of a well-known publisher, then made copies of the DVD. Without informing either publisher, the distributor then proceeded to distribute the DVD copies in a foreign country. In this case, the identity of a popular publisher on the DVDs and in the promotional materials significantly added to the success of the product sales.

Because the distributor misrepresented the publisher of the game, the program did not conform to reality: It was not an authentic game from the well-known publisher. Availability and utility are not at issue in this case. The game had integrity because it identified a publisher and was complete and sound. (Certainly the distributor lacked personal integrity because his acts did not conform to ethical practice, but that is not the subject of the scenario.) The actual publisher did not lose possession of the game, even though copies were deceptively represented as having come from a different publisher. And, although the distributor undoubtedly tried to keep his actions secret from both publishers, confidentiality of the content of the game was not at issue.

What if someone misrepresents your information by claiming that it is his? Violation of CIA does not include this act. A stockbroker in Florida cheated his investors in a Ponzi (pyramid sales) scheme. He stole $50 million by claiming that he used a supersecret computer program on his giant computer to make profits of 60 percent per day by arbitrage, a stock trading method in which the investor takes advantage of a small difference in prices of the same stock in different markets. He showed investors the mainframe computer at a Wall Street brokerage firm and falsely claimed that it and the information stored therein were his, thereby lending believability to his claims of successful trading.

This stockbroker's scheme was certainly a computer crime, but the CIA elements do not address it as such because the CIA definition of integrity does not include misrepresentation of information. “Integrity” means only that information is whole or complete; it does not address the validity of information. Obviously, confidentiality and availability do not cover misrepresentation either. The best way to extend CIA to include misrepresentation is to use the more general term “authenticity.” We can then assign the correct English meaning to the phrase “integrity of information”: wholeness, completeness, and good condition. Dr. Peter Neumann at SRI International is correct when he says that information with integrity means that the information is what you expect it to be. This does not, however, necessarily mean that the information is valid (you may expect it to be invalid). “Authenticity” is the word that means conformance to reality.

A number of controls can be applied to ensure authenticity of information. These include confirming transactions, names, deliveries, and addresses; validating products; checking for out-of-range or incorrect information; and using digital signatures and watermarks to authenticate documents.
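Two of these controls, checking for out-of-range information and authenticating a document, can be sketched with the Python standard library. Note the substitution: the sketch uses an HMAC, which is a shared-key message authentication code, as a stand-in for a digital signature, because true digital signatures require public-key cryptography that the standard library does not provide. The function names, the key, and the range limits are assumptions for illustration.

```python
import hashlib
import hmac


def in_range(value, low, high):
    """Reasonableness check: is the claimed value within plausible limits?"""
    return low <= value <= high


def make_tag(message: bytes, key: bytes) -> str:
    """Compute an HMAC-SHA256 tag over the message (stand-in for a signature)."""
    return hmac.new(key, message, hashlib.sha256).hexdigest()


def is_genuine(message: bytes, key: bytes, claimed_tag: str) -> bool:
    """Verify that the message carries the tag its stated source would produce."""
    return hmac.compare_digest(make_tag(message, key), claimed_tag)


# A claimed daily return of 60 percent fails a range check
# (the limits of -10 and +10 percent are hypothetical).
print(in_range(60.0, -10.0, 10.0))  # False

publisher_key = b"key held by the actual publisher"  # hypothetical key
title = b"Published by Obscure Publisher"
tag = make_tag(title, publisher_key)

print(is_genuine(title, publisher_key, tag))                              # True
print(is_genuine(b"Published by Famous Publisher", publisher_key, tag))   # False
```

The range check addresses conformance to plausible reality; the tag addresses whether the stated source is the real source. Neither control says anything about whether the information is whole, which is the separate concern of integrity.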

The severity of authenticity loss can take several forms, including lack of conformance to reality with no recovery possible; moderately false or deceptive information with delayed recovery at moderate cost; or factually correct information with only annoying discrepancies. If the CIA elements included authenticity, with misrepresentation of information as an important associated threat, Kevin Mitnick (the notorious criminal hacker who used deceit as his principal tool for penetrating security barriers) might have faced a far more difficult challenge in perpetrating his crimes. The computer industry might have understood the need to prove computer operating system updates and Web sites genuine, to avoid misrepresentation with fakes before their customers used them in their computers.

3.2.5 Loss Scenario 5: Confidentiality.

A thief deceptively obtained information from a bank's technical maintenance staff. He used a stolen key to open the maintenance door of an automated teller machine (ATM) and secretly inserted a radio transmitter that he purchased from a Radio Shack store. The radio received signals from the touch-screen display in the ATM that customers use to enter their personal identification numbers (PINs) and to receive account balance information. The radio device broadcast the information to the thief's radio receiver in his nearby car, which recorded the PINs and account balances on tape in a modified videocassette recorder. The thief used the information to loot the customers' accounts from other ATMs. The police and the Federal Bureau of Investigation caught the thief after elaborate detective and surveillance efforts. He was sentenced to 10 years in a federal prison.

The thief violated the secrecy of the customers' PINs and account balances, and he violated their privacy. Availability, utility, integrity, and authenticity were unaffected in this violation of confidentiality. The customers' and the bank's exclusive possession of the PINs and account balance information was lost, but not possession per se because they still held and owned the information. Therefore, this was primarily a case of lost confidentiality.

According to most security experts, confidentiality deals with disclosure, but confidentiality also can be lost by observation, whether that observation is voluntary or involuntary, and whether the information is disclosed or not disclosed. For example, if you leave sensitive information displayed on an unattended computer monitor screen, you have disclosed it and it may or may not lose its confidentiality. If you turn the monitor off, leaving a blank screen, you have not disclosed sensitive information, but if someone turns the monitor on and reads its contents without permission, then confidentiality is lost by observation. We must prevent both disclosure and observation in order to protect confidentiality.

Controls to maintain confidentiality include using cryptography, training employees to resist deceptive social engineering attacks intended to obtain their technical knowledge, and controlling the use of computers and computer devices. Good security also requires that the cost of resources for protection not exceed the value of what may be lost, especially with low incidence. For example, protecting against radio frequency emanations in ATMs (as in this scenario) is probably not advisable, considering the cost of shielding and the paucity of such high-tech attacks.

The severity of loss of confidentiality can vary. The worst-case scenario loss is when a party with the intent and ability to cause harm observes a victim's sensitive information. In this case, unrecoverable damage may result. But information also may be known to several moderately harmful parties, with a moderate loss effect, or be known to one harmless, unauthorized party with short-term recoverable effect.

3.2.6 Loss Scenario 6: Possession.

A gang of burglars aided by a disgruntled, recently fired operations supervisor broke into a computer center and stole tapes and disks containing the company's master files. They also raided the backup facility and stole all backup copies of the files. They then held the materials for ransom in an extortion attempt against the company. The burglary resulted in the company's losing possession of all copies of the master files as well as the media on which they were stored. The company was unable to continue business operations. The police eventually captured the extortionists with help from the company during the ransom payment, and they recovered the stolen materials. The burglars were convicted and served long prison sentences.

Loss of possession occurred in this case. The perpetrators delayed availability, but the company could have retrieved the files at any time by paying the ransom. Alternatively, the company could have re-created the master files from paper documents, but at great cost. Utility, integrity, and authenticity were not issues in this situation. Confidentiality was not violated because the burglars had no reason to read or disclose the files. Loss of ownership and permanent loss of possession would have been accomplished if the perpetrators had never returned the materials or if the company had stopped trying to recover them.

The security model must include protecting the possession of information so as to prevent theft, whether the information is confidential or not. Confidentiality, by definition, deals only with secret information that people may possess. Our increasing use of computers magnifies this difference; huge amounts of information are possessed for automated use and not necessarily held confidentially for only specified people to know. Computer object programs are examples of proprietary but not confidential information: we do not know their contents, yet we possess them by selling, buying, bartering, giving, receiving, and trading until we ultimately control, transport, and use them. We have incorrectly defined possession if we include only the protective efforts for confidential material.

We protect the possession of information by preventing people from unauthorized taking, from making copies, and from holding or controlling it—whether confidentiality is involved or not. The loss of possession of information also includes the loss of control of it, and may allow the new possessor to violate its confidentiality at will. Thus, loss of confidentiality may accompany loss of possession. But we must treat confidentiality and possession separately to determine what actions criminals might take and what controls we need to apply to prevent their actions. Otherwise, we may overlook a particular threat or an effective control. The failure to anticipate a threat and vulnerability is one of the greatest dangers we face in security.

Controls that can protect the possession of information include using copyright laws, implementing physical and logical usage limitations, preserving and examining computer audit logs for evidence of stealing, inventorying tangible and intangible assets, using distinctive colors and labels on media containers, and assigning ownership to enforce accountability of organizational information assets.

The severity of loss of possession varies with the nature of the offense. In a worst-case scenario, a criminal may take information, as well as all copies of it, and there may be no means of recovery—either from the perpetrator or from other sources such as paper documentation. In a less harmful scenario, a criminal might take information for some period of time but leave some opportunity for recovery at a moderate cost. In the least harmful situation, an owner could possess more than one copy of information, leaving open the possibility of recovery from other sources (e.g., backup files) within a reasonable period of time.

3.2.7 Conclusions about the Six Elements.

We need to understand some important differences between integrity and authenticity. For one, integrity deals with the intrinsic condition of information, while authenticity deals with the extrinsic value or meaning relative to external sources and uses. Integrity does not deal with the meaning of the information with respect to external sources, that is, whether the information is timely and not obsolete. Authenticity, in contrast, concerns the question of whether information is genuine or valid and not out of date with respect to its potential use. A user who enters false information into a computer possibly has violated authenticity, but as long as the information remains unchanged, it has integrity. An information security technologist who designs security into computer operating systems is concerned only with application information integrity because the designer cannot know if any user is entering false information. In this case, the security technologist's job is to ensure that both true and false information remain whole and complete. It is the information owner, with guidance from an information security advisor, who has the responsibility of ensuring that the information conforms to reality, in other words, that it has authenticity.

Some types of loss that information security must address require the use of all six elements of the framework model to determine the appropriate security to apply. Each of the six elements can be violated independently of the others, with one important exception: A violation of confidentiality always results in loss of exclusive possession, at the least. Loss of possession, however—even exclusive possession—does not necessarily result in loss of confidentiality.

Other than that exception, the six elements are unique and independent, and often require different security controls. Maintaining the availability of information does not necessarily maintain its utility; information may be available but useless for its intended purpose, and vice versa. Maintaining the integrity of information does not necessarily mean that the information is valid, only that it remains the same or, at least, whole and complete. Information can be invalid and, therefore, without authenticity, yet it may be present and identical to the original version and, thus, have integrity. Finally, who is allowed to view and know information and who possesses it are often two very different matters.

Unfortunately, the written information security policies of many organizations do not acknowledge the need to address many kinds of information loss. This is because their policies are limited to achieving CIA. To define information security completely, the policies must address all six elements presented. Moreover, to eliminate (or at least reduce) security threats adequately, all six elements need to be considered to ensure that nothing is overlooked in applying appropriate controls. These elements are also useful for identifying and anticipating the types of abusive actions that adversaries may take—before such actions are undertaken.

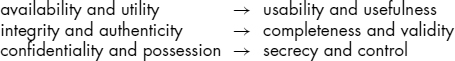

For simplification and ease of reference, we can pair the six elements into three double elements, which should be used to identify threats and select proper controls, and we can associate them with synonyms so as to facilitate recall and understanding:

Availability and utility fit together as the first double element. Controls common to these elements include secure location, appropriate form for secure use, and usability of backup copies. Integrity and authenticity also fit together; one is concerned with internal structure and the other with conformance to external facts or reality. Controls for both include double entry, reasonableness checks, use of sequence numbers and checksums or hash totals, and comparison testing. Control of change applies to both as well. Finally, confidentiality and possession go together because, as discussed, they are interrelated. Commonly applied controls for both include copyright protection, cryptography, digital signatures, escrow, and secure storage.

The order of the elements here is logical, since availability and utility are necessary for integrity and authenticity to have value, and these first four elements are necessary for confidentiality and possession to have material meaning.

3.3 WHAT THE DICTIONARIES SAY ABOUT THE WORDS WE USE.

CIA would be adequate for security purposes if the violation of confidentiality were defined to be anything done with information, if integrity were defined to be anything done to information, and if availability were to include utility, but these definitions would be incorrect and not understood by many people. Information professionals are already defining the term “integrity” incorrectly, and we would not want to make matters worse. These definitions of security and the elements are relevant abstractions from Webster's Third New International Dictionary and Webster's Collegiate Dictionary, 10th edition.

Security—freedom from danger, fear, anxiety, care, uncertainty, doubt; basis for confidence; measures taken to ensure against surprise attack, espionage, observation, sabotage; resistance of a cryptogram to cryptanalysis usually measured by the time and effort needed to solve it.

Availability—present or ready for immediate use.

Utility—useful, fitness for some purpose.

Integrity—unimpaired or unmarred condition; soundness; entire correspondence with an original condition; adherence to a code of moral, artistic or other values; the quality or state of being complete or undivided; material wholeness.

Authenticity—quality of being authoritative, valid, true, real, genuine, worthy of acceptance or belief by reason of conformity to fact and reality.

Confidentiality—quality or state of being private or secret; known only to a limited few, containing information whose unauthorized disclosure could be prejudicial to the national interest.

Possession—act or condition of having or taking into one's control or holding at one's disposal; actual physical control of property by one who holds for himself, as distinguished from custody; something owned or controlled.

We lose credibility and confuse information owners if we do not use words precisely and consistently. When defined correctly, the six words are independent (with the exception that information possession is always violated when confidentiality is violated). They are also consistent, comprehensive, and complete. In other words, the six elements themselves possess integrity and authenticity, and therefore they have great utility. This does not mean that we will not find new elements or replace some of them as our insights develop and technology advances. (I first presented this demonstration of the need for the six elements in 1991 at the fourteenth U.S. National Security Agency/National Institute of Standards and Technology National Computer Security Conference in Baltimore.)

My definitions of the six elements are considerably shorter and simpler than the dictionary definitions, but appropriate for information security.

Availability—usability of information for a purpose.

Utility—usefulness of information for a purpose.

Integrity—completeness, wholeness, and readability of information, and the quality of being unchanged from a previous state.

Authenticity—validity, conformance, and genuineness of information.

Confidentiality—limited observation and disclosure of knowledge.

Possession—holding, controlling, and having the ability to use information.

3.4 COMPREHENSIVE LISTS OF SOURCES AND ACTS CAUSING INFORMATION LOSSES.

The losses that we are concerned about in information security come from people who engage in unauthorized and harmful acts against information, communications, and systems, such as embezzlers, fraudsters, thieves, saboteurs, and criminal hackers. They engage in harmful using, taking, misrepresenting, observing, and every other conceivable form of human misbehavior. Natural physical forces such as air and earth movements, heat and cold, electromagnetic energy, living organisms, gravity and projectiles, and water and gases also are threats to information, as are inadvertent human errors.

Extensive lists of losses found in information security often include fraud, theft, sabotage, and espionage along with disclosure, usage, repudiation, and copying. The first four losses in this list are criminal justice terms at a different level of abstraction from the last four and require an understanding of criminal law, which many information owners and security specialists lack. For example, fraud includes theft only if it is performed using deception, and larceny includes burglary and theft from a victim's premises. What constitutes “premises” in an electronic network environment? This is a legal issue.

Many important types of information-related acts, such as false data entry, failure to perform, replacement, deception, misrepresentation, prolongation of use, delay of use, and even the obvious taking copies of information, are frequently omitted from lists of adverse incidents. Each of these losses may require different prevention and detection controls. This may be easily overlooked if our list of potential acts is incomplete—even though the acts that we typically omit are among the most common reported in actual loss experience. The people who cause losses often are aware that information owners have not provided adequate security and have not considered the full array of possible acts. It is, therefore, essential to include all types of potential harmful acts in our lists, especially when unique safeguards are applicable. Otherwise, we are in danger of being negligent, and those to whom we are accountable will view information security as incomplete or poorly conceived and implemented when a loss does occur.

The complete list of information loss acts in the next section is a comprehensive, nonlegalistic list of potential acts resulting in losses to or with information that I compiled from my 35 years in research about computer crime and security. I have simplified it to a single, low level of abstraction to facilitate understanding by information owners and to enable them to select effective controls. The list makes no distinction among the causes of the losses; as such, it applies equally well to accidental and intentional acts. Cause is largely irrelevant at this level of security analysis, as is the underlying intent or lack thereof. (Identifying cause is important at another level of security analysis. We need to determine the sources and motivation of threats in order to identify appropriate avoidance, deterrence, correction, and recovery controls.) In addition, the list makes no distinction between electronic and physical causes of loss, or among spoken, printed, or electronically recorded information.

The acts in the list are grouped to correspond to the six elements of information security outlined previously (e.g., availability and utility, etc.). Some types of acts in one element grouping may have a related effect in another grouping as well. For example, if no other copies of information exist, destroying the information (under availability) also may cause loss of possession, and taking (under possession) may cause loss of availability. Yet loss of possession and loss of availability are quite different, and may require different controls. I have placed acts in the most obvious categories, where a loss prevention analyst is likely to look first.

Here is an abbreviated version of the complete loss list for convenient use in the information security framework model:

- Destroy

- Interfere with use

- Introduce false data

- Modify or replace

- Misrepresent or repudiate

3.4.1 Complete List of Information Loss Acts

Availability and Utility Losses

- Destruction, damage, or contamination

- Denial, prolongation, acceleration, or delay in use or acquisition

- Movement or misplacement

- Conversion or obscuration

Integrity and Authenticity Losses

- Insertion, use, or production of false or unacceptable data

- Modification, replacement, removal, appending, aggregating, separating, or reordering

- Misrepresentation

- Repudiation (rejecting as untrue)

- Misuse or failure to use as required

Confidentiality and Possession Losses

- Locating

- Disclosing

- Observing, monitoring, and acquiring

- Copying

- Taking or controlling

- Claiming ownership or custodianship

- Inferring

Exposing to All of the Other Losses

- Endangering by exposing to any of the other losses

- Failure to engage in or to allow any of the other losses to occur when instructed to do so
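The grouped list above lends itself to a simple lookup structure that an analyst can use to check coverage. What follows is a minimal sketch, not part of the framework itself: the dictionary keys, the separate "exposure" group for the last two acts, and the function names are all illustrative assumptions.

```python
# Loss-act groups transcribed from the list above. The key names and the
# "exposure" group are illustrative choices made for this sketch.
LOSS_ACTS = {
    "availability_utility": [
        "destruction, damage, or contamination",
        "denial, prolongation, acceleration, or delay in use or acquisition",
        "movement or misplacement",
        "conversion or obscuration",
    ],
    "integrity_authenticity": [
        "insertion, use, or production of false or unacceptable data",
        "modification, replacement, removal, appending, aggregating, "
        "separating, or reordering",
        "misrepresentation",
        "repudiation",
        "misuse or failure to use as required",
    ],
    "confidentiality_possession": [
        "locating",
        "disclosing",
        "observing, monitoring, and acquiring",
        "copying",
        "taking or controlling",
        "claiming ownership or custodianship",
        "inferring",
    ],
    "exposure": [
        "endangering by exposing to any of the other losses",
        "failure to engage in or to allow any of the other losses to occur "
        "when instructed to do so",
    ],
}


def groups_mentioning(keyword: str) -> list[str]:
    """Return the names of the groups whose acts mention the keyword."""
    needle = keyword.lower()
    return [
        group
        for group, acts in LOSS_ACTS.items()
        if any(needle in act for act in acts)
    ]


def unreviewed_acts(reviewed: set[str]) -> list[str]:
    """List every act that a review has not yet considered."""
    return [
        act
        for acts in LOSS_ACTS.values()
        for act in acts
        if act not in reviewed
    ]
```

Calling `unreviewed_acts` with the set of acts already examined gives a quick check that no act on the list has been skipped.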

Users may be unfamiliar with some of the words in the lists of acts, at least in the context of security. For example, “repudiation” is a word that we seldom hear or use outside of the legal or security context. According to dictionaries, it means to refuse to accept acts or information as true, just, or of rightful authority or obligation. Information security technologists became interested in repudiation when the Massachusetts Institute of Technology (MIT) developed a secure network operating system for its internal use. The system was named Kerberos, taking the name of the three-headed dog that guarded the underworld in Greek mythology. Kerberos provides a means of forming secure links and paths between users and the computers serving them. Unfortunately, however, in early versions it allowed users to falsely deny using the links. This did not present any particular problems in the academic environment, but it did make Kerberos inadequate for business, even though its other security aspects were attractive. As the use of Kerberos spread into business, repudiation became an issue, and nonrepudiation controls became important.

Repudiation is an important issue in electronic transactions such as electronic banking, purchases, and auctions. The many people who use these services and Internet commerce to automate their purchasing functions depend on digital signatures, escrow, time stamps, and other authentication controls. I could, for example, falsely claim that I never ordered merchandise and that the order form or electronically transmitted ordering information that the merchant possesses is false. Repudiation is also a growing problem because of the difficulty of proving authorship or the source of electronic missives. The inverse of repudiation (claiming that an act that did not happen actually did happen, or claiming that false information is true) is also important to security, although it is often overlooked. Repudiation and its inverse are both types of misrepresentation, but I include both “repudiation” and “misrepresentation” on the list because they may require different types of controls.

Other words in the list of acts may seem somewhat obscure. For example, we seldom think of prolonging or delaying use as a loss of availability or a denial of use, yet they are losses that are often inflicted by computer virus attacks.

I use the word “locate” in the list rather than “access” because “access” can be confusing with regard to information security. Although it is commonly used in computer terminology, its use frequently causes confusion, as it did in the trial of Robert T. Morris for releasing the Internet worm of November 2, 1988, and in computer crime laws. For example, access may mean just knocking on a door or opening the door but not going in. How far “into” a computer must you go to “access” it? A perpetrator can cause a loss simply by locating information, because the owner may not want to divulge possession of such information. In this case, no access is involved. For these reasons, I prefer to use the terms “entry,” “intrusion,” and “usage,” as well as “locate,” to refer to a computer as the object of the action.

I have a similar problem with using the word “disclosure” while ignoring observation, as I indicated earlier. “Disclose” is a verb that means to divulge, reveal, make known, or report knowledge to others. We can disclose knowledge by:

- Broadcasting

- Speaking

- Displaying

- Showing

- Leaving it in the presence and view of another person

- Leaving it in possible view where another person is likely to be

- Handing or sending it to another person

Disclosure is what an owner or potential victim might do inadvertently or intentionally, not what a perpetrator does, unless it is the second act after stealing, such as selling stolen intellectual property to another person. Disclosure can be an abuse if a person authorized to know information discloses it to an unauthorized person, or if an unauthorized person discloses knowledge to another person without permission. In any case, confidentiality is lost or is potentially lost, and the person disclosing the information may be accused of negligence, violation of privacy, conspiracy, or espionage.

Loss of confidentiality also can occur by observation, whether the victim or owner disclosed knowledge, resisted disclosure, or did nothing either to protect or to disclose it. Observing is an abuse of listening, spying by eavesdropping, shoulder surfing (looking over another person's shoulder or overhearing), looking at or listening to a stolen copy of information, or even by tactile feeling, as in the case of reading Braille. We should think about loss of confidentiality as a loss caused by inadvertent disclosure by the victim, observation by the perpetrator, and disclosure by the perpetrator who passes information to a third party. Disclosure and observation of information that is not knowledge converts it into knowledge if cognition takes place. Disclosure always results in loss of confidentiality by putting information into a state where there is no longer any secrecy, but observation results in loss of confidentiality only if cognition or use to the detriment of the owner takes place. Privacy is a right that is a whole other topic that I do not cover here. (This issue is discussed in Chapter 69.)

Loss of possession of information (including knowledge) is the loss from the unintended or regretful giving or taking of information. At a higher level of crime description, we call it larceny (theft or burglary) or fraud (when deceit is involved). Possession seems to be most closely associated with confidentiality. The two are placed together in the list because they share the common losses of taking and copying (loss of exclusive possession). I could have used “ownership” of information, since it is a synonym for possession, but “ownership” seems to be not as broad, because someone may rightly or wrongly possess information that is rightfully owned by another. The concepts of owner or possessor of information, along with user, provider, or custodian of information, are important distinctions in security for assigning asset accountability. This provides another reason for including possession in the list.

The act of endangerment is quite different from, but applies to, the other losses. It means putting information in harm's way, or that a person has been remiss (and possibly negligent) by not applying sufficient protection to information, such as leaving sensitive or valuable documents in an unlocked office or open trash bin. Leaving a computer unnecessarily connected to the Internet is another example. Endangerment of information may lead to charges of negligence or criminal negligence and civil liability suits that may be more costly than direct loss incidents. My objectives of security in the framework model invoke a standard of due diligence to deal with this exposure.

The last act in the list—failure to engage in or allow any of the other acts when instructed to do so—may seem odd at first glance. It means that an information owner may require an act resulting in any of the other acts to be carried out. Or the owner may wish that an act be allowed to occur, or information to be put into danger of loss. There are occasions when information should be put in harm's way for testing purposes or to accomplish a greater good. For example, computer programmers and auditors often create information files that are purposely invalid for use as input to a computer to make sure that the controls to detect or mitigate a loss are working correctly. A programmer bent on crime might remove invalid data in a test input file to avoid testing a control that the perpetrator has neutralized or has avoided implementing for nefarious purposes. The list would surely be incomplete without this type of loss, yet I have never seen it included or discussed in any other information security text.

The acts in the list are described at the appropriate level for deriving and identifying appropriate security controls. At the next lower level of abstraction (e.g., read, write, and execute), the losses would not be so obvious and would not necessarily suggest important controls. At the level that I choose, there is no attempt to differentiate acts that make no change to information from those that do, since these differences are not important for identifying directly applicable controls or for performing threat analyses. For example, an act of modification changes the information, while an act of observation does not, but encryption is likely to be employed as a powerful primary control against both acts.

3.4.2 Examples of Acts and Suggested Controls.

The next examples illustrate the relationships between acts and controls in threat analysis. Groups of acts are followed by examples of the losses and applicable controls.

3.4.2.1 Destroy, Damage, or Contaminate.

Perpetrators or harmful forces can damage, destroy, or contaminate information by electronically erasing it, writing other data over it, applying high-energy radio waves to damage delicate electronic circuits, or physically damaging the media (e.g., paper, flash memory, or disks) containing it.

Controls include disaster prevention safeguards such as locked facilities, safe storage of backup copies, and write-usage authorization requirements.

3.4.2.2 Deny, Prolong, Delay Use or Acquisition.

Perpetrators can make information unavailable by hiding it or denying its use through encryption and not revealing the means to restore it, or by keeping critical processing units busy with other work, such as in a denial-of-service attack. Such actions would not necessarily destroy the information. Similarly, a perpetrator may prolong information use by making program changes that slow the processing in a computer or by slowing the display of the information on a screen. Such actions might cause unacceptable timing for effective use of the information. Information acquisition may be delayed by requiring too many passwords to retrieve it or by slowing retrieval. These actions can make the information obsolete by the time it becomes available.

Controls include making multiple copies available from different sources, preventing overload of processing by selective allowance of input, or preventing the activation of harmful mechanisms such as computer viruses by using antiviral utilities.
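One way to picture “selective allowance of input” is a token bucket that admits requests only as fast as the system can safely process them. This is a minimal sketch of one such mechanism, chosen here for illustration; the class name, parameters, and numbers are assumptions rather than a control the text prescribes.

```python
class TokenBucket:
    """Admit requests only while tokens remain; tokens refill over time."""

    def __init__(self, capacity: int, refill_per_second: float, now: float = 0.0):
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self.tokens = float(capacity)  # start with a full bucket
        self.last = now                # time of the most recent request

    def allow(self, now: float) -> bool:
        """Return True if a request arriving at time `now` may proceed."""
        # Refill in proportion to elapsed time, never above capacity.
        elapsed = max(0.0, now - self.last)
        self.tokens = min(
            float(self.capacity),
            self.tokens + elapsed * self.refill_per_second,
        )
        self.last = max(self.last, now)
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False  # input refused; the processor is not overloaded
```

The caller supplies the clock value, which keeps the sketch deterministic; a real deployment would more likely read a monotonic system clock.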

3.4.2.3 Enter, Use, or Produce False Data.

Data diddling, my term for false data entry and use, is a common form of computer crime, accounting for much of the financial and inventory fraud. Losses may be either intentional, such as those resulting from the use of Trojan horses (including computer viruses), or unintentional, such as those from input errors.

Most internal controls such as range checks, audit trails, separation of duties, duplicate data entry detection, program proving, and hash totals for data items protect against these threats.
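Three of the controls named above (range checks, duplicate data entry detection, and hash totals) can be sketched in a few lines. The record layout, field names, and limits below are hypothetical, and the hash total is taken in its traditional sense: a sum over a field, such as account numbers, that has no meaning except as a check.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Payment:
    # Hypothetical record layout, for illustration only.
    payment_id: str
    account: int
    amount_cents: int


def check_batch(batch, declared_hash_total, max_amount_cents):
    """Return a list of problems found in a batch of payment records."""
    problems = []
    seen_ids = set()
    for p in batch:
        # Range check: the amount must be positive and within the limit.
        if not 0 < p.amount_cents <= max_amount_cents:
            problems.append(f"{p.payment_id}: amount out of range")
        # Duplicate data entry detection.
        if p.payment_id in seen_ids:
            problems.append(f"{p.payment_id}: duplicate entry")
        seen_ids.add(p.payment_id)
    # Hash total: the sum of account numbers must match the value
    # declared when the batch was prepared.
    actual = sum(p.account for p in batch)
    if actual != declared_hash_total:
        problems.append(
            f"hash total mismatch: declared {declared_hash_total}, computed {actual}"
        )
    return problems
```

An empty result means the batch passed all three checks; it does not mean the data are true, only that these particular controls found nothing.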

3.4.2.4 Modify, Replace, or Reorder.

These acts are often intelligent changes rather than damage or destruction. Reordering, which is actually a form of modification, is included separately because it may require specific controls that could otherwise be overlooked. Similarly, replacement is included because users might not otherwise include the idea of replacing an entire data file when considering modification. Any of these actions can produce a loss inherent in the threats of entering and modifying information, but including all of them covers modifying data both before entry and after entry, since each requires different controls.

Cryptography, digital signatures, usage authorization, and message sequencing are examples of controls to protect against these acts, as are detection controls to identify anomalies.
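Message sequencing and cryptographic integrity checks can be combined so that modification, replacement, and reordering are all detectable. The sketch below uses a keyed hash (HMAC) from the Python standard library in place of a true digital signature, which would need public key cryptography; the function and class names are illustrative assumptions.

```python
import hashlib
import hmac


def seal(key: bytes, seq: int, payload: bytes) -> bytes:
    """Compute a tag that binds the payload to its sequence number."""
    message = seq.to_bytes(8, "big") + payload
    return hmac.new(key, message, hashlib.sha256).digest()


class Receiver:
    """Accept messages only if the tag verifies and the order is intact."""

    def __init__(self, key: bytes):
        self.key = key
        self.expected_seq = 0

    def accept(self, seq: int, payload: bytes, tag: bytes) -> bool:
        # A failed tag check means the payload or sequence number was
        # modified or replaced, or the sender did not hold the key.
        if not hmac.compare_digest(tag, seal(self.key, seq, payload)):
            return False
        # A valid tag with the wrong number means the message was
        # reordered or replayed, or an earlier message is missing.
        if seq != self.expected_seq:
            return False
        self.expected_seq += 1
        return True
```

Because sender and receiver share the same key, this arrangement detects tampering but offers no protection against repudiation; either party could have produced any given tag.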

3.4.2.5 Misrepresent.

The claim that information is something different from what it really is or has a different meaning from what was intended arises in counterfeiting, forgery, fraud, impersonation (of authorized users), and many other deceptive activities. Hackers use misrepresentation in social engineering to deceive people into revealing information needed to attack systems. Misrepresenting old data as new information is another act of this type.

Controls include user and document authentication methods such as passwords, digital signatures, and data validity tests. Making trusted people more resistant to deception by reminders and training is another control.

3.4.2.6 Repudiate.

This type of loss, in which perpetrators generally deny having made transactions, is prevalent in electronic data interchange (EDI) and Internet commerce. Oliver North's denial of the content of his e-mail messages is a notable example of repudiation, but as I mentioned earlier, the inverse of repudiation also represents a potential loss.

Repudiation can be controlled most effectively through the use of digital signatures and public key cryptography. Trusted third parties, such as certificate authorities with secure computer servers, provide the independence of notaries public to resist denial of truthful information as long as they can be held liable for their failures.

3.4.2.7 Misuse or Fail to Use as Required.

Misuse of information is clearly an act resulting in many information losses. Misuse by failure to perform duties such as updating files or backing up information is not so obvious and needs explicit identification. Implicit misuse by conforming exactly to inadequate or incorrect instructions is a sure way to sabotage systems.

Information usage control and internal application controls that constrain the modification or use of trusted software help to avoid these problems. Keeping secure logs of routine activities can help catch operational vulnerabilities.
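One common way to make a log of routine activities harder to alter quietly is to chain each entry to the one before it with a cryptographic hash. The following is a minimal sketch of that idea, assuming an in-memory list of entries; the function names and entry layout are illustrative, not a description of any particular logging product.

```python
import hashlib

GENESIS = "0" * 64  # placeholder digest that precedes the first entry


def _digest(prev: str, message: str) -> str:
    return hashlib.sha256((prev + message).encode("utf-8")).hexdigest()


def append_entry(log: list, message: str) -> None:
    """Append a message whose digest also covers the previous digest."""
    prev = log[-1]["digest"] if log else GENESIS
    log.append({"message": message, "digest": _digest(prev, message)})


def verify_log(log: list) -> bool:
    """Recompute the chain; any edited or removed interior entry breaks it."""
    prev = GENESIS
    for entry in log:
        if entry["digest"] != _digest(prev, entry["message"]):
            return False
        prev = entry["digest"]
    return True
```

The chain alone does not reveal truncation of the most recent entries or a wholesale rewrite by someone who recomputes every digest, so the latest digest would also need to be recorded somewhere the log writer cannot change.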

3.4.2.8 Locate.

Unauthorized use of someone's computer or data network to locate and identify information is a crime under most computer crime statutes—even if there is no overt intention to cause harm. Such usage is a violation of privacy, and trespass to engage in such usage is a crime under other laws.

Log-on and usage controls are major features in many operating systems such as Microsoft Windows and some versions of Unix as well as in add-on security utilities such as RACF and ACF2 for large IBM computers and many security products for personal computers.

3.4.2.9 Disclose.

Preventing information from being revealed to people not authorized to know it is the purpose of business, personal, and government secrecy. Disclosure may be verbal, by mail, or by transferring messages or files electronically or on disks, flash memories, or tape. Disclosure can result in loss of privacy and trade secrets.

Military organizations have advanced protection of information confidentiality to an elaborate art form.

3.4.2.10 Observe or Monitor.

Observation, which requires action on the part of a perpetrator, is the inverse of disclosure, which results from actions of a possessor. Workstation display screens, communication lines, and monitoring devices such as recorders and audit logs are common targets of observation and monitoring. Observation of output from printers is another possible source, as is shoulder surfing—the technique of watching screens of other computer users.

Physical entry protection for input and output devices represents the major control to prevent this type of loss. Preventing wiretapping and eavesdropping is also important.

3.4.2.11 Copy.

Copy machines and the software copy command are the major sources of unauthorized copying. Copying is used to violate exclusive possession and privacy. Copying can destroy authenticity, as when used to counterfeit money or other business instruments.

Location and use controls are effective against copying, as are unique markings such as those used on U.S. currency and watermarks on paper and in computer files.

3.4.2.12 Take.

Transferring data files in computers or networks constitutes taking. So does taking small computers and DVDs or documents for the value of the information stored in them. Perpetrators can easily take copies of information without depriving the owner of possession or confidentiality.

A wide range of physical and logical location controls applies to these losses; most are based on common sense and a reasonable level of due care.

3.4.2.13 Endanger.

Putting information into locations or conditions in which others may cause loss in any of the previously described ways clearly endangers the information, and the perpetrator may be accused of negligence, at least.

Physical and logical means of preventing information from being placed in danger are important. Training people to be careful, and holding them accountable for protecting information, may be the most effective means of preventing endangerment.

3.4.3 Physical Information and Systems Losses.

Information also can suffer from physical losses such as those caused by floods, earthquakes, radiation, and fires. Although these losses may not directly affect the information itself (e.g., knowledge of operating procedures held in the minds of operators), they can damage or destroy the media and the environment that contain representations of the information. Water, for example, can destroy printed pages and damage magnetic disks; physical shaking or radio frequency radiation can short out electronic circuits, and fires can destroy all types of media. Overall, physical loss may occur in seven natural ways by application of:

- Extreme temperature

- Gases

- Liquids

- Living organisms

- Projectiles

- Movement

- Energy anomalies

Each way, of course, comes from specific sources of loss (e.g., smoke or water). And the various ways can be broken down further, to identify the underlying cause of the source of loss. For example, the liquid that destroys information may be water flowing from a plumbing break above the computer workstation, caused in turn by freezing weather. The next list presents examples of each of the seven major sources of physical loss.

- Extreme temperature. Heat or cold. Examples: sunlight, fire, freezing, hot weather, and the breakdown of air-conditioning equipment.

- Gases. War gases, commercial vapors, humid or dry air, suspended particles. Examples: sarin nerve gas, PCBs from exploding transformers, release of Freon from air conditioners, smoke and smog, cleaning fluid, and fuel vapors.

- Liquids. Water, chemicals. Examples: floods, plumbing failures, precipitation, fuel leaks, spilled drinks, acid and base chemicals used for cleaning, and computer printer fluids.

- Living organisms. Viruses, bacteria, fungi, plants, animals, and human beings. Examples: sickness of key workers, molds, contamination from skin oils and hair, contamination and electrical shorting from defecation and release of body fluids, consumption of information media such as paper or of cable insulation, and shorting of microcircuits from cobwebs.

- Projectiles. Tangible objects in motion, powered objects. Examples: meteorites, falling objects, cars and trucks, airplanes, bullets and rockets, explosions, and windborne objects.

- Movement. Collapse, shearing, shaking, vibration, liquefaction, flows, waves, separation, slides. Examples: dropping or shaking fragile equipment, earthquakes, earth slides, lava flows, sea waves, and adhesive failures.

- Energy anomalies. Electric surge or failure, magnetism, static electricity, aging circuitry; radiation, sound, light, radio, microwave, electromagnetic, atomic. Examples: electric utility failures, proximity of magnets and electromagnets, carpet static, electromagnetic pulses (EMP) from nuclear explosions, lasers, loudspeakers, high-energy radio frequency (HERF) guns, radar systems, cosmic radiation, and explosions.

Although meteorites, for example, clearly pose little danger to computers, it is nonetheless important to include all such unlikely events in a thorough analysis of potential threats. In general, include every possible act in a threat analysis. Then consider each one carefully; if it is too unlikely, document the consideration and discard the item. It is better to have thought of a source of loss and to have discarded it than to have overlooked an important one. Invariably, when you present a threat analysis to others, someone will try to surprise the developer with another source of loss that has been overlooked.
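The practice of considering every source and documenting each discard can be captured in a small register. This is a minimal sketch under stated assumptions: the class, its method names, and the rule that a discard requires a written rationale are illustrative choices, and the source names are taken from the list of seven physical sources above.

```python
from dataclasses import dataclass, field

PHYSICAL_SOURCES = [
    "extreme temperature",
    "gases",
    "liquids",
    "living organisms",
    "projectiles",
    "movement",
    "energy anomalies",
]


@dataclass
class ThreatRegister:
    # Maps each considered source to a (decision, rationale) pair.
    decisions: dict = field(default_factory=dict)

    def keep(self, source: str) -> None:
        """Record that a source stays in the analysis."""
        self.decisions[source] = ("kept", "")

    def discard(self, source: str, rationale: str) -> None:
        """Record a discard; refuse it unless the reasoning is written down."""
        if not rationale.strip():
            raise ValueError("a discarded source needs a documented rationale")
        self.decisions[source] = ("discarded", rationale)

    def unconsidered(self) -> list:
        """Sources from the list that nobody has looked at yet."""
        return [s for s in PHYSICAL_SOURCES if s not in self.decisions]
```

An analysis is ready to present only when `unconsidered()` returns an empty list, so that every omission is a recorded decision and not an oversight.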

Insensitive practitioners have ingrained inadequate loss lists in the body of knowledge from the very inception of information security. Proposing a major change at this late date is a bold action that may take significant time to accomplish. However, we must not perpetuate our past inadequacies by using the currently accepted destruction, disclosure, use, and modification (DDUM) as a complete list of losses. We must not underrate or simplify the complexity of our subject at the expense of misleading information owners. Our adversaries are always looking for weaknesses in information security, but our strength lies in anticipating sources of threats and having plans in place to prevent the losses that they may cause.

It is impossible to collect a truly complete list of the sources of information losses that can be caused by the intentional or accidental acts of people. We really have no idea what people may do—now or in the future. We base our lists on experience, but until we can conceive of an act, or until a threat actually surfaces or occurs, we cannot include it on the list. And not knowing the threat means that we cannot devise a plan to protect against it. This is one of the reasons that information security is still a folk art rather than a science.

3.4.4 Challenge of Complete Lists.

I believe that my lists of physical sources of loss and information losses are complete, but I am always interested in expanding them to include new sources of loss that I may have overlooked.

While I was lecturing in Australia, for example, a delegate suggested that I had omitted an important category. His computer center had experienced an invasion of field mice with a taste for electrical insulation. The intruders proceeded to chew through the computer cables, ruining them. Consequently, I had to add rodents to my list of sources. I then heard about an incident in San Francisco in which the entire evening shift of computer operations workers ate together in the company cafeteria to celebrate a birthday. Then they all contracted food poisoning, leaving their company without sufficient operations staff for two weeks. I combined the results of these two events into a category named “Living Organisms.”

3.5 FUNCTIONS OF INFORMATION SECURITY.

The model for information security that I have proposed includes 12 security functions instead of the 3 (prevention, detection, and recovery) included in previous models. These functions describe the activities that information security practitioners and information owners engage in to protect information as well as the objectives of the security controls that they use. Every control serves one or more of these functions.

Although some security specialists add other functions to the list, such as quality assurance and reliability, I consider these to be outside the scope of information security; other specialized fields deal with them. Reliability is difficult to relate to security except as endangerment when perpetrators destroy the reliability of information and systems, which is a violation of security. Thus, security must preserve a state of reliability but need not necessarily attempt to improve it. Security must protect the auditability of information and systems while, at the same time, security itself must be reliable and auditable. I believe that my security definitions include destruction of the reliability and auditability of information at a high level of abstraction. For example, reliability is reduced when the authenticity of information is put into question by changing it from a correct representation of fact.

Similarly, I do not include such functions as authentication of users and verification in my lists, since I consider these to be control objectives to achieve the 12 functions of information security.

There is a definite logic to the order in which I present the 12 functions in my list. A methodical information security practitioner is likely to apply the functions in this order when resolving security vulnerabilities.

- Information security must first be independently audited in an adversarial manner in order to document its state and to identify its weaknesses and strengths.

- The practitioner must determine if a security problem can be avoided altogether.

- If the problem cannot be avoided, the practitioner needs to try to deter potential abusers or forces from misbehaving.

- If the threat cannot be avoided or deterred, the practitioner attempts to detect its activation.

- If detection is not assured, then the practitioner tries to prevent the act from occurring.

- If prevention fails and an act occurs, then the practitioner needs to stop it or minimize its harmful effects through mitigation.

- The practitioner needs to determine if transferring the responsibility to another individual or department might be more effective at resolving the situation resulting from the attack, or if another party (e.g., an insurer) might be held accountable for the cost of the loss.

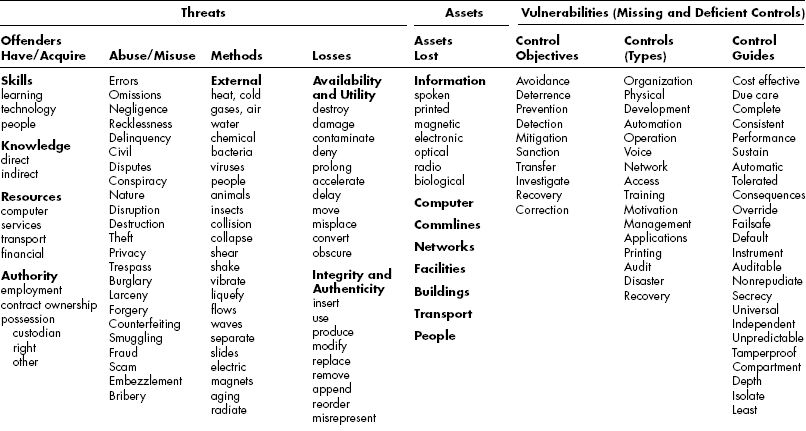

EXHIBIT 3.1 Threats, Assets, and Vulnerabilities Model

Source: Donn B. Parker, Fighting Computer Crime (New York: John Wiley & Sons, 1998).

- After a loss occurs, the practitioner needs to investigate and search for the individual(s) or force(s) that caused or contributed to the incident as well as for any parties that played a role in it—positively or negatively.

- When identified, all parties should be sanctioned or rewarded as appropriate.

- After an incident is concluded, the victim needs to recover or assist with recovery.

- The stakeholders should take corrective actions to prevent the same type of incident from occurring again.

- The stakeholders must learn from the experience in order to advance their knowledge of information security and educate others.

3.6 SELECTING SAFEGUARDS USING A STANDARD OF DUE DILIGENCE.

Information security practitioners usually refer to the process of selecting safeguards as risk assessment, risk analysis, or risk management. Selecting safeguards based on risk calculations can be a fruitless and expensive process. Although many security experts and associations advocate using risk assessment methods, many organizations ultimately find that using a standard of due diligence (or care) is far superior and more practical. Often one sad experience of using security risk assessment is sufficient to convince information security departments and corporate management of its limitations. Security risk is a function of probability or frequency of occurrence of rare loss events and their impact, and neither is sufficiently measurable or predictable for investment in security. Note that risk applies only to rare events. Events such as computer virus attacks or credit card fraud are occurring continuously and are not risks; they are certainties and can be measured, controlled, and managed.
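A little arithmetic shows why such calculations are fragile. The sketch below assumes the common expected-loss form, impact divided by the number of years between events; that formula, the function names, and every figure used with them are illustrative assumptions, not values from any actual assessment.

```python
def expected_annual_loss(impact: float, years_between_events: float) -> float:
    """Expected yearly loss for an event that occurs once per given interval."""
    return impact / years_between_events


def loss_range(impact_low, impact_high, years_rare, years_frequent):
    """Best-case and worst-case expected annual loss for uncertain inputs."""
    best = expected_annual_loss(impact_low, years_rare)
    worst = expected_annual_loss(impact_high, years_frequent)
    return best, worst
```

Suppose, hypothetically, that a rare event is judged to cost somewhere between 500,000 and 5,000,000 and to occur somewhere between once in 100 years and once in 10 years. `loss_range(500_000, 5_000_000, 100, 10)` then spans 5,000 to 500,000 per year, a factor of 100, which is too wide a range to justify any particular safeguard budget.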

The standard of due diligence approach is simple and obvious; it is the default process that I recommend and that is commonly used today instead of more elaborate “scientific” approaches. The standard of due diligence approach is recognized and accepted by many legal documents and organizations and is documented in numerous business guides. The 1996 U.S. federal statute on protecting trade secrets (18 USC § 1831 et seq.), for example, states in § 1839(3)(A) that the owner of information must take “reasonable measures to keep such information secret” for it to be defined as a trade secret. (See Chapter 45.)

3.7 THREATS, ASSETS, VULNERABILITIES MODEL.

Pulling all of the aspects together in one place is a useful way to analyze security threats and vulnerabilities and to create effective scenarios to test real information systems and organizations. The model illustrated in Exhibit 3.1 is designed to help readers do this. Users can outline a scenario or analyze a real case by circling and connecting the appropriate descriptors in each column of the model.

In this version of the model, the Controls column lists only the subject headings of control types; a completed model would contain hundreds of controls. If the model is being used to conduct a review, I suggest that the Vulnerabilities section of the model be renamed to Recommended Controls.

3.8 CONCLUSION.

The security framework proposed in this chapter represents an attempt to overcome the dominant technologist view of information security by focusing more broadly on all aspects of security, including the information that we are attempting to protect, the potential sources of loss, the types of loss, the controls that we can apply to avoid loss, the methods for selecting those controls, and our overall objectives in protecting information. This broad focus should have two beneficial effects: advancing information security from a narrow folk art to a broad-based discipline and—most important—helping to reduce many of the losses associated with information, wherever it exists.

* This chapter is a revised excerpt from Donn B. Parker, Fighting Computer Crime (New York: John Wiley & Sons, 1998), Chapter 10, “A New Framework for Information Security,” pp. 229–255.