CHAPTER 20

SPAM, PHISHING, AND TROJANS: ATTACKS MEANT TO FOOL

Stephen Cobb

20.1 UNWANTED E-MAIL AND OTHER PESTS: A SECURITY ISSUE

20.2 E-MAIL: AN ANATOMY LESSON

20.2.1 Simple Mail Transfer Protocol

20.3.1 Origins and Meaning of Spam (not SPAM™)

20.3.3 Spam's Two-Sided Threat

20.4.1 Enter the Spam Fighters

20.4.4 Black Holes and Block Lists

20.5.2 Growth and Extent of Phishing

20.6.2 Basic Anti-Trojan Tactics

20.6.3 Lockdown and Quarantine

20.1 UNWANTED E-MAIL AND OTHER PESTS: A SECURITY ISSUE.

Three oddly named threats to computer security are addressed in this chapter: spam, phishing, and Trojan code. Spam is unsolicited commercial e-mail. Phishing is the use of deceptive unsolicited e-mail to obtain—to fish electronically for—confidential information. Trojan code, a term derived from the Trojan horse, is software designed to achieve unauthorized access to systems by posing as legitimate applications. In this chapter, we outline the threats posed by spam, phishing, and Trojans as well as the mitigation of those threats.

These threats might have strange names, but they are no strangers to those whose actions undermine the benefits of information technology. Every year, for at least the last three years, the Internal Revenue Service (IRS) has had to warn the public about e-mail scams that take the name of the IRS in vain, attempting to defraud taxpayers of their hard-earned money by aping the agency's look and feel in e-mail messages. The fact that an IRS spokesperson has stated that the agency “never ever uses e-mail to communicate with taxpayers” is a sad comment on our society, for there is no good reason why this should be the case. After all, the agency allows electronic filing of annual tax returns, and every day hundreds of millions of people around the world do their banking and bill paying online, in relative safety. The technology exists to make e-mail safe and secure. In many ways, this chapter is a sad catalog of what has happened because we have not deployed the technology effectively.

20.1.1 Common Elements.

Each of these threats is quite different from the others in some respects, but all three have some important elements in common. First, and most notably, they use deception. These threats prey on the gullibility of computer users and achieve their ends more readily when users are ill-trained and ill-informed (albeit aided and abetted, in some cases, by poor system design and poor management of services, such as broadband connectivity).

Second, all three attacks are enabled by system services that are widely used for legitimate purposes. Although the same might be said of computer viruses—they are code and computers are built to run code—the three threats that are the focus of this chapter typically operate at a higher level, the application layer. Indeed, this fact may contribute to the extent of their deployment: these threats can be carried out with relatively little technical ability, relying more on the skills associated with social engineering than on coding. For example, anyone with an Internet connection and an e-mail program can send spam. That spam can spread a ready-made Trojan. Using a toolkit full of scripts, you can add a Web site with an input form to your portfolio and go phishing for personal data to collect and abuse.

Another reason for considering these three phenomena together is the fact that they often are combined in real-world exploits. The same mass e-mailing techniques used to send spam may be employed to send out messages that spread Trojan code. As described in Chapter 15 in this Handbook, Trojan code may be used to aid phishing operations. Systems compromised by Trojan code may be used for spamming, and so on. What we see in these attacks today is the very harmful and costly result of combining relatively simple strategies and techniques with standards of behavior that range from the foolish and irresponsible to the unabashedly criminal.

These three threats also share the distinction of having been underestimated when they first emerged. All three have evolved and expanded in the twenty-first century, even as some threats—for example, viruses and worms—have stalled. By the end of 2006, it was widely recognized that spam, phishing, and Trojan code could, through a combination of technology abuse and social engineering, potentially defeat even the most sophisticated controls. All three threats, alone or in combination, are capable of imposing enormous and costly burdens on system resources. (In the second half of 2007, some 500,000 new malicious code threats were reported to Symantec, one of the world's largest computer security software producers. Of the top 10 new malicious code families, 5 were Trojans, 2 were worms, 2 were worms with a Trojan component, and 1 was a worm with a virus component.)1

One other factor unites these three threats: their association with the emergence of financial gain as a primary motivator for writing malicious code and abusing Internet connectivity. The hope of making money is the primary driver of spam. Phishing is done to facilitate fraud for gain through theft of personal data and credentials, either for use by the thief or through resale in the underground economy. Trojan code is used to advance the goals of both spammers and perpetrators of phishing scams. In short, all three constitute a very real threat to computer security, the well-being of any computer-using organization, and the online economy.

There is some irony in this, particularly with respect to spam. By 2006, spam was consuming over 90 percent of e-mail resources worldwide.2 This is a staggering level of system abuse by any standard. However, when a handful of security professionals claimed, just five years earlier, that spam was a computer security threat, they were met with considerable skepticism and some suspicion. (This might have been due, in part, to the fact that the world had just experienced the anticlimax of Y2K; also, there was doubtless an element of the recurrent suspicion that security professionals trumpet new threats to drum up business—a strange notion, given the perennial abundance of opportunities for experts in a field that persistently reports near-zero levels of unemployment.)

When phishing attacks started to proliferate a few years later, warnings still were met with some skepticism, but thankfully not as much. It is hoped that, in 2008 and beyond, with spam accounting for over 90 percent of all e-mail on the Internet and phishing attacks constantly evolving to steal targeted data, often executed over systems compromised by Trojan code, security professionals will not deny that these are serious threats.

20.1.2 Chapter Organization.

After a brief e-mail anatomy lesson, each of the three threats addressed by this chapter will be examined in turn. The anatomy lesson is provided because e-mail plays such a central role in these threats, enabling spam and phishing and the spread of Trojan code. Responses to each threat are discussed in relation to the others, along with consideration of broader responses that may be used to mitigate all three.

For an introduction to the general principles of social engineering, see Chapter 19 in this Handbook.

20.2 E-MAIL: AN ANATOMY LESSON.

E-mail plays a role in numerous threats to information and information systems. Not only does it enable spam and phishing, it is also used to spread Trojan code, viruses, and worms. A basic understanding of how e-mail works will help readers understand these threats and the various countermeasures that have been developed.

20.2.1 Simple Mail Transfer Protocol.

All e-mail transmitted across the Internet is sent using an agreed-on industry standard: the Simple Mail Transfer Protocol (SMTP). Any server that “speaks” SMTP is able to act as a Mail Transfer Agent (MTA) and send mail to, and receive mail from, any other server that speaks SMTP. To understand how “simple” SMTP really is, Exhibit 20.1 presents an example of an SMTP transaction.3 The text that follows represents the actual data being sent and received by e-mail servers (as viewed in a telnet session, with the words in capitals, such as HELO and DATA, being the SMTP commands defined in the relevant standards, and the three-digit numbers being the reply codes returned by the receiving server).

EXHIBIT 20.1 Basic E-mail Protocol

Developed at a time when computing resources were relatively expensive, and designed to operate even when a server was processing a dozen or more message connections per second, the SMTP “conversation” was kept very simple in order to be very brief. However, that simplicity is both a blessing and a curse. As you can see from the example, only two pieces of identity information are received before the mail is delivered: the identity of the sending server, in this case example.com, and the “From” address (the envelope sender given with the MAIL FROM command), in this case [email protected]. SMTP has no process for verifying the validity of those identity assertions, so both identifiers can be trivially falsified. The remaining contents of the e-mail, including the subject and other header information, are transmitted in the data block and are not considered a meaningful part of the SMTP conversation. In other words, no SMTP mechanism exists to verify assertions such as “this message is from your bank and concerns your account” or “this message contains the tracking number for your online order” or “here is the investment newsletter that you requested.”
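To make this point concrete, the following Python sketch models the receiving side of a plain SMTP session. It is a toy, not a mail server: it ignores EHLO and other extensions, dot-stuffing, and real error handling, and every host name and address in it is a hypothetical placeholder. What it shows is that the server records the HELO name and the MAIL FROM address exactly as the client states them, and that the From and Subject lines travel inside the DATA block, where SMTP never examines them.

```python
# Toy model of the receiving side of a plain SMTP session. It exists only to
# show which identity claims the server sees and that none of them is checked.
# Simplified: no EHLO, no dot-stuffing, no real error handling.

def receive_smtp(commands: list[str]) -> dict:
    """Process client lines in order and record what the server learns."""
    session = {"helo": None, "mail_from": None, "rcpt_to": [],
               "data": [], "replies": []}
    in_data = False
    for line in commands:
        if in_data:
            if line == ".":                      # a lone dot ends the DATA block
                in_data = False
                session["replies"].append("250 OK: queued")
            else:
                session["data"].append(line)     # headers and body: never inspected
            continue
        verb, _, arg = line.partition(" ")
        verb = verb.upper()
        if verb == "HELO":
            session["helo"] = arg                # taken at face value
            session["replies"].append("250 Hello " + arg)
        elif verb == "MAIL":
            session["mail_from"] = arg.partition(":")[2].strip(" <>")  # unverified
            session["replies"].append("250 OK")
        elif verb == "RCPT":
            session["rcpt_to"].append(arg.partition(":")[2].strip(" <>"))
            session["replies"].append("250 OK")
        elif verb == "DATA":
            in_data = True
            session["replies"].append("354 End data with <CR><LF>.<CR><LF>")
        elif verb == "QUIT":
            session["replies"].append("221 Bye")
        else:
            session["replies"].append("500 Command unrecognized")
    return session

# Hypothetical session: every identity below is asserted by the sender alone.
s = receive_smtp([
    "HELO bank.example.com",
    "MAIL FROM:<security@bank.example.com>",
    "RCPT TO:<customer@example.net>",
    "DATA",
    "From: Your Bank <security@bank.example.com>",
    "Subject: Please confirm your account details",
    "",
    "Click the link below to avoid suspension.",
    ".",
    "QUIT",
])
print(s["helo"], s["mail_from"])  # bank.example.com security@bank.example.com
```

Nothing in the model, as in plain SMTP itself, ties "bank.example.com" to the machine that actually connected; that gap is what the authentication schemes discussed later in the chapter try to close.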

As described in more detail later, some e-mail services do perform whitelist or blacklist look-ups on the Internet Protocol (IP) address of the sending server during the SMTP conversation, but those inquiries can dramatically slow mail processing, requiring extra capacity to offset the loss of efficiency. A whitelist identifies e-mail senders that are trusted; a blacklist identifies those that are not trusted. Maintenance of whitelists can be time-consuming, and blacklists have a long history of inaccuracies and legal disputes. In short, the need for speed creates a system in which there are virtually no technical consequences for misrepresentations in mail delivery. And this is precisely why spammers have been, and continue to be, incredibly effective at getting unwanted e-mail delivered.
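Blacklist look-ups of this kind are commonly done through DNS-based blocklists (DNSBLs). The minimal Python sketch below shows the usual convention: the octets of the connecting server's IPv4 address are reversed and prefixed to the list's zone name, an address record in the answer means the IP is listed, and a failed lookup means it is not. The zone dnsbl.example.org is a hypothetical placeholder; real lists publish their own zone names and usage policies. Each lookup is an extra DNS round trip made while the SMTP connection waits, which is the source of the slowdown described above. The resolver is a parameter so the logic can be exercised without network access.

```python
import ipaddress
import socket
from typing import Callable, Optional

# Hypothetical blocklist zone; real lists publish their own names and policies.
EXAMPLE_ZONE = "dnsbl.example.org"

def dnsbl_query_name(ip: str, zone: str = EXAMPLE_ZONE) -> str:
    """Reverse the IPv4 octets and append the zone: 192.0.2.10 -> 10.2.0.192.<zone>."""
    addr = ipaddress.IPv4Address(ip)             # raises ValueError for bad input
    return ".".join(reversed(str(addr).split("."))) + "." + zone

def is_listed(ip: str, zone: str = EXAMPLE_ZONE,
              resolve: Callable[[str], Optional[str]] = socket.gethostbyname) -> Optional[str]:
    """Return the list's answer (e.g. '127.0.0.2') if the IP is listed, else None.

    Any address record means "listed"; a failed lookup (NXDOMAIN) means
    "not listed". Each call is a DNS round trip made while the SMTP
    connection waits.
    """
    try:
        return resolve(dnsbl_query_name(ip, zone))
    except (socket.gaierror, socket.herror):
        return None
```

What a mail server does with a positive answer (reject the connection, or merely add to a spam score) is a local policy decision, and given the history of inaccurate listings noted above, many operators prefer the softer option.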

SMTP is, as Winston Churchill might have put it, the worst way of “doing” e-mail, except for all the others that have been tried. The reality is that SMTP works reliably and has been widely implemented. To supplant SMTP with anything “better” would mean a wholesale redesign of the entire global e-mail infrastructure, a task that few in the industry have been willing to undertake. Some people have endeavored to develop solutions that can ride atop the existing SMTP infrastructure, allowing SMTP to continue functioning efficiently while giving those who use it the option of engaging more robust features that help differentiate legitimate mail from spam. There is more on these solutions later in the chapter.

20.2.2 Heads-Up.

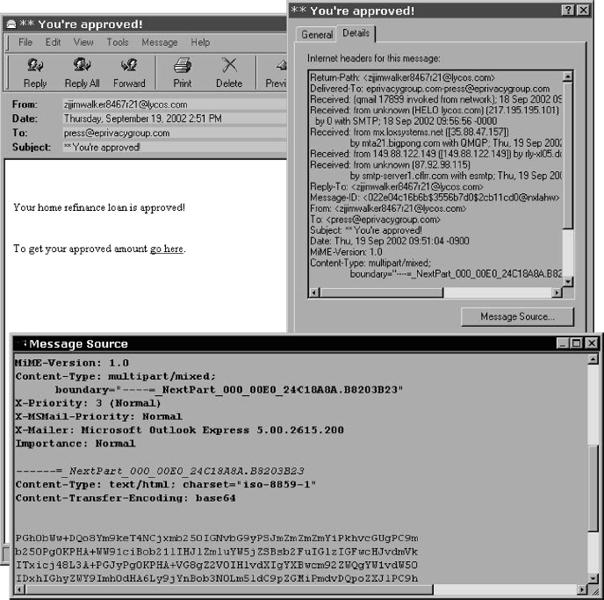

E-mail cannot be delivered without something called a header, and every e-mail message has one. The header is a section of the message that is not always displayed in the recipient's e-mail program but is there nonetheless, describing where the message came from, how it was addressed, and how it was delivered. Examining the header can tell you a lot about a message. Consider how a message appears in Microsoft Outlook Express, as illustrated in Exhibit 20.2.

At the top you can see the “From,” the “To,” and the “Subject.” For example, you can see part of a message that appears to be from [email protected] to [email protected] with the subject “You're approved!” As you may have guessed, [email protected] is not a real person. This is just an address that appears on a company Web site as a contact for the press, and of course, nobody actually used this address on a mortgage application. The address was “harvested” by a program that automatically scours the Web for e-mail addresses.

EXHIBIT 20.2 Viewing Message Header Details in Outlook Express

When you open this message in Outlook Express and use the File/Properties command, you can click on the Details tab to see how the message made its way through the Internet. The first thing you see is a box labeled headers, as shown in Exhibit 20.2. Reading this will tell you that the message was routed through several different e-mail servers. The first instance of “Received” shows Qmail, the company's own mail program. The next three below that are intermediaries, until you get to the last one, smtp-server1.cflrr.com. That is an e-mail server in Central Florida (cfl) on the Road Runner (rr) cable modem network, which supplies high-speed Internet access to tens of thousands of households.
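The same routing information shown on the Details tab can be pulled out programmatically. The Python sketch below uses the standard library's email package to list a message's Received headers. Because each server prepends its own Received line, the raw order is newest first, so the list is reversed to start from the claimed origin. The sample headers are hypothetical. Keep in mind that only the Received lines added by servers you trust are reliable; anything below them was supplied by the sending side and may be forged.

```python
from email import message_from_string

def received_hops(raw: str) -> list[str]:
    """Return Received header values oldest-first, with folded lines joined.

    Each server prepends its own Received line, so the raw order is newest
    first; reversing it gives the path from the (claimed) origin inward.
    """
    msg = message_from_string(raw)
    hops = msg.get_all("Received") or []
    return [" ".join(h.split()) for h in reversed(hops)]

# Hypothetical headers: hosts and dates are invented for illustration.
raw = (
    "Received: from relay2.example.net by mx.example.com; Mon, 3 Mar 2008 10:00:02 -0500\n"
    "Received: from relay1.example.net by relay2.example.net; Mon, 3 Mar 2008 10:00:01 -0500\n"
    "Received: from dsl-host.example.org by relay1.example.net;\n"
    "\tMon, 3 Mar 2008 10:00:00 -0500\n"
    "Subject: You're approved!\n"
    "\n"
    "Body text\n"
)
for n, hop in enumerate(received_hops(raw), 1):
    print(n, hop)
```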

So who sent this message? That is very hard to say. As you might expect, there is no such address as [email protected]. The best way to determine who sent a spam message like this is to examine the content. Spam cannot reel in suckers unless there is some way for the suckers to contact the spammer. Sometimes this is a phone number, but in this message it is a hyperlink to a Web page. However, clicking links in spam messages is a dangerous way to surf; a much safer way to learn more about links in spam is to inspect the message source. Outlook Express provides a Message Source view, but it may show only the encoded content rather than readable ASCII text.

One way to get at the content of such messages is to forward them to a different e-mail client, for example Qualcomm's Eudora, and then open the mailbox file with a text editor such as TextPad. This reveals the “http” reference for the link that this spammer wants the recipient to click. In this case, the sucker who clicks on the link that says “To get your approved amount go here” is presented with a form that is not about mortgages but about data gathering. To what use the data thus gathered will be put is impossible to say, but a little additional checking using the ping and whois commands reveals that the Web server collecting the data is in Beijing. The chances of finding out who set it up are slim. The standard operating procedure is to set up and take down a Web site like this very quickly, gathering as much data as possible before someone starts to investigate. Moreover, over the last 10 years, the resources devoted to investigating spammers have been minimal compared to the resources that have gone into sending spam. Even when lawmakers can agree on what spam is and how to declare it illegal, government funds are rarely allocated for spam fighting. There have been a few high-profile arrests and prosecutions, often driven by large companies such as Microsoft and AOL, but spamming remains a relatively low-risk form of computer abuse.
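As an alternative to forwarding the message to another client and opening the mailbox file in a text editor, a short script can undo the transfer encoding and list the links without rendering the message or following any of them. The sketch below relies only on Python's standard email and re modules. The URL pattern is a deliberately simple assumption that will miss obfuscated links, and the sample message and the documentation address 203.0.113.7 are hypothetical.

```python
import re
from email import message_from_bytes

# Deliberately simple URL pattern (an assumption); obfuscated links will slip past it.
URL_RE = re.compile(r"""https?://[^\s"'<>]+""", re.IGNORECASE)

def extract_links(raw: bytes) -> list[str]:
    """Decode every text part of a raw message and return the URLs it contains.

    Nothing is rendered and no link is followed; get_payload(decode=True)
    undoes base64 or quoted-printable transfer encoding.
    """
    msg = message_from_bytes(raw)
    links: list[str] = []
    for part in msg.walk():
        if part.get_content_maintype() != "text":
            continue                                  # skip multipart containers and non-text parts
        payload = part.get_payload(decode=True)
        if not payload:
            continue
        charset = part.get_content_charset() or "utf-8"
        try:
            text = payload.decode(charset, errors="replace")
        except LookupError:                           # unknown charset name
            text = payload.decode("latin-1")
        for url in URL_RE.findall(text):
            if url not in links:
                links.append(url)
    return links

# Hypothetical quoted-printable spam: "=3D" encodes "=", and the trailing "="
# is a soft line break that splits the URL in the raw source.
raw = (
    b"Subject: You're approved!\n"
    b"MIME-Version: 1.0\n"
    b"Content-Type: text/html; charset=us-ascii\n"
    b"Content-Transfer-Encoding: quoted-printable\n"
    b"\n"
    b'<a href=3D"http://203.0.113.7/ap=\n'
    b'ply.php">To get your approved amount go here</a>\n'
)
print(extract_links(raw))  # ['http://203.0.113.7/apply.php']
```

The soft line break in the sample illustrates why the raw source view can be misleading: the address the spammer wants visited never appears as one unbroken string until the encoding is undone.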

20.3 SPAM DEFINED.

The story of spam is a rags-to-riches saga of a mere nuisance that became a multibillion-dollar burden on the world's computing resources. For all the puns and jokes one can make about spam, it may be the largest single thief of computer and telecommunication resources since computers and telecommunications were invented. One research company predicted that the global cost of spam in 2007 would be $100 billion, compared to $50 billion in 2005, with spam expected to cost $35 billion in the United States in 2007, up from $19 billion two years earlier.

If these numbers seem high, consider them from a different perspective: Take the total amount of money the world spends on e-mail systems and services in one year, divide by 10, then multiply by 9. The result is a rough measure of how much money is wasted on spam, based on the fact that several different sources put the share of all e-mail that is spam at 9 out of 10 messages. Alternatively, consider a snapshot: the amount of spam received by a small publishing company, Dreva Hill, which put up its Web site in 2002. An e-mail address was provided for people to contact the company. The address was not used anywhere else. By 2007, that e-mail address was receiving over 77 spam messages per day. In the first two weeks of March 2007, it received 1,086 messages, of which 1,079 were spam.
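Both ways of sizing the problem reduce to simple arithmetic. In the Python sketch below, the annual e-mail spending figure is a hypothetical input, the Dreva Hill counts come from the text, and "two weeks" is taken as 14 days.

```python
def spam_cost_estimate(annual_email_spend: float, spam_fraction: float = 0.9) -> float:
    """Rough cost of spam: e-mail spending times the share of mail that is spam.

    With the default 0.9 this is the text's "divide by 10, then multiply by 9".
    """
    return annual_email_spend * spam_fraction

# Hypothetical input: an organization spending $200,000 a year on e-mail.
print(f"${spam_cost_estimate(200_000):,.0f} attributable to spam")  # $180,000 attributable to spam

# Dreva Hill snapshot from the text; "two weeks" is taken as 14 days.
received, spam, days = 1_086, 1_079, 14
print(f"{spam / received:.1%} spam, {spam / days:.1f} spam messages per day")
# 99.4% spam, 77.1 spam messages per day
```

The per-day figure agrees with the "over 77 spam messages per day" quoted above, and the single address's spam share is well above the 90 percent industry average.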

Perhaps it is no surprise that spam has become a costly problem because spam was the first large-scale computer threat to be purely profit-driven. The goal of most spam is to make money, and it cannot make money if people do not receive it. In fact, spam software will not send spam to a mail server that has a slow response time; it simply moves on to other targets that can receive e-mail at the high message-per-minute rate needed for spam to generate income (based on 1 person in perhaps 100,000 actually responding to the spam message). If spam does not generate income, it becomes pointless because it takes money to send spam. You need access to servers and bandwidth (which you either have to pay for or steal). Ironically, a few simple changes to current e-mail standards could put an end to most spam by more reliably identifying and authenticating e-mail senders, a subject addressed later in this chapter.
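The economics in this paragraph can be made concrete with a few lines of arithmetic. The Python sketch below assumes the text's rough response rate of 1 in 100,000 and uses 10 million messages a day (the volume attributed to one prolific spammer later in this chapter) as an illustrative input. It also suggests why throughput matters so much: sustaining that volume means delivering well over 100 messages every second, around the clock, so a slow-responding server is simply not worth the spammer's time.

```python
SECONDS_PER_DAY = 86_400

def expected_responses(messages_sent: int, one_in: int = 100_000) -> float:
    """Expected responders if roughly 1 recipient in `one_in` replies."""
    return messages_sent / one_in

def required_rate(messages_per_day: int) -> float:
    """Messages per second needed to sustain a given daily volume."""
    return messages_per_day / SECONDS_PER_DAY

daily = 10_000_000  # illustrative volume, not a measured figure
print(f"{expected_responses(daily):.0f} responses/day at {required_rate(daily):.0f} msg/s")
# 100 responses/day at 116 msg/s
```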

20.3.1 Origins and Meaning of Spam (not SPAM™).

SPAM has been a trademark of Hormel Foods for over 70 years. For reasons that will be discussed in a moment, the world has settled on “spam” as a term to describe unwanted commercial e-mail. However, uppercase “SPAM” is still a trademark, and associating SPAM, or pictures of SPAM, with something other than the Hormel product could be a serious violation of trademark law. Security professionals should take note of this. The word “Spam” is acceptable at the start of a sentence about unsolicited commercial e-mail, but “SPAM” can be used for spam only if the rest of the text around it is uppercase, as in “TIRED OF SPAM CLUTTERING YOUR INBOX?”

The use of the word “spam” in the context of electronic messages stems from a comedy sketch in the twenty-fifth episode of the BBC television series Monty Python's Flying Circus.4 First aired in 1970, before e-mail came into widespread use, the sketch featured a restaurant in which SPAM dominated the menu. When a character called Mr. Bun asks the waitress “Have you got anything without SPAM in it?” she replies: “Well, there's SPAM, egg, sausage and SPAM, that's not got much SPAM in it.” The banter continues in this vein until Mr. Bun's exasperated wife screams, “I don't like SPAM!” She is further exasperated by an incongruous group of Viking diners singing a song, the lyrics of which consist almost entirely of the word “SPAM.” A similar feeling of exasperation at having someone foist on you something that you do not want, and did not ask for, clearly helped to make “spam” a fitting term for the sort of unsolicited commercial e-mail that can clutter inboxes and clog e-mail servers.

In fact, the first use of “spam” as a term for abuse of networks did not involve e-mail. The gory details of the term's history were carefully researched in 2003 by Brad Templeton, former chairman of the board of the Electronic Frontier Foundation.5 According to Templeton, the origins lie in annoying and repetitive behavior observed in multiuser dungeons (also known by the acronym MUD, an early term for a real-time, multiperson, shared environment). From there the term “spam” migrated to bulletin boards, where it was used to describe automated, repetitive message content, and thence to USENET, where it was applied to messages that were posted to multiple newsgroups.

A history of USENET, and its relationship to the history of spam, is salutary for several reasons. First of all, spam pretty much killed USENET, which was once a great way to meet and communicate with other Internet users who shared common interests, whether those happened to be political humor or HTML coding. Newsgroups got clogged with spam to the point where users sought alternative channels of communication. In other words, a valuable and useful means of communication and an early form of social networking was forever tainted by the bad behavior of a few individuals who were prepared to flout the prevailing standards of conduct.

The second spam-USENET connection is that spam migrated from USENET to e-mail through the harvesting of e-mail addresses, a technique that spammers—the people who distribute spam—then applied to Web pages and other potential sources of target addresses. Spammers found that software could easily automate the process of reading thousands of newsgroup postings and extracting, or harvesting, any e-mail addresses that appeared in them. It is important to remember that, although it might seem naive today, the practice of including one's e-mail address in a message posted to a newsgroup was common until the mid-1990s. After all, if you posted a message looking for an answer, then including your e-mail address made it easy for people to reply. It may be hard for some computer users to imagine a time when e-mail addresses were so freely shared, but that time is worth remembering because it shows how easily the abuse of a system by a few can erode its value to the many. Harvesting, which meant that an address provided in a newsgroup posting for legitimate communication soon began receiving spam, was an enormous “advance” in abuse of the Internet and a notable harbinger of things to come. Elaborate countermeasures had to be developed, all of which impeded the free flow of communication. Much of the role once fulfilled by newsgroups migrated to closed forums, where increasingly elaborate measures are used to prevent abuse.

A useful historical perspective on spam's depressing impact on e-mail is provided by the reports freely available at the MessageLabs Web site. Its annual reports provide conservative estimates of the growth in spam; for example, the 2007 annual report6 showed total spam hovering around 85 percent of all e-mails from 2005 through 2007, with new varieties (those previously unidentified by type or source) keeping fairly steady at around 75 percent of all e-mail.
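The address harvesting described earlier in this section needed nothing more sophisticated than pattern matching. The Python sketch below, offered only to illustrate the defensive point, applies a deliberately naive address pattern (an assumption, not any particular harvester's rule) to a hypothetical posting. A plainly written address is captured, while one spelled out with "at" and "dot" is not.

```python
import re

# A deliberately naive address pattern, standing in for a simple harvester.
ADDRESS_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")

def harvestable(text: str) -> list[str]:
    """Return every substring the naive pattern would collect."""
    return ADDRESS_RE.findall(text)

# Hypothetical newsgroup posting with one plain and one obfuscated address.
posting = "Reply to jane.doe@example.com or to john (at) example (dot) org."
print(harvestable(posting))  # ['jane.doe@example.com']
```

Such obfuscation defeats only the crudest harvesters, and it makes life harder for legitimate correspondents too, which is the kind of impediment to the free flow of communication noted above.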

20.3.2 Digging into Spam.

Around 1996, as early adopters of e-mail began experiencing increasing amounts of unsolicited e-mail, efforts were made to define exactly what kind of message constituted spam. This was no academic exercise, and the stakes were surprisingly high. We have already employed one definition: unsolicited commercial e-mail (sometimes referred to as UCE). This definition seems simple enough, capturing as it does two essential points: Spam is e-mail that people did not ask to receive, and spam is somehow commercial in nature.

However, while UCE eventually became the most widely used definition of spam, it did not satisfy everyone. Critics point out that it does not address unsolicited messages of a political or noncommercial nature (such as a politician seeking your vote or a charity seeking donations). Furthermore, the term “unsolicited” is open to a lot of interpretation, a fact exploited in early mass e-mailings by mainstream companies whose marketing departments used the slimmest of pretexts to justify sending people e-mail. As the universe of Internet users expanded in the late 1990s, encompassing more and more office workers and consumers, three things about spam became increasingly obvious:

- People did not like spam.

- Spammers could make money.

- Legitimate companies were tempted to send unsolicited e-mail.

20.3.2.1 “Get Rich Quick” Factor.

How much money could a spammer make? Consider the business plan of one Arizona company, C.P. Direct, that proved very profitable until it was shut down, in 2002, by the U.S. Customs Service and the Arizona Department of Public Safety. Here are some of the assets that authorities seized:

- Nearly $3 million in cash plus a large amount of expensive jewelry.

- More than $20 million in bank accounts.

- Twelve luxury imported automobiles (including eight Mercedes, plus assorted models from Lamborghini, Rolls-Royce, Ferrari, and Bentley).

- One office building and assorted luxury real estate in Paradise Valley and Scottsdale.

These profits were derived from the sale of $74 million worth of pills that promised to increase the dimensions of various parts of the male and female anatomy. The company had used spam to sell these products, but in fact it was not shuttered for sending spam, the legal status of which remains ambiguous to this day, having been defined differently by different jurisdictions (not least of which was the U.S. Congress, which arguably made a hash of it in 2004).

C.P. Direct crossed several lines, not least of which was the making of false promises about its products (none of which it ever tested and all of which turned out to contain the same ingredients, regardless of which part of the human anatomy they were promised to enhance). The company compounded its problems by refusing to issue refunds when the products did not work as claimed. However, rather than discourage spammers, this case proved that spam can make you rich, quick. Indeed, the hope of getting rich quick remains the main driver of spam. The fact that a handful of people were prosecuted for conducting a dubious enterprise by means of spam was not perceived as a serious deterrent.

20.3.2.2 Crime and Punishment.

Spammers keep on spamming because risks are perceived to be small relative to both the potential gains and the other options for getting rich quick. The two people at the center of the C.P. Direct case, Michael Consoli and his nephew and partner, Vincent Passafiume, admitted their guilt in plea agreements signed in August 2003; they were out of jail before May 2004 and seemed to have suffered very little in the shame department. Two years after their release, the pair asked the state Court of Appeals to overturn the convictions and give back whatever was left of the seized assets.

Consider what has happened with Jeremy Jaynes, named as one of the world's top 10 spammers in 2003 by Spamhaus, a spam-fighting organization discussed later in Section 20.4.1 of this chapter. When he was prosecuted for spamming, Jaynes was thought to be sending out 10 million e-mails a day. How much did he earn from this? Prosecutors claimed it was about $750,000 a month. In 2004, Jaynes was convicted of sending unsolicited e-mails with forged headers, in violation of Virginia law. He was sentenced to nine years in prison. However, as of the start of 2008, Jaynes had not served any time, remaining free on bail (set at less than two months' worth of his spam earnings). Apparently he is waiting for his case to reach the U.S. Supreme Court.

20.3.2.3 A Wasteful Game.

In many ways, spam is heir to the classic sucker's game played out in classified advertisements that promise to teach you how to “get cash in your mailbox.” The trick is to get people to send you money to learn how to get cash in their mailbox. If a spammer sends out 25 million e-mail messages touting a product, she may sell enough product to make a profit, but there are real production costs associated with a real product. In contrast, if she sends out enough messages touting a list of 25 million proven e-mail addresses for $79.95, she may reel in enough suckers willing to buy that list and make a significant profit because the list costs essentially nothing to generate. The fact that most addresses on such lists turn out to be useless does not seem to stop people from buying or selling them.

One form of spam that does not rely on selling a product is the pump-and-dump stock scam. Sending out millions of messages telling people how hot an obscure stock is about to become can create a self-fulfilling prophecy. Here is how it works:

- Buy a lot of shares on margin, or simply buy a lot of shares in a company that is trading at a few pennies a share.

- Spam millions of people with a message talking up the stock you just bought.

- Wait for the price of the shares to go up, and then sell your shares for a lot more than you paid for them.

Such a scheme breaks a variety of laws but can prove profitable if the perpetrator is not caught. Although spammers can hide their identity as senders, regulators can still review large buy and sell orders for shares referred to in stock spam.

The underlying reasons for the continuing rise in spam volume can be found in the economics of the medium. Sending out millions of e-mail messages costs the sender very little. An ordinary personal computer (PC) connected to the Internet via a $10-per-month dial-up modem connection can pump out hundreds of thousands of messages a day; a small network of PCs connected via a $50-per-month cable modem or digital subscriber line (DSL) can churn out millions. Obviously, the economic barrier to entry into “get-rich-quick” spam schemes is very low. The risk of running into trouble with the authorities is also very low. The costs of spam are borne downstream, in a number of ways, by several unwilling accomplices:
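To see how low the barrier to entry is, the connection fees above can be turned into a cost per million messages. The Python sketch assumes 200,000 messages a day for the dial-up case (within the text's "hundreds of thousands"), 2 million a day for the cable or DSL network (within "millions"), and a 30-day month. It counts only the connection fee, not hardware, software, or address lists.

```python
def cost_per_million(monthly_fee: float, messages_per_day: int, days: int = 30) -> float:
    """Connection fee attributable to each million messages sent in a month."""
    return monthly_fee / (messages_per_day * days) * 1_000_000

# Assumed volumes: 200,000/day on dial-up, 2,000,000/day on a cable or DSL network.
print(f"dial-up:   ${cost_per_million(10, 200_000):.2f} per million messages")
print(f"cable/DSL: ${cost_per_million(50, 2_000_000):.2f} per million messages")
# dial-up:   $1.67 per million messages
# cable/DSL: $0.83 per million messages
```

At a dollar or two per million messages, even the tiny response rates discussed earlier can leave the sender in profit, while the downstream costs listed next fall on others.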

E-mail Recipient

- Spends time separating junk e-mail from legitimate e-mail. Unlike snail mail, which typically is delivered and sorted once per day, e-mail arrives throughout the day and night. Every time you check it, you face the time-wasting distraction of having to sort out spam.

- Pays to receive e-mail. There are no free Internet connections. When you connect to the Internet, somebody pays. The typical consumer at home pays a flat rate every month, but the level of that rate is determined, in part, by the volume of data that the Internet Service Provider handles, and spam inflates that volume, thus inflating cost.

Enterprise

- Loses productivity because employees, many of whom must check e-mail for business purposes, are spending time weeding spam out of their company e-mail inbox. Companies that allow employees to access personal e-mail at work also pay for time lost to personal spam weeding.

- Wastes resources because spam inflates bandwidth consumption, processing cycles, and storage space.

Internet Service Providers (ISPs) and E-mail Service Providers (ESPs)7

- Waste resources handling spam, which inflates bandwidth consumption, processing cycles, and storage space.

- Have to spend money on spam filtering, block list administration, spam-related customer complaints, and filter/block-related complaints.

- Have to devote resources to policing their users to avoid getting block-listed. (There is more about filters and block lists in the next section.)

Two other economic factors are at work in the rise of spam: hard times and delivery rates. When times are tough, more people are willing to believe that get-rich-quick schemes like spamming are worth trying, so there are more spammers (tough times affect the receiving end as well, with recipients more willing to believe fraudulent promises of lotteries won and easy money to be made). When delivery rates for spam go down, due to the use of spam filters and other techniques that are discussed in Section 20.4.5, spammers compensate by sending even more spam.

20.3.2.4 How Big Is the Spam Problem?

Although most companies and consumers probably will concur that spam has grown from a mere annoyance to a huge burden, some people will say spam is not a big problem. These include:

- Consumers who have not been using e-mail for very long.

- Office users who do not see the spam addressed to them due to some form of antispam device or service deployed by their company.

These perceptions obscure the fact, noted earlier, that spam consumes vast amounts of resources that could be put to better use. The consumer might get cheaper, better Internet service if spam were not consuming so much bandwidth, server capacity, storage, and manpower. In the author's experience working with a regional ISP, growth in spam volume is directly reflected in server costs. The ISP had to keep adding servers to handle e-mail. By the time it got to four servers, 75 percent of all the e-mail was determined to be spam, so the ISP had incurred server costs four times greater than needed to handle legitimate e-mail. Furthermore, even with four servers, spikes in spam volumes were causing servers to crash, incurring the added cost of service calls in the middle of the night, not to mention the loss of subscribers annoyed by outages.
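The ISP example generalizes to a simple formula: if a fraction s of all mail is spam, and capacity scales roughly linearly with message volume (a simplifying assumption), total capacity must be 1/(1 - s) times what legitimate mail alone would need. The Python sketch below applies it to the 75 percent figure above and to the 90 and 94 percent figures cited elsewhere in this chapter.

```python
def capacity_multiplier(spam_fraction: float) -> float:
    """How many times more capacity all mail needs than legitimate mail alone."""
    if not 0 <= spam_fraction < 1:
        raise ValueError("spam_fraction must be in [0, 1)")
    return 1 / (1 - spam_fraction)

for share in (0.75, 0.90, 0.94):
    print(f"{share:.0%} spam -> {capacity_multiplier(share):.1f}x the capacity")
# 75% spam -> 4.0x the capacity
# 90% spam -> 10.0x the capacity
# 94% spam -> 16.7x the capacity
```

The curve steepens sharply near the top: each additional few points of spam share adds far more required capacity than the same increase did at lower levels.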

In August 2002, three different e-mail service providers, Brightmail, Postini, and MessageLabs, predicted that spam was on track to become the majority of message traffic on the Internet by the end of the year. These predictions were met with skepticism in some quarters, discounted as a sales pitch by companies that offered spam-fighting products and services. However, sober analysis indicated that the problem was real.8 Brightmail's interception figures for July 2002 showed that spam made up 36 percent of all e-mail traveling over the Internet, up from 8 percent a year earlier. Postini found that spam made up 33 percent of its customers' e-mail in the same month, up from 21 percent in January. MessageLabs reported that its customers were now classifying from 35 percent to 50 percent of their e-mail traffic as spam. In September 2002, Microsoft let it slip that 80 percent of the e-mail passing through its servers was “junk.”

Since that time, spam statistics produced by companies like Postini (now owned by Google) have been validated as genuine and not just marketing hype. According to Postini, 94 percent of all e-mail in December 2007 was spam. According to MessageLabs, spam volume per user was up 57 percent in 2007 over 2006, which implies that the average unprotected user would have received 36,000 spam messages in 2007—nearly 100 per day, seven days a week—compared with 23,000 spam messages in 2006.
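As a quick consistency check on the MessageLabs numbers, using only the figures quoted above, the growth rate and the daily average can be recomputed:

```python
# Per-user spam counts quoted above (MessageLabs, unprotected user).
spam_2006 = 23_000
spam_2007 = 36_000

# Year-over-year growth as a fraction of the 2006 figure.
growth = spam_2007 / spam_2006 - 1

# Average daily volume if the 2007 total is spread over every day of the year.
per_day = spam_2007 / 365

print(f"growth: {growth:.0%}")    # prints "growth: 57%"
print(f"per day: {per_day:.1f}")  # prints "per day: 98.6"
```

Both results agree with the text: roughly 57 percent growth, and just under 100 messages a day.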

As alluded to earlier, these numbers have a direct effect on infrastructure spending. Storage is one of the biggest hardware and maintenance costs incurred by e-mail. If 80 to 90 percent of all e-mail is unsolicited junk, then companies that process e-mail are spending far more on storage than they would if all e-mail were legitimate. The productivity “hit” for companies whose employees waste time weeding spam out of their inboxes is also enormous. Beyond these costs, consider the possibility that spam actually acts as a brake on Internet growth rates, having a negative effect on economies, such as America's, that derive considerable strength from Internet-related goods and services. Although the Internet appears to grow at a healthy clip, it may be a case of doing so well that it is hard to know how badly we are doing. Perhaps the only reason such an effect has not yet been felt is that spam's impact on new users is limited. As noted earlier, when new users first get e-mail addresses, they typically do not get a lot of spam. According to some studies, it can take 6 to 12 months for spammers to find an e-mail address, but when they do, the volume of spam to that address can increase very quickly. This leads some people to cut back on their use of e-mail and the Internet.

20.3.3 Spam's Two-Sided Threat.

When mail servers slow down, falter, and finally crash under an onslaught of spam, the results include lost messages, service interruptions, and unanticipated help desk and tech support costs. Sales are missed. Customers do not get the service they expect. Keeping spam out of your inbox and your enterprise carries one cost; preventing spam from impacting system availability and business operations carries another. But there is also another side of the spam threat: the temptation to become an abusive mass mailer, otherwise known as a spammer. This is something that spam has in common with competitive intelligence, otherwise known as industrial espionage. When done badly, mass mailings, like competitive intelligence, can tarnish a company's reputation.

Leaving aside the matter of the increasingly nasty payloads delivered with spam, such as Trojans and phishing attacks, even plain old male enhancement spam constitutes a threat to both network infrastructure and productivity. For a start, spam constitutes a theft of network resources. The inflationary effect on server budgets has already been mentioned. The negative impact on bandwidth may be less obvious but is definitely real. In 2002 and 2003, the author was involved in the beta testing of a prototype network-level antispam router. It was not unusual for a company installing this device to discover that spam had been consuming from two-thirds to three-quarters of its network bandwidth. Whether this impact was seen as performance degradation or cost inflation, very few companies were willing to remove this device once it had been installed. In other words, when companies see what their network performance and bandwidth cost is like when spam is taken out of the equation, they realize just what a negative impact spam has. That impact might otherwise be hard to detect, given that spam volume has grown over time.

An even more dramatic illustration of the damage that spam can cause comes when a network is targeted by a really big “spam cannon” (a purpose-built configuration of MTA devices connected to a really big broadband connection; for example, a six-pack of optimized MTAs can fire off 3.6 million messages an hour). The effect can be to crash the receiving mail servers, with all of the attendant cost and risk that involves. One way to prevent this from happening, besides deployment of something like an antispam router, is to sign up for an antispam service, which intercepts all of your incoming e-mail and screens out the spam. Such a solution addresses a number of e-mail-related problems but at considerable ongoing cost, which still constitutes a theft of resources by spammers.
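To put the spam cannon figure in perspective, 3.6 million messages an hour works out to a thousand messages every second. The per-MTA rate below assumes, purely for illustration, that the load is split evenly across the six MTAs:

```python
# Quoted throughput of a six-MTA "spam cannon".
messages_per_hour = 3_600_000
mta_count = 6

# Aggregate delivery rate in messages per second.
per_second = messages_per_hour / 3600

# Per-MTA rate, assuming (for illustration) the load is split evenly.
per_mta_per_second = per_second / mta_count

print(per_second)                    # prints 1000.0
print(round(per_mta_per_second, 1))  # prints 166.7
```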

The company Commtouch.com provides a spam cost calculator that produces some interesting numbers.9 Consider these input values for a medium-size business:

- Employees: 800

- Average annual salary: $45,000

- Average number of daily e-mails per recipient: 75

- Average percentage of e-mail that is spam: 80%

According to the calculator, total annual cost of spam to this organization, which is assumed to be deploying no antispam measures, is just over $1 million. This is based on certain assumptions, such as the time taken to delete spam messages, but in general, it seems fairly realistic. Of course, the size of the productivity hit caused by the spam that makes it to an employee inbox has been hotly debated over the years, but it is clearly more than negligible and not the only hit. Even if antispam filtering is introduced, there will still be a need to review or adjust the decisions made by the filter to ensure that no important legitimate messages are erroneously quarantined or deleted. In other words, even if the company spends $50,000 per year on antispam filtering, it will not reclaim all of the $1 million wasted by spam.
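The Commtouch calculator's internal formula is not given here, so the following is only a rough productivity model in the same spirit. The time to delete each spam (15 seconds), working days per year (240), and paid hours per year (2,080) are assumptions, not the calculator's documented values:

```python
def annual_spam_cost(employees: int,
                     annual_salary: float,
                     daily_emails: float,
                     spam_share: float,
                     seconds_per_spam: float = 15.0,   # assumption
                     working_days: int = 240,          # assumption
                     paid_hours_per_year: int = 2080   # assumption: 52 weeks x 40 hours
                     ) -> float:
    """Rough cost of the time employees spend deleting spam by hand."""
    hourly_rate = annual_salary / paid_hours_per_year
    spam_per_day = daily_emails * spam_share
    hours_per_year = spam_per_day * seconds_per_spam / 3600 * working_days
    return employees * hours_per_year * hourly_rate


cost = annual_spam_cost(800, 45_000, 75, 0.80)
print(f"${cost:,.0f}")  # roughly $1.04 million with these assumptions
```

With these inputs the sketch lands near the calculator's figure, but the result scales directly with `seconds_per_spam`, which is exactly the kind of assumption that makes productivity estimates so contentious.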

20.3.3.1 Threat of Outbound Spam.

Even as organizations like the Coalition Against Unsolicited Commercial E-mail (CAUCE) were trying to persuade companies that spamming was an ill-advised marketing technique that could backfire in the form of very annoyed recipients, and just as various government entities were trying to create rules outlawing spam, some companies were happy to stretch the rules and deluge consumers with e-mail offers, whether those consumers had asked for them or not. This led some antispammers to condemn all companies in the same breath. The author's own experience, working with large companies that have respected brand names, was that none of them actually wanted to offend consumers. Big companies always struggle to rein in maverick marketing activities, and mass e-mailing is very tempting when you are under pressure to produce sales; but upper management is unlikely to condone anything that could be mistaken for spamming.

Not that the motives for corporate responsibility in e-mail are purely altruistic. Smart companies can see that the perpetuation of disreputable e-mail tactics only dilutes the tremendous potential of e-mail as a business tool. Whatever you think of spam, there is no denying that, as a business tool, bulk e-mail is incredibly powerful. It is also very seductive. When you have a story to tell or a product to sell, and a big list of e-mail addresses just sitting there, bulk e-mail can be very tempting. You can find yourself thinking “Where's the harm?” and “Who's going to object?” But unless you have documented permission to send your message to the people on that list, the smart business decision is to resist the temptation. Remember, it only takes one really ticked off recipient of your unsolicited message to ruin your day.

20.3.3.2 Mass E-mail Precautions.

One of the most basic business e-mail precautions is this: Never send a message unless you are sure you know what it will look like to the person who receives it. This covers the formatting, the language you use, and, above all, the addressing. If you want to address the same message to more than one person at a time, you have three main options, each of which should be handled carefully:

- Place the e-mail addresses of all recipients in the “To” field or the Copy (Cc) field so all the recipients will be able to see the addresses of the other people to whom you sent the message. This is sometimes appropriate for communications within a small group of people.

- If the number of people in the group exceeds about 20, or if you do not want everyone to know who is getting the message, move all but one of them to the Blind Copies (Bcc) field. The one address in the “To” field may be your own. If the disclosure of recipients is likely to cause any embarrassment whatsoever, do a test mailing first. Send a copy of the message to yourself and to at least one colleague outside the company, and then have the message looked at to make sure the “Bcc” entries were made correctly.

- To handle large groups of recipients, or to personalize one message to many recipients, use a specialized application like Group Mail that can reliably build individual, customized messages to each person on a list. This neatly sidesteps errors related to the “To” and “Cc” fields. Group Mail stores e-mail addresses in a database and builds messages on the fly using a merge feature like a word processor. You can insert database fields in the message. For example, a message can refer to the recipient by name. The program also offers extensive testing of messages, so that you can see what recipients will see before you send any messages. And the program has the ability to send messages in small groups, spread over time, according to the capabilities of your Internet connection.
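Group Mail's own internals are not described here, but the mail-merge-and-batch idea can be sketched with Python's standard library. The function names, the `$name` merge field, the addresses, and the batch size of 50 are illustrative assumptions; actual transmission (for example, via `smtplib`) is left out:

```python
from email.message import EmailMessage
from itertools import islice
from string import Template


def build_messages(template_text, subject, sender, recipients):
    """Yield one personalized message per recipient (a simple mail merge)."""
    template = Template(template_text)
    for record in recipients:
        msg = EmailMessage()
        msg["From"] = sender
        msg["To"] = record["email"]  # exactly one address: nothing to leak via To/Cc
        msg["Subject"] = subject
        # $name etc. are filled from the record, like merge fields in a word processor
        msg.set_content(template.substitute(record))
        yield msg


def batches(items, size):
    """Split the outgoing queue into small groups to be sent over time."""
    it = iter(items)
    while batch := list(islice(it, size)):
        yield batch


recipients = [
    {"email": "ann@example.com", "name": "Ann"},
    {"email": "raj@example.org", "name": "Raj"},
]
messages = list(build_messages("Dear $name,\n\nThanks for signing up.\n",
                               "Your newsletter", "news@example.net", recipients))
for group in batches(messages, 50):
    print(f"would send {len(group)} message(s), then pause")
```

Building each message with a single "To" address removes the To/Cc/Bcc mistakes entirely, which is the main design point of merge-style tools.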

Whether you use a program like Group Mail for your legitimate bulk e-mail, or something even more powerful, depends on several factors, such as the size of your organization, the number of messages you need to send, and your privacy policy. Privacy comes into play because some software used to send e-mail, such as Group Mail, allows the user of the program access to the database containing the addresses to which the mail is being sent. This is generally not a problem in smaller companies, or when the database consists solely of names and addresses without any special context; but it can be an issue when the database contains sensitive information or the context is sensitive. For example, a list of names and addresses can be sensitive if the person handling the list knows, or can infer, that they belong to patients undergoing a certain kind of medical treatment.

You may not want to allow system operators or even programmers to have access to sensitive data simply because they are charged with sending or programming messages. Fortunately, mailing programs can be written that allow an operator to compose mail and send it without seeing the names and addresses of the people to whom it is being sent. Test data can, and should, be used to “proof” the mailing before it is executed.
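One way such a program might be structured, sketched under assumptions: the operator works only with a template and dummy test records, while the real recipient list is loaded inside the send step and never handed back. `proof`, `execute`, `load_recipients`, and `deliver` are hypothetical names. Python cannot truly hide data from someone who can edit the code, so in practice the loader would run under separate credentials or in a separate service:

```python
from string import Template
from typing import Callable, Iterable, Mapping

Record = Mapping[str, str]


def proof(template_text: str, test_records: Iterable[Record]) -> list:
    """Render the mailing against dummy records so the operator can check it."""
    template = Template(template_text)
    return [template.substitute(rec) for rec in test_records]


def execute(template_text: str,
            load_recipients: Callable[[], Iterable[Record]],
            deliver: Callable[[str, str], None]) -> int:
    """Merge and deliver; the operator receives only a count, never the list."""
    template = Template(template_text)
    sent = 0
    for rec in load_recipients():  # real data is read only inside this call
        deliver(rec["email"], template.substitute(rec))
        sent += 1
    return sent


template = "Dear $name,\nHere is the update you asked for.\n"
print(proof(template, [{"name": "Test User", "email": "test@example.com"}])[0])
```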

Another basic e-mail precaution is never to send any message that might offend any of the recipients. In choosing your wording, your design, and your message, know your audience. Use particular caution when it comes to humor, politics, religion, sex, or any other sensitive subject. When using e-mail for business, it is better to stand accused of a lack of humor than a lack of judgment.

Respect people's preferences, if you know them, for content. If people have expressed a preference for text-only messages, do not send them HTML and hope they decide to change their minds. Ask first because the “forgiveness later” path is not cost effective when you have to deal with thousands of unhappy recipients calling your switchboard. When you do use HTML content, still try to keep size to a minimum, unless you have recipients who specifically requested large, media-rich messages.
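Honoring a text-only preference is straightforward if messages are built programmatically. This sketch uses Python's `email.message.EmailMessage`; the `text_only` flag stands in for whatever preference record your mailing system keeps (an assumption):

```python
from email.message import EmailMessage


def build_message(to_addr, subject, text_body, html_body=None, text_only=False):
    """Build a message that honors a recipient's stated text-only preference."""
    msg = EmailMessage()
    msg["To"] = to_addr
    msg["Subject"] = subject
    msg.set_content(text_body)  # plain-text version is always included
    if html_body is not None and not text_only:
        # Converts the message to multipart/alternative: text first, HTML second
        msg.add_alternative(html_body, subtype="html")
    return msg


plain = build_message("pat@example.com", "Update", "Hello Pat.", "<p>Hello Pat.</p>", text_only=True)
rich = build_message("lee@example.com", "Update", "Hello Lee.", "<p>Hello Lee.</p>")
print(plain.get_content_type())  # prints text/plain
print(rich.get_content_type())   # prints multipart/alternative
```

Keeping a plain-text part even in HTML mail also means recipients whose software cannot or will not render HTML still see a readable message.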

Antispam measures deployed by many ISPs, companies, and consumers sometimes produce “false positives,” flagging legitimate e-mail as spam, potentially preventing your e-mail from reaching the intended recipients, even when they have asked to receive your e-mail. If your company's e-mail is deemed to be particularly egregious spam, the server through which it is sent is likely to be blocked. If this is your company's general e-mail server, blocking could affect delivery of a lot more than just the “spam.” If the servers through which your large mailing is sent belong to a service provider, and its servers get blocked, that could be a problem for you too. And if you use a service provider to execute the mailing for you but fail to choose wisely, your mail may be branded as spam just because of the bad reputation of the servers through which it passes. Be aware that using spam to advertise your products could place you in violation of the CAN-SPAM Act of 2003 even if you do not send the spam yourself. (See Section 20.4.9 for more on CAN-SPAM.)

What can be done to prevent messages that are not spam from falling victim to antispam measures? Adherence to responsible e-mail practices is a good first step. Responsible management of the company's e-mail servers will also help, as will selection of reputable service providers. You should also consider tasking someone with tracking antispam measures, to make sure that your e-mail is designed to avoid, as much as possible, any elements of content or presentation that are currently being flagged as spam.

To make sure your company is associated with responsible e-mail practices, become familiar with the “Six Resolutions for Responsible E-Mailers.” These were created by the Council for Responsible E-mail (CRE), which was formed under the aegis of the Association for Interactive Marketing (AIM), a subsidiary of the Direct Marketing Association (DMA). Some of the country's largest companies, and largest legitimate users of e-mail, belong to these organizations, and they have a vested interest in making sure that e-mail is not abused. Here are the six resolutions:

- Marketers must not falsify the sender's domain name or use a nonresponsive IP address without implied permission from the recipient or transferred permission from the marketer.

- Marketers must not purposely falsify the content of the subject line or mislead readers from the content of the e-mail message.

- All bulk e-mail marketing messages must include an option for the recipient to unsubscribe (be removed from the list) from receiving future messages from that sender, list owner, or list manager.

- Marketers must inform the respondent at the time of online collection of the e-mail address for what marketing purpose the respondent's e-mail address will be used.

- Marketers must not harvest e-mail addresses with the intent to send bulk unsolicited commercial e-mail without consumers' knowledge or consent. (“Harvest” is defined as compiling or stealing e-mail addresses through anonymous collection procedures such as via a Web spider, through chat rooms, or other publicly displayed areas listing personal or business e-mail addresses.)

- The CRE opposes sending bulk unsolicited commercial e-mail to an e-mail address without a prior business or personal relationship. (“Business or personal relationship” is defined as any previous recipient-initiated correspondence, transaction activity, customer service activity, third-party permission use, or proven off-line contact.)

These six resolutions may be considered a reasonable middle ground between antispam extremists and marketing-at-all-costs executives. If everyone abided by these resolutions, there would be no spam, at least according to most people's definition of spam. After all, if nobody received more than one or two messages per week that were unwanted and irrelevant, antispam sentiment would cool significantly.

20.3.3.3 Appending and Permission Issues.

Some privacy advocates have objected to the sixth resolution because it permits e-mail appending. This is the practice of finding an e-mail address for a customer who has not yet provided one. Companies such as Yesmail and AcquireNow will do this for a fee. For example, if you are a bank, you probably have physical addresses for all your customers, but you may not have e-mail addresses for all of them. You can hire a firm to find e-mail addresses for customers who have not provided one. However, these customers may not have given explicit permission for the bank to contact them via e-mail, so some people would say that sending e-mail messages to them is spamming.

Whether you agree with that assessment or not, several factors need to be assessed carefully if your company is considering using an e-mail append service. First of all, make sure that nothing in your privacy policy forbids it. Next, think hard about the possible reaction from customers, bearing in mind that e-mail appending is not a perfect science. For more on how appending works, enter “e-mail append” as a search term in Google—you will find a lot of companies offering to explain how they do it, matching data pulled from many different sources using complex algorithms.

Another concern that must be considered is that some messages will go to people who are not customers. For that reason, you probably want to make your first contact a tentative one, such as a polite request to make further contact. Then you can use the responses to build a genuine opt-in list. As you might expect, you can outsource this entire process to the append service, which will have its own, often automated, methods of dealing with bounced messages, complaints, and so on.

Do not include any sensitive personal information in the initial contact, since you have no guarantee that the person at the appended address is the Robert Jones you have on your customer list. When Citibank did an append mailing in the summer of 2002, encouraging existing customers to use the bank's online account services, it came in for some serious criticism. Although the bank was not providing immediate online access to appended customers, the mere perception of this, combined with a number of mistaken e-mail identities, produced negative publicity. Here is what Citibank said in the message it sent to people it believed to be customers, even though these people had not provided their e-mail address directly to the bank:

Citibank would like to send you e-mail updates to keep you informed about your Citi Card, as well as special services and benefits… With the help of an e-mail service provider, we have located an e-mail address that we believe belongs to you.

Although this message is certainly polite, it clearly raised questions in the minds of recipients. Two questions could undermine appending as a business practice: Where exactly is this service provider looking for these e-mail addresses? Why doesn't the company that wants my e-mail address just write and ask for it? The fact is, conversion rates from e-mail contact are higher than from snail mail, so the argument for append services is that companies that use them move more quickly to the cheaper and better medium of e-mail than those that do not. The counterpoint is that too many people will be offended in the process.

Consider to what lengths you are stretching the “prior business relationship” principle cited in the sixth responsible e-mail resolution. A bank probably has a stronger case for appending e-mail addresses to its account holder list than does a mail order company that wants to append a list of people who requested last year's catalog. The extent to which privacy advocates accept or decry the concept of “prior business relationship” is largely dependent on how reasonable companies are in their interpretation of it.

Marketing to addresses that were not supplied with a clear understanding that they would be used for such purposes is not advisable. Depending on your privacy statement, it could be a violation of your company's privacy policy. Going ahead with such a violation could not only annoy customers, but also draw the attention of industry regulators, such as the Federal Trade Commission (FTC). Of course, you will have to decide for yourself if it is your job or responsibility to point this out to management. And be sure to provide a simple way for recipients to opt out of any further mailings. (A link to a Web form is best for this. Avoid asking the recipient to reply to the message. If the e-mail address to which you mailed the message is no longer their primary address, they may have trouble opting out.)
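A per-recipient opt-out link can be generated when each message is built. In this sketch the form URL, the `t` query parameter, and the idea of storing the token alongside the address are all hypothetical; the `List-Unsubscribe` header is the one defined in RFC 2369, which some mail programs surface as an unsubscribe control:

```python
import secrets
from email.message import EmailMessage
from urllib.parse import urlencode

# Hypothetical opt-out web form; a real deployment would use its own URL.
UNSUBSCRIBE_FORM = "https://example.com/unsubscribe"


def add_opt_out(msg: EmailMessage, body: str) -> str:
    """Attach an opt-out link to the body and headers; return its token."""
    token = secrets.token_urlsafe(16)  # per-recipient; store it alongside the address
    url = f"{UNSUBSCRIBE_FORM}?{urlencode({'t': token})}"
    msg["List-Unsubscribe"] = f"<{url}>"  # header defined in RFC 2369
    msg.set_content(f"{body}\n\nTo stop receiving these messages, visit:\n{url}\n")
    return token


msg = EmailMessage()
msg["To"] = "customer@example.org"
msg["Subject"] = "Account services update"
token = add_opt_out(msg, "We have updated our online services.")
```

Because the token identifies the subscription rather than the recipient's current address, the opt-out works even if the message has been forwarded from an address the recipient no longer uses.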

20.4 FIGHTING SPAM.

The fight against incoming spam can be addressed both specifically, as in “What can I do to protect my systems from spam?” and in general, “What can be done to prevent spam in general?” Of course, if the activity of spamming were to be eliminated, everyone would have one less threat to worry about.

20.4.1 Enter the Spam Fighters.

In the five-year period from 1997 to 2002, a diverse grouping of interests and organizations fought to make the world aware of the problem of spam, and to encourage counter-measures. The efforts of the Coalition Against Unsolicited Commercial E-mail, which continue to this day, spurred lawmakers into passing antispam legislation and helped bring order to the blacklisting process by which spam-sending servers are identified. In 1998, the Spamhaus Project, a volunteer effort founded by Steve Linford, began tracking spammers and spam-related activity. (The name comes from a pseudo-German expression, coined by Linford, to describe any ISP or other firm that sends spam or willingly provides services to spammers.)

Commercial antispam products started to appear in the late 1990s, starting with spam filters that could be used by individuals. Filtering supplied as a service to enterprises appeared in the form of Brightmail, founded in 1998 and now owned by Symantec, and Postini, founded in 1999 and now owned by Google. A host of other solutions, some free and open source, others commercial, attempted to tackle spam from several different directions. Nevertheless, despite the concerted efforts of volunteers, lawmakers, and entrepreneurs, spam has continued to sap network resources while evolving into part of a subculture of system abuse that includes delivering payloads that do far worse things than spark moral outrage.

20.4.2 A Good Reputation?

Since spam is created by humans, it is notoriously difficult, if not impossible, for computers to identify spam with 100 percent reliability. This fact led some spam fighters to consider an alternative approach: reliably identifying legitimate e-mail. If an e-mail recipient or a Mail Transfer Agent could verify that certain incoming messages originated from legitimate sources, all other e-mail could be ignored. One form of this approach is the challenge-response system; perhaps the largest deployment was the one that Earthlink rolled out in 2003. When you send a message to someone at Earthlink who is using this system, and who has not received a message from you before, your message is not immediately delivered. Instead, Earthlink sends you an e-mail asking you to confirm your identity, in a way that would be difficult for a machine to fake. The recipient is then informed of your message and decides whether to accept it. Unfortunately, this approach can be problematic if the user does a lot of e-commerce involving e-mail from many sources that are essentially automated responders (which cannot pass the challenge). Manually building a whitelist of allowed senders can be tiresome, and failure to do so may mean some messages do not get through (e.g., if the sender is a machine that does not know how to handle the challenge). One solution to this problem is to compile an independent whitelist of legitimate e-mailers whose messages are allowed through without question. This is the reputational approach to spam fighting, and it works like this:

- Bank of America pledges never to spam its customers and only send them e-mail they sign up for (be it online statement notification or occasional news of new bank services).

- Bank of America e-mail is always fast-tracked through ISPs and not blocked as spam.

- Bank of America remains true to its pledge because its reputation as a legitimate mailer enables it to conduct e-mail activities with greater efficiency.
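The challenge-response and reputational approaches can be sketched together as a toy inbound gate. This is not Earthlink's or any ISP's implementation; the class, the accredited-domain set, and the `sender_verified` flag are hypothetical. `sender_verified` stands in for some mechanism that proves a message really came from the claimed domain, without which spammers would simply forge an accredited name:

```python
import secrets

# Hypothetical accredited mailers that have pledged permission-only e-mail.
ACCREDITED_DOMAINS = {"bank.example"}


class InboundGate:
    """Toy model combining a reputation list with challenge-response."""

    def __init__(self):
        self.whitelist = set()  # individual senders this user has accepted
        self.held = {}          # challenge token -> (sender, message)

    def receive(self, sender, message, sender_verified):
        domain = sender.rsplit("@", 1)[-1].lower()
        if sender_verified and domain in ACCREDITED_DOMAINS:
            return "deliver"             # reputation: fast-tracked
        if sender in self.whitelist:
            return "deliver"             # previously accepted correspondent
        token = secrets.token_hex(8)
        self.held[token] = (sender, message)
        return f"challenge:{token}"      # would be e-mailed back to the sender

    def answer(self, token):
        """Sender answered the challenge; hand the message to the recipient to decide."""
        return self.held.pop(token)

    def accept(self, sender):
        """Recipient approves the sender; future mail is delivered directly."""
        self.whitelist.add(sender)
```

An automated sender never answers its challenge, so its mail stays in `held`; that is the weakness described above, and the accredited list is one way to spare large legitimate mailers from it.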

There are considerable financial and logistical obstacles to making such a system work, and it tends to work better with bigger mailers. Adoption also faced skepticism from some privacy and antispam advocates who suspected that the goal of such systems was merely to legitimize mass mailings by companies that still did not understand the need to conduct permission-only mailings. The relentless assault of spam on the world's e-mail systems eventually may lead all legitimate companies to forswear unsolicited e-mail and thus make a reputational system universally feasible. Tough economic times, though, may tempt formerly “clean” companies to turn to spam in a desperate effort to boost flagging sales.

Another and more universal method of excluding spam would be for ISPs to deliver, and consumers to accept, only those e-mails that were stamped with a verifiable cryptographic seal of some kind. A relatively simple automated system to accomplish this was developed in 2001 by a company called ePrivacy Group, and it proved very successful in real-world trials conducted by MSN and several other companies. If adopted universally, such a system could render spamming obsolete, but that “if” proved to be unattainable. The success of this approach depended on widespread deployment, and the project ultimately was doomed by infighting between the larger ISPs, despite ePrivacy Group's willingness to release the underlying technology to the public domain.
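The details of ePrivacy Group's seal are not given here, so this sketch uses the simplest stand-in from Python's standard library: an HMAC over the message bytes with a shared key. It shows the basic property a seal provides (any change to the sealed content breaks verification), but a shared secret cannot scale to the whole Internet; a real system would need public-key signatures, as later standards such as DKIM use:

```python
import hashlib
import hmac


def seal(message: bytes, key: bytes) -> str:
    """Compute a tamper-evident seal (HMAC-SHA256) over the message bytes."""
    return hmac.new(key, message, hashlib.sha256).hexdigest()


def verify(message: bytes, key: bytes, tag: str) -> bool:
    """Accept only if the seal matches; compare_digest avoids timing leaks."""
    return hmac.compare_digest(seal(message, key), tag)


key = b"shared-secret-for-illustration"
original = b"Subject: Statement ready\r\n\r\nYour statement is online."
tag = seal(original, key)
print(verify(original, key, tag))                                   # prints True
print(verify(b"Subject: You're Approved!\r\n\r\nClick here.", key, tag))  # prints False
```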

The ultimate in reputation-based approaches to solving the spam problem may well be something that information security experts have urged for years: widespread use of e-mail encryption. If something like Secure/Multipurpose Internet Mail Extensions (S/MIME) were universally implemented, everyone could ignore messages that were not signed by people from whom they were happy to receive e-mail. Of course, the fact that e-mail encryption can be made to work reliably is not proof that it always will, and the encryption approach does not, in itself, solve the fundamental barrier to any universal effort at outlawing spam: the willingness of everyone to participate. One of the qualities that led e-mail to become the most widely used application on the Internet, the lack of central control, is a weakness when it comes to effecting any major change to the way it operates.

20.4.3 Relaying Trouble.

When considering the problem of spam in general, one might ask why ISPs allow people to send spam. The fact is, many do not. Spamming is a violation of just about every ISP's terms of service. Accounts used for spamming are frequently closed. However, there are some exceptions. As you might imagine, a few ISPs allow spam as a way to get business (albeit business that few others want). Some of these ISPs use facilities in fringe countries to avoid regulatory oversight. Furthermore, some spammers find it easier, and cheaper, to steal service by gaining unauthorized access to other people's servers to send their messages. One practice that makes this possible is mail relaying. According to Janusz Lukasiak of the University of Manchester, mail relaying occurs “when a mail server processes a mail message from an unauthorized external source with neither the sender nor the recipient being a local user. The mail server is an entirely unrelated third party to this transaction and the message should not be passed via the server.”10 The problem with relaying through an unrelated, unauthenticated third party is that it lets the sender hide its identity.

In the early days of the Internet, many servers were left open so that people could conveniently relay their e-mail through them at any time. It was quite acceptable for individuals to send their e-mail through just about any mail server, since the impact on resources was minimal. Abuses by spammers, whose mass e-mailings do impact resources, led to ISPs instituting restrictions. Some ISPs require the use of port 587 for SMTP authentication. Others require logging in to the POP server to collect incoming mail before sending any out. Since that requires a user name and password, it prevents “strangers” from sending e-mail through the server.
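The relaying rule quoted above translates into a simple acceptance check a mail server might apply to each recipient. The domain set and function names are hypothetical, and `client_authenticated` stands in for whatever login mechanism the server uses (SMTP authentication on port 587, or a recent POP login):

```python
# Hypothetical set of domains this mail server is responsible for.
LOCAL_DOMAINS = {"example.com"}


def domain_of(address: str) -> str:
    return address.rsplit("@", 1)[-1].lower()


def accept_rcpt(recipient: str, client_authenticated: bool) -> bool:
    """Decide whether to accept a recipient without becoming an open relay."""
    if domain_of(recipient) in LOCAL_DOMAINS:
        return True  # inbound mail for our own users
    # Forwarding to an outside domain is relaying: allow it only for clients
    # that have proven they are local users. The claimed sender address alone
    # proves nothing, because it is trivially forged.
    return client_authenticated
```

An open relay is, in effect, a server whose equivalent of `accept_rcpt` returns `True` for everyone.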

Open relays are now frowned on. However, relaying continues to occur, partly because configuring servers to prevent it requires effort. Also, spammers keep finding new ways to exploit resources that are not fully protected. For example, port 25 filtering, instituted to reduce spamming, can be bypassed with tricks like asymmetric routing and proxies. A relatively recent development is the use of botnets, groups of compromised computers, to deliver spam. (For more about botnets see Chapters 15, 16, 17, 30, 32, and 41 in this Handbook.)

A war is continually being waged between spammers and ISPs, and that war extends to the ISPs' own ISPs. Most Internet Service Providers actually get their service from an even larger service provider, companies like AT&T, MCI, and Sprint, which form the backbone of the Internet. How much trouble do these companies have with spam? As far back as 2002, network abuse (spam) generated 350,000 trouble tickets each month at a single carrier. These companies are working hard to prevent things like mail relaying and to defeat the latest tricks that spammers have devised to get around their preventive measures.

20.4.4 Black Holes and Block Lists.

Black hole lists, or block lists, catalog server IP addresses from ISPs whose customers are deemed responsible for spam and from ISPs whose servers are hijacked for spam relaying. ISPs and organizations subscribe to these lists to find out which sending IP addresses should be blocked. The receiving end, such as the consumer recipient's ISP, checks the list for the connecting IP address. If the IP address matches one on the list, then the connection gets dropped before accepting any traffic. Other ISPs choose simply to ignore, or “black hole,” IP packets at their routers. Among the better known block lists are RBL, otherwise known as MAPS Realtime Blackhole List, Spamcop, and Spamhaus.11
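Many block lists are queried through DNS: the receiving server reverses the connecting IPv4 address's octets, appends the list's zone name, and looks the result up. The zone `dnsbl.example.org` is a placeholder, since each list publishes its own zone and usage terms. Note that `is_listed` treats any lookup failure as "not listed," so it fails open if DNS is unreachable:

```python
import ipaddress
import socket


def dnsbl_query_name(ip: str, zone: str) -> str:
    """Reverse the IPv4 octets and append the list's DNS zone."""
    octets = ipaddress.IPv4Address(ip).exploded.split(".")
    return ".".join(reversed(octets)) + "." + zone


def is_listed(ip: str, zone: str) -> bool:
    """Any answer (conventionally an address in 127.0.0.0/8) means 'listed'."""
    try:
        socket.gethostbyname(dnsbl_query_name(ip, zone))
        return True
    except socket.gaierror:
        return False  # no such name: treat the IP as not on this list


print(dnsbl_query_name("192.0.2.99", "dnsbl.example.org"))
# prints 99.2.0.192.dnsbl.example.org
```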

How does an entity's IP address get on these lists? If an ISP openly permits spam, or does not adequately protect its resources against abuse by spammers, it will likely be reported to the list by one or more recipients of such spam. Reports are filed by people who take the time to examine the spam's header, identify the culpable ISP, and make a “nomination” to a block list. Different block lists have different standards for verifying nominations. Some test the nominated server; others take into account the number of nominations. If an ISP, organization, or individual operating a mail server finds itself on the list by mistake, it can request to be removed, which usually involves a test by the organization operating the block list.

Note that none of this block-list policing of spam is “official.” All block lists are self-appointed and self-regulated. They set, and enforce, their own standards. The only recourse for entities that feel they have been unfairly blocked—and there have been plenty of these over the years—is legal action. Some block lists are operated outside of the United States, but if an overseas organization block-lists a server located in the United States, it probably can be sued in a U.S. court. However, it is important to note that the blocking is not done by the operator of the block list, it is done by ISPs that subscribe to, and are guided by, the lists.

20.4.5 Spam Filters.

Block-list systems filter out messages from certain domains, IP addresses, or ranges of IP addresses; they do not examine the content of messages. Content-based spam filtering can be done at several levels.

20.4.5.1 End User Filters.

Spam filtering probably began at the client level, and even today many e-mail users perform manual negative filtering, identifying spam by a process of elimination. This is easy to do with any e-mail application that allows user-defined filters or rules to direct messages to different inboxes or folders. Many people have separate mailboxes into which they filter all of the messages they get from their usual correspondents: friends, family, colleagues, subscribed newsletters, and so on. Whatever is left in the in-basket is then likely to be spam, with the notable exception of messages from new correspondents for whom a separate mailbox and filter have not yet been created.
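Negative filtering of this kind amounts to a lookup table of known senders. A minimal Python sketch, assuming a hypothetical `RULES` mapping from sender address to folder:

```python
from dataclasses import dataclass

@dataclass
class Message:
    sender: str
    subject: str

# Hypothetical user-defined rules: known correspondent -> folder
RULES = {
    "alice@example.com": "Family",
    "newsletter@example.org": "Newsletters",
    "boss@example.net": "Work",
}

def route(msg: Message, rules: dict[str, str] = RULES) -> str:
    """File mail from known senders into their folders; anything left
    over stays in the Inbox and is likely (not certainly) spam."""
    return rules.get(msg.sender.strip().lower(), "Inbox")
```

A first message from a new correspondent also stays in the Inbox until a rule is added for that sender, which is the exception noted above.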

To build a filter that positively identifies spam, one must first identify elements common to spam messages. Many products do this, and they usually come with a default set of filters that look for things like “From” addresses containing a lot of numbers and “Subject” text containing a lot of punctuation characters. Spammers often add such characters in an attempt to defeat filters based on specific text, so the Subject line “You're Approved” might be randomly concatenated with special characters and spaces like this: “**You~re Ap proved!**”
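One common counter to this kind of obfuscation is to normalize text before matching it. The Python sketch below (the phrase list and function names are hypothetical) lowercases the Subject and strips everything except letters and digits, so the mangled example above collapses back to the same string as the original phrase.

```python
import re

def normalize(text: str) -> str:
    """Lowercase and drop everything except letters and digits, so inserted
    punctuation and spaces no longer split a phrase apart."""
    return re.sub(r"[^a-z0-9]", "", text.lower())

# Hypothetical phrase list; real products ship much larger, regularly updated sets
SPAM_PHRASES = ["You're Approved", "Act now"]

def matches_spam_phrase(subject: str) -> bool:
    """True if any normalized spam phrase appears in the normalized Subject."""
    norm = normalize(subject)
    return any(normalize(phrase) in norm for phrase in SPAM_PHRASES)
```

Normalization has a cost: because word boundaries disappear, the subject “Interact nowhere” contains the normalized phrase “actnow,” so a filter built this way trades some false positives for resistance to padding.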

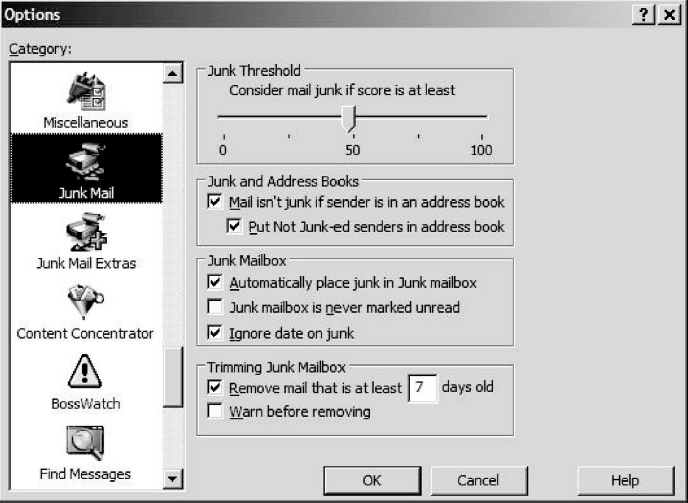

In fact, the tricks and tweaks used by spammers in crafting messages designed to bypass filters are practically endless. Nevertheless, even the spam filtering built into some basic e-mail programs offers a useful line of defense. For example, in Exhibit 20.3 you can see the control panel for spam filtering in the Eudora e-mail application, which places mail in a Junk folder based on a “score” derived from common spam indicators, giving users control over how high they want to set the bar.
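A score-based filter of the kind described can be sketched by awarding points for each indicator that fires and comparing the total with a user-adjustable threshold. The indicators echo those mentioned earlier (digits in the “From” address, punctuation in the “Subject”); the cutoffs, point values, and default threshold below are illustrative assumptions, not Eudora's actual rules.

```python
import string

def digit_count(address: str) -> int:
    """Number of digits in the local part (before the @) of an address."""
    local = address.split("@", 1)[0]
    return sum(ch.isdigit() for ch in local)

def punctuation_ratio(subject: str) -> float:
    """Fraction of Subject characters that are ASCII punctuation."""
    if not subject:
        return 0.0
    return sum(ch in string.punctuation for ch in subject) / len(subject)

def spam_score(sender: str, subject: str) -> int:
    """Add hypothetical points for each indicator that fires."""
    score = 0
    if digit_count(sender) >= 4:
        score += 40  # From address stuffed with digits
    if punctuation_ratio(subject) > 0.2:
        score += 40  # Subject padded with punctuation
    if subject.isupper():
        score += 20  # Subject written entirely in capitals
    return score

def is_junk(sender: str, subject: str, threshold: int = 50) -> bool:
    """Move the message to Junk when its score reaches the user's threshold."""
    return spam_score(sender, subject) >= threshold
```

Lowering `threshold` makes the filter stricter: more spam is caught, at the price of more legitimate mail landing in the Junk folder.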

Unfortunately, some of the language found in spam also appears in legitimate messages, such as “You are receiving this message because you subscribed to this list” or “To unsubscribe, click here.” This means that the default setting in a spam filter is likely to block some messages that you want to get. One answer is to weaken the filtering; another is to create a whitelist of legitimate “From” addresses, so that the spam filter allows through anything from these addresses. Most personal spam filters, like the one shown in Exhibit 20.3, can read your address book and add all of its entries to your personal whitelist. New correspondents can be added over time.
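The whitelist override can be sketched as a check that runs before the spam score is consulted. In this hypothetical Python sketch, `score` stands for whatever spam score the filter has computed, and the address book is simply a list of strings.

```python
def build_whitelist(address_book: list[str]) -> set[str]:
    """Seed the whitelist with every address-book entry (case-insensitive)."""
    return {addr.strip().lower() for addr in address_book}

def classify(sender: str, score: int, whitelist: set[str],
             threshold: int = 50) -> str:
    """Whitelisted senders bypass the score test entirely."""
    if sender.strip().lower() in whitelist:
        return "Inbox"
    return "Junk" if score >= threshold else "Inbox"
```

A subscribed newsletter full of “unsubscribe” language therefore reaches the Inbox even when its score is high, while the same score from an unknown sender sends the message to Junk.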

Most personal spam filters direct the messages they identify as spam into a special folder or in-basket where the user can review them, a process sometimes referred to as quarantining. To avoid wasting hard drive space, the filtering software can be configured to empty the quarantine folder at set intervals or simply to delete suspected spam older than a set number of days. This quarantine approach gives the user time to review and reclaim messages wrongly suspected of being spam.
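Age-based purging of the quarantine can be as simple as dropping items older than a retention window. A minimal sketch, assuming a hypothetical `Quarantined` record and a 14-day default window:

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

@dataclass
class Quarantined:
    message_id: str
    received: datetime

def purge_quarantine(folder: list[Quarantined], now: datetime,
                     max_age_days: int = 14) -> list[Quarantined]:
    """Keep suspected spam younger than max_age_days and drop the rest.
    Anything still inside the window can be reviewed and reclaimed."""
    cutoff = now - timedelta(days=max_age_days)
    return [m for m in folder if m.received >= cutoff]
```

Running this on a schedule gives the “delete suspected spam older than a set number of days” behavior; the window length is the user's trade-off between disk space and time to spot a false positive.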

20.4.5.2 ISP Filtering.

Faced with customer complaints about rising levels of spam around the turn of the century, ISPs began to institute spam filtering. However, they were hesitant to filter on content, fearing that it might be construed as reading other people's e-mail and lead to claims of invasion of privacy. Yet ISPs must be able to read headers to route e-mail, so filtering on the “From” address and “Subject” field was introduced (hence the increasingly inventive attempts by spammers to vary Subject text randomly). Some ISPs found that distaste for spam had reached a level at which some users were prepared to accept revised terms of service allowing machine reading of e-mail content for more effective spam filtering. Sticky legal issues still exist for ISPs, however, especially since there is no generally accepted definition of spam and no consensus on the extent to which freedom of speech applies to e-mail. For example, do political candidates have a right to send unsolicited e-mail to constituents? Do ISPs have a right to block it? These are questions on which the courts of law and public opinion have yet to render a conclusive verdict.

EXHIBIT 20.3 Junk Mail Filter Controls

One place where content-based spam filters are being deployed with little or no concern about legal challenges is the corporate network. Because the company network belongs to the company, the company's right to control how it is used trumps concerns over privacy. Employees do not have the right to receive, at work, whatever kind of e-mail they want to receive, and most companies would argue that they have virtually no obligation to deliver e-mail to employees.

20.4.5.3 Filtering Services.

Companies like Brightmail and Postini arose in the late 1990s to offer filtering services at the enterprise level. Brightmail developed filters that sit at the gateway to the enterprise network and filter based on continuously updated rules derived from real-time research into the latest spam outbreaks. Postini, by contrast, routes all of a company's incoming e-mail through its own servers and filters it before passing it on. Because they specialize, filtering services can use a wide range of spam-fighting techniques, including:

- Block lists

- Header analysis

- Content analysis

- Filtering against a database of known spam

- Heuristic filtering for “spamlike” attributes

- Whitelisting through reputational schemes
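Of the techniques listed, filtering against a database of known spam is the easiest to sketch: reduce each message body to a fingerprint and check it against the fingerprints of previously reported spam. The Python below assumes a simple canonicalization (lowercase, collapsed whitespace) and a SHA-256 digest; the class and method names are hypothetical, and commercial services' actual fingerprinting methods are not described here.

```python
import hashlib
import re

def fingerprint(body: str) -> str:
    """Hash the body after collapsing case and whitespace, so trivially
    reformatted copies of the same spam produce the same digest."""
    canonical = re.sub(r"\s+", " ", body.strip().lower())
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()

class KnownSpamDB:
    """Hypothetical store of fingerprints of reported spam."""

    def __init__(self) -> None:
        self._digests: set[str] = set()

    def report(self, body: str) -> None:
        self._digests.add(fingerprint(body))

    def is_known_spam(self, body: str) -> bool:
        return fingerprint(body) in self._digests
```

An exact hash is easily defeated by the random variations spammers insert, which is one reason services combine this check with the header, content, and heuristic techniques in the same list.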

Because these systems constantly deal with huge amounts of spam, they are able to refine their filters, both positive and negative, quickly and relatively effectively, and they catch a lot of spam that would otherwise reach consumers. For example, by 2008, nine of the top 12 ISPs were using Brightmail, which claims a very low false-positive rate and a 95 percent catch rate. Unfortunately, that still means that some spam gets through, and when spammers can direct millions of messages an hour at a network, the volume of what gets through can still pose a threat.
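To put the last point in numbers, assume for illustration a network that receives N = 1,000,000 spam messages per hour and a filter with the catch rate c = 0.95 cited above. The number of spam messages delivered is then:

$$
N_{\text{delivered}} = (1 - c)\,N = (1 - 0.95) \times 1{,}000{,}000 = 50{,}000 \text{ messages per hour}
$$

Even a filter that stops 19 of every 20 spam messages leaves tens of thousands an hour for users and systems to cope with at this volume.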

Another approach uses the collective intelligence of subscribers to identify spam quickly and spread the word via network connections. Cloudmark, for example, had several million subscribers at the time of writing (April 2008), paying about $40 per year to receive nearly instantaneous updates from servers that receive and categorize members' reports of any spam that gets through. A member's credibility score rises with every correct identification of spam and sinks with every incorrect labeling of legitimate e-mail as spam (e.g., newsletters the member has forgotten subscribing to). Weighting reports by the reliability of the reporter helps the system screen out false information from spammers who might try to game it by claiming that their own junk e-mail is legitimate.
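The credibility mechanism can be sketched as weighted voting. In the hypothetical Python below (an illustration, not Cloudmark's actual algorithm), each report counts in proportion to the reporter's credibility, a message is treated as spam once its weighted total reaches a threshold, and credibility is multiplied up or down as reports are confirmed or refuted. The starting value, multipliers, cap, and threshold are all assumptions.

```python
from collections import defaultdict

class ReportPool:
    """Hypothetical credibility-weighted spam reporting (illustrative only)."""

    def __init__(self, threshold: float = 1.5) -> None:
        self.threshold = threshold
        self.credibility = defaultdict(lambda: 1.0)  # new members start at 1.0
        self.votes = defaultdict(float)              # fingerprint -> weighted total

    def report(self, member: str, fingerprint: str, spam: bool = True) -> None:
        """Add (spam) or subtract (claimed legitimate) the member's weight."""
        weight = self.credibility[member]
        self.votes[fingerprint] += weight if spam else -weight

    def is_spam(self, fingerprint: str) -> bool:
        return self.votes.get(fingerprint, 0.0) >= self.threshold

    def settle(self, member: str, was_correct: bool) -> None:
        """Reward confirmed reports (capped at 10.0); halve credibility for refuted ones."""
        if was_correct:
            self.credibility[member] = min(self.credibility[member] * 1.1, 10.0)
        else:
            self.credibility[member] *= 0.5
```

Because a member who has repeatedly mislabeled mail carries little weight, a spammer's claim that its own message is legitimate barely moves the total, whereas the same claim from a member in good standing could tip the decision.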

20.4.5.4 Collateral Damage.

There are two major drawbacks to spam filters. First, they do nothing to stop spammers from spamming; they merely sort what arrives. Because a filter must decide, on a message-by-message basis, which messages are spam and which are not, it consumes a lot of resources, in some cases more than if all the spam were simply allowed through. When block lists and whitelists work well, they can substantially reduce the amount of spam that reaches the filtering stage, but ultimately every filter processes messages one at a time, and is thus both resource constrained and resource intensive.

Second, filters sometimes err, in one of two ways. They sometimes produce a false positive, flagging a legitimate message as spam, preventing a much-needed message from getting to the recipient in a timely manner. And they sometimes produce false negatives, allowing spam messages into the inbox. False negatives and false positives impose a drag on productivity.
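The two kinds of error are usually measured separately, because they are computed over different populations: false negatives over all spam, false positives over all legitimate mail. A small Python sketch (the function name is hypothetical):

```python
def filter_metrics(tp: int, fn: int, fp: int, tn: int) -> dict[str, float]:
    """tp: spam caught; fn: spam missed (false negatives);
    fp: legitimate mail flagged (false positives); tn: legitimate mail delivered."""
    return {
        "catch_rate": tp / (tp + fn),           # share of spam stopped
        "false_positive_rate": fp / (fp + tn),  # share of good mail wrongly flagged
    }
```

With hypothetical counts, `filter_metrics(tp=950, fn=50, fp=2, tn=998)` gives a catch rate of 0.95 and a false-positive rate of 0.002: a filter can look excellent on one measure while still delaying two legitimate messages in every thousand.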

20.4.6 Network Devices.

The rapid rise of spam volumes in the late 1990s occurred at a time of massive e-mail-enabled computer virus and worm attacks, arising from the classic malware motivation of bragging rights. Analysis of these problems according to the classic factors of means, motive, and opportunity revealed that spammers differed quite significantly from virus writers. Spammers are mostly motivated by money, not bragging rights. This insight opened up a new line of defense against spam: removing the financial motivation.12

All that remained was to understand how spammers make money, that is, the economics of spam, and then to find a way to disrupt those economics. The classic spam model is to send out a vast number of e-mails offering a product or service, relying on the fact that at least some of these offers will reach real people, at least some of whom will make a purchase. If enough sales are made to create a profit over and above the cost of doing business, the spammer will continue to operate. Although a good public education and awareness program can reduce the number of people who buy the spammers' offers, it is unlikely to reduce that number enough. Because filtering spam out of the message stream reduces the spammer's return on investment, it tends to make spammers more inventive in their attempts to beat filters and to send even more spam in the hope that enough will get through. In fact, spammers were able to find companies willing to sell them bandwidth on a massive scale, including dedicated point-to-point T3 digital phone line connections.
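The economics can be summarized in a deliberately simplified model. The symbols are introduced here for illustration only: a spammer sends $N$ messages, a fraction $d$ is delivered past filters, a fraction $r$ of delivered messages produces a sale, each sale yields profit $v$, and the campaign costs $C$. The campaign's profit is then:

$$
\Pi = N \, d \, r \, v - C
$$

Filtering lowers $d$ and awareness programs lower $r$, but as long as $\Pi$ stays positive the spammer keeps operating, and a smaller $d$ can be offset by a larger $N$, which is the escalation described above.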

Another way to attack the economics of spam is by “following the money.” Every deal has to be closed, typically via a Web site. Is it possible to shut down the spammer's Web sites? This line of inquiry led the author to an interesting finding: Spam goes stale very quickly. An examination of spam archives showed that most links in old spam were dead, sometimes because the Web site was shut down by the hosting company, sometimes because the spammer did not want to risk being identified. Thus the key to the economics of spam was revealed: time. If you slow spam down, spammers cannot generate enough responses before response sites are taken down.

Shortly after this realization, network security expert David Brussin devised a means of slowing down spam through TCP/IP traffic shaping. This became the technology at the heart of the antispam router. Instead of looking at each message in turn to determine whether it is spam, the antispam router samples message traffic in real time and slows that traffic down if the sample suggests the traffic contains spam. To spammers, or rather to the spamming software they use, a network protected by an antispam router behaves as if it were on a 300-baud modem. In other words, the connection is too slow to deliver enough messages quickly enough for the one-in-a-million hit that the spam scheme needs in order to make money before the Web site is shut down. The spamming software quickly drops the connection; no messages have been lost and no messages have been falsely labeled as spam. Delivery of legitimate e-mail has not been affected. One or two spam messages have been delivered, but far fewer than the 5 percent that get past a typical spam filter with a 95 percent catch rate.
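The time argument can be made concrete with some arithmetic. The Python sketch below is a hypothetical illustration, not Brussin's design: it assumes a connection is throttled to 300 bits per second once a sample suggests that at least half of its traffic is spam, and it computes how many messages of an assumed 5,000-byte size fit through the link in a given window. A real shaper acts on the TCP flow itself; the sketch models only the resulting throughput.

```python
def shaped_rate_bps(sample_spam_fraction: float,
                    full_rate_bps: int = 10_000_000,
                    throttled_rate_bps: int = 300,
                    trigger: float = 0.5) -> int:
    """Hypothetical policy: throttle to modem speed once the sampled share
    of spam on a connection reaches the trigger; otherwise leave it alone."""
    if sample_spam_fraction >= trigger:
        return throttled_rate_bps
    return full_rate_bps

def messages_per_window(rate_bps: int, message_bytes: int, window_s: int) -> int:
    """Whole messages that fit through the link in window_s seconds
    (integer arithmetic avoids floating-point rounding at the boundary)."""
    return (window_s * rate_bps) // (message_bytes * 8)
```

At 300 bits per second only 27 such messages get through in an hour, versus 900,000 at an assumed 10 Mbit/s, so a campaign that needs roughly one response per million messages would need tens of thousands of hours to get its first hit.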

There are two main tricks to this technology: tuning the TCP/IP traffic shaping and tuning the sampling process. The latter needs regular updates about what spam currently looks like, so that it can identify a “spammy connection” as quickly as possible. These updates can be derived from services that constantly identify new spam. The traffic shaping is tuned through an application control panel. Network administrators have found that a properly tuned antispam router can reduce bandwidth requirements by as much as 75 percent. Unfortunately, an antispam router works best on a high-volume connection, protecting MTAs at ISPs, larger companies, schools, and government agencies; there is no desktop version. Still, the technology has become a valuable part of the antispam arsenal, although the scourge of spam continues.

20.4.7 E-mail Authentication.

On a technical level, what allows spam to continue to proliferate is the lack of sender authentication in the SMTP protocol. Several initiatives have sought to change this situation, either by changing the SMTP protocol or by adding another layer to it. One obvious way to authenticate e-mail is via the sender's domain name, and numerous schemes to accomplish this have been proposed:

- Sender Policy Framework (SPF). An extension to SMTP that allows software to identify and reject forged addresses in the SMTP MAIL FROM (Return-Path) that are typically indicative of spam. SPF is defined in Experimental RFC 4408.13

- Certified Server Validation (CSV). A technical method of e-mail authentication that focuses on the SMTP HELO-identity of MTAs.

- Sender ID. An antispoofing proposal from the former MARID IETF working group that merged Sender Policy Framework and Caller ID. Sender ID is defined primarily in Experimental RFC 4406.14

- DomainKeys. A method for e-mail authentication that provides end-to-end integrity from a signing MTA to a verifying MTA acting on behalf of the recipient. It uses a signature header verified by retrieving and validating the sender's public key through the Domain Name System (DNS).

- DomainKeys Identified Mail (DKIM). A specification that merges DomainKeys and Identified Internet Mail, an e-mail authentication scheme supported by Cisco, to create an enhanced implementation.
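To illustrate the SPF idea, the Python sketch below evaluates a deliberately simplified SPF record against the IP address of the connecting server. In practice the record is fetched from a DNS TXT record of the domain in the MAIL FROM address; here it is passed in as a string. Only the `ip4` and `all` mechanisms and the four qualifiers are handled, and the example record in the usage note uses documentation address ranges and is hypothetical.

```python
import ipaddress

# SPF qualifiers and the result each produces when its mechanism matches
QUALIFIERS = {"+": "pass", "-": "fail", "~": "softfail", "?": "neutral"}

def check_spf(record: str, client_ip: str) -> str:
    """Evaluate a *simplified* SPF record against the connecting IPv4 address.
    A real checker must also handle a, mx, include, redirect, IPv6,
    DNS lookup limits, and error results; other mechanisms are skipped here."""
    terms = record.split()
    if not terms or terms[0] != "v=spf1":
        return "none"                  # not an SPF record
    ip = ipaddress.IPv4Address(client_ip)
    for term in terms[1:]:
        qualifier = QUALIFIERS.get(term[0])
        if qualifier:
            term = term[1:]
        else:
            qualifier = "pass"         # "+" is the default qualifier
        if term == "all":
            return qualifier
        if term.startswith("ip4:") and ip in ipaddress.IPv4Network(term[4:], strict=False):
            return qualifier
    return "neutral"                   # no mechanism matched
```

With the record `v=spf1 ip4:192.0.2.0/24 -all`, a connection from 192.0.2.25 passes, while one from 203.0.113.9 fails before any message data has been transferred, which is the early-rejection property noted below.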

When properly implemented, all of these methods can be effective in stopping the kind of forgery that has enabled spam to dominate e-mail. Each has its own advantages, disadvantages, and requirements. SPF, CSV, and Sender ID authenticate just a domain name. SPF and CSV can reject forgeries before message data is transferred. DomainKeys and DKIM use a digital signature to authenticate both a domain name and the integrity of message content. For Sender ID and DomainKeys to work, they must process the message headers, so the message must be transmitted first.
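For DKIM, the first step a verifier takes is to find the signer's public key. The `DKIM-Signature` header carries the signing domain in its `d=` tag and a selector in its `s=` tag, and the key is published as a DNS TXT record at `<selector>._domainkey.<domain>`. The Python sketch below shows only that lookup-name step; canonicalization, body hashing, and signature verification are omitted, and the function names and the header value used in testing are hypothetical.

```python
def parse_tag_list(header_value: str) -> dict[str, str]:
    """Split a DKIM-Signature tag list ("tag=value; tag=value") into a dict.
    Values are split on the first '=' only, since base64 data may contain '='."""
    tags = {}
    for item in header_value.split(";"):
        if "=" in item:
            name, value = item.split("=", 1)
            tags[name.strip()] = "".join(value.split())  # drop folding whitespace
    return tags

def key_query_name(header_value: str) -> str:
    """DNS TXT name where the signer publishes its public key."""
    tags = parse_tag_list(header_value)
    return f"{tags['s']}._domainkey.{tags['d']}"
```

Because the key lives in the signer's own DNS zone, a forger who does not control that zone cannot publish a key that validates a forged signature.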