CHAPTER 9

MATHEMATICAL MODELS OF COMPUTER SECURITY

Matt Bishop

9.2.1 Access-Control Matrix Model

9.2.2 Harrison, Ruzzo, and Ullman and Other Results

9.2.3 Typed Access Control Model

9.3.1 Mandatory and Discretionary Access-Control Models

9.3.2 Originator-Controlled Access-Control Model and DRM

9.3.3 Role-Based Access Control Models and Groups

9.4.2 Biba's Strict Integrity Policy Model

9.1 WHY MODELS ARE IMPORTANT.

When you drive a new car, you look for specific items that will help you control the car: the accelerator, the brake, the shift, and the steering wheel. These exist on all cars and perform the function of speeding the car up, slowing it down, and turning it left and right. This forms a model of the car. With these items properly working, you can make a convincing argument that the model correctly describes what a car must have in order to move and be steered properly.

A model in computer security serves the same purpose. It presents a general description of a computer system (or collection of systems). The model provides a definition of “protect” (e.g., “keep confidential” or “prevent unauthorized change to”) and conditions under which the protection is provided. With mathematical models, the conditions can be shown to provide the stated protection. This provides a high degree of assurance that the data and programs are protected, assuming the model is implemented correctly.

This last point is critical. To return to our car analogy, notice the phrase “with these items properly working.” This also means that the average driver must be able to work them correctly. In most, if not all, cars the model is implemented in the obvious way: The accelerator pedal is to the right of the brake pedal, and speeds the car up; the brake pedal slows it down; and turning the steering wheel moves the car to the left or right, depending on the direction that the wheel is turned. The average driver is familiar with this implementation and so can use it properly. Thus, the model and the implementation together show that this particular car can be driven.

Now, suppose that the items are implemented differently. All the items are there, but the steering wheel is locked so it cannot be turned. Even though the car has all the parts that the model requires, they do not work the way the model requires them to work. The implementation is incorrect, and the argument that the model provides does not apply to this car, because the model makes assumptions—like the steering wheel turning—that are incorrect for this car. Similarly, in all the models we present in this chapter, the reader should keep in mind the assumptions that the models make. When one applies these models to existing systems, or uses them to design new systems, one must ensure that the assumptions are met in order to gain the assurance that the model provides.

This chapter presents several mathematical models, each of which serves a different purpose. We can divide these models into several types.

The first set of models is used to determine under what conditions one can prove types of systems secure. The access-control matrix model presents the general description of a computer system that this type of model uses, and from it we derive some results about the decidability of security in general and for particular classes of systems.

The second type of model describes how the computer system applies controls. The mandatory access-control model and the discretionary access-control model form the basis for components of the models that follow. The originator-controlled access-control model ties control of data to the originator rather than the owner, and has obvious applications for digital rights management systems. The role-based access-control model uses job function, rather than identity, to provide controls and so can implement the principle of least privilege more effectively than many models.

The next few models describe confidentiality and integrity. The Bell-LaPadula model describes a class of systems designed to protect confidentiality and was one of the earliest, and most influential, models in computer security. The Biba model's strict integrity policy is closely related to the Bell-LaPadula model and is in widespread use today; it is applied to programs to determine when their output can be trusted. The Clark-Wilson model is also an integrity model, but it differs fundamentally from Biba's model because the Clark-Wilson model describes integrity in terms of processes and process management rather than in terms of attributes of the data.

The fourth type of model is the hybrid model. The Chinese Wall model examines conflicts of interest, and is an interesting mix of both confidentiality and integrity requirements. This type of model arises when many real-world problems are abstracted into mathematical representations, for example, when analyzing protections required for medical records and for the process of recordation of real estate.1

The main goal of this chapter is to provide the reader with an understanding of several of the main models in computer security, of what these models mean, and of when they are appropriate to use. An ancillary goal is to make the reader sensitive to how important assumptions in computer security are. Dorothy Denning said it clearly and succinctly in her speech when accepting the National Computer Systems Security Award in 1999:

The lesson I learned was that security models and formal methods do not establish security. They only establish security with respect to a model, which by its very nature is extremely simplistic compared to the system that is to be deployed, and is based on assumptions that represent current thinking. Even if a system is secure according to a model, the most common (and successful) attacks are the ones that violate the model's assumptions. Over the years, I have seen system after system defeated by people who thought of something new.2

Given this, the obvious question is: Why are models important? Models provide a framework for analyzing systems and for understanding where to focus our security efforts: on either validating the assumptions or ensuring that the assumptions are met in the environment in which the system exists. The mechanisms that do this may be technical; they may be procedural. Their quality determines the security of the system. So the model provides a basis for asserting that, if the mechanisms work correctly, then the system is secure—and that is far better than simply implementing security mechanisms without understanding how they work together to meet security requirements.

9.2 MODELS AND SECURITY.

Some terms recur throughout our discussion of models.

- A subject is an active entity, such as a process or a user.

- An object is a passive entity, such as a file.

- A right describes what a subject is allowed to do to an object; for example, the read right gives permission for a subject to read a file.

- The protection state of a system simply refers to the rights held by all subjects on the system.

The precise meaning of each right varies from system to system. For example, on Linux systems, if a process has write permission for a file, that process can alter the contents of the file. But if a process has write permission for a directory, that process can create, delete, or rename files in that directory. Similarly, having read rights over a process may mean the possessor can participate as a recipient of interprocess communications messages originating from that process. The point is that the meaning of the rights depends on the interpretation of the system involved. The assignment of meaning to the rights used in a mathematical model is called instantiating the model.

The first model we explore is the foundation for much work on the fundamental difficulty of analyzing systems to determine whether they are secure.

9.2.1 Access-Control Matrix Model.

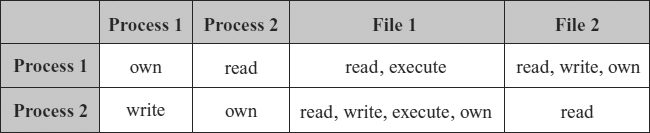

The access-control matrix model3 is perhaps the simplest model in computer security. It consists of a matrix, the rows of which correspond to subjects and the columns of which correspond to entities (subjects and objects). Each entry in the matrix contains the set of rights that the subject (row) has over the entity (column). For example, the access-control matrix in Exhibit 9.1 shows a system with two processes and two files. The first process has own rights over itself; read rights over the second process; read and execute rights over the first file; and read, write, and own rights over the second file. The second process can write to the first process; owns itself; can read, write, and execute the first file, and owns it; and can read the second file.

EXHIBIT 9.1 Example Access-Control Matrix with Two Processes and Two Files

The access-control matrix captures a protection state of a system. But systems evolve; their protection state does not remain constant. So the contents of the access-control matrix must change to reflect this evolution. Perhaps the simplest set of rules for changing the access-control matrix is this set of primitive operations:4

- Create subject s creates a new row and column, both labeled s

- Create object o creates a new column labeled o

- Enter r into A[s, o] adds the right r into the entry in row s and column o; it corresponds to giving the subject s the right r over the entity o

- Delete r from A[s, o] removes the right r from the entry in row s and column o; it corresponds to deleting the subject s's right r over the entity o

- Destroy subject s removes the row and column labeled s

- Destroy object o removes the column labeled o
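The six primitive operations above can be sketched as methods on a small Python class. This is a minimal illustration, not part of the model itself: the class name AccessControlMatrix, the method names, and the choice to store only non-empty entries in a dictionary keyed by (subject, entity) are all assumptions made for the example.

```python
class AccessControlMatrix:
    """Hypothetical sketch of the six primitive operations."""

    def __init__(self):
        self.subjects = set()
        self.objects = set()
        self.cells = {}  # (subject, entity) -> set of rights

    def entities(self):
        # Columns are labeled by every subject and every object.
        return self.subjects | self.objects

    def create_subject(self, s):
        # Adds a new row and a new column, both labeled s.
        if s in self.entities():
            raise ValueError(f"{s} already exists")
        self.subjects.add(s)

    def create_object(self, o):
        # Adds a new column labeled o.
        if o in self.entities():
            raise ValueError(f"{o} already exists")
        self.objects.add(o)

    def enter(self, r, s, o):
        # Enter r into A[s, o]; s must label a row and o a column.
        if s not in self.subjects or o not in self.entities():
            raise KeyError((s, o))
        self.cells.setdefault((s, o), set()).add(r)

    def delete(self, r, s, o):
        # Delete r from A[s, o]; deleting an absent right changes nothing.
        self.cells.get((s, o), set()).discard(r)

    def destroy_subject(self, s):
        # Removes row s and column s.
        self.subjects.discard(s)
        self.cells = {k: v for k, v in self.cells.items() if s not in k}

    def destroy_object(self, o):
        # Removes column o.
        self.objects.discard(o)
        self.cells = {k: v for k, v in self.cells.items() if k[1] != o}

    def rights(self, s, o):
        return frozenset(self.cells.get((s, o), ()))
```

For example, after `m.create_subject("p")`, `m.create_object("f")`, and `m.enter("read", "p", "f")`, the call `m.rights("p", "f")` returns the set containing read.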

These operations can be combined into commands. The next command creates a file f and gives the process p read and own rights over that file:

    command createread(p, f)
        create object f
        enter read into A[p, f]
        enter own into A[p, f]
    end.

A mono-operational command consists of a single primitive operation. For example, the command

    command grantwrite(p, f)
        enter write into A[p, f]
    end.

which gives p write rights over f, is mono-operational.

Commands may include conditions. For example, the next command gives the subject p execute rights over a file f if p has read rights over f:

    command grantexec(p, f)
        if read in A[p, f]
        then enter execute into A[p, f]
    end.

If p does not have read rights over f when this command is executed, it does nothing. This command has one condition and so is called monoconditional. Biconditional commands have two conditions joined by the keyword and:

    command copyread(p, q, f)
        if read in A[p, f] and own in A[p, f]
        then enter read into A[q, f]
    end.

This command gives a subject q read rights over the object f if the subject p owns f and has read rights over f.

Commands may have conditions only at the beginning, and if any condition is false, the command terminates without performing any operation. Commands may contain other commands as well as primitive operations.
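The four example commands can be sketched as Python functions. This assumes the matrix is a plain dictionary A that maps (row, column) pairs to sets of rights; the helper names has and enter are hypothetical. Each function tests all of its conditions first and performs operations only if they hold, mirroring the rule that conditions appear only at the beginning of a command.

```python
# Hypothetical representation: A maps (row, column) to the set of rights there.
A = {}
objects = set()

def has(r, s, o):
    return r in A.get((s, o), set())

def enter(r, s, o):
    A.setdefault((s, o), set()).add(r)

def createread(p, f):
    # No conditions: create object f, then give p read and own rights over it.
    if f in objects:
        raise ValueError(f"object {f} already exists")
    objects.add(f)
    enter("read", p, f)
    enter("own", p, f)

def grantwrite(p, f):
    # Mono-operational: exactly one primitive operation.
    enter("write", p, f)

def grantexec(p, f):
    # Monoconditional: does nothing unless read is in A[p, f].
    if has("read", p, f):
        enter("execute", p, f)

def copyread(p, q, f):
    # Biconditional: both conditions must hold before the operation runs.
    if has("read", p, f) and has("own", p, f):
        enter("read", q, f)

createread("p", "f")
grantexec("q", "f")      # q has no read right over f, so nothing happens
copyread("p", "q", "f")  # p reads and owns f, so q is given read
grantexec("q", "f")      # now q's read right lets it gain execute
```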

If all commands in a system are mono-operational, the system is said to be mono-operational; if all the commands are monoconditional or biconditional, then the system is said to be monoconditional or biconditional, respectively. Finally, if the system has no commands that use the delete or destroy primitive operations, the system is said to be monotonic.

The access-control matrix provides a theoretical basis for two widely used security mechanisms: access-control lists and capability lists. In the realm of modeling, it provides a tool to analyze the difficulty of determining how secure a system is.
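The connection to the two mechanisms is simple: an access-control list is one column of the matrix, and a capability list is one row. The sketch below encodes the matrix of Exhibit 9.1 as described in the text and slices it both ways. The labels "process 1", "file 1", and so on are assumptions, since the exhibit's own labels are not reproduced here.

```python
# Exhibit 9.1 as described in the text: rows are subjects, columns are entities.
MATRIX = {
    "process 1": {"process 1": {"own"}, "process 2": {"read"},
                  "file 1": {"read", "execute"},
                  "file 2": {"read", "write", "own"}},
    "process 2": {"process 1": {"write"}, "process 2": {"own"},
                  "file 1": {"read", "write", "execute", "own"},
                  "file 2": {"read"}},
}

def capability_lists(matrix):
    # A capability list is a row: everything one subject may do.
    return {s: {e: set(r) for e, r in row.items() if r}
            for s, row in matrix.items()}

def access_control_lists(matrix):
    # An access-control list is a column: who may do what to one entity.
    acls = {}
    for s, row in matrix.items():
        for e, rights in row.items():
            if rights:
                acls.setdefault(e, {})[s] = set(rights)
    return acls

print(access_control_lists(MATRIX)["file 2"])
```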

9.2.2 Harrison, Ruzzo, and Ullman and Other Results.

The question of how to test whether systems are secure is critical to understanding computer security. Define secure in the simplest possible way: A system is secure with respect to a generic right r if no sequence of commands can enter r into an entry of the access-control matrix that does not already contain r. In other words, a system is secure with respect to r if r cannot leak into a new entry in the access-control matrix. The question then becomes:

Safety Question. Is there an algorithm to determine whether a given system with initial state σ is secure with respect to a given right?

In the general case:

Theorem (Harrison, Ruzzo, and Ullman [HRU] Result).5 The safety question is undecidable.

The proof reduces the halting problem to the safety question.6 This means that, if the safety question were decidable, the halting problem would be decidable too. But the undecidability of the halting problem is well known,7 so the safety question must also be undecidable.8

This result means that one cannot develop a general algorithm for determining whether systems are secure. One can do so in limited cases, however, and the models that follow are examples of such cases. The characteristics that classes of systems must meet in order for the safety question to be decidable are not yet known fully, but for specific classes of systems, the safety question can be shown to be decidable. For example:

Theorem.9 There is an algorithm that will determine whether mono-operational systems are secure with respect to a generic right r.

But these classes are sensitive to the commands allowed:

Theorem.10 The safety question for monotonic systems is undecidable.

Limiting the set of commands to biconditional commands does not help:

Theorem.11 The safety question for biconditional monotonic systems is undecidable.

But limiting them to monoconditional commands does help:

Theorem.12 There is an algorithm that will determine whether monoconditional monotonic systems are secure with respect to a generic right r.

In fact, adding the delete primitive operation does not affect this result (although the proof is different):

Theorem.13 There is an algorithm that will determine whether monoconditional systems that do not use the destroy primitive operations are secure with respect to a generic right r.
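The HRU construction relies on create operations to let the matrix grow without bound. If a system is restricted to a fixed set of entities and to commands that only enter rights, the set of reachable protection states is finite, so an exhaustive search always terminates and does answer the safety question. The sketch below is such a breadth-first search under exactly those assumptions; the tuple encoding of states and commands, and the names run and find_leak, are hypothetical.

```python
from collections import deque
from itertools import product

# Hypothetical encoding. A state is a frozenset of (subject, entity, right)
# triples. A command is (name, params, conditions, enters): params is a list
# of (parameter, domain) pairs; conditions and enters are (right, row, column)
# triples over parameter names. Only enter operations are modeled.

def run(command, binding, state):
    """Apply one command; a false condition leaves the state unchanged."""
    _name, _params, conditions, enters = command
    if all((binding[s], binding[o], r) in state for r, s, o in conditions):
        return state | {(binding[s], binding[o], r) for r, s, o in enters}
    return state

def find_leak(initial, commands, domains, right):
    """Return a shortest command sequence that enters `right` into an entry
    that did not already contain it, or None if no such sequence exists."""
    start = frozenset(initial)
    queue = deque([(start, [])])
    seen = {start}
    while queue:
        state, trace = queue.popleft()
        for command in commands:
            name, params, _conditions, _enters = command
            pools = [domains[kind] for _param, kind in params]
            for values in product(*pools):
                binding = {p: v for (p, _kind), v in zip(params, values)}
                new_state = run(command, binding, state)
                step = trace + [(name, values)]
                if any(r == right for _s, _o, r in new_state - state):
                    return step
                if new_state not in seen:
                    seen.add(new_state)
                    queue.append((new_state, step))
    return None

COPYREAD = ("copyread", [("p", "S"), ("q", "S"), ("f", "E")],
            [("read", "p", "f"), ("own", "p", "f")],
            [("read", "q", "f")])
domains = {"S": ["p", "q"], "E": ["p", "q", "f"]}
initial = {("p", "f", "read"), ("p", "f", "own")}

print(find_leak(initial, [COPYREAD], domains, "read"))   # [('copyread', ('p', 'q', 'f'))]
print(find_leak(initial, [COPYREAD], domains, "write"))  # None
```

The system is not secure with respect to read, because copyread lets p pass read to q, but it is secure with respect to write, because no command ever enters write.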

9.2.3 Typed Access Control Model.

A variant of the access-control matrix model adds type to the entities. The typed access control matrix model, called TAM,14 associates a type with each entity and modifies the rules for matrix manipulation accordingly. This notion allows entities to be grouped into finer categories than merely subject and object, and enables a slightly different analysis than the HRU result suggests.

In TAM, a rule set is acyclic if neither an entity E nor any of its descendants can create a new entity with the same type as E. Given that definition:

Theorem.15 There is an algorithm that will determine whether acyclic, monotonic typed matrix models are secure with respect to a generic right r.

Thus, a system being acyclic and monotonic is sufficient to make the safety question decidable. But we still do not know exactly what properties are necessary to make the safety question decidable.
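The acyclicity condition is a property of a graph whose nodes are types and whose edges record which types can create which. A rule set is acyclic exactly when no type can reach itself in that graph. The sketch below checks this with a depth-first search; the creates mapping and the type names are hypothetical, and a real TAM rule set would have to be translated into such a mapping first.

```python
def is_acyclic(creates):
    """Hypothetical check: `creates` maps a type to the types its entities can create.

    Acyclic means no type can, directly or through descendants, create an
    entity of its own type, i.e., the creation graph has no cycle.
    """
    WHITE, GREY, BLACK = 0, 1, 2  # unvisited, on the current path, finished
    color = {t: WHITE for t in creates}
    for targets in creates.values():
        for t in targets:
            color.setdefault(t, WHITE)

    def visit(t):
        color[t] = GREY
        for u in creates.get(t, ()):
            if color[u] == GREY:
                return False  # u is on the current path, so u can create another u
            if color[u] == WHITE and not visit(u):
                return False
        color[t] = BLACK
        return True

    return all(visit(t) for t in list(color) if color[t] == WHITE)

print(is_acyclic({"user": {"process"}, "process": {"file"}}))  # True
print(is_acyclic({"user": {"process"}, "process": {"user"}}))  # False
```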

We now turn to models that have direct application to systems and environments and that focus on more complex definitions of “secure” and the mechanisms needed to achieve them.

9.3 MODELS AND CONTROLS.

Models of computer security focus on control: who can access files and resources, and what types of access are allowed. The next characterizations of these controls organize them by flexibility of use and by the roles of the entities controlling the access. These are essential to understanding how more sophisticated models work.

9.3.1 Mandatory and Discretionary Access-Control Models.

Some access-control methods are rule based; that is, users have no control over them. Only the system or a special user called (for example) the system security officer (SSO) can change them. The government classification system works this way. Someone without a clearance is forbidden to read TOP SECRET material, even if the person who has the document wishes to allow it. This rule is called mandatory because it must be followed, without exception. Laws in general are another example of mandatory rules: they are to be followed as written, and no one can absolve another of liability for breaking them. So is the Multics ring-based access-control mechanism, in which a process executing in a ring numbered above the upper bound of a data segment's access bracket (that is, in a less privileged ring) cannot access the segment, regardless of the access permissions. This type of access control is called a mandatory access control, or MAC. These rules base the access decision on attributes of the subject and object (and possibly other information).

Other access-control methods allow the owner of the entity to control access. For example, a person who keeps a diary decides who can read it. She need not show it to anyone, and if a friend asks to read it, she can say no. Here the owner allows access to the diary at her discretion. This type of control is called discretionary. Discretionary access control, or DAC, is the most common type of access-control mechanism on computers.

Controls can be (and often are) combined. When mandatory and discretionary controls are combined to enforce a single access-control policy, the mandatory controls are applied first. If they deny access, the system denies access and the discretionary controls need never be invoked. If the mandatory rules permit access, then the discretionary controls are consulted. If both allow the access, access is granted.
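The ordering just described (mandatory check first, discretionary check only if it passes) can be sketched for read access as follows. The numeric encoding of the four classification levels, the ACL dictionary, and the user names alice and bob are hypothetical choices for the example.

```python
LEVELS = {"UNCLASSIFIED": 0, "CONFIDENTIAL": 1, "SECRET": 2, "TOP SECRET": 3}

def mac_allows_read(clearance, classification):
    # Mandatory rule set by the SSO; users cannot change it.
    return LEVELS[classification] <= LEVELS[clearance]

def dac_allows(acl, user, right):
    # Discretionary rule: the owner decides what goes in the ACL.
    return right in acl.get(user, set())

def read_allowed(user, clearance, classification, acl):
    # Mandatory controls first; a MAC denial is final and DAC is never consulted.
    if not mac_allows_read(clearance, classification):
        return False
    return dac_allows(acl, user, "read")

acl = {"alice": {"read"}}  # set by the object's owner
print(read_allowed("alice", "SECRET", "TOP SECRET", acl))    # False: MAC denies
print(read_allowed("alice", "SECRET", "CONFIDENTIAL", acl))  # True: both allow
print(read_allowed("bob", "SECRET", "CONFIDENTIAL", acl))    # False: DAC denies
```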

9.3.2 Originator-Controlled Access-Control Model and DRM.

Other types of access controls contain elements of both mandatory and discretionary access controls. Originator-controlled access control,16 or ORCON,17 mechanisms allow the originator to determine who can access a resource or data.

Consider a large government research agency that produces a study of projected hoe-handle sales for the next year. The market for hoe handles is extremely volatile, and if the results of the study leak out prematurely, certain vendors will obtain a huge market advantage. But the study must be circulated to regulatory agencies so they can prepare appropriate regulations that will be in place when the study is released. Thus, the research agency must retain control of the study even as it circulates it among other groups.

More precisely, an originator-controlled access control satisfies two conditions. Suppose an object o is marked as ORCON for organization X. X decides to release o to subjects acting on behalf of another organization Y. Then

- The subjects to whom the copies of o are given cannot release o to subjects acting on behalf of other organizations without X's consent; and

- Any copies of o must bear these restrictions.

Consider a control that implements these requirements. In theory, mandatory access controls could solve this problem. In practice, the required rules must anticipate all the organizations to which the data will be made available. This requirement, combined with the need to have a separate rule for each possible set of objects and organizations that are to have access to the object, makes a mandatory access control that satisfies the requirements infeasible. But if the control were discretionary, each entity that received a copy of the study could grant access to its copy without permission of the originator. So originator-controlled access control is neither discretionary nor mandatory.

However, a combination of discretionary and mandatory access controls can implement this control. The mandatory access-control mechanisms forbid the owner from changing access permissions on an object o and require that every copy of that object have the same access-control permissions as are on o. The discretionary access control says that the originator can change the access-control permissions on any copy of o.
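The combination can be sketched as an object type whose copies inherit the originator and the current permissions (the mandatory part), and whose permissions only the originator may change on any copy (the discretionary part). The class and method names are hypothetical, and the sketch assumes the system enforces these rules on every copy it knows about; like any object-level mechanism, it does not control the information once it leaves such objects.

```python
class OrconObject:
    """Hypothetical sketch: ORCON built from a mandatory and a discretionary rule."""

    def __init__(self, originator, owner, readers, content):
        self.originator = originator
        self.owner = owner
        self.readers = frozenset(readers)
        self.content = content

    def copy_for(self, new_owner):
        # Mandatory rule: every copy carries the originator and the same permissions.
        return OrconObject(self.originator, new_owner, self.readers, self.content)

    def set_readers(self, requester, readers):
        # Mandatory rule: owning a copy confers no right to change its permissions.
        # Discretionary rule: the originator may change the permissions on any copy.
        if requester != self.originator:
            raise PermissionError(f"{requester} is not the originator")
        self.readers = frozenset(readers)

    def can_read(self, subject):
        return subject in self.readers

study = OrconObject("agency", "agency", {"agency", "regulator"}, "hoe-handle projections")
regulator_copy = study.copy_for("regulator")
# regulator_copy.set_readers("regulator", {"vendor"}) would raise PermissionError.
regulator_copy.set_readers("agency", {"agency", "regulator", "vendor"})
```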

As an example of the use of this model in a more popular context, record companies want to control the use of their music. Conceptually, they wish to retain control over the music after it is sold in order to prevent owners from distributing unauthorized copies to their friends. Here the originator is the record company and the protected resource is the music.

In practice, originator-controlled access controls are difficult to implement technologically. The problem is that access-control mechanisms typically control access to entities, such as files, devices, and other objects. But originator-controlled access control requires that access controls be applied to information that is contained in the entities—a far more difficult problem for which there is not yet a generally accepted mechanism.

9.3.3 Role-Based Access Control Models and Groups.

In real life, job function often dictates access permissions. The bookkeeper of an office has free access to the company's bank accounts, whereas the salespeople do not. If Anne is hired as a salesperson, she cannot access the company's funds. If she later becomes the bookkeeper, she can access those funds. So the access is conditioned not on the identity of the person but on the role that person plays.

This example illustrates role-based access control (RBAC).18 It assigns a set of roles, called the authorized roles of the subject s, to each subject s. At any time, s may assume at most one role, called the active role of s. Then

Axiom. The rule of role authorization says that the active role of s must be in the set of authorized roles of s.

This axiom restricts s to assuming those roles that it is authorized to assume. Without it, s could assume any role, and hence do anything.

Extending this idea, let the predicate canexec(s, c) be true when the subject s can execute the command c.

Axiom. The rule of role assignment says that if canexec(s, c) is true for any s and any c, then s must have an active role.

This simply says that in order to execute a command c, s must have an active role. Without such a role, it cannot execute any commands. We also want to restrict the commands that s can execute; the next axiom does this.

Axiom. The rule of transaction authorization says that if canexec(s, c) is true, then c must be in the set of commands that the active role of s is authorized to execute.

This means that every role has a set of commands that it can execute, and if c is not in the set of commands that the active role of s can execute, then s cannot execute it.
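The three axioms can be sketched as a small class: assuming a role checks role authorization, and canexec enforces role assignment and transaction authorization. The class name RBAC, the role names, and the command names record_sale and access_funds are hypothetical, loosely following the bookkeeper example.

```python
class RBAC:
    """Hypothetical sketch of the three RBAC axioms."""

    def __init__(self, authorized, trans):
        self.authorized = authorized  # subject -> set of authorized roles
        self.trans = trans            # role -> set of commands the role may run
        self.active = {}              # subject -> its single active role

    def assume(self, s, role):
        # Rule of role authorization: the active role must be an authorized role.
        if role not in self.authorized.get(s, set()):
            raise PermissionError(f"{s} is not authorized for role {role}")
        self.active[s] = role  # replaces any previous active role

    def canexec(self, s, c):
        role = self.active.get(s)
        if role is None:
            return False  # rule of role assignment: no active role, no commands
        return c in self.trans.get(role, set())  # rule of transaction authorization

rbac = RBAC({"anne": {"salesperson"}},
            {"salesperson": {"record_sale"}, "bookkeeper": {"access_funds"}})
rbac.assume("anne", "salesperson")
print(rbac.canexec("anne", "record_sale"))   # True
print(rbac.canexec("anne", "access_funds"))  # False
```

When Anne becomes the bookkeeper, only her set of authorized roles changes; the commands attached to each role stay the same.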

As an example of the power of this model, consider two common problems: containment of roles and separation of duty. Containment of roles means that a subordinate u is restricted to performing a limited set of commands that a superior s can also perform; the superior may also perform other commands. Assign role a to the superior and role b to the subordinate. Because a subject with active role a can do everything that a subject with active role b can do, we say that role a contains role b. Then, if a is an authorized role of s and a contains b, b is also an authorized role of s. Taking this further, if a subject is authorized to assume a role that contains other (subordinate) roles, it can also assume any of the subordinate roles.
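Because containment is transitive, a subject's full set of authorized roles is the closure of its directly authorized roles under the contains relation. The sketch below computes that closure; the contains mapping and the role names branch_manager, teller, and trainee are hypothetical.

```python
def authorized_roles(direct, contains):
    """Close a set of directly authorized roles under role containment.

    `contains` maps a role to the roles it directly contains (hypothetical data).
    """
    result = set()
    pending = list(direct)
    while pending:
        role = pending.pop()
        if role not in result:
            result.add(role)
            pending.extend(contains.get(role, ()))
    return result

contains = {"branch_manager": {"teller"}, "teller": {"trainee"}}
print(sorted(authorized_roles({"branch_manager"}, contains)))
# ['branch_manager', 'teller', 'trainee']
```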

Separation of duty is a requirement that multiple entities must combine their efforts to perform a task. For example, a company may require two officers to sign a check for over $50,000. The idea is that a single person may breach security, but two people are less likely to combine forces to breach security.19 One way to handle separation of duty is to require that two distinct roles complete the task and make the roles mutually exclusive. More precisely, let r be a role and meauth(r), the mutually exclusive authorization set of r, be the set of roles that a subject with authorized role r can never assume. Then separation of duty is:

Axiom. The rule of separation of duty says that if a role a is in the set meauth(b), then no subject for which a is an authorized role may have b as another authorized role.

This rule is applied to a task that requires two distinct people to complete. The task is broken down into steps that two people are to complete. Each person is assigned a separate role, and each role is in the mutually exclusive authorization set of the other. This prevents either person from completing the task; they must work together, each in their respective role, to complete it.
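The separation-of-duty rule can be checked against a subject's set of authorized roles: the set is acceptable only if no role in it appears in the mutually exclusive authorization set of another role in it. The function name and the roles first_signer and second_signer (for the two-signature check rule) are hypothetical.

```python
def satisfies_separation_of_duty(roles, meauth):
    """True if no role in `roles` is in meauth(b) for some other role b in `roles`."""
    return not any(a in roles for b in roles for a in meauth.get(b, ()))

# Each signing role is in the mutually exclusive authorization set of the other.
meauth = {"first_signer": {"second_signer"}, "second_signer": {"first_signer"}}

print(satisfies_separation_of_duty({"first_signer"}, meauth))                   # True
print(satisfies_separation_of_duty({"first_signer", "second_signer"}, meauth))  # False
```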

Roles bear a resemblance to groups, but the goals of groups and roles are different. Membership in a group is defined by essentially arbitrary rules, set by the managers of the system. Membership in a role is defined by job function and is tied to a specific set of commands that are necessary to perform that job function. Thus, a role is a type of group, but a group is broader than a role and need not be tied to any particular set of commands or functions.

9.3.4 Summary.

The four types of access controls discussed in this section have different focuses. Mandatory, discretionary, and originator-controlled access controls are data-centric, determining access based on the nature or attributes of the data. Role-based access control focuses on the subject's needs. The difference is fundamental.

The principle of least privilege20 says that subjects should have no more privileges than necessary to perform their tasks. Role-based access control, if implemented properly, does this by constraining the set of commands that a subject can execute. The other three controls do this by setting attributes on the data to control access to the data rather than by restricting commands. Mandatory access controls have the attributes set by a system security officer or other trusted process; discretionary access controls, by the owner of the object; and originator-controlled access controls, by the creator or originator of the data.

As noted, these mechanisms can be combined to make the controls easier to use and more precise in application. We now discuss several models that do so.

9.4 CLASSIC MODELS.

Three models have played an important role in the development of computer security. The Bell-LaPadula model, one of the earliest formal models in computer security, influenced the development of much computer security technology, and it is still in widespread use. Biba, its analog for integrity, now plays an important role in program analysis. The Clark-Wilson model describes many commercial practices to preserve integrity of data. We examine each of these models in this section.

9.4.1 Bell-LaPadula Model.

The Bell-LaPadula model21 is a formalization of the famous government classification system using UNCLASSIFIED, CONFIDENTIAL, SECRET, and TOP SECRET levels. We begin by using those four levels to explain the ideas underlying the model and then augment those levels to present the full model. Because the model involves multiple levels, it is an example of a multilevel security model.

The four-level version of the model assumes that the levels are ordered from lowest to highest as UNCLASSIFIED, CONFIDENTIAL, SECRET, and TOP SECRET. Objects are assigned levels based on their sensitivity. An object at a higher level is more sensitive than an object at a lower level. Subjects are assigned levels based on what objects they can access. A subject is cleared into a level, and that level is called the subject's security clearance. An object is classified at a level, and that level is called the object's security classification. The goal of the classification system is to prevent information from leaking, or flowing downward (e.g., a subject at CONFIDENTIAL should not be able to read information classified TOP SECRET).

For convenience, we write level(s) for a subject's security clearance and level(o) for an object's security classification. The name of the classification is called a label. So an object classified at TOP SECRET has the label TOP SECRET.

Suppose Tom is cleared into the SECRET level. Three documents, called Paper, Article, and Book, are classified as CONFIDENTIAL, SECRET, and TOP SECRET, respectively. As Tom's clearance is lower than Book's classification, he cannot read Book. As his clearance is equal to or greater than Article's and Paper's classification, he can read them.

Definition. The simple security property says that a subject s can read an object o if and only if level(o) ≤ level(s).

This is sometimes called the no-reads-up rule, and it is a mandatory access control.

But that is insufficient to prevent information from flowing downward. Suppose Donna is cleared into the CONFIDENTIAL level. By the simple security property, she cannot read Article because

level(Article) = SECRET > CONFIDENTIAL = level(Donna).

But Tom can read the information in Article and write it on Paper. And Donna can read Paper. Thus, SECRET information has leaked to a subject with CONFIDENTIAL clearance.

To prevent this, Tom must be prevented from writing to Paper:

Definition. The *-property says that a subject s can write an object o if and only if level(s) ≤ level(o).

This is sometimes called the no-writes-down rule, and it too is a mandatory access control. It is also known as the star property and the confinement property.

Under this rule, as level(Tom) = SECRET > level(Paper), Tom cannot write to Paper. This solves the problem.

Finally, the Bell-LaPadula model allows owners of objects to use discretionary access controls:

Definition. The discretionary security property says that a subject s can access an object o in a given way (for example, read or write it) only if the access-control matrix entry for s and o contains the corresponding right.

So, in order to determine whether Tom can read Paper, the system checks the simple security property and the discretionary security property. As both hold for Tom and Paper, Tom can read Paper. Similarly, the system checks the *-property to determine whether Tom can write to Paper. As the *-property does not hold for Tom and Paper, Tom cannot write to Paper. Note that the discretionary security property need not be checked, because the relevant mandatory access-control property (the *-property) denies access.
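The checks for Tom and Donna can be sketched with an ordered enumeration of the four levels. Python's short-circuiting `and` evaluates the mandatory property first and consults the discretionary right only if it holds. The names Level, can_read, and can_write are hypothetical, and the discretionary controls are assumed to grant both read and write.

```python
from enum import IntEnum

class Level(IntEnum):
    UNCLASSIFIED = 0
    CONFIDENTIAL = 1
    SECRET = 2
    TOP_SECRET = 3

def can_read(s_level, o_level, rights):
    # Simple security property (no reads up), then the discretionary property.
    return o_level <= s_level and "read" in rights

def can_write(s_level, o_level, rights):
    # *-property (no writes down), then the discretionary property.
    return s_level <= o_level and "write" in rights

tom, donna = Level.SECRET, Level.CONFIDENTIAL
paper, article, book = Level.CONFIDENTIAL, Level.SECRET, Level.TOP_SECRET
everything = {"read", "write"}  # assume the discretionary controls allow all access

print(can_read(tom, paper, everything))      # True
print(can_write(tom, paper, everything))     # False: would write down
print(can_read(tom, book, everything))       # False: would read up
print(can_read(donna, article, everything))  # False: would read up
```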

The basic security theorem states that, if a system starts in a secure state, and every operation obeys the three properties, then the system remains secure:

Basic Security Theorem. Let a system Σ have a secure initial state σ₀. Further, let every command in this system obey the simple security property, the *-property, and the discretionary security property. Then every state σᵢ, i ≥ 0, is also secure.

We can generalize this to an arbitrary number of levels. Let L₀, …, Lₙ be a set of security levels that are linearly ordered (i.e., L₀ < … < Lₙ). Then the simple security property, the *-property, and the discretionary security property all apply, as does the Basic Security Theorem. This allows us to have many more than the four levels described.

Now suppose Erin works for the European Department of a government agency, and Don works for the Asia Department for the same agency. Erin and Don are both cleared for SECRET. But some information Erin will see is information that Don has no need to know, and vice versa. Introducing additional security levels will not help here, because then either Don would be able to read all of the documents that Erin could, or vice versa. We need an alternate mechanism.

The alternate mechanism is an expansion of the idea of “security level.” We define a category to be a kind of information. A security compartment is a pair (level, category set) and plays the role that the security level did previously.

As an example, suppose the category for the European Department is EUR, and the category for the Asia Department is ASIA. Erin will be cleared into the compartment (SECRET, {EUR}) and Don into the compartment (SECRET, {ASIA}). Documents have security compartments as well. The paper EurDoc may be classified as (CONFIDENTIAL, {EUR}), and the paper AsiaDoc may be (SECRET, {ASIA}). The paper EurAsiaDoc contains information about both Europe and Asia, and so would be in compartment (SECRET, {EUR, ASIA}). As before, we write level(Erin) = (SECRET, {EUR}), level(EurDoc) = (CONFIDENTIAL, {EUR}), and level(EurAsiaDoc) = (SECRET, {EUR, ASIA}).

Next, we must define the analog to “greater than.” Unlike security levels, security compartments are not linearly ordered, because not every pair of compartments can be compared. For example, Don's compartment is not “greater” than Erin's, and Erin's is not “greater” than Don's. But the classification of EurAsiaDoc is clearly “greater” than the clearances of both Don and Erin.

We compare compartments using the relation dom, for “dominates.”

Definition. Let L and L′ be security levels and let C and C′ be category sets. Then (L, C) dom (L′, C′) if and only if L′ ≤ L and C′ ⊆ C.

The dom relation plays the role that “greater than or equal to” did for security levels. Continuing our example, level(Erin) = (SECRET, {EUR}) dom (CONFIDENTIAL, {EUR}) = level(EurDoc), and level(EurAsiaDoc) = (SECRET, {EUR, ASIA}) dom (SECRET, {EUR}) = level(Erin).
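As a concrete illustration, here is a minimal C sketch of dom. It assumes an encoding the text does not specify: the levels become hypothetical integers ordered UNCLASSIFIED < CONFIDENTIAL < SECRET < TOP_SECRET, and a category set becomes a bitmask (the bit names CAT_EUR and CAT_ASIA are illustrative), so that C′ ⊆ C reduces to checking that C′ has no bit outside C.

```c
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical encoding: a larger number is a more sensitive level. */
enum level { UNCLASSIFIED = 0, CONFIDENTIAL = 1, SECRET = 2, TOP_SECRET = 3 };

/* Hypothetical category bits; a category set is a bitwise OR of these. */
enum { CAT_EUR = 1 << 0, CAT_ASIA = 1 << 1 };

struct compartment {
    enum level level;
    uint32_t categories; /* bitmask of CAT_* values */
};

/* (L, C) dom (L', C') iff L' <= L and C' is a subset of C. */
bool dom(struct compartment a, struct compartment b)
{
    bool level_ok = b.level <= a.level;
    bool subset_ok = (b.categories & ~a.categories) == 0; /* no bit of C' outside C */
    return level_ok && subset_ok;
}
```

With this encoding, (SECRET, {EUR}) dominates (CONFIDENTIAL, {EUR}), while Don's and Erin's compartments are incomparable: dom returns false in both directions.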

We now reformulate the simple security property and *-property in terms of dom:

Definition. The simple security property says that a subject s can read an object o if and only if level(s) dom level(o).

Definition. The *-property says that a subject s can write to an object o if and only if level(o) dom level(s).

In our example, assume the discretionary access controls are set to allow any subject all types of access. In that case, as level(Erin) dom level(EurDoc), Erin can read EurDoc (by the simple security property) but not write EurDoc (by the *-property). Conversely, as level(EurAsiaDoc) dom level(Erin), Erin cannot read EurAsiaDoc (by the simple security property) but can write to EurAsiaDoc (by the *-property).
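The two access rules translate directly into predicates over compartments. The sketch below reuses the same hypothetical encoding (integer levels, bitmask category sets) and, as in the example, assumes the discretionary controls permit every access, so only the mandatory checks remain. The function names blp_may_read and blp_may_write are illustrative.

```c
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical encoding: a larger number is a more sensitive level. */
enum level { UNCLASSIFIED, CONFIDENTIAL, SECRET, TOP_SECRET };
enum { CAT_EUR = 1 << 0, CAT_ASIA = 1 << 1 }; /* hypothetical category bits */

struct compartment {
    enum level level;
    uint32_t categories;
};

static bool dom(struct compartment a, struct compartment b)
{
    return b.level <= a.level && (b.categories & ~a.categories) == 0;
}

/* Simple security property: s may read o iff level(s) dom level(o). */
bool blp_may_read(struct compartment s, struct compartment o)
{
    return dom(s, o);
}

/* *-property: s may write o iff level(o) dom level(s). */
bool blp_may_write(struct compartment s, struct compartment o)
{
    return dom(o, s);
}
```

Only the argument order of dom differs between the two rules; that single swap is what turns "read down" into "write up."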

A natural question is how to determine the highest security compartment in which both Erin and Don can read and the lowest in which both can write. To answer it, we must review some properties of dom.

First, note that level(s) dom level(s); that is, dom is reflexive. The relation is also antisymmetric, because if both level(s) dom level(o) and level(o) dom level(s) are true, then level(s) = level(o). It is transitive, because if level(s1) dom level(o) and level(o) dom level(s2), then level(s1) dom level(s2).

We also define the greatest lower bound (glb) of two compartments as:

Definition. Let A = (L, C) and B = (L′, C′). Then glb(A, B) = (min(L, L′), C ∩ C′).

This answers the question of which compartment is the highest in which two subjects s and s′ can both read an object: it is glb(level(s), level(s′)). For example, Don and Erin can both read objects in:

glb(level(Don), level(Erin)) = (SECRET, Ø).

This makes sense because Don cannot read an object in any compartment except those with the category set {ASIA} or the empty set, and Erin can only read objects in a compartment with the category set {EUR} or the empty set. Both are at the SECRET level, so the compartment must also be at the SECRET level.

We can define the least upper bound (lub) of two compartments analogously:

Definition. Let A = (L, C) and B = (L′, C′). Then lub(A, B) = (max(L, L′), C ∪ C′).

We can now determine the lowest security compartment into which two subjects s and s′ can write. It is lub(level(s), level(s′)). For example, Don and Erin can both write to objects in:

lub(level(Don), level(Erin)) = (SECRET, {EUR, ASIA}).

This makes sense because Don cannot write to an object in any compartment except those with ASIA in the category set, and Erin can only write to objects in a compartment with EUR in the category set. The smallest category set meeting both these requirements is {EUR, ASIA}. Both are at the SECRET level, so the compartment must also be at the SECRET level.
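Under the same hypothetical bitmask encoding, glb and lub reduce to a minimum or maximum on levels and a bitwise AND (intersection) or OR (union) on category sets. This is only a sketch; a real system would also need to validate level and category identifiers.

```c
#include <stdint.h>

/* Hypothetical encoding: a larger number is a more sensitive level. */
enum level { UNCLASSIFIED, CONFIDENTIAL, SECRET, TOP_SECRET };
enum { CAT_EUR = 1 << 0, CAT_ASIA = 1 << 1 }; /* hypothetical category bits */

struct compartment {
    enum level level;
    uint32_t categories;
};

/* glb(A, B) = (min(L, L'), C intersect C') */
struct compartment glb(struct compartment a, struct compartment b)
{
    struct compartment r;
    r.level = a.level < b.level ? a.level : b.level;
    r.categories = a.categories & b.categories;
    return r;
}

/* lub(A, B) = (max(L, L'), C union C') */
struct compartment lub(struct compartment a, struct compartment b)
{
    struct compartment r;
    r.level = a.level > b.level ? a.level : b.level;
    r.categories = a.categories | b.categories;
    return r;
}
```

For Don and Erin, glb yields SECRET with an empty category mask and lub yields SECRET with both bits set, matching the two results in the text.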

The five properties of dom (reflexive, antisymmetric, transitive, existence of a least upper bound for every pair of elements, and existence of a greatest lower bound for every pair of elements) mean that the security compartments form a mathematical structure called a lattice. This structure has useful theoretical properties and is important enough that models exhibiting it are called lattice models.
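The lattice claim can be checked mechanically for a small case. The following sketch assumes four levels and two categories (16 compartments in all, a size chosen only for illustration), enumerates every compartment, and tests reflexivity, antisymmetry, and transitivity of dom, and that glb and lub as defined above really are the greatest lower and least upper bounds. An exhaustive check like this supports the claim for that one small instance; it is not a proof for arbitrary numbers of levels and categories.

```c
#include <stdbool.h>
#include <stdint.h>

#define NLEVELS 4                        /* hypothetical: levels 0..3 */
#define NCATS   2                        /* hypothetical: two categories */
#define NCOMP   (NLEVELS * (1 << NCATS)) /* 16 compartments */

struct compartment { int level; uint32_t categories; };

static bool dom(struct compartment a, struct compartment b)
{
    return b.level <= a.level && (b.categories & ~a.categories) == 0;
}

static bool same(struct compartment a, struct compartment b)
{
    return a.level == b.level && a.categories == b.categories;
}

static struct compartment glb(struct compartment a, struct compartment b)
{
    struct compartment r = { a.level < b.level ? a.level : b.level,
                             a.categories & b.categories };
    return r;
}

static struct compartment lub(struct compartment a, struct compartment b)
{
    struct compartment r = { a.level > b.level ? a.level : b.level,
                             a.categories | b.categories };
    return r;
}

/* Exhaustively checks the five lattice properties over every compartment. */
bool check_lattice(void)
{
    struct compartment all[NCOMP];
    for (int i = 0; i < NCOMP; i++) {
        all[i].level = i >> NCATS;                               /* 0..3 */
        all[i].categories = (uint32_t)i & ((1u << NCATS) - 1);   /* 0..3 */
    }
    for (int i = 0; i < NCOMP; i++) {
        struct compartment a = all[i];
        if (!dom(a, a)) return false;                              /* reflexive */
        for (int j = 0; j < NCOMP; j++) {
            struct compartment b = all[j];
            struct compartment g = glb(a, b), u = lub(a, b);
            if (dom(a, b) && dom(b, a) && !same(a, b)) return false; /* antisymmetric */
            if (!dom(a, g) || !dom(b, g)) return false;            /* g is a lower bound */
            if (!dom(u, a) || !dom(u, b)) return false;            /* u is an upper bound */
            for (int k = 0; k < NCOMP; k++) {
                struct compartment c = all[k];
                if (dom(a, b) && dom(b, c) && !dom(a, c)) return false; /* transitive */
                if (dom(a, c) && dom(b, c) && !dom(g, c)) return false; /* g is greatest */
                if (dom(c, a) && dom(c, b) && !dom(c, u)) return false; /* u is least */
            }
        }
    }
    return true;
}
```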

When the model is implemented on a system, the developers often make some modifications. By far the most common one is to restrict writing to the current compartment or to within a limited set of compartments. This prevents confidential information from being altered by those who cannot read it. The structure of the model can also be used to implement protections against malicious programs that alter files such as system binaries. To do this, place the system binaries in a compartment that is dominated by those compartments assigned to users. Then, by the simple security property, users can read the system binaries, but by the *-property, users cannot write them. Hence, if a computer virus infects a user's programs or documents,22 it can spread within that user's compartment but not to system binaries.

The Bell-LaPadula model is the basis for several other models. We explore one of its variants that models integrity rather than confidentiality.

9.4.2 Biba's Strict Integrity Policy Model.

Biba's strict integrity policy model,23 usually called Biba's model, is the mathematical dual of the Bell-LaPadula model.

Consider the issue of trustworthiness. When a highly trustworthy process reads data from an untrusted file and acts based on that data, the process is no longer trustworthy; as the saying goes, “garbage in, garbage out.” But if a process reads data more trustworthy than the process, the trustworthiness of that process does not change. In essence, the result is only as trustworthy as the less trustworthy of the process and the data.

Define a set of integrity classes in the same way that we defined security compartments for the Bell-LaPadula model, and let i-level(s) be the integrity compartment of s. Then the preceding text says that “reads down” (a trustworthy process reading untrustworthy data) should be banned, because it reduces the trustworthiness of the process. But “reads up” is allowed, because it does not affect the trustworthiness of the process. This is exactly the opposite of the simple security property.

Definition. The simple integrity property says that a subject s can read an object o if and only if i-level(o) dom i-level(s).

This definition captures the notion of allowing “reads up” and disallowing “reads down.”

Similarly, if a trustworthy process writes data to an untrustworthy file, the trustworthiness of the file may (or may not) increase. But if an untrustworthy process writes data to a trustworthy file, the trustworthiness of that file drops. So “writes down” should be allowed and “writes up” forbidden.

Definition. The *-integrity property says that a subject s can write to an object o if and only if i-level(s) dom i-level(o).

This property blocks attempts to “write up” while allowing “writes down.”

A third property relates to execution of subprocesses. Suppose process date wants to execute the command time as a subprocess. If the integrity compartment of date dominates that of time, then any information date passes to time flows to a process that is no more trustworthy, and hence is allowed under the *-integrity property. But if the integrity compartment of time strictly dominates that of date, then passing information to time amounts to a “write up,” which violates the *-integrity property. Hence:

Definition. The execution integrity property says that a subject s can execute a subject s′ if and only if i-level(s) dom i-level(s′).
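The three Biba rules are mirror images of the Bell-LaPadula checks, which a short C sketch makes visible. It assumes a hypothetical encoding of integrity compartments as an integer level plus a category bitmask, and the level names I_LOW, I_MEDIUM, and I_HIGH are illustrative. For execution, it follows the date and time reasoning above: a process may invoke another only if its own integrity compartment dominates the invoked one, so information passed to the subprocess never flows up.

```c
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical integrity levels; a larger number means more trustworthy. */
enum ilevel { I_LOW = 0, I_MEDIUM = 1, I_HIGH = 2 };

struct iclass {
    enum ilevel level;
    uint32_t categories; /* bitmask of integrity categories */
};

/* a dom b: a's level is at least b's and a's categories include b's. */
static bool dom(struct iclass a, struct iclass b)
{
    return b.level <= a.level && (b.categories & ~a.categories) == 0;
}

/* Simple integrity property: s may read o iff i-level(o) dom i-level(s). */
bool biba_may_read(struct iclass s, struct iclass o) { return dom(o, s); }

/* *-integrity property: s may write o iff i-level(s) dom i-level(o). */
bool biba_may_write(struct iclass s, struct iclass o) { return dom(s, o); }

/* Execution: s may invoke s2 only if i-level(s) dom i-level(s2). */
bool biba_may_execute(struct iclass s, struct iclass s2) { return dom(s, s2); }
```

Compared with the Bell-LaPadula sketch, the read and write rules simply exchange the order of the arguments to dom.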

Given these three properties, one can show:

Theorem. If the simple integrity property, the *-integrity property, and the execution integrity property are enforced, and information can be transferred from object o₁ to object oₙ, then i-level(o₁) dom i-level(oₙ).

In other words, if all the rules of Biba's model are followed, the integrity of information cannot be corrupted because information can never flow from a less trustworthy object to a more trustworthy object.

This model suggests a method for analyzing programs to prevent security breaches. When the program runs, it reads data from a variety of sources: itself, the system, the network, and the user. Some of these sources are trustworthy, such as the process itself and the system. The user and the network are under the control of ordinary users (or remote users) and so are less trustworthy. So, apply Biba's model with two integrity compartments, (UNTAINTED, Ø) (this means the set of categories in the compartment is empty) and (TAINTED, Ø), where (UNTAINTED, Ø) dom (TAINTED, Ø). For notational convenience, we shall write (UNTAINTED, Ø) as UNTAINTED and (TAINTED, Ø) as TAINTED; and dom as ≥. Thus, UNTAINTED ≥ TAINTED.

The technique works with either static or dynamic analysis but is usually used for dynamic analysis. In this mode, all constants are assigned the integrity label UNTAINTED. Variables are assigned labels based on the data flows within the program. For example, in an assignment, the integrity label of the variable being assigned to is set to the integrity label of the expression assigned to it. When UNTAINTED and TAINTED variables are mixed in the expression, the integrity label of the expression is TAINTED. If a variable is assigned a value from an untrusted source, the integrity label of the variable is set to TAINTED.
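A minimal sketch of these propagation rules in C, assuming values are wrapped in a hypothetical struct that carries a label alongside the value. Constants start UNTAINTED, data from the hypothetical from_untrusted stand-in starts TAINTED, an expression that mixes labels takes the lower one, and plain struct assignment copies the label along with the value. Real taint trackers instrument the program or its runtime rather than requiring wrapper types; this only shows the label arithmetic.

```c
/* Two integrity labels; the numeric order mirrors UNTAINTED >= TAINTED. */
enum taint { TAINTED = 0, UNTAINTED = 1 };

/* A value carried together with its integrity label. */
struct labeled_long {
    long value;
    enum taint label;
};

/* Constants are always UNTAINTED. */
struct labeled_long lconst(long v)
{
    struct labeled_long r = { v, UNTAINTED };
    return r;
}

/* Hypothetical stand-in for any read from the user or the network. */
struct labeled_long from_untrusted(long v)
{
    struct labeled_long r = { v, TAINTED };
    return r;
}

/* Mixing labels in an expression: TAINTED if either operand is TAINTED. */
static enum taint mix(enum taint a, enum taint b)
{
    return a < b ? a : b;
}

/* An addition whose result label follows the mixing rule. */
struct labeled_long ladd(struct labeled_long a, struct labeled_long b)
{
    struct labeled_long r = { a.value + b.value, mix(a.label, b.label) };
    return r;
}
```

For example, assigning ladd(lconst(2), from_untrusted(40)) to a variable gives it the value 42 and the label TAINTED, because one operand came from an untrusted source.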

When data are used as (for example) parameters of system calls or library functions, the system checks that the integrity label of the variable dominates that of the parameter. If it does not, the program takes some action, such as aborting, logging a warning, or throwing an exception. This action either prevents an exploit or alerts the administrator to the attack.

For example, suppose a programmer wishes to prevent a format string attack. This attack exploits programs that let untrusted input reach the first argument of the C printing function printf. That first argument is a format string, and its contents determine how many other arguments printf expects and how it uses them. By manipulating the contents of a format string, an attacker can overwrite values of variables and corrupt the stack, causing the program to malfunction, usually to the attacker's benefit. The key step of the attack is to input an unexpected value for the format string. Here is a code fragment with the flaw:

if (fgets(buf, sizeof(buf), stdin) != NULL) printf(buf);

This reads a line of characters from the input into an array buf and immediately prints the contents of the array, using it as the format string. If the input is “xyzzy%n”, then printf writes the value 5 (the number of characters printed before the %n) to a memory location whose address it takes from the stack.24 Hence, the first parameter to printf must always have integrity class UNTAINTED.

Under this analysis technique, when the input function fgets is executed, the variable buf would be assigned the integrity label TAINTED, because the input (which is untrusted) is stored in it. Then, at the call to printf, the integrity class of buf is compared to that required for the first parameter of printf. The former is TAINTED; the latter is UNTAINTED. But we require that the variable's integrity class (TAINTED) dominate that of the parameter (UNTAINTED), and TAINTED does not dominate UNTAINTED (UNTAINTED ≥ TAINTED, and the two differ). Hence, the analysis has found a disallowed flow and acts accordingly.
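The check at the call site can be sketched as a wrapper around printf. The wrapper name checked_printf and the labeled_str type are hypothetical; the policy encoded is the one in the text: the format argument must be UNTAINTED, and on failure this sketch logs a warning and refuses to print rather than aborting.

```c
#include <stdarg.h>
#include <stdio.h>

/* Two integrity labels; the numeric order mirrors UNTAINTED >= TAINTED. */
enum taint { TAINTED = 0, UNTAINTED = 1 };

/* A string together with its integrity label. */
struct labeled_str {
    const char *text;
    enum taint label;
};

/* Integrity class required of printf's first parameter. */
#define PRINTF_FORMAT_CLASS UNTAINTED

/* Prints only if the format's label dominates the required class;
   otherwise logs a warning and returns -1 without printing anything. */
int checked_printf(const struct labeled_str *fmt, ...)
{
    if (!(fmt->label >= PRINTF_FORMAT_CLASS)) {
        fprintf(stderr, "warning: tainted format string rejected\n");
        return -1;
    }
    va_list ap;
    va_start(ap, fmt);
    int n = vprintf(fmt->text, ap); /* format is trusted at this point */
    va_end(ap);
    return n;
}
```

Under this sketch, the flawed fragment would label buf TAINTED after fgets, and passing it as the format would be refused. The usual repair is to pass a constant, UNTAINTED format such as "%s" and supply buf as an ordinary argument.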

9.4.3 Clark-Wilson Model.

Lipner25 identified five requirements for commercial integrity models:

- Users may not write their own programs to manipulate trusted data. Instead, they must use programs authorized to access that data.

- Programmers develop and test programs on nonproduction systems, using nonproduction copies of production data if necessary.

- Moving a program from nonproduction systems to production systems requires a special process.

- That special process must be controlled and audited.

- Managers and system auditors must have access to system logs and the system's current state.

Biba's model can be instantiated to meet the first and last conditions by appropriate assignment of integrity levels, but the other three focus on integrity of processes. Hence, while Biba's model works well for some problems of integrity, it does not satisfy these requirements for a commercial integrity model.

The Clark-Wilson model26 was developed to describe processes within many commercial firms. Several specialized terms and concepts are needed to understand the Clark-Wilson model; these are best introduced using an example:

- Consider a bank. If D are the day's deposits, W the day's withdrawals, I the amount of money in bank accounts at the beginning of the day, and F the amount of money in bank accounts at the end of the day, those values must satisfy the constraint I + D − W = F.

- This is called an integrity constraint because, if the system (the set of bank accounts) does not satisfy it, the bank's integrity has been violated.

- If the system does satisfy its integrity constraints, it is said to be in a consistent state.

- When in operation, the system moves from one consistent state to another. The operations that do this are called well-formed transactions. For example, if a customer transfers money from one account to another, the transfer is a well-formed transaction. Its component actions (withdrawal from the first account and deposit in the second) are not individually well-formed transactions, because if only one completes, the system will be in an inconsistent state.

- Procedures that verify that all integrity constraints are satisfied are called integrity verification procedures (IVPs).

- Data that must satisfy integrity constraints are called constrained data items (CDIs), and when they satisfy the constraints are said to be in a valid state.

- All other data are called unconstrained data items (UDIs).

- In addition to integrity constraints on the data, the functions implementing the well-formed transactions themselves are constrained. They must be certified to be well formed and to be implemented correctly. Such a function is called a transformation procedure (TP).
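The bank example can be sketched in C to show an IVP and well-formed transactions side by side. Everything here is hypothetical: the three-account bank, amounts in cents, and treating a transfer between accounts as money that stays inside the bank (so it changes neither D nor W). The transfer TP either applies both halves or, on invalid input, does nothing; applying only one half is exactly what the IVP catches.

```c
#include <stdbool.h>

#define NACCOUNTS 3 /* hypothetical bank with three accounts */

struct bank {
    long balance[NACCOUNTS]; /* current balances, in cents */
    long initial;            /* I: money in accounts at start of day */
    long deposits;           /* D: today's deposits */
    long withdrawals;        /* W: today's withdrawals */
};

/* F: money in the accounts now. */
static long total(const struct bank *b)
{
    long f = 0;
    for (int i = 0; i < NACCOUNTS; i++)
        f += b->balance[i];
    return f;
}

/* IVP: checks the integrity constraint I + D - W = F. */
bool ivp(const struct bank *b)
{
    return b->initial + b->deposits - b->withdrawals == total(b);
}

/* Transfer TP: performs both halves, or (on bad input) nothing at all.
   Money stays inside the bank, so D and W are unchanged. */
bool transfer(struct bank *b, int from, int to, long amount)
{
    if (from < 0 || from >= NACCOUNTS || to < 0 || to >= NACCOUNTS ||
        amount <= 0 || b->balance[from] < amount)
        return false;
    b->balance[from] -= amount;
    b->balance[to] += amount;
    return true;
}

/* Deposit TP: the balance and D change together. */
bool deposit(struct bank *b, int to, long amount)
{
    if (to < 0 || to >= NACCOUNTS || amount <= 0)
        return false;
    b->balance[to] += amount;
    b->deposits += amount;
    return true;
}
```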

The model provides nine rules, five of which relate to the certification of data and TPs and four of which describe how the implementation of the model must enforce the certifications.

The first rule captures the requirement that the system be in a consistent state:

Certification Rule 1. An IVP must ensure that the system is in a consistent state.

The relation certified associates some set of CDIs with a TP that transforms those CDIs from one valid state to a (possibly different) valid state. The second rule captures this.

Certification Rule 2. For some set of associated CDIs, a TP transforms those CDIs from a valid state to a (possibly different) valid state.

The first enforcement rule ensures that the system keeps track of the certified relation and prevents any TP from executing with a CDI not in its associated certified set:

Enforcement Rule 1. The system must maintain the certified relation and ensure that only TPs certified to run on a CDI manipulate that CDI.

In a typical firm, the set of users who can use a TP is restricted. For example, in a bank, a teller cannot move millions of dollars from one bank to another; doing that requires a bank officer. The second enforcement rule ensures that only authorized users can run TPs on CDIs by defining a relation allowed that associates a user, a TP, and the set of CDIs that the TP can access on that user's behalf:

Enforcement Rule 2. The system must associate a user with each TP and set of CDIs. The TP may access those CDIs on behalf of the associated user. If a user is not associated with a particular TP and set of CDIs, then the TP cannot access those CDIs on behalf of that user.

This requires that the system correctly identify users. The next rule enforces this:

Enforcement Rule 3. The system must authenticate each user attempting to execute a TP.

This ensures that the identity of a person trying to execute a TP is correctly bound to the corresponding user identity within the computer. The form of authentication is left up to the instantiation of the model, because differing environments suggest different authentication requirements. For example, a bank officer may use a biometric device and a password to authenticate herself to the computer that moves millions of dollars; a teller whose actions are restricted to smaller amounts of money may need only to supply a password.
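Enforcement Rules 1 and 2 amount to two lookups that must both succeed before a TP runs. The sketch below is a minimal illustration under stated assumptions: users, TPs, and CDIs are named by strings, the text's “set of CDIs” is flattened into one entry per CDI, and the user is assumed to have been authenticated already, as Enforcement Rule 3 requires. The type and function names are hypothetical.

```c
#include <stdbool.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical representation: one entry per (TP, CDI) or (user, TP, CDI). */
struct certified_entry { const char *tp; const char *cdi; };
struct allowed_entry   { const char *user; const char *tp; const char *cdi; };

/* ER1: is the TP certified to manipulate this CDI? */
bool is_certified(const struct certified_entry *rel, size_t n,
                  const char *tp, const char *cdi)
{
    for (size_t i = 0; i < n; i++)
        if (strcmp(rel[i].tp, tp) == 0 && strcmp(rel[i].cdi, cdi) == 0)
            return true;
    return false;
}

/* ER2: may the TP access this CDI on behalf of this user? */
bool is_allowed(const struct allowed_entry *rel, size_t n,
                const char *user, const char *tp, const char *cdi)
{
    for (size_t i = 0; i < n; i++)
        if (strcmp(rel[i].user, user) == 0 && strcmp(rel[i].tp, tp) == 0 &&
            strcmp(rel[i].cdi, cdi) == 0)
            return true;
    return false;
}

/* A request runs only if both relations permit it; the user is assumed
   to have been authenticated already (ER3). */
bool may_run(const struct certified_entry *cert, size_t ncert,
             const struct allowed_entry *allow, size_t nallow,
             const char *user, const char *tp, const char *cdi)
{
    return is_certified(cert, ncert, tp, cdi) &&
           is_allowed(allow, nallow, user, tp, cdi);
}
```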

Separation of duty, already discussed, is a key consideration in many commercial operations. The Clark-Wilson model captures it in the next rule:

Certification Rule 3. The allowed relation must meet the requirements imposed by separation of duty.

A cardinal principle of commercial integrity is that the operations must be auditable. This requires logging of enough information to determine what the transaction did. The next rule captures this requirement:

Certification Rule 4. All TPs must append enough information to reconstruct the operation to an append-only CDI.

The append-only CDI is, of course, a log.

So far we have considered all inputs to TPs to be CDIs. Unfortunately, that is infeasible. In our bank example, the teller will enter account information and deposit and withdrawal figures, but those are not CDIs, because the teller may mistype something. Before a TP can use that information, it must be vetted to ensure it will enable the TP to work correctly. The last certification rule captures this:

Certification Rule 5. A TP that takes a UDI as input must perform either a well-formed transaction or no transaction for any value of the UDI. Thus, it either rejects the UDI or transforms it into a CDI.

This also covers poorly crafted TPs; if the input can exploit vulnerabilities in the TP to cause it to act in unexpected ways, it cannot be certified under this rule.
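Certification Rule 5 can be pictured as a function that either turns a UDI into a CDI or rejects it outright. The sketch below vets a teller-entered amount; the positive-amount requirement and the MAX_TELLER_AMOUNT cap are hypothetical policy choices, and the input is assumed to have had any trailing newline removed. Any text it cannot fully parse is rejected without changing the output, so no partial transaction can result.

```c
#include <errno.h>
#include <stdbool.h>
#include <stdlib.h>

/* Hypothetical cap on a single teller entry, in cents. */
#define MAX_TELLER_AMOUNT 1000000L

/* Either validates the teller's text (a UDI) and stores the amount (now a
   CDI) in *out, returning true, or rejects it, leaving *out unchanged. */
bool udi_to_amount(const char *text, long *out)
{
    char *end;
    errno = 0;
    long v = strtol(text, &end, 10);
    if (end == text || *end != '\0' || errno == ERANGE)
        return false;            /* empty, trailing junk, or out of range */
    if (v <= 0 || v > MAX_TELLER_AMOUNT)
        return false;            /* outside the (hypothetical) policy */
    *out = v;
    return true;
}
```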

Within the model lies a possible conflict. In the preceding rules, one user could certify a TP to operate on a CDI and then execute the TP on that CDI. The problem is that a malicious user may certify a TP that does not perform a well-formed transaction, causing the system to violate the integrity constraints. Clearly, an application of the principle of separation of duty would solve this problem, and indeed the last rule in the model does just that:

Enforcement Rule 4. Only the certifier of a TP may change the certified relation for that TP. Further, no certifier of a TP, or of any CDI associated with that TP, may execute the TP on the associated CDI.

This separates the ability to certify a TP from the ability to execute that TP and the ability to certify a CDI for a given TP from the ability to execute that TP on that CDI. This enforces the separation of duty requirement.
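A sketch of Enforcement Rule 4 as two predicates, assuming a hypothetical record that names who certified a TP and who certified its associated CDI. It checks only the separation-of-duty conditions; in a full system these checks would be combined with the certified and allowed relations.

```c
#include <stdbool.h>
#include <string.h>

/* Hypothetical record of who certified a TP and its associated CDI. */
struct certification {
    const char *tp;
    const char *tp_certifier;
    const char *cdi;
    const char *cdi_certifier;
};

/* ER4, first part: only the TP's certifier may change its certified relation. */
bool er4_may_change_certification(const struct certification *c, const char *user)
{
    return strcmp(user, c->tp_certifier) == 0;
}

/* ER4, second part: no certifier of the TP or of the CDI may execute
   the TP on that CDI. */
bool er4_may_execute(const struct certification *c, const char *user)
{
    return strcmp(user, c->tp_certifier) != 0 &&
           strcmp(user, c->cdi_certifier) != 0;
}
```

With a hypothetical record in which alice certified the TP and bob certified the CDI, only alice may change the certification, and neither alice nor bob may run the TP on that CDI; a third user such as carol may.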

Now, revisit Lipner's requirements for commercial integrity models. The TPs correspond to Lipner's programs and the CDIs to the production data. To meet requirement 1, the Clark-Wilson certifiers must be trusted, and ordinary users must not be able to certify either TPs or CDIs; Enforcement Rule 4 and Certification Rule 5 then enforce this requirement. Requirement 2 is met by not certifying the development programs; as they are not TPs, they cannot be run on production data. The “special process” in requirement 3 is a TP. Because it is a TP, Certification Rule 4 requires it to append information to the log, which can be audited. Further, the TP is by definition a controlled process, and Enforcement Rule 4 and Certification Rule 5 control its execution. Before the installation, the program being installed is a UDI; after it is installed, it is a CDI (and a TP). Thus, requirement 4 is satisfied. Finally, the Clark-Wilson model has a log that captures all aspects of what a TP does, and that is the log the managers and auditors will have access to. They also have access to the system state because they can run an IVP to check its integrity. Thus, Lipner's requirement 5 is met. So the Clark-Wilson model is indeed a satisfactory commercial integrity model.

This model is important for two reasons. First, it captures the way most commercial firms work, including applying separation of duty (something that Biba's model does not capture well). Second, it separates the notions of certification and enforcement. Enforcement typically can be done within the instantiation of the model. But the model cannot enforce how certification is done; it can only require that a certifier claim to have done it. This is true of all models, of course, but the Clark-Wilson model specifically states the assumptions it makes about certification.

9.4.4 Chinese Wall Model.

The Chinese Wall model,27 sometimes called the Brewer-Nash model, aims to prevent conflicts of interest. It does so by grouping objects that belong to the same company into company data sets and company data sets into conflict-of-interest classes. If two companies (represented by their associated company data sets) are in the same conflict-of-interest class, then a lawyer or stockbroker representing both would have a conflict of interest. The rules of the model ensure that a subject can read only one company data set in each conflict-of-interest class.

In general, objects are documents or resources that contain information that the company wishes to (or is required to) keep secret. There is, however, an exception. Companies release some data publicly, for example in annual reports; that information is carefully sanitized to remove all confidential content. To reflect business practice, the model must allow all subjects to see that data. The model therefore defines a conflict-of-interest class called the sanitized class that has one company data set holding only objects containing sanitized data.

Now consider a subject reading an object. If the subject has never read any object in the object's conflict-of-interest class, reading the object presents no conflict of interest. If the subject has read an object in the same company data set, then the only information that the subject has seen in that conflict-of-interest class is from the same company as the object it is trying to read, which is allowed. But if the subject has read an object in the same conflict-of-interest class but a different company data set, then were the new read request granted, the subject would have read information from two different companies for which there is a conflict of interest—exactly what the model is trying to prevent. So that is disallowed.

The next rule summarizes this:

Definition. The CW-simple security property says that a subject s can read an object o if and only if either:

- s has not read any other object in o's conflict-of-interest class; or

- all objects in o's conflict-of-interest class that s has read are in o's company data set.

To see why this works, suppose all banks are in the same conflict-of-interest class. A stockbroker represents The Big Bank. She is approached to represent The Bigger Bank. If she agreed, she would need access to The Bigger Bank's information, specifically the objects in The Bigger Bank's company data set. But that would mean she could read objects from two company data sets in the same conflict-of-interest class, something the CW-simple security property forbids. The temporal element of the model is important: even if she resigns her representation of The Big Bank, she still cannot represent The Bigger Bank, because condition 2 of the CW-simple security property considers all objects she previously read. This makes sense, because she has had access to The Big Bank's information and could unintentionally compromise the interests of her previous client while representing The Bigger Bank.

The CW-simple security property implicitly says that s can read any sanitized object. To see this, note that if s has never read a sanitized object, condition 1 holds. If s has read a sanitized object, then condition 2 holds because all sanitized objects are in the same company data set.
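The CW-simple security property depends only on what the subject has already read, so a sketch needs just that history. Here each object is labeled with an integer company data set and an integer conflict-of-interest class, both hypothetical encodings. Because the history only grows, a read that is refused now stays refused, which is the temporal behavior described for the stockbroker. The sanitized class needs no special case, because it contains a single company data set.

```c
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical labels: every object records its company data set and its
   conflict-of-interest class, each as an integer id. */
struct cw_object {
    int company;
    int coi_class;
};

/* CW-simple security property; history holds the n objects s has read. */
bool cw_can_read(const struct cw_object *history, size_t n,
                 struct cw_object o)
{
    for (size_t i = 0; i < n; i++) {
        /* An earlier read from a different company in o's class conflicts. */
        if (history[i].coi_class == o.coi_class &&
            history[i].company != o.company)
            return false;
    }
    /* Either s read nothing in o's class (condition 1) or only
       objects in o's company data set (condition 2). */
    return true;
}
```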

Writing poses another problem. Suppose Barbara represents The Big Bank, and Percival works for The Bigger Bank. Both also represent The Biggest Toy Company, which—not being a financial institution—is in a different conflict-of-interest class from either bank. Thus, there is no conflict of interest in either Barbara's or Percival's representation of a bank and the toy company. But there is a path along which information can flow from Barbara to Percival, and vice versa, that enables a conflict of interest to occur. Barbara can read information from an object in The Big Bank's company data set and write it to an object in The Biggest Toy Company's company data set. Percival can read the information from the object in The Biggest Toy Company's company data set, thereby effectively giving him access to The Big Bank's information—which is a conflict of interest. That he needs Barbara's help does not detract from the problem. The goal of the model requires that this conspiracy be prevented. The next rule does so:

Definition. The CW-*-property says that a subject s can write to an object o if and only if both of the following conditions are met:

- The CW-simple security property allows s to read o; and

- All unsanitized objects that s can read are in the same company data set as o.

Now Barbara can read objects in both The Big Bank's company data set and The Biggest Toy Company's data set. But when she tries to write to The Biggest Toy Company's data set, the CW-*-property prevents her from doing so as condition 2 is not met (because she can read an object in The Big Bank's company data set).

This also accounts for sanitized objects. Suppose that Skyler represents The Biggest Toy Company and no other company. He can also read information from the sanitized class. When he tries to write to an object in The Biggest Toy Company's company data set, the CW-simple security property allows him to read that object (because he has read only objects in that company data set and the sanitized class), and all unsanitized objects that he can read are in the same company data set as the object he wants to write. Thus both conditions of the CW-*-property are met, so Skyler can write the object.

The conditions of the CW-*-property are very restrictive; effectively, a subject can write to an object only if it has access to the company data set containing that object, and no other company data set except the company data set in the sanitized class. But without this restriction, conflicts of interest are possible.
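The CW-*-property adds a second check on top of the read rule. The sketch below makes one interpretive assumption, consistent with the Skyler example: “all unsanitized objects that s can read” is approximated by the unsanitized objects s has already read. SANITIZED_CLASS is a hypothetical identifier for the sanitized conflict-of-interest class, and the integer labels are the same hypothetical encoding as for the read rule.

```c
#include <stdbool.h>
#include <stddef.h>

#define SANITIZED_CLASS 0 /* hypothetical id of the sanitized class */

struct cw_object { int company; int coi_class; };

/* CW-simple security property over the subject's read history. */
static bool cw_can_read(const struct cw_object *history, size_t n,
                        struct cw_object o)
{
    for (size_t i = 0; i < n; i++)
        if (history[i].coi_class == o.coi_class &&
            history[i].company != o.company)
            return false;
    return true;
}

/* CW-*-property, with "can read" approximated by "has read". */
bool cw_can_write(const struct cw_object *history, size_t n,
                  struct cw_object o)
{
    if (!cw_can_read(history, n, o))
        return false;                      /* condition 1 fails */
    for (size_t i = 0; i < n; i++) {
        if (history[i].coi_class != SANITIZED_CLASS &&
            history[i].company != o.company)
            return false;                  /* condition 2 fails */
    }
    return true;
}
```

With these definitions, Barbara's history (one Big Bank object and one toy-company object) blocks her write to the toy company's data set, while Skyler's history (toy-company and sanitized objects only) permits it.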

9.4.5 Summary.

The four models discussed in this section have played critical roles in the development of our understanding of computer security. Although it is not the first model of confidentiality, the Bell-LaPadula model describes a widely used security scheme. The Biba model captured notions of “trust” and “trustworthiness” in an intuitive way, and recent advances in the analysis of programs for vulnerabilities have applied that model to great effect. The Clark-Wilson model moved the notion of commercial integrity models away from multilevel models to models that examine process integrity as well as data integrity. The Chinese Wall model explored conflict of interest, an area that often arises when one is performing confidential services for multiple companies or has access to confidential information from a number of companies. These models are considered classic because their structure and ideas underlie the rules and structures of many other models.

9.5 OTHER MODELS.

Some models examine specific environments. The Clinical Information Systems Security model28 considers the protection of health records, emphasizing accountability as well as confidentiality and integrity. Traducement29 describes the process of real estate recordation, which requires a strict definition of integrity and accountability with little to no confidentiality.

Other models generalize the classic models. The best known are the models of noninterference security and deducibility security. Both are multilevel security models with two levels, HIGH and LOW. The noninterference model30 defines security in terms of whether a HIGH subject can interfere with what a LOW subject sees; the system is secure if it cannot. For example, if a HIGH subject can prevent a LOW subject from acquiring a resource at a particular time, the HIGH subject can transmit information to the LOW subject. In essence, the interference is a form of writing, and must be prevented just as the Bell-LaPadula model prevents a HIGH subject from writing to a LOW object. The deducibility model31 examines whether a LOW subject can infer anything about a HIGH subject's actions by examining only the LOW outputs. Both these models are useful in analyzing the security of systems32 and intrusion detection mechanisms,33 and led to work that showed connecting two secure computer systems may produce a nonsecure system.34 Further work is focusing on establishing conditions under which connecting two secure systems produces a secure system.35
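The idea behind noninterference can be illustrated with a toy check in C: run a system on a trace of inputs, run it again with every HIGH input purged, and compare what LOW observes. Both toy systems, the event structure, and MAX_TRACE are hypothetical. The second system models the resource example in the text, where HIGH holding a resource changes how many of LOW's requests succeed. A single trace can only reveal interference; noninterference itself requires the outputs to agree for every possible input sequence.

```c
#include <stdbool.h>
#include <stddef.h>

#define MAX_TRACE 32 /* hypothetical bound on trace length for this sketch */

/* One input event: who issued it and a small payload. */
struct event {
    bool high;
    int value;
};

/* A system, seen only through what LOW observes after a trace. */
typedef int (*low_view_fn)(const struct event *trace, size_t n);

/* Toy system 1: LOW sees the sum of LOW inputs only. */
int low_sum(const struct event *t, size_t n)
{
    int sum = 0;
    for (size_t i = 0; i < n; i++)
        if (!t[i].high)
            sum += t[i].value;
    return sum;
}

/* Toy system 2: a HIGH event with a nonzero value takes a shared resource
   (zero releases it); LOW sees how many of its requests got the resource. */
int low_acquisitions(const struct event *t, size_t n)
{
    bool held_by_high = false;
    int granted = 0;
    for (size_t i = 0; i < n; i++) {
        if (t[i].high)
            held_by_high = (t[i].value != 0);
        else if (!held_by_high)
            granted++;
    }
    return granted;
}

/* Compares LOW's view of a trace with its view of the same trace with
   every HIGH event purged. True means no interference on this trace. */
bool noninterfering_on(low_view_fn view, const struct event *t, size_t n)
{
    struct event purged[MAX_TRACE];
    size_t m = 0;
    if (n > MAX_TRACE)
        return false; /* beyond this sketch's bound; reported as a failure */
    for (size_t i = 0; i < n; i++)
        if (!t[i].high)
            purged[m++] = t[i];
    return view(t, n) == view(purged, m);
}
```

On the trace LOW, HIGH takes the resource, LOW, HIGH releases it, LOW, the first system gives LOW the same answer with or without the HIGH events, but the second grants LOW two requests instead of three, so HIGH has interfered.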

9.6 CONCLUSION.

The efficacy of mathematical modeling depends on the application of those models. Typically, the models capture system-specific details and describe constraints ensuring the security of the system or the information on the system. If the model does not correctly capture the details of the entire system, the results may not be comprehensive, and the analysis may miss ways in which security could be compromised.

This is an important point. For example, the Bell-LaPadula model captures a notion of what the system must do to prevent a subject cleared for TOP SECRET from leaking information to a subject cleared for CONFIDENTIAL. But even if the system enforces that model, the TOP SECRET subject could still meet the CONFIDENTIAL subject and hand her a printed version of the TOP SECRET information. That is outside the system and so was not captured by the model. But if the model also embraces procedures, then a procedure is necessary to prevent this “writing down.” In that case, the flaw would be in the implementation of the procedure that failed to prevent the transfer of information; in other words, an incorrect instantiation of the model, exactly what Dorothy Denning's comment in the introduction to this section referred to.

The models described in this section span the foundational (access-control matrix model) to the applied (Bell-LaPadula, Biba, Clark-Wilson, and Chinese Wall). All play a role in deepening our understanding of what security is and how to enforce it.

Mathematical modeling is a rich and important area. It provides a basis for demonstrating that the design of systems is secure, for specific definitions of secure. Without these models, our understanding of how to secure systems would be diminished.

9.7 FURTHER READING

Anderson, R. “A Security Policy Model for Clinical Information Systems,” Proceedings of the 1996 IEEE Symposium on Security and Privacy (May 1996): 34–48.

Bell, D., and L. LaPadula. “Secure Computer Systems: Unified Exposition and Multics Interpretation,” Technical Report MTR-2997 rev. 1, MITRE Corporation, Bedford, MA (March 1975).

Biba, K. “Integrity Considerations for Secure Computer Systems,” Technical Report MTR-3153, MITRE Corporation, Bedford, MA (April 1977).

Bishop, M. Computer Security: Art and Science. Boston: Addison-Wesley Professional, 2002.

Brewer, D., and M. Nash. “The Chinese Wall Security Policy,” Proceedings of the 1989 IEEE Symposium on Security and Privacy (May 1989): 206–212.

Clark, D., and D. Wilson. “A Comparison of Commercial and Military Security Policies,” Proceedings of the 1987 IEEE Symposium on Security and Privacy (April 1987): 184–194.

DeMillo, R., D. Dobkin, A. Jones, and R. Lipton, eds. Foundations of Secure Computation. New York: Academic Press, 1978.

Denning, D. “The Limits of Formal Security Models,” National Information Systems Security Conference, October 18, 1999; available at www.cs.georgetown.edu/~denning/infosec/award.html.

Denning, P. “Third Generation Computer Systems,” Computing Surveys 3, No. 4 (December 1971): 175–216.

Engeler, E. Introduction to the Theory of Computation. New York: Academic Press, 1973.

Ferraiolo, D., J. Cugini, and D. Kuhn. “Role-Based Access Control (RBAC): Features and Motivations,” Proceedings of the Eleventh Annual Computer Security Applications Conference (December 1995): 241–248.

Goguen, J., and J. Meseguer. “Security Policies and Security Models,” Proceedings of the 1982 IEEE Symposium on Privacy and Security (April 1982): 11–20.

Graubert, R. “On the Need for a Third Form of Access Control,” Proceedings of the Twelfth National Computer Security Conference (October 1989): 296–304.

Haigh, J., R. Kemmerer, J. McHugh, and W. Young. “An Experience Using Two Covert Channel Analysis Techniques on a Real System Design,” IEEE Transactions on Software Engineering 13, No. 2 (February 1987): 141–150.

Harrison, M., and W. Ruzzo. “Monotonic Protection Systems.” In R. DeMillo et al., eds., Foundations of Secure Computation, pp. 337–363. New York: Academic Press, 1978.

Harrison, M., W. Ruzzo, and J. Ullman. “Protection in Operating Systems,” Communications of the ACM 19, No. 8 (August 1976): 461–471.

Ko, C., and T. Redmond. “Noninterference and Intrusion Detection,” Proceedings of the 2002 IEEE Symposium on Security and Privacy (May 2002): 177–187.

Lampson, B. “Protection.” Proceedings of the Fifth Princeton Symposium of Information Science and Systems (March 1971): 437–443.

Lipner, S. “Non-Discretionary Controls for Commercial Applications,” Proceedings of the 1982 IEEE Symposium on Security and Privacy (April 1982): 2–10.

Mantel, H. “On the Composition of Secure Systems,” Proceedings of the 2002 IEEE Symposium on Security and Privacy (May 2002): 88–101.

McCullough, D. “Non-Interference and the Composability of Security Properties,” Proceedings of the 1988 IEEE Symposium on Security and Privacy (April 1988): 177–186.

Sandhu, R. “The Typed Access Matrix Model,” Proceedings of the 1992 IEEE Symposium on Security and Privacy (April 1992): 122–136.

Seacord, R. Secure Coding in C and C++. Boston: Addison-Wesley, 2005.

Walcott, T., and M. Bishop. “Traducement: A Model for Record Security,” ACM Transactions on Information and System Security 7, No. 4 (November 2004): 576–590.

9.8 NOTES

1. Recordation of real estate refers to recording deeds, mortgages, and other information about property with the county recorder. See http://ag.ca.gov/erds1/index.php.

2. D. Denning, “The Limits of Formal Security Models,” National Information Systems Security Conference, October 18, 1999; available at www.cs.georgetown.edu/~denning/infosec/award.html.

3. B. Lampson, “Protection,” Proceedings of the Fifth Princeton Symposium of Information Science and Systems (March 1971): 437–443; P. Denning, “Third Generation Computer Systems,” Computing Surveys 3, No. 4 (December 1971): 175–216.

4. M. Harrison, W. Ruzzo, and J. Ullman, “Protection in Operating Systems,” Communications of the ACM 19, No. 8 (August 1976): 461–471.

5. Harrison et al., “Protection in Operating Systems.”

6. The halting problem is the question “Is there an algorithm to determine whether any arbitrary program halts?” The answer, “No,” was proved by Alan Turing in 1936. See www.nist.gov/dads/HTML/haltingProblem.html. See also E. Engeler, Introduction to the Theory of Computation (New York: Academic Press, 1973).

7. E. Engeler, Introduction to the Theory of Computation (New York: Academic Press, 1973).

8. The interested reader is referred to Harrison et al., “Protection in Operating Systems,” or to M. Bishop, Computer Security: Art and Science (Boston: Addison-Wesley Professional, 2002), p. 47 ff., for the proof.

9. Harrison et al., “Protection in Operating Systems.”

10. M. Harrison and W. Ruzzo, “Monotonic Protection Systems,” in R. DeMillo et al., eds., Foundations of Secure Computation, pp. 337–363 (New York: Academic Press, 1978).

11. Harrison and Ruzzo, “Monotonic Protection Systems.”

12. Harrison and Ruzzo, “Monotonic Protection Systems.”

13. Harrison and Ruzzo, “Monotonic Protection Systems.”

14. R. Sandhu, “The Typed Access Matrix Model,” Proceedings of the 1992 IEEE Symposium on Security and Privacy (April 1992): 122–136.

15. Sandhu, “The Typed Access Matrix Model.”

16. Also sometimes called organization-controlled access control, or ORGCON.

17. R. Graubert, “On the Need for a Third Form of Access Control,” Proceedings of the Twelfth National Computer Security Conference (October 1989): 296–304.

18. D. Ferraiolo, J. Cugini, and D. Kuhn, “Role-Based Access Control (RBAC): Features and Motivations,” Proceedings of the Eleventh Annual Computer Security Applications Conference (December 1995): 241–248.

19. As Benjamin Franklin once said, “Three can keep a secret if two of them are dead.”

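20. J. Saltzer and M. Schroeder, “The Protection of Information in Computer Systems,” Proceedings of the IEEE 63, No. 9 (September 1975): 1278–1308.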

21. D. Bell and L. LaPadula, “Secure Computer Systems: Unified Exposition and Multics Interpretation,” Technical Report MTR-2997 rev. 1, MITRE Corporation, Bedford, MA (March 1975).

22. A macro virus can infect a document. See, for example, Bishop, Computer Security, Section 22.3.8, and Chapter 16 in this Handbook.

23. Biba proposed three models: the Low-Water-Mark Policy model, the Ring Policy model, and the Strict Integrity Policy model. See Bishop, Computer Security, Section 6.2.

24. The explanation is too complex to go into here. The interested reader is referred to R. Seacord, Secure Coding in C and C++ (Boston: Addison-Wesley, 2005), Chapter 6, for a discussion of this problem.

25. S. Lipner, “Non-Discretionary Controls for Commercial Applications,” Proceedings of the 1982 IEEE Symposium on Security and Privacy (April 1982): 2–10.

26. D. Clark and D. Wilson, “A Comparison of Commercial and Military Security Policies,” Proceedings of the 1987 IEEE Symposium on Security and Privacy (April 1987): 184–194.

27. D. Brewer and M. Nash, “The Chinese Wall Security Policy,” Proceedings of the 1989 IEEE Symposium on Security and Privacy (May 1989): 206–212.

28. R. Anderson, “A Security Policy Model for Clinical Information Systems,” Proceedings of the 1996 IEEE Symposium on Security and Privacy (May 1996): 34–48.

29. T. Walcott and M. Bishop, “Traducement: A Model for Record Security,” ACM Transactions on Information and System Security 7, No. 4 (November 2004): 576–590.

30. J. Goguen and J. Meseguer, “Security Policies and Security Models,” Proceedings of the 1982 IEEE Symposium on Security and Privacy (April 1982): 11–20.

31. Goguen and Meseguer, “Security Policies and Security Models.”

32. J. Haigh, R. Kemmerer, J. McHugh, and W. Young, “An Experience Using Two Covert Channel Analysis Techniques on a Real System Design,” IEEE Transactions on Software Engineering 13, No. 2 (February 1987): 141–150.

33. C. Ko and T. Redmond, “Noninterference and Intrusion Detection,” Proceedings of the 2002 IEEE Symposium on Security and Privacy (May 2002): 177–187.

34. D. McCullough, “Non-Interference and the Composability of Security Properties,” Proceedings of the 1988 IEEE Symposium on Security and Privacy (April 1988): 177–186.

35. H. Mantel, “On the Composition of Secure Systems,” Proceedings of the 2002 IEEE Symposium on Security and Privacy (May 2002): 88–101.