CHAPTER 55

CYBER INVESTIGATION1

Peter Stephenson

55.1 INTRODUCTION

55.1.1 Defining Cyber Investigation

55.1.2 Distinguishing between Cyber Forensics and Cyber Investigation

55.1.3 DFRWS Framework Classes

55.2 END-TO-END DIGITAL INVESTIGATION

55.2.1 Collecting Evidence

55.2.2 Analysis of Individual Events

55.2.3 Preliminary Correlation

55.2.4 Event Normalizing

55.2.5 Event Deconfliction

55.2.6 Second-Level Correlation

55.2.7 Timeline Analysis

55.2.8 Chain of Evidence Construction

55.2.9 Corroboration

55.3 APPLYING THE FRAMEWORK AND EEDI

55.3.1 Supporting the EEDI Process

55.3.2 Investigative Narrative

55.3.3 Intrusion Process

55.4 USING EEDI AND THE FRAMEWORK

55.5 MOTIVE, MEANS, AND OPPORTUNITY: PROFILING ATTACKERS

55.1 INTRODUCTION.

Cyber investigation (also widely known as digital investigation) as a discipline has changed markedly since publication of the fourth edition of this Handbook in 2002. In 1999, when Investigating Computer Related Crime2 was published, practitioners in the field were just beginning to speculate as to how cyber investigations would be carried out. At that time, the idea of cyber investigation was almost completely congruent with the practice of computer forensics. Today (as this is being written in April 2008), we know that such a view is too confining for investigations in the current digital environment.

Cyber investigation today is evolving into a discipline that is not only becoming commonplace in information technology but is also finding acceptance by both law enforcement and the forensic science community. The American Academy of Forensic Sciences, for example, now recognizes forensic computer-related crime investigation as a legitimate discipline within its general category of membership.3

This chapter defines cyber investigation and examines some of its forensic components. It discusses a useful cyber investigation approach called end-to-end digital investigation (EEDI). Finally, the chapter explores some forensic tools and considers their usefulness both practically and theoretically.

55.1.1 Defining Cyber Investigation.

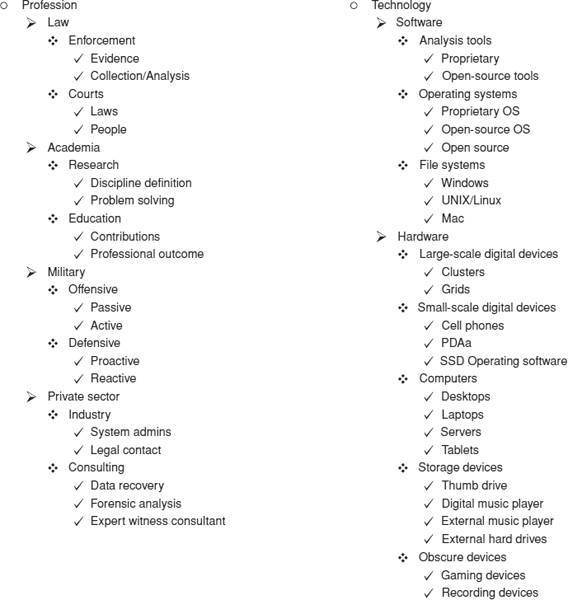

Rogers, Brinson, and Robinson4 approach the definition of cyber forensics through an ontology (“the question of how many fundamentally distinct sorts of entities compose the universe”5); their model can also be viewed as a taxonomy (“the practice or principles of classification”6). Although their descriptive schema is not complete, it is informative. Most important, Rogers's approach recognizes that there is no simple definition of cyber investigation; ontology and taxonomy are therefore particularly useful in clarifying meaning and structure for such a field.

The Rogers cyber forensic ontology consists of five layers. An ontology generally contains classes, subclasses, slots, and instances, along with the constraints and relations that apply to them. Rogers's five layers can be considered a superclass with two classes and four nested subclasses. His paper does not define the other ontology elements (slots, instances, constraints, and relations). The Rogers schema is easy to extend, however, into a proper ontology using a tool such as Protégé.7 For our purposes, we will refer to his model as the Rogers taxonomy.

The core of the Rogers taxonomy as a definition resides in the relationships inherent in the various subclasses. Exhibit 55.1 presents the taxonomy as a hierarchy with two major classes, Profession and Technology.

Cyber forensics sits at the top of the hierarchy, and the two classes are represented in columns; the farther to the right one moves within a class, the more granular the characterization becomes. An ontology supports understanding by providing a collection of characteristics that describe a concept; each class and nested subclass adds characteristics to that concept and contributes to a functional definition of cyber forensics. At the same time, concepts that are not included help to constrain the description and increase the precision of the ontological definition.
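The idea that each nested subclass adds characteristics to the concept above it can be sketched as a small tree in Python, in which a node's working definition is the union of its own characteristics and those of all its ancestors. Only the names Cyber forensics, Profession, and Technology come from the Rogers taxonomy; the subclass and every characteristic string below are hypothetical placeholders, not the contents of Exhibit 55.1.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


@dataclass(eq=False)
class TaxonomyNode:
    """One class or subclass in a taxonomy hierarchy."""
    name: str
    characteristics: set[str]
    parent: Optional[TaxonomyNode] = field(default=None, repr=False)
    children: list[TaxonomyNode] = field(default_factory=list, repr=False)

    def add(self, child: TaxonomyNode) -> TaxonomyNode:
        """Attach a subclass beneath this node and return it."""
        child.parent = self
        self.children.append(child)
        return child

    def definition(self) -> set[str]:
        """Union of the characteristics on the path from this node to the root."""
        traits: set[str] = set()
        node: Optional[TaxonomyNode] = self
        while node is not None:
            traits |= node.characteristics
            node = node.parent
        return traits


# Class names at the top two levels follow the Rogers taxonomy; the rest is hypothetical.
root = TaxonomyNode("Cyber forensics", {"digital evidence"})
profession = root.add(TaxonomyNode("Profession", {"practitioners"}))
technology = root.add(TaxonomyNode("Technology", {"tools"}))
subclass = technology.add(TaxonomyNode("Hypothetical subclass", {"acquisition"}))

print(sorted(subclass.definition()))  # ['acquisition', 'digital evidence', 'tools']
```

Moving one level deeper never removes a characteristic; it only adds one, which is the sense in which granularity increases to the right.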

55.1.2 Distinguishing between Cyber Forensics and Cyber Investigation.

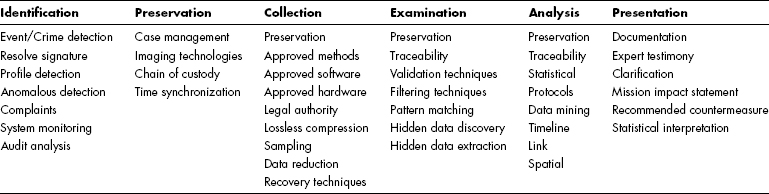

Cyber investigation uses the tools of cyber forensics as part of investigative procedures. The Digital Forensics Research Workshop (DFRWS)8 in 2001 developed a useful framework for digital investigation,9 a version of which appears in Exhibit 55.2.

Although this framework appeared in 2001, it has not been improved on markedly in the intervening years.10

55.1.3 DFRWS Framework Classes.

The DFRWS framework is a matrix. The columns are called classes and the cells are called elements. In the class descriptions that follow, the quoted italicized descriptions are taken verbatim from the original publication.11

The DFRWS framework classes contain key elements that are under constant review by the digital forensics community. The continuity among the classes is important; for example, the Preservation class continues as an element of the Collection, Examination, and Analysis classes. This indicates that preservation of evidence (as characterized by case management, imaging technologies, chain of custody, and time synchronization) is an ongoing requirement throughout the digital investigative process. Thus preservation is “a guarded principle across ‘forensic’ categories.”12 Traceability, likewise, is a guarded principle, but not across all forensic categories. The next topics discuss each of the DFRWS framework classes in more detail. Elements marked with an asterisk (*) in the discussions are required in all cyber investigations.

EXHIBIT 55.1 Rogers Cyber Forensics Taxonomy

55.1.3.1 Identification Class.

The DFRWS defines the Identification class in this way:

Determining items, components and data possibly associated with the allegation or incident. Perhaps employing triage techniques.13

EXHIBIT 55.2 DFRWS Digital Investigation Framework

The Identification class describes the method by which the investigator is notified of a possible incident. Since about 50 percent of all reported incidents have benign explanations,14 processing evidence in this class is critical to the rest of the investigation. Moreover, because identification is the first step in the EEDI process, the evidence it produces is the only primary evidence not corroborated directly by other primary evidence. Therefore, more secondary evidence is needed to validate that an actual event occurred.

The author has adopted the following definitions of the individual framework elements for the purposes of EEDI; as of this writing, the DFRWS has not developed such definitions. Elements marked with an asterisk (*) are required elements within the DFRWS class. The elements of the Identification class are:

- Event/crime detection.* This element implies direct evidence of an event. An example of such direct evidence is discovery that a large number of credit card numbers have been downloaded from a server.

- Resolve signature. This element applies to the use of an automated event detection system, such as an intrusion detection system or antivirus program. The system in use must determine the presence of an event of interest by means of signature analysis and mapping.

- Profile detection. Like signature resolution, profile detection usually relies on some automated event detection system. However, in this instance, the event will be characterized through matching with a particular profile as opposed to an explicit signature. Signatures generally apply to an individual event. Events, however, may come together in an attack scenario or attack profile. Such a profile may consist of a number of events, a pattern of behavior, or a pattern of specific results of an attack.

- Anomalous detection. Again, like the preceding two elements, this usually relies on a detection system. However, in the case of anomalous detection, the event is deduced from the detection of patterns of behavior outside of the observed norm. Anomalies can include the presence of unusual behavior but also, as in the classic Sherlock Holmes case of the dog that “did nothing in the night-time,” the absence of expected behavior.15

- Complaints. This element relies on the direct reporting of a potential event by an observer. This person may observe the event directly or simply the end result of the event.

- System monitoring. System monitoring explicitly requires some sort of intrusion detection, antivirus, or similar system in place. It is less specific than the elements that require a specific detection action (e.g., anomaly, profile, or signature detection) and may be used together with another element of this class.

- Audit analysis. This element refers particularly to the analysis of various audit logs produced by source, target, and intermediate devices.
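The difference between signature resolution, profile detection, and anomalous detection can be illustrated with the minimal Python sketch below. The event names, the signature set, the attack profile, and the baseline of normally observed events are all hypothetical, and real detection systems match on far richer data. Note that the anomaly check reports expected events that never appeared as well as unexpected ones, the “dog that did nothing” case.

```python
# Hypothetical event names; a real IDS or antivirus product defines its own.
SIGNATURES = {"known_exploit_string"}               # single-event signatures
ATTACK_PROFILE = ["port_scan", "login_failure", "login_success", "bulk_download"]
BASELINE = {"nightly_backup", "login_success"}      # events normally observed


def resolve_signatures(events):
    """Signature resolution: each event is checked on its own."""
    return [e for e in events if e in SIGNATURES]


def matches_profile(events, profile=ATTACK_PROFILE):
    """Profile detection: all profile steps appear in order (other events may intervene)."""
    remaining = iter(events)
    return all(step in remaining for step in profile)


def detect_anomalies(events, baseline=BASELINE):
    """Anomalous detection: unexpected events, and expected events that are absent."""
    observed = set(events)
    return {"unexpected": observed - baseline, "missing": baseline - observed}


observed = ["port_scan", "login_failure", "login_success", "bulk_download"]
print(resolve_signatures(observed))  # []
print(matches_profile(observed))     # True
print(detect_anomalies(observed))
```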

55.1.3.2 Preservation Class.

The Preservation class deals with those elements that relate to the management of items of evidence. The DFRWS describes this class as “a guarded principle across ‘forensic’ categories.” The requirement for proper evidence handling is basic to the digital investigative process as it relates to legal actions.

The DFRWS defines this class as “[e]nsuring evidence integrity or state.”

- Case management.* This element covers the management of the investigative process by investigators and digital forensic examiners. Typical in this element are investigator notes, process controls, quality controls, and procedural issues.

- Imaging technologies. This element is separate from the elements in the Collection class in that it does not refer to specific hardware, software, or techniques. The imaging technologies element refers to the technology used for imaging computer media. For example, physical imaging or bitstream backup may be considered an appropriate imaging technology whereas a logical backup would not be. The term “imaging” as used here is rather broad. It encompasses not only the technology used to create an image of computer media but also the technology used to extract such items as logs from a device. In this case, the log might be extracted from a bitstream image or it might be read out of the device to a peripheral as a result of a keystroke command issued by the investigator.

- Chain of custody.* This element refers to the process of limiting access to, and subsequent alteration of, evidence. In most jurisdictions, chain of custody rules require that the evidence custodian be able to account for all accesses or possible accesses to each item of evidence in his or her care, from the time it is collected until the time it is used in a legal proceeding.

- Time synchronization.* This element refers to synchronizing evidence items to a common time base. Because logs and other evidence are collected from a number of devices during an investigation, those devices may differ from each other in time base. If all devices are on a single time-synchronized network, they will not, of course, differ. However, that is rarely the case, and some effort must be made to establish a common time base for all devices. There are two approaches one might take. The first is to adjust all times in the evidence to the time base of a common reference device. The second is to use a common time zone (TZ), such as Universal Time (UT) or Greenwich Mean Time (GMT), as a baseline; in this approach no evidence is modified. The investigator simply notes the variance of a particular log or other piece of digital evidence from the predetermined time standard. This is also referred to as normalizing time stamps.
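The second approach, noting each source's variance from a common time standard without altering the evidence, might be sketched in Python as follows. The device names and offsets are hypothetical; in practice the examiner would establish and document each offset, for example by comparing the device clock against a reference time source at collection. The original timestamp is carried alongside the normalized one, so nothing in the record is overwritten.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical, examiner-documented variance of each device clock from UTC.
# A positive offset means the device clock reads ahead of UTC.
DEVICE_OFFSETS = {
    "firewall-01": timedelta(hours=-5),  # logs in local time, five hours behind UTC
    "web-server": timedelta(minutes=3),  # clock drifted three minutes fast
}


def normalize(device: str, raw: str) -> dict:
    """Return the original timestamp untouched plus its UTC equivalent."""
    local = datetime.strptime(raw, "%Y-%m-%d %H:%M:%S")
    utc = (local - DEVICE_OFFSETS[device]).replace(tzinfo=timezone.utc)
    return {"device": device, "original": raw, "utc": utc.isoformat()}


print(normalize("firewall-01", "2008-04-01 10:00:00")["utc"])  # 2008-04-01T15:00:00+00:00
```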

55.1.3.3 Collection Class.

The Collection class is concerned with the specific methods and products used by the investigator and forensic examiner to acquire evidence in a digital environment. As has been noted, the Preservation class continues as an element of this class. With the exception of the legal authority element, the elements of this class are largely technical.

The DFRWS defines this class as “[e]xtracting or harvesting individual items or groupings.”

- Approved methods.* This element refers to the techniques used by the forensic examiner or investigator to extract digital evidence. The concept of being approved refers to the general acceptance in courts of the techniques and of the training or certifications of the individual performing the evidence collection. The most rigorous test of methods and technologies is the Daubert test. (For more information on expert testimony, see Chapter 73 in this Handbook.) However, due largely to the immaturity of digital forensic science, most techniques have not been subjected to this level of rigor in court. For this reason, those elements in this class that relate to approval derive their authority from cases where the technique, technology, or product has been challenged in a court of the same level as the case in question and has survived the test.

- Approved software. This element addresses the specific software product used to collect evidence. The discussion of the approval process under the preceding element applies. One issue applies specifically to software used for digital forensic data collection: for a program to be considered approved, it must be identical in every way, down to its source code, to the software that has survived either a Daubert hearing or a court challenge. Failing that, the program may need to undergo its own court testing. For the purposes of the framework and subsequent EEDI procedures, a program that differs in any way (e.g., version level, bug fixes, or source code changes) from the program originally tested is not considered approved software.

- Approved hardware. This element describes the hardware, if any, used to collect evidence. Usually this is not an issue unless the hardware is designed specifically for use in a digital forensic evidence collection environment. To a lesser extent, the caveats of sameness that apply to approved software apply to approved hardware. The hardware device used must in every way be identical to instances of the device that have survived court challenges. The approved hardware element does not apply to simple computers, disks, or other media used by the examiner to collect evidence unless the device was developed explicitly for digital forensic evidence collection and contains special unique features for use in that environment only.

- Legal authority.* The legal authority element is the only element of this class that is nontechnical. In most jurisdictions, some legal authority is required prior to extracting information from computer media. This authority could be a policy, a subpoena, or a search warrant, for example. Failure to comply with applicable laws may render the evidence collected useless in a court of law.

- Lossless compression. This element refers to the compression techniques, if any, used by backup, encryption, or digital signature software to collect and/or preserve evidence. If the software uses compression, the compression must be proven lossless; that is, it must cause no change whatsoever to the evidence on which it is used.

- Sampling. If sampling techniques are used to collect evidence, it must be shown that the technique causes no degradation of the evidence collected or, if it does, that the effects can be demonstrated clearly and unambiguously. It must also be shown that the sampling method is valid (generally accepted by the mathematical community) and that the conclusions that may be drawn from the sample are clearly defined.

- Data reduction. When techniques and/or programs (such as normalization) are used to reduce data that contain or may contain evidence, it must be shown that such techniques or programs produce valid, repeatable, provable results that do not affect, in any way whatsoever, the evidence being collected. For example, applying data reduction directly to evidence would alter the evidence and would not be acceptable. Applying such methods or tools to a copy of the evidence, however, would have no direct effect on the evidence. Their effect on the analysis of the evidence (e.g., the validity of conclusions) is an issue for the Examination and Analysis classes.

- Recovery techniques. This element refers to the recovery of data that may contain evidence from a digital device. It specifically describes the methods the forensic examiner uses to extract evidence with approved hardware, software, and methods. Whereas the approved hardware, software, and methods elements name (or briefly describe) the tool or method and connect it to the court test by which it was approved, the recovery techniques element describes in detail the actual process used to recover the evidence. By extension, when nonforensic methods are used to collect information (e.g., traditional investigative methods such as interviewing), we consider these techniques also to be recovery techniques, and we apply the same rules to them (e.g., approved methods, legal authority) as we would in a digital environment. However, we apply the rules in the context of the technique used.
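One way to demonstrate that a compression step is lossless, as the Lossless compression element requires, is to show that decompressing the stored data reproduces a byte-for-byte identical copy, confirmed by matching cryptographic hashes. The Python sketch below uses the standard zlib and hashlib modules, with SHA-256 as an illustrative choice of hash; it shows the shape of the check, not any particular forensic product's procedure, and in keeping with the Data reduction element it is meant to run on a working copy, never on the original evidence.

```python
import hashlib
import zlib


def sha256(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def verify_lossless(original: bytes) -> dict:
    """Compress, decompress, and compare hashes of the input and the round trip."""
    compressed = zlib.compress(original, 9)
    restored = zlib.decompress(compressed)
    return {
        "original_sha256": sha256(original),
        "restored_sha256": sha256(restored),
        "lossless": sha256(original) == sha256(restored),
        "compressed_size": len(compressed),
    }


working_copy = b"\x00" * 4096 + b"evidence"  # hypothetical stand-in for a copy of an image
print(verify_lossless(working_copy)["lossless"])  # True
```

A single successful round trip demonstrates the property only for that input; a validation exercise would repeat it across representative media.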

55.1.3.4 Examination Class.

The Examination class deals with the tools and techniques used to examine evidence. The DFRWS describes the Examination class in this way: “Closer scrutiny of items and their attributes (characteristics).” It is concerned with evidence discovery and extraction rather than the conclusions to be drawn from the evidence (Analysis class). Whereas the Collection class deals with gross procedures to collect data that may contain evidence (such as imaging of computer media), the Examination class is concerned with the examination of those data and the identification and extraction of possible evidence from them. Note that the Preservation class continues to be pervasive in the Examination class.

- Traceability.* This element is, arguably, the most important element in the EEDI process. It is the traceability and continuity of a chain of evidence throughout an investigation that leads to the credibility and correctness of the conclusions. According to the DFRWS, “[t]raceability (cross referencing and linking) is key as evidence unfolds.”

- Validation techniques. This element refers to techniques used to corroborate evidence. Evidence may be corroborated in a variety of ways. Traditionally, evidence is corroborated by other, relevant evidence. However, digital evidence may stand on its own merit if its technical validity can be established. For example, a fragment of text extracted from an image of a computer disk may be shown to be a valid piece of evidence through various technical validation techniques. Its applicability or usefulness as an element of proof in an investigation may be open to interpretation, but that it is valid data would not be in dispute. A log, however, if extracted from a device that had been penetrated by a criminal hacker, would require additional corroboration (validation) to show that the hacker had not altered its contents.

- Filtering techniques. When dealing with evidence acquired from certain types of digital systems (such as intrusion detection systems), it is not uncommon to find that the gross data have been filtered for expediency by the system. Although many intrusion detection experts would agree that filtering at the source (the incoming data flow from sensors) is not as appropriate as filtering the display while preserving the original data, such source filtering does occur. This element requires that the investigator and/or forensic examiner determine and describe the filtering techniques used, if any, and apply the results of that description to the determination of the validity of the data as evidence. Another application of filtering is the extraction of potential evidence from a gross data collection16 such as a bit-stream image of digital media. Some digital forensic tools use filters to extract data of a particular type, such as graphical images. This element requires that the filtering technique be defined clearly and understood by the investigator or forensic examiner. These tools may also use the filtering technique of matching a known hash value to digital items on a gross data collection. Items that match the known hash are presumed to be the same as the item for which the hash value was originally generated. Again, the techniques and tools applied must be clearly understood by the investigator or the forensic examiner.

- Pattern matching. This element addresses methods used to identify potential events by some predetermined signature or pattern. Examples are pattern-based intrusion detection systems and signature-based virus checkers. When the pattern or signature is unclear or ambiguous, or produces a large number of false positives or negatives, the evidence and the conclusions following from it are open to challenge.

- Hidden data discovery. This element refers to the discovery of evidence that is hidden in some manner on computer media. The data may be hidden using encryption, steganography, or any other data-hiding technique. It may also include data that have been deleted but are forensically recoverable.

- Hidden data extraction. This element addresses the extraction of hidden evidence from a gross data collection.
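The two filtering techniques described under Filtering techniques, matching items against known hash values and extracting data of a particular type such as graphical images, can be sketched in Python. The item names, contents, and known-hash set are hypothetical, and SHA-256 is an illustrative choice; actual tools may use other algorithms and reference hash sets. The type filter checks only the standard PNG and JPEG file signatures at the start of each item, so a renamed or truncated file would still be caught, while an image embedded inside another file would not.

```python
import hashlib


def digest(data: bytes) -> str:
    """SHA-256 hex digest (an illustrative choice of algorithm)."""
    return hashlib.sha256(data).hexdigest()


# Hypothetical reference set: digests of items already known to the investigator.
KNOWN_HASHES = {digest(b"known file A"), digest(b"known file B")}

# File signatures ("magic numbers") at the start of PNG and JPEG files.
IMAGE_MAGIC = (b"\x89PNG\r\n\x1a\n", b"\xff\xd8\xff")


def filter_known(items: dict[str, bytes], known: set[str] = KNOWN_HASHES) -> list[str]:
    """Names of extracted items whose digest matches a known hash."""
    return sorted(name for name, data in items.items() if digest(data) in known)


def filter_images(items: dict[str, bytes]) -> list[str]:
    """Names of extracted items whose leading bytes identify them as PNG or JPEG."""
    return sorted(name for name, data in items.items() if data.startswith(IMAGE_MAGIC))


# Hypothetical items carved from an image of the suspect media.
extracted = {
    "item_0001": b"ordinary document text",
    "item_0002": b"known file B",
    "item_0003": b"\xff\xd8\xff\xe0" + b"\x00" * 16,
}
print(filter_known(extracted))   # ['item_0002']
print(filter_images(extracted))  # ['item_0003']
```

As the text notes, a hash match supports only the presumption that the item is the same as the one originally hashed.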

55.1.3.5 Analysis Class.

This class is described by the DFRWS as “[f]usion, correlation and assimilation of material for reasoned conclusions.”

The Analysis class refers to those elements that are involved in the analysis of evidence collected, identified, and extracted from a gross data collection. The validity of the techniques used to analyze potential evidence directly affects the validity of the conclusions drawn from the evidence and the credibility of the evidence chain constructed from it. The Analysis class contains, and is dependent on, the Preservation class and the Traceability element of the Examination class.

The various elements of the Analysis class refer to the means by which a forensic examiner or investigator might develop a set of conclusions regarding evidence presented from the other five classes. As with all elements of the framework, a clear understanding of the applicable process is required. Wherever possible, adherence to standard tools, technologies and techniques is critical.

The link element is the key element used to form a chain of evidence. It is related to traceability and, as such, is a required element.

55.1.3.6 Presentation Class.

DFRWS describes the Presentation class in this way: “Reporting facts in an organized, clear, concise and objective manner.”

This class refers to the tools and techniques used to present the conclusions of the investigator and the digital forensic examiner to a court of inquiry or other finder of fact. Each of these techniques has its own elements, and a discussion of expert witnessing is beyond the scope of this chapter. However, for our purposes, we will stipulate that the EEDI process emphasizes the use of timelines as an embodiment of the clarification element of this class. For more information on expert witness testimony, see Chapter 73 in this Handbook.
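Because each class carries required elements, the framework lends itself to a simple completeness check on a case file. The Python sketch below records only the required elements named in this chapter (the asterisked elements, plus the Link element of the Analysis class) and reports which ones a case record has not yet documented. The data layout and function name are hypothetical, and the check says nothing about whether the documented work is adequate; it only flags what is missing.

```python
# Required elements per DFRWS class, as identified in this chapter.
REQUIRED = {
    "Identification": {"Event/crime detection"},
    "Preservation": {"Case management", "Chain of custody", "Time synchronization"},
    "Collection": {"Approved methods", "Legal authority"},
    "Examination": {"Traceability"},
    "Analysis": {"Link"},
}


def missing_required(documented: dict[str, set[str]]) -> dict[str, set[str]]:
    """Return, per class, the required elements not yet documented for a case."""
    gaps = {}
    for cls, needed in REQUIRED.items():
        absent = needed - documented.get(cls, set())
        if absent:
            gaps[cls] = absent
    return gaps


# Hypothetical case record partway through an investigation.
case = {
    "Identification": {"Event/crime detection"},
    "Preservation": {"Case management", "Chain of custody"},
}
for cls, absent in missing_required(case).items():
    print(cls, sorted(absent))
```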

55.2 END-TO-END DIGITAL INVESTIGATION.

The end-to-end digital investigation process is a structured process that takes into account the network involved, the attack computer, the victim computer, and all of the intermediate devices on the network. Structurally, it consists of nine steps:

- Collecting evidence

- Analysis of individual events

- Preliminary correlation

- Event normalizing

- Event deconfliction

- Second-level correlation (consider both normalized and nonnormalized events)

- Timeline analysis

- Chain of evidence construction

- Corroboration (consider only nonnormalized events)

55.2.1 Collecting Evidence.

A formal definition of the term “evidence collection” is:

The use of approved tools and techniques by trained technicians to obtain digital evidence from computer devices, networks and media. By “approved” we mean those tools and techniques generally accepted by the discipline and the courts where collected evidence will be presented.17

The collection of evidence in a computer security incident is time sensitive. When an event occurs (or when an expected event fails to occur), we have the first warning of a potential incident. An event may not be, by itself, particularly noteworthy. However, taken in the context of other events, it may become extremely important. From the forensic perspective, we want to consider all relevant events, whether they appear to have been tied to an incident or not. Events are the most granular elements of an incident.

We define an incident as a collection of events that lead to, or could lead to, a compromise of some sort. That compromise may include unauthorized change of control over data or systems; disclosure or modification of a system or its data; destruction of the system or its data; or unauthorized alteration of the availability or utility of the system or its data. An incident becomes a crime when one or more laws are violated.

Collecting evidence from all possible locations where it may reside must begin as soon as possible in the context of an incident. The methods vary according to the type of evidence (forensic, logs, indirect, traditionally developed, etc.). It is important to emphasize that EEDI is concerned not only with digital evidence. Gathering witness information should be accomplished as early in the evidence collection process as possible. Witness impressions and information play a crucial role in determining the steps the forensic examiner must take to uncover digital evidence.

Critical in this process are:

- Images of affected computers

- Logs of intermediate devices, especially those on the Internet

- Logs of affected computers

- Logs and data from intrusion detection systems, firewalls, and so on

55.2.2 Analysis of Individual Events.

An alert or incident is made up of one or more individual events. These events may be duplicates reported in different logs from different devices. These events and duplications have value both as they appear and after they are normalized. This analysis step examines isolated events and assesses what value they may have to the overall investigation and how they may tie into each other.

55.2.3 Preliminary Correlation.

The formal definition of the term “correlation” is:

The comparison of evidentiary information from a variety of sources with the objective of discovering information that stands alone, in concert with other information, or corroborates or is corroborated by other evidentiary information.

Preliminary correlation examines the individual events to correlate them into a chain of evidence. The main purpose is to understand in broad terms what happened, what systems or devices were involved, and when the events occurred.

The term “chain of evidence” refers to the chain of events related in some consistent way that describes the incident. The relationship may be temporal or causal. Temporal chains are also called timelines. Essentially they say “This happened, then that happened.” Causal relationships imply cause and effect. They say “That happened because this happened.”

The term “chain of evidence” must not be used as a synonym for “chain of custody,” which this chapter defines in the discussion of the Preservation class of the DFRWS framework.
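As a rough illustration of preliminary correlation, the Python sketch below groups events from different sources by one shared attribute, here a source IP address, so that the investigator can see in broad terms which devices reported activity involving the same host, and when. The record layout and all values are hypothetical (the addresses come from the ranges reserved for documentation), and timestamps are assumed to be already normalized to UTC; real correlation would draw on many attributes and on investigator judgment.

```python
from collections import defaultdict

# Hypothetical events already extracted from several sources (UTC timestamps).
events = [
    {"source": "firewall", "ip": "192.0.2.10", "time": "2008-04-01T15:00:05Z", "what": "inbound connection"},
    {"source": "ids", "ip": "192.0.2.10", "time": "2008-04-01T15:00:07Z", "what": "exploit signature"},
    {"source": "web-server", "ip": "198.51.100.7", "time": "2008-04-01T15:02:00Z", "what": "page request"},
    {"source": "web-server", "ip": "192.0.2.10", "time": "2008-04-01T15:03:40Z", "what": "admin login"},
]


def correlate_by(events, key):
    """Group events sharing the same value for `key`, each group ordered by time."""
    groups = defaultdict(list)
    for ev in events:
        groups[ev[key]].append(ev)
    return {k: sorted(v, key=lambda e: e["time"]) for k, v in groups.items()}


for ip, group in correlate_by(events, "ip").items():
    sources = sorted({e["source"] for e in group})
    print(ip, len(group), "events from", sources)
```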

55.2.4 Event Normalizing.

The formal definition of “normalization” is:

The combining of evidentiary data of the same type from different sources with different vocabularies into a single, integrated terminology that can be used effectively in the correlation process.

Some events may be reported from multiple sources. During part of the analysis (timeline analysis, e.g.), these duplications must be eliminated. This process is known as normalizing. EEDI uses both normalized and nonnormalized events.
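A minimal Python sketch of normalizing, assuming each source's vocabulary has already been mapped by hand to a common term and that timestamps have already been brought to UTC: events are first translated into the shared terminology, and events that different sources report for the same host at the same time then collapse into one normalized event that records which sources reported it. The source names, vocabularies, and records are hypothetical. The raw records are left unmodified, consistent with EEDI's use of both normalized and nonnormalized events.

```python
# Hypothetical vocabularies: each source's own term mapped to a common term.
VOCABULARY = {
    "firewall": {"DENY_TCP": "blocked connection", "ALLOW_TCP": "allowed connection"},
    "ids": {"tcp-conn-refused": "blocked connection"},
    "host-log": {"sshd: accepted": "successful login"},
}


def normalize_events(raw_events):
    """Translate to a common terminology, then merge cross-source duplicates."""
    merged = {}
    for ev in raw_events:
        term = VOCABULARY[ev["source"]].get(ev["event"], "unmapped: " + ev["event"])
        key = (ev["utc"], ev["host"], term)
        entry = merged.setdefault(
            key, {"utc": ev["utc"], "host": ev["host"], "event": term, "reported_by": []}
        )
        entry["reported_by"].append(ev["source"])
    return list(merged.values())


raw = [
    {"source": "firewall", "event": "DENY_TCP", "host": "192.0.2.10", "utc": "2008-04-01T15:00:05Z"},
    {"source": "ids", "event": "tcp-conn-refused", "host": "192.0.2.10", "utc": "2008-04-01T15:00:05Z"},
    {"source": "host-log", "event": "sshd: accepted", "host": "192.0.2.10", "utc": "2008-04-01T15:04:00Z"},
]
for ev in normalize_events(raw):
    print(ev["utc"], ev["event"], ev["reported_by"])
```

Unmapped terms are labeled rather than dropped, so a gap in the vocabulary stays visible instead of silently losing an event.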

55.2.5 Event Deconfliction.

The formal definition of “deconfliction” is:

The combining of multiple reportings of the same evidentiary event by the same or different reporting sources, into a single, reported, normalized evidentiary event.

Sometimes events are reported multiple times from the same source. An example is a denial-of-service attack where multiple packets are directed against a target and each one is reported individually by a reporting resource. The EEDI process should not count each of those packets as a separate event. The process of viewing the packets as a single event instead of multiple events is called deconfliction.
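The denial-of-service example above might be deconflicted as in the following Python sketch, which treats repeated reports with the same source, target, and event type as one continuing event for as long as they keep arriving within a chosen gap. The 60-second gap, the field names, and the sample addresses are hypothetical choices; the appropriate grouping rule depends on the attack and the reporting devices. The count and the first and last report times are kept, so no information is discarded by merging.

```python
from datetime import datetime, timedelta


def deconflict(reports, gap=timedelta(seconds=60)):
    """Merge repeated reports of the same (source, target, type) into single events.

    Reports no more than `gap` after the previous matching report extend that event.
    """
    events = []
    open_events = {}  # (src, dst, type) -> index of the latest event for that key
    for r in sorted(reports, key=lambda r: r["time"]):
        key = (r["src"], r["dst"], r["type"])
        idx = open_events.get(key)
        if idx is not None and r["time"] - events[idx]["last"] <= gap:
            events[idx]["last"] = r["time"]
            events[idx]["count"] += 1
        else:
            open_events[key] = len(events)
            events.append({"src": r["src"], "dst": r["dst"], "type": r["type"],
                           "first": r["time"], "last": r["time"], "count": 1})
    return events


# Hypothetical flood: 500 SYN packets, one every 100 ms, each reported separately.
t0 = datetime(2008, 4, 1, 15, 0, 0)
flood = [{"src": "203.0.113.5", "dst": "192.0.2.10", "type": "SYN",
          "time": t0 + timedelta(milliseconds=100 * i)} for i in range(500)]
merged = deconflict(flood)
print(len(merged), merged[0]["count"])  # 1 500
```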

55.2.6 Second-Level Correlation.

Second-level correlation is an extension of earlier correlation efforts. At this point, however, views of the various events have been refined through normalization or deconfliction. That is, during normalization or deconfliction we have simplified the collection of events to eliminate redundancies and/or ambiguities. The resulting data set now represents the event universe at its simplest (or nearly simplest). These events (some of which may actually be compound events, i.e., events composed of multiple subevents) now represent the building blocks from which we may build chains of evidence.

55.2.7 Timeline Analysis.

In this step, normalized and deconflicted events are used to build a timeline, which should be updated constantly as the investigation develops new evidence. The entire process is iterative: event analysis, correlation, deconfliction, and timeline analysis are repeated in sequence as required.
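Because the timeline is rebuilt as new evidence arrives, it helps to keep it in a structure that stays in chronological order as entries are added. The Python sketch below uses the standard bisect module to insert each event in time order, with a reference back to the supporting evidence; the class name, the event descriptions, and the evidence references are hypothetical, and times are naive datetimes assumed to be UTC.

```python
import bisect
from datetime import datetime


class Timeline:
    """Chronologically ordered events that can be extended as evidence develops."""

    def __init__(self):
        self._entries = []  # (UTC datetime, description, evidence reference), kept sorted

    def add(self, when: datetime, description: str, evidence_ref: str) -> None:
        bisect.insort(self._entries, (when, description, evidence_ref))

    def render(self) -> list[str]:
        return [f"{when:%Y-%m-%d %H:%M:%S}Z  {desc}  [{ref}]"
                for when, desc, ref in self._entries]


tl = Timeline()
tl.add(datetime(2008, 4, 1, 15, 3, 40), "admin login to web server", "web-server log")
tl.add(datetime(2008, 4, 1, 15, 0, 7), "exploit signature detected", "IDS alert")
# A later iteration develops new evidence, which slots into its place in time.
tl.add(datetime(2008, 4, 1, 15, 1, 0), "outbound connection from web server", "firewall log")
print("\n".join(tl.render()))
```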

55.2.8 Chain of Evidence Construction.

Once there is a preliminary timeline of events, the process of developing a coherent chain of evidence begins. Ideally, each link in the chain, supported by one or more pieces of evidence, will lead to the next link. That rarely happens in large-scale network traces, however, because there often are gaps in the evidence-gathering process due to lack of logs or other missing event data.

Such problems do not invalidate this step, however. Although we may not always be able to construct a chain of evidence directly, we can nonetheless infer missing links in the chain. An inferred link is not evidence, however; it is more properly referred to as a lead. Leads can point us to valid evidence, and that valid evidence can, at some point, become the evidence link. Thus, again, the iterative process of refining evidence makes it perfectly reasonable to start with a chain that is part evidence and part leads and refine it into an acceptable chain of evidence.

A second approach to handling gaps in evidence is to corroborate the questionable link very heavily. If all corroboration points to a valid link, it may be acceptable. For most purposes, however, the former approach is best.
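The distinction between evidence links and leads can be kept explicit in a simple Python structure: a link with no supporting evidence is treated as a lead, and the chain reports which leads still need work. The class, the function, and the link descriptions below are hypothetical illustrations of the idea, not a prescribed EEDI format.

```python
from dataclasses import dataclass, field


@dataclass
class Link:
    description: str
    evidence: list[str] = field(default_factory=list)  # supporting items, if any

    @property
    def is_lead(self) -> bool:
        """A link with no supporting evidence is only an inferred lead."""
        return not self.evidence


def open_leads(chain: list[Link]) -> list[str]:
    """Links that still need evidence before the chain can be relied on."""
    return [link.description for link in chain if link.is_lead]


chain = [
    Link("attacker connects to web server", ["firewall log entry"]),
    Link("attacker escalates privileges"),  # inferred: no log survived
    Link("card data copied off server", ["IDS alert", "artifact in host image"]),
]
print(open_leads(chain))  # ['attacker escalates privileges']

# A later iteration develops evidence for the lead, turning it into an evidence link.
chain[1].evidence.append("recovered shell history")
print(open_leads(chain))  # []
```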

55.2.9 Corroboration.

In this stage, we attempt to corroborate each piece of evidence and each event in our chain with other, independent evidence or events. For this process, we use the nonnormalized event data as well as any other evidence developed either digitally or traditionally. The best evidence is that which has been developed digitally and corroborated through traditional investigation, or vice versa. The final evidence chain consists of primary evidence corroborated by additional secondary evidence. This chain will consist of both digital and traditional evidence. The overall process does not differ materially between an investigation and an event postmortem, except in how the outcome is used.
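A corroboration review of each link can be sketched in Python as a classification over the evidence items supporting it. In this sketch, which is an assumption rather than a rule from the text, independence is approximated by distinct origin labels, a link needs at least two independent origins to count as corroborated, and a link backed by both digital and traditional evidence is marked as the strongest case; in a real investigation, deciding whether two sources are truly independent is a matter of investigator judgment.

```python
def assess(link_items: list[tuple[str, str]]) -> str:
    """Classify corroboration for one chain link.

    Each item is (origin, kind): origin names an independent source (hypothetical
    labels here) and kind is "digital" or "traditional".
    """
    origins = {origin for origin, _ in link_items}
    kinds = {kind for _, kind in link_items}
    if len(origins) < 2:  # hypothetical threshold: two independent origins
        return "uncorroborated"
    if {"digital", "traditional"} <= kinds:
        return "corroborated (digital and traditional)"
    return "corroborated"


print(assess([("web-server log", "digital")]))
print(assess([("web-server log", "digital"), ("witness interview", "traditional")]))
```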

55.3 APPLYING THE FRAMEWORK AND EEDI.

The important issue in application is evidence management. Both the framework and EEDI help the investigator manage evidence. They do not substitute for good investigative techniques. The incident may or may not be a criminal act; even if it is such an act, it may not be treated as one. No matter. The approach to investigating is essentially the same in either case. In order to understand how these investigative tools might apply, it is useful to begin with a generalized framework for understanding the execution of a cyber incident.

55.3.1 Supporting the EEDI Process.

Experienced traditional investigators often resist a process-based approach to investigation. However, in numerous interviews with such investigators, the author determined that what these investigators do by habit and experience is almost exactly the same process discussed in this chapter. Thus it is convenient to view the DFRWS framework and EEDI as tools that investigators can use to apply past physical investigation experience to the more complicated requirements of digital investigation.

55.3.2 Investigative Narrative.

The investigative narrative typically consists of the investigator's notes. The EEDI process supports the construction of an investigation around an investigative framework. For the purposes of this chapter, we use the DFRWS framework shown in Exhibit 55.2. The framework includes the basic areas where investigative and forensic controls are required. Once the narrative is complete, it can be translated into a more structured evidence support and management process using the framework and EEDI.

For example, under the Collection class, we find reference to approved software, hardware, and methods. This indicates that the forensic software, hardware, and methods used by the investigator or digital forensic examiner must meet some standard of acceptance within the investigative community. That standard usually means that the tools and methods have withstood testing in court. Should the investigator or forensic examiner not adhere to that standard, the evidence collected will be subject to challenge. At critical points in the investigation, such as the collection of primary evidence, such a lapse could materially jeopardize the outcome.

Again, the framework does not necessarily alter the generalized investigative techniques of experienced investigators and forensic examiners. Rather, it adds a dimension of rigor and quality assurance to the digital investigative process. It also ties the functions of the forensic examiner and the investigator tightly together, ensuring that the chain of evidence is properly supported, developed, and maintained.

55.3.3 Intrusion Process.

Today (2008), attacks can occur in many ways. Since about 2000, incidents have shifted from manual hacking toward automated, malware-generated attacks. The sources of these attacks vary widely over time, and there is no definitive pattern to how they work. Today's assaults typically are some form of blended attack. Examples are botnets, spam, Trojan horses, rootkits, worms, and other malware. (See Chapters 2, 13, 15, 16, 17, and 18 in this Handbook for more details of attack methods.)

A blended attack is one in which various intrusion techniques are combined to deliver a payload. The payload can be a malicious outcome, such as damaging the target computer, or it can be more subtle, such as a spybot that harvests personal information and calls home periodically to deliver its collected information.

Regardless of the intrusion technique—manual hacking or automated malware—there is a generalized pattern of activities associated with a cyber attack. These steps are:

- Information gathering. Information gathering usually does not touch the victim. During this phase, the attacker selects his or her target, researches it, and determines such things as expected range of IP addresses exposed to the Internet. There is an exception to not touching the victim: Sometimes an attacker performs a scan of a block of IP addresses to determine whether there are any vulnerable devices in the IP block. This is what security professionals usually call a script-kiddie attack. The usual use for this step, however, is to select a victim for a targeted attack.

- Footprinting. Footprinting is the act of scanning a block of addresses owned by a victim for those that are online and may be vulnerable. The attacker is obtaining the footprint of the victim's presence online.

- Enumerating. “Enumerating” refers to the analysis of exposed addresses for potential weaknesses. In this step, the attacker may grab banners to determine the versions of various services running on the target. The objective is to understand what the victim is running on exposed machines. Once that information is gathered, the attacker can select potentially successful exploits based on published vulnerabilities. Although this process will leave a footprint on the target, it is likely to remain hidden in thousands of lines of logs (as in the footprinting phase). If these two steps (footprinting and enumerating) are performed carefully, they will not trigger most intrusion detection systems.

In a fully automated attack, such as one resulting from some form of social engineering (e.g., phishing), these two steps are replaced by research that focuses on people and their access methods (e.g., e-mail addresses). (For more information on social engineering, see Chapter 19 in this Handbook; for more on phishing, see Chapter 20.)

- Probing for weaknesses. In this step, the attacker may perform a vulnerability scan or some more stealthy probes to determine specific exploitable vulnerabilities. In an automated attack, this phase may consist of attempting to seduce large numbers of targets to give up important information that can be used in the next step. In this case, the probes consist of social engineering attempts.

- Penetration. This is the entry phase. It is essentially the same whether the attack is manual or automated; only the penetration technique changes. The objective always is to get inside the victim system.

- Backdoors, Trojans, rootkits, and so on. In this phase, the attacker deposits the payload. The objective may be to reenter the target at some later date, plant some malware, or take the system down with a denial-of-service attack. Most experts seem to agree that denial-of-service attacks are on the decline. The objective of today's intruders is more frequently stealing information than damaging systems. (For more about denial-of-service attacks, see Chapter 18 in this Handbook.)

- Cleanup. In a traditional hack, this step is where the attacker removes tools from the target, alters logs, and performs other actions to cover his or her tracks and obscure his or her presence from system administrators and forensic analysts. In an automated attack, the tasks and objectives are similar, but the techniques differ somewhat, and so do the forensic methods required to find evidence.
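Footprinting and enumerating do leave traces, though usually buried in thousands of log lines. The Python sketch below shows one way an investigator might surface them after the fact: for each source address, it counts distinct destination hosts (an address sweep, as in footprinting) and distinct ports probed on a single host (as in enumerating). The record format, sample addresses, and thresholds are assumptions for illustration only. A careful attacker who probes slowly or from many addresses would stay under such thresholds, just as the text notes for intrusion detection systems.

```python
from collections import defaultdict

# Hypothetical, already-parsed firewall records: (source IP, destination IP, port)
records = [
    ("203.0.113.7", "198.51.100.1", 80),
    ("203.0.113.7", "198.51.100.2", 80),
    ("203.0.113.7", "198.51.100.3", 80),
    ("203.0.113.7", "198.51.100.4", 80),
    ("192.0.2.44", "198.51.100.10", 21),
    ("192.0.2.44", "198.51.100.10", 22),
    ("192.0.2.44", "198.51.100.10", 25),
    ("192.0.2.44", "198.51.100.10", 80),
    ("192.0.2.99", "198.51.100.10", 443),
]


def flag_reconnaissance(records, host_threshold=4, port_threshold=4):
    """Flag sources whose log traces look like footprinting or enumerating.

    The thresholds are illustrative assumptions, not recommended values.
    """
    hosts_by_src = defaultdict(set)   # source -> destination addresses touched
    ports_by_pair = defaultdict(set)  # (source, destination) -> ports touched
    for src, dst, port in records:
        hosts_by_src[src].add(dst)
        ports_by_pair[(src, dst)].add(port)

    findings = {}
    for src, hosts in hosts_by_src.items():
        if len(hosts) >= host_threshold:
            findings.setdefault(src, []).append(f"address sweep of {len(hosts)} hosts")
    for (src, dst), ports in ports_by_pair.items():
        if len(ports) >= port_threshold:
            findings.setdefault(src, []).append(f"{len(ports)} ports probed on {dst}")
    return findings


for src, reasons in flag_reconnaissance(records).items():
    print(src, "->", "; ".join(reasons))
```

The sample uses documentation address ranges; in practice the records would come from the collected firewall and host logs.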

55.3.4 Describing Attacks.

Three factors lead to a successful attack:

- The attacker can access the target.

- There is a significant vulnerability to attack, and that vulnerability can be identified by the attacker.

- The attacker has effective command and control and can keep the prospects for attribution to a minimum.

It is important to be able to describe a cyber event in clear terms. There are several attack taxonomies and descriptive languages available to help do that. However, these tools, while useful, are inconsistent with each other (there is no universally accepted descriptive language or taxonomy) and are often unnecessarily complicated for smaller investigations. The author recommends Howard's common language (see Chapter 8 in this Handbook)18 as a good starting point for something simple and concise. Before applying a common language, however, there are several questions that need to be asked.

- Description of the attack. This is a narrative describing how the attack appeared when analyzed: What is happening? What service is being targeted? Does the service have known vulnerabilities or exposures?

- Type of attack. Is it benign, an exploit, a denial-of-service attack, or reconnaissance?

- Attack mechanism. This is a narrative describing how the attack was carried out: How did the attacker do it?

- Correlations. How does this attack compare with other similar attacks? Are there any other attacks happening at the moment that might help trace/explain the attack?

- Evidence of active targeting. Is the attack leveled against one or more specific targets, or is it a generic “blast”?

- Severity. How serious is the attack? A heuristic estimate is:

Severity = (Target Criticality + Attack Lethality) − (System Countermeasures + Network Countermeasures)

Each term in the formula is usually rated from 1 to 5, with 5 representing the highest measure. These computations are for heuristic purposes only and do not purport to be rigorous metrics.
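The severity heuristic is simple arithmetic. The Python sketch below, assuming each factor is rated on the 1-to-5 scale described above, computes the score and rejects out-of-range ratings; on that scale, scores run from -8 (least critical target, weakest attack, strongest countermeasures) to +8. The function and parameter names are illustrative.

```python
def severity(target_criticality: int, attack_lethality: int,
             system_countermeasures: int, network_countermeasures: int) -> int:
    """Heuristic severity score; each factor is rated 1 (lowest) to 5 (highest)."""
    for value in (target_criticality, attack_lethality,
                  system_countermeasures, network_countermeasures):
        if not 1 <= value <= 5:
            raise ValueError("each factor must be rated from 1 to 5")
    return (target_criticality + attack_lethality) - (
        system_countermeasures + network_countermeasures)


# A critical server (5) hit by a highly lethal exploit (4),
# protected by weak host controls (2) and moderate network controls (3):
print(severity(5, 4, 2, 3))  # 4
```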

In answering these questions, the analyst may apply the common language (or other formal approach) as appropriate.

There are also 10 generalized questions about the incident that need to be resolved early in the investigation. These can form an informal template for early interviews.

- What is the nature of the incident?

- How can we be sure that there even was an incident?

- What was the entry point into the target system? Was there only one?

- What would evidence of an attack look like? What are we looking for?

- What monitoring systems were in place that might have collected useful data before and during the incident?

- What legal issues need to be addressed (policies, privacy, subpoenas, warrants, etc.)?

- Who was in a position to cause/allow the incident to occur?

- What security measures were in place at the time of the incident?

- What nontechnical (business) issues may have impacted the success or failure of the attack?

- Who knew what about the attack, and when did they know it?

55.3.5 Strategic Campaigns.

The steps in an attack are largely tactical: they describe what may be expected from an attacker during an individual attack. Often the tactical attacks are part of larger strategic campaigns. Examples are spam, identity theft carried out by organized groups, and politically motivated cyber war. There are three important differences between a tactical attack and a strategic campaign:

- Single objective versus ongoing objectives

- Low-hanging fruit versus sustained effort to penetrate

- Trivial versus complicated targets and objectives

Attacks may be part of campaigns. However, just because a company experiences a tactical attack does not mean that it is part of a sustained campaign. Campaigns have distinct phases:

- Mapping and battle space preparation

- Offensive and defensive planning

- Initial execution

- Probes, skirmishes

- Adjustment and sustainment

- Success and termination

55.4 USING EEDI AND THE FRAMEWORK.

Once we understand how attackers attack, we can see how EEDI and the framework can help us. The first and most obvious use of EEDI is to apply the investigative process to identifying the results of each of the attack phases above. Collecting evidence, for example, may lead us to perform a forensic analysis of the target computer. When we do that, we will want to refer to the framework to ensure that we have not missed some important task. We may apply each step of the EEDI process to each step of the attack process to gather, correlate, and analyze evidence of an attack.

- Collecting evidence. Perform appropriate log collection, forensic imaging, and so on. Conduct interviews. There are 10 classes of evidence that the investigator should collect:

  - Logs from monitoring devices

  - Logs from hosts and servers

  - Firewall and router (especially edge router) logs

  - Interviews with involved personnel

  - Interviews with business and technical managers

  - Device configuration files

  - Network maps

  - Event observation timelines

  - Notes of relevant meetings

  - Response team notes and observations

- Analysis of individual events. Consider each of the events revealed by the collection and analysis of evidence in step one. Look for evidence of enumeration, penetration, and cleanup.

- Preliminary correlation. Tie together events from logs so that a smaller evidence set emerges. Consider each step of the attack process that applies to the device or system under analysis. Generate a straw-man chain of evidence.

- Event normalizing. The first and most important aspect of normalizing is time stamping. It is unlikely that all of the involved devices are synchronized to the same clock, so some allowance must be made for differences in time zones and clock settings. Other normalizing tasks include combining different reports of the same events.

- Event deconfliction. This is simply an extension of event normalizing.

- Second-level correlation. This is a further refinement of the evidence and the straw-man chain of evidence. Here an important task is correlating log events, media forensic results, and results of interviews into a clear chain of evidence. This chain should be both causal and temporal, although the emphasis on the temporal chain is in the next step. This step is distinguished from the next two steps by level of detail: this correlation should offer significant detail, and the next steps refine that detail, making the results more comprehensible to laypeople.

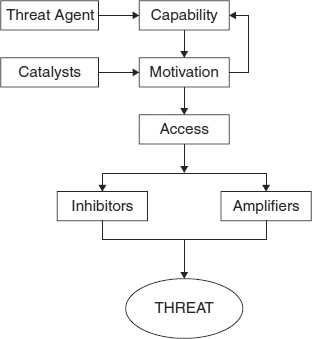

EXHIBIT 55.3 Jones's Threat Delivery Model

- Timeline analysis. Refine the level of detail in the temporal chain of evidence.

- Chain of evidence construction. Refine the level of detail in the causal chain of evidence.

- Corroboration. Apply as much corroboration as you can to the evidence associated with each step of the attack process.
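Event normalizing and timeline analysis are the most mechanical steps in this list. The Python sketch below illustrates them under stated assumptions: each device's UTC offset and clock drift (measured against a trusted reference at collection time) are known, timestamps arrive as local, naive strings, and two reports with identical descriptions within 60 seconds of each other are treated as one event. The device names, offsets, and sample events are hypothetical, and matching on identical descriptions is a deliberate simplification of combining different reports of the same event.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical clock data per device: UTC offset of its local time and drift
# measured against a reference clock (positive drift = device clock runs fast).
DEVICE_CLOCKS = {
    "edge-router": {"utc_offset": timedelta(hours=-5), "drift": timedelta(seconds=0)},
    "web-server": {"utc_offset": timedelta(hours=0), "drift": timedelta(seconds=90)},
    "ids-sensor": {"utc_offset": timedelta(hours=-5), "drift": timedelta(seconds=-30)},
}


def to_reference_utc(device, local_stamp):
    """Convert a device's local, naive timestamp to drift-corrected UTC."""
    clock = DEVICE_CLOCKS[device]
    local = datetime.strptime(local_stamp, "%Y-%m-%d %H:%M:%S")
    aware = local.replace(tzinfo=timezone(clock["utc_offset"]))
    return aware.astimezone(timezone.utc) - clock["drift"]


def build_timeline(events, same_event_window=timedelta(seconds=60)):
    """Normalize timestamps, merge duplicate reports, and return a sorted timeline."""
    normalized = sorted(
        (to_reference_utc(device, stamp), description, device)
        for device, stamp, description in events
    )
    timeline = []
    for when, description, device in normalized:
        # Look for an earlier entry describing the same event within the window.
        existing = next(
            (entry for entry in reversed(timeline)
             if entry["description"] == description
             and when - entry["time"] <= same_event_window),
            None,
        )
        if existing is not None:
            existing["reported_by"].append(device)
        else:
            timeline.append({"time": when, "description": description,
                             "reported_by": [device]})
    return timeline


events = [
    ("edge-router", "2008-04-02 09:15:10", "inbound connection from 203.0.113.7"),
    ("web-server", "2008-04-02 14:16:20", "inbound connection from 203.0.113.7"),
    ("ids-sensor", "2008-04-02 09:15:40", "exploit signature matched"),
]
for entry in build_timeline(events):
    print(entry["time"].isoformat(), entry["description"], entry["reported_by"])
```

In this sample, the router and web server entries look roughly five hours apart in the raw logs; after normalization they fall 20 seconds apart, merge into one event reported by two devices, and precede the IDS alert.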

55.5 MOTIVE, MEANS, AND OPPORTUNITY: PROFILING ATTACKERS.

Attacks, even automated ones, are performed by people. In order to understand attacks, we must understand the people who deliver them. We call those people threat agents because an attack simply is the delivery of a threat against a target. The act of delivering a threat is described concisely by Jones.19 His model appears in Exhibit 55.3.

According to Jones, the threat agent first must have the capability of delivering the threat: The attacker must have access to the threat and know what to do with it. An example is an attacker who delivers a virus to a target system. The virus is the threat, and the attacker is the threat agent.

The threat agent then must be motivated to carry out the attack. However, just because motivation exists does not mean that a wily attacker will deliver the threat immediately. Usually there needs to be a catalyst that tells the attacker that, for whatever reason, the time has come to attack.

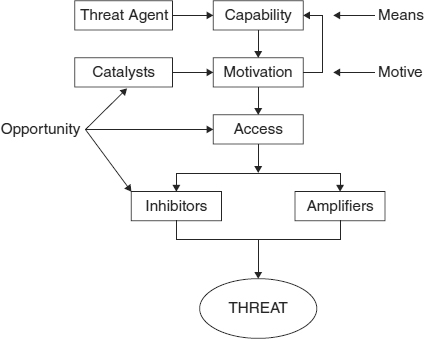

The next step in the process, access, is the first place that we can manage the attack. Denying the threat agent access to the target denies the threat agent the ability to deliver the threat. However, assuming that the attacker can obtain access, the effectiveness of the attack is now determined by the amplifiers and inhibitors present in the target system. These steps have direct analogs in the notion of motive, means, and opportunity, as Exhibit 55.4 shows.

EXHIBIT 55.4 Jones's Model Showing Motive, Means, and Opportunity

55.5.1 Motive.

Motive is critically important to understanding an attack. Why an attacker attacks often helps us understand the attack and, ultimately perhaps, who the attacker is. Traditional profiling matches evidence to known perpetrator profiles to understand the perpetrator. We do the same thing in cyber investigation.

An attacker who steals personally identifiable information for the purpose of identity theft or credit card fraud has an entirely different motive from a disgruntled employee who performs the same act to get even with his or her employer. In the former case, the attacker is motivated strictly by financial gain. In the latter, the attacker is motivated by a need for revenge.

Understanding the motivation helps us to determine the level of threat. In the former case, since the motivation is financial, the threat is significant and potentially far reaching because the attacker could attempt to maximize financial return. In the latter case, the threat could be somewhat less because, having stolen the information, the attacker might publicize his or her act in order to get the revenge desired. This might result in extortion attempts or leaks to the media, but not in an attempt to profit by selling the information.

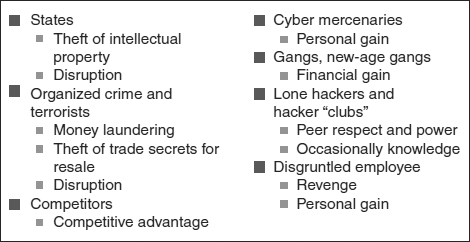

Additionally, analysis of motivation can help determine if the attacker is likely to be an individual or is part of a group. Groups tend to have somewhat different motivations from individuals, and they manage their attacks differently. There are several classes of threat agents, and each one has its own unique motive set. A summary appears in Exhibit 55.5.

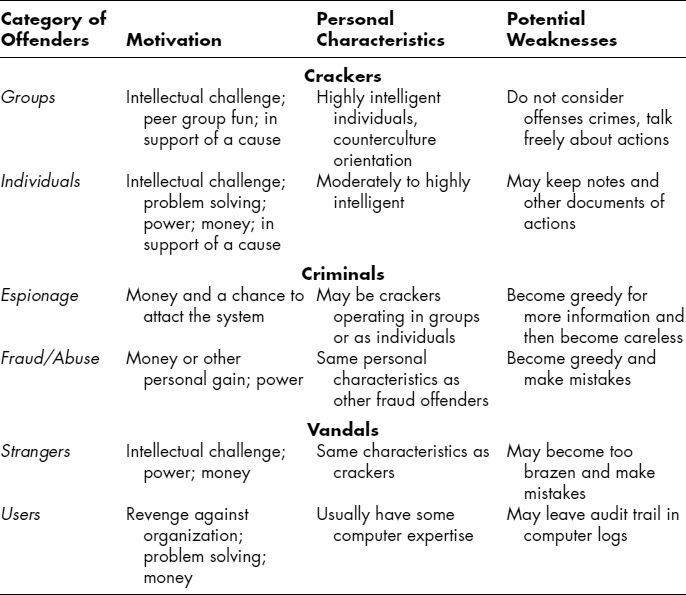

The FBI, prior to 1995, described motive in general terms in its adversarial matrix.20 Although this matrix needs updating for current trends and technology, it still is amazingly accurate and provides useful guidelines to the investigator. The behavioral characteristics appear in Exhibit 55.6, which is drawn from a British source.21 These characteristics equate, approximately, to motive.

EXHIBIT 55.5 Types of Threat Agents and Their Motivations

EXHIBIT 55.6 Adversarial Matrix Behavioral Characteristics

Jones also describes a motivation taxonomy:

- Political

- Secular

- Crime

- Personal gain

- Revenge

- Financial

- Knowledge or information

55.5.2 Means.

Means is a very important part of the profile. In cyber investigation, we generally equate means directly to the tools and techniques used in the attack. Indirectly we relate means to skill level of the attacker. We live in an Internet-enabled society. That state of affairs extends to computer-related crime. Relatively unskilled threat agents can deliver moderately sophisticated attacks simply because the means is readily available on the Internet to enable their actions. Analysis of evidence for indications of skill level helps us to eliminate suspects.

A word on investigative process is in order here. Although it may seem as if the right approach to an investigation is to focus on the probable perpetrator, in reality, it is far more productive, initially at least, to focus on those who absolutely could not be the perpetrator. This is an extension of Sherlock Holmes's admonition: “It is an old maxim of mine that when you have excluded the impossible, whatever remains, however improbable, must be the truth.”22 The point here is that it is far more efficient to eliminate those who could not have participated in the attack. In cyber investigations, doing this becomes even more important because of the huge universe of possible participants in a network-based attack.

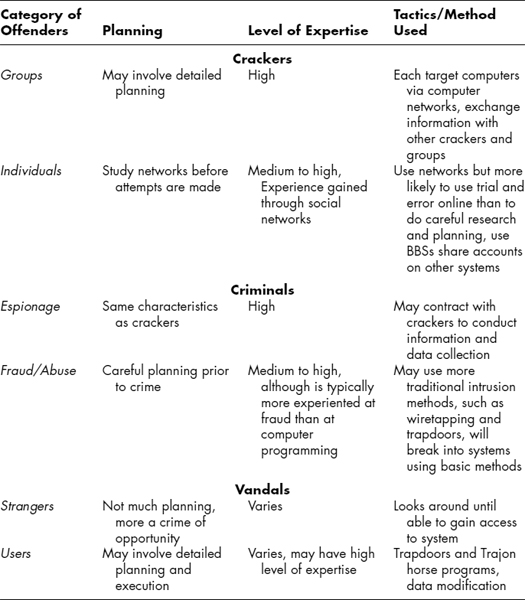

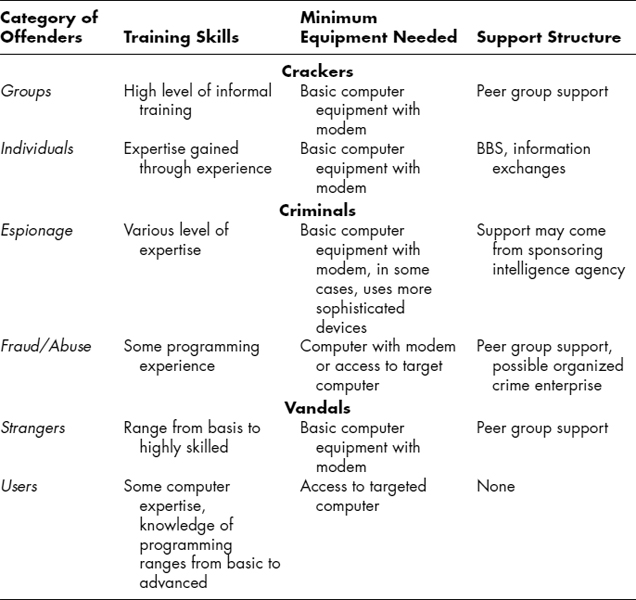

The FBI adversarial matrix of operational characteristics appears in Exhibit 55.7. Exhibit 55.8 extends the operational characteristics and includes specific tools, techniques, and support required. These equate, approximately, to means.

55.5.3 Opportunity.

The Jones threat model describes the notion of opportunity. Considered with means, opportunity helps determine whether an attacker is a credible threat agent. Opportunity not only reflects Jones's threat model but also includes such things as knowledge of the victim system. The insider threat is an important piece of the opportunity puzzle: an insider can be the attacker him- or herself or a confederate of the threat agent. Besides insider help, the attacker may have the support of a group of some sort, such as a hacker gang, drug gang, or organized crime group.

55.6 SOME USEFUL TOOLS.

The usual tools for digital investigation include tools for:

- Computer forensic imaging and analysis

- Network forensic/log aggregation and analysis

- Malware discovery

- Media imaging (without analysis)

- Network discovery

- Remote (over-the-network) computer forensic analysis and imaging

EXHIBIT 55.7 FBI Adversarial Matrix of Operational Characteristics

These tools are well known in the cyber forensic community and do not warrant further elucidation here.23 Specific products come and go, so it is not appropriate to provide that level of detail here.

In addition, there are some nonstandard tools that can make the EEDI process much easier. These include:

- Link analysis

- Attack-tree analysis

- Modeling

- Statistical analysis

Unlike the standard tools, these specialized tools bear explanation. Again, however, specific products come and go. Where examples are provided, the specific product that generated the example will be listed along with its source at the time of writing.

EXHIBIT 55.8 FBI Adversarial Matrix Resource Characteristics

55.6.1 Link Analysis.

In this author's view, link analysis is the single most useful technique for cyber investigators. Link analysis allows the investigator to analyze large data sets for nonobvious relationships. Link analysis is used in the investigation of complicated criminal activities, such as fraud, drug-related activities and groups, terrorist acts and groups, and organized crime. It is used far less commonly in analysis of cyber crime, but it should be integrated into cyber investigations.

The core theory behind link analysis is that pairings of related items (e.g., people and addresses, source and destination IP addresses, hacker aliases and real names, etc.) can be analyzed to find relationships that are not immediately obvious. We do that by testing for associations in suitable pairs of data sets.

An example of such an analysis is an analysis of hackers and groups. We might start by pairing hacker aliases and real names. These can be collected in a simple spreadsheet in columns with the first column as hacker aliases. Through research, the real names of some of the hackers may be discovered and be placed into their own column next to their associated aliases. Next, research may reveal hacks associated with individual aliases or, perhaps, real names. These go into their own column in the same row with their associated hackers. Finally, perhaps the groups with which the hackers are associated can provide useful connections. These groups are researched and placed in their own column on the rows associated with the hackers who are members.

The link analyzer accepts the input two columns at a time and iteratively analyzes the relationships. Some of the things that the analysis might reveal are multiple aliases for the same hacker, multiple hackers participating in the researched hacks, and membership in various hacker groups. In turn, membership in a particular hacker group may imply participation in individual hacks by members of the group who were not initially reported as being involved.
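The alias-and-group example can be approximated in a few lines. The Python sketch below substitutes a union-find (connected components) pass for a dedicated link analyzer: it reads each row two columns at a time, links the paired values, and reports which entities end up connected. The aliases, names, hacks, and groups are invented. Connectivity alone says nothing about how strong a link is; a connection that runs only through a shared group is exactly the kind of implied participation that still has to be verified.

```python
from itertools import combinations

COLUMNS = ("alias", "real_name", "hack", "group")


def find(parent, x):
    """Return the representative of x's component (with path halving)."""
    while parent[x] != x:
        parent[x] = parent[parent[x]]
        x = parent[x]
    return x


def link_components(rows):
    """Group entities that are connected through any chain of row pairings."""
    parent = {}
    for row in rows:
        # Tag each value with its column so identical strings in different
        # columns stay distinct entities.
        entities = [(col, row[col]) for col in COLUMNS if row.get(col)]
        for entity in entities:
            parent.setdefault(entity, entity)
        # Take the row two columns at a time and link each pair of values.
        for a, b in combinations(entities, 2):
            root_a, root_b = find(parent, a), find(parent, b)
            if root_a != root_b:
                parent[root_a] = root_b
    components = {}
    for entity in parent:
        components.setdefault(find(parent, entity), set()).add(entity)
    return list(components.values())


rows = [
    {"alias": "n1ghtowl", "real_name": "Person A",
     "hack": "Site X defacement", "group": "Group 1"},
    {"alias": "gr1m", "real_name": "Person A"},
    {"alias": "ph4ntom", "hack": "Database Y dump", "group": "Group 1"},
    {"alias": "lurker", "hack": "Portal Z outage"},
]
for component in link_components(rows):
    print(sorted(f"{col}={value}" for col, value in component))
```

Here the two aliases resolve to the same person, and membership in Group 1 ties that person to the database dump attributed to ph4ntom; lurker remains isolated.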

A more difficult problem to solve is the relationship between attack source IP addresses and target addresses. Often these relationships are clustered. In link analysis language, a cluster is

a group of entities that are bound more tightly to one another by the links between them than they are to the entities that surround them.24

Since the entities within a cluster are more tightly interconnected within the cluster than they are with entities outside of the cluster, these clustered entities usually represent activities of interest.

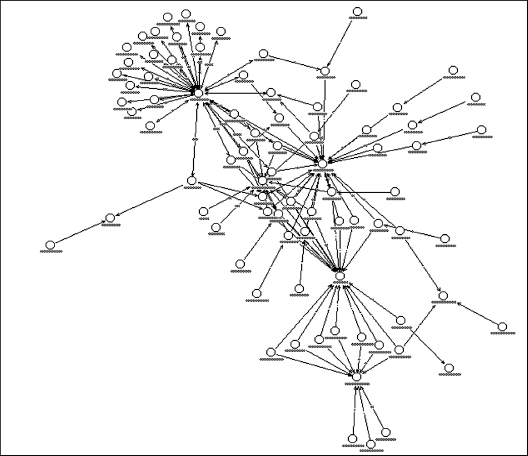

Applying this concept to a large log from a firewall, we might take all of the denied connections, place their source and destination addresses in the link analyzer, and look for relationships. That might yield a complicated pattern such as the one shown in Exhibit 55.9.

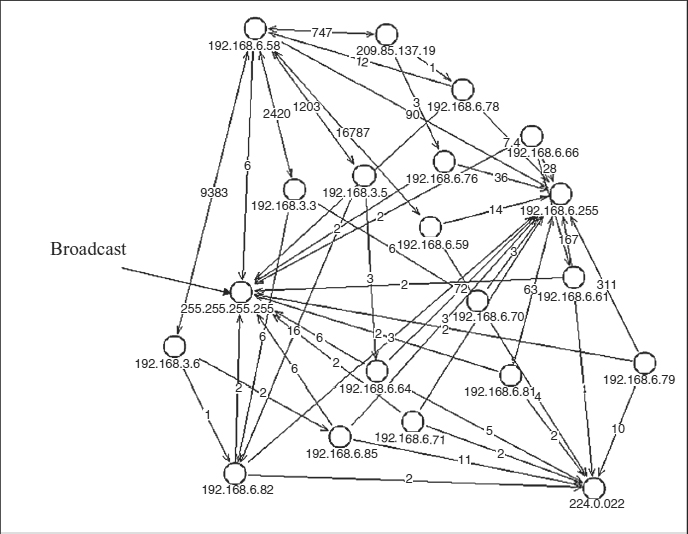

This map can be simplified by performing a cluster analysis as shown in Exhibit 55.10.

The clustering operation on the data in Exhibit 55.9 reveals the cluster in Exhibit 55.10. This simplified analysis in turn shows two interesting relationships:

- It quickly identifies the IP addresses that are broadcasting.

- It clearly shows several IP addresses that are receiving a lot of traffic as well as the sources of that traffic.

The cluster map in Exhibit 55.10 is far easier to read than the map in Exhibit 55.9. Considering that the original log had several thousand entries, this is a much improved way to see nonobvious relationships; it is much easier than reading the log data or manipulating a spreadsheet.
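A dedicated link analyzer performs the cluster analysis itself, but the two relationships listed above can be approximated without one. The Python sketch below is not a clustering algorithm; it substitutes simple degree counts over the (source, destination) pairs of denied connections. Sources reaching the most distinct destinations stand out as broadcasters, and destinations contacted by the most distinct sources are listed together with those sources. The sample pairs are invented.

```python
from collections import defaultdict


def summarize_denied(pairs, top=3):
    """Rank broadcasters and heavily targeted hosts among denied connections."""
    fan_out = defaultdict(set)  # source -> distinct destinations it tried to reach
    fan_in = defaultdict(set)   # destination -> distinct sources that tried to reach it
    for src, dst in pairs:
        fan_out[src].add(dst)
        fan_in[dst].add(src)
    broadcasters = sorted(fan_out, key=lambda s: len(fan_out[s]), reverse=True)[:top]
    hot_targets = sorted(fan_in, key=lambda d: len(fan_in[d]), reverse=True)[:top]
    return ([(s, len(fan_out[s])) for s in broadcasters],
            [(d, sorted(fan_in[d])) for d in hot_targets])


denied = [
    ("10.0.0.5", "10.0.0.255"), ("10.0.0.5", "255.255.255.255"),
    ("10.0.0.5", "224.0.0.251"),
    ("198.51.100.20", "10.0.0.80"), ("203.0.113.7", "10.0.0.80"),
    ("192.0.2.44", "10.0.0.80"), ("203.0.113.7", "10.0.0.25"),
]
broadcasters, hot_targets = summarize_denied(denied, top=1)
print("broadcasting:", broadcasters)
print("receiving heavy traffic:", hot_targets)
```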

55.6.2 Attack-Tree Analysis.

Attack-tree analysis is a technique for analyzing possible attack scenarios on a compromised network. Its purpose is to hypothesize the attack methodology and calculate the probabilities of several possible scenarios. Once these probabilities are calculated, the investigator can hypothesize where to look for evidence. In a complicated attack on a large distributed network, having a set of these models on hand can be a big help. The analyst prepares various attack-tree models in advance and then modifies them to be consistent with the facts of a particular incident. This limits the number of high-probability attack paths and simplifies the evidence discovery process.
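A common way to represent an attack tree is with AND and OR nodes. The Python sketch below, assuming that formulation, independent leaf steps, and invented probability estimates, enumerates every attack scenario in a tree and ranks the scenarios by probability, so the investigator can start looking for evidence of the most likely path first. The node names and probabilities are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    gate: str = "LEAF"   # "LEAF", "AND", or "OR"
    p: float = 0.0       # estimated probability; used only for leaves
    children: list[Node] = field(default_factory=list)


def scenarios(node):
    """Enumerate attack scenarios as (leaf steps, probability) pairs.

    Assumes leaf steps are independent, so a scenario's probability is the
    product of its leaf probabilities.
    """
    if node.gate == "LEAF":
        return [([node.name], node.p)]
    child_scenarios = [scenarios(child) for child in node.children]
    if node.gate == "OR":
        # Any single child scenario achieves the parent goal.
        return [s for options in child_scenarios for s in options]
    if node.gate != "AND":
        raise ValueError(f"unknown gate {node.gate!r}")
    # AND: pick one scenario from every child and combine them.
    combined = [([], 1.0)]
    for options in child_scenarios:
        combined = [(steps + more, p * q)
                    for steps, p in combined for more, q in options]
    return combined


root = Node("exfiltrate customer database", "OR", children=[
    Node("via web server", "AND", children=[
        Node("exploit CGI flaw", p=0.3),
        Node("pivot to database host", p=0.5),
    ]),
    Node("via insider", "AND", children=[
        Node("insider copies data", p=0.1),
        Node("removes it on USB drive", p=0.6),
    ]),
    Node("use stolen DBA password", p=0.05),
])

for steps, p in sorted(scenarios(root), key=lambda s: s[1], reverse=True):
    print(f"{p:.2f}  " + " -> ".join(steps))
```

With these estimates, the ranking points first at the web server and database host logs; the insider and stolen-password scenarios remain on the list as alternatives to rule out.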

55.6.3 Modeling.

“Attack modeling” is a broad term that refers to all of the techniques used to simulate attack behavior. The author uses Coloured Petri Nets (CPNets).25 CPNets are a graphical representation of a mathematical formalism that allows the modeling and simulation of attack behavior on a network. To use CPNets effectively, the investigator must understand the enterprise in terms of its security-policy domains. The author defines security-policy domains in this way: “A security policy domain, Ep, consists of all of the elements, e, of an enterprise that conform to the same security policy, p.”26

EXHIBIT 55.9 Link Analyzer Relationship Map of Source and Destination IP Addresses

EXHIBIT 55.10 Map in Exhibit 55.9 Reduced through Cluster Analysis

The policy domains are represented along with the communications links between them in a graphical chart. Once the mathematical definitions of the domains and the communications conditions that exist between them have been defined, the behavior of the attack can be simulated. The simulations assist the investigator in tracing the attack source.
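Coloured Petri Nets require dedicated tools, so the Python sketch below does not attempt one. It covers only the two ingredients named above, under simplifying assumptions: it builds each security-policy domain E_p as the set of elements that conform to policy p, then runs a breadth-first search over the permitted links between domains to show which domains an attack entering at one point could reach, and by what path. The inventory, policy names, and links are hypothetical, and unlike a CPNet model, the sketch ignores state, timing, and the conditions attached to each link.

```python
from collections import defaultdict, deque

# Hypothetical inventory: enterprise element -> the security policy it conforms to.
ELEMENT_POLICY = {
    "web01": "dmz", "web02": "dmz",
    "app01": "internal-app", "db01": "database",
    "hr-laptop": "workstation", "dev-laptop": "workstation",
}
# Hypothetical directed links permitted between domains; "internet" is an
# external entry point rather than an enterprise domain.
LINKS = {("internet", "dmz"), ("dmz", "internal-app"),
         ("internal-app", "database"), ("workstation", "internal-app")}


def policy_domains(element_policy):
    """Build E_p = {e : e conforms to policy p} for every policy p."""
    domains = defaultdict(set)
    for element, policy in element_policy.items():
        domains[policy].add(element)
    return dict(domains)


def attack_paths(entry_domain, links):
    """Breadth-first search: shortest domain-to-domain path to each reachable domain."""
    paths = {entry_domain: [entry_domain]}
    queue = deque([entry_domain])
    while queue:
        current = queue.popleft()
        for src, dst in sorted(links):
            if src == current and dst not in paths:
                paths[dst] = paths[current] + [dst]
                queue.append(dst)
    return paths


domains = policy_domains(ELEMENT_POLICY)
paths = attack_paths("internet", LINKS)
print(sorted(domains["workstation"]))  # ['dev-laptop', 'hr-laptop']
print(paths["database"])               # ['internet', 'dmz', 'internal-app', 'database']
print(sorted(set(domains) - set(paths)))  # domains not reachable from the internet
```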

55.7 CONCLUDING REMARKS.

In this chapter, we discussed a structured approach to cyber investigation along with some of the tools and techniques that support such an approach. Nordby27 suggests that reliable methods of inquiry possess the characteristics of “integrity, competence, defensible technique and relevant experience.” The nature of cyber investigation and cyber forensics supports this through rigor, underlying models and frameworks, and investigators who adhere to those characteristics.

55.8 FURTHER READING

Brown, C. L. T. Computer Evidence: Collection & Preservation. Hingham, MA: Charles River Media, 2005.

Carrier, B. File System Forensic Analysis. Upper Saddle River, NJ: Addison-Wesley, 2005.

Carvey, H. Windows Forensics and Incident Recovery. Upper Saddle River, NJ: Addison-Wesley, 2004.

Farmer, D., and W. Venema. Forensic Discovery. Upper Saddle River, NJ: Addison-Wesley, 2005.

Jones, K. J., R. Bejtlich, and C. W. Rose. Real Digital Forensics: Computer Security and Incident Response. Upper Saddle River, NJ: Addison-Wesley, 2005.

Marcella, A., and D. Menendez. Cyber Forensics: A Field Manual for Collecting, Examining, and Preserving Evidence of Computer Crimes, Second Edition. Boca Raton, FL: Auerbach, 2007.

Steel, C. Windows Forensics: The Field Guide for Corporate Computer Investigations. Hoboken, NJ: John Wiley & Sons, 2006.

55.9 NOTES

1. Much of this chapter is excerpted with minor edits from P. Stephenson, “Structured Investigation of Digital Incidents in Complex Computing Environments,” PhD diss., Oxford Brookes University, 2004, available in the British Library.

2. P. Stephenson, Investigating Computer Related Crime (New York: CRC Press, 1999).

3. American Academy of Forensic Science, 2008: www.aafs.org/default.asp?section_id=membership&page_id=how_to_become_an_aafs_member#General.

4. M. Rogers, A. Brinson, and A. Robinson, “A Cyber Forensics Ontology: Creating a New Approach to Studying Cyber Forensics,” Journal of Digital Investigation (2006): S37–S43.

5. Microsoft® Encarta® 2008, “Metaphysics” [DVD] (Redmond, WA: Microsoft Corporation, 2008).

6. Microsoft® Encarta® 2008 Dictionary [DVD] (Redmond, WA: Microsoft Corporation, 2008).

7. Open-source ontology editor available from http://protege.stanford.edu/.

8. Digital Forensic Research Workshop: http://dfrws.org/.

9. DFRWS attendees, “A Road Map for Digital Forensic Research,” Report from the First Digital Forensic Research Workshop, August 7–8, 2001, Utica, NY. Available: Air Force Research Laboratory, Rome Research Site, Rome, NY, http://dfrws.org/2001/dfrws-rm-final.pdf.

10. There have also been attempts to relate cyber investigation to the generalized Zachman Enterprise Architecture Framework: J. A. Zachman, “A Framework for Information Systems Architecture,” IBM Systems Journal 26, No. 3 (1987), www.research.ibm.com/journal/sj/263/ibmsj2603E.pdf.

11. DFRWS attendees, “Road Map for Digital Forensic Research.”

12. DFRWS attendees, “Road Map for Digital Forensic Research.”

13. The DFRWS definitions in these sections are from Digital Forensics Research Workshop, “Day 1-DF-Science, Group A Session 1 (D1-A1), Digital Forensic Framework,” Work notes for the 2003 Digital Forensics Research Workshop, August 6, 2003. For more information on the DFRWS Framework, see DFRWS attendees, “Road Map for Digital Forensic Research.”

14. Author's experience over 20 years of conducting incident response.

15. A. C. Doyle, “Silver Blaze.” In: The Memoirs of Sherlock Holmes (London: Newnes, 1893).

16. A gross data collection is a file or files containing data collected from a digital source that may contain individual evidentiary data.

17. This and other formal definitions appear in Stephenson, “Structured Investigation of Digital Incidents in Complex Computing Environments.”

18. See Chapter 8, Sections 8.1 to 8.6, in this Handbook.

19. Andrew Jones describes a cyber threat delivery model in his paper “Identification of a Method for the Calculation of Threat in an Information Environment,” unpublished (April 2002, revised 2004).

20. D. Icove, K. Seger, and W. VonStorch, Computer Crime: A Crimefighter's Handbook (Sebastopol, CA: O'Reilly & Associates, 1995).

21. Stephenson, “Structured Investigation of Digital Incidents in Complex Computing Environments.”

22. A. C. Doyle, The Sign of Four (London: Blackett, 1890).

23. For more details, see the recommendations for further reading.

24. i2 Limited, “About Clusters,” Analyst's Notebook v6 Help file, August 9, 2005; www.i2.co.uk.

25. Coloured Petri Nets at the University of Aarhus: www.daimi.au.dk/CPnets.

26. P. Stephenson and P. Prueitt, “Towards a Theory of Cyber Attack Mechanics,” First IFIP 11.9 Digital Forensics Conference, 2005.

27. S. H. James and J. J. Nordby, Forensic Science: An Introduction to Scientific and Investigative Techniques (Boca Raton, FL: CRC Press, 2003).