CHAPTER 57

DATA BACKUPS AND ARCHIVES

M. E. Kabay and Don Holden


57.1 INTRODUCTION.

Nothing is perfect. Equipment breaks, people make mistakes, and data files become corrupted or disappear. Everyone, and every system, needs a well-thought-out backup and retrieval policy. In addition to making backups, data processing personnel also must consider requirements for archival storage and for retrieval of data copies. Backups also apply to personnel, equipment, and electrical power; for other applications of redundancy, see Chapters 23 and 45 in this Handbook.

57.1.1 Definitions.

Backups are copies of data files or records, made at a moment in time, and primarily used in the event of failure of the active files. Normally, backups are stored on different media from the original data. In particular, a copy of a file on the same disk as the original is an acceptable backup only for a short time; the *.bak, *.bk!, *.wbk, and *.sav files created by programs such as word processors are examples of limited-use backups. However, even a copy on a separate disk loses value as a backup once the original file is modified, unless incremental or differential backups also are made. These terms are described in Section 57.3.3. Typically, backups are taken on a schedule that balances the costs and inconvenience of the process with the probable cost of reconstituting data that were modified after each backup; rational allocation of resources is discussed in Section 57.8.
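The difference between incremental and differential backups comes down to which reference time a run compares against. The minimal Python sketch below shows this, assuming for simplicity that a file needs backing up whenever its modification time is later than that reference time (real products may instead use an archive attribute or a change journal). The function name and sample files are hypothetical.

```python
from datetime import datetime


def select_files(mtimes, last_full, last_backup, mode):
    """Return the sorted names of the files a backup of `mode` would copy.

    mtimes:      dict mapping file name to last-modification datetime
    last_full:   time of the most recent full backup
    last_backup: time of the most recent backup of any kind
    mode:        "full", "differential", or "incremental"
    """
    if mode == "full":
        cutoff = None          # a full backup copies everything
    elif mode == "differential":
        cutoff = last_full     # everything changed since the last full backup
    elif mode == "incremental":
        cutoff = last_backup   # only what changed since the previous backup
    else:
        raise ValueError(f"unknown backup mode: {mode}")
    return sorted(name for name, mtime in mtimes.items()
                  if cutoff is None or mtime > cutoff)


# Hypothetical timeline: full backup on Sept. 1, incremental on Sept. 2.
full_time = datetime(2010, 9, 1)
incr_time = datetime(2010, 9, 2)
files = {
    "ledger.dat": datetime(2010, 8, 30),     # unchanged since the full backup
    "budget.xls": datetime(2010, 9, 1, 12),  # changed before the incremental
    "memo.doc": datetime(2010, 9, 2, 15),    # changed after the incremental
}
print(select_files(files, full_time, incr_time, "differential"))
print(select_files(files, full_time, incr_time, "incremental"))
```

A differential backup grows each day until the next full backup, but a restore needs only the full backup plus the latest differential; incremental backups stay small, but a restore needs every incremental since the last full backup.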

Deletion or corruption of an original working file converts the backup into an original. Those who do not understand this relationship mistakenly believe that once they have a backup, they can safely delete the original file. However, before original files are deleted, as when a disk volume is to be formatted, there must be at least double backups of all required data. Double backups will ensure continued operations should there be a storage or retrieval problem on any one backup medium.

This chapter uses these abbreviations to denote data storage capacities:

KB = kilobyte = 1,024 bytes (characters) (∼10³)

MB = megabyte = 1,024 KB = 1,048,576 bytes (∼10⁶)

GB = gigabyte = 1,024 MB = 1,073,741,824 bytes (∼10⁹)

TB = terabyte = 1,024 GB = 1,099,511,627,776 bytes (∼10¹²)

PB = petabyte = 1,024 TB = 1,125,899,906,842,624 bytes (∼10¹⁵)

EB = exabyte = 1,024 PB = 1,152,921,504,606,846,976 bytes (∼10¹⁸)1
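The byte counts above can be checked with a few lines of Python. The sketch also shows how far each binary unit drifts from its decimal approximation: about 2.4 percent for KB, growing to about 15 percent for EB.

```python
# Binary units as defined in this chapter: each step multiplies by 1,024.
UNITS = ["KB", "MB", "GB", "TB", "PB", "EB"]


def bytes_in(unit):
    """Number of bytes in one unit, using powers of 1,024."""
    return 1024 ** (UNITS.index(unit) + 1)


for power, unit in enumerate(UNITS, start=1):
    exact = bytes_in(unit)
    decimal = 10 ** (3 * power)  # the decimal approximation shown above
    drift = (exact / decimal - 1) * 100
    print(f"{unit} = {exact:,} bytes, {drift:.1f}% more than 10^{3 * power}")
```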

Archives—Although backups are used to store and retrieve operational data chronologically, data archives are used to store data that are seldom or no longer used but that, when needed for reference, for compliance (e.g., with Food and Drug Administration (FDA), Health Insurance Portability and Accountability Act of 1996 (HIPAA), or Securities and Exchange Commission (SEC) requirements), or for legal actions such as e-discovery (turning over electronic evidence in a legal proceeding), must be retrieved quickly and systematically. Some new data storage management systems combine both backup and this expanded archival capability.

Continuous Data Protection (CDP)—The Continuous Data Protection Special Interest Group (CDP SIG) of the Storage Networking Industry Association (SNIA) defines CDP as:

a methodology that continuously captures or tracks data modifications and stores changes independent of the primary data, enabling recovery points from any point in the past. CDP systems may be block-, file- or application-based and can provide fine granularities of restorable objects to infinitely variable recovery points. So, according to this definition, all CDP solutions incorporate these three fundamental attributes:

- Data changes are continuously captured or tracked

- All data changes are stored in a separate location from the primary storage

- Recovery point objectives are arbitrary and need not be defined in advance of the actual recovery.2

Virtual Tape Library (VTL)—A disk-based system that emulates a tape library, tape drives, and media. These systems are used in disk-based backup and restore operations.3

57.1.2 Need.

Backups and storage archives are used for many purposes:

- To replace lost or corrupted data with valid versions

- To satisfy audit and legal requirements for access to retained data

- In forensic examination of data to recognize and characterize a crime and to identify suspects

- For statistical purposes in research

- To satisfy requirements of due care and diligence in safeguarding corporate assets

- To meet unforeseen requirements

57.2 MAKING BACKUPS.

Because data change at different rates in different applications, backups may be useful when made at frequencies ranging from milliseconds to years.

57.2.1 Parallel Processing.

The ultimate backup strategy is to do everything twice at the same time. Computer systems such as HP NonStop and Stratus use redundant components at every level of processing; for example, they use arrays of processors, dual input/output (I/O) buses, multiple banks of random access memory, and duplicate disk storage devices to permit immediate recovery should anything go awry. Redundant systems use sophisticated communications between processors to ensure that their results are identical. If any computational components fail, processing can continue uninterrupted while the defective components are replaced.

57.2.2 Hierarchical Storage Systems.

Large computer systems with terabytes or petabytes of data typically use a hierarchical storage system to place often-used data on fast, relatively expensive disks while migrating less-used data to less expensive, somewhat slower storage media such as slower magnetic disks, optical media, or magnetic tapes. Users need have no knowledge of, or involvement in, such migration; all files are listed by the file system and can be accessed without special commands. Because the secondary storage media are stored in dense cylindrical arrays, usually called silos, a single silo may hold petabytes of data, and fast-moving robotic arms can locate and load the right unit within seconds. Users may experience a brief delay of a few seconds as data are copied from the secondary storage units back onto the primary hard disks, but otherwise the migration is transparent to them. This system also provides a degree of temporary backup: data are not erased from the silo media when they are copied to magnetic disk, nor are data removed from disk when they are appended to secondary storage, so this data remanence leaves duplicate copies on both tiers. For more information on disk-based storage systems, see Chapter 36 in this Handbook.
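A toy model of the migration and recall behavior described above follows. The class, its method names, and the idle-time policy are illustrative assumptions, not the interface of any real hierarchical storage product; dictionaries stand in for the fast disks and the silo media.

```python
import time


class HierarchicalStore:
    """Toy two-tier store: fast primary disks plus slower secondary media.

    Files idle for at least `idle_seconds` are copied to the secondary tier
    by migrate(); purge() later frees their primary space. Reading a purged
    file recalls it transparently. Copies are not erased from the tier they
    came from, so duplicates linger on both tiers.
    """

    def __init__(self, idle_seconds, clock=time.monotonic):
        self.idle_seconds = idle_seconds
        self.clock = clock
        self.primary = {}      # name -> data on fast disk
        self.secondary = {}    # name -> data on silo media
        self.last_access = {}  # name -> time of last read or write

    def write(self, name, data):
        self.primary[name] = data
        self.secondary.pop(name, None)  # any older secondary copy is now stale
        self.last_access[name] = self.clock()

    def read(self, name):
        if name not in self.primary:
            # Recall from secondary media; the secondary copy is kept.
            self.primary[name] = self.secondary[name]
        self.last_access[name] = self.clock()
        return self.primary[name]

    def migrate(self):
        """Copy idle files to the secondary tier; return their names."""
        now = self.clock()
        copied = []
        for name, data in self.primary.items():
            idle = now - self.last_access[name] >= self.idle_seconds
            if idle and name not in self.secondary:
                self.secondary[name] = data
                copied.append(name)
        return copied

    def purge(self):
        """Free primary space held by files that already have a secondary copy."""
        for name in [n for n in self.primary if n in self.secondary]:
            del self.primary[name]
```

Separating migrate() from purge() mirrors the remanence point in the text: until primary space is actually needed, a migrated file exists on both tiers.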

57.2.3 Disk Mirroring.

There are several methods for duplicating disk operations so that disk failures cause limited or no damage to critical data. Continuous data protection (CDP) provides infinitely granular recovery point objectives (RPOs), and some implementations can provide near-instant recovery time objectives (RTOs). The fine granularity arises because CDP typically captures data on a write-by-write basis: every modification of data is recorded, so recovery can occur to any point, down to the boundary between individual write operations.
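The write-by-write capture described above can be sketched as a time-ordered journal kept apart from the primary data. This is a minimal illustration under assumed simplifications (numbered blocks held in a dictionary, numeric timestamps); the class and method names are hypothetical and do not correspond to any particular CDP product.

```python
import bisect


class CdpJournal:
    """Minimal continuous-data-protection journal (illustrative only).

    Every write to the protected volume is also appended, with a timestamp,
    to a journal stored separately from primary storage, so the volume can
    be rebuilt as of any moment (an arbitrary recovery point).
    """

    def __init__(self, baseline):
        self.baseline = dict(baseline)  # block number -> contents at start
        self.times = []                 # write timestamps, non-decreasing
        self.writes = []                # (block number, contents) per write

    def record(self, timestamp, block, contents):
        if self.times and timestamp < self.times[-1]:
            raise ValueError("journal entries must be in time order")
        self.times.append(timestamp)
        self.writes.append((block, contents))

    def image_at(self, point_in_time):
        """Rebuild the volume as it stood just after `point_in_time`."""
        image = dict(self.baseline)
        # Replay every write whose timestamp is <= the recovery point.
        count = bisect.bisect_right(self.times, point_in_time)
        for block, contents in self.writes[:count]:
            image[block] = contents
        return image


journal = CdpJournal({0: "boot", 1: "empty"})
journal.record(10.0, 1, "report v1")
journal.record(20.0, 1, "report v2")
journal.record(30.0, 1, "CORRUPTED")   # e.g., a bad write to be undone
print(journal.image_at(25.0))          # state before the corruption
```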

57.2.3.1 RAID.

Redundant arrays of independent (originally inexpensive) disks (RAID) were described in the late 1980s and have become a practical approach to providing fault-tolerant mass storage. The falling price of disk storage has allowed inexpensive disks to be combined into highly reliable units, with different levels of redundancy among the components, for applications that require full-time availability. The disk architecture includes special measures to ensure that every sector of the disk can be checked for validity at every input and output operation. In a mirrored configuration, if the primary copy of a file shows data corruption, the secondary copy is used and the system automatically makes corrections to resynchronize the primary copy. From the user's point of view, there is no interruption in I/O and no error.

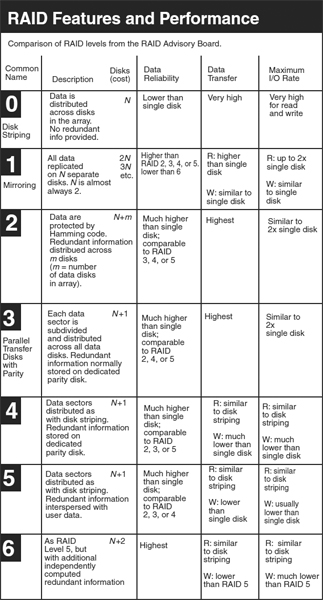

RAID levels define how data are distributed and replicated across multiple drives. The most commonly used levels are described in Exhibit 57.1.4
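Parity-based RAID levels such as RAID 4 and RAID 5 (which rotates the parity block among the drives) rely on byte-wise exclusive-OR (XOR): the parity block is the XOR of the data blocks in a stripe, so any single missing block equals the XOR of all the survivors. The Python sketch below illustrates only that arithmetic for one stripe, under the assumption of equal-length blocks and a single failed drive; the function names are hypothetical.

```python
from functools import reduce


def xor_blocks(blocks):
    """Byte-wise XOR of equal-length blocks."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def make_stripe(data_blocks):
    """Return the data blocks plus one parity block (RAID 4/5 style)."""
    return list(data_blocks) + [xor_blocks(data_blocks)]


def rebuild(stripe, failed_index):
    """Recover the block on a single failed drive from the surviving blocks."""
    survivors = [blk for i, blk in enumerate(stripe) if i != failed_index]
    return xor_blocks(survivors)


stripe = make_stripe([b"ACCT", b"DATA", b"2010"])  # three data drives + parity
print(rebuild(stripe, 1))  # reconstruct the block from the "failed" drive 1
```

The same identity explains why such arrays survive only one drive failure per stripe: with two blocks missing, the XOR of the survivors no longer determines either one.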

57.2.3.2 Storage Area Network and Network-Attached Storage.

A storage area network (SAN) is a dedicated network that allows multiple servers to communicate with multiple storage devices using the Small Computer System Interface (SCSI) protocol. SANs carry SCSI commands over a serial transport such as Fibre Channel, or over standard TCP/IP networks using Internet SCSI (iSCSI). They give multiple servers access to raw devices, such as disk and tape drives.

Network-attached storage (NAS) effectively moves storage out from behind the server and puts it directly on the transport network. Unlike file servers, which have both SCSI and local area network (LAN) adapters, a NAS appliance gives multiple servers access to a file or file system via standard file-sharing protocols such as Network File System (NFS), Common Internet File System (CIFS), or Direct Access File System (DAFS). One NAS disadvantage is that it shifts storage transactions from parallel SCSI connections to the production network. The backbone LAN therefore has to handle both normal end-user traffic and storage requests, including backup operations. For more information on SAN and NAS, see Chapter 36.

57.2.3.3 Workstation and Personal Computer Mirroring.

Anyone who has more than one computer may have to synchronize files to make them the same on two or more computers. Beyond convenience, synchronizing the computers provides excellent backup, which supplements daily incremental backups (i.e., backups of all the files that have changed since the previous backup) and periodic full backups. The inactive synchronized computers serve as daily full backups of the currently active computer. For example, the Laplink Gold and Microsoft SyncToy products (see also Section 57.3.4.4) compare files on the source and target machines and allow one to choose how to transfer changes:

- Clone the target machine using the source machine as the standard (i.e., make the target identical to the source).

- Add all new files from the source to the target without deleting any files on the target.

- Add all new files from either machine to the other without deleting files.
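The three transfer choices listed above can be expressed compactly. In the Python sketch below, dictionaries stand in for the two machines' file systems, and the mode names "clone", "add_new", and "merge_new" are hypothetical labels, not the option names used by Laplink Gold or SyncToy. Like the list, it handles only new files; real products also compare timestamps to propagate changed files.

```python
def synchronize(source, target, mode):
    """Apply one of the three transfer choices to copies of two file sets.

    source, target: dicts mapping file name to contents
    mode: "clone"     makes the target identical to the source
          "add_new"   copies files the target lacks, deleting nothing
          "merge_new" copies new files in both directions, deleting nothing
    Returns the resulting (source, target) pair; the inputs are unchanged.
    """
    src, tgt = dict(source), dict(target)
    if mode == "clone":
        tgt = dict(src)
    elif mode == "add_new":
        for name, data in src.items():
            tgt.setdefault(name, data)   # keep the target's existing files
    elif mode == "merge_new":
        for name, data in src.items():
            tgt.setdefault(name, data)
        for name, data in tgt.items():
            src.setdefault(name, data)
    else:
        raise ValueError(f"unknown mode: {mode}")
    return src, tgt


laptop = {"a.txt": "1", "b.txt": "2"}
desktop = {"b.txt": "old", "c.txt": "3"}
print(synchronize(laptop, desktop, "add_new")[1])
```

Note that "merge_new" leaves b.txt different on the two machines; resolving such conflicts is exactly where timestamp comparison and user confirmation come in.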

Software solutions also can provide automatic copying of data onto separate internal or external disks. There are dozens of such utilities available, many of them free.5 Examples include:

- SureSync software, which runs on Windows Server 2003, XP, and Vista, and which can replicate and synchronize files on Windows, Mac, and Linux systems.

- NFTP 1.64, which runs on Windows, OS/2, BeOS, Linux, and Unix; it includes “transfer resume, automatic reconnect, secure authentication, extensive firewall and proxy support, support for many server types, built-in File Transfer Protocol (FTP) search, mirroring, preserving timestamps and access rights, control connection history.”6

- UnixWare Optional Services include Disk Mirroring software for what was originally the Santa Cruz Operation (SCO) Unix.

- Double-Take software goes beyond periodic backup by capturing byte-level changes in real time and replicating them to an alternate server, either locally or across the globe.

EXHIBIT 57.1 RAID Levels

Source: Used with kind permission of Alan Freedman, Computer Desktop Encyclopedia (Point Pleasant, PA: Computer Language Company, 2008), www.computerlanguage.com.

Early users of software-based disk mirroring suffered from slower responses when updating their primary files because the system had to complete output operations to the mirror file before releasing the application for further activity. However, today's software uses part of system memory as a buffer to prevent performance degradation. Secondary (mirror) files are nonetheless rarely more than a few milliseconds behind the current status of the primary file.
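The buffering approach described above can be sketched with Python's standard queue and threading modules: the application's write returns as soon as the primary copy is updated, while a background thread applies queued updates to the mirror. The class and its methods are hypothetical, and dictionaries stand in for the primary and mirror files.

```python
import queue
import threading


class AsyncMirror:
    """Sketch of buffered (asynchronous) software mirroring.

    Writes update the primary copy immediately and are queued in memory;
    a background thread applies them to the mirror, so the application does
    not wait for the second write. The mirror lags by whatever is queued.
    """

    def __init__(self):
        self.primary = {}
        self.mirror = {}
        self._pending = queue.Queue()
        threading.Thread(target=self._drain, daemon=True).start()

    def write(self, key, value):
        self.primary[key] = value        # the application continues at once
        self._pending.put((key, value))  # the mirror update is deferred

    def _drain(self):
        while True:
            key, value = self._pending.get()
            self.mirror[key] = value
            self._pending.task_done()

    def flush(self):
        """Block until the mirror has caught up with the primary."""
        self._pending.join()


mirror = AsyncMirror()
for i in range(100):
    mirror.write(f"rec{i}", i)
mirror.flush()
print(mirror.mirror == mirror.primary)
```

The trade-off is the one the text notes: whatever is still in the queue at the moment of a crash has not yet reached the mirror.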

57.2.4 Logging and Recovery.

If real-time access to perfect data is not essential, a well-established approach to high-availability backups is to keep a log file of all changes to critical files. Roll-forward recovery requires:

- Backups that are synchronized with log files to provide an agreed-on starting point

- Markers in the log files to indicate completed sequences of operations (called transactions) that can be recovered

- Recovery software that can read the log files and redo all the changes to the data, leaving out incomplete transactions
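The three requirements above can be illustrated with a minimal roll-forward routine. The log format here (tuples for updates and COMMIT markers, with a dictionary as the backup) is a hypothetical simplification chosen for clarity, not the format of any particular database or file system.

```python
def roll_forward(backup, log):
    """Redo committed transactions from `log` on top of `backup`.

    backup: dict snapshot taken at the point where `log` begins
            (the agreed-on starting point)
    log:    list of tuples in write order, either
            ("SET", txid, key, new_value) or ("COMMIT", txid)
    Updates from transactions without a COMMIT marker are left out.
    """
    committed = {rec[1] for rec in log if rec[0] == "COMMIT"}
    data = dict(backup)
    for rec in log:
        if rec[0] == "SET" and rec[1] in committed:
            _, _, key, value = rec
            data[key] = value
    return data


backup = {"acct1": 100, "acct2": 50}
log = [("SET", 1, "acct1", 80), ("SET", 1, "acct2", 70), ("COMMIT", 1),
       ("SET", 2, "acct1", 0)]   # transaction 2 never completed
print(roll_forward(backup, log))
```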

An alternative to roll-forward recovery is roll-backward recovery, in which diagnostic software scans the log files, identifies the incomplete transactions, and undoes their changes to return the data files to a consistent state. For a detailed discussion of logging, see Chapter 53 in this Handbook.
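Roll-backward recovery needs before-images (the values records held before each change) rather than a separate backup. The sketch below assumes a hypothetical undo log of tuples and assumes that locking kept an incomplete transaction from sharing records with transactions that later committed.

```python
def roll_back(current, undo_log):
    """Undo incomplete transactions using before-images from `undo_log`.

    current:  dict holding the possibly inconsistent data files
    undo_log: list in write order of ("SET", txid, key, old_value) or
              ("COMMIT", txid); old_value is the value before the change
    Assumes locking prevented incomplete and committed transactions from
    updating the same keys concurrently.
    """
    committed = {rec[1] for rec in undo_log if rec[0] == "COMMIT"}
    data = dict(current)
    # Walk backward so the earliest before-image of each key is applied last.
    for rec in reversed(undo_log):
        if rec[0] == "SET" and rec[1] not in committed:
            _, _, key, old_value = rec
            data[key] = old_value
    return data


current = {"acct1": 0, "acct2": 70}
undo_log = [("SET", 1, "acct1", 100), ("SET", 1, "acct2", 50), ("COMMIT", 1),
            ("SET", 2, "acct1", 80)]   # transaction 2 changed acct1 80 -> 0
print(roll_back(current, undo_log))
```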

MS Windows XP and later versions include the System Restore function.7 System Restore uses a disk buffer of at least 200 MB to store several time-stamped copies of critical executable files and settings, including system registry settings, when users install new software or patches. Users can also create restore points themselves. Users can thus return their operating system to a state consistent with that of a prior time. In addition, Windows offers a device driver rollback function for a single level of driver recovery.8

57.2.5 Backup Software.

Most operating systems include utilities for making backups. However, the utilities included with the installation sets are sometimes limited in functionality; for example, they may not provide the full flexibility required to produce backups on different kinds of removable media. Manufacturers of removable media often include specialized backup software suitable for use with their own products.

There are so many backup products on the market for so many operating environments that readers will be able to locate suitable candidates easily with elementary searches. When evaluating backup software, users will want to check for these minimum requirements:

- The software should allow complete control over which files are backed up. Users should be able to obtain a report on exactly which files were successfully backed up and detailed explanations of why certain files could not be backed up.

- Restore operations should be configurable to respect the read-only attribute on files or to override that attribute globally or selectively.

- Data compression should be available.

- A variety of standard, open (not proprietary) data encryption algorithms should be available.

- Backups should include full directory paths on demand, for all files.

- Scheduling should be easy and should not require human intervention once a backup is scheduled.

- Backups must be able to span multiple volumes of removable media; a backup must not be limited to the space available on a single volume.

- If free space is available, it should be possible to put more than one backup on a single volume.

- The backup software must be able to verify the readability of all backups as part of the backup process.

- It should not be easy to create backup volumes that have the same name.

- The restore function should allow selective retrieval of individual files and folders or directories.

- The destination of restored data should be controllable by the user.

- During the restore process, the user should be able to determine whether to overwrite files that are currently in place; the overwriting should be controllable both with file-by-file confirmation dialogs and globally, without further dialog.
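Two of the requirements above, verifying readability and reporting exactly which files were or were not backed up and why, can be combined in a short sketch. It assumes SHA-256 digests as the verification check and a flat destination directory (so, unlike the full-path requirement, files with the same name in different folders would collide); the function names are hypothetical. Re-reading a file just written may be served from the operating system's cache rather than the medium, so production tools often verify in a separate pass.

```python
import hashlib
import shutil
from pathlib import Path


def sha256_of(path):
    """SHA-256 digest of a file, read in 64 KB chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(1 << 16), b""):
            digest.update(chunk)
    return digest.hexdigest()


def backup_and_verify(files, dest_dir):
    """Copy each file into dest_dir, re-read the copy, and report results.

    Returns (backed_up, failures): a list of verified source paths and a
    dict mapping each source path that was not backed up to the reason.
    """
    dest = Path(dest_dir)
    dest.mkdir(parents=True, exist_ok=True)
    backed_up, failures = [], {}
    for src in map(Path, files):
        target = dest / src.name
        try:
            shutil.copy2(src, target)   # copy2 also preserves timestamps
            if sha256_of(src) != sha256_of(target):
                failures[str(src)] = "verification failed: copy differs"
            else:
                backed_up.append(str(src))
        except OSError as exc:          # missing file, permission denied, ...
            failures[str(src)] = f"{type(exc).__name__}: {exc}"
    return backed_up, failures
```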

57.2.6 Removable Media.

The density of data storage on removable media has increased by a factor of millions in the last half century. For example, in the 1970s, an 8-inch word-processing diskette could store up to 128 KB. In contrast, at the time of writing (April 2008), a removable disk cartridge 3 inches in diameter stored 240 GB, provided data transfer rates of 30 MB per second, and cost around $100. Moore's Law, originally an observation that the number of transistors on an integrated circuit doubles at regular intervals, is commonly generalized to predict that the functional capabilities and capacity of computer equipment double every 12 to 18 months for a given cost; a similar trend has held for mass storage and backup media.9
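The two examples above can be turned into a rough doubling period. The sketch uses the chapter's binary unit definitions and compares capacity per medium (not areal density). It assumes, purely for illustration, that the 128 KB diskette dates from 1975; with that assumption, capacity grew by a factor of about 2 million, or roughly 21 doublings, one about every 19 months.

```python
import math

KB = 1024
GB = 1024 ** 3

old_capacity = 128 * KB   # 1970s 8-inch diskette, per the text
new_capacity = 240 * GB   # April 2008 removable cartridge, per the text
ratio = new_capacity / old_capacity
doublings = math.log2(ratio)

# Assumption for illustration only: treat the diskette as dating from 1975.
years = 2008 - 1975
months_per_doubling = 12 * years / doublings

print(f"capacity ratio: {ratio:,.0f}")
print(f"{doublings:.1f} doublings, about one every {months_per_doubling:.0f} months")
```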

57.2.6.1 External Hard Disk Drives.

Many styles of portable, removable stand-alone disk drives with Universal Serial Bus (USB) and FireWire connections are available at relatively low cost. For example, a USB 2.0 portable unit measuring 1.8 centimeters (cm) × 12.4 cm × 7.9 cm, with 250 GB capacity and weighing 155 grams (g), cost $143 in April 2008; it fit easily into a briefcase or a purse to provide backup and recovery for travelers with laptop computers.

For office or higher-volume applications, a 2 TB Western Digital My Book external hard drive with USB 2.0, FireWire 400, and FireWire 800 interfaces cost $526. The 17.4 cm × 15.9 cm × 10.4 cm device weighed 1.9 kilograms, had its own power supply, and could be configured for RAID 1 mirroring or for RAID 0 striping for higher throughput.

Such devices make it easy to create one or more encrypted clones (exact copies, although not necessarily bit-for-bit copies)10 of all of one's laptop or tower computer data, and can allow one to synchronize the clones as often as necessary. The disadvantage compared with using multiple independent storage media is that if the unit fails, all the backup data are lost.

57.2.6.2 Removable Hard Disk Drives.

Hard disk cartridges offer small businesses and home offices a convenient removable storage medium for backups. For example, the Odyssey system from Imation uses disks that are about 6.4 to 8.9 cm in diameter, with capacities ranging from 40 GB to 120 GB, in cartridges that fit either an external docking station with a USB 2.0 interface or an internal unit that fits in a standard floppy disk drive bay on a personal computer. In May 2008, the 120 GB disk cartridges cost $230 each and the drive unit cost about $200.11

In 2002, a number of disk manufacturers formed the Information Versatile Disc for Removable Usage (iVDR) Consortium to standardize the characteristics of hard disk cartridges, making it as easy to slip a disk drive into a reader slot on a computer as it used to be to slip a floppy disk into a floppy disk drive. The consortium aimed to establish at least three shapes of removable cartridges:

- iVDR: 110 millimeters (mm) × 80 mm × 12.7 mm

- iVDR Mini: 67 mm × 80 mm × 10 mm

- iVDR Micro: 50 mm × 50 mm × 8 mm

The first iVDR product was the 160 GB Hitachi Maxell Removable Hard Disk Drive iV, introduced in March 2007; the first consumer appliance using iVDR disks was the Hitachi Wooo high-definition plasma television set. Industry analysts predicted that iVDR disks would quickly reach 0.5 TB capacities, allowing for storage of 5,000 commercial movies per disk and providing an attractive storage medium for data backups and archives.

57.2.6.3 Optical Storage.

Many systems now use optical storage for backups. Compact disk read-write (CD-RW) disks are the most widely used format; each disk can hold approximately 700 MB of data. By comparison, single-layer DVDs can store 4.7 GB, and dual-layer DVDs can store 8.5 GB, on media that cost less than a dollar per disk. Write settings in the optical drive software allow users to specify whether they intend to add more data to a disk later or whether the disk should be closed to further modification at the end of the write cycle. Internal and external optical read/write drives cost less than $100.

Blu-ray discs™ (BDs) are an optical disk alternative to the DVD format that won out over the competing High Definition (HD) DVD standard in 2008. Single-layer BDs can store 25 GB in the same size disk as CDs and DVDs; dual-layer BDs store 50 GB.
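Because backups must be able to span multiple volumes, the capacities above translate directly into how many discs a backup needs. The sketch below assumes decimal units (1 GB = 1,000 MB, as optical media are usually marketed), a hypothetical 250 GB backup, and no compression or file-system overhead.

```python
# Capacities quoted in the text, in decimal MB (an assumption: 1 GB = 1,000 MB).
DISC_CAPACITY_MB = {
    "CD-RW": 700,
    "DVD single-layer": 4_700,
    "DVD dual-layer": 8_500,
    "BD single-layer": 25_000,
    "BD dual-layer": 50_000,
}


def discs_needed(backup_mb, capacity_mb):
    """Discs required for a backup that spans multiple volumes."""
    return -(-backup_mb // capacity_mb)   # integer ceiling division


backup_mb = 250_000  # e.g., a full 250 GB external drive
for medium, capacity in DISC_CAPACITY_MB.items():
    print(f"{medium}: {discs_needed(backup_mb, capacity)} discs")
```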

An upcoming technology to monitor is the holographic disk, with storage capacities of up to 1.6 TB. However, at the time of writing in April 2008, these devices were still relatively expensive: around $180 per disk and $18,000 per disk drive.12

Large numbers of CDs, DVDs, and BDs are easily handled using jukeboxes that apply robotics to access specific disks, from collections of hundreds or thousands, on demand. Network-capable jukeboxes allow for concurrent access to multiple disks; stand-alone models suitable for home or home office use are also available at lower cost.

57.2.6.4 Tape Cartridge Systems.

The old 9-track, reel-to-reel 6,250 bytes-per-inch (bpi) systems used in the 1970s and 1980s held several hundred MB. Today's pocket-size tape cartridges hold hundreds of GB. For example, Sony, one of the industry leaders in this field, offers LTO-3 cartridges with a maximum storage capacity of 400 GB native and 800 GB compressed; the drives transfer data at 80 MB/s native and 160 MB/s compressed. The drives have a mean time between failures (MTBF) of 250,000 hours at a 100 percent duty cycle, and the cartridges can tolerate 1 million tape passes. All such systems use streaming input/output (I/O) with random access memory (RAM) buffers to avoid interrupting read/write operations and thus keep the tape moving smoothly to maximize data transfer rates.

Although this buffering is effective for directly attached tape systems, it is often ineffective for backing up data over a LAN, where data may arrive more slowly than the drive's designed streaming rate. When backing up data over a LAN, users will probably experience shoeshining of the tape drive (rapid back-and-forth movement of the tape), which reduces the effective throughput rate significantly. For example, a 50 MB per second (MBps) tape drive receiving a data stream at 25 MBps does not write at 25 MBps. Rather, it fills a buffer and writes short bursts at 50 MBps. When a burst ends, the moving tape carries blank tape past the read/write head before it can stop. The tape motor then stops, rewinds to reposition the tape at the appropriate place for continued writing, and prepares to write another short burst at 50 MBps. This repositioning process of stopping, rewinding, and getting back up to speed is called backhitching. Each backhitch can take as long as a few seconds. Frequent backhitching is called shoeshining because the tape activity mimics the movement of a cloth being used to shine shoes. The farther one operates from the designed throughput rate, the less time one spends writing data and the more time backhitching. A 50 MBps tape drive that is receiving data at 40 MBps will actually write at 35 MBps because it is spending at least 20 percent of its time shoeshining. Similarly, a data stream at 30 MBps will actually be written at 20 MBps, a 20 MBps data stream at 10 MBps, and a 10 MBps data stream at less than 1 MBps.

In conjunction with automated tape library systems, holding many cartridges and capable of switching automatically to the next cartridge, tape cartridge systems are ideal for backing up servers and mainframes with TBs of data. Small library systems keep 10 to 20 cartridges in position for immediate access, taking approximately 9 seconds for an exchange. These libraries have approximately 2 million mean exchanges between failures, with MTBF of around 360,000 hours at 100 percent duty cycle.

Large enterprise-class library systems such as Spectra Logic's 8-frame T950 can be configured with up to 10,050 slots and 120 LTO drives (Linear Tape-Open, an open-format alternative to Digital Linear Tape, or DLT). The 8-frame T950 offered a native throughput of 14.4 GB/second using LTO-4 drives. These library systems can encrypt, compress, and decrypt data using the Advanced Encryption Standard (AES) with 256-bit keys, the longest key length AES defines, to meet regulatory and security compliance requirements. As another example, the Plasmon Ultra Density Optical (UDO) Library offered up to 19 TB of archival storage capacity for enterprise systems at a price of about $100,000.
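The 14.4 GB/second figure is consistent with 120 drives each streaming at the LTO-4 native rate of 120 MB/s. The sketch below reproduces that arithmetic and, assuming the LTO-4 native cartridge capacity of 800 GB and decimal units, estimates the library's native capacity (about 8 PB) and how long it would take to fill every slot at full aggregate speed (about six and a half days).

```python
# Figures from the text, plus LTO-4 native specifications (decimal units):
# 120 MB/s per drive and 800 GB per cartridge, uncompressed.
DRIVES = 120
SLOTS = 10_050
LTO4_MB_PER_S = 120
LTO4_GB_PER_CARTRIDGE = 800

throughput_mb_s = DRIVES * LTO4_MB_PER_S           # aggregate native rate
capacity_gb = SLOTS * LTO4_GB_PER_CARTRIDGE        # native library capacity
fill_days = capacity_gb * 1000 / throughput_mb_s / 86_400

print(f"aggregate throughput: {throughput_mb_s / 1000:.1f} GB/s")
print(f"native capacity: {capacity_gb / 1_000_000:.2f} PB")
print(f"time to write every cartridge at full speed: {fill_days:.1f} days")
```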

57.2.6.5 Virtual Tape Library.

Virtual tape libraries (VTLs) used for backup emulate tape drive and library functions and can provide a transition to integrated disk-based backup and recovery. These units (e.g., the IBM Virtual Tape Server introduced in 1997) provide backward compatibility for older production systems by using optical or modern high-capacity magnetic disk storage while presenting the data as if they were stored on magnetic tapes and cartridges. VTLs can use existing storage architecture and can be used remotely. Their throughput is typically far greater than that of older tape-based backup systems.
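The essence of tape emulation is presenting sequential tape semantics (write, filemark, rewind, read next) over storage that actually supports random access. The Python sketch below is a hypothetical interface, not the API of the IBM Virtual Tape Server or any other product; an in-memory list stands in for the disk file behind the virtual cartridge.

```python
class VirtualTape:
    """Disk-backed stand-in for a tape cartridge (hypothetical interface).

    Backup software that expects a tape sees sequential write/read calls,
    filemarks, and rewinds. Underneath, records sit in an ordinary list
    (standing in for a file on a disk array), so locate() can jump straight
    to a record instead of winding the tape.
    """

    FILEMARK = object()  # sentinel separating files on the virtual tape

    def __init__(self):
        self._records = []
        self._position = 0

    def write(self, record):
        # As on real tape, writing discards anything beyond the head position.
        del self._records[self._position:]
        self._records.append(record)
        self._position += 1

    def write_filemark(self):
        self.write(self.FILEMARK)

    def rewind(self):
        self._position = 0

    def locate(self, index):
        """Random access: instant on disk, slow winding on physical tape."""
        self._position = index

    def read(self):
        """Return the next record, or None at the end of recorded data."""
        if self._position >= len(self._records):
            return None
        record = self._records[self._position]
        self._position += 1
        return record


tape = VirtualTape()
for rec in (b"header", b"backup set 1"):
    tape.write(rec)
tape.write_filemark()
tape.write(b"backup set 2")
tape.locate(3)
print(tape.read())
```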

57.2.6.6 Personal Storage Devices: Flash Drives.

USB flash drives have become ubiquitous due to the decline in price and the vast amount of data that they can store. Cheap multi-MB and multi-GB USB flash drives (also called memory sticks and thumb drives) are available at drugstores, are given away at conferences, and are available in a variety of forms, such as pens, pocketknives, dolls, and even sushi. Other personal devices can also be used to store large amounts of data. These include music players, personal digital assistants, and mobile phones. Although these devices can provide a convenience for the user who wants to transfer large amounts of data, they pose a serious security risk to businesses since sensitive company data can be easily removed, against company policy. Although some USB flash drives have built-in encryption to protect the contents from being exposed if the device is lost or stolen, most flash drives and other personal devices store data in unencrypted form, a serious problem if the device is lost or stolen. Some companies have implemented technical means to prevent USB flash drives and other devices from copying data from corporate PCs. Just as these devices can download data from a computer, they can also be a vector for transferring malware to a computer and to a network. User policies and education are the primary defenses to supplement technical controls.

Flash drives are sometimes recommended as a backup medium for end-users. However, they suffer from a number of disadvantages:

- They are usually physically small and therefore easy to misplace or lose.

- They are relatively fragile (e.g., their enclosures break easily if struck and are not water resistant).

- They are expensive per unit of storage compared with magnetic or optical media.

57.2.6.7 Millipede: A Future Storage Technology.

IBM research scientists have developed Millipede, a method of storing data using an atomic force microscope to punch nanometer-sized depressions in thin polymer films.13 The storage density is about 100 GB per square cm.

57.2.7 Labeling.

Regardless of the size of a backup, every storage device, from diskettes to tape cartridges, should be clearly and unambiguously tagged, both electronically and with adhesive labels. Removable media used on Windows systems, for example, can be labeled electronically with up to 11 letters, numbers, or the underscore character. Larger-capacity media, such as cartridges used for UNIX and mainframe systems, have extensive electronic labeling available. On some systems, it is possible to request specific storage media and have the system automatically refuse the wrong media if they are mounted in error. Tape library systems typically use optical bar codes that are generated automatically by the backup software and then affixed to each cartridge for unique identification. Magnetic tapes and cartridges have electronic labels written onto the start of each unit, with specifics that are particular to the operating system and tape-handling software.

An unlabeled storage medium or one with a flimsily attached label is evidence of a bad practice that will lead to confusion and error. Sticky notes, for example, are not a good way to label diskettes and removable disks. If the notes are taken off, they can get lost; if they are left on, they can jam the disk drives. There are many types of labels for storage media, including printable sheets suitable for laser or inkjet printers and using adhesive that allows removal of the labels without leaving a sticky residue. At the very least, an exterior label should include this information:

- Date the volume was created (e.g., “2010-09-08”)

- Originator (e.g., “Bob R. Jones, Accounting Dept.”)

- Description of the contents (e.g., “Engineering Accounting Data for 2010”)

- Application program (e.g., “Quicken v2010”)

- Operating system (e.g., “Windows Vista Enterprise”)

Storing files with canonical names (names with a fixed structure) on the media themselves is also useful. An example of a canonical file much used on installation disks is “READ.ME.” An organization can mandate these files as minimum standards for its storage media:

- ORIGIN.mmm (where mmm represents a sequence number for unique identification of the storage set) indicating the originating system (e.g., “Accounting Workstation number 3875-3” or “Bob Whitmore's SPARC in Engineering”)

- DATE.mmm showing the date (preferably in year-month-day sequence) on which the storage volume was created (e.g., “2010-09-08”)

- SET.mmm to describe exactly which volumes are part of a particular set; contents could include “SET 123; VOL 444, VOL 445, VOL 446”

- INDEX.mmm, an index file listing all the files on all the volumes of that particular storage set; for example, “SET 123; VOL 444 FIL F1, F2; VOL 445 FIL F3, F4; VOL 446 FIL F5”

- VOLUME.nnn (where nnn represents a sequence number for unique identification of the medium) that contains an explanation such as “VOL 444 SET 123 NUMBER 1 OF 3”

- FILES.nnn, which lists all the files on that particular volume of that particular storage set; for example, contents could include “SET 123; VOL 444 FIL F1, F2”

Such labeling is best handled by an application program; many backup programs automatically generate similar files.
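As a sketch of how a program might generate these files, the hypothetical function below builds the contents for every volume of a set, using the formats from the examples above. It assumes that the set-level files (ORIGIN, DATE, SET, INDEX) are written to every volume, so that any single volume identifies its whole set; the text does not specify this.

```python
def label_files(set_no, origin, created, volumes):
    """Build the canonical label files described above for one storage set.

    set_no:  storage-set number (the "mmm" suffix)
    origin:  description of the originating system
    created: creation date as "YYYY-MM-DD"
    volumes: dict mapping volume number (the "nnn" suffix) to file names
    Returns a dict mapping volume number to {label file name: contents}.
    """
    vol_numbers = sorted(volumes)
    set_line = f"SET {set_no}; " + ", ".join(f"VOL {v}" for v in vol_numbers)
    index_line = f"SET {set_no}; " + "; ".join(
        f"VOL {v} FIL {', '.join(volumes[v])}" for v in vol_numbers)
    labels = {}
    for number, vol in enumerate(vol_numbers, start=1):
        labels[vol] = {
            # Set-level files, repeated on every volume (an assumption).
            f"ORIGIN.{set_no:03d}": origin,
            f"DATE.{set_no:03d}": created,
            f"SET.{set_no:03d}": set_line,
            f"INDEX.{set_no:03d}": index_line,
            # Volume-level files.
            f"VOLUME.{vol:03d}":
                f"VOL {vol} SET {set_no} NUMBER {number} OF {len(vol_numbers)}",
            f"FILES.{vol:03d}": f"SET {set_no}; VOL {vol} FIL {', '.join(volumes[vol])}",
        }
    return labels


labels = label_files(123, "Accounting Workstation number 3875-3", "2010-09-08",
                     {444: ["F1", "F2"], 445: ["F3", "F4"], 446: ["F5"]})
for name, contents in labels[444].items():
    print(f"{name}: {contents}")
```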

57.2.8 Indexing and Archives.

As implied earlier, backup volumes need a mechanism for identifying the data stored on each medium. Equally important is the ability to locate the storage media where particular files are stored; otherwise, one would have to search serially through multiple media to locate specific data. Although not all backup products for personal computers (PCs) include such functionality, many do; server and mainframe utilities routinely include automatic indexing and retrieval. These systems allow the user to specify file names and dates, using wildcard characters to signify ranges, and they display a menu of options from which the user can select the appropriate files for recovery.
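A catalog lookup of this kind can be sketched with Python's standard fnmatch module, which implements shell-style wildcards. The catalog layout and entries below are hypothetical; fnmatchcase is used so that matching is case-sensitive on every operating system.

```python
import fnmatch
from datetime import date

# Hypothetical catalog kept by the backup software: one entry per file copy.
CATALOG = [
    {"file": "payroll_2009_12.dat", "backed_up": date(2010, 1, 4), "set": 101, "volume": 390},
    {"file": "payroll_2010_08.dat", "backed_up": date(2010, 9, 1), "set": 123, "volume": 444},
    {"file": "budget_2010.xls", "backed_up": date(2010, 10, 1), "set": 130, "volume": 471},
    {"file": "payroll_2010_09.dat", "backed_up": date(2010, 10, 1), "set": 130, "volume": 470},
]


def find_copies(catalog, pattern, start, end):
    """Entries whose name matches a wildcard pattern and whose backup date
    falls within [start, end], newest first, so that the right volume can be
    mounted without scanning every medium."""
    hits = [entry for entry in catalog
            if fnmatch.fnmatchcase(entry["file"], pattern)
            and start <= entry["backed_up"] <= end]
    return sorted(hits, key=lambda entry: entry["backed_up"], reverse=True)


for entry in find_copies(CATALOG, "payroll_2010_*", date(2010, 1, 1), date(2010, 12, 31)):
    print(entry["file"], "-> set", entry["set"], "volume", entry["volume"])
```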

This file-level indexing, though, is not sufficient for retrieving unstructured content such as word processing documents, e-mails, instant messages, diagrams, Web pages, and images that may be required by regulators, for business research, and in response to legal subpoenas (e-discovery). The Federal Rules of Civil Procedure (FRCP) specifically address discovery and the duty to disclose evidence in preparation for trial.14 Recent changes to FRCP specifically address electronic data. Because of the cost of locating and retrieving data for litigation, companies are changing the way they manage the life cycle of data. For instance, the cost to do a forensics search of one backup tape to locate e-mails that contain certain relevant content can be $35,000 per tape. In some recent cases, a search of e-mail tapes has involved over 100 tapes. Companies are now implementing archiving systems to address this requirement.
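Content-level retrieval typically relies on an inverted index, which maps each word to the documents that contain it, so that a query does not require restoring and scanning every tape. The Python sketch below is a deliberately minimal illustration under stated assumptions (simple lower-case word tokenization, AND-only queries, hypothetical message IDs); real archiving systems add metadata, phrase search, and legal-hold features.

```python
import re
from collections import defaultdict


def build_index(documents):
    """Map each lower-case word to the set of document IDs that contain it."""
    index = defaultdict(set)
    for doc_id, text in documents.items():
        for word in re.findall(r"[a-z0-9']+", text.lower()):
            index[word].add(doc_id)
    return index


def search(index, *terms):
    """IDs of documents containing every term (a simple AND query)."""
    sets = [index.get(term.lower(), set()) for term in terms]
    return set.intersection(*sets) if sets else set()


archived_mail = {
    "msg-001": "Q3 pricing agreement attached",
    "msg-002": "Lunch on Friday?",
    "msg-003": "Revised pricing for Q3",
}
index = build_index(archived_mail)
print(sorted(search(index, "pricing", "q3")))
```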

A method of providing fast access to fixed content (data that are not expected to be updated) is content-addressed storage (CAS). CAS assigns content a permanent place on disk. CAS stores content so that an object cannot be duplicated or modified once it has been stored; thus, its location is unambiguous. EMC's Centera product first released in 2002 is an example of a CAS product.

When an object is stored in CAS, it is given a unique name, derived from the object's content (typically by a cryptographic hash function), that also specifies its storage location. This type of address is called a content address. It eliminates the need for a centralized index, so it is not necessary to track the location of stored data. Once an object has been stored, it cannot be deleted until the specified retention period has expired. In CAS, data are stored on disk, not tape, which streamlines the process of searching for stored objects. A backup copy of every object is stored to enhance reliability and to minimize the risk of catastrophic data loss, and a remote monitoring system notifies the system administrator in the event of a hardware failure.
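The key properties described above (address derived from content, write-once storage, and deletion blocked until retention expires) can be sketched in a few lines. SHA-256 is an assumption chosen for illustration, not a description of how Centera or any other product computes its addresses; a dictionary stands in for the disk.

```python
import hashlib
import time


class ContentAddressedStore:
    """Write-once store where an object's address is a hash of its content.

    Illustrative assumptions: SHA-256 as the hash, an in-memory dict as the
    disk, and a retention period (in seconds) fixed when an object is stored.
    """

    def __init__(self, clock=time.time):
        self.clock = clock
        self._objects = {}   # address -> (content, retain_until)

    def put(self, content: bytes, retention_seconds: float) -> str:
        address = hashlib.sha256(content).hexdigest()
        if address not in self._objects:   # identical content is stored once
            self._objects[address] = (content, self.clock() + retention_seconds)
        return address

    def get(self, address: str) -> bytes:
        content, _ = self._objects[address]
        # Recomputing the hash detects any corruption of the stored bytes.
        if hashlib.sha256(content).hexdigest() != address:
            raise IOError("stored object does not match its content address")
        return content

    def delete(self, address: str) -> None:
        _, retain_until = self._objects[address]
        if self.clock() < retain_until:
            raise PermissionError("retention period has not expired")
        del self._objects[address]
```

Because the address is computed from the bytes themselves, any modification would produce a different address, which is why a stored object's location stays unambiguous.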

Combined archiving and backup systems can reduce the volume of stored data by eliminating duplicate copies (deduplication), thereby reducing storage costs and the time needed to retrieve or restore data files.
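A minimal sketch of deduplication follows: each file is split into chunks, each distinct chunk is stored once under its hash, and each file is kept as a "recipe" listing its chunk hashes in order. The fixed 4 KiB chunk size and the function names are assumptions for illustration; commercial products often use variable-size, content-defined chunks instead.

```python
import hashlib

CHUNK_SIZE = 4096  # assumed fixed chunk size in bytes


def dedup_store(files: dict[str, bytes]) -> tuple[dict[str, bytes], dict[str, list[str]]]:
    """Return (chunk store keyed by SHA-256 digest, per-file list of chunk digests)."""
    chunks: dict[str, bytes] = {}
    recipes: dict[str, list[str]] = {}
    for name, data in files.items():
        recipe = []
        for offset in range(0, len(data), CHUNK_SIZE):
            piece = data[offset:offset + CHUNK_SIZE]
            digest = hashlib.sha256(piece).hexdigest()
            chunks.setdefault(digest, piece)  # each distinct chunk is stored only once
            recipe.append(digest)
        recipes[name] = recipe
    return chunks, recipes


def restore(name: str, chunks: dict[str, bytes], recipes: dict[str, list[str]]) -> bytes:
    """Rebuild a file by concatenating its chunks in order."""
    return b"".join(chunks[d] for d in recipes[name])


base = bytes(range(256)) * 64  # 16 KiB made of four identical 4 KiB chunks
files = {"a.doc": base, "b.doc": base, "c.doc": base + b"appendix"}
chunks, recipes = dedup_store(files)
print(len(chunks))  # 2
```

Three files totaling about 48 KiB reduce to two stored chunks, because copies and repeated blocks map to the same digest.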

57.3 BACKUP STRATEGIES.

There are different approaches to backing up data. This section looks at what kinds of data can be backed up and then reviews appropriate ways of choosing and managing backups for different kinds of computer systems. Some of the challenges that a backup strategy should address are:

- Rapid growth of electronic data

- Shrinking time for backing up data

- Increasing backup costs

- Performance bottlenecks

- Compliance and legal discovery

57.3.1 Selecting the Backup Technology.

Initially, computers used directly attached tape drives for long-term storage; later, centralized tape libraries became common. As disk drives have fallen in price, shrunk in form factor, and become highly reliable, it is now feasible to use disks in addition to, or in place of, tapes. Two alternatives to tape-only backup are disk-to-disk (D2D) and disk-to-disk-to-tape (D2D2T).

- Disk to Disk (D2D). Current backup software supports writing to a disk file as if it were another backup device. One way to do this is by emulating a tape device, allowing the disk file, or “virtual tape,” to integrate into the existing software architecture. D2D backup saves backup data by writing these virtual tapes to virtual tape libraries (VTLs) and restores the data by reading from the VTL. Disk to disk takes advantage of random access, high-volume disk manufacturing, disk reliability, RAID, and familiar technology.

- Disk to Disk to Tape (D2D2T). Another approach to backup and archiving is a hybrid that uses both disk and tape media. With D2D2T, data are initially copied to backup storage on a disk storage system and then periodically copied again to a tape storage system (or possibly to an optical storage system). Many businesses still back up data directly to tape, but the time needed to restore data from tape may be unacceptable for high-availability applications, where it is important to have data immediately accessible; there, a secondary disk can provide faster restoration if the data on the primary disk become inaccessible (e.g., because of a server failure or data corruption). Tape, however, is often still used for off-site backup and restoration in case of a disaster, because it is more economical for long-term storage and more portable.
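The D2D2T flow can be modeled as a simple periodic job: every backup set lands on disk first, the job copies new sets to tape, and disk copies older than a retention window are released once a tape copy exists. The seven-day window, the `BackupSet` fields, and the function names are hypothetical; real products express such policies in their own configuration.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class BackupSet:
    name: str
    created: date
    on_disk: bool = True   # every set is written to disk first
    on_tape: bool = False


def run_tape_cycle(sets: list[BackupSet], today: date, disk_days: int = 7) -> None:
    """Periodic job: copy new sets to tape, then free disk space for aged sets."""
    for s in sets:
        if not s.on_tape:
            s.on_tape = True   # second copy, e.g., for off-site storage
        if s.on_disk and (today - s.created).days > disk_days:
            s.on_disk = False  # safe only because the tape copy now exists


def restore_source(s: BackupSet) -> str:
    """Recent sets restore quickly from disk; older ones must come from tape."""
    return "disk" if s.on_disk else "tape"
```

Ordering matters here: the disk copy is released only after the tape copy is made, so at no point does a backup set exist on just one medium that is about to be discarded.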

57.3.2 Exclusive Access.

All backup systems have trouble with files that are currently in use by processes that have opened them with write access (i.e., processes that may be adding or changing data within the files). The danger in copying such files is that they may be in an inconsistent state when the backup software copies their data. For example, a multiphase transaction may have updated some records in a detail file before the corresponding master records have been posted to disk. Copying the data before the transaction completes will store a corrupt version of the files and lead to problems when they are later restored to disk.

Backup software usually generates a list of everything backed up and of all the files not backed up; for the latter, there is usually an explanation or a code showing the reason for the failure. Operators always must verify that all required files have been backed up and must take corrective action if files have been omitted.
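Part of the operator's check can be automated by comparing a list of required files against the backup software's report. The report format assumed here (one line per file: a status word such as `OK` or `SKIPPED`, the path, and an optional reason, with no spaces in paths) is hypothetical, since every product has its own; the function name is also illustrative.

```python
def files_needing_action(required: set[str], report_lines: list[str]) -> dict[str, str]:
    """Map each required file that was not backed up to the reason, if one was reported."""
    backed_up: set[str] = set()
    reasons: dict[str, str] = {}
    for line in report_lines:
        # Assumed report format: "<STATUS> <path> [reason]"; paths contain no spaces.
        parts = line.split(maxsplit=2)
        if len(parts) < 2:
            continue  # skip blank or malformed lines
        status, path = parts[0], parts[1]
        if status == "OK":
            backed_up.add(path)
        else:
            reasons[path] = parts[2] if len(parts) == 3 else "no reason given"
    # A required file missing from the report entirely is as serious as a skipped one.
    return {p: reasons.get(p, "not in report") for p in sorted(required - backed_up)}


report = [
    "OK /data/ledger.db",
    "SKIPPED /data/orders.db file locked by another process",
]
required = {"/data/ledger.db", "/data/orders.db", "/data/customers.db"}
for path, reason in files_needing_action(required, report).items():
    print(f"{path}: {reason}")
```

Treating "absent from the report" as a failure, not just explicit errors, catches files that the backup job was never configured to include.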

Some high-speed, high-capacity backup software packages provide a buffer mechanism to allow high-availability systems to continue processing while backups are in progress. In these systems, files are frozen in a consistent state so that backup can proceed, and all changes are stored in buffers on disk for later entry into the production databases. However, even this approach cannot obviate the need for a minimum period of quiescence so that the databases can reach a consistent state. In addition, it is impossible for full functionality to continue if changes are being held back from the databases until a backup is complete; all dependent transactions (those depending on the previously changed values of records) also must be held up until the files are unlocked.
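The buffering mechanism can be sketched as a store that, while a backup runs, hands the backup a frozen, consistent image of its records and diverts writes to a pending buffer that is applied when the backup ends. This is a simplified single-process model with hypothetical names; it does not model the quiescence period, but it does show the limitation described above, since work that depends on a held-back change must wait.

```python
class BufferedStore:
    """Toy model of a database whose files are frozen while a backup runs."""

    def __init__(self, records: dict[str, int]) -> None:
        self.records = dict(records)
        self.pending: list[tuple[str, int]] = []
        self.backup_running = False

    def begin_backup(self) -> dict[str, int]:
        # Freeze: the backup copies a consistent image of the committed records.
        self.backup_running = True
        return dict(self.records)

    def write(self, key: str, value: int) -> None:
        if self.backup_running:
            self.pending.append((key, value))  # held back until the backup ends
        else:
            self.records[key] = value

    def read(self, key: str) -> int:
        if self.backup_running and any(k == key for k, _ in self.pending):
            # A transaction depending on a held-back change must wait.
            raise RuntimeError(f"{key} has a pending change; dependent work must wait")
        return self.records[key]

    def end_backup(self) -> None:
        # Apply buffered changes in their original order, then resume normal writes.
        for key, value in self.pending:
            self.records[key] = value
        self.pending.clear()
        self.backup_running = False


store = BufferedStore({"balance": 100})
image = store.begin_backup()
store.write("balance", 150)   # accepted, but only buffered
store.end_backup()
print(image, store.read("balance"))  # {'balance': 100} 150
```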

57.3.3 Types of Backups.

Backups can include different amounts and kinds of data:

- Full backups store a copy of everything that resides on the mass storage of a specific system. To restore a group of files from a full backup, the operator mounts the appropriate volume of the backup set and restores the files in a single operation.

- Differential backups store all the data that have changed since a specific date or event; typically, a differential backup stores everything that has changed since the last full backup. The number of volumes of differential backups can increase with each additional backup. To restore a group of files from a differential backup, the operator needs to locate the latest differential set and also the full backup upon which it is based to ensure that all files are restored. For example, suppose a full backup on Sunday contains copies of files A, B, C, D, and E. On Monday, suppose that files A and B are changed to A' and B' during the day; then the Monday evening differential backup would include only copies of files A' and B'. On Tuesday, suppose that files C and D are changed to C' and D'; then the Tuesday evening backup would include copies of files A', B', C', and D'. If there were a crash on Wednesday morning, the system could be rebuilt from Sunday's full backup (files A, B, C, D, and E), and then the Tuesday differential backup would overwrite the changed files and restore A', B', C', and D', resulting in the correct combination of unchanged and changed files (A', B', C', D', and E).

- Incremental backups are a more limited type of differential backups that typically store everything that has changed since the previous full or incremental backup. As long as multiple backup sets can be put on a single volume, the incremental backup requires fewer volumes than a normal differential backup for a given period. To restore a set of files from incremental backups, the operator may have to mount volumes from all the incremental sets plus the full backup upon which they are based. For example, using the same scenario as in the explanation of differential backups, suppose a full backup on Sunday contains copies of files A, B, C, D, and E. On Monday, suppose that files A and B are changed to A' and B' during the day; then the Monday evening incremental backup would include only copies of files A' and B'. On Tuesday, suppose that files C and D are changed to C' and D'; then the Tuesday evening backup would include copies only of files C' and D'. If there were a crash on Wednesday morning, the system could be rebuilt from Sunday's full backup (files A, B, C, D, and E), and then the Monday incremental backup would allow restoration of A' and B' and the Tuesday incremental backup would restore C' and D', resulting in the correct combination of unchanged and changed files (A', B', C', D', and E).

- Delta backups store only the portions of files that have been modified since the last full or delta backup; delta backups are a rarely used type, more akin to logging than to normal backups. Delta backups use the fewest backup volumes of all the methods listed; however, to restore data using delta backups, the operator must use special-purpose application programs and mount volumes from all the delta sets plus the full backup upon which they are based. In the scenario used earlier, the delta backup for Monday night would store records for the changes in file A in file delta-A and records for the changes in file B in file delta-B. The Tuesday night delta backup would store records for the changes in files C and D in files delta-C and delta-D, respectively. To restore the correct conditions as of Tuesday night, the operator would restore the A and B files from the Sunday night full backup, then run a special recovery program to install the changes from delta-A and a (possibly different) recovery program to install the changes from delta-B. Then it would be necessary to load the Tuesday night delta backup and to run recovery programs using files C and D with corresponding files delta-C and delta-D, respectively.
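The Sunday-to-Tuesday scenario for full, differential, and incremental backups can be replayed in a short simulation. Each file maps to a version string (A' is the changed version of A), and a backup is modeled as the set of files whose version differs from a reference point; the helper names are illustrative, and delta backups are not modeled.

```python
def changed_since(current: dict[str, str], reference: dict[str, str]) -> dict[str, str]:
    """Files whose version differs from the reference snapshot."""
    return {name: ver for name, ver in current.items() if reference.get(name) != ver}


def restore(full: dict[str, str], *later: dict[str, str]) -> dict[str, str]:
    """Rebuild from the full backup, then apply later backups oldest first."""
    state = dict(full)
    for backup in later:
        state.update(backup)  # newer copies overwrite older ones
    return state


sunday = {"A": "A", "B": "B", "C": "C", "D": "D", "E": "E"}
full = dict(sunday)                            # Sunday full backup
monday = {**sunday, "A": "A'", "B": "B'"}
tuesday = {**monday, "C": "C'", "D": "D'"}

# Differential: everything changed since the last FULL backup.
diff_mon = changed_since(monday, full)
diff_tue = changed_since(tuesday, full)
# Incremental: everything changed since the PREVIOUS backup of any kind.
incr_mon = changed_since(monday, full)
incr_tue = changed_since(tuesday, monday)

assert diff_mon == incr_mon  # identical on the first day after the full backup
print(sorted(diff_tue.values()))  # ["A'", "B'", "C'", "D'"]
print(sorted(incr_tue.values()))  # ["C'", "D'"]

# Wednesday-morning crash: both strategies rebuild Tuesday's state.
assert restore(full, diff_tue) == tuesday
assert restore(full, incr_mon, incr_tue) == tuesday
```

The trade-off is visible in the two printed sets: the differential set grows each day but needs only one volume set plus the full backup to restore, while each incremental set stays small but all of them are needed.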

Another aspect of backups is whether they include all the data on a system or only the data particular to specific application programs or groups of users:

- System backups copy everything on a system.

- Application backups copy the data needed to restore operations for particular software systems.

In addition to these terms, operators and users often refer to daily and partial backups. These terms are ambiguous and should be defined in writing when setting up procedures.

57.3.4 Computer Systems.

Systems with different characteristics and purposes can require different backup strategies. This section looks at large production systems (mainframes), smaller computers used for distributed processing (servers), individual computers used primarily by one user (workstations), and portable computers (laptops) or handheld computers and personal digital assistants (PDAs).

57.3.4.1 Mainframes.

Large production systems using mainframes, or networks of servers, routinely do full system backups every day because of the importance of rapid recovery in case of data loss (see Chapters 42 and 43 of this Handbook). Using high-capacity tape libraries with multiple drives and immediate access to tape cartridges, these systems are capable of data throughput of up to 2 TB per hour. Typically, all backups are performed automatically during the period of lowest system utilization. Because of the problems caused by concurrent access, mainframe operations usually reserve a time every day during which users are not permitted to access production applications. A typical approach sends a series of real-time messages to all open sessions announcing “Full Backup in xx minutes; please log off now.” Operations staff have been known to phone offending users who are still logged on to the network when backups are supposed to start. To prevent unattended sessions from interfering with backups (as well as to reduce risks from unauthorized use of open sessions), most systems configure a time-out after a certain period of inactivity (typically 10 minutes). If sessions remain open despite these warnings and time-outs, mechanisms such as forced logoffs can be implemented to prevent user processes from continuing to hold production files open.

In addition to system backups, mainframe operations may be instructed to take more frequent backups of high-utilization application systems. Mission-critical transaction-processing systems, for example, may have several incremental or delta backups performed throughout the day. Transaction log files may be considered so important that they are also copied to backup media as soon as the files are closed. Typically, a log file is closed when it reaches its maximum size, and a new log file is initiated for the application programs.
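The log-rollover practice can be sketched as follows: records are appended to the current log until the next record would exceed a size limit, at which point the log is closed, queued for immediate copying to backup media, and a new log is started. The size limit, the `txlog.NNNNNN` naming, and the in-memory `backup_queue` (a stand-in for the actual copy step) are assumptions made for illustration.

```python
import os
import tempfile


class RollingLog:
    """Append-only transaction log that is closed and replaced at a maximum size."""

    def __init__(self, directory: str, max_bytes: int) -> None:
        self.directory = directory
        self.max_bytes = max_bytes
        self.sequence = 0
        self.backup_queue: list[str] = []  # closed logs waiting to be copied to backup media
        self._open_next()

    def _open_next(self) -> None:
        self.sequence += 1
        self.path = os.path.join(self.directory, f"txlog.{self.sequence:06d}")
        self.handle = open(self.path, "ab")

    def append(self, record: bytes) -> None:
        # Roll over before a record would push a non-empty log past its limit.
        if self.handle.tell() > 0 and self.handle.tell() + len(record) > self.max_bytes:
            self.roll()
        self.handle.write(record)

    def roll(self) -> None:
        self.handle.close()                   # a closed log is stable and safe to copy
        self.backup_queue.append(self.path)   # copy it to backup media right away
        self._open_next()                     # the application continues in a fresh log

    def close(self) -> None:
        self.handle.close()


# Example usage: ten 30-byte records in logs capped at 100 bytes.
with tempfile.TemporaryDirectory() as tmp:
    log = RollingLog(tmp, max_bytes=100)
    for _ in range(10):
        log.append(b"x" * 30)
    log.close()
    closed_sizes = [os.path.getsize(p) for p in log.backup_queue]
    print(closed_sizes)  # [90, 90, 90]
```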

57.3.4.2 Servers.

Managers of networks with many servers have the same options as mainframe operations staff, but they also have increased flexibility because of the decentralized, distributed nature of the computing environment. Many network architectures allocate specific application systems or groups of users to specific servers; therefore, it is easy to schedule system backups at times convenient for the various groups. In addition to flexible system backups, the distributed aspect of such networks facilitates application backups.

Web servers, in particular, are candidates for high-availability clones of the entire Web site. Some products provide for regeneration of a damaged Web site from a read-only clone, one that includes hash totals allowing automatic integrity checking of all elements of the exposed and potentially damaged data on the public Web site.15
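The integrity-checking idea can be sketched with a manifest of SHA-256 digests computed from the read-only clone: each published file is compared with its expected digest, and any file that is missing or altered is copied back from the clone. The directory layout and function names are hypothetical and do not describe any particular product.

```python
import hashlib
import shutil
import tempfile
from pathlib import Path


def digest(path: Path) -> str:
    return hashlib.sha256(path.read_bytes()).hexdigest()


def build_manifest(clone_root: Path) -> dict[str, str]:
    """Expected digest of every file in the read-only clone, keyed by relative path."""
    return {p.relative_to(clone_root).as_posix(): digest(p)
            for p in clone_root.rglob("*") if p.is_file()}


def repair_site(live_root: Path, clone_root: Path, manifest: dict[str, str]) -> list[str]:
    """Re-copy from the clone any live file that is missing or fails its hash check."""
    repaired = []
    for rel, expected in manifest.items():
        live = live_root / rel
        if not live.is_file() or digest(live) != expected:
            live.parent.mkdir(parents=True, exist_ok=True)
            shutil.copy2(clone_root / rel, live)
            repaired.append(rel)
    return sorted(repaired)


# Example usage: publish a two-file site, deface one page, then repair it.
with tempfile.TemporaryDirectory() as tmp:
    clone, live = Path(tmp, "clone"), Path(tmp, "live")
    (clone / "css").mkdir(parents=True)
    (clone / "index.html").write_text("<h1>Welcome</h1>")
    (clone / "css" / "site.css").write_text("body {}")
    shutil.copytree(clone, live)                          # publish the site
    (live / "index.html").write_text("<h1>Defaced</h1>")  # simulate an attack
    repaired = repair_site(live, clone, build_manifest(clone))
    page = (live / "index.html").read_text()
    print(repaired)  # ['index.html']
```

A fuller version would also flag files present on the live site but absent from the manifest, since an intruder may add files as well as alter them.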

57.3.4.3 Workstations.

Individual workstations pose special challenges for backup. Although software and backup media are readily available for all operating systems, the human factor interferes with reliable backup. Users typically are not focused on their computing infrastructure; taking care of backups is not a high priority for busy professionals. Even technically trained users, who are aware of the dangers, sometimes skip their daily backups; many novice or technically unskilled workers do not even understand the concept of backups.

If the workstations are connected to a network, then automated, centralized backup software utilities can protect all the users' files. However, with user disk drives containing as much as 500 GB of storage, and with the popularity of large files such as pictures and videos, storing just the new data, let alone full system images, for hundreds of workstations can consume terabytes (TB) of backup media and saturate limited bandwidth. Even at a sustained 10 MB per second (roughly the practical throughput of a 100 Mb per second network link), transferring 1 TB takes at least 29 hours. Such centralized backups also raise privacy issues if users fail to encrypt their hard disk files. In addition, because encrypted data are essentially incompressible, software that both compresses and encrypts data should always compress before encrypting.
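Both the transfer-time figure and the compress-before-encrypt rule can be checked with a short script. The 29-hour figure is reproduced when the terabyte and megabyte are both taken as binary units (2^40 and 2^20 bytes); with decimal units the result is about 28 hours, and real transfers are slower because of protocol overhead. For the compression comparison, random bytes from `os.urandom` stand in for ciphertext, on the assumption that the output of a sound cipher is statistically indistinguishable from random data; no actual encryption is performed.

```python
import os
import zlib

TIB = 2**40
MIB = 2**20


def transfer_hours(size_bytes: float, rate_bytes_per_s: float) -> float:
    """Best-case transfer time, ignoring protocol overhead and contention."""
    return size_bytes / rate_bytes_per_s / 3600


print(round(transfer_hours(TIB, 10 * MIB), 1))  # 29.1 (binary units)
print(round(transfer_hours(1e12, 10e6), 1))     # 27.8 (decimal units)

# Typical document text compresses well, so compressing first saves space...
plaintext = b"Quarterly report: revenue, expenses, and forecasts. " * 2000
compressed_plain = zlib.compress(plaintext)

# ...but data that looks random, as good ciphertext does, does not shrink at all.
ciphertext_stand_in = os.urandom(len(plaintext))
compressed_cipher = zlib.compress(ciphertext_stand_in)

print(len(plaintext), len(compressed_plain), len(compressed_cipher))
```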

57.3.4.4 Laptop Computers.

A laptop computer is sometimes the only computer a user owns or is assigned; in other cases, the portable computer is an adjunct to a desktop computer. Laptop computers that are the primary system must be treated like workstations. Laptops that are used as adjuncts—for example, when traveling—can be backed up separately, or they can be synchronized with the corresponding desktop system.

Synchronization software, such as LapLink, offers a number of options to meet user needs:

- A variety of hardwired connection methods, including cables between serial ports, parallel ports, SCSI ports, and USB ports.

- Remote access protocols allowing users to reach their computer workstations via modem, or through TCP/IP connections via the Internet, to ensure synchronization or file access.

- Cloning, which duplicates the selected file structure of a source computer onto the target computer; cloning deletes files from the target that are not found on the source.

- Filtering, which prevents specific files, or types of files, from being transferred between computers.

- Synchronization, in which all changes on the source computer(s) are replicated onto the target computer(s). One-way synchronization updates the target only; two-way synchronization makes changes to both the target and the source computers.

- Compression and decompression routines to increase throughput during transfers and synchronizations.

- Data comparison functions to update only those portions of files that are different on source and target; for large files, this feature raises effective throughput by orders of magnitude.

- Security provisions to prevent unauthorized remote access to users' computers.

- Log files to record events during file transfers and synchronization.
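Cloning, one-way synchronization, and filtering differ mainly in how they treat files: which ones are transferred, and whether files found only on the target survive. The sketch below models each computer's files as a mapping from path to content; the function names and the `fnmatch`-style exclusion patterns are illustrative assumptions, not the behavior of LapLink or any other product, and two-way synchronization (which must resolve conflicting edits) is not modeled.

```python
from fnmatch import fnmatch

Files = dict[str, bytes]  # path -> file contents


def _excluded(path: str, patterns: tuple[str, ...]) -> bool:
    """Filtering: True if the path matches any exclusion pattern."""
    return any(fnmatch(path, pat) for pat in patterns)


def one_way_sync(source: Files, target: Files, exclude: tuple[str, ...] = ()) -> Files:
    """Copy new or changed files to the target; keep files found only on the target."""
    result = dict(target)
    for path, data in source.items():
        # Whole-file comparison; some products compare blocks within files instead.
        if not _excluded(path, exclude) and result.get(path) != data:
            result[path] = data
    return result


def clone(source: Files, target: Files, exclude: tuple[str, ...] = ()) -> Files:
    """Make the target mirror the source, deleting files not found on the source."""
    result = one_way_sync(source, target, exclude)
    for path in list(result):
        # Files matching an exclusion pattern are neither copied nor deleted.
        if path not in source and not _excluded(path, exclude):
            del result[path]
    return result


desktop = {"report.doc": b"v2", "notes.txt": b"n1", "cache.tmp": b"junk"}
laptop = {"report.doc": b"v1", "old.doc": b"x"}
print(sorted(one_way_sync(desktop, laptop, exclude=("*.tmp",))))
print(sorted(clone(desktop, laptop, exclude=("*.tmp",))))
```

The difference shows up in `old.doc`: one-way synchronization leaves it on the laptop, while cloning deletes it because it does not exist on the desktop.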

Windows systems can also use the simple and free SyncToy software.

In addition to making it easier to leave the office with all the right files on one's hard disk, synchronization of portable computers has the additional benefit of creating a backup of the source computer's files. For more complete assurance, the desktop system may be backed up daily onto one or two removable drives, at the same time that the portable computer's files are synchronized. If the portable is worked on overnight, all files should be synchronized again in the morning.

57.3.4.5 Mobile Computing Devices.

Another often-overlooked area is mobile computing devices: smart phones and personal digital assistants (PDAs), including Palm, BlackBerry, daVinci, iPAQ, Axim, and Rex devices. These devices often contain critically important information for their users, but not everyone realizes the value of making regular backups. In fact, synchronizing a PDA with a workstation has the added benefit of creating a backup on the workstation's disk. Security managers would do well to circulate an occasional reminder for users to synchronize or back up their PDAs to prevent data loss should they lose or damage these valuable tools. Some PDA docking cradles have a prominent button that activates instant synchronization, which is completed in only a minute or two. BlackBerry devices can be backed up using BlackBerry Desktop Manager or over a wireless connection.

57.3.5 Testing.

Modern backup software automatically verifies the readability of backups as it writes them; this function must not be turned off. As mentioned earlier, when preparing for any operation that destroys or may destroy the original data, two independent backups of critical data should be made, one of which may serve as the new original; it is unlikely that exactly the same error will occur in both copies of the backup. Such risky activities include partitioning disk drives, physical repair of systems, moving disk drives from one slot or system to another, and installation of new versions of the operating system.
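Read-after-write verification can be approximated by hashing the source file and the copy and comparing the digests. This minimal sketch uses hypothetical function names; note that on a real system the re-read may be served from the operating system's cache rather than from the medium itself, which is one reason dedicated backup software verifies at a lower level. In practice the two independent copies would go to separate media, not to one temporary directory.

```python
import hashlib
import shutil
import tempfile
from pathlib import Path


def sha256_of(path: Path, block_size: int = 1 << 20) -> str:
    """Hash a file in blocks so large files need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for block in iter(lambda: f.read(block_size), b""):
            digest.update(block)
    return digest.hexdigest()


def copy_and_verify(source: Path, destination: Path) -> str:
    """Copy a file and confirm the copy matches; return the verified digest."""
    shutil.copy2(source, destination)
    expected, actual = sha256_of(source), sha256_of(destination)
    if expected != actual:
        raise IOError(f"verification failed for {destination}")
    return actual


# Example usage: two verified copies before a risky operation such as repartitioning.
with tempfile.TemporaryDirectory() as tmp:
    original = Path(tmp, "payroll.db")
    original.write_bytes(b"employee records" * 1000)
    digests = [copy_and_verify(original, Path(tmp, f"payroll.backup{n}")) for n in (1, 2)]
    print(digests[0] == digests[1] == sha256_of(original))  # True
```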

57.4 DATA LIFE CYCLE MANAGEMENT.

Data management has become increasingly important in the United States with electronic discovery and with the passage of laws, such as the Gramm-Leach-Bliley Act (GLBA), Health Insurance Portability and Accountability Act (HIPAA), and the Sarbanes-Oxley Act (SOX), that regulate how organizations must deal with particular types of data. (For more on these regulations, see Chapter 64 in this Handbook.) Data life cycle management (DLM) is a comprehensive approach to managing an organization's data, involving procedures and practices as well as applications. DLM is a policy-based approach to managing the flow of an information system's data throughout its life cycle, from creation and initial storage, to the time when it becomes obsolete and is destroyed. DLM products automate the processes involved, typically organizing data into separate tiers according to specified policies, and automating data migration from one tier to another, based on those policies. As a rule, newer data, and data that must be accessed more frequently, are stored on faster but more expensive storage media, while less critical data are stored on cheaper but slower media.
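A DLM policy engine essentially evaluates rules like the ones below against each data item's age and access history. The tier names, the 30-day and 1-year thresholds, and the `DataItem` fields are hypothetical examples, not taken from any DLM product or regulation; in practice the retention period would come from business and legal policy.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class DataItem:
    name: str
    created: date
    last_accessed: date
    retention_years: int  # set by business and legal policy, not by IT alone


def assign_tier(item: DataItem, today: date) -> str:
    """Return the storage tier (or final disposition) for one item under an example policy."""
    age_days = (today - item.created).days
    idle_days = (today - item.last_accessed).days
    if age_days > item.retention_years * 365:
        return "destroy"              # end of the life cycle: dispose of securely
    if idle_days <= 30:
        return "tier1-fast-disk"      # recently used: fastest, most expensive media
    if age_days <= 365:
        return "tier2-capacity-disk"
    return "tier3-archive"            # rarely used: cheapest, slowest media


today = date(2024, 6, 1)
print(assign_tier(DataItem("q2.xlsx", date(2024, 5, 1), date(2024, 5, 30), 7), today))
# tier1-fast-disk
```

Checking the retention rule first ensures that expired data are disposed of even if someone happened to open them recently.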

Having created a backup set, what should be done with it? And how long should the backups be kept? This section looks at issues of archive management and retention from a policy perspective. Section 57.5 looks at the issues of physical storage of backup media, and Section 57.6 reviews policies and techniques for disposing of discarded backup media.

57.4.1 Retention Policies.

One of the obvious reasons to make backup copies is to recover from damage to files. Since the 2006 amendments to the Federal Rules of Civil Procedure (FRCP), however, the corporate legal staff should also advise on the retention period for certain data in order to comply with legal and regulatory requirements and to avoid potential litigation. Backup data may be used to retrieve files needed for regulatory and business requirements, although dedicated archive systems are a more effective method of storing and retrieving data needed for business and legal purposes.

The probability that a backup will be useful declines with time. The backup from yesterday is more likely to be needed, and more valuable, than the same kind of backup from last week or last month. Yet each backup contains copies of files that were changed in the period covered by that backup but that may have been deleted since the backup was made. Data center policies on retention vary because of perceived needs and experience as well as in response to business and legal demands. The following sample policy illustrates some of the possibilities in creating retention policies:

- Keep daily backups for 1 month.

- Keep end-of-week backups for 3 months.

If your company does not have a data archive system, or if the backup system does not include archival features, you may need to keep the backup media for longer periods:

- Keep end-of-month backups for 5 years.

- Keep end-of-year backups for 10 years.

With such a policy in place, after 1 year there will be 55 backups in the system. After 5 years, there will be 108, and after 10 years, 113 backups will be circulating. Proper labeling, adequate storage space, and stringent controls are necessary to ensure the availability of any required backups. The 2006 changes to the FRCP may require the corporate legal staff to advise retention of certain data for even longer periods, as support for claims of patent rights, or if litigation is envisaged. In all cases, the combination of business and legal requirements necessitates consultation outside the IT department; decisions on data retention policies must involve more than technical resources and may include engineering, accounting, human resources, and so on.
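The volume counts can be reproduced with a small calculator. It assumes that 30 daily and 13 weekly backups are in circulation once the policy is running, approximates months as 30 days, and treats every end-of-week, end-of-month, and end-of-year backup as a separate volume; the function name is illustrative. Under those assumptions it gives 108 and 113 volumes after 5 and 10 years, and 56 after the first year; whether the first-year total is 55 or 56 depends on whether the December end-of-month and end-of-year backups share a volume.

```python
def volumes_in_circulation(months_elapsed: int,
                           keep_daily: int = 30,     # about 1 month of dailies
                           keep_weekly: int = 13,    # about 3 months of weeklies
                           keep_monthly: int = 60,   # 5 years of month-end backups
                           keep_yearly: int = 10) -> int:  # 10 years of year-end backups
    """Count backup volumes held after the policy has run for the given number of months."""
    days = months_elapsed * 30          # approximation: 30-day months
    weeks = months_elapsed * 52 // 12   # whole weeks elapsed (approximate)
    years = months_elapsed // 12
    return (min(days, keep_daily) + min(weeks, keep_weekly)
            + min(months_elapsed, keep_monthly) + min(years, keep_yearly))


for years in (1, 5, 10):
    print(years, volumes_in_circulation(12 * years))
# 1 56
# 5 108
# 10 113
```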

57.4.2 Rotation.

Reusing backup volumes makes economic and functional sense. In general, when planning a backup strategy, different types of backups may be kept for different lengths of time. To ensure even wear on media, volumes should be labeled with the date on which they are returned to a storage area of available media and should be used in order of first in, first out. Backup volumes destined for longer retention should use newer media. An expiry date should be stamped on all tapes when they are acquired so that operations staff will know when to discard outdated media.
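The first-in, first-out rule and the expiry stamp can be combined in a small media-pool sketch: returned volumes join the back of the queue with their return date, each new backup takes the volume that has been idle longest, and any volume past its expiry date is retired instead of reused. Volume labels, dates, and the class names are hypothetical.

```python
from collections import deque
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class Volume:
    label: str
    expires: date                     # stamped when the medium is acquired
    returned: Optional[date] = None   # date it last re-entered the scratch pool


class MediaPool:
    """Scratch volumes reused strictly first in, first out."""

    def __init__(self) -> None:
        self.available: deque = deque()
        self.retired: list[str] = []

    def return_volume(self, vol: Volume, on: date) -> None:
        vol.returned = on
        self.available.append(vol)          # newest returns go to the back

    def next_volume(self, today: date) -> Volume:
        while self.available:
            vol = self.available.popleft()  # the volume idle longest is used first
            if vol.expires <= today:
                self.retired.append(vol.label)  # past its expiry date: discard, never reuse
                continue
            return vol
        raise RuntimeError("no usable scratch volumes; acquire new media")


pool = MediaPool()
pool.return_volume(Volume("T001", expires=date(2030, 1, 1)), on=date(2024, 1, 2))
pool.return_volume(Volume("T002", expires=date(2023, 12, 31)), on=date(2024, 1, 3))
pool.return_volume(Volume("T003", expires=date(2030, 1, 1)), on=date(2024, 1, 4))
print(pool.next_volume(date(2024, 1, 5)).label)  # T001
```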

57.4.3 Media Longevity and Technology Changes.

For short-term storage, there is no problem ensuring that stored information will be usable. Even if a software upgrade changes file formats, the previous versions are usually readable. Within a year, technological changes such as new storage formats will not make older formats unreadable.

Over the medium term (up to 5 years), difficulties of compatibility do increase, although not catastrophically. There are certainly plenty of 5-year-old systems still in use, and it is unlikely that this level of technological inertia will be seriously reduced in the future.

Over the longer term, however, there are serious problems to overcome in maintaining the availability of electronic records. During the last 10 to 20 years, certain forms of storage have become essentially unusable. As an example, the AES company was a powerful force in the dedicated word processor market in the 1970s; 8-inch disks held dozens or hundreds of pages of text and could be read in almost any office in North America. By the late 1980s, however, AES had succumbed to word processing packages running on general-purpose computers; by 1990, the last company supporting AES equipment closed its doors. Today it would be extremely difficult to find the equipment for reading AES diskettes.

The problems of obsolescence include media degradation, software incompatibilities, and hardware incompatibilities.

57.4.3.1 Media Degradation.

Magnetic media degrade over time. Over a period of a few years, thermal disruption of magnetic domains gradually blurs the boundaries of the magnetized areas, making it harder for I/O devices to distinguish between the domains representing 1s and those representing 0s. These problems affect tapes, diskettes, and magnetic disks and cause increasing parity errors. Specialized equipment and software can compensate for these errors and recover most of the data on such old media.

Tape media suffer from an additional source of degradation: The metal oxide becomes friable and begins to flake off the Mylar backing. Such losses are unrecoverable. They occur within a few years in media stored under inadequate environmental controls and within 5 to 10 years for properly maintained media. Regular regeneration by copying the data before the underlying medium disintegrates prevents data loss.

Optical disks, on which data are recorded as physical marks that are read by laser, are much more stable than magnetic media. Current estimates are that CD-ROM, CD-RW, and DVD disks will remain readable, in theory, for at least a decade, and probably longer. However, they will remain readable in practice if, and only if, future optical storage systems remain backward compatible.

USB flash drives have largely replaced floppy diskettes and Zip disks as a means of storing or transporting temporary data. Like all flash memory, flash drives support only a finite number of write and erase cycles before slowing down and failing. Typically, this is not an issue, as the rated number of cycles, from roughly a thousand to about a hundred thousand depending on the type of flash memory, far exceeds typical use for storing or transporting files.

57.4.3.2 Software Changes.

Software incompatibilities involve both application software and operating systems.

The data may be readable, but will they be usable? Manufacturers provide backward compatibility, but there are limits. For example, MS-Word 2007 can convert files from earlier versions of Word—but only back to version 6 for Windows. Over time, application programs evolve and drop support of the earliest data formats. Database programs, e-mail, spreadsheets—all of tomorrow's versions may have trouble interpreting today's data files correctly.

In any case, all conversions raise the possibility of data loss since new formats are not necessarily supersets of old formats. For example, in 1972, RUNOFF text files on mainframe systems included instructions to pause a daisy-wheel impact printer so the operator could change daisy wheels—but there was no requirement to document the desired daisy wheel. The operator made the choice. What would document conversion do with that instruction?

Even operating systems evolve. Programs intended for Windows 3.11 of the early 1990s do not necessarily function on Windows Vista in 2008. Many older operating systems are no longer supported and do not even run on today's hardware.

Finally, even hardware eventually becomes impossible to maintain. As mentioned, it would be extremely difficult to retrieve and interpret data from word processing equipment from even 20 years ago. No one outside museums or hobbyists can read an 800 bpi 9-track ½-inch magnetic tape from the very popular 1980 HP3000 Series III minicomputer. Over time, even such parameters as data encoding standards (e.g., Binary Coded Decimal (BCD), Extended Binary Coded Decimal Interchange Code (EBCDIC), and American Standard Code for Information Interchange (ASCII)) may change, making obsolete equipment difficult to use even if it can be located.
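The encoding problem is easy to demonstrate with Python's built-in `cp037` codec, one of several EBCDIC code pages: the same word produces entirely different bytes in EBCDIC and ASCII, and EBCDIC bytes decoded as if they were Latin-1 text turn into unrelated accented characters. The word used is an arbitrary example.

```python
text = "PAYROLL"

ebcdic = text.encode("cp037")   # IBM EBCDIC, US/Canada code page
ascii_ = text.encode("ascii")

print(ebcdic.hex())              # d7c1e8d9d6d3d3
print(ascii_.hex())              # 504159524f4c4c
print(ebcdic.decode("latin-1"))  # gibberish: EBCDIC bytes misread as an ASCII-family code
print(ebcdic.decode("cp037"))    # PAYROLL, readable only with the right code page
```

An archive that preserves the bytes but not a record of which encoding produced them can therefore be unreadable in practice even when the medium is intact.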

The most robust method developed to date for long-term storage of data is COM (Computer Output to Microfilm). Documents are printed to microfilm, appearing exactly as if they had been printed on paper and then microphotographed. Storage densities are high, storage costs are low, and, if necessary, the images can be read with a source of light and a simple lens or converted to the current machine-readable form using optical character recognition (OCR) software.

57.5 SAFEGUARDING BACKUPS.

Where and how backup volumes are stored affects their longevity, accessibility, and usability for legal purposes.

57.5.1 Environmental Protection.

Magnetic and optical media can be damaged by dust, mold, condensation, freezing, and excessive heat. All locations considered for storage of backup media should conform to the media manufacturer's environmental tolerances; typical values are 40 to 60 percent relative humidity and temperatures of about 50 to 75°F (about 10 to 24°C). In addition, magnetic media should not be stacked horizontally in piles; the housings of these devices are not built to withstand much pressure, so large stacks can cause damaging contact between the protective shell and the data storage surface. Electromagnetic pulses and magnetic fields are also harmful to magnetic backup media; telephones, both cordless and cellular, should be kept away from magnetic media. If degaussers are used to make data more difficult to read before media are discarded (see Section 57.6), these devices should never be allowed into an area where magnetic disks or tapes are in use or stored.

57.5.2 On-Site Protection.

It is obviously unwise to keep backups in a place where they are subject to the same risks of destruction as the computer systems they are intended to protect. However, unless backups are made through telecommunications channels to a remote facility, they must spend at least some time in the same location as the systems on which they were made.

At a minimum, backup policies should stipulate that backups are to be removed to a secure, relatively distant location as soon as possible after completion. Temporary on-site storage areas that may be suitable for holding backups until they can be moved off-site include specialized fire-resistant media storage cabinets or safes, secure media storage rooms in the data center, a location on a different floor of a multifloor building, or an appropriate location in a different building of a campus. What is not acceptable is to store backup volumes in a cabinet right next to the computer that was backed up. Even worse is the unfortunate habit of leaving backup volumes in a disorganized heap on top of the computer from which the data were copied.

In a small office, backups should be kept in a fire-resistant safe, if possible, while waiting to take the media somewhere else.

57.5.3 Off-Site Protection.

As mentioned, it is normal to store backups away from the computers and buildings where the primary copies of the backed-up data reside.

57.5.3.1 Care during Transport.

When sending backup media out for storage, operations staff should use lockable carrying cases designed for the specific media to be transported. If external firms specializing in data storage pick up the media, they usually supply such cases as part of the contract. If media are being transported by corporate staff, it is essential to explain the dangers of leaving such materials in a car: In summer, cars can get so hot that they melt the media, whereas in winter they can get so cold that the media instantly attract harmful water condensation when they are brought inside. In any case, leaving valuable data in an automobile exposes them to theft.

57.5.3.2 Homes.

The obvious, but dangerous, choice for people in small offices is to send backup media to the homes of trusted employees. There are a number of problems with this storage choice:

- Although the employee may be trustworthy, members of that person's family may not be so. Especially where teenage and younger children are present, keeping an organization's backups in a private home poses serious security risks.

- Environmental conditions in homes may be incompatible with safe long-term storage of media. For example, depending on the cleaning practices of the household, storing backups in a cardboard box under the bed may expose the media to dust, insects, cats, dogs, rodents, and damage from vacuum cleaners. In addition, temperature and humidity controls may be inadequate for safe storage of magnetic media.

- Homeowner's insurance policies are unlikely to cover loss of an employer's property and surely will not cover consequential losses resulting from damage to crucial backup volumes.

- Legal requirements for a demonstrable chain of custody for corporate documentation on backup volumes will not be met if the media are left in a private home, where unknown persons may have access to them.

57.5.3.3 Safes.

There are no fireproof safes, only fire-resistant safes. Safes are available with different degrees of guaranteed resistance to specific temperatures commonly found in ordinary fires (those not involving arson and flame accelerants). Sturdy, small safes of 1 or 2 cubic feet are available for use in small offices or homes; they can withstand the heat during the relatively short time required to burn a house or small building down. They can withstand a fall through one or two floors without breaking open. However, for use in taller buildings, only more expensive and better-built safes are appropriate to protect valuable data.

57.5.3.4 Banks.

Banks offer secured, environmentally controlled storage for valuables in safe deposit boxes. Aside from the cost of renting and accessing such boxes, the main problem with storing backups in a bank is that banks are open only part of the day; it is almost impossible to retrieve backups from a safe deposit box after normal banking hours. Moreover, safe deposit boxes do not have room for more than a few dozen small backup media. Banks are therefore unusable as data repositories for any but the smallest organizations, and even those should not consider them a reasonable alternative.

57.5.3.5 Data Vaults.

Most enterprises will benefit from contracting with professional, full-time operations that specialize in maintaining archives of backup media. Some of the key features to look for in evaluating such facilities include:

- Storage areas of concrete-and-steel construction to reduce the risk of fire

- No storage of paper documents in the same building as magnetic or optical media storage

- Full air-conditioning including humidity, temperature, and dust controls throughout the storage vaults

- Fire sensors and fire-retardant technology, preferably without the use of water

- Full-time security monitoring including motion detectors, guards, and tightly controlled access

- Uniformed, bonded personnel

- Round-the-clock (24/7/365) data pickup and delivery services

- Efficient communications with procedures for authenticating requests for changes in the lists of client personnel authorized to access archives

- Evidence of sound business planning and stability

References from customers similar in size and complexity to the inquiring enterprise will help a manager make a wise choice among alternative suppliers.

57.5.3.6 Online Backups.

An alternative to making on-site backup copies is to pay a third party to make automatic backups over high-speed telecommunications links and to store the data in a secure facility. Some firms that offer these services move data to magnetic or optical backup volumes, but others use RAID (see Section 57.2.3.1) for instant access to the latest backups. Additional features to look for when evaluating online backup facilities include:

- Compatibility of backup software with the computing platform, operating system, and application programs

- Availability of different backup options: full, differential, incremental, and delta

- Handling of files that are held in an open state by application programs

- Availability and costs of sufficient bandwidth to support desired data backup rates

- Encryption of data during transmission and when stored at the service facility

- Strong access controls to limit access to stored data to authorized personnel

- Physical security at the storage site, and other criteria similar to those listed in Section 57.5.3.5

- Methods for restoring files from these backups, and the speed with which restoration can be accomplished
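The bandwidth criterion above lends itself to a quick back-of-the-envelope check before signing a contract. The following minimal Python sketch estimates how long a given volume of changed data would take to upload over a link of known speed; the 80 percent link-efficiency default is an assumption standing in for protocol overhead and competing traffic, not a measured value, and the 50 GB and 20 Mbit/s figures are hypothetical.

```python
def upload_hours(bytes_to_send: float, link_bps: float, efficiency: float = 0.8) -> float:
    """Estimate hours needed to send bytes_to_send over a link of link_bps bits/s.

    efficiency is an ASSUMED fraction of nominal bandwidth actually available
    for backup traffic (protocol overhead, other users, provider throttling).
    """
    if link_bps <= 0 or not 0 < efficiency <= 1:
        raise ValueError("link speed must be positive and efficiency in (0, 1]")
    seconds = bytes_to_send * 8 / (link_bps * efficiency)
    return seconds / 3600


def fits_window(bytes_to_send: float, link_bps: float, window_hours: float,
                efficiency: float = 0.8) -> bool:
    """True if the upload is expected to finish within the backup window."""
    return upload_hours(bytes_to_send, link_bps, efficiency) <= window_hours


# Hypothetical example: 50 GB of changed data over a 20 Mbit/s upstream link.
hours = upload_hours(50e9, 20e6)
print(f"{hours:.1f} h")                         # 6.9 h
print(fits_window(50e9, 20e6, window_hours=8))  # True
```

By the same arithmetic, an initial full backup of 2 TB over that link would take roughly 278 hours, more than 11 days, so the first full copy and the daily increments deserve separate estimates.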

In a meta-analysis of 24 reviews of a total of 39 online backup services, ConsumerSearch concluded in 2007 that the best online backup service was MozyPro; other useful services included iBackup and Box.net.16

A related topic is the converse of online backups: backing up online services such as Gmail and AOL, where critical data reside on a server outside the user's control. For an e-mail service, users can configure an e-mail client to access the service using Post Office Protocol (POP) or Internet Message Access Protocol (IMAP) (see Chapter 5 in this Handbook), or use scripting languages to copy data from the server to a local hard disk.17
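As a minimal sketch of the scripting approach, the Python standard library's `imaplib` module can copy each message in a mailbox to a separate `.eml` file on local disk. The function names are hypothetical, and the host name, credentials, and output directory a caller would supply are placeholders; many providers also require application-specific passwords or OAuth, which this sketch does not handle.

```python
import imaplib
import pathlib


def extract_raw_messages(fetch_data):
    """Return the raw RFC 822 message bytes from an imaplib fetch response.

    Each fetched message arrives as a (response_header, message_bytes) tuple;
    bare bytes items such as b")" are protocol delimiters and are skipped.
    """
    return [item[1] for item in fetch_data if isinstance(item, tuple)]


def backup_mailbox(host, user, password, out_dir, mailbox="INBOX"):
    """Save every message in one mailbox as <uid>.eml under out_dir."""
    target = pathlib.Path(out_dir)
    target.mkdir(parents=True, exist_ok=True)
    conn = imaplib.IMAP4_SSL(host)
    try:
        conn.login(user, password)
        # readonly=True issues EXAMINE, so the backup does not alter message flags.
        conn.select(mailbox, readonly=True)
        # UIDs, unlike sequence numbers, stay stable between sessions.
        _status, data = conn.uid("SEARCH", None, "ALL")
        saved = 0
        for uid in data[0].split():
            _status, fetched = conn.uid("FETCH", uid, "(RFC822)")
            for raw in extract_raw_messages(fetched):
                (target / f"{uid.decode()}.eml").write_bytes(raw)
                saved += 1
        return saved
    finally:
        conn.logout()
```

A call such as `backup_mailbox("imap.example.com", "user@example.com", "app-password", "mail-backup")`, run on a schedule, produces a folder of standard message files that most e-mail clients can reopen; the resulting folder should itself be included in the organization's regular backups.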

57.6 DISPOSAL.

Before throwing out backup media containing unencrypted sensitive information, operations and security staff should ensure that the media are unreadable. This section looks at the problem of data scavenging and then recommends methods for preventing such unauthorized data recovery.

57.6.1 Scavenging.

Discarded disk drives with fully readable information have repeatedly been found for sale by computer resellers, at auctions, at used-equipment exchanges, on eBay, and at flea markets and yard sales.18 In a formal study of the problem from November 2000 through August 2002, MIT scientists Simson Garfinkel and Abhi Shelat bought 158 used disk drives from many types of sources and studied the data they found on the drives. Using special analytical tools, the scientists found a total of 75 GB of readable data. They wrote:

With several months of work and relatively little financial expenditure, we were able to retrieve thousands of credit card numbers and extraordinarily personal information on many individuals. We believe that the lack of media reports about this problem is simply because, at this point, few people are looking to repurposed hard drives for confidential material. If sanitization practices are not significantly improved, it's only a matter of time before the confidential information on repurposed hard drives is exploited by individuals and organizations that would do us harm.19

Computer crime specialists have described unauthorized access to information left on discarded media as scavenging, browsing, and Dumpster-diving (from the trademarked name of metal bins often used to collect trash outside office buildings).

Scavenging can take place within an enterprise; for example, there have been documented cases of criminals who arranged to read scratch tapes, used for temporary storage of data, before they were reused or erased. Often they found valuable data left by previous users. Operations policies should not allow scratch tapes, or other media containing confidential data, to be circulated; all scratch media, including backup media that are being returned to the available list, should be erased before they are put on the media rack.

Before deciding to toss potentially valuable documents or backup media into the trash can, managers should realize that in the United States, according to a U.S. Supreme Court ruling (California v. Greenwood, 1988), there is no reasonable expectation of privacy in trash left out for collection. Anything that is thrown out may be searched by police without a warrant, or inspected by anyone who can reach it without committing trespass. Readers in other jurisdictions should obtain legal advice on the applicable statutes.

Under these circumstances, the only reasonable protection against data theft is to make the trash unreadable.

57.6.2 Data and Media Destruction.

Many users know that when a file is erased or purged from a magnetic disk, most operating systems leave the information entirely or largely intact and remove only the pointers to it from the directory. Unerase utilities search the disk and reconstruct the chain of extents (areas of contiguous storage), usually with human intervention to verify that the data are still good.

57.6.2.1 Operating System Erase.

Multiuser operating systems remove pointers from the disk directory and return all sectors of a purged file to the disk's free-space map, but the data in the original extents persist until overwritten unless specific measures are taken to obliterate them.
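The difference between removing a directory entry and destroying data can be illustrated with a deliberately simplified model. The Python sketch below is a toy, not a description of any real file system: a dictionary stands in for the directory, a byte array for the disk, and the class and method names are hypothetical. Deleting a file only frees its blocks, so a naive unerase scan of free space still finds the contents.

```python
BLOCK = 16  # toy block size in bytes


class ToyDisk:
    """A toy volume: a flat byte array plus a directory of block lists."""

    def __init__(self, blocks: int):
        self.data = bytearray(blocks * BLOCK)
        self.free = set(range(blocks))
        self.directory = {}  # file name -> list of allocated block numbers

    def write_file(self, name: str, content: bytes) -> None:
        needed = -(-len(content) // BLOCK)  # ceiling division
        chosen = sorted(self.free)[:needed]
        if len(chosen) < needed:
            raise OSError("toy disk full")
        for i, blk in enumerate(chosen):
            chunk = content[i * BLOCK:(i + 1) * BLOCK].ljust(BLOCK, b"\0")
            self.data[blk * BLOCK:(blk + 1) * BLOCK] = chunk
            self.free.discard(blk)
        self.directory[name] = chosen

    def delete_file(self, name: str) -> None:
        # Mimics the behavior described in the text: the directory entry is
        # removed and the blocks are marked free, but their bytes are untouched.
        self.free.update(self.directory.pop(name))

    def scan_free_blocks(self, needle: bytes) -> list:
        """Naive 'unerase': report free blocks that still contain needle.

        Each block is checked separately, so a pattern straddling two
        blocks is missed; real recovery tools are far more thorough.
        """
        return [b for b in sorted(self.free)
                if needle in self.data[b * BLOCK:(b + 1) * BLOCK]]


disk = ToyDisk(blocks=8)
disk.write_file("memo.txt", b"Q3 layoffs: 40 staff; do not circulate")
disk.delete_file("memo.txt")
print("memo.txt" in disk.directory)       # False
print(disk.scan_free_blocks(b"layoffs"))  # [0]
```

The file has vanished from the directory, yet its first block is intact in free space until some later write happens to reuse it.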

Formatting a disk is generally believed to destroy all of its data; however, even formatting and overwriting files on magnetic media may not make them unreadable to sophisticated equipment. Because information on magnetic tapes and disks resides in the difference in intensity between highly magnetized areas (1s) and less-magnetized areas (0s), writing the same 0s or 1s over all areas to be obliterated merely reduces the signal-to-noise ratio. That is, the residual magnetic fields still vary in more or less the original pattern; they are just less easily distinguished. With highly sensitive readers, an analyst can recover much of the original information from a magnetic tape or disk that has been zeroed.

57.6.2.2 Field Research.

Garfinkel and Shelat reviewed the methods used, often unsuccessfully, to destroy (sanitize) data on disk drives: erasure (which leaves data almost entirely intact), overwriting (good enough or even perfect, but not always properly applied), physical destruction (which, obviously, renders what is left of the drive unusable), and degaussing (which uses strong magnetic fields to disrupt the magnetic domains beyond readability).

With regard to ordinary file-system formatting, the authors noted:

most operating system format commands only write a minimal disk file system; they do not rewrite the entire disk. To illustrate this assertion, we took a 10-Gbyte hard disk and filled every block with a known pattern. We then initialized a disk partition using the Windows 98 FDISK command and formatted the disk with the format command. After each step, we examined the disk to determine the number of blocks that had been written…. Users might find these numbers discouraging: despite warnings from the operating system to the contrary, the format command overwrites barely more than 0.1 percent of the disk's data. Nevertheless, the command takes more than eight minutes to do its job on the 10-Gbyte disk—giving the impression that the computer is actually overwriting the data. In fact, the computer is attempting to read all of the drive's data so it can build a bad-block table. The only blocks that are actually written during the format process are those that correspond to the boot blocks, the root directory, the file allocation table, and a few test sectors scattered throughout the drive's surface.20
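The measurement technique the authors describe (fill every block with a known pattern, run the operation under test, then count which blocks changed) can be reproduced on a disk image file. In the Python sketch below, `fake_format` is a hypothetical stand-in that rewrites only a few metadata blocks, roughly as the quotation describes; to measure a real tool, one would run that tool against the image or a scratch drive in its place. The block size and block numbers are arbitrary choices for illustration.

```python
import os
import tempfile

BLOCK_SIZE = 512
PATTERN = b"\xa5" * BLOCK_SIZE  # known fill pattern


def fill_image(path: str, blocks: int) -> None:
    """Write the known pattern into every block of a disk image."""
    with open(path, "wb") as f:
        for _ in range(blocks):
            f.write(PATTERN)


def fake_format(path: str, metadata_blocks=(0, 1, 2, 100)) -> None:
    """Hypothetical 'format': overwrite only a handful of metadata blocks."""
    with open(path, "r+b") as f:
        for blk in metadata_blocks:
            f.seek(blk * BLOCK_SIZE)
            f.write(b"\0" * BLOCK_SIZE)


def count_changed_blocks(path: str) -> tuple:
    """Return (changed, total): blocks that no longer hold the known pattern."""
    changed = total = 0
    with open(path, "rb") as f:
        while block := f.read(BLOCK_SIZE):
            total += 1
            if block != PATTERN:
                changed += 1
    return changed, total


with tempfile.TemporaryDirectory() as tmp:
    image = os.path.join(tmp, "disk.img")
    fill_image(image, blocks=4096)  # a 2-MiB toy "disk"
    fake_format(image)
    changed, total = count_changed_blocks(image)
    print(f"{changed} of {total} blocks overwritten ({100 * changed / total:.2f}%)")
    # 4 of 4096 blocks overwritten (0.10%)
```

Every block still holding the known pattern after the operation is a block whose previous contents the operation left untouched.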

57.6.2.3 Clearing Data.

One way of clearing data21 on magnetic media is to overwrite it with several passes of random patterns. Random patterns make it far more difficult, but not impossible, to extract useful information from discarded disks and tapes. For this reason, the U.S. Government no longer allows overwriting as a means of eliminating data remanence from classified media that are being moved to a lower level of protection. Small removable thumb drives of 2 GB and Advanced Technology Attachment (ATA) disk drives of 15 GB or more can use a secure-erase function to purge data.22 ATA drives implement secure erase in their firmware; a secure-erase utility for thumb drives can be downloaded from the University of California, San Diego (UCSD) Center for Magnetic Recording Research (CMRR) site.23
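A multi-pass random overwrite of a single file can be sketched in Python as follows. This illustrates the technique only: as noted above, overwriting is not accepted for classified media, and on journaling or copy-on-write file systems, flash media, and drives that remap bad sectors, the new bytes may land in different physical locations from the old ones, leaving the original data intact. The function names are hypothetical and the three-pass default is an arbitrary choice.

```python
import os
import tempfile


def overwrite_in_place(path: str, passes: int = 3, chunk: int = 1 << 20) -> None:
    """Overwrite every byte of a file with random data, `passes` times.

    Each pass is flushed and fsync()ed so the data is handed to the device
    before the next pass starts. This does NOT guarantee that the original
    physical sectors are rewritten on flash media, journaling or
    copy-on-write file systems, or drives that remap sectors.
    """
    size = os.path.getsize(path)
    with open(path, "r+b") as f:
        for _ in range(passes):
            f.seek(0)
            remaining = size
            while remaining > 0:
                n = min(chunk, remaining)
                f.write(os.urandom(n))
                remaining -= n
            f.flush()
            os.fsync(f.fileno())


def shred_file(path: str, passes: int = 3) -> None:
    """Overwrite a file's contents, then remove its directory entry."""
    overwrite_in_place(path, passes)
    os.remove(path)


# Demonstration on a throwaway temporary file.
fd, demo_path = tempfile.mkstemp()
with os.fdopen(fd, "wb") as f:
    f.write(b"account 4471: overdue" * 100)
overwrite_in_place(demo_path, passes=2)
with open(demo_path, "rb") as f:
    still_readable = b"overdue" in f.read()
size_after = os.path.getsize(demo_path)
shred_file(demo_path)
print(still_readable, size_after, os.path.exists(demo_path))
```

Overwriting before deletion matters because, as described earlier in this section, deletion alone merely returns the blocks to the free-space map with their contents intact.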

Degaussers can be used to remove data remanence physically. A degausser is a device that generates a magnetic field used to sanitize magnetic media. Degaussers are rated according to the type of magnetic media (low energy or high energy) they can purge, and they operate using either a strong permanent magnet or an electromagnetic coil. They range from simple handheld units suitable for low-volume use to high-energy units capable of erasing oxide- or metal-based magnetic media with coercivities of up to 1,700 oersteds (Oe). These devices remove all traces of previously recorded data, allowing media to be used again. The U.S. Government has approved certain degaussers for secure erasure of classified data. Degaussing any hard drive assembly usually destroys the drive, because degaussing also erases the firmware that manages the device. Degaussers have other drawbacks as well.
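Degausser ratings are quoted in oersteds, the CGS unit of magnetic field strength; 1 Oe equals 1000/(4π), or about 79.58, amperes per metre in SI units. The Python sketch below converts between the two units and checks whether a degausser's rated field exceeds a medium's coercivity by a chosen margin. The function names are hypothetical, and the 2× default margin is an assumption for illustration only, not a published requirement; actual selection should follow the degausser manufacturer's ratings and any applicable government product lists.

```python
import math

A_PER_M_PER_OE = 1000 / (4 * math.pi)  # one oersted expressed in amperes per metre


def oe_to_a_per_m(oersteds: float) -> float:
    """Convert a field strength from oersteds to amperes per metre."""
    return oersteds * A_PER_M_PER_OE


def a_per_m_to_oe(amps_per_metre: float) -> float:
    """Convert a field strength from amperes per metre to oersteds."""
    return amps_per_metre / A_PER_M_PER_OE


def degausser_adequate(rated_field_oe: float, media_coercivity_oe: float,
                       margin: float = 2.0) -> bool:
    """True if the rated field exceeds the media coercivity by `margin`.

    The margin is an ASSUMED safety factor used only for illustration.
    """
    return rated_field_oe >= margin * media_coercivity_oe


print(round(oe_to_a_per_m(1700)))      # 135282
print(degausser_adequate(5000, 1700))  # True
print(degausser_adequate(3000, 1700))  # False
```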

Ensconce Data Technology, a firm specializing in secure data destruction, has argued that software overwriting alone is not trustworthy because the chosen algorithm may be inadequate and because some portions of the drive may not be overwritten at all. In the firm's view, degaussing is unreliable and even dangerous: it sometimes damages drives so badly that users cannot check them to evaluate the completeness of the wipe, even though expert data recovery specialists may still be able to extract usable data from the degaussed disks. The strong magnetic fields can also unintentionally damage other equipment. Outsourcing degaussing means storing drives until pickup, losing control over the data, and having to trust third parties to provide reliable records of the destruction. Physical shredders are expensive and usually offered only by outside companies, leading to similar problems of temporary storage, relinquished control, and dubious audit trails. Ensconce Data Technology's Digital Shredder is a small, portable hardware device with a wide range of interchangeable interfaces, called personality modules, that allow a variety of disk drives to be wiped securely. In the company's words, the design objectives were to provide:

- Destruction of data beyond forensic recovery

- Retention of care, custody, and control

- Certification and defendable audit trail

- Ease of deployment

- Ability to recycle the drive for reuse

The unit can wipe up to three disks at once. It has its own touch screen; offers password-based user authentication to ensure that it is not misused by unauthorized personnel; shows the current status of each bay through colored light-emitting diodes (LEDs); can format drives for a range of file systems; and can reimage a drive by making bitwise copies from a master drive in one bay to a reformatted drive in another.
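Two of the capabilities described above, confirming that a wipe actually reached every byte and confirming that a bitwise reimage matches its master, reduce to simple checks that support an audit trail. The Python sketch below works on image files rather than physical drives; the function names, device identifier, and record layout are hypothetical and are not drawn from the Digital Shredder or any other product.

```python
import hashlib
import os
import shutil
import tempfile
from datetime import datetime, timezone


def verify_wiped(path: str, fill: int = 0x00, chunk: int = 1 << 20) -> bool:
    """Read back an entire image and confirm every byte equals `fill`."""
    expected = bytes([fill]) * chunk
    with open(path, "rb") as f:
        while block := f.read(chunk):
            if block != expected[:len(block)]:
                return False
    return True


def sha256_of(path: str, chunk: int = 1 << 20) -> str:
    """SHA-256 digest of a file, read in chunks to limit memory use."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        while block := f.read(chunk):
            h.update(block)
    return h.hexdigest()


def verified_copy(master: str, target: str) -> bool:
    """Bitwise-copy master to target, then confirm the digests match."""
    shutil.copyfile(master, target)
    return sha256_of(master) == sha256_of(target)


def audit_record(device_id: str, operator: str, verified_blank: bool) -> dict:
    """Hypothetical minimal log entry supporting a wipe certificate."""
    return {
        "device": device_id,
        "operator": operator,
        "verified_blank": verified_blank,
        "timestamp_utc": datetime.now(timezone.utc).isoformat(),
    }


with tempfile.TemporaryDirectory() as tmp:
    wiped = os.path.join(tmp, "wiped.img")
    with open(wiped, "wb") as f:
        f.write(bytes(4096))  # all zeros, as if freshly wiped
    dirty = os.path.join(tmp, "dirty.img")
    with open(dirty, "wb") as f:
        f.write(bytes(4095) + b"\x01")  # one surviving nonzero byte
    record = audit_record("SN-EXAMPLE-001", "operator-a", verify_wiped(wiped))
    dirty_ok = verify_wiped(dirty)

    master = os.path.join(tmp, "master.img")
    with open(master, "wb") as f:
        f.write(b"golden image " * 100)
    copy_ok = verified_copy(master, os.path.join(tmp, "copy.img"))

print(record["verified_blank"], dirty_ok, copy_ok)  # True False True
```

Reading back every byte, rather than trusting the wiping tool's own report, is what makes such a record defensible; on a physical drive the same check would be run against the raw device.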