What a benchmark should measure depends on the kind of application whose performance tuning it is meant to assist.

An application that is optimized for throughput has relatively simple needs. The only thing that matters is performing as many operations as possible in a given time interval. Used as a regression test, a throughput benchmark verifies that the application can still do x operations in y seconds on the baselined hardware. Once this criterion has been fulfilled, the benchmark can be rerun after every change to verify that it is still maintained.
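The "x operations in y seconds" check can be sketched in a few lines of Java. This is a minimal illustration, not a production harness: the class name `ThroughputBenchmark`, the stand-in workload, and the baseline value are all assumptions made for the example, and a real benchmark would need more careful warm-up and repeated runs.

```java
import java.util.concurrent.TimeUnit;

public final class ThroughputBenchmark {
    /** Written by the sample workload so the JIT cannot discard it as dead code. */
    static volatile long sink;

    /** Runs op back to back for roughly durationMillis and returns how many runs completed. */
    static long countOperations(Runnable op, long durationMillis) {
        long deadline = System.nanoTime() + TimeUnit.MILLISECONDS.toNanos(durationMillis);
        long count = 0;
        while (System.nanoTime() - deadline < 0) {
            op.run();
            count++;
        }
        return count;
    }

    /** Regression check: were at least the baselined number of operations completed? */
    static boolean meetsBaseline(long operations, long baselineOperations) {
        return operations >= baselineOperations;
    }

    public static void main(String[] args) {
        // Hypothetical stand-in for the real operation under test.
        Runnable op = () -> { sink = sink * 31 + 7; };
        countOperations(op, 500);              // warm-up so the JIT can compile the hot path
        long ops = countOperations(op, 1_000); // y = 1 second
        long baseline = 1_000_000;             // x: assumed value, not a measured baseline
        System.out.println(ops + " ops in 1 s, baseline met: " + meetsBaseline(ops, baseline));
    }
}
```

The baseline is only meaningful on the hardware where it was recorded, which is why the comparison is kept separate from the measurement.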

Again, as we learned in the chapter on memory management, throughput alone is not usually the real-life problem (except in, for example, batch jobs or offline processing). However, writing a benchmark that measures throughput is very simple. Its functionality can usually be extracted from a larger application without the need for elaborate software engineering tricks.

Throughput benchmarks can be improved to more accurately reflect real life use cases. Typically, throughput can be measured with a fixed response time demand. The previously mentioned and easily extractable throughput benchmark can usually, with relatively small effort, be modified to accommodate this.

If a fixed response time is added as a constraint to a throughput benchmark, the benchmark also factors latencies into its problem set. The benchmark can then be used to verify that an application keeps its service level agreements, such as preset response times, under different amounts of load.
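One way to add such a constraint is to time every operation and count only those that finish within the limit. The following Java sketch assumes a hypothetical `ResponseTimeBenchmark` class, a placeholder workload, and an arbitrary requirement that 99 percent of operations complete within 10 milliseconds; none of these figures come from a real service level agreement.

```java
import java.util.concurrent.TimeUnit;

public final class ResponseTimeBenchmark {
    /** Written by the sample workload so the JIT cannot discard it as dead code. */
    static volatile long sink;

    /** Outcome of one run: all completed operations, and those that met the response time limit. */
    static final class Result {
        final long total;
        final long withinLimit;

        Result(long total, long withinLimit) {
            this.total = total;
            this.withinLimit = withinLimit;
        }
    }

    /** Runs op for roughly durationMillis and times each operation individually. */
    static Result run(Runnable op, long durationMillis, long maxLatencyNanos) {
        long deadline = System.nanoTime() + TimeUnit.MILLISECONDS.toNanos(durationMillis);
        long total = 0;
        long withinLimit = 0;
        long start = System.nanoTime();
        while (start - deadline < 0) {
            op.run();
            long end = System.nanoTime();
            total++;
            // Any pause that hits during the operation (for example a GC) shows up here.
            if (end - start <= maxLatencyNanos) {
                withinLimit++;
            }
            start = end;
        }
        return new Result(total, withinLimit);
    }

    /** True if at least requiredFraction of all operations met the response time limit. */
    static boolean meetsSla(Result r, double requiredFraction) {
        return r.total > 0 && (double) r.withinLimit / r.total >= requiredFraction;
    }

    public static void main(String[] args) {
        // Hypothetical workload: allocates a little so that the garbage collector has work to do.
        Runnable op = () -> { sink += new byte[1024].length; };
        run(op, 500, Long.MAX_VALUE); // warm-up
        Result r = run(op, 1_000, TimeUnit.MILLISECONDS.toNanos(10));
        System.out.println(r.withinLimit + " of " + r.total + " ops within 10 ms, SLA met: "
                + meetsSla(r, 0.99));
    }
}
```

The score is now the pair of throughput and the fraction of operations within the limit, so a change that raises raw throughput but causes long pauses no longer looks like an improvement.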

Note

The internal JRockit JVM benchmark suite used by JRockit QA contains many throughput benchmarks with response time requirements. These benchmarks are used to verify that the deterministic garbage collector fulfils its service level agreements for various kinds of workloads.

Low latency is typically more important to customers than high throughput, at least for client/server type systems. Writing relevant benchmarks for low latency is somewhat more challenging.

Note

Normally, simple web applications can get by with response times on the order of a second or so. In the financial industry, however, applications that require pause time targets of less than 10 milliseconds, all the way down to single digits, are becoming increasingly common. A similar case can be made for the telecom industry, which usually requests pause times of no more than 50 milliseconds. Both the customer and the JVM vendor need to do latency benchmarking in order to understand how to meet these challenges. For this, relevant benchmarks are required.

Benchmarking for scalability is all about measuring resource utilization. Good scalability means that service levels can be maintained while the workload is increasing. If an application does not scale well, it isn't fully utilizing the hardware. Consequently, throughput will suffer. In an ideal world, linearly increasing load on the application should at most linearly degrade service levels and performance.

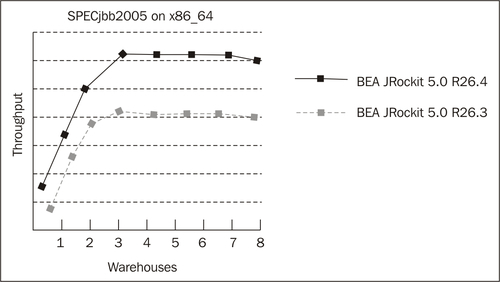

The preceding figure illustrates a nice example of near linear scalability on a per-core basis. It shows the performance in ops per second for an older version of the JRockit JVM running the well-known SPECjbb2005 benchmark. SPECjbb2005 is a multithreaded benchmark that gradually increases load on a transaction processing framework.

A SPECjbb run starts out using fewer load generating worker threads than there are cores in the physical machine (one thread per virtual warehouse in the benchmark). Gradually, throughout the run, threads are added, enabling more throughput (sort of an incremental warm-up). From the previous graph, we can see that adding more threads (warehouses) makes the throughput score scale linearly until the number of threads equals the number of cores, that is, when saturation is reached. Adding even more warehouses maintains the same service level until the very end. This means that we have adequate scalability. SPECjbb will be covered in greater detail later in this chapter.

A result like this is good. It means that the application is indeed scalable on the JVM. Somewhat simplified, if data sets grow larger, all that is required to ensure that the application can keep up is throwing more hardware at it. Maintaining scalability is a complex equation that involves the algorithms in the application as well as the ability of the JVM and OS to keep up with increased load in the form of network traffic, CPU cycles, and number of threads executing in parallel.
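The same kind of sweep can be sketched for any workload: run it with an increasing number of threads and compare the score with perfect linear scaling from the single-threaded result. The Java example below is an illustration only and is not how SPECjbb2005 is implemented. The class `ScalabilitySweep`, its trivial CPU-bound workload, and the `efficiency` metric (throughput with n threads divided by n times the single-threaded throughput) are assumptions made for this sketch.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.LongAdder;

public final class ScalabilitySweep {
    /** Written by the workers so the JIT cannot discard their arithmetic as dead code. */
    static volatile long sink;

    /** Runs a trivial CPU-bound loop on the given number of threads and returns total operations. */
    static long opsWithThreads(int threads, long durationMillis) {
        LongAdder completed = new LongAdder();
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        long deadline = System.nanoTime() + TimeUnit.MILLISECONDS.toNanos(durationMillis);
        for (int i = 0; i < threads; i++) {
            pool.execute(() -> {
                long local = 1;
                long count = 0;
                while (System.nanoTime() - deadline < 0) {
                    local = local * 31 + 7; // hypothetical stand-in for one transaction
                    count++;
                }
                sink = local;
                completed.add(count);
            });
        }
        pool.shutdown();
        try {
            if (!pool.awaitTermination(durationMillis + 10_000, TimeUnit.MILLISECONDS)) {
                throw new IllegalStateException("workers did not finish in time");
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return completed.sum();
    }

    /** 1.0 means perfect linear scaling relative to the single-threaded score. */
    static double efficiency(long opsOneThread, long opsManyThreads, int threads) {
        return (double) opsManyThreads / ((double) opsOneThread * threads);
    }

    public static void main(String[] args) {
        int cores = Runtime.getRuntime().availableProcessors();
        opsWithThreads(cores, 500); // warm-up
        long single = opsWithThreads(1, 1_000);
        for (int t = 1; t <= 2 * cores; t++) {
            long ops = opsWithThreads(t, 1_000);
            System.out.printf("%d threads: %d ops/s, efficiency %.2f%n",
                    t, ops, efficiency(single, ops, t));
        }
    }
}
```

Because the workers share no data, this workload should scale well up to the core count. Once there are more threads than cores, the efficiency figure is expected to drop while total throughput stays roughly flat, which corresponds to the saturation behavior described above.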

Note

While scalability is a most desirable property, as a target goal, it is usually enough to maximize performance up to the most powerful hardware specification (number of cores, and so on) that the application will realistically be deployed on. Optimizing for good scalability on some theoretical mega-machine with thousands of cores may be wasted effort. Focusing too much on total scalability may also run the risk of decreasing performance on smaller configurations. It is actually quite simple to construct a naive but perfectly scalable system that has horrible overall performance.

Power consumption is a somewhat neglected benchmarking area that is becoming increasingly important. Power consumption matters, not only in embedded systems, but also on the server side if the server cluster is large enough, because of cooling costs and infrastructure issues. Power consumption is directly related to datacenter space requirements. Virtualization is an increasingly popular way to get more out of existing hardware, but it also makes sense to benchmark power consumption on the general application level.

Optimizing an application for low power consumption may, for example, involve minimizing used CPU cycles by utilizing locks with OS thread suspension instead of spin locks. It may also be the other way around: making expensive transitions between the OS and the application less frequently can be the key to low power usage instead.
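The two locking styles can be contrasted with a small Java sketch. The `SpinLock` class here is a deliberately naive illustration written for this example, while `java.util.concurrent.locks.ReentrantLock` is used as an example of a lock that parks waiting threads so that the OS can deschedule them. Which variant actually uses less power for a given application is not something this code shows; that has to be measured.

```java
import java.util.concurrent.atomic.AtomicBoolean;
import java.util.concurrent.locks.ReentrantLock;

public final class LockStyles {
    /** Naive spin lock: a waiting thread stays on the CPU and keeps consuming cycles. */
    static final class SpinLock {
        private final AtomicBoolean held = new AtomicBoolean(false);

        void lock() {
            while (!held.compareAndSet(false, true)) {
                // busy-wait until the lock is released
            }
        }

        void unlock() {
            held.set(false);
        }
    }

    /** Starts the given number of threads running body and waits for all of them. */
    static void runInThreads(int threads, Runnable body) {
        Thread[] workers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(body);
            workers[i].start();
        }
        try {
            for (Thread w : workers) {
                w.join();
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
    }

    /** Increments a shared counter under the spin lock and returns the final value. */
    static long countWithSpinLock(int threads, int incrementsPerThread) {
        SpinLock lock = new SpinLock();
        long[] counter = new long[1];
        runInThreads(threads, () -> {
            for (int i = 0; i < incrementsPerThread; i++) {
                lock.lock();
                try { counter[0]++; } finally { lock.unlock(); }
            }
        });
        return counter[0];
    }

    /** Same work, but contended threads are parked instead of spinning. */
    static long countWithBlockingLock(int threads, int incrementsPerThread) {
        ReentrantLock lock = new ReentrantLock();
        long[] counter = new long[1];
        runInThreads(threads, () -> {
            for (int i = 0; i < incrementsPerThread; i++) {
                lock.lock();
                try { counter[0]++; } finally { lock.unlock(); }
            }
        });
        return counter[0];
    }

    public static void main(String[] args) {
        System.out.println("spin lock:     " + countWithSpinLock(4, 100_000));
        System.out.println("blocking lock: " + countWithBlockingLock(4, 100_000));
    }
}
```

Both variants produce the same result. The difference lies in what waiting threads do: spinning avoids transitions into the OS but burns cycles, whereas parking frees the CPU at the cost of those transitions, which is the trade-off described above.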

One might also take a more proactive approach by making sure during development that performance criteria can be fulfilled on lower CPU frequencies or with the application bound only to a subset of the CPUs in a machine.

Naturally, these are just a few of the possible areas where application performance might need to be benchmarked. If performance for an application is important in a completely different area, another kind of benchmark will be more appropriate. Sometimes, just quantifying what "performance" should mean is surprisingly difficult.