CHAPTER 30

E-COMMERCE AND WEB SERVER SAFEGUARDS

Robert Gezelter

30.2 BUSINESS POLICIES AND STRATEGIES

30.2.1 Step 1: Define Information Security Concerns Specific to the Application

30.2.2 Step 2: Develop Security Service Options

30.2.3 Step 3: Select Security Service Options Based on Requirements

30.2.4 Step 4: Ensure Ongoing Attention to Changes in Technologies and Requirements

30.2.5 Using the Security Services Framework

30.3.1 Web Site–Specific Measures

30.3.5 Working with Law Enforcement

30.3.8 Appropriate Responses to Attacks

30.4.3 Loss of Customers/Business

30.4.5 Proactive versus Reactive Threats

30.4.6 Threat and Hazard Assessment

30.5.1 Ubiquitous Internet Protocol Networking

30.5.8 Multiple Security Domains

30.5.9 What Needs to Be Exposed?

30.5.12 Maintaining Site Integrity

30.6.4 Multiple Security Domains

30.6.8 Read-Only File Security

30.7.2 Customer Monitoring, Privacy, and Disclosure

30.7.4 Application Service Providers

30.1 INTRODUCTION.

Today, electronic commerce involves the entire enterprise. While the most obvious e-commerce applications involve business transactions with outside customers on the World Wide Web (WWW or Web), they are merely the proverbial tip of the iceberg. The presence of e-commerce has become far more pervasive, often involving the entire logistical and financial supply chains that are the foundations of modern commerce. Even the smallest organizations now rely on the Web for access to services and information.

The pervasive desire to improve efficiency often causes a convergence between the systems supporting conventional operations and those supporting the organization's online business. It is thus common for internal systems at bricks-and-mortar stores to utilize the same back-office systems as are used by Web customers. It is also common for kiosks and cash registers to use wireless networks to establish connections back to internal systems. These interconnections have the potential to provide intruders with access directly into the heart of the enterprise.

The TJX case, which came to public attention in the beginning of 2007, was one of a series of large-scale compromises of electronically stored information on back-office and e-commerce systems. Most notably, the TJX case appears to have started with an insufficiently secured corporate network and the associated back-office systems, not a Web site penetration. This breach escalated into a security breach of corporate data systems. It has been reported that at least 94 million credit cards were compromised.1 On November 30, 2007, it was reported that TJX, the parent organization of stores including TJ Maxx and Marshalls, agreed to settle bank claims related to VISA cards for US$40.9 million.2

E-commerce has now come of age, giving rise to fiduciary risks that are important to senior management and to the board of directors. The security of data networks, both those used by customers and those used internally, now has reached the level where it significantly affects the bottom line. TJX has suffered both monetarily and in public relations, with stories concerning the details of this case appearing in the Wall Street Journal, the New York Times, Business Week, and many industry trade publications. Data security is no longer an abstract issue of concern only to technology personnel. The legal settlements are far in excess of the costs directly associated with curing the technical problem.

Protecting e-commerce information requires a multifaceted approach, involving business policies and strategies as well as the technical issues more familiar to information security professionals.

Throughout the enterprise, people and information are physically safeguarded. Even the smallest organizations have a locked door and a receptionist to keep outsiders from entering the premises. The larger the organization, the more elaborate the precautions needed. Small businesses have simple locked doors; larger enterprises often have many levels of security, including electronic locks, security guards, and additional levels of receptionists. Companies also jealously guard the privacy of their executive conversations and research projects. Despite these norms, it is not unusual to find that information security practices are weaker than physical security measures. Connection to the Internet (and within the company, to the intranet) worsens the problem by greatly increasing the risk, and decreasing the difficulty, of attacks.

30.2 BUSINESS POLICIES AND STRATEGIES.

In the complex world of e-commerce security, best practices are constantly evolving. New protocols and products are announced regularly. Before the Internet explosion, most companies rarely shared their data and their proprietary applications with any external entities, and information security was not a high priority. Now companies taking advantage of e-commerce need sound security architectures for virtually all applications. Effective information security has become a major business issue. This chapter provides a flexible framework for building secure e-commerce applications and assistance in identifying the appropriate and required security services. The theoretical examples shown are included to facilitate the reader's understanding of the framework in a business-to-customer (B2C) and business-to-business (B2B) environment.

A reasonable framework for e-commerce security is one that:

- Defines information security concerns specific to the application.

- Defines the security services needed to address the security concerns.

- Selects security services based on a cost-benefit analysis and risk versus reward issues.

- Ensures the ongoing attention to changes in technologies and requirements as both threats and application requirements change.

This four-step approach is recommended to define the security services selection and decision-making processes.

30.2.1 Step 1: Define Information Security Concerns Specific to the Application.

The first step is to define or develop the application architecture and the data classification involved in each transaction. This step considers how the application will function. As a general rule, if security issues are defined in terms of the impact on the business, it will be easier to discuss with management and easier to define security requirements.

The recommended approach is to develop a transactional follow-the-flow diagram that tracks transactions and data types through the various servers and networks. This should be a functional and logical view of how the application is going to work—that is, how transactions will occur, what systems will participate in the transaction management, and where these systems will support the business objectives and the organization's product value chain. Data sources and data interfaces need to be identified, and the information processed needs to be classified. In this way a complete transactional flow can be represented. (See Exhibit 30.1.)

EXHIBIT 30.1 Trust Levels for B2C Security Services

Common tiered architecture points include:

- Clients. These may be PCs, thin clients (devices that use shared applications from a server and have small amounts of memory), personal digital assistants (PDAs), and wireless application protocol (WAP) telephones.

- Servers. These may include World Wide Web, application, database, and middleware processors, as well as back-end servers and legacy systems.

- Network devices. These may include switches, routers, firewalls, NICs, codecs, modems, and internal and external hosting sites.

- Network spaces. Network demilitarized zones (DMZs), intranets, extranets, and the Internet.

It is important at this step of the process to identify the criticality of the application to the business and the overriding security concerns: transactional confidentiality, transactional integrity, or transactional availability. Defining these security issues will help justify the security services selected to protect the system. The more completely the architecture can be described, the more thoroughly the information can be protected via security services.
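The follow-the-flow view described in this step can be captured as a small data structure. The sketch below is only an illustration under stated assumptions: the `Hop` record, the classification labels, the network-space names, and the sample flow are all hypothetical and are not taken from Exhibit 30.1. It lists each hop of a transaction and reports the hops that carry sensitive data across an untrusted network space.

```python
from dataclasses import dataclass

# Hypothetical labels; a real project would use its own classification scheme.
UNTRUSTED_SPACES = {"internet"}
SENSITIVE = {"confidential", "highly sensitive"}


@dataclass(frozen=True)
class Hop:
    source: str          # component sending the data
    destination: str     # component receiving the data
    network_space: str   # e.g. "internet", "dmz", "intranet"
    data_type: str       # what is carried on this hop
    classification: str  # sensitivity label assigned in Step 1


def exposed_hops(flow):
    """Return hops that carry sensitive data across an untrusted network space."""
    return [
        hop for hop in flow
        if hop.network_space in UNTRUSTED_SPACES and hop.classification in SENSITIVE
    ]


# Invented sample flow for an order transaction.
order_flow = [
    Hop("customer browser", "web server", "internet", "card number", "highly sensitive"),
    Hop("web server", "application server", "dmz", "card number", "highly sensitive"),
    Hop("application server", "order database", "intranet", "order record", "confidential"),
    Hop("web server", "customer browser", "internet", "catalog page", "public"),
]

for hop in exposed_hops(order_flow):
    print(f"{hop.source} -> {hop.destination}: {hop.data_type} ({hop.classification})")
```

A listing of this kind is a starting point for discussion with management rather than a substitute for the diagram: it makes explicit which hops drive the confidentiality, integrity, or availability concerns identified in this step.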

30.2.2 Step 2: Develop Security Service Options.

The second step considers the security services alternatives for each architecture component and the data involved in each transaction. Each architectural component and data point should be analyzed and possible security services defined for each. Cost and feasibility should not be considered to any great degree at this stage. The objective is to form a complete list of security service options with all alternatives considered. The process should be comparable with, or use the same techniques as, brainstorming. All ideas, even if impractical or far-fetched, should be included.

Decisions should not be made during this step; that process is reserved for Step 3.

The information security organization provides services to an enterprise. The services provided by information security organizations vary from company to company. Several factors will determine the required services, but the most significant considerations include:

- Industry factors

- The company's risk appetite

- Maturity of the security function

- Organizational approach (centralized or decentralized)

- Impact of past security incidents

- Internal organizational factors

- Political factors

- Regulatory factors

- Perceived strategic value of information security

Several factors contribute to the services that information security organizations provide. “Security services” are defined as safeguards and control measures to protect the confidentiality, integrity, and accountability of information and computing resources. Security services that are required to secure e-commerce transactions need to be based on the business requirements and on the willingness to assume or reduce the risk of the information being compromised. Information security professionals can be subject-matter experts, but they are rarely equipped to make the business decisions required to select the necessary services. Twelve security services that are critical for successful e-commerce security have been identified:

- Policy and procedures are a security service that defines the amount of information security that the organization requires and how it will be implemented. Effective policy and procedures will dovetail with system strategy, development, implementation, and operation. Each organization will have different policies and procedures; best practice dictates that organizations have policies and procedures based on the risk the organization is willing to take with its information. At a minimum, organizations should have a high-level policy that dictates the proper use of information assets and the ramifications of misuse.

- Confidentiality and encryption are a security service that secures data while they are stored or in transit from one machine to another. A number of encryption schemes and products exist; each organization needs to identify those products that best integrate with the application being deployed. For a discussion of cryptography, see Chapter 7 in this Handbook.

- Authentication and identification are a security service that differentiates users and verifies that they are who they claim to be. Typically, passwords are used, but stronger methods include tokens, smart cards, and biometrics. These stronger methods verify what you have (e.g., token) or who you are (e.g., biometrics), not just what you know (password). Two-factor authentication combines two of these three methods and is referred to as strong authentication. For more on this subject, see Chapter 28 in this Handbook.

- Authorization determines what access privileges a user requires within the system. Access includes data, operating system, transactional functions, and processes. Access should be approved by management who own or understand the system before access is granted. Authorized users should be able to access only the information they require for their jobs.

- Authenticity is a security service that validates a transaction and binds the transaction to a single accountable person or entity. Also called nonrepudiation, authenticity ensures that a person cannot dispute the details of a transaction. This is especially useful for contract and legal purposes.

- Monitoring and audit provide an electronic trail for a historical record of the transaction. Audit logs consist of operating system logs, application transaction logs, database logs, and network traffic logs. Monitoring these logs for unauthorized events is considered a best practice.

- Access controls and intrusion detection are technical, physical, and administrative services that prevent unauthorized access to hardware, software, or information. Data are protected from alteration, theft, or destruction. Access controls are preventive—stopping unauthorized access from occurring. Intrusion detection catches unauthorized access after it has occurred, so that damage can be minimized and access cut off. These controls are especially necessary when confidential or critical information is being processed.

- Trusted communication is a security service that assures that communication is secure. In most instances involving the Internet, this means that the communication will be encrypted. In the past, communication was trusted because it was contained within an organization's perimeter. Communication is currently ubiquitous and can come from almost anywhere, including extranets and the Internet.

- Antivirus is a security service that prevents, detects, and cleans viruses, Trojan horse programs, and other malware.

- System integrity controls are security services that help to assure that the system has not been altered or tampered with by unauthorized access.

- Data retention and disposal are a security service that keeps required information archived, or deletes data when they are no longer required. Availability of retained data is critical when an emergency exists. This is true whether the problem is a systems outage or a legal process, whether caused by a natural disaster or by a terrorist attack (e.g., September 11, 2001).

- Data classification is a security service that identifies the sensitivity and confidentiality of information. The service provides guides for information labeling, and for protection during the information's life.
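Returning to the authentication and identification service in the list above, the distinction between what you know, what you have, and who you are can be made concrete. The following is a minimal sketch; the mapping of methods to categories is illustrative (the PIN entry in particular is an assumption added for the example). It treats a login as two-factor only when the methods presented span two different categories.

```python
# Factor categories named in the text; the method-to-category mapping is illustrative.
FACTOR_CATEGORY = {
    "password": "know",
    "pin": "know",          # assumption: added for the example
    "token": "have",
    "smart card": "have",
    "biometric": "are",
}


def is_two_factor(methods):
    """True when the presented methods span at least two distinct factor categories."""
    categories = {FACTOR_CATEGORY[method] for method in methods}
    return len(categories) >= 2


print(is_two_factor(["password", "token"]))  # True: "know" plus "have"
print(is_two_factor(["password", "pin"]))    # False: both are "know"
```

Two secrets of the same kind do not qualify, which is why the check counts categories rather than methods.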

Once an e-commerce application has been identified, the team must identify the security issues with that specific application and the necessary security services. Not all of the services will be relevant, but using a complete list and excluding those that are not required will assure a comprehensive assessment of requirements, with appropriate security built into the system's development. In fact, management can reconcile the services accepted with their level of risk acceptance.
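One way to work from a complete list and exclude what is not required is to record an explicit decision for every service. The sketch below assumes a hypothetical `decisions` mapping in which `True` means the service is required and a string gives the reason for excluding it; any service without an entry is reported as undecided, so nothing is dropped silently.

```python
SECURITY_SERVICES = [
    "Policy and procedures",
    "Confidentiality and encryption",
    "Authentication and identification",
    "Authorization",
    "Authenticity",
    "Monitoring and audit",
    "Access controls and intrusion detection",
    "Trusted communication",
    "Antivirus",
    "System integrity controls",
    "Data retention and disposal",
    "Data classification",
]


def review(decisions):
    """Split the full list into required, excluded (with reason), and undecided services."""
    required, excluded, undecided = [], {}, []
    for service in SECURITY_SERVICES:
        decision = decisions.get(service)
        if decision is None:
            undecided.append(service)
        elif decision is True:
            required.append(service)
        else:
            excluded[service] = decision  # the recorded reason for exclusion
    return required, excluded, undecided


# Invented decisions for illustration only.
decisions = {
    "Trusted communication": True,
    "Authentication and identification": True,
    "Monitoring and audit": True,
    "Access controls and intrusion detection": True,
    "Antivirus": "hypothetical reason: covered by an existing enterprise service",
}

required, excluded, undecided = review(decisions)
print(len(required), "required;", len(excluded), "excluded;", len(undecided), "undecided")
```

Keeping the exclusion reasons alongside the required services gives management a record against which to reconcile the accepted level of risk.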

30.2.3 Step 3: Select Security Service Options Based on Requirements.

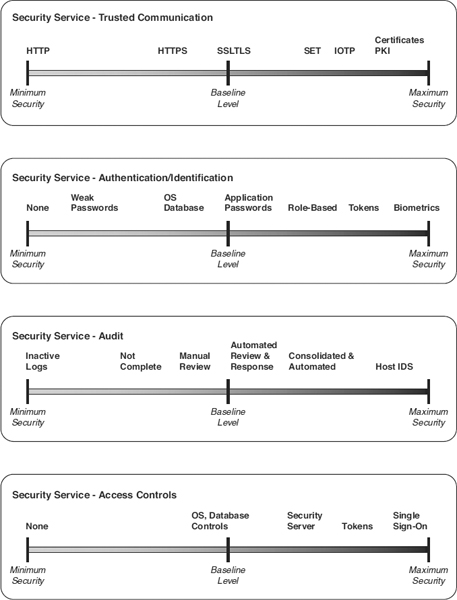

The third step uses classical cost-benefit and risk management analysis techniques to make a final selection of security service options. However, we recommend that all options identified in Step 2 be distributed along a continuum, such as shown in Exhibit 30.2, so that they can be viewed together and compared.

Gauging and comparing the level of security for each security service and the data within the transaction will facilitate the decision process. Feasible alternatives can then be identified and the best solution selected based on the requirements. The most significant element to consider is the relative reduction in risk of each option, compared with the other alternatives. The cost-benefit analysis is based on the risk versus reward issues. The effectiveness information is very useful in a cost-benefit model.

Four additional concepts drive the security service option selection:

- Implementation risk, or feasibility

- Cost to implement and support

- Effectiveness in increasing control, thereby reducing risk

- Data classification

Implementation risk considers the feasibility of implementing the security service option. Some security systems are difficult to implement due to factors such as product maturity, scalability, complexity, and supportability. Other factors to consider include skills available, legal issues, integration required, capabilities, prior experience, and limitations of the technology.

Cost to implement and support measures the costs of hardware and software implementation, support, and administration. Consideration of administration issues is especially critical because high-level support of the security service is vital to an organization's success.

Effectiveness measures the reduction of risk proposed by a security service option once it is in production. Risk can be defined as the impact and likelihood of a negative event occurring after mitigating strategies have been implemented. An example of a negative event is the theft of credit card numbers from a business's database. Such an event causes not only possible losses to consumers but also negative public relations that may impact future business. Effective security service options reduce the risk of a negative event occurring.

EXHIBIT 30.2 Continuum of Options

Data classification measures the sensitivity and confidentiality of the information being processed. Data must be classified and protected from misuse, disclosure, theft, or destruction, regardless of storage format, throughout their life (from creation through destruction). Usually the originator of the information is considered to be the owner of the information and is responsible for classification, identification, and labeling. The more sensitive and confidential the information, the more information security measures will be required to safeguard and protect it.
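The selection concepts above can be combined in a simple comparison. The sketch below is an assumption-laden illustration, not a prescribed model: it expresses risk as impact multiplied by likelihood, treats effectiveness as the reduction in that figure, drops options judged infeasible, and ranks the rest by risk reduction minus cost. All option names and numbers are invented; in practice the impact figure would follow from the data classification.

```python
from dataclasses import dataclass


@dataclass
class Option:
    name: str
    annual_cost: float          # cost to implement and support, per year
    residual_likelihood: float  # estimated yearly probability of the event with the option in place
    feasible: bool = True       # implementation risk judged acceptable


def expected_loss(impact, likelihood):
    """Risk expressed as impact multiplied by likelihood."""
    return impact * likelihood


def rank(options, impact, baseline_likelihood):
    """Rank feasible options by net benefit: risk reduction minus cost."""
    baseline = expected_loss(impact, baseline_likelihood)
    scored = []
    for option in options:
        if not option.feasible:
            continue
        reduction = baseline - expected_loss(impact, option.residual_likelihood)
        scored.append((reduction - option.annual_cost, option.name))
    return sorted(scored, reverse=True)


# Invented figures for illustration only.
options = [
    Option("default vendor logging", 5_000, 0.18),
    Option("centralized log review", 40_000, 0.05),
    Option("host-based IDS", 120_000, 0.02),
    Option("custom hardware appliance", 60_000, 0.01, feasible=False),
]

ranking = rank(options, impact=1_000_000, baseline_likelihood=0.20)
for net, name in ranking:
    print(f"{name}: net annual benefit {net:,.0f}")
```

With these invented numbers the most effective option is not the one ranked first, which illustrates why relative risk reduction has to be weighed against cost rather than considered alone.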

30.2.4 Step 4: Ensure Ongoing Attention to Changes in Technologies and Requirements.

The only constant in this analysis is the need to evolve and to address ever-increasing threats and technologies. Whatever security approaches the preceding steps identify, they must always be considered in the context of the continuing need to update the selected approaches. Changes will be inevitable, whether they arrive from compliance, regulation, technological advances, or new threats.

30.2.5 Using the Security Services Framework.

The next two sections are examples to demonstrate the power of the security services methodology. The first example is a B2C model; the business could be any direct-selling application. The second example is a B2B model. Both businesses take advantage of the Internet to improve their product value chain. The examples are a short demonstration of the security services methodology, neither complete nor representative of any particular application or business.

30.2.5.1 Business-to-Customer Security Services.

The B2C company desires to contact customers directly through the Internet, and allow them to enter their information into the application. These assumptions are made to prepare this B2C system example:

- Internet-facing business

- Major transactions supported

- External customer-based system, specifically excluding support, administration, and operations

- Business-critical application

- Highly sensitive data classification

- Three-tiered architecture

- Untrusted clients, because anyone on the Internet can be a customer

Five layers must be secured:

- The presentation layer is the customer interface, what the client sees or hears using the Web device. The client is the customer's untrusted PC or other device. The security requirements at this level are minimal because the company will normally not dictate the security of the customer. The identification and authentication done at the presentation level are those controls associated with access to the device. The proliferation of appliances in the client space (e.g., traditional PCs, thin desktops, and PDAs) makes it difficult to establish a uniform access control procedure at this level. Although this layer is the terminal endpoint of the secure connection, it is not inherently trustworthy, as has been illustrated by all too many incidents involving public computers in cafes, hotels, and other establishments.

- The network layer is the communication connection between the business and the customer. The client, or customer, uses an Internet connection to access the B2C Web applications. The security requirements are minimal, but sensitive and confidential traffic will need to be encrypted.

- The middle layer is the Web server that connects to the client's browser and can forward and receive information. The Web server supports the application by being an intermediary between the business and the customer. The Web server needs to be very secure. Theft, tampering, and fraudulent use of information need to be prevented. Denial of service and Web site defacement are also common risks that need to be prevented in the middle layer.

- The application layer is where the information is processed. The application serves as an intermediary between the customer requests and the fulfillment systems internal to the business. In some examples, the application server and database server are the same because both the application and database reside on the same server. However, they could reside within the Web server in other cases.

- The internal layer comprises the business's legacy systems and databases that support customer servicing. Back-end servers house the supporting applications, including order processing, accounts receivable, inventory, distribution, and other systems.

For each of these five levels, we need four security services:

- Trusted communications

- Authentication/identification

- Audit

- Access controls

Step 1: Define Information Security Concerns Specific to the Application. Defining security issues will be particular to the system being implemented. To understand the risk of the system, it is best to start with the business risk, then define risk at each element of the architecture.

Business Risk

- The application needs high availability, because customers will demand contact at off-hours and on weekends.

- The Web pages need to be secure from tampering and cybervandalism, because the business is concerned about the loss of customer confidence as a result of negative publicity.

- Customers must be satisfied with the level of service.

- The system will process customer credit card information and will be subject to the privacy regulations of the Gramm-Leach-Bliley Act, 15 USC §§ 6801–09.

Technology Concerns

Of the five architectural layers in this example, four will need to be secured:

- The presentation layer will not be secured or trusted. Communications between the client and the Web server will be encrypted at the network layer.

- The network layer will need to filter unwanted traffic, to prevent denial of service (DoS) attacks and to monitor for possible intrusions.

- The middle layer will need to prevent unauthorized access, be tamper-proof, contain effective monitoring, and support efficient and effective processing.

- The application layer will need to prevent unauthorized access, support timely processing of transactions, provide effective audit trails, and process confidential information.

- The internal layer will need to prevent unauthorized access, especially through Internet connections, and to protect confidential information during transmission, processing, and storage.

Step 2: Develop Security Services Options. The four security services reviewed in this example are the most critical in an e-commerce environment. Other services such as nonrepudiation and data classification are important but not included in order to simplify the example. Services selected are:

- Trusted communication

- Authentication and identification

- Monitoring and auditing

- Access control

Many security services options are available for the B2C case, with more products and protocols on the horizon. There are five architectural layers for each of the services defined in Step 1.

- Presentation layer. Several different options can be selected for trusted communication. Hypertext Transfer Protocol (HTTP) is the most common, with Secure Sockets Layer (SSL) certificates in a PKI, or digital signatures, being less common, and WAP for wireless communications and Transport Layer Security (TLS) for encrypted Internet communications being the rarest. Because the client is untrusted, the client's authentication, audit, or access control methods cannot be relied on.

- Network layer. Virtual private networks (VPNs) are considered best practice for secure network layer communication. Firewalls are effective devices for securing network communication. The client may have a personal firewall. If the client is in a large corporation, there is a significant likelihood that a firewall will intervene in communications. If the client is using a home or laptop computer, then a personal firewall may protect traffic. There will also be a firewall on the B2C company side of the Internet.

- Middle layer. The Web server and the application server security requirements are significant. Unauthorized access to the Web server can result in Web site defacement by hackers who change the Web data. More important, access to the Web or application server can lead to theft, manipulation, or deletion of customer or proprietary data. Communication between the Web server and the client needs to be secure in e-commerce transactions. HTTP is the most common form of browser communication. In 2000, it was reported that over 33 percent of credit card transactions were using unsecured HTTP.3 In 2007, VISA reported that compliance with the Payment Card Industry Data Security Standard (PCI DSS) had improved to 77 percent of the largest merchants in the United States,4 still far from universal. This lack of encryption is a violation of both the merchant's agreement and the PCI DSS, but episodes continue to occur. In the same vein, it is not unusual for organizations to misuse encryption, whether it involves self-signed, expired, or not generally recognized certificates. (See Chapter 37 in this Handbook.) SSL and HTTPS are the most common secure protocols, but the encryption key length and contents are critical: The larger the key, the more secure the transaction is from brute-force decryption. (See Chapter 7.) Digital certificates in a PKI and digital signatures are not as common, but they are more effective forms of security.

- Application layer. The application server provides the main processing for the Web server. This layer may include transaction processing and database applications; it needs to be secure to prevent erroneous or fraudulent processing. Depending on the sensitivity of the information processed, the data may need to be encrypted. Interception and the unauthorized manipulation of data are the greatest risks in the application layer.

- Internal layer. In a typical example, the internal layer is secured by a firewall protecting the external-facing system. This firewall helps the B2C company protect itself from Internet intrusions. Database and operating system passwords and access control measures are required.
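The remark in the middle-layer item that larger keys resist brute-force decryption can be checked with simple arithmetic. The sketch below assumes a symmetric key in which every bit pattern is a valid key; the key lengths shown are illustrative choices, not recommendations from this chapter.

```python
def keyspace(bits):
    """Number of possible keys for a key of the given length in bits."""
    return 2 ** bits


def average_guesses(bits):
    """Expected brute-force guesses: on average half the keyspace is searched."""
    return keyspace(bits) // 2


for bits in (40, 56, 128):
    print(f"{bits}-bit key: about {average_guesses(bits):.3e} guesses on average")

# Each added bit doubles the work, so a 128-bit keyspace is
# 2**88 times the size of a 40-bit keyspace.
print(keyspace(128) // keyspace(40) == 2 ** 88)
```

The growth is exponential in key length, which is why a modest increase in bits changes the attacker's cost far more than it changes the defender's.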

Management can decide which security capability is required and at what level. This format can be repeated to discuss security services at all levels and all systems, not just e-commerce-related systems.
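As one concrete reading of the trusted communication options above, a client that connects to the Web server can be configured to reject the certificate problems mentioned earlier (self-signed, expired, or not generally recognized certificates). The sketch below uses Python's standard `ssl` module; the choice of TLS 1.2 as a minimum version is an assumption made for the example, not a requirement stated in this chapter.

```python
import ssl


def strict_client_context():
    """Build a client-side context that validates the server certificate."""
    # The default context loads the system's trusted certificate authorities
    # and turns on certificate and host name verification.
    context = ssl.create_default_context()
    # Assumption for this sketch: refuse protocol versions older than TLS 1.2.
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context


ctx = strict_client_context()
print(ctx.verify_mode == ssl.CERT_REQUIRED, ctx.check_hostname)
```

A connection made with this context fails during the handshake if the server presents a certificate that does not chain to a trusted authority, has expired, or does not match the host name, rather than silently continuing.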

Step 3: Select Security Service Options Based on Requirements. In Step 3 the B2C company can analyze and select the security services that best meet its legal, business, and information security requirements. There are four stages required for this analysis:

- Implementation risk, or feasibility

- Cost to implement and support

- Effectiveness in increasing control, thereby reducing risk

- Data classification

Implementation risk is a function of the organization's ability to effectively roll out the technology. In this example, we assume that implementation risk is low and resources are readily available to implement the technology.

Costs to implement and support are paramount to the decision-making process. Both costs need to be considered together. Unfortunately, the cost to support is difficult to quantify and easily overlooked. In this example, resources are available to both implement and support the technology.

Effectiveness in increasing control is an integral part of the benefit and risk management decisions. Each component needs to be considered in order to determine cross-benefits where controls overlap and supplement other controls. In this case, the control increase is understood and supported by management.

Data classification is the foundation for requirements. It will help drive the cost-benefit discussions because it captures the value of the information to the underlying business. In this example, the data are considered significant enough to warrant additional security measures to safeguard the data against misuse, theft, and loss.

There are many technology decisions required to secure the example environment. Management can use this approach to plot the security levels required by the system. For example, for system audit services, in order of minimal service to maximum:

- The minimal level of security is to have systems with a limited number of system events logged. For example, the default level of logging from the manufacturer is used but does not contain all of the required information. The logs are not reviewed but are available for forensics in the event of a security incident.

- A higher level of security is afforded with a log that records more activities based on requirements and not, for example, the manufacturer's default level. As in the minimal level of security, the activities are logged and available to support forensics but are not reviewed. In this case, more types of information are recorded in the system log, but it still may not contain all that is required.

- A sufficient log is kept on each server and is manually monitored for anomalies and potential security events.

- The log is automatically reviewed by software on each server.

- System logs are consolidated onto a centralized security server. Data from the system logs are transmitted to the centralized security server, and software is then used to scan the logs for specific events that require attention. Events such as attempts to gain escalated privileges to root or administrative access can be flagged for manual review.

- The maximum service level is a host-based intrusion detection system (IDS) used to scan the system logs for anomalies and possible security events. Once detected, action needs to be taken to resolve the intrusion. The procedure should include processes such as notification, escalation, and automated defensive response.
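The centralized level in the list above, where consolidated logs are scanned for events such as attempts to gain root or administrative access, can be sketched as follows. The log lines and the two patterns are invented for illustration; real log formats differ by operating system and product, and a production scanner would need patterns matched to them.

```python
import re

# Hypothetical patterns; real log formats differ by operating system and product.
ESCALATION_PATTERNS = [
    re.compile(r"sudo: .*authentication failure", re.IGNORECASE),
    re.compile(r"su: .*FAILED", re.IGNORECASE),
]


def flag_for_review(consolidated_logs):
    """Yield (host, line) pairs that match a privilege-escalation pattern.

    consolidated_logs maps a host name to the list of log lines collected from it.
    """
    for host, lines in consolidated_logs.items():
        for line in lines:
            if any(pattern.search(line) for pattern in ESCALATION_PATTERNS):
                yield host, line


# Invented sample data standing in for logs sent to the central security server.
sample = {
    "web01": [
        "sshd: accepted login for deploy",
        "sudo: pam_unix(sudo:auth): authentication failure; user=deploy",
    ],
    "db01": [
        "su: FAILED su for root by batch",
        "cron: job finished",
    ],
}

flagged = list(flag_for_review(sample))
for host, line in flagged:
    print(f"[manual review] {host}: {line}")
```

Pattern matching of this kind only flags known event types for manual review; the anomaly detection and automated response described for the maximum service level go beyond what this sketch does.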

30.2.5.2 Business-to-Business Security Services.

The second case study uses the security services framework in a B2B example. Following is a theoretical discussion of how the framework can be applied to B2B e-commerce security. These assumptions may be made in this B2B system example:

- Internet-facing.

- Supports major transactions.

- Descriptions will be external and customer based (excluding support, administration, and operations security services).

- Trusted communication is required.

- Three-tier architecture.

- Untrusted client.

- Business-critical application.

- Data are classified as highly sensitive.

There are five layers in this example that need to be secured:

- The presentation layer is the customer interface and is what the client sees or hears using the Web device. The client is the customer's untrusted PC, but more security constraints can be applied because the business can dictate enhanced security. As noted previously, the security of the presentation layer is complicated by the wide range of potential client devices that may be employed.

- The application layer is where the information is processed. The application serves as an intermediary between the business customer's requests and the fulfillment systems internal to the business (the back-end server). The application server is the supporting server and database.

- The customer internal layer is the interface between the application server supporting the system at the customer's business location, and the customer's own internal legacy applications and systems.

- The network layer is the communication connection between the business and another business. The Internet is used to connect the two businesses. Sensitive and confidential traffic will need to be encrypted. Best practice is to have the traffic further secured using a firewall.

- The internal layer is the business's legacy systems that support customer servicing. The back-end server houses the supporting systems, including order processing, accounts receivable, inventory, distribution, and other systems.

The four security services are:

- Trusted communications

- Authentication/identification

- Audit

- Access controls

Step 1: Define Information Security Concerns Specific to the Application. Defining security issues will be particular to the system being implemented. To understand the risk of the system, it is best to start with the business risk, then define risk at each element of the architecture.

Business Risk

- Communication between application servers needs to be very secure. Data must not be tampered with, stolen, or misrouted.

- Availability is critical during normal business hours.

- Cost savings realized by switching from electronic data interchange (EDI) to the Internet are substantial, and will more than cover the costs of the system.

Technology Concerns. There are six architectural layers in this example, five of which need to be secured:

- The presentation layer will not be secured or trusted. The communication between the client and the customer application is trusted because it uses the customer's private network.

- The application server will need to be secure. Traffic between the two application servers will need to be encrypted. The application server is inside the customer's network and demonstrates a potentially high, and perhaps unnecessary, degree of trust between the two companies (see § 30.6.5).

- The customer's internal layer will be secured by the customer.

- The network layer needs to filter out traffic that is not required, prevent DoS attacks, and monitor for possible intrusions. Two firewalls are shown: one to protect the client and the other to protect the B2B company.

- The application layer will need to prevent unauthorized access, support timely processing of transactions, provide effective audit trails, and process confidential information.

- The internal layer will need to prevent unauthorized access (especially through Internet connections) and protect confidential information during transmission, processing, and storage.

Step 2: Develop Security Services Options. There are four security services reviewed in this example. Others could have been included, such as authenticity, nonrepudiation, and confidentiality, but they have been excluded to simplify this example. Elected security services include:

- Trusted communication

- Authentication/identification

- Audit

- Access control

Many security services options are available for B2B environments.

- Presentation layer. Several different options can be selected for communication. HTTP is the most common. The communications between the presentation layer residing on the client device and the application server, in this example, are internal to the customer's trusted internal network and will be secured by the customer.

- Application layer. Communication between the two application servers needs to be secure. The easiest and most secure method of peer-to-peer communication is via a VPN.

- Customer internal. Communications between the customer's application server and the customer's back-end server are internal to the customer's trusted internal network and will be secured by the customer.

- Network layer. It is common in a B2B environment that a trusted network is created via a VPN. The firewalls will probably participate in these communications, but hardware solutions are also possible.

- Application layer. The application server is at both the customer and B2B company sites. VPN is the most secure communication method. The application server also needs to communicate with the internal layer, and this traffic should be encrypted as well.

- Internal layer. The internal layer may be secured with another firewall from the external-facing system. This firewall helps the B2B company to protect itself from intrusions and unauthorized access. In this example, a firewall is not assumed so the external firewall and DMZ need to be very secure.
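The requirement that traffic between the two application servers be encrypted and mutually trusted can be illustrated in code. The options above name a VPN; the sketch below substitutes mutually authenticated TLS, a different technique, purely because it is easy to show with Python's standard `ssl` module. The function name and its file-path parameters are hypothetical.

```python
import ssl


def build_server_context(certfile=None, keyfile=None, partner_ca=None):
    """Build a server-side TLS context that requires a client certificate.

    certfile/keyfile: this server's certificate and private key (hypothetical paths).
    partner_ca: CA bundle used to verify the partner's application server.
    """
    ctx = ssl.create_default_context(ssl.Purpose.CLIENT_AUTH)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2  # refuse older protocol versions
    ctx.verify_mode = ssl.CERT_REQUIRED           # the peer must present a certificate
    if certfile:
        ctx.load_cert_chain(certfile=certfile, keyfile=keyfile)
    if partner_ca:
        # Trust only the partner's CA rather than the system-wide trust store.
        ctx.load_verify_locations(cafile=partner_ca)
    return ctx


if __name__ == "__main__":
    context = build_server_context()
    print(context.verify_mode, context.minimum_version)
```

Whichever mechanism is chosen, both companies have to coordinate certificates or keys, which is part of the interoperability effort discussed under Step 3.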

Intrusion detection, log reading, and other devices can easily be added and discussed with management. This format can be repeated to discuss security services at all levels and all systems, not just e-commerce-related systems.

Step 3: Select Security Service Options Based on Requirements. In Step 3, the B2B company can analyze and select the security services that best meet its legal, business, and information security requirements. The biggest difference between B2C and B2B systems is that the B2C system assumes no level of trust. The B2B system assumes trust, but additional coordination and interface with the B2B customer or partner is required. This coordination and interoperability must not be underestimated, because they may prove difficult and expensive to resolve. There are four stages required for this analysis:

- Implementation risk, or feasibility

- Cost to implement and support

- Effectiveness in increasing control, thereby reducing risk

- Data classification

Implementation risk is a function of the organization's ability to effectively roll out the technology. In this example, we assume that implementation risk is low and resources are readily available to implement the technology.

The costs to implement and support are paramount to the decision-making process. Both businesses' costs need to be considered. Unfortunately, the cost to support is difficult to quantify and easily overlooked. In this example, resources are available both to implement and to support the technology.

Effectiveness in increasing control is an integral part of the benefit and risk management analysis. Each security component needs to be considered in order to determine cross-benefits where controls overlap and supplement others. In this example, increased levels of control are understood and supported by management.

Data classification is the foundation for requirements and will help drive the cost-benefit discussions because it captures the value of the information to the underlying business. In this example, the data are considered significant enough to warrant additional security measures to safeguard the data against misuse, theft, and loss.

Each security service can be defined along a continuum, with implementation risk, cost, and data classification all considered. Management can use this chart to plot the security levels required by the system. This example outlines the effectiveness of security services options relative to other protocols or products. Each organization should develop its own continuums and provide guidance to Web developers and application programmers as to the correct uses and standard settings of the security services. For example, for authentication/identification services, in order of minimal service to maximum:

- The minimal level of security is to have no passwords.

- Weak passwords (e.g., easy to guess, shared, poor construction) are better than no passwords but still provide only a minimal level of security.

- Operating system or database level passwords usually allow too much access to the system but can be effectively managed.

- Application passwords are difficult to manage but can be used to restrict data access to a greater degree.

- Role-based access distinguishes users by their need to know to support their job function. Roles are established and users are grouped by their required function.

- Tokens are given to users and provide for two-factor authentication. Passwords and tokens are combined for strong authentication.

- Biometrics are a means of validating the person claiming to be the user via fingerprints, retina scans, or another unique physical characteristic.
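One way an organization might hand such a continuum to developers is as an ordered scale that can be compared against a policy. The sketch below is illustrative only: the level names paraphrase the list above, and the mapping from data classification to minimum level is an invented policy, not one stated in this chapter.

```python
from enum import IntEnum


class AuthLevel(IntEnum):
    """Authentication/identification continuum, from minimal to maximum service."""
    NO_PASSWORD = 0
    WEAK_PASSWORD = 1
    OS_OR_DB_PASSWORD = 2
    APPLICATION_PASSWORD = 3
    ROLE_BASED_ACCESS = 4
    TOKEN_PLUS_PASSWORD = 5
    BIOMETRIC = 6


# Hypothetical policy: minimum acceptable level for each data classification.
MINIMUM_LEVEL = {
    "public": AuthLevel.NO_PASSWORD,
    "internal": AuthLevel.APPLICATION_PASSWORD,
    "highly sensitive": AuthLevel.TOKEN_PLUS_PASSWORD,
}


def meets_policy(selected, classification):
    """Return True if the selected level satisfies the policy for the data."""
    return selected >= MINIMUM_LEVEL[classification]


if __name__ == "__main__":
    print(meets_policy(AuthLevel.ROLE_BASED_ACCESS, "highly sensitive"))
    print(meets_policy(AuthLevel.BIOMETRIC, "highly sensitive"))
```

Recording the selection this way also documents which levels were not chosen, so that the associated risk can be put to management for acceptance.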

For more information on identification and authentication, see Chapters 28 and 29 in this Handbook.

30.2.6 Framework Conclusion.

Internet e-commerce has changed the way corporations conduct business with their customers, vendors, suppliers, and business units. The B2B and B2C sectors will likely continue to grow. Despite security concerns, the acceleration toward increased use of the Internet as a sales, logistics, and marketing channel continues. The challenge for information security professionals is to keep pace with this change from a security perspective, but not to impede progress. An equal challenge is that the products that secure the Internet are new and not fully functional or mature. The products will improve, but meanwhile, existing products must be implemented, and later retrofitted, with improved and more secure security services. This changing environment, including the introduction of ever more serious and sophisticated threats, will remain difficult to secure.

The processes described in this section will allow the security practitioner to provide business units with a powerful tool to communicate, select, and implement information security services. Three steps were described and demonstrated with two examples. The process supports decision making. Decisions can be made and readily documented to demonstrate cost effectiveness of the security selections. The risk of specific decisions can be discussed and accepted by management. The trade-offs between cost and benefit can be calculated and discussed. Therefore, it becomes critical that alternatives be reviewed and good decisions made. The processes supporting these decisions need to be efficient and quickly applied. The information security services approach will allow companies to implement security at a practical pace. Services not selected are easily seen. The risk of not selecting specific security services needs to be accepted by management.

30.3 RULES OF ENGAGEMENT.

The Web is a rapidly evolving, complex environment. Dealing with customers electronically is a challenge. Web-related security matters raise many sensitive security issues. Attacks against a Web site always need to be taken seriously. Correctly differentiating “false alarms” from real attacks continues to present a challenge. As an example, the Victoria's Secret online lingerie show, in February 1999, exceeded even the most optimistic expectations of its creators, and the volume of visitors caused severe problems. Obviously, the thousands of people were not attacking the site; they were merely a virtual mob attempting to access the same site at the same time. Similar episodes have occurred when sites were described as interesting on Usenet newsgroups. This effect also occurs with social networking sites such as YouTube, Facebook, and others, where a virtual tidal wave of requests can occur without warning. Physical mobs are limited by transportation, timing, and costs; virtual mobs are limited solely by how many can attempt to access a resource simultaneously, from locations throughout the world.

30.3.1 Web Site–Specific Measures.

Protecting a Web site means ensuring that the site and its functions are available 24 hours a day, seven days a week, and 365 days a year. It also means ensuring that the information exchanged with the site is accurate and secure.

The preceding section focused on protecting Internet-visible systems, predominantly those systems used within the company to interact with the outside world. This section focuses on issues specific to Web interactions with customers as well as to supply and distribution chains. Practically speaking, the Web site is an important, if not the most important, component of an organization's interface with the outside world.

Web site protection lies at the intersection of technology, strategy, operations, customer relations, and business management. Web site availability and integrity directly affect the main streams of cash flow and commerce: an organization's customers, production chains, and supply chains. This is in contrast to the general Internet-related security issues examined in the preceding section, which primarily affect those inside the organization.

Availability is the cornerstone of all Web-related strategies. Idle times have become progressively rarer. Depending on the business and its markets, there may be some periods of lower activity. In the financial trading community, there remain only a few small windows during a 24-hour period when updates and maintenance can be performed. As global business becomes the norm, customers, suppliers, and distributors increasingly expect information, and the ability to effect transactions, at any time of the day or night, even from modest-size enterprises. On the Internet, “nobody knows that you are a dog” also means “nobody knows that you are not a large company.” The playing field has indeed been leveled, but it was not uniformly raised or lowered: expectations have increased while capital and operating expenses have dropped dramatically.

Causation is unrelated to impact. The overwhelming majority of Web outages are caused by unglamorous problems. High-profile, deliberate attacks are much less frequent than equipment and personnel failures. The effect on the business organization is indistinguishable. Having a low profile is no defense against random scanning attacks.

External events and their repercussions can also wreak havoc, both directly and indirectly. The September 11, 2001, terrorist attacks that destroyed New York City's World Trade Center complex had worldwide impact, not only on systems and firms located in the destroyed complex. Telecommunications infrastructure was damaged or destroyed, severing Internet links for many organizations. Parts supply and all travel were disrupted when North American airspace was shut down. Manhattan was sealed to exits and entries, while within the city itself, and throughout much of the world, normal operations were suspended. The September 11 attacks were extraordinarily disruptive, but security precautions similar to those described throughout this Handbook served to ameliorate damage to Web operations and other infrastructure elements of those concerns that had implemented them. Indeed, the existence of the Web and the resulting ability to organize groups without physical presence proved a means to ameliorate the damage from the attacks, even to firms that had a major presence in the World Trade Center. In the period following the attacks on the World Trade Center, Morgan Stanley and other firms that had offices in the affected area implemented extensive telecommuting and Web-based interactions first to account for their staffs5 and then to enable work to continue.6

Best practices and scale are important. Some practices, issues, and concerns at first glance appear relevant only to very large organizations, such as Fortune 500 companies. In fact, this is not so. Considering issues in the context of a large organization permits them to appear magnified and in full detail. Smaller organizations are subject to the same issues and concerns but may be able to implement less formal solutions. “Formal” does not necessarily imply written procedures. It may mean that certain computer-related practices, such as modifying production facilities in place, are inherently poor ideas and should be avoided. Very large enterprises might address the problems by having a separate group, with separate equipment, responsible for operating the development environment.

30.3.2 Defining Attacks.

Repeated, multiple attempts to connect to a server could be ominous, or they could be nothing more than a customer with a technical problem. Depending on the source, large numbers of failed connects or aborted operations coming from gateway nodes belonging to an organization could represent a problem somewhere in the network, an attack against the server, or anything in between. It could also represent something no more ominous than a group of users within a locality accessing a Web resource through a firewall.
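Because the same pattern of failed connects can have several explanations, a useful first step is simply to summarize the evidence by source before drawing conclusions. The following sketch assumes connection events are available as `(source_address, succeeded)` pairs; the event format, the threshold, and the documentation-range addresses in the usage example are all assumptions for illustration.

```python
from collections import Counter


def summarize_failed_connects(events, min_failures=20):
    """Summarize failed connection attempts per source address.

    events: iterable of (source_address, succeeded) tuples (assumed format).
    Returns a dict keyed by source for sources with at least `min_failures`
    failures. The result is input for human judgment, not a verdict: a busy
    firewall or gateway address may simply front many legitimate users.
    """
    attempts = Counter()
    failures = Counter()
    for source, succeeded in events:
        attempts[source] += 1
        if not succeeded:
            failures[source] += 1

    report = {}
    for source, failed in failures.items():
        if failed >= min_failures:
            report[source] = {
                "failed": failed,
                "attempts": attempts[source],
                "failure_rate": failed / attempts[source],
            }
    return report


if __name__ == "__main__":
    sample = (
        [("203.0.113.7", False)] * 25
        + [("203.0.113.7", True)] * 75
        + [("198.51.100.9", False)] * 3
    )
    print(summarize_failed_connects(sample))
```

A source with many failures but a low failure rate looks more like a shared gateway with a few troubled users than like an attack; a source with nothing but failures deserves a closer look.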

30.3.3 Defining Protection.

There is a difference between protecting Internet-visible assets and protecting Web sites. For the most part, Internet-visible assets are not intended for public use. Thus, it is often far easier to anticipate usage volumes and to account for traffic patterns. With Web sites, activity is subject to the vagaries of the worldwide public. A dramatic surge in traffic could be an attack, or it could be an unexpected display of the site's URL in a television program or in a relatively unrelated news story. Differentiating between belligerence and popularity is difficult.

Self-protective measures that do not impact customers are always permissible. However, care must be exercised to ensure that the measures are truly impact free. As an example, some sites, particularly public FTP servers, often require that the Internet protocol (IP) address of the requesting computer have an entry in the inverse domain name system, which maps IP addresses to host names (e.g., node 192.168.0.1 has a PTR [pointer record] 1.0.168.192.in-addr.arpa) (RFC1034, RFC1035; Mockapetris 1987a, 1987b) as opposed to the more widely known domain name system database, which maps host names into IP addresses. It is true that many machines do have such entries, but it is also true that many sites, including company networks, do not provide inverse DNS information. Whether this entire population should be excluded from the site is a policy and management decision, not a purely technical decision. Even a minuscule incident rate on a popular WWW site can be catastrophic, both for the provider and for the naive end user who has no power to resolve the situation.
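The inverse-DNS name in the example above can be derived mechanically, and the check that some servers impose can be approximated with a reverse lookup. The sketch below uses only the Python standard library; the function names are hypothetical, and the lookup result depends entirely on the resolver and network in use.

```python
import ipaddress
import socket


def ptr_name(address):
    """Return the inverse-DNS name under which a PTR record would be stored."""
    return ipaddress.ip_address(address).reverse_pointer


def has_reverse_dns(address):
    """Return True if a reverse lookup for the address succeeds.

    Many legitimate hosts have no PTR record, so a False result should not be
    read as evidence of hostile intent.
    """
    try:
        socket.gethostbyaddr(address)
        return True
    except OSError:  # covers socket.herror and socket.gaierror
        return False


if __name__ == "__main__":
    print(ptr_name("192.168.0.1"))  # 1.0.168.192.in-addr.arpa
```

Whether a site acts on the result of `has_reverse_dns` remains the policy question raised above; the code only makes the check itself concrete.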

30.3.4 Maintaining Privacy.

Logging interactions between customers and the Web site is also a serious issue. A Web site's privacy policy is again a managerial, legal, and customer relations issue with serious overtones. Technical staff needs to be conscious that policies, laws, and other issues may dictate what information may be logged, where it can be stored, and how it may be used. For example, the 1998 Children's Online Privacy Protection Act (COPPA) (15 U.S.C. § 6501 et seq.) makes it illegal to obtain name and address information from children under the age of 13 in the United States. Many firms are party to agreements with third-party organizations such as TRUSTe,7 governing the use and disclosure of personal information. For more information on legal aspects of protecting privacy, see Chapter 69 in this Handbook.

30.3.5 Working with Law Enforcement.

Dealing with legal authorities is similarly complicated. Attempts at fraudulent purchases and other similar issues can be addressed using virtually the same procedures that are used with conventional attempts at mail or phone order fraud. Dealing with attacks and similar misuses is more complicated and depends on the organization's policies and procedures, and the legal environment. The status of the Web site is also a significant issue. If the server is located at a hosting facility, or is owned and operated by a third party, the situation becomes even more legally complicated. Involving law enforcement in a situation will likely require that investigators have access to the Web servers and supporting network, which may be difficult. Last, there is a question of what information is logged, and under what circumstances. For more information on working with law enforcement, see Chapter 61 in this Handbook.

30.3.6 Accepting Losses.

No security scheme is foolproof. Incidents will happen. Some reassurance can be taken from the fact that the most common reasons for system compromises in 2001 appear to remain the same as when Clifford Stoll wrote The Cuckoo's Egg in 1989. Then and now, poorly secured systems have:

- Obvious passwords into management accounts

- Unprotected system files

- Unpatched known security holes

However, eliminating the simplest and most common ways in which outsiders can compromise Web sites does not resolve all problems. The increasing complexity of site content, and of the applications code supporting dynamic sites, means that there is an ongoing design, implementation, testing, and quality assurance challenge. Server-based and server-distributed software (e.g., dynamic www sites) is subject to the same development hazards as other forms of software. Security hazards will slip into a Web site, despite the best efforts of developers and testers. The acceptance of this reality is an important part of the planning necessary to deal with the inevitable incidents. When it is suspected that a Web site, or an individual component, has been compromised, the reaction plans should be activated. The plans required are much the same as those discussed in Chapter 21 in this Handbook. The difference is that the reaction plan for a Web site has to take into consideration that the group primarily impacted by the plan will be the firm's customers. The primary goal of the reaction plan is to contain the damage. For more information on computer security incident response, see Chapter 56.

30.3.7 Avoiding Overreaction.

Severe reactions may create as much, if not more, damage than the actual attack. The reaction plan must identify the decision-making authority and the guidelines to allow effective decisions to be made. This is particularly true of major sites, where attacks are likely to occur on a regular basis. Methods to determine the point at which the Web site must be taken off-line to prevent further damage need to be determined in advance.

In summary, when protecting Web sites and customers, defensive actions are almost always permissible and offensive actions of any kind are almost always impermissible. Defensive actions that are transparent to the customer are best of all.

30.3.8 Appropriate Responses to Attacks.

Long before the advent of the computer, before the development of instant communications, international law recognized that firing upon a naval vessel was an act of war. Captains of naval vessels were given standing orders summarized as fire if fired upon. In areas without readily accessible police protection, the right of citizens to defend themselves is generally recognized by most legal authorities. Within the body of international law, such formal standards of conduct for military forces are known as rules of engagement, a concept with global utility.

In cyberspace, it is tempting to jettison the standards of the real world. It is easy to imagine oneself master of one's own piece of cyberspace, without connection to real-world laws and limitations on behavior. However, information technology (IT) personnel do not have the standing of ships' captains, with no communications to the outside world. Some argue that fire if fired upon is an acceptable standard for online behavior. Such an approach does not take into account the legal and ethical issues surrounding response strategies and tactics.

Any particular security incident has a range of potential responses. Which response is appropriate depends on the enterprise and its political, legal, and business environment. Acceptability of response is a management issue and, potentially, a political one as well. Determining what responses are acceptable in different situations requires input from management on policy, from legal counsel on legality, from public relations on public perceptions, and from technical staff on technical feasibility. Depending on the organization, it also may be necessary to involve unions and other parties in the negotiation of what constitutes appropriate responses.

What is acceptable or appropriate in one area is not necessarily acceptable or appropriate in another. Often the national security arena has lower standards of proof than would be acceptable in normal business litigation. In U.S. civil courts, cases are decided upon a preponderance of evidence. Standards of proof acceptable in civil litigation are not conclusive when a criminal case is being tried, where guilt must be established beyond a reasonable doubt.

Gambits or responses that are perfectly legal in a national security environment may be completely illegal and recklessly irresponsible in the private sector, exposing the organization to significant legal liability.

Rules of etiquette and behavior are similarly complex. The rights of prison inmates in the United States remain significant, even though they are subject to rules and regulations substantially more restrictive than for the general population. Security measures, as well, must be appropriate for the persons and situations to which they are applied.

30.3.9 Counter-Battery.

Some suggest that the correct response to a perceived attack is to implement the cyberspace equivalent of counter-battery, that is, targeting the artillery that has just fired upon you. However, counter-battery tactics, when used as a defensive measure against Internet attacks, will be perceived, technically and legally, as an attack like any other.

Counter-battery tactics may be emotionally satisfying but are prone to both error and collateral damage. Counter-battery can be effective only when the malefactor is correctly identified and the effects of the reciprocal attack are limited to the malefactor. If third parties are harmed in any way, then the retaliatory action becomes an attack in and of itself. One of the more celebrated counter-battery attacks gone awry was the 1994 case when two lawyers from Phoenix, Arizona, spammed over 5,000 Usenet newsgroups to give unsolicited information on a U.S. Immigration and Naturalization Service lottery for 55,000 green cards (immigration permits). The resulting retaliation—waves of e-mail protests—against the malefactors flooded their Internet service provider (ISP) and caused at least one server to crash, resulting in a denial of service to all the other, innocent, customers of the ISP.8

30.3.10 Hold Harmless.

Dealing with an Internet crisis often requires fast reactions. It is therefore critical that Internet policies adopt a hold harmless position: if employees act in good faith, in accordance with their responsibilities, and within documented procedures, management should never punish them for such action. If the procedures are wrong, managers should improve the rules and procedures, not blame the employees for following established policies. Disciplinary actions are manifestly inappropriate in such circumstances.

30.4 RISK ANALYSIS.

As noted earlier in this chapter, protecting an organization's Web sites depends on an accurate, rational assessment of the risks. Developing effective strategies and tactics to ensure site availability and integrity requires that all potential risks be examined in turn.

Unrestricted commercial activity has been permitted on the Internet since 1991. Since then, enterprises large and small have increasingly integrated Web access into their second-to-second operations. The risks inherent in a particular configuration and strategy are dependent on many factors, including the scale of the enterprise and the relative importance of the Web-based entity within the enterprise. Virtually all high-visibility Web sites (e.g., Yahoo, America Online, cnn.com, Amazon.com, and eBay) have experienced significant outages at various times.

The more significant the organization's Web component, the more critical are availability and integrity. Large, traditional firms with relatively small Web components can tolerate major interruptions with little damage. Firms large or small that rely on the Web for much of their business must pay greater attention to their Web presences, because a serious outage can quickly escalate into financial or public relations catastrophe.

For more details of risk analysis and management, see Chapters 62 and 63 in this Handbook.

30.4.1 Business Loss.

Business losses fall into several categories, any of which can occur in conjunction with an organization's Web presence. In the context of this chapter, customers are both outsiders accessing the Internet presence and insiders accessing intranet applications. In practice, insiders using intranet-hosted applications pose the same challenges as the outside users.

30.4.2 PR Image.

The Web site is the organization's public face 24/7/365. This ongoing presence is a benefit, making the firm visible at all times, but the site's high public profile also makes it a prime target.

Government sites in the United States and abroad have often been the targets of attacks. In January 2000, “Thomas,” the Web site of the U.S. Congress, was defaced. Earlier, in 1996, the Web site of the U.S. Department of Justice was vandalized. Sites belonging to the Japanese, U.K., and Mexican governments also have been vandalized.

These incidents have continued, with hackers on both sides of various issues attacking the other side's Web presence. While no conclusive evidence of the involvement of nation states in such activities has become generally known, such involvement is inevitable. Surges of such activity have coincided with major public events, including the 2001 U.S.-China incident involving an aerial collision between military aircraft, the Afghan and Iraqi operations, and the Israeli-Lebanese war of 2006. Such activity has not been limited to the national security arena, however. Many sites were defaced during the incidents following publication of cartoons in a Danish newspaper that some viewed as defaming the prophet Muhammad.

In some cases,9 companies have suffered collateral damage from hacking contests, where hackers prove their mettle by defacing as many sites as they can. The fact that there is no direct motive or animus toward the company is not relevant; the damage has still been done.

In the corporate world, company Web sites have been the target of attacks intended to defame the corporation for real or imagined slights. Some such episodes have been reported in the news media, whereas others have not been the subjects of extensive reporting. The scale and newsworthiness of the episode are unimportant; the damage done to the targeted organization is the true measure. An unreported incident that is the initiating event in a business failure is more damaging to the affected parties than a seemingly more significant outage with less severe consequences.

Other cybervandals (e.g., sadmind/IIS) have used address scanners to target randomly selected machines. Obscurity is not a defense against address-scanner attacks.

30.4.3 Loss of Customers/Business.

Internet customers are highly mobile. Web site problems quickly translate into permanently lost customers. The reason for the outage is immaterial; the fact that there is a problem is often sufficient to provoke erosion of customer loyalty.

In most areas, there is competitive overlap. Using the overnight shipping business as an example, in most U.S. metropolitan areas there are (in alphabetical order) Airborne Express, Federal Express, United Parcel Service, and the United States Postal Service. All of the firms offer Web-based shipment tracking, a highly popular service. Problems or difficulties with shipment tracking will quickly lead to a loss of business in favor of a different company with easier tracking.

30.4.4 Interruptions.

Increasingly, modern enterprises are being constructed around ubiquitous 24/7/365 information systems, most often with Web sites playing a major role. In this environment, interruptions of any kind are catastrophic.

Production. The past 20 years have seen a streamlining of production processes in all areas of endeavor. Twenty years ago, it was common for facilities to have multiday supplies of components on-hand in inventory. Today, zero latency or just-in-time (JIT) environments are common, permitting large facilities to have no inventory. Fiscally, zero latency environments may be optimally cost efficient, yet the paradigm leaves little margin for disruptions of the supporting logistical chain. This chain is sometimes fragile, and subject to disruption by any number of hazards.

Supply Chain. Increasingly, it is common for Web-based sites to be an integral part of the supply chain. Firms may encourage their vendors to use a Web-based portal to gain access to the vendor side of the purchasing system. XML¹⁰-based gateways and service-oriented architecture (SOA) approaches, together with other Web technologies, are used to arrange for and manage the flow of raw materials and components required to support production processes. The same streamlining that speeds information between supplier and manufacturer also provides a potential for serious mischief and liability.

Delivery Chain. Web-based sites, both internal and external, also have become the vehicle of choice for tracking the status of orders and shipments, and increasingly as the backbone of many enterprises' delivery chain management and inquiry systems.

Information Delivery. Banks, brokerages, utilities, and municipalities are increasingly turning to the Web as a convenient, low-cost method for managing their relationships with consumers. Firms are also supporting downloading records of transactions and other relationship information in formats required by personal database programs and organizers. These outputs, in turn, are often used as inputs to other processes, which then generate other transactions. Not surprisingly, as time passes, more and more people and businesses depend on the availability of information on demand. Today's Web-based customers presume that information is accessible wherever they can use a personal computer or even a Web-enabled cellular telephone. This is reminiscent of usage patterns of automatic teller machines in an earlier decade, which allowed people to base their plans on access to teller machines, often making multiple $20 withdrawals instead of cashing a $200 check weekly.

30.4.5 Proactive versus Reactive Threats.

Some threats and hazards can be addressed proactively, whereas others are inherently reactive. When strategies and tactics are developed to protect a Web presence, the strategies and tactics themselves can induce availability problems.

As an example, consider the common strategy of having multiple name servers responsible for providing the translation of domain names to IP addresses. Before a domain name (properly referred to as a Domain Name System [DNS] zone) can be entered into the root-level name servers, at least two name servers must be identified to process name resolution requests. Name servers are a prime example of resources that should be geographically diverse.

Updating DNS zones requires care. If an update is performed improperly, then the resources referenced via the symbolic DNS names will become unresolvable, regardless of the actual state of the Web server and related infrastructure. The risk calculus involving DNS names is further complicated by the common, efficient, and appropriate practice of designating ISP name servers as the primary mechanism for the resolution of domain names. In short, name translation provides a good example of the possible risks that can affect a Web presence.
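The two requirements noted above (at least two name servers, and diversity among them) can be checked before a zone change is published. The following Python sketch is illustrative only. The function name `check_delegation`, the input format (a mapping from name-server host name to IPv4 address), and the use of a shared /24 prefix as a rough stand-in for a lack of network diversity are all assumptions made for this example; they are not part of any DNS standard or tool, and a shared prefix is at best a weak indicator of geographic proximity.

```python
import ipaddress


def check_delegation(name_servers, prefix_len=24):
    """Return a list of warnings for a proposed set of name servers.

    name_servers: dict mapping host name -> IPv4 address string
                  (a hypothetical input format for this sketch).
    prefix_len:   servers sharing a prefix of this length are treated
                  as non-diverse (an assumed, rough heuristic).
    """
    warnings = []
    if len(name_servers) < 2:
        warnings.append("fewer than two name servers listed")

    # Group the servers by the network that contains each address.
    networks = {}
    for host, addr in name_servers.items():
        net = ipaddress.ip_network(f"{addr}/{prefix_len}", strict=False)
        networks.setdefault(net, []).append(host)

    # Any network holding more than one server is a diversity concern.
    for net, hosts in networks.items():
        if len(hosts) > 1:
            warnings.append(f"{', '.join(sorted(hosts))} share network {net}")
    return warnings
```

An empty list means that neither concern was detected. A check of this kind does not replace a review of the zone data itself, since a syntactically valid but incorrect update will still make names unresolvable.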

30.4.6 Threat and Hazard Assessment.

Some threats are universal, whereas others are specific to an individual environment. The most devastating and severe threats are those that simultaneously affect large areas or populations, where efforts to repair damage and correct the problem are hampered by the scale of the problem.

On a basic level, threats can be divided into several categories. The first is between deliberate acts and accidents. Deliberate acts comprise actions done with the intent to damage the Web site or its infrastructure. Accidents include natural phenomena (acts of God) and clumsiness, carelessness, and unconsidered consequences (acts of clod).

Broadly put, a deliberate act is one whose goal is to impair the system. Deliberate acts come in a broad spectrum of skill and intent. For the purpose of risk analysis and planning, deliberate acts against infrastructure providers can often appear to be acts of God. To an organization running a Web site, an employee attack against a telephone carrier appears simply as a service interruption of unknown origin.

No enterprise or agency should consider itself an unlikely target. Past high-profile incidents have targeted the FBI (May 26 and 27, 1999), major political parties, and interest groups in the United States. On the consumer level, numerous digital subscriber line (DSL)-connected home systems have been targeted for subversion as preparation for the launching of DDoS attacks. In 2007, several investigations resulted in the arrest of several individuals for running so-called botnets (ensembles of compromised computers). These networks numbered hundreds of thousands of machines.¹¹ The potential for such networks to be used for mischief should not be underestimated.

For more details of threats and hazards, see many other chapters in this Handbook, including 1, 2, 4, 5, 13, 14, 15, 16, 17, 18, 19, 20, 22, and 23.

30.5 OPERATIONAL REQUIREMENTS.

Internet-visible systems are those with any connection to the worldwide Internet. It is tempting to consider protecting Internet-visible systems as a purely technical issue. However, technical and business issues are inseparable in today's risk management. For example, as noted earlier in this chapter, the degree to which systems should be exposed to the Internet is fundamentally a business risk-management issue. Protection technologies and the policies behind the protection can be discussed only after the business risk questions have been considered and decided, setting the context for the technical discussions. In turn, business risk-management evaluation (see Chapter 62) must include a full awareness of all of the technical risks. Ironically, nontechnical business managers can accurately assess the degree of business risk only after the technical risks have been fully exposed.

Additional business and technical risks result from outsourcing. Today, many enterprises include equipment owned, maintained, and managed by third parties. Some of this equipment resides on the organization's own premises and other equipment resides off site: for example, at application service provider facilities.

Protecting a Web site begins with the initial selection and configuration of the equipment and its supporting elements, and continues throughout its life. In general, care and proactive consideration of the availability and security aspects of the site from the beginning will reduce costs and operational problems. Although virtually impossible to achieve, the goal is to design and implement an automatic system, with a configuration whose architecture and implementation operate even in the face of problems, with minimal customer impact.

That is not to say that a Web site can operate without supervision. Ongoing, proactive monitoring is critical to ensuring the secure operation of the site. Redundancy only reduces the need for real-time response by bypassing a problem temporarily; it does not eliminate the underlying cause. The initial failure must be detected, isolated, and corrected as soon as possible, albeit on a more schedulable basis. Otherwise, the system will operate in its successively degraded redundancy modes until the last redundant component fails, at which time the system will fail completely.
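The distinction between a system that is running normally, one that is running on its remaining redundancy, and one that has failed can be made explicit in monitoring logic. The following Python sketch is a minimal illustration under stated assumptions: the function name `redundancy_status`, the three state labels, and the input format (a mapping from component name to a health flag) are hypothetical and are not drawn from any particular monitoring product.

```python
def redundancy_status(components):
    """Classify a redundant group of components.

    components: dict mapping component name -> True if healthy
                (a hypothetical format for this sketch).
    Returns (state, failed), where state is "NORMAL", "DEGRADED",
    or "FAILED", and failed is the sorted list of failed components.
    """
    healthy = sum(1 for ok in components.values() if ok)
    failed = sorted(name for name, ok in components.items() if not ok)

    if healthy == 0:
        state = "FAILED"      # the last redundant component is gone
    elif failed:
        state = "DEGRADED"    # still serving, but a repair is needed
    else:
        state = "NORMAL"
    return state, failed
```

The point of reporting "DEGRADED" separately is that the service is still up, so the failure is invisible to customers; unless the state is surfaced to operators, nothing prompts the repair.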

30.5.1 Ubiquitous Internet Protocol Networking.

Business has been dealing with the security of internets (i.e., interconnected networks) since the advent of internetworking in the late 1960s. However, the growing use of Transmission Control Protocol/Internet Protocol (TCP/IP) networks and of the public Internet has exposed much more equipment to attack than in the days of closed corporate networks. In addition, a much wider range of equipment, such as voice telephones based on voice-over IP (VoIP), fax machines, copiers, and even soft drink dispensers, is now network accessible.

IP connectivity has been a great boon to productivity and ease of use, but it has not been without a darker side. Network accessibility also has created unprecedented opportunities for improper, unauthorized access to networked resources and other mischief. It is not uncommon to experience probes and break-in attempts within hours or even minutes of unannounced connection to the global Internet.

Protecting Internet-visible assets is inherently a conflict between ease of access and security. The safest systems are those unconnected to the outside world. Similarly, the easiest to use systems are those that have no perceivable restrictions on use. Adjusting the limits on user activities and access must balance conflicting requirements. As an example, many networks are managed in-band, meaning that the switches, routers, firewalls, and other elements of the network infrastructure are managed using the actual network as the connection medium. If the management interfaces are not accessed over properly encrypted connections, management passwords are visible on the network. If an outsider, or an unauthorized insider, monitors the network, information sufficient to paralyze the network and, in turn, the organization may be gained.
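An inventory review is one way to find management interfaces that expose credentials in this manner. The Python sketch below flags devices whose management protocol sends credentials unencrypted (Telnet, HTTP, FTP, and the community strings of SNMPv1 and SNMPv2c). The function name `cleartext_management`, the inventory format, and the device names in the usage example are hypothetical, and the protocol list is illustrative rather than exhaustive.

```python
# Management protocols that transmit credentials without encryption.
# Illustrative, not exhaustive.
CLEARTEXT_PROTOCOLS = {"telnet", "http", "ftp", "snmpv1", "snmpv2c"}


def cleartext_management(devices):
    """Return the sorted names of devices managed over cleartext protocols.

    devices: dict mapping device name -> management protocol name
             (a hypothetical inventory format for this sketch).
    """
    return sorted(
        name
        for name, proto in devices.items()
        if proto.lower() in CLEARTEXT_PROTOCOLS
    )


# Usage with a made-up inventory:
inventory = {"core-sw": "ssh", "edge-rtr": "Telnet", "fw1": "https", "ap3": "snmpv2c"}
flagged = cleartext_management(inventory)  # ["ap3", "edge-rtr"]
```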

30.5.2 Internal Partitions.

Complex corporate environments can often be secured effectively by dividing the organization into a variety of interrelated and nested security domains, each with its own legal, technical, and cultural requirements. For example, there are specific legal requirements for medical records (see Chapter 71 in this Handbook) and for privacy protection (see Chapters 69 and 70). Partners and suppliers, as well as consultants, contractors, and customers, often need two-way access to corporate data and facilities. These diverse requirements mean that a single corporate firewall is often insufficient. Different domains within the organization will often require their own firewalls and security policies. Keeping track of the multitude of data types, protection and access requirements, and different legal jurisdictions and regulations makes for previously unheard-of degrees of complexity.

Damage control is another property of a network with internal partitions. A system compromised by an undetected malware component will be limited in its ability to spread the contagion beyond its own compartment.
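The containment effect can be illustrated by treating permitted connections as a graph and asking which systems a compromised host can reach, directly or through intermediate hosts. The Python sketch below is a simplified model, not a description of any firewall product: the function name `reachable`, the host names, and the representation of policy as a mapping from each host to the set of hosts it may connect to are all assumptions made for illustration.

```python
from collections import deque


def reachable(start, allowed):
    """Return the set of hosts reachable from `start`, including itself.

    allowed: dict mapping host -> set of hosts it may connect to
             (a hypothetical representation of firewall policy).
    """
    seen = {start}
    queue = deque([start])
    while queue:                      # breadth-first traversal
        host = queue.popleft()
        for nxt in allowed.get(host, ()):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen


# Flat network: desktops can reach the human resources database.
flat = {"pc1": {"pc2", "hr-db"}, "pc2": {"pc1", "hr-db"}}
# Partitioned network: desktops can reach only one another.
partitioned = {"pc1": {"pc2"}, "pc2": {"pc1"}}
```

In this made-up example, a compromise of `pc1` can spread to `hr-db` in the flat network but is confined to the desktop compartment in the partitioned one.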

30.5.3 Critical Availability.

Networks are often critical for second-to-second operations; as a result, the side effects of ill-considered countermeasures may be worse than the damage from the actual attack. For example, shutting down the network, or even part of it, for maintenance or repair can wreak more havoc than penetration by a malicious hacker.

30.5.4 Accessibility.

Users must be involved in the evolution of rules and procedures. Today, it is still not unheard of for a university faculty to take the position that any degree of security will undermine the very nature of their community, compromising their ability to perform research and inquiries. This extreme position persists despite the attention of the mass media, the justified beliefs of the technical community, and documented evidence that lack of protection of any Internet-connected system undermines the safety of the entire connected community.

Connecting a previously isolated computer system or network to the global Internet creates a communications pathway to every corner of the world. Customers, partners, and employees can obtain information, send messages, place orders, and otherwise interact 24 hours a day, seven days a week, 365 days a year, from literally anywhere on or near Earth. Even the Space Shuttle and the International Space Station have Internet access. Under these circumstances, the possibilities for attack or inadvertent misuse are limitless.

Despite the vast increase in connectivity, some businesses and individuals do not need extensive access to the global Internet for their day-to-day activities, although they may resent being excluded. The case for universal access is therefore a question of business policy and political considerations.

30.5.5 Applications Design.

Protecting a Web site begins with the most basic steps. First, a site processing confidential information should always support the secure hypertext transfer protocol (HTTPS), typically using TCP port 443. Properly supporting HTTPS requires the presence of an appropriate digital certificate (see Chapter 37).
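The digital certificate is useful only if the connecting party actually verifies it. As a minimal sketch of the client side, the following Python function uses the standard `ssl` module to build a context that verifies the server certificate and host name. The function name `strict_client_context` is hypothetical, and the choice of TLS 1.2 as the minimum protocol version is an assumption made for this example, not a requirement stated in this chapter.

```python
import ssl


def strict_client_context():
    """Build a client-side TLS context that verifies server certificates."""
    # The default context requires a valid certificate chain and
    # checks that the certificate matches the requested host name.
    ctx = ssl.create_default_context()
    # Assumed policy for this sketch: refuse protocol versions
    # older than TLS 1.2.
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx
```

A context built this way can be passed to standard library clients, for example as the `context` argument of `urllib.request.urlopen`.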

When the security requirements are uncertain, the site design should err on the side of using HTTPS for communications. Although the available literature on the details of Internet eavesdropping is sparse, the Communications Intelligence and Signals Intelligence (COMINT/SIGINT) historical literature from World War II makes it abundantly clear that encryption of all potentially sensitive traffic is the only way to protect information.
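One common way to err on the side of HTTPS is to rewrite any plain HTTP address to its HTTPS equivalent before redirecting the visitor. The Python sketch below shows only the address rewriting; the function name `force_https` is hypothetical, and the handling of an explicit port 80 (dropped, so that the default HTTPS port applies) is an assumption made for this example. A real site would also need its Web server configured to issue the redirect.

```python
from urllib.parse import urlsplit, urlunsplit


def force_https(url):
    """Return the HTTPS equivalent of an HTTP or HTTPS URL."""
    parts = urlsplit(url)
    if parts.scheme == "https":
        return url                          # already secure
    if parts.scheme != "http":
        raise ValueError(f"unsupported scheme: {parts.scheme!r}")

    netloc = parts.netloc
    if netloc.endswith(":80"):
        # Assumed policy: an explicit HTTP port is dropped so that
        # the default HTTPS port (443) is used instead.
        netloc = netloc[:-3]
    return urlunsplit(("https", netloc, parts.path, parts.query, parts.fragment))
```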

Encryption also should be used within an organization, possibly with a different digital certificate, for sensitive internal communications and transactions. Earlier, it was noted that organizations are not monolithic security domains. This is nowhere more true than when dealing with human resources, employee evaluations, compensation, benefits, and other sensitive employee information. There are positive requirements that this information be safeguarded, but few organizations have truly secured their internal networks against internal monitoring. It is far safer to route all such communications through securely encrypted channels. Such measures also demonstrate a good faith effort to ensure the privacy and confidentiality of sensitive information.